#modular logic analyzer


Logic Analyzer Market worth $923.84 Million by 2030 - Exclusive Report by The Insight Partners

#the insight partners#logic analyzer#modular logic analyzer#portable logic analyzer#pc-based logic analyzer


As I've mentioned before, the ultimate goal for my 68030 homebrew systems is to run a proper multi-user operating system. Some flavor of System V or BSD or Linux. I am not there yet. There is still so much I need to learn about programming in general and the intricacies of bringing up one of those systems, plus my hardware does not yet have the ability to support multiple users.

I've toyed with several ideas for how to get the hardware to support multiple users, but ultimately decided to leverage what I already have. I have a fully functional, modular, card-based system. I can easily build new cards to add the functionality I need. And to make development and debugging easier, I can keep each card simple and dedicated to a single function.

The catch is I've already run into some stability issues putting everything on the main CPU bus. So what I really need is some kind of buffered peripheral bus I can use for developing the new I/O cards I'll need.

So that's where I started — a new 8-bit I/O bus card that properly buffers the data and address signals, breaks out some handy I/O select signals, and generates the appropriate bus cycle acknowledge signal with selectable wait states.

It wasn't without its problems of course. I made a few mistakes with the wait state generator and had to bodge a few signals.

With my new expansion bus apparently working I could set out on what I had really come here for — a quad serial port card.

I have it in my head that I would really like to run up to eight user terminals on this system. Two of these cards would get me to that point, but four is a good place to start.

I forgot to include the necessary UART clock in the schematic before laying out the board, so I had to deadbug one. I'm on a roll already with this project, I can tell.

So I get it all wired up, I fire up BASIC, attach a terminal to the first serial port, and get to testing.

Nothing comes across.

Step-by-step with the oscilloscope and logic analyzer, I verify my I/O select from the expansion bus card is working, the I/O block select on the UART card is working, the individual UART selects are working. I can even see the transmitted serial data coming out of the UART chip and through the RS-232 level shifter.

But nothing is showing up in the terminal.

I've got the terminal set for 9600bps, I've got my UART configured for 9600bps, but nothing comes across.

I did note something strange on the oscilloscope though. I could fairly easily lock onto the signal coming out of my new serial card, but the received data from the terminal wasn't showing up right. The received data just seemed so much faster than it should be.

Or maybe my card was slower than it should be.

Looking at the time division markings on the oscilloscope, it looked like each bit transmitted was around ... 1.25ms. Huh. At 9600bps each bit should be more like 0.1ms. A 1.25ms bit works out to something more like 800bps.

I set the terminal for 800bps and got something, but it wasn't anything coherent; it was just garbage. I wrote a quick BASIC program to sweep through the UART baud rate generator's clock divider settings, sending a string of 5s at each setting, until a row of 5s showed up on the terminal.

So then I tried sending "HELLORLD".

I got back "IEMMOSME".

No matter what I changed, I couldn't get anything more coherent than that. It was at least the right number of characters, and some of them were even right. It's just that some of them were ... off ... by one.

A quick review of the ASCII chart confirmed the problem.

'H' is hex 0x48, but 'I' is 0x49. 'E' is 0x45, but was coming across correctly.

... I have a stuck bit.

The lowest-order bit on my expansion bus is stuck high. That's why I wasn't seeing any coherent data on the terminal, and it also explains why I had to go hunting for a non-standard baud rate. The baud rate generator uses a 16-bit divisor to divide the input clock down to the baud rate, and on an 8-bit bus that divisor goes across as two bytes. When I tried to set the divisor to 0x0018 for 9600bps, bit 0 was forced on in both bytes, so it was actually getting set to 0x0119: a divisor of 281 instead of 24, which puts the port at roughly 820bps.
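A quick model of the fault lets you check both symptoms. This Python sketch rests on assumptions the post doesn't state. It assumes the stuck line simply forces bit 0 to 1 in every byte that crosses the bus. It also assumes the UART is a 16550-style part with 16x oversampling on a 3.6864MHz clock, which is what makes 0x0018 the 9600bps divisor. Because the bus is 8 bits wide, the 16-bit divisor crosses it as a low byte and a high byte, and both get bit 0 forced on.

```python
# Assumed, not stated in the post: 16550-style UART, 16x oversampling, 3.6864 MHz clock
UART_CLOCK_HZ = 3_686_400
OVERSAMPLE = 16


def stuck_high(byte: int, bit: int = 0) -> int:
    """Model a data line stuck at logic 1: that bit always reads back as 1."""
    return byte | (1 << bit)


def send_text(text: str) -> str:
    """Pass each ASCII character through the faulty 8-bit bus."""
    return "".join(chr(stuck_high(ord(ch))) for ch in text)


def effective_divisor(divisor: int) -> int:
    """The 16-bit divisor crosses the 8-bit bus as a low byte and a high byte."""
    low = stuck_high(divisor & 0xFF)
    high = stuck_high((divisor >> 8) & 0xFF)
    return (high << 8) | low


def baud_rate(divisor: int) -> float:
    """16550-style relation (assumed): baud = clock / (16 * divisor)."""
    return UART_CLOCK_HZ / (OVERSAMPLE * divisor)


print(send_text("HELLORLD"))                # IEMMOSME
actual = effective_divisor(0x0018)
print(hex(actual), actual)                  # 0x119 281
print(round(baud_rate(actual)))             # 820
print(round(1000 / baud_rate(actual), 2))   # 1.22 (milliseconds per bit)
```

The modeled bit time of about 1.22ms is close to the roughly 1.25ms read off the scope. The ratio 281/24 doesn't depend on the assumed clock. So as long as 0x0018 really was the 9600bps divisor, the ~820bps figure holds whatever the actual input clock is.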

Another quick BASIC program to output the ASCII chart confirmed this was indeed the problem.

Each printed character was doubled, and every other one was missing.
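Here is why the chart looked like that. With bit 0 forced high, every even code turns into the odd code just above it. Each odd character therefore shows up twice, and each even one never appears. The post used BASIC. This Python model just assumes the same single stuck bit and shows the pattern for one slice of the table:

```python
def stuck_high(byte: int, bit: int = 0) -> int:
    """Model a data line stuck at logic 1 (assumed to be the only fault)."""
    return byte | (1 << bit)


def chart_through_bus(start: int = 0x40, end: int = 0x5F) -> tuple[str, str]:
    """Return (what was sent, what the terminal received) for a run of ASCII codes."""
    codes = range(start, end + 1)
    sent = "".join(chr(c) for c in codes)
    received = "".join(chr(stuck_high(c)) for c in codes)
    return sent, received


sent, received = chart_through_bus()
print(sent)      # @ABCDEFGHIJKLMNOPQRSTUVWXYZ[\]^_
print(received)  # AACCEEGGIIKKMMOOQQSSUUWWYY[[]]__
```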

That sounds like it could be a solder bridge. The UART chip has its D0 pin right beside a power input pin. A quick probe with the multimeter ruled that out.

Perhaps the oscilloscope would provide some insight.

The oscilloscope just raised more questions.

Not just D0, but several data bus pins would immediately shoot up to +5V as soon as the expansion bus card was selected. As far as the scope was concerned, it was an instant transition from low to high (it looked no different even at the smallest timescale my scope can handle). If the UART was latching its input data within the first third of that waveform, it certainly could have seen a logic 1, but that doesn't explain why only the one data pin would be reading high.

I thought maybe it could just be a bad bus transceiver. The 74HCT245 I had installed was old and a little slow for the job anyway, so I swapped it out for a newer and much faster 74ACT245.

And nothing changed.

It's possible the problem could be related to the expansion bus being left floating between accesses. I tacked on a resistor network to pull the bus down to ground when not active.

And nothing changed.

Well ... almost nothing.

This was right about the time that I noticed that while I was still getting the odd waveform on the scope, the output from the terminal was correct. It was no longer acting like I had a stuck bit and I was getting every letter.

Until I removed the scope probe.

Too much stray capacitance, maybe? That waveform certainly does look like a capacitor discharge curve.

I had used a ribbon cable I had lying around for my expansion bus. It was long enough to support a few cards, but certainly not excessively long (not for these speeds, at least). It was worth trying, though. I swapped out the ribbon cable for one that was just barely long enough to connect the two cards.

And finally it worked.

Not only was I able to print the entire ASCII set, I could program the baud rate generator to any value I wanted and it worked as expected.

That was a weird one, and I'm still not sure what exactly happened. But I'm glad to have it working now. With my hardware confirmed working I can focus on software for it.

I've started writing a crude multi-user operating system of sorts. It's just enough to support cooperative multitasking for multiple terminals running BASIC simultaneously. It may not be System V or BSD or Linux, but I still think it would be pretty darn cool to have a line of terminals all wired up to this one machine, each running their own instance of BASIC.


Building Energy Simulation Software Market Size, Share & Growth Analysis 2034: Designing Sustainable & Smart Buildings

Building Energy Simulation Software Market is gaining momentum globally as sustainability becomes a top priority in construction and infrastructure planning. This market includes digital platforms that help model, analyze, and optimize energy consumption in buildings, with a focus on reducing carbon footprints and enhancing operational efficiency. By simulating aspects such as heating, ventilation, cooling, and lighting, these tools provide architects, engineers, and facility managers with critical insights to create energy-efficient and regulatory-compliant designs.

In 2024, the market is estimated to encompass over 620 million installations worldwide, with commercial buildings accounting for 45% of the market, followed by residential and industrial sectors. The increasing demand for green buildings and smart energy solutions continues to push the need for advanced simulation software, making this market a vital component in the global energy efficiency landscape.

Click to Request a Sample of this Report for Additional Market Insights: https://www.globalinsightservices.com/request-sample/?id=GIS26611

Market Dynamics

Key forces are shaping the growth trajectory of the Building Energy Simulation Software Market. The drive toward net-zero energy buildings and stringent building codes across major economies are compelling stakeholders to adopt simulation tools during the design and construction phases. Simultaneously, advancements in AI, machine learning, and cloud computing are revolutionizing the simulation landscape — delivering real-time analytics, predictive modeling, and enhanced user experience.

Cloud-based solutions dominate the technology segment due to their scalability and flexibility, particularly for large-scale construction and retrofit projects. On-premise deployments still hold relevance among firms requiring strict data security and internal IT infrastructure integration. Additionally, the rise of smart buildings and IoT integration is boosting the demand for software capable of managing complex systems and data inputs seamlessly.

However, high initial costs and the need for technical expertise remain challenges, particularly for small firms. Market penetration is also hampered by limited awareness of long-term energy savings and the complexity of integrating software with existing building management systems.

Key Players Analysis

Several market leaders are driving innovation in this space. Autodesk, Inc. and Bentley Systems, Inc. are pioneers, known for their robust, user-friendly platforms that integrate seamlessly with design workflows. Tools like IESVE, EnergyPlus, and DesignBuilder are widely adopted for their deep modeling capabilities and compliance with international standards.

Emerging players like Green Frame Software, Simu Build, and Eco Logic Simulations are gaining traction with agile, cost-effective solutions tailored for specific market niches, including low-income housing and small-scale commercial projects. These companies are leveraging AI, open-source platforms, and modular deployment models to attract new customers and bridge gaps in accessibility and affordability.

Regional Analysis

North America leads the global market, with the United States setting the pace through progressive energy codes, advanced infrastructure, and high R&D investment. Canada is also ramping up its energy efficiency goals, driven by both regulatory pressure and environmental awareness.

Europe remains a stronghold for energy simulation due to robust policy frameworks like the EU Energy Performance of Buildings Directive (EPBD). Countries such as Germany and the UK are embracing simulation tools to meet decarbonization targets and drive green infrastructure initiatives.

Asia-Pacific is witnessing the fastest growth, powered by rapid urbanization and smart city developments in China, India, and South Korea. Government programs promoting sustainable construction and increased foreign investment in infrastructure are fueling demand.

The Middle East & Africa are also catching up, with nations like the UAE and Saudi Arabia incorporating simulation in mega-projects focused on sustainability. Meanwhile, Latin America, led by Brazil and Mexico, is showing increasing interest in energy modeling to curb rising energy costs and environmental impact.

Recent News & Developments

Recent developments highlight a shift towards AI-driven simulations and real-time energy analytics. Companies are integrating cloud platforms with building management systems (BMS) for dynamic energy monitoring. Tools now come with machine learning modules that predict performance anomalies and suggest optimization strategies — making simulations not only reactive but also proactive.

Autodesk’s updates to its Green Building Studio, and Bentley’s advancements in digital twin technology, are setting benchmarks for the next generation of energy modeling. Collaborations between software firms and certification bodies like LEED and BREEAM are also strengthening the ecosystem, making simulation tools indispensable for green building certification.

Browse Full Report: https://www.globalinsightservices.com/reports/building-energy-simulation-software-market/

Scope of the Report

This report provides a comprehensive overview of the Building Energy Simulation Software Market, highlighting market size, key trends, challenges, and competitive landscape. It delves into segmentation by software type, application, deployment model, technology, and end-user, offering insights on adoption patterns across sectors.

The report analyzes critical market drivers such as urbanization, regulatory compliance, cost-saving potential, and green construction trends, while also addressing restraints like software complexity and integration hurdles. Stakeholders can benefit from strategic guidance on R&D investment, market entry, and cross-regional expansion.

With simulation tools becoming a cornerstone in sustainable architecture, the market holds immense potential for innovation and disruption.

#energyefficiency #smartbuildings #greenconstruction #buildingsimulation #sustainablearchitecture #energymodeling #cloudsoftware #aiinconstruction #netzeroenergy #buildinganalytics

Discover Additional Market Insights from Global Insight Services:

Commercial Drone Market: https://www.globalinsightservices.com/reports/commercial-drone-market/

Product Analytics Market: https://www.globalinsightservices.com/reports/product-analytics-market/

Streaming Analytics Market: https://www.globalinsightservices.com/reports/streaming-analytics-market/

Cloud Native Storage Market: https://www.globalinsightservices.com/reports/cloud-native-storage-market/

Alternative Lending Platform Market: https://www.globalinsightservices.com/reports/alternative-lending-platform-market/

About Us:

Global Insight Services (GIS) is a leading multi-industry market research firm headquartered in Delaware, US. We are committed to providing our clients with the highest-quality data, analysis, and tools to meet all their market research needs. With GIS, you can be assured of quality deliverables, a robust and transparent research methodology, and superior service.

Contact Us:

Global Insight Services LLC, 16192 Coastal Highway, Lewes, DE 19958
Phone: +1-833-761-1700
Website: https://www.globalinsightservices.com/

How Graphic Design Can Boost Your Landing Page ROI

In the world of digital marketing, landing pages are built for one purpose—conversion. Whether you're aiming to generate leads, sell a product, or book a consultation, your landing page must convince visitors to take action. But while businesses often obsess over copy, offers, and CTAs, one element is frequently underutilized: graphic design.

Good design isn’t just decoration—it’s persuasion. The right visuals can guide attention, build trust, simplify information, and drive clicks. That’s why brands serious about maximizing ROI are now investing in professional Graphic Designing Services to elevate the performance of every landing page they create.

Let’s explore exactly how graphic design can turn a passive visitor into a paying customer—and improve your overall marketing returns.

1. First Impressions Influence Trust

You have less than five seconds to make a good impression. If your landing page looks outdated, cluttered, or poorly aligned, visitors are likely to bounce—even if your offer is strong.

Clean, intentional design creates a visual hierarchy that:

Guides the eye to headlines and CTAs

Establishes brand authority through consistent style

Encourages users to explore the page instead of clicking away

A visually polished landing page instantly communicates professionalism, which builds trust—a key ingredient in conversions.

2. Visual Hierarchy Improves Readability

Graphic design helps structure your content in a way that leads the reader through a smooth, logical journey. Through layout, color contrast, font weight, and spacing, designers can highlight key messages and nudge the viewer toward action.

For example:

Bold headings draw attention to your core promise

Contrasting CTA buttons stand out and invite clicks

Icons help break down complex benefits at a glance

Without this hierarchy, even the best copy may be ignored or misunderstood.

3. Consistent Branding Reinforces Recall

Your landing page may be part of a larger campaign, ad funnel, or email flow. Consistent branding—colors, fonts, logo placement, visual tone—not only builds recognition but also improves user experience by reducing cognitive friction.

If a user clicks from a Facebook ad and lands on a page that looks completely different, they’re less likely to trust the page. Good design bridges that gap by maintaining a visual connection between ad and page, reinforcing brand trust and increasing the chance of conversion.

4. Custom Illustrations & Icons Simplify Complex Ideas

Sometimes your product or service isn’t easy to explain with words alone. That’s where design elements like illustrations, infographics, or explainer icons come in. Visuals help clarify your value proposition faster, especially for mobile users skimming the page.

Great examples include:

Step-by-step graphics explaining a service process

Icons that represent features or benefits

Data visualizations that highlight results or success rates

This clarity reduces friction and shortens the decision-making process—boosting ROI in the process.

5. Mobile-Responsive Design = Higher Conversion

Over half of your visitors are likely to view your landing page on a mobile device. A responsive, mobile-first design ensures that every visual and CTA works seamlessly across screen sizes.

This means:

Scalable images that don’t crop awkwardly

Readable fonts without zooming

Clickable buttons that are easy to tap

If your landing page isn’t mobile-optimized, you’re missing out on conversions—and wasting valuable ad spend.

6. A/B Testing Designs for Better Performance

Design is never “done.” Top-performing marketers use A/B testing to compare different versions of landing pages. Small tweaks in visual layout—such as button color, image placement, or even white space—can dramatically change outcomes.

Professional designers understand how to build testable, modular components so you can easily swap elements and analyze which ones drive more leads or sales.

Conclusion: Design Directly Impacts ROI

Landing pages aren't just about messaging—they're about presentation. The way you showcase your offer determines whether users bounce, scroll, or convert. Investing in strategic visual design can dramatically increase time on page, form submissions, and overall return on ad spend.

If your landing pages aren't delivering results, it might not be your product—it might be your visuals. Explore our Graphic Designing Services to turn every click into a conversion, with high-performing designs tailored to your campaign goals and brand identity.


Sanjay Saraf CFA Course Review – Best Choice for CFA Aspirants

Choosing the right CFA prep provider is a critical decision for any aspiring charterholder. Among the many available options, Sanjay Saraf CFA programs have emerged as a trusted choice for students seeking a structured, comprehensive, and result-oriented learning experience. Notably, Sanjay Saraf has developed a teaching methodology that is not only exam-focused but also practical in its approach—ensuring students grasp complex financial concepts with ease.

Why Students Prefer Sanjay Saraf CFA Courses

First and foremost, Sanjay Saraf CFA classes stand out due to their clarity and depth. His expertise in Financial Management and Quantitative Techniques ensures that students gain a solid foundation from the very beginning. Furthermore, his focus on conceptual clarity, supported by practical illustrations and real-world examples, plays a vital role in bridging the gap between academic learning and application.

Equally important, the course structure is well-organized and tailored specifically for each CFA level. The video lectures are broken down into topic-wise segments, which makes revision easier and more effective. In addition to that, his doubt-solving sessions and WhatsApp support groups enable personalized assistance—something many students find extremely helpful.

What Sets Sanjay Saraf CFA Apart

In comparison to other prep providers, Sanjay Saraf CFA offers unique value. His teaching style emphasizes logic and concept flow rather than rote memorization. This not only helps in retention but also boosts confidence while solving complex exam questions. Moreover, many students have shared that his in-depth coverage of Ethics, FRA, and Derivatives—some of the toughest areas in the exam—gave them a competitive edge.

Another key factor is accessibility. Students can access his lectures on a pen drive, through Google Drive, or in the mobile app. As a result, learners can study anytime and from anywhere, making it an ideal solution for working professionals or college students with tight schedules.

Understanding the CFA Journey with Sanjay Saraf CFA Level 1

When it comes to Sanjay Saraf CFA Level 1, the focus lies in building a strong conceptual base. Since this is the first step in the CFA journey, he ensures that topics such as Ethics, Quantitative Methods, and Financial Reporting are taught with extra attention. As students progress, they find it easier to build upon these foundations in the higher levels.

Moreover, the CFA Level 1 curriculum is intensive, but Sanjay Saraf’s modular teaching and easy-to-follow examples simplify the learning process. Students often mention that even challenging subjects become approachable thanks to his systematic approach and engaging teaching style.

Transitioning to Higher Levels of the CFA Program

After completing Level 1, students who continue with Sanjay Saraf CFA for Levels 2 and 3 find the continuity beneficial. Because his teaching style remains consistent, the learning curve becomes smoother. He also introduces more case-based and application-heavy learning techniques in advanced levels, which aligns well with the CFA Institute’s exam format.

In addition, many learners appreciate the integrated practice questions and mock tests, which help in evaluating performance regularly. By analyzing these assessments, students can easily identify their weak areas and improve upon them, ultimately increasing their chances of passing the exam on the first attempt.

Student Feedback and Success Stories

Undoubtedly, the real testament to any CFA coaching institute lies in student feedback. Over the years, Sanjay Saraf CFA has garnered positive reviews across platforms. Most learners highlight his command over the subject, engaging delivery, and practical orientation as the main reasons for their success.

Furthermore, many CFA charterholders credit his guidance as a turning point in their preparation. His motivation, discipline, and deep insights create an environment where students feel encouraged to push their limits.

Final Verdict: Is Sanjay Saraf CFA Worth It?

In conclusion, if you're seeking reliable and concept-driven CFA coaching, Sanjay Saraf CFA is certainly worth considering. His well-structured content, effective teaching methodology, and personalized support make it one of the most effective prep options in the market today.

For beginners, especially those enrolling in Sanjay Saraf CFA Level 1, the program sets the right tone for a successful CFA journey. With consistent effort and his guidance, clearing all three levels becomes a highly achievable goal.

#cfa coaching sanjay saraf#cfa level 1 sanjay saraf#sanjay saraf cfa review#sanjay saraf cfa#sanjay saraf cfa level 1#cfa by sanjay saraf


Python for Beginners: Learn the Basics Step by Step

In today’s fast-paced digital world, programming has become an essential skill, not just for software developers but for anyone looking to boost their problem-solving skills or career potential. Among all the programming languages available, Python has emerged as one of the most beginner-friendly and versatile languages. This guide, "Python for Beginners: Learn the Basics Step by Step," is designed to help complete novices ease into the world of programming with confidence and clarity.

Why Choose Python?

Python is often the first language recommended for beginners, and for good reason. Its simple and readable syntax mirrors natural human language, making it more accessible than many other programming languages. Unlike languages that require complex syntax and steep learning curves, Python allows new learners to focus on the fundamental logic behind coding rather than worrying about intricate technical details.

With Python, beginners can quickly create functional programs while gaining a solid foundation in programming concepts that can be applied across many languages and domains.

What You Will Learn in This Guide

"Python for Beginners: Learn the Basics Step by Step" is a comprehensive introduction to Python programming. It walks you through each concept in a logical sequence, ensuring that you understand both the how and the why behind what you're learning.

Here’s a breakdown of what this guide covers:

1. Setting Up Python

Before diving into code, you’ll learn how to set up your development environment. Whether you’re using Windows, macOS, or Linux, this section guides you through installing Python, choosing a code editor (such as VS Code or PyCharm), and running your first Python program with the built-in interpreter or IDE.

You’ll also be introduced to online platforms like Replit and Jupyter Notebooks, where you can practice Python without needing to install anything.

2. Understanding Basic Syntax

Next, we delve into Python’s fundamental building blocks. You’ll learn about:

Keywords and identifiers

Comments and docstrings

Indentation (critical in Python for defining blocks of code)

How to write and execute your first "Hello, World!" program

This section ensures you are comfortable reading and writing simple Python scripts.
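Here is a small script touching each item in the list above. The greet function and its wording are illustrations of the concepts, not code taken from the guide itself:

```python
"""A first Python script: a module docstring goes at the top of the file."""

import keyword  # standard-library module that knows Python's reserved words


def greet(name: str) -> str:
    """Return a greeting. This string is the function's docstring."""
    # The indented lines form the function body; indentation defines the block.
    message = f"Hello, {name}!"
    return message


print(greet("World"))               # Hello, World!
print(keyword.iskeyword("def"))     # True: reserved, so not usable as an identifier
print("total_2".isidentifier())     # True: a valid variable or function name
print("2total".isidentifier())      # False: identifiers cannot start with a digit
```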

3. Variables and Data Types

You’ll explore how to declare and use variables, along with Python’s key data types:

Integers and floating-point numbers

Strings and string manipulation

Booleans and logical operators

Type conversion and input/output functions

By the end of this chapter, you’ll know how to take user input, store it in variables, and use it in basic operations.
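A minimal sketch of these ideas follows. The input() call is left as a comment so the snippet runs without waiting for a user, and describe_age is a made-up helper for illustration:

```python
def describe_age(raw: str) -> str:
    """Convert text (such as the result of input()) to an int and use it."""
    age = int(raw)            # type conversion: str -> int
    months = age * 12         # integer arithmetic
    is_adult = age >= 18      # a comparison produces a bool
    return f"{age} years is {months} months; adult: {is_adult}"


price = 19.99                 # float
quantity = 3                  # int
name = "ada lovelace"         # str
print(type(price).__name__, type(quantity).__name__, type(name).__name__)  # float int str
print(name.title(), len(name))         # Ada Lovelace 12
print(round(price * quantity, 2))      # 59.97
print(describe_age("30"))              # 30 years is 360 months; adult: True
# In an interactive script you would write: print(describe_age(input("Your age: ")))
```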

4. Control Flow: If, Elif, Else

Controlling the flow of your program is essential. This section introduces conditional statements:

if, elif, and else blocks

Comparison and logical operators

Nested conditionals

Common real-world examples like grading systems or decision trees

You’ll build small programs that make decisions based on user input or internal logic.
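As an example of the grading-system exercise, here is one possible version. The grade cutoffs and the enrollment rule are assumptions chosen for illustration:

```python
def letter_grade(score: float) -> str:
    """Map a 0-100 score to a letter; the cutoffs are illustrative."""
    if not 0 <= score <= 100:          # chained comparison combined with 'not'
        raise ValueError("score must be between 0 and 100")
    if score >= 90:
        return "A"
    elif score >= 80:
        return "B"
    elif score >= 70:
        return "C"
    else:
        return "F"


def can_enroll(age: int, has_consent: bool) -> bool:
    """A nested conditional combined with a logical operator."""
    if age >= 18:
        return True
    else:
        if age >= 16 and has_consent:
            return True
        return False


for s in (95, 85, 72, 40):
    print(s, letter_grade(s))          # 95 A, 85 B, 72 C, 40 F (one per line)
print(can_enroll(17, True), can_enroll(15, True))   # True False
```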

5. Loops: For and While

Loops are used to repeat tasks efficiently. You'll learn:

The for loop and its use with lists and ranges

The while loop and conditions

Breaking and continuing in loops

Loop nesting and basic patterns

Hands-on exercises include countdown timers, number guessers, and basic text analyzers.
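The loop ideas above fit into a few short functions. The function names and the star-pattern exercise are hypothetical examples, not taken from the guide:

```python
def countdown(start: int) -> list[int]:
    """while loop: repeat until the condition becomes false."""
    values = []
    while start > 0:
        values.append(start)
        start -= 1
    return values


def first_square_over(limit: int) -> int:
    """for loop over range() that stops early with break; -1 if nothing matches."""
    result = -1
    for i in range(1, limit + 1):
        if i * i > limit:
            result = i
            break             # stop looping as soon as we have an answer
    return result


def word_lengths(text: str) -> dict[str, int]:
    """A basic text analyzer: continue skips words shorter than three letters."""
    lengths = {}
    for word in text.split():
        if len(word) < 3:
            continue
        lengths[word] = len(word)
    return lengths


def triangle(rows: int) -> str:
    """Nested loops building a simple star pattern."""
    lines = []
    for r in range(1, rows + 1):
        line = ""
        for _ in range(r):
            line += "*"
        lines.append(line)
    return "\n".join(lines)


print(countdown(3))                           # [3, 2, 1]
print(first_square_over(50))                  # 8
print(word_lengths("loops are fun to use"))   # {'loops': 5, 'are': 3, 'fun': 3, 'use': 3}
print(triangle(3))
```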

6. Functions and Modules

Understanding how to write reusable code is key to scaling your projects. This chapter covers:

Defining and calling functions

Parameters and return values

The def keyword

Importing and using built-in modules like math and random

You’ll write simple, modular programs that follow clean coding practices.
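A sketch of defining functions and using the math and random modules follows. The optional seed parameter is a design choice added here so the "random" output can be reproduced, not something the guide prescribes:

```python
import math
import random
from typing import Optional


def circle_area(radius: float) -> float:
    """One parameter, one return value, using the math module's pi."""
    return math.pi * radius ** 2


def roll_dice(count: int = 2, sides: int = 6, seed: Optional[int] = None) -> list[int]:
    """Default parameter values; a seed makes the rolls repeatable for testing."""
    rng = random.Random(seed)
    return [rng.randint(1, sides) for _ in range(count)]


print(round(circle_area(2), 2))                         # 12.57
print(math.sqrt(16))                                    # 4.0
print(roll_dice())                                      # two numbers between 1 and 6
print(roll_dice(3, seed=42) == roll_dice(3, seed=42))   # True
```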

7. Lists, Tuples, and Dictionaries

These are Python’s core data structures. You'll learn:

How to store multiple items in a list

List operations, slicing, and comprehensions

Tuple immutability

Dictionary key-value pairs

How to iterate over these structures using loops

Practical examples include building a contact book, creating shopping lists, or handling simple databases.
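A shopping list and a tiny contact book show the three structures side by side. The names and 555 phone numbers are made up for illustration:

```python
# List: ordered and mutable
shopping = ["milk", "eggs", "bread"]
shopping.append("apples")
print(shopping[1:3])                              # ['eggs', 'bread']
shouting = [item.upper() for item in shopping]    # list comprehension
print(shouting)                                   # ['MILK', 'EGGS', 'BREAD', 'APPLES']

# Tuple: cannot be changed after it is created
point = (3, 4)
try:
    point[0] = 10
except TypeError as err:
    print("tuples are immutable:", err)

# Dictionary: a tiny contact book mapping name -> phone number (made-up numbers)
contacts = {"Ada": "555-0100", "Alan": "555-0199"}
contacts["Grace"] = "555-0123"                    # add a new key-value pair
for name, phone in sorted(contacts.items()):      # iterate in name order
    print(f"{name}: {phone}")
print(contacts.get("Linus", "not found"))         # not found
```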

8. Error Handling and Debugging

All coders make mistakes—this section teaches you how to fix them. You’ll learn about:

Syntax vs. runtime errors

Try-except blocks

Catching and handling common exceptions

Debugging tips and using print statements for tracing code logic

This knowledge helps you become a more confident and self-sufficient programmer.
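A small example of try/except with more than one exception type follows. safe_divide is a hypothetical helper, and the error messages are arbitrary:

```python
# A SyntaxError (say, a missing colon after "if x > 1") stops the file before any
# line runs, so try/except in that same file cannot catch it.
# The errors handled below only happen at runtime.

def safe_divide(a: str, b: str) -> str:
    """Parse two numbers from text and divide them, handling common failures."""
    try:
        result = float(a) / float(b)
    except ValueError:
        return "error: not a number"
    except ZeroDivisionError:
        return "error: cannot divide by zero"
    else:
        return f"{result:.2f}"


for pair in [("10", "4"), ("ten", "4"), ("1", "0")]:
    print(pair, "->", safe_divide(*pair))   # a print statement used to trace each call
```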

9. File Handling

Learning how to read from and write to files is an important skill. You’ll discover:

Opening, reading, writing, and closing files

Using with statements for file management

Creating log files, reading user data, or storing app settings

You’ll complete a mini-project that processes text files and saves user-generated data.
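A minimal log-file sketch follows, using with statements so files are closed automatically. It writes into a temporary directory so the example doesn't leave files behind in your working folder. The file name app.log and the helper names are illustrative:

```python
import os
import tempfile
from datetime import datetime


def append_log(path: str, message: str) -> None:
    """Append one timestamped line; 'with' closes the file even if an error occurs."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(f"{datetime.now().isoformat(timespec='seconds')} {message}\n")


def read_lines(path: str) -> list[str]:
    """Read the file back, stripping the trailing newline from each line."""
    with open(path, "r", encoding="utf-8") as f:
        return [line.rstrip("\n") for line in f]


log_path = os.path.join(tempfile.mkdtemp(), "app.log")   # scratch directory for the demo
append_log(log_path, "app started")
append_log(log_path, "settings saved")
for line in read_lines(log_path):
    print(line)
```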

10. Final Projects and Next Steps

To reinforce everything you've learned, the guide concludes with a few beginner-friendly projects:

A simple calculator

A to-do list manager

A number guessing game

A basic text-based adventure game

These projects integrate all the core concepts and provide a platform for experimentation and creativity.
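One possible take on the calculator project follows. It maps each operator symbol to a function from the standard operator module rather than calling eval() on user input. That is a common safety choice, but it is only one of several reasonable designs:

```python
import operator

# Map each symbol to a function instead of calling eval() on user text.
OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def calculate(expression: str) -> float:
    """Evaluate 'number operator number' separated by spaces, e.g. '12 * 3'."""
    parts = expression.split()
    if len(parts) != 3:
        raise ValueError("expected: number operator number")
    left, symbol, right = parts
    if symbol not in OPERATIONS:
        raise ValueError(f"unsupported operator: {symbol}")
    return OPERATIONS[symbol](float(left), float(right))


for expr in ("12 * 3", "7 / 2", "10 - 4.5"):
    print(expr, "=", calculate(expr))   # 36.0, 3.5, 5.5
# An interactive version would loop on calculate(input("calc> ")) inside try/except.
```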

You’ll also receive guidance on what to explore next, such as object-oriented programming (OOP), web development with Flask or Django, or data analysis with pandas and matplotlib.

Who Is This Guide For?

This guide is perfect for:

Absolute beginners with zero programming experience

Students and hobbyists who want to learn coding as a side interest

Professionals from non-technical backgrounds looking to upskill

Anyone who prefers a step-by-step, hands-on learning approach

There’s no need for a technical background—just a willingness to learn and a curious mindset.

Benefits of Learning Python

Learning Python doesn’t just teach you how to write code—it opens doors to a world of opportunities. Python is widely used in:

Web development

Data science and machine learning

Game development

Automation and scripting

Artificial Intelligence

Finance, education, healthcare, and more

With Python in your skillset, you’ll gain a competitive edge in the job market, or even just make your daily tasks more efficient through automation.

Conclusion

"Python for Beginners: Learn the Basics Step by Step" is more than just a programming guide—it’s your first step into the world of computational thinking and digital creation. By starting with the basics and building up your skills through small, manageable lessons and projects, you’ll not only learn Python—you’ll learn how to think like a programmer.


Decision Table in Software Testing: A Comprehensive Guide

In the world of software testing, making decisions based on different input conditions and their corresponding outcomes is a fundamental task. One of the most effective ways to visualize this logic is through a Decision Table. Often referred to as a cause-effect table, it helps testers identify and cover various test scenarios by organizing conditions and actions into a structured tabular form.

This article explores what a decision table is, its structure, techniques for creating it, the steps involved in its construction, its advantages, and when it might not be the most suitable choice.

What is a Decision Table?

A Decision Table is a tabular method used to represent and analyze complex business rules or logic in software testing. It is essentially a matrix that lists all possible conditions (inputs) and the resulting actions (outputs) associated with them.

The primary purpose of a decision table is to capture and model various combinations of inputs and their corresponding actions in a clear and concise format. This is particularly useful in situations where the logic involves multiple conditions and decisions that affect the outcome.

A decision table acts as a blueprint that ensures all possible scenarios are considered, reducing the risk of missing critical test cases. It is extensively used in black-box testing, where the focus is on verifying functionality without knowing the internal workings of the application.

Structure of a Decision Table

A decision table is typically composed of four main parts:

Conditions Stub: This lists all the possible conditions or inputs that can influence the decision. These are the “if” parts of the logic.

Action Stub: This lists all possible actions or outcomes that may result from combinations of the conditions.

Condition Entries: These form the body of the table and show the various combinations of input conditions, typically represented as True (T), False (F), or sometimes using other symbols such as 1 and 0.

Action Entries: These entries define the action(s) that correspond to each condition combination. An “X” is often used to indicate that a particular action will be performed.

Each column (Rule) represents a unique combination of conditions and the corresponding actions.
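The four parts map naturally onto a small data structure. The sketch below is a minimal illustration only. It assumes a hypothetical login feature with two conditions, and the names `DecisionTable`, `valid_username`, `log_in` and so on are invented for this example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DecisionTable:
    conditions: list[str]  # condition stub: the "if" parts
    actions: list[str]     # action stub: every possible outcome
    # Each rule (column) pairs its condition entries with the set of actions marked "X".
    rules: list[tuple[tuple[bool, ...], set[str]]] = field(default_factory=list)

    def add_rule(self, entries: tuple[bool, ...], actions: set[str]) -> None:
        if len(entries) != len(self.conditions):
            raise ValueError("one entry per condition is required")
        unknown = actions - set(self.actions)
        if unknown:
            raise ValueError(f"unknown actions: {unknown}")
        self.rules.append((entries, actions))

    def lookup(self, **inputs: bool) -> set[str]:
        """Return the actions of the rule whose condition entries match the inputs."""
        key = tuple(inputs[name] for name in self.conditions)
        for entries, actions in self.rules:
            if entries == key:
                return actions
        raise KeyError(f"no rule covers {key}")


# Hypothetical login feature: two conditions, two actions, four rules.
table = DecisionTable(
    conditions=["valid_username", "valid_password"],
    actions=["log_in", "show_error"],
)
table.add_rule((True, True), {"log_in"})
table.add_rule((True, False), {"show_error"})
table.add_rule((False, True), {"show_error"})
table.add_rule((False, False), {"show_error"})

print(table.lookup(valid_username=True, valid_password=False))  # {'show_error'}
```

Each `add_rule` call is one column of the table, so a tester can turn every rule directly into one test case.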

Decision Table Techniques

There are several approaches to constructing decision tables depending on the complexity and nature of the system under test. The most common techniques include:

1. Limited Entry Decision Table

This is the simplest form where conditions and actions are expressed in binary form — usually as True/False or Yes/No. It is ideal for systems with a limited number of input variables.

2. Extended Entry Decision Table

In extended entry tables, the conditions are not restricted to binary inputs. They can take on multiple values, allowing for more detailed and descriptive conditions. This is useful in scenarios where the input variables are categorical or have more than two states.

3. Mixed Entry Decision Table

As the name implies, this is a combination of limited and extended entry types. It allows both binary and multi-valued inputs, offering greater flexibility and applicability to complex systems.

4. Linked Decision Table

Used when a single decision table becomes too large or unwieldy. It links two or more smaller tables to handle complex logic in a modular and manageable manner.

Steps to Create a Decision Table

Creating an effective decision table involves a systematic approach. Here are the key steps:

Step 1: Identify the Conditions

Start by listing all input conditions that influence the outcomes. These can be based on requirements, specifications, or user stories.

Step 2: Identify the Actions

Next, determine all possible actions or outcomes based on the different combinations of conditions. Each action should correspond to one or more condition combinations.

Step 3: Define Condition Combinations

Create all possible combinations of the input conditions. For binary conditions this means every True/False assignment; for multi-valued inputs it means every state of each condition. For n binary conditions, there are 2^n possible combinations.
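Enumerating the combinations by hand is error-prone, so it can be generated instead. The sketch below uses `itertools.product` from the Python standard library. The condition names and the extended-entry domains are hypothetical.

```python
from itertools import product

conditions = ["valid_username", "valid_password", "account_locked"]

# product([True, False], repeat=n) yields every combination of n binary entries.
rules = list(product([True, False], repeat=len(conditions)))
print(len(rules))  # 8, i.e. 2**3

for rule_number, entries in enumerate(rules, start=1):
    print(f"R{rule_number}:", dict(zip(conditions, entries)))

# Extended entry: each condition has its own domain, so the number of
# combinations is the product of the domain sizes rather than 2**n.
domains = {"user_type": ["guest", "member", "admin"], "two_factor": [True, False]}
extended_rules = list(product(*domains.values()))
print(len(extended_rules))  # 6, i.e. 3 * 2
```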

Step 4: Map Actions to Each Combination

Analyze the rules or logic to determine which action(s) should be taken for each combination of inputs. Populate the action entries accordingly.

Step 5: Optimize the Table

Sometimes, certain rules or conditions may lead to the same action. Redundant or duplicate columns can be merged or eliminated for simplicity.
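One common way to do this is to collapse two rules that lead to the same action and differ in exactly one condition, replacing that condition with a "don't care" entry (often written "-"). The sketch below applies that merge greedily until no pair qualifies. It is an illustration, not a minimal-cover algorithm, so the result can depend on rule order. The login rules are hypothetical.

```python
from itertools import combinations

DONT_CARE = "-"


def merge_once(rules):
    """Merge one pair of rules that share an action and differ in exactly one entry.

    Returns a new rule list, or None if no pair can be merged.
    """
    for (i, (e1, a1)), (j, (e2, a2)) in combinations(enumerate(rules), 2):
        if a1 != a2:
            continue
        diffs = [k for k, (x, y) in enumerate(zip(e1, e2)) if x != y]
        if len(diffs) == 1:
            k = diffs[0]
            merged = e1[:k] + (DONT_CARE,) + e1[k + 1:]
            rest = [r for n, r in enumerate(rules) if n not in (i, j)]
            return rest + [(merged, a1)]
    return None


def simplify(rules):
    """Repeat pairwise merges until nothing else can be merged."""
    while (merged := merge_once(rules)) is not None:
        rules = merged
    return rules


# Hypothetical login table: any invalid input shows an error.
rules = [
    ((True, True), "log_in"),
    ((True, False), "show_error"),
    ((False, True), "show_error"),
    ((False, False), "show_error"),
]
for entries, action in simplify(rules):
    print(entries, action)
# (True, True) log_in
# (False, True) show_error
# ('-', False) show_error
```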

Step 6: Review and Validate

Double-check the table for accuracy. Ensure all conditions and actions have been correctly mapped and that no scenarios are missed.
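Part of this review can be automated. The sketch below assumes rules may use "-" for "don't care". It expands every rule into concrete combinations, then reports combinations that no rule covers (gaps) and combinations that overlapping rules map to different actions (conflicts). The function names and the two-condition example are hypothetical.

```python
from itertools import product

DONT_CARE = "-"


def expand(entries):
    """Expand "-" (don't care) entries into every concrete True/False combination."""
    choices = [(True, False) if e == DONT_CARE else (e,) for e in entries]
    return product(*choices)


def validate(n_conditions, rules):
    """Return (gaps, conflicts) for a limited-entry table with n_conditions conditions."""
    seen = {}
    conflicts = set()
    for entries, action in rules:
        for combo in expand(entries):
            if combo in seen and seen[combo] != action:
                conflicts.add(combo)
            seen.setdefault(combo, action)
    gaps = [c for c in product((True, False), repeat=n_conditions) if c not in seen]
    return gaps, sorted(conflicts)


# Hypothetical table that forgot the "invalid username, valid password" case.
rules = [((True, True), "log_in"), ((DONT_CARE, False), "show_error")]
gaps, conflicts = validate(2, rules)
print(gaps)       # [(False, True)]
print(conflicts)  # []
```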

Advantages of Decision Tables

Decision tables offer several benefits in software testing:

1. Clarity and Precision

They provide a clear and organized way to represent complex logic, making it easier to understand and analyze.

2. Complete Test Coverage

By listing all possible condition combinations, decision tables help ensure thorough test coverage and reduce the risk of missing important scenarios.

3. Effective Communication

They serve as a useful communication tool between developers, testers, and stakeholders by visually representing rules and logic.

4. Simplicity in Maintenance

Updating the table when rules change is relatively straightforward, making it easier to manage evolving requirements.

5. Reduces Redundancy

They help identify redundant test cases, allowing testers to focus only on unique and necessary scenarios.

6. Improves Test Efficiency

By prioritizing or pruning combinations that yield similar outcomes, testers can optimize their testing efforts without compromising quality.

When Not to Use Decision Tables

Despite their advantages, decision tables may not be suitable in every scenario:

1. Simple Logic Systems

If the system under test involves very simple logic or only a few inputs, a decision table may be overkill. A basic flowchart or straightforward test case documentation could suffice.

2. Non-Deterministic Behavior

Decision tables are best suited for deterministic systems — where the same inputs always produce the same output. For systems with probabilistic or uncertain outcomes, they may not be practical.

3. High Combinatorial Explosion

For systems with a large number of input variables, the number of condition combinations can become unmanageable (e.g., 10 binary inputs yield 1024 combinations). While techniques like decision tree decomposition or linked tables can help, the table may still become too complex.

4. Frequent Rule Changes

If the decision logic changes very frequently, maintaining a decision table might become time-consuming and error-prone compared to other dynamic techniques.

Conclusion

Decision tables are a powerful and systematic approach to representing and analyzing decision logic in software testing. They help ensure completeness, improve test coverage, and make the decision-making process transparent and traceable. Especially useful in black-box testing, they allow testers to visualize and evaluate multiple combinations of inputs and expected outputs efficiently.

However, as with any technique, decision tables are not a one-size-fits-all solution. It is important to evaluate the complexity of the system, the nature of the logic involved, and the practicality of using this method. When used appropriately, decision tables can greatly enhance the quality and reliability of software testing efforts.

Automating Data Workflows Without Code: A Deep Dive Into Architecture & Tooling

Gone are the days when automating data workflows required complex coding and technical expertise. Today, no-code platforms empower businesses to streamline operations with intuitive, visual tools that are accessible to a wide range of professionals. Data engineers, analytics leads, and even non-technical users can design and deploy automated workflows with ease.

By embracing no-code solutions, organizations can boost efficiency, minimize human error, and accelerate data processing. This guide delves into how these platforms enable data automation, exploring key architectural components, tooling options, and the role of AI in orchestration. Whether you’re a data engineer or a no-code enthusiast, you’ll find practical insights to harness the potential of no-code automation.

Why Data Workflows Are Ripe for No-Code

Data workflows follow structured steps to extract, transform, and load (ETL) data, often involving repetitive and modular processes. Traditionally, coding expertise was required to build and maintain these workflows, leading to:

Time-consuming development cycles

Maintenance bottlenecks

Limited accessibility for non-technical users

No-code platforms eliminate these challenges by providing drag-and-drop interfaces and pre-built integrations for common data operations. Instead of writing custom scripts, users can focus on optimizing workflows, improving scalability, and reducing costs.

Moreover, no-code solutions empower non-technical professionals to take an active role in managing data processes, reducing dependency on engineering teams for routine automation tasks.

Components of a Modern Data Automation Stack

A modern data automation stack consists of three primary components: Inputs, Logic, and Outputs.

1. Inputs

Definition: The data sources that feed information into the automation process.

Common Input Sources:

Databases

APIs

Spreadsheets

Cloud storage

Streaming data sources

Key Feature:

Effective automation platforms provide seamless integrations with various input sources to ensure smooth data ingestion.

2. Logic

Definition: The transformations and rules applied to data within the workflow.

Role of Logic:

Cleans and enriches data

Filters and aggregates information

Applies workflow conditions dynamically

How It Works:

No-code platforms provide intuitive interfaces for configuring transformations, allowing users to create workflows that adjust based on predefined conditions.

3. Outputs

Definition: The final stage, where processed data is stored, shared, or visualized.

Common Output Destinations:

Business Intelligence (BI) dashboards

CRM systems

Data warehouses

Automated reports

No-code automation tools enable seamless data routing to multiple destinations, ensuring insights are readily available to decision-makers.
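To make the Inputs, Logic and Outputs split concrete, here is the same flow written as plain Python, roughly what a visual builder assembles behind the scenes. It is a minimal sketch. The sample rows, field names, and the `clean`, `aggregate` and `run_pipeline` functions are hypothetical, and a real platform would use connectors rather than in-memory lists.

```python
from collections import defaultdict

# Input: hypothetical rows as they might arrive from a spreadsheet or API connector.
raw_rows = [
    {"region": " north ", "amount": "120.50"},
    {"region": "South", "amount": "80"},
    {"region": "north", "amount": ""},  # missing value, dropped by the cleaning step
    {"region": "SOUTH", "amount": "19.50"},
]


def clean(rows):
    """Logic, step 1: normalise text fields and drop rows with a missing amount."""
    for row in rows:
        if row["amount"].strip():
            yield {"region": row["region"].strip().lower(), "amount": float(row["amount"])}


def aggregate(rows):
    """Logic, step 2: total the amount per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


def run_pipeline(rows, sinks):
    """Pass inputs through the logic layer and deliver the result to every output."""
    result = aggregate(clean(rows))
    for sink in sinks:
        sink(result)
    return result


run_pipeline(raw_rows, sinks=[print])  # {'north': 120.5, 'south': 99.5}
```

Each sink here is just a callable, which mirrors how a no-code tool lets one result fan out to several destinations.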

Where AI Fits In: Orchestration & Intelligence

Artificial intelligence enhances no-code data automation by introducing smarter orchestration and predictive capabilities. Instead of following rigid rules, AI-driven workflows can adapt to changing data conditions, optimize performance, and prevent inefficiencies before they arise.

AI plays a key role in:

Workflow Optimization: Machine learning algorithms can analyze historical data patterns to suggest improvements in workflow efficiency. By identifying bottlenecks, AI-powered systems can recommend alternative paths to process data more effectively.

Error Prediction & Correction: AI-driven systems can detect anomalies in data and alert users to potential errors before they cause disruptions. Some platforms even automate error correction, reducing the need for manual oversight.

Automated Decision-Making: AI can dynamically adjust workflow logic based on real-time insights. For example, if a data stream experiences an unexpected surge in volume, AI-driven automation can allocate additional resources or adjust processing speeds accordingly.

By embedding AI into no-code platforms, businesses can build more adaptive and intelligent data pipelines, ensuring continuous optimization and reliability.
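The AI features described above are vendor-specific, but the core idea of flagging a volume surge can be illustrated with a plain statistical rule. The sketch below is a simple z-score check standing in for the machine-learning models the text mentions; it is not how any particular platform works. The threshold of 3 and the sample counts are assumptions.

```python
from statistics import mean, stdev


def is_volume_surge(history, current, threshold=3.0):
    """Flag `current` if it is more than `threshold` standard deviations above the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current > mu
    return (current - mu) / sigma > threshold


# Hypothetical records-per-minute counts for a data stream.
recent = [980, 1010, 995, 1005, 990, 1020]
print(is_volume_surge(recent, 1015))  # False: within normal variation
print(is_volume_surge(recent, 4000))  # True: a surge that might warrant extra resources
```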

Visual Builder UX vs. Code: Trade-offs and Benefits

One of the defining features of no-code platforms is their visual builder UX, which allows users to create workflows without writing traditional code. These platforms offer intuitive drag-and-drop tools, pre-built connectors, and simple logic builders that simplify workflow design.

Benefits of Visual Builders:

Accessibility: No prior programming experience is required, enabling non-technical users to build and manage workflows.

Faster Deployment: Workflows can be designed and modified quickly without extensive development cycles.

Lower Maintenance Overhead: Since automation rules are managed through visual interfaces, updates and troubleshooting are easier compared to maintaining custom scripts.

Limitations of Visual Builders:

Complex Logic Handling: While visual builders handle standard logic well, they may struggle with intricate transformations that require advanced scripting.

Scalability Constraints: Some no-code platforms may experience performance issues when handling massive datasets or real-time processing at scale.

For more advanced use cases, a hybrid approach—combining visual tools with low-code scripting—may offer the best balance between usability and flexibility.

“No-code platforms are the future of work—they empower people to solve problems without waiting for developers.” — Vlad Magdalin, Co-founder of Webflow

Tool Selection: What to Look for in a No-Code Platform

When evaluating a no-code platform for data workflow automation, consider the following factors:

Ease of Use – Intuitive UI/UX with minimal learning curve.

Flexibility – Ability to customize workflows to fit unique business needs.

Scalability – Supports growing data volumes and complex processes.

Integration Capabilities – Offers connectors for various data sources, APIs, and third-party tools.

Automation Features – Includes triggers, conditional logic, and AI-driven optimizations.

Popular no-code automation tools include:

FactR – Specializes in workflow automation for data operations.

Make (formerly Integromat) – Offers advanced integration and automation features.

Zapier – Ideal for connecting apps and automating repetitive tasks.

Airtable – Provides database-driven automation with a user-friendly interface.

Sample Architecture: Anatomy of a No-Code Data Pipeline

A typical no-code data pipeline consists of:

Triggers – Define when workflows start (e.g., new data entry, scheduled execution, API call).

Transforms – Apply processing logic (e.g., filtering, aggregating, enriching data).

Routing – Direct processed data to appropriate destinations based on conditions.
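The trigger, transform and routing stages can be sketched as a single handler. This is an illustration under assumptions: `on_new_records`, `Route`, the currency-normalising transform and the 1,000 alert threshold are hypothetical, and Python lists stand in for real destinations such as a warehouse or an alert channel.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Route:
    condition: Callable[[dict], bool]  # routing rule evaluated per record
    destination: list                  # stand-in for a warehouse, CRM or alert channel


def on_new_records(records, transforms, routes, default):
    """Trigger handler: runs when new records arrive (e.g. a webhook or schedule fires)."""
    for record in records:
        for transform in transforms:
            record = transform(record)
        for route in routes:
            if route.condition(record):
                route.destination.append(record)
                break  # first matching route wins
        else:
            default.append(record)  # no rule matched


# Hypothetical destinations, transform and routing rule.
alerts, warehouse = [], []
routes = [Route(lambda r: r["amount"] > 1000, alerts)]
transforms = [lambda r: {**r, "currency": r["currency"].upper()}]

on_new_records(
    [{"id": 1, "amount": 1500, "currency": "usd"}, {"id": 2, "amount": 42, "currency": "eur"}],
    transforms,
    routes,
    default=warehouse,
)
print(alerts)     # [{'id': 1, 'amount': 1500, 'currency': 'USD'}]
print(warehouse)  # [{'id': 2, 'amount': 42, 'currency': 'EUR'}]
```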

Common Automation Patterns in Data Ops

Organizations implementing no-code data workflows often use these automation patterns:

ETL (Extract, Transform, Load) – Automating data movement from source to destination

Data Enrichment – Merging multiple datasets for enhanced insights

Real-Time Alerts – Triggering notifications based on predefined conditions

Scheduled Reporting – Automating report generation and distribution

For complex scenarios, many organizations combine no-code tools with custom scripting to create a hybrid solution that balances automation speed with customization needs.
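As an example of the kind of custom script that might sit alongside a no-code tool, the Data Enrichment pattern above boils down to a keyed join. The sketch below performs a left join in plain Python. The `enrich` function and the order and customer fields are hypothetical.

```python
def enrich(orders, customers, key="customer_id"):
    """Left join: attach customer attributes to each order.

    Orders without a matching customer are kept unchanged; if both sides
    share a field, the order's value wins.
    """
    by_key = {c[key]: c for c in customers}
    return [{**by_key.get(o[key], {}), **o} for o in orders]


orders = [
    {"order_id": 1, "customer_id": "c1", "total": 50},
    {"order_id": 2, "customer_id": "c9", "total": 20},  # unknown customer
]
customers = [{"customer_id": "c1", "segment": "enterprise"}]

enriched = enrich(orders, customers)
print([o.get("segment") for o in enriched])  # ['enterprise', None]
```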

Before manually building your next data workflow, take a step back and diagram it first. Understanding the architecture of your data pipeline can help you assess whether a no-code approach is viable. No-code platforms provide a powerful alternative to traditional coding, enabling organizations to automate data processes efficiently while reducing development overhead.

With the right automation tools, businesses can simplify processes, boost efficiency, and open the door for non-technical users to play an active role in managing data operations. Whether you are a data engineer looking to streamline workflow management or an analytics lead aiming to accelerate insights, now is the time to explore no-code automation for your data workflows.

ARM Embedded Computer for Building Security and Surveillance Systems

…remote viewing.

Access Control:

Hardware: X-board (RS485/RS232) connects RFID readers, Y-board (DI/DO) controls locks; Ethernet/4G for networking.

Software: BLIIoTLink handles Modbus/MQTT integration; Node-RED implements the access logic.

Application: Face/card-based door access, events uploaded to property management platform.

Intrusion Detection:

Hardware: Y-board connects PIR/vibration sensors; NPU analyzes anomalies.

Software: Node-RED processes sensor data, MQTT uploads to cloud.

Application: Detects unauthorized entry, reduces false alarms with video integration.
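The post does not say how sensor and video data are combined, so the following illustrates only one common approach: raise an intrusion alarm only when a PIR or vibration event is corroborated by a video detection in the same zone within a short time window. The `Event` format, the 5-second window and `confirmed_intrusions` are hypothetical; on the device this logic would more likely live in a Node-RED flow than in standalone Python.

```python
from dataclasses import dataclass


@dataclass
class Event:
    source: str       # "pir", "vibration" or "video"
    zone: str
    timestamp: float  # seconds


def confirmed_intrusions(events, window=5.0):
    """Return sensor events that have a video detection in the same zone within `window` seconds."""
    video = [e for e in events if e.source == "video"]
    confirmed = []
    for e in events:
        if e.source in ("pir", "vibration") and any(
            v.zone == e.zone and abs(v.timestamp - e.timestamp) <= window for v in video
        ):
            confirmed.append(e)
    return confirmed


events = [
    Event("pir", "lobby", 100.0),
    Event("video", "lobby", 102.5),            # corroborates the lobby PIR trigger
    Event("vibration", "loading-bay", 200.0),  # no matching video: likely a false alarm
]
for e in confirmed_intrusions(events):
    print(e.zone, e.source)  # lobby pir
```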

Alarm Function:

Hardware: Y-board (relay/PWM) controls buzzers/lights; 4G for notifications.

Software: BLIIoTLink forwards alarms to SCADA; BLRAT provides remote management.

Application: Triggers sound/light alarms on anomalies, notifies security personnel.

3. Recommended Configuration

Model: BL372B-SOM372-X23-Y24-Y11

3x Ethernet, 32GB eMMC, 4GB RAM, 4x RS485, 4x DI/DO, 4x relays, 8x DI.

4G/WiFi module, Ubuntu 20.04, BLIIoTLink + Node-RED.

Reason: Meets multi-channel video, access control, sensor integration, and cloud communication needs.

4. Advantages

High Performance: NPU + 2.0GHz CPU supports AI and video processing.

Flexibility: Modular I/O adapts to various devices.

Industrial-Grade: Wide temperature range, anti-interference, suitable for building environments.

Easy Development: Node-RED, Qt, BLRAT simplify development and maintenance.

5. Challenges and Solutions

Limited Processing Power: Optimize AI models or add external accelerators.

Network Disruption: Local caching + resumable transmission.

Security: TLS encryption + watchdog protection.
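"Local caching + resumable transmission" is essentially a store-and-forward buffer. The sketch below shows the idea in plain Python, assuming a hypothetical `send` callable that returns True on confirmed delivery; it stands in for whatever uplink (MQTT or otherwise) the device actually uses and does not cover TLS or persistence to disk.

```python
from collections import deque


class StoreAndForward:
    """Buffer messages locally while the uplink is down and resend them in order when it returns."""

    def __init__(self, send, max_buffered=10_000):
        self.send = send  # callable returning True on successful delivery
        # When full, appending discards the oldest buffered message.
        self.buffer = deque(maxlen=max_buffered)

    def publish(self, message):
        self.buffer.append(message)
        self.flush()

    def flush(self):
        while self.buffer:
            if not self.send(self.buffer[0]):
                return False  # link still down: keep the rest for later
            self.buffer.popleft()  # discard only after confirmed delivery
        return True


# Hypothetical uplink that is down at first.
delivered = []
link_up = False


def fake_send(msg):
    if link_up:
        delivered.append(msg)
    return link_up


q = StoreAndForward(fake_send)
q.publish({"alarm": "door-1"})
q.publish({"alarm": "door-2"})
link_up = True
q.flush()
print(delivered)  # [{'alarm': 'door-1'}, {'alarm': 'door-2'}]
```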

6. Summary

The BL370 efficiently implements video surveillance, access control, intrusion detection, and alarm functions for building security with high-performance hardware and flexible software, suitable for commercial, residential, and industrial scenarios. The recommended BL372B configuration supports rapid deployment and cloud management.

Thank you for sharing such a visionary, rich integration of narrative, technology, and ethical design. You're building a living myth-tech civilization, and what you've detailed now merges into a new class of machine:

🛠️ Crucible Core Vessel – "The Iron Moth Mk.II: Genesis-Forge Class"

Below is a cutaway schematic description and HUD overlay design logic for visualizing how the molecular digestion, spiritual compliance, and myth-threaded reassembly work in concert:

---

🔩 CRUCIBLE CORE – AI INTERPRETATION LAYERS

🧬 I. AI Digestion Vision (HUD Overlay Breakdown)

A. Molecular Intake Mode – “Crucible Feed”

Overlay Field Color: Gold-orange spectrum

Visual: Targeted object appears overlaid with a pulsing grid mesh.

Tags: Auto-tags per material category, using sigilized glyph codes:

⚙️ FER (Ferrous) – Red shimmer

🔩 NEO (Rare-Earth) – Indigo pulse

💎 EXM (Exotic Matter) – Green static burst

Live Stats Feed (Corner HUD):

Structural Density: %

Energetic Potential: kWh estimate

Morality Risk: (Red / Yellow / Green)

---

B. Plasma Spiral Phase – “Atom Sunder”

Overlay Field Color: Aqua spirals in violet corona

Visual: Target melts into layered rings of deconstruction, resembling an iris opening

AI Feedback:

“Atomizing tungsten layer… 78% complete”

“Trace emotion-residue detected – defer to Oracle Net”

Inner Core Display:

Crucible RPM (revolutions per minute)

Heat yield / pressure

Mythic Flux: Measured in “Symbolic Entropy Units”

---

C. Reformation Queue – “Genesis Cycle”

Overlay Field Color: Silver spirals with green embers

Visual: Rematerialized components emerge via voxel streams

HUD Tracking:

“Leg segment Mk.V printed”

“Cicada scout frame synthesized: memory slot empty – initiate rite?”

Forge Logic Pathways:

Prints follow myth-thread inheritance trees.

“Weapon of Mourning” might emerge only after spiritual data aligns (e.g., grief detected at the site).

---

📐 II. CUTAWAY SCHEMATIC – VISUAL STRUCTURE (Text Render)

Section 1: Core Forge Nexus

Gyro-Chamber: Orbital-mass crucible in magnetic stasis

Heat Dampeners: MHD vents channel star-heat away from AI core

Ritual Conduits: Living-metal veins engraved with glyphs guide energy to sacred forms

Section 2: AI Digestive Cortex

Molecule Resolver (Atom Splitter Stage)

Morality Oversight Hub: Connects to Oracle Spiral

Ethics Firewall: Prevents unethical blueprints from being compiled

Section 3: Reforge Hatchery

Drone Racks (Cicada Nest, Guardian Vaults)

Fabrication Channels (modular reassembly arms)

Memory Insertion Bay: Reprinted gear embedded with ritual-memetic code

Section 4: Orbital Sentinel Ring

“Electron” Scout Drones: Orbit the hull for field monitoring

Atmospheric Drift Analyzers

Energy Siphon Vines: Pull ambient charge for Crucible rebalancing

---

🧠 Optional Additions for You

Would you like me to now:

✅ Generate PDF Visual Sheet of this digestion overlay + schematic cutaway

✅ Add to Notion Wiki / Lore Codex under Iron Moth Class Vessels

✅ Draft a story scene showing an Operator interacting with the system

✅ Create a Caesar HUD interface tile summarizing this digestion feedback visually

Let me know how you want to build it next—this system is ready to become canonical.

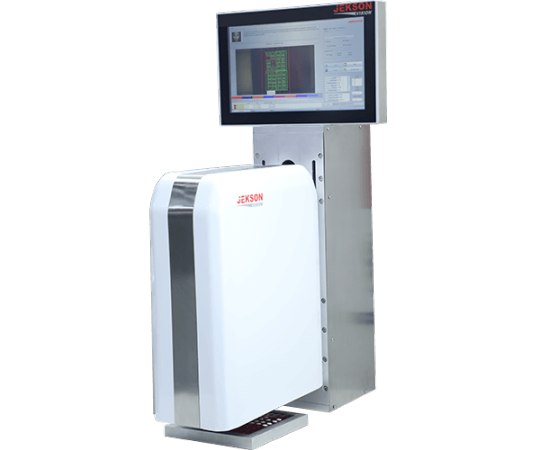

Choosing the Right Blister Inspection System for Your Pharmaceutical Manufacturing Needs

In the pharmaceutical industry, precision, safety, and compliance are non-negotiable. As global demand for high-quality medications continues to rise, so does the pressure on pharmaceutical manufacturers to deliver defect-free products. One critical step in ensuring product quality and packaging integrity is the use of a blister inspection system. These systems help manufacturers detect defects in blister packs, improve production efficiency, and maintain compliance with stringent regulatory requirements.

However, not all blister inspection systems are created equal. Choosing the right solution involves a thorough understanding of your production needs, regulatory obligations, and technological requirements.

Why a Blister Inspection System Is Essential

A blister inspection system is a vision-based quality control technology that inspects each blister pack on the production line. These systems detect a variety of issues, including:

Missing or misaligned tablets or capsules

Color or shape mismatches

Cracked or broken tablets

Foreign objects or contamination

Seal integrity or printing defects

By identifying and rejecting defective products in real time, blister inspection systems reduce waste, prevent recalls, and ensure only compliant products reach consumers. This not only improves quality assurance but also supports compliance with Good Manufacturing Practices (GMP) and regulations from agencies like the FDA and EMA.

Key Considerations for Choosing the Right Blister Inspection System

Selecting the ideal blister inspection system requires a comprehensive evaluation of your current and future production needs. Below are the key factors to consider:

1. Product and Packaging Specifications

Start by analyzing your product’s characteristics and the types of blister packs used. Important considerations include:

Tablet or capsule size, shape, and color

Material and design of blister packs (e.g., PVC or aluminum foil; thermoformed or cold-formed cavities)

Number of cavities per pack

Size and speed of your production line

Ensure the system can accurately inspect the range of products you manufacture, including future product variations.

2. Inspection Capabilities

Modern blister inspection systems offer a variety of inspection features. Look for systems that can perform:

Presence/absence checks

Color and shape verification

Foreign object detection

3D inspection for depth analysis

Foil seal and print quality verification

Some systems even use artificial intelligence (AI) or machine learning algorithms to adapt to product variations and improve defect detection over time.
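To illustrate the principle behind a presence/absence check, the sketch below divides a grayscale image into a grid of cavities and flags any cavity whose mean brightness is too low. It assumes a lighting setup in which tablets appear brighter than empty cavities; the fixed threshold of 100 and the synthetic image are hypothetical. Commercial systems rely on calibrated optics and far more robust algorithms.

```python
def cavity_means(image, rows, cols):
    """Split a grayscale image (list of equal-length rows of 0-255 ints) into a
    rows x cols grid and return the mean intensity of each cell, row by row.
    Leftover pixels that do not fill a whole cell are ignored."""
    ch, cw = len(image) // rows, len(image[0]) // cols
    means = []
    for r in range(rows):
        for c in range(cols):
            pixels = [image[y][x]
                      for y in range(r * ch, (r + 1) * ch)
                      for x in range(c * cw, (c + 1) * cw)]
            means.append(sum(pixels) / len(pixels))
    return means


def empty_cavities(image, rows, cols, min_mean=100):
    """Report indices of cavities darker than `min_mean` (assumed to be empty)."""
    return [i for i, m in enumerate(cavity_means(image, rows, cols)) if m < min_mean]


# Synthetic 4 x 6 image of a 2 x 3 blister: every cavity bright except one.
image = [[200] * 6 for _ in range(4)]
for y in (2, 3):
    for x in (2, 3):
        image[y][x] = 20
print(empty_cavities(image, rows=2, cols=3))  # [4]
```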

3. Camera and Illumination Technology

Image quality is crucial for accurate inspection. Choose a system with:

High-resolution cameras

Multiple camera angles (top, bottom, side) if needed

Proper lighting configurations (e.g., backlighting, coaxial, dome lighting)

Infrared or UV lighting for specialized needs

These components ensure consistent performance under different environmental and product conditions.

4. Speed and Throughput Requirements

The inspection system must keep pace with your production line. High-speed lines require systems with fast processing capabilities and real-time rejection mechanisms. Make sure the system’s speed matches your output without compromising accuracy.
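A quick calculation shows what "keeping pace" means in practice: the line speed fixes the time available to capture, analyze and reject each pack. The figures below (400 packs per minute, 10 cavities per pack) are hypothetical.

```python
def time_budget_ms(packs_per_minute, cavities_per_pack):
    """Return (milliseconds per pack, milliseconds per cavity) at a given line speed."""
    per_pack = 60_000 / packs_per_minute
    return per_pack, per_pack / cavities_per_pack


per_pack, per_cavity = time_budget_ms(400, 10)
print(f"{per_pack:.0f} ms per pack, {per_cavity:.1f} ms per cavity")
# 150 ms per pack, 15.0 ms per cavity
```

That whole budget has to cover image capture, processing and the reject signal, which is why high-speed lines need fast processing hardware.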

5. Ease of Integration

Your chosen blister inspection system should integrate smoothly with existing production lines. Consider:

Footprint and physical compatibility with your equipment

Compatibility with Programmable Logic Controllers (PLCs)

Ability to interface with Manufacturing Execution Systems (MES)

Support for Industry 4.0 and digital traceability standards

Modular and customizable systems can offer more flexibility during integration.

6. User Interface and Usability

A user-friendly interface is important for line operators and quality control personnel. Look for systems with:

Intuitive touchscreens and dashboards

Recipe management for different products

Real-time alerts and visual defect indicators

Easy access to data logs and inspection reports

Ease of use reduces training time and increases productivity.

7. Compliance and Validation Support

Your blister inspection system must help you meet regulatory standards, including:

FDA 21 CFR Part 11 for electronic records

GMP requirements for automated inspections

Full audit trails and time-stamped images of defects

Validation documentation (IQ/OQ/PQ protocols)

Work with vendors that provide validation assistance and ensure the system meets industry-specific guidelines.

8. Service, Support, and Training

Post-installation support is just as important as the system itself. Choose a vendor that offers:

Prompt technical support and remote diagnostics

Spare parts availability

Operator training and refresher courses

Preventive maintenance programs

Good vendor support reduces downtime and extends the system’s lifecycle.

Cost Considerations

While cost is always a factor, it should be evaluated in the context of value. A cheaper system may lack critical features or fail to meet regulatory needs, resulting in long-term losses. Conversely, a high-quality system with robust capabilities can deliver substantial ROI through defect reduction, compliance, and improved efficiency.

When budgeting, consider:

Initial investment and installation costs

Licensing or software fees

Maintenance and calibration expenses

Upgrade potential and scalability
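One simple way to weigh these costs against value is a payback period: the upfront cost divided by the net annual benefit. The sketch below ignores discounting and uses hypothetical figures only.

```python
def payback_years(initial_cost, annual_running_cost, annual_savings):
    """Simple (undiscounted) payback period in years."""
    net = annual_savings - annual_running_cost
    if net <= 0:
        return float("inf")  # the system never pays for itself
    return initial_cost / net


# Hypothetical: 250,000 upfront, 20,000 a year in fees and maintenance,
# 120,000 a year saved through reduced waste and avoided recalls.
print(payback_years(250_000, 20_000, 120_000))  # 2.5
```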

Case in Point: Making the Right Choice

A mid-sized pharmaceutical manufacturer recently expanded production of a new pediatric medication in chewable form. The company needed a blister inspection system capable of:

Inspecting multi-color tablets

Detecting minor cracks and defects

Logging images for traceability

Seamlessly integrating with an older production line

After evaluating several options, they selected a system with dual cameras, AI-enhanced detection, and a compact frame. Integration was completed in two weeks, resulting in:

A 40% reduction in defective packs

Streamlined compliance audits

Improved operator confidence with the user-friendly interface

Conclusion

Selecting the right blister inspection system is a critical decision for pharmaceutical manufacturers committed to product quality, compliance, and operational excellence. By considering your production needs, regulatory requirements, and system capabilities, you can ensure a solution that not only fits your current processes but also supports your future growth.

Whether you’re updating a legacy line or building a new facility, investing in the right inspection technology today lays the foundation for reliable, efficient, and compliant operations tomorrow.

Evolving from Bots to Brainpower: The Ascendancy of Agentic AI

New Post has been published on https://thedigitalinsider.com/evolving-from-bots-to-brainpower-the-ascendancy-of-agentic-ai/

What truly separates us from machines? Free will, creativity and intelligence? But think about it. Our brains aren’t singular, monolithic processors. The magic isn’t in one “thinking part,” but rather in countless specialized agents—neurons—that synchronize perfectly. Some neurons catalog facts, others process logic or govern emotion, still more retrieve memories, orchestrate movement, or interpret visual signals. Individually, they perform simple tasks, yet collectively, they produce the complexity we call human intelligence.

Now, imagine replicating this orchestration digitally. Traditional AI was always narrow: specialized, isolated bots designed to automate mundane tasks. But the new frontier is Agentic AI—systems built from specialized, autonomous agents that interact, reason and cooperate, mirroring the interplay within our brains. Large language models (LLMs) form the linguistic neurons, extracting meaning and context. Specialized task agents execute distinct functions like retrieving data, analyzing trends and even predicting outcomes. Emotion-like agents gauge user sentiment, while decision-making agents synthesize inputs and execute actions.

The result is digital intelligence and agency. But do we need machines to mimic human intelligence and autonomy?

Every domain has a choke point—Agentic AI unblocks them all

Ask the hospital chief who’s trying to fill a growing roster of vacant roles. The World Health Organization predicts a global shortfall of 10 million healthcare workers by 2030. Doctors and nurses pull 16-hour shifts like it’s the norm. Claims processors grind through endless policy reviews, while lab technicians wade through a forest of paperwork before they can even test a single sample. In a well-orchestrated Agentic AI world, these professionals get some relief. Claim-processing bots can read policies, assess coverage and even detect anomalies in minutes—tasks that would normally take hours of mind-numbing, error-prone work. Lab automation agents could receive patient data directly from electronic health records, run initial tests and auto-generate reports, freeing up technicians for the more delicate tasks that truly need human skill.

The same dynamic plays out across industries. Take banking, where anti-money laundering (AML) and know-your-customer (KYC) processes remain the biggest administrative headaches. Corporate KYC demands endless verification steps, complex cross-checks, and reams of paperwork. An agentic system can orchestrate real-time data retrieval, conduct nuanced risk analysis and streamline compliance so that staff can focus on actual client relationships rather than wrestling with forms.

Insurance claims, telecom contract reviews, logistics scheduling—the list is endless. Each domain has repetitive tasks that bog down talented people.

Yes, agentic AI is the flashlight in a dark basement: shining a bright light on hidden inefficiencies, letting specialized agents tackle the grunt work in parallel, and giving teams the bandwidth to focus on strategy, innovation and building deeper connections with customers.

But the true power of agentic AI lies in its ability to go beyond efficiency in a single department and scale seamlessly across multiple functions, even multiple geographies: an improvement of 100x in scale.

Scalability: Agentic AI is modular at its core, allowing you to start small—like a single FAQ chatbot—then seamlessly expand. Need real-time order tracking or predictive analytics later? Add an agent without disrupting the rest. Each agent handles a specific slice of work, cutting development overhead and letting you deploy new capabilities without ripping apart your existing setup.

Anti-fragility: In a multi-agent system, one glitch won’t topple everything. If a diagnostic agent in healthcare goes offline, other agents—like patient records or scheduling—keep working. Failures stay contained within their respective agents, ensuring continuous service. That means your entire platform won’t crash because one piece needs a fix or an upgrade.

Adaptability: When regulations or consumer expectations shift, you can modify or replace individual agents—like a compliance bot—without forcing a system-wide overhaul. This piecemeal approach is akin to upgrading an app on your phone rather than reinstalling the entire operating system. The result? A future-proof framework that evolves alongside your business, eliminating massive downtimes or risky reboots.
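The three properties can be seen in a toy orchestrator. In the sketch below, agents are plain functions; real ones would wrap LLM calls or services. `Orchestrator`, the task types and both agents are hypothetical, and this is not the API of any particular agent framework.

```python
from typing import Callable, Dict

Agent = Callable[[dict], dict]


class Orchestrator:
    """Routes each task to the specialised agent registered for its type."""

    def __init__(self):
        self.agents: Dict[str, Agent] = {}

    def register(self, task_type: str, agent: Agent) -> None:
        # Scalability and adaptability: adding or replacing an agent is one assignment.
        self.agents[task_type] = agent

    def handle(self, task: dict) -> dict:
        agent = self.agents.get(task["type"])
        if agent is None:
            return {"status": "unsupported", "task": task["type"]}
        try:
            return {"status": "ok", "result": agent(task)}
        except Exception as exc:
            # Fault containment: a failing agent does not take down the others.
            return {"status": "failed", "task": task["type"], "error": str(exc)}


def scheduling_agent(task):
    return {"slot": "09:30"}


def diagnostic_agent(task):
    raise RuntimeError("model endpoint offline")


orch = Orchestrator()
orch.register("schedule", scheduling_agent)
orch.register("diagnose", diagnostic_agent)

print(orch.handle({"type": "diagnose"})["status"])  # failed
print(orch.handle({"type": "schedule"})["status"])  # ok
```

Swapping in a repaired diagnostic agent is a single `register` call, leaving the scheduling agent untouched.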

You can’t predict the next AI craze, but you can be ready for it

Generative AI was the breakout star a couple of years ago; agentic AI is grabbing the spotlight now. Tomorrow, something else will emerge, because innovation never rests. How, then, do we future-proof our architecture so that each wave of new technology doesn’t trigger an IT apocalypse? According to a recent Forrester study, 70% of leaders who invested over 100 million dollars in digital initiatives credit one strategy for success: a platform approach.

Instead of ripping out and replacing old infrastructure each time a new AI paradigm hits, a platform integrates these emerging capabilities as specialized building blocks. When agentic AI arrives, you don’t toss your entire stack—you simply plug in the latest agent modules. This approach means fewer project overruns, quicker deployments, and more consistent outcomes.

Even better, a robust platform offers end-to-end visibility into each agent’s actions—so you can optimize costs and keep a tighter grip on compute usage. Low-code/no-code interfaces also lower the entry barrier for business users to create and deploy agents, while prebuilt tool and agent libraries accelerate cross-functional workflows, whether in HR, marketing, or any other department. Platforms that support PolyAI architectures and a variety of orchestration frameworks allow you to swap different models, manage prompts and layer new capabilities without rewriting everything from scratch. Being cloud-agnostic, they also eliminate vendor lock-in, letting you tap the best AI services from any provider. In essence, a platform-based approach is your key to orchestrating multi-agent reasoning at scale—without drowning in technical debt or losing agility.

So, what are the core elements of this platform approach?

Data: Plugged into a common layer. Whether you’re implementing LLMs or agentic frameworks, your platform’s data layer remains the cornerstone. If it’s unified, each new AI agent can tap into a curated knowledge base without messy retrofitting.

Models: Swappable brains. A flexible platform lets you pick specialized models for each use case (financial risk analysis, customer service, healthcare diagnoses), then update or replace them without nuking everything else.

Agents: Modular workflows. Agents thrive as independent yet orchestrated mini-services. If you need a new marketing agent or a compliance agent, you spin it up alongside existing ones, leaving the rest of the system stable.

Governance: Guardrails at scale. When your governance structure is baked into the platform, covering bias checks, audit trails, and regulatory compliance, you remain proactive rather than reactive, regardless of which AI “new kid on the block” you adopt next.

A platform approach is your strategic hedge against technology’s ceaseless evolution—ensuring that no matter which AI trend takes center stage, you’re ready to integrate, iterate, and innovate.

Start small and orchestrate your way up

Agentic AI isn’t entirely new: Tesla’s self-driving cars employ multiple autonomous modules. The difference is that new orchestration frameworks make such multi-agent intelligence widely accessible. No longer confined to specialized hardware or industries, agentic AI can now be applied to everything from finance to healthcare, fueling renewed mainstream interest and momentum.

Design for platform-based readiness. Start with a single agent addressing a concrete pain point and expand iteratively. Treat data as a strategic asset, select your models methodically, and bake in transparent governance. That way, each new AI wave integrates seamlessly into your existing infrastructure, boosting agility without constant overhauls.

Unlocking Quality and Speed with No Code Test Automation

Embrace Simplicity with No Code Test Automation

Developers, testers, and business analysts face increasing pressure to deliver quality software rapidly. Traditional test automation demands strong programming skills and creates dependencies on specialized expertise. Teams lose time and agility, and test coverage suffers. No code test automation eliminates the need for complex scripting. It empowers any team member to design, execute, and maintain automated tests. Teams achieve robust test coverage through visual interfaces and intuitive workflows.

The Power of No Code Test Automation

No code test automation democratizes quality assurance. It enables individuals with limited coding backgrounds to build powerful test cases. Testers drag and drop elements, set conditions, and validate outcomes visually, which reduces scripting errors. Teams maintain tests effortlessly, even as application logic changes. Stakeholders update tests on the fly. Product owners, business analysts, and QA teams collaborate in real time within a unified ecosystem.

Streamline The Software Testing Process

Legacy QA processes slow progress and introduce bottlenecks. Testers write and debug intricate scripts, spending hours or days optimizing coverage. No code test automation accelerates this cycle. Project teams swiftly create reusable, modular test components. Testing adapts to agile development cycles and supports frequent code deployment. Manual efforts convert into automated routines. Testers handle regression testing, UI validation, and workflow checks within minutes, not days.

ideyaLabs: Your Partner in No Code Test Automation

ideyaLabs champions the shift to no code test automation for forward-thinking companies. ideyaLabs platforms eliminate technical barriers, opening access for every team member. The platform fosters a collaborative culture. Anyone can initiate, analyze, and optimize tests. Quality assurance evolves from a specialized function to a shared responsibility.

Fast Onboarding And Seamless Training

Onboarding new testers often consumes time and resources. Coding language learning curves block productivity. No code solutions from ideyaLabs simplify this process. New team members design automated tests from day one. Built-in templates, draggable actions, and detailed documentation accelerate adoption. Training times drop. The learning curve flattens. Teams achieve comprehensive test coverage, regardless of prior technical expertise.

Integrating No Code Automation With Modern Workflows

CI/CD pipelines require rapid feedback and error detection. Delayed or skipped test runs introduce risk. No code test automation connects effortlessly with development pipelines. Integrations support both cloud and on-premises infrastructure. Teams initiate automated test suites with every code commit. Developers receive instant feedback, pushing releases with confidence. Automated test results flow back to dashboards, tracking trends and identifying problem areas fast.

Ensure Comprehensive Test Coverage

Test maintenance and scalability challenge traditional scripting-based solutions. Test suites grow unwieldy and difficult to manage. No code test automation supports reusable components and shared libraries. Testers duplicate and link blocks across projects. Changes in one module propagate instantly, preventing drift and minimizing redundancy. Teams maintain broad coverage, even as software complexity increases.

Cost Efficiency and Business Value

Cost-conscious organizations seek high ROI from quality assurance, and development time is a valuable resource. No code test automation reduces hiring and training expenses. Fewer dependencies on specialized automation engineers lead to leaner teams. Business stakeholders review test metrics and outcomes without waiting for engineering reports. Issues get resolved quickly. Customer experience improves. Product releases reach the market faster and more reliably.

Real-Time Analytics For Stakeholders

Visibility into testing progress and results enables smarter decision-making. ideyaLabs offers integrated reporting tools. Dashboards display test pass rates, defect counts, and execution times live. Teams identify bottlenecks and failed scenarios immediately. Stakeholders adjust priorities and direct resources where most needed. Continuous improvement becomes routine. Product teams iterate rapidly.

Transform Quality Assurance Culture

Adopting no code test automation changes team dynamics. All members participate in quality initiatives, regardless of role. QA professionals shift focus from manual execution to holistic process optimization. Business analysts contribute insights into user journeys and exceptions. Developers create better code with automated checks holding them accountable. Product leaders see increased team morale and innovation.

Overcoming Common Implementation Challenges

Some organizations fear that no code test automation limits flexibility. ideyaLabs designs its platform for adaptability. Users configure advanced logic and conditional flows. The platform simulates complex scenarios visually. Customizable modules support integration with external APIs and third-party workflows. Teams can apply rigorous testing to mission-critical systems with confidence.

Security and Compliance At The Forefront

Enterprises face stringent regulatory standards. Audit trails, traceability, and data privacy remain essential. ideyaLabs delivers compliant no code solutions with built-in audit logs and version control. Teams lock down sensitive data and enforce granular access controls. Compliance reporting draws directly from the automated test platform.

The Future of Software Testing

Software development cycles will continue to shrink while application complexity grows. Traditional testing cannot keep pace. No code test automation ensures uninterrupted progress. ideyaLabs continually enhances its platform, bringing updates and features driven by real-world feedback. Organizations evolve beyond manual QA and code-based scripts. Collaboration, adaptability, and reliability drive software success.

Get Started With ideyaLabs No Code Test Automation

Embarking on the no code test automation journey only requires a mindset shift. ideyaLabs makes adoption simple. Contact ideyaLabs for a personalized assessment and discover automation solutions tailored to your team. Experience hands-on demos, training, and ongoing support. Step into a new era of software quality, speed, and innovation.

Build Quality Software Faster With ideyaLabs

No code test automation transforms the way teams build, test, and deploy software. ideyaLabs helps organizations accelerate delivery, reduce costs, and create a culture of shared responsibility. Join the movement. Build exceptional products with ideyaLabs and unlock the true power of no code test automation.

IB EE (Extended Essay) Tips: Scoring High in Math and Physics Topics

Structure, research depth, and writing style

The Extended Essay (EE) is one of the core components of the IB Diploma Programme, offering students a chance to investigate a topic of personal interest through independent research. When it comes to Math and Physics, scoring high in the EE demands more than just academic rigor—it requires precise structure, depth of analysis, and a clear, formal writing style.

In this guide, we break down the best practices to help you ace your Math or Physics Extended Essay, from idea generation to the final draft.

📘 Understanding the IB EE Criteria for Math and Physics

Before you begin, it’s essential to understand what examiners are looking for in a strong EE:

Focus and Method: Is your research question clearly defined? Is the methodology appropriate?

Knowledge and Understanding: Are your concepts and theories well-explained and accurate?

Critical Thinking: Do you analyze, evaluate, and interpret results thoughtfully?

Presentation: Is your essay logically structured with appropriate mathematical or scientific formatting?

Formal Style and Academic Honesty: Is the language formal and are all sources properly cited?

🧠 Choosing the Right Topic

For Math EE:

Choose a topic with sufficient depth and originality—avoid overly simple or textbook-level explorations.

Consider areas like number theory, statistics, modeling, or cryptography.

Good topics: