#octree

Text

50% discount for Gimme DOTS Geometry on the Asset Store! 🔥

KD-Trees, Polygons with holes, Octrees and a lot more! Added different Quadtree and Octree variants recently. Check it out!

0 notes

Text

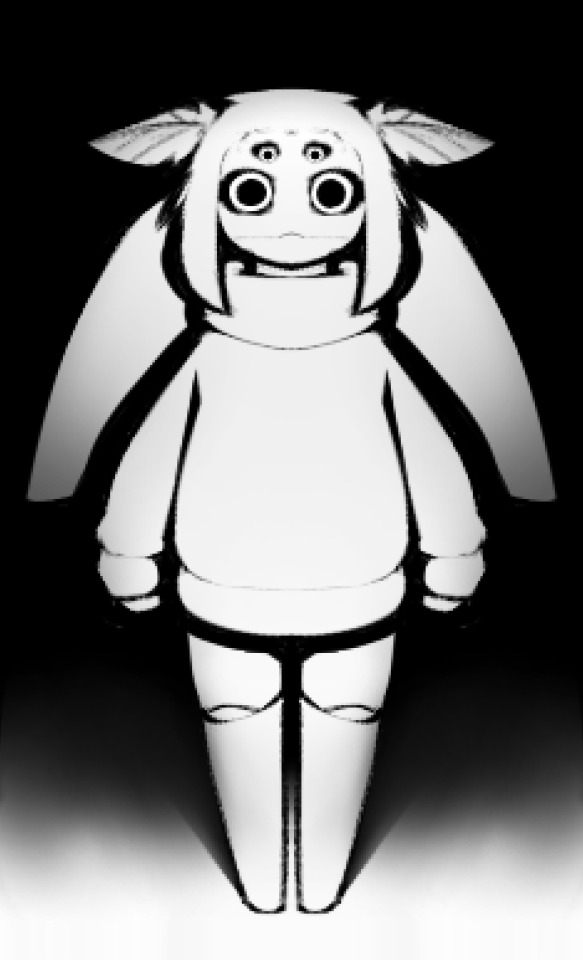

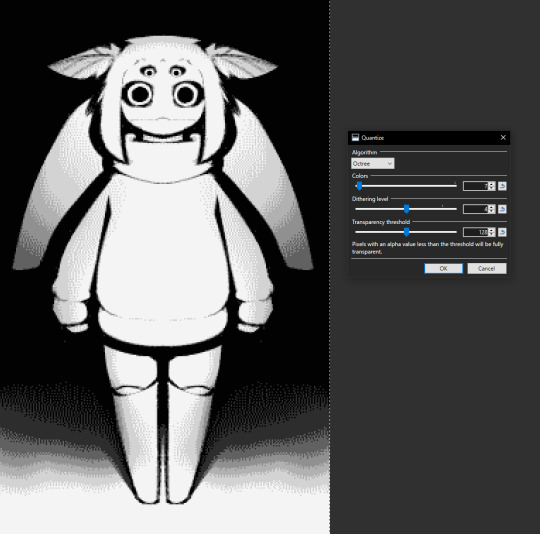

I saw some people commenting on the grainy/low color effect in my last post like I did something special

it's actually very easy

first u draw something in a shitty resolution, sharp colors, I used black and white

then u slap this idiot in paint dot net and run it through the quantize effect (effects > colors > quantize)

I used the Median Cut algorithm as it tends to add in colors where there aren't any, Octree will give me something closer to the original colors (greyscale in this case, I don't want that)

ta-da your drawing is crusty now
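For the curious, here's roughly what a median cut quantizer does under the hood. This is a bare-bones Python sketch, not paint.net's actual implementation; the function names are made up for illustration, and `pixels` is assumed to be a non-empty list of `(r, g, b)` tuples.

```python
def channel_spread(box):
    """Return (widest range, channel index) over the R, G, B channels of a box."""
    return max(
        (max(p[c] for p in box) - min(p[c] for p in box), c) for c in range(3)
    )


def median_cut(pixels, n_colors):
    """Reduce a list of (r, g, b) tuples to at most n_colors palette entries."""
    boxes = [list(pixels)]
    while len(boxes) < n_colors:
        # Pick the box whose widest channel covers the largest range.
        idx = max(range(len(boxes)), key=lambda i: channel_spread(boxes[i])[0])
        spread, channel = channel_spread(boxes[idx])
        if spread == 0:
            break  # every box holds a single color, nothing left to split
        # Sort along that channel and cut the box at its median pixel.
        box = sorted(boxes.pop(idx), key=lambda p: p[channel])
        mid = len(box) // 2
        boxes.extend([box[:mid], box[mid:]])
    # Each palette entry is the (integer) average color of one box.
    return [
        tuple(sum(p[c] for p in box) // len(box) for c in range(3))
        for box in boxes
    ]
```

Calling `median_cut(pixels, 4)` on the flattened pixel list gives a palette of up to four colors; mapping each pixel to its closest palette entry is a separate step not shown here.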

111 notes

Text

Edits of November 05, 1980 (from the November 1980 page)

Double up just because I wanted to!

Quantize > Algorithm: Octree, Colors: 4, Dithering Level: 3 in Paint.Net for the second one. I wanted to just magic wand everything to be black or red, but it looked too ugly so I kept in two (2) greens

7 notes

Text

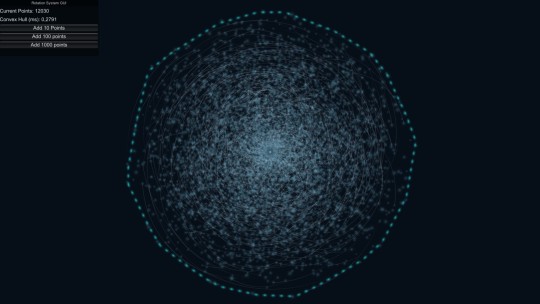

I have a set of points distributed on the surface of a sphere, just like on this page. I have their Delaunay triangulation and Voronoi diagram as well. I want to draw a texture, at reasonably good resolution, showing the Voronoi diagram on an equirectangular projection. For 25 points, it looks something like this:

The naive way to draw this would be to take each coordinate on the texture, and check it against every point in the point list, either for whether it is closest or whether it lies inside the bounds of the Voronoi cell for that point. The latter is slightly more efficient, since you can just break off checking once you find a match, but you still might have to check the whole map.
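As a rough sketch of that naive approach (Python, with hypothetical function names), every pixel is compared against every point. It assumes the points are stored as unit vectors and that the texture spans longitude -π to π left to right and latitude π/2 to -π/2 top to bottom.

```python
import math


def pixel_to_unit_vector(x, y, width, height):
    """Map the centre of an equirectangular pixel to a point on the unit sphere."""
    lon = (x + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (y + 0.5) / height * math.pi
    cos_lat = math.cos(lat)
    return (cos_lat * math.cos(lon), cos_lat * math.sin(lon), math.sin(lat))


def nearest_point_id(v, points):
    """Brute force: on a unit sphere the nearest point has the largest dot product."""
    return max(
        range(len(points)),
        key=lambda i: sum(a * b for a, b in zip(v, points[i])),
    )


def build_id_map(points, width, height):
    """Return a height x width grid of point IDs, one full scan per pixel."""
    return [
        [
            nearest_point_id(pixel_to_unit_vector(x, y, width, height), points)
            for x in range(width)
        ]
        for y in range(height)
    ]
```

The cost is width × height × number of points, which is exactly why it stops being practical at thousands of points.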

This is obviously slow as balls, especially because I want to be able to generate such a texture for many thousands of points. I need a heuristic or a search structure that reduces how many points I have to check against. A nearest-neighbor search via octree gets a result like this:

because each division in the subtree creates arbitrary boundaries. I think I could combine an octree search with a does-this-coordinate-actually-lie-inside-the-polygon-of-its-point search, but so far my attempts to do this have been... flawed:

Which, to be clear, may be a result of my implementation rather than the approach being fundamentally in error. But this method also seems to be pretty slow for some reason.
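One common way a tree-based lookup produces boundary artifacts like this is by only searching the cell that contains the query. The toy Python example below shows that failure in 2D with a single quadtree split; it is an assumption about the kind of bug involved, not a diagnosis of the code in this post, and all names are illustrative.

```python
def cell_of(p):
    """Quadrant index of a point in the unit square (one quadtree level)."""
    return (int(p[0] >= 0.5), int(p[1] >= 0.5))


def dist2(a, b):
    """Squared Euclidean distance between two 2D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def nearest_in_same_cell(q, points):
    """Flawed lookup: only considers points sharing the query's cell."""
    same = [p for p in points if cell_of(p) == cell_of(q)]
    return min(same, key=lambda p: dist2(p, q)) if same else None


def nearest_brute_force(q, points):
    """Reference answer: checks every point."""
    return min(points, key=lambda p: dist2(p, q))


points = [(0.49, 0.5), (0.9, 0.5)]
query = (0.51, 0.5)
wrong = nearest_in_same_cell(query, points)   # picks the far point in the same cell
right = nearest_brute_force(query, points)    # picks the close point across the boundary
```

A correct tree search also has to visit neighbouring cells whenever the best distance found so far reaches past the current cell's boundary.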

(The reason I want to do all this is that when the player clicks on the surface of the sphere, I want a fast way to detect what cell/point is closest to where they clicked. The image is a human-readable proxy for an array of values which are the actual point IDs; by translating the coordinates of the click to the array, and checking the nearest value on that array, we can easily get the ID of the point without having to do any complicated lookup stuff once all this data has been generated and saved.)
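The click lookup could then be as small as the following Python sketch. The function names are hypothetical, and it assumes the click has already been converted to longitude/latitude in radians and that the ID array uses the same equirectangular layout as the texture.

```python
import math


def lonlat_to_pixel(lon, lat, width, height):
    """Convert longitude/latitude (radians) to array indices, wrapping longitude."""
    x = int((lon + math.pi) / (2.0 * math.pi) * width) % width
    # Clamp so that the south pole (lat = -pi/2) lands on the last row.
    y = min(int((math.pi / 2.0 - lat) / math.pi * height), height - 1)
    return x, y


def point_id_at(id_map, lon, lat):
    """Look up the precomputed point ID under a click."""
    height, width = len(id_map), len(id_map[0])
    x, y = lonlat_to_pixel(lon, lat, width, height)
    return id_map[y][x]
```

The accuracy of this lookup is limited by the array resolution, so clicks very close to a cell border can land on the neighbouring cell's ID.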

25 notes

Text

Speaking of spaceflight, I've been playing this game called Space Engineers that was on sale recently.

It's a sandbox game combining voxel mining with a grid-based vehicle/basebuilding game. The physics can be pretty finicky to put it mildly, but they've accomplished some crazy impressive technical stuff - procedural voxel terrain on the scale of actual planets. We spawned 'close together' which means kilometres of mountainous terrain.

For the voxels it uses smoothed marching cubes, and I think it's doing something clever with octrees under the hood given how low detail transitions into high detail terrain. Since my current work involves working with those same algorithms I'm pretty blown away by how smoothly it runs. And all this with solid multiplayer netcode physics! I've been able to host a game with three players at 1440p ultrawide, with a rock-solid 120fps. I'd love to find some technical articles on how they made it.

Here's some stuff I've made in survival mode:

My little mountain base, soon to expand into another module. Mining all the silica for that glass took forever but it's worth it for pretty windows.

The Mk. 7 Extractivist. Good at getting itself stuck in holes in the ground.

Mk.2 Scootabooter, prototype of a flying scout vehicle. Design had to be revised several times, but I don't have a good screenshot of the final version.

The really big stuff is yet to come. But the 6DOF flight mechanics are fun, there's a good variety of models to play around with, and the vehicle physics are interesting if rather finicky.

Ultimately it's a 'make your own fun' sort of game - there's something of a progression of things you can build, to mine more, to build more, etc., but the 'challenge' comes in making the contraptions you build actually work.

It's honestly a bit of a dangerous timesink of a game for me, scratching way too many itches at once, so I'm trying to limit how much I play it. But here I go posting about it instead...

8 notes

Text

Semantic enrichment of octree structured point clouds for multi-story 3D pathfinding - Florian W. Fichtner, Abdoulaye A. Diakité, Sisi Zlatanova, Robert Voûte

3 notes

Text

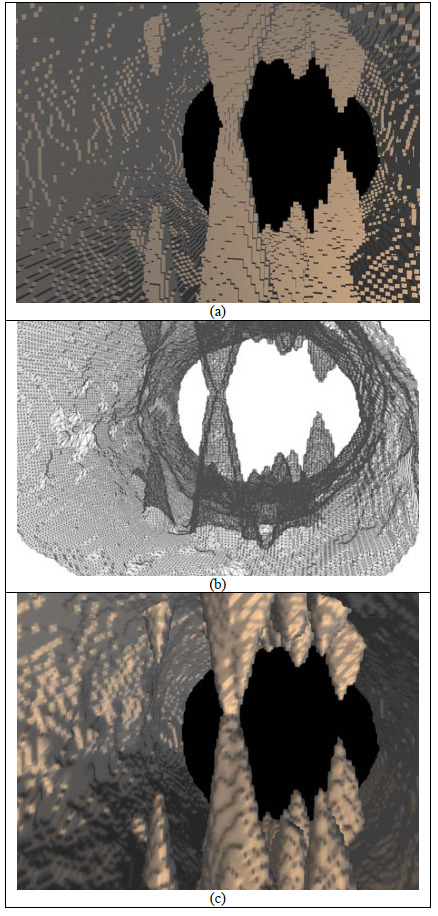

Source notes: Procedural Generation of 3D Cave Models with Stalactites and Stalagmites

(Cui, Chow, and Zhang, 2011)

Type: Journal Article

Keywords: Procedural Content Generation, Caves, Stalactite, Stalagmite

(Cui, Chow, and Zhang, 2011) tackle the underserved area of 3D cave generation head on by developing a method of producing models containing polygon configurations recognisable as stalactites and stalagmites.

The authors note the paucity of research on procedural techniques for the generation of underground and cavernous environments, despite these being natural and common settings for films and computer games. As is the aim of the Masters project, the developed method is intended to generate visually plausible 3D cave models sans the requirement that the constituent techniques be physically-based. They build upon their previous work involving the storage of 3D cave structures in voxel-based octrees by smoothing a triangle mesh representation of cave walls without introducing cracks and implementing the addition of stalactites and stalagmites.

The method works by rendering polygonal cave wall surfaces from low resolution voxels stored in a voxel-based octree that has been generated using 3D noise functions. That mesh, made up of voxel cubes, is then smoothed using a Laplacian smoothing function, effectively moving vertices closer towards adjacent vertices, rounding corners in the process. A nuance of the work by (Cui, Chow, and Zhang, 2011) is to remove cracks that result from the smoothing process (due to non-uniform polygons resulting from differing octree voxel node sizes) by subdividing polygons to cover gaps before the smoothing is executed. The smoothing work detailed in the paper influenced the development of the Masters project method because without a smoothing pass the extracted isosurface is unnaturally jagged.
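The smoothing step can be illustrated with a minimal Python sketch of Laplacian smoothing. The adjacency dictionary, the `alpha` blend factor and the function name are assumptions made for this example rather than details taken from the paper.

```python
def laplacian_smooth(vertices, neighbors, alpha=0.5, iterations=1):
    """Move each vertex a fraction alpha towards the centroid of its neighbours.

    vertices:  list of (x, y, z) tuples
    neighbors: dict mapping a vertex index to a list of adjacent vertex indices;
               vertices without an entry are left where they are
    """
    verts = [tuple(v) for v in vertices]
    for _ in range(iterations):
        updated = []
        for i, v in enumerate(verts):
            nbrs = neighbors.get(i, [])
            if not nbrs:
                updated.append(v)
                continue
            centroid = tuple(
                sum(verts[j][c] for j in nbrs) / len(nbrs) for c in range(3)
            )
            updated.append(
                tuple(v[c] + alpha * (centroid[c] - v[c]) for c in range(3))
            )
        # All vertices are updated from the previous iteration's positions.
        verts = updated
    return verts
```

With a spike vertex at `(1, 1, 0)` between neighbours `(0, 0, 0)` and `(2, 0, 0)`, one pass at `alpha=0.5` pulls it halfway down towards the line joining them, which is the corner-rounding effect described above.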

Stalactites and stalagmites are generated using a physically-based equation approximating the relationship between the radius and length of those speleothems. It is stated that they can be randomly placed on the ceiling and floor of the cave structure, with variation achieved by altering the parameters of the equation. In order to further differentiate between stalactites and stalagmites, a scaling factor was added to the equation. The need for this detail has been factored into the Masters project method, with stalagmites being generated in a slightly different way to stalactites to make them appear stockier, mimicking the effects of gravity.

Analysis of the results partly focuses on the smoothing enhancement added to the previous work by the authors, showing the crack-free, yet still naturally rough and realistic cave walls. The generated speleothems are showcased, demonstrating the diverse shapes that can be produced by modifying the equation parameters.

The work by (Cui, Chow, and Zhang, 2011) is a significant contribution to the area of procedural methods for generating 3D caves. The models produced are recognisably cave-like, largely due to the inclusion of speleothems and rough walls. Variability of the shape of the cave environment as a whole appears low from the included results, and there is a lack of other visually interesting features (the authors note in their future work section the need to investigate techniques for generating such mineral deposit structures as columns, flowstones and scallops). User control and parametrisation of the cave model is not discussed; however, different shapes of visually plausible speleothems can be produced by altering parameters, which is a strength of the method presented.

0 notes

Text

The convergence of voxel modelling and VR promises to create rich, dynamic worlds where users can interact with every detail. This blend of technology is exciting because it pushes the boundaries of what virtual environments can be, making them more detailed, interactive, and immersive than ever before.

Performance Optimisation in Voxel-Based Models

Sparse Voxel Octrees (SVOs): This technique involves creating a hierarchical data structure to efficiently manage large amounts of voxel data. By organising voxels into octrees, where each node represents a cubic volume that can be subdivided, only the visible or relevant parts of the voxel model are rendered, significantly improving performance.
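To make the idea concrete, here is a minimal Python sketch of a sparse voxel octree that only allocates nodes along paths to filled voxels. The class and method names are illustrative, coordinates are assumed to lie in `[0, size)`, and real engines use far more compact node layouts than nested dictionaries.

```python
def _octant(x, y, z, half):
    """Index 0..7 of the child cube containing (x, y, z) within its parent."""
    return int(x >= half) | (int(y >= half) << 1) | (int(z >= half) << 2)


class SparseVoxelOctree:
    def __init__(self, size):
        if size < 2 or size & (size - 1):
            raise ValueError("size must be a power of two >= 2")
        self.size = size
        self.root = {}  # child index -> child node; empty space has no entry

    def insert(self, x, y, z):
        """Mark the unit voxel at (x, y, z) as filled."""
        node, half = self.root, self.size // 2
        while True:
            key = _octant(x, y, z, half)
            x, y, z = x % half, y % half, z % half  # coordinates local to the child
            if half == 1:
                node[key] = True
                return
            node = node.setdefault(key, {})
            half //= 2

    def contains(self, x, y, z):
        """Return True if the unit voxel at (x, y, z) is filled."""
        node, half = self.root, self.size // 2
        while True:
            child = node.get(_octant(x, y, z, half))
            if child is None:
                return False  # whole subtree is empty, stop early
            if half == 1:
                return True
            x, y, z = x % half, y % half, z % half
            node, half = child, half // 2
```

The early exit in `contains` is where the saving comes from: large empty regions are rejected after visiting one or two nodes instead of being stored voxel by voxel.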

Compressing voxel data helps reduce the memory footprint and increases rendering efficiency. Techniques like run-length encoding (RLE) and other compression algorithms can store voxel information more compactly.
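As a small illustration of run-length encoding, the Python sketch below compresses a flat sequence of voxel values (for example one column of material IDs) into `(value, run length)` pairs. The function names are chosen for this example.

```python
def rle_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return [(v, n) for v, n in runs]


def rle_decode(runs):
    """Expand (value, count) pairs back into the original sequence."""
    out = []
    for v, n in runs:
        out.extend([v] * n)
    return out
```

This pays off when long stretches of identical voxels are common (solid rock, empty air); noisy data can end up larger than the original.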

Utilising the power of modern GPUs for voxel rendering can greatly enhance performance. Graphics cards are well-suited for handling the parallel processing required for voxel calculations and rendering.

Although it starts with relatively simple graphics, recent updates and mods have significantly improved the detail and complexity of voxel-based assets in Minecraft. Shaders and texture packs can add realistic lighting, shadows, and detailed textures.

0 notes

Text

The world isn't flat but the collision is, just so it can use a faster binary space partition and not an octree

If the world is flat, maybe they implemented all the worlds on the same map? So if you can get past that Great Ice Wall, you'll end up on the moon, Mars, Venus, or Mercury, depending on each way you go.

We just need to try running a boat backwards until it gains enough speed to clip right through to the Sea of Tranquility.

and since the tile map doesn't reload if you don't go through the proper load point, you can keep sailing right through the regolith just like it was salt water. Real handy for getting to Ceres and Eros.

506 notes

Photo

Gradient town

23 notes

Text

Testing the performance of different octree variants😄

New variants will be included in the next update of Gimme DOTS Geometry (update already scheduled)

0 notes

Text

gifs have a maximum of 256 colors and it's an old enough format that most of the software designed to make animated gifs uses palette-building functions that are approaching 30 years old and which were never intended to handle images with anything approaching modern definitions or color depth

you can correct for this if you're willing to put in some work (and you know how) but by default optimized octree ain't gonna be kind to a 4k screenshot

why do anime giffers always fuck up the color so bad

3 notes

Photo

This project is still active, though I didn’t have as much time to work on it as I wanted during the pandemic. The one major piece that’s missing is some way to animate the models, and that’s still on the roadmap somewhere.

I’ve worked a lot on various bug fixes. To mention just a couple of major ones that come to mind: there was an important fix for the shift-reduce parser that reads the modeling script. And another one involved the function that simplifies the voxel octrees that are (sometimes) used to define a visible surface and render models. There are two screenshots here, and the first one is supposed to show the latter fix. This is a set of voxels (cubes) of various sizes. If it hadn’t been simplified to remove voxels that had no useful data in them, all of the voxels would have an edge length of 1. But as you can see, the voxel at the upper right has an edge length as large as 8. Being able to simplify these voxel trees like this will be useful however they are ultimately used, even if it’s only to create models for use later.
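A common way to do this kind of simplification is to collapse any node whose eight children are identical leaves into a single larger voxel. The Python sketch below assumes that approach (the project's actual function may work differently) and represents an internal node as a list of 8 children and a leaf as any non-list value.

```python
def simplify(node):
    """Recursively merge groups of eight identical leaf children into one leaf."""
    if not isinstance(node, list):
        return node  # already a leaf
    children = [simplify(child) for child in node]
    first = children[0]
    all_same_leaf = not isinstance(first, list) and all(
        not isinstance(child, list) and child == first for child in children
    )
    # Eight identical leaves carry no more information than one bigger voxel.
    return first if all_same_leaf else children
```

Because the merge works bottom-up, three levels of uniform edge-1 voxels collapse into a single voxel with edge length 8.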

Another thing I’ve worked on is refinements to the modeling script. The second screenshot shows a simple function being called in this script. It’s passed floating-point arguments that represent the dimensions of a cuboid. The function then creates a box with those dimensions (the long purple box). The function itself is in a different text file, and it’s written in the very same modeling script. This is a simple example to demonstrate and debug the basic functionality.

8 notes

Text

Shadows and dust

Welcome, dear reader, to the first blog post of the development of our first game project “Mutants” using our in-house game engine “Cellbox”. This blog will cover some of our technical advancements during development.

Cellbox is a voxel engine, which you can read more about on our site: http://www.cellbox.io/

This blog post is about shadows and lighting. Our graphics programmers implemented shadows utilizing the variance shadow maps (VSM) technique this week.

The way we render our geometry is with the help of a raycaster and raymarcher that detect visible voxels resident in our data structures. Previously, we hadn’t been able to implement shadow mapping because we could only detect voxels inside the main camera’s viewing frustum. For the shadow mapping to work we had to develop a new way of handling our voxel data and upload all of it to the GPU. This is done with sophisticated compression methods and level of detail schemes, but that is to be covered in another blog post. If you are familiar with shadow mapping techniques you know that the scene needs to be rendered from the point of view of the light source.
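For readers unfamiliar with VSM, the core of the technique is a Chebyshev upper bound computed from two depth moments stored in the shadow map. Below is a minimal Python sketch of that test; it is not Cellbox's shader code, and the function name and the `min_variance` clamp value are illustrative assumptions.

```python
def vsm_visibility(mean_depth, mean_depth_sq, receiver_depth, min_variance=1e-6):
    """Estimate how lit a surface point is from filtered shadow-map moments.

    mean_depth:     filtered depth E[d] as seen from the light
    mean_depth_sq:  filtered squared depth E[d^2]
    receiver_depth: depth of the shaded point as seen from the light
    """
    if receiver_depth <= mean_depth:
        return 1.0  # in front of the average occluder: fully lit
    # Variance from the two moments, clamped to avoid division by ~0.
    variance = max(mean_depth_sq - mean_depth * mean_depth, min_variance)
    delta = receiver_depth - mean_depth
    # Chebyshev's inequality gives an upper bound on the unoccluded fraction.
    return variance / (variance + delta * delta)
```

Because the moments can be blurred like any other texture, the result is a soft shadow edge rather than a hard binary test.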

#cellbox#mutants#game engine#game#pc games#pc gaming#computer graphics#blog#graphics#shadow#lighting#sandbox#voxel#sparse voxel octree#gpu

1 note

Text

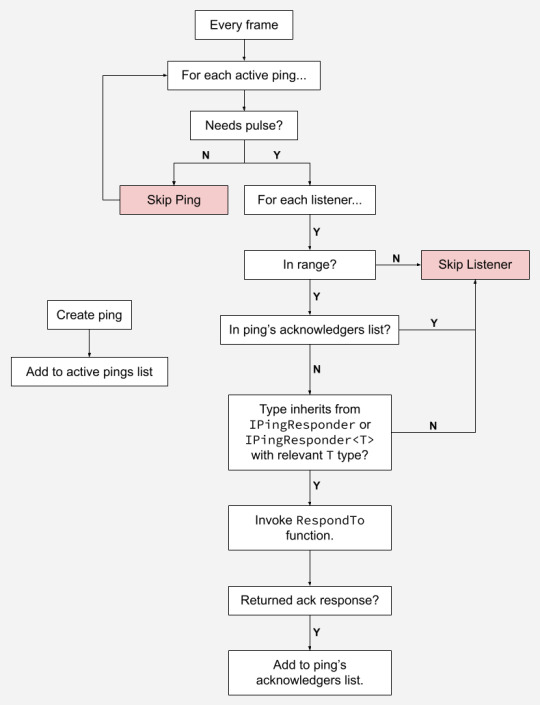

Multi-Dimensional Game Events

I'm currently working on a prototype for a project and needed a super flexible and reusable event system. I took some inspiration from Far Cry's dynamic, emergent systems (and this wonderful GMTK video) and made this... thing (GIF at 1/4 speed):

Breakdown

So what the heck is happening here?

Well, right now I call them "pings", but they are definitely worthy of a more descriptive name in the future. They act as a "multi-dimensional event system" for games. They aren't 4D in the way that you're probably thinking, however. The basic gist is:

A ping is fired off in code and added to an internal list to be regularly "pulsed".

Every "pulse", all ping listeners within range of the ping that can respond are notified.

Each valid responder will receive a notification every pulse unless it returns a response, after which it will no longer be considered as a responder for that specific ping.

So, how are these useful? In so many ways I never even realized until I implemented this system. Here's a few examples of the types of problems this solves in my current project:

AI senses. This works for sight, hearing, heck, even smell. For example, the player's feet can create pings for each step.

Reduces coupling. Pings can have any amount of data associated with them, and can thus track the "source" of the ping. I use this to, for example, have the player create a "wants reload" ping with a very small radius. The player's gun will pick up this ping and trigger the reload. Using this model, the player doesn't need a reference to the gun. The gun's resulting "reload" ping can be then picked up by nearby enemies, as if they are "hearing" the reload and know to fire on the now vulnerable player. It's also picked up by the UI, which can show a reload animation in the HUD.

Extremely easy to write debug tools for. The above GIF shows how much information can be very easily visually parsed by rendering debug gizmos.

It should be noted that this system separates the concerns of "listening" and "responding" -- they are two separate interfaces. This is because a single listener might be able to hear multiple different types of pings.

Now with all of that out of the way, time to dive deeper into the individual elements of the equation: pings, listeners, and responders.

Pings

Ping is an abstract class that you create a derived class from, such as TakingCoverPing, or ReloadingWeaponPing. They are basically like regular code driven events, except with 5 distinctive differences. Pings by default are:

Positional: They have a 3D position in the world.

Radial: They are spherical in shape and have a radius.

Temporal: They have a start time and duration.

Continual: They don't have to just be fired once. Instead they “pulse” at a regular, custom interval (if desired).

Global: Responders don't register to specific instances of these pings. I'll get into this more later.

There are some hidden bonuses that can be derived from the above properties. For example, since pings are positional and radial you can calculate a signal attenuation value for any 3D position. When used with AI senses this attenuation value can then be fed into RNG systems to determine if an enemy "heard" a sound that they were a bit far away from.
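Here is a minimal Python sketch of that idea, kept separate from the project's C# code. The linear falloff, the function names and the "roll against the attenuation" rule are all assumptions for illustration; any falloff curve would slot in the same way.

```python
import math
import random


def attenuation(ping_pos, ping_radius, listener_pos):
    """Signal strength in [0, 1]: 1 at the ping's centre, 0 at or beyond its radius."""
    distance = math.sqrt(sum((a - b) ** 2 for a, b in zip(ping_pos, listener_pos)))
    return max(0.0, 1.0 - distance / ping_radius)


def heard(ping_pos, ping_radius, listener_pos, rng=random):
    """Roll against the attenuation: fainter sounds are noticed less often."""
    return rng.random() < attenuation(ping_pos, ping_radius, listener_pos)
```

An enemy standing on top of the source always hears it, one at the edge of the radius never does, and everything in between is a dice roll.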

The continual nature of these events isn't a very widely applicable property, but does allow for very slick implementations of specific behaviors. A good example can be found in the Hitman games (specifically the trilogy started in 2016). Any weapon not in an expected location will attract the attention of NPCs. Instead of engineering a specific AI sense to accomplish this, weapons that are dropped outside of specific locations could create a ping with an infinite duration and regular pulse rate. While you still would need to write the response behavior, you don't need specific code to have the AI pick up on these sort of gameplay conditions.

By default Pings can also store the source GameObject that fired the ping. This is used by the debug visualizers to draw a line from the ping to the object that created it. It's also used by many of the responders to determine if specific pings are relevant to them. Going back to the gun reloading example, each gun only responds to a WantsReloadPing if the source of the ping is in their transform hierarchy (in this case it's the character that wants the reload).

Listening

Listeners inherit from the IPingListener interface, which just describes the listener's Position and Radius.

Yep, just like pings, listeners are also positional and radial in nature. This means that determining which listeners can "hear" a ping is done via a very simple sphere overlap check.

Where L is listener, P is ping, p is position and r is radius:
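A standard sphere-overlap test written with those symbols (an assumption about the exact form, which may differ in the actual code) is:

$$\lVert L_p - P_p \rVert \le L_r + P_r$$

or, avoiding the square root, $\lVert L_p - P_p \rVert^2 \le (L_r + P_r)^2$.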

This is a very important distinction between pings and other event systems. In a typical Observer-pattern-like event system, each handler registers itself to specific instances of events. It will always receive a callback for those instances of those events as long as it remains registered.

A typical event bus implementation will remove the coupling between the owner (publisher) of the event and the handler (subscriber). Instead, anything can fire an event of any type via the event bus, which then selects handlers based on the type of event. This is also known as the Mediator pattern.

Pings are closer to an event bus in nature. However, with regards to selecting handlers in this system, I refer to this process as "Listener Discovery" due to how varying the resulting list of handlers can be based on game state.

Some of the information derived during listener discovery is stored in a struct called PingLocality and passed to the responder. It stores the square distance from the listener to the ping, as well as the attenuation of the ping based on the listener's position.

It's very easy to abuse listener setup to achieve more flexible behavior. For example, a global listener just needs a radius of float.PositiveInfinity (this is how the UI listens to events in the world). Listeners can be attached to transforms by returning transform.position as the Listener's Position property.
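The infinite-radius trick falls straight out of the overlap test, as this small Python sketch shows. It is a stand-in for the C# check with a hypothetical function name, using squared distances so no square root is needed.

```python
def can_hear(listener_pos, listener_radius, ping_pos, ping_radius):
    """Sphere overlap test between a listener and a ping, using squared distances."""
    dist_sq = sum((a - b) ** 2 for a, b in zip(listener_pos, ping_pos))
    reach = listener_radius + ping_radius
    return dist_sq <= reach * reach


# A global listener (like the UI) uses an infinite radius and hears everything.
ui_hears = can_hear((0.0, 0.0, 0.0), float("inf"), (1e6, 0.0, 0.0), 0.0)
```

Since infinity squared is still infinity, the comparison is true for any finite distance and no special case is needed.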

Responding

The inheritor of IPingListener will also need to inherit at least once from IPingResponder<T> in order to do anything beyond "hear" an event. This interface looks like this:

    public interface IPingResponder<in T> where T : Ping
    {
        void RespondToPing(T ping, in PingLocality locality, ref PingResponse response);
    }

The first two arguments have been discussed, but the last needs explanation. As I previously mentioned, whether or not a listener will continue to receive notifications about a nearby ping is related to the response given. PingResponse is an enum with 3 values:

None: This responder needs further notifications for future pulses for this ping.

Acknowledge: The responder no longer needs to know about this ping.

SoftAcknowledge: A very specific response, I'll explain in a bit.

When the system receives a "none" response, it'll make sure to notify that responder again during the ping's next pulse. If it receives an "acknowledge" response, it'll log some information about the acknowledgement, and add that responder to the ping's list of acknowledgers to ensure that it's ignored during future pulses. A "soft acknowledge" is like a "none" response, except it is logged in the same way as an "acknowledge" response. Again, this is very specific and is only used for cases where you want to know that the ping was potentially relevant to a receiver.
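The three responses can be modelled in a few lines. This is a simplified Python sketch rather than the actual C# system: it skips listener discovery entirely (responders are assumed to already be in range), and the `Ping` fields and `pulse` function are hypothetical names.

```python
from enum import Enum


class PingResponse(Enum):
    NONE = 0
    ACKNOWLEDGE = 1
    SOFT_ACKNOWLEDGE = 2


class Ping:
    def __init__(self, name):
        self.name = name
        self.acknowledgers = set()  # responders to skip on future pulses
        self.log = []               # (responder name, response) records


def pulse(ping, responders):
    """Notify every responder that has not yet acknowledged this ping."""
    for name, respond in responders.items():
        if name in ping.acknowledgers:
            continue
        response = respond(ping)
        if response is not PingResponse.NONE:
            ping.log.append((name, response))   # both acknowledge kinds are logged
        if response is PingResponse.ACKNOWLEDGE:
            ping.acknowledgers.add(name)        # only a hard acknowledge stops notifications


calls = []
responders = {
    "gun": lambda p: calls.append("gun") or PingResponse.ACKNOWLEDGE,
    "ui": lambda p: calls.append("ui") or PingResponse.SOFT_ACKNOWLEDGE,
    "rock": lambda p: calls.append("rock") or PingResponse.NONE,
}
reload_ping = Ping("reload")
pulse(reload_ping, responders)
pulse(reload_ping, responders)
```

After two pulses the gun has been notified once, while the UI and the rock have been notified twice; only the gun and the UI show up in the log.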

As a reminder, the primary reason why listening and responding are treated as separate concerns is so classes can inherit multiple times from IPingResponder<T> with different types for T. This allows a single object to respond to multiple different types of pings without having to specify unique listener position and radius values for each response.

Wrap Up

Everything above can be summarized into this potentially overwhelming flow chart:

That's about it! There's some other details not really worth mentioning, such as using object pooling for Ping subclass instances, or potential future optimizations on listener discovery (i.e. using an octree). I also plan on writing a "history visualizer" to show historical ping information. This is important due to how transitory these pings can get.

I'm curious to know what people think about this sort of system. If you want to chat about it, here's where you can find me.

1 note

Text

Source notes: Procedural feature generation for volumetric terrains using voxel grammars

(Dey, Doig, and Gatzidis, 2018)

Type: Journal Article

Keywords: Procedural generation, Terrain, Voxels, Grammar

(Dey, Doig, and Gatzidis, 2018) present a semi-automated method of generating overhangs and caves in existing volumetric terrains using a rule-based procedural generation system utilising a grammar (described in the paper as "a set of axioms and rules that recursively rewrite the initial state until a termination condition is met") composed of user-created operators. The application of the system over a voxel-based terrain to create the terrain features is performed on the CPU, with the final mesh being generated in real time using a GPU-based Surface Nets algorithm (adding to the examples of iso-surface methods in the discovered literature).

As in the work by (Becher et al., 2019), a volumetric approach is used as the basis for the method to overcome the limitations of heightmaps. The relative merits of various data structures, such as Sparse Voxel Octrees and Brickmaps, are discussed, with the authors stating the suitability of Volumetric Dynamic B+ trees for dynamically changing terrains, due to "a number of key advantages to volumetric representations, such as good cache coherence and fast random access capabilities, including insertion, traversal and deletion operators". The justification behind a shape grammar based system over a fully automated noise utilising approach is given, noting the former's ability to control variation by altering input state and/or rules as well as previous use of such systems for procedural generation. Shape grammars were also used by (Zawadzki, Nikiel, and Warszawski, 2012), as discussed previously.

To create real-world terrain features through the manipulation of existing volumetric data, the authors created a method that operates on a voxel grid representing the bounds of a terrain, formed by creating voxels from a heightfield generated with 2D Perlin noise. Voxels have density values to represent their contained material. A subset of voxels is sampled at a time, offset each iteration by a user-defined parameter, to check if they satisfy any rule criteria within the grammar. If a rule match occurs, shape transformations are performed on the voxels within the subset. Start and end positions within the grid can also be determined by the user. The rules within the grammar consist of: symbols — an array of dimension the same as the voxel subset, containing conditions to test voxel density values; transformations — an array with same dimension as symbols specifying an operation to perform on the voxel density values. If multiple conditions pass for a subset of voxels then the condition with the highest priority (a value assigned by designers) is chosen, or the choice is stochastic if priority values are equal.
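The matching loop can be sketched in Python as follows. This is a heavily simplified, one-dimensional illustration of the description above, not the authors' implementation: the rule dictionary layout, the function name and the example rule are all assumptions.

```python
import random


def apply_grammar(grid, rules, window, step, rng=random):
    """Slide a window over a 1D list of densities and apply the best matching rule.

    Each rule is a dict with:
      "priority":   number, higher wins
      "conditions": list of predicates, one per voxel in the window (the symbols)
      "transforms": list of functions, one per voxel in the window
    """
    out = list(grid)
    for start in range(0, len(out) - window + 1, step):
        subset = out[start:start + window]
        matches = [
            rule for rule in rules
            if all(cond(d) for cond, d in zip(rule["conditions"], subset))
        ]
        if not matches:
            continue
        top = max(rule["priority"] for rule in matches)
        # Ties between equal-priority rules are broken at random.
        rule = rng.choice([r for r in matches if r["priority"] == top])
        for i, transform in enumerate(rule["transforms"]):
            out[start + i] = transform(subset[i])
    return out


# Hypothetical rule: where two solid voxels sit side by side, hollow out the second.
carve = {
    "priority": 1,
    "conditions": [lambda d: d > 0.5, lambda d: d > 0.5],
    "transforms": [lambda d: d, lambda d: 0.0],
}
carved = apply_grammar([1.0, 1.0, 0.0, 1.0], [carve], window=2, step=2)
```

In the paper the window is a 3D block of voxels and `step` corresponds to the user-defined offset between sampled subsets, but the match, prioritise and transform cycle has the same shape.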

The authors constructed two separate grammars with different rulesets: one used to create cliffs and overhangs and the other caves. The ruleset used to create caves works by having a first rule to create an initial point for a cave to be generated, emulating an entry point for groundwater that would form a cave via erosion in reality. Second and third rules lower the density value of the lowest vertical point within a terrain cavity (further mimicking gravitational forces and erosion) and a fourth rule widens cavities as well as providing variation to the internal structure.

Multiple compute shaders (for calculating centre of mass of voxel edge intersections, building vertex and index buffers, and calculating normals) are used to execute a Naive Surface Nets algorithm to extract a polygon mesh from the voxel grid and density data. This approach is beneficial as it capitalises on the parallel nature of the algorithm - many threads can work on individual segments of the voxel grid data concurrently. Triplanar texturing is used when rendering the mesh to blend textures (a similar technique is used in the Masters project method).

The results of the method are discussed in terms of their structure and visual appearance. Different types of caves can be produced by changing the voxel subset size and the offset between subsets. Additional cave features such as speleothems and tunnels are not shown in the paper, and the description of the method suggests they would not be trivial to create. It could be postulated that columns and tunnels could be created by forming caves beside each other that eventually meet inside the surface of the terrain.

The performance of the method is analysed in terms of generation, surface extraction and rendering times at different grid resolutions and for each type of result (cave or overhang). Grid resolution appears to be the dominant controlling factor for generation time. The researchers suggest parallelising the grammar system as a possible improvement, since it is presented as a single-threaded CPU implementation. This consideration influenced the decision to implement a degree of parallelism in the Masters project method.

(Dey, Doig, and Gatzidis, 2018) present a method that generates a mesh that is recognisably cave-like, and has the advantage of being usable on existing volumetric data (albeit dependent on the structure of said data). Variation can be achieved by editing the rules of the grammar system, if the requisite knowledge is possessed. It could be argued that more focus on, and further development of, the method with regard to cave features specifically would be required for it to achieve results that are sufficiently realised, structurally complex and explorable for use in modern computer games.

0 notes