#realtime 3d with generative ai


Text

Learn Real-time 3D animation with Arena Shyambazar

Step into the future of animation with Arena Animation Shyambazar's cutting-edge workshop on Real-Time 3D with Generative AI. Dive into the dynamic intersection of creativity and technology, where every pixel pulses with innovation.

#animation courses#best animation institute#animation course#realtime 3d with generative ai#3d animation course in kolkata

0 notes

Text

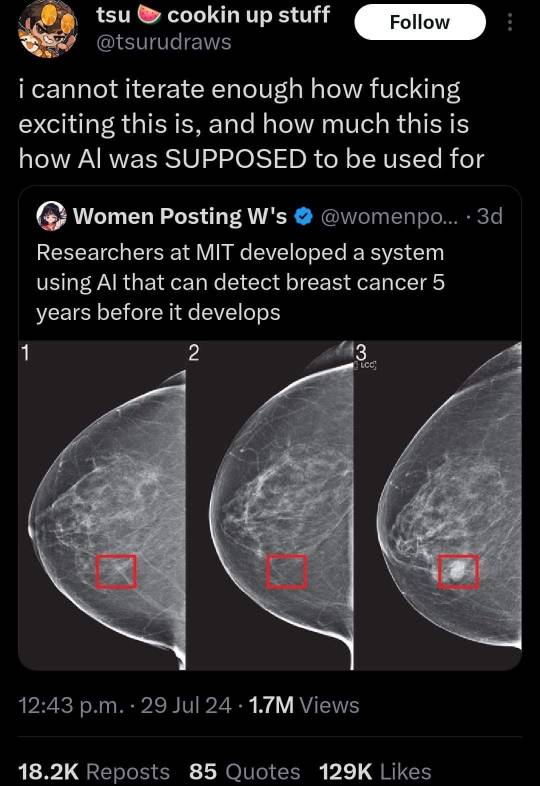

The breast cancer detection research is legitimately very cool, but the third screenshot is incredibly misinformed about what AI is and how it works, to a degree that I think is actively harmful. I'll take it line by line.

"Analytical ai vs generative ai": these are not actually meaningful or distinct categories. They are use cases. "AI" is essentially a shorthand for a set of techniques that computer scientists call "deep learning" - basically, running certain kinds of calculations on large data sets to create statistical models that can, we hope, make predictions about similar data. This is still a pretty broad field, and there's a lot of different techniques and ways they can be applied - you probably can't just take ChatGPT's model and retrain it to identify breast cancer (and even if you could, you definitely shouldn't). But ALL deep learning is based on the same principles and the same kinds of calculations. It's a bit like the difference between two different 3D game engines - say, Unity and Unreal Engine. There are distinctions between them, sure. But both of them ultimately solve the same problems: that is, rendering objects in a 3D space and simulating physics in realtime. The basic concepts are the same, and they use the same hardware to get there.

"one of these is doing cataloguing and diagnoses and a whole bunch of stuff that's super useful": No! No no no! I'm not saying there aren't very cool, useful ways to use AI for data analysis, but there's nothing inherently good about "analytical" AI. For example, police departments using it for "predictive policing" (effectively just technologically assisted profiling), or facial recognition (which is known to be less accurate for people of color!), or government surveillance, or insurance companies rejecting claims based on AI analysis.

Even in cases that aren't as clear-cut and harmful as these, you have to be really careful. The breast cancer detection system from the original post is legitimately amazing, and has the potential to save so many lives! But it would still be profoundly irresponsible to rely solely on this technology for diagnosis. Remember, AI/deep learning is ultimately just applied statistics. There will always be outliers, false positives and false negatives, and unexpected real-world scenarios that the AI model's training data did not account for. This technology is a tool that has the potential to improve medical experts' ability to make early cancer diagnoses. It is not, itself, a medical expert. And while I don't think anyone reading this post will disagree with that, it's very easy to imagine organizations cutting costs by over-reliance on technology like this, while cutting human experts out of the process. And in the context of cancer detection and treatment, that would absolutely cost people their lives.
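To make the false positive point a bit more concrete, here's a rough back-of-the-envelope sketch in Python. Every number in it (the population, the 1% prevalence, the 90% sensitivity and specificity) is invented for illustration and has nothing to do with the actual breast cancer research, and `screening_outcomes` is just a hypothetical helper name.

```python
# All numbers here are made up for illustration; they are not from any real study.
def screening_outcomes(population, prevalence, sensitivity, specificity):
    """Return (true_pos, false_pos, false_neg, true_neg) counts for a screening test."""
    sick = population * prevalence
    healthy = population - sick
    true_pos = sick * sensitivity        # sick people the model correctly flags
    false_neg = sick - true_pos          # sick people the model misses
    true_neg = healthy * specificity     # healthy people the model correctly clears
    false_pos = healthy - true_neg       # healthy people the model wrongly flags
    return true_pos, false_pos, false_neg, true_neg


tp, fp, fn, tn = screening_outcomes(
    population=100_000, prevalence=0.01, sensitivity=0.90, specificity=0.90
)
precision = tp / (tp + fp)  # share of flagged cases that are actually sick

print(f"flagged: {tp + fp:.0f}, actually sick: {tp:.0f}, missed: {fn:.0f}")
print(f"share of flagged cases that are real: {precision:.1%}")
```

Under these made-up assumptions, most of the flagged cases are false alarms and some real cases are still missed, which is exactly why a human expert needs to stay in the loop.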

"The other is... being sold everywhere as solving problems but insofar as I'm concerned it mostly hallucinates language": Yeah, I do pretty much agree with this! Aside from the false dichotomy of "generative"/"analytical" AI, it IS true that AI is currently an overhyped marketing buzzword, and in that marketing hype context it usually means LLMs (large language models), such as ChatGPT. Investors and venture capitalists are fucking obsessed with this stuff, cramming it into use cases that don't really work and don't really make sense, and driving the rest of us crazy (even me, someone who works in tech and is generally interested in deep learning).

Do I think LLMs are inherently bad and useless? No, not really. There are a number of LLMs designed to write code, for example; these aren't a substitute for knowing how to code, yourself (you must know how to catch mistakes they will inevitably make) but some people do find them useful as a sort of smarter autocomplete, speeding up the more tedious and repetitive parts of writing code. Not revolutionary, rather unremarkable really, but genuinely helpful for devs who choose to use them responsibly. A far cry from AI-generated search results, or a chatbot inexplicably crowbarred into one's weather forecast app, or any of the other bullshit "features" that are the hot new gimmick of the week. Nobody asked, nobody wants them, and they don't even work as advertised because they are being used for things LLMs can't fucking do, so please stop making them, we are all begging you.

"Analytical AI is also less resource intensive because it trains its model and that's it": This is COMPLETELY incorrect and makes absolutely no sense. There is no such thing as an "AI" that trains its model and then does nothing else ever. What would even be the point? Remember, an "AI" is just a VERY large and complex statistical prediction model. The larger and more complex, the more resources it will take for a computer to run those calculations and make the predictions it's been trained to make. That's true whether the prediction is "does this tissue sample show early signs of breast cancer?" or "what text should come next after this prompt?" Even if the output is very "simple," it'll still take a lot of computation power to produce that result if the statistical model is large and complex.
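As a very rough sketch of why a "simple" output doesn't mean cheap computation: for a plain fully connected network, one prediction costs roughly one multiply-add per weight. The layer sizes below are hypothetical, and real models (convolutional nets, transformers) have different cost formulas, so treat this as an illustration of the scaling idea only.

```python
def forward_pass_cost(layer_sizes):
    """Count multiply-adds for ONE prediction through a fully connected network.

    Each pair of adjacent layers (a, b) contributes a * b multiply-adds,
    which is also the number of weights between them.
    """
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


# Both hypothetical models take 1,000 inputs and produce a single number
# (think "probability this sample shows signs of cancer").
small_model = [1_000, 100, 1]
large_model = [1_000, 10_000, 10_000, 1]

small_cost = forward_pass_cost(small_model)
large_cost = forward_pass_cost(large_model)

print(small_cost)
print(large_cost)
```

Both toy models output a single number, but the larger one does over a thousand times more arithmetic per prediction. That cost is paid every time the model is used, not just during training.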

I'm not an expert about energy costs, but there are a lot of wild, overblown claims going around about AI energy use that aren't particularly well sourced. A lot of people seem to have the perception that AI uses energy in similar quantities (and with similar wastefulness) as NFTs/crypto, which isn't really true. Other people who are better informed than me have talked about this in more detail! For now, I'll just say that training AI models will always be more resource-intensive than using them, and there are definitely conversations to be had about best practices there... I, personally, am most critical of companies with enormous proprietary models who are not especially transparent about how they are trained or how much they cost to run. It's better for everyone - for the environment AND for science - if we can share this stuff and learn from one another rather than reinventing the wheel each time, at great expense.

No matter what your feelings are on "AI", I encourage everyone to learn a little about how deep learning/machine learning works - there are so many misconceptions flying around in the discourse, and it's not helpful to anyone. It's not some earth-shattering innovation that'll magically make everyone's lives better, and it's not some monstrous threat either - it's just a kind of technology, and it's humans who decide to use it in helpful or harmful ways - or, most realistically, a mixture of both.

#misinformation#ai discourse#i Really hope i don't regret posting this lmao#but seeing “analytic AI” held up as Good And Noble kind of made me insane#when in fact some of the most concerning uses of AI currently would fall into that category!#on my hands and knees begging everyone to be normal about deep learning. i am in hell.

179K notes


Text

Top 10 Logistics and Supply Chain Trends: Reshaping the Future of the Industry

The logistics and supply chain industry is undergoing a rapid transformation driven by technological advancements, changing consumer demands, and global disruptions. Businesses that stay ahead of these trends will gain a competitive advantage by improving efficiency, reducing costs, and enhancing customer satisfaction. Here are the top 10 trends shaping the future of logistics and supply chain management:

1. Artificial Intelligence and Automation in the Logistics Industry

By enabling predictive analytics, route optimization, warehouse automation, and demand forecasting, artificial intelligence (AI) and automation are transforming supply chain operations. Businesses can use AI logistics software to cut costs, speed up deliveries, and reduce errors.

Robots are speeding up warehouse operations, while AI chatbots are simplifying customer inquiries.

2. Blockchain for Transparency and Security

Blockchain technology improves supply chain visibility, cuts down on fraud, and enables tamper-proof transactions. Smart contracts allow automated, error-free documentation, which results in faster and more dependable processes.

For example, Maersk and IBM are using blockchain to generate a clear and traceable supply chain system.

3. Green and Sustainable Logistics

As environmental concerns rise, businesses are committing to cutting their carbon footprint through green technologies. Electric and hydrogen-powered trucks, optimized delivery routes, and recyclable packaging are becoming standard in logistics.

Case in point: Amazon and UPS are putting money into electric delivery vans to help meet their environmental targets.

4. Real-Time Monitoring with the Internet of Things (IoT)

GPS tracking and IoT-enabled sensors offer real-time insight into shipments, lowering the risk of theft and carelessness. For perishable products, these smart devices also assist with temperature monitoring.

For instance, real-time package tracking on IoT-enabled logistics systems like DHL SmartSensor helps improve delivery accuracy.

5. The Emergence of 3D Printing in Logistics Networks

By allowing on-demand manufacturing, 3D printing is lessening reliance on worldwide supply networks. Industries needing bespoke goods with short lead times especially benefit from this trend.

Case in point: 3D printing is used by car and aerospace businesses for quick prototyping and spare parts creation.

6. Self-Driving Vehicles and Delivery Drones

Last-mile delivery is ready for transformation as drone deliveries and autonomous trucking become established. These advances will cut operational expenses, speed up delivery times, and limit human involvement.

For speedier order fulfillment, companies like FedEx, Amazon, and UPS are testing drone shipments.

7. Big Data and Forecasting Models

Big Data analytics lets businesses examine enormous quantities of data to refine logistics processes. Demand prediction, stock control, and supply chain risk minimization are aided by predictive analysis.

Retail behemoths like Walmart leverage Big Data to maximize stock levels and simplify supply distribution channels.

8. Supply Chain Resilience and Risk Management

The need for resilient supply chains is clear given global disruptions such as pandemics and political instability. Current business trends include supplier diversification, adoption of digital twin technology, and better contingency planning.

Toyota used a multisourcing approach after semiconductor shortages caused supply chain problems.

9. Online Trade and Omnichannel Logistics

The growth of e-commerce has led logistics firms to adopt omnichannel fulfillment techniques like micro-warehouses, same-day delivery, and curbside pickup to satisfy customer demands.

Case in point: To speed up e-commerce deliveries, businesses including Flipkart and Shopify are supporting warehouse automation.

10. Hyper-Personalization in Logistics

Using personalized route optimization, intelligent logistics systems enable hyper-personalized delivery experiences through flexible delivery options and automatic alerts.

Amazon's AI-driven recommendation engine forecasts customer preferences and recommends the best delivery options.

Final Thoughts

Advanced logistics technologies, sustainability projects, and changing customer expectations are all shaping the future of logistics and supply chain management. Companies that follow these trends will improve both their efficiency and their competitiveness in the fast-changing logistics scene.

By integrating digital transformation in your logistics operations, you will stay ahead of the competition.

#logistics software#freight software#freight forwarding software#software for freight forwarding software#warehouse management system#warehouse software#wms

1 note


Text

Reflections on Flux Festival 2024

Photo credit: Sarah M. Golonka

Of course I'm biased, but not in a bad way. Having witnessed the hundreds of hours of curating and organizing that Holly and her collaborators – the old team from RES Magazine and RESFest – put into this amazing four-day festival, I knew it would be unlike any of the other public events I have been to that are struggling to come to terms with the impact and potentials of generative AI. It also makes sense that FluxFest extends the logic of the RESFest in terms of capturing a moment, however fleeting and transient it may be – this I regard as a feature, not a bug, by the way – in the unfolding evolution of digital culture. This is where the genius of Flux lies. Industry-sponsored AI events and those promoted by organizations that want to be recognized as a source of investment advice are all inextricably cathected with the self-serving logic of venture capital, which thrives on being right about what's cool, what's next, and who to watch and listen to.

Photo credit: Sarah M. Golonka

Flux, as the name suggests, embraces the indeterminacy of any given moment, with its fickle basis in technologies, each of which promises to be the next big thing, and instead looks for cultural resonances and significance; a curated experience that is agnostic about form and largely indifferent to newness and coolness even when it is capturing things that are new and cool. As such, it is also appropriate that the event unfolded over a series of days at venues scattered around the city -- from UCLA's Hammer Museum to the School of Cinematic Arts at USC, to the Audrey Irmas Pavilion (AIP) somewhere in between.

Photo credit: Sarah M. Golonka

Although I managed to make it to all four events, the highlight was definitely the day-long series of performances, screenings, talks and installations at the AIP, where close to a thousand people converged - the event's sponsorship by USC and Holly's research unit AIMS (AI for Media and Storytelling) skewed the demographic toward students, but well-mixed with Flux loyalists and a sizable number who either remembered or were old enough to remember the RES days of the early 2000s.

Although my plan had been to help with documenting the event in 360 video, I instead found myself assisting with the VR installations on the second floor, where multiple exhibits were set up for attendees to experience projects ranging from the VR version of Carlos López Estrada's “For Mexico, For All Time,” which accompanied the 2024 UFC championships at The Las Vegas Sphere, to Scott Fisher's Mobile and Environmental Media Lab's (MEML) collaboration with Pau Garcia of Domestic Data Streamers, which used a variety of image synthesis technologies to recreate memories conjured by visitors to the installation.

Dubbed "Synthetic Memories" and working in near-realtime, a team of USC students from Fisher's lab created 180-degree memory spaces and Gaussian splat-based 3D environments, all of which were experienced in a head-mounted display. My own childhood memory of being menaced by a possum from the treehouse in my grandparents' backyard yielded two equally striking image spaces. Unfortunately, the generative AI system never quite captured the threat posed by the possum as I experienced it. The creature that was supposed to be snarling up at me in the printed souvenir of the experience (below) looks more like a friendly, overfed otter, but this image will nonetheless probably one day entirely replace my biological memories of the situation.

Of course, the environments created with both the Gaussian splat and gen AI software uncritically naturalize arbitrary conventions for the aesthetics of memory: soft-focus, muted color palette, fuzzy borders, etc., all of which are rooted in a cinematic vocabulary that is more semiotic convention than anything organically related to remembering. But for now, these experiments are making lemonade out of the indistinct yet still compellingly dimensionalized aesthetics of the environments created by Fisher's team.

After having my own memory space conjured by this apparatus, I had not expected to spend the next 9 hours informally staffing López Estrada's adjacent VR installation, but I made the mistake of admitting that I knew how to set up and operate a Quest 3 headset. Although I haven't taught my VR class at UCLA for over a year and was a little out of practice troubleshooting the Quests, there was something uniquely rewarding about spending the day within a few yards of Scott Fisher, some 40 years after his team at NASA Ames developed the first functional head-mounted display in the mid-1980s. The way I see it, if Scott Fisher – one of VR's true visionaries and pioneers – is willing to spend 9 hours ushering dozens of visitors through a VR exhibit, who am I to sneak out to take in the rest of the Flux events?

Photo credit: Sarah M. Golonka

That said, some of the more memorable elements of the festival for me were Kevin Peter He's live cinema performance with Jake Oleson, which was preceded by a how-to workshop at USC's IMAX theater, exposing some of the secrets of his live, VJ-style navigation of procedurally generated landscapes populated with infernal, humanoid specters.

Another perverse highlight was Jan Zuiderveld's self-serve Coffee Machine installed in the lobby outside the VR exhibits, which served up AI-generated insults to users along with a pretty decent cup of espresso. Although it was admittedly a relief for everyone that the festival came to such a successful conclusion, I won't be surprised if the legacy of the RESFest lives on in the form of a traveling, international version of the Flux Festival. Whatever its afterlife may be, the 2024 Flux Festival has set a bar that seems unlikely to soon be surpassed by the bright-eyed evangelists and doomsaying haters who dominate much of the current landscape of generative AI.

0 notes

Text

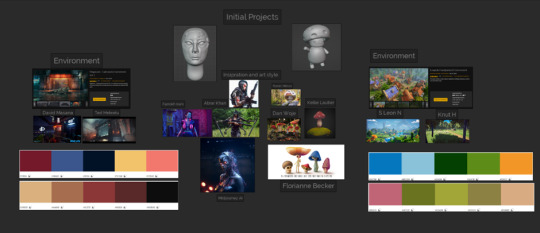

Development Projects – Week 1/2

03/12/2023

Research and Inspiration

Mood board of inspiration (Masana, 2020) (TedMebratu, 2019) (Irani, 2021) (Khan, 2018) (Khan, 2021) (Mikhail, 2023) (Woje, 2016) (Lautier, 2023) (Becker, 2020) (N, 2021) (Knut H, 2021)

For my development projects I will be focusing on my ideas from my places of the mind weekly project and my 3D printing weekly project. For my places of the mind project, I would like to develop my futuristic female character. I have found some concept art that I think would be good references to work from, as well as an AI-generated image. I would like to create a bust model rather than a whole body because I think that with the time constraints I would have a stronger outcome by making it overall smaller. For my 3D printing project I went back to the mushroom model and want to further develop that model specifically. I’ve found myself especially inspired by the concept art by Florianne Becker with the wide range of mushroom characters, so I would like to look at creating one or more of them.

I have also looked at different environment possibilities for each of these characters, not necessarily to use in the projects themselves but just to help with my inspiration for the characters, as well as colour palettes that could be used in the backgrounds or the texturing.

Bibliography

Becker, F., 2020. Mushrooms. [Online] Available at: https://www.artstation.com/artwork/k4A5Zy [Accessed 03 December 2023].

Irani, F., 2021. Realtime Cybberpunk Character Rendered in Unreal Engine 5. [Online] Available at: https://www.artstation.com/artwork/VgPVQg [Accessed 03 December 2023].

Khan, A., 2018. War Veteran 2058. [Online] Available at: https://www.artstation.com/artwork/EVNkOe [Accessed 03 December 2023].

Khan, A., 2021. Codename - Lynx. [Online] Available at: https://www.artstation.com/artwork/VgOzlX [Accessed 03 December 2023].

Knut H, 2021. Birch Forest. [Online] Available at: https://www.artstation.com/artwork/3dK662 [Accessed 03 December 2023].

Lautier, K., 2023. Mushy the Mushroom. [Online] Available at: https://www.artstation.com/artwork/Xg4L6w [Accessed 03 December 2023].

Masana, D., 2020. Bleak City. [Online] Available at: https://www.artstation.com/artwork/GXbnaQ [Accessed 03 December 2023].

Mikhail, R., 2023. Baby Mushroom. [Online] Available at: https://www.artstation.com/artwork/EvmqbK [Accessed 03 December 2023].

N, S. L., 2021. Ruins in the Valley. [Online] Available at: https://www.artstation.com/artwork/xJ1Eo2 [Accessed 03 December 2023].

TedMebratu, 2019. Lockdown - UE4. [Online] Available at: https://www.artstation.com/artwork/xzgWZ2 [Accessed 03 December 2023].

Woje, D., 2016. Stumps, Mushrooms and Fungi. [Online] Available at: https://www.artstation.com/artwork/1Ym9o [Accessed 03 December 2023].

0 notes

Text

in short actually what the fuck

I'm sure sometime in the last few months I must have said something like 'yeah they will probably solve the spatial and temporal coherence issues that hamstring every instance of AI rotoscoping sooner or later'. I didn't really expect it to be this soon though??

like you've got 3D rotations of complex objects. detailed multilayer forests and snow and shit like that with complex camera moves. cloth physics. hair physics. movement in and out of depth. complex multiplane camera rotations with water physics. photorealistic humans at all kinds of angles and poses. stylised characters. crowd scenes. pictures in pictures. i'm going over stuff that's complicated and time consuming for a human animator, I don't know what a diffusion/transformer model finds difficult.

but honestly what's really throwing me is like, the types of jank? yeah, here and there you get a little bit of classic AI jank like shapes morphing into other shapes, objects vanishing behind other objects, that kind of thing. a big tell is the failure of object permanence, so if something walks out of view, it will likely disappear entirely, and something else can appear out of nowhere. (though not always - it's improved here as well.) that's the sort of jank I expected.

but a lot of the examples, the jank involves weird levels of coherence, like the one with the basketball - the basketball generates a second basketball which clips through the hoop, sure, but how the hell is the AI's model of 3D space good enough to figure out that a basketball there would have to intersect with a hoop in the same location? sure, if you frame through the video, you can see that the hoop kinda dissolves through the ball... but at full speed it really looks like a 3D render with clipping.

further flaws I notice - the videos notably don't include very many examples of fast motion. a lot of them have a bit of a floaty, slow-mo feel to them. I suspect that has to do with how it propagates the motion through time. it also tends to have that kind of glossy advert-like quality to the photography which I've come to associate with AI - everything is perfectly studio lit. in general I'd say it's better at photorealism than stylisation - the stylised images (mostly furry 3DCG-looking characters) tend to look a bit creepy, with too-wide staring eyes.

anyway in classic OpenAI fashion, they post a lot of glossy pictures but they're kinda cagey about how the thing actually works. it splits images into spatiotemporal chunks and does ~transformer magic~ on them and apparently that just gives you a really detailed world sim. besides that, they used a recent technique where you get another AI to label the training data to feed into the training of their main AI - you'd think it would collapse in on itself but no that works apparently?

on some level I guess it makes sense. transformer models scale well with data and like, video gives you thousands of closely related pictures to train off of and discern underlying patterns.

but also it can just straight up render a convincing simulacrum of minecraft. minecraft!! though probably not in realtime lmao. the pages here don't mention how long it takes to generate a video and what sort of hardware you need to throw at it to get these kinds of results.

until I saw this I would have said that 'AI-generated animated film' is years away at least. like the 'Animate Anything' model from that team in China earlier this year was impressive but clearly limited to fairly specific scenarios. this one... this one you could probably make an entire AI-animated feature-length film and have it not look like shit. it wouldn't be as striking and intentional as a really good human animator, you'd have to throw out a lot of bad shots along the way and have a pretty specific vision of what you're aiming at. I suspect the first good AI films will be made by people who are experienced making films using other techniques, and the best uses of the tech will combine it with other techniques to get a 'best of multiple worlds' situation. but still...

I'm too tired to figure out what I feel about this. future's gonna be fucking weird.

They've done it again. How do they keep doing this? If these examples are representative they've now done the same thing for the short video/clip landscape that Dall-e did for images a while back.

OpenAI are on another level, clearly, but it is also funny and sort of wearying how every new model they release is like an AI-critical guy's worst nightmare

134 notes


Text

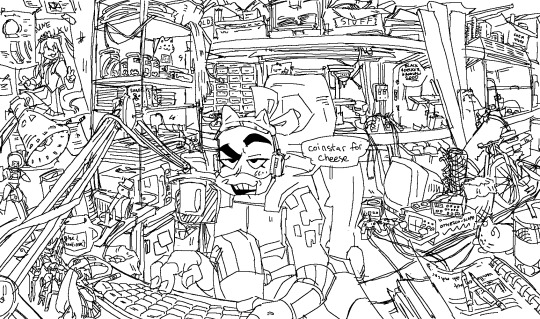

literally obsessed with drawing donnie in shitty garages. this time he's a streamer. i was literally drawing this EXACTLY like i was the ai that extended jerma's room. i just kept extending the canvas.

i think either he's a 2 view streamer, or he's actually decently popular, but his streams are actively hostile to his viewers. like they're so shitty and about such esoteric topics so nobody can really follow along. he has a Unregistered HyperCam 2 watermark. he has a stream where he shows his viewers how to make pipe bombs

try to spot all the details on your own :) but also a list of detailz under the readmore

i think 1/2 of this will be a where's waldo esque thing because "Image too fucking small" but this will generally be kind of in order. O.K.?

-miku poster
-jupiter jim, gundam, and miku figures (jupiter jim figure from "purple game" ep :) )
-drinky bird
-three discarded energy drink cans, one of which is DRIPPING off the TABLE !!
-gamer mouse
-mug that says "else {continue;}" because . whatever. good joke
-dead shit in jars
-kitty digital alarm clock. miau
-sonic for genesis on da shelf. w/ dvds and other gamez. there is no sega genesis in the image btw but there IS a gamecube
-the world's smallest cnc machine is on the shelf
-michael wave
-minifridge
-3d printer & filament on the top shelf
-drill press & electric hand drill
-bandsaw. it says black & decker on it. i trust it to explode his horrible garage for a workspace
-an excess number of power strips and excess number of shit plugged in them
-gamecube. 2 controller <-should have made this a gameclam in hindsight. imagine if he had like a fucking ATM in his room.
-crttv
-function generator & multimeter. oscilloscope, aptly named othello-scope (he painted the text on it himself). he loves to make goofy slide whistle sounds with the function generator
-chicken wire LOL idk what he'd use it for. caging in his siblings.
-several 2x4s, one of which has a bunch of nails stuck in it haphazardly
-one of those cylinder telescopes
-bike
-fume hood + beakers on top of it. because donnie makes his lab a hazardous environment
-he's also got a meowchi on his desk (miau) & an empty smoothie cup, plus a spiral bounded page of notes ("ahh molcar" and "vanilla extract" written down)

and then the comic is from one of snapscube's realtime sonic fandubs

281 notes


Note

Hello, I'm a fool who knows nothing about computers with a couple questions. For reference, I'm on Windows, for no reason other than I prefer it. I want to make a video game, a very very large video game. I'd be making it out of the love of my heart and for people to play, and not for any other reason. It would be a third-person 3D game. Now, I've heard that RollerCoaster Tycoon 1 was built in Assembly, and is thusly able to run just about anywhere, though I don't know the truth of that. 1/2

Hey yeah tl;dr you should Not try and make your game in assembly, especially as a beginner. Your best bet is to learn a modern game engine and start with that. Especially nowadays if you can get your head around Unity or Godot or any other similar game engine making a 3D game is, while not ~easy~, much easier than it ever was in the past when you would have had to roll your own engine from scratch.

The guy who made Heat Signature and Gunpoint recently started a tutorial series for “Make a game in Unity with no experience.” which may interest you. From there you’ll want to learn how to design 3D objects in something like Blender or Wings3D so that you can build your world and texture it.

I’d recommend starting small, Big Game Projects are ambitious but you’ll want to put out a few small things first, something like a game where you walk around, say, A Single Building with a garden and talk to NPC’s in the building, maybe have a combat system, etc. to really get the hang of your tools before attempting a project where it won’t seem like anything works for potentially weeks of work.

I have tons of little dead-end projects that were dedicated to figuring out simple things like “How do I create randomly generated terrain” or “How do I make Gun.” There are plenty of YouTube tutorials basically dedicated to stuff like this; Sebastian Lague’s Coding Adventures are basically all videos dedicated to exploring how to create common interesting things in Unity that you might want to use in a game, like random terrain, portals, weird gravity etc.

You’ll have to pick up A Language but it’s honestly not that hard. One thing you will want to do if you’re doing game design is brush up on your high school trigonometry because a truly ridiculous amount of the maths you will do in the process of making a game is just finding relationships between angles and coordinates in space.
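To give a flavour of the kind of trig that comes up constantly, here is a minimal Python sketch (not engine code) of two everyday jobs: working out which direction a target is in, and moving a set distance in that direction. The function names and the `player`/`enemy` positions are made up for illustration. It works in 2D, but the same idea carries over to 3D.

```python
import math


def angle_to(src, dst):
    """Angle in radians from src to dst, counter-clockwise from the +x axis."""
    return math.atan2(dst[1] - src[1], dst[0] - src[0])


def step_toward(src, angle, distance):
    """Return the point `distance` units away from src along `angle`."""
    return (src[0] + distance * math.cos(angle),
            src[1] + distance * math.sin(angle))


player = (0.0, 0.0)
enemy = (3.0, 4.0)

heading = angle_to(player, enemy)             # which way is the enemy?
new_pos = step_toward(player, heading, 1.0)   # walk one unit toward it

print(math.degrees(heading))
print(new_pos)
```

In practice an engine like Unity or Godot gives you vector types that hide most of this, but knowing what is going on underneath makes the built-in helpers much less mysterious.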

Rolling your own engine is a good learning experience if you’re interested in how to write optimized code, designing a scripting language, and writing really wacky dynamic code, but if you just want to Make A Game for your friends to play then it’s a waste of time.

The long explanation for this is:

Assembly is a very powerful but extremely specific tool that’s only useful in the modern day in a very specific handful of situations.

The lowest level of programming that you can do (more or less; there are some lower levels that don’t count) is Machine Code programming, which is where you write the binary instructions to a program out by hand. This can be done and is indeed how we used to do it in Ye Olden Times but even in Ye Olden Times programmers quickly got bored of this and invented Assembly.

Assembly is a very simple mapping from raw binary code to a somewhat human readable system. Talking about what that binary code looks like without a firm grounding in processor basics is hard, but suffice it to say that the binary generally represents something like Operation, Data1, Data2, Data3. For example, you might have a set of codes that correspond to Add A, B, C, where that adds A to B and stores it in the address at location C. That instruction in binary will be like 0110 0001 0010 1001, but that’s a pain in the dick to write so you write a simple program that can read in you writing “ADD A B C” and convert it to the right binary code.

In contrast to more high level languages, the mapping between assembly and machine code is pretty direct, usually 1 to 1 or almost 1 to 1. You can’t really do an “if X is true then do A” in assembly, instead you have to do “Compare X to True, check if the True flag was set, if so then jump to the location in memory containing A.”
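To make the compare-then-jump pattern concrete, here is a tiny interpreter for an invented instruction set, written in Python. The mnemonics (`CMP`, `JE`, `DO`, `HALT`) and the `run` function are assumptions for illustration, loosely modelled on how real flag-based conditional jumps work.

```python
def run(program, registers):
    """Execute a list of instruction tuples and return the actions performed."""
    equal_flag = False  # set by CMP, read by JE
    pc = 0              # program counter: index of the next instruction
    performed = []
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        if op == "CMP":      # compare a register with a constant, set the flag
            equal_flag = registers[args[0]] == args[1]
        elif op == "JE":     # jump to the given index if the flag is set
            if equal_flag:
                pc = args[0]
        elif op == "DO":     # stand-in for "the code at location A"
            performed.append(args[0])
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
    return performed

# "if X is true then do A", spelled out the assembly way.
program = [
    ("CMP", "X", 1),  # 0: compare X to True
    ("JE", 3),        # 1: if they were equal, jump to instruction 3
    ("HALT",),        # 2: otherwise stop without doing A
    ("DO", "A"),      # 3: the body of the "if"
    ("HALT",),        # 4
]

print(run(program, {"X": 1}))  # ['A']
print(run(program, {"X": 0}))  # []
```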

This has some advantages: if you’re very familiar with the processor you’re using and where you’re storing everything in memory, you can skip certain steps you know you don’t need and dramatically speed up your software. Old games, especially for consoles like the GameBoy and PlayStation, would be partially or wholly written in Assembly so that they ran fast on very limited hardware (the original PlayStation had a stunning 2MB of system RAM). There are lots of programming libraries for doing extremely fast maths written partially in Assembly for this very reason.

The DISADVANTAGE is that you have to be really good to write faster code than a quality optimizing compiler. Even fairly barebones languages like C have a lot of creature comforts that make programming nicer, faster and more readable, such as having language constructs to create loops, systems to help you create structures in memory, etc., and once you put that code through a modern compiler it will add in its own very smart optimizations that can make even fairly amateurish code run extremely quickly.

In Assembly you have to do all of that by hand, and doing so requires tracking a lot of things manually. It’s not something for the faint of heart, and building a modern game entirely in assembly is something you wouldn’t really do if you could avoid it.

That’s not to say it can’t be done. Since ultimately all programs boil down to machine code, there’s nothing stopping you from making a full 3D game in assembly. RollerCoaster Tycoon is almost all assembly, as you said. Indeed plenty of old 3D games are written partially or mostly in assembly for performance reasons, and if you use a game engine like Unity, some chunks of the engine will be written in Assembly for speed, especially when it comes to things like realtime physics or very fast AI and the like.

On modern computers, the performance of well-optimized compiled code in a normal language is plenty for even fairly complex games. The benefit of using Unity is that you can leave the heavily optimized engine stuff to a team of skilled programmers who’ve been writing game engines for years, and you can deal with the unique things that you need to make your game happen. If you were to start writing a game in Assembly you’d first have to design your own engine, probably write your own scripting system, etc., which would require a robust basis in programming language design, parser design, and a firm view of what you want to create. Tooling around in Unity experimenting with things as you go is much easier.

Learning Assembly and getting good at it is a valuable skill if you want to write crazy optimal code and get a robust understanding of how the internals of a computer work (although not actually, but I’m not going to talk more about microcode beyond linking this video lecture), but if all you want to do is make Video Game, your best bet is to learn Unity because it’s what everyone is using.

Pitches for other engines I like but that might not be for everyone:

Godot: Basically a fully open source baby Unity that is a little easier to use but a lot less powerful and with a much smaller community. I really like it for experimenting.

LÖVE2D: Not a 3D engine, but a really minimal 2D game engine that uses Lua for scripting. It is extremely bare bones but gives you a lot of room to program all behaviours yourself if you feel like making extremely fast demos, I mostly use it to write graphical simulations.

LÖVR: A 3D game engine heavily inspired by LÖVE with a focus on VR game design. It shares the same bare-bones, Lua-scripted design, which makes it very suitable for quickly throwing demos and experiments together but less suitable for large projects unless you pull in some libraries.

10 notes

Text

Dungeon Alchemist next big content drop due to release

Dungeon Alchemist, the AI-powered mapmaking application for Linux, Mac, and Windows PC, has its third big content update out soon, thanks to the work and creativity of developer Briganti. It is currently going strong via Steam Early Access with 94% Very Positive reviews. Dungeon Alchemist is the AI-powered mapmaking application for fantasy games, and its third big content update is due to launch on January 31st. The Winter Wonderland content update will be available at no extra cost to all owners of Dungeon Alchemist on Steam. Let it snow and cover all outside objects in a cozy white blanket. You can also add brand new winter-themed assets, such as snowmen, ice thrones, or whatever strikes your fancy. With over 500 new assets and a set of shiny new features, the update is due to give mapmakers more creative freedom than ever before.

Dungeon Alchemist - AI-powered Mapmaking

Dungeon Alchemist is an AI-powered mapmaking application that enables you to make high-quality content fast.

Features:

An easy to use, simple interface that allows you to create high-quality maps, fast!

An ever-growing list of themes and rooms that can be instantly generated

A vast library of thousands of objects to use in your maps

Tools that allow you to shape almost anything you create

Simple Dungeon Alchemist options to swap out floors, walls, windows, and doors on the fly

Realtime 3D terrain shaping: raise or lower terrain. You can also draw paths and rivers and explore mountains, canyons, hills, islands, ...

Export options that instantly create a map ready for print or for seamless import into your favorite VTT

Adjustable light settings

In 2021, Wim De Hert and Karel Crombecq ran a Kickstarter campaign to fund the AI-powered mapmaking application and promptly raised €2.4 million, making it one of the top ten most funded boardgame Kickstarter projects of all time. Dungeon Alchemist has since elevated TTRPG players' role-playing experience to the next level, growing into a community of over 100,000 users who share their creations with each other, inspiring them to create ever more impressive maps. It is available on Steam, priced at $44.99 USD / £31.99 / 37,99€, with support for Linux, Mac, and Windows PC.

0 notes

Text

How AI Will Revolutionize the Animation Industry

AI is poised to revolutionize the animation industry in numerous ways, offering advancements that can streamline workflows, enhance creativity, and improve efficiency. Here's how AI is expected to make a significant impact. A 3D animation course in Kolkata that integrates AI into animation education can prepare aspiring animators for the industry's future.

0 notes

Text

so, huh. i watched the entire movie. review time i suppose? (for the enjoyment of @centrally-unplanned if no one else, who was the only other person I saw posting about this movie...)

This wasn't a good movie, but also I do feel really bad for the director and team, because this did not really feel like a low-effort cash-grab movie. This felt much more like a passion project that tried to do something way beyond their technical ability. And people definitely didn't take it seriously, laughing at the jankier moments and generally treating it more along the lines of a cheesy zombie b-movie, which wasn't really the vibe it seemed to be going for. A lot of people walked out midway through.

With that in mind, let me give some praise to what did work here. The voice acting was legit solid - the script was a bit rough but it was delivered with genuine emotion. On a cinematography level, the 3D let them do a lot of interesting layouts and camera angles. The story it's adapting is decent enough on the broad level, though moment to moment there are occasionally some fairly dubious turns.

The AI is honestly not the biggest problem with this movie. It only seems to be used for one thing, which is adding a bunch of detail and highlights to the pupils of the eyes. It doesn't look great, with everyone's eyes constantly swimming and warping and surrounded by ringing artefacts, but I could see something like this being used for a conscious visual effect.

No, what lets this movie down is the CG.

Watching this felt like watching a game cutscene, and not in a good way. The animation is largely mocap based - presumably basically using vtuber tech. This leads to a strange mix: a lot of the broad motion, walking around and so on, is quite solid and impressively natural looking. The face acting on the other hand is incredibly crude, and whenever the characters sit still, you see a bunch of little jitters from inconsistent tracking. It's not good mocap - a decent vfx mocap studio would have cleaned this up in post - but that's not the biggest problem either.

The biggest problem is fundamentally lighting. The scenes are incredibly poorly lit, with harsh point lights, inconsistent materials, and generally awful colour composition. The character models feel like they belong in an animatic, or a realtime vtuber performance - and honestly vtubers generally look a lot better. Perfectly good shot layouts are brought down over and over again by bright distracting highlights in the wrong place or just generally poor light composition. Surfaces are often blatantly lacking in normal maps, or blurry, or otherwise off.

This, on its own, is not fatal. Much the same can be said of, say, the early works of Schuschinus, who is one of my favourite indie animators. By modern standards, even something like Malice@Doll would fall under this critique (though it was solid in its day). But this film lacks the manic imaginative energy of a Schuschinus piece, or the Y2K vibes of Malice@Doll. It wants to tell a sad, dramatic story about human experiments on children and a collapsing world and the disappointment of life, and in this respect, it is constantly undermined by janky expressions or inconsistent mixes of assets. The zombies suffer particularly badly.

I do not think the idea of a mocap-based anime-styled CG movie is inherently a bad one. I don't even think it's wrong to use AI if the result is good (though I've yet to see a really good use of AI in animation). But here it's just a mess, and it honestly feels kinda tragic.

The director came out on stage before the film aired, with both him and the translator in kimono and geta. The presenter asked him a few softball questions - how did you make this film etc., giving him a chance to explain the AI thing - as well as questions like what makes a particularly Japanese zombie, to which he answered Sadako. He also described how the title of the film alludes to a poem; indeed, the MC is a poet and his poems are recited at various points in the movie.

So with so much said about technique, what is this film even about? It's a post-zombie world; we have a 'Battalion' disease (makes a change from Legion) which spreads in the usual bitey way and also through the air. Our main characters are two siblings Rei and Yūna in treatment for the zombie disease, and the doctor Rika who is treating them. The sister's case is more advanced than the brother's, and she speaks a lot more suicidally; the film opens with an attempt and later, during a zombie attack, she flees beyond the wall. The protagonist is about willing to give up at this point, but the doctor persuades him to come with her in pursuit.

The doctor, meanwhile, is selling drugs on the side to a shady guy called Takeshiba. The purpose of this is not clear at first, but it emerges that this guy is a people-smuggler, who can take people to America where (supposedly) there is a better treatment for zombie disease, if at a steep price.

The doctor threatens the smuggler to take them to the sister, crossing a wasteland full of giant monsters. At the siblings' old house, they discover that she has eaten what remains of their parents and fused with the wall of her house as a big fleshy monstrosity thing. She begs the brother to kill her; he cannot, but eventually Rika does the deed.

Rika turns out to be infected, far worse than the siblings. She reveals that she was using them all along, planning to sell them in order to get passage to the states. But she's had a change of heart, and now wishes for Rei to live on - despite his feelings of despair and betrayal. As they wait for the ship to come, she becomes a zombie, and Rei is forced to shoot her too.

Rei boards the ship, contemplating her last message, in which she reveals that everyone is going to become zombies and also her experimental treatment only bought him a year to live. He contemplates the horror of existence (title dropping the film) when suddenly a giant monster comes and capsizes the ship. He has a brief imaginary connection with Rika before surfacing, adrift at sea.

Fundamentally I think this story is perfectly suited to being a good film. The basic dramatic arc is solid. The main character is deep in a depression hole throughout and it's kind of a bit one-note; it would have maybe benefitted from working harder to establish Rei and Yūna's dynamic before shit hits the fan. Shit like the giant monster attacks could definitely stand to go, but the overall tone of nihilistic horror and betrayal by the adult generation - which curiously takes the form of expressing a loathing towards growing up in Japan/Asia at points - is solid zombie movie stuff.

And it would have made perfectly solid 'weird OVA' material. I mentioned Malice@Doll already, which rocks.

I just... can't really make sense of the aesthetic choices made here. This was not a zero-budget one-person film. The credits were decently long. So I'm left wondering, how could they afford so many musicians but not like, a lighting guy? Any decent environment artists? Clean up the jitter in the mocap data? It's all in all deeply strange, and it seems a shame for this project to end up just fodder for the AI debate.

If this was really the best of the AI-using submissions that Annecy received, that doesn't bode well for the tech (but then, artists generally hate it so go figure). But still. Definitely an interesting curiosity, I don't regret seeing it! I definitely don't think AI rotoscoping is going to be replacing anyone's job anytime soon lmao

should i go see the fucking. ai anime. expectations are on the floor but it will probs be novel if nothing else

#annecy 2024#anime#l'aventure de canmom à annecy#turned on my computer to write this and it promptly downloaded nearly a gigabyte of updates over data -_-

78 notes

Text

How To Download Rome Total War 2 For Mac

Rome: Total War is the next generation in epic strategy gaming from the critically acclaimed and award winning Total War brand. The aim of the game is to conquer, rule and manipulate the Roman Empire with the ultimate goal of being declared as the 'Imperator' of Rome. Download Total War: EMPIRE for macOS 10.15 or later and enjoy it on your Mac. Before you buy, please expand this description and check that your computer matches or exceeds each of the requirements listed.

© 2020 by Radio Javan. All Rights Reserved. The largest source of Persian entertainment providing the best Persian and Iranian music 24/7. See full list on choilieng.com. Sep 20, 2018 The new app works on Mac and Windows operating systems and can be installed from RJ's App page. You can access Radio Javan's entire library of music, videos, podcasts, and playlists with the app, while easily being able to search and stream the music from wherever you are. With this new app, this adds a whole new platform to Radio Javan, which.

Challenge yourself to match the amazing martial feats of the classical world’s greatest commander. Lead the army of the ancient Greek kingdom of Macedon in an epic campaign to conquer Asia.

Key Features

Take command of over 50 new units including the Persian Immortal Infantry, Indian War Elephants and renowned Macedonian Companion Cavalry.

Conquer the known world from the coasts of Greece to the plains of India in an epic campaign inspired by Alexander’s military exploits.

Master the specialist tactics of four new playable factions: the armies of Macedon, Persia, India and the barbarian Dahae. Lead the Macedonian forces in Campaign mode and all four in custom battles and LAN multiplayer.

Fight six historical battles based on Alexander’s greatest victories.

Prove yourself King of Kings in custom battles, both against AI and via LAN.

DLC available

Menaced by Barbarian hordes the Roman Empire faces a day of reckoning. As one of 18 factions take up arms to defend Rome or spearhead its destruction.

https://nichunter866.tumblr.com/post/657231316521238528/hitfilm-express-download-for-mac. HitFilm Express runs on both Mac and PC. If you go back and forth between the two, you'll find some minor interface and functionality differences, like where the File and Workspace menus are located. The Mac version supports numerous import formats, such as Apple ProRes 4444 and 422; H.263 and H.264, MPEG-1, MPEG-2, and MPEG-4; and Photo-JPEG.

CLASSIC GAMEPLAY IN A NEW SETTING Engage in turnbased strategy and realtime battles to determine the fate of Rome.

FORMIDABLE BARBARIAN FACTIONS Invade the Roman Empire as a fearsome Barbarian tribe.

CAMPAIGN ON THE MOVE Form a horde! And capture or sack settlements across the map.

BUILT FOR MOBILE Enjoy intuitive touch controls and a user interface designed for mobile gaming.

ENORMOUS 3D BATTLES Turn your screen into a dynamic battlefield with thousands of units in action.

This game requires Android 8 and is officially supported on the following devices:

Google Pixel, Google Pixel XL, Google Pixel 2, Google Pixel 2 XL, Google Pixel 3, Google Pixel 3 XL, Google Pixel 3a, Google Pixel 3a XL, HTC U12, Huawei Nexus 6P, Huawei Honor 8, Huawei Honor 10, …

Download and Install ROME: Total War Barbarian Invasion on PC

Download an emulator of your choice and install it by following the instructions given:

Download and install the Bluestacks emulator from the link provided above.

Now, After the installation, configure it and add your Google account.

Once everything is done, just open the Market(Play Store) and Search for the ROME: Total War Barbarian Invasion.

Tap the first result and tap install.

Once the installation is over, Tap the App icon in Menu to start playing.

That’s all. Enjoy!

That’s it for ROME: Total War Barbarian Invasion on PC! Stay tuned to Download Apps For PC for more updates, and if you face any issues, please report them to us in the comments below.

0 notes

Text

Reallusion Headshot Plug-in for Character Creator Free Download

Download Reallusion Headshot Plug-in for Character Creator. It is a full offline installer standalone setup of Reallusion Headshot Plug-in for Character Creator.

Reallusion Headshot Plug-in for Character Creator Overview

Reallusion Headshot Plug-in for Character Creator is an imposing and very powerful AI-powered Character Creator plugin that is used for generating 3D realtime digital humans from…

0 notes

Photo

Illustration Photo: A customer pays the bill at Amy's grocery store in Khadda Market, Karachi, Pakistan (credits: IMF Photo/Saiyna Bashir / Flickr Creative Commons Attribution-NonCommercial-NoDerivs 2.0 Generic (CC BY-NC-ND 2.0))

BudStart Accelerator program for Startups in Asia-Pacific

Focus Areas

Consumer Data Collection and Targeted Marketing

Tech solutions to collect consumer data through our online and offline channels to understand them better and send personalized offers and promotions.

Sales Force Automation

Cost-effective, easily customizable, AI and analytics driven end-to-end solutions for our sales team to drive better sales execution and performance.

Supplier Recommendation

Open platform to identify suppliers from the marketplace and get a complete comparative study on parameters (quality, TAT, cost, etc.) to help in decision making for Smart Procurement.

Digital Menus

Simple, cost-effective, easy-to-use, multilingual and QR code based digital menu solutions for our bar, pub and restaurant partners to provide an enhanced experience to our consumers and improve our brand/sales equity.

Reduction in Breakages, Detention and Demurrages losses

Solutions to give visibility into the causes of breakages during transit. Real-time visibility of the fleet until unloading happens, to minimize detention losses, and of stock movement outside ABI warehouses, to minimize demurrage losses.

Financial Transactions

Solutions offering capabilities for digitization of payments, transaction and settlements of discounts/claims/invoices across B2B2C.

Automation Opportunities

Solutions to automate our supply chain and logistics process: Loading/Unloading of trucks, Bottle Inspection, Carton Assembling and Sealing, Equipment Controllers, Intra-Brewery Logistics.

Utility as a Service

Partner solutions offering supply of utilities on a metered billing system (Utility as a Service model) for water, energy, warehouse space, equipment, etc.

Brewery Operations

Solutions for preventive maintenance of machines/equipment, 3D-printing of parts, realtime automatic conveyor synchronisation, process/safety compliance alert mechanisms for Brewery Operations.

Application Deadline: June 4, 2021

Check more https://adalidda.com/posts/bxBtMyYxaiW7awCou/budstart-accelerator-program-for-startups-in-asia-pacific

0 notes

Text

Embergen

When considering what my prime drivers are I concluded in a recent post that my prime driver is to ‘play’. That play seems to be the quality which all things that I become interested in share - I unconsciously arrange my workflow in order to involve as much play as possible.

“In short, I believe that I am drawn to ‘play’. Every creative impulse I receive is based upon seeing something new that I get to play with, be it AI, Unreal Engine, proceduralism, generatism, or anything else.”

I go on to say that:

“It may be why I would like to ‘make it’ as an artist. So that I can lead a life of play.”

So when I come across a new toolset that fits that bill, I understandably drop everything in excitement - immediately burying myself in the new subject matter. Embergen represents such an instance.

So far in my creative workflow I have managed to find solutions to just about every type of asset I intend to explore and use within my work. This ranges from audio synthesis, composition, and production; compositing, vfx, motion graphics, and video editing; vector graphics, prototyping, and graphic design; video, sound, and photoscanned capture; the ability to arrange 3D scenes and visualise those scenes through both realtime and offline rendering; procedural physically-based material creation, 3D foliage creation, landscape generation, hard-surface modelling, kitbashing, and VR modelling; and finally voxel-based modelling, softbody simulation, cloth simulation, and particle simulation.

There are two types of data I do not have access to: OpenVDB fluid simulation and motion capture animation. Motion capture suits are getting cheaper all the time, so in that department I am essentially just playing the waiting game. Good OpenVDB fluid simulation, however, is here.

What is fluid simulation?

Fluid animation refers to computer graphics techniques for generating realistic animations of fluid dynamics such as water, fire, mist, molten metal, detonations and smoke. It is a staple of the VFX industry and is the technique used to produce any instance of non-physical fluid dynamics one might see within cinema.

What is OpenVDB?

OpenVDB is an open-source format (and library) for storing sparse volumetric data, commonly used for saving and sharing fluid simulations between programs. Think of it as the ‘.jpeg’ file for enormous, eyebrow-searing explosions.

What is Embergen?

Embergen is a program dedicated to the production of realtime volumetric fluid simulations. It is as much the solution for fluid dynamics for me as Speedtree was for the creation of foliage or Gaea was for the production of realistic landscapes. It will allow me to create clouds, mist, fire, and so much more. Here are some examples from within the software.

Why is realtime so appealing?

Because the above examples do not need to be viewed from a single angle, and computer generated art does not need to be static as it has been for so many years. Much in the same way as artists over the past century have been breaking out from the canvas and into space, it is now time for digital artists to do the same; breaking free from the canvas of frozen stills and opening up the ability to not just view one’s work, but get inside it. Move it around. Play with it. Break it. View it from all angles. Create from within the machine as well as without.

Interactibility

Coming from traditional rendering to realtime rendering is the computing equivalent of the transition from marks on flat surfaces to avant garde tactility, theatre, movement, motion, and immersion. It is the gateway for the audience becoming a part of the art - their impact on where they choose to roam, what they choose to view, and where they choose to view from making them as implicit in how the work is experienced as the artist themselves.

0 notes

Text

How to prepare video training set for AI application?

AI applications are incredibly popular nowadays. They can solve various very complicated tasks, and quite often they can do that much better than humans. This is a fact, and we see that the number of such applications is growing rapidly. Let's consider the situation from the inside, just to understand how it goes and what we need to proceed with AI solutions.

Here we consider only those AI-related tasks which are connected with image and video processing. We mean drone control, self-driving cars, trains, UAV and much more.

To solve such a task, we need to have a lot of data for training and further inference. This is a very important starting point. We do need lots of high quality data for a particular set of situations. Where and how can we get that data? This is a huge problem.

Sure, we can use any standard training set for machine learning, but that set could hardly correspond to the real situation that we need to check and to control. We can train our neural network on such data, but we can't be confident that the system will behave correctly on real data. Now we can formulate the task to be solved: how to collect appropriate video data to train a neural network?

The answer is not really difficult: if we are talking about video applications, then we need to record video data in proper situations. For example, for a self-driving car we need to install the necessary number of cameras on the car and to get lots of recordings.

At that point we can see that our training set will depend on the particular camera model and the image processing algorithms which are utilized in that camera application. Such a camera system generates some artifacts, and our neural network will be trained on such data. If we succeed, then in a real situation our AI application will work with a similar camera and software, so real data will resemble the data from the training set.

These items from a typical camera system are important:

Camera (image sensor, bit depth, resolution, FPS, S/N, firmware, mode of operation, etc.)

Lens and settings

Software for image/video processing

Apart from that, we also need to take into account the following:

Camera and lens calibration and testing

Lens control

Different illumination conditions

How to handle multicamera solutions

This is not just one task - this is much more, but such an approach could help us to build a robust and reliable solution. We need to choose a camera and software before we do any network training.

To summarize, to collect data for neural network training for video applications, we need to have a camera system together with the processing software which will be utilized later on in the real case.

What can we offer to solve the task?

XIMEA cameras for video recordings

Fastvideo SDK for raw image/video processing

How do we train a neural network?

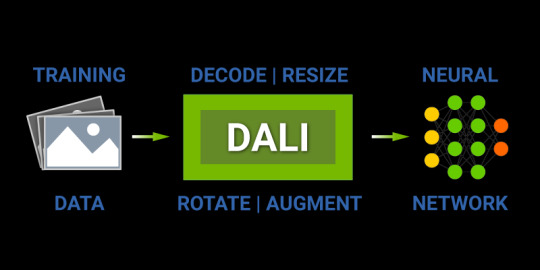

If we have a look at the NVIDIA DALI project, we can see that as a starting point we utilize a standard image database, decode JPEG images and then apply several image processing transforms, so that the network is trained on changed images which are derived from the original set via the following operations:

jpeg decoding

exposure change

resize

rotation

color correction (augment)

It could be the way to significantly increase the number of images in the database for training. This is actually a virtual increase, though images are not the same and such an approach turns out to be useful.
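As a minimal sketch of this idea (plain Python on a tiny grayscale image stored as a list of rows, not the NVIDIA DALI API), the functions below derive several training variants from one source image. The names `change_exposure`, `rotate_90` and `augment`, as well as the gain values, are assumptions made for this illustration.

```python
def change_exposure(image, gain):
    """Scale pixel values by `gain`, clipping to the 8-bit range."""
    return [[min(255, round(pixel * gain)) for pixel in row] for row in image]

def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image, gains=(0.5, 1.0, 1.5)):
    """Return every combination of exposure gain and 0/90/180/270 rotation."""
    variants = []
    for gain in gains:
        current = change_exposure(image, gain)
        for _ in range(4):
            variants.append(current)
            current = rotate_90(current)
    return variants

# A hypothetical 2x2 grayscale image.
image = [[10, 200],
         [30, 40]]

variants = augment(image)
print(len(variants))  # 12 variants derived from 1 source image
```

Three gains times four rotations already turn one image into twelve, which is the "virtual increase" described above.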

Actually, we can do something similar for video as well. We suggest shooting video in RAW and then choosing different sets of parameters for GPU-based RAW processing to get a lot of new image series which originate from just one RAW video. Since we can get much better image quality if we start processing from RAW, we can prepare lots of different videos for neural network training. GPU-based RAW processing takes minimal time, so it should not be a bottleneck.

These are transforms which could be applied to RAW video:

exposure correction

denoising

color correction

color space transforms

1D and 3D LUT in RGB/HSV

crop and resize

rotation

geometric transforms

lens distortion/undistortion

sharpening

any image processing filter

gamma

This approach lets us simulate different lighting conditions in software, in terms of exposure control and the spectral characteristics of illumination. We can also simulate various lenses and orientations, so the total number of new videos for training could be huge. There is no need to save these processed videos: we can generate them on the fly by doing realtime RAW processing on the GPU. This is a task we have already solved in Fastvideo SDK, and now we could utilize it for neural network training.
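The on-the-fly generation can be sketched as a lazy pipeline. The code below is a CPU-only toy in plain Python and does not use the Fastvideo SDK API; the function names, the 12-bit white level and the parameter values are all assumptions for illustration. "Development" here is reduced to an exposure gain followed by gamma correction.

```python
import itertools

def processing_variants(exposures, gammas):
    """Yield every combination of the given processing parameters."""
    for exposure, gamma in itertools.product(exposures, gammas):
        yield {"exposure": exposure, "gamma": gamma}

def develop(raw_frame, params, white_level=4095):
    """Toy RAW development: exposure gain, clip, gamma, then scale to 8 bits."""
    developed = []
    for row in raw_frame:
        new_row = []
        for value in row:
            linear = min(1.0, value / white_level * params["exposure"])
            new_row.append(round(255 * linear ** (1.0 / params["gamma"])))
        developed.append(new_row)
    return developed

def training_frames(raw_frames, variants):
    """Lazily yield (params, frame) pairs; nothing is written to disk."""
    for raw_frame in raw_frames:
        for params in variants:
            yield params, develop(raw_frame, params)

# Two hypothetical 12-bit RAW frames of 1x2 pixels each.
raw_video = [[[0, 4095]], [[1024, 2048]]]
variants = list(processing_variants(exposures=[0.5, 1.0, 2.0], gammas=[1.0, 2.2]))

count = sum(1 for _ in training_frames(raw_video, variants))
print(count)  # 2 frames x 6 parameter sets = 12 training frames
```

Because `training_frames` is a generator, each processed frame exists only while the training loop consumes it, which mirrors the point that processed videos never need to be stored.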

Original article see at: https://www.fastcompression.com/blog/ai-video-training.htm

0 notes