#software testing methodologies


The Intriguing Role of the Number 3 in Software Management

In the world of software management, the presence of the number three is surprisingly pervasive and influential. Whether it’s in methodologies, frameworks, or processes, this number frequently emerges, offering a simple yet profound structure that can be seen across various aspects of the field. From the three-tier architecture to the rule of three in coding, the number three seems to be a…


What Role Do QA Services Play in Software Development?

QA services play an essential role in software development by ensuring we deliver reliable products that meet user needs. They help us identify problems early in the development process, which reduces the risk of failures later on. By establishing clear quality objectives, we enhance the overall quality and maintain our brand reputation. Plus, with various methodologies at our disposal, we can adapt to project needs. If you stick with us, you’ll discover more about the future of QA services.

Understanding the Importance of QA in Software Development

Quality assurance is central to successful software development. Through thorough testing and evaluation, QA services guarantee that a product is stable, functional, and meets all specified requirements.

A strong QA process reduces the likelihood of defects in the production environment and ensures a seamless experience for end users. Additionally, effective QA practices contribute significantly to maintaining brand reputation and boosting customer satisfaction.

In an increasingly competitive market, integrating QA from the early stages of development is essential for maintaining the highest standards of quality. By focusing on quality assurance throughout the development cycle, companies can ensure a more efficient and error-free product lifecycle.

Key Responsibilities of QA Services

QA services take on several key responsibilities throughout the software development process:

Test Design and Execution: QA teams create and execute various tests to identify bugs and issues at every stage of development.

Adherence to Standards: QA ensures that all software meets established requirements, both functional and non-functional.

Stakeholder Collaboration: QA professionals work with other development teams to ensure that software features align with business goals and user expectations.

Documentation: Proper documentation of testing processes ensures transparency and accountability, fostering continuous improvement.

Post-Deployment Evaluation: After the product is launched, QA teams monitor performance to ensure the product remains reliable and performs well in the field.

By addressing these areas, QA helps deliver software that meets industry standards and user expectations.

Different QA Methodologies and Their Impact

Different QA methodologies greatly shape the software development process, influencing how we approach quality assurance. Each methodology, whether it’s Waterfall, Agile, or DevOps, brings unique strengths and challenges.

For instance, Waterfall’s linear structure suits projects with clear requirements but can limit flexibility. On the other hand, Agile promotes continuous collaboration and adaptation, allowing us to respond swiftly to changes.

DevOps integrates development and operations, emphasizing automation and continuous testing, which enhances efficiency and reduces errors. Additionally, methodologies like risk-based and exploratory testing help us focus on critical areas and uncover hidden issues.

Benefits of Implementing QA Services

Implementing QA services not only enhances the quality of our software products but also streamlines the entire development process. By defining quality objectives early on, we set clear benchmarks that guide our development efforts.

With QA integrated from the beginning, we can conduct code reviews and unit testing, which helps us catch issues before they escalate. During the testing phase, we validate software behavior through various methodologies, ensuring it meets user expectations and company standards.

After deployment, QA doesn’t stop; we focus on ongoing maintenance and incorporate user feedback for continuous improvement.

The Future of QA in Software Development

As software development methodologies evolve, so too does the role of QA. Automation and artificial intelligence (AI) are increasingly integrated into testing practices, making the process faster and more accurate. Automation reduces manual errors, speeds up the testing process, and enhances overall productivity.

Additionally, the increasing adoption of Agile and DevOps practices means that QA professionals are becoming more embedded in the planning and design stages of development. This ensures that quality is maintained at every phase of the software lifecycle, helping to prevent issues before they even arise.

Frequently Asked Questions

How Do QA Services Integrate With Agile Development Processes?

In Agile development processes, we integrate QA services by collaborating closely with teams, conducting continuous testing, and adapting to changes swiftly, ensuring quality remains a priority throughout the entire development cycle.

How Is QA Performance Measured and Reported?

We measure QA performance through metrics like defect density, test coverage, and cycle time. Regular reporting keeps everyone informed, ensuring we identify areas for improvement and maintain high-quality standards throughout the development process.
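As a rough illustration, the three metrics named above could be computed along these lines. The formulas are common conventions rather than anything this post specifies: defect density is taken as defects per thousand lines of code, test coverage as the percentage of items exercised by at least one test, and cycle time as the mean number of days from a defect being opened to being closed. All names (`Defect`, `defect_density`, `coverage_percent`, `mean_cycle_time_days`) are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Defect:
    """A tracked defect with the timestamps needed for cycle time (assumed shape)."""
    opened: datetime
    closed: datetime


def defect_density(defect_count: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defect_count / (lines_of_code / 1000)


def coverage_percent(covered: int, total: int) -> float:
    """Percentage of items (requirements, lines, etc.) hit by at least one test."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * covered / total


def mean_cycle_time_days(defects: list[Defect]) -> float:
    """Average number of days between opening and closing a defect."""
    return mean((d.closed - d.opened).total_seconds() / 86400 for d in defects)
```

Which denominator to use (lines of code, function points, requirements) is a team decision; what matters for reporting is that it stays the same from one period to the next.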

What Are the Common Challenges Faced by QA Teams?

We often face challenges like tight deadlines, communication gaps, and evolving requirements. It’s essential we adapt quickly, prioritize tasks effectively, and maintain clear communication to guarantee our QA processes remain robust and efficient.

How Do QA Services Adapt to New Technologies?

As we explore how QA services adapt to new technologies, we recognize the importance of continuous learning. By embracing innovative tools and methodologies, we enhance our testing processes, ensuring quality remains a priority in evolving environments.

Conclusion

To summarize, integrating QA services into our software development process is essential for delivering high-quality products. By prioritizing quality assurance, we not only enhance user satisfaction but also strengthen our brand’s reputation. As we continue to embrace innovative QA methodologies, we can guarantee that our software is reliable and efficient. Let’s commit to making QA a fundamental part of our development journey, ultimately leading to successful launches and a brighter future for our projects.

#it services#it solutions#qa testing#digital marketing#creative product design#qaston global#qa services#software development#QA Methodologies#Quality assurance#quality assurance services#quality assurance in another world


Embark on a global bug hunt with our SDET team, leveraging cloud-based mobile testing to ensure your app achieves flawless performance across all devices and networks. With cutting-edge tools and a meticulous approach, we identify and eliminate bugs before they impact your users. https://rb.gy/jfueow #SDET #BugHunt #CloudTesting #MobileAppQuality #FlawlessPerformance SDET Tech Pvt. Ltd.

#Software Testing Companies in India#Software Testing Services in India#Test Automation Development Services#Test Automation Services#Performance testing services#Load testing services#Performance and Load Testing Services#Software Performance Testing Services#Functional Testing Services#Globalization Testing services#Globalization Testing Company#Accessibility testing services#Agile Testing Services#Mobile Testing Services#Mobile Apps Testing Services#ecommerce performance testing#ecommerce load testing#load and performance testing services#performance testing solutions#product performance testing#application performance testing services#software testing startups#benefits of load testing#agile performance testing methodology#agile testing solutions#mobile testing challenges#cloud based mobile testing#automated mobile testing#performance engineering & testing services#performance testing company#performance testing company in usa


What are the main principles of Software Testing Methodology?

Software Testing Methodology's main principles include ensuring software functionality, reliability, and performance through systematic processes. It involves verification and validation, defect identification, risk management, automation, and adherence to best practices. Key methodologies include unit testing, integration testing, system testing, and acceptance testing to ensure comprehensive evaluation and quality assurance.
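To make the difference between two of these levels concrete, here is a minimal sketch using Python's standard `unittest` module. The `apply_discount` function and `Cart` class are invented for illustration and do not come from the linked article: the unit test checks one function in isolation, while the integration test checks that two pieces work together.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount (hypothetical example unit)."""
    return round(price * (1 - percent / 100), 2)


class Cart:
    """Hypothetical component that depends on apply_discount."""

    def __init__(self) -> None:
        self.items: list[float] = []

    def add(self, price: float, discount_percent: float = 0.0) -> None:
        self.items.append(apply_discount(price, discount_percent))

    def total(self) -> float:
        return round(sum(self.items), 2)


class ApplyDiscountUnitTest(unittest.TestCase):
    """Unit level: one function, no collaborators."""

    def test_quarter_off(self) -> None:
        self.assertEqual(apply_discount(200.0, 25), 150.0)


class CartIntegrationTest(unittest.TestCase):
    """Integration level: Cart and apply_discount working together."""

    def test_total_reflects_discounts(self) -> None:
        cart = Cart()
        cart.add(200.0, discount_percent=25)
        cart.add(50.0)
        self.assertEqual(cart.total(), 200.0)
```

Saved as a module, the tests can be run with `python -m unittest`. System and acceptance testing sit above these levels and usually exercise the deployed application rather than individual classes.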

Read more : https://www.bizbangboom.com/articles/what-are-the-main-principles-of-software-testing-methodology


Agile Methodology in Software Testing Explained

Discover how Agile methodology revolutionizes software testing at Agile Ethos. Learn about the iterative approach, continuous integration, and collaborative practices that enhance testing efficiency and product quality. Explore our insights on Agile testing and how it fosters adaptability and customer satisfaction in software development. Visit us to delve deeper into Agile testing principles and practices.


Driving Innovation: A Case Study on DevOps Implementation in BFSI Domain

In Banking, Financial Services, and Insurance (BFSI), technology plays a pivotal role in driving innovation, efficiency, and customer satisfaction. However, for one BFSI company, the journey toward digital excellence was fraught with challenges in its software development and maintenance processes. With a diverse portfolio of applications and a significant portion outsourced to external vendors, the company grappled with inefficiencies that threatened its operational agility and competitiveness. Identified within this portfolio were 15 core applications deemed critical to the company’s operations, highlighting the urgency for transformative action.

Aspirations for the Future:

Looking ahead, the company envisioned a future state characterized by the establishment of a matured DevSecOps environment. This encompassed several key objectives:

Near-zero Touch Pipeline: Automating product development processes for infrastructure provisioning, application builds, deployments, and configuration changes.

Matured Source-code Management: Implementing robust source-code management processes, complete with review gates, to uphold quality standards.

Defined and Repeatable Release Process: Instituting a standardized release process fortified with quality and security gates to minimize deployment failures and bug leakage.

Modernization: Embracing the latest technological advancements to drive innovation and efficiency.

Common Processes Among Vendors: Establishing standardized processes to enhance understanding and control over the software development lifecycle (SDLC) across different vendors.

Challenges Along the Way:

The path to realizing this vision was beset with challenges, including:

Lack of Source Code Management

Absence of Documentation

Lack of Common Processes

Missing CI/CD and Automated Testing

No Branching and Merging Strategy

Inconsistent Sprint Execution

These challenges collectively hindered the company’s ability to achieve optimal software development, maintenance, and deployment processes. They underscored the critical need for foundational practices such as source code management, documentation, and standardized processes to be addressed comprehensively.

Proposed Solutions:

To overcome these obstacles and pave the way for transformation, the company proposed a phased implementation approach:

Stage 1: Implement Basic DevOps: Commencing with the implementation of fundamental DevOps practices, including source code management and CI/CD processes, for a select group of applications.

Stage 2: Modernization: Progressing towards a more advanced stage involving microservices architecture, test automation, security enhancements, and comprehensive monitoring.

To Expand Your Awareness: https://devopsenabler.com/contact-us

Injecting Security into the SDLC:

Recognizing the paramount importance of security, dedicated measures were introduced to fortify the software development lifecycle. These encompassed:

Security by Design

Secure Coding Practices

Static and Dynamic Application Security Testing (SAST/DAST)

Software Component Analysis

Security Operations

Realizing the Outcomes:

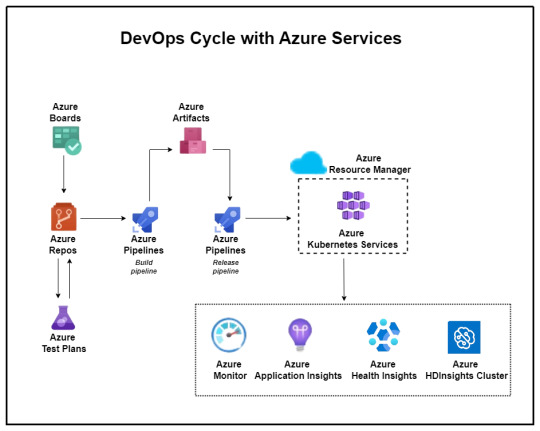

The proposed solution yielded promising outcomes aligned closely with the company’s future aspirations. Leveraging Microsoft Azure’s DevOps capabilities, the company witnessed:

Establishment of common processes and enhanced visibility across different vendors.

Implementation of Azure DevOps for organized version control, sprint planning, and streamlined workflows.

Automation of builds, deployments, and infrastructure provisioning through Azure Pipelines and Automation.

Improved code quality, security, and release management processes.

Transition to microservices architecture and comprehensive monitoring using Azure services.

The BFSI company embarked on a transformative journey towards establishing a matured DevSecOps environment. This journey, marked by challenges and triumphs, underscores the critical importance of innovation and adaptability in today’s rapidly evolving technological landscape. As the company continues to evolve and innovate, the adoption of DevSecOps principles will serve as a cornerstone in driving efficiency, security, and ultimately, the delivery of superior customer experiences in the dynamic realm of BFSI.

Contact Information:

Phone: 080-28473200 / +91 8880 38 18 58

Email: [email protected]

Address: DevOps Enabler & Co, 2nd Floor, F86 Building, ITI Limited, Doorvaninagar, Bangalore 560016.

#BFSI#DevSecOps#software development#maintenance#technology stack#source code management#CI/CD#automated testing#DevOps#microservices#security#Azure DevOps#infrastructure as code#ARM templates#code quality#release management#Kubernetes#testing automation#monitoring#security incident response#project management#agile methodology#software engineering


https://thepoolvision.com/mvp/

Mastering Project Management with MVP: Building Successful Software

In the fast-paced world of software development, where innovation drives success, the concept of the Minimum Viable Product (MVP) has emerged as a game-changer. MVP project management has revolutionized how startups and entrepreneurs approach software development, allowing them to validate ideas, minimize risks, and maximize outcomes.

We'll delve into the depths of MVP software development, exploring its lifecycle, methodologies, benefits, and real-world success stories.

#MVP Software Development#Proof of Concept Services#Minimum Viable Product Launch#MVP Development Lifecycle#Lean Development Methodology#MVP Iteration and Refinement#Cost-Effective MVP Solutions#MVP for Startups#MVP for Entrepreneurs#MVP User Experience Design#MVP Validation Process#MVP Development Framework#MVP Performance Testing#MVP Deployment Strategy


Women pulling Lever on a Drilling Machine, 1978 Lee, Howl & Company Ltd., Tipton, Staffordshire, England photograph by Nick Hedges image credit: Nick Hedges Photography

* * * *

Tim Boudreau

About the whole DOGE-will-rewrite Social Security's COBOL code in some new language thing, since this is a subject I have a whole lot of expertise in, a few anecdotes and thoughts.

Some time in the early 2000s I was doing some work with the real-time Java team at Sun, and there was a huge defense contractor with a peculiar query: Could we document how much memory an instance of every object type in the JDK uses? And could we guarantee that that number would never change, and definitely never grow, in any future Java version?

I remember discussing this with a few colleagues in a pub after work, and talking it through, and we all arrived at the conclusion that the only appropriate answer to this question was "Hell no." and that it was actually kind of idiotic.

Say you've written the code, in Java 5 or whatever, that launches nuclear missiles. You've tested it thoroughly, it's been reviewed six ways to Sunday because you do that with code like this (or you really, really, really should). It launches missiles and it works.

A new version of Java comes out. Do you upgrade? No, of course you don't upgrade. It works. Upgrading buys you nothing but risk. Why on earth would you? Because you could blow up the world 10 milliseconds sooner after someone pushes the button?

It launches fucking missiles. Of COURSE you don't do that.

There is zero reason to ever do that, and to anyone managing such a project who's a grownup, that's obvious. You don't fuck with things that work just to be one of the cool kids. Especially not when the thing that works is life-or-death (well, in this case, just death).

Another case: In the mid 2000s I trained some developers at Boeing. They had all this Fortran materials analysis code from the 70s - really fussy stuff, so you could do calculations like, if you have a sheet of composite material that is 2mm of this grade of aluminum bonded to that variety of fiberglass with this type of resin, and you drill a 1/2" hole in it, what is the effect on the strength of that airplane wing part when this amount of torque is applied at this angle. Really fussy, hard-to-do but when-it's-right-it's-right-forever stuff.

They were taking a very sane, smart approach to it: Leave the Fortran code as-is - it works, don't fuck with it - just build a nice, friendly graphical UI in Java on top of it that *calls* the code as-is.

We are used to broken software. The public has been trained to expect low quality as a fact of life - and the industry is rife with "agile" methodologies *designed* to churn out crappy software, because crappy guarantees a permanent ongoing revenue stream. It's an article of faith that everything is buggy (and if it isn't, we've got a process or two to sell you that will make it that way).

It's ironic. Every other form of engineering involves moving parts and things that wear and decay and break. Software has no moving parts. Done well, it should need *vastly* less maintenance than your car or the bridges it drives on. Software can actually be *finished* - it is heresy to say it, but given a well-defined problem, it is possible to actually *solve* it and move on, and not need to babysit or revisit it. In fact, most of our modern technological world is possible because of such solved problems. But we're trained to ignore that.

Yeah, COBOL is really long-in-the-tooth, and few people on earth want to code in it. But they have a working system with decades invested in addressing bugs and corner-cases.

Rewriting stuff - especially things that are life-and-death - in a fit of pique, or because of an emotional reaction to the technology used, or because you want to use the toys all the cool kids use - is idiotic. It's immaturity on display to the world.

Doing it with AI that's going to read COBOL code and churn something out in another language - so now you have code no human has read, written, or understands - is simply insane. And the best software translators plus AI out there are going to get things wrong - grievously wrong. And the odds of anyone figuring out what or where before it leads to disaster are low, never mind tracing that back to the original code and figuring out what that was supposed to do.

They probably should find their way off COBOL simply because people who know it and want to endure using it are hard to find and expensive. But you do that gradually, walling off parts of the system that work already and calling them from your language-du-jour, not building any new parts of the system in COBOL, and when you do need to make a change in one of those walled off sections, you migrate just that part.

We're basically talking about something like replacing the engine of a plane while it's flying. Now, do you do that a part-at-a-time with the ability to put back any piece where the new version fails? Or does it sound like a fine idea to vaporize the existing engine and beam in an object which a next-word-prediction software *says* is a contraption that does all the things the old engine did, and hope you don't crash?

The people involved in this have ZERO technical judgement.

#tech#software engineering#reality check#DOGE#computer madness#common sense#sanity#The gang that couldn't shoot straight#COBOL#Nick Hedges#machine world


In the realm of artificial intelligence, the devil is in the details. The mantra of “move fast and break things,” once celebrated in the tech industry, is a perilous approach when applied to AI development. This philosophy, born in the era of social media giants, prioritizes rapid iteration over meticulous scrutiny, a dangerous gamble in the high-stakes world of AI.

AI systems, unlike traditional software, are not merely lines of code executing deterministic functions. They are complex, adaptive entities that learn from vast datasets, often exhibiting emergent behaviors that defy simple prediction. The intricacies of neural networks, for instance, involve layers of interconnected nodes, each adjusting weights through backpropagation—a process that, while mathematically elegant, is fraught with potential for unintended consequences.

The pitfalls of a hasty approach in AI are manifold. Consider the issue of bias, a pernicious problem that arises from the minutiae of training data. When datasets are not meticulously curated, AI models can inadvertently perpetuate or even exacerbate societal biases. This is not merely a technical oversight but a profound ethical failure, one that can have real-world repercussions, from discriminatory hiring practices to biased law enforcement tools.

Moreover, the opacity of AI models, particularly deep learning systems, poses a significant challenge. These models operate as black boxes, their decision-making processes inscrutable even to their creators. The lack of transparency is not just a technical hurdle but a barrier to accountability. In critical applications, such as healthcare or autonomous vehicles, the inability to explain an AI’s decision can lead to catastrophic outcomes.

To avoid these pitfalls, a paradigm shift is necessary. The AI community must embrace a culture of “move thoughtfully and fix things.” This involves a rigorous approach to model validation and verification, ensuring that AI systems are robust, fair, and transparent. Techniques such as adversarial testing, where models are exposed to challenging scenarios, can help identify vulnerabilities before deployment.

Furthermore, interdisciplinary collaboration is crucial. AI developers must work alongside ethicists, domain experts, and policymakers to ensure that AI systems align with societal values and legal frameworks. This collaborative approach can help bridge the gap between technical feasibility and ethical responsibility.

In conclusion, the cavalier ethos of “move fast and break things” is ill-suited to the nuanced and impactful domain of AI. By focusing on the minutiae, adopting rigorous testing methodologies, and fostering interdisciplinary collaboration, we can build AI systems that are not only innovative but also safe, fair, and accountable. The future of AI depends not on speed, but on precision and responsibility.

#minutia#AI#skeptic#skepticism#artificial intelligence#general intelligence#generative artificial intelligence#genai#thinking machines#safe AI#friendly AI#unfriendly AI#superintelligence#singularity#intelligence explosion#bias


About the whole DOGE-will-rewrite Social Security's COBOL code

Posted to Facebook by Tim Boudreau on March 30, 2025.



Key Skills You Need to Succeed in BE Electrical Engineering

For those pursuing a Bachelor of Engineering (BE) in Electrical Engineering, it's essential to equip yourself with the right skills to thrive in a competitive field. Mailam Engineering College offers a robust program that prepares students for the challenges and opportunities in this dynamic discipline. In this article, we will explore the key skills needed to succeed in electrical engineering, along with helpful resources for further reading.

1. Strong Analytical Skills

Electrical engineers must possess strong analytical skills to design, test, and troubleshoot systems and components. This involves understanding complex mathematical concepts and applying them to real-world problems. Being able to analyze data and make informed decisions is crucial in this field.

2. Proficiency in Mathematics

Mathematics is the backbone of electrical engineering. Courses often involve calculus, differential equations, and linear algebra. A solid grasp of these mathematical principles is vital for modeling and solving engineering problems.

3. Technical Knowledge

A thorough understanding of electrical theory, circuit analysis, and systems is essential. Students should familiarize themselves with concepts such as Ohm's law, Kirchhoff's laws, and the fundamentals of electromagnetism. Engaging in hands-on projects can significantly enhance technical knowledge. For inspiration, check out Top 10 Projects for BE Electrical Engineering.

4. Familiarity with Software Tools

Modern electrical engineering relies heavily on software for design, simulation, and analysis. Proficiency in tools like MATLAB, Simulink, and CAD software is highly beneficial. Being comfortable with programming languages such as Python or C can also enhance your ability to tackle complex engineering challenges.

5. Problem-Solving Skills

Electrical engineers frequently encounter complex problems that require innovative solutions. Developing strong problem-solving skills enables you to approach challenges methodically, think creatively, and implement effective solutions.

6. Communication Skills

Effective communication is key in engineering. Whether working in teams or presenting projects, being able to articulate ideas clearly is crucial. Electrical engineers often collaborate with professionals from various disciplines, making strong interpersonal skills essential.

7. Project Management

Understanding the principles of project management is important for engineers, as they often work on projects that require careful planning, resource allocation, and time management. Familiarity with project management tools and methodologies can set you apart in the job market.

8. Attention to Detail

In electrical engineering, small errors can have significant consequences. Attention to detail is vital when designing circuits, conducting experiments, or writing reports. Developing a meticulous approach to your work will help you maintain high standards of quality and safety.

9. Continuous Learning

The field of electrical engineering is constantly evolving, with new technologies and methodologies emerging regularly. A commitment to lifelong learning will ensure you stay updated on industry trends and advancements. Exploring additional resources, such as the article on Top Skills for Electrical Engineering Jobs, can further enhance your knowledge.

Conclusion

Succeeding in BE Electrical Engineering requires a combination of technical skills, analytical thinking, and effective communication. By focusing on these key areas and actively seeking opportunities to apply your knowledge through projects and internships, you can position yourself for a successful career in electrical engineering. Remember, continuous improvement and adaptability will serve you well in this ever-changing field.

4 notes

Text

Automated Testing vs. Manual Testing: Which One is Right for Your Project?

High-quality, reliable software is a fundamental requirement in software development. Testing is essential for finding defects and improving performance, both of which lead to a better user experience. Two main methods dominate the field: automated testing and manual testing. Each has its own strengths and weaknesses, depending on the project's requirements and circumstances. Below, we look at the specifics to help you decide which approach best suits your project.

1. What Is Manual Testing?

Manual testing involves a human tester manually executing test cases without using automation tools. Key Characteristics:

Focuses on user interface, usability, and user experience testing.

Suited to work that needs human judgment, such as ad hoc testing, exploratory testing, and other evaluations that call for discretion.

Requires human testers, so it takes substantial time.

2. What Is Automated Testing?

Automated testing uses software tools and scripts to execute test cases. Key Characteristics:

Efficient for repetitive and regression testing.

Requires investment in tools and in developing test scripts.

Reduces human error.
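To make the idea concrete, here is a minimal sketch of an automated test written with Python's built-in unittest module. The function under test, apply_discount, is a hypothetical example invented for illustration; a real project would import its own code instead.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)


def run_suite():
    """Load and run the test case, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Once written, the same checks can be repeated by calling run_suite() after every change, which is where the speed and consistency benefits come from.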

3. Advantages of Manual Testing

Human Intuition: Testers can detect subtle issues through human judgment that automated tools cannot match.

Flexibility: Well suited to exploratory testing, where no predefined scripts exist.

Low Initial Investment: Does not require purchasing tools or building automation frameworks.

Adaptable for UI/UX Testing: Humans evaluate visual elements and usability more effectively than automated tools.

4. Advantages of Automated Testing

Speed: Executes repetitive tests much faster than humans.

Scalability: Most effective for large projects that need frequent updates.

Accuracy: When performing recurring actions, automated systems minimize the chances of human mistakes.

Cost-Efficient in the Long Run: Setup requires a significant upfront investment, but ongoing testing costs decrease over time.

Better for CI/CD Pipelines: Automated tests integrate with the CI/CD pipelines that support agile and DevOps methodologies.

5. Disadvantages of Manual Testing

Time-Consuming: Running repeated tests by hand delays project completion.

Error-Prone: Human testers can easily miss small bugs in large applications.

Not Ideal for Scalability: Scaling up manual testing requires additional testers, which drives up costs.

6. Disadvantages of Automated Testing

Initial Costs: Organizations must invest heavily up front to acquire testing tools and develop test scripts.

Limited to Pre-Defined Scenarios: Automated tests work poorly for exploratory or ad hoc testing.

Requires Maintenance: Test scripts need frequent updates whenever the application changes.

Not Suitable for UI/UX Testing: Struggles with subjective user experience evaluations.

7. When to Use Manual Testing

Small Projects: Manual testing is a low-cost way to assess small applications quickly.

Exploratory Testing: Works well when test scripts have not yet been defined or when newly added features need evaluation.

Visual and Usability Testing: Assessing interface components and design features.

8. When to Use Automated Testing

Large Projects: Handles scalability for projects with frequent updates.

Regression Testing: Automation makes regression testing efficient, because the same suite of tests can be re-run after every update.

Performance Testing: Automation handles load testing and stress testing efficiently.

Continuous Development Environments: Agile and DevOps workflows depend on automation as a core requirement.
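One simple way to picture automated regression testing is a table of previously verified outputs that is re-checked after each change. The sketch below assumes a hypothetical slugify function and a hand-verified baseline. Both are illustrative only, not part of any particular tool.

```python
def slugify(title):
    """Hypothetical function under test: turn a title into a URL slug."""
    cleaned = "".join(c.lower() if c.isalnum() else " " for c in title)
    return "-".join(cleaned.split())


# Outputs assumed to have been verified by hand for an earlier release.
BASELINE = {
    "Hello World": "hello-world",
    "  Manual vs. Automated  ": "manual-vs-automated",
    "CI/CD Pipelines": "ci-cd-pipelines",
}


def find_regressions(func, baseline):
    """Return {input: (expected, actual)} for every case whose output changed."""
    regressions = {}
    for given, expected in baseline.items():
        actual = func(given)
        if actual != expected:
            regressions[given] = (expected, actual)
    return regressions
```

An empty result from find_regressions(slugify, BASELINE) means existing behavior is intact. In a CI/CD setup, a check like this would typically run on every commit.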

READ MORE- https://www.precisio.tech/automated-testing-vs-manual-testing-which-one-is-right-for-your-project/

2 notes

Text

By ensuring your software is meticulously tested for global readiness, we help you deliver seamless user experiences across diverse languages and cultures. Let's transform your product into a global phenomenon, reaching audiences far and wide with precision and reliability. https://bit.ly/3EKzvs2 #SDET #GlobalProduct #LanguageGap #SoftwareTesting #UserExperience #Localization SDET Tech

#Software Testing Companies in India#Software Testing Services in India#Test Automation Development Services#Test Automation Services#Performance testing services#Load testing services#Performance and Load Testing Services#Software Performance Testing Services#Functional Testing Services#Globalization Testing services#Globalization Testing Company#Accessibility testing services

#Agile Testing Services#Mobile Testing Services#Mobile Apps Testing Services#ecommerce performance testing#ecommerce load testing#load and performance testing services#performance testing solutions#product performance testing#application performance testing services#software testing startups#benefits of load testing#agile performance testing methodology#agile testing solutions#mobile testing challenges#cloud based mobile testing#automated mobile testing#performance engineering & testing services#performance testing company#performance testing company in usa

0 notes

Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems
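As a rough sketch of what jitter and packet loss testing measures, the Python below computes a running interarrival jitter estimate in the style of the formula in RFC 3550 (the RTP specification), plus a simple loss percentage. The function names and the timestamp values are assumptions for illustration. Real tools read these values from captured RTP traffic.

```python
def interarrival_jitter(send_times_ms, recv_times_ms):
    """Running jitter estimate in the style of RFC 3550 (all times in ms)."""
    jitter = 0.0
    for i in range(1, len(send_times_ms)):
        # Change in transit time between two consecutive packets.
        send_gap = send_times_ms[i] - send_times_ms[i - 1]
        recv_gap = recv_times_ms[i] - recv_times_ms[i - 1]
        d = recv_gap - send_gap
        # Smooth the estimate with a gain of 1/16, as RFC 3550 does.
        jitter += (abs(d) - jitter) / 16
    return jitter


def packet_loss_percent(packets_sent, packets_received):
    """Share of packets that never arrived, as a percentage."""
    if packets_sent == 0:
        return 0.0
    return 100.0 * (packets_sent - packets_received) / packets_sent


# Illustrative values: packets sent every 20 ms, arriving with a constant delay.
steady = interarrival_jitter([0, 20, 40, 60], [50, 70, 90, 110])
```

A constant delay yields zero jitter, because jitter reflects variation in delay rather than the delay itself.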

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

2 notes

Text

Mastering Agile Methodology in Software Testing

Delve into the world of Agile Methodology in Software Testing with Agile Ethos. Discover how our team, driven by a passion for innovation, embraces Agile principles to enhance software testing practices. Learn about our commitment to fostering collaboration, adaptability, and delivering high-quality software solutions. Explore the agile testing techniques and strategies that set us apart in the dynamic landscape of software development.

0 notes

Text

Econometrics Demystified: The Ultimate Compilation of Top 10 Study Aids

Welcome to the world of econometrics, where economic theories meet statistical methods to analyze and interpret data. If you're a student navigating through the complexities of econometrics, you know how challenging it can be to grasp the intricacies of this field. Fear not! This blog is your ultimate guide to the top 10 study aids that will demystify econometrics and make your academic journey smoother.

Economicshomeworkhelper.com – Your Go-To Destination

Let's kick off our list with the go-to destination for all your econometrics homework and exam needs – https://www.economicshomeworkhelper.com/. With a team of experienced experts, this website is dedicated to providing high-quality assistance tailored to your specific requirements. Whether you're struggling with regression analysis or hypothesis testing, the experts at Economicshomeworkhelper.com have got you covered. When in doubt, remember to visit the website and say, "Write My Econometrics Homework."

Econometrics Homework Help: Unraveling the Basics

Before delving into the intricacies, it's crucial to build a strong foundation in the basics of econometrics. Websites offering econometrics homework help, such as Khan Academy and Coursera, provide comprehensive video tutorials and interactive lessons to help you grasp fundamental concepts like linear regression, correlation, and statistical inference.
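To give a feel for two of those fundamentals, here is a minimal Python sketch of simple linear regression (ordinary least squares with one regressor) and the correlation coefficient, using only the standard library. The data points are invented for illustration, and real coursework would normally use a statistics package.

```python
import math


def ols_fit(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def correlation(x, y):
    """Pearson correlation coefficient between x and y."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / math.sqrt(sxx * syy)


# Made-up data lying exactly on the line y = 1 + 2x.
intercept, slope = ols_fit([1, 2, 3, 4], [3, 5, 7, 9])
```

With data that lie exactly on a line, the fit recovers the intercept and slope and the correlation is 1. Real data add noise, which is where statistical inference comes in.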

The Econometrics Academy: Online Courses for In-Depth Learning

For those seeking a more immersive learning experience, The Econometrics Academy offers online courses that cover a wide range of econometrics topics. These courses, often led by seasoned professors, provide in-depth insights into advanced econometric methods, ensuring you gain a deeper understanding of the subject.

"Mastering Metrics" by Joshua D. Angrist and Jörn-Steffen Pischke

No compilation of study aids would be complete without mentioning authoritative books, and "Mastering Metrics" is a must-read for econometrics enthusiasts. Authored by two renowned economists, Joshua D. Angrist and Jörn-Steffen Pischke, this book breaks down complex concepts into digestible chapters, making it an invaluable resource for both beginners and advanced learners.

Econometrics Forums: Join the Conversation

Engaging in discussions with fellow econometrics students and professionals can enhance your understanding of the subject. Platforms like Econometrics Stack Exchange and Reddit's econometrics community provide a space for asking questions, sharing insights, and gaining valuable perspectives. Don't hesitate to join the conversation and expand your econometrics network.

Gretl: Your Free Econometrics Software

Practical application is key in econometrics, and Gretl is the perfect tool for hands-on learning. This free and open-source software allows you to perform a wide range of econometric analyses, from simple regressions to advanced time-series modeling. Download Gretl and take your econometrics skills to the next level.

Econometrics Journal Articles: Stay Updated

Staying abreast of the latest developments in econometrics is essential for academic success. Explore journals such as the "Journal of Econometrics" and "Econometrica" to access cutting-edge research and gain insights from scholars in the field. Reading journal articles not only enriches your knowledge but also equips you with the latest methodologies and approaches.

Econometrics Bloggers: Learn from the Pros

Numerous econometrics bloggers share their expertise and experiences online, offering valuable insights and practical tips. Follow blogs like "The Unassuming Economist" and "Econometrics by Simulation" to benefit from the expertise of professionals who simplify complex econometric concepts through real-world examples and applications.

Econometrics Software Manuals: Master the Tools

While tools like Stata, R, and Python are indispensable for econometric analysis, navigating them can be challenging. Refer to the manuals and documentation these tools provide to master their functionality. Understanding the tools at your disposal will empower you to apply econometric techniques with confidence.

Econometrics Webinars and Workshops: Continuous Learning

Finally, take advantage of webinars and workshops hosted by academic institutions and industry experts. These events provide opportunities to deepen your knowledge, ask questions, and engage with professionals in the field. Check out platforms like Econometric Society and DataCamp for upcoming events tailored to econometrics enthusiasts.

Conclusion

Embarking on your econometrics journey doesn't have to be daunting. With the right study aids, you can demystify the complexities of this field and excel in your academic pursuits. Remember to leverage online resources, engage with the econometrics community, and seek assistance when needed. And when the workload becomes overwhelming, don't hesitate to visit Economicshomeworkhelper.com and say, "Write My Econometrics Homework" – your trusted partner in mastering econometrics. Happy studying!

13 notes
