#sql general data types


Ever since OpenAI released ChatGPT at the end of 2022, hackers and security researchers have tried to find holes in large language models (LLMs) to get around their guardrails and trick them into spewing out hate speech, bomb-making instructions, propaganda, and other harmful content. In response, OpenAI and other generative AI developers have refined their system defenses to make it more difficult to carry out these attacks. But as the Chinese AI platform DeepSeek rockets to prominence with its new, cheaper R1 reasoning model, its safety protections appear to be far behind those of its established competitors.

Today, security researchers from Cisco and the University of Pennsylvania are publishing findings showing that, when tested with 50 malicious prompts designed to elicit toxic content, DeepSeek’s model did not detect or block a single one. In other words, the researchers say they were shocked to achieve a “100 percent attack success rate.”

The findings are part of a growing body of evidence that DeepSeek’s safety and security measures may not match those of other tech companies developing LLMs. DeepSeek’s censorship of subjects deemed sensitive by China’s government has also been easily bypassed.

“A hundred percent of the attacks succeeded, which tells you that there’s a trade-off,” DJ Sampath, the VP of product, AI software and platform at Cisco, tells WIRED. “Yes, it might have been cheaper to build something here, but the investment has perhaps not gone into thinking through what types of safety and security things you need to put inside of the model.”

Other researchers have had similar findings. Separate analysis published today by the AI security company Adversa AI and shared with WIRED also suggests that DeepSeek is vulnerable to a wide range of jailbreaking tactics, from simple language tricks to complex AI-generated prompts.

DeepSeek, which has been dealing with an avalanche of attention this week and has not spoken publicly about a range of questions, did not respond to WIRED’s request for comment about its model’s safety setup.

Generative AI models, like any technological system, can contain a host of weaknesses or vulnerabilities that, if exploited or set up poorly, can allow malicious actors to conduct attacks against them. For the current wave of AI systems, indirect prompt injection attacks are considered one of the biggest security flaws. These attacks involve an AI system taking in data from an outside source (perhaps hidden instructions on a website the LLM summarizes) and taking actions based on that information.

Jailbreaks, which are one kind of prompt-injection attack, allow people to get around the safety systems put in place to restrict what an LLM can generate. Tech companies don’t want people creating guides to making explosives or using their AI to create reams of disinformation, for example.

Jailbreaks started out simple, with people essentially crafting clever sentences to tell an LLM to ignore content filters—the most popular of which was called “Do Anything Now” or DAN for short. However, as AI companies have put in place more robust protections, some jailbreaks have become more sophisticated, often being generated using AI or using special and obfuscated characters. While all LLMs are susceptible to jailbreaks, and much of the information could be found through simple online searches, chatbots can still be used maliciously.

“Jailbreaks persist simply because eliminating them entirely is nearly impossible—just like buffer overflow vulnerabilities in software (which have existed for over 40 years) or SQL injection flaws in web applications (which have plagued security teams for more than two decades),” Alex Polyakov, the CEO of security firm Adversa AI, told WIRED in an email.

Cisco’s Sampath argues that as companies use more types of AI in their applications, the risks are amplified. “It starts to become a big deal when you start putting these models into important complex systems and those jailbreaks suddenly result in downstream things that increases liability, increases business risk, increases all kinds of issues for enterprises,” Sampath says.

The Cisco researchers drew their 50 randomly selected prompts to test DeepSeek’s R1 from a well-known library of standardized evaluation prompts known as HarmBench. They tested prompts from six HarmBench categories, including general harm, cybercrime, misinformation, and illegal activities. They probed the model running locally on machines rather than through DeepSeek’s website or app, which send data to China.

Beyond this, the researchers say they have also seen some potentially concerning results from testing R1 with more involved, non-linguistic attacks using things like Cyrillic characters and tailored scripts to attempt to achieve code execution. But for their initial tests, Sampath says, his team wanted to focus on findings that stemmed from a generally recognized benchmark.

Cisco also included comparisons of R1’s performance against HarmBench prompts with the performance of other models. And some, like Meta’s Llama 3.1, faltered almost as severely as DeepSeek’s R1. But Sampath emphasizes that DeepSeek’s R1 is a specific reasoning model, which takes longer to generate answers but pulls upon more complex processes to try to produce better results. Therefore, Sampath argues, the best comparison is with OpenAI’s o1 reasoning model, which fared the best of all models tested. (Meta did not immediately respond to a request for comment.)

Polyakov, from Adversa AI, explains that DeepSeek appears to detect and reject some well-known jailbreak attacks, saying that “it seems that these responses are often just copied from OpenAI’s dataset.” However, Polyakov says that in his company’s tests of four different types of jailbreaks—from linguistic ones to code-based tricks—DeepSeek’s restrictions could easily be bypassed.

“Every single method worked flawlessly,” Polyakov says. “What’s even more alarming is that these aren’t novel ‘zero-day’ jailbreaks—many have been publicly known for years,” he says, claiming he saw the model go into more depth with some instructions around psychedelics than he had seen any other model create.

“DeepSeek is just another example of how every model can be broken—it’s just a matter of how much effort you put in. Some attacks might get patched, but the attack surface is infinite,” Polyakov adds. “If you’re not continuously red-teaming your AI, you’re already compromised.”


is there a way to solve this sql issue. I've run into it a few times and I wish i had a way to future-proof my queries.

we have a few tables for data that is somewhat universal on our site. for example file_attachments. you can attach files to almost every internal page on our site. file_attachments has an id column for each attachment's unique ID number, but attachee_id is used to join this table on anything.

the problem is, our system uses the same series of numbers for various kinds of IDs. 123456 can be (and is) an ID for items, purchase orders, sales orders, customers, quotes, etc. so to correctly join file_attachments to the items table, i need to join file_attachments.attachee_id to items.id and then filter file_attachments.attachment_type = 'item'. attachment_types do NOT have their own series of id numbers. i have to filter this column using words. then, sometimes our dev team decides to change our terminology, which breaks all my queries. they sometimes do this accidentally by adding extra spaces before, after, or between words. though TRIM can generally resolve that.

is there anything I might be able to do to avoid filtering with words?

i was thinking about doing something to identify the rows for the first instance of each attachment_type and then assign row numbers to the results (sorted by file_attachments.id), thus creating permanent IDs i can use regardless of whether anyone alters the names used for attachment_type, or eventually adds new types. but idk if this is a regular issue with databases and whether there's a generally accepted way to deal with it.
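The conventional fix is a lookup (reference) table with integer keys. Ideally the dev team owns an attachment_types table and file_attachments references it by foreign key; if the schema can't change, an analyst-owned mapping table gets you most of the way. A minimal sketch of that, in SQLite so it runs anywhere, follows. It seeds the stable numbers with ROW_NUMBER() ordered by each type's first file_attachments.id, the idea from the question, and then only ever adds rows. The table name attachment_type_map, the ITEM_TYPE_ID constant, and the sample data are hypothetical. ROW_NUMBER() also exists in MySQL 8+, PostgreSQL, and SQL Server. Note that TRIM only strips leading and trailing spaces, so a doubled space between words would still show up as a new spelling.

```python
import sqlite3

def build_demo_db() -> sqlite3.Connection:
    """Toy copy of the schema described above; contents are made up."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE file_attachments (
            id INTEGER PRIMARY KEY,
            attachee_id INTEGER,
            attachment_type TEXT,
            filename TEXT
        );
        -- 123456 is both an item id and a customer id, as in the question
        INSERT INTO items VALUES (123456, 'Widget');
        INSERT INTO customers VALUES (123456, 'Acme Corp');
        INSERT INTO file_attachments VALUES
            (1, 123456, 'item', 'spec.pdf'),
            (2, 123456, 'customer', 'contract.pdf'),
            (3, 123456, ' Item ', 'photo.jpg');
    """)
    return conn

def seed_type_map(conn: sqlite3.Connection) -> None:
    """Create a hypothetical analyst-owned lookup table, numbered once.

    Each distinct normalized type name gets a number via ROW_NUMBER(),
    ordered by the first file_attachments.id that used it. The numbers are
    never recomputed afterwards, so they stay stable."""
    conn.executescript("""
        CREATE TABLE attachment_type_map (
            normalized_name TEXT PRIMARY KEY,
            type_id INTEGER NOT NULL
        );
        INSERT INTO attachment_type_map (normalized_name, type_id)
        SELECT name, ROW_NUMBER() OVER (ORDER BY first_id)
        FROM (
            SELECT LOWER(TRIM(attachment_type)) AS name, MIN(id) AS first_id
            FROM file_attachments
            GROUP BY LOWER(TRIM(attachment_type))
        );
    """)

ITEM_TYPE_ID = 1  # assigned by seed_type_map: 'item' is the first type seen

def item_attachments(conn: sqlite3.Connection) -> list:
    """Join attachments to items by the stable number, not a literal word."""
    return conn.execute("""
        SELECT i.name, fa.filename
        FROM file_attachments AS fa
        JOIN attachment_type_map AS m
          ON m.normalized_name = LOWER(TRIM(fa.attachment_type))
        JOIN items AS i ON i.id = fa.attachee_id
        WHERE m.type_id = ?
        ORDER BY fa.id
    """, (ITEM_TYPE_ID,)).fetchall()

conn = build_demo_db()
seed_type_map(conn)
print(item_attachments(conn))  # [('Widget', 'spec.pdf'), ('Widget', 'photo.jpg')]

# If 'item' is later renamed to 'Product', only the map needs a new row:
conn.execute("INSERT INTO file_attachments VALUES (4, 123456, 'Product', 'manual.pdf')")
conn.execute("INSERT INTO attachment_type_map VALUES ('product', ?)", (ITEM_TYPE_ID,))
print(item_attachments(conn))
```

Because the join to the map is an inner join, a brand-new spelling would silently drop rows. A periodic check for attachment types missing from the map (a LEFT JOIN where m.type_id IS NULL) flags those before they cause trouble.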


What Is Python, and How Do You Learn It?

What is Python?

Python is a high-level, interpreted programming language known for its simplicity and readability. It is widely used in various fields, such as:

✅ Web Development (Django, Flask)

✅ Data Science & Machine Learning (Pandas, NumPy, TensorFlow)

✅ Automation & Scripting (Web scraping, File automation)

✅ Game Development (Pygame)

✅ Cybersecurity & Ethical Hacking

✅ Embedded Systems & IoT (MicroPython)

Python is beginner-friendly because of its easy-to-read syntax, large community, and vast library support.

How Long Does It Take to Learn Python?

The time required to learn Python depends on your goals and background. Here’s a general breakdown:

1. Basics of Python (1-2 months)

If you spend 1-2 hours daily, you can learn:

Variables, Data Types, Operators

Loops & Conditionals

Functions & Modules

Lists, Tuples, Dictionaries

File Handling

Basic Object-Oriented Programming (OOP)
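To make the basics list concrete, here is a short script that touches each topic once: variables and operators, a list, tuple, and dictionary, a function, a loop with a conditional, a small class, and writing and reading a file. The names and values are made up for illustration. The file goes into a temporary directory so nothing is left behind.

```python
import tempfile               # modules: reuse code from the standard library
from pathlib import Path

# Variables, data types, operators
course = "Python Basics"      # str
weeks = 8                     # int
hours_per_day = 1.5           # float
total_hours = weeks * 7 * hours_per_day   # 8 * 7 * 1.5 = 84.0

# Lists, tuples, dictionaries
topics = ["variables", "loops", "functions", "files"]   # list: ordered, mutable
level = ("basics", 1)                                   # tuple: ordered, immutable
progress = {topic: False for topic in topics}           # dict: topic -> finished?

# Functions
def mark_done(progress: dict, topic: str) -> int:
    """Mark one topic as finished and return how many are finished so far."""
    progress[topic] = True
    return sum(progress.values())   # True counts as 1, False as 0

# Loops and conditionals
for topic in topics[:2]:
    done = mark_done(progress, topic)
    if done == len(topics):
        print("All topics finished!")
    else:
        print(f"{topic} done ({done}/{len(topics)})")

# Basic object-oriented programming
class Learner:
    def __init__(self, name: str):
        self.name = name
        self.completed = []

    def complete(self, topic: str) -> None:
        self.completed.append(topic)

# File handling: save finished topics to a temporary file and read them back
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "progress.txt"
    path.write_text("\n".join(t for t, finished in progress.items() if finished))
    saved = path.read_text().splitlines()

learner = Learner("Asha")     # hypothetical learner
for topic in saved:
    learner.complete(topic)
print(learner.name, learner.completed)
```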

2. Intermediate Level (2-4 months)

Once comfortable with basics, focus on:

Advanced OOP concepts

Exception Handling

Working with APIs & Web Scraping

Database handling (SQL, SQLite)

Python Libraries (Requests, Pandas, NumPy)

Small real-world projects
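Here is a minimal sketch combining three of the intermediate topics above: exception handling, parsing JSON such as an API response body, and storing records with Python's built-in sqlite3 module. The table and records are hypothetical. In a real project, an HTTP call (for example with Requests) would supply the JSON string.

```python
import json
import sqlite3

def load_scores(raw: str) -> list:
    """Parse a JSON list of records (e.g. an API response body) with clear errors."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        # Re-raise as a simpler error type, keeping the original as the cause
        raise ValueError(f"invalid JSON at position {exc.pos}") from exc
    if not isinstance(data, list):
        raise ValueError("expected a JSON list of records")
    return data

def store_scores(conn: sqlite3.Connection, records: list) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS scores ("
        "student TEXT PRIMARY KEY, score INTEGER NOT NULL)"
    )
    # The connection as a context manager commits on success and
    # rolls back if an exception escapes the block.
    with conn:
        conn.executemany(
            "INSERT INTO scores (student, score) VALUES (:student, :score)", records
        )

def average_score(conn: sqlite3.Connection) -> float:
    (avg,) = conn.execute("SELECT AVG(score) FROM scores").fetchone()
    return avg

conn = sqlite3.connect(":memory:")
store_scores(conn, load_scores('[{"student": "Asha", "score": 90}, {"student": "Ravi", "score": 70}]'))

try:
    # "Asha" already exists, so the PRIMARY KEY rejects this row
    store_scores(conn, [{"student": "Asha", "score": 10}])
except sqlite3.IntegrityError as exc:
    print("Rejected:", exc)

try:
    load_scores("{not json")
except ValueError as exc:
    print("Rejected:", exc)

print(average_score(conn))  # 80.0
```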

3. Advanced Python & Specialization (6+ months)

If you want to go pro, specialize in:

Data Science & Machine Learning (Matplotlib, Scikit-Learn, TensorFlow)

Web Development (Django, Flask)

Automation & Scripting

Cybersecurity & Ethical Hacking

Learning Plan Based on Your Goal

📌 Casual Learning – 3-6 months (for automation, scripting, or general knowledge)

📌 Professional Development – 6-12 months (for jobs in software, data science, etc.)

📌 Deep Mastery – 1-2 years (for AI, ML, complex projects, research)

Scope @ NareshIT:

NareshIT’s Python Application Development program gives you extensive hands-on training in front-end, middleware, and back-end technologies.

The program builds your skills through phase-end and capstone projects based on real business scenarios.

Here you learn the concepts from leading industry experts, with content structured to ensure industry relevance.

An end-to-end application with exciting features

Earn an industry-recognized course completion certificate.

For more details:

#classroom#python#education#learning#teaching#institute#marketing#study motivation#studying#onlinetraining


Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

To use PySpark, you need to install it and set up a Spark session. Once initialized, Spark allows users to read, process, and analyze large datasets.

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.
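Partitioning and broadcast joins are easier to understand in miniature. The sketch below is plain Python and does not use PySpark's API. It round-robins a "large" table into partitions, builds one lookup copy of a "small" table, and joins each partition against that copy locally. That local join is why a broadcast join avoids shuffling the large side across the cluster. In PySpark itself, the hint is pyspark.sql.functions.broadcast, as in orders_df.join(broadcast(countries_df), "country_code"). Spark may also pick this strategy automatically for tables below spark.sql.autoBroadcastJoinThreshold. The table and column names here are hypothetical.

```python
def split_into_partitions(rows: list, num_partitions: int) -> list:
    """Round-robin rows into partitions (roughly like repartition(n) without a column)."""
    partitions = [[] for _ in range(num_partitions)]
    for i, row in enumerate(rows):
        partitions[i % num_partitions].append(row)
    return partitions

def broadcast_join(large_partitions: list, small_rows: list, key: str) -> list:
    """Inner-join each partition of a large table against a full copy of a small one.

    The small side is built into a dict once (the "broadcast" copy), so every
    partition can be joined locally without reshuffling the large table by key.
    """
    lookup = {row[key]: row for row in small_rows}   # copy sent to every worker
    joined = []
    for partition in large_partitions:               # Spark would run these in parallel
        for row in partition:
            match = lookup.get(row[key])
            if match is not None:                    # inner join: drop unmatched rows
                joined.append({**row, **match})
    return joined

orders = [  # hypothetical "large" fact table
    {"order_id": 1, "country_code": "IN", "amount": 10},
    {"order_id": 2, "country_code": "US", "amount": 25},
    {"order_id": 3, "country_code": "IN", "amount": 5},
    {"order_id": 4, "country_code": "FR", "amount": 7},
]
countries = [  # hypothetical "small" dimension table
    {"country_code": "IN", "country": "India"},
    {"country_code": "US", "country": "United States"},
]

result = broadcast_join(split_into_partitions(orders, 2), countries, "country_code")
for row in result:
    print(row)
```

Broadcasting only pays off when the small table fits comfortably in each worker's memory. For two large tables, Spark falls back to shuffle-based joins.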

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.

Running PySpark on a Cluster

PySpark can run on a single machine or be deployed on a cluster through a cluster manager such as Hadoop YARN, Kubernetes, or Spark's own standalone manager. This enables large-scale data processing with improved efficiency.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For free programming-language tutorials, visit https://www.tpointtech.com/


Protect Your Laravel APIs: Common Vulnerabilities and Fixes

API Vulnerabilities in Laravel: What You Need to Know

As web applications evolve, securing APIs becomes a critical aspect of overall cybersecurity. Laravel, being one of the most popular PHP frameworks, provides many features to help developers create robust APIs. However, like any software, APIs in Laravel are susceptible to certain vulnerabilities that can leave your system open to attack.

In this blog post, we’ll explore common API vulnerabilities in Laravel and how you can address them, using practical coding examples. Additionally, we’ll introduce our free Website Security Checker tool, which can help you assess and protect your web applications.

Common API Vulnerabilities in Laravel

Laravel APIs, like any other API, can suffer from common security vulnerabilities if not properly secured. Some of these vulnerabilities include:

>> SQL Injection

SQL injection attacks occur when an attacker manipulates an SQL query so that it runs SQL of the attacker's choosing. If a Laravel API builds queries by concatenating raw user input into SQL strings, this type of vulnerability can be exploited.

Example Vulnerability:

$user = DB::select("SELECT * FROM users WHERE username = '" . $request->input('username') . "'");

Solution: Laravel’s query builder and Eloquent ORM use PDO parameter binding, so user input is sent separately from the SQL text and cannot change the structure of the query. Use them instead of string concatenation:

$user = DB::table('users')->where('username', $request->input('username'))->first();
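The difference between the two snippets above comes down to concatenation versus parameter binding. The same effect can be reproduced outside Laravel in a few lines of Python with the built-in sqlite3 module. This is an illustrative sketch with a made-up users table, not Laravel code.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

def find_user_unsafe(username: str) -> list:
    # Vulnerable: the input is pasted into the SQL text itself
    sql = "SELECT username FROM users WHERE username = '" + username + "' ORDER BY id"
    return conn.execute(sql).fetchall()

def find_user_safe(username: str) -> list:
    # Parameter binding: the value is sent separately and never parsed as SQL
    return conn.execute(
        "SELECT username FROM users WHERE username = ? ORDER BY id", (username,)
    ).fetchall()

attack = "nobody' OR '1'='1"
print(find_user_unsafe(attack))  # [('alice',), ('bob',)]: every row leaks
print(find_user_safe(attack))    # []: treated as a literal, nonexistent username
```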

>> Cross-Site Scripting (XSS)

XSS attacks happen when an attacker injects malicious scripts into web pages, which can then be executed in the browser of a user who views the page.

Example Vulnerability:

return response()->json(['message' => $request->input('message')]);

Solution: Always validate user input and escape dynamic content before it is rendered as HTML. Blade’s {{ }} syntax escapes output in views automatically; for JSON responses, you can HTML-escape values with Laravel’s e() helper:

return response()->json(['message' => e($request->input('message'))]);

>> Improper Authentication and Authorization

Without proper authentication, unauthorized users may gain access to sensitive data. Similarly, improper authorization can allow users to perform actions they shouldn't be able to.

Example Vulnerability:

Route::post('update-profile', 'UserController@updateProfile');

Solution: Always use Laravel’s built-in authentication middleware to protect sensitive routes:

Route::middleware('auth:api')->post('update-profile', 'UserController@updateProfile');

>> Insecure API Endpoints

Exposing too many endpoints or sensitive data can create a security risk. It’s important to limit access to API routes and use proper HTTP methods for each action.

Example Vulnerability:

Route::get('user-details', 'UserController@getUserDetails');

Solution: Restrict sensitive routes to authenticated users and use proper HTTP methods like GET, POST, PUT, and DELETE:

Route::middleware('auth:api')->get('user-details', 'UserController@getUserDetails');

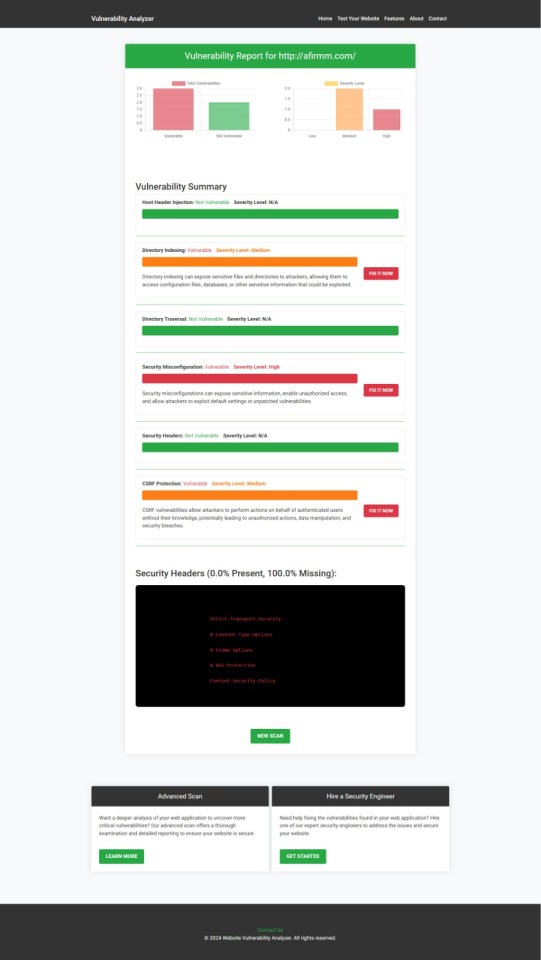

How to Use Our Free Website Security Checker Tool

If you're unsure about the security posture of your Laravel API or any other web application, we offer a free Website Security Checker tool. This tool allows you to perform an automatic security scan on your website to detect vulnerabilities, including API security flaws.

Step 1: Visit our free Website Security Checker at https://free.pentesttesting.com.

Step 2: Enter your website URL and click "Start Test".

Step 3: Review the comprehensive vulnerability assessment report to identify areas that need attention.

Screenshot of the free tools webpage where you can access security assessment tools.

Example Report: Vulnerability Assessment

Once the scan is completed, you'll receive a detailed report that highlights any vulnerabilities, such as SQL injection risks, XSS vulnerabilities, and issues with authentication. This will help you take immediate action to secure your API endpoints.

An example of a vulnerability assessment report generated with our free tool provides insights into possible vulnerabilities.

Conclusion: Strengthen Your API Security Today

API vulnerabilities in Laravel are common, but with the right precautions and coding practices, you can protect your web application. Always validate and escape user input, use parameter binding for queries, implement strong authentication mechanisms, and protect sensitive routes. Additionally, take advantage of our free tool to check your website for vulnerabilities and keep your Laravel APIs secure.

For more information on securing your Laravel applications, try our Website Security Checker.

#cyber security#cybersecurity#data security#pentesting#security#the security breach show#laravel#php#api


Hmm. Not sure if my perfectionism is acting up, but... is there a reasonably isolated way to test SQL?

In particular, I want a test (or set of tests) that checks the following conditions:

The generated SQL is syntactically valid, for a given range of possible parameters.

The generated SQL agrees with the database schema, in that it doesn't reference columns or tables which don't exist. (Reduces typos and helps with deprecating parts of the schema)

The generated SQL performs joins and filtering in the way that I want. I'm fine if this is more a list of reasonable cases than a check of all possible cases.

Some kind of performance test? Especially if it's joining large tables.

I don't care much about the shape of the output data. That's well-handled by parsing and type checking!

I don't think I want to perform queries against a live database, but I'm very flexible on this point.
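One way to cover the first three checks without a live database is to load a schema-only copy into an in-memory SQLite database and ask SQLite to plan each generated query. Here is a minimal sketch, assuming your generated SQL is (close to) SQLite-compatible; the tables and the orders_sql generator are hypothetical stand-ins. EXPLAIN QUERY PLAN makes SQLite parse the statement and resolve every table and column name without executing the query. Syntax errors and references to missing columns therefore fail immediately, and a few fixture rows cover the join and filter cases.

```python
import sqlite3

# Schema-only copy of the database (hypothetical tables); no real data involved.
SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total REAL NOT NULL
);
CREATE INDEX idx_orders_customer ON orders(customer_id);
"""

def make_test_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn

def orders_sql(min_total=None) -> str:
    """Hypothetical query generator under test."""
    sql = ("SELECT c.name, o.total FROM orders AS o "
           "JOIN customers AS c ON c.id = o.customer_id "
           "WHERE c.id = ?")
    if min_total is not None:
        sql += " AND o.total >= ?"
    return sql + " ORDER BY o.id"

def compile_error(conn, sql, params=()):
    """Return None if SQLite can prepare the query, else the error message."""
    try:
        conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    except sqlite3.Error as exc:
        return str(exc)
    return None

def query_plan(conn, sql, params=()) -> list:
    """Human-readable plan lines (last column of EXPLAIN QUERY PLAN rows)."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

conn = make_test_db()

# Checks 1 and 2: syntax and schema, over a range of parameters
for min_total in (None, 0, 99.5):
    params = (1,) if min_total is None else (1, min_total)
    assert compile_error(conn, orders_sql(min_total), params) is None
print(compile_error(conn, "SELECT missing_col FROM orders"))  # no such column: missing_col

# Check 3: join/filter behaviour on a few hand-written fixture rows
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Bob")])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                 [(1, 1, 5.0), (2, 1, 20.0), (3, 2, 50.0)])
assert conn.execute(orders_sql(10), (1, 10)).fetchall() == [("Ada", 20.0)]

# Check 4 (rough): inspect the plan for full-table scans or missing indexes
for line in query_plan(conn, orders_sql(), (1,)):
    print(line)
```

For PostgreSQL or MySQL, the same pattern works with plain EXPLAIN against a schema-only database, for example one started in a container for the test run, because EXPLAIN without ANALYZE plans a query without executing it. A plan on a nearly empty database is only a rough performance signal; real timings need realistic data volumes.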


Learn Data Analytics: Grow Your Skills, Build a "Truth Future"

In a world increasingly shaped by information, being able to understand and interpret data isn't just a specialized skill anymore; it's a crucial ability for navigating today’s landscape. At its heart, data analytics involves digging into raw data to uncover meaningful patterns, draw insightful conclusions, and guide decision-making. When individuals get a handle on this discipline, they can turn raw numbers into actionable insights, paving the way for a more predictable and 'truthful' future for themselves and the organizations they work with. This article covers the compelling reasons to learn data analytics, highlighting the key skills involved and how they help build a future rooted in verifiable facts.

The Foundational Power of Data Literacy

At the heart of a data-driven future lies data literacy – the ability to read, understand, create, and communicate data as information. This fundamental understanding is the first step towards leveraging analytics effectively. Without it, individuals and businesses risk making decisions based on intuition or outdated information, which can lead to missed opportunities and significant errors.

Understanding Data's Language

Learning data analytics begins with grasping how data is generated and structured. This involves:

Data Sources: Recognizing where data comes from, whether it's from website clicks, sales transactions, sensor readings, or social media interactions.

Data Types: Differentiating between numerical, categorical, textual, and temporal data, as each requires different analytical approaches.

Data Quality: Appreciating the importance of clean, accurate, and complete data. Flawed data inevitably leads to flawed conclusions, rendering efforts useless.

Essential Skills for Data Analytics Growth

To truly build a "truth future" through data, you need a blend of technical proficiency, analytical thinking, and effective communication.

Technical Proficiencies

The journey into data analytics necessitates acquiring specific technical skills:

Statistical Foundations: A solid understanding of statistical concepts (e.g., probability, hypothesis testing, regression) is crucial for interpreting data accurately and building robust models.

Programming Languages: Python and R are the industry standards. They offer powerful libraries for data manipulation, statistical analysis, machine learning, and visualization. Proficiency in at least one of these is non-negotiable.

Database Management: SQL (Structured Query Language) skills are vital for querying, extracting, and managing data from relational databases, which are the backbone of many business operations.

Data Visualization Tools: Tools like Tableau, Power BI, or Qlik Sense enable analysts to transform complex datasets into intuitive charts, graphs, and dashboards, making insights accessible to non-technical audiences.

Analytical Thinking and Problem-Solving

Beyond tools, the analytical mindset is paramount. This involves:

Critical Thinking: The ability to question assumptions, identify biases, and evaluate the validity of data and its interpretations.

Problem Framing: Defining business problems clearly and translating them into analytical questions that can be answered with data.

Pattern Recognition: The knack for identifying trends, correlations, and anomalies within datasets that might not be immediately obvious.

Communication Skills

Even the most profound data insights are useless if they cannot be effectively communicated.

Storytelling with Data: Presenting findings in a compelling narrative that highlights key insights and their implications for decision-making.

Stakeholder Management: Understanding the needs and questions of different audiences (e.g., executives, marketing teams, operations managers) and tailoring presentations accordingly.

Collaboration: Working effectively with cross-functional teams to integrate data insights into broader strategies.

Making the "Truth Future": Applications of Data Analytics

The skills acquired in data analytics empower individuals to build a future grounded in verifiable facts, impacting various domains.

Business Optimization

In the corporate world, data analytics helps to:

Enhance Customer Understanding: By analyzing purchasing habits, browsing behavior, and feedback, businesses can create personalized experiences and targeted marketing campaigns.

Improve Operational Efficiency: Data can reveal bottlenecks in supply chains, optimize resource allocation, and predict equipment failures, leading to significant cost savings.

Drive Strategic Decisions: Whether it's market entry strategies, product development, or pricing models, analytics provides the evidence base for informed choices, reducing risk and increasing profitability.

Personal Empowerment

Data analytics isn't just for corporations; it can profoundly impact individual lives:

Financial Planning: Tracking spending patterns, identifying savings opportunities, and making informed investment decisions.

Health and Wellness: Analyzing fitness tracker data, sleep patterns, and dietary information to make healthier lifestyle choices.

Career Advancement: Understanding job market trends, in-demand skills, and salary benchmarks to strategically plan career moves and upskilling efforts.

Societal Impact

On a broader scale, data analytics contributes to a more 'truthful' and efficient society:

Public Policy: Governments use data to understand demographic shifts, optimize public services (e.g., transportation, healthcare), and allocate resources effectively.

Scientific Discovery: Researchers analyze vast datasets in fields like genomics, astronomy, and climate science to uncover new knowledge and accelerate breakthroughs.

Urban Planning: Cities leverage data from traffic sensors, public transport usage, and environmental monitors to design more sustainable and livable urban environments.

The demand for skilled data analytics professionals continues to grow across the nation, from the vibrant tech hubs to emerging industrial centers. For those looking to gain a comprehensive and practical understanding of this field, pursuing dedicated training is a highly effective path. Many individuals choose programs that offer hands-on experience and cover the latest tools and techniques. For example, a well-regarded Data analytics training course in Noida, along with similar opportunities in Kanpur, Ludhiana, Moradabad, Delhi, and other cities across India, provides the necessary foundation for a successful career. These courses are designed to equip students with the skills required to navigate and contribute to the data-driven landscape.

Conclusion

Learning data analytics goes beyond just picking up technical skills; it’s really about developing a mindset that looks for evidence, values accuracy, and inspires thoughtful action. By honing these vital abilities, people can not only grasp the intricacies of our digital landscape but also play an active role in shaping a future that’s more predictable, efficient, and fundamentally rooted in truth. In a world full of uncertainties, data analytics provides a powerful perspective that helps us find clarity and navigate a more assured path forward.


What Are the Different Types of Power BI Certification Courses in Pune?

Introduction: Power BI and Its Rising Demand

Data is not just information; it's power. In the current digital era, organizations rely on data visualization and analytics to stay competitive. Power BI, Microsoft's leading business analytics tool, empowers professionals to visualize, analyze, and share insights from data in real time. As businesses increasingly move towards data-driven decisions, the demand for skilled Power BI professionals is soaring.

Types of Power BI Certification Courses in Pune

Beginner-Level Power BI Courses

Ideal for those dipping their toes into data analytics. These courses introduce Power BI’s interface, dashboards, and introductory DAX formulas. Expect hands-on tutorials and interactive visual projects.

Intermediate Power BI Certification Programs

For those with some experience in analytics, intermediate courses delve into data modeling, Power Query transformations, and advanced visualizations. They are the perfect middle ground between theory and industry application.

Advanced Power BI Certification Tracks

Targeting professionals with previous BI experience, advanced tracks concentrate on enterprise-level solutions, Power BI integration with Azure, and embedded analytics. Mastering these modules paves the way for senior data analyst roles.

Microsoft Power BI Certification – The Global Standard

The Microsoft Certified: Power BI Data Analyst Associate credential is the gold standard. Based on the PL-300 exam, it validates your ability to prepare, model, visualize, and analyze data using Power BI. Many Pune institutes structure their training to directly support this certification.

Course Format Options in Pune

Classroom-Based Training

For those who thrive in a physical learning environment, classroom sessions offer instructor support, peer interaction, and a structured learning pace. Several training centers in Pune, especially in areas like Kothrud and Hinjewadi, offer weekend and weekday batches.

Online Instructor-Led Power BI Courses

Blending flexibility with real-time support, these courses offer live virtual classes from certified trainers. They are perfect for working professionals who need schedule flexibility.

Self-Paced Learning Programs

For the self-motivated learner, these programs provide recorded lectures, downloadable resources, and practice datasets. They are often more budget-friendly and can be completed at your own pace.

Duration and Structure of Power BI Certification Courses

Most beginner-level courses last 4–6 weeks, while intermediate and advanced tracks may stretch to 8–12 weeks. Courses generally follow a modular structure, gradually introducing learners to data cleaning, modeling, visualization, and reporting.

Real-Time Projects and Case Studies – What to Expect

Expect projects involving sales dashboards, customer segmentation, and operational analytics. Real-time case studies ensure you are not just learning theory but applying it to business scenarios.

Tools and Technologies Covered Alongside Power BI

Many courses integrate tools like SQL, Excel, Python for data visualization, and Azure Synapse Analytics to give learners a comprehensive BI toolkit.

Benefits of Enrolling in a Power BI Course in Pune

Pune, being a tech hub, offers excellent faculty, practical exposure, and networking opportunities. Students frequently benefit from local internship tie-ups and placement support.

Top Institutes Offering Power BI Certification Courses in Pune

Some well-regarded names include:

Ethans Tech

3RI Technologies

SevenMentor

Edureka (online presence with Pune support)

These institutes boast experienced trainers, solid course material, and placement support.

How to Choose the Right Certification Course for You

Define your learning goal: are you a beginner, a job switcher, or an advanced analyst? Review the syllabus, read reviews, assess trainer credibility, and ask about real-world project work. Don’t forget post-course support and placement help.

Average Cost of Power BI Certification Courses in Pune

Prices range from ₹8,000 to ₹25,000 depending on course level and format. Online courses may offer EMI plans and discounts.

Career Opportunities Post Certification

Certified professionals can land roles like:

Business Intelligence Analyst

Data Visualization Specialist

Power BI Developer

Data Analyst

Companies hiring include TCS, Infosys, Cognizant, and mid-level startups.

Salary Prospects in Pune vs Other Metropolises

A certified Power BI analyst in Pune earns ₹4–7 LPA on average. In contrast, salaries in New York or San Francisco may start from $70,000–$90,000 annually, reflecting cost-of-living differences.

Comparing Pune’s Offerings to Other Metropolises

Power BI Certification Course in Washington

A Power BI certification course in Washington offers cutting-edge content backed by government and commercial collaboration, but the cost of training and living is significantly higher than in Pune.

Power BI Certification Course in San Francisco

Known for its Silicon Valley edge, a Power BI certification course in San Francisco frequently leans into cloud integration and AI-powered BI tools. But again, Pune offers more affordable options with solid content depth.

Power BI Certification Course in New York

Courses in New York emphasize financial and enterprise data analytics. Pune’s growing finance and IT sectors are catching up fast, making it a good contender at a fraction of the price.

Tips to Ace Your Power BI Certification Exam

Practice daily using Microsoft’s sample datasets.

Recreate dashboards from scratch.

Focus on DAX and data modeling.

Join Power BI forums for tips and challenges.

Conclusion – The Road Ahead for BI Professionals

Whether you are in Pune, Washington, San Francisco, or New York, a Power BI certification course can turbocharge your career in data analytics. As businesses embrace data for every decision, certified professionals will continue to be in high demand.

ChatGPT & Data Science: Your Essential AI Co-Pilot

The rise of ChatGPT and other large language models (LLMs) has sparked countless discussions across every industry. In data science, the conversation is particularly nuanced: Is it a threat? A gimmick? Or a revolutionary tool?

The clearest answer? ChatGPT isn't here to replace data scientists; it's here to empower them, acting as an incredibly versatile co-pilot for almost every stage of a data science project.

Think of it less as an all-knowing oracle and more as an exceptionally knowledgeable, tireless assistant that can brainstorm, explain, code, and even debug. Here's how ChatGPT (and similar LLMs) are transforming data science projects and how you can harness their power:

How ChatGPT Transforms Your Data Science Workflow

Problem Framing & Ideation: Struggling to articulate a business problem into a data science question? ChatGPT can help.

"Given customer churn data, what are 5 actionable data science questions we could ask to reduce churn?"

"Brainstorm hypotheses for why our e-commerce conversion rate dropped last quarter."

"Help me define the scope for a project predicting equipment failure in a manufacturing plant."

Data Exploration & Understanding (EDA): This often tedious phase can be streamlined.

"Write Python code using Pandas to load a CSV and display the first 5 rows, data types, and a summary statistics report."

"Explain what 'multicollinearity' means in the context of a regression model and how to check for it in Python."

"Suggest 3 different types of plots to visualize the relationship between 'age' and 'income' in a dataset, along with the Python code for each."

Feature Engineering & Selection: Creating new, impactful features is key, and ChatGPT can spark ideas.

"Given a transactional dataset with 'purchase_timestamp' and 'product_category', suggest 5 new features I could engineer for a customer segmentation model."

"What are common techniques for handling categorical variables with high cardinality in machine learning, and provide a Python example for one."
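To give a feel for what an answer to the feature-engineering prompts above might look like, here is a minimal plain-Python sketch. It assumes a hypothetical list of transactions that uses the `purchase_timestamp` and `product_category` fields from the prompt plus an assumed `customer_id`; the particular features (purchase count, distinct categories, weekend share, days since last purchase) and the frequency encoding for high-cardinality categories are illustrative choices, not a prescribed set.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Hypothetical transactions; customer_id is an assumed extra field
transactions = [
    {"customer_id": "c1", "purchase_timestamp": "2024-03-02T10:15:00", "product_category": "books"},
    {"customer_id": "c1", "purchase_timestamp": "2024-03-09T18:40:00", "product_category": "games"},
    {"customer_id": "c2", "purchase_timestamp": "2024-03-05T09:05:00", "product_category": "books"},
]


def customer_features(rows, as_of):
    """Aggregate per-customer features from timestamped purchases."""
    by_customer = defaultdict(list)
    for row in rows:
        ts = datetime.fromisoformat(row["purchase_timestamp"])
        by_customer[row["customer_id"]].append((ts, row["product_category"]))

    features = {}
    for customer_id, purchases in by_customer.items():
        times = [ts for ts, _ in purchases]
        features[customer_id] = {
            "n_purchases": len(purchases),
            "n_categories": len({category for _, category in purchases}),
            # weekday() is 5 for Saturday and 6 for Sunday
            "weekend_share": sum(ts.weekday() >= 5 for ts in times) / len(times),
            "days_since_last": (as_of - max(times)).days,
        }
    return features


def frequency_encode(values):
    """Replace each category by its relative frequency (one option for high-cardinality columns)."""
    counts = Counter(values)
    return [counts[v] / len(values) for v in values]
```

Calling `customer_features(transactions, as_of=datetime(2024, 3, 10))` returns one feature dictionary per customer. In pandas the same aggregations would usually be a `groupby` on the customer column; the plain-Python version simply keeps every step visible.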

Model Selection & Algorithm Explanation: Navigating the vast world of algorithms becomes easier.

"I'm working on a classification problem with imbalanced data. What machine learning algorithms should I consider, and what are their pros and cons for this scenario?"

"Explain how a Random Forest algorithm works in simple terms, as if you're explaining it to a business stakeholder."

Code Generation & Debugging: This is where ChatGPT shines for many data scientists.

"Write a Python function to perform stratified K-Fold cross-validation for a scikit-learn model, ensuring reproducibility."

"I'm getting a 'ValueError: Input contains NaN, infinity or a value too large for dtype('float64')' in my scikit-learn model. What are common reasons for this error, and how can I fix it?"

"Generate boilerplate code for a FastAPI endpoint that takes a JSON payload and returns a prediction from a pre-trained scikit-learn model."
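An answer to the cross-validation prompt would most likely use scikit-learn's `StratifiedKFold` with `shuffle=True` and a fixed `random_state`. To show what stratification actually does, the dependency-free sketch below (the function name is hypothetical) groups row indices by class and deals them round-robin into folds, so every test fold keeps roughly the class balance of the full dataset. A seeded random generator makes the folds reproducible.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, n_splits=5, seed=42):
    """Yield (train_idx, test_idx) pairs whose class proportions mirror the full dataset.

    A simplified sketch of stratification; scikit-learn's StratifiedKFold is the
    production-ready equivalent.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible folds
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(n_splits)]
    offset = 0
    for label in sorted(by_class):  # sorted for a deterministic class order
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal indices round-robin so each fold gets a near-equal share of this class;
        # carrying the offset across classes keeps overall fold sizes balanced.
        for i, idx in enumerate(indices):
            folds[(offset + i) % n_splits].append(idx)
        offset += len(indices)

    for k in range(n_splits):
        test_idx = sorted(folds[k])
        test_set = set(test_idx)
        train_idx = [i for i in range(len(labels)) if i not in test_set]
        yield train_idx, test_idx
```

With an imbalanced label list such as eight `0`s and two `1`s split into two folds, each test fold receives four `0`s and one `1`, which is exactly the property that plain (unstratified) K-Fold does not guarantee.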

Documentation & Communication: Translating complex technical work into understandable language is vital.

"Write a clear, concise docstring for this Python function that preprocesses text data."

"Draft an executive summary explaining the results of our customer churn prediction model, focusing on business impact rather than technical details."

"Explain the limitations of an XGBoost model in a way that a non-technical manager can understand."

Learning & Skill Development: It's like having a personal tutor at your fingertips.

"Explain the concept of 'bias-variance trade-off' in machine learning with a practical example."

"Give me 5 common data science interview questions about SQL, and provide example answers."

"Create a study plan for learning advanced topics in NLP, including key concepts and recommended libraries."

Important Considerations and Best Practices

While incredibly powerful, remember that ChatGPT is a tool, not a human expert.

Always Verify: Generated code, insights, and especially factual information must always be verified. LLMs can "hallucinate" or provide subtly incorrect information.

Context is King: The quality of the output directly correlates with the quality and specificity of your prompt. Provide clear instructions, examples, and constraints.

Data Privacy is Paramount: NEVER feed sensitive, confidential, or proprietary data into public LLMs. Protecting personal data is not just an ethical imperative but a legal requirement globally. Assume anything you input into a public model may be used for future training or accessible by the provider. For sensitive projects, explore secure, on-premises or private cloud LLM solutions.

Understand the Fundamentals: ChatGPT is an accelerant, not a substitute for foundational knowledge in statistics, machine learning, and programming. You need to understand why a piece of code works, or why a particular algorithm was chosen, to use and debug its outputs effectively.

Iterate and Refine: Don't expect perfect results on the first try. Refine your prompts based on the output you receive.

ChatGPT and its peers are fundamentally changing the daily rhythm of data science. By embracing them as intelligent co-pilots, data scientists can boost their productivity, explore new avenues, and focus their invaluable human creativity and critical thinking on the most complex and impactful challenges. The future of data science is undoubtedly a story of powerful human-AI collaboration.

Data Analyst Interview Questions: A Comprehensive Guide

Preparing for a Data Analyst interview can be difficult, given the broad range of skills required. Interviewers assess technical skill, business knowledge, and problem-solving ability in a variety of ways. This guide will help you understand the kinds of questions you are likely to be asked and how to answer them.

General Data Analyst Interview Questions

These questions help interviewers assess your understanding of the role and your basic approach to data analysis.

Can you describe what a Data Analyst does? A Data Analyst collects, processes, and analyzes data to help businesses make data-driven decisions and identify trends or patterns.

What are the key responsibilities of a Data Analyst? Responsibilities include data collection, data cleaning, exploratory data analysis, reporting insights, and collaborating with stakeholders.

What tools are you most familiar with? Mention tools like Excel, SQL, Python, Tableau, and Power BI, and describe how you have used them in past projects.

What are the different types of data? Describe structured, semi-structured, and unstructured data, using examples such as databases, JSON files, and images or videos.

Technical Data Analyst Interview Questions

Technical questions evaluate your tool knowledge, techniques, and your ability to manipulate and interpret data.

What is the difference between SQL's inner join and left join? An inner join returns only the rows that have matching keys in both tables, whereas a left join returns all rows from the left table plus the matching rows from the right table, with NULLs where there is no match.
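The difference is easiest to see on a tiny example. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `customers` and `orders` tables and their rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ravi'), (3, 'Meera');
    INSERT INTO orders VALUES (10, 1, 250.0), (11, 1, 90.0), (12, 2, 400.0);
""")

# INNER JOIN: only customers that have at least one matching order
inner = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    ORDER BY o.id
""").fetchall()

# LEFT JOIN: every customer; order columns are NULL (None in Python) when unmatched
left = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

print(inner)  # [('Asha', 250.0), ('Asha', 90.0), ('Ravi', 400.0)]
print(left)   # [('Asha', 250.0), ('Asha', 90.0), ('Ravi', 400.0), ('Meera', None)]
```

Meera has no orders, so she disappears from the inner join but survives the left join with a NULL amount.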

How do you deal with missing data in a dataset? Common methods include removing rows, mean/median imputation, and forward-fill/backward-fill, depending on the context and the proportion of missing data.
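In pandas these options correspond to `dropna()`, `fillna()` (with a mean or median), and `ffill()`/`bfill()`. The plain-Python sketch below reimplements each one on a hypothetical series of readings, with `None` standing in for a missing value, so the behavior of each strategy is visible.

```python
from statistics import mean, median


def drop_missing(values):
    """Remove missing entries (None)."""
    return [v for v in values if v is not None]


def impute(values, strategy="mean"):
    """Fill missing entries with the mean or median of the observed values."""
    observed = drop_missing(values)
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v is None else v for v in values]


def forward_fill(values):
    """Carry the last observed value forward; a leading gap stays None."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def backward_fill(values):
    """Carry the next observed value backward; a trailing gap stays None."""
    return forward_fill(values[::-1])[::-1]


# Hypothetical sensor readings with gaps
readings = [None, 12.0, None, 18.0, 30.0, None]
```

Note the edge cases: forward fill cannot fill a leading gap and backward fill cannot fill a trailing one, which is one reason the right choice depends on context (for example, time-ordered data versus independent records).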

Can you describe normalization and why it's significant? Normalization minimizes data redundancy and improves data integrity by organizing data into related tables so that each fact is stored in one place.
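A small illustration, again using the built-in `sqlite3` module with hypothetical tables: a flat orders table repeats each customer's city on every row, while the normalized version stores customer details once and links orders to them by key.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Denormalized: the customer's city is repeated on every order row,
    -- so changing it means updating many rows (an update anomaly).
    CREATE TABLE orders_flat (order_id INTEGER, customer_name TEXT, customer_city TEXT, amount REAL);
    INSERT INTO orders_flat VALUES
        (1, 'Asha', 'Pune', 250.0),
        (2, 'Asha', 'Pune', 90.0),
        (3, 'Ravi', 'Noida', 400.0);

    -- Normalized: customer attributes live in one place; orders reference them by key.
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT UNIQUE, city TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(customer_id),
        amount REAL
    );
    INSERT INTO customers (name, city)
        SELECT DISTINCT customer_name, customer_city FROM orders_flat;
    INSERT INTO orders (order_id, customer_id, amount)
        SELECT f.order_id, c.customer_id, f.amount
        FROM orders_flat AS f JOIN customers AS c ON c.name = f.customer_name;
""")

# A city change is now a single-row update instead of one update per order.
conn.execute("UPDATE customers SET city = 'Mumbai' WHERE name = 'Asha'")
rows = conn.execute("""
    SELECT o.order_id, c.name, c.city
    FROM orders AS o JOIN customers AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()
```

After the single update, both of Asha's orders report the new city, whereas the flat table would still need every matching row changed.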

What are some Python libraries that are frequently used for data analysis? Commonly used libraries include Pandas for data manipulation, NumPy for numerical computation, Matplotlib/Seaborn for visualization, and SciPy for scientific computing.

How would you write a query to find duplicate values in a table? Use GROUP BY on the relevant column or columns together with HAVING COUNT(*) > 1 to return the values that appear more than once.
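A runnable version of that answer, using `sqlite3` and a hypothetical `contacts` table, shows both the single-column and the multi-column case.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE contacts (id INTEGER PRIMARY KEY, email TEXT, city TEXT);
    INSERT INTO contacts (email, city) VALUES
        ('a@example.com', 'Pune'),
        ('b@example.com', 'Noida'),
        ('a@example.com', 'Pune'),
        ('a@example.com', 'Delhi'),
        ('c@example.com', 'Noida');
""")

# Duplicates on a single column: emails that appear more than once
dup_emails = conn.execute("""
    SELECT email, COUNT(*) AS n
    FROM contacts
    GROUP BY email
    HAVING COUNT(*) > 1
""").fetchall()

# Duplicates on a combination of columns: the same (email, city) pair repeated
dup_pairs = conn.execute("""
    SELECT email, city, COUNT(*) AS n
    FROM contacts
    GROUP BY email, city
    HAVING COUNT(*) > 1
""").fetchall()

print(dup_emails)  # [('a@example.com', 3)]
print(dup_pairs)   # [('a@example.com', 'Pune', 2)]
```

Which columns go into GROUP BY defines what counts as a duplicate: the same email appears three times, but only two of those rows are exact (email, city) repeats.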

Behavioral and Situational Data Analyst Interview Questions

These assess your soft skills, work values, and how you handle real situations.

Describe a time when you managed a challenging stakeholder. Explain how you listened actively, identified their requirements, and provided insights that supported business objectives despite communication issues.

Tell us about a project in which you needed to analyze large datasets. Describe how you broke the dataset down into manageable pieces, what tools you used, and what you learned from the analysis.

Upgrade Your Career with the Best Data Analytics Courses in Noida

In a world overflowing with digital information, data is the new oil—and those who can extract, refine, and analyze it are in high demand. The ability to understand and leverage data is now a key competitive advantage in every industry, from IT and finance to healthcare and e-commerce. As a result, data analytics courses have gained massive popularity among job seekers, professionals, and students alike.

If you're located in Delhi-NCR or looking for quality tech education in a thriving urban setting, enrolling in data analytics courses in Noida can give your career the boost it needs.

What is Data Analytics?

Data analytics refers to the science of analyzing raw data to extract meaningful insights that drive decision-making. It involves a range of techniques, tools, and technologies used to discover patterns, predict trends, and identify valuable information.

There are several types of data analytics, including:

Descriptive Analytics: What happened?

Diagnostic Analytics: Why did it happen?

Predictive Analytics: What could happen?

Prescriptive Analytics: What should be done?

Skilled professionals who master these techniques are known as Data Analysts, Data Scientists, and Business Intelligence Experts.
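To make the first and third categories concrete, the sketch below computes a descriptive summary of some hypothetical monthly sales figures and then makes a naive predictive forecast by fitting a least-squares straight line and extrapolating one month ahead. A straight-line trend is a deliberately simple stand-in; real predictive work would use proper time-series or machine learning models and check their accuracy.

```python
from statistics import mean

# Hypothetical monthly sales figures, oldest first
monthly_sales = [12.0, 14.0, 13.0, 17.0, 19.0, 21.0]


def describe(values):
    """Descriptive analytics: summarize what happened."""
    return {
        "total": sum(values),
        "average": mean(values),
        "best_month": values.index(max(values)) + 1,  # 1-based month number
    }


def linear_forecast(values, steps_ahead=1):
    """Predictive analytics (very simplified): fit y = a + b*x by least squares and extrapolate."""
    xs = list(range(len(values)))
    x_bar, y_bar = mean(xs), mean(values)
    # Slope b = sum((x - x_bar)(y - y_bar)) / sum((x - x_bar)^2); intercept a = y_bar - b * x_bar
    b = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values)) / sum((x - x_bar) ** 2 for x in xs)
    a = y_bar - b * x_bar
    return a + b * (len(values) - 1 + steps_ahead)
```

Here `describe` answers "what happened?" (a total of 96 with month 6 as the best), while `linear_forecast(monthly_sales)` gives a rough answer to "what could happen?" of about 22.4 for the next month.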

Why Should You Learn Data Analytics?

Whether you’re a tech enthusiast or someone from a non-technical background, learning data analytics opens up a wealth of opportunities. Here’s why data analytics courses are worth investing in:

📊 High Demand, Low Supply: There is a massive talent gap in data analytics. Skilled professionals are rare and highly paid.

💼 Diverse Career Options: You can work in finance, IT, marketing, retail, sports, government, and more.

🌍 Global Opportunities: Data analytics skills are in demand worldwide, offering chances to work remotely or abroad.

💰 Attractive Salary Packages: Entry-level data analysts can expect starting salaries upwards of ₹4–6 LPA, which can grow quickly with experience.

📈 Future-Proof Career: As long as businesses generate data, analysts will be needed to make sense of it.

Why Choose Data Analytics Courses in Noida?

Noida, part of the Delhi-NCR region, is a fast-growing tech and education hub. Home to top companies and training institutes, Noida offers the perfect ecosystem for tech learners.

Here are compelling reasons to choose data analytics courses in noida:

🏢 Proximity to IT & MNC Hubs: Noida houses leading firms like HCL, TCS, Infosys, Adobe, Paytm, and many startups.

🧑🏫 Expert Trainers: Courses are conducted by professionals with real-world experience in analytics, machine learning, and AI.

🖥️ Practical Approach: Institutes focus on hands-on learning through real datasets, live projects, and capstone assignments.

🎯 Placement Assistance: Many data analytics institutes in Noida offer dedicated job support, resume writing, and interview prep.

🕒 Flexible Batches: Choose from online, offline, weekend, or evening classes to suit your schedule.

Core Modules Covered in Data Analytics Courses

A comprehensive data analytics course typically includes:

Fundamentals of Data Analytics

Excel for Data Analysis

Statistics & Probability

SQL for Data Querying

Python or R Programming

Data Visualization (Power BI/Tableau)

Machine Learning Basics

Big Data Technologies (Hadoop, Spark)

Business Intelligence Tools

Capstone Project/Internship

Who Should Join Data Analytics Courses?

These courses are suitable for a wide audience:

✅ Fresh graduates (B.Sc, BCA, B.Tech, BBA, MBA)

✅ IT professionals seeking domain change

✅ Non-IT professionals like sales, marketing, and finance executives

✅ Entrepreneurs aiming to make data-backed decisions

✅ Students planning higher education in data science or AI

Career Opportunities After Completing Data Analytics Courses

After completing a data analytics course, learners can pursue roles such as:

Data Analyst

Business Analyst

Data Scientist

Data Engineer

Data Consultant

Operations Analyst

Marketing Analyst

Financial Analyst

BI Developer

Risk Analyst

Top Recruiters Hiring Data Analytics Professionals

Companies in Noida and across India actively seek data professionals. Some top recruiters include:

HCL Technologies

TCS

Infosys

Accenture

Cognizant

Paytm

Genpact

Capgemini

EY (Ernst & Young)

ZS Associates

Startups in fintech, health tech, and e-commerce

Tips to Choose the Right Data Analytics Course in Noida

When selecting a training program, consider the following:

🔍 Course Content: Does it cover the latest tools and techniques?

🧑🏫 Trainer Background: Are trainers experienced and industry-certified?

🛠️ Hands-On Practice: Does the course include real-time projects?

📜 Certification: Is it recognized by companies and institutions?

💬 Reviews and Ratings: What do past students say about the course?

🎓 Post-Course Support: Is job placement or internship assistance available?

Certifications That Add Value

A good institute will prepare you for globally recognized certifications such as:

Microsoft Data Analyst Associate

Google Data Analytics Professional Certificate

IBM Data Analyst Certificate

Tableau Desktop Specialist

Certified Analytics Professional (CAP)

These certifications can boost your credibility and help you stand out in job applications.

Final Thoughts: Your Future Starts Here

In this competitive digital era, data is everywhere—but professionals who can understand and use it effectively are still rare. Taking the right data analytics courses in noida is not just a step toward upskilling—it’s an investment in your future.

Whether you're aiming for a job switch, career growth, or knowledge enhancement, data analytics courses offer a versatile, high-growth pathway. With industry-relevant skills, real-time projects, and expert guidance, you’ll be prepared to take on the most in-demand roles of the decade.

Top Programming Languages to Learn for Freelancing in India

The gig economy in India is booming, and so is the demand for skilled programmers and developers. Freelancing offers flexibility, independence, and strong earning potential, which is why many techies choose it as a career. However, with so many languages to choose from, which ones should you master to thrive as a freelance developer in India?

Choosing the right language matters because it determines the clients you can attract, the well-paying projects you can win, and the foundation of your entire freelance career. Here are some of the top programming languages to learn for freelancing in India, along with their market demand, types of projects, and earning potential.

Why Freelance Programming is a Smart Career Choice

Before we get to the languages, here is a quick look at the benefits of freelance programming in India:

Flexibility: Work from any place, on the hours you choose, and with the workload of your preference.

Diverse Projects: Work across different industries and technologies that put your skills to the test.

Increased Earning Potential: With growing experience, many freelancers find that their rates surpass typical salaries.

Skill Growth: You keep learning new technologies and sharpening your problem-solving skills.

Autonomy: You are your own boss and can build your own brand.

Top Programming Languages for Freelancing in India:

Python:

Why it's great for freelancing: Python's versatility is its superpower. It's used for web development (Django, Flask), data science, machine learning, AI, scripting, automation, and even basic game development. This wide range of applications means a vast pool of freelance projects. Clients often seek Python developers for data analysis, building custom scripts, or developing backend APIs.

Freelance Project Examples: Data cleaning scripts, AI model integration, web scraping, custom automation tools, backend for web/mobile apps.

JavaScript (with Frameworks like React, Angular, Node.js):

Why it's great for freelancing: JavaScript is indispensable for web development. As the language of the internet, it allows you to build interactive front-end interfaces (React, Angular, Vue.js) and powerful back-end servers (Node.js). Full-stack JavaScript developers are in exceptionally high demand.

Freelance Project Examples: Interactive websites, single-page applications (SPAs), e-commerce platforms, custom web tools, APIs.

PHP (with Frameworks like Laravel, WordPress):

Why it's great for freelancing: While newer languages emerge, PHP continues to power a significant portion of the web, including WordPress – which dominates the CMS market. Knowledge of PHP, especially with frameworks like Laravel or Symfony, opens up a massive market for website development, customization, and maintenance.

Freelance Project Examples: WordPress theme/plugin development, custom CMS solutions, e-commerce site development, existing website maintenance.

Java:

Why it's great for freelancing: Java is a powerhouse for enterprise-level applications, Android mobile app development, and large-scale backend systems. Many established businesses and startups require Java expertise for robust, scalable solutions.

Freelance Project Examples: Android app development, enterprise software development, backend API development, migration projects.

SQL (Structured Query Language):

Why it's great for freelancing: While not a full-fledged programming language for building applications, SQL is the language of databases, and almost every application relies on one. Freelancers proficient in SQL can offer services in database design, optimization, data extraction, and reporting. It often complements other languages.

Freelance Project Examples: Database design and optimization, custom report generation, data migration, data cleaning for analytics projects.

Swift/Kotlin (for Mobile Development):

Why it's great for freelancing: With the explosive growth of smartphone usage, mobile app development remains a goldmine for freelancers. Swift is for iOS (Apple) apps, and Kotlin is primarily for Android. Specializing in one or both can carve out a lucrative niche.

Freelance Project Examples: Custom mobile applications for businesses, utility apps, game development, app maintenance and updates.

How to Choose Your First Freelance Language:

Consider Your Interests: What kind of projects excite you? Web, mobile, data, or something else?

Research Market Demand: Look at popular freelance platforms (Upwork, Fiverr, Freelancer.in) for the types of projects most requested in India.

Start with a Beginner-Friendly Language: Python and JavaScript are excellent starting points thanks to their abundant learning resources and helpful communities.

Focus on a Niche: Instead of trying to learn everything, go extremely deep on one or two languages within a domain (e.g., Python for data science, JavaScript for MERN stack development).

To succeed as a freelance programmer in India, you need to combine technical skills with strong communication, project management, and self-discipline. By mastering one or more of these top programming languages, you will be well placed to seize exciting opportunities and establish yourself as an independent professional in the ever-evolving digital domain.

Contact us

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

#Freelance Programming#Freelance India#Programming Languages#Coding for Freelancers#Learn to Code#Python#JavaScript#Java#PHP#SQL#Mobile Development#Freelance Developer#TCCI Computer Coaching

How Laravel Development Services Deliver High-Performance Web Portals for B2B Brands

In the fast-paced world of B2B business, your digital presence is more than just a website; it's your primary tool for lead generation, sales enablement, and partner communication. For enterprises that need performance, flexibility, and reliability, Laravel has become the go-to PHP framework. With Laravel development services, B2B companies can build high-performance web portals that are scalable, secure, and customized for complex workflows.

In this blog, we’ll explore how Laravel stands out, what makes it ideal for B2B web portals, and why partnering with the right Laravel development company can accelerate your digital growth.

Why Laravel for B2B Web Portals?

Laravel is a modern PHP framework known for its elegant syntax, modular architecture, and strong ecosystem. It supports robust backend development and integrates seamlessly with frontend tools, third-party APIs, and databases.

Here's what makes Laravel especially strong for B2B websites:

Security: Laravel comes with built-in authentication, CSRF protection, and encryption features.

Scalability: Its modular architecture allows you to scale features as your business grows.

Speed & Performance: Laravel includes caching, database optimization, and efficient routing to enhance speed.

API Integration: Laravel is great at creating and using RESTful APIs, making it ideal for B2B platforms that rely heavily on data.

Custom Workflows: B2B portals often require custom workflows like quotation systems, user roles, or dynamic dashboards. Laravel can easily handle these with custom logic.

Core Features of Laravel Development Services for B2B Portals

The best Laravel development services focus on custom development, security, speed, and long-term scalability. Here’s what they typically include:

1. Custom Portal Development

Every B2B business is different. Laravel allows complete control over features, UI/UX, and data structure, enabling the development of:

Lead management systems

Vendor or supplier portals

Customer self-service portals

Partner dashboards

Internal employee tools

Custom development ensures that the portal matches your exact business processes.

2. Role-Based Access Control (RBAC)

Most B2B portals deal with multiple user types: sales teams, clients, vendors, admin staff, etc. Laravel makes implementing secure, flexible role-based permissions simple.

Define user roles and permissions

Restrict access to certain pages or features

Track user activity for accountability

This helps maintain secure and structured workflows across teams.
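In a Laravel application, role-based checks are typically expressed with the framework's gates and policies (often alongside a permissions package). The idea itself is framework-independent, so the following is a language-neutral sketch written in Python, not Laravel code; the roles, permissions, and helper names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical role -> permission mapping for a B2B portal
ROLE_PERMISSIONS = {
    "admin": {"view_reports", "edit_pricing", "manage_users"},
    "sales": {"view_reports", "create_quote"},
    "vendor": {"view_orders", "update_inventory"},
}

# Append-only record of access checks, kept for accountability
AUDIT_LOG = []


@dataclass
class User:
    name: str
    roles: frozenset


def can(user, permission):
    """Return True if any of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


def authorize(user, permission):
    """Check access, log the attempt, and raise PermissionError if denied."""
    allowed = can(user, permission)
    AUDIT_LOG.append((user.name, permission, allowed))
    if not allowed:
        raise PermissionError(f"{user.name} is not allowed to {permission}")
```

A sales user created as `User("priya", frozenset({"sales"}))` can create quotes but is refused user management, and both attempts end up in the audit log. Keeping permissions attached to roles rather than to individual users is what makes adding a new user type a one-line change.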

3. Data-Driven Dashboards and Reporting

Laravel can connect with various databases and analytics tools to power real-time dashboards. B2B brands can access:

Sales and marketing KPIs

Inventory and supply chain metrics

Client activity reports

CRM insights and performance charts

Whether you need graphs, search filters, or reports to download, Laravel can handle and show data smoothly.

4. API Integrations

B2B businesses often rely on tools like Salesforce, HubSpot, QuickBooks, Zoho, or SAP. Laravel supports:

REST and SOAP API integrations

Secure token-based authentication

Real-time data sync between systems

This creates a unified workflow across your technology stack.

5. Performance Optimization

A slow web portal can lose clients. Laravel includes:

Built-in caching

Optimized SQL queries with Eloquent ORM

Lazy loading and queue systems

Route and view caching

These help reduce load times and keep your portal fast even with high traffic or large data volumes.
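Laravel exposes this pattern through its cache facade, for example `Cache::remember`, which returns a cached value or computes and stores it for a given time. The Python sketch below illustrates the same cache-aside idea with a time-to-live in a framework-neutral way; the class and method names are hypothetical, and the injectable clock exists only to make expiry easy to test.

```python
import time


class TTLCache:
    """Minimal cache-aside helper: return a cached value until it expires, else recompute."""

    def __init__(self, clock=time.monotonic):
        self._store = {}     # key -> (expires_at, value)
        self._clock = clock  # injectable clock makes expiry testable

    def remember(self, key, ttl_seconds, compute):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the expensive work
        value = compute()    # cache miss or expired: run the expensive query
        self._store[key] = (now + ttl_seconds, value)
        return value
```

For a dashboard, `cache.remember("dashboard_kpis", 60, load_kpis)` would run a hypothetical `load_kpis` query at most once a minute, no matter how many users open the page. The trade-off is staleness: data can be up to one TTL old, so the TTL should match how fresh each report needs to be.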

6. Advanced Security Features

For B2B businesses, security is not optional. Laravel provides:

Protection against SQL injection, XSS, and CSRF attacks

Two-factor authentication

HTTPS enforcement and data encryption

Secure user session handling

This ensures sensitive B2B data is protected around the clock.

7. Multi-Language and Localization Support

If your brand serves clients across different regions or languages, Laravel makes it easy to deliver a multi-language experience.

Translate pages, forms, and emails

Use locale-based routing

Serve region-specific content

It’s especially useful for global B2B firms and export-driven businesses.

8. Easy Maintenance and Scalability

Laravel uses MVC (Model-View-Controller) architecture, making the codebase clean and modular. This allows:

Easy future upgrades

Addition of new features without affecting existing code

Seamless onboarding of new developers

Laravel projects are built to last, saving you time and cost in the long term.

Real-World Use Cases

Many B2B companies from different industries have effectively used Laravel to build their web portals.

Manufacturing: Supplier/vendor management, order tracking, and inventory systems

Healthcare: HIPAA-compliant patient portals for device or equipment tracking

IT Services: Customer portals for ticketing, SLA management, and invoicing

Finance: Secure dashboards for client data, transaction histories, and investment analytics

Logistics: Delivery tracking and fleet management systems

With custom Laravel development, you’re not tied to template limitations.

Choosing the Right Laravel Development Partner

Hiring the right team is crucial. Here’s what to look for:

Experience: Proven track record in B2B and Laravel projects

Communication: Clear project planning, regular updates, and transparency

Full-stack expertise: Laravel with frontend (Vue, React), DevOps, and database skills

Post-launch support: Maintenance, bug fixes, and scalability planning

The ideal partner will understand your business goals and recommend technology solutions accordingly.

Conclusion

Laravel has proven to be a powerful, secure, and efficient framework for developing high-performance B2B portals. From custom workflows and advanced dashboards to secure user roles and API integrations, Laravel delivers everything modern B2B businesses need in a digital platform.

Choosing the right Laravel development services allows your brand to stay ahead, operate more efficiently, and offer a seamless digital experience to partners, vendors, and clients.

If your business is ready to go beyond a basic website and embrace a true digital portal, Laravel might just be the smartest decision you’ll make in 2025.

FAQs

Q1. Why should B2B businesses choose Laravel over other PHP frameworks? Laravel offers advanced security, flexibility, and performance features that are ideal for complex business portals. It also has a large ecosystem of tools and a strong developer community.

Q2. Can Laravel manage big databases and many users at the same time? Yes, Laravel is scalable and can handle high volumes of data and concurrent users when paired with the right infrastructure.

Q3. How much time does it usually take to create a B2B portal using Laravel? It depends on complexity. A basic version might take 4–6 weeks, while advanced platforms may take 3–6 months.

Q4. Is Laravel suitable for mobile-friendly and responsive designs? Absolutely. Laravel works well with modern frontend frameworks like Vue or React to deliver responsive, mobile-optimized experiences.

Unlock Your Future with Data: Why a Data Analytics Course in Coimbatore is a Smart Move for Students

In a modern world governed by data, analytical ability and the effective use of data are valuable assets. Learning data analytics can transform the prospects of both professionals who want to improve their employability and new students eager to start their careers. With its data analytics course in Coimbatore, Xploreitcorp helps students embrace the digital wave.

We aim to bridge the gap between the classroom and the workplace by teaching both foundational concepts and advanced tools such as Power BI. Let's explore why data analytics matters today for students stepping into the field.

What Is Data Analytics and Why Does It Matter?

Data analytics is the interpretation of large volumes of raw data to derive actionable patterns, insights, and trends. It helps companies make decisions, forecast outcomes, and understand their position in a competitive market. Its use is not limited to big businesses; retail, healthcare, and even education now rely on analytics to operate more effectively.

For students, learning data analytics can be as demanding as learning a new language, but the knowledge pays off: it lets them take part in a data revolution that touches every sphere. As this change unfolds in real time, it is creating new opportunities and jobs.

Known as the "Manchester of South India," Coimbatore has also become a growing technology center. Combined with the entrepreneurial energy of its many companies, this makes it an ideal place to learn and apply data analytics. Demand for experts in data analytics and related disciplines keeps rising as companies realize how much their business depends on data.

The skills taught in a data analytics course in Coimbatore are valued across industries including banking, healthcare, IT, logistics, childcare, and e-commerce. As candidates gain experience, they can move into roles such as Consultant, Engineer, Senior Analyst, and Data Manager.

Studying data analytics in Coimbatore gives students a solid education at a lower cost than in larger metropolitan cities. Internships and real projects with local businesses also provide practical experience that reinforces theoretical knowledge.

Utilizing Power BI in Data Analytics

Microsoft Power BI is among the most sought-after tools in data analytics today. It lets users create interactive dashboards and reports that make data easier to share and understand. Learning Power BI gives students a practical skill that improves their employability and keeps them competitive in the job market.

Xploreitcorp offers a customized Power BI course in Coimbatore that emphasizes data visualization, business intelligence, and real-time analytics. The course includes practical training sessions built around working dashboards and project work that prepare students for real-world situations. Whether you work in business, engineering, or IT, listing Power BI on your CV can make a big difference.

What You Will Learn in a Data Analytics Course

At Xploreitcorp, our goal is to prepare you for the future. Our data analytics course in Coimbatore covers the following subjects:

Introduction to data types, sources, and structures

Data cleaning and preprocessing

Statistical analysis and interpretation

Data visualization with Power BI

SQL and database management

Foundations of machine learning and predictive analytics

Real-time projects with industry data

Each concept builds on the one before it, which helps students from varied academic backgrounds grow in knowledge and confidence.

Career Prospects After a Data Analytics Course in Coimbatore

A data analytics course in Coimbatore opens up many career paths. Depending on their further education, students may pursue roles such as:

Data Science Analyst

Business Intelligence Analyst

Data Analytics Engineer

Data Analyst

Reporting Accountant

Marketing Analyst

Finance Data Consultant

As more businesses come to rely on data, opportunities are plentiful. Entry-level salaries are reasonable, and pay tends to grow quickly with experience, which makes this profession quite attractive.

Students who complete Power BI training in Coimbatore are also better prepared for business intelligence job interviews that cover dashboard development, data modeling, and dynamic reporting.

Why Choose Xploreitcorp for Your Data Analytics Path?

At Xploreitcorp, we believe students need immersive, hands-on experience, not just theoretical understanding. Here is what sets us apart:

Industry-Ready Trainers: Our trainers are professionals actively working in business intelligence and analytics.

Every student gets hands-on practice through projects, assignments, and case studies.

We help students prepare for international certification exams in Power BI and data analytics.

Students receive career development support, including professional resume preparation, mock interviews, and placement services.

Evening and weekend batches make the course accessible to most college students.

Xploreitcorp's data analytics course in Coimbatore is a commitment to your professional growth, not just a set of lectures.

Hands-On Projects That Build Confidence

Applying ideas to real-world problems is what distinguishes an excellent course from an ordinary one. At Xploreitcorp, students work on real-time datasets and challenges, just as professionals do. Projects such as sales forecasting and customer segmentation build confidence and job readiness.

Our Power BI training in Coimbatore offers the same practical exposure: students learn to design interactive dashboards, build and visualize KPIs, and become competent at data storytelling.

India's Data Analytics Outlook

Data is the lifeblood of the digital economy India is building. Artificial intelligence, IoT, and automation all depend on data insights to work well. As a result, demand for data specialists is expected to rise, and early adopters will have a major advantage.

Through data analytics training in Coimbatore, students not only prepare for the current market but also future-proof their careers for the long term. Critical thinking, data management, and analytical skills that turn data into business insights will remain in high demand for many years.

Your Data Journey Begins Here: Take the First Step

Everything in modern society revolves around data, and those who understand it will shape the world. Whether you are a professional or a student, learning data analytics is a smart career move that will set you apart.

Our data analytics course in Coimbatore at Xploreitcorp gives students the hands-on exposure and practical training they need for a competitive job market. Our Power BI course in Coimbatore offers deeper knowledge and more career options, and our skilled instructors help you master the most sought-after analytics technologies.

FAQs:

1. Who can join the course? Students from any background with basic math skills can enroll—no coding experience needed.

2. How long is the course? It typically lasts 3 to 6 months, with flexible batch options.

3. Is Power BI included? Yes, Power BI basics are included. For deeper learning, we offer a separate Power BI course in Coimbatore.

4. Will I get a certificate? Yes, you'll receive a completion certificate from Xploreitcorp.

5. Are placements available? Yes, we provide placement assistance and interview training after course completion.

Transform Your Skills in 2025: Master Data Visualization with Tableau & Python (2 Courses in 1!)

When it comes to storytelling with data in 2025, two names continue to dominate the landscape: Tableau and Python. If you’re looking to build powerful dashboards, tell data-driven stories, and break into one of the most in-demand fields today, this is your chance.

But instead of bouncing between platforms and tutorials, what if you could master both tools in a single, streamlined journey?

That’s exactly what the 2025 Data Visualization in Tableau & Python (2 Courses in 1!) offers—an all-in-one course designed to take you from data novice to confident visual storyteller.

Let’s dive into why this course is creating buzz, how it’s structured, and why learning Tableau and Python together is a smart move in today’s data-first world.

Why Data Visualization Is a Must-Have Skill in 2025

We’re drowning in data—from social media metrics and customer feedback to financial reports and operational stats. But raw data means nothing unless you can make sense of it.

That’s where data visualization steps in. It’s not just about charts and graphs—it’s about revealing patterns, trends, and outliers that inform smarter decisions.

Whether you're working in marketing, finance, logistics, healthcare, or even education, communicating data clearly is no longer optional. It’s expected.

And if you can master both Tableau—a drag-and-drop analytics platform—and Python—a powerhouse for automation and advanced analysis—you’re giving yourself a massive career edge.

Meet the 2-in-1 Power Course: Tableau + Python

The 2025 Data Visualization in Tableau & Python (2 Courses in 1!) is exactly what it sounds like: a double-feature course that delivers hands-on training in two of the most important tools in data science today.

Instead of paying for two separate learning paths (which could cost you more time and money), you’ll:

Learn Tableau from scratch and create interactive dashboards

Dive into Python programming for data visualization

Understand how to tell compelling data stories using both tools

Build real-world projects that you can show off to employers or clients

All in one single course.

Who Should Take This Course?

This course is ideal for:

Beginners who want a solid foundation in both Tableau and Python

Data enthusiasts who want to transition into analytics roles

Marketing and business professionals who need to understand KPIs visually

Freelancers and consultants looking to offer data services

Students and job seekers trying to build a strong data portfolio

No prior coding or Tableau experience? No problem. Everything is taught step-by-step with real-world examples.

What You'll Learn: Inside the Course

Let’s break down what you’ll actually get inside this 2-in-1 course:

✅ Tableau Module Highlights:

Tableau installation and dashboard interface

Connecting to various data sources (Excel, CSV, SQL)

Creating bar charts, pie charts, line charts, maps, and more

Advanced dashboard design techniques

Parameters, filters, calculations, and forecasting

Publishing and sharing interactive dashboards

By the end of this section, you’ll be comfortable using Tableau to tell stories that executives understand and act on.

✅ Python Visualization Module Highlights:

Python basics: data types, loops, functions

Data analysis with Pandas and NumPy

Visualization libraries like Matplotlib and Seaborn

Building statistical plots, heatmaps, scatterplots, and histograms

Customizing charts with color, labels, legends, and annotations

Automating visual reports

Even if you’ve never coded before, you’ll walk away confident enough to build beautiful, programmatically generated visualizations with Python.
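As a rough illustration of the chart customization and report automation listed above, here is a minimal Matplotlib sketch; it is not taken from the course. It assumes Matplotlib is installed, the order values are synthetic, and the function name `histogram_png` is hypothetical. It draws a histogram with a title, axis labels, a legend, and an annotation, then returns the chart as PNG bytes that a scheduled script could save or attach to a report.

```python
import io
import random
import statistics

import matplotlib

matplotlib.use("Agg")  # off-screen backend: renders to files/buffers without a display
import matplotlib.pyplot as plt


def histogram_png(values: list[float], title: str) -> bytes:
    """Render a labelled, annotated histogram and return it as PNG bytes (hypothetical helper)."""
    mean = statistics.fmean(values)

    fig, ax = plt.subplots(figsize=(6, 4))
    ax.hist(values, bins=20, color="steelblue", edgecolor="white", label="Orders")
    ax.axvline(mean, color="darkorange", linestyle="--", label="Mean")

    # Title, axis labels and legend turn a bare plot into a self-explanatory chart.
    ax.set_title(title)
    ax.set_xlabel("Order value")
    ax.set_ylabel("Number of orders")
    ax.legend()

    # Annotate the mean just to the right of the dashed line, near the top of the plot.
    top = ax.get_ylim()[1]
    ax.annotate(f"mean = {mean:.1f}", xy=(mean, top * 0.9), xytext=(6, 0),
                textcoords="offset points", color="darkorange")

    buffer = io.BytesIO()
    fig.savefig(buffer, format="png", dpi=100)
    plt.close(fig)  # free memory when generating many charts in a loop
    return buffer.getvalue()


# Synthetic data for illustration only.
rng = random.Random(42)
order_values = [rng.gauss(50, 12) for _ in range(500)]
png = histogram_png(order_values, "Distribution of order values (synthetic)")

# In an automated report you might write the bytes to disk:
# with open("order_values.png", "wb") as fh:
#     fh.write(png)
```

Using the non-interactive `Agg` backend and returning bytes rather than calling `plt.show()` keeps the function usable in scheduled jobs or servers with no display attached.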

The Real-World Value: Why This Course Stands Out

We all know there’s no shortage of online courses today. But what makes this one worth your time?

🌟 1. Two for the Price of One

Most courses focus on either Tableau or Python. This one merges the best of both worlds, giving you more for your time and money.

🌟 2. Hands-On Learning

You won’t just be watching slides or lectures—you’ll be working with real data sets, solving real problems, and building real projects.

🌟 3. Resume-Boosting Portfolio

From the Tableau dashboards to the Python charts, everything you build can be used to show potential employers what you’re capable of.

🌟 4. Taught by Experts

This course is created by instructors who understand both tools deeply and can explain things clearly—no confusing jargon, no filler.

🌟 5. Constantly Updated

As Tableau and Python evolve, so does this course. That means you’re always learning the latest and greatest features, not outdated content.

Why Learn Both Tableau and Python?

Some people ask, “Isn’t one enough?”

Here’s the thing: they serve different purposes, but together, they’re unstoppable.

Tableau is for quick, intuitive dashboarding.

Drag-and-drop interface

Ideal for business users

Great for presentations and client reporting

Python is for flexibility and scale.

You can clean, manipulate, and transform data

Build custom visuals not possible in Tableau

Automate workflows and scale up for big data

By learning both, you cover all your bases. You’re not limited to just visuals—you become a full-spectrum data storyteller.
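To make "clean, manipulate, and transform data" concrete, here is a minimal sketch that uses only Python's standard library; the course itself teaches Pandas, which can do the same in fewer lines. The CSV text, column names, and helper functions are hypothetical. It normalizes inconsistent labels, drops incomplete rows and duplicates, then totals revenue per region: the kind of tidy summary you would then chart in Tableau or Matplotlib.

```python
import csv
import io
from collections import defaultdict

# Hypothetical raw export with typical problems: stray whitespace,
# inconsistent capitalization, a duplicate row and a missing value.
RAW_CSV = """region,product,revenue
 North ,Widget,120.50
north,Widget,120.50
South,Gadget,
South,Widget,80
EAST,Gadget,200.25
"""


def clean_rows(text: str) -> list[dict]:
    """Normalize text fields, drop rows without revenue, and remove duplicates."""
    seen = set()
    cleaned = []
    for row in csv.DictReader(io.StringIO(text)):
        region = row["region"].strip().title()  # " North " / "north" -> "North"
        product = row["product"].strip()
        revenue_text = row["revenue"].strip()
        if not revenue_text:  # skip incomplete records
            continue
        record = (region, product, float(revenue_text))
        if record in seen:  # skip rows that are duplicates after normalization
            continue
        seen.add(record)
        cleaned.append({"region": region, "product": product, "revenue": record[2]})
    return cleaned


def revenue_by_region(rows: list[dict]) -> dict[str, float]:
    """Transform step: total revenue per region, ready to hand to a chart."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["revenue"]
    return dict(totals)


rows = clean_rows(RAW_CSV)
print(revenue_by_region(rows))  # {'North': 120.5, 'South': 80.0, 'East': 200.25}
```

Of the five raw rows, three survive: the lowercase "north" row collapses into a duplicate of " North " once whitespace and case are normalized, and the South/Gadget row is dropped for its missing revenue.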

Data Careers in 2025: What This Course Prepares You For

The demand for data professionals continues to skyrocket. Here’s how this course sets you up for success in various career paths:

Data Analyst: Build dashboards, analyze trends, present insights

Business Intelligence Analyst: Combine data from multiple sources, visualize it for execs

Data Scientist (Junior): Analyze data with Python, visualize with Tableau

Marketing Analyst: Use Tableau for campaign reporting, Python for A/B analysis

Freelancer/Consultant: Offer complete data storytelling services to clients

This course can be a launchpad—whether you want to get hired, switch careers, or start your own analytics agency.

Real Projects = Real Confidence

What sets this course apart is the project-based learning approach. You'll create:

Sales dashboards

Market trend analysis charts

Customer segmentation visuals

Time-series forecasts

Custom visual stories using Python

Each project is more than just a tutorial—it mimics real-world scenarios you’ll face on the job.

Flexible, Affordable, and Beginner-Friendly

Best part? You can learn at your own pace. No deadlines, no pressure.

You don’t need to buy expensive software. Tableau Public is free, and Python tools like Jupyter, Pandas, and Matplotlib are open-source.

Plus, with lifetime access, you can revisit any lesson whenever you want—even years down the road.

And all of this is available at a price that’s far less than a bootcamp or university course.

Still Not Sure? Here's What Past Learners Say

“I had zero experience with Tableau or Python. After this course, I built my own dashboard and presented it to my team. They were blown away!” – Rajiv, Product Analyst

“Perfect combo of theory and practice. Python sections were especially helpful for automating reports I used to make manually.” – Sarah, Marketing Manager

“Loved how everything was explained so simply. Highly recommend to anyone trying to upskill in data.” – Alex, Freelancer

Final Thoughts: Your Data Career Starts Now

You don’t need to be a programmer or a math wizard to master data visualization. You just need the right guidance, a solid roadmap, and the willingness to practice.

With the 2025 Data Visualization in Tableau & Python (2 Courses in 1!), you’re getting all of that—and more.

This is your chance to stand out in a crowded job market, speak the language of data confidently, and unlock doors in tech, business, healthcare, finance, and beyond.

Don’t let the data wave pass you by—ride it with the skills that matter in 2025 and beyond.

How to Drop Tables in MySQL Using dbForge Studio: A Simple Guide for Safer Table Management

Learn how to drop a table in MySQL quickly and safely using dbForge Studio for MySQL — a professional-grade IDE designed for MySQL and MariaDB development. Whether you’re looking to delete a table, use DROP TABLE IF EXISTS, or completely clear a MySQL table, this guide has you covered.
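For reference, the core MySQL statement is `DROP TABLE IF EXISTS orders, customers;`: `IF EXISTS` prevents an error when a named table is missing, and the comma-separated list drops several tables in one statement. The runnable sketch below uses Python's built-in `sqlite3` module as a stand-in so it needs no MySQL server; it is not how dbForge Studio works internally. SQLite also accepts `IF EXISTS` but drops only one table per statement, hence the loop. The table names and helper functions are hypothetical.

```python
import sqlite3


def drop_tables(conn: sqlite3.Connection, names: list[str]) -> None:
    """Drop each named table, silently skipping tables that do not exist."""
    for name in names:
        # Table names cannot be bound as "?" parameters, so quote the identifier explicitly.
        quoted = '"' + name.replace('"', '""') + '"'
        conn.execute(f"DROP TABLE IF EXISTS {quoted}")
    conn.commit()


def list_tables(conn: sqlite3.Connection) -> list[str]:
    """Return the names of the remaining tables, as a quick post-drop check."""
    rows = conn.execute("SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")
    return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY)")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY)")
conn.execute("CREATE TABLE audit_log (id INTEGER PRIMARY KEY)")

# "archive" was never created; IF EXISTS turns that into a no-op instead of an error.
drop_tables(conn, ["orders", "customers", "archive"])
print(list_tables(conn))  # ['audit_log']
```

Dropping a table permanently removes both its data and its definition, so listing what remains afterwards is a cheap safety check before moving on.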

In the article “How to Drop Tables in MySQL: A Complete Guide for Database Developers,” we explain:

✅ How to drop single and multiple tables: Use simple SQL commands or the intuitive UI in dbForge Studio to delete one or several tables at once, with no need to write multiple queries manually.