#strangler design pattern


Text

Monolith to Microservices – How Database Architecture Must Evolve

The journey from monolith to microservices is like switching from a one-size-fits-all outfit to a tailored wardrobe, where each piece has a purpose, fits perfectly, and works well on its own or with others. But here's the catch: while many teams focus on refactoring application code, they often forget the backbone that supports it all, the database architecture.

If you're planning a monolith-to-microservices migration, your database architecture can't be an afterthought. Why? Because traditional monolithic architectures often tie everything to one central data store. When you break your app into microservices, you can't expect all those services to keep calling back to a single data source. That would hurt performance and create tight coupling, which is exactly the problem microservices are meant to solve.

What does evolving database architecture really mean?

In a monolithic setup, one large relational database holds everything—users, orders, payments; you name it. It's straightforward, but it creates bottlenecks as your app scales. Enter microservices database architecture, where each service owns its data. Without this, maintaining independent services and scaling seamlessly becomes difficult.

Here is what a microservices database architecture looks like:

Microservices Data Management: Strategies for Smarter Database Architecture

Each microservice might use a different database depending on its needs: NoSQL, relational, time-series, or even a sharded database architecture that splits data horizontally across systems.
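To make "splitting data horizontally" concrete, here is a minimal sketch of hash-based shard routing. The function names, the key format, and the shard count are illustrative assumptions, not something the post prescribes; real systems often use consistent hashing or a lookup directory instead.

```python
import hashlib
from typing import Dict, Iterable, List


def shard_for(key: str, shard_count: int) -> int:
    """Map a record key to a shard index in the range [0, shard_count)."""
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    # A stable hash (unlike Python's built-in hash()) gives the same
    # placement across processes and restarts.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def group_by_shard(keys: Iterable[str], shard_count: int) -> Dict[int, List[str]]:
    """Group keys by the shard they would be stored on (hypothetical helper)."""
    placement: Dict[int, List[str]] = {}
    for key in keys:
        placement.setdefault(shard_for(key, shard_count), []).append(key)
    return placement
```

Simple modulo routing like this is easy to reason about, but changing the shard count moves most keys, which is one reason a service may prefer a different scheme.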

Imagine each service with its own custom toolkit, tailored to handle its unique tasks. However, this transition isn't plug-and-play. You’ll need solid database migration strategies. A thoughtful data migration strategy ensures you're not just lifting and shifting data but transforming it to fit your new architecture.

Some strategies include:

· strangler pattern

· change data capture (CDC)

· dual writes during migration

Choose among them based on each service's data consistency and availability requirements.
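As one way to picture dual writes during migration, the sketch below wraps a legacy store and a new store behind one repository. The class name, the dict-like stores, and the `read_from_new` switch are assumptions made for illustration; a production setup would also need retries and reconciliation jobs.

```python
from typing import List, MutableMapping, Optional


class DualWriteRepository:
    """Writes go to both stores; reads stay on the legacy store until cut-over."""

    def __init__(self, legacy: MutableMapping, new: MutableMapping) -> None:
        self.legacy = legacy
        self.new = new
        self.read_from_new = False       # flip once the new store is trusted
        self.failed_keys: List[str] = []  # writes that need to be replayed

    def save(self, key: str, record: dict) -> None:
        # The legacy store remains the source of truth during migration.
        self.legacy[key] = dict(record)
        try:
            self.new[key] = dict(record)
        except Exception:
            # Do not fail the request; remember the key for a later repair pass.
            self.failed_keys.append(key)

    def get(self, key: str) -> Optional[dict]:
        store = self.new if self.read_from_new else self.legacy
        return store.get(key)

    def mismatches(self) -> List[str]:
        """Keys whose new-store copy is missing or differs from legacy."""
        return sorted(k for k in self.legacy if self.new.get(k) != self.legacy[k])
```

Running `mismatches()` periodically is a cheap way to decide when it is safe to flip `read_from_new`; until it comes back empty, the new store is not yet a faithful copy.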

What is the one mistake teams often make? Overlooking data integrity and synchronization. As you move to microservices database architecture, ensuring consistency across distributed systems becomes tricky. That’s why event-driven models and eventual consistency often become part of your database architecture design toolkit.
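The event-driven, eventually consistent model can be sketched with an in-memory bus. Everything here (the `EventBus`, the two services, the `order_placed` event name) is a hypothetical stand-in for a real message broker and real services; the point is only that the second service catches up after the first one has already committed.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Set, Tuple


class EventBus:
    """Toy in-memory bus: events are queued and delivered later by drain()."""

    def __init__(self) -> None:
        self._queue: Deque[Tuple[str, dict]] = deque()
        self._handlers: Dict[str, List[Callable[[dict], None]]] = {}

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        self._queue.append((event_type, payload))

    def drain(self) -> int:
        """Deliver all queued events; returns how many were delivered."""
        delivered = 0
        while self._queue:
            event_type, payload = self._queue.popleft()
            for handler in self._handlers.get(event_type, []):
                handler(payload)
            delivered += 1
        return delivered


class OrderService:
    """Owns the orders data and announces changes as events."""

    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        self.orders: Dict[str, dict] = {}

    def place_order(self, order_id: str, user_id: str) -> None:
        self.orders[order_id] = {"user_id": user_id}
        self.bus.publish("order_placed", {"order_id": order_id, "user_id": user_id})


class OrderStatsService:
    """Owns its own read model, updated only from events."""

    def __init__(self, bus: EventBus) -> None:
        self.orders_per_user: Dict[str, int] = {}
        self._seen: Set[str] = set()
        bus.subscribe("order_placed", self.on_order_placed)

    def on_order_placed(self, event: dict) -> None:
        # Idempotent handling: a redelivered event must not be counted twice.
        if event["order_id"] in self._seen:
            return
        self._seen.add(event["order_id"])
        user = event["user_id"]
        self.orders_per_user[user] = self.orders_per_user.get(user, 0) + 1
```

Between `place_order` and `drain`, the stats service is stale; that window is what "eventual consistency" means in practice, and the idempotent handler is what keeps redelivery safe.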

Another evolving piece is your data warehouse architecture. In a monolith, it's simple to extract data for analytics. But with distributed data, you’ll need pipelines to gather, transform, and load data from multiple sources—often in real-time.

Wrapping Up

Going from monolith to microservices isn’t just a code-level transformation—it’s a paradigm shift in how we design, access, and manage data. So, updating your database architecture is not optional; it's foundational. From crafting a rock-solid data migration strategy to implementing a flexible microservices data management approach, the data layer must evolve in sync with the application.

So, the next time you're planning that big monolith-to-microservices migration, remember: the code is only half the story. Your database architecture will make or break your success.

Pro Tip: Start small. Pick one service, define its database boundaries, and apply your database migration strategies thoughtfully. In the world of data, small, strategic steps work better than drastic shifts.

Contact us at Nitor Infotech to modernize your database architecture for a seamless move to microservices.


Text

Delve into the second edition to master serverless proficiency and explore new chapters on security techniques, multi-regional deployment, and optimizing observability.

Key Features

· Gain insights from a seasoned CTO on best practices for designing enterprise-grade software systems
· Deepen your understanding of system reliability, maintainability, observability, and scalability with real-world examples
· Elevate your skills with software design patterns and architectural concepts, including securing in-depth and running in multiple regions.

Book Description

Organizations undergoing digital transformation rely on IT professionals to design systems to keep up with the rate of change while maintaining stability. With this edition, enriched with more real-world examples, you'll be perfectly equipped to architect the future for unparalleled innovation.

This book guides through the architectural patterns that power enterprise-grade software systems while exploring key architectural elements (such as events-driven microservices, and micro frontends) and learning how to implement anti-fragile systems.

First, you'll divide up a system and define boundaries so that your teams can work autonomously and accelerate innovation. You'll cover the low-level event and data patterns that support the entire architecture while getting up and running with the different autonomous service design patterns.

This edition is tailored with several new topics on security, observability, and multi-regional deployment. It focuses on best practices for security, reliability, testability, observability, and performance. You'll be exploring the methodologies of continuous experimentation, deployment, and delivery before delving into some final thoughts on how to start making progress.

By the end of this book, you'll be able to architect your own event-driven, serverless systems that are ready to adapt and change.

What you will learn

· Explore architectural patterns to create anti-fragile systems.
· Focus on DevSecOps practices that empower self-sufficient, full-stack teams
· Apply microservices principles to the frontend
· Discover how SOLID principles apply to software and database architecture
· Gain practical skills in deploying, securing, and optimizing serverless architectures
· Deploy a multi-regional system and explore the strangler pattern for migrating legacy systems
· Master techniques for collecting and utilizing metrics, including RUM, Synthetics, and Anomaly detection.

Who this book is for

This book is for software architects who want to learn more about different software design patterns and best practices. This isn't a beginner's manual - you'll need an intermediate level of programming proficiency and software design experience to get started.

You'll get the most out of this software design book if you already know the basics of the cloud, but it isn't a prerequisite.

Table of Contents

· Architecting for Innovations
· Defining Boundaries and Letting Go
· Taming the Presentation Tier
· Trusting Facts and Eventual Consistency
· Turning the Cloud into the Database
· A Best Friend for the Frontend
· Bridging Intersystem Gaps
· Reacting to Events with More Events
· Running in Multiple Regions
· Securing Autonomous Subsystems in Depth
· Choreographing Deployment and Delivery
· Optimizing Observability
· Don't Delay, Start Experimenting

Publisher : Packt Publishing; 2nd ed. edition (27 February 2024)
Language : English
Paperback : 488 pages
ISBN-10 : 1803235446
ISBN-13 : 978-1803235448
Item Weight : 840 g
Dimensions : 2.79 x 19.05 x 23.5 cm
Country of Origin : India


Video


Strangler Design Pattern Tutorial with Examples for Programmer & Beginners

Full video link https://youtu.be/HLz4dF1scKU

Hello friends, new #video on #stranglerdesignpattern with #examples is published on #codeonedigest #youtube channel. Learn #microservice #strangler #designpatterns #programming #coding with codeonedigest.

@java #java #aws #awscloud @awscloud @AWSCloudIndia #Cloud #CloudComputing @YouTube #youtube #azure #msazure #stranglerpattern #stranglerpatternexample #stranglerpatternorvinepattern #stranglerpatternimplementation #stranglerpatterns #stranglerpatternvsfaçade #stranglerpatternfaçade #stranglerpatternrefactoring #stranglerpatternjava #stranglerpatternsoftwaredevelopment #stranglerpatternmonolith #stranglerpatterndatamigration #stranglerpatternmicroservice #stranglerdesignpattern #stranglerdesignpatternmicroservices #microservice #microservicearchitecture #microservicedesignpatterns #microservicedesignpatterns #microservicedesignpatternsspringboot #microservicedesignpatternssaga #microservicedesignpatternsinterviewquestions #microservicedesignpatternsinjava #microservicedesignpatternscircuitbreaker #microservicedesignpatternsorchestration #decompositionpatternsmicroservices #decompositionpatterns #monolithicdecompositionpatterns #integrationpatterns #integrationpatternsinmicroservices #integrationpatternsinjava #integrationpatternsbestpractices #databasepatterns #databasepatternsmicroservices #microservicesobservabilitypatterns #observabilitypatterns #crosscuttingconcernsinmicroservices #crosscuttingconcernspatterns #servicediscoverypattern #healthcheckpattern #sagapattern #circuitbreakerpattern #cqrspattern #commandquerypattern #proxypattern #apigatewaypattern #branchpattern #eventsourcingpattern #logaggregatorpattern

#youtube#strangler#strangler design pattern#strangler pattern#java#microservice#microservice design patterns


Note

Here’s something I’ve been wondering!

I know you’ve written about Sara’s reckless behavior in the early days, especially in seasons 3-4, but Grissom makes some less-than-stellar personal safety choices early on as well. For a little while he makes it a yearly habit to confront someone he knows to be a serial killer unarmed, with no backup, and purposefully not having told the team where he’s gone.

(I mean, the only reason Grissom survives the season 1 finale is because Catherine knows his exact brand of stupid.)

At the same time, he absolutely will not let Sara put herself on the line in the same way.

In Grissom’s mind, what’s the difference between Sara using herself as bait for the Strip Strangler when she fits the profile versus Grissom confronting the same killer, or for that matter Paul Millander, where Grissom has the exact birthday needed to make him fit the victim pattern? Other than that he’s in love with her, obviously.

Is this really the best way for him to go about his work? Otherwise, Grissom is not an especially confrontational person outside of the controlled setting of the interrogation room, so I’m curious if you have any thoughts as to what’s going on under the hood here.

I always enjoy your answers! Thanks!

hi, @clintbeifong!

so to take the last part of your question (“is this really the best way for him to go about his work?”) first:

no, gallivanting off to secret crime scenes without even telling dispatch where he’s going while not even carrying a gun on him, having at least some inkling as he does so that he might encounter a murderous suspect while there, is not the best way for grissom to go about his work.

it’s reckless as hell.

were any other team member to willingly assume the same risks that he does, in terms of physically confronting dangerous killers sans backup or even weapons, he would absolutely lose his mind about it.

and especially if said team member were sara.

in no way is what he does safe.

in no way is what he does smart.

for a certifiable genius, he makes some impressively poor decisions over the years down these lines, and he is only saved by a) the fact that he is possessed of “main character immortality” status, b) sheer luck, and c) catherine, as you so aptly frame things, knowing “his exact brand of stupid.”

that said, within the world of the story, do i think that grissom himself would find a way to rationalize this reckless behavior on his part, even for as foolhardy as it is?

yeah.

yeah, i do.

because for as generally levelheaded and pragmatic as he is, he does have some blind spots when it comes to his own comportment.

discussion after the “keep reading,” if you’re interested.

__

so if one were to ask grissom how he can defend his own recklessness while getting so upset about sara’s, to my mind, he would argue that any time he finds himself in a dangerous situation, it’s not necessarily because he’s gone “looking for it.”

he’d view every instance of him doing so as a discrete occurrence and something that happened to him largely inadvertently.

essentially, his defense would be, “well, i didn’t mean to—or at least it wasn’t my end goal.”

and at least in terms of diagnosing his own intentions, he’d probably be correct in so saying.

he wouldn’t be able to articulate as much about himself, but the fact is that his tendency to fixate is what gets the better of him here.

sure, he sometimes finds himself facing off against dangerous serial killers, but when he does, he doesn’t do so by design. it’s more because he fails to factor himself into scenarios. while he isn’t naïve or necessarily ignorant that his is a dangerous world, he is so tunnel-visioned and convinced of his own capability—nine times out of ten, he is the smartest person in any room, and the fact that he is is enough to get him by—that when he is on a hot case, he will sometimes fail to heed warning signs and instead just charge full-speed ahead in the name of getting a break.

he'll be deep in the investigation, and some sudden epiphany will come to him that leads him away by himself, and rather than taking the time to gather the team, he’ll just wander off, and suddenly he’ll find himself confronted by the suspect, not because that was what he necessarily set out to do but because he let himself get “too far down the rabbit hole” without ever once looking up.

as i talk about here,

by no means is he suicidal, and he isn’t even habitually careless with his own safety in the same way sara oftentimes is with hers, but he can sometimes be heedless of certain risks because he trusts so much in his own intelligence, believing that he will always be able to think his way out of any tight spot.

sometimes he is less scared than he should be of the sheer brutality and unpredictability and irrationality of the criminals he faces down, relying on his own cleverness to save him even in situations where it doesn’t factor in and can’t save him because scissors beats paper, you know?

with goggle, you can almost see grissom thinking that if he can just keep goggle talking, he can trick him into making a mistake. he thinks they’re playing mental chess. that’s why it takes him so completely by surprise when goggle “flips the board” and clocks him with the wrench. for him, it was a purely intellectual exercise, but goggle “broke the rules” and turned it physical.

that tendency in him comes from his cerebral nature, from the fact that his obsessions so enrapture him that sometimes he thinks of nothing outside of them, including his own safety.

it’s like his self-preservation instincts and even his sense of himself as a living creature with a body (as opposed to just a brain having thoughts) take a backseat to his compulsion to solve the puzzle. he can almost forget himself if he thinks he’s on the verge of cracking a case.

if any of his team members—and particularly sara—were to be as incautious as he in this regard, of course, he would be furious with them.

but in his own case, he just almost can’t help himself but keep putting one foot in front of the other because he is so compelled to get the solve, and he constantly rationalizes to himself as he goes along, handwaving away his poor decisions and downplaying the danger even though he really ought to know better the whole time.

so should he, on a logical level, realize that if he continues to investigate a case where the killer just so happens to target men who share his same birthday, he will likely get on said killer’s radar and himself become a target? absolutely.

and to an extent, he does.

however, does he also feel like since he’s the best candidate to solve the case—because he and said killer have this freaky kind of “mind meld” going on that gives him a sort of prescience about what moves he might make next—he has an obligation to keep going with it? definitely.

and does he reason that since he’s already in for a penny, he might as well go in for a pound (because the killer is aware of him anyhow, and even were he to recuse himself, at this point, it’s unlikely that the killer would just let him walk away unscathed)? for sure.

and does he console himself by thinking that, in any case, he’s smart enough to go toe-to-toe with this guy, so he’s not even in that much danger anyway because he’ll just outthink him? more likely than not, at least subconsciously.

and so does he end up at the killer’s house, eating dinner with the killer’s family, with no one there to back him up and no exit strategy in place should things go south? you bet.

but did he wake up that day thinking, “i'm going to confront paul millander”? no.

and did he intend to place himself in such danger? not really.

he would say that while ending up in danger is a consequence of his actions, it was never something he necessarily wanted to do. he was just following a lead, and, well—

he ended up there.

ditto for the strip strangler case.

yeah, in hindsight, grissom would probably be willing to admit that going to goggle’s apartment without backup wasn’t either standard protocol or a move that was particularly smart, but then he would also rationalize that he had a lead, and he wasn’t really in a position to call for backup because technically he was off the case and the department considered the case closed, so what else could he have done?

he'd try to explain that while maybe he could have put another team member on the job, he was the one with the most experience dealing with signature killers, so he was the one who knew what to look for; ergo, he had to go.

plus, he’d point out that he had a rapport with goggle from that first meeting at the crime scene, so it made sense to think he might’ve been able to play him.

he knew he was smarter than goggle; he just didn’t count on the fact that once goggle realized as much, he’d resort to brute force.

he’d say he just wanted to poke around.

talk to the guy a bit.

he’d be vehement that he never meant to incite an altercation.

certainly, he didn’t intend for catherine to have to shoot goggle on his behalf—

it just, uh, happened.

he followed the evidence, and it led him to that basement.

and if you pressed him to explain how his tendency to wind up in dangerous situations was any different than sara’s, he’d probably get a bit flustered and huffy, but what he’d settle on is that whereas his behavior is largely incidental and occasional, hers seems often to be purposeful and is definitely a pattern.

while grissom often somewhat stumbles into dangerous situations—meaning that it’s not necessarily his intent to find them, even if that’s exactly what ends up happening in consequence of his actions—sara in some ways seeks them out.

to his mind, there would be a difference between him “going on a fishing expedition” to discover material evidence at goggle’s apartment complex and her volunteering to be the bait in the fbi sting operation because on his end, he never meant to have a confrontation, while she was actively looking for one.

of course, faced with this accusation, sara would likely point out that whether or not grissom intended to confront goggle, that’s exactly what he ended up doing, and a confrontation is a confrontation is a confrontation, regardless of intention, so how is what he elected to do any different than what she volunteered for?

and, of course, in the early seasons, he wouldn’t be able to explain why he was so blasé about risking his own life but so adamant about not risking hers.

even if she pointed out the contradiction to him, he wouldn’t be able to acknowledge it.

as i talk about here,

gil grissom lives very much in his head, a trait which is simultaneously his great strength and great weakness.

the same intense cerebral quality which makes him an excellent criminalist also makes him socially awkward and even difficult at times to interact with. while he certainly has the capacity for empathy, as he demonstrates both with his cases and in certain instances regarding members of his team, sometimes he can’t see past the end of his own nose—or, as catherine puts it, “lift [his] head up out of that microscope”—particularly if he is not first prompted to do so…

grissom tends to be hyperfocused to the point of myopia on whatever is going on inside his mind, meaning that he oftentimes fails to realize how his behavior affects others and that he sometimes will behave hypocritically (because he can justify behaviors in himself that he doesn’t understand when they come from other people).

the real answer to the question would be that, deep down, he has this innate sense that while it’s okay for him to assume such risks for himself, it’s not okay for sara to do so, not because he views her as incapable—he knows that sara is smart and tough and skilled; he just also knows she isn’t invincible—but rather because he views her as too damn valuable.

he’s not suicidal, and he’s not looking for a gut-check; he is convinced—in what is undoubtedly a very unexamined, unself-conscious way—that it’s all right for him to sometimes walk up to the line of what’s safe and what’s not and peer over, whereas it’s never similarly okay for sara to do so, because—and here’s the hypocrisy part—he’d never be able to live with himself if something bad were to happen to her (ignoring the fact that she’d be just as devastated if something bad were to happen to him).

you know that conversation that he and sara have in episode 01x19 “gentle, gentle” about the danger of allowing certain victims to become “special”? recall, if you will, how sara points out that grissom counseled her not to let herself feel that way regarding her cases but now he’s doing the exact same thing with one of his. remember how his response to her is literally to say, “excuse me, but this victim is special,” without a hint of irony in his voice? remember how unself-aware he is throughout that whole exchange?

well, that’s pretty similar to how things are between him and sara with this recklessness issue.

when she does something that puts her life at risk, he freaks the fuck out about it because she is so incredibly precious to him that he can’t stand to think that he might lose her. her flip attitude toward her own safety frustrates him because he can’t believe she doesn’t realize that no matter how smart or capable she is, all it would take would be one bad moment for her to be seriously hurt or killed. when faced with that possibility, he ceases to care about case outcomes or what their duties are as criminalists because she’s more important to him than any sort of professional obligation. although of course he’s too scared to actually do so, he nevertheless does want very much to grab her by the shoulders and repeat to her again and again until she understands just exactly what he means, “you are the most important thing. you have to be careful. you have to be safe. the risk isn’t worth it. i care about you too much.”

he can rationalize away all of his own rash behavior as being in service to the job (and therefore an acceptable form of risk-taking), but he can’t do likewise when it comes to her.

there is probably something interesting to be said here about how often he conflates himself and “the work,” talking about himself—particularly to sara—as being synonymous with the lab (“the lab needs you”), but he thinks of her as something which transcends that milieu (see, for example, the dichotomy between his work and “the beautiful life” that he sets up in his monologue from episode 04x12 “butterflied”); he can excuse away his own actions as a function of the work, but he can’t do so in her case because she is more important than the work to him.

and yet somehow it doesn’t really occur to him that she would feel the same way about him being reckless that he does about her being reckless, were the situation reversed.

is this “do as i say, not as i do” attitude from him nonsensical? yes.

is it a double-standard? yes.

but particularly in the early seasons—when he is at his most reckless—grissom is prone to this kind of irrationality where sara is concerned; it’s part and parcel of how deeply he sublimates his feelings for her and how much he (consequently) ends up acting on instinct when it comes to her, letting his deepest-seated impulses win out over his logic.

anyway.

to wrap this discussion up nicely with a bow, i think it’s worth noting that after they get together, both grissom and sara become far less reckless with themselves. while of course there are still some instances of bravado and derring-do from both of them even once they are a couple, on the whole, they are both far less likely to put themselves in harm’s way once they know for a fact that there is someone who cares about them and champions their safety.

*insert all my fangirl tears here*

thanks for the question, friend! please feel welcome to send another any time.


Text

Interior Designer Au Part 3

*Let’s see if my HGTV binge helps me... After writing literally another version of this, which then led to a 4th part, and then debating whether or not to post this, I can happily say I’m finished, even though I’m not sure if I’ll write another story that dives into what happens after the remodel, but it is in the back of my mind. Also, feel free to create your own version of this AU; I would love to read it.

Part 1 | 2

It wasn’t long for Sabine and Aimee to make an agreement for Marinette to start her interior designing lessons. With the work of Marinette’s room in progress, Aimee decided that with each step, she would make sure that Marinette has a say and is willing to learn.

A couple of days have passed since the meeting between the fashion and interior designers, and Marinette couldn’t wait for Aimee and her crew to come in to transform her bedroom. After her parents gave her the budget for this project, Marinette knew some of her stuff would be reused and updated to match the décor.

“Marinette, Aimee and her crew are here!” Sabine shouts as her husband was taking care of the bakery today because she wanted to oversee the project.

It wasn’t long before Aimee and her crew step their way into the bedroom and start placing things down. Aimee looks around impressed.

“I’m surprised you managed to clear this place out; normally there would be a few stragglers here and there,” Aimee jokes as Marinette beams in delight.

“The only place I didn’t get to clean out was my loft.” Marinette confessed, which Aimee didn’t mind as the loft was going to be Marinette’s personal project in the future.

Soon the tarp was being laid on the floor and painter’s tape was being placed around the windows and beams; then the painters were able to work. Marinette started lining the tape to make a geometric pattern on the wall that was going to become her accent wall with a variety of pink. Once the first coat of paint was on the walls, the crew went to take a break leaving Aimee and Marinette in her room to discuss furniture.

“I know you wanted to wait until the paint was on the walls first, but do you have any idea what style you’re going for the lower level?” Aimee wonders as she walks over to the teen.

Marinette turns to face the older woman. “I was thinking something modern?” She shrugs, which was a red flag to Aimee. Aimee pulls out her phone and begins to search up some styles.

“Marinette, when looking at your room, I’m thinking of urban modern with gestures to nautical.” She shows Marinette some photos of the two styles. “You can easily use urban modern for the décor, while nautical would work for the walls and perhaps some of the furnishings. You need a very creative space and your future clients would like to see something that doesn’t scream a teen girl’s bedroom,” Aimee offers.

Marinette stares at the two styles and she couldn’t help but agree; however, she felt that the style was missing something. “Are there any other styles that I could look at and potentially take something from each to create my space?”

Aimee nods, “How about we hold off on furniture for another day and finish with the paint, you can meet me at my office after school, if that will help.”

Marinette quickly agrees. It wasn’t much but with her budget and the amount of fabric she would need to upgrade her chaise and desk chair, she wants to know what style she’s going for.

That night after the last layer of paint was done, Marinette searched for styles that she knew would fit in with her own style. In the end, she went with the urban modern feel with touches of her own designs that she’ll make in the future.

The next day, as soon as Marinette was out of class, she ran down to Aimee’s office. There she and her interior designer started talking and ordering new furniture. Thankfully, everything that was ordered was just enough to be under the budget that her parents had set.

“I can’t wait to be done with this project, Aimee.” Marinette sighs slouching down into the armchair.

“Well, we have a few days before the furniture is delivered. How about I show you some of the designs I created, to give you some inspiration for your own projects?” Aimee pulls out a large photo album and hands it over to the teen. “You have the skills, Marinette.”

Marinette flips through the pages, she’s right...I do have the skills.

When the furniture was delivered, Marinette had a lot on her plate and couldn’t wait for Aimee to show up so the furniture could be put into place. Thankfully, the chaise was redone with a fresh coat of paint and a new cushion that took Marinette a few rounds of trial and error. In the end, she was happy with how the chaise came out.

Aimee came over as soon as she heard and within the hour everything was in place.

The space underneath her lofted bed is now the fashion designer’s dream space. The desk was shortened to half of one wall underneath the stairs, with a new standalone desk easel right against the wall away from the window. A storage dresser stands between the two desks, and next to the easel is an armchair with a throw pillow. In the far corner stands the mannequin with a newly finished design.

Away from the area under the loft begins the lounge area with the chaise (now with storage underneath) right under the circle window. The standalone mirror sits closer to the sink on the other side. Across from the chaise is now a small storage coffee table and another armchair close to it.

All in all, Marinette was happy with this room makeover as this was the fresh start she needed before inviting any of her new friends over and potentially start a small business.

Sabine and Tom were the first, besides Aimee and Marinette, to see the room’s transformation. Tom was proud and surprised that his daughter managed to do all this in a matter of two weeks.

“Thank you, Aimee. Marinette really needed this.” Sabine told the designer over a cup of tea.

“It was nothing, Sabine. Also, Marinette is welcome to spend time at my office if she decides to add interior designing to her resume. I’m always looking for help and she would be a wonderful addition.” Aimee sips her tea as the two adults dive into a conversation about the up-and-coming designer, who was currently working on new projects in her newly redone room.

#miraculous ladybug#miraculous#miraculous au#miraculous fanfic#miraculous ladybug au#ml marinette#ml#mlb#marinette dupain cheng#interior design au#interior designer au#miraculous ladybug fanfic


Text

Strangulating bare-metal infrastructure to Containers

Change is inevitable. Change for the better is a full-time job ~ Adlai Stevenson I

We run a successful digital platform for one of our clients. It manages huge amounts of data aggregation and analysis in the Out of Home advertising domain.

The platform had been running successfully for a while. Our original implementation was focused on time to market. As it grew in geographic reach and impact, we decided to shift our infrastructure to containers for reasons outlined later in the post. Our day-to-day operations and release cadence needed to remain unaffected during this migration. To meet those goals, we chose an approach of incremental strangulation to make the shift.

The Strangler pattern is an established pattern that has been used in the software industry at various levels of abstraction; it has been documented by Microsoft and discussed by Martin Fowler, to name just two examples. The basic premise is to build an incremental replacement for an existing system or sub-system. The approach often involves creating a Strangler Facade that abstracts both the existing and the new implementation consistently. As features are re-implemented with improvements behind the facade, traffic or calls are incrementally routed via the new implementation. This continues until all traffic and calls go only via the new implementation and the old implementation can be deprecated. We applied the same approach to gradually rebuild our infrastructure in a fundamentally different way. Because of this approach, our production disruption was limited to a few minutes.

This writeup will explore some of the scaffolding we did to enable the transition and the approach that led to a quick switch-over with confidence. We will also talk about our tech stack from an infrastructure point of view and the shift that we brought in. We believe the approach is generic enough to be applied across a wide array of deployments.
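To make the facade idea concrete, here is a minimal sketch in Python. It is an illustration only, not code from our platform: the `StranglerFacade` class, the per-feature rollout fraction and the handler signatures are all assumptions made for the example.

```python
import random
from typing import Callable, Dict, Optional

Handler = Callable[[str], str]


class StranglerFacade:
    """Hypothetical facade that hides the legacy and new implementations."""

    def __init__(self, legacy: Handler, new: Handler,
                 rng: Optional[random.Random] = None) -> None:
        self.legacy = legacy
        self.new = new
        self.rng = rng or random.Random()
        # feature name -> share of calls (0.0 to 1.0) sent to the new implementation
        self.rollout: Dict[str, float] = {}

    def set_rollout(self, feature: str, fraction: float) -> None:
        if not 0.0 <= fraction <= 1.0:
            raise ValueError("fraction must be between 0 and 1")
        self.rollout[feature] = fraction

    def handle(self, feature: str, request: str) -> str:
        # Features without a rollout entry stay entirely on the legacy path.
        fraction = self.rollout.get(feature, 0.0)
        if self.rng.random() < fraction:
            return self.new(request)
        return self.legacy(request)
```

Raising the fraction for a feature step by step, and finally setting it to 1.0, corresponds to the point where the old implementation no longer receives calls.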

The as-is

Infrastructure

We rely on Amazon Web Services to do the heavy lifting for infrastructure. At the same time, we try to stay away from cloud-provider lock-in by using components that are open source or can be hosted independently if needed. Our infrastructure consisted of a double-digit number of services, at least 3 different data stores, messaging queues, an elaborate centralized logging setup (Elasticsearch, Logstash and Kibana) and a monitoring cluster (Grafana and Prometheus). Provisioning and deployments were automated with Ansible. A combination of queues and load balancers gave us the capability to scale services. Databases were configured as replica sets with automated failover. The service deployment topology across servers was pre-determined and configured manually in the Ansible config. Auto-scaling was not built into the design because our traffic and user base are pretty stable and we have reasonable forewarning of a capacity change. All machines were bare-metal machines and multiple services co-existed on each machine. All servers were organized across various VPCs and subnets for security fencing and were accessible only via a bastion instance.

Release cadence

Delivering code to production early and frequently is core to the way we work. All the code added within a sprint is released to production at the end of it. Some features can span sprints. A feature toggle service allows features to be enabled or disabled in various environments. We are a fairly large team divided into small cohesive streams. To manage release cadence across all streams, we trigger an auto-release to our UAT environment on a fixed schedule at the end of the sprint. A point-in-time snapshot of the git master is released. A subsequent automated deploy to production is triggered manually.

CI and release pipelines

Code and release pipelines are managed in GitLab. Each service has GitLab pipelines to test, build, package and deploy. Before the infrastructure migration, the deployment folder was co-located with the source code so that deployment and code were tagged and versioned together. The deploy pipelines in GitLab triggered an Ansible deployment that deployed the binary to various environments.

Figure 1 — The as-is release process with Ansible + BareMetal combination

The gaps

While we had a very stable infrastructure and a mature deployment process, we had aspirations that required some changes to the existing infrastructure. This section outlines some of the gaps and aspirations.

Cost of adding a new service

Adding a new service meant that we needed to replicate and set up deployment scripts for the service. We also needed to plan the deployment topology. This planning required taking into account the existing machine loads and the resource needs of the new service. When required, new hardware was provisioned. Even with that, we couldn’t dynamically optimize infrastructure use. All of this meant precious time was spent planning the deployment structure and changing the configuration.

Lack of service isolation

Multiple services ran on each box without any isolation or sandboxing. A bug in one service could fill up the disk with logs and have a cascading effect on other services. We addressed these issues with automated checks at both package time and runtime. However, without service sandboxing, our services were always susceptible to the noisy neighbour problem.

Multi-AZ deployments

A high availability setup required meticulous planning. While we had a multi-node deployment for each component, we did not have a safeguard against an availability zone failure. Planning for an availability zone failure required leveraging Amazon Web Services constructs, which would have locked us deeper into the AWS infrastructure. We wanted to address this without a significant lock-in.

Lack of artefact promotion

Our release process was centred around branches, not artefacts. Every auto-release created a branch called RELEASE that was promoted across environments. Artefacts were rebuilt on the branch in each environment. This isn’t ideal, because in rare cases a change in an external dependency within the same version can cause a failure. In our opinion, artefact versioning and promotion are preferable: there is higher confidence attached to releasing a binary that has already been tested.
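The difference between rebuilding per environment and promoting a tested artefact can be sketched as follows. This is a simplified illustration under assumptions: the `qa`, `uat`, `prod` order, the `Artefact` record and the digest check are hypothetical and do not describe our actual pipeline.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List

# Assumed promotion order, for illustration only.
PROMOTION_ORDER = ["qa", "uat", "prod"]


@dataclass
class Artefact:
    name: str
    version: str
    digest: str  # content hash recorded once, at build time
    promoted_to: List[str] = field(default_factory=list)


def build(name: str, version: str, payload: bytes) -> Artefact:
    """Build once and remember the digest of exactly what was built."""
    return Artefact(name, version, hashlib.sha256(payload).hexdigest())


def promote(artefact: Artefact, env: str, payload: bytes) -> None:
    """Allow promotion only for the same bytes, one environment at a time."""
    if hashlib.sha256(payload).hexdigest() != artefact.digest:
        raise ValueError("payload does not match the tested artefact")
    done = len(artefact.promoted_to)
    if done >= len(PROMOTION_ORDER) or PROMOTION_ORDER[done] != env:
        raise ValueError(f"cannot promote to {env!r} at this stage")
    artefact.promoted_to.append(env)
```

A rebuilt binary whose dependencies drifted would produce a different digest and be rejected, which is the failure mode that branch-based rebuilds cannot catch.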

Need for a low-cost spin-up of environment

As we expanded rapidly into more geographical regions, spinning up full-fledged environments quickly became crucial. In addition, costs continued to mount because infrastructure use was not optimized, which left a lot of room for savings. If we could re-use the underlying hardware across environments, we could reduce operational costs.

Provisioning cost at deployment time

Any significant changes to the underlying machine were made during deployment time. This effectively meant that we paid the cost of provisioning during deployments. This led to longer deployment downtime in some cases.

Considering containers & Kubernetes

It was possible to address most of the existing gaps in the infrastructure with additional changes. For instance, Route53 would have allowed us to set up services for high availability across AZs, extending Ansible would have enabled multi-AZ support and changing build pipelines and scripts could have brought in artefact promotion.

However, containers, and specifically Kubernetes, solved a lot of those issues either out of the box or with a small effort. Using kOps also allowed us to remain cloud-agnostic for the most part. We decided that moving to containers would provide the much-needed service isolation as well as other benefits, including a lower cost of operation with higher availability.

Since containers differ significantly in how they are packaged and deployed, we needed an approach that had minimal or zero impact on day-to-day operations and ongoing production releases. This required some thinking and planning. The rest of the post covers an overview of our thinking, our approach and the results.

The infrastructure strangulation

A big change like this warrants experimentation and confidence that it will meet all our needs with reasonable trade-offs. So we decided to adopt the process incrementally. The strangulation approach was a great fit for an incremental rollout. It helped in assessing all the aspects early on. It also gave us enough time to get everyone on the team up to speed. Having a good operating knowledge of deployment and infrastructure concerns across the team is crucial for us. The whole team collectively owns the production, deployments and infrastructure setup. We rotate on responsibilities and production support.

Our plan was a multi-step process. Each step was designed to give us more confidence and incremental improvement without disrupting the existing deployment and release process. We also prioritized the most uncertain areas first to ensure that we address the biggest issues at the start itself.

We chose Helm as the Kubernetes package manager to help us with the deployments and image management. The images were stored and scanned in AWS ECR.

The first service

We picked the most complicated service as the first candidate for migration. A change was required to augment the packaging step: in addition to the existing binary file, we added a step to generate a Docker image as well. Once the service was packaged and ready to be deployed, we provisioned the underlying Kubernetes infrastructure to deploy our containers. We could deploy only one service at this point, but that was enough to prove the correctness of the approach. We updated the GitLab pipelines to enable dual deploy. Upon code check-in, the binary would get deployed to the existing test environments as well as to the new Kubernetes setup.

Some of the things we gained out of these steps were the confidence of reliably converting our services into Docker images and the fact that dual deploy could work automatically without any disruption to existing work.

Migrating logging & monitoring

The second step was to prove that our logging and monitoring stack could continue to work with containers. To address this, we provisioned new servers for both logging and monitoring. We also evaluated Loki to see if we could converge tooling for logging and monitoring. However, due to various gaps in Loki given our needs, we stayed with the Elasticsearch stack. We did replace Logstash and Filebeat with Fluentd. This helped us address some of the issues that we had seen with Filebeat in our old infrastructure. Monitoring had new dashboards for the Kubernetes setup, as we now cared about pod health in addition to host machine health.

At the end of the step, we had a functioning logging and monitoring stack that could show data for a single Kubernetes service container as well as across a logical service or component. It made us confident about the observability of our infrastructure. We kept the new and old logging and monitoring infrastructure separate to keep the migration overhead out of the picture. Our approach was to keep both of them alive in parallel until the end of the data retention period.

Addressing stateful components

One of the key ingredients for strangulation was to defer any changes to stateful components until after the initial migration. This way, both the new and old infrastructure can point to the same data stores and reflect and update data state uniformly.

So as part of this step, we configured the newly deployed service to point to the existing data stores and ensured that all reads and writes worked seamlessly and were reflected on both infrastructures.

Deployment repository and pipeline replication

With one service and the support systems ready, we extracted a generic way to build images with Dockerfiles and deploy them to the new infrastructure. These steps could be used to add dual deployment to all services. We also changed our deployment approach. In the new setup, the deployment code lived in a separate repository where each environment and region was represented by a branch, for example uk-qa, uk-prod or in-qa. These branches carried the variables for the region + environment. In addition to that, we provisioned a HashiCorp Vault to manage secrets and introduced a structure to retrieve them by region + environment combination. We introduced namespaces to accommodate multiple environments over the same underlying hardware.
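The region + environment convention can be pictured with a small sketch. Only the branch names (uk-qa, uk-prod, in-qa) come from the setup described above; the `DeploymentTarget` type, the one-namespace-per-branch mapping and the `secret/<region>/<environment>/<service>` path layout are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentTarget:
    region: str
    environment: str

    @property
    def branch(self) -> str:
        # Branch in the deployment repository, e.g. "uk-qa".
        return f"{self.region}-{self.environment}"

    @property
    def namespace(self) -> str:
        # Hypothetical: one Kubernetes namespace per region + environment.
        return self.branch

    def secret_path(self, service: str) -> str:
        # Hypothetical secret layout keyed by region + environment.
        return f"secret/{self.region}/{self.environment}/{service}"


def parse_branch(branch: str) -> DeploymentTarget:
    """Split a branch name such as "uk-qa" into region and environment."""
    region, sep, environment = branch.partition("-")
    if not sep or not region or not environment:
        raise ValueError(f"expected <region>-<environment>, got {branch!r}")
    return DeploymentTarget(region, environment)
```

For example, `parse_branch("uk-qa").secret_path("billing")` yields `secret/uk/qa/billing`, where `billing` is a placeholder service name.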

Crowd-sourced migration of services

Once we had basic building blocks ready, the next big step was to convert all our remaining services to have a dual deployment step for new infrastructure. This was an opportunity to familiarize the team with new infrastructure. So we organized a session where people paired up to migrate one service per pair. This introduced everyone to docker files, new deployment pipelines and infrastructure setup.

Because the process was jointly driven by the whole team, we migrated all the services to have a dual deployment path in a couple of days. At the end of the process, we had all services ready to be deployed across two environments concurrently.

Test environment migration

At this point, we made the shift and updated the DNS records on our nameservers for the QA and UAT environments. The existing domains started pointing to the Kubernetes setup. Once the setup was stable, we decommissioned the old infrastructure. We also removed the old GitLab pipelines. Running all test environments only on Kubernetes forced us to address issues promptly.

In a couple of days, we were running all our test environments across Kubernetes. Each team member stepped up to address the fault lines that surfaced. Running this only on test environments for a couple of sprints gave us enough feedback and confidence in our ability to understand and handle issues.

Establishing dual deployment cadence

While we were running Kubernetes on the test environments, production was still on the old infrastructure and dual deployments were working as expected. We continued to release to production in the old style.

We would generate images that could be deployed to production but they were not deployed and merely archived.

Figure 2 — Using Dual deployment to toggle deployment path to new infrastructure

As the test environments ran on Kubernetes and stabilized, we used the time to establish a dual deployment cadence across all non-prod environments.

Troubleshooting and strengthening

Before migrating production, we spent time assessing and addressing a few things.

We updated the liveness and readiness probes for various services with the right values to ensure that long-running DB migrations didn’t cause a container shutdown and respawn. We eventually pulled migrations out into separate containers that could run as a job in Kubernetes rather than as a service.
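As a rough way to reason about probe values, the arithmetic can be sketched like this. It assumes a simplified model in which the first liveness probe runs after `initialDelaySeconds`, probes repeat every `periodSeconds`, and the container is restarted on the `failureThreshold`-th consecutive failure; probe timeouts and startup probes are ignored, and the function names are made up for the example.

```python
import math


def restart_deadline(initial_delay_s: int, period_s: int,
                     failure_threshold: int) -> int:
    """Seconds after container start at which the last tolerated probe fails."""
    return initial_delay_s + (failure_threshold - 1) * period_s


def min_failure_threshold(startup_s: int, initial_delay_s: int,
                          period_s: int) -> int:
    """Smallest threshold that lets a startup task of startup_s seconds finish."""
    remaining = max(0, startup_s - initial_delay_s)
    return max(1, math.ceil(remaining / period_s) + 1)
```

Under this model, a migration that takes 300 seconds with a 30 second initial delay and a 10 second period needs a failure threshold of at least 28, whereas a threshold of 3 would restart the container after about 50 seconds.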

We spent time establishing the right container sizing. This was driven by data from our old monitoring dashboards: the resource peaks from the past gave us a good idea of the baseline and ceiling of resources needed. We planned enough headroom to account for rolling updates of services.

We set up ECR scanning to ensure that we get notified about any vulnerabilities in our images in time to address them promptly.
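The sizing reasoning can be written down as simple arithmetic. The sketch below is an assumption-laden illustration, not our actual sizing method: the headroom percentage, the use of CPU millicores and the idea of counting surge replicas during a rolling update are all chosen for the example.

```python
def request_with_headroom(peak: int, headroom_pct: int) -> int:
    """Observed peak (e.g. CPU millicores) plus headroom, rounded up."""
    return (peak * (100 + headroom_pct) + 99) // 100


def capacity_during_rollout(peak: int, headroom_pct: int,
                            replicas: int, max_surge: int) -> int:
    """Capacity needed while old and new replicas briefly run side by side."""
    per_replica = request_with_headroom(peak, headroom_pct)
    return (replicas + max_surge) * per_replica
```

With a historical peak of 400 millicores, 25% headroom, 3 replicas and 1 surge replica, each container would request 500 millicores and the cluster would need room for 2000 millicores during a rollout.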

We ran security scans to ensure that the new infrastructure is not vulnerable to attacks that we might have overlooked.

We addressed a few performance and application issues, particularly for batch processes, which had been split across servers running the same component. This wasn’t possible in the Kubernetes setup, as each instance of a service container feeds off the same central config. So we generated multiple images, each responsible for part of the batch jobs, and they were identified and deployed as separate containers.

Upgrading production passively

Finally, with all the testing done, we were confident about rolling out the Kubernetes setup to the production environment. We provisioned all the underlying infrastructure across multiple availability zones and deployed services to them. The infrastructure ran in parallel and connected to all the production data stores, but it did not have a public domain configured to access it. Days before going live, the TTL for our DNS records was reduced to a few minutes. The next 72 hours gave us enough time for this to refresh across all DNS servers.

Meanwhile, we tested and ensured that things worked as expected using an alternate hostname. Once everything was in place, we were ready for the DNS switchover without any user disruption or impact.
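The timing logic behind lowering the TTL ahead of the switch can be sketched as below. The assumption here is that resolvers may keep serving a cached record for up to the old TTL after the TTL is lowered, so the switch is only "fast" once that window has passed; the 72 hour old TTL used in the example is an assumption inferred from the waiting period, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def earliest_safe_switch(ttl_lowered_at: datetime, old_ttl_s: int) -> datetime:
    """Time by which caches holding the record under the old TTL have expired."""
    return ttl_lowered_at + timedelta(seconds=old_ttl_s)


def can_switch(now: datetime, ttl_lowered_at: datetime, old_ttl_s: int) -> bool:
    """True once every resolver should be honouring the lowered TTL."""
    return now >= earliest_safe_switch(ttl_lowered_at, old_ttl_s)
```

For instance, if the TTL was lowered at midnight UTC on 1 January and the old TTL was 72 hours, `earliest_safe_switch` returns midnight UTC on 4 January.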

DNS record update

The go-live switch-over involved updating the nameservers’ DNS record to point to the API gateway fronting the Kubernetes infrastructure. An alternate domain name continued to point to the old infrastructure to preserve access. It remained on standby for two weeks to provide a fallback option. However, with all the testing and setup, the switch-over went smoothly. Eventually, the old infrastructure was decommissioned and the old GitLab pipelines were deleted.

Figure 3 — DNS record update to toggle from legacy infrastructure to containerized setup

We kept old logs and monitoring data stores until the end of the retention period to be able to query them in case of a need. Post-go-live the new monitoring and logging stack continued to provide needed support capabilities and visibility.

Observations and results

Post-migration, the time to create environments has reduced drastically and we can reuse the underlying hardware more optimally. Our production runs all services in HA mode without an increase in cost. We are set up across multiple availability zones. Our data stores are replicated across AZs as well, although they are managed outside the Kubernetes setup. Kubernetes had a learning curve and it required a few significant architectural changes. However, because we planned for an incremental rollout with coexistence in mind, we could take our time to change, test and build confidence across the team. While it may be a bit early to conclude, the transition has been seamless and the benefits are evident.


Photo

The rhythmicity project by @monad_studio is a design exploration of rhythmical patterns of growth found in the temporal processes of species in Florida. According to the design team, observations of strangler fig trees, for instance, show that they grow aerial roots that attach themselves to host trees, depriving them of life in the long run. The design concerns itself with the expression of a symbiotic system’s ability to augment the robustness of an assemblage with its host. #researchproject #concept #conceptdesign #monadstudio #3dprint #3dprinting #3dprinted #maquette #fabrication #digitalfabrication #fabricate #digitaldesign #design #designer #parametric #grasshopper3d #rhinoceros3d #parametricarchitecture #parametricdesign #parametricism #architecture #architect #mimar #mimarlik #architectureporn (at Florida) https://www.instagram.com/p/Bssh--elC2_/?utm_source=ig_tumblr_share&igshid=1p7g1yibkg5y7

#researchproject#concept#conceptdesign#monadstudio#3dprint#3dprinting#3dprinted#maquette#fabrication#digitalfabrication#fabricate#digitaldesign#design#designer#parametric#grasshopper3d#rhinoceros3d#parametricarchitecture#parametricdesign#parametricism#architecture#architect#mimar#mimarlik#architectureporn


Text

A professional's guide to solving complex problems while designing modern software

Key Features

Learn best practices for designing enterprise-grade software systems
Understand the importance of building reliable, maintainable, and scalable systems
Become a professional software architect by learning the most effective software design patterns and architectural concepts

Book Description

As businesses are undergoing a digital transformation to keep up with competition, it is now more important than ever for IT professionals to design systems to keep up with the rate of change while maintaining stability.

This book takes you through the architectural patterns that power enterprise-grade software systems and the key architectural elements that enable change such as events, autonomous services, and micro frontends, along with demonstrating how to implement and operate anti-fragile systems. You'll divide up a system and define boundaries so that teams can work autonomously and accelerate the pace of innovation. The book also covers low-level event and data patterns that support the entire architecture, while getting you up and running with the different autonomous service design patterns. As you progress, you'll focus on best practices for security, reliability, testability, observability, and performance.

Finally, the book combines all that you've learned, explaining the methodologies of continuous experimentation, deployment, and delivery before providing you with some final thoughts on how to start making progress.

By the end of this book, you'll be able to architect your own event-driven, serverless systems that are ready to adapt and change so that you can deliver value at the pace needed by your business.

What you will learn

Explore architectural patterns to create anti-fragile systems that thrive with change
Focus on DevOps practices that empower self-sufficient, full-stack teams
Build enterprise-scale serverless systems
Apply microservices principles to the frontend
Discover how SOLID principles apply to software and database architecture
Create event stream processors that power the event sourcing and CQRS pattern
Deploy a multi-regional system, including regional health checks, latency-based routing, and replication
Explore the Strangler pattern for migrating legacy systems

Who this book is for

This book is for software architects and aspiring software architects who want to learn about different patterns and best practices to design better software. Intermediate-level experience in software development and design is required. Beginner-level knowledge of the cloud will also help you get the most out of this software design book.

Publisher: Packt Publishing Limited (30 July 2021)
Language: English
Paperback: 436 pages
ISBN-10: 1800207034
ISBN-13: 978-1800207035
Item Weight: 744 g
Dimensions: 19.05 x 2.51 x 23.5 cm
Country of Origin: India


Text

August 2018 in Review

I have a weird memory. It’s highly pattern-driven and very visual. This means that my memory of films I’ve watched is based on images and series of images that made an impression instead of plot points. It’s why I rewatch movies so often. Even though I’ve been tracking my movie viewing habits for two and a half years, that doesn’t mean I’ve created strong memories for all those movies. That’s why I’m gonna start doing monthly roundups of the new-to-me films that struck me, one way or the other.

[If you wanna know all the films I’m watching, I keep full lists on letterboxd and imdb.]

The reviews below are essentially transcriptions of the notes I took right after watching the films. Because of Summer Under the Stars and my cosplay challenge, this month was pretty TCM heavy for me.

Full Roundup BELOW THE JUMP!

Teen Titans Go to the Movies (2018)

27 July 2018 | 84 min. | Color

Directed and Written by Aaron Horvath and Peter Rida Michail

Starring Greg Cipes, Scott Menville, Khary Payton, Tara Strong, and Hynden Walch

I’m already a fan of the show and the movie kicks it up a notch with its humor and style. [If you liked the original series, give TTG a chance already.] TTG to the Movies is a great superhero movie for anyone who’s down for superhero stories but is fatigued by the current spate of offerings. Grain-of-Salt warning here because I think Superman III (1983) is great.

Fun that they included some gags here and there for the parents out there who’ve had to hear the Waffles song a few too many times. Also, one of the best ending gags for a kid’s movie ever.

Where to Watch: Still in theaters, but I’d imagine Cartoon Network will be playing it soon.

Doctor X (1932)

27 August 1932 | 76 min. | 2-strip Technicolor

Directed by Michael Curtiz

Written by Earl Baldwin and Robert Tasker

Starring Lionel Atwill, Lee Tracy, and Fay Wray

I made the statement that Darkman (1990) is the most comic-book movie that isn’t adapted from a comic book. I hadn’t seen Doctor X yet though.

The set pieces are phenomenal. Each shot is artfully constructed and the way the shots are strung together makes the most of the production design. If one were to do a comic adaptation, it would take some imaginative work to not just mimic the film. The 2-strip technicolor is particularly effective in the laboratory scenes in creating an eerie aura. Sensational.

Lee Tracy is playing, as usual, a press man and he’s doing so perfectly. Tracy is so underrated.

Where to Watch: Looks like the DVD is out of print, so maybe check your local library or video store. TCM plays it every once in a while and, since Warner Bros has a deal with Filmstruck, I wouldn’t be surprised to see it pop up there eventually.

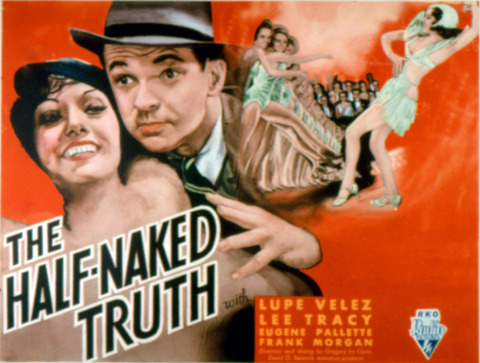

The Half-Naked Truth (1932)

16 December 1932 | 77 min. | B&W

Directed by Gregory La Cava

Written by Corey Ford and Gregory La Cava

Starring Frank Morgan, Eugene Pallette, Lee Tracy, and Lupe Velez

You might very well think Lee Tracy was a featured TCM star this month. (Maybe next SUTS? Pretty please.)

Lupe Velez is so talented and natural it was nice to see her in a film where her wits were matched. I’ll be honest, I’m a big Lupe fan but, for most of her films, she’s the only good reason to watch them. This wasn’t the case here! There are a lot of wonderful moments with small movements and gestures that make Velez and Tracy’s relationship feel very real, as if they’re actually that caught up in one another. Eugene Pallette, Franklin Pangborn, and Frank Morgan round out the ensemble. The running eunuch joke might not be all that funny, but it’s a masterclass in not saying what you mean. Also, very cute chihuahua.

Where to Watch: The DVD is available from the Warner Archive. (So, once again, local library or video store might have a copy.)

The Cuban Love Song (1931)

5 December 1931 | 86 min. | B&W

Directed by W.S. Van Dyke

Written by John Lynch, Bess Meredyth, and C. Gardner Sullivan

Starring Jimmy Durante, Lawrence Tibbett, Ernest Torrence, and Lupe Velez

Lupe is wonderful in this. She plays a Cuban woman who sounds an awful lot like a Mexican woman--which might be something you have to overlook to enjoy the film FYI. Lawrence Tibbett has a shocking dearth of charisma in the lead, but Jimmy Durante, Ernest Torrence, and Louise Fazenda take the heat off him well. It’s a little hard to root for Tibbett’s character and the ending is disappointing. (Spoiler: privileging of the affluent “white” couple.)

The songs are great. I love the habit of placing people in musicals so that they are singing full force directly into each other’s faces. I don’t know why I find it so funny, but it’s not a mood ruiner for Cuban Love Song. The editing is fun and energetic. Until the war breaks out, there’s a lot of solid humor.

After watching so many Lupe films this month, I’d love to sit down with people who do and don’t know Spanish to talk about her films. There seem to be some divisions on social media and across blogs about Lupe’s films that might be attributable to whether or not one understands Spanish. I myself understand Spanish reasonably well and I think knowing what Lupe and others are saying makes almost all of her films funnier. And boy, does Lupe like calling men stupid animals.

Where to Watch: This one seems kinda rare. Looks like there may have been a VHS release, but you may just have to wait for TCM to play it again!

The Night Stalker (1972)

11 January 1972 | 74 min. | Color

Directed by John Llewellyn Moxey

Written by Jeffrey Grant Rice and Richard Matheson

Starring Carol Lynley, Darren McGavin, and Simon Oakland

and

The Night Strangler (1973)

16 January 1973 | 74 min. | Color

Directed by Dan Curtis

Written by Jeffrey Grant Rice and Richard Matheson

Starring Darren McGavin, Simon Oakland, and Jo Ann Pflug

I loved that these films are exactly like the Kolchak TV series. My SO and I have been watching the show weekly as it airs on MeTV and so he surprised me by renting the movies that kicked off the series. Honestly, watching backwards may have made the movies even more entertaining. How is Kolchak still working for Vincenzo in Las Vegas?? The answer is in Seattle.

The TV movies were intended as a trilogy, but after the success of the first two films, it was developed into a series instead. It’s cool to see how every piece of the Kolchak formula was in place immediately and how firmly Darren McGavin had a hold on the character. His chemistry with Simon Oakland (Vincenzo) is spectacular--a great comedy duo TBH. If you like their shouting matches on the show, Night Strangler has a humdinger to offer you.

Night Stalker is a pretty straightforward vampire story, written by Richard Matheson, one of the great spec-fic writers of the 1960s and 1970s. Matheson also wrote one of the best undead novels of all time, I Am Legend. What elevates the film over the basic mythology, aside from the great performances, pacing, and editing, is that the story’s really about how suppression actually goes down--how mundane and frustrating it can be even in the face of the supernatural.

Night Strangler is a little more creative with its monster. They integrate the nature and landmarks of Seattle in fun ways. The stripper characters are delightful. Jo Ann Pflug gives a truly funny performance and feels like a natural contender for Kolchak. Even his romantic relationships should be affectionately combative. The ditzy lesbian, Charisma Beauty (Nina Wayne) is hilarious and Wayne’s timing is impeccable. (BTW: they don’t explicitly call her a lesbian but it’s still made very overt.) There’s also a wonderful cameo by Margaret Hamilton.

As far as I can tell, it’s easier to get access to these films than the series. They’re worth seeing even if you haven’t seen the Kolchak TV show. They’re also a good pick if you’re a fan of X-Files, as Kolchak is the mother of that show. Even though I’m an X-Files fan and grew up watching it, Kolchak is edging it out for me lately. Maybe because if you’re telling a story about fighting for truth against the suppression of information, you undercut yourself by making the protagonist a fed.

Where to Watch: Kino Lorber is releasing restored editions of the films on Blu-ray and DVD in October!

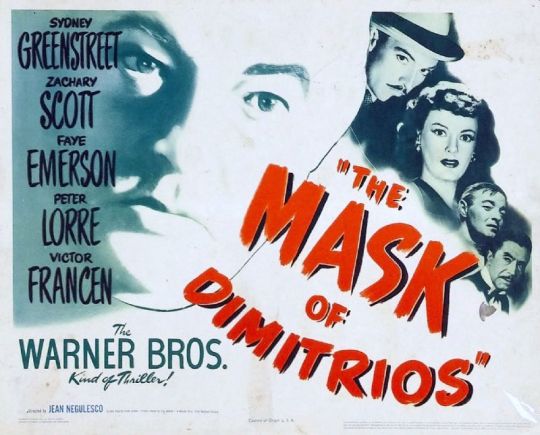

The Mask of Dimitrios (1944)

1 July 1944 | 95 min. | B&W

Directed by Jean Negulesco

Written by Frank Gruber

Starring Sydney Greenstreet, Peter Lorre, and Zachary Scott

This was great! I loved Peter Lorre and Sydney Greenstreet together. If you’re looking for a mystery story that flows and escalates well and presents a parade of interesting characters and locales, Dimitrios is for you. It’s also always nice to see Lorre in the lead.

Where to Watch: The DVD is available from the Warner Archive. (So, once again, local library or video store might have a copy.)

Strait-Jacket (1964)

19 January 1964 | 93 min. | B&W

Directed by William Castle

Written by Robert Bloch

Starring Diane Baker and Joan Crawford

I mentioned in my Joan Crawford CUTS post that I’d been meaning to see this for years. My enjoyment of the film didn’t suffer a bit from that length of anticipation.

I like William Castle’s movies a lot. I like the campy humor and quirky stories. This one is campy still, but not as heavy on the humor--unless you have a real weird sense of humor. That’s not a strike against Strait-Jacket though. Castle builds so much tension that by the end of the film, you feel like anyone could be axe-murdered at any moment, which becomes absurdly fun. The ending might be a little predictable, but it’s fun to go along for the ride. I didn’t particularly like the tacked on ending but I guess every JC movie needs to end on JC?

Largely unrelated, but if you’re a Castle fan, have you checked out his TV show Ghost Story/Circle of Fear? The first episode, The New House, in particular is top notch.

Where to Watch: It’s on Blu-ray and DVD from Sony (your local library or video store might have a copy) and it’s for rent on Amazon Prime. It’s also still on-demand via TCM for another few days.

One I didn’t write up: Cairo (1942). I brought up in my Jeanette MacDonald post that I was hoping to find a MacDonald film I enjoyed watching on her Summer Under the Stars day and I did!

#monthly roundup#month in review#Film Review#film recommendation#movie review#movie recommendations#2010s#2018#Teen Titans#Teen Titans Go#Teen Titans Go To The Movies#1930s#pre-code#doctor x#the half-naked truth#cuban love song#the cuban love song#lupe velez#lee tracy#kolchak#the night stalker#kino lorber#the night strangler#television#70s tv#tv#tv movie#1970s#the mask of dimitrios#1940s

6 notes

Photo

Nailed On, Typo Error or Jimmy’s Step Brother? #unsung_tiny_heroes #jj_texture #mytexturefix #textureispoetry #texture_art #hintology_texture #a_2nd_look #patterns #jj_textypographical #jj_typography #tv_textypographical #tv_typography #typography #design #pic #picsart #pics #photo #photoart #photos #photography #photographersgallery #artphotography #conceptualphotography #davidhillgallery #atlasgallery #tristanhoaregallery #huxleyparlourgallery #purdyhicksgallery #michaelhoppengallery (at The Stranglers Convention) https://www.instagram.com/p/CajjKaCoT10/?utm_medium=tumblr

#unsung_tiny_heroes#jj_texture#mytexturefix#textureispoetry#texture_art#hintology_texture#a_2nd_look#patterns#jj_textypographical#jj_typography#tv_textypographical#tv_typography#typography#design#pic#picsart#pics#photo#photoart#photos#photography#photographersgallery#artphotography#conceptualphotography#davidhillgallery#atlasgallery#tristanhoaregallery#huxleyparlourgallery#purdyhicksgallery#michaelhoppengallery

0 notes

Text

Delve into the second edition to master serverless proficiency and explore new chapters on security techniques, multi-regional deployment, and optimizing observability.

Key Features

- Gain insights from a seasoned CTO on best practices for designing enterprise-grade software systems
- Deepen your understanding of system reliability, maintainability, observability, and scalability with real-world examples
- Elevate your skills with software design patterns and architectural concepts, including securing in depth and running in multiple regions

Book Description

Organizations undergoing digital transformation rely on IT professionals to design systems to keep up with the rate of change while maintaining stability. With this edition, enriched with more real-world examples, you'll be perfectly equipped to architect the future for unparalleled innovation.

This book guides you through the architectural patterns that power enterprise-grade software systems while exploring key architectural elements (such as event-driven microservices and micro frontends) and learning how to implement anti-fragile systems.

First, you'll divide up a system and define boundaries so that your teams can work autonomously and accelerate innovation. You'll cover the low-level event and data patterns that support the entire architecture while getting up and running with the different autonomous service design patterns.

This edition is tailored with several new topics on security, observability, and multi-regional deployment. It focuses on best practices for security, reliability, testability, observability, and performance. You'll be exploring the methodologies of continuous experimentation, deployment, and delivery before delving into some final thoughts on how to start making progress.

By the end of this book, you'll be able to architect your own event-driven, serverless systems that are ready to adapt and change.

What you will learn

- Explore architectural patterns to create anti-fragile systems
- Focus on DevSecOps practices that empower self-sufficient, full-stack teams
- Apply microservices principles to the frontend
- Discover how SOLID principles apply to software and database architecture
- Gain practical skills in deploying, securing, and optimizing serverless architectures
- Deploy a multi-regional system and explore the strangler pattern for migrating legacy systems
- Master techniques for collecting and utilizing metrics, including RUM, Synthetics, and Anomaly detection

Who this book is for

This book is for software architects who want to learn more about different software design patterns and best practices. This isn't a beginner's manual - you'll need an intermediate level of programming proficiency and software design experience to get started.

You'll get the most out of this software design book if you already know the basics of the cloud, but it isn't a prerequisite.

Table of Contents

- Architecting for Innovations
- Defining Boundaries and Letting Go
- Taming the Presentation Tier
- Trusting Facts and Eventual Consistency
- Turning the Cloud into the Database
- A Best Friend for the Frontend
- Bridging Intersystem Gaps
- Reacting to Events with More Events
- Running in Multiple Regions
- Securing Autonomous Subsystems in Depth
- Choreographing Deployment and Delivery
- Optimizing Observability
- Don't Delay, Start Experimenting

- Publisher: Packt Publishing; 2nd ed. edition (27 February 2024)
- Language: English
- Paperback: 488 pages
- ISBN-10: 1803235446
- ISBN-13: 978-1803235448
- Item Weight: 840 g
- Dimensions: 2.79 x 19.05 x 23.5 cm
- Country of Origin: India

0 notes

Text

Diary of a Designer – Part 187 – by Patrick Moriarty

The Paisley Rats design was influenced by Indian paisley patterns, the punk rock band The Stranglers, and contemporary artist Banksy. Patrick Moriarty's unique rodent design will be featured in an exhibition about paisley patterns in an art museum in 2022.

Paisley Rats fabrics are bestsellers. They are popular for fashion and home decor. Fashion accessories made with the paisley rat-patterned textiles look original, trendy, and unique. Here is a photo of Mia Lee wearing a Paisley Rats headscarf with her son. Julia in Germany buys Paisley Rats fabrics to make products to sell online. She wrote, “As always,…

2 notes

Text

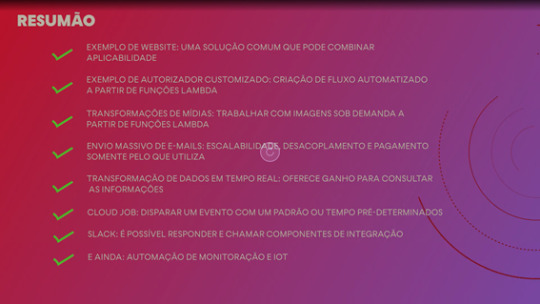

Serverless Computing – Lesson 4 – Part 1

Professor: Fernando Sapata

EP 08. Combinations

Content:

What serverless can deliver:

Application modernization through the Strangler pattern.

In practice: carve small pieces out of the application and move them to a new architecture, a new strategy, or even a new paradigm.

Modernizing an application means draining it piece by piece, in sequence, while the main monolithic application is still running. This lets us turn small slices of the application into functions that are called individually and that each deliver the result expected of them.
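The incremental carving described above can be sketched as a small routing facade. This is a minimal illustration and not the course's own code: `StranglerFacade`, `legacy_monolith`, `orders_function`, and the `/orders` prefix are all hypothetical names, and in a real deployment the routing would usually live in a load balancer or API gateway rather than in application code.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


def legacy_monolith(request: dict) -> dict:
    """Stand-in for the monolith, which keeps running during the migration."""
    return {"served_by": "monolith", "path": request["path"]}


def orders_function(request: dict) -> dict:
    """Stand-in for a slice already extracted into its own function."""
    return {"served_by": "orders-function", "path": request["path"]}


class StranglerFacade:
    """Routes migrated path prefixes to new handlers, everything else to the monolith."""

    def __init__(self, fallback: Handler) -> None:
        self._fallback = fallback
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        # Each call moves one more slice of traffic away from the monolith.
        self._migrated[prefix] = handler

    def handle(self, request: dict) -> dict:
        path = request["path"]
        # Check longer prefixes first so the most specific route wins.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](request)
        return self._fallback(request)


facade = StranglerFacade(fallback=legacy_monolith)
facade.migrate("/orders", orders_function)
```

Calling `facade.handle({"path": "/orders/42"})` reaches the new function, while any path that has not been migrated yet still falls through to the monolith.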

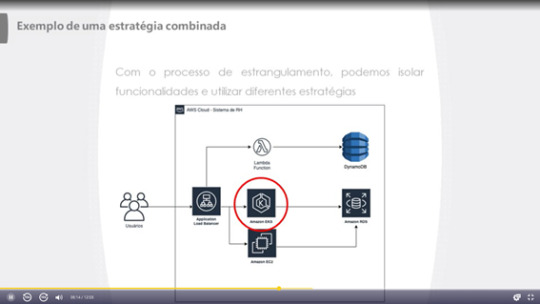

Examples of combined strategies:

1- First example: a system already in the cloud, but using only IaaS.

2- Isolating functionality using Lambda functions as the processing mechanism (a hybrid of IaaS and FaaS):

ELB (Elastic Load Balancer) – performs port forwarding.

ALB (Application Load Balancer) – performs application load balancing, operating at layer 7 of the network model.

3- A scenario combining FaaS, PaaS, and IaaS: Lambda (FaaS), EKS (PaaS), and EC2 machines (IaaS).

4- In this design the application has been fully redesigned, with a data domain for each function domain.

Tip: Modernization does not have to happen overnight.

[Summary]

Serverless Computing – Lesson 4 – Part 2

Professor: Fernando Sapata

EP 09. Best practices

Content:

- Always put an API gateway in front of your functions.

Reason: The API gateway abstracts your function from its caller. This not only helps protect the function, it also keeps callers from breaking when the functions receive future, necessary updates.

- Use a single function per route:

Reason: Create specialized functions, with one route per function.

- Don't forget testing:

Reason: Apply the same testing strategies used in traditional development to serverless functions, using the tools already listed (Serverless Framework, tools for integrating with external dependencies, and mocking).

- Develop locally whenever possible:

Reason: Shorter development time and time to market.

- Store application configuration outside the application:

Reason: Improves code portability.
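One common way to keep configuration outside the code is to read it from environment variables. This is a minimal sketch under that assumption: the variable names `APP_TABLE_NAME` and `APP_TIMEOUT_SECONDS` and their defaults are hypothetical, not taken from the course.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    table_name: str
    timeout_seconds: float


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Build settings from the environment instead of hard-coding them."""
    source = os.environ if env is None else env
    return Settings(
        # Hypothetical variable names and defaults, for illustration only.
        table_name=source.get("APP_TABLE_NAME", "orders-dev"),
        timeout_seconds=float(source.get("APP_TIMEOUT_SECONDS", "3")),
    )
```

Passing a plain dictionary to `load_settings` makes the function easy to test, and the same code can move between environments or providers with only the variables changing.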

- Log at every layer:

Reason: Observability of the environment. Know exactly how your application is behaving so you can optimize it whenever necessary, and identify abnormal behavior.

- Implement a unique transaction ID:

Reason: Have a unique ID, preferably created at the edge (the entry point), so you can trace a transaction end to end and identify where it stopped and why. (Be careful with LGPD, Brazil's general data protection law.)
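A rough sketch of the idea: the entry point creates the ID once and every layer logs it. The header name `x-transaction-id`, the layer names, and the function names are assumptions made for illustration. The ID is a random UUID and is not derived from user data, which keeps personal information out of the logs.

```python
import json
import uuid

TRANSACTION_HEADER = "x-transaction-id"  # hypothetical header name


def ensure_transaction_id(headers: dict) -> str:
    """Reuse the caller's ID if present, otherwise create one at the edge."""
    return headers.get(TRANSACTION_HEADER) or uuid.uuid4().hex


def log_event(transaction_id: str, layer: str, message: str) -> str:
    """Emit one structured log line that carries the transaction ID."""
    line = json.dumps(
        {"transaction_id": transaction_id, "layer": layer, "message": message}
    )
    print(line)
    return line


def process_order(transaction_id: str) -> dict:
    # Downstream layer: logs with the same ID it was handed.
    log_event(transaction_id, "orders", "order processed")
    return {"transaction_id": transaction_id}


def edge_handler(headers: dict) -> dict:
    # Entry point: the ID is created here and passed to every later step.
    transaction_id = ensure_transaction_id(headers)
    log_event(transaction_id, "edge", "request received")
    return process_order(transaction_id)
```

Searching the logs for one `transaction_id` then shows every layer the request reached, and the last line found marks where it stopped.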

- Treat functions as ephemeral:

Reason: Always design your function on the assumption that it will be cold-started, and account for the time that can take. Take care with dependencies and load time: functions as a service can be discarded once they finish executing, which adds loading delay whenever a new instance has to start.

- Always account for cold start:

Reason: Covered under ephemeral functions. The moment a function is invoked is not the moment it can actually start processing; the two differ, and that gap must be taken into account.

- Avoid wait time in code:

Reason: Do not put wait events in your code; you pay for the time your application spends waiting. (Use orchestration or choreography to control the synchronous or asynchronous execution of your code.)

- Avoid monolithic functions:

Reason: Do not serve more than one route from a single function. Functions with many responsibilities and dependencies can cause routing and load (cold start) problems.

- Manage dependencies properly:

Reason: Avoid loading many dependencies just to create a function. Bring only the dependencies that are needed.

- Start with CI/CD:

Reason: Building a delivery pipeline later costs far more time and money than creating it from the start. Think of cost not only as money, but also as risk and time.

- Consider the total execution time of your functions:

Reason: Monitor the total execution time of each function, because if it varies, its execution cost varies too, and you may get surprises on your bill. Cloud providers let you monitor this data.

- Fine-tune memory settings:

Reason: Cloud providers tie CPU to memory. Since memory is the only setting we can tune in functions as a service, use higher memory values for functions that are more CPU-bound: the more memory, the more CPU is available.

Test continuously and, whenever possible, identify the optimal value for each of your functions.
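The search for an optimal memory value can be sketched as a simple cost comparison. It assumes compute is billed in proportion to memory multiplied by duration (GB-seconds) and ignores per-request fees. The price and the measurements below are invented numbers for illustration, not real provider pricing or benchmark results.

```python
from typing import List, Tuple


def execution_cost(
    memory_mb: int, duration_ms: float, invocations: int, price_per_gb_second: float
) -> float:
    """Compute cost under the assumed memory x duration billing model."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000) * invocations
    return gb_seconds * price_per_gb_second


def cheapest_setting(
    measurements: List[Tuple[int, float]], invocations: int, price_per_gb_second: float
) -> Tuple[int, float]:
    """Pick the (memory_mb, avg_duration_ms) pair with the lowest total cost."""
    return min(
        measurements,
        key=lambda m: execution_cost(m[0], m[1], invocations, price_per_gb_second),
    )


# Hypothetical measurements: more memory brings more CPU, so duration drops.
MEASUREMENTS = [(128, 1000.0), (256, 450.0), (512, 300.0)]
HYPOTHETICAL_PRICE = 0.00002  # invented price per GB-second
```

With these made-up numbers the 256 MB setting wins: doubling memory from 128 MB more than halves the duration, while going to 512 MB no longer speeds things up enough to pay for itself.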

- Consider the total cost of the solution:

Reason: The unit costs of functions as a service are often very low and can give the impression that everything will be very cheap. Don't be fooled; calculate everything carefully to avoid surprises. Cost is not value: when you come across differing costs, compare the value of each option, and make fair comparisons that account for the characteristics of each scenario.

To compare Lambda with EC2 machines, the right comparison is 1 Lambda -> 3 EC2, taking high availability into account.

- Use tooling to minify your code:

Reason: Saves space and reduces function size, cold start time, and consequently total execution cost.

Another aspect of minification is that it makes the code harder to interpret, adding to the code's security layer.

Minification can be implemented directly in the development pipeline. (Always check whether the language allows this technique.)

- Separate the function's handler from its business logic:

Reason: Create portability mechanisms and avoid vendor lock-in. This process can be integrated into your code's development pipeline and automated.
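A minimal sketch of the separation: the business logic is a plain function with no knowledge of the platform, and the handler only translates the provider's event into a call to it. The event and response shapes here (a JSON string under `body`, a `statusCode` in the reply) are an assumption modelled on a typical HTTP proxy integration, and the function names are hypothetical.

```python
import json
from typing import List


def calculate_total(items: List[dict]) -> int:
    """Pure business logic: no provider types, easy to test and to move."""
    return sum(item["price"] * item["quantity"] for item in items)


def lambda_style_handler(event: dict, context: object = None) -> dict:
    """Thin adapter: parse the provider event, delegate, format the response."""
    body = json.loads(event["body"])
    total = calculate_total(body["items"])
    return {"statusCode": 200, "body": json.dumps({"total": total})}
```

Moving to another provider then means rewriting only the adapter, while `calculate_total` and its tests stay unchanged.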

- Guarantee idempotency:

Reason: Build mechanisms that prevent system operations from being executed twice. This is essential to avoid integrity problems in your application or databases.
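One way to prevent duplicate execution is an idempotency key: the result of each operation is recorded under a key, and a repeated delivery with the same key returns the stored result without applying the change again. In this sketch the dictionary is an in-memory stand-in for a durable store, and `IdempotentProcessor` and `apply_payment` are hypothetical names.

```python
from typing import Dict


class IdempotentProcessor:
    """Applies each payment at most once per idempotency key."""

    def __init__(self) -> None:
        # In-memory stand-in; a real function would need a durable store.
        self._results: Dict[str, int] = {}
        self.balance = 0

    def apply_payment(self, idempotency_key: str, amount: int) -> int:
        if idempotency_key in self._results:
            # Duplicate delivery: return the earlier result, change nothing.
            return self._results[idempotency_key]
        self.balance += amount
        self._results[idempotency_key] = self.balance
        return self.balance
```

Retries and at-least-once event delivery are routine in serverless platforms, so a check like this keeps a redelivered event from charging the same payment twice.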

- More choreography, less orchestration:

Reason: Avoid single points of failure and too much responsibility in one component. The idea behind using choreography in flows between systems and functions is to split responsibility among the components, leaving each one responsible for knowing what to do with the information it receives and whom to hand it to after processing.
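Choreography can be illustrated with a toy in-memory event bus: no central coordinator calls the steps in order; each component reacts to one event and publishes the next. The bus, the event names, and the three handlers are hypothetical, and a real system would use a managed queue or event service in place of this class.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple


class EventBus:
    """Toy in-memory bus standing in for a managed event service."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable]] = defaultdict(list)
        self._queue: Deque[Tuple[str, dict]] = deque()
        self.log: List[str] = []

    def subscribe(self, event_type: str, handler: Callable) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        self._queue.append((event_type, payload))

    def run(self) -> None:
        # Deliver events until no component has anything left to publish.
        while self._queue:
            event_type, payload = self._queue.popleft()
            self.log.append(event_type)
            for handler in self._subscribers[event_type]:
                handler(self, payload)


def reserve_stock(bus: EventBus, order: dict) -> None:
    bus.publish("stock_reserved", order)  # knows only its own next event


def charge_payment(bus: EventBus, order: dict) -> None:
    bus.publish("payment_charged", order)


def send_confirmation(bus: EventBus, order: dict) -> None:
    order["confirmed"] = True


def build_bus() -> EventBus:
    bus = EventBus()
    bus.subscribe("order_placed", reserve_stock)
    bus.subscribe("stock_reserved", charge_payment)
    bus.subscribe("payment_charged", send_confirmation)
    return bus
```

Adding a new step means subscribing one more handler to an existing event; no central workflow definition has to change.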

[Summary]

Serverless Computing – Lesson 4 – Part 3

Professor: Fernando Sapata

EP 10. Reference architectures

Content:

“What are the practical applications of everything we've seen so far?”

- Website:

[Summary]

0 notes

Photo

@monad_studio ’s Rhythmicity is a wall installation developed for Art Center South Florida, as part of the exhibition “Unpredictable Patterns of Behavior” in Miami. The project embodies rhythmical patterns of growth found in temporal processes of species native to Florida, such as the behavior of strangler fig trees—these operate by growing aerial roots that attach to the host tree in multiple bundles, exerting pressure and depleting the host over time. . #miami #florida #3dprint #3dprinting #architecturemodel #structure #installation #fabrication #digitalfabrication #fabricate #digitaldesign #design #designer #parametric #grasshopper3d #rhinoceros3d #parametricarchitecture #parametricdesign #parametricism #architecture #architect #architectureporn #mimar #mimarlik #designprototype #architectureoffice (at Miami, Florida)