#text to speech software windows


Text

oh my god i don't speak to my dad anymore cuz hes nutty but i know what he does for a living

and musk is currently pulling a "the software govs use is 50 years old which means there can be no advances"

and that's..... that's what my dad does for a living, he gets paid 500-1k an hour to make software that specifically communicates with old legacy software cause he's a 90s dev who knows the old languages still and it's more efficient to hire a freak who knows how to make something to bridge between the old and new programs than to fully trash the old system

like there's literally consultants that get hired for that specific purpose and as a software guy musk KNOWS this

#personal#im losing a LOT of money and decent work connections cause its less stressful than dealing with the crazy man#who literally called my professors at their personal art studios =_=#but ummm???? um??? hes like a low level linkedin influencer lmfao ._.#for software and THIS specific subject matter#the thing ive been getting raises on at work is making scripts to communicate between adobe software with the spreadsheets our#PLM system at work spits out....to automate a bunch of artwork thru libraries.........????#the way my boss gets me to not leave is by giving me /coolmathgames.com/ as a treat basically#and more money for being able to solve /coolmathgames.com/#i work in corporate and one of our order management systems specifically gets routed thru a windows vista virtual machine#cause they dont feel the need to fix....cuz if its not broke#just make the new things that bridge between the two systems?????#instead of having to transfer over decades of a database it makes 0 sense#idk man im rlly frustrated online cuz one of my dads patents is for a legacy speech to text software#(and the other is for a logistics/shipment thing)#like he wasnt the lead on either project but the speech to text specifically is irritating cuz theres#things ppl call 'AI' and im like....thats a buzzword this is litcherally 90s/00s tech and ive been in the office it was made lol

1 note


Note

Hello!!! I hope you don't mind doing this one,

Can you help me write a traumatized person who's having trouble talking because of past trauma? (They can still interact with people, but only with signs and movements, not voice) and also a little anxious

Tell me if you need more details =)

How to Write a Mute / Non-Speaking Character

-> healthline.com

-> verywellhealth.com

-> descriptionary.wordpress.com

Types of Mutism:

selective mutism: having the ability to speak but feeling unable to.

organic mutism: mutism caused by brain injury, such as with drug use or after a stroke.

cerebellar mutism: mutism caused by the removal of a brain tumor from a part of the skull surrounding the cerebellum, which controls coordination and balance.

aphasia: when people find it difficult to speak because of stroke, brain tumor, or head injury.

What Causes Selective Mutism in Adults?

having another anxiety condition, like separation anxiety or social anxiety

experiencing physical, emotional, or sexual abuse

having a family history of selective mutism or social anxiety

having fewer opportunities for social contact

having an extremely shy personality

having a speech or language disorder, learning disability, or sensory processing disorder

parent-child enmeshment, or lack of clear boundaries in the relationship

traumatic experiences

Traumatic Mutism vs Trauma-Induced Selective Mutism

if you have traumatic mutism, you may be unable to talk in all situations following a trauma.

with trauma-induced selective mutism, you may find it impossible to talk only in certain situations-- for example, in front of the person who hurt you or in a setting that resembles the circumstances of your trauma.

Different Ways Individuals with Mutism May Choose to Communicate:

Nonverbal Communication: they may rely on facial expressions, gestures, eye contact, and body language to convey their thoughts, emotions, and intentions.

Writing or Typing: they may use a pen and paper, digital devices, or communication apps to write messages, notes, or responses.

Sign Language: they can convey meaning, emotions, and engage in complex conversations through hand signs, facial expressions, and body movements.

Augmentative and Alternative Communication (AAC) Devices: these devices provide individuals with a range of tools and technologies to support their communication needs. They can include speech-generating devices, picture boards, apps, or software that allows users to select words, phrases, or symbols to generate spoken or written output.

Communication Boards and Visual Aids: Communication boards or charts with pictures, symbols, or words can assist individuals in conveying their messages.

Assistive Technology: various assistive technologies, such as speech-to-text apps, text-to-speech programs, or eye-tracking devices, can aid individuals with communication.

Tips on Writing a Mute / Non-Speaking Character:

Explore the vast array of nonverbal cues such as facial expressions, body language, gestures, and eye contact. Use descriptions to convey their intentions and reactions.

Utilize internal dialogue. Offer readers a window into their internal thought process, and turn their internal dialogue into a narrative that reveals their inner struggles, triumphs, and complexities so that readers can connect with the character.

Establish a communication system that is unique to your character (Sign language, written notes, telepathy in a fantasy setting, etc.). Having a communication system allows your character to interact with other characters and contribute to the narrative.

Surround them with Understanding Characters who can aid in communication and foster meaningful relationships.

Establish the Barriers/Conflicts They'll Experience. Don't forget to be realistic.

Your character is not defined by their inability to speak. Make sure you do not write stereotypes and cliches. Being mute is only one aspect of their identity rather than their defining trait.

Do your research! Seek out firsthand accounts, experiences, and perspectives. Check out online forums and resources to gain insights into their unique challenges, adaptations, and strengths.

If you like what I do and want to support me, please consider buying me a coffee! I also offer editing services and other writing advice on my Ko-fi! Become a member to receive exclusive content, early access, and prioritized writing prompt requests.

#writing prompts#creative writing#writeblr#how to write#writing tips#writing advice#writing resources#writing help#writing tools#how to write a mute character#how to write a non-speaking character#how to write characters

581 notes


Text

NPT - Windows 7 Operating System

With text-to-speech themes!

Names:

Code

Cody

Seven

Win/dows

Winston

Winnie

Sybil

Keys

Wire/s

Byte

Marvin

Allie

Lia

Elliot

Eddie

Otis

Silver

Ozzy

Pronouns:

32/32s/32self

32/32bits/32bitself

64/64s/64self

64/64bits/64bitself

byte/bytes/bytes

sev/sevens/sevenself

sys/syss/sysself

key/keys/keyself

code/codes/codeself

win/windows/windowself

puter/puters/puterself

Titles:

The Seventh System

The Seventh Operating System

(Prn) of the Programming

(Prn) of the Software

(Prn) of the Code / Coding

The Windows OS

The Windows Operating System

The Seventh Software

Requested by anon!!

#request completed#kinhelp#otherkin#name suggestions#pronoun suggestions#title suggestions#npt pack#npt suggestions#computerkin#techkin#computer kin#tech kin#windows 7

39 notes


Text

The Whole Sort of General Mish Mosh of AI

I’m not typing this.

January this year, I injured myself on a bike and it infringed on a couple of things I needed to do, in particular working on my PhD. Because I had effectively one hand, I was temporarily disabled and it finally put it in my head to consider examining accessibility tools.

One of the accessibility tools I started using was Microsoft’s own speech to text that’s built into the operating system I used, which is Windows Not-The-Current-One-That-Everyone-Complains-About. I’m not actually sure which version I have. It wasn’t good but it was usable, and being usable meant spending a week or so thinking out what I was going to write a phrase at a time and then specifying my punctuation marks period.

I’m making this article — or the draft of it to be wholly honest — without touching my computer at all.

What I am doing right now is playing my voice into Audacity. Then I’m going to use Audacity to export what I say as an MP3, which I will then take to any one of a few dozen sites that offer free transcription of voice to text conversion. After that, I take the text output, check it for mistakes, fill in sentences I missed when coming off the top of my head, like this one, and then put it into WordPress.

A number of these sites are old enough that they can boast that they’ve been doing this for 10 years, 15 years, or served millions of customers. The one that transcribed this audio claims to have been founded in 2006, which suggests the technology in question is at least, you know, five. Seems odd then that the site claims its transcription is ‘powered by AI,’ because it certainly wasn’t back then, right? It’s not just the statements on the page, either, there’s a very deliberate aesthetic presentation that wants to look like the slickly boxless ‘website as application’ design many sites for the so-called AI folk favour.

This is one of those things that comes up whenever I want to talk about generative media and generative tools, because a lot of stuff is right now being lumped together in a Whole Sort of General Mish Mosh of AI (WSOGMMOA). This lump, the WSOGMMOA, means that talking about any of it gets treated as talking about all of it, in whatever way the current speaker wants it to be talked about, even when the two people in a confrontational conversation mean different things.

For people who are advocates of AI, they will talk about how ChatGPT is an everythingamajig. It will summarize your emails and help you write your essays and it will generate you artwork that you want and it will give you the rules for games you can play and it will help you come up with strategies for succeeding at the games you’ve already got all while it generates code for you and diagnoses your medical needs and summarises images and turns photos of pages into transcriptions it will then read aloud to you, and all you have to focus on is having the best ideas. The notion is that all of these things, all of these services, are WSOGMMOA, and therefore, the same thing, and since any of that sounds good, the whole thing has to be good. It’s a conspiracy theory approach, sometimes referred to as the ‘stack of shit’ approach – you can pile up a lot of garbage very high and it can look impressive. Doesn’t stop it being garbage. But mixed in with the garbage, you have things that are useful to people who aren’t just professionally on twitter, and these services are not all the same thing.

They have some common threads amongst them. Many of them are functionally looking at math the same way. Many or even most of them are claiming to use LLMs, or large language models, and I couldn’t explain the specifics of what that means, nor should you trust an explainer from me about them. This is the other end of the WSOGMMOA, where people will talk as if things like image generation on Midjourney and DeepSeek (pieces of software you can run on your computer) consume the same power as the people building OpenAI’s data research centres (which is terrible and being done in terrible ways). This lumping can make the complaints about these tools seem unserious to people with more information and even frivolous to people with less.

Back to the transcription services though. Transcription services are an example of a thing that I think represents a good application of this math, the underlying software that these things are all relying on. For a start, transcription software doesn’t have a lot of use cases outside of exactly this kind of experience: someone who chooses not to or cannot use a keyboard to write with, who wants to use an alternate means, converting speech into written text, which can be for access or archival purposes. You aren’t going to be doing much with that that isn’t exactly just that and we do want this software. We want transcriptions to be really good. We want people who can’t easily write to be able to archive their thoughts as text to play with them. Text is really efficient, and being able to write without your hands makes writing more available to more people. Similarly, there are people who can’t understand spoken speech – for a host of reasons! – and making spoken media more available is also good!

You might want to complain at this point that these services are doing a bad job or aren’t as good as human transcription and that’s probably true, but would you rather have decent subtitles that work in most cases, or only the people who can pay a transcriptionist a living wage having subtitles? Similarly, these things in a lot of places refuse to use no-no words or transcribe ‘bad’ things like pornography and crimes or maybe even swears, and that’s a sign that the tool is being used badly and disrespects the author, and it’s usually because the people deploying the tool don’t care about the use case, they care about being seen deploying the tool.

This is the salami slicer through which bits of the WSOGMMOA are trying to wiggle. These are tools whose applications represent things that we want, for good reasons, that were being worked on independently of the WSOGMMOA and, now that the WSOGMMOA is here, are being lampreyed onto in the name of pulling in a vast bubble of hypothetical investment money in a desperate time of tech industry centralisation.

As an example, phones have long since been using technology to isolate faces. That technology was used for a while to force the focus on a face. Privacy became more of a concern, then many phones were being made with software that could preemptively blur the faces of non-focal humans in a shot. This has since, with generative media, stepped up a next level, where you now have tools that can remove people from the background of photographs so that you can distribute photographs of things you saw or things you did without necessarily sharing the photos of people who didn’t consent to having their photo taken. That is a really interesting tool!

Ideologically, I’m really in favor of the idea that you should be able to opt out of being included on the internet. It’s illegal in France, for example, to take a photo of someone without their permission, which means any group shot of a crowd, hypothetically, someone in that crowd who was not asked for permission, can approach the photographer and demand recompense. I don’t know how well that works, but it shows up in journalism courses at this point.

That’s probably why that software got made – regulations in governments led to the development of the tool and then it got refined to make it appealing to a consumer at the end point so it could be used as a selling point. It wouldn’t surprise me if right now, under the hood, the tech works in some similar way to Midjourney or Dall-E or whatever, but it’s not a solution searching for a problem. I find that really interesting. Is this feature that, again, is running on your phone locally, still part of the concerns of the WSOGMMOA? What about the software being used to detect cancer in patients based on sophisticated scans I couldn’t explain and you wouldn’t understand? How about when a glamour model feeds her own images into the corpus of a Midjourney machine to create more pictures of herself to sell?

Because admit it, you kinda know the big reason as a person who dislikes ‘AI’ stuff that you want to oppose WSOGMMOA. It’s because the heart of it, the big loud centerpiece of it, is the worst people in the goddamn world, and they want to use these good uses of this whole landscape of technology as a figleaf to justify why they should be using ChatGPT to read their emails for them when that’s 80% of their job. It’s because it’s the worst people in the world’s whole personality these past two years, when it was NFTs before that, and it’s a term they can apply to everything to get investors to pay for it. Which is a problem because if you cede to the WSOGMMOA model, there are useful things with meaningful value that that guy gets to claim is the same as his desire to raise another couple of billions of dollars so he can promise you that he will make a god in a box that he definitely, definitely cannot fucking do while presenting himself as the hero opposing Harry Potter and the Protocols of Rationality.

The conversation gets flattened around the basically two poles:

All of these tools, everything that labels itself as AI, is fundamentally an evil, burning polar bears, and

Actually everyone who doesn’t like AI is a butt hurt loser who didn’t invest earlier and buy the dip because, again, these people were NFT dorks only a few years ago.

For all that I like using some of these tools, tools that have helped my students with disability and language barriers, the fact remains that talking about them and advocating for them usefully in public involves being seen as associating with the group of some of the worst fucking dickheads around. The tools drag along with them like a gooey wake bad actors with bad behaviours. Artists don’t want to see their work associated with generative images, and these people gloat about doing it while the artist tells them not to. An artist dies and out of ‘respect’ for the dead they feed his art into a machine to pump out glurgey thoughtless ‘tributes’ out of booru tags meant for collecting porn. Even me, I write nuanced articles about how these tools have some applications and we shouldn’t throw all the bathwater out with the babies, and then I post it on my blog that’s down because some total shitweasel is running a scraper bot that ignores the blog settings telling them to go fucking pound sand.

I should end here, after all, the transcription limit is about eight minutes.

Check it out on PRESS.exe to see it with images and links!

15 notes


Note

Hey-- coming here from your profanity vs vtt post.

How have you found the transition to using voice to text? Has it been frustrating? What have you done to help it understand you better? Are there ways to make it understand specific things you want to say like an odd name, or do you have to swap back to typing? How do you manage swapping between the two?

I have chronic pain in my hands and many people have told me to try vtt bc im a programmer for work and a writer for hobbies and honestly typing too much. I'm curious bc the things I asked about are worries that have held me back from trying it, bc i have a low frustration tolerance when I'm in enough pain to require using it

I... do not know if I am the best person to ask because I am deaf and have a speech impediment that, although minor, is significant enough that I think voice to text is going to be more difficult for me than other people. But I also love giving my opinion on things lol (just bear in mind my experience will skew a little ways off the typical).

I am still very much in the teething stage - getting used to what it can and can't do, and trying really hard to work against my own very low frustration threshold. Everything you've asked about also held me back from it for ages until now, when I had deadlines to meet and no other option. The big thing I have to keep reminding myself is that vtt learns as you use it. I don't know if they all do this, but the built-in Windows one I'm using on my laptop does. So, yes, you can make it more accurate – by using it more often. Also, not all vtt software is made equal: some other vtt programs have vocab lists so you can add in words and train it, some are better at automatically filtering filler phrases like um or uh, some handle background noise better, etc. At some point I'm going to look more into all the alternatives out there but I'm going to get used to it on what I have first.

Coming back to the whole "it gets better the more you use it" thing. At the moment I am making myself use it at least a little bit every day, in short bursts, focusing on repeating and enunciating any phrases that it does not understand. I think I've noticed some improvement over three days! But I really recommend doing this before you are forced to use it out of necessity, which is something I didn't do and I'm now absolutely paying the price for. It's frustrating trying to wrestle with vtt AND deadlines AND pain 🙃

It's pretty easy to swap between vtt and typing. On my phone (how I dictated this answer) I just hit the microphone button. On the laptop a voice to text bar remains on screen so I can toggle it on and off with a click. When using the full voice control feature all I need to do is tell it to enable voice access or disable voice access (then correct or type by pecking with my single pain free finger). Windows vtt also has built in voice commands to delete and select text, insert punctuation, and start new paragraphs. How well these commands work DOES depend on your program though – backspacing doesn't work in Scrivener, for example, but works fine if I dictate a post on Tumblr in browser and the whole thing functions best in Microsoft Word. Of course. Because Microsoft want you to use their products.

So all in all, yeah. It's frustrating. Or at least, it's a serious learning curve. The essay I've been doing for uni using vtt is.. a terrifying mess, but at least vtt now understands me when I say the name Orsino, which is certainly hopeful. And knowing I at least HAVE an alternative to just exacerbating chronic pain + hypermobility has helped with my sharp downward emotional spiral that I always get when my pain is bad. Just wish I'd adjusted to it before I had to learn via trial of fire.

Oh, and top tip from my experience so far: DRINK FLUIDS. Water is probably best but I think as long as you do not do what Charles Dickens did and suck down like five different alcoholic drinks before speaking, you're fine with most anything. Point is, if your mouth gets dry/too full of saliva and/or your throat gets sore, enunciating is harder, so the vtt engine will struggle more and then YOU struggle more. So drink. (I do not think I have ever been so well-hydrated)

9 notes


Text

Object permanence

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in DOYLESTOWN TOMORROW (Mar 1), and in BALTIMORE on SUNDAY (Mar 2). More tour dates here. Mail-order signed copies from LA's Diesel Books.

#20yrsago KGB Guide to London released by MI5 https://web.archive.org/web/20050303022107/https://www.nationalarchives.gov.uk/releases/2005/highlights_march/march1/default.htm

#20yrsago Euro software patents reanimated through corrupt officials 0wned by Microsoft https://yro.slashdot.org/story/05/02/28/2223232/eu-commission-declines-patent-debate-restart

#20yrsago Deluded Sony music exec can’t read his own study https://constitutionalcode.blogspot.com/2005/02/us-market-not-antagonistic-towards-drm.html

#20yrsago Greedy DRM vendors want more in royalties than the total market for digital music https://web.archive.org/web/20050912194259/http://www.usatoday.com/tech/news/computersecurity/2005-02-25-drm-infighting_x.htm?POE=click-refer

#10yrsago Ad-hoc museums of a failing utopia: photos of Soviet shop-windows https://memex.craphound.com/2015/02/28/ad-hoc-museums-of-a-failing-utopia-photos-of-soviet-shop-windows/

#10yrsago Your voice-to-text speech is recorded and sent to strangers https://www.vice.com/en/article/strangers-on-the-internet-are-listening-to-peoples-phone-voice-commands/

#5yrsago How to lie with (coronavirus) maps https://pluralistic.net/2020/02/28/pluralistic-your-daily-link-dose-28-feb-2020/#cartonerd

#5yrsago Let's Encrypt issues its billionth cert https://pluralistic.net/2020/02/28/pluralistic-your-daily-link-dose-28-feb-2020/#letsencrypt

#5yrsago Clearview AI's customer database leaks https://pluralistic.net/2020/02/28/pluralistic-your-daily-link-dose-28-feb-2020/#petard

#1yrago When private equity destroys your hospital https://pluralistic.net/2024/02/28/5000-bats/#charnel-house

9 notes


Text

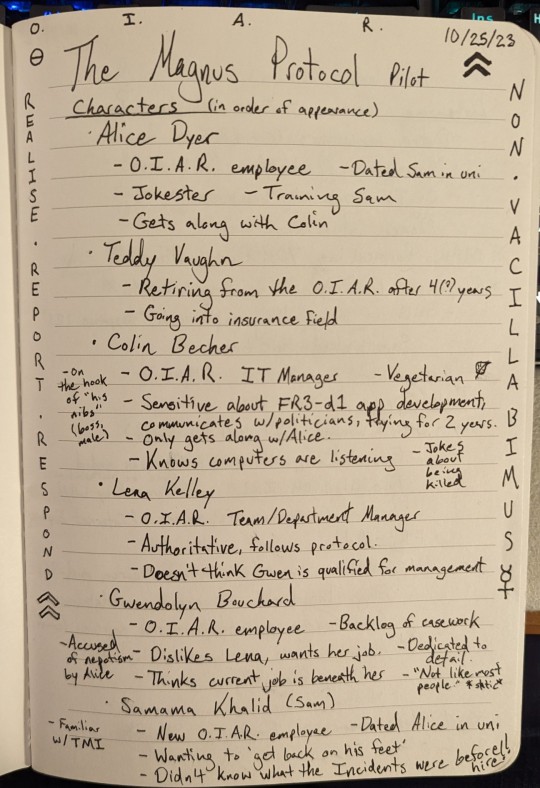

As anticipated, here are my extensive red string notes from the pilot:

God knows how relevant any of this will turn out to be, but I'm nothing if not a collector of trivial information

Very long text beneath the cut:

Page 1

The Magnus Protocol Pilot 10/25/23

Characters (in order of appearance)

Alice Dyer -O.I.A.R. employee -Dated Sam in uni -Jokester -Training Sam -Gets along with Colin

Teddy Vaughn -Retiring from the O.I.A.R. after 4 (?) years -Going into insurance field

Colin Becher -O.I.A.R. IT Manager -Vegetarian 🥬 -On the hook of "his nibs" (boss, male) -Sensitive about FR3-d1 app development, communicates w/politicians, trying for 2 years -Only gets along w/Alice -Knows computers are listening -Jokes about being killed

Lena Kelley -O.I.A.R. Team/Department Manager -Authoritative, follows protocol -Doesn't think Gwen is qualified for management

Gwendolyn Bouchard -O.I.A.R. employee -Backlog of casework -Dislikes Lena, wants her job -Dedicated to detail -Accused of nepotism by Alice -Thinks current job is beneath her -"Not like most people." *static*

Samama Khalid (Sam) -New O.I.A.R. employee -Dated Alice in uni -Wanting to 'get back on his feet' -Familiar with TMI -Didn't know what the Incidents were before hire!!

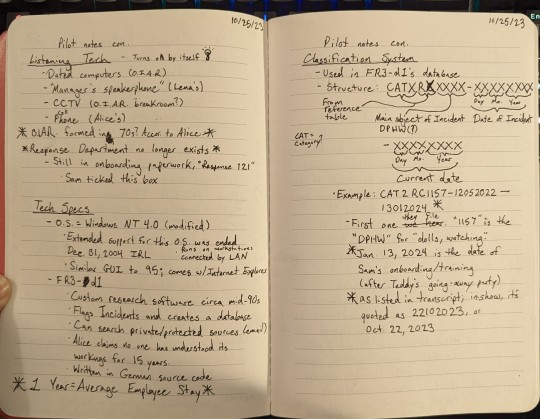

Page 2

Pilot notes con. 10/25/23

Listening Tech - Turns on by itself 💡

Dated computers (O.I.A.R.)

"Manager's speakerphone" (Lena's)

CCTV (O.I.A.R. breakroom?)

Cell phone (Alice's)

*O.I.A.R. formed in 70s? Accor. to Alice*

*Response Department no longer exists*

Still in onboarding paperwork, "Response 121"

Sam ticked this box

Tech Specs

O.S. = Windows NT 4.0 (modified) -Extended support for this O.S. was ended Dec. 31, 2004 IRL -Runs on workstations connected by LAN -Similar GUI to 95; comes w/Internet Explorer

FR3-d1 -Custom research software circa mid-90s -Flags Incidents and creates a database -Can search private/protected sources (email) -Alice claims no one has understood its workings for 15 years -Written in German source code

*1 Year = Average Employee Stay*

Page 3

Pilot notes con. 10/25/23

Classification System

Used in FR3-d1's database

Structure: CATXRXXXXX-XXXXXXXX-XXXXXXXX

CATXRX -> From reference table (CAT = Category?)

First four digits -> Main subject of Incident DPHW (?)

Next eight digits -> Date of Incident

Last eight digits -> Current date

Example: CAT2RC1157-12052022-13012024* -First one we hear they file. "1157" is the "DPHW" for "dolls, watching."

*Jan. 13, 2024 is the date of Sam's onboarding/training (after Teddy's going-away party)

*as listed in transcript; in-show, it's quoted as 22102023, or Oct. 22, 2023
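A minimal sketch of how a case number in the structure above could be pulled apart. It assumes the two eight-digit groups are DDMMYYYY dates (which fits the Jan. 13, 2024 note) and treats everything before the first hyphen as one opaque reference code, since the split between the CAT/R part and the DPHW digits is still a guess. The names `CaseNumber` and `parse_case_number` are made up for illustration and are not from the show.

```python
import re
from datetime import date, datetime
from typing import NamedTuple


class CaseNumber(NamedTuple):
    """Hypothetical container for the three hyphen-separated parts."""
    reference: str        # e.g. "CAT2RC1157", kept as an opaque code
    incident_date: date   # middle group, assumed DDMMYYYY
    filing_date: date     # last group, assumed DDMMYYYY


# Reference code starting with "CAT", then two groups of exactly eight digits.
CASE_RE = re.compile(r"^(CAT[0-9A-Z]+)-(\d{8})-(\d{8})$")


def parse_case_number(text: str) -> CaseNumber:
    """Split a case number into its reference code and two dates."""
    match = CASE_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a recognisable case number: {text!r}")
    reference, incident, filed = match.groups()
    return CaseNumber(
        reference=reference,
        # strptime raises ValueError if the digits are not a real date.
        incident_date=datetime.strptime(incident, "%d%m%Y").date(),
        filing_date=datetime.strptime(filed, "%d%m%Y").date(),
    )


if __name__ == "__main__":
    case = parse_case_number("CAT2RC1157-12052022-13012024")
    print(case.reference, case.incident_date, case.filing_date)
```

Keeping the reference code as a single string is deliberate: only the date groups are well enough understood to parse with any confidence.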

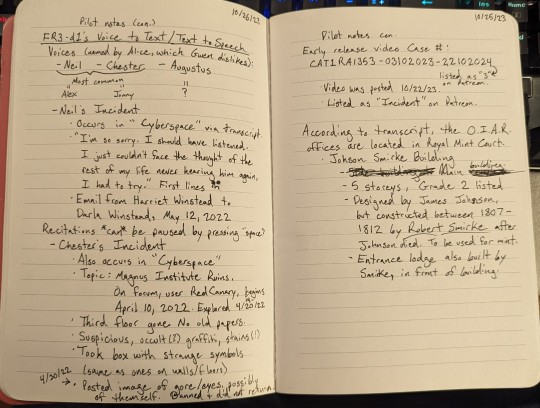

Page 4

Pilot notes (con.) 10/25/23

FR3-d1's Voice to Text/Text to Speech

Voices (named by Alice, which Gwen dislikes):

Neil = Alex

Chester = Jonny

(those two most common)

Augustus = ?

Neil's Incident

Occurs in "Cyberspace" via the transcript

"I'm so sorry. I should have listened. I just couldn't face the thought of the rest of my life never hearing him again, I had to try." First lines 😢

Email from Harriet Winstead to Darla Winstead, May 12, 2022

Recitations *can* be paused by pressing "space"!

Chester's Incident

Also occurs in "Cyberspace"

Topic: Magnus Institute Ruins.

On forum, user RedCanary, begins April 10, 2022. Explored 4/19-20/22.

Third floor gone. No old papers.

Suspicious, occult (?) graffiti, stains (!)

Took box with strange symbols (same as ones on walls/floors)

4/30/22 Posted image of gore/eyes, possibly of themself. Banned + did not return.

Page 5

Pilot notes con. 10/25/23

Early release video Case #: CAT1RA1353-03102023-22102024 (listed as 202"3" on Patreon)

Video was posted 10/22/23.

Listed as "Incident" on Patreon.

According to transcript, the O.I.A.R. offices are located in Royal Mint Court.

Johson (sic) Smirke Building

Main building.

5 storeys, Grade 2 listed.

Designed by James Johnson, but constructed between 1807-1812 by Robert Smirke after Johnson died. To be used for mint.

Entrance lodge also built by Smirke, in front of building.

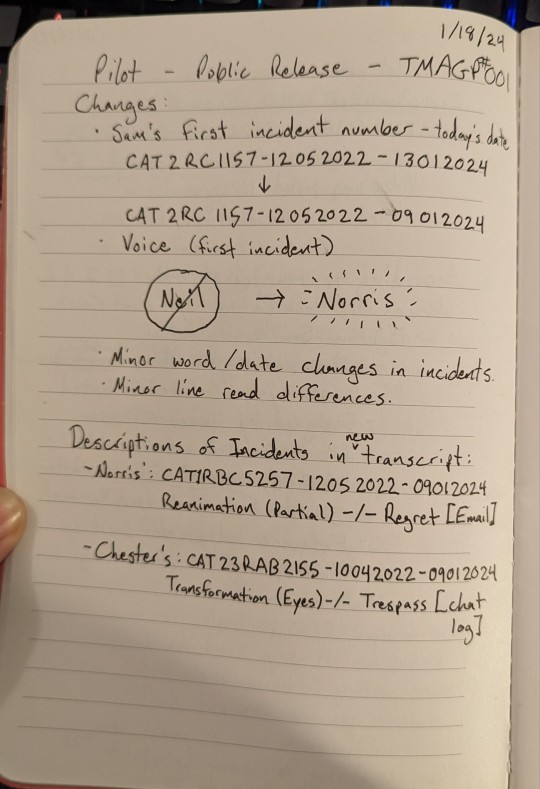

Page 6

Pilot - Public Release -TMAGP#001

Changes:

Sam's first incident number - today's date

CAT2RC1157-12052022-13012024 -> CAT2RC1157-12052022-09012024

Voice (first incident)

Neil -> *Norris*

Minor word/date changes in incidents.

Minor line read differences.

Descriptions of Incidents in new transcript:

Norris': CAT1RBC5257-12052022-09012024 Reanimation (Partial) -/- Regret [Email]

Chester's: CAT23RAB2155-10042022-09012024 Transformation (Eyes) -/- Trespass [chat log]

#will add alt text shortly#also keep in mind a lot of this was written back in October - see the last page for recent changes#tmagp#the magnus protocol#tmagp spoilers#im super stoked to have figured out the royal mint court thing

20 notes


Text

It will definitely take some heavy editing and formatting afterwards, but if you have not yet tried Voice to Text dictation for your writing, I highly encourage everyone who can to try it out! It is only 11 o'clock and I've already done over 3k words in my story, and I have to edit the whole thing anyways when I edit my first draft, so the mark where voice to text starts and ends is pretty obvious from the lack of punctuation and inclusion of weird periods and commas in sentences lol.

I've just been typing with Windows Speech Recognition, because even though they have ended support for it I don't particularly plan to switch, and Windows Speech Recognition specifically allows you to turn off the option for them to use your voice to improve their software.

If you're struggling with typing out your work, try and see if Voice to Text helps you! You might have to edit it for clarity afterwards, but as long as you are patient and enunciate, it is pretty accurate so far.

6 notes


Text

I'm keeping Windows 7 on my desktop pc for Sims 2 but it's becoming more and more obsolete 😭 most of the games I want to try on Steam require w10, the text-to-speech software is terrible (you can't install additional voices... I have the default English but I'd need an Italian one too for some books) + more and more things are becoming incompatible. I need to update both its hardware and software UGH

#but I dislike w10 :(#and I need both some extra RAM and a bigger hard disk or whatever they're called these days#and installing it all would require so. much. work. and money. and time that I don't have#this post is just me complaining. sorry#xwp nonsims#xwp talks#nonsims#I'll ask for help to my crush maybe. he's an electronic engineer in the making#(funny bc I'm a computer engineer in the making LMFAO I should be the helpful one)

7 notes


Text

Why Modern Businesses Can’t Live Without Call Center Software

Every day, millions of customers call businesses seeking help, answers, or reassurance. Yet behind every seamless conversation is an often-overlooked hero: call center software. If you’ve ever dialed into a customer support line and experienced quick routing, helpful agents, or a perfect callback, chances are you’ve benefited from this powerful technology.

But “call center software” can feel like a dry, technical term—something relegated to IT departments and CIOs. The reality? It’s a living, breathing ecosystem that shapes how we connect, empathize, and solve problems in a digital age. Let’s dive into a human-centric view of why modern businesses need robust call center software, how it has evolved, and where it’s heading.

Beyond Dialing: The Human Connection at the Core

Imagine you’re a new parent anxious about your baby’s first cold. You pick up the phone, dial the pediatric hotline, and within seconds, you’re speaking with a compassionate voice who understands. That agent didn’t just press random buttons. They likely relied on call center software that instantly pulled up your account, past inquiries, and the hospital’s triage guidelines—allowing them to answer your concerns with speed and empathy.

That’s the beauty of modern systems: they enable real-time context. When agents see historical data—past support tickets, billing details, or even a customer’s emotional sentiment analysis—they can tailor their response. This isn’t just efficiency. It’s human connection, fueled by technology.

How Did We Get Here? A Brief History of Call Technology

Quick refresher for context: once upon a time, call centers were literally rows of phones, sticky notes, and manual logs. Agents shuffled paper forms and scribbled customer details on yellow pads. Metrics were tallied by hand. The process was slow, error-prone, and impersonal. Today, that concept seems archaic.

The first generation of call center software emerged in the 1980s with rudimentary Automatic Call Distributors (ACD). These systems would simply route incoming calls to the next available agent. In the 1990s, computerized Customer Relationship Management (CRM) tools allowed agents to see customers’ past interactions. Fast-forward to the 2010s—and we have cloud-based, AI-augmented platforms that handle omnichannel support (voice, email, chat, SMS, social media) all in one dashboard. It’s been an evolution of integration, automation, and intelligence.
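The "route to the next available agent" behaviour of an early ACD can be sketched in a few lines. This is only an illustration of the idea, not any vendor's implementation; the class name `SimpleACD` and its methods are made up for this example, and it assumes the simplest possible policy (longest-idle agent first, callers held in arrival order).

```python
from collections import deque


class SimpleACD:
    """Toy Automatic Call Distributor (hypothetical, for illustration only)."""

    def __init__(self, agents):
        self.idle_agents = deque(agents)  # agents waiting for a call, longest-idle first
        self.waiting_calls = deque()      # callers on hold, in arrival order
        self.active = {}                  # agent -> call currently being handled

    def incoming_call(self, call_id):
        """Route the call to an idle agent, or put it on hold if nobody is free."""
        if self.idle_agents:
            agent = self.idle_agents.popleft()
            self.active[agent] = call_id
            return agent
        self.waiting_calls.append(call_id)
        return None

    def call_finished(self, agent):
        """Free the agent and hand over the longest-waiting call, if any."""
        self.active.pop(agent, None)
        if self.waiting_calls:
            call_id = self.waiting_calls.popleft()
            self.active[agent] = call_id
            return call_id
        self.idle_agents.append(agent)
        return None
```

Modern platforms layer skills, priorities, and channels on top of this, but the core queue-and-dispatch loop is still recognisable.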

What Makes Modern Call Center Software Different?

Let’s break down the key pillars that distinguish today’s platforms:

Omnichannel Integration: Customers expect to reach out on their terms, whether that’s a phone call, text message, social post, or video chat. Best-in-class call center software consolidates these channels into a single interface. Agents don’t need ten different windows open; they simply click through a unified view of each customer’s journey.

Real-Time Analytics and Reporting: In the past, quality assurance meant managers randomly listening to recorded calls. Now, intelligent systems analyze every interaction for sentiment trends, call resolution times, and agent performance. Dashboards update in real time, enabling supervisors to spot bottlenecks, such as high call abandonment in certain hours, and make immediate adjustments.

AI-Powered Assistance: Speech recognition, natural language processing, and automated chatbots handle routine queries, freeing agents to tackle the complex, emotionally nuanced issues. For instance, if you’re calling your bank to dispute a fraudulent charge, a chatbot can verify your identity and gather preliminary facts, then escalate you to a live agent who already knows the details. That synergy between human and machine is the hallmark of contemporary call center solutions.

Cloud Flexibility: Gone are the days of massive on-premise hardware racks. Cloud-based call center software is scalable, cost-effective, and accessible from anywhere with an internet connection. In a post-pandemic world, remote agents can log in from home, coffee shops, or co-working spaces, without missing a beat.

Real-World Impact: Stories Behind the Screens

Take a regional healthcare provider that implemented a modern call center software platform last year. Before the upgrade, patients faced 20-minute hold times and repeated paperwork—often needing to re-explain symptoms to multiple agents. The new system changed the game. Patient data was instantly available, calls were prioritized by urgency, and clinicians could schedule follow-ups directly within the platform. As a result, patient satisfaction scores rose by 35%, and hospital readmission rates dropped by 12%. That’s the kind of direct, measurable impact today’s call center solutions can deliver.

Or consider a fast-growing e-commerce brand. Peak season used to be a nightmare—call volumes spiked, hold times soared, and carts were abandoned. After migrating to a cloud-based call center software, they introduced automated callbacks, AI-driven self-service menus, and predictive staffing tools. Holiday stress? Reduced by 60%. Customer retention? Climbing steadily.

Choosing the Right Call Center Software Partner

With so many options available, how do you pick the ideal solution? Here are some guiding questions:

Scalability: Will the platform grow with your business? Can you add new agents, languages, or regions without ripping and replacing?

Integration: Does it seamlessly connect with your existing CRM, ticketing, and knowledge-base tools? Or will you spend months merging data silos?

Omnichannel Support: Are voice, email, chat, and social channels natively supported—without costly add-ons?

Customization: Can you tailor IVR scripts, agent scripts, and automated workflows to reflect your unique brand voice?

Security and Compliance: Does it meet industry standards—HIPAA in healthcare, PCI DSS in finance, GDPR in Europe?

Remember, selecting a vendor isn’t just a software purchase. It’s a partnership. The right call center software provider becomes an extension of your team—offering strategic guidance, training, and optimization best practices.

The Human Element: Agents Are at the Heart

In all the talk about technology, it’s easy to forget one simple truth: customers still crave genuine human interaction. A well-designed call center software platform doesn’t replace agents; it empowers them.

When agents have a single view of customer history, real-time guidance on next best actions, and AI-suggested knowledge snippets, they aren’t scrambling for answers. They can focus on listening, empathizing, and problem-solving. Imagine being on the other end of the line. Hearing a calm, informed voice who already understands your issue—that’s the difference between frustration and loyalty.

In fact, many top-tier platforms offer built-in coaching modules. Supervisors can whisper best-practice prompts to new agents during live calls, ensuring consistent service quality and faster onboarding. That level of support doesn’t just boost customer satisfaction—it builds agent confidence and reduces burnout.

The Future: AI, Automation, and Beyond

What’s next for call center software? Brace yourself—hybrid human-AI teams are just the beginning.

Emotion AI: Systems that gauge caller mood through tone analysis. If a customer sounds frustrated, the call is escalated to a specialized “tact team” trained in de-escalation.

Predictive Analytics: Not only analyzing historical call data but forecasting spikes in inquiries—for example, identifying a potential product defect trend before it goes viral on social media.

Voice Biometrics: Secure, frictionless authentication that identifies callers by voiceprint, drastically reducing “forgot my password” hang-ups.

Augmented Reality (AR) Integration: Remote troubleshooting where an agent guides customers through visual overlays on their smartphone camera—imagine replacing a filter in a home appliance while the agent sees exactly what you see.

These innovations won’t replace human agents—they’ll make them invaluable by offloading mundane work and augmenting their skills. That’s the promise of the next wave of call center software: true human-AI collaboration.

Final Thoughts: Embrace the Evolution

The journey from manual switchboards to AI-powered, cloud-based omnichannel hubs has been nothing short of extraordinary. And while the phrase “call center software” might sound utilitarian, in practice, it’s the engine of empathy, efficiency, and excellence in customer experience.

Whether you’re a small business trying to stand out, a healthcare provider aiming to reduce patient anxiety, or an enterprise looking to optimize global support operations, investing in the right call center software isn’t optional—it’s a strategic imperative.

So next time you find yourself on hold—or, better yet, speaking with a knowledgeable agent—remember there’s a symphony of technology behind that moment, allowing genuine human connection to shine through. Because at the end of the day, it’s not just about routing calls; it’s about building trust.

0 notes

Text

How are Government Initiatives and Funding Shaping the Screen Readers Software Market Outlook for 2032?

Screen Readers Software Market was valued at USD 96.78 billion in 2023 and is expected to reach USD 405.62 billion by 2032, growing at a CAGR of 17.31% from 2024-2032.
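As a quick sanity check on those figures, the implied compound annual growth rate can be recomputed from the start and end values. The sketch below assumes nine compounding periods (2023 as the base year through 2032), which is an assumption about how the report counts years; the function name `cagr` is just for this example.

```python
def cagr(start_value, end_value, periods):
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    return (end_value / start_value) ** (1 / periods) - 1


# Figures quoted in the report (USD billion); period count is an assumption.
implied = cagr(96.78, 405.62, 2032 - 2023)
print(f"Implied CAGR: {implied:.2%}")

# Going the other way: compounding the 2023 value at the stated 17.31% rate.
projected = 96.78 * (1 + 0.1731) ** 9
print(f"Projected 2032 value at 17.31%: {projected:.2f}")
```

Under that assumption the implied rate lands close to the stated 17.31%, so the headline numbers are at least internally consistent.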

The Screen Readers Software Market is experiencing significant growth, driven by an increasing global focus on digital accessibility and inclusivity. As technology permeates every aspect of daily life, the demand for software solutions that enable visually impaired individuals to access digital content and interfaces is expanding rapidly. This market encompasses a range of applications designed to convert on-screen text and graphical information into spoken output or braille, thereby bridging the accessibility gap for a large user base.

The Screen Readers Software Market is poised for continued expansion, fueled by regulatory mandates, corporate social responsibility initiatives, and the ongoing development of more sophisticated and user-friendly screen reader technologies. The integration of artificial intelligence and machine learning is enhancing the accuracy and naturalness of synthesized speech, further improving the user experience. This dynamic market is crucial for fostering digital equality and empowering individuals with visual impairments.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6710

Key Market Players:

Freedom Scientific (JAWS, ZoomText Fusion)

NV Access (NVDA, NVDA Remote)

Dolphin Computer Access Ltd. (SuperNova, Dolphin ScreenReader)

Apple Inc. (VoiceOver, Speak Screen)

Microsoft Corporation (Narrator, Windows Speech Recognition)

Kurzweil Education (Kurzweil 1000, Kurzweil 3000)

Serotek Corporation (System Access, Accessible Event)

Texthelp Ltd. (Read&Write, Snap&Read)

Claro Software Ltd. (ClaroRead, ClaroSpeak)

Baum Retec AG (VisioBraille, COBRA)

Cambium Learning Group (Learning Ally, Kurzweil Education)

TPGi – A Vispero Company (JAWS Inspect, ARC Toolkit)

Sonocent Ltd. (Audio Notetaker, Glean)

Code Factory (Mobile Speak, Eloquence TTS)

HumanWare (Victor Reader, Brailliant)

Market Summary

The screen readers software market is characterized by a strong emphasis on user-centric design and compatibility across various operating systems and applications. The market's expansion is intrinsically linked to the proliferation of digital content, including websites, documents, and mobile applications, all of which require accessible interfaces for visually impaired users. Growth is also being propelled by rising awareness about accessibility needs in educational institutions and workplaces.

Market Analysis

The market's growth is primarily driven by:

Increasing Digitalization: The pervasive nature of digital platforms necessitates robust accessibility tools.

Regulatory Compliance: Governments and organizations worldwide are implementing stricter accessibility standards (e.g., WCAG, ADA).

Technological Advancements: Continuous innovation in speech synthesis, AI, and user interface design enhances product efficacy.

Growing Awareness: Increased understanding of the challenges faced by visually impaired individuals drives demand for inclusive solutions.

Market Trends

Integration with AI and Machine Learning for more natural and intelligent speech output.

Development of cloud-based screen reader solutions for greater accessibility and flexibility.

Emphasis on cross-platform compatibility and mobile accessibility.

Rising demand for screen readers with multi-language support.

Forecast Outlook

The screen readers software market is projected to witness substantial growth over the next decade. This upward trajectory will be supported by ongoing technological advancements, expanding digital ecosystems, and a persistent global push for inclusive design. The increasing adoption of accessible technologies in emerging economies will also contribute significantly to market expansion, making screen readers an indispensable tool for digital inclusion.

Access Complete Report: https://www.snsinsider.com/reports/screen-readers-software-market-6710

Conclusion

Empowerment through accessibility – the Screen Readers Software Market is not just about technology, it's about unlocking the digital world for everyone. Invest in solutions that champion inclusivity and expand your reach to a vital and growing user base.

Related Reports:

U.S. Public Safety and Security Market Projected Growth and Key Trends

U.S. E-reader Market Report Digital Content and E-Ink Advancements Drive Growth

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#Screen Readers Software Market#Screen Readers Software Market Scope#Screen Readers Software Market Growth

0 notes

Text

Live API For The Development Of Real-Time Interactions

The Live API enables real-time interaction. Developers can use it to build apps and intelligent agents that process text, video, and audio streams with minimal latency. That speed is what makes truly engaging experiences possible, including real-time monitoring, educational platforms, and customer support.

Google also announced the preview launch of the Live API for Gemini models, allowing developers to build scalable and dependable real-time apps. New features can be tested in Vertex AI and Google AI Studio using the Gemini API.

Updates to Live API

Since the beta launch in December, the team has listened to developer feedback and added functionality to prepare the Live API for production. Details are in the Live API documentation:

More reliable session control

Context compression: Enables longer sessions and interactions. Configure context window compression with a sliding window mechanism to manage context length automatically and avoid sessions being terminated at the context limit.

Session resumption: Keep sessions alive across brief network disconnects. Session handles (session_resumption) let you reconnect and continue where you left off, and server-side session state is stored for up to 24 hours.

Graceful disconnect: Receive a GoAway server message when a connection is about to end so you can handle it gracefully.

Adjustable turn coverage: Choose whether the Live API processes audio and video input continuously or only captures it while the end-user is speaking.

Configurable media resolution: Control input media resolution to optimise quality or token use.
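To make the sliding-window idea behind context compression concrete, here is a minimal plain-Python sketch. It is not the Live API's implementation and does not call the API at all; the class `SlidingWindowContext` and the `trigger_tokens` / `target_tokens` parameters are hypothetical names chosen for illustration. The assumption is simply that once the running token total crosses a trigger threshold, the oldest turns are dropped until the total is back under a target.

```python
from collections import deque


class SlidingWindowContext:
    """Illustrative sliding-window context buffer (hypothetical, not the Live API)."""

    def __init__(self, trigger_tokens, target_tokens):
        if target_tokens > trigger_tokens:
            raise ValueError("target_tokens must not exceed trigger_tokens")
        self.trigger_tokens = trigger_tokens  # compress once the total exceeds this
        self.target_tokens = target_tokens    # drop old turns until at or below this
        self.turns = deque()                  # (text, token_count), oldest first
        self.total_tokens = 0

    def add_turn(self, text, token_count):
        """Append a turn and compress if the window has grown too large."""
        self.turns.append((text, token_count))
        self.total_tokens += token_count
        if self.total_tokens > self.trigger_tokens:
            self._compress()

    def _compress(self):
        # Discard the oldest turns first, always keeping the most recent one.
        while self.total_tokens > self.target_tokens and len(self.turns) > 1:
            _, dropped = self.turns.popleft()
            self.total_tokens -= dropped
```

The trade-off is the usual one for sliding windows: the session can run indefinitely, but anything said before the window starts is no longer visible to the model.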

Improved interaction dynamics control

Configurable voice activity detection (VAD): Specify sensitivity levels, or disable automatic VAD and control turns manually using new client events (activityStart, activityEnd).

Configurable interruption handling: Choose whether user input interrupts the model's response.

Flexible session settings: Change system instructions and other configuration options anytime throughout the session.

Enhanced output and features

More voices and languages: Choose from 30 additional languages and two new voices for audio output. SpeechConfig now supports output language customisation.

Text streaming: Delivers text replies incrementally, so users see them sooner.

Token usage reporting: Usage information in server messages breaks down token counts by modality and by prompt/response phase.

Real-world implementations of Live API

The Live API team is spotlighting developers who are using it in their apps to help you start your next project:

Daily.co

The Pipecat open-source SDKs for Web, Android, iOS, and C++ support the Live API.

The Pipecat team at Daily used the Live API to create Word Wrangler, a voice-based word guessing game. Test your description skills in this AI-powered word game, or build one for yourself!

LiveKit

LiveKit Agents supports the Live API. This voice AI agent framework provides an open-source platform for building server-side agentic applications.

Bubba.ai

Hey Bubba is a voice-first, agentic AI application for truckers. The Live API allows seamless, multilingual speech communication for hands-free driving. Some key features are:

Finding loads and informing the driver.

Calling shippers and brokers.

Negotiating freight prices using market data.

Rate confirmations and load scheduling.

Finding and booking truck parking and calling hotels to confirm availability.

Setting up receiver-shipper meetings.

The Live API powers both Bubba's phone conversations for booking and negotiation and its interaction with the driver (using function calling and context caching for future pickups). This makes Hey Bubba a full AI tool for the US's largest and most diverse job sector.

#technology#technews#govindhtech#news#technologynews#Live API#Voice activity detection#Gemini Live API#Live Kit#API live

0 notes

Text

Creating a Demo Video Using DemoDazzle: A Step-by-Step Guide

In today’s digital world, video content plays a vital role in marketing, customer education, and product demonstrations. Demo videos are an excellent way to showcase a product’s features, benefits, and usability in a compelling manner. DemoDazzle is a leading demo creation tool that simplifies this process, making it easy for businesses to create high-quality, engaging demo videos without technical expertise.

In this step-by-step guide, we will walk you through the process of creating a demo video using DemoDazzle, ensuring that your audience gets the most out of your product presentation.

For further details, check out the in-depth guide here: Creating a Demo Video Using DemoDazzle.

Why Choose DemoDazzle for Demo Video Creation?

Before diving into the step-by-step process, let’s look at why DemoDazzle is a top choice for creating demo videos:

User-friendly interface – No need for advanced video editing skills.

Customization options – Add branding, voiceovers, and interactive elements.

Screen recording capabilities – Capture real-time product usage.

Cloud-based storage – Access and edit videos from anywhere.

Easy sharing – Seamless integration with websites, social media, and email campaigns.

Step-by-Step Guide to Creating a Demo Video Using DemoDazzle

Step 1: Sign Up and Set Up Your Account

Visit DemoDazzle and create an account. Once registered, log in to access the dashboard. If you already have an account, simply sign in and proceed to the next step.

Step 2: Choose a Demo Video Type

DemoDazzle offers various demo video styles, including:

Product walkthroughs – Show how to use a software or application.

Feature highlights – Focus on specific functionalities.

Customer onboarding videos – Guide new users through the setup process.

Marketing demos – Showcase product benefits to attract potential customers.

Select the demo type that aligns with your objective.

Step 3: Record Your Screen

DemoDazzle provides an intuitive screen recording feature to capture product interactions in real time. Follow these steps:

Click on the Screen Recording option.

Select the window or application you want to record.

Start recording while demonstrating the product features.

Use the pause and resume functions to maintain control over the recording process.

Step 4: Add Voiceover and Annotations

Enhance your demo video by including a professional voiceover. You can either:

Record your voice directly within DemoDazzle.

Upload a pre-recorded audio file.

Use the built-in text-to-speech feature if you prefer an AI-generated voice.

Additionally, add on-screen text, highlights, and arrows to guide viewers through key points.

Step 5: Customize Your Video

To maintain brand consistency, utilize DemoDazzle’s customization features:

Add your company logo and color scheme.

Include intro and outro segments.

Implement interactive elements, such as clickable buttons or forms, for viewer engagement.

Step 6: Edit and Enhance

Use the editing tools to trim unnecessary parts, adjust the timing of annotations, and refine transitions. DemoDazzle’s intuitive editing suite makes it simple to polish your video for a professional finish.

Step 7: Preview and Finalize

Before publishing, preview your demo video to ensure it meets your expectations. Look for any errors, awkward transitions, or missing elements.

Step 8: Publish and Share

Once finalized, choose how you want to distribute your demo video:

Embed on your website – Engage visitors with an informative product demo.

Share on social media – Reach a broader audience.

Email to prospects or clients – Improve lead nurturing.

Upload to YouTube or Vimeo – Expand brand awareness.

DemoDazzle offers direct integration with multiple platforms for seamless sharing.

Conclusion

Creating a demo video with DemoDazzle is a straightforward process that enables businesses to communicate their product’s value effectively. Whether for sales, onboarding, or marketing, DemoDazzle simplifies the video creation process, ensuring high-quality results with minimal effort.

By following this step-by-step guide, you can create an engaging and impactful demo video that resonates with your audience. Ready to get started? Visit DemoDazzle today and create your first demo video with ease!

0 notes

Text

I’m curious what speech-to-text program OP uses, because many exist where this is an option and easy to turn off. (It makes sense to be optionally available so the software can avoid mishearing words as swears if you don’t want it to.) I hadn’t actually heard of one where that was built in and not easy to turn off but I also haven’t looked much.

Edit: Found in the notes they use what’s built into Windows 11. That one either very recently got a way to turn it off, or will soon: https://www.msn.com/en-us/news/technology/windows-11-s-voice-typing-will-now-let-you-speak-your-mind-without-censorship/ar-AA1DEBOj

Holy **** oh right okay. So I was about to make a post about how using speech to text has already been a game changer for me but as you can see by the line of asterix at the start of this post the bloody thing auto censors swear words. (Yet bloody got through, ig Because it is a description and also British slang.). Hint: the word I was trying to say there starts with F and ends with K.

Oh and guess what else you can't say you can't say? **** [Nipples]. had to type that myself. penis is ok but **** [clitoris] isn't, and all my attempts to say "clit" were Misunderstood, which may just be my speech but at this point I am not willing to give the benefit of the doubt. Vagina is OK too but every time I say it there is a moment when an * shows up on screen first before the full word does. this doesn't happen when I say the word penis.

It is completely heinous. Anybody who needs speech to text is immediately forced to comply with the rules set out by people in a position of power and then enforced by a machine — a machine that is a very powerful accessibility tool. Imagine trying to dictate a letter to a doctor or fill in an E consult with speech to text, only to have words of your anatomy censored as if they are taboo. there is already far too much stigma around genital physical health — and note that I could say genital but can't say **** [clitoris] — for it to be okay for these words to be censored.

And even if somebody just wants to swear In a message to their friends or write smut/**** [pornography], they should be able to. There is no justification for this feature. No reason for it to be default.

I'm trying to find a way around this. There is a settings icon on the little speech to text bar that comes up, but this only gives me options For the speech typing launcher, auto punctuation, and to set the default microphone. it's making me extremely angry

46K notes

Text

Learn C# in 24 Hours: Fast-Track Your Programming Journey

Your ultimate C# book to master C sharp programming in just one day! Whether you're a beginner or an experienced developer, this comprehensive guide simplifies learning with a step-by-step approach to learn C# from the basics to advanced concepts. If you’re eager to build powerful applications using C sharp, this book is your fast track to success.

Why Learn C#?

C# is a versatile, modern programming language used for developing desktop applications, web services, games, and more. Its intuitive syntax, object-oriented capabilities, and vast framework support make it a must-learn for any developer. With Learn C# in 24 Hours, you’ll gain the practical skills needed to build scalable and efficient software applications.

What’s Inside?

This C sharp for dummies guide is structured into 24 hands-on lessons designed to help you master C# step-by-step:

Hours 1-2: Introduction to C#, setting up your environment, and writing your first program.

Hours 3-4: Understanding variables, data types, and control flow (if/else, switch, loops).

Hours 5-8: Mastering functions, object-oriented programming (OOP), and properties.

Hours 9-12: Working with collections, exception handling, and delegates.

Hours 13-16: LINQ queries, file handling, and asynchronous programming.

Hours 17-20: Debugging, testing, and creating Windows Forms apps.

Hours 21-24: Memory management, consuming APIs, and building your first full C# project.

Who Should Read This Book?

This C# programming book is perfect for:

Beginners looking for a step-by-step guide to learn C sharp easily.

JavaScript, Python, or Java developers transitioning to C# development.

Developers looking to improve their knowledge of C# for building desktop, web, or game applications.

What You’ll Learn:

Setting up your C# development environment and writing your first program.

Using control flow statements, functions, and OOP principles.

Creating robust applications with classes, interfaces, and collections.

Handling exceptions and implementing event-driven programming.

Performing CRUD operations with files and REST APIs.

Debugging, testing, and deploying C# projects confidently.

With clear explanations, practical examples, and hands-on exercises, Learn C# in 24 Hours: Fast-Track Your Programming Journey makes mastering C sharp fast, easy, and effective. Whether you’re launching your coding career or enhancing your software development skills, this book will help you unlock the full potential of C# programming.

Get started today and turn your programming goals into reality!

ASIN: B0DSC72FH7

Language: English

File size: 1.7 MB

Text-to-Speech: Enabled

Screen Reader: Supported

Enhanced typesetting: Enabled

X-Ray: Not Enabled

Word Wise: Not Enabled

Print length: 125 pages

0 notes

Text

Personal Contribution Update

-Yu Jie, Huang

After we were assigned different tasks, Yash and I discussed the script for the 2D paper cutout universe. Since we all want the theme to be delightful, this universe also includes meme elements. Then I started to draw what the visuals needed.

I am responsible for 2D artwork and the photobash for the spaceship in the early stages of production.

Since the spaceship is viewed from the inside looking out, it needs to have a realistic, 3D-like texture. To find suitable references, I searched NASA’s image library, as their official photos are usually free to use. I used the keyword “window” and successfully found images that could be used for photobashing. I imported the images into my drawing software, removed the unnecessary background, and used the liquify tool to adjust and straighten the frame. I also painted missing parts, such as pipes and structural details.

During testing, my teammates noticed that the handles on the window frame looked too large when projected into the dome, which broke the sense of immersion. To fix this, I removed the handles and focused on keeping the metallic texture of the main frame.

I also created two paper cutout universe planets/stars:

Hourglass Planet – I found the idea of time flowing like sand interesting and thought it would look great as an animation. The design has two layers: the front layer is an hourglass (with different shapes of falling sand to show movement), and the background layer is a soft, flowing planet to represent the passage of time.

Punk Planet – This was inspired by Spider-Punk from Spider-Man: Across the Spider-Verse. His bold, sharp lines and cutout animation style were key influences. To match this style, I used textured brushes with sharp edges and highly saturated colors to create a punk-like energy. The outer ring of the planet has a radio wave effect, adding a dynamic visual element. Another group member later animated these planets, and the final effect looks great!

Stars: Similar to the art styles of the two planets, I created two kinds of stars to use in the background of the universe.

I also worked on character heads for a meme sequence based on The Office. We chose four main characters (Michael, Jim, Pam, Dwight) and designed their faces using simple colors and lines to highlight their most recognizable features.

Finally, I created the text effects that appear as speech from the character heads. My computer and Photoshop unexpectedly crashed during the process, but I was able to recreate the effect using Clip Studio Paint’s blur tools to add a glowing outline. We decided to keep the text black and white for clarity, since it will flash quickly across the screen and should remain easy to read.

Overall, I am happy with my contributions so far, and I look forward to seeing everything come together in the final animation!

Reference:

NASA (2020). NASA Image and Video Library. [online] Available at: https://images.nasa.gov.

0 notes