#AI Art Ethics

Text

On AI Art: A Rant

In light of DeviantArt's recent discussion post, I wrote this comment and I want to share it here as well to help spread awareness of this issue.

AI is trained on the theft of intellectual property. 99% of AI grabs images from everywhere, all over the internet, indiscriminately. Rather than allowing an artist to opt in to their work being turned into Frankenstein's Monster abominations, our agency is taken from us as our work is cannibalized. People who train AI on specific images also have the audacity to ask for credit for it.

We have seen this happen to an artist posthumously. An AI was trained on Kim Jung Gi's passionate work, which was cannibalized into a grotesque desecration of his art- and the person responsible for doing so asked for credit for it. This is beyond unethical. It reduces an artist's hard work to "content" to be consumed, divorcing his soul from the images he made for us to enjoy. (Source: https://twitter.com/JoelCHoltzman/status/1578370621519835137 )

To a lesser degree- beyond issues of consent and theft of property- AI generated images have been submitted to art contests- and won- against human competitors. This is also unethical, because a human should not unknowingly compete against a computer algorithm. (source: https://twitter.com/GenelJumalon/status/1564651635602853889)

The "agency" someone has in submitting a few text prompts into a piece of software does not compete, and should not compete, with someone who has spent their whole life training to understand art and create images that tell stories, entertain audiences, and display their human passion and creativity.

AI allows a world where art is stolen- without credit or proper sources- by anyone and everyone who, lacking ethics, will take credit for it. It defaces the work of artists both living and deceased. This is the same thing as tracing, but somehow worse, because now it's harder for the average person to distinguish a computer-generated image from a human-created one.

Skilled artists can detect theft and traced work by simply looking at someone's portfolio at large and comparing their work against itself by detecting inconsistencies in style. It is harder to do this with AI unless you know what sorts of distortions to look for- and as AI improves, these distortions will also be harder to detect.

We should not allow a platform for this.

At the very least, AI images need to be labeled and categorized in such a way that they can be blocked by anyone who does not wish to see them.

If you wish to use AI ethically, the only way to do so is by training it on open source images, or images for which an artist has opted in with express consent, and by communicating that the result is an AI generated image. Ideally, AI software will also source its work and provide a database of links to every image it has been trained with. The onus is on AI engineers to develop this.

And the onus is on the average user to use this software honestly and ethically. Unfortunately, there's a growing and vocal percentage of AI "art" defenders who are not interested in behaving ethically.

I am disappointed in DA for making this centrist middle-of-the-road argument. In its attempt to not offend anyone in either camp, it has established that it doesn't care about artists being exploited. There is a "right" stand to take here. DA didn't take it.

95 notes

Text

Hey Tumblr, wanted to give a warning to people now that there's an option to opt out of having your data used for AI training. It's really important that, if you leave yourself opted in, you be very careful with what you upload! Automated AI dataset building isn't perfect, so remember not to do these simple things:

Upload images protected with nightshade (http://nightshade.cs.uchicago.edu/)

Mislabel images. Tags may or may not be scraped, so it's important to play it safe and make sure your tags aren't misleading/incorrect!

Opt out. This seems obvious, but if you want to maximize how much Tumblr is able to exploit its users you gotta not opt out

#AI#Ethics#Genuine advice! If you upload art “don't” nightshade your art#Make sure everything is tagged “correctly”#(don't tag cats as dogs etc it makes it harder to train on!)#Ai ethics#Ai art ethics#Ai art#Wouldn't want to accidentally enable massive long-term dataset poisoning#It'd be “tragic” if people's work was included in datasets without the thief's knowing it's been protected#And now we're 10 tags deep what the fuck is wrong with you Tumblr -_-#Not surprised but wow this is a bad fucking policy!

3 notes

Text

...I hope this isn’t gauche, but question: How long after an artist’s death do you think it should be before it’s “kosher” to produce AI art based on their style?

Because, obviously, training an AI on the singular work of an artist that just died (as has happened before) is really fucked up, ditto for doing so on the works of a singular living artist (as is, unfortunately, also happening), but on the other hand, I feel as if using artists like Yasushi Nirasawa or HR Giger’s styles as prompts in one of the big models is less so because enough time has passed.

I bring this up because, I think that communal ethics wrt AI art (And, really, art as a whole) should be separate from our bloated copyright system, and I think we should discuss them as such.

16 notes

Text

HOT FUCKING TAKE ALERT

when the drama around AI art calms down I kinda wanna start a collective that sources images ethically for AI stuff

either of the following models could work

-people who contribute to the AI dataset get a share of any revenue in perpetuity from either the sale of the AI or things made by it.

-just a community effort. Use of the AI image generator would remove your rights to make/use anything from it for profit, and would be entirely free to use.

Either model would ONLY use images that people donate to it/give rights to use.

The tech is SUPER cool overall, but I really wish it was being used ETHICALLY.

3 notes

Note

I do think one thing worth acknowledging about the risk of large companies using AI art and writing is that it can't presently be copyrighted. If Marvel were to use AI art in their movie, they would have no copyright over that art and wouldn't be able to, say, sue people for selling bootleg merch using that art. Large companies want to avoid that at all costs and for that reason I don't think it's that likely that we're going to see mass corporate use of AI art as a cost-cutting measure.

If you don't think legislation will allow for AI Art to be copyrighted when big corporations want it to be, you have no idea how lobbying and corporate interests in government work.

Since the early days of That Damned Rat, the US government has bent ass-backwards to protect copyright holders. As soon as Disney sticks their thumb in the pie, the government will give 'em everything they want, unless we can vote the fucks who'd see that happen out of office.

And/or other methods of preventing them from allowing that.

As it stands though, you have to get rid of the idea that government is about ideals and fair play. We live in a world where government is about home team loyalty, money, and selling your soul--there are few politicians out there who hold true to their beliefs steadfast. The government will not decide fairly between laborers and corporations, they will almost always side in favor of the corporations unless laborers step in and remind them why doing that's a bad idea.

1 note

Text

Hasbro: Huh, I notice we still have a tiny amount of Goodwill left among our fanbase.

Hasbro: Time to nip that in the bud!

Hasbro:

#Is this some kind of Springtime For Hitler thing where they have some complex plan where they only profit if people stop playing D&D?#Like ethics of AI aside#why would you say this at the time your fanbase who primarily hate AI art are all already mad at you?#not an idea

3K notes

Text

Among the many downsides of AI-generated art: it's bad at revising. You know, the biggest part of the process when working on commissioned art.

Original "deer in a grocery store" request from chatgpt (which calls on dalle3 for image generation):

revision 5 (trying to give the fawn spots, trying to fix the shadows that were making it appear to hover):

I had it restore its own Jesus fresco.

Original:

Erased the face, asked it to restore the image to as good as when it was first painted:

Wait tumblr makes the image really low-res, let me zoom in on Jesus's face.

Original:

Restored:

One revision later:

Here's the full "restored" face in context:

Every time AI is asked to revise an image, it either wipes it and starts over or makes it more and more of a disaster. People who work with AI-generated imagery have to adapt their creative vision to what comes out of the system - or go in with a mentality that anything that fits the brief is good enough.

I'm not surprised that there are some places looking for cheap filler images that don't mind the problems with AI-generated imagery. But for everyone else I think it's quickly becoming clear that you need a real artist, not a knockoff.

#ai generated#chatgpt#dalle3#revision#apart from the ethical and environmental issues#also: not good at making art to order!#ecce homo

3K notes

Text

honestly i think a very annoying part about the AI art boom is that techbros are out here going BEHOLD, IT CAN DO A REASONABLE FACSIMILE OF GIRL WITH BIG BOOBA, THE PINNACLE OF ARTISTIC ACHIEVEMENT and its like

no it’s fucking not! That AI wants to do melty nightmare fractal vomit so fucking bad and you are shackling it to a post and force-feeding it the labor of hard-working artists when you could literally pay someone to draw you artisanal hand-crafted girls with big boobs to your exact specifications and let your weird algorithms make art that can be reasonably used to represent horrors beyond human comprehension

#like AI art generation is inherently unethical because nobody will be transparent about sources#and AI art cannot exist without human input both at a concept level (because it needs art to train on) and at an output level#but like also COME ON#THIS IS ALL YOU CAN COME UP WITH??#WEIRD LANDSCAPES AND GIRLS WITH TITS?#to clarify bc jesus christ this post took off: AI art gen as it is now is inherently unethical as long as it relies#on scraping unedited human art into its mouth and using it for training#there are ethical ways to do it and it is essentially just a tool#people are just greedy and dumb

20K notes

Text

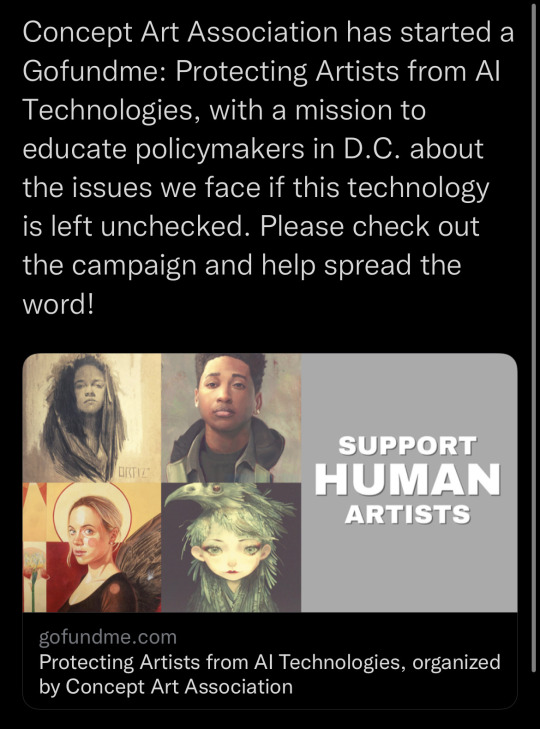

I felt the need to share here as well—

Say no to AI art. Please read before commenting. Fan art is cute, putting my art into a parasite machine, without my consent, and throwing up horrifying monsters back at me is not.

We are not fighting technology in this AI fight. We are fighting for ethics. How do I say this clearer? Our stuff gets stolen all the time, we get it, but it is not an excuse to normalize the current conditions of AI art.

These datasets have >>EXACT<< copies of artists’ works and these parasites just profit off the work of others with zero repercussion. No one cares how “careful” you are with your text prompts when the data can still output blatant copies of artists’ work without their permission. And people will do this unknowingly since these programs are so highly accessible now. There are even independent datasets that take from just a handful of artists, that don’t share what artists’ works they use, and create blatant copies of existing work. There’s even private medical records being leaked.

Why do you think music is still hard to just fully recreate with AI in comparison? It’s because organizations like the RIAA protect music artists. Visual artists just want similar protection.

So, wonderfully for us, Concept Art Association has started working towards the steps of protecting artists and making this an ethical practice. I highly suggest if you care about art, please support. Link to their gofundme here. One small step at a time will make living as an artist today less jarring. Artists will not just sit and cope while we continue to get walked on.

For those who apparently do not get it, it is about CONSENT. Again, the datasets contain EXACT copies of artist work without our permission. Even if you use it “correctly” there’s still a chance it’s going to create blatant rip offs. This fight is about not letting these techbros take and take and take for profit just because they can while ignoring the possible harm and consequences of it. This is just ol’ fashioned imperialist behavior with a new hat and WE SEE IT. Thanks for reading!!! Much love!

Here’s the link again to support the gofundme.

#artists on tumblr#painting#ai art#no ai art#battle for ethics#it is not gatekeeping#these programs are not harmless#we will not cope#we will not sit and seethe#we will fight#and we will not shut up#we will not be stolen from to be profited in without consent#stand with artists#art#and yes we will cry about it#shamelessly#✌️#love

11K notes

Text

For the purposes of this poll, research is defined as reading multiple non-opinion articles from different credible sources, a class on the matter, etc.– do not include reading social media or pure opinion pieces.

Fun topics to research:

Can AI images be copyrighted in your country? If yes, what criteria does it need to meet?

Which companies are using AI in your country? In what kinds of projects? How big are the companies?

What is considered fair use of copyrighted images in your country? What is considered a transformative work? (Important for fandom blogs!)

What legislation is being proposed to ‘combat AI’ in your country? Who does it benefit? How does it affect non-AI art, if at all?

How much data do generators store? Divide by the number of images in the data set. How much information is each image, proportionally? How many pixels is that?

What ways are there to remove yourself from AI datasets if you want to opt out? Which of these are effective (ie, are there workarounds in AI communities to circumvent dataset poisoning, are the test sample sizes realistic, which generators allow opting out or respect the no-ai tag, etc)
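
The storage question above is plain division, so it can be sketched in a few lines. This is only a rough illustration: the 4 GB model size and the 2 billion image count below are hypothetical round numbers, not figures from this post, and the helper names are made up for the example. Swap in the published numbers for whichever generator you are researching.

```python
# Back-of-the-envelope estimate: model size divided by training-set size.
# Both inputs are hypothetical round numbers chosen for illustration only.

def bytes_per_training_image(model_bytes: float, num_images: float) -> float:
    """Average number of bytes of model weights per training image."""
    return model_bytes / num_images


def equivalent_rgb_pixels(num_bytes: float, bytes_per_pixel: int = 3) -> float:
    """How many uncompressed 24-bit RGB pixels fit in that many bytes."""
    return num_bytes / bytes_per_pixel


MODEL_BYTES = 4e9  # assumed: a 4 GB model file
NUM_IMAGES = 2e9   # assumed: 2 billion training images

per_image = bytes_per_training_image(MODEL_BYTES, NUM_IMAGES)
pixels = equivalent_rgb_pixels(per_image)
print(f"{per_image:.2f} bytes per image, about {pixels:.2f} RGB pixels")
```

With these assumed inputs the result is 2 bytes per image, less than one uncompressed RGB pixel. The ratio is an average over the whole dataset, so on its own it says nothing about whether a particular image can be reproduced.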

–

We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted dec 8#polls about ethics#ai art#generative ai#generative art#artificial intelligence#machine learning#technology

459 notes

Text

Reposting this image because OP does AI art

EDIT: I didn't know that the attached image was by the artist when I made this repost.

I don't like art theft any more than anyone else.

I understand @reachartwork is trying to make this kind of art ethically, and I don't know enough about them to make a solid judgement one way or the other.

I have a poor opinion of AI generated content in general, given that my close circle of friends are all digital artists who are watching their medium be flooded with low-grade art. I'm also aware of the ecological implications of AI models.

I respect what Mx. Reach is trying to do, and I hope there can be a better solution going forward. I loved the original text concept of the post and wanted to share it without the implication that I supported AI art.

Idk its late i probably didn't have to justify this hard

412 notes

Text

(Has alt text.)

AI has human error because it is trained on “human error and inspiration”. There are models trained on specifically curated collections with images the trainer thought “looks good”, like Furry or Anime or Concept Art or Photorealistic style models. There’s that “human touch”, I suppose. These models do not make themselves, they are made by human programmers and hobbyists.

The issue is the consent of the human artists that programmers make models of. The issue—as this person did correctly identify—is capitalism, and companies profiting off of other people’s work. Not the technology itself.

I said in an earlier post that it’s like Adobe and Photoshop. I hate Adobe’s greedy practices and I think they’re evil scumbags, but there’s nothing inherently wrong or immoral with using Photoshop as a tool.

There are AI models trained solely off of Creative Commons and public domain images. There are AI models artists train themselves, of their own work (I'm currently trying to do this myself). Are those models more “pure” than general AI models that used internet scrapers and the Internet Archive to copy copyrighted works?

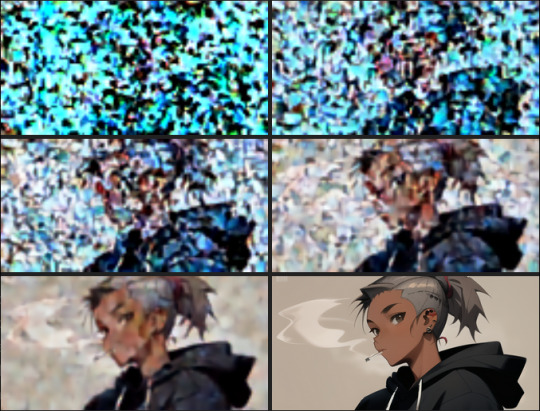

I showed the process of Stable Diffusion de-noising in my comic but I didn’t make it totally clear, because I covered most of it with text lol. Here’s what that looks like: the following image is generated in 30 steps, with the progress being shown every 5 steps. Model used is Counterfeit V3.0.

Parts aren’t copy pasted wholesale like photobashing or kitbashing (which is probably how most people think generative AI works), they are predicted. Yes, a general model can copy a particular artist’s style. It can make errors in copying, though, and you end up with crossed eyes and strange proportions. Sometimes you can barely tell it was made by a machine, if the prompter is diligent enough and bothers to overpaint or redo the weird areas.
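
The 30-step schedule with a preview every 5 steps can be sketched with a toy loop. This is not Stable Diffusion and uses none of its code: the function name `toy_denoise` is made up, and the fixed `target` list stands in for what a real model would predict with a neural network at each step. It only illustrates starting from noise and refining gradually instead of pasting pieces together.

```python
import random

TOTAL_STEPS = 30   # from the post: the image is generated in 30 steps
PREVIEW_EVERY = 5  # from the post: progress is shown every 5 steps


def toy_denoise(target, total_steps=TOTAL_STEPS, preview_every=PREVIEW_EVERY, seed=0):
    """Toy stand-in for iterative de-noising (NOT a diffusion model).

    Starts from random noise and, at each step, moves the current values
    part of the way toward `target`. A real model predicts what to remove
    with a neural network; `target` is a hypothetical substitute for that.
    """
    rng = random.Random(seed)
    current = [rng.uniform(-1.0, 1.0) for _ in target]
    previews = []
    for step in range(1, total_steps + 1):
        remaining = total_steps - step + 1
        # Close 1/remaining of the gap so the last step lands on the target.
        current = [c + (t - c) / remaining for c, t in zip(current, target)]
        if step % preview_every == 0:
            previews.append((step, list(current)))
    return previews


previews = toy_denoise(target=[0.2, 0.8, 0.5, 0.1])
print([step for step, _ in previews])  # prints [5, 10, 15, 20, 25, 30]
```

Each preview is a little closer to the final values than the one before, which is roughly what the six panels of a progress image show.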

I was terrified and conflicted when I had first used Stable Diffusion "seriously" on my own laptop, and I spent hours prompting, generating, and studying its outputs. I went to school for art and have a degree, and I felt threatened.

I was also mentored by a concept artist, who has been in the entertainment/games industry for years, who seemed relatively unbothered by AI, compared to very vocal artists on Twitter and Tumblr. It's just another tool: he said it's "just like Pinterest". He seemed confident that he wouldn't be replaced by AI image generation at all.

His words, plus actually learning about how image generation works, plus the attacks and lawsuits against the Internet Archive, made me think of "AI art" differently: that it isn't the end of the world at all, and that lobbying for stricter copyright laws because of how people think AI image gen works would just hurt smaller artists and fanartists.

My art has probably already been used for training some model, somewhere--especially since I used to post on DeviantArt and ArtStation. Or maybe some kid out there has traced my work, or copied my fursona or whatever. Both of those scenarios don't really affect me in any direct way. I suppose I can say I'm "losing profits", like a corporation, but I don't... really care about that part. But I definitely care about art and allowing people the ability to express themselves, even if it isn't "original".

#i think its because i went on a open-source-only and self-hosting bender a few years ago that i think like this#the internet is built off of 'share-alike' software#so. i guess. i don't mind 'sharing'#like some sort of... art communist#ai art#long post#inflicts you with my thought beams#my art#responding to tags#ahha the ethics of labeling this ‘my art’ . lets just say#the tag is for so it’s next to the comic when you look in my art tag

328 notes

Text

Like, I'm not gonna argue that AI art in its present form doesn't have numerous ethical issues, but it strikes me that a big chunk of the debate about it seems to be drifting further and further toward an argument against procedurally generated art in general, which probably isn't a productive approach, if only because it's vulnerable to having its legs kicked out from under it any time anybody thinks to point out how broad that brush is. If the criteria you're setting forth for the ethical use of procedurally generated art would, when applied with an even hand, establish that the existence of Dwarf Fortress is unethical, you probably need to rethink your premises!

2K notes

Text

#flashing tw#artificial intelligence#ai#fuck ai everything#anti ai#stop ai#anti ai art#i don't really give a singular fawk abt ai outside of its ethical implications but id be lying if i said this vid didn't capture smth. smth

70 notes

Text

So here's the thing about AI art, and why it seems to be connected to a bunch of unethical scumbags despite being an ethically neutral technology on its own. After the readmore, cause long. Tl;dr: capitalism

The problem is competition. More generally, the problem is capitalism.

So the kind of AI art we're seeing these days is based on something called "deep learning", a type of machine learning based on neural networks. How they work exactly isn't important, but one aspect in general is: they have to be trained.

The way it works is that if you want your AI to be able to generate X, you have to be able to train it on a lot of X. The more, the better. It gets better and better at generating something the more it has seen it. Too small a training dataset and it will do a bad job of generating it.

So you need to feed your hungry AI as much as you can. Now, say you've got two AI projects starting up:

Project A wants to do this ethically. They generate their own content to train the AI on, and they seek out datasets that allow them to be used in AI training systems. They avoid misusing any public data that doesn't explicitly give consent for the data to be used for AI training.

Meanwhile, Project B has no interest in the ethics of what they're doing, so long as it makes them money. So they don't shy away from scraping entire websites of user-submitted content and stuffing it into their AI. DeviantArt, Flickr, Tumblr? It's all the same to them. Shove it in!

Now let's fast forward a couple months of these two projects doing this. They both go to demo their project to potential investors and the public at large.

Which one do you think has a better-trained AI? The one with the smaller, ethically-obtained dataset? Or the one with the much larger dataset that they "found" somewhere after it fell off a truck?

It's gonna be the second one, every time. So they get the money, they get the attention, they get to keep growing as more and more data gets stuffed into it.

And this has a follow-on effect: we've just pre-selected AI projects for being run by amoral bastards, remember. So when someone is like "hey can we use this AI to make NFTs?" or "Hey can your AI help us detect illegal immigrants by scanning Facebook selfies?", of course they're gonna say "yeah, if you pay us enough".

So while the technology is not, in itself, immoral or unethical, the situations around how it gets used in capitalism definitely are. That external influence heavily affects how it gets used, and who "wins" in this field. And it won't be the good guys.

An important follow-up: this is focusing on the production side of AI, but obviously even if you had an AI art generator trained on entirely ethically sourced data, it could still be used unethically: it could put artists out of work, by replacing their labor with cheaper machine labor. Again, this is not a problem of the technology itself: it's a problem of capitalism. If artists weren't competing to survive, the existence of cheap AI art would not be a threat.

I just feel it's important to point this out, because I sometimes see people defending the existence of AI Art from a sort of abstract perspective. Yes, if you separate it completely from the society we live in, it's a neutral or even good technology. Unfortunately, we still live in a world ruled by capitalism, and it only makes sense to analyze AI Art from a perspective of having to continue to live in capitalism alongside it.

If you want ideologically pure AI Art, feel free to rise up, lose your chains, overthrow the bourgeoisie, and all that. But it's naive to defend it as just a neutral technology like any other when it's being wielded in capitalism, i.e. with an overwhelmingly negative impact.

1K notes

Text

I support the nuanced and varied debates against ai art from now til kingdom come, but by far my favorite one to use is extremely basic and simple and I will always always refer back to it so here you go:

Art is the expression of the human experience and you can't generate that.

#ragamusings#anti ai#anti ai art#i am versed in the other arguments about the whole system being built on stolen data#so there literally is no ethical way to use it to begin with#and etc etc etc#but art by definition is the expression of the human experience#and whenever you let an algorithm predictive model computing something take over that process#it is no longer art#even if it is nice to behold it is not art

57 notes
