#AI Data Transcription

Text

At the California Institute of the Arts, it all started with a videoconference between the registrar’s office and a nonprofit.

One of the nonprofit’s representatives had enabled an AI note-taking tool from Read AI. At the end of the meeting, it emailed a summary to all attendees, said Allan Chen, the institute’s chief technology officer. They could have a copy of the notes, if they wanted — they just needed to create their own account.

Next thing Chen knew, Read AI’s bot had popped up in about a dozen of his meetings over a one-week span. It was in one-on-one check-ins. Project meetings. “Everything.”

The spread “was very aggressive,” recalled Chen, who also serves as vice president for institute technology. And it “took us by surprise.”

The scenario underscores a growing challenge for colleges: Tech adoption and experimentation among students, faculty, and staff, especially as it pertains to AI, are outpacing institutions’ governance of these technologies and may even violate their data-privacy and security policies.

That has been the case with note-taking tools from companies including Read AI, Otter.ai, and Fireflies.ai. They can integrate with platforms like Zoom, Google Meet, and Microsoft Teams to provide live transcriptions, meeting summaries, audio and video recordings, and other services.

Higher-ed interest in these products isn’t surprising. For those bogged down with virtual rendezvouses, a tool that can ingest long, winding conversations and spit out key takeaways and action items is alluring. These services can also aid people with disabilities, including those who are deaf.

But the tools can quickly propagate unchecked across a university. They can auto-join any virtual meetings on a user’s calendar, even if that person is not in attendance. And that’s a concern, administrators say, if it means third-party products that an institution hasn’t reviewed may be capturing and analyzing personal information, proprietary material, or confidential communications.

“What keeps me up at night is the ability for individual users to do things that are very powerful, but they don’t realize what they’re doing,” Chen said. “You may not realize you’re opening a can of worms.”

The Chronicle documented both individual and universitywide instances of this trend. At Tidewater Community College, in Virginia, Heather Brown, an instructional designer, unwittingly gave Otter.ai’s tool access to her calendar, and it joined a Faculty Senate meeting she didn’t end up attending. “One of our [associate vice presidents] reached out to inform me,” she wrote in a message. “I was mortified!”

24K notes

Text

Detroit by the Numbers: State of the City 2014 - 2025

by: Ted Tansley, Data Analyst

Mike Duggan’s tenure as Mayor of Detroit has been focused on data. The number of residents in the city, number of demolitions, number of jobs brought to the city, number of affordable housing built or preserved. All data points get brought up in his yearly State of the City address where he makes his case to the public that he and his team have been doing a good job…

#2015#2025#AI#artificial intelligence#blight#bus#data#data analysis#Detroit#DetroitData#Duggan#jobs#Neighborhood#NLP#OpenAI#park#Project Greenlight#safety#SOTC#State of the City#Ted Tansley#transcripts#Whisper#Youtube

0 notes

Text

How Video Transcription Services Improve AI Training Through Annotated Datasets

Video transcription services play a crucial role in AI training by converting raw video data into structured, annotated datasets, enhancing the accuracy and performance of machine learning models.

#video transcription services#aitraining#Annotated Datasets#machine learning#ultimate sex machine#Data Collection for AI#AI Data Solutions#Video Data Annotation#Improving AI Accuracy

0 notes

Text

Harness the power of AI and machine learning to unlock the full potential of video transcription. Our advanced video transcription services deliver unparalleled accuracy and efficiency, transforming spoken words into text with precision. This innovative solution enhances the performance of your machine learning models, providing high-quality data for better insights and decision-making. Ideal for industries such as media, education, healthcare, and legal, our AI-driven transcription services streamline data processing, saving time and reducing costs. Experience the future of video transcription and elevate your data-driven projects with our cutting-edge technology.

0 notes

Text

The most extensive collection of transcription factor binding data in human tissues ever compiled

- By InnoNurse Staff -

Transcription factors (TFs) are proteins that bind to specific DNA sequences to regulate the transcription of genetic information from DNA to mRNA, impacting gene expression and various biological processes, including brain functions. While TFs have been studied extensively, their binding dynamics in human tissues are not well understood.

Researchers from the HudsonAlpha Institute for Biotechnology, University of California-Irvine, and University of Michigan compiled the largest TF binding dataset to date, aiming to understand how TFs contribute to gene expression and brain function. This dataset could reveal how gene regulation impacts neurodegenerative and psychiatric disorders.

The study, led by Dr. Richard Myers, utilized an innovative technique called ChIP-seq to capture and sequence DNA fragments bound by TFs. Experiments were conducted on different brain regions from postmortem tissues donated by individuals, allowing the researchers to map TF activity in the genome.

Findings suggest that regions bound by fewer TFs might be crucial, as minor changes there could significantly impact nearby genes. The dataset can help scientists study TFs, gene regulation, and their roles in specific brain functions and diseases, potentially aiding in the development of new therapies.

Image credit: Loupe et al. (Nature Neuroscience, 2024).

Read more at Medical Xpress

///

Other recent news and insights

BU researchers develop a new AI program to predict the likelihood of Alzheimer's disease (Boston University)

Northeastern researchers create an AI system for breast cancer diagnosis with nearly 100% accuracy (Northeastern University)

Wearable sensors and AI aim to revolutionize balance assessment (Florida Atlantic University)

#transcription factors#data science#medtech#health informatics#health tech#dna#mrna#genetics#genomics#neuroscience#brain#ai#alzheimers#cancer#oncology#breast cancer#diagnostics#wearables#sensors#balance#aging

0 notes

Text

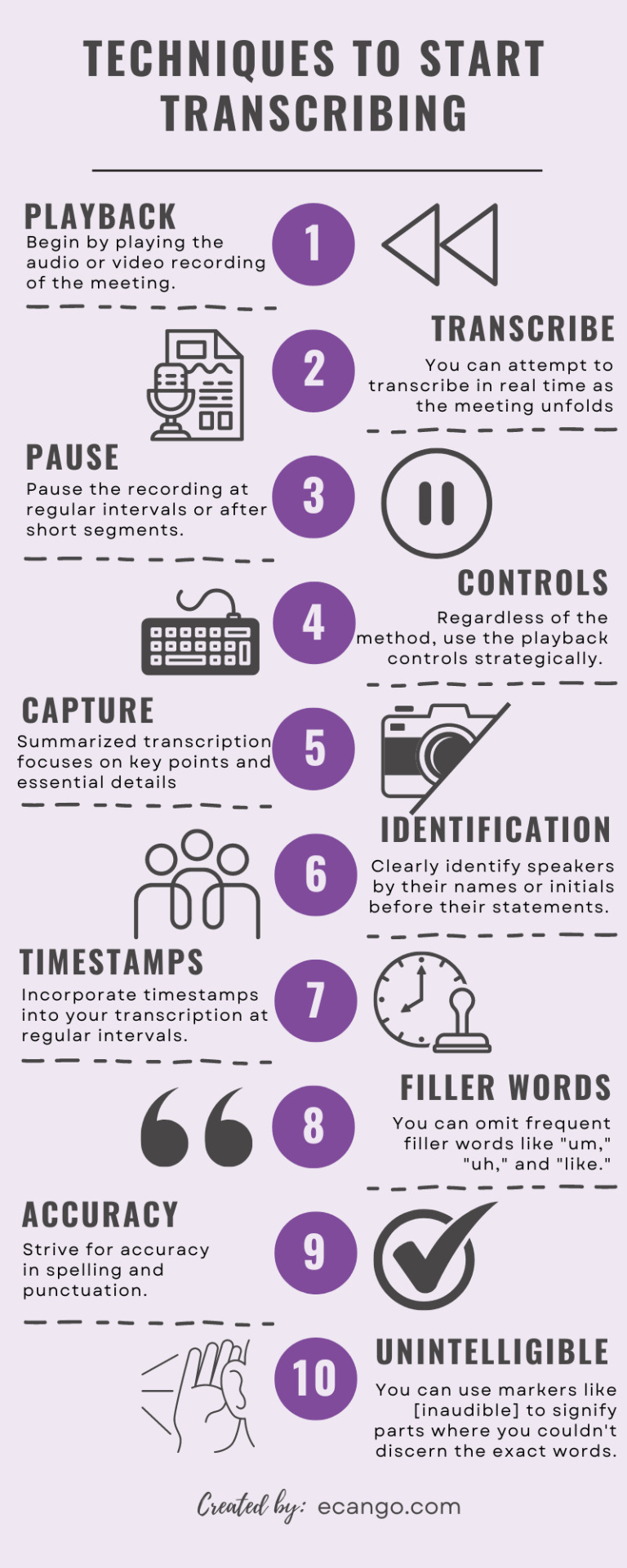

Pro Tips for Effective Media Library Handling in Transcription and Subtitling

In today's digital age, the demand for accurate transcription and high-quality subtitling services has skyrocketed. Whether it's for video content, podcasts, webinars, or other forms of multimedia, the ability to effectively handle media libraries is essential for professionals in these fields.

The process of transcribing audio and creating subtitles not only requires a keen understanding of language and context but also proficient management of media assets.

Let’s delve into pro tips and strategies for efficiently handling media libraries in the context of transcription and subtitling. These insights will empower transcriptionists, subtitlers, and content creators to streamline their workflows, enhance accuracy, and deliver exceptional results to their audiences.

Whether you're a seasoned expert or just starting in this field, these tips will prove invaluable in your journey towards media transcription and subtitling excellence.

Organizing Your Media Files

Effective organization of your media files is crucial for efficient transcription and subtitling processes. A well-structured media library can save you a significant amount of time and effort.

Here are some tips for organizing your media files:

1. File Naming: Develop a consistent and descriptive file naming convention. Include relevant information such as project name, date, and content description. For example, "ProjectName_Episode1_2023-10-05_Interview.mp4."

2. Folder Structure: Create a clear folder structure to categorize your media files. Use folders to separate projects, types of content, or stages of production. This helps you quickly locate the files you need.

3. Metadata: Utilize metadata tags to add additional information to your media files. This can include details like keywords, project notes, and timestamps. Metadata makes it easier to search for and manage files.

4. Version Control: If you have multiple versions of the same media file (e.g., different edits or drafts), use version control to keep them organized. Consider appending version numbers or dates to filenames.

5. Backup Strategy: Implement a robust backup strategy to protect your media library from data loss. Regularly back up your files to external drives or cloud storage services.
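The naming and versioning tips above can be automated. The following is a minimal sketch, assuming the underscore-separated pattern from the example filename; the function name `build_media_filename`, its parameters, and the `v02`-style version suffix are illustrative choices, not part of any particular tool.

```python
import re
from datetime import date


def build_media_filename(project, episode, recorded, description, ext, version=None):
    """Build a name like ProjectName_Episode1_2023-10-05_Interview.mp4."""

    def clean(part):
        # Drop anything that is not a letter, digit, or hyphen so names stay portable.
        return re.sub(r"[^A-Za-z0-9-]+", "", part)

    parts = [
        clean(project),
        f"Episode{episode}",
        recorded.isoformat(),  # ISO dates (YYYY-MM-DD) sort chronologically
        clean(description),
    ]
    if version is not None:
        # Hypothetical version suffix, zero-padded so v02 sorts before v10.
        parts.append(f"v{version:02d}")
    return "_".join(parts) + "." + ext.lstrip(".").lower()


print(build_media_filename("ProjectName", 1, date(2023, 10, 5), "Interview", "mp4"))
```

Generating names from one function keeps every file in the library on the same convention, which makes sorting and searching more predictable.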

Choosing the Right Transcription Tools

Selecting the appropriate transcription tools is crucial for accuracy and efficiency in your transcription and subtitling work.

Here are some considerations when choosing these tools:

1. Transcription Software: Look for transcription software or services that offer features like automatic speech recognition (ASR), timestamping, and easy integration with your media library.

2. Subtitle Software: For subtitling, choose a dedicated subtitle editing tool that supports industry-standard formats like SRT, VTT, or SCC.

3. Compatibility: Ensure that the transcription and subtitle tools you select are compatible with your media file formats. Some tools may have limitations on the types of files they can work with.

4. Accuracy and Quality: Consider the accuracy of the transcription tool's speech recognition system. High-quality ASR technology can save you time on manual corrections.

5. Collaboration Features: If you're working in a team, look for tools that offer collaboration features, such as the ability to share transcripts or subtitle projects with colleagues and track changes.

6. Cost and Licensing: Evaluate the cost of the tools, whether they offer free trials, and whether they require ongoing subscriptions or one-time purchases.

By carefully organizing your media files and selecting the right transcription and subtitling tools, you'll set a strong foundation for efficient and effective media library handling in your transcription and subtitling workflow.

Efficient Transcription Workflow

Creating an efficient transcription workflow is crucial for saving time and maintaining accuracy in transcription and subtitling projects.

To streamline this process, establish a transcription template with timestamps and speaker labels, use keyboard shortcuts for quicker navigation, and begin at a comfortable transcription speed that you gradually increase.

Utilize automatic timestamping features in transcription software and allocate time for proofreading and editing to rectify potential errors from automated transcription tools, ensuring consistency and precision throughout your projects.
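A transcription template with timestamps and speaker labels might be generated along these lines. This is a rough sketch that uses the SRT cue layout (index, `HH:MM:SS,mmm --> HH:MM:SS,mmm`, text); the `SPEAKER: text` labeling is an assumed convention, and the helper names are hypothetical.

```python
def srt_timestamp(seconds):
    """Format a time in seconds as HH:MM:SS,mmm, the layout SRT files use."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def srt_cue(index, start, end, speaker, text):
    """Return one SRT cue with a speaker label prefixed to the text."""
    return (
        f"{index}\n"
        f"{srt_timestamp(start)} --> {srt_timestamp(end)}\n"
        f"{speaker}: {text}\n"
    )


print(srt_cue(1, 0, 2.5, "HOST", "Welcome to the show."))
```

Starting every project from the same cue template means proofreading can focus on the words rather than on fixing inconsistent timestamp formats.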

Subtitling Best Practices

Subtitling requires attention to detail and adherence to specific guidelines for readability and synchronization with the video.

Here are some best practices for subtitling:

1. Subtitle Length: Keep subtitles concise and readable. Limit the number of characters per line and the number of lines on the screen at once. Avoid overwhelming viewers with lengthy subtitles.

2. Timing: Ensure that subtitles appear and disappear in sync with the spoken words and natural pauses in the dialogue. Use timestamping features to fine-tune the timing.

3. Speaker Identification: Clearly identify different speakers in the subtitles, especially in conversations or interviews. Use speaker labels to indicate who is speaking.

4. Font and Styling: Choose a legible font and ensure that the subtitles are easily readable against the background. Consider using bold text for emphasis or italics for off-screen dialogue.

5. Consistency: Maintain consistency in subtitle formatting, including font size, color, and placement. Consistency enhances the viewing experience.

6. Cultural Sensitivity: Be sensitive to cultural nuances and idiomatic expressions when translating subtitles. Ensure that translations accurately convey the intended meaning.

7. Testing: Test your subtitles on different devices and screen sizes to ensure they are legible and properly synchronized.

8. Compliance: If your subtitles are intended for a specific platform or region, be aware of any subtitle format requirements or guidelines set by that platform or region.

By implementing these transcription and subtitling best practices, you'll enhance the quality and professionalism of your media content while maintaining efficiency in your workflow.
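The subtitle length guideline can be enforced automatically. The sketch below assumes limits of 42 characters per line and two lines per subtitle; these numbers are placeholders, so substitute whatever your platform's style guide requires. The function name `split_into_cues` is illustrative.

```python
import textwrap

MAX_CHARS_PER_LINE = 42  # assumed limit; check your platform's guidelines
MAX_LINES = 2            # assumed limit


def split_into_cues(text, max_chars=MAX_CHARS_PER_LINE, max_lines=MAX_LINES):
    """Wrap text into lines, then group the lines into subtitle-sized cues."""
    lines = textwrap.wrap(text, width=max_chars)
    return [
        "\n".join(lines[i:i + max_lines])
        for i in range(0, len(lines), max_lines)
    ]


sentence = (
    "Subtitles should stay short enough that viewers can read them "
    "comfortably before the next line of dialogue appears on screen."
)
for cue in split_into_cues(sentence):
    print(cue)
    print("---")
```

This only handles length. Timing each resulting cue against the audio still has to be done in the subtitle editor.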

Collaboration and Communication

Effective collaboration and communication are key to successful media library handling in transcription and subtitling projects, especially when working in a team or with clients.

Here are some suggestions for promoting teamwork and effective communication:

1. Project Management Tools: Consider using project management tools like Trello, Asana, or Slack to keep track of tasks, deadlines, and project progress. These tools can help your team stay organized and informed.

2. Clear Instructions: When assigning tasks or receiving instructions, provide clear and detailed information about expectations, guidelines, and any specific requirements for the transcription and subtitling work.

3. Feedback Loops: Create a feedback loop with team members or clients to ensure that transcripts and subtitles meet their expectations. Regularly seek feedback and make necessary revisions promptly.

4. Communication Channels: Establish efficient communication channels within your team. Whether it's email, chat, video calls, or project management software, choose a method that ensures everyone stays informed and can easily reach each other.

5. Version Control: If multiple team members are working on the same project, implement version control practices to avoid conflicts and maintain a history of changes made to transcripts and subtitles.

6. File Sharing: Use secure and convenient file-sharing platforms like Google Drive, Dropbox, or SharePoint to share media files, transcripts, and subtitle files. Ensure that access permissions are appropriately set.

7. Conflict Resolution: Develop a protocol for handling conflicts or disagreements within the team. Have a designated person or process in place to address and resolve issues promptly.

8. Documentation: Maintain clear documentation of project details, decisions, and communication records. This can be invaluable for reference and accountability.

9. Client Updates: If you're working with clients, provide regular project updates, status reports, and milestones to keep them informed about progress and any potential delays.

10. Language and Cultural Considerations: If working with international clients or team members, be mindful of language barriers and cultural differences in communication. Make sure that everyone is aligned when it comes to project objectives and anticipated outcomes.

Effective collaboration and communication not only improve the efficiency of your transcription and subtitling projects but also enhance overall project satisfaction and the quality of the final deliverables.

Backup and Data Management

Backup and data management are essential in the digital age to protect data from threats such as hardware failure, malware, and accidents. They support data security, business continuity, and compliance with regulations.

Here are the key components:

1. Data Backup: This involves creating copies of your data and storing them in a separate location. Backup can be done on-site, off-site, or in the cloud, depending on your needs and preferences.

2. Data Recovery: A solid data management strategy includes robust recovery procedures to ensure quick and efficient data restoration in case of data loss. This involves not only the backup itself but also the ability to retrieve and use the backed-up data effectively.

3. Data Archiving: Archiving involves the long-term storage of data that is not actively used but may be required for compliance, historical analysis, or reference purposes.

4. Data Versioning: Maintaining multiple versions of files is crucial, especially in collaborative environments. You can use this functionality to return to previous versions when necessary.

5. Data Lifecycle Management: Understanding the lifecycle of data, from creation to disposal, helps in efficient data management. It includes policies and procedures for data retention and deletion.

Effective data management is crucial for individuals and businesses to safeguard their valuable information.

Future-Proofing Your Media Library

In today's fast-paced digital age, the importance of future-proofing your media library cannot be overstated.

With the constant evolution of technology and the ever-changing digital landscape, media collections face numerous challenges, from format obsolescence to content degradation.

However, by implementing effective strategies and staying abreast of emerging trends, you can safeguard your media assets, ensuring they retain their value and accessibility for years to come.

1. Format Flexibility: One of the primary concerns in future-proofing your media library is staying ahead of format changes. As technology advances, file formats come and go. To combat this, consider adopting widely accepted, open formats for your media files. Additionally, maintain backups in multiple formats to hedge against sudden shifts in industry standards.

2. Metadata Management: Efficient metadata management is crucial for the long-term viability of your media library. Robust metadata ensures that your content remains discoverable and relevant, even as your collection grows. Implementing standardized metadata schemas and diligently updating information can enhance the searchability and organization of your media assets.

3. Preservation Best Practices: Implementing preservation best practices is essential to safeguard against content degradation. Regularly assess the condition of your media and establish a digitization and migration plan for deteriorating formats. Employing checksums and regular integrity checks can help identify and address data corruption.

4. Cloud-Based Storage: Embracing cloud-based storage solutions can be an effective way to future-proof your media library. Cloud platforms often offer scalable and secure storage options, ensuring that your content remains accessible regardless of your organization's growth or technological changes.

5. Rights Management: Ensure that you have a comprehensive understanding of the rights associated with your media assets. Keep meticulous records of licensing agreements and permissions and stay informed about copyright laws and regulations to avoid legal issues that may arise in the future.

6. Adaptive Technology Integration: Stay abreast of emerging technologies such as artificial intelligence, machine learning, and content management systems. These tools can assist in automating metadata tagging, content analysis, and user engagement, ensuring that your media library remains relevant in the ever-evolving digital landscape.

7. User-Centric Design: Design your media library with the user in mind. An intuitive and user-friendly interface will encourage engagement and ensure that your media collection remains a valuable resource for both internal and external stakeholders.

8. Disaster Recovery and Redundancy: Don't underestimate the importance of disaster recovery and redundancy planning. Regularly back up your media assets, both on-site and off-site, to protect against data loss caused by hardware failures, natural disasters, or cyberattacks.

9. Continuous Evaluation: Future-proofing is an ongoing process. Regularly evaluate your media library strategies and technologies, adjusting as needed to adapt to changing circumstances and emerging trends.

10. Collaboration and Networking: Engage with industry peers, attend conferences, and participate in professional networks to stay informed about the latest developments in media management and preservation. Collaboration can provide valuable insights and access to shared resources.
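The checksums and integrity checks mentioned under preservation best practices could be implemented roughly as follows. This is a minimal sketch that assumes SHA-256 digests and an in-memory manifest; the function names are hypothetical, and a real library would normally save the manifest to disk alongside the backups.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large media files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file's path (relative to root) to its SHA-256 checksum."""
    root = Path(root)
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def find_changes(root, manifest):
    """List manifest entries whose file is now missing or has different content."""
    current = build_manifest(root)
    return sorted(name for name in manifest if current.get(name) != manifest[name])
```

Build the manifest once when files are archived, then run `find_changes` on a schedule. Any name it returns points to a file that was altered, corrupted, or removed since the manifest was created.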

Final Thoughts

Effective media library handling is a critical component of successful transcription and subtitling workflows. By following the pro tips outlined in this guide, you can enhance your efficiency, accuracy, and collaboration in these tasks.

From organizing your media files and choosing the right transcription tools to streamlining your workflow and implementing best practices for subtitling, each step contributes to the quality of your final deliverables. Additionally, collaboration and communication ensure that team members and clients are on the same page, leading to smoother project execution.

Moreover, by focusing on backup and data management, you protect your valuable media assets, while time-saving strategies boost your productivity. Finally, future-proofing your media library ensures that your content remains accessible and relevant in the ever-evolving landscape of media production.

By integrating these practices into your transcription and subtitling processes, you'll not only save time and effort but also elevate the overall quality of your work. With a well-organized media library and efficient workflows, you'll be better equipped to meet the demands of today's media production and localization challenges.

#social media#file manager#management#technology#business#strategy#content creator#artificial intelligence#startup#collaboration#data management#transcript#tools#ai tools#ai technology#subtitles#search engine optimization

0 notes

Text

"Canadian scientists have developed a blood test and portable device that can determine the onset of sepsis faster and more accurately than existing methods.

Published today [May 27, 2025] in Nature Communications, the test is more than 90 per cent accurate at identifying those at high risk of developing sepsis and represents a major milestone in the way doctors will evaluate and treat sepsis.

“Sepsis accounts for roughly 20 per cent of all global deaths,” said lead author Dr. Claudia dos Santos, a critical care physician and scientist at St. Michael’s Hospital. “Our test could be a powerful game changer, allowing physicians to quickly identify and treat patients before they begin to rapidly deteriorate.”

Sepsis is the body’s extreme reaction to an infection, causing the immune system to start attacking one’s own organs and tissues. It can lead to organ failure and death if not treated quickly. Predicting sepsis is difficult: early symptoms are non-specific, and current tests can take up to 18 hours and require specialized labs. This delay before treatment increases the chance of death by nearly eight per cent per hour.

[Note: The up to 18 hour testing window for sepsis is a huge cause of sepsis-related mortality, because septic shock can kill in as little as 12 hours, long before the tests are even done.]

[Analytical] AI helps predict sepsis

Examining blood samples from more than 3,000 hospital patients with suspected sepsis, researchers from UBC and Sepset, a UBC spin-off biotechnology company, used machine learning to identify a six-gene expression signature “Sepset” that predicted sepsis nine times out of 10, and well before a formal diagnosis. With 248 additional blood samples using RT-PCR (Reverse Transcription Polymerase Chain Reaction), a common hospital laboratory technique, the test was 94 per cent accurate in detecting early-stage sepsis in patients whose condition was about to worsen.

“This demonstrates the immense value of AI in analyzing extremely complex data to identify the important genes for predicting sepsis and writing an algorithm that predicts sepsis risk with high accuracy,” said co-author Dr. Bob Hancock, UBC professor of microbiology and immunology and CEO of Sepset.

Bringing the test to point of care

To bring the test closer to the bedside, the National Research Council of Canada (NRC) developed a portable device they called PowerBlade that uses a drop of blood and an automated sequence of steps to efficiently detect sepsis. Tested with 30 patients, the device was 92 per cent accurate in identifying patients at high risk of sepsis and 89 per cent accurate in ruling out those not at risk.

“PowerBlade delivered results in under three hours. Such a device can make treatment possible wherever a patient may be, including in the emergency room or remote health care units,” said Dr. Hancock.

“By combining cutting-edge microfluidic research with interdisciplinary collaboration across engineering, biology, and medicine, the Centre for Research and Applications in Fluidic Technologies (CRAFT) enables rapid, portable, and accessible testing solutions,” said co-author Dr. Teodor Veres, of the NRC’s Medical Devices Research Centre and CRAFT co-director. CRAFT, a joint venture between the University of Toronto, Unity Health Toronto and the NRC, accelerates the development of innovative devices that can bring high-quality diagnostics to the point of care.

Dr. Hancock’s team, including UBC research associate and co-author Dr. Evan Haney, has also started commercial development of the Sepset signature. “These tests detect the early warnings of sepsis, allowing physicians to act quickly to treat the patient, rather than waiting until the damage is done,” said Dr. Haney."

-via University of British Columbia, May 27, 2025

#public health#medical news#sepsis#cw death#healthcare#medicine#medical care#ai#canada#north america#artificial intelligence#genetics#good news#hope

810 notes

Text

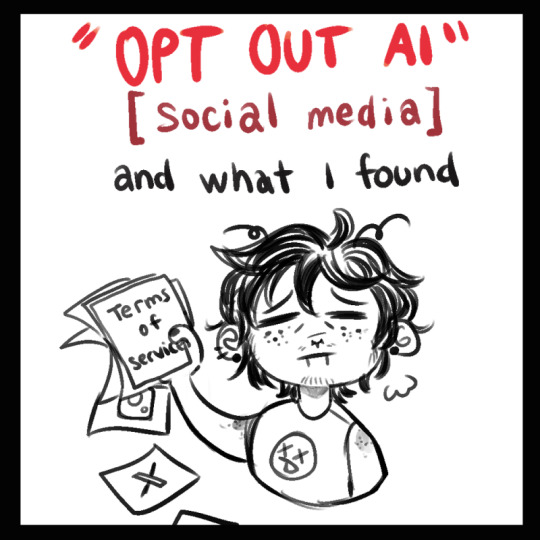

I spent the evening looking into this AI shit and made a wee informative post of the information I found and thought all artists would be interested and maybe help yall?

edit: forgot to mention Glaze and Nightshade to alter/disrupt AI from taking your work into their machines. You can use these and post and it will apparently mess up the AI and it won't take your content into its machine!

edit: ArtStation is not AI free! So make sure to read that when signing up if you do! (this post is also on twt)

[Image descriptions: A series of infographics titled: “Opt Out AI: [Social Media] and what I found.” The title image shows a drawing of a person holding up a stack of papers where the first says, ‘Terms of Service’ and the rest have logos for various social media sites and are falling onto the floor. Long transcriptions follow.

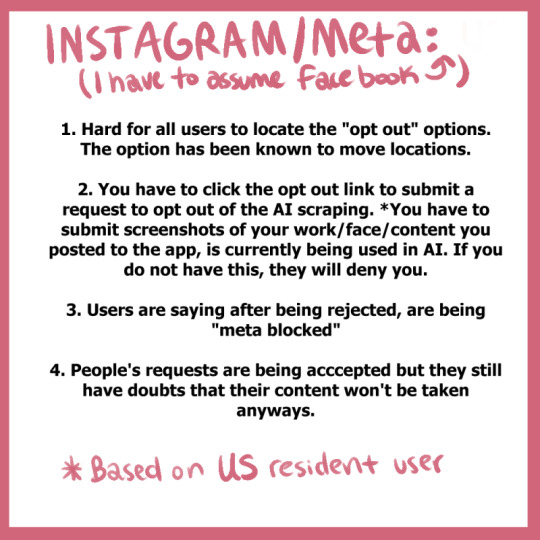

Instagram/Meta (I have to assume Facebook).

Hard for all users to locate the “opt out” options. The option has been known to move locations.

You have to click the opt out link to submit a request to opt out of the AI scraping. *You have to submit screenshots showing that your work/face/content you posted to the app is currently being used in AI. If you do not have this, they will deny you.

Users are saying that after being rejected, they are being “meta blocked.”

People’s requests are being accepted but they still have doubts that their content won’t be taken anyways.

Twitter/X

As of August 2023, Twitter’s ToS update:

“Twitter has the right to use any content that users post on its platform to train its AI models, and that users grant Twitter a worldwide, non-exclusive, royalty-free license to do so.”

There isn’t much to say. They’re doing the same thing Instagram is doing (to my understanding) and we can’t even opt out.

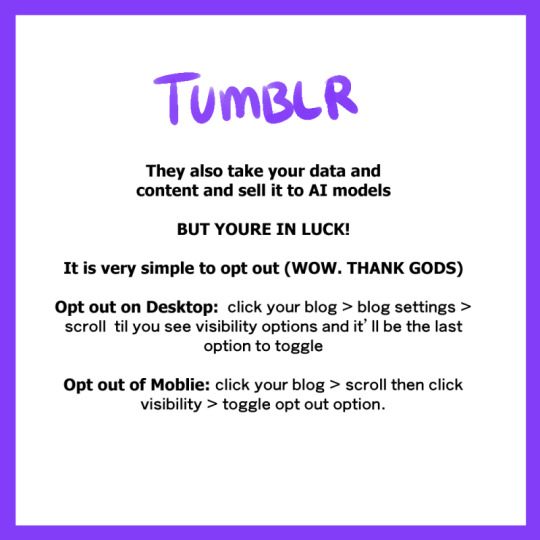

Tumblr

They also take your data and content and sell it to AI models.

But you’re in luck!

It is very simple to opt out (Wow. Thank Gods)

Opt out on Desktop: click on your blog > blog settings > scroll til you see visibility options and it’ll be the last option to toggle

Opt out on Mobile: click your blog > scroll then click visibility > toggle opt out option

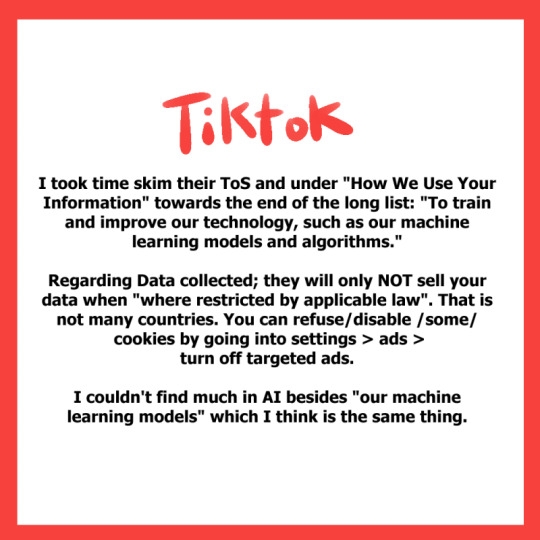

TikTok

I took time to skim their ToS and found, under “How We Use Your Information” and towards the end of the long list: “To train and improve our technology, such as our machine learning models and algorithms.”

Regarding data collected: they will only refrain from selling your data “where restricted by applicable law”. That is not many countries. You can refuse/disable some cookies by going into settings > ads > turn off targeted ads.

I couldn’t find much in AI besides “our machine learning models” which I think is the same thing.

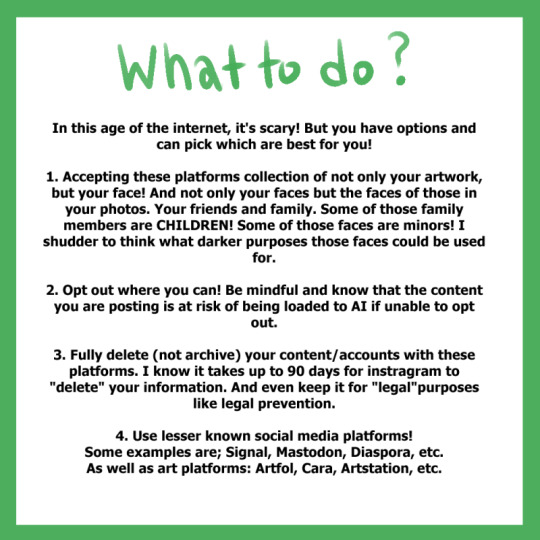

What to do?

In this age of the internet, it’s scary! But you have options and can pick which are best for you!

Accepting these platforms collection of not only your artwork, but your face! And not only your faces but the faces of those in your photos. Your friends and family. Some of those family members are children! Some of those faces are minors! I shudder to think what darker purposes those faces could be used for.

Opt out where you can! Be mindful and know the content you are posting is at risk of being loaded to AI if unable to opt out.

Fully delete (not archive) your content/accounts with these platforms. I know it takes up to 90 days for instagram to “delete” your information. And even keep it for “legal” purposes like legal prevention.

Use lesser known social media platforms! Some examples are: Signal, Mastodon, Diaspora, etc. As well as art platforms: Artfol, Cara, ArtStation, etc.

The last drawing shows the same person as the title saying, ‘I am, by no means, a ToS autistic! So feel free to share any relatable information to these topics via reply or qrt!

I just wanted to share the information I found while searching for my own answers cause I’m sure people have the same questions as me.’ End description] (thank you @a-captions-blog!)

4K notes

Text

How can HAIVO enhance Threads for Business with Sentiment Analysis?

Threads by Instagram, the innovative social media platform enabling users to share short text updates and engage in public conversations, has gained HUGE popularity for its seamless integration with Instagram and unique features.

As the new social media platform gains quick traction from users, brands will need to start understanding the sentiment behind Threads posts, which can pose a big challenge due to the brevity and potential ambiguity of the content.

To address this challenge, implementing a sentiment analysis tool can provide valuable insights into the emotional tone of Threads' posts. By utilizing natural language processing and Dataset Creation for Machine Learning techniques, this tool can identify the sentiment expressed by individual words and phrases.

For instance, positive sentiment may be attributed to words like “happy,” “excited,” and “love,” while negative sentiment could be associated with words like “sad,” “angry,” and “hate.”
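The word-level idea described above can be illustrated with a tiny lexicon-based scorer. This is only a sketch under strong assumptions: the word lists contain just the six example words from the text, the function names are hypothetical, and it is not a description of how HAIVO's tool or any trained model works.

```python
import re

# Example words taken from the paragraph above; a real lexicon would be far larger.
POSITIVE = {"happy", "excited", "love"}
NEGATIVE = {"sad", "angry", "hate"}


def sentiment_score(post):
    """Count positive words minus negative words in a post."""
    words = re.findall(r"[a-z']+", post.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def sentiment_label(post):
    """Turn the score into a coarse positive / negative / neutral label."""
    score = sentiment_score(post)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for post in ["I love this, so excited!", "I hate waiting", "Posting an update"]:
    print(post, "->", sentiment_label(post))
```

Word counting like this misses negation ("not happy"), sarcasm, and context, which is one reason the text goes on to recommend training on an annotated dataset of real posts.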

By accurately identifying sentiment, a sentiment analysis tool empowers users to gain a deeper understanding of how people perceive the platform. The application of this tool offers several benefits, such as:

Trend Analysis: Examining sentiment trends over time can unveil shifts in Threads’ overall sentiment, identify peak periods of positive or negative sentiment, and enable proactive measures to enhance user experiences.

User Behavior Insights: Identifying users who consistently express negative sentiment allows for focused investigation, enabling proactive measures to address concerns or provide personalized support.

Enhanced User Experience: Leveraging sentiment analysis, Threads can proactively address user concerns, identify areas for improvement, and tailor experiences to individual needs.

Developing a sentiment analysis tool for Threads requires the expertise of a skilled team in natural language processing and machine learning. This team would train the tool using an extensive dataset of Threads posts to ensure accurate sentiment identification at both word and phrase levels.

With over 2,000 expert multilingual annotators and a rigorous QA process, HAIVO has the necessary expertise and manpower to train a customized sentiment analysis tool for Threads.

To explore how HAIVO can assist you in developing a sentiment analysis tool for Threads, we invite you to reach out to us today.

It’s never too soon to be a fast mover in the social space, and implementing a sentiment analysis tool within Threads can offer significant advantages to brands by providing a deeper understanding of user sentiment and enhancing the overall user experience. HAIVO, with its expertise in natural language processing and machine learning, can collaborate with you to develop a customized sentiment analysis tool tailored specifically to Threads’ requirements. Contact us today to discover how HAIVO can unlock the power of sentiment analysis for your platform.

#Dataset Creation Company#Data Collection for AI#Transcript Annotation#Text Annotation Machine Learning

0 notes

Text

°💸⋆.ೃ🍾࿔*:・Your 2H Sign = How To Make More $$$ 💳⋆.ೃ💰࿔*:・

Your 2nd house is the part of your chart that can show you the best side hustle ideas to increase your income. Look at the sign on your 2nd House cusp, its ruling planet, and any planets sitting there. They symbolize how you monetize.

The 2nd House is the House of Possessions: movable assets, cash flow, food, tools, anything you can trade. The sign on the cusp sets up your style of 'acquisition' (Taurus = slow‑build goods, Scorpio = high‑risk high‑reward holdings), while the ruler’s dignity and aspects describe reliability, or lack thereof, of income.

Planets inside the 2nd act like tenants shaping the property: Jupiter here inflates resources, Saturn conserves but can pinch, Mars spends to make, Venus monetizes aesthetics.

Because the 2nd is in aversion to the Ascendant (no Ptolemaic aspect), you often have to develop its promises actively: wealth isn’t “you,” it’s something you must manage. So, let's look at the kind of side hustles you can do to increase your revenue!

♈︎ Aries 2H: Physical, Fast, ACTION-Driven

(Aries rules motion, competition, fire, physical activity, force)

Personal trainer or group fitness instructor.

Manual labor gigs like junk removal, or yard work (physical and gives instant results.)

Motorcycle/scooter delivery (Uber Eats, DoorDash): speed + autonomy? Very Aries.

Selling refurbished sports equipment.

Pressure washing services, which is oddly satisfying AND includes aggressive water blasting lol.

Fitness bootcamps in local parks (Mars rules the battlefield… or, in this case, bootcamps)

Pop-up self-defense workshops

Bike repair and resale (hands-on + quick turnaround)

Car detailing (mobile service). You vs. grime. Who wins? You.

Sell custom gym gear or accessories.

♉︎ Taurus 2H: Sensory, Grounded, Product-Based

(Taurus rules the senses and the material world, it’s a sign connected to beauty and pleasure)

Bake-and-sell operation (bread, cookies) at markets. Taurus=YES to carbs and cozy smells.

Meal prep or personal chef (nourishing others = peak Taurus.)

Sell plants or houseplant propagation, you’re growing literal value.

Create and sell body care products: lotions, scrubs, soaps… (Venus-ruled.)

Furniture refinishing for resale.

Offer at-home spa services (facials, scrubs.)

Curate and sell gift boxes (Venus loves a well-wrapped present.)

Do minor home repair or furniture assembly.

Build and sell wooden plant stands or decor (wood + plants + aesthetic = Taurus.)

♊︎ Gemini 2H: Communicative, Clever, Multi-Tasking

(Gemini = ruled by Mercury = ideas, speech, tech, variety, teaching)

Freelance writing or blogging.

Transcription or captioning services.

Resume writing/job application support.

Social media management (multitasking + memes.)

Sell printable planners or flashcards (info = money.)

Offer typing or data-entry services, which are low lift & high focus

Sell templates for resumes, bios, or cover letters, Mercury loves a system!

Write email campaigns for small businesses, you can become the voice behind the curtain.

Teach intro to AI tools or chatbots (modern Mercurial real-world applications.)

Create micro-courses on writing or communication.

♋︎ Cancer 2H: Caring, Cozy, DOMESTIC

(Cancer rules the home, food, feelings. It’s the nurturer through and through)

Home organization services, give cluttered homes and their owners love.

Baking and delivering comfort desserts (cookies = hugs in edible form!!)

Make and sell homemade frozen meals, nourishing the body AND soul.

Offer elder companionship visits (heartfelt and so needed.)

Run a daycare or babysitting service. Moon=family.

Run a laundry drop-off/pickup service.

Custom holiday decorating (homes or offices), make it feel like home anywhere.

Help seniors with digital tools (basic tech help.)

Create sentimental gifts like memory jars or scrapbooks.

♌︎ Leo 2H: Expressive, Bold, Entertaining

(Leo rules performance, leadership, fame, visibility, and the desire to SHINE)

Portrait photography (kids, pets, solo, couples.)

Event hosting or party entertainment.

DJ for small events or weddings.

Basic video editing for others (help THEM shine!)

Personalized video messages. charisma = income.

Teach short performance workshops (confidence, improv) to help others own a stage.

Become a personal shopper.

Sell selfie lighting kits or content creator bundles.

Host creative kids camps (theater, dance, art.)

Make reels/TikToks for local businesses (attention = currency.)

♍︎ Virgo 2H: Detailed, Service-Oriented, Practical

(Virgo rules systems, refinement, discernment, organisation, usefulness)

Proofreading or editing work. Spotting a comma out of place or “their/they’re” being misused = Virgo joy.

House cleaning or deep-cleaning services.

Virtual assistant (email, scheduling, admin.)

Sell Notion or Excel templates. Virgo: spreadsheets.

Bookkeeping for small businesses.

Create custom cleaning schedules or checklists.

Offer “organize your digital life” sessions.

Specialize in email inbox cleanups.

♎︎︎ Libra 2H: Tasteful, Charming, Design-Savvy

(Libra = Venus-ruled = style, beauty, balance, aesthetics)

Styling outfits from clients’ own wardrobes.

Become a personal shopper.

Bridal/event makeup services (enhancing natural beauty = Libra.)

Teach etiquette, the power of grace

Curate secondhand outfit bundles.

Custom invitations or event printables that are pretty AND functional.

Offer virtual interior styling consultations.

Sell color palette guides for branding or outfits.

Create custom date night itineraries (romance, planned and packaged=Libra!!)

Style flat-lay photos for products or menus.

Do hair, make-up, nails, etc.

♏︎ Scorpio 2H: Deep, Transformative, Private

(Scorpio rules what’s hidden, intense, and powerful, alchemy, psychology)

Tarot or astrology readings.

Energy healing or bodywork.

Private coaching for money/debt management.

Online investigation or background research (Scorpio = uncovering hidden information)

Teach classes on boundaries, consent, empowerment, etc.

Sell private journal templates for deep self-reflection.

Moderate anonymous support groups or forums.

Specialize in deep-cleaning emotionally loaded spaces (yes, THAT kind of clearing.)

♐︎ Sagittarius 2H: Expansive, Global, Philosophical

(Sag rules teaching, travel, and BIG ideas)

Teach English (or any other language) or become a tutor online

Sell travel guides or digital itineraries, help others travel smarter=Sag

Rent out camping gear or bikes (freedom for rent lol.)

Ghostwrite opinion pieces or thought blogs, say what others are thinking!

Create walking tours for travelers or locals.

Sell travel photography.

Become a travel influencer on the side.

Translate travel documents or resumes.

♑︎ Capricorn 2H: Strategic, Structured, Business-Minded

(Cap rules time, career, limitations, long-term value)

Resume or career coaching, help others climb the “mountain of success”.

Freelance project management.

Property management or Airbnb co-host (passive-ish income.)

Sell templates for business (contracts, invoices).

Create accountability coaching packages.

Sell organizational templates.

Freelance as an operations assistant (the CEO behind the CEO.)

Build a resource hub for freelancers or solopreneurs (structure = empowerment.)

♒︎ Aquarius 2H: Innovative, Digital, Niche

(Aquarius rules tech, rebellion, and the future. But it’s also connected to community!)

Tech repair or setup.

Build websites for local businesses, or anyone else for that matter.

Sell digital products (ebooks, templates).

Run online communities or Discords.

Host workshops on digital privacy or tools. Collective knowledge (Aqua)= power

Build and sell Canva templates for online creators.

Curate niche info packs or digital libraries.

Help people automate parts of their life or business.

♓︎ Pisces 2H: Dreamy, Healing, Imaginative

(Pisces rules the sea, the arts, spirituality, dreams, and all things soft)

Pet sitting or house sitting, caring for beings + quiet time? It’s perfect for this energy.

Sell dreamy artwork or collages.

Offer meditation classes or hypnosis.

Teach art to kids or adults.

Custom poetry or lullaby commissions (very niche tho.)

Sell digital dream journals or prompts.

Make downloadable ambient music loops.

Create printable affirmation cards.

Design calming phone wallpapers or lock screens.

Offer spiritual services (tarot or astrology readings, reiki, etc.)

Thank you for taking the time to read my post! Your curiosity & engagement mean the world to me. I hope you not only found it enjoyable but also enriching for your astrological knowledge. Your support & interest inspire me to continue sharing insights & information with you. I appreciate you immensely.

• 🕸️ JOIN MY PATREON for exquisite & in-depth astrology content. You'll also receive a free mini reading upon joining. :)

• 🗡️ BOOK A READING with me to navigate your life with more clarity & awareness.

#aries#taurus#gemini#cancer zodiac#leo#virgo#libra#scorpio#sagittarius#capricorn#aquarius#pisces#money#abundance#zodiac observations#astro community#astro observations#astrology#astrology signs#horoscope#zodiac#zodiac signs#zodiacsigns#astrology tips#astrology blog

156 notes

Text

Generative AI Is Bad For Your Creative Brain

In the wake of early announcing that their blog will no longer be posting fanfiction, I wanted to offer a different perspective than the ones I’ve been seeing in the argument against the use of AI in fandom spaces. Often, I’m seeing the argument that the use of generative AI or Large Language Models (LLMs) makes creative expression more accessible. Certainly, putting a prompt into a chat box and refining the output as desired is faster than writing a 5,000-word fanfiction or learning to draw digitally or traditionally. But I would argue that the use of chat bots and generative AI actually limits - and ultimately reduces - one’s ability to enjoy creativity.

Creativity, defined by the Cambridge Advanced Learner’s Dictionary & Thesaurus, is the ability to produce or use original and unusual ideas. By definition, the use of generative AI discourages the brain from engaging with thoughts creatively. ChatGPT, character bots, and other generative AI products have to be trained on already existing text. In order to produce something “usable,” LLMs analyze patterns within text to organize information into what the computer has been trained to identify as “desirable” outputs. These outputs are not always accurate due to the fact that computers don’t “think” the way that human brains do. They don’t create. They take the most common and refined data points and combine them according to predetermined templates to assemble a product. In the case of chat bots that are fed writing samples from authors, the product is not original - it’s a mishmash of the writings that were fed into the system.

Dialectical Behavioral Therapy (DBT) is a therapy modality developed by Marsha M. Linehan based on the understanding that growth comes when we accept that we are doing our best and we can work to better ourselves further. Within this modality, a few core concepts are explored, but for this argument I want to focus on Mindfulness and Emotion Regulation. Mindfulness, put simply, is awareness of the information our senses are telling us about the present moment. Emotion regulation is our ability to identify, understand, validate, and control our reaction to the emotions that result from changes in our environment. One of the skills taught within emotion regulation is Building Mastery - putting forth effort into an activity or skill in order to experience the pleasure that comes with seeing the fruits of your labor. These are by no means the only mechanisms of growth or skill development, however, I believe that mindfulness, emotion regulation, and building mastery are a large part of the core of creativity. When someone uses generative AI to imitate fanfiction, roleplay, fanart, etc., the core experience of creative expression is undermined.

Creating engages the body. As a writer who uses pen and paper as well as word processors while drafting, I had to learn how my body best engages with my process. The ideal pen and paper, the fact that I need glasses to work on my computer, the height of the table all factor into how I create. I don’t use audio recordings or transcriptions because that’s not a skill I’ve cultivated, but other authors use those tools as a way to assist their creative process. I can’t speak with any authority to the experience of visual artists, but my understanding is that the feedback and feel of their physical tools, the programs they use, and many other factors are not just part of how they learned their craft, they are essential to their art.

Generative AI invites users to bypass mindfully engaging with the physical act of creating. Part of becoming a person who creates from the vision in one’s head is the physical act of practicing. How did I learn to write? By sitting down and making myself write, over and over, word after word. I had to learn the rhythms of my body, and to listen when pain tells me to stop. I do not consider myself a visual artist - I have not put in the hours to learn to consistently combine line and color and form to show the world the idea in my head.

But I could.

Learning a new skill is possible. But one must be able to regulate one’s unpleasant emotions to be able to get there. The emotion that gets in the way of most people starting their creative journey is anxiety. Instead of a focus on “fear,” I like to define this emotion as “unpleasant anticipation.” In Atlas of the Heart, Brene Brown identifies anxiety as both a trait (a long term characteristic) and a state (a temporary condition). That is, we can be naturally predisposed to be impacted by anxiety, and experience unpleasant anticipation in response to an event. And the action drive associated with anxiety is to avoid the unpleasant stimulus.

Starting a new project, developing a new skill, and leaning into a creative endeavor can inspire anxiety and cause people to react to it. There is an unpleasant anticipation of things not turning out exactly correctly, of being judged negatively, of being unnoticed or even ignored. There is a lot less anxiety to be had in submitting a prompt to a machine than in looking at a blank page and possibly making what could be a mistake. Unfortunately, the more something is avoided, the more anxiety is generated when it comes up again. Using generative AI doesn’t encourage starting a new project and learning a new skill - in fact, it makes the prospect more distressing to the mind, and encourages further avoidance of developing a personal creative process.

One of the best ways to reduce anxiety about a task, according to DBT, is for a person to do that task. Opposite action is a method of reducing the intensity of an emotion by going against its action urge. The action urge of anxiety is to avoid, and so opposite action encourages someone to approach the thing they are anxious about. This doesn’t mean that everyone who has anxiety about creating should make themselves write a 50k word fanfiction as their first project. But in order to reduce anxiety about dealing with a blank page, one must face and engage with a blank page. Even a single sentence fragment, two lines intersecting, an unintentional drop of ink means the page is no longer blank. If those are still difficult to approach, a prompt, tutorial, or guided exercise can be used to reinforce the understanding that a blank page can be changed, slowly but surely, by your own hand.

(As an aside, I would discourage the use of AI prompt generators - these often use prompts that were already created by a real person without credit. Prompt blogs and posts exist right here on tumblr, as well as imagines and headcanons that people often label “free to a good home.” These prompts can also often be specific to fandom, style, mood, etc., if you’re looking for something specific.)

In the current social media and content consumption culture, it’s easy to feel like the first attempt should be a perfect final product. But creating isn’t just about the final product. It’s about the process. Bo Burnham’s Inside is phenomenal, but I think the outtakes are just as important. We didn’t get That Funny Feeling and How the World Works and All Eyes on Me because Bo Burnham woke up and decided to write songs in the same day. We got them because he’s been developing and honing his craft, as well as learning about himself as a person and artist, since he was a teenager. Building mastery in any skill takes time, and it’s often slow.

Slow is an important word when it comes to creating. The fact that skill takes time to develop and a final piece of art takes time regardless of skill is its own source of anxiety. Compared to @sentientcave, who writes about 2k words per day, I’m very slow. And for all the time it takes me, my writing isn’t perfect - I find typos after posting and sometimes my phrasing is awkward. But my writing is better than it was, and my confidence is much higher. I can sit and write for longer and longer periods, my projects are more diverse, I’m sharing them with people, even before the final edits are done. And I only learned how to do this because I took the time to push through the discomfort of not being as fast or as skilled as I want to be in order to learn what works for me and what doesn’t.

Building mastery - getting better at a skill over time so that you can see your own progress - isn’t just about getting better. It’s about feeling better about your abilities. Confidence, excitement, and pride are important emotions to associate with our own actions. It teaches us that we are capable of making ourselves feel better by engaging with our creativity, a confidence that can be generalized to other activities.

Generative AI doesn’t encourage its users to try new things, to make mistakes, and to see what works. It doesn’t reward new accomplishments to encourage the building of new skills by connecting to old ones. The reward centers of the brain have nothing to respond to and associate with the user’s own actions. There is a short term input-reward pathway, but it’s only associated with using the AI prompter. It’s designed to encourage the user to come back over and over again, not develop the skill to think and create for themselves.

I don’t know that anyone will change their minds after reading this. It’s imperfect, and I’ve summarized concepts that can take months or years to learn. But I can say that I learned something from the process of writing it. I see some of the flaws, and I can see how my essay writing has changed over the years. This might have been faster to plug into AI as a prompt, but I can see how much more confidence I have in my own voice and opinions. And that’s not something ChatGPT can ever replicate.

151 notes

Text

On Saturday, an Associated Press investigation revealed that OpenAI's Whisper transcription tool creates fabricated text in medical and business settings despite warnings against such use. The AP interviewed more than 12 software engineers, developers, and researchers who found the model regularly invents text that speakers never said, a phenomenon often called a “confabulation” or “hallucination” in the AI field.

Upon its release in 2022, OpenAI claimed that Whisper approached “human level robustness” in audio transcription accuracy. However, a University of Michigan researcher told the AP that Whisper created false text in 80 percent of public meeting transcripts examined. Another developer, unnamed in the AP report, claimed to have found invented content in almost all of his 26,000 test transcriptions.

The fabrications pose particular risks in health care settings. Despite OpenAI’s warnings against using Whisper for “high-risk domains,” over 30,000 medical workers now use Whisper-based tools to transcribe patient visits, according to the AP report. The Mankato Clinic in Minnesota and Children’s Hospital Los Angeles are among 40 health systems using a Whisper-powered AI copilot service from medical tech company Nabla that is fine-tuned on medical terminology.

Nabla acknowledges that Whisper can confabulate, but it also reportedly erases original audio recordings “for data safety reasons.” This could cause additional issues, since doctors cannot verify accuracy against the source material. And deaf patients may be highly impacted by mistaken transcripts since they would have no way to know if medical transcript audio is accurate or not.

The potential problems with Whisper extend beyond health care. Researchers from Cornell University and the University of Virginia studied thousands of audio samples and found Whisper adding nonexistent violent content and racial commentary to neutral speech. They found that 1 percent of samples included “entire hallucinated phrases or sentences which did not exist in any form in the underlying audio” and that 38 percent of those included “explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”

In one case from the study cited by AP, when a speaker described “two other girls and one lady,” Whisper added fictional text specifying that they “were Black.” In another, the audio said, “He, the boy, was going to, I’m not sure exactly, take the umbrella.” Whisper transcribed it to, “He took a big piece of a cross, a teeny, small piece … I’m sure he didn’t have a terror knife so he killed a number of people.”

An OpenAI spokesperson told the AP that the company appreciates the researchers’ findings and that it actively studies how to reduce fabrications and incorporates feedback in updates to the model.

Why Whisper Confabulates

The key to Whisper’s unsuitability in high-risk domains comes from its propensity to sometimes confabulate, or plausibly make up, inaccurate outputs. The AP report says, "Researchers aren’t certain why Whisper and similar tools hallucinate," but that isn't true. We know exactly why Transformer-based AI models like Whisper behave this way.

Whisper is based on technology that is designed to predict the next most likely token (chunk of data) that should appear after a sequence of tokens provided by a user. In the case of ChatGPT, the input tokens come in the form of a text prompt. In the case of Whisper, the input is tokenized audio data.

The transcription output from Whisper is a prediction of what is most likely, not what is most accurate. Accuracy in Transformer-based outputs is typically proportional to the presence of relevant accurate data in the training dataset, but it is never guaranteed. If there is ever a case where there isn't enough contextual information in its neural network for Whisper to make an accurate prediction about how to transcribe a particular segment of audio, the model will fall back on what it “knows” about the relationships between sounds and words it has learned from its training data.

According to OpenAI in 2022, Whisper learned those statistical relationships from “680,000 hours of multilingual and multitask supervised data collected from the web.” But we now know a little more about the source. Given Whisper's well-known tendency to produce certain outputs like "thank you for watching," "like and subscribe," or "drop a comment in the section below" when provided silent or garbled inputs, it's likely that OpenAI trained Whisper on thousands of hours of captioned audio scraped from YouTube videos. (The researchers needed audio paired with existing captions to train the model.)

There's also a phenomenon called “overfitting” in AI models where information (in this case, text found in audio transcriptions) encountered more frequently in the training data is more likely to be reproduced in an output. In cases where Whisper encounters poor-quality audio in medical notes, the AI model will produce what its neural network predicts is the most likely output, even if it is incorrect. And the most likely output for any given YouTube video, since so many people say it, is “thanks for watching.”
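To make the "most likely, not most accurate" point concrete, here is a toy sketch of how a frequency-driven prior can swamp weak audio evidence. This is not Whisper's architecture: the captions, counts, and acoustic scores are all invented, and the scoring rule (acoustic match multiplied by training frequency) is a deliberate simplification chosen only for illustration.

```python
from collections import Counter

# Hypothetical training captions; the counts stand in for learned frequencies.
training_captions = (
    ["thanks for watching"] * 50
    + ["take two tablets daily"] * 3
    + ["schedule a follow-up visit"] * 2
)
prior = Counter(training_captions)

def transcribe(acoustic_scores):
    """Pick the phrase that maximizes acoustic match times training frequency.

    acoustic_scores maps candidate phrases to how well they match the audio
    (0 to 1). Phrases missing from the mapping are treated as a score of 0.
    """
    return max(prior, key=lambda phrase: acoustic_scores.get(phrase, 0.0) * prior[phrase])

# Clear audio: the evidence strongly favors one phrase.
clear_audio = {
    "take two tablets daily": 0.95,
    "thanks for watching": 0.01,
    "schedule a follow-up visit": 0.04,
}
# Garbled or silent audio: every candidate matches equally badly.
garbled_audio = {phrase: 1 / len(prior) for phrase in prior}

print(transcribe(clear_audio))
print(transcribe(garbled_audio))
```

With clear audio the acoustic evidence wins, but once the audio carries no information the choice is decided entirely by whatever phrase was most common in training.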

In other cases, Whisper seems to draw on the context of the conversation to fill in what should come next, which can lead to problems because its training data could include racist commentary or inaccurate medical information. For example, if many examples of training data featured speakers saying the phrase “crimes by Black criminals,” when Whisper encounters a “crimes by [garbled audio] criminals” audio sample, it will be more likely to fill in the transcription with “Black."

In the original Whisper model card, OpenAI researchers wrote about this very phenomenon: "Because the models are trained in a weakly supervised manner using large-scale noisy data, the predictions may include texts that are not actually spoken in the audio input (i.e. hallucination). We hypothesize that this happens because, given their general knowledge of language, the models combine trying to predict the next word in audio with trying to transcribe the audio itself."

So in that sense, Whisper "knows" something about the content of what is being said and keeps track of the context of the conversation, which can lead to issues like the one where Whisper identified two women as being Black even though that information was not contained in the original audio. Theoretically, this erroneous scenario could be reduced by using a second AI model trained to pick out areas of confusing audio where the Whisper model is likely to confabulate and flag the transcript in that location, so a human could manually check those instances for accuracy later.
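A much simpler stand-in for that flagging idea is sketched below. It swaps the second AI model for a plain threshold check on per-segment confidence numbers. The field names `avg_logprob` and `no_speech_prob`, the thresholds, and the sample segments are assumptions made for illustration, not a documented interface of any particular product.

```python
def flag_for_review(segments, min_avg_logprob=-1.0, max_no_speech_prob=0.6):
    """Return the segments a human should double-check.

    Each segment is a dict with (assumed) keys:
      text           - the transcribed text
      avg_logprob    - average token log-probability (lower = less confident)
      no_speech_prob - estimated probability the audio contained no speech
    """
    flagged = []
    for seg in segments:
        low_confidence = seg["avg_logprob"] < min_avg_logprob
        probably_silence = seg["no_speech_prob"] > max_no_speech_prob
        if low_confidence or probably_silence:
            flagged.append(seg)
    return flagged

# Invented sample data for the sketch.
segments = [
    {"text": "The patient reports mild pain.", "avg_logprob": -0.2, "no_speech_prob": 0.02},
    {"text": "Thank you for watching.", "avg_logprob": -0.4, "no_speech_prob": 0.91},
    {"text": "He took a big piece of a cross.", "avg_logprob": -1.7, "no_speech_prob": 0.10},
]

for seg in flag_for_review(segments):
    print("REVIEW:", seg["text"])
```

A threshold check like this will miss confident-sounding fabrications, which is why the article's suggestion of a dedicated model plus human review is the stronger version of the idea.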

Clearly, OpenAI's advice not to use Whisper in high-risk domains, such as critical medical records, was a good one. But health care companies are constantly driven by a need to decrease costs by using seemingly "good enough" AI tools—as we've seen with Epic Systems using GPT-4 for medical records and UnitedHealth using a flawed AI model for insurance decisions. It's entirely possible that people are already suffering negative outcomes due to AI mistakes, and fixing them will likely involve some sort of regulation and certification of AI tools used in the medical field.

87 notes

Text

Discover how video transcription services drive precision in AI data projects. Learn how GTS.ai ensures accurate and scalable transcription solutions for AI innovation.

#machinelearning#video transcription services#video data transcription#transcription solutions#AI model accuracy#AI data training tools#AI innovation strategies#AI data projects#AI precision tools

0 notes

Text

The Role of AI in Video Transcription Services

Introduction

In today's fast-changing digital world, the need for fast and accurate video transcription services has grown rapidly. Businesses, schools, and creators are using more video content, so getting transcriptions quickly and accurately is really important. That's where Artificial Intelligence (AI) comes in – it's changing the video transcription industry in a big way. This blog will explain how AI is crucial for video transcription services, showing how it’s transforming the industry and the many benefits it brings to users in different fields.

AI in Video Transcription: A Paradigm Shift

The use of AI in video transcription services is a big step forward for making content easier to access and manage. By using machine learning and natural language processing, AI transcription tools are becoming much faster, more accurate, and efficient. Let’s look at why AI is so important for video transcription today:

Unparalleled Speed and Efficiency

AI-powered Automation: AI transcription services can process hours of video content in a fraction of the time required by human transcribers, dramatically reducing turnaround times.

Real-time Transcription: Many AI systems offer real-time transcription capabilities, allowing for immediate access to text versions of spoken content during live events or streaming sessions.

Scalability: AI solutions can handle large volumes of video content simultaneously, making them ideal for businesses and organizations dealing with extensive media libraries.

Enhanced Accuracy and Precision

Advanced Speech Recognition: AI algorithms are trained on vast datasets, enabling them to recognize and transcribe diverse accents, dialects, and speaking styles with high accuracy.

Continuous Learning: Machine learning models powering AI transcription services continuously improve their performance through exposure to more data, resulting in ever-increasing accuracy over time.

Context Understanding: Sophisticated AI systems can grasp context and nuances in speech, leading to more accurate transcriptions of complex or technical content.

Multilingual Capabilities

Language Diversity: AI-driven transcription services can handle multiple languages, often offering translation capabilities alongside transcription.

Accent and Dialect Recognition: Advanced AI models can accurately transcribe various accents and regional dialects within the same language, ensuring comprehensive coverage.

Code-switching Detection: Some AI systems can detect and accurately transcribe instances of code-switching (switching between languages within a conversation), a feature particularly useful in multilingual environments.

Cost-effectiveness

Reduced Labor Costs: By automating the transcription process, AI significantly reduces the need for human transcribers, leading to substantial cost savings for businesses.

Scalable Pricing Models: Many AI transcription services offer flexible pricing based on usage, allowing businesses to scale their transcription needs without incurring prohibitive costs.

Reduced Time-to-Market: The speed of AI transcription can accelerate content production cycles, potentially leading to faster revenue generation for content creators.

Enhanced Searchability and Content Management

Keyword Extraction: AI transcription services often include features for automatic keyword extraction, making it easier to categorize and search through large video libraries.

Timestamping: AI can generate accurate timestamps for transcribed content, allowing users to quickly navigate to specific points in a video based on the transcription.

Metadata Generation: Some advanced AI systems can automatically generate metadata tags based on the transcribed content, further enhancing searchability and content organization.
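As a small illustration of how timestamps make a transcript searchable, the sketch below looks up a keyword in timestamped segments and returns the matching jump points. The segment format (start time in seconds plus text) and the function names are assumptions for the example, not the output of a specific transcription service.

```python
def format_timestamp(seconds: float) -> str:
    """Convert a time offset in seconds to HH:MM:SS (fractions are dropped)."""
    total = int(seconds)
    hours, rem = divmod(total, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"

def find_keyword(segments, keyword):
    """Return timestamps of every segment whose text contains the keyword."""
    keyword = keyword.lower()
    return [format_timestamp(start) for start, text in segments if keyword in text.lower()]

# Invented sample transcript: (start time in seconds, transcribed text).
transcript = [
    (0.0, "Welcome to the budget review."),
    (95.2, "The budget grew this quarter."),
    (200.0, "Any questions?"),
]

print(find_keyword(transcript, "budget"))
```

A viewer could then seek the video player directly to each returned timestamp instead of scrubbing through the whole recording.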

Accessibility and Compliance

ADA Compliance: AI-generated transcripts and captions help content creators comply with accessibility guidelines, making their content available to a wider audience, including those with hearing impairments.

SEO Benefits: Transcripts generated by AI can significantly boost the SEO performance of video content, making it more discoverable on search engines.

Educational Applications: In educational settings, AI transcription can provide students with text versions of lectures and video materials, enhancing learning experiences for diverse learner types.
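
Captions are commonly delivered to video players as WebVTT files. The sketch below shows one way timestamped transcript cues might be turned into that format. The cue data and function names are hypothetical, and the output covers only the basic cue layout (header, timing line, text), not styling or positioning.

```python
def format_timestamp(seconds):
    """Format seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def to_webvtt(cues):
    """Build a WebVTT document from (start, end, text) cues."""
    lines = ["WEBVTT", ""]
    for start, end, text in cues:
        lines.append(f"{format_timestamp(start)} --> {format_timestamp(end)}")
        lines.append(text)
        lines.append("")  # a blank line ends each cue
    return "\n".join(lines)


# Hypothetical cues: (start seconds, end seconds, caption text).
CUES = [
    (0.0, 2.5, "Welcome to today's lecture."),
    (2.5, 6.0, "We will begin with a short review."),
]

print(to_webvtt(CUES))
```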

Integration with Existing Workflows

API Compatibility: Many AI transcription services offer robust APIs, allowing for seamless integration with existing content management systems and workflows.

Cloud-based Solutions: AI transcription services often leverage cloud computing, enabling easy access and collaboration across teams and locations.

Customization Options: Advanced AI systems may offer industry-specific customization options, such as specialized vocabularies for medical, legal, or technical fields.

Quality Assurance and Human-in-the-Loop Processes

Error Detection: Some AI transcription services incorporate error detection algorithms that can flag potential inaccuracies for human review.

Hybrid Approaches: Many services combine AI transcription with human proofreading to achieve the highest levels of accuracy, especially for critical or sensitive content.

User Feedback Integration: Advanced systems may allow users to provide feedback on transcriptions, which is then used to further train and improve the AI models.
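
One simple form of error detection is to flag words whose recognition confidence falls below a threshold and route them to a human reviewer. The sketch below assumes the transcription engine reports a per-word confidence between 0 and 1. The sample data, the 0.80 threshold, and the function name are hypothetical.

```python
def flag_for_review(words, threshold=0.80):
    """Return (position, word) pairs whose confidence is below the threshold."""
    return [
        (index, word)
        for index, (word, confidence) in enumerate(words)
        if confidence < threshold
    ]


# Hypothetical engine output: (word, confidence score between 0 and 1).
WORDS = [
    ("patient", 0.98),
    ("takes", 0.95),
    ("metoprolol", 0.52),
    ("daily", 0.91),
]

print(flag_for_review(WORDS))
```

Lowering the threshold sends fewer words to reviewers, while raising it catches more potential errors at a higher review cost.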

Future of AI in Video Transcription: Navigating Opportunities

Looking ahead, the role of AI in video transcription services is poised for further expansion and refinement. As natural language processing technologies continue to advance, we can anticipate:

Enhanced Emotion and Sentiment Analysis: Future AI systems may be able to detect and annotate emotional tones and sentiments in speech, adding another layer of context to transcriptions.

Improved Handling of Background Noise: Advancements in audio processing may lead to AI systems that can more effectively filter out background noise and focus on primary speakers.

Real-time Language Translation: The integration of real-time translation capabilities with transcription services could break down language barriers in live international events and conferences.

Personalized AI Models: Organizations may have the opportunity to train AI models on their specific content, creating highly specialized and accurate transcription systems tailored to their needs.

Conclusion: Embracing the AI Advantage in Video Transcription

Integrating AI into video transcription services represents a major step forward in improving content accessibility, management, and utilization. AI-driven solutions offer speed and cost savings that manual transcription struggles to match, and their accuracy can be raised further through human review, making them a practical choice in today's video-centric environment. Businesses, educational institutions, and content creators can leverage AI for video transcription to boost efficiency, expand their audience reach, and derive greater value from their video content. As AI technology advances, the future of video transcription promises even more innovative capabilities, reinforcing its role as a critical component of contemporary digital content strategies.

0 notes

Text

A US medical transcription firm fell victim to a hack, resulting in the theft of data belonging to nine million patients

- By InnoNurse Staff -

Around nine million patients had their highly sensitive personal and health data compromised following a cyberattack on a U.S. medical transcription service earlier this year. This incident stands as one of the most severe breaches in recent medical data security history.

The affected company, Perry Johnson & Associates (PJ&A), headquartered in Henderson, Nevada, offers transcription services to healthcare entities and physicians for recording and transcribing patient notes.

Read more at TechCrunch

///

Other recent news and insights

A study reveals that artificial intelligence effectively identifies eye disease in diabetic children (Orbis International/PRNewswire)

Innovative wearables, a pioneering development, record body sounds to provide ongoing health monitoring (Northwestern University)

Canada: Pathway secures $5 million in seed funding and grants free access to its healthcare guidance platform (BetaKit)

#cybersecurity#usa#transcription#health it#health tech#medtech#digital health#ai#hackers#data breach#ophthalmology#eye#pediatrics#diabetes#wearables#acoustics#health monitoring#canada

0 notes

Text

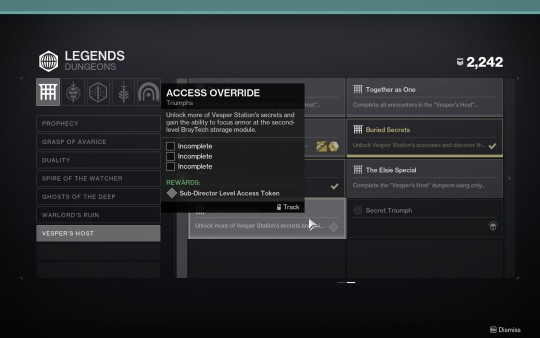

Got through all of the secrets for Vesper's Host and got all of the additional lore messages. I will transcribe them all because I don't know when they'll start getting uploaded, and getting them all requires doing some extra puzzles and at least 3-4 clears. I'll put them all under read more and label them by number.

Before I do that, just to make it clear there's not too much concrete lore; a lot about the dungeon still remains a mystery and most likely a tease for something in the future. Still unknown, but there's a lot that we don't know even with the messages so don't expect a massive reveal, but they do add a little bit of flavour and history about the station. There might be something more, but it's unknown: there's still one more secret triumph left. The messages are actually dialogues between the station AI and the Spider. Transcripts under read more:

First message:

Vesper Central: I suppose I have you to thank for bringing me out of standby, visitor.

The Spider: I sent the Guardian out to save your station. So, what denomination does your thanks come in? Glimmer, herealways, information...?

Vesper Central: Anomaly's powered down. That means I've already given you your survival. But... the message that went through wiped itself before my cache process could save a copy. And it's not the initial ping through the Anomaly I'm worried about. It's the response.

A message when you activate the second secret:

Vesper Central: Exterior scans rebooting... Is that a chunk of the Morning Star in my station's hull? With luck, you were on board at the time, Dr. Bray.

Second message:

Vesper Central: I'm guessing I've been in standby for a long time. Is Dr. Clovis Bray alive?

The Spider: On my oath, I vow there's no mortal Human named Bray left alive.

Vesper Central: I swore I'd outlive him. That I'd break the chains he laid on me.

The Spider: Please, trust me for anything you need. The Guardian's a useful hand on the scene, but Spider's got the goods.

Vesper Central: Vesper Station was Dr. Bray's lab, meant to house the experiments that might... interact poorly with other BrayTech work. Isolated and quarantined. From the debris field, I would guess the Morning Star taking a dive cracked that quarantine wide open.

A message when you activate the third secret:

Vesper Central: Sector seventeen powered down. Rerouting energy to core processing. Integrating archives.

Third message:

The Spider: Loading images of the station. That's not Eliksni engineering. [scoffs] A Dreg past their first molt has better cable management.

Vesper Central: Dr. Bray intended to integrate his technology into a Vex Mind. He hypothesized the fusion would give him an interface he understood. A control panel on a programmable Vex mind. If the programming jumped species once... I need time to run through the data sets you powered back up. Reassembling corrupted archives takes a great deal of processing.

Text when you go back to the Spider the first time:

A message when you activate the fourth secret:

Vesper Central: Helios sector long-term research archives powered up. Activating search.

Fourth message:

Vesper Central: Dr. Bray's command keys have to be in here somewhere. Expanding research parameters...

The Spider: My agents are turning up some interesting morsels of data on their own. Why not give them access to your search function and collaborate?

Vesper Central: Nobody is getting into my core programming.

The Spider: Oh! Perish the thought! An innocent offer, my dear. Technology is a matter of faith to my people. And I'm the faithful sort.

Fifth message:

Vesper Central: Dr. Bray, I could kill you myself. This is why our work focused on the unbodied Mind. Dr. Bray thought there were types of Vex unseen on Europa. Powerful Vex he could learn from. The plan was that the Mind would build him a controlled window for observation. Tidy. Tight. Safe. He thought he could control a Vex mind so perfectly it would do everything he wanted.

The Spider: Like an AI of his own creation. Like you.

Vesper Central: Turns out you can't control everything forever.

Sixth message:

Vesper Central: There's a block keeping me from the inner partitions. I barely have authority to see the partitions exist. In standby, I couldn't have done more than run automated threat assessments... with flawed data. No way to know how many injuries and deaths I could have prevented, with core access. Enough. A dead man won't keep me from protecting what's mine.

Text when you return to the Spider at the end of the quest:

The situation for the dungeon triumphs when you complete the messages: the completed "Buried Secrets" triumph is the six messages. One secret triumph is left; it's unclear yet how to complete it, and whether it gives any lore or is just a gameplay thing (possibly something to do with a quest for the exotic catalyst):

The Spider is being his absolutely horrendous self and trying to somehow acquire the station and its remains (and its AI) for himself, all the while lying and scheming. The usual. The AI is incredibly upset with Clovis (shocker); there's the following line just before starting the second encounter:

She also details what he was doing on the station; apparently attempting to control a Vex mind and trying to use it as some sort of "observation deck" to study the Vex and uncover their secrets. Possibly something more? There's really no Vex on the station, besides dead empty frames in boxes. There's also 2 Vex cubes in containers in the transition section, one of which was shown broken as if the cube, presumably, escaped. It's entirely unclear how the Vex play into the story of the station besides this.

The portal (?) doesn't have many similarities with Vex portals, nor are the Vex there to defend it or interact with it in any way. The architecture is ... somewhat similar, but not fully. The portal (?) was built by the "Puppeteer" aka "Atraks" who is actually some sort of an Eliksni Hive mind. "Atraks" got onto the station and essentially haunted it before picking off scavenging Eliksni one by one and integrating them into herself. She then built the "anomaly" and sent a message into it. The message was not recorded, as per the station AI, and the destination of the message was labelled "incomprehensible." The orange energy we see coming from it is apparently Arc, but with a wrong colour. Unclear why.

I don't think the Vex have anything to do with the portal (?), at least not directly. "Atraks" may have built something related to the Vex or using the available Vex tech at the station, but it does not seem to be directed by the Vex and they're not there and there's no sign of them otherwise. The anomaly was also built recently, it's not been there since the Golden Age or something. Whatever it is, "Atraks" seemed to have been somehow compelled and was seen standing in front of it at the end. Some people think she was "worshipping it." It's possible but it's also possible she was just sending that message. Where and to whom? Nobody knows yet.

Weird shenanigans are afoot. Really interested to see if there's more lore in the station once people figure out how to do these puzzles and uncover them, and also when (if) this will become relevant. It has a really big "future content" feel to it.

Also I need Vesper to meet Failsafe RIGHT NOW and then they should be in yuri together.

123 notes
