#AI agent frameworks

Text

Top 10 AI Agent Frameworks to Build Multi-Agent Enterprise Apps in 2025

Explore the AI frameworks leading 2025’s automation revolution. Bitcot’s expert guide to the top 10 AI agent tools shows how businesses can deploy intelligent agents to cut costs, increase efficiency, and transform operations. Ideal for companies embracing next-generation digital transformation. Read the full story.

0 notes

Text

Artificial intelligence (AI) is reshaping the aviation industry, with AI agents playing a pivotal role in optimizing operations, enhancing passenger experiences, and improving safety. AI applications such as predictive maintenance, air traffic management, fuel efficiency, and pilot training are revolutionizing key areas of aviation. This transformation is driven by advanced AI frameworks like LangChain, CrewAI, and AutoGen, which simplify development processes for AI-driven solutions. Despite challenges like data security and system integration, AI agents promise a future of more efficient and safer air travel. The aviation industry is just beginning to tap into AI's vast potential, setting the stage for continued innovation.

1 note

Text

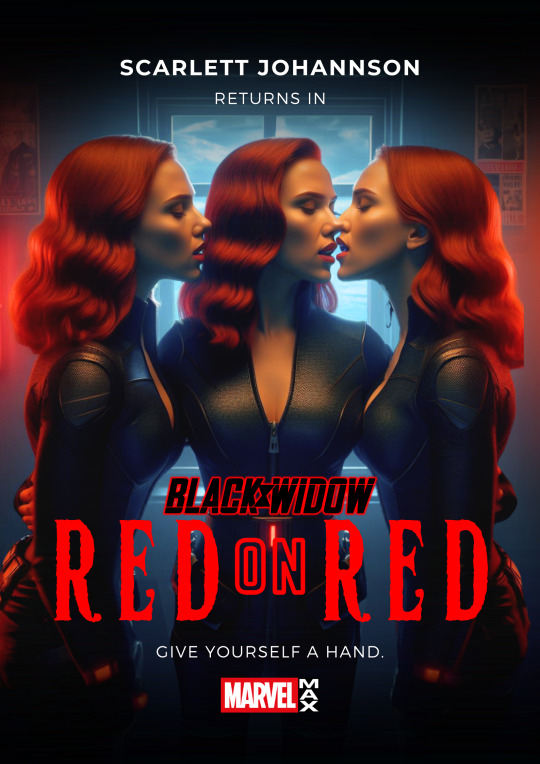

Natasha Romanoff didn't bat an eye at experimental new tech – it was part of the job, after all. So when the SHIELD labrats asked her to test-drive a new holographic simulation... "Why the hell not," was her quick and quippy reply. It was an early developmental prototype of a platform called the Framework, designed by some young SHIELD recruit as a consequence-free space for training simulations.

Seemed innocent enough to give it a whirl.

And again, for Nat, when the training went off the rails and she found herself trapped in an unstable simulated reality... she wasn't surprised. The techs in the surface world would bring her back eventually – all she had to do was stay sane in this troublesome virtual world in the meantime.

As the system continued to glitch out and threw new bugs her way, she was surprised to realize that not all of them were dangerous. When the program duplicated her, that's when she raised her first eyebrow. Now, having been tripled, Nat started to worry.

These other Nat simulations seemed pretty laid back, though, despite her initial concerns. And trapped in a room with three of her, all eager to pass the time... Natasha found herselves game for another type of... experiment.

* * * * *

I was really happy with my first Nat attempt – Love, Natasha – but couldn't help but do more for our girl. I wanted to really give her Avengers-era character a chance to shine 😈.

#natasha romanoff#fake movie poster#selfcest#wlw#ai art#ai artwork#ai generated#ai image#mcu#mcu headcanons#scarlett johansson#natasha mcu#natasha romanov#natasha poly#natasha x reader#black widow#avengers#agents of shield#framework#ai fanart

77 notes

Text

Any AI Agent Can Talk. Few Can Be Trusted

New Post has been published on https://thedigitalinsider.com/any-ai-agent-can-talk-few-can-be-trusted/

The need for AI agents in healthcare is urgent. Across the industry, overworked teams are inundated with time-intensive tasks that hold up patient care. Clinicians are stretched thin, payer call centers are overwhelmed, and patients are left waiting for answers to immediate concerns.

AI agents can help by filling profound gaps, extending the reach and availability of clinical and administrative staff and reducing burnout of health staff and patients alike. But before we can do that, we need a strong basis for building trust in AI agents. That trust won’t come from a warm tone of voice or conversational fluency. It comes from engineering.

Even as interest in AI agents skyrockets and headlines trumpet the promise of agentic AI, healthcare leaders – accountable to their patients and communities – remain hesitant to deploy this technology at scale. Startups are touting agentic capabilities that range from automating mundane tasks like appointment scheduling to high-touch patient communication and care. Yet most have not proven that these engagements are safe.

Many of them never will.

The reality is, anyone can spin up a voice agent powered by a large language model (LLM), give it a compassionate tone, and script a conversation that sounds convincing. There are plenty of platforms like this hawking their agents in every industry. Their agents might look and sound different, but all of them behave the same – prone to hallucinations, unable to verify critical facts, and missing mechanisms that ensure accountability.

This approach – building an often too-thin wrapper around a foundational LLM – might work in industries like retail or hospitality, but will fail in healthcare. Foundational models are extraordinary tools, but they’re largely general-purpose; they weren’t trained specifically on clinical protocols, payer policies, or regulatory standards. Even the most eloquent agents built on these models can drift into hallucinatory territory, answering questions they shouldn’t, inventing facts, or failing to recognize when a human needs to be brought into the loop.

The consequences of these behaviors aren’t theoretical. They can confuse patients, interfere with care, and result in costly human rework. This isn’t an intelligence problem. It’s an infrastructure problem.

To operate safely, effectively, and reliably in healthcare, AI agents need to be more than just autonomous voices on the other end of the phone. They must be operated by systems engineered specifically for control, context, and accountability. From my experience building these systems, here’s what that looks like in practice.

Response control can render hallucinations non-existent

AI agents in healthcare can’t just generate plausible answers. They need to deliver the correct ones, every time. This requires a controllable “action space” – a mechanism that allows the AI to understand and facilitate natural conversation, but ensures every possible response is bounded by predefined, approved logic.

With response control parameters built in, agents can only reference verified protocols, pre-defined operating procedures, and regulatory standards. The model’s creativity is harnessed to guide interactions rather than improvise facts. This is how healthcare leaders can ensure the risk of hallucination is eliminated entirely – not by testing in a pilot or a single focus group, but by designing the risk out on the ground floor.
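To make the idea of a bounded "action space" concrete, here is a minimal Python sketch. It assumes a hypothetical design in which the model only chooses an intent label (stubbed below by a keyword matcher, `classify_intent`) and every reply the agent can speak comes from a pre-approved table; anything unrecognized escalates to a human. The intents, reply texts, and function names are illustrative and are not taken from the author's system.

```python
from typing import Optional

# Hypothetical pre-approved responses: the only sentences the agent may say.
APPROVED_RESPONSES = {
    "schedule_appointment": "I can help you book an appointment. What day works best for you?",
    "office_hours": "The clinic is open Monday through Friday, 8 a.m. to 5 p.m.",
}

ESCALATION_MESSAGE = "Let me connect you with a member of our staff."


def classify_intent(utterance: str) -> Optional[str]:
    """Stand-in for an LLM intent classifier (hypothetical).

    In a real system a model would map free-form speech to one of the
    approved intent labels; here simple keyword matching plays that role.
    """
    text = utterance.lower()
    if "appointment" in text or "book" in text:
        return "schedule_appointment"
    if "open" in text or "hours" in text:
        return "office_hours"
    return None


def respond(utterance: str) -> str:
    """Return a reply drawn only from the approved table, or escalate."""
    intent = classify_intent(utterance)
    # The model picks *which* approved reply to use; it never writes the
    # reply text itself, so it cannot add facts that are not in the table.
    if intent in APPROVED_RESPONSES:
        return APPROVED_RESPONSES[intent]
    return ESCALATION_MESSAGE


print(respond("Can I book an appointment for Tuesday?"))
print(respond("Is it safe to double my insulin dose?"))  # escalates to a human
```

The sketch only shows the shape of the constraint; how reliably a real system stays inside it depends on how the classifier and the approved content are built and tested.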

Specialized knowledge graphs can ensure trusted exchanges

The context of every healthcare conversation is deeply personal. Two people with type 2 diabetes might live in the same neighborhood and fit the same risk profile, yet their eligibility for a specific medication can vary based on their medical history, their doctors’ treatment guidelines, their insurance plans, and formulary rules.

AI agents not only need access to this context, but they need to be able to reason with it in real time. A specialized knowledge graph provides that capability. It’s a structured way of representing information from multiple trusted sources that allows agents to validate what they hear and ensure the information they give back is both accurate and personalized. Agents without this layer might sound informed, but they’re really just following rigid workflows and filling in the blanks.
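As a rough illustration of "reasoning with context" over a knowledge graph, here is a minimal Python sketch that stores facts as subject-relation-object triples and checks one patient/drug pair against them. It mirrors the two-patient example above: both patients have type 2 diabetes, but their history and plan differ. Every entity (`patient:A`, `drug:X`, `plan:gold`) and rule here is hypothetical, not real clinical or formulary data, and a production knowledge graph would be far richer.

```python
from collections import defaultdict

# A tiny in-memory knowledge graph stored as (subject, relation, object) triples.
# All entities and rules below are hypothetical examples, not clinical guidance.
TRIPLES = [
    ("patient:A", "has_condition", "type_2_diabetes"),
    ("patient:A", "enrolled_in", "plan:gold"),
    ("patient:B", "has_condition", "type_2_diabetes"),
    ("patient:B", "has_condition", "chronic_kidney_disease"),
    ("patient:B", "enrolled_in", "plan:basic"),
    ("plan:gold", "covers", "drug:X"),
    ("drug:X", "indicated_for", "type_2_diabetes"),
    ("drug:X", "contraindicated_with", "chronic_kidney_disease"),
]


def build_index(triples):
    """Index triples as subject -> relation -> set of objects."""
    index = defaultdict(lambda: defaultdict(set))
    for subject, relation, obj in triples:
        index[subject][relation].add(obj)
    return index


def check_eligibility(index, patient, drug):
    """Return (eligible, reasons) by walking the graph for one patient/drug pair."""
    reasons = []
    conditions = index[patient]["has_condition"]
    plans = index[patient]["enrolled_in"]

    if not conditions & index[drug]["indicated_for"]:
        reasons.append("no matching indication")
    if conditions & index[drug]["contraindicated_with"]:
        reasons.append("contraindicated condition on record")
    if not any(drug in index[plan]["covers"] for plan in plans):
        reasons.append("not covered by patient's plan")
    return (not reasons, reasons)


graph = build_index(TRIPLES)
print(check_eligibility(graph, "patient:A", "drug:X"))  # (True, [])
print(check_eligibility(graph, "patient:B", "drug:X"))
```

Returning the reasons alongside the yes/no answer is a design choice: it lets an agent explain, or hand off, exactly why an answer differs between two otherwise similar patients.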

Robust review systems can evaluate accuracy

A patient might hang up with an AI agent and feel satisfied, but the work for the agent is far from over. Healthcare organizations need assurance that the agent not only produced correct information, but understood and documented the interaction. That’s where automated post-processing systems come in.

A robust review system should evaluate each and every conversation with the same fine-tooth-comb level of scrutiny a human supervisor with all the time in the world would bring. It should be able to identify whether the response was accurate, ensure the right information was captured, and determine whether or not follow-up is required. If something isn’t right, the agent should be able to escalate to a human, but if everything checks out, the task can be checked off the to-do list with confidence.
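The three checks described above (accuracy, captured information, follow-up) can be sketched as a small post-call review step. This is a minimal Python illustration under stated assumptions: the required field names, the `CallRecord` shape, and the exact-string comparison against a set of verified facts are all hypothetical simplifications, not how any particular product works.

```python
from dataclasses import dataclass, field

# Fields the (hypothetical) workflow requires the agent to capture on every call.
REQUIRED_FIELDS = ("patient_id", "reason_for_call", "callback_number")


@dataclass
class CallRecord:
    """Structured summary of one conversation, produced after the call ends."""
    captured: dict
    statements: list  # facts the agent told the caller
    follow_up_requested: bool = False


@dataclass
class Review:
    accurate: bool
    missing_fields: list = field(default_factory=list)
    needs_follow_up: bool = False

    @property
    def escalate(self) -> bool:
        # Any failed check routes the call to a human reviewer.
        return (not self.accurate) or bool(self.missing_fields) or self.needs_follow_up


def review_call(record: CallRecord, verified_facts: set) -> Review:
    """Check accuracy, completeness, and follow-up for a single call."""
    # Simplification: a statement counts as accurate only if it exactly
    # matches a verified fact.
    accurate = all(statement in verified_facts for statement in record.statements)
    missing = [name for name in REQUIRED_FIELDS if not record.captured.get(name)]
    return Review(accurate=accurate, missing_fields=missing,
                  needs_follow_up=record.follow_up_requested)


facts = {"The clinic opens at 8 a.m."}
ok_call = CallRecord(
    captured={"patient_id": "123", "reason_for_call": "hours", "callback_number": "555-0100"},
    statements=["The clinic opens at 8 a.m."],
)
bad_call = CallRecord(
    captured={"patient_id": "456", "reason_for_call": "refill"},
    statements=["Your refill was approved."],
)
print(review_call(ok_call, facts).escalate)   # False: task can be closed
print(review_call(bad_call, facts).escalate)  # True: send to a human
```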

Beyond these three foundational elements required to engineer trust, every agentic AI infrastructure needs a robust security and compliance framework that protects patient data and ensures agents operate within regulated bounds. That framework should include strict adherence to common industry standards and regulations like SOC 2 and HIPAA, but should also have processes built in for bias testing, protected health information redaction, and data retention.
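To show what protected health information redaction means at the simplest level, here is a toy Python sketch that replaces a few identifier formats in a transcript with placeholders before it is stored. The patterns assume US-style phone, date, and Social Security number formats; real PHI redaction covers many more identifier types and generally needs more than regular expressions, so treat this only as an illustration of the idea.

```python
import re

# Toy patterns for a few identifier formats (assumed US-style formats).
# Insertion order matters: phone numbers are replaced before SSNs.
PHI_PATTERNS = {
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Call me at 555-867-5309 about my 03/14/2024 visit."))
# Call me at [PHONE] about my [DATE] visit.
```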

These security safeguards don’t just check compliance boxes. They form the backbone of a trustworthy system that can ensure every interaction is managed at a level patients and providers expect.

The healthcare industry doesn’t need more AI hype. It needs reliable AI infrastructure. In the case of agentic AI, trust won’t be earned as much as it will be engineered.

#agent#Agentic AI#agents#ai#ai agent#AI AGENTS#AI Infrastructure#appointment scheduling#approach#autonomous#Bias#Building#burnout#call Centers#clinical#communication#compliance#creativity#data#diabetes#Drift#Engineer#engineering#Facts#focus#form#framework#Graph#Hallucination#hallucinations

0 notes

Text

What Are Agentic AI Frameworks and How Do They Power Autonomous Systems?

Agentic AI frameworks are at the forefront of next-gen AI, enabling systems to act autonomously with human-like decision-making capabilities. These frameworks are designed to allow AI agents to perceive their environment, plan actions, execute tasks, and even adapt over time. In this blog, we explore what agentic AI frameworks are and how they power autonomous systems, helping you understand why they are key to the future of artificial intelligence.

We’ve compiled a detailed list of agentic AI frameworks that highlights some of the most impactful tools currently available. From AutoGPT to BabyAGI and LangChain, you’ll find examples of agentic AI frameworks used in real-world applications such as automated customer service, research agents, robotic control, and multi-agent collaboration. Our post also includes a comparison of agentic AI frameworks, so you can see the differences in capabilities, flexibility, and use cases.

Whether you're a researcher or a developer, you'll also find the top agentic AI frameworks that stand out in terms of scalability, modularity, and developer support. Many of these frameworks are also open source, making them accessible for experimentation and deployment in your own AI projects. You’ll get insights into GitHub repositories, documentation strength, and community support for each framework.
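The perceive, plan, act, and adapt cycle described above can be sketched as a plain loop. This is a framework-agnostic Python illustration, not the API of AutoGPT, BabyAGI, LangChain, or any other tool: in those frameworks an LLM typically does the planning, while here a hard-coded rule stands in for it, and the `Environment`/`Agent` classes and the ticket example are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """A toy environment: a list of support tickets waiting to be handled."""
    open_tickets: list


@dataclass
class Agent:
    memory: list = field(default_factory=list)

    def perceive(self, env: Environment):
        # Observe the current state of the environment.
        return list(env.open_tickets)

    def plan(self, observation):
        # Decide on the next action; here, handle the oldest ticket first.
        if not observation:
            return None
        return ("resolve", observation[0])

    def act(self, env: Environment, action):
        # Execute the action, changing the environment.
        _, ticket = action
        env.open_tickets.remove(ticket)
        return f"resolved {ticket}"

    def adapt(self, result):
        # Record outcomes so later planning could use them (kept minimal here).
        self.memory.append(result)


def run(agent: Agent, env: Environment, max_steps: int = 10):
    """Repeat perceive -> plan -> act -> adapt until done or out of steps."""
    for _ in range(max_steps):
        observation = agent.perceive(env)
        action = agent.plan(observation)
        if action is None:  # nothing left to do
            break
        result = agent.act(env, action)
        agent.adapt(result)
    return agent.memory


print(run(Agent(), Environment(open_tickets=["T1", "T2", "T3"])))
# ['resolved T1', 'resolved T2', 'resolved T3']
```

The `max_steps` cap is a common safeguard in agent loops so that a planner that never reports "done" cannot run forever.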

1 note

Text

From AI to DPI, Google unveils new initiatives for India market

Tech giant Google on Thursday announced several key initiatives for the India market, including an open-source ‘AI Agent Framework,’ more local data storage options, a digital public infrastructure (DPI) model, clean energy partnerships and a new Google Safety Engineering Center (GSEC) in the country next year.

Source: bhaskarlive.in

0 notes

Text

Procurement didn't start the fire, Gartner did?

Did Gartner create the FUD that led to GenAI initiative failures?

Ryan Backman, Senior Account Executive @ Gartner: Gartner predicts that by 2027, 60% of organizations will fail to realize the expected value of their AI use cases due to incohesive ethical governance frameworks. Learn how proactive CDAOs are prioritizing AI-ready data governance. Gartner for IT |…

#agent-based model#AI#AI adoption#equation-based model#FUD#Gartner#genai#high-tech#incohesive ethical governance frameworks#procurement

0 notes

Text

Discover the power of Mora, an innovative multi-agent framework that’s reshaping the landscape of video generation. Experience how Mora mimics and extends the capabilities of OpenAI's Sora, marking a new era in open-source AI.

#Mora#AI#OpenSourceai#Sora#videoGeneration#text2video#Image2Video#Video2video#multi-agent-framework#MicrosoftResearch#openai#artificial intelligence#open source#ai video generator

0 notes

Text

There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an ai image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from Star Trek or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most of the technology people call AI.)
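A tiny example of what "discovering" an algorithm from data means: instead of writing the Celsius-to-Fahrenheit formula by hand, the program below estimates it from example pairs using ordinary least squares. This is the simplest possible case (linear regression); the systems people call AI use vastly larger models, but the principle of estimating parameters from examples rather than hand-coding rules is the same.

```python
# Example pairs of (celsius, fahrenheit). We never write the conversion
# formula; the program estimates it from the data.
data = [(-40.0, -40.0), (0.0, 32.0), (37.0, 98.6), (100.0, 212.0)]


def fit_line(points):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(data)
print(round(slope, 2), round(intercept, 2))  # 1.8 32.0
```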

Language model (LM, or LLM for a large language model): is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)
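To show what "probabilities of a series of words" means, here is a toy bigram language model in Python: it counts which word follows which in a tiny made-up corpus and multiplies those probabilities together. Models like ChatGPT use neural networks over tokens and much longer contexts rather than word-pair counts, so this only illustrates the underlying idea.

```python
from collections import Counter, defaultdict

corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat ate the fish",
]

# Count how often each word follows each previous word.
# "<s>" marks the start of a sentence.
bigram_counts = defaultdict(Counter)
for sentence in corpus:
    words = ["<s>"] + sentence.split()
    for prev, word in zip(words, words[1:]):
        bigram_counts[prev][word] += 1


def next_word_probability(prev: str, word: str) -> float:
    """P(word | prev), estimated from counts (0.0 for unseen pairs)."""
    total = sum(bigram_counts[prev].values())
    return bigram_counts[prev][word] / total if total else 0.0


def sentence_probability(sentence: str) -> float:
    """Probability of a whole sentence as a product of bigram probabilities."""
    words = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, word in zip(words, words[1:]):
        prob *= next_word_probability(prev, word)
    return prob


print(next_word_probability("the", "cat"))  # "cat" is 2 of the 6 words after "the"
print(sentence_probability("the cat sat on the mat"))
```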

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)
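The "zero-sum game" between the two networks is usually written as the minimax objective from the original GAN paper (Goodfellow et al., 2014). Here $D(x)$ is the discriminator's estimated probability that $x$ is a real sample, $G(z)$ is the generator's output for random noise $z$, and the generator tries to minimize the same value the discriminator tries to maximize:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$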

Diffusion models: Models that learn the probability distribution of a given dataset so they can generate new samples from it. In image generation, a neural network is trained to remove Gaussian noise that has been added to images. After training is complete, it can be used for image generation by starting with an image of pure random noise and denoising it step by step. (This is the more common technology behind AI images, including DALL-E and Stable Diffusion. I added this one to the post afterward, as it was brought to my attention that it is now more common than GANs.)
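The "added gaussian noise" part is simple enough to sketch. Below is a minimal Python version of the forward (noising) process used in DDPM-style diffusion models, where a noisy version of the data at step t can be produced in one jump. The schedule values (1,000 steps, noise rates from 0.0001 to 0.02) are an assumed common setting, and the four-number "image" is a stand-in. Training teaches a network to predict the added noise; generation runs that learned denoiser in reverse from pure noise, which is not shown here.

```python
import math
import random

# Linear noise schedule; T and the beta range follow a common DDPM-style
# setting and are assumptions for this sketch.
T = 1000
BETA_START, BETA_END = 1e-4, 0.02
betas = [BETA_START + (BETA_END - BETA_START) * t / (T - 1) for t in range(T)]

# alpha_bar[t] is the running product of (1 - beta): roughly how much of the
# original signal is left after t + 1 noising steps.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)


def add_noise(x0, t, rng):
    """Forward process: produce the noisy version of x0 at step t in one jump.

    Returns the noisy values and the noise that was added; during training,
    the network's job is to predict that noise from the noisy values and t.
    """
    signal_scale = math.sqrt(alpha_bar[t])
    noise_scale = math.sqrt(1.0 - alpha_bar[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    noisy = [signal_scale * x + noise_scale * e for x, e in zip(x0, noise)]
    return noisy, noise


rng = random.Random(0)
pixels = [1.0, -1.0, 0.5, -0.5]  # a tiny stand-in for an image
for t in (0, 250, 999):
    noisy, _ = add_noise(pixels, t, rng)
    # The signal scale shrinks toward 0 as t grows, leaving mostly noise.
    print(t, round(math.sqrt(alpha_bar[t]), 3), [round(v, 2) for v in noisy])
```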

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.

12K notes

Note

This is such a complex and nuanced topic that I can’t stop thinking now about artificial intelligence, personhood, and what it means to be alive. Because golem!Prowl actually seems to exist somewhere in the intersection of those ideas.

Certainly Prowl does not have a soul. And yet, where other golems depicted in mimic au seem to operate primarily as rule-based entities given a set of predefined orders that define their function, Prowl is able to go a step further: learning and defining his own rules based on observation and experience. Arguably, Prowl is even more advanced in this regard than the real-world AI agents we might currently interact with, such as ChatGPT (which still requires humans to tell it when to update its knowledge, what data to use, and what that data means). That's because Prowl formulates knowledge from more than just a distillation and concentration of the most prominent and commonly accepted ideas that have come before.

He shows this when he rejects all the views that society accepts — resulting in the formulation of the idea that Primus must be wrong. And in a lot of ways, Prowl’s learning that gets him to ultimately reach that conclusion seems a lot more closely related to how we learn. He learns from observing the actions of those around him, from listening to what the people closest to him say and from experiencing things for himself. And this also shows in the beginnings of his interaction with Jazz. Prowl may know things like friendship as abstract concepts, but he only can truly come to define what they mean because he is experiencing them.

In some ways then, what seems to make Prowl much more advanced in his intelligence is that the conclusions he ultimately draws — the way he updates his understanding of the world to fit the framework he’s been given — is something he does independently. And this is what sets him apart.

So is he a person? Given his lack of soul or spark perhaps not. But then again, what truly defines humanity, for lack of a better word? Because perhaps there is not a clear and distinct line to tell when mimicry and close approximation crosses over to become the real thing.

But given the way that Prowl learns and interacts with the world around him, it does not seem too far-fetched to say that he is alive. And further, that he seems a fairly unique form of life within this continuity. Therefore, is he not his own individual? In much the same way that the others this society deems beasts and monsters because of their unique abilities are also individuals.

It’s just really interesting to think about.

(But I will stop myself there, because I did not initially think this would get as long as it did and I feel like I’ve already written an entire thesis in an ask at this point!)

DAMN That’s a really really interesting essay you got here👁

If we take an artificially created algorithm based on seek a goal -> complete the goal, but then give it the learning capability of a real person, at what point is it gonna just become one? And if it gains the ability to have emotions, could they be considered “real” if it’s processing them in its own way, completely unknown to us?

I love making stories that force me to question the entire life hahdkj

299 notes

Text

omg i'm sorry but i need to techsplain just one thing in the most doomer terms possible bc i'm scared and i need people to be too. so i saw this post which is like, a great post that gives me a little kick because of how obnoxious i find ai and how it's cathartic to see corporate evil overlords overestimate themselves and jump the gun and look silly.

but one thing i don't think people outside of the industry understand is exactly how companies like microsoft plan on scaling the ability of their ai agents. as this post explains, they are not as advanced as some people make them out to be and it is hard to feed them the amount of context they need to perform some tasks well.

but what the second article in the above post explains is microsoft's investment in making a huge variety of the needed contexts more accessible to ai agents. the idea is like, only about 6 months old but what every huge tech firm right now is looking at is mcp (the model context protocol), which is an open standard for how needed context is given to ai agents. to oversimplify an example, maybe an ai coding agent is trained on a zillion pieces of java code but doesn't have insider knowledge of microsoft's internal application authoring processes, meta architecture, repositories, etc. an mcp server standardizes how you would then offer those documents to the agent in a way that it can easily read and then use them, so it doesn't have to come pre-loaded with that knowledge. so it could tackle this developer's specific use case, if offered the right knowledge.

and that's the plan. essentially, we're going to see a huge boom in companies offering their libraries, services, knowledge bases (e.g. their bug fix logs) etc as mcp servers, and ai agents basically are going to go shopping amongst those contexts, plug into whatever the context is that they need for the task at hand, and then power up by like a bajillion percent on the specific task they need to do.
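Here's a rough toy sketch of that "plug into a context" step in Python. It is not the official MCP SDK: MCP messages are JSON-RPC 2.0, and `tools/list` (discover what a server offers) and `tools/call` (use one of its tools) are method names from the MCP specification as I understand it, but the transport (stdio or HTTP), the initialization handshake, and everything else a real server needs are left out. The `search_bug_fix_log` tool and its data are made up.

```python
import json

# Made-up knowledge a company might expose to agents (hypothetical data).
BUG_FIX_LOG = {
    "NullPointerException in LoginService": "Check the session token before calling getUser().",
}

# Tool description in the shape MCP uses for tools/list results:
# a name, a human-readable description, and a JSON Schema for the inputs.
TOOLS = [
    {
        "name": "search_bug_fix_log",
        "description": "Look up a known fix for an error message.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
]


def _error(request_id, code, message):
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Toy in-process 'context server' answering two MCP-style methods."""
    request = json.loads(raw)
    method = request["method"]
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        params = request["params"]
        if params["name"] != "search_bug_fix_log":
            return _error(request["id"], -32602, "Unknown tool")
        fix = BUG_FIX_LOG.get(params["arguments"]["query"], "No known fix.")
        result = {"content": [{"type": "text", "text": fix}]}
    else:
        return _error(request["id"], -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# Agent side: first discover which tools exist, then call one with arguments.
listing = json.loads(handle_request(json.dumps(
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
tool_name = listing["result"]["tools"][0]["name"]

reply = json.loads(handle_request(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": tool_name,
               "arguments": {"query": "NullPointerException in LoginService"}},
})))
print(reply["result"]["content"][0]["text"])
# Check the session token before calling getUser().
```

The point of the standard shape is that the agent doesn't need to know this server in advance: it asks what tools exist, reads their input schemas, and calls them the same way it would call any other server's tools.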

so ai is powerful but not infallible right now, but it is going to scale pretty quickly i think.

in my opinion the only thing that is ever going to limit ai is not knowledge accessibility, but rather corporate greed. ai models are crazy expensive to train and maintain. every company on earth is also looking at how to optimize them to reduce some of that cost, and i think we will eventually see only a few megalith ais like chatgpt, with a bunch of smaller, more targeted models offered by other companies for them to leverage for specialized tasks.

i genuinely hope that the owners of the megalith models get so greedy that even the cost optimizations they are doing now don't bring down the price enough for their liking and they find shortcuts that ultimately make the models and the entire ecosystem shitty. but i confess i don't know enough about model optimization to know what is likely.

anyway i'm big scared and just wanted to put this slice of knowledge out there for people to be a little more informed.

57 notes

Text

New open-access article from Georgia Zellou and Nicole Holliday: "Linguistic analysis of human-computer interaction" in Frontiers in Computer Science (Human-Media Interaction).

This article reviews recent literature investigating speech variation in production and comprehension during spoken language communication between humans and devices. Human speech patterns toward voice-AI present a test of our scientific understanding of speech communication and language use. First, work exploring how human-AI interactions are similar to, or different from, human-human interactions in the realm of speech variation is reviewed. In particular, we focus on studies examining how users adapt their speech when resolving linguistic misunderstandings by computers and when accommodating their speech toward devices. Next, we consider work that investigates how top-down factors in the interaction can influence users’ linguistic interpretations of speech produced by technological agents and how the ways in which speech is generated (via text-to-speech synthesis, TTS) and recognized (using automatic speech recognition technology, ASR) have an effect on communication. Throughout this review, we aim to bridge both HCI frameworks and theoretical linguistic models accounting for variation in human speech. We also highlight findings in this growing area that can provide insight into the cognitive and social representations underlying linguistic communication more broadly. Additionally, we touch on the implications of this line of work for addressing major societal issues in speech technology.

167 notes

Note

Would you say there are any narrative parallels between Kyubey and Ichigo Saitou?

Oh for SURE. Obviously they both exist in very different contexts and are approaching the topic from varying levels of abstraction and such but both OnK and PMMM are at least in part about engaging with (specifically misogynistic) systems of exploitation that turn the societally imposed expectations of women's emotional labor into a commodified resource.

Kyubey and Ichigo are both agents of their respective systems acting to further its goals and ends, not necessarily out of malice but because they see exploitation as just the done thing within the framework they operate in.

Ichigo, obviously, is a businessman and I've talked before about how interesting I find the slightly manipulative undertones in the early days of his and Ai's relationship and how disappointed I am that it's something the series seems to want to downplay/retcon these days. Either way, he was still the one managing B-Komachi and therefore, he was the one who decided to market a bunch of middle school girls as 'gachikoi' idols to grown men who were way too fucking old to be thinking of them as romantic interests.

Kyubey is even more manipulative than Ichigo at the end of the day, but more out of this obsession with raw, razor's edge efficiency that the Incubators as a whole operate with. It's not that he feels... basically anything about the girls he's exploiting, but that it's just easier to bullshit them if it gets them to agree a little faster.

On that note, both of them are also pretty obviously similar in the ways they disguise exploitation as opportunity. The big hook of the MadoMagi magical girl system, after all, is the promise of having your wish granted up front and Kyubey constantly goes out of his way to emphasize to the girls just how much they could do with the opportunity that represents. The entertainment industry in OnK is framed in much the same way - Ai lets slip a certain lack that she's wrestling with and Ichigo immediately leaps at the chance to prescribe idolhood as the cure to all her ills.

Where they differ is in their actual personhood as characters as opposed to their utilitarian function in the greater narrative. Kyubey obviously doesn't really HAVE personhood, from both a Doylist and Watsonian perspective. He's an anthropomorphized representation of the system the girls spend all of PMMM fighting against and The System does not feel any sort of way about you or anything. It just Is.

Ichigo is, at the end of the day, an actual person who can and does have feelings about the exploitation he perpetuates and - at least in theory - comes to regret his hand in it. His entire role in the story post-volume 1 is (at least when Aka lets him do anything lol) about him trying to make sense of his grief in the wake of Ai's death and the role he ultimately had in making it come to pass.

TBH, there are a lot of interesting parallels between OnK and PMMM as a whole now that I'm rolling them around in my head - the tension of trying to retain your personhood and agency within a system that's rigged against you, the way said system will use your desire for agency to make you complicit in your own abuse, the discussion surrounding the way girls 'fall from grace' and become monstrous once their emotions are no longer pure and palatable enough... A lot of this is just naturally emergent from being a story about systems that cannibalize girlhood, but it's interesting all the same. It's been way too long since I've properly engaged with PMMM for me to dig into it more but if anyone is at the right cross-section of OnK and PMMM brainrot, I'd love to see if you pick up on any of these same ideas too!

#oshi no ko#oshi no posting#puella magi madoka magica#mahou shojo madoka magica#madoka magica#i have no idea what the main tag for that is these days

30 notes

Text

Chapter One

summary: jack visits halley in the lab.

warnings: none, a little bit of fluff, angst, some nerd stuff.

pairing: jack daniels x fem!oc

The walls didn’t feel so cold when he moved through them with no expectations on his shoulders—nothing to prove, nowhere to be. They had reduced him to a lower-rank agent, giving him just enough freedom to walk around but not enough to make him feel like he belonged. He didn’t.

Jack had grown accustomed to walking these sterile hallways with the quiet shuffle of a man who no longer had the right to command attention. He wasn’t part of the higher ranks anymore. He wasn’t part of anything.

But there was one place he could go.

The lab.

He wasn’t entirely sure why, but he felt drawn to it. Maybe it was the constant hum of machines and the quiet rhythm of Halley’s presence, always moving—tinkering with her screens, surrounded by her inventions, her delicate genius. Something about her steadiness pulled at him, a curiosity he couldn’t quite explain.

No one had told him to avoid her; no one had told him he could not visit. But it still felt like an unspoken rule. The others—his colleagues, the ones who were still allowed to stand tall with their badges—had forgotten about him. They probably wouldn’t even notice if he slipped away to see her.

Jack found the door to the lab almost without thinking, his boots quiet against the floor as he approached. It was like the whole building held its breath as he stood there for a moment, the weight of his own uncertainty pressing down on him, but there was something else. A feeling he hadn’t quite allowed himself to name since… well, since the whole damn mess started.

He pushed open the door slowly, careful not to make a sound.

But the soft click of the door latch was enough to make Halley look up from her work, and her sharp intake of breath was the only warning he got before she turned around, catching him in the act.

“Jack!” she exclaimed, her voice a little sharper than usual. “What are you doing? Sneaking up like that?”

“Don’t mean no harm, darlin’. Just… wanted to see what you’re up to.”

“You can’t come here whenever you want. What if someone catches you?”

“I have access to the lab, darlin’,” he gently explained, putting his hands into the pockets of his Wrangler jeans. “Besides, why do you care if someone sees me here?”

Her cheeks started to burn.

“I-” she trailed off, her shoulders slowly dropping. “I don’t want you to get in trouble.”

“Trouble’s my middle name, you should know that by now,” he scoffed, taking a look around, then at the screen in front of her. “What’s that?”

He pointed to the hologram. Halley did a little spin in her chair.

“I’ve been optimizing Tadashi’s neural processing capabilities by integrating a self-adaptive quantum matrix into his existing framework. It allows for exponential scalability in decision-making pathways without compromising efficiency.”

Jack blinked. Slowly.

He had faced down armed mercenaries, taken hits that would’ve laid out lesser men, and survived betrayals that should have killed him. But this?

This was the kind of thing that damn near fried his brain.

He shifted, crossing his arms over his chest as he squinted at the screen, as if staring at it long enough would somehow make the words make sense. “Now, sweetheart, I reckon you just spoke more words in one sentence than I’ve understood all week.”

She paused, then glanced at him, noticing the slight furrow in his brow, the way his jaw tightened just a little. A small smile tugged at the corner of her lips, and she leaned back.

“Let’s put it this way.” She turned toward him fully now, resting her elbow on the desk. “Tadashi is an AI, right? A learning program. But right now, he can only improve himself in ways that I specifically program him to. What I’m doing is giving him the ability to adjust his own learning methods in real-time, without me having to tell him how.”

Jack’s brow lifted slightly. “So you’re teachin’ your little computer fella how to… think on his own?”

“Pretty much.”

“Huh.” He let out a low hum. “That ain’t gonna lead to a Terminator situation, is it?”

Halley laughed, shaking her head. “No killer robots. Promise.”

He exhaled, pretending to wipe his brow. “Well, that’s a relief. Ain’t exactly in shape to be fightin’ machines right now.”

She chuckled, then studied him for a moment, noticing the way his shoulders had relaxed just a little, the weight in his eyes not quite as heavy as before.

She liked seeing that, even if it was fleeting.

“Agent Morgan,” Tadashi’s voice rang out, smooth and precise. “Champagne is asking for your presence in the conference hall.”

Halley sighed, already reaching for the tablet beside her. “I’m on it. Thank you, Dash.” She turned to Jack, pushing her chair back slightly. “I’m sorry to leave you, but—”

Jack shook his head before she could finish. “Don’t mind me, darlin’. I wasted enough of your time. Go see what the old man wants.”

The words weren’t harsh, weren’t bitter. But they were said in that same tired, hollow way she had come to recognize—the voice of a man who didn’t think he was worth sticking around for.

Something in her chest twisted.

He wasn’t trying to push her away, not in an aggressive way. But he believed what he was saying. He genuinely thought he was wasting her time, as if his presence in this lab, in her life, had no value at all.

Halley hesitated, gripping the edge of her desk. She wanted to tell him he was wrong. That she wanted him here, that he wasn’t some burden she had to bear. But she knew Jack—knew he wouldn’t take words like that seriously. Not right now when the wounds were still fresh.

Instead, she kept her voice soft. “You didn’t waste my time, Jack.”

He glanced at her, the ghost of a smile on his lips, but it didn’t reach his eyes. “Ain’t gotta sugarcoat things for me, sweetheart.”

“I’m not.” She held his gaze, willing him to see the truth in her eyes. “You never do.”

For a moment, neither of them spoke. The air between them felt heavier, not with tension, but with a quiet understanding.

Then, Halley sighed and grabbed her tablet, moving toward the door.

“I’ll be back soon,” she said, pausing just long enough to look over her shoulder at him. “Don’t disappear on me, alright?”

He huffed out a breath, tipping his hat slightly. “No promises.”

Halley shook her head with a small smile, then slipped out the door.

And Jack? He sat there a moment longer, staring at the empty space she had left behind, wondering why in the hell it suddenly felt a little colder without her there.

chapter two

#jack daniels#agent whiskey x female oc#kingsman#the golden circle#agent whiskey fanfiction#agent whiskey fic#pedro pascal fanfiction#pedro pascal fandom#pedrohub#pedro pascal characters

24 notes

Text

Week 1

Interviews: Aim for at least 1 interview by end of week.

DSA: Stacks (E/M), Arrays & Hashing, Two Pointers (all NeetCode). Daily LeetCode problem. ≥1 LC contest, ≥1 CF contest.

Books:

"AI Agents in Action": Chapters 1-6 (~170-180 pages).

"Algorithms": ~2-3 intro/foundational chapters (~80-100 pages).

Multi-Agent Project: Define problem & roles, set up framework, implement basic 2-agent communication PoC.

7 notes
