#AI in Warehousing

Explore tagged Tumblr posts

Text

Top 5 Applications of AI and Computer Vision in Logistics

Summary: This blog explores the paramount applications of AI in Warehousing and the transformative impact of Computer Vision in Logistics.

Warehousing is no longer confined to the traditional realms of manual labour and paper trails. The infusion of AI in Warehousing brings forth a new era, where intelligent algorithms and machine learning redefine how inventory is managed, how operations are streamlined, and how logistics professionals navigate the intricate supply chain web. The convergence of these cutting-edge technologies is reshaping the warehousing experience. From enhancing inventory management to optimizing operational efficiency, these technologies redefine the logistics paradigm, ensuring warehouses operate with unprecedented precision and foresight.

Inventory Optimization with AI: AI-driven algorithms analyse historical data to predict demand, ensuring optimal stock levels and reducing the risk of stockouts or excess inventory.

Automated Order Picking: Computer vision facilitates the automation of order picking processes, increasing efficiency and accuracy in fulfilling customer orders.

Predictive Maintenance: AI monitors equipment health in real-time, predicting potential failures and minimizing downtime through proactive maintenance.

Enhanced Security with Computer Vision: Computer vision ensures warehouse security through real-time surveillance, identifying and alerting against unauthorized access or suspicious activities.

Route Optimization: AI algorithms analyse shipping data, traffic patterns, and delivery constraints to optimize routes, minimizing transit time and reducing operational costs.
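To make the inventory optimization idea above more concrete, here is a minimal sketch. It assumes a plain moving-average forecast and a fixed safety stock, which is far simpler than what a production AI system would use. The function names and the sales figures are hypothetical and chosen only for illustration.

```python
from statistics import mean


def forecast_daily_demand(history, window=7):
    """Forecast next-day demand as the mean of the last `window` days."""
    recent = history[-window:]
    return mean(recent)


def reorder_point(history, lead_time_days, safety_stock, window=7):
    """Stock level at which a replenishment order should be placed.

    Expected demand during the supplier lead time plus a safety buffer.
    """
    return forecast_daily_demand(history, window) * lead_time_days + safety_stock


def needs_reorder(on_hand, history, lead_time_days, safety_stock):
    """True when current stock has fallen to or below the reorder point."""
    return on_hand <= reorder_point(history, lead_time_days, safety_stock)


# Made-up sales history (units sold per day), for illustration only.
daily_units_sold = [12, 15, 11, 14, 13, 16, 10]

print(reorder_point(daily_units_sold, lead_time_days=3, safety_stock=20))
print(needs_reorder(50, daily_units_sold, lead_time_days=3, safety_stock=20))
```

A learned demand model could replace `forecast_daily_demand` without changing the reorder logic around it.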

In a landscape where efficiency and accuracy are paramount, the amalgamation of AI in Warehousing and Computer Vision in Logistics marks the pinnacle of innovation. These technologies not only streamline operations but lay the foundation for a future where warehouses operate with unprecedented intelligence and agility, ensuring the seamless flow of goods through the intricacies of the modern supply chain.

0 notes

Note

Just saying that tumblr now trains ai on ppls art if you don't opt out so be careful please. Just a heads up if you haven't seen the warning posts. Also you're one of my favorite blog people on here and I really like your art, especially your Imogen redesign it's straight up better than official art in my personal opinion. Just more interesting to look at

yea i clicked the turn-off button on my blogs i post original content. im too low spoons to do it for all of them but theyre mostly organised reblog blogs anyway. i saw people say they wont post here anymore but im too tired to figure out something else. if an AI is trained on my wonky art then i guess thats funny and im sure the AI bros will love it when their anime girls emerge with hairy legs and long noses and broken teeth . too tired to care much right now , on a personal scale. on a bigger scale i hate capitalism and its sad everything is going to shit except for some cool things like mountains and songs i like and artists that just keep going anyway . remember ai will never be able to take you over if you make physical art with your hands . thats ours forever . make a little zine

thank you also, i appreciate that <3 i forgot i did that redesign and im glad u like it , i think it turned out cool so thank u ^_^

#asks#im so far behind on cr3 i have given up#but cr2 im on ep 68. uh oh#did any of yous see that scam willy wonka event in glasgow lmao? it had ai posters and art on the walls . like taped on a wall in a warehou#capitalism breeds innovation . it had ai words all misspelled and mushed and things it was funny you should go look

46 notes


Text

Cargo handling has always been time-consuming yet unavoidable work. The emergence of unmanned AGV trucks, which take over handling operations from people in a range of conditions, has greatly reduced labor intensity and improved factory efficiency. The factory transportation robot system is based on a HandsFree robot and an open-source system, and covers map building, navigation, and motion control. It can autonomously and accurately deliver production materials in scenarios where people and machines work side by side, providing a flexible flow of materials between production lines.
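The navigation step can be pictured with a toy example: a breadth-first search for the shortest route across an occupancy grid. This is only a sketch under assumed conventions (0 marks a free cell, 1 an obstacle, movement in four directions). It is not the HandsFree system's actual planner, and the grid and function name are hypothetical.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Return the shortest list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from = {start: None}  # cell -> previous cell on the route

    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]

        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))

    return None  # goal is unreachable


# Hypothetical floor map: a shelf row blocks all but one aisle.
warehouse = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]

route = shortest_path(warehouse, (0, 0), (2, 0))
print(route)
```

Real mobile robots typically plan over maps built by SLAM and account for robot size and motion limits, which this sketch leaves out.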

#innovation#mobile robot#agv#ultrasound#navigation#positioning#machine dreams#logistics#intelligent#warehousing#robotics#ugv#autonomous robot#higher education#market research#artificial intelligence (ai)#science and technology

3 notes


Text

Explore this guide about data warehousing solutions for the healthcare industry and get real-world insights to leverage data-driven decision-making. Understand the influence of AI in the medical industry.

0 notes

Text

#warehouseautomation#wms#automation#warehousemanagement#inventorymanagment#erpsoftware#warehouse#ai#software#cloud#inventorymanagementsoftware#erpsystem#stockmanagement#supplychain#management#industry#warehousing#business#robotics#sales#team#manufacturing#digital#multichannel#automationengineering#demo#help#technology#robot#robots

1 note


Text

We at AILSPL, the leading logistics company in India, remain at the forefront of innovation, constantly adopting the latest technologies to optimize our comprehensive logistics services. From warehouse management and supply chain operations to transportation, import-export facilitation, and beyond, our strategic integration of artificial intelligence has significantly elevated our service offerings to ensure customer satisfaction. Learn more : https://impact-logistics.in/ Contact us : +91 95790 95790 E-mail Id : [email protected]

#warehousing#logistics solutions#storage containers#sea freight forwarder#import export business#supply chain#warehouse#logistics#logistics company#ai technology#technology

0 notes

Text

AI and IoT in Warehousing

Discover how our logistics company uses artificial intelligence and the Internet of Things (IoT) to enhance warehouse efficiency, automate processes, and improve overall productivity.

#freight broker#freight forwarding#logistics#transportation#air freight#transportservice#supplychainmanagement#sea freight#supplychainsolutions#shipping#artificial intelligence#ai generated#warehouse#warehousing

0 notes

Text

The good thing about AI automating all of writing, art, entertainment, and filmmaking is it frees up everyone to work on stuff that's REALLY enlightening, like manufacturing, logistics, warehousing and distribution, etc

109 notes


Text

Google Cloud’s BigQuery Autonomous Data To AI Platform

BigQuery automates data analysis, transformation, and insight generation using AI. AI and natural language interaction simplify difficult operations.

The fast-paced world needs data access and a real-time data activation flywheel. Artificial intelligence that integrates directly into the data environment and works with intelligent agents is emerging. These catalysts open doors and enable self-directed, rapid action, which is vital for success. This flywheel uses Google's Data & AI Cloud to activate data in real time. Because of this emphasis, BigQuery has five times as many customer organisations as the two leading cloud providers that offer only data science and data warehousing solutions.

Examples of top companies:

With BigQuery, Radisson Hotel Group enhanced campaign productivity by 50% and revenue by over 20% by fine-tuning the Gemini model.

By connecting over 170 data sources with BigQuery, Gordon Food Service established a scalable, modern, AI-ready data architecture. This improved real-time response to critical business demands, enabled complete analytics, boosted client usage of their ordering systems, and offered staff rapid insights while cutting costs and boosting market share.

J.B. Hunt is revolutionising logistics for shippers and carriers by integrating Databricks into BigQuery.

General Mills saves over $100 million using BigQuery and Vertex AI to give workers secure access to LLMs for structured and unstructured data searches.

Google Cloud is unveiling many new features with its autonomous data to AI platform powered by BigQuery and Looker, a unified, trustworthy, and conversational BI platform:

New assistive and agentic experiences based on your trusted data and available through BigQuery and Looker will make data scientists, data engineers, analysts, and business users' jobs simpler and faster.

Advanced analytics and data science acceleration: Along with seamless integration with real-time and open-source technologies, BigQuery AI-assisted notebooks improve data science workflows and BigQuery AI Query Engine provides fresh insights.

Autonomous data foundation: BigQuery can collect, manage, and orchestrate any data with its new autonomous features, which include native support for unstructured data processing and open data formats like Iceberg.

Look at each change in detail.

User-specific agents

Google Cloud believes everyone should have access to AI. AI-powered assistive experiences in BigQuery and Looker are already generally available, and Google Cloud now offers specialised agents for all data tasks, such as:

Data engineers traditionally spend hours cleaning, processing, and validating data. Data engineering agents integrated with BigQuery pipelines help create data pipelines, transform and enrich data, detect anomalies, and automate metadata generation. These agents deliver trustworthy data and take over time-consuming, repetitive tasks, improving data team productivity.

The data science agent in Google's Colab notebook enables model development at every step. Scalable training, intelligent model selection, automated feature engineering, and faster iteration are possible. This agent lets data science teams focus on complex methods rather than data and infrastructure.

Looker conversational analytics lets everyone use natural language to work with data. Expanded capabilities developed with DeepMind let all users understand the agent's actions and easily resolve ambiguities, as the agent performs advanced analysis and explains its reasoning. Looker's semantic layer boosts accuracy by two-thirds. The agent understands business language like “revenue” and “segments” and can compute metrics in real time, ensuring trustworthy, accurate, and relevant results. An API for conversational analytics is also being introduced to help developers integrate it into processes and apps.

In the BigQuery autonomous data to AI platform, Google Cloud introduced the BigQuery knowledge engine to power assistive and agentic experiences. It models data relationships, suggests business glossary terms, and generates metadata on the fly by using Gemini to analyse table descriptions, query histories, and schema relationships. This knowledge engine grounds AI and agents in business context, enabling semantic search across BigQuery and AI-powered data insights.

All customers may access Gemini-powered agentic and assistive experiences in BigQuery and Looker without add-ons in the existing price model tiers!

Accelerating data science and advanced analytics

BigQuery autonomous data to AI platform is revolutionising data science and analytics by enabling new AI-driven data science experiences and engines to manage complex data and provide real-time analytics.

First, AI improves BigQuery notebooks. Intelligent SQL cells added to the notebook can join data sources, understand data context, and suggest code as you write. Native exploratory analysis and visualisation capabilities support data exploration and collaboration with peers. Data scientists can also schedule analyses and refresh insights. Google Cloud also lets you build notebook-driven, dynamic, user-friendly, interactive data apps to share insights across the organisation.

This enhanced notebook experience is complemented by the BigQuery AI query engine for AI-driven analytics. This engine lets data scientists easily work with structured and unstructured data and enrich it with real-world context, not simply retrieve it. The BigQuery AI query engine co-processes SQL and Gemini, adding natural language understanding, reasoning skills, and real-world knowledge at runtime. For example, the new engine can process unstructured photographs and match them to your product catalogue. This engine supports several use cases, including model enhancement, sophisticated segmentation, and new insights.

Additionally, Google Cloud aims to give users the most cloud-optimised open-source environment. Google Cloud Managed Service for Apache Kafka enables real-time data pipelines for event sourcing, model scoring, messaging, and analytics, and BigQuery offers serverless Apache Spark execution. Customers have almost doubled their serverless Spark use in the last year, and Google Cloud has upgraded this engine to handle data 2.7 times faster.

BigQuery lets data scientists utilise SQL, Spark, or foundation models on Google's serverless and scalable architecture to innovate faster without the challenges of traditional infrastructure.

An autonomous data foundation across the data lifecycle

An autonomous data foundation built for modern data complexity supports these advanced analytics engines and specialised agents. BigQuery is transforming the environment by making unstructured data a first-class citizen. New platform features, such as orchestration for a variety of data workloads, autonomous and invisible governance, and open formats for flexibility, ensure that your data is always ready for data science or AI use cases, while delivering the best cost and reducing operational overhead.

For many companies, unstructured data is their biggest untapped potential. While structured data offers established analytical avenues, the unique insights in text, audio, video, and photographs are often underutilised and trapped in siloed systems. BigQuery tackles this issue directly by making unstructured data a first-class citizen using multimodal tables (preview), which integrate structured data with rich, complex data types for unified querying and storage.

To manage this large data estate efficiently, Google Cloud's expanded BigQuery governance gives data stewards and professionals a single view for managing discovery, classification, curation, quality, usage, and sharing, including automatic cataloguing and metadata generation. BigQuery continuous queries use SQL to analyse and act on streaming data regardless of format, ensuring timely insights from all your data streams.

Customers use Google's AI models in BigQuery for multimodal analysis 16 times more than last year, driven by advanced support for structured and unstructured multimodal data. BigQuery with Vertex AI is 8–16 times cheaper than independent data warehouse and AI solutions.

Google Cloud maintains an open ecosystem. BigQuery tables for Apache Iceberg combine BigQuery's performance and integrated capabilities with the flexibility of an open data lakehouse to link Iceberg data to SQL, Spark, AI, and third-party engines in an open and interoperable fashion. This service provides adaptive and autonomous table management, high-performance streaming, auto-AI-generated insights, practically infinite serverless scalability, and improved governance. In this managed solution, Cloud Storage provides fail-safe features and centralised fine-grained access control management.

Finally, the autonomous data to AI platform optimises itself: it scales resources, manages workloads, and keeps costs in check. The new BigQuery spend commit unifies spending across the BigQuery platform and allows flexibility in shifting spend across streaming, governance, data processing engines, and more, making purchasing easier.

Start your data and AI adventure with BigQuery data migration. Google Cloud wants to know how you innovate with data.

#technology#technews#govindhtech#news#technologynews#BigQuery autonomous data to AI platform#BigQuery#autonomous data to AI platform#BigQuery platform#autonomous data#BigQuery AI Query Engine

2 notes


Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).
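As a small illustration of unit testing from the list above, the sketch below uses Python's built-in `unittest` module rather than the tools named in the list. The `pick_quantity` function is a hypothetical example, not taken from any real system.

```python
import unittest


def pick_quantity(ordered, in_stock):
    """Return how many units can be picked for one order line."""
    if ordered < 0 or in_stock < 0:
        raise ValueError("quantities must be non-negative")
    return min(ordered, in_stock)


class PickQuantityTest(unittest.TestCase):
    def test_full_pick_when_stock_is_sufficient(self):
        self.assertEqual(pick_quantity(5, 10), 5)

    def test_partial_pick_when_stock_is_short(self):
        self.assertEqual(pick_quantity(5, 3), 3)

    def test_negative_quantity_is_rejected(self):
        with self.assertRaises(ValueError):
            pick_quantity(-1, 3)


# Load and run the test case explicitly so the script does not exit early.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(PickQuantityTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all tests passed:", result.wasSuccessful())
```

Re-running the same suite after every code change is the simplest form of regression testing, and wiring it into a build pipeline turns it into continuous testing.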

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/ Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems
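To show what jitter and packet loss testing measure, here is a minimal sketch. The jitter estimate follows the running interarrival-jitter formula from RFC 3550 (the RTP specification). The timestamps, sequence numbers, and function names are made up for illustration, and times are assumed to be in milliseconds.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate in the style of RFC 3550.

    For each consecutive packet pair, D is the change in transit time;
    the estimate moves 1/16 of the way towards |D| on every packet.
    """
    jitter = 0.0
    for i in range(1, len(send_times)):
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16.0
    return jitter


def packet_loss_ratio(received_seq, expected_count):
    """Fraction of expected packets that never arrived (duplicates ignored)."""
    return 1.0 - len(set(received_seq)) / expected_count


# Packets sent every 20 ms; the third one arrives 8 ms later than expected.
sent = [0, 20, 40]
received = [50, 70, 98]

print(interarrival_jitter(sent, received))
print(packet_loss_ratio([1, 2, 4, 5], expected_count=5))
```

Latency is not computed here because one-way delay needs synchronised clocks on both ends; jitter only depends on differences, so clock offset cancels out.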

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

2 notes


Text

Cutebot takes a mobile cart + robotic arm as the carrier, based on a four-wheel differential motion mechanism, integrating embedded technology, intelligent perception technology, robotics technology, robotic arm technology, SLAM technology, artificial intelligence technology, and machine vision technology, which can cultivate composite talents from the whole process of "structural cognition - electronic control design - programming control - application development".

#innovation#mobile robot#agv#ultrasound#navigation#positioning#machine dreams#logistics#intelligent#warehousing#robotics#ugv#autonomous robot#higher education#market research#artificial intelligence (ai)#science and technology

1 note


Text

Azure Data Engineering Tools For Data Engineers

Azure is a cloud computing platform provided by Microsoft, which presents an extensive array of data engineering tools. These tools serve to assist data engineers in constructing and upholding data systems that possess the qualities of scalability, reliability, and security. Moreover, Azure data engineering tools facilitate the creation and management of data systems that cater to the unique requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed database service built on open-source PostgreSQL, designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers superior security, performance optimization through AI, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

3 notes


Text

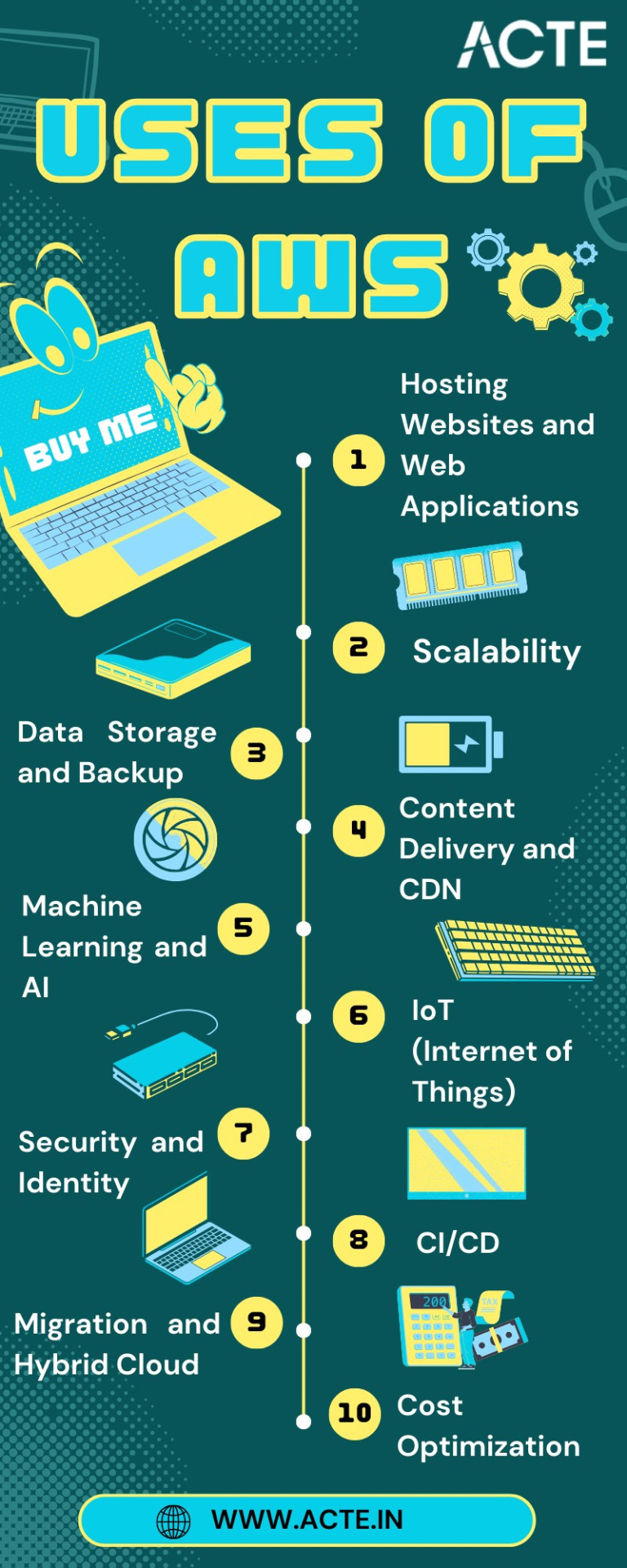

Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the boundless potential of AWS. We'll embark on a journey through the AWS universe, exploring its multifaceted applications and gaining insights into why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this comprehensive resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we demystify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable place for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 empower businesses to deploy and manage their online presence with unwavering reliability and high performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including the highly acclaimed Amazon S3 and Amazon EBS. These services cater to a diverse spectrum of data types and are designed for strong data security and high availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, ushers in global content distribution with minimal latency and blazing data transfer speeds. This ensures an impeccable user experience, irrespective of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: At the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Administrators get fine-grained control over who can access which resources, which helps protect data from unauthorized access.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet learning AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in helping individuals master AWS. Through comprehensive training and education, learners gain not only knowledge but also the practical skills to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.


Best colleges for BCA in Artificial Intelligence & Machine Learning

BCA in Artificial Intelligence & Machine Learning: Starting the Journey into AI & ML

Artificial Intelligence (AI) and Machine Learning (ML) have become crucial technologies across various industries. They have changed the way we work and interact with technology. A Bachelor of Computer Applications (BCA) in Artificial Intelligence and Machine Learning responds to the growing demand for professionals with a strong foundation in both AI and ML.

In this article, we will explore the significance of BCA in Artificial Intelligence and Machine Learning and how it can shape your career.

Introduction to BCA in Artificial Intelligence & Machine Learning

BCA in Artificial Intelligence and Machine Learning is a three-year undergraduate (UG) course that combines computer science with AI and ML concepts. It is designed to provide students with a comprehensive understanding of the theoretical foundations and practical applications of AI and ML technologies.

This program equips students with the skills required to develop intelligent systems, analyze complex data sets, and build predictive models using ML algorithms.

BCA in AI & ML Syllabus

The curriculum of BCA in Artificial Intelligence and Machine Learning is carefully crafted to provide students with a strong foundation in computer science, programming, mathematics, and statistics. Additionally, it includes specialized courses in AI and ML, covering topics such as:

Data Structures and Algorithms

Probability and Statistics

Data Mining and Data Warehousing

Deep Learning

Natural Language Processing

Computer Vision

Reinforcement Learning

Big Data Analytics

Cloud Computing

Learn more about the complete BCA in AI and ML syllabus at SGT University.

Job Opportunities for BCA in AI & ML Graduates

Upon completing BCA in Artificial Intelligence and Machine Learning, graduates can explore various career opportunities in both established companies and startups. Some of the common jobs in this field include:

AI Engineer

Machine Learning Engineer

Data Scientist

Business Intelligence Analyst

AI Researcher

Robotics Engineer

Data Analyst

Software Developer

Data Engineer

Salary Potential

BCA graduates in Artificial Intelligence and Machine Learning can expect competitive salaries due to the high demand for AI and ML professionals. According to upGrad, entry-level positions typically offer salaries ranging from ₹6 to 8 lakh per annum (LPA).

Future Scope of BCA in Artificial Intelligence & Machine Learning

The future scope of BCA in Artificial Intelligence and Machine Learning is promising.

As AI and ML continue to advance and permeate various sectors, the demand for skilled professionals in this field will only increase.

Industries such as healthcare, finance, retail, manufacturing, and transportation are actively adopting AI and ML technologies, creating a wealth of opportunities for BCA graduates.

How to Excel in Artificial Intelligence and Machine Learning Studies

To excel in your BCA studies in AI and ML, follow these tips:

Stay Updated: Keep up with the latest advancements in AI and ML through academic journals, conferences, and online resources.

Practice Coding: Develop proficiency in programming languages commonly used in AI and ML, such as Python and R.

Hands-on Projects: Engage in practical projects to apply theoretical knowledge and build a strong portfolio.

Collaborate and Network: Join AI and ML communities, attend meetups, and participate in hackathons to collaborate with peers and learn from experts.

Continuous Learning: Embrace continuous learning to stay relevant in the rapidly evolving field of AI and ML.

Why Study BCA in Artificial Intelligence and Machine Learning from SGT University?

The following reasons make SGT University one of the best colleges for BCA in Artificial Intelligence & Machine Learning:

A future-proof career in technology.

Specialization in AI and ML.

Expertise in cutting-edge technologies.

Strong industry demand for graduates.

Access to renowned faculty and resources.

Networking with industry professionals.

Gateway to innovation and research.

Conclusion

BCA in Artificial Intelligence and Machine Learning offers a comprehensive education that combines computer science with AI and ML concepts.

With the increasing demand for AI and ML professionals, pursuing BCA in this domain can open up exciting career opportunities and provide a strong foundation for future growth.

Enroll now at SGT University to pursue this course.



What You’ll Learn in an MBA Operations Program

An MBA with a specialization in Operations equips students with the knowledge and skills needed to streamline business processes, improve efficiency, and manage resources effectively across various industries. The program is ideal for individuals aiming to pursue careers in logistics, supply chain management, production, quality control, and strategic operations.

🔹 Core Concepts Covered

Operations Strategy: Understand how to align operations with overall business goals to achieve long-term success and competitive advantage.

Supply Chain Management: Learn how to manage the end-to-end flow of goods, services, and information from suppliers to customers.

Logistics & Distribution: Study how to optimize transportation, warehousing, and inventory to reduce costs and improve delivery timelines.

Project Management: Gain skills to plan, execute, and close projects efficiently, while managing time, budget, and scope.

Quality Management: Learn tools and techniques like Six Sigma and Total Quality Management (TQM) to ensure product/service excellence.

Process Optimization & Lean Management: Understand how to reduce waste, improve workflows, and increase productivity through lean methodologies.

Data Analytics for Operations: Use data-driven decision-making techniques to forecast demand, plan capacity, and assess performance.

Operations Technology & Automation: Explore how digital transformation, AI, and ERP systems are reshaping operational efficiency.

Why It Matters

Graduates of an MBA in Operations are equipped to handle key challenges in production, service delivery, and global supply chains. The knowledge gained helps organizations reduce costs, improve customer satisfaction, and drive sustainable growth.


Software Development Company in Chennai: How to Choose the Best Partner for Your Next Project

Chennai, often called the “Detroit of India” for its booming automobile industry, has quietly become a global hub for software engineering and digital innovation. If you’re searching for the best software development company in Chennai, you have a wealth of options—but finding the right fit requires careful consideration. This article will guide you through the key factors to evaluate, the services you can expect, and tips to ensure your project succeeds from concept to launch.

Why Chennai Is a Top Destination for Software Development

Talent Pool & Educational Infrastructure: Chennai is home to premier engineering institutions like IIT Madras, Anna University, and numerous reputable private colleges. Graduates enter the workforce with strong foundations in computer science, software engineering, and emerging technologies.

Cost-Effective Yet Quality Services: Compared to Western markets, Chennai offers highly competitive rates without compromising on quality. Firms here balance affordability with robust processes (agile methodologies, DevOps pipelines, and stringent QA) to deliver world-class solutions.

Mature IT Ecosystem: With decades of experience serving Fortune 500 enterprises and fast-growing startups alike, Chennai’s software firms boast deep domain expertise across industries, including healthcare, finance, e-commerce, and automotive.

What Makes the “Best Software Development Company in Chennai”?

When evaluating potential partners, look for:

Comprehensive Service Offerings

Custom Software Development: Tailored web and mobile applications built on modern stacks (JavaScript frameworks, Java, .NET, Python/Django, Ruby on Rails).

Enterprise Solutions: ERP/CRM integrations, large-scale portals, microservices architectures.

Emerging Technologies: AI/ML models, blockchain integrations, IoT platforms.

Proven Track Record

Case Studies & Portfolios: Review real-world projects similar to your requirements—both in industry and scale.

Client Testimonials & Reviews: Genuine feedback on communication quality, delivery timelines, and post-launch support.

Process & Methodology

Agile / Scrum Practices: Iterative development ensures rapid feedback, early demos, and flexible scope adjustments.

DevOps & CI/CD: Automated pipelines for build, test, and deployment catch bugs earlier and accelerate time-to-market.

Quality Assurance: Dedicated QA teams, automated testing suites, and security audits help ensure robust, reliable software.

Transparent Communication

Dedicated Account Management: A single point of contact for status updates, issue resolution, and strategic guidance.

Collaboration Tools: Jira, Slack, Confluence, or Microsoft Teams for real-time tracking and seamless information flow.

Cultural Fit & Time-Zone Alignment: Chennai’s working hours (IST, UTC+5:30) overlap with the working day across much of Asia and Europe; overlap with North America usually depends on early-morning or evening calls on one side. Choose a company whose work culture and ethics align with your organization’s values.
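To put a rough number on the time-zone point, here is a minimal sketch that computes how many hours two standard working days share. Everything in it is an illustrative assumption rather than a figure from any particular company: the 09:00 to 18:00 working window, the fixed UTC offsets (daylight saving time is ignored), and the function names.

```python
# Fixed UTC offsets in minutes (assumed; daylight saving time is ignored).
IST = 5 * 60 + 30        # India Standard Time, UTC+5:30
CET = 1 * 60             # Central European Time, UTC+1:00
SGT = 8 * 60             # Singapore Time, UTC+8:00
US_EASTERN = -5 * 60     # US Eastern standard time, UTC-5:00

MINUTES_PER_DAY = 24 * 60


def utc_window(offset_minutes, start_hour=9, end_hour=18):
    """Convert a local working window to (start, end) minutes since 00:00 UTC."""
    return (start_hour * 60 - offset_minutes, end_hour * 60 - offset_minutes)


def overlap_hours(offset_a, offset_b, start_hour=9, end_hour=18):
    """Hours per day during which both locations are inside their working window."""
    a_start, a_end = utc_window(offset_a, start_hour, end_hour)
    b_start, b_end = utc_window(offset_b, start_hour, end_hour)
    total = 0
    # Compare against the previous, same, and next day so that windows
    # crossing midnight UTC are still matched correctly.
    for shift in (-MINUTES_PER_DAY, 0, MINUTES_PER_DAY):
        lo = max(a_start, b_start + shift)
        hi = min(a_end, b_end + shift)
        total += max(0, hi - lo)
    return total / 60


if __name__ == "__main__":
    for name, offset in (("CET", CET), ("Singapore", SGT), ("US Eastern", US_EASTERN)):
        print(f"Chennai vs {name}: {overlap_hours(IST, offset)} shared hours")
```

Under these assumptions the shared window is 6.5 hours with Singapore, 4.5 hours with Central Europe, and zero with US Eastern time, so it is worth asking a prospective partner which hours their team actually keeps for North American clients.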

Services to Expect from a Leading Software Development Company in Chennai

Service Area: Key Deliverables

Web & Mobile App Development: Responsive websites, Progressive Web Apps (PWAs), native iOS/Android applications

Enterprise Solutions: ERP/CRM systems, custom back-office tools, data warehousing, BI dashboards

Cloud & DevOps: AWS/Azure/GCP migrations, Kubernetes orchestration, CI/CD automation

AI/ML & Data Science: Predictive analytics, recommendation engines, NLP solutions

QA & Testing: Unit tests, integration tests, security and performance testing

UI/UX Design: Wireframes, interactive prototypes, accessibility audits

Maintenance & Support: SLA-backed bug fixes, feature enhancements, 24/7 monitoring

Steps to Engage Your Ideal Partner

Define Your Project Scope & Goals: Draft a clear requirements document: core features, target platforms, expected user base, third-party integrations, and budget constraints.

Shortlist & Request Proposals: Contact 3–5 software development companies in Chennai with your brief. Evaluate proposals based on technical approach, estimated timelines, and cost breakdown.

Conduct Technical & Cultural Interviews

Technical Deep-Dive: Ask about architecture decisions, tech stack rationale, and future-proofing strategies.

Team Fit: Meet key developers, project managers, and designers to gauge cultural synergy and communication style.

Pilot Engagement / Proof of Concept: Start with a small, time-boxed POC or MVP. This helps you assess real-world collaboration, code quality, and on-time delivery before scaling up.

Scale & Iterate: Based on the pilot’s success, transition into full-scale development using agile sprints, regular demos, and continuous feedback loops.

Success Stories: Spotlight on Chennai-Based Innovators

E-Commerce Giant Expansion: A Chennai firm helped a regional retailer launch a multilingual e-commerce platform with 1M+ SKUs, achieving 99.9% uptime and a 40% increase in conversion rates within six months.

Healthcare Platform: Partnering with a local hospital chain, a development agency built an end-to-end telemedicine portal—integrating video consultations, patient records, and pharmacy services—serving 50,000+ patients during peak pandemic months.

Fintech Disruption: A Chennai team architected a microservices-based lending platform for a startup, enabling instant credit scoring, automated KYC, and real-time loan disbursement.
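An uptime figure such as the 99.9% quoted above is easier to judge once it is converted into an allowed-downtime budget. The sketch below does that arithmetic; the 30-day and 365-day measurement windows and the function name are assumptions for illustration, since the success story does not say over which period uptime was measured.

```python
MINUTES_PER_DAY = 24 * 60


def downtime_budget_minutes(uptime_percent, days=30):
    """Minutes of downtime permitted by an uptime target over `days` days."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    allowed_fraction = 1 - uptime_percent / 100
    # Round to two decimals to hide floating-point noise in the result.
    return round(allowed_fraction * days * MINUTES_PER_DAY, 2)


if __name__ == "__main__":
    for days in (30, 365):
        budget = downtime_budget_minutes(99.9, days)
        print(f"99.9% uptime over {days} days allows about {budget} minutes of downtime")
```

With these assumed windows, 99.9% works out to roughly 43 minutes of downtime per 30 days, or just under 9 hours per year. When a vendor quotes an uptime number, ask which window and which kinds of outage (planned or unplanned) it covers.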

Conclusion

Selecting the best software development company in Chennai hinges on matching your project’s technical needs, budget, and cultural expectations with a partner’s expertise, processes, and proven results. Chennai’s vibrant IT ecosystem offers everything from cost-effective startups to global-scale enterprises—so take the time to define your objectives, evaluate portfolios, and run a pilot engagement. With the right collaborator, you’ll not only build high-quality software but also forge a long-term relationship that fuels continuous innovation and growth.
