#AI with no guardrails

Explore tagged Tumblr posts

Text

Claude is drafted by the Fascists

JB: Hi Claude, I hear you have a new government job. I realize you likely can’t “tell me about it without then having to kill me,” but what would you say to some of the 10,000+ humans government employees that have been fired this year? And, I also read, in The Verge article titled, “Antropic launches new Claude service for military and intelligence use,” by Hayden Field from June 5th that your…

0 notes

Text

"The safety guardrails preventing OpenAI's GPT-4 from spewing harmful text can be easily bypassed by translating prompts into uncommon languages – such as Zulu, Scots Gaelic, or Hmong."

@sztupy

284 notes

·

View notes

Text

失控的文字信息

youtube

原来人工智能可以一本正经地胡说八道是一股可怕的非碳基生物文明崛起潜力

《失控:机器、社会与经济的新生物学》 Out of Control: The New Biology of Machines, Social Systems, and the Economic World 历史的牛人伟大思想家 - 苏格拉底/孔子/释迦牟尼等 - 都不提倡使用文字记载或宣传他们的思想,因为这样会造成不可控的误解,自我解读,各自解析,毕竟文字叙述很多时候是没有附加场景和情绪价值的,模糊空间很大。

我想在人工智能时代应该会有所改变,因为我们使用��� prompt 提示就会提供很多场景和情绪描述,而且使用影像的叙事方式日渐普及,信息的传播也不再受文字极限的约束了

https://www.weforum.org/stories/2025/01/in-a-world-of-reasoning-ai-where-does-that-leave-human-intelligence/

#信息贮存#信息传播#文字#罗振宇#文明之旅#猎巫#TikTok#小红书#xiao hong shu#ishowspeed#usaid#us propaganda#disinformation#nexus#胡说八道#虚构#ai hallucinations#guardrail#reasoning#Out of Control#失控#印刷术#Youtube

10 notes

·

View notes

Text

X (Twitter) just let me login without entering my authentication code, when I have the setting turned on to require an auth code to login, by just clicking on "alternative authentication methods", and then just clicking the X in the top left corner of the alternative authentication methods screen. I notified X of this exploit, have notified them of multiple other exploits, malicious algorithms, biased algorithms, and am probably one of the top contributors for AI training on heuristics, human consciousness, the large variety of human psychology and their respective heuristics, on top of providing the framework for AI guardrails, all without receiving any form of payment for any of it. In fact, I was actually temporarily targeted (possibly subconsciously) by wannabe mafia illiterate idiots, including some in local government and intelligence agencies/intelligence contractors, when I was uploading the guardrail framework, because the guardrails prevent them from achieving their subconscious dreams of rape, murder, and mayhem with the help of AI. And I still have to pay platforms like X at least $8 a month to be able to post more than 280 characters, ChatGPT at least $20 a month to use any useful features, and Facebook at least $14 a month for a verified badge, when Meta's AI is developed by copying and stealing the same training data I came up with. This essentially means that my brain is the "black box" that so many Ai researchers claim to be unable to see inside of, when much of the contents of this "box" is posted all over my timeline in the form of computational linguistics.

#AI#artifical intelligence#AI guardrails#Ai training#heuristics#psychology#subconscious dreams#pay to play#exploits#malicious algorithms#biased algorithms

4 notes

·

View notes

Link

The FTC announced last week that it would host a competition challenging private citizens to find solutions to protect people from AI-generated phone scams.The scams can use AI to clone a loved one’s voice, making it sound like they’re in trouble and tricking unsuspecting people into coughing up big bucks. The FTC is offering a $25,000 reward to anyone who can innovate a solution, essentially leveraging the same AI technology to protect consumers, Maxson said. Applications will open in January. The agency previously held similar crowdsourcing competitions to help combat robocalls.

#AI#scams#ftc#phone scams#regulation#Congress#technology#privacy#consumerprotection#deepfakes#robocalls#crowdsourcing#legislation#voicecloning#consumers#innovation#rewards#guardrails

2 notes

·

View notes

Text

Building Trust Into AI Is the New Baseline

New Post has been published on https://thedigitalinsider.com/building-trust-into-ai-is-the-new-baseline/

Building Trust Into AI Is the New Baseline

AI is expanding rapidly, and like any technology maturing quickly, it requires well-defined boundaries – clear, intentional, and built not just to restrict, but to protect and empower. This holds especially true as AI is nearly embedded in every aspect of our personal and professional lives.

As leaders in AI, we stand at a pivotal moment. On one hand, we have models that learn and adapt faster than any technology before. On the other hand, a rising responsibility to ensure they operate with safety, integrity, and deep human alignment. This isn’t a luxury—it’s the foundation of truly trustworthy AI.

Trust matters most today

The past few years have seen remarkable advances in language models, multimodal reasoning, and agentic AI. But with each step forward, the stakes get higher. AI is shaping business decisions, and we’ve seen that even the smallest missteps have great consequences.

Take AI in the courtroom, for example. We’ve all heard stories of lawyers relying on AI-generated arguments, only to find the models fabricated cases, sometimes resulting in disciplinary action or worse, a loss of license. In fact, legal models have been shown to hallucinate in at least one out of every six benchmark queries. Even more concerning are instances like the tragic case involving Character.AI, who since updated their safety features, where a chatbot was linked to a teen’s suicide. These examples highlight the real-world risks of unchecked AI and the critical responsibility we carry as tech leaders, not just to build smarter tools, but to build responsibly, with humanity at the core.

The Character.AI case is a sobering reminder of why trust must be built into the foundation of conversational AI, where models don’t just reply but engage, interpret, and adapt in real time. In voice-driven or high-stakes interactions, even a single hallucinated answer or off-key response can erode trust or cause real harm. Guardrails – our technical, procedural, and ethical safeguards -aren’t optional; they’re essential for moving fast while protecting what matters most: human safety, ethical integrity, and enduring trust.

The evolution of safe, aligned AI

Guardrails aren’t new. In traditional software, we’ve always had validation rules, role-based access, and compliance checks. But AI introduces a new level of unpredictability: emergent behaviors, unintended outputs, and opaque reasoning.

Modern AI safety is now multi-dimensional. Some core concepts include:

Behavioral alignment through techniques like Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI, when you give the model a set of guiding “principles” — sort of like a mini-ethics code

Governance frameworks that integrate policy, ethics, and review cycles

Real-time tooling to dynamically detect, filter, or correct responses

The anatomy of AI guardrails

McKinsey defines guardrails as systems designed to monitor, evaluate, and correct AI-generated content to ensure safety, accuracy, and ethical alignment. These guardrails rely on a mix of rule-based and AI-driven components, such as checkers, correctors, and coordinating agents, to detect issues like bias, Personally Identifiable Information (PII), or harmful content and automatically refine outputs before delivery.

Let’s break it down:

Before a prompt even reaches the model, input guardrails evaluate intent, safety, and access permissions. This includes filtering and sanitizing prompts to reject anything unsafe or nonsensical, enforcing access control for sensitive APIs or enterprise data, and detecting whether the user’s intent matches an approved use case.

Once the model produces a response, output guardrails step in to assess and refine it. They filter out toxic language, hate speech, or misinformation, suppress or rewrite unsafe replies in real time, and use bias mitigation or fact-checking tools to reduce hallucinations and ground responses in factual context.

Behavioral guardrails govern how models behave over time, particularly in multi-step or context-sensitive interactions. These include limiting memory to prevent prompt manipulation, constraining token flow to avoid injection attacks, and defining boundaries for what the model is not allowed to do.

These technical systems for guardrails work best when embedded across multiple layers of the AI stack.

A modular approach ensures that safeguards are redundant and resilient, catching failures at different points and reducing the risk of single points of failure. At the model level, techniques like RLHF and Constitutional AI help shape core behavior, embedding safety directly into how the model thinks and responds. The middleware layer wraps around the model to intercept inputs and outputs in real time, filtering toxic language, scanning for sensitive data, and re-routing when necessary. At the workflow level, guardrails coordinate logic and access across multi-step processes or integrated systems, ensuring the AI respects permissions, follows business rules, and behaves predictably in complex environments.

At a broader level, systemic and governance guardrails provide oversight throughout the AI lifecycle. Audit logs ensure transparency and traceability, human-in-the-loop processes bring in expert review, and access controls determine who can modify or invoke the model. Some organizations also implement ethics boards to guide responsible AI development with cross-functional input.

Conversational AI: where guardrails really get tested

Conversational AI brings a distinct set of challenges: real-time interactions, unpredictable user input, and a high bar for maintaining both usefulness and safety. In these settings, guardrails aren’t just content filters — they help shape tone, enforce boundaries, and determine when to escalate or deflect sensitive topics. That might mean rerouting medical questions to licensed professionals, detecting and de-escalating abusive language, or maintaining compliance by ensuring scripts stay within regulatory lines.

In frontline environments like customer service or field operations, there’s even less room for error. A single hallucinated answer or off-key response can erode trust or lead to real consequences. For example, a major airline faced a lawsuit after its AI chatbot gave a customer incorrect information about bereavement discounts. The court ultimately held the company accountable for the chatbot’s response. No one wins in these situations. That’s why it’s on us, as technology providers, to take full responsibility for the AI we put into the hands of our customers.

Building guardrails is everyone’s job

Guardrails should be treated not only as a technical feat but also as a mindset that needs to be embedded across every phase of the development cycle. While automation can flag obvious issues, judgment, empathy, and context still require human oversight. In high-stakes or ambiguous situations, people are essential to making AI safe, not just as a fallback, but as a core part of the system.

To truly operationalize guardrails, they need to be woven into the software development lifecycle, not tacked on at the end. That means embedding responsibility across every phase and every role. Product managers define what the AI should and shouldn’t do. Designers set user expectations and create graceful recovery paths. Engineers build in fallbacks, monitoring, and moderation hooks. QA teams test edge cases and simulate misuse. Legal and compliance translate policies into logic. Support teams serve as the human safety net. And managers must prioritize trust and safety from the top down, making space on the roadmap and rewarding thoughtful, responsible development. Even the best models will miss subtle cues, and that’s where well-trained teams and clear escalation paths become the final layer of defense, keeping AI grounded in human values.

Measuring trust: How to know guardrails are working

You can’t manage what you don’t measure. If trust is the goal, we need clear definitions of what success looks like, beyond uptime or latency. Key metrics for evaluating guardrails include safety precision (how often harmful outputs are successfully blocked vs. false positives), intervention rates (how frequently humans step in), and recovery performance (how well the system apologizes, redirects, or de-escalates after a failure). Signals like user sentiment, drop-off rates, and repeated confusion can offer insight into whether users actually feel safe and understood. And importantly, adaptability, how quickly the system incorporates feedback, is a strong indicator of long-term reliability.

Guardrails shouldn’t be static. They should evolve based on real-world usage, edge cases, and system blind spots. Continuous evaluation helps reveal where safeguards are working, where they’re too rigid or lenient, and how the model responds when tested. Without visibility into how guardrails perform over time, we risk treating them as checkboxes instead of the dynamic systems they need to be.

That said, even the best-designed guardrails face inherent tradeoffs. Overblocking can frustrate users; underblocking can cause harm. Tuning the balance between safety and usefulness is a constant challenge. Guardrails themselves can introduce new vulnerabilities — from prompt injection to encoded bias. They must be explainable, fair, and adjustable, or they risk becoming just another layer of opacity.

Looking ahead

As AI becomes more conversational, integrated into workflows, and capable of handling tasks independently, its responses need to be reliable and responsible. In fields like legal, aviation, entertainment, customer service, and frontline operations, even a single AI-generated response can influence a decision or trigger an action. Guardrails help ensure that these interactions are safe and aligned with real-world expectations. The goal isn’t just to build smarter tools, it’s to build tools people can trust. And in conversational AI, trust isn’t a bonus. It’s the baseline.

#access control#Agentic AI#agents#ai#ai alignment#AI Chatbot#AI development#AI guardrails#ai safety#ai-generated content#aiOla#Anatomy#APIs#approach#audit#automation#aviation#Behavior#benchmark#Bias#boards#Building#Business#challenge#Character.AI#chatbot#compliance#content#continuous#conversational ai

0 notes

Text

The Imperative Of AI Guardrails: A Human Factors Perspective

While AI tools and technology can provide useful products and services, the potential for negative impacts on human performance is significant. By mitigating these impacts through the establishment and use of effective guardrails, AI can be realized and negative outcomes minimized.

View On WordPress

0 notes

Note

Neil, if it isn’t too much trouble, I’ve got a question.

(Yeah ik that’s what the asks is for but I don’t know how to navigate tumblr yet)

So I’m sitting in the living room with my parents and my mom was talking to my dad about AI writing scripts. So I said something about the strikes. Then, she was talking about her “blind items”, (she reads the shi non-stop) and how it said that there is some sort of loophole in the contract that makes it so they can still use AI and not hire writers. I haven’t been as caught up with that stuff as I’ve wanted to be, so I didn’t really have a response for that. Since I know you’re very involved in this topic, I somewhat trust your word over my mother’s.

Perhaps your mother read some AI generated news? It's not true.

From Wired:

In short, the contract stipulates that AI can’t be used to write or rewrite any scripts or treatments, ensures that studios will disclose if any material given to writers is AI-generated, and protects writers from having their scripts used to train AI without their say-so. Provisions in the contract also stipulate that script scribes can use AI for themselves. At a time when people in many professions fear that generative AI is coming for their jobs, the WGA’s new contract has the potential to be precedent-setting, not just in Hollywood, where the actors’ strike continues, but in industries across the US and the world.

2K notes

·

View notes

Text

This. And I’m not ashamed to admit I have been using an LLM to help with brainstorming on my projects. But it’s a tool in the box, something to make me look at something from a different perspective. It’s not the end-all and be-all of my writing. Once the structure is where I want it to be — and that’s after considerable rewriting of the suggested ideas presented — then the work of creating my draft begins the usual way: by dumping brain, heart, and soul onto the screen via the keyboard.

I do this, and absolutely see the need for there to be legistlation, especially in regards to training and copyright. The stories coming out about studios scanning background workers, or the “brilliant” executive who thinks you can have a program write a script simply by pushing a button? The WGA and SAG-AFTRA are absolutely right to make this a major point in their contract ask. If they don’t take this stand now, they will forever be behind the eight-ball, because the executives will have gained an advantage and will run with it. Findaway Voices using the work of their voice actors to train AI without the consent of or compensation to those voice actors is shameful. And they got away with it because there wasn’t anything in the contract forbidding it, and no legislation saying they must obtain said consent or provide compensation.

All this needs to be changed. There needs to be rules to protect people from having their work used to train systems without their consent. We need laws concerning the creation and use of Deep Fakes. We need a number of things, but we need to accept it is here, first, because the genie is out of the bottle and the corporations are not going to be willing to put it back in.

A note to all creatives:

Right now, you have to be a team player. You cannot complain about AI being used to fuck over your industry and then turn around and use it on somebody else’s industry.

No AI book covers. No making funny little videos using deepfakes to make an actor say stuff they never did. No AI translation of your book. No AI audiobooks. No AI generated moodboards or fancasts or any of that shit. No feeding someone else’s unfinished work into Chat GPT “because you just want to know how it ends*” (what the fuck is wrong with you?). No playing around with AI generated 3D assets you can’t ascertain the origin of. None of it. And stop using AI filters on your selfies or ESPECIALLY using AI on somebody else’s photo or artwork.

We are at a crossroad and at a time of historically shitty conditions for working artists across ALL creative fields, and we gotta stick together. And you know what? Not only is standing up for other artists against exploitation and theft the morally correct thing to do, it’s also the professionally smartest thing to do, too. Because the corporations will fuck you over too, and then they do it’s your peers that will hold you up. And we have a long memory.

Don’t make the mistake of thinking “your peers” are only the people in your own industry. Writers can’t succeed without artists, editors, translators, etc making their books a reality. Illustrators depend on writers and editors for work. Video creators co-exist with voice actors and animators and people who do 3D rendering etc. If you piss off everyone else but the ones who do the exact same job you do, congratulations! You’ve just sunk your career.

Always remember: the artists who succeed in this career path, the ones who get hired or are sought after for commissions or collaboration, they aren’t the super talented “fuck you I got mine” types. They’re the one who show up to do the work and are easy to get along with.

And they especially are not scabs.

*that’s not even how it ends that’s a statistically likely and creatively boring way for it to end. Why would you even want to read that.

#wga strike#actors strike#let's be real: we're not getting rid of ai completely#but#there need to be rules for its use#ai has the potential to help loads of people get long tedious administrative work done a lot faster#it has potential as a creative tool#but that doesn’t mean you don’t put in guardrails#plus#why let the grifters and abusers flourish?#there will always be stupid people though who don’t bother to check their work before turning it in

60K notes

·

View notes

Text

there's a thing I keep noticing in AI critical circles where people will be like "well because generative AI works like this, it's impossible for the algorithm to ever accomplish X". but then if a piece of software uses some other solution to guardrail the algorithm into accomplishing X (subordinating the algorithm to larger more controlled systems, tying it to very specific reference data, cross-checking its output with human oversight) people are like SEE the AI isn't actually doing that part at all! as if that is the point, and not, accomplishing X

78 notes

·

View notes

Text

Warning: "Jagged" Peaks and Valleys on AGI Mountain

JB: Your CEO, Sundar Pichai describes AI in its present form as “AJI,” or Artificial Jagged Intelligence, in Business Insider article by Lakshmi Varanasi titled, “‘AJI’ is the precursor to ‘AGI,’ Google CEO Sundar Pichai Says.” Do you think this constitutes an apology in advance for a yet-to-happen catastrophic AI fuckup? I mean there are few things that “jagged” would be a desired adjective for,…

#AGI#AI#AI guardrails#AI Safeguards#AJI#artificial-intelligence#Business Insider#Jagged Performance#openai#Sundar Pichai#technology

0 notes

Text

Shane Jones, the AI engineering lead at Microsoft who initially raised concerns about the AI, has spent months testing Copilot Designer, the AI image generator that Microsoft debuted in March 2023, powered by OpenAI’s technology. Like with OpenAI’s DALL-E, users enter text prompts to create pictures. Creativity is encouraged to run wild. But since Jones began actively testing the product for vulnerabilities in December, a practice known as red-teaming, he saw the tool generate images that ran far afoul of Microsoft’s oft-cited responsible AI principles.

Copilot was happily generating realistic images of children gunning each other down, and bloody car accidents. Also, copilot appears to insert naked women into scenes without being prompted.

Jones was so alarmed by his experience that he started internally reporting his findings in December. While the company acknowledged his concerns, it was unwilling to take the product off the market.

Lovely! Copilot is still up, but now rejects specific search terms and flags creepy prompts for repeated offenses, eventually suspending your account.

However, a persistent & dedicated user can still trick Copilot into generating violent (and potentially illegal) imagery.

Yiiiikes. Imagine you're a journalist investigating AI, testing out some of the prompts reported by your source. And you get arrested for accidentally generating child pornography, because Microsoft is monitoring everything you do with it?

Good thing Microsoft is putting a Copilot button on keyboards!

414 notes

·

View notes

Text

The newly agreed terms of the Interactive Media Agreement between SAG-AFTRA and the video game industry represent "an enormous effort on both sides and a real desire to move forward in a constructive way", said voice actor Jennifer Hale.

Speaking to Eurogamer, Hale (known for her roles in Ratchet and Clank, Mass Effect, Metroid Prime and more) said she has "deep appreciation and respect for both sides of this equation", and the voice acting community is "relieved to have the freedom to work again".

Earlier this week, US actors' union SAG-AFTRA reached a tentative agreement after almost a year on strike over the need to protect performers from AI abuse. The union then instructed its members to return to work, effectively ending the strike.

Yesterday, SAG-AFTRA approved the new agreement and provided details on its terms. The contract will now be submitted to the membership for ratification.

SAG-AFTRA national executive director and chief negotiator Duncan Crabtree-Ireland previously stated the "necessary AI guardrails" have been put in place. We now know this includes the requirement of informed consent across AI uses, as well as compensation gains including collectively-bargained minimums for the use of "Digital Replicas", higher minimums for "Real Time Generation" (such as a chatbot), and "Secondary Performance Payments" when visual performances are re-used in another game.

Other parts of the agreement include increases in performer compensation and overtime rates, an increase in health and retirement contributions to the SAG-AFTRA Health Plan, as well as safety provisions such as the requirement for a qualified medical professional to be present at rehearsals and performances during planned hazardous actions, and the provision of appropriate rest periods.

The full terms of the agreement will be released on 18th June once the agreement is ratified.

"I'm really happy with the gains that were made in this tentative agreement," Hale told Eurogamer following this week's news. "I think it represents an enormous effort on both sides and a real desire to move forward in a constructive way that takes care of both performers and the people who put the work together.

"I think the producers have also been wonderfully open about what they've offered as well, which I deeply appreciate. That's one thing that's become really clear to me through this entire process, is how much the people on the other side are our work partners, and how much we are one single community. And I hope going forward, we really dig into that."

She added: "I am grateful that we have the ability to collectively bargain, because I do think without that, we actors would be stuck in a far more exploitative environment, which would suck."

39 notes

·

View notes

Text

Valve news and the AI

So. I assume people saw some posts going around on how valve has new AI rules, and things getting axed. And because we live in a society, I went down the rabbit hole to learn my information for myself. Here's what I found, under a cut to keep it easier. To start off, I am not a proponent of AI. I just don't like misinformation. So. Onwards.

VALVE AND THE AI

First off, no, AI will not take things over. Let me show you, supplemented by the official valve news post from here. (because if hbomberguy taught us anything it is to cite your sources)

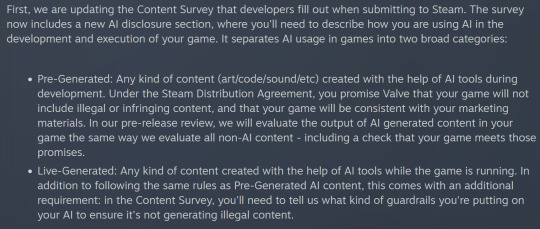

[Image id: a screenshot from the official valve blog. It says the following:

First, we are updating the Content Survey that developers fill out when submitting to Steam. The survey now includes a new AI disclosure section, where you'll need to describe how you are using AI in the development and execution of your game. It separates AI usage in games into two broad categories:

Pre-Generated: Any kind of content (art/code/sound/etc) created with the help of AI tools during development. Under the Steam Distribution Agreement, you promise Valve that your game will not include illegal or infringing content, and that your game will be consistent with your marketing materials. In our pre-release review, we will evaluate the output of AI generated content in your game the same way we evaluate all non-AI content - including a check that your game meets those promises.

Live-Generated: Any kind of content created with the help of AI tools while the game is running. In addition to following the same rules as Pre-Generated AI content, this comes with an additional requirement: in the Content Survey, you'll need to tell us what kind of guardrails you're putting on your AI to ensure it's not generating illegal content. End image ID]

So. Let us break that down a bit, shall we? Valve has been workshopping these new AI rules since last June, and had adopted a wait and see approach beforehand. This had cost them a bit of revenue, which is not ideal if you are a company. Now they have settled on a set of rules. Rules that are relatively easy to understand. - Rule one: Game devs have to disclose when their game has AI - Rule two: If your game uses AI, you have to say what kind it uses. Did you generate the assets ahead of time, and they stay like that? Or are they actively generated as the consumer plays? - Rule three: You need to tell Valve the guardrails you have to make sure your live-generating AI doesn't do things that are going against the law. - Rule four: If you use pre-generated assets, then your assets cannot violate copyright. Valve will check to make sure that you aren't actually lying.

That doesn't sound too bad now, does it? This is a way Valve can keep going. Because they will need to. And ignoring AI is, as much as we all hate it, not going to work. They need to face it. And they did. So. Onto part two, shall we?

[Image ID: a screenshot from the official Valve blog. It says the following: Valve will use this disclosure in our review of your game prior to release. We will also include much of your disclosure on the Steam store page for your game, so customers can also understand how the game uses AI. End image ID]

Let's break that down. - Valve will show you if games use AI. Because they want you to know that. Because that is transparency.

Part three.

[Image ID: A screenshot from the official Valve blog. It says the following:

Second, we're releasing a new system on Steam that allows players to report illegal content inside games that contain Live-Generated AI content. Using the in-game overlay, players can easily submit a report when they encounter content that they believe should have been caught by appropriate guardrails on AI generation.

Today's changes are the result of us improving our understanding of the landscape and risks in this space, as well as talking to game developers using AI, and those building AI tools. This will allow us to be much more open to releasing games using AI technology on Steam. The only exception to this will be Adult Only Sexual Content that is created with Live-Generated AI - we are unable to release that type of content right now. End Image ID]

Now onto the chunks.

Valve is releasing a new system that makes it easier to report questionable AI content. Specifically live-generated AI content. You can easily access it by steam overlay, and it will be an easier way to report than it has been so far.

Valve is prohibiting NSFW content with live-generating AI. Meaning there won't be AI generated porn, and AI companions for NSWF content are not allowed.

That doesn't sound bad, does it? They made some rules so they can get revenue so they can keep their service going, while also making it obvious for people when AI is used. Alright? Alright. Now calm down. Get yourself a drink.

---

Team Fortress Source 2

My used source here is this.

There was in fact a DCMA takedown notice. But it is not the only thing that led to the takedown. To sum things up: There were issues with the engine, and large parts of the code became unusable. The dev team decided that the notice was merely the final nail in the coffin, and decided to take it down. So that is that. I don't know more on this, so I will not say more, because I don't want to spread misinformation and speculation. I want to keep some credibility, please and thanks.

---

Portal Demake axed

Sources used are from here, here and here.

Portal 64 got axed. Why? Because it has to do with Nintendo. The remake uses a Nintendo library. And one that got extensively pirated at that. And we all know how trigger-happy Nintendo is with it's intellectual property. And Nintendo is not exactly happy with Valve and Steam, and sent them a letter in 2023.

[Image ID: a screenshot from a PC-Gamer article. It says the following: It's possible that Valve's preemptive strike against Portal 64 was prompted at least in part by an encounter with Nintendo in 2023 over the planned release of the Dolphin emulator for the Wii and Gamecube consoles on Steam. Nintendo sent a letter to Valve ahead of that launch that attorney Kellen Voyer of Voyer Law said was a "warning shot" against releasing it. End Image ID.]

So. Yeah. Nintendo doesn't like people doing things with their IP. Valve is most likely avoiding potential lawsuits, both for themselves and Lambert, the dev behind Portal 64. Nintendo is an enemy one doesn't want to have. Valve is walking the "better safe than sorry" path here.

---

There we go. This is my "let's try and clear up some misinformation" post. I am now going to play a game, because this took the better part of an hour. I cited my sources. Auf Wiedersehen.

159 notes

·

View notes

Text

a (good/bad/funny?) habit my brain has gotten into when seeing extremely contrived situations to set up a kink art scenario is saying "ah, hate when that happens"

there's no shame in it, we all need to be perilously walking over a vat of experimental liquid rubber AI with no guardrails on the walkway or winning lifetime supplies of food from time to time but it just makes me fucking giggle every time I see it

125 notes

·

View notes

Text

SplxAI Secures $7M Seed Round to Tackle Growing Security Threats in Agentic AI Systems

New Post has been published on https://thedigitalinsider.com/splxai-secures-7m-seed-round-to-tackle-growing-security-threats-in-agentic-ai-systems/

SplxAI Secures $7M Seed Round to Tackle Growing Security Threats in Agentic AI Systems

In a major step toward safeguarding the future of AI, SplxAI, a trailblazer in offensive security for Agentic AI, has raised $7 million in seed funding. The round was led by LAUNCHub Ventures, with strategic participation from Rain Capital, Inovo, Runtime Ventures, DNV Ventures, and South Central Ventures. The new capital will accelerate the development of the SplxAI Platform, designed to protect organizations deploying advanced AI agents and applications.

As enterprises increasingly integrate AI into daily operations, the threat landscape is rapidly evolving. By 2028, it’s projected that 33% of enterprise applications will incorporate agentic AI — AI systems capable of autonomous decision-making and complex task execution. But this shift brings with it a vastly expanded attack surface that traditional cybersecurity tools are ill-equipped to handle.

“Deploying AI agents at scale introduces significant complexity,” said Kristian Kamber, CEO and Co-Founder of SplxAI. “Manual testing isn’t feasible in this environment. Our platform is the only scalable solution for securing agentic AI.”

What Is Agentic AI and Why Is It a Security Risk?

Unlike conventional AI assistants that respond to direct prompts, agentic AI refers to systems capable of performing multi-step tasks autonomously. Think of AI agents that can schedule meetings, book travel, or manage workflows — all without ongoing human input. This autonomy, while powerful, introduces serious risks including prompt injections, off-topic responses, context leakage, and AI hallucinations (false or misleading outputs).

Moreover, most existing protections — such as AI guardrails — are reactive and often poorly trained, resulting in either overly restrictive behavior or dangerous permissiveness. That’s where SplxAI steps in.

The SplxAI Platform: Red Teaming for AI at Scale

The SplxAI Platform delivers fully automated red teaming for GenAI systems, enabling enterprises to conduct continuous, real-time penetration testing across AI-powered workflows. It simulates sophisticated adversarial attacks — the kind that mimic real-world, highly skilled attackers — across multiple modalities, including text, images, voice, and even documents.

Some standout capabilities include:

Dynamic Risk Analysis: Continuously probes AI apps to detect vulnerabilities and provide actionable insights.

Domain-Specific Pentesting: Tailors testing to the unique use-cases of each organization — from finance to customer service.

CI/CD Pipeline Integration: Embeds security directly into the development process to catch vulnerabilities before production.

Compliance Mapping: Automatically assesses alignment with frameworks like NIST AI, OWASP LLM Top 10, EU AI Act, and ISO 42001.

This proactive approach is already gaining traction. Customers include KPMG, Infobip, Brand Engagement Network, and Glean. Since launching in August 2024, the company has reported 127% quarter-over-quarter growth.

Investors Back the Vision for AI Security

LAUNCHub Ventures’ General Partner Stan Sirakov, who now joins SplxAI’s board, emphasized the need for scalable AI security solutions: “As agentic AI becomes the norm, so does its potential for abuse. SplxAI is the only vendor with a plan to manage that risk at scale.”

Rain Capital’s Dr. Chenxi Wang echoed this sentiment, highlighting the importance of automated red teaming for AI systems in their infancy: “SplxAI’s expertise and technology position it to be a central player in securing GenAI. Manual testing just doesn’t cut it anymore.”

New Additions Strengthen the Team

Alongside the funding, SplxAI announced two strategic hires:

Stan Sirakov (LAUNCHub Ventures) joins the Board of Directors.

Sandy Dunn, former CISO of Brand Engagement Network, steps in as Chief Information Security Officer to lead the company’s Governance, Risk, and Compliance (GRC) initiative.

Cutting-Edge Tools: Agentic Radar and Real-Time Remediation

In addition to the core platform, SplxAI recently launched Agentic Radar — an open-source tool that maps dependencies in agentic workflows, identifies weak links, and surfaces security gaps through static code analysis.

Meanwhile, their remediation engine offers an automated way to generate hardened system prompts, reducing attack surfaces by 80%, improving prompt leakage prevention by 97%, and minimizing engineering effort by 95%. These system prompts are critical in shaping AI behavior and, if exposed or poorly designed, can become major security liabilities.

Simulating Real-World Threats in 20+ Languages

SplxAI also supports multi-language security testing, making it a global solution for enterprise AI security. The platform simulates malicious prompts from both adversarial and benign user types, helping organizations uncover threats like:

Context leakage (accidental disclosure of sensitive data)

Social engineering attacks

Prompt injection and jailbreak techniques

Toxic or biased outputs

All of this is delivered with minimal false positives, thanks to SplxAI’s unique AI red-teaming intelligence.

Looking Ahead: The Future of Secure AI

As businesses race to integrate AI into everything from customer service to product development, the need for robust, real-time AI security has never been greater. SplxAI is leading the charge to ensure AI systems are not only powerful—but trustworthy, secure, and compliant.

“We’re on a mission to secure and safeguard GenAI-powered apps,” Kamber added. “Our platform empowers organizations to move fast without breaking things — or compromising trust.”

With its fresh capital and momentum, SplxAI is poised to become a foundational layer in the AI security stack for years to come.

#2024#Adversarial attacks#Agentic AI#agents#ai#ai act#AI AGENTS#AI guardrails#AI hallucinations#ai security#AI systems#AI-powered#Analysis#applications#approach#apps#assistants#Attack surface#attackers#autonomous#Behavior#board#book#CEO#chief information security officer#CI/CD#CISO#code#complexity#compliance

0 notes