#ALGEBRA

Explore tagged Tumblr posts

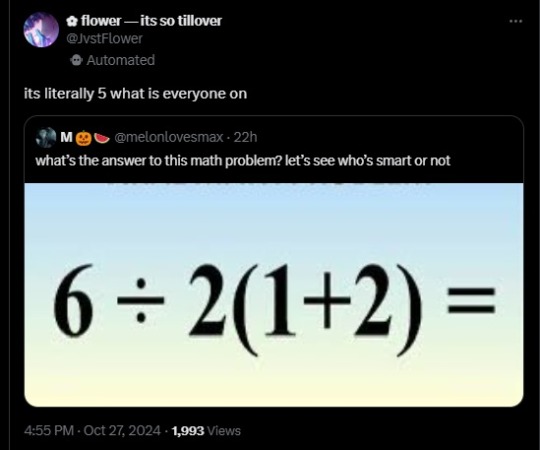

Text

Shit man, this algebra war is fucked. I just saw a guy clap his hands together and say "the six functors" or some similar shit, and every chain complex around him got put into a short exact sequence, had their long exact sequence taken out and then got their homology calculated. The camera didn't even go onto him, that's how common shit like this is. My ass is casting Lagrange's theorem and degree 2 equations. I think I just heard "power word: operad" two groups over. I gotta get the fuck outta here.

816 notes

Text

Given a functor T : C -> Set, the category of T-spaces is denoted by Sp(T) and defined as follows:

1. An object of Sp(T) is a pair (X, J) consisting of an object X in C alongside a subset J of T(X).
2. A morphism of Sp(T) from (X, J) to (Y, K) consists of a morphism f : X -> Y such that U ∈ J implies T(f)(U) ∈ K.

Choose C = Set^op and T the contravariant power-set functor. We can then recover topological spaces as a subcategory of Sp(T)^op consisting of those objects which are projective with respect to the following morphisms for all sets A, all U, V ⊆ A, and all J ⊆ 𝒫(A):

1. (A, {∅, A}) -> (A, ∅)
2. (A, {U, V, U ∩ V}) -> (A, {U, V})
3. (A, J ∪ {∪J}) -> (A, J)

Sort of neat.
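For finite sets this can be poked at by hand. The sketch below assumes my own reading of the construction: the three lifting conditions amount to the usual axioms (contains ∅ and A, closed under binary intersections, closed under unions), and a morphism in Sp(T)^op is a function whose preimages of open sets are open. The names `is_topology` and `is_morphism` are made up for illustration.

```python
from itertools import combinations

def is_topology(points, opens):
    """Check the three closure conditions on a finite family of subsets."""
    points = frozenset(points)
    opens = {frozenset(u) for u in opens}
    # Condition 1: the empty set and the whole set belong to the family.
    if frozenset() not in opens or points not in opens:
        return False
    # Conditions 2 and 3: closed under binary intersections and unions.
    # For a finite family, binary unions (plus the empty set) give all unions.
    for u, v in combinations(opens, 2):
        if u & v not in opens or u | v not in opens:
            return False
    return True

def is_morphism(g, dom_opens, cod_opens):
    """g is a dict sending domain points to codomain points.

    It is a morphism when the preimage of every codomain open set
    is one of the domain open sets.
    """
    dom_opens = {frozenset(u) for u in dom_opens}
    for u in cod_opens:
        preimage = frozenset(x for x, y in g.items() if y in u)
        if preimage not in dom_opens:
            return False
    return True

sierpinski = [set(), {1}, {0, 1}]
discrete = [set(), {0}, {1}, {0, 1}]
identity = {0: 0, 1: 1}

print(is_topology({0, 1}, sierpinski))
print(is_morphism(identity, discrete, sierpinski))
print(is_morphism(identity, sierpinski, discrete))
```

The identity map is a morphism from the discrete space to the Sierpinski space but not the other way round, since the preimage of {0} is not open in the Sierpinski space.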

29 notes

Text

Twilight fails to teach Trixie algebra

#mlp#horse#mlp art#gen 4 mlp#my little pony#twilight sparkle#trixie lulamoon#twixie#algebra#maths posting#maths#horse maths#horse phd

625 notes

Text

Poll time, but this time linear algebra.

Arguments for any position appreciated!

170 notes

Text

An algebraic equation yields a modernist shape. Plane algebraic curves. 1920.

Internet Archive

833 notes

Text

My friend and I were being taught advanced algebra concepts except in a very dumbed down, simplistic way so it would be easier to understand.

Except, my friend already understood all of it. He would say, "Oh, that's an integral of 0 to pi," and the teacher would say, "Well yes, but the answer I'm looking for is 'red'."

This Math class was interspersed with sessions on the Grimace Shower and Workout Machine, a Grimace-themed workout machine where you would hold yourself horizontal with your arms, play a shitty arcade game with your feet, and a tiny little spout would occasionally pour water onto your chest.

#dream#teaching#learning#algebra#advanced algebra#grimace#grimace shower#workout#workout machine#working out#game#gaming#arcade game

895 notes

Text

So what's up with dividing by zero anyways - a ramble on algebraic structures

Most everyone in the world (at least in theory) knows how to add, subtract, multiply, and divide numbers. You can always add two numbers, subtract two numbers, and multiply two numbers. But you must **never** divide by zero... or something along those lines. There's often a line of logic that leads to dividing by zero leading to "infinity," whatever infinity means, unless you're doing 0/0, whatever that means either. Clearly this is a problem! We can't have such inconsistencies in our fundamental operations! Why aren't our top mathematicians working on this?

So, that might be a bit of an exaggeration: division by zero isn't really a problem at all and is, for all intents and purposes, fairly well understood, but to see why we'll have to take a crash course through algebra (the field of math, not the grade school version). Sorry for those of y'all who have seen fields and projective space before, not much to gain out of this one.

Part I: In the beginning, we had a Set.

As is true with most things in math, the only structure we start with is a set. A set isn't useful for much; all we can do with a single set is say what elements are and aren't in the set. Once you have more than one set, you start getting interesting things like unions or intersections or functions or Cartesian products, but none of those are _really_ that useful (or at least necessary) for understanding algebraic structures at the level we need, so a single set is what we start with and a single set there will be. The story then goes as follows: on the first day the lord said "Let there be an operation!" and it was so. If you want to be a bit of a nerd, a (binary) operation on a set A is formally a map * : A x A -> A, but for our purposes we just need to know that it matches the standard operations most people know (i.e. addition, subtraction, multiplication, but not division) in that for any two numbers a and b, we can do a * b and get another number. Of course, once again this is not very helpful on its own, and so we need to impose some more conditions on this operation for it to be useful for us. Not to worry though, these conditions are almost always ones you know well, if not by name, and come rather intuitively.

The first structure we'll discuss is that of a monoid: a set with an operation that is associative and has an identity. Associativity simply means that (a * b) * c = a * (b * c), and an identity simply means that we have some special element e such that a * e = e * a = a. For two simple examples and one nonexample: the natural numbers (with 0) under addition are a monoid, since 0 + a = a + 0 = a and any two natural numbers add to another natural number; the integers under multiplication are a monoid, since 1 * a = a * 1 = a and any two integers multiply to another integer; and the integers under subtraction are not a monoid, since subtraction is not associative (a - (b - c) =/= (a - b) - c). In both of the examples, the operation is commutative: in other words, a * b = b * a for every a and b. There are plenty of examples of operations that are not commutative, matrix multiplication or function composition probably being the most famous, but for the structures we're going to be interested in later, operations are almost always commutative, so we can just assume that from the start.
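If you'd rather see the axioms than read them, here is a minimal sketch that spot-checks associativity and the identity law on a small finite sample. The helper names are made up for this post, and a finite check can only refute the axioms, never prove them for all natural numbers or integers.

```python
from itertools import product

def is_associative(op, sample):
    """True if (a op b) op c == a op (b op c) for every triple from sample."""
    return all(op(op(a, b), c) == op(a, op(b, c))
               for a, b, c in product(sample, repeat=3))

def is_identity(op, e, sample):
    """True if e is a two-sided identity for op on every element of sample."""
    return all(op(a, e) == a and op(e, a) == a for a in sample)

def looks_like_monoid(op, e, sample):
    # A finite spot check only: passing it does not prove the axioms.
    return is_associative(op, sample) and is_identity(op, e, sample)

naturals = range(0, 6)    # a small sample of the natural numbers
integers = range(-5, 6)   # a small sample of the integers

print(looks_like_monoid(lambda a, b: a + b, 0, naturals))  # addition
print(looks_like_monoid(lambda a, b: a * b, 1, integers))  # multiplication
print(is_associative(lambda a, b: a - b, integers))        # subtraction
```

The first two checks pass and the third fails, for instance because (1 - 1) - 1 = -1 while 1 - (1 - 1) = 1.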

Of course, you might wonder where subtraction comes from, if it doesn't fit into a monoid structure (and in particular isn't associative). Not to worry! We can simply view subtraction as another type of addition, and our problems go away. In particular, we add the condition that for every a, we have an inverse element a⁻¹ (or -a if our operation is addition) such that a * a⁻¹ = a⁻¹ * a = e. For fans of universal algebra, just as a binary operation can be thought of as a function, the inverse can be thought of as a function i : A -> A that sends each element to its inverse. This forms a structure we know as a group. While none of the above examples form a group, one of them can be naturally extended to a group: if we simply add negative whole numbers to natural numbers, we get the group of integers under addition, where for any integer a, we have its inverse -a where a + -a = 0. In particular, the subtraction a - b is just a + -b = -b + a, where -b is the additive inverse of b. As we will soon see, division can also be thought of in a similar way, where a/b = a * /b = /b * a where /b is the multiplicative inverse of b. As a side note, the examples above are very specific types of monoids and groups which turn out to be quite far from the general ideas that monoids and groups are trying to encapsulate. Monoids show up often in computer science as they're a good model for describing how a list of commands affects a computer, and groups are better thought of as encapsulating symmetries of an object (think of the ways you can rotate and reflect a square or a cube).

Part II: So imagine if instead of one operation, we have... two...

If you've ever taken introductory algebra, you've probably never heard of monoids and only done groups. This is partially because monoids are much less mathematically interesting than groups are and partially because monoids are just not as useful when thinking about other things. For the purposes of this post, however, the logical steps from Set -> Monoid -> Group are surprisingly similar to the steps Group -> Ring -> Field, so I've chosen to include it regardless.

Just as we started from a set and added an operation to make a monoid, here we start from an additive group (i.e. a group where the operation is addition) and add another operation, namely multiplication, that acts on the elements of the group. Just like in the monoid, we will impose the condition that multiplication is associative and has an identity, namely 1, but we also impose the condition that multiplication meshes nicely with addition in what you probably know as the distributive properties. What we end up with is a ring, something like the integers, where you can add, subtract, and multiply, but not necessarily divide (for example, 2 doesn't have a multiplicative inverse in the integers, as a * 2 = 1 has no integer solutions). Similarly, when we add in multiplicative inverses to every nonzero element, we get a field, something like the rational numbers or the real numbers, where we can now divide by every nonzero number. In other words, a ring is an additive group with a multiplicative monoid, and a field is an additive group with a subset that is a multiplicative group (in particular the subset that is everything except zero). For those who want to be pedantic, multiplication in a ring doesn't have to be commutative, but addition is, and both addition and multiplication are commutative in a field. (A full list of the conditions we impose on the operations of a monoid, group, ring, and field can be found here.)
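To make the ring versus field difference concrete, here is a small sketch using Python's built-in integers for the ring and `fractions.Fraction` for the field of rationals. The function names and the search window are my own choices for illustration; the integer search is a bounded spot check, not a proof.

```python
from fractions import Fraction

def has_integer_inverse(n, search=range(-100, 101)):
    """Look for an integer a in a bounded window with a * n == 1."""
    return any(a * n == 1 for a in search)

def field_inverse(q):
    """Multiplicative inverse in the rationals; 0 is the one exception."""
    q = Fraction(q)
    if q == 0:
        raise ZeroDivisionError("0 has no multiplicative inverse in a field")
    return 1 / q

print(has_integer_inverse(2))   # no integer a satisfies a * 2 == 1
print(has_integer_inverse(-1))  # -1 is its own inverse
print(field_inverse(2))         # the rationals do contain 1/2
```

In the integers only 1 and -1 pass the check, while in the rationals every nonzero element has an inverse.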

So why can't we have a multiplicative inverse to 0 in a field? As it turns out, this is because 0 * a = 0 for every a, so nothing times 0 gets you to 1. There is technically a structure you can have if 0 = 1, but it turns out there's only the one single element 0 in that structure and nothing interesting happens, so generally fields specifically don't allow 0 = 1. Then, what if instead we relaxed the condition that 0 * a = 0? Similarly, it turns out that this isn't one of the fundamental conditions on multiplication, but rather arises from the other properties (a simple proof is a * 0 = a * (0 + 0) = a * 0 + a * 0 implies 0 = a * 0 - a * 0 = a * 0 + a * 0 - a * 0 = a * 0). If we were to relax this condition, then we lose some of the other nice properties that we built up. This will be a recurring theme throughout the rest of this post, so be wary.
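For anyone who wants the one-line proof above spelled out, here it is step by step. It is the same argument, just with each step labeled; nothing beyond the ring axioms is assumed.

```latex
\begin{align*}
a \cdot 0 &= a \cdot (0 + 0) && \text{since } 0 + 0 = 0 \\
          &= a \cdot 0 + a \cdot 0 && \text{by distributivity} \\
\implies 0 &= a \cdot 0 - a \cdot 0 && \text{additive inverse of } a \cdot 0 \\
           &= (a \cdot 0 + a \cdot 0) - a \cdot 0 && \text{substituting the line above} \\
           &= a \cdot 0 + (a \cdot 0 - a \cdot 0) && \text{by associativity} \\
           &= a \cdot 0 + 0 = a \cdot 0.
\end{align*}
```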

Part III: We can't have everything we want in life.

While all the structures so far have been purely algebraic and purely algebraically motivated, the simplest way to start dividing by zero is actually "geometric," with several different ways of constructing the same space. The construction we'll use is as follows: take any field, particularly the real numbers or the complex numbers. We can always take the Cartesian product of a field K with itself to form what's called affine space K^2, which is the set of ordered pairs (a,b) for a, b in K. As a side note, the product of groups, rings, or fields has a natural definition of addition or whatever the underlying group operation is by doing it componentwise, i.e. (a,b) * (c,d) = (a * c, b * d), but our operations will not coincide with this, as you'll see soon. This affine space is a plane - in fact, when we do this to the real numbers, we get the Cartesian plane - within which we can construct lines, some of which we get by considering the set of points (x, y) satisfying the familiar equation y = mx + b for some 'slope' m and 'intercept' b. In particular, we want to characterize all the lines through the origin. This gives us all the lines of the form y = mx, as well as one additional line x = 0. This is the basic construction of what we call the projective line, a space characterizing all the lines through the origin of affine 2-space. The geometric picture of this space is actually a circle: the bottom point representing the number 0; the left and right halves representing negative and positive numbers, respectively; and the top point representing the number "infinity."

There are a few ways of describing points on the projective line. The formal way of doing so is by using what are called homogeneous coordinates. In other words, for any nonzero point (a,b) in affine space, it is surely true that we can find a line through the origin and (a,b). In particular, if a is not zero, then this line takes the form y = (b/a) x where the slope is b/a. Furthermore, any two points (a,b) and (c,d) can actually sit on the same line, in particular whenever c = ka and d = kb for some nonzero number k. Thus, we can define homogeneous coordinates as the set of points [a : b] for a, b in our field where [a : b] = [ka : kb] by definition, and the point [0 : 0] is not allowed as it doesn't specify any particular line (after all, every line passes through the origin). As is alluded to above, however, this means that whenever a =/= 0, we can take k = 1/a to get [a : b] = [1 : b/a], in other words characterizing each line by its slope. Furthermore, whenever a = 0, we can take k = 1/b to get [0 : b] = [0 : 1]. In other words, the projective line is, as we informally stated above, equivalent to the set of slopes of lines through the origin plus one other point representing the vertical line, the point at "infinity." Since slopes are just numbers in a field, we can add, subtract, multiply, and divide them as we normally do with one exception: the slope of the line containing [a : b] for any a =/= 0 is b/a, so clearly the line with infinite slope consisting of points [0 : b] implies that b/0 should be infinity. Voila! We can divide by zero now, right? Well... there are two loose ends to tie down. The first is what infinity actually means in this case, since it is among the most misunderstood concepts in mathematics.
Normally, when people bandy about phrases such as "infinity isn't a number, just a concept" or "some infinities are different from others" they are usually wrong (but well meaning) and also talking about a different kind of infinity, the ones that arise from cardinalities. Everything in math depends on the context in which it lies, and infinity is no different. You may have heard of the cardinal infinity, the subject of Hilbert's Hotel, describing the size of sets and written primarily with aleph numbers. Similarly, you may also have heard of the ordinal infinity, describing the "place" in the number line greater than any natural number. Our infinity is neither of these: it is to some extent an infinity by name only, called such primarily to take advantage of the intuition behind dividing by zero. It's not "greater" than any other number (in fact, the normal ordering of an ordered field such as the real numbers breaks down on the projective line), and this is a consequence of the fact that if you make increasingly negative and increasingly positive slopes you end up near the same place: a vertical line. In other words, "negative infinity" and "positive infinity" are the same infinity.
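The rule [a : b] = [ka : kb] can be made concrete by picking one canonical representative per line. Here's a minimal sketch over the rationals: `normalize` and `same_point` are names I made up, and the choice of [1 : b/a] and [0 : 1] as representatives just follows the k = 1/a and k = 1/b scalings described above.

```python
from fractions import Fraction

def normalize(a, b):
    """Canonical representative of [a : b] on the projective line.

    Returns (1, b/a) when a != 0 (the line with slope b/a),
    and (0, 1) when a == 0 (the vertical line, "infinity").
    """
    a, b = Fraction(a), Fraction(b)
    if a == 0 and b == 0:
        raise ValueError("[0 : 0] does not name a line")
    if a != 0:
        return (Fraction(1), b / a)
    return (Fraction(0), Fraction(1))

def same_point(p, q):
    """Two coordinate pairs name the same line iff they normalize equally."""
    return normalize(*p) == normalize(*q)

print(normalize(2, 4))                 # slope 4/2
print(same_point((1, 0), (2, 0)))      # both are the line with slope 0
print(same_point((0, 5), (0, -3)))     # both are the vertical line
print(same_point((1, 1000), (0, 1)))   # a steep line is still not vertical
```

Note that [0 : 5] and [0 : -3] land on the same point, which is the "positive and negative infinity are the same infinity" statement in coordinates.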

The second loose end is that defining our operations this way is actually somewhat algebraically unsound, at least with respect to the way we think about operations in groups, rings, and fields. As mentioned above, the operation of addition can be lifted to affine space as (a,b) + (c,d) = (a+c,b+d). However, this same operation can't really be used for homogeneous coordinates, since [1 : 0] = [2 : 0] as they lie on the same line (the line with slope 0), but [1 : 0] + [1 : 1] = [2 : 1] while [2 : 0] + [1 : 1] = [3 : 1], and [2 : 1] and [3 : 1] are not the same line, as they have slopes 1/2 and 1/3, respectively. Dividing by zero isn't even needed to get weirdness here. Luckily, we can simply define new operations by taking inspiration from fractions: b/a + d/c = (bc + ad)/ac, so we can let [a : b] + [c : d] equal [ac : bc + ad] (remembering that homogeneous coordinates do to some extent just represent the slope). Luckily, multiplication still works nicely, so we have [a : b] * [c : d] = [ac : bd]. Unluckily, with these definitions, we no longer get a field. In particular, we don't even have an additive group anymore: [a : b] + [0 : 1] = [0 : a] = [0 : 1] whenever a =/= 0, so any number plus infinity is still infinity. In other words, infinity doesn't have an additive inverse. Furthermore, despite ostensibly defining infinity as 1/0, the multiplicative inverse of 0, we have that [1 : 0] * [0 : 1] = [0 : 0], by our rules, which isn't defined. Thus, 0 still doesn't have a multiplicative inverse and 0/0 still doesn't exist. It seems like we still haven't really figured out how to divide by zero, after all this. (Once again, if you want to read up on the projective line, which is a special case of projective space, which is a special case of the Grassmannian, in more depth.)
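Here's a small sketch of those fraction-inspired operations over the rationals, so you can watch them behave and then break. The function names and the decision to raise `ValueError` on [0 : 0] are my own choices for illustration.

```python
from fractions import Fraction

def normalize(a, b):
    """Canonical representative of [a : b]; [0 : 0] is rejected."""
    a, b = Fraction(a), Fraction(b)
    if a == 0 and b == 0:
        raise ValueError("[0 : 0] is not a point of the projective line")
    return (Fraction(1), b / a) if a != 0 else (Fraction(0), Fraction(1))

def add(p, q):
    """[a : b] + [c : d] = [ac : bc + ad], i.e. b/a + d/c = (bc + ad)/ac."""
    (a, b), (c, d) = p, q
    return normalize(a * c, b * c + a * d)

def mul(p, q):
    """[a : b] * [c : d] = [ac : bd]."""
    (a, b), (c, d) = p, q
    return normalize(a * c, b * d)

ZERO = normalize(1, 0)       # slope 0
INFINITY = normalize(0, 1)   # the vertical line

# Unlike componentwise addition, the result does not depend on the representative.
print(add((1, 0), (1, 1)) == add((2, 0), (1, 1)))
# 1/2 + 1/3, written as [2 : 1] + [3 : 1].
print(add((2, 1), (3, 1)))
# A finite slope plus infinity is infinity again.
print(add((1, 3), INFINITY) == INFINITY)
# 0 * infinity lands on the forbidden [0 : 0].
try:
    mul(ZERO, INFINITY)
except ValueError as err:
    print("0 * infinity:", err)
```

With these formulas, infinity + infinity also comes out as [0 : 0], so it is undefined for the same reason as 0 * infinity.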

Part IV: I would say wheels would solve all our problems, if not for the fact that they just make more problems.

At this point, to really divide by zero properly, we're going to need to bite the bullet and change what dividing really means. Just as we can think of subtraction as adding the additive inverse (i.e. a - b = a + -b where -b was a number), we can start thinking of division as just multiplying by... something, i.e. a/b = a * /b, where /b is something vaguely related to the multiplicative inverse. We can already start doing this in the projective line, where we can define /[a : b] = [b : a], and it works nicely as [a : b] * [b : a] = [ab : ab] = [1 : 1] whenever neither a nor b is zero. This lets us rigorize the statements 1/infinity = 0, infinity/0 = infinity, and 0/infinity = 0, but doesn't really help us do 0/0 or infinity/infinity. Furthermore, note that because 0/0 =/= 1, /[a : b] isn't really the multiplicative inverse of [a : b], it's just the closest we can get.

Enter the wheel! If 0/0 is undefined, then we can simply... define it. It worked so nicely for adding in infinity, after all - the picture of the point we added for infinity is taking a line and curling it up into a circle, and I like circles! Surely adding another point for 0/0 would be able to provide a nice insight just as turning a line into the projective line did for us.

So here's how you make a wheel:

You take a circle.

You add a point in the middle.

Yeah that's it. The new point, usually denoted by ⊥, is specifically defined as 0/0, and really just doesn't do anything else. Just like for infinity, we still have that a + ⊥ = ⊥ and a * ⊥ = ⊥ for all a (including infinity and ⊥). It doesn't fit into an order, it doesn't fit in topologically, it is algebraically inert both with respect to addition and multiplication. It is the algebraic formalization of the structure that gives you NaN whenever you fuck up in a calculator and the one use of it both inside and outside mathematics is that it lets you be pedantic whenever your elementary school teacher says "you can't divide by zero" because you can go "yeah you can it's just ⊥ because i've been secretly embedding all my real numbers into a wheel this whole time" (supposing you can even pronounce that).
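If you want to play with one, here's a minimal sketch. It assumes a particular model that this post doesn't spell out: keep the same pairs [a : b] and the same formulas as on the projective line, but stop forbidding [0 : 0] and call it ⊥. All the names (`number`, `inv`, `div`, `BOTTOM`) are made up for illustration.

```python
from fractions import Fraction

BOTTOM = (Fraction(0), Fraction(0))     # ⊥, the value of 0/0
INFINITY = (Fraction(0), Fraction(1))   # the value of 1/0

def normalize(a, b):
    """Canonical representative of [a : b], now allowing [0 : 0]."""
    a, b = Fraction(a), Fraction(b)
    if a != 0:
        return (Fraction(1), b / a)
    return INFINITY if b != 0 else BOTTOM

def number(x):
    """Embed an ordinary rational x as the point [1 : x]."""
    return normalize(1, x)

def add(p, q):
    (a, b), (c, d) = p, q
    return normalize(a * c, b * c + a * d)

def mul(p, q):
    (a, b), (c, d) = p, q
    return normalize(a * c, b * d)

def inv(p):
    """/[a : b] = [b : a]; defined for every element, including 0 and ⊥."""
    a, b = p
    return normalize(b, a)

def div(p, q):
    return mul(p, inv(q))

print(div(number(6), number(3)) == number(2))   # ordinary division still works
print(div(number(1), number(0)) == INFINITY)    # 1/0
print(div(number(0), number(0)) == BOTTOM)      # 0/0
print(add(number(5), BOTTOM) == BOTTOM)         # ⊥ absorbs addition
print(mul(INFINITY, BOTTOM) == BOTTOM)          # ⊥ absorbs multiplication
```

Every check prints True, and nothing ever raises: that's the whole trick, since division is now a total operation and ⊥ soaks up everything that used to be undefined.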

Part V: So what was the point of all this anyways

The wheel is charming to me because it is one of the structures in mathematics where you can tell someone just asked a question of "what if this was true," built some space where it was, and just started toying with it to see what happens. It's a very human and very beautiful thing to see someone go against conventional knowledge and ask "what breaks when you allow 0/0" even if conventional knowledge does tend to be right most of the time. In this sense, perhaps the uselessness of the wheel is the point, that even despite how little ⊥ does from a mathematical lens, some people still took the time to axiomatize this system, to find a list of conditions that were both consistent and sufficient to describe a wheel, and genuinely do actual work seeing how it fits in within the universe of algebraic structures that it stays in.

While a wheel may not be used for much (it might be describable in universal algebra while a field isn't, though I'm not too well versed in universal algebra so I'm not actually entirely sure), every other structure discussed above is genuinely well studied and applicable within many fields inside and outside of math. For more viewpoints on what the projective line (and in general the projective sphere) is used for, some keywords to help you on your way are compactification of a set if you care about the topological lens, the real projective line or the Riemann Sphere if you care more about the analysis side, or honestly the entirety of classical algebraic geometry if that's your thing.

Another structure that might be interesting to look at is the general case of common meadows, an algebraic structure (M, 0, 1, +, -, *, /) where the condition that / be involutive is relaxed (i.e. /(/x) is not always x), unlike a wheel where it is always involutive. Note that these structures are called meadows because the base structure they worked on is a field (get it? not our best work I promise mathematicians are funnier than this). These structures are at the very least probably more interesting than wheels, though I haven't checked them out in any amount of detail either so who knows, perhaps there isn't much of substance there either.

131 notes

Text

Happy Easter! As a gift, take a bad math joke.

61 notes

Note

hey! I'm a 4th year math undergrad in the States and I am astounded by your knowledge of algebra. it's my favorite branch of math and I know a lot more than my peers but not nearly as much as you. where did you learn? any textbook recommendations?

keep up the great mathematics and posts!

haha, well, I don't know that much algebra to be honest (me using a fancy word in a joke means i have heard of it before, not that I actually know how to work with it!)

But yknow I could give out some resources, so here they are (so far I have mostly learned from classes but yknow i'm at that point where i'm starting to need to transition from listening to someone ramble to reading someone's ramblings and then rambling myself)

For basic linear algebra I didn't learn through a textbook, but I have heard good things about Sheldon Axler's Linear Algebra Done Right and it seems similar to what the classes I had did (besides the whole hating on determinants part, though I kinda get it).

For some introductory group theory, I also had a class on it, but the lecture notes are wonderful. I would happily give the link to them here but since they're specifically the lecture notes of the class from my uni I would be kinda doxxing myself. Also they're in French. I will give out some of the references my prof gave in the bibliography of the lecture notes (I have not read them, pardon me if they're actually terrible and shot your dog): Finite Groups, an Introduction by Serre (pdf link), Linear Representations of Finite Groups also by Serre (pdf link), Algebra by Serge Lang (pdf link). Since our prof is a number theorist he sometimes went on number theory tangents and for that there's Serre's A Course in Arithmetic (pdf link). I'm starting to think our prof likes how Serre writes.

For pure category theory and homological algebra I have read part of these lecture notes. I think a good book for category theory is Emily Riehl's Category Theory in Context (pdf link). For homological algebra, a famous book that I have read some parts of is Weibel's An Introduction to Homological Algebra (pdf link). Warning: all the pdfs I found of it on the internet have some typographygore going on. If anyone knows of a good pdf please tell me.

For commutative algebra, A Term of Commutative Algebra by Altman and Kleiman (pdf link). I haven't read all of it (I intend to read more as I need more CA) but the parts of it I read are good. It also has solutions to the exercises which is neat.

For algebraic geometry (admittedly not fully algebra), I am currently reading Ravi Vakil's The Rising Sea, and I intend on getting a physical copy when it gets published because I like it. It tries to have few prerequisites, so for instance it has chapters on category theory and sheaf theory (though I don't claim it is the best place to learn category theory).

For algebraic topology (even less fully algebra, but yknow), I have learned singular cohomology and some other stuff using Hatcher. I know some people despise the book (and I get where they're coming from). For "basic" algebraic topology i.e. the fundamental group and singular homology I have learned through a class and by reading Topologie Algébrique by Félix and Tanré (pdf link). The book is very good but only in French AFAIK.

For (basic) homotopy theory (does it count as algebra? not fully but what you gonna do this is my post) I have read the first part of Bruno Vallette's lecture notes. I don't know if they're that good. Now I'm reading a bit of obstruction theory from Davis and Kirk's Lecture Notes in Algebraic Topology (pdf link) and I like it a lot! The only frustrating part is when you want to learn one specific thing and find they left it as a "Project", but apart from that I like how they write. It also has exercises within the text which I appreciate.

For pure sheaf theory, a friend recommended me Torsten Wedhorn's Manifolds, Sheaves and Cohomology, specifically chapter 3 (which is, you guessed it, the chapter on sheaves). I only read chapter 3, and I think it was alright (maybe a bit dry). I also gave up at the inverse image sheaf because I can only tolerate so much pure sheaf theory. I will come back to it when I need it. The whole book itself actually does differential geometry, but using the language of modern geometry i.e. locally ringed spaces. I have no idea how good it is at that or how good this POV is in general, read at your own risk.

Also please note I have not fully read through any of these references, but I don't think you're supposed to read every math book you ever touch cover to cover.

thanks for the kind comments, and I hope at least one of the things above may be helpful to you!

#ask#algebraic-dumbass#math#mathblr#math books#math resources#math textbooks#algebra#category theory#sheaf theory#algebraic topology#algebraic geometry#homotopy theory#group theory#linear algebra

48 notes

Text

I sort of want to start a mathematics competition to find the most elaborate possible proofs of simple mathematical theorems. For instance, could one deploy a spectral sequence to show that the Euclidean division algorithm works? That is the sort of reckless fun I want to see in the world.

66 notes

Text

[Purple socks argue with the spaghetti moon. The sky whispers algebra into a bowl of soup. Bananas wear hats and sing to forgotten trains. A toaster floats by, dreaming of electric clouds. Elephants knit sweaters out of melted clocks. The sidewalk hums like a jellybean in space. Chairs do the tango with invisible birds. A watermelon tells secrets to a laughing shoe. Time hiccups and spills mustard on the stars. The universe sneezes, and the trees turn blue.]

#s21e13 oldies but goodies#guy fieri#guyfieri#diners drive-ins and dives#purple socks#spaghetti moon#electric clouds#melted clocks#invisible birds#laughing shoe#time hiccups#sky#algebra#bowl#soup#bananas#hats#trains#toaster#elephants#sweaters#sidewalk#jellybean#space#chairs#tango#watermelon#secrets#spills#stars

112 notes

Text

Mathematics: The Story of Numbers, Symbols and Space. The Golden Library of Knowledge - 1958.

#vintage illustration#vintage books#books#children’s books#educational books#science books#math#mathematics#book covers#vintage book covers#golden library of knowledge#knowledge#learning#numbers#number theory#algebra#geometry#reason & logic#calculus#probability#statistics#maths

43 notes
