#ArtificialNeuralNetwork

Text

Artificial Neural Networks in a Deep Learning Perspective

Artificial Neural Networks (ANNs) are central to deep learning, revolutionizing industries like healthcare, finance, and automotive. The Computer Science and Engineering department at K. Ramakrishnan College of Technology (KRCT) equips students with cutting-edge skills in ANNs, blending theory and hands-on projects. KRCT's focus on advanced AI ensures graduates are prepared to lead future innovations.

#ArtificialNeuralNetworks#top college of technology in trichy#krct the top college of technology in trichy#quality engineering and technical education.#k ramakrishnan college of technology trichy#training and engineering placement#DeepLearning#AIRevolution#MachineLearningModels#FutureOfTechnology#ANNsInIndustry#ConvolutionalNeuralNetworks#ArtificialIntelligenceInsights#RecurrentNeuralNetworks#ExplainableAI#AIInHealthcare#AIInEducation

0 notes

Text

The Power of Transfer Learning in Modern AI

Transfer Learning has emerged as a transformative approach in the realm of machine learning and deep learning, enabling more efficient and adaptable model training. Here's an in-depth exploration of this concept:

Concept

Transfer Learning is a technique where a model developed for a specific task is repurposed for a related but different task. This method is particularly efficient as it allows the model to utilize its previously acquired knowledge, significantly reducing the need for training from the ground up. This approach not only saves time but also leverages the rich learning obtained from previous tasks.

Reuse of Pre-trained Models

A major advantage of transfer learning is its ability to use pre-trained models. These models, trained on extensive datasets, contain a wealth of learned features and patterns that can be applied to new tasks. This reuse is especially beneficial when training data is limited or when the new task is similar to the one the model was originally trained on.

- Rich Feature Set: Pre-trained models come with a wealth of learned features and patterns. They are usually trained on extensive datasets, encompassing a wide variety of scenarios and cases. This richness in learned features makes them highly effective when applied to new but related tasks.
- Beneficial in Limited Data Scenarios: When training data for a new task is scarce, reusing pre-trained models can be particularly advantageous. These models have already learned substantial information from large datasets, which can be transferred to the new task, compensating for the lack of extensive training data.
- Efficiency in Training: Using pre-trained models significantly reduces the time and resources required for training. Since these models have already undergone extensive training, fine-tuning them for a new task requires comparatively less computational power and time.
- Similarity to Original Task: The effectiveness of transfer learning is particularly pronounced when the new task is similar to the one the pre-trained model was originally trained on. The closer the resemblance between the tasks, the more effective the transfer of learned knowledge.
- Broad Applicability: Pre-trained models in transfer learning are not limited to specific types of tasks. They can be adapted across various domains and applications, making them versatile tools in the machine learning toolkit.
- Improvement in Model Performance: The reuse of pre-trained models often leads to improved performance in the new task. Leveraging the pre-existing knowledge helps in better generalization and often results in enhanced accuracy and efficiency.
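The reuse described above can be sketched in a few lines of plain Python. This is a toy illustration only, not a real pre-trained network: the weights in `FROZEN_W`, the four-sample dataset, and all function names are made up for the example. The "pre-trained" layer is kept frozen and only a small task-specific head is trained on the new data.

```python
# Toy sketch: reuse a frozen "pre-trained" layer and train only a new head.
# FROZEN_W and the dataset are hypothetical values chosen for illustration.

def relu(values):
    return [max(0.0, v) for v in values]

def extract_features(frozen_weights, x):
    """Frozen layer: h = relu(W x). The weights are never updated."""
    return relu([sum(w * xi for w, xi in zip(row, x)) for row in frozen_weights])

def mse(head, features, targets):
    """Mean squared error of a linear head applied to the extracted features."""
    preds = [sum(v * h for v, h in zip(head, f)) for f in features]
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

def train_head(features, targets, lr=0.1, steps=50):
    """Plain gradient descent on the head weights only."""
    head = [0.0] * len(features[0])
    n = len(targets)
    for _ in range(steps):
        grad = [0.0] * len(head)
        for f, t in zip(features, targets):
            err = sum(v * h for v, h in zip(head, f)) - t
            for j, h in enumerate(f):
                grad[j] += 2.0 * err * h / n
        head = [v - lr * g for v, g in zip(head, grad)]
    return head

FROZEN_W = [[1.0, -1.0], [0.5, 0.5]]          # stands in for pre-trained weights
inputs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
targets = [1.0, 0.0, 0.5, 1.5]                # small dataset for the new task

features = [extract_features(FROZEN_W, x) for x in inputs]
initial_loss = mse([0.0, 0.0], features, targets)
head = train_head(features, targets)
final_loss = mse(head, features, targets)
print(f"loss before training the head: {initial_loss:.3f}, after: {final_loss:.3f}")
```

Because the extractor is fixed, the features can be computed once and cached, which is part of why this style of reuse is cheap compared with training every layer.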

Enhanced Learning Efficiency

Transfer learning greatly reduces the time and resources required for training new models. By leveraging existing models, it circumvents the need for extensive computation and large datasets, which is a boon in resource-constrained scenarios or when dealing with rare or expensive-to-label data.

- Reduced Training Time: One of the primary benefits of transfer learning is the substantial reduction in training time. By using models pre-trained on large datasets, a significant portion of the learning process is already completed, so less time is needed to train the model on the new task.
- Lower Resource Requirements: Transfer learning mitigates the need for the powerful computational resources typically required for training complex models from scratch. This is especially advantageous for individuals or organizations with limited access to high-end computing infrastructure.
- Efficient Data Utilization: Where acquiring large amounts of labeled data is challenging or costly, transfer learning is particularly beneficial. It allows for the effective use of smaller datasets, as the pre-trained model has already learned general features from a broader dataset.
- Quick Adaptation to New Tasks: Transfer learning enables models to adapt to new tasks with minimal additional training. This is crucial in dynamic fields where rapid deployment of models is required.
- Overcoming Data Scarcity: For tasks where data is scarce or expensive to collect, transfer learning offers a solution by utilizing pre-trained models that have been trained on similar tasks with abundant data.
- Improved Model Performance: Models trained with transfer learning often exhibit improved performance on new tasks, especially when these tasks are closely related to the original task the model was trained on. This improved performance is due to the pre-trained model's ability to leverage previously learned patterns and features.
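The training-time benefit listed above can be seen even in a deliberately tiny setting. The sketch below assumes a one-variable linear model and invented source and target tasks (`y = 2x + 1` and `y = 2.2x + 0.9`); it compares a few gradient steps started from the "pre-trained" parameters against the same number of steps started from zero. It is a warm-start illustration, not a benchmark.

```python
# Toy sketch: warm-starting from source-task parameters versus training from scratch.
# The tasks, the parameter values, and the step count are assumptions for illustration.

def mse(w, b, xs, ys):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit(w, b, xs, ys, lr=0.05, steps=3):
    """Run a fixed, small number of gradient descent steps on y = w*x + b."""
    n = len(xs)
    for _ in range(steps):
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# Parameters assumed to have been learned on the source task y = 2x + 1.
PRETRAINED = (2.0, 1.0)

# Related target task with only four labeled samples: y = 2.2x + 0.9.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.2 * x + 0.9 for x in xs]

scratch = fit(0.0, 0.0, xs, ys)          # start from zero
transfer = fit(*PRETRAINED, xs, ys)      # start from the source-task parameters

scratch_loss = mse(*scratch, xs, ys)
transfer_loss = mse(*transfer, xs, ys)
print(f"after 3 steps, from scratch: {scratch_loss:.4f}, warm start: {transfer_loss:.4f}")
```

The warm start ends with the lower loss here because the two tasks are close; if the source and target tasks were unrelated, the same starting point could offer no advantage.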

Applications

The applications of transfer learning are vast and varied. It has been successfully implemented in areas such as image recognition, where models trained on generic images are fine-tuned for specific image classification tasks, and natural language processing, where models trained on one language or corpus are adapted for different linguistic applications. Its versatility makes it a valuable tool across numerous domains.

Adaptability

Transfer learning exhibits remarkable adaptability, being applicable to a wide array of tasks and compatible with various types of neural networks. Whether it's Convolutional Neural Networks (CNNs) for visual data or Recurrent Neural Networks (RNNs) for sequential data, transfer learning can enhance the performance of these models across different domains.

How Transfer Learning is Revolutionizing Generative Art

Transfer Learning is playing a pivotal role in the field of generative art, opening new avenues for creativity and innovation. Here's how it's being utilized:

- Enhancing Generative Models: Transfer Learning enables the enhancement of generative models like Generative Adversarial Networks (GANs). By using pre-trained models, artists and developers can create more complex and realistic images without starting from scratch. This approach is particularly effective in art generation where intricate details and high realism are desired.
- Fair Generative Models: Addressing fairness in generative models is another area where Transfer Learning is making an impact. It helps in mitigating dataset biases, a common challenge in deep generative models. By transferring knowledge from fair and diverse datasets, it aids in producing more balanced and unbiased generative art.
- Art and Design Applications: In the domain of art and design, Transfer Learning empowers artists to use GANs pre-trained on various styles and patterns. This opens up possibilities for creating unique and diverse art pieces, blending traditional art forms with modern AI techniques.
- Style Transfer in Art: Transfer Learning is also used in style transfer applications, where the style of one image is applied to the content of another. This technique has been popularized for creating artworks that combine the style of famous paintings with contemporary images.
- Experimentation and Exploration: Artists are leveraging Transfer Learning to experiment with new styles and forms of expression. By using pre-trained models as a base, they can explore creative possibilities that were previously unattainable due to technical or resource limitations.
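For the style transfer item above, one common formulation (an assumption here, since the post does not name a method) compares Gram matrices of feature maps taken from a pre-trained network. The sketch below uses small hand-written lists in place of real network activations, so the numbers are purely illustrative.

```python
# Toy sketch of a Gram-matrix style loss, one common way to measure "style".
# The feature maps are hypothetical stand-ins for activations of a pre-trained network.

def gram_matrix(feature_maps):
    """feature_maps: list of channels, each a flat list of activations."""
    size = len(feature_maps[0])
    return [
        [sum(a * b for a, b in zip(ci, cj)) / size for cj in feature_maps]
        for ci in feature_maps
    ]

def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    n = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)

style_features = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
generated_features = [[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]

loss_value = style_loss(generated_features, style_features)
same_style = style_loss(style_features, style_features)
print(loss_value, same_style)
```

The Gram matrix discards where in the image each feature fires and keeps only which features fire together, which is why it is often used as a rough proxy for texture and style.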

Set up transfer learning in Python

To set up transfer learning in Python using Keras, you can start from a pre-trained model such as VGG16. Here's a basic example of the process:

- Import Necessary Libraries:

```python
from keras.applications.vgg16 import VGG16, preprocess_input
from keras.layers import Dense, GlobalAveragePooling2D
from keras.models import Sequential
from keras.preprocessing.image import img_to_array, load_img
```

- Load Pre-trained VGG16 Model: Leave out the top (fully connected) layers so that custom layers can be added for your own task.

```python
# Load VGG16 pre-trained on ImageNet, without its fully connected top layers
vgg16_model = VGG16(weights='imagenet', include_top=False,
                    input_shape=(224, 224, 3))
```

- Customize the Model for Your Specific Task:

```python
# Freeze the pre-trained layers so that only the new layers are trained
for layer in vgg16_model.layers:
    layer.trainable = False

# Check the trainable status of the individual layers
for layer in vgg16_model.layers:
    print(layer.name, layer.trainable)
```

- Add Custom Layers for New Task:

```python
custom_model = Sequential()
custom_model.add(vgg16_model)
custom_model.add(GlobalAveragePooling2D())
custom_model.add(Dense(1024, activation='relu'))
custom_model.add(Dense(1, activation='sigmoid'))  # For binary classification
```

- Compile the Model:

```python
custom_model.compile(loss='binary_crossentropy', optimizer='rmsprop',
                     metrics=['accuracy'])
```

- Train the Model: Here, you would use your dataset. For simplicity, this step is shown as a placeholder.

```python
# custom_model.fit(train_data, train_labels, epochs=10, batch_size=32)
```

- Use the Model for Predictions: Load an image and preprocess it for VGG16.

```python
img = load_img('path_to_your_image.jpg', target_size=(224, 224))
img = img_to_array(img)
# Add a batch dimension: (height, width, channels) -> (1, height, width, channels)
img = img.reshape((1, img.shape[0], img.shape[1], img.shape[2]))
img = preprocess_input(img)

# Predict the class (a probability between 0 and 1 for binary classification)
prediction = custom_model.predict(img)
print(prediction)
```

Remember, this is a simplified example. In a real-world scenario, you need to preprocess your dataset, handle overfitting, and possibly fine-tune the model further. Also, consider holding out part of your data, for example with scikit-learn's train_test_split, to evaluate model performance. For comprehensive guidance, you might find tutorials like those in the Keras documentation or on PyImageSearch helpful.
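The advice about holding out data for evaluation can be sketched with the standard library alone. The helper below, `holdout_split`, is a hypothetical stand-in written for this example; it only mimics the return order of scikit-learn's `train_test_split` and is not that function. The file names and labels are invented.

```python
# Minimal hold-out split using only the standard library.
# holdout_split is a hypothetical helper; sample names and labels are made up.
import random

def holdout_split(samples, labels, test_fraction=0.25, seed=0):
    """Shuffle indices once, then split samples and labels with the same indices."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, int(len(indices) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def pick(sequence, chosen):
        return [sequence[i] for i in chosen]

    return (pick(samples, train_idx), pick(samples, test_idx),
            pick(labels, train_idx), pick(labels, test_idx))

samples = [f"img_{i}.jpg" for i in range(8)]
labels = [i % 2 for i in range(8)]

x_train, x_test, y_train, y_test = holdout_split(samples, labels)
print(len(x_train), "training samples,", len(x_test), "held-out samples")
```

Evaluating only on the held-out samples gives a rough check on overfitting, since the model never sees them during training.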

Boosting Performance on Related Tasks

One of the most significant impacts of transfer learning is its ability to boost model performance on related tasks. By transferring knowledge from one domain to another, it aids in better generalization and accuracy, often leading to enhanced model performance on the new task. This is particularly evident in cases where the new task is a variant or an extension of the original task.

Transfer learning stands as a cornerstone technique in the field of artificial intelligence, revolutionizing how models are trained and applied. Its efficiency, adaptability, and wide-ranging applications make it a key strategy in overcoming some of the most pressing challenges in machine learning and deep learning.

#artificialneuralnetworks#datasymmetries#graphattributes#GraphNeuralNetworks#incompletegraphs#keywordsearch#latentencoding#lineartimecomplexity#optimizabletransformation#real-worlddatasets

0 notes

Text

LinkedIn AI Academy AI-100: 2 Supervised Learning with Neural Networks

LAOMUSIC ARTS 2025 presents

I just finished the course “LinkedIn AI Academy AI-100: 2 Supervised Learning with Neural Networks” by Ananth Sankar and Daniel Hewlett!

#lao #music #arts #laomusic #laomusicarts #laomusicArts #ai #supervisedlearning #artificialintelligence #artificialneuralnetworks

1 note

Text

Artificial Intelligence: Friend or Foe?

Satyen K. Bordoloi weighs in on the disparity between how Artificial Intelligence is perceived and the potential inherent in it. Read more: https://www.sify.com/ai-analytics/artificial-intelligence-friend-or-foe/

0 notes

Text

Artificial Neural Networks. Visit https://bit.ly/3YDfRXH for more information.

0 notes

Photo

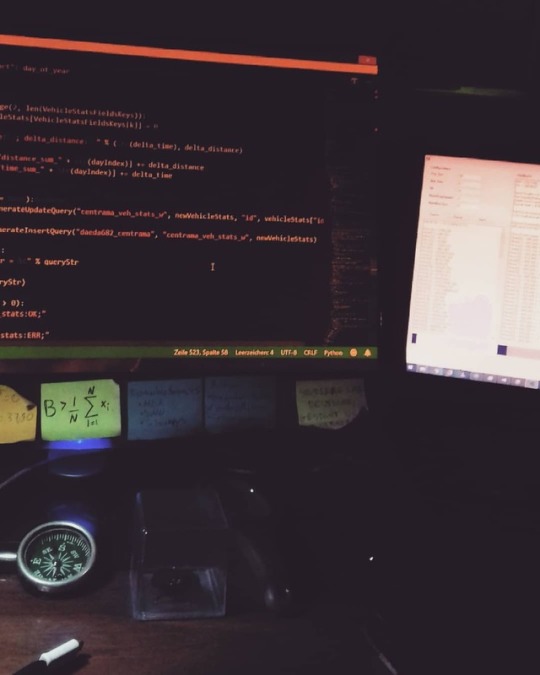

"Study hard what interests you most in the most undisciplined, irreverent and original manner possible." - Richard Feynman #programming #programmer #programmieren #code #coding #programierung #прогаммист #developer #разработчик #Entwickler #开发人员#fullstack #project #csharp #python #ann #neuralnetworks #neuronalenetze #artificialneuralnetwork #mechatronics #mecatronica #мехатроника #softwaredeveloper #软件@worldofcode @codeismylife @coding.engineer @insta_code_official @coderworlds @programmerrepublic @coderworlds

#csharp#прогаммист#programierung#mecatronica#fullstack#project#mechatronics#coding#разработчик#neuralnetworks#developer#programmer#python#软件#artificialneuralnetwork#neuronalenetze#programmieren#code#开发人员#entwickler#мехатроника#softwaredeveloper#ann#programming

2 notes

Video

instagram

DID YOU KNOW? #trickythursday #zarplabs #artificialintelligence #artificialneuralnetwork https://www.instagram.com/p/CL_W0fkAO91/?igshid=cq2vfxk5fqxy

0 notes

Photo

Backpropagation is a method used in artificial neural networks to calculate the gradient of the loss with respect to the network's weights; that gradient is then used to update the weights. It is commonly used to train deep neural networks, a term for neural networks with more than one hidden layer.

The goal of any supervised learning algorithm is to find a function that best maps a set of inputs to their correct outputs. An example would be a classification task, where the input is an image of an animal and the correct output is the name of the animal.

The motivation for backpropagation is to train a multi-layered neural network so that it can learn the internal representations it needs to learn an arbitrary mapping of input to output.

The loss function, sometimes referred to as the cost function or error function, maps values of one or more variables onto a real number that intuitively represents some "cost" associated with those values. For backpropagation, the loss function calculates the difference between the network output and its expected output after a training case propagates through the network.

#data #bigdata #bigdataanalytics #dataanalysis #datascience #datascientist #datamining #dataprocessing #datavisualization #dataviz #machinelearning #backpropagation #artificialintelligence #algorithm #analysis #analytics #statistics #backpropagation #studygram #learning #study #science #computers #computerscience #research #predictiveanalytics #математика #artificialneuralnetwork #neuralnetwork #статистика (at United States)
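The backpropagation description in this post can be made concrete with a very small network. The sketch below assumes two inputs, two sigmoid hidden units, one linear output, no biases, and a squared-error loss; the weights and the single training case are invented. It computes the gradient with the chain rule and checks it against a finite-difference estimate.

```python
# Toy backpropagation through a 2-2-1 network, checked against numerical gradients.
# The architecture, weights, and training case are assumptions for illustration.
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    w1, w2 = params  # w1: 2x2 hidden weights, w2: 2 output weights
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    output = sum(w * h for w, h in zip(w2, hidden))
    return hidden, output

def loss(params, x, y):
    _, output = forward(params, x)
    return 0.5 * (output - y) ** 2

def backprop(params, x, y):
    """Return dL/dw1 and dL/dw2 using the chain rule."""
    _, w2 = params
    hidden, output = forward(params, x)
    d_output = output - y                                   # dL/d(output)
    grad_w2 = [d_output * h for h in hidden]
    # Propagate the error back through each sigmoid: h * (1 - h) is its derivative.
    d_hidden = [d_output * w * h * (1.0 - h) for w, h in zip(w2, hidden)]
    grad_w1 = [[d * xi for xi in x] for d in d_hidden]
    return grad_w1, grad_w2

def numerical_grad_w1(params, x, y, eps=1e-6):
    """Central-difference estimate of dL/dw1, used only to check backprop."""
    w1, w2 = params
    grads = []
    for i in range(len(w1)):
        row = []
        for j in range(len(w1[i])):
            plus = [r[:] for r in w1]
            minus = [r[:] for r in w1]
            plus[i][j] += eps
            minus[i][j] -= eps
            row.append((loss((plus, w2), x, y) - loss((minus, w2), x, y)) / (2 * eps))
        grads.append(row)
    return grads

params = ([[0.5, -0.3], [0.8, 0.2]], [1.0, -1.5])
x, y = [1.0, 2.0], 0.5

analytic_w1, analytic_w2 = backprop(params, x, y)
numeric_w1 = numerical_grad_w1(params, x, y)
max_diff = max(abs(a - n) for ra, rn in zip(analytic_w1, numeric_w1) for a, n in zip(ra, rn))
print("largest difference between backprop and numerical gradient:", max_diff)
```

A gradient check like this is a common way to confirm that a hand-written backward pass matches the loss it is supposed to differentiate.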

#science#algorithm#learning#predictiveanalytics#statistics#study#backpropagation#data#computerscience#analysis#dataviz#artificialneuralnetwork#neuralnetwork#datavisualization#статистика#bigdata#computers#datamining#studygram#datascience#математика#datascientist#machinelearning#dataprocessing#artificialintelligence#research#bigdataanalytics#analytics#dataanalysis

2 notes

Photo

TensorFlow 2 and Keras Deep Learning. Deep Learning with Google TensorFlow | Python. Implement Deep Learning | Python for Deep Learning with Google's latest TensorFlow 2.

Check our info: www.incegna.com
Registration link for programs: http://www.incegna.com/contact-us
Follow us on Facebook: www.facebook.com/INCEGNA/?
Follow us on Instagram: https://www.instagram.com/_incegna/
For queries: [email protected]

#deeplearning,#deeplearningpython,#googletensorflow,#tensorflow,#keras,#GANs,#RNN,#API,#visualization,#artificialneuralnetwork,#Naturallanguageprocessing,#statistics,#scikit,#Colab,#cloudcomputing,#numpy,#pandas https://www.instagram.com/p/B-RFFoKgatw/?igshid=1flkxx1t2hubk

#deeplearning#deeplearningpython#googletensorflow#tensorflow#keras#gans#rnn#api#visualization#artificialneuralnetwork#naturallanguageprocessing#statistics#scikit#colab#cloudcomputing#numpy#pandas

0 notes

Photo

The artificial intelligence market is projected to be very large: according to a widely cited PwC estimate, AI could contribute up to $15.7 trillion to the global economy by 2030. Find out more in "10 Mind-Blowing Stats About Artificial Intelligence". YT Link: https://youtu.be/BQyarK3VR_I #artificialintelligence #artificial_intelligence #ai #machinelearning #deeplearning #artificialneuralnetwork #autonomousvehicles #driverless #technology #technologyrocks #kidsofinstagram #kidsyoutube #kidsyoutubechannel #kidsyoutuber #kidsyoutubers #youtubers #youtubekids #youtubekid #youtubekidschannel #funfacts #funfact #facts💯 #factsoflife #factoftheday #aaronplaysfunfacts https://www.instagram.com/p/B-QWnMtAZwH/?igshid=3pb6rinoqoeq

#artificialintelligence#artificial_intelligence#ai#machinelearning#deeplearning#artificialneuralnetwork#autonomousvehicles#driverless#technology#technologyrocks#kidsofinstagram#kidsyoutube#kidsyoutubechannel#kidsyoutuber#kidsyoutubers#youtubers#youtubekids#youtubekid#youtubekidschannel#funfacts#funfact#facts💯#factsoflife#factoftheday#aaronplaysfunfacts

0 notes

Text

Advance Your Skills in AI and Machine Learning

LAOMUSIC ARTS 2024 presents

I just finished the learning path “Advance Your Skills in AI and Machine Learning”! 16 Courses with 21h 46m and my 18 brand new skills: appliedmachinelearning, machinelearning, deeplearning, reinforcementlearning, neuralnetworks, generativeai, generativeadversarialnetworks, artificialneuralnetworks, convolutionalneuralnetworks, itprocessautomation, artificialintelligence, itautomation, mlops, artificialintelligencefordesign, userexperiencedesign, responsibleai, businessprocessautomation, datagovernance

In the rapidly expanding universe of artificial intelligence and machine learning, there's a lot to learn and to stay on top of. Use the range of courses in this learning path to augment your skills related to AI, ML, and data science by understanding generative models and exploring the fields of MLOps and Responsible AI. Gain hands-on training with generative models. Refine your skills in deep learning and neural networks. Explore the developing fields of MLOps and responsible AI.

#lao #music #laomusic #laomusicarts #laomusicArts #ai #appliedmachinelearning #machinelearning #deeplearning #reinforcementlearning #neuralnetworks #python #generativeai #generativeadversarialnetworks #artificialneuralnetworks #convolutionalneuralnetworks #itprocessautomation #artificialintelligence #itautomation #mlops #artificialintelligencefordesign #userexperiencedesign #responsibleai #businessprocessautomation #datagovernance

1 note

Photo

Top skills of a data scientist 🚀 Expertise in the programming languages Python, R, and SQL, along with familiarity with data mining tools, Excel, and business intelligence dashboards, as well as Python analytics libraries in the areas of machine learning and deep learning. #datascience #machinelearning #deeplearning #artificial_intelligence #data_science #bigdata #python #datascientist #dataanalysis #data #programmer #coding #artificialneuralnetwork #algorithm #sql #tensorflow #pytorch #numpy #scikit_learn #keras #scipy #pandas #matplotlib #scrapy https://www.instagram.com/p/B4ep_26g9_S/?igshid=1fodbazbgtusz

#datascience#machinelearning#deeplearning#artificial_intelligence#data_science#bigdata#python#datascientist#dataanalysis#data#programmer#coding#artificialneuralnetwork#algorithm#sql#tensorflow#pytorch#numpy#scikit_learn#keras#scipy#pandas#matplotlib#scrapy

0 notes

Photo

Artificial intelligence (AI) is the simulation of human intelligence processes by machines, especially computer systems. These processes include learning (the acquisition of information and rules for using the information), reasoning (using rules to reach approximate or definite conclusions) and self-correction.

Register Now www.infogrex.com

Contact : 9347412456, 040 - 6771 4400/4444

#chennai#bangalore#hyderabad#mumbai#kolkata#lucknow#nagpur#ahmedabad#Delhi#F-RCNN#Mask-RCNN#ConvolutionalNeuralNetwork#RecurrentNeuralNetwork#ArtificialNeuralNetwork#techlifestyle#learntocode#developerlife#coding#datascientist#thefutureisfemale#girlswhocode#girlcoder#womenwhocode#girlcodertech#ibm

0 notes

Text

Petition demanding AI lynching comes from primitive human instinct

The witch-hunt against artificial 'intelligence' is afoot, shamefully led by some of our smartest people, who are driven by primal instincts, writes Satyen K. Bordoloi. Read more: https://www.sify.com/ai-analytics/petition-demanding-ai-lynching-comes-from-primitive-human-instinct/

#AI#ArtificialIntelligence#ChatGPT#ArtificialNeuralNetworks#ANN#MachineLearning#DeepLearning#HumanInstinct

0 notes

Link

Artificial Intelligence (AI) mimics human behaviour. It assists people in many ways by integrating with multiple technologies, and it helps build solutions to problems that are tough to handle manually. An AI internship helps research fellows and students with their research and studies in AI-based systems.

To understand how an AI system works, one needs to dive deep into the sub-domains of AI, including techniques such as machine learning, deep learning, and neural networks. Advanced AI technologies such as facial recognition and voice assistants are already in wide use, so this is a good time to start an AI internship and build a path to a successful career in Artificial Intelligence.

What exactly is Artificial Intelligence?

Artificial intelligence is the simulation of human intelligence processes by machines, mainly computer systems. AI programming focuses on three important skills: learning, reasoning, and self-correction.

AI can be classified into two categories: weak and strong.

Weak artificial intelligence systems are designed to perform one particular job. Strong artificial intelligence systems perform tasks in a way similar to human beings, and they are more complex.

What are the benefits of AI Internship?

The aim of an AI internship is to deliver technical and practical knowledge about implementing and applying the different AI technologies. The area is vast and diverse, so it requires a decent amount of technical knowledge.

You can try new things and learn how everything works. If you want to understand the real-life applications of AI, an internship is the best way to do so.

There is a considerable difference between theoretical knowledge and practical knowledge. An internship also shows that you have experience applying your Artificial Intelligence knowledge in practice.

After learning AI, you will surely want to get a job in this field. An AI internship can help in that regard too, by giving you a way to show your skills to interviewers.

Where can you find the best AI Internship Program?

Pantech eLearning Chennai is an online learning service provider offering an AI Internship Program. The internship is provided by the Institution of Electronics and Telecommunication Engineers (IETE). After successfully completing the internship, you will receive the IETE certification.

You can join this program now at a 25% discount. The total internship duration is 30 hours, and you get lifetime access when you join. You will also have the guidance of our experienced trainers during the internship.

Technologies and Tools Covered

Python Programming

Image processing

Machine learning

Deep learning

Natural language processing

Visit our website and book your internship now.

Applications of AI

There are many applications of AI.

The healthcare industry uses AI to support faster and more efficient diagnosis. AI is also used in gaming. It can help make your data safer and more secure. Self-driving vehicles, which rely on AI, aim to make travel safer.

0 notes