#Custom AI Model Development Services

Text

Custom AI Model Development Services with Team of Keys

At Team of Keys, our Generative AI team specializes in custom AI model development services and builds unique AI models for text generation, computer vision, generative adversarial networks, Transformer-based models, audio generation, and more. For more details, visit now!

0 notes

Text

artificial intelligence| ai development companies| ai in business| ai for business automation| ai development| artificial intelligence ai| ai technology| ai companies| ai developers| ai intelligence| generative ai| ai software development| top ai companies| ai ops| ai software companies| companies that work on ai| artificial intelligence service providers in india| artificial intelligence companies| customer service ai| ai model| leading ai companies| ai in customer support| ai solutions for small business| ai for business book| basic knowledge for artificial intelligence| matching in artificial intelligence|

#artificial intelligence#ai development companies#ai in business#ai for business automation#ai development#artificial intelligence ai#ai technology#ai companies#ai developers#ai intelligence#generative ai#ai software development#top ai companies#ai ops#ai software companies#companies that work on ai#artificial intelligence service providers in india#artificial intelligence companies#customer service ai#ai model#leading ai companies#ai in customer support#ai solutions for small business#ai for business book#basic knowledge for artificial intelligence#matching in artificial intelligence

2 notes

Text

#manufacturing support#end-to-end product development#mobile app development#custom ai model development#ai development services company in india#iot embedded systems

0 notes

Text

Think Smarter, Not Harder: Meet RAG

How does RAG make machines think like you?

Imagine a world where your AI assistant doesn't only talk like a human but understands your needs, explores the latest data, and gives you answers you can trust—every single time. Sounds like science fiction? It's not.

We're at the tipping point of an AI revolution, where large language models (LLMs) like OpenAI's GPT are rewriting the rules of engagement in everything from customer service to creative writing. Here's the catch: all that eloquence means nothing unless the answers are not just smooth but also accurate and deeply relevant to your reality.

The question is: Are today's AI models genuinely equipped to keep up with the complexities of real-world applications, where context, precision, and truth aren't just desirable but essential? The answer lies in pushing the boundaries further—with Retrieval-Augmented Generation (RAG).

While LLMs generate human-sounding copy, they often fail to deliver reliable answers grounded in real facts. How do we ensure that an AI-powered assistant doesn't confidently deliver outdated or incorrect information? How do we strike a balance between fluency and factuality? The answer is a powerful approach: Retrieval-Augmented Generation (RAG).

What is Retrieval-Augmented Generation (RAG)?

RAG is a technique that extends the abilities of traditional language models by integrating them with information retrieval mechanisms. Rather than relying only on knowledge acquired during training, RAG actively seeks external information to produce up-to-date, accurate, context-rich answers. Imagine a customer support chatbot that can hold a conversation while drawing on the latest research, news, or your internal documents to provide accurate, context-specific answers.

RAG has the potential to make AI more informed, responsive, and versatile. But why is this necessary? Traditional LLMs are trained on vast datasets but are static by nature. They cannot access real-time information or specialized knowledge, which can lead to "hallucinations": confidently incorrect responses. RAG addresses this by equipping LLMs to query external knowledge bases, grounding their outputs in factual data.

How Does Retrieval-Augmented Generation (RAG) Work?

RAG brings a dynamic new layer to traditional AI workflows. Let's break down its components:

Embedding Model

Think of this as the system's "translator." It converts text documents into vector formats, making it easier to manage and compare large volumes of data.

Retriever

It's the AI's internal search engine. It scans the vectorized data to locate the most relevant documents that align with the user's query.

Reranker (Optional)

It assesses the retrieved documents and scores their relevance so that only the most pertinent ones are passed along.

Language Model

The language model combines the original query with the top documents the retriever provides, crafting a precise and contextually aware response.

Combining these components enables RAG to improve the factual accuracy of outputs and allows for continuous updates from external data sources, reducing the need for costly model retraining.
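The components above can be sketched in a few lines of Python. This is a toy illustration only, not how any production RAG system is built: the "embedding model" here is a plain word-count vector, the retriever uses cosine similarity, and the reranker simply counts shared words. The function names (embed, retrieve, rerank) and the sample documents are assumptions made up for this example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding model': a sparse word-count vector (word -> count)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Retriever: return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def rerank(query, candidates):
    """Optional reranker: order candidates by distinct words shared with the query."""
    q_terms = set(embed(query))
    return sorted(candidates, key=lambda d: len(q_terms & set(embed(d))), reverse=True)


# Hypothetical knowledge base for illustration.
docs = [
    "A refund is processed within five business days.",
    "Our office is closed on public holidays.",
    "To request a refund, contact support with your order number.",
]

question = "How do I get a refund?"
top = rerank(question, retrieve(question, docs))
for doc in top:
    print(doc)
```

A real system would swap the word-count vectors for a learned embedding model and a vector index, but the division of labor between the pieces stays the same.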

How does RAG achieve this integration?

It begins with a query. When a user asks a question, the retriever sifts through a curated knowledge base using vector embeddings to find relevant documents. These documents are then fed into the language model, which generates an answer informed by the latest and most accurate information available. This approach reduces the risk of hallucinations and helps the AI remain current and context-aware.
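The hand-off from retriever to language model can be pictured as prompt assembly. The sketch below is a minimal illustration under stated assumptions: build_prompt, answer, fake_retriever, and fake_generate are hypothetical names, the prompt wording is just one plausible choice, and the "model" is a stand-in so the example runs without any external service.

```python
def build_prompt(query, documents):
    """Combine the user's query with retrieved documents into one grounded prompt."""
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(documents, start=1))
    return (
        "Answer the question using only the sources below. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def answer(query, retriever, generate):
    """Run the flow: retrieve documents, build the prompt, call the language model."""
    documents = retriever(query)
    return generate(build_prompt(query, documents))


# Stand-ins (assumptions) so the sketch runs without a real knowledge base or model.
def fake_retriever(query):
    return ["Support is available from 9:00 to 17:00 on weekdays."]


def fake_generate(prompt):
    return f"(model output for a prompt of {len(prompt)} characters)"


question = "When is support available?"
prompt = build_prompt(question, fake_retriever(question))
reply = answer(question, fake_retriever, fake_generate)
print(prompt)
print(reply)
```

Because the retrieved text is placed directly in the prompt, updating the knowledge base changes the model's answers without touching the model itself.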

RAG for Content Creation: A Game Changer or just an IT thing?

Content creation is one of the most exciting areas where RAG is making waves. Imagine an AI writer that crafts engaging articles and pulls in the latest data, trends, and insights from credible sources, so that every piece of content is both compelling and accurate. This isn't a futuristic dream or a product of your imagination. RAG makes it happen.

Why is this so revolutionary?

Engaging and factually sound content is rare, especially in today's digital landscape, where misinformation can spread like wildfire. RAG offers a solution by combining the creative fluency of LLMs with the grounding precision of information retrieval. Consider a marketing team launching a campaign based on emerging trends. Instead of manually scouring the web for the latest statistics or customer insights, a RAG-enabled tool could instantly pull in relevant data, allowing the team to craft content that resonates with current market conditions.

The same goes for industries such as finance, healthcare, and law, where accuracy is fundamental. RAG-powered content creation tools help align every output with the most recent regulations, research, and market trends, boosting the organization's credibility and impact.

Applying RAG in day-to-day business

How can we effectively tap into the power of RAG? Here's a step-by-step guide:

Identify High-Impact Use Cases

Start by pinpointing areas where accurate, context-aware information is critical. Think customer service, marketing, content creation, and compliance—wherever real-time knowledge can provide a competitive edge.

Curate a robust knowledge base

RAG is only as good as the data it retrieves. Build or connect to a comprehensive knowledge repository with up-to-date, reliable information: internal documents, proprietary data, or trusted external sources.

Select the right tools and technologies

Leverage platforms that support RAG architecture or integrate retrieval mechanisms with existing LLMs. Many AI vendors now offer solutions combining these capabilities, so choose one that fits your needs.

Train your team

Successful implementation requires understanding how RAG works and its potential impact. Ensure your team is well trained in both the technical and strategic aspects of deploying RAG.

Monitor and optimize

Like any technology, RAG benefits from continuous monitoring and optimization. Track key performance indicators (KPIs) like accuracy, response time, and user satisfaction to refine and enhance its application.
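As a rough illustration of the monitoring step, the three KPIs named above can be computed from a log of interactions. This is a sketch under assumptions: the Interaction record, its field names, the 1-to-5 satisfaction scale, and the sample log are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interaction:
    correct: bool            # did a reviewer judge the answer accurate?
    response_seconds: float  # time from question to answer
    satisfaction: int        # user rating on an assumed 1-5 scale


def summarize(interactions):
    """Aggregate accuracy, response time, and satisfaction over a log."""
    if not interactions:
        raise ValueError("no interactions to summarize")
    return {
        "accuracy": mean(1.0 if i.correct else 0.0 for i in interactions),
        "mean_response_seconds": mean(i.response_seconds for i in interactions),
        "mean_satisfaction": mean(i.satisfaction for i in interactions),
    }


# Hypothetical log entries for illustration.
log = [
    Interaction(correct=True, response_seconds=1.0, satisfaction=5),
    Interaction(correct=False, response_seconds=3.0, satisfaction=2),
    Interaction(correct=True, response_seconds=2.0, satisfaction=5),
    Interaction(correct=True, response_seconds=2.0, satisfaction=4),
]

kpis = summarize(log)
print(kpis)
```

Tracking these numbers over time, rather than as a one-off snapshot, is what makes it possible to tell whether a change to the knowledge base or retriever actually helped.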

Applying these steps will help organizations like yours unlock RAG's full potential, transform their operations, and enhance their competitive edge.

The Business Value of RAG

Why should businesses consider integrating RAG into their operations? The value proposition is clear:

Trust and accuracy

RAG significantly enhances the accuracy of responses, which is crucial for maintaining customer trust, especially in sectors like finance, healthcare, and law.

Efficiency

Ultimately, RAG reduces the workload on human employees, freeing them to focus on higher-value tasks.

Knowledge management

RAG ensures that information is always up-to-date and relevant, helping businesses maintain a high standard of knowledge dissemination and reducing the risk of costly errors.

Scalability and change

As an organization grows and evolves, so does the complexity of information management. RAG offers a scalable solution that can adapt to increasing data volumes and diverse information needs.

RAG vs. Fine-Tuning: What's the Difference?

Both RAG and fine-tuning are powerful techniques for optimizing LLM performance, but they serve different purposes:

Fine-Tuning

This approach involves additional training on specific datasets to make a model more adept at particular tasks. While effective for niche applications, it can limit the model's flexibility and adaptability.

RAG

In contrast, RAG dynamically retrieves information from external sources, allowing for continuous updates without extensive retraining, which makes it ideal for applications where real-time data and accuracy are critical.

The choice between RAG and fine-tuning entirely depends on your unique needs. For example, RAG is the way to go if your priority is real-time accuracy and contextual relevance.

Concluding Thoughts

As AI evolves, the demand for systems that are not only intelligent but also accurate, reliable, and adaptable will only grow. Retrieval-Augmented Generation stands at the forefront of this evolution, promising to make AI more useful and trustworthy across various applications.

Whether it's revolutionizing content creation, enhancing customer support, or driving smarter business decisions, RAG represents a fundamental shift in how we interact with AI. It bridges the gap between what AI knows and what it needs to know, making it a go-to tool for building a real competitive edge.

Let's explore the infinite possibilities of RAG together

We would love to know: how do you intend to harness the power of RAG in your business? There are plenty of opportunities we can bring to life together. Contact our team of AI experts for a chat about RAG, and let's see if we can build game-changing models together.

#RAG#Fine-tuning LLM for RAG#RAG System Development Companies#RAG LLM Service Providers#RAG Model Implementation#RAG-Enabled AI Platforms#RAG AI Service Providers#Custom RAG Model Development

0 notes

Text

Generative AI for Startups: 5 Essential Boosts to Boost Your Business

The future of business growth lies in the ability to innovate rapidly, deliver personalized customer experiences, and operate efficiently. Generative AI is at the forefront of this transformation, offering startups unparalleled opportunities for growth in 2024.

Generative AI is a game-changer for startups, significantly accelerating product development by quickly generating prototypes and innovative ideas. This enables startups to innovate faster, stay ahead of the competition, and bring new products to market more efficiently. The technology also allows for a high level of customization, helping startups create highly personalized products and solutions that meet specific customer needs. This enhances customer satisfaction and loyalty, giving startups a competitive edge in their respective industries.

By automating repetitive tasks and optimizing workflows, Generative AI improves operational efficiency, saving time and resources while minimizing human errors. This allows startups to focus on strategic initiatives that drive growth and profitability. Additionally, Generative AI’s ability to analyze large datasets provides startups with valuable insights for data-driven decision-making, ensuring that their actions are informed and impactful. This data-driven approach enhances marketing strategies, making them more effective and personalized.

Intelisync offers comprehensive AI/ML services that support startups in leveraging Generative AI for growth and innovation. With Intelisync’s expertise, startups can enhance product development, improve operational efficiency, and develop effective marketing strategies. Transform your business with the power of Generative AI—Contact Intelisync today and unlock your Learn more...

#5 Powerful Ways Generative AI Boosts Your Startup#advanced AI tools support startups#Driving Innovation and Growth#Enhancing Customer Experience#Forecasting Data Analysis and Decision-Making#Generative AI#Generative AI improves operational efficiency#How can a startup get started with Generative AI?#Is Generative AI suitable for all types of startups?#marketing strategies for startups#Streamlining Operations#Strengthen Product Development#Transform your business with AI-driven innovation#What is Generative AI#Customized AI Solutions#AI Development Services#Custom Generative AI Model Development.

0 notes

Text

South Africa’s First AI-Powered Hospital to Open in Johannesburg

In an era marked by technological advancements, South Africa is poised to make history by unveiling the continent’s inaugural AI-powered hospital, set to open its doors in Johannesburg in 2024. This pioneering leap in healthcare promises to revolutionize medical diagnosis and treatment, significantly benefiting both healthcare providers and patients. As we delve deeper into this groundbreaking development, it becomes evident that AI is not just the future of healthcare in South Africa; it’s already playing a substantial role in transforming the landscape.

#Artificial Intelligence Solutions#AI Development Services#Advanced AI Technologies#Customized AI Development Services#AI Model Integration#AI Development Process

0 notes

Note

Your thoughts on Seventeen as sex workers and their specialties

Seventeen as Sex Workers | NSFW

💎 Rating: NSFW. Mature (18+) Minors DNI. 💎 Genre: headcanon, imagine, smut. 💎 Warnings: language?

💎 Sexually Explicit Content: this is about sex work, we support sex workers on this blog, if you are not comfortable with that please do not engage with this post. All of these are consented acts, the services are very detailed, and everyone knows what they're getting themselves into (even Gyu).

🗝️Note: I gifted @minttangerines & @minisugakoobies with a preview of the ones I had drunkenly wrote back on Valentines Day. Finally finished the rest.

Disclaimers: This is a work of fiction; I do not own any of the idols depicted below.

Coups Grade A, mothering fucking camboy those eyebrows alone have his viewers coming on command.

Jeonghan This man is a financial dom, that pretty face and scathing looks of disapproval without really getting his hands dirty? (Coups is his number one customer.)

Joshua Similar to Han he sells his perversely used items to the very (large) freaky crowd of humans on the internet. Is he mildly worried one of his specimens will end up at a crime scene? Yes.

Jun Ok the only thing I keep coming back to for Jun is a soft core pornstar. Just thinking about the members giggling over his one kiss scene.

Hoshi THE male stripper to end all other stripper's careers, because Hoshi simply loves to dance, and that magnetism really draws a crowd.

Wonwoo Welcome your happy endings massage.

Woozi Y’all this man makes moaning boy audios on YouTube. And they SELL (in youtube streams that is). He finally collected enough to compile an hour live stream and is showering in the rewards.

DK Our classy escort for the affluent crowd…that sometimes (always) ends up in an additional service.

Mingyu Gyu is kinda clueless (per usual) he makes his money cuckholding for some of the rich gym couples. But has conflicted feelings about if this is really for him.

Hao Hallucinogenic tea ceremonies where he brings you to verbal orgasm.

Boo Ahem. Boo is the purest of doms, this man is clean, by the rule book and concise whenever you require his services. This is the safest domming experience you will ever have.

Vernon Listen it had to be someone, he’s our resident feet pic supplier.

Dino How do I properly explain this one? Dino sold himself to a singular client, one who spoils him, and he makes sure to take care of all their needs. The epitome of an amazing partner. The perfect little subby man.

© COPYRIGHT 2021 - 2024 by kiestrokes All rights reserved. No portion of this work may be reproduced without written permission from the author. This includes translations. No generative artificial intelligence (AI) was used in the writing of this work. The author expressly prohibits any entity from using this for purposes of training AI technologies to generate text, including without the limitation technologies capable of generating works in the same style or genre as this publication. The author reserves all rights to license uses of this work for generative AI training and development of machine learning language models.

#the lucifer to my lokie#the sun to my mars#earth to mars#svt x reader#svt imagines#svt smut#svt headcanons#svt hard hours#svt hard thoughts#deluhrs#choi seungcheol smut#yoon jeonghan smut#wen junhui smut#kwon soonyoung smut#xu minghao smut#lee chan smut#lee seokmin smut#kim mingyu smut#vernon smut#boo seungkwan smut#joshua hong smut#jeon wonwoo smut

244 notes

Text

Hugging Face partners with Groq for ultra-fast AI model inference

New Post has been published on https://thedigitalinsider.com/hugging-face-partners-with-groq-for-ultra-fast-ai-model-inference/

Hugging Face has added Groq to its AI model inference providers, bringing lightning-fast processing to the popular model hub.

Speed and efficiency have become increasingly crucial in AI development, with many organisations struggling to balance model performance against rising computational costs.

Rather than using traditional GPUs, Groq has designed chips purpose-built for language models. The company’s Language Processing Unit (LPU) is a specialised chip designed from the ground up to handle the unique computational patterns of language models.

Unlike conventional processors that struggle with the sequential nature of language tasks, Groq’s architecture embraces this characteristic. The result? Dramatically reduced response times and higher throughput for AI applications that need to process text quickly.

Developers can now access numerous popular open-source models through Groq’s infrastructure, including Meta’s Llama 4 and Qwen’s QwQ-32B. This breadth of model support ensures teams aren’t sacrificing capabilities for performance.

Users have multiple ways to incorporate Groq into their workflows, depending on their preferences and existing setups.

For those who already have a relationship with Groq, Hugging Face allows straightforward configuration of personal API keys within account settings. This approach directs requests straight to Groq’s infrastructure while maintaining the familiar Hugging Face interface.

Alternatively, users can opt for a more hands-off experience by letting Hugging Face handle the connection entirely, with charges appearing on their Hugging Face account rather than requiring separate billing relationships.

The integration works seamlessly with Hugging Face’s client libraries for both Python and JavaScript, though the technical details remain refreshingly simple. Even without diving into code, developers can specify Groq as their preferred provider with minimal configuration.
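For readers who do want to see code, a minimal Python sketch of selecting Groq as the provider might look like the following. It assumes a recent version of the huggingface_hub package whose InferenceClient accepts a provider argument; the helper names (build_messages, ask_via_groq) are hypothetical, and the model ID and token are left as parameters because the right values depend on your account and on which models Groq currently serves.

```python
def build_messages(question):
    """Wrap a question in the chat-message format the client expects."""
    return [{"role": "user", "content": question}]


def ask_via_groq(question, model, token):
    """Send one chat request through Hugging Face, routed to Groq.

    `model` is a Hub model ID and `token` is the key your account is
    configured to use; both are supplied by the caller (assumptions here).
    """
    # Imported lazily so the sketch can be loaded without the package installed.
    from huggingface_hub import InferenceClient

    client = InferenceClient(provider="groq", api_key=token)
    completion = client.chat.completions.create(
        model=model,
        messages=build_messages(question),
    )
    return completion.choices[0].message.content
```

Calling ask_via_groq requires network access and valid credentials, so treat this as a starting point and check the current Hugging Face documentation for the exact options available.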

Customers using their own Groq API keys are billed directly through their existing Groq accounts. For those preferring the consolidated approach, Hugging Face passes through the standard provider rates without adding markup, though they note that revenue-sharing agreements may evolve in the future.

Hugging Face even offers a limited inference quota at no cost—though the company naturally encourages upgrading to PRO for those making regular use of these services.

This partnership between Hugging Face and Groq emerges against a backdrop of intensifying competition in AI infrastructure for model inference. As more organisations move from experimentation to production deployment of AI systems, the bottlenecks around inference processing have become increasingly apparent.

What we’re seeing is a natural evolution of the AI ecosystem. First came the race for bigger models, then came the rush to make them practical. Groq represents the latter—making existing models work faster rather than just building larger ones.

For businesses weighing AI deployment options, the addition of Groq to Hugging Face’s provider ecosystem offers another choice in the balance between performance requirements and operational costs.

The significance extends beyond technical considerations. Faster inference means more responsive applications, which translates to better user experiences across countless services now incorporating AI assistance.

Sectors particularly sensitive to response times (e.g. customer service, healthcare diagnostics, financial analysis) stand to benefit from improvements to AI infrastructure that reduce the lag between question and answer.

As AI continues its march into everyday applications, partnerships like this highlight how the technology ecosystem is evolving to address the practical limitations that have historically constrained real-time AI implementation.

(Photo by Michał Mancewicz)

#Accounts#ai#ai & big data expo#AI assistance#AI development#AI Infrastructure#ai model#AI systems#amp#Analysis#API#applications#approach#architecture#Artificial Intelligence#automation#Big Data#Building#california#chip#chips#Cloud#code#Companies#competition#comprehensive#conference#consolidated#consolidated approach#customer service

0 notes

Text

https://yournewzz.com/top-pcb-design-companies-in-india-transforming-electronics-innovation/

#custom ai model development#best sourcing specialists in india#end-to-end product development#ai development services company in india#iot embedded systems#principles of ui ux design

0 notes

Text

#artificial intelligence services#machine learning solutions#AI development company#machine learning development#AI services India#AI consulting services#ML model development#custom AI solutions#deep learning services#natural language processing#computer vision solutions#AI integration services#AI for business#enterprise AI solutions#machine learning consulting#predictive analytics#AI software development#intelligent automation

0 notes

Text

Stories about AI-generated political content are like stories about people drunkenly setting off fireworks: There’s a good chance they’ll end in disaster. WIRED is tracking AI usage in political campaigns across the world, and so far examples include pornographic deepfakes and misinformation-spewing chatbots. It’s gotten to the point where the US Federal Communications Commission has proposed mandatory disclosures for AI use in television and radio ads.

Despite concerns, some US political campaigns are embracing generative AI tools. There’s a growing category of AI-generated political content flying under the radar this election cycle, developed by startups including Denver-based BattlegroundAI, which uses generative AI to come up with digital advertising copy at a rapid clip. “Hundreds of ads in minutes,” its website proclaims.

BattlegroundAI positions itself as a tool specifically for progressive campaigns—no MAGA types allowed. And it is moving fast: It launched a private beta only six weeks ago and a public beta just last week. Cofounder and CEO Maya Hutchinson is currently at the Democratic National Convention trying to attract more clients. So far, the company has around 60, she says. (The service has a freemium model, with an upgraded option for $19 a month.)

“It’s kind of like having an extra intern on your team,” Hutchinson, a marketer who got her start on the digital team for President Obama’s reelection campaign, tells WIRED. We’re sitting at a picnic table inside the McCormick Place Convention Center in Chicago, and she’s raising her voice to be heard over music blasting from a nearby speaker. “If you’re running ads on Facebook or Google, or developing YouTube scripts, we help you do that in a very structured fashion.”

BattlegroundAI’s interface asks users to select from five different popular large language models—including ChatGPT, Claude, and Anthropic—to generate answers; it then asks users to further customize their results by selecting for tone and “creativity level,” as well as how many variations on a single prompt they might want. It also offers guidance on whom to target and helps craft messages geared toward specialized audiences for a variety of preselected issues, including infrastructure, women’s health, and public safety.

BattlegroundAI declined to provide any examples of actual political ads created using its services. However, WIRED tested the product by creating a campaign aimed at extremely left-leaning adults aged 88 to 99 on the issue of media freedom. “Don't let fake news pull the wool over your bifocals!” one of the suggested ads began.

BattlegroundAI offers only text generation—no AI images or audio. The company adheres to various regulations around the use of AI in political ads.

“What makes Battleground so well suited for politics is it’s very much built with those rules in mind,” says Andy Barr, managing director for Uplift, a Democratic digital ad agency. Barr says Uplift has been testing the BattlegroundAI beta for a few weeks. “It’s helpful with idea generation,” he says. The agency hasn’t yet released any ads using Battleground copy, but it has already used it to develop concepts, Barr adds.

I confess to Hutchinson that if I were a politician, I would be scared to use BattlegroundAI. Generative AI tools are known to “hallucinate,” a polite way of saying that they sometimes make things up out of whole cloth. (They bullshit, to use academic parlance.) I ask how she’s ensuring that the political content BattlegroundAI generates is accurate.

“Nothing is automated,” she replies. Hutchinson notes that BattlegroundAI’s copy is a starting-off point, and that humans from campaigns are meant to review and approve it before it goes out. “You might not have a lot of time, or a huge team, but you’re definitely reviewing it.”

Of course, there’s a rising movement opposing how AI companies train their products on art, writing, and other creative work without asking for permission. I ask Hutchinson what she’d say to people who might oppose how tools like ChatGPT are trained. “Those are incredibly valid concerns,” she says. “We need to talk to Congress. We need to talk to our elected officials.”

I ask whether BattlegroundAI is looking at offering language models that train on only public domain or licensed data. “Always open to that,” she says. “We also need to give folks, especially those who are under time constraints, in resource-constrained environments, the best tools that are available to them, too. We want to have consistent results for users and high-quality information—so the more models that are available, I think the better for everybody.”

And how would Hutchinson respond to people in the progressive movement—who generally align themselves with the labor movement—objecting to automating ad copywriting? “Obviously valid concerns,” she says. “Fears that come with the advent of any new technology—we’re afraid of the computer, of the light bulb.”

Hutchinson lays out her stance: She doesn’t see this as a replacement for human labor so much as a way to reduce grunt work. “I worked in advertising for a very long time, and there's so many elements of it that are repetitive, that are honestly draining of creativity,” she says. “AI takes away the boring elements.” She sees BattlegroundAI as a helpmeet for overstretched and underfunded teams.

Taylor Coots, a Kentucky-based political strategist who recently began using the service, describes it as “very sophisticated,” and says it helps identify groups of target voters and ways to tailor messaging to reach them in a way that would otherwise be difficult for small campaigns. In battleground races in gerrymandered districts, where progressive candidates are major underdogs, budgets are tight. “We don’t have millions of dollars,” he says. “Any opportunities we have for efficiencies, we’re looking for those.”

Will voters care if the writing in digital political ads they see is generated with the help of AI? “I'm not sure there is anything more unethical about having AI generate content than there is having unnamed staff or interns generate content,” says Peter Loge, an associate professor and program director at George Washington University who founded a project on ethics in political communication.

“If one could mandate that all political writing done with the help of AI be disclosed, then logically you would have to mandate that all political writing”—such as emails, ads, and op-eds—“not done by the candidate be disclosed,” he adds.

Still, Loge has concerns about what AI does to public trust on a macro level, and how it might impact the way people respond to political messaging going forward. “One risk of AI is less what the technology does, and more how people feel about what it does,” he says. “People have been faking images and making stuff up for as long as we've had politics. The recent attention on generative AI has increased people's already incredibly high levels of cynicism and distrust. If everything can be fake, then maybe nothing is true.”

Hutchinson, meanwhile, is focused on her company’s shorter-term impact. “We really want to help people now,” she says. “We’re trying to move as fast as we can.”

Text

Prometheus Gave the Gift of Fire to Mankind. We Can't Give it Back, nor Should We.

AI. Artificial intelligence. Large Language Models. Learning Algorithms. Deep Learning. Generative Algorithms. Neural Networks. This technology has many names, and has been a polarizing topic in numerous communities online. By my observation, a lot of the discussion is either solely focused on A) how to profit off it or B) how to get rid of it and/or protect yourself from it. But to me, I feel both of these perspectives apply a very narrow usage lens on something that's more than a get rich quick scheme or an evil plague to wipe from the earth.

This is going to be long, because as someone whose degree is in psych and computer science, has been a teacher, has been a writing tutor for my younger brother, and whose fiance works in freelance data model training... I have a lot to say about this.

I'm going to address the profit angle first, because I feel most people in my orbit (and in related orbits) on Tumblr are going to agree with this: flat out, the way AI is being utilized by large corporations and tech startups -- scraping mass amounts of visual and written works without consent and compensation, replacing human professionals in roles from concept art to storyboarding to screenwriting to customer service and more -- is unethical and damaging to the wellbeing of people, would-be hires and consumers alike. It's wasting energy having dedicated servers running nonstop generating content that serves no greater purpose, and is even pressing on already overworked educators because plagiarism just got a very new, harder to identify younger brother that's also infinitely easier to access.

In fact, ChatGPT is such an issue in the education world that plagiarism-detector subscription services that take advantage of how overworked teachers are have begun peddling supposed AI-detectors to schools and universities. Detectors that plainly DO NOT and CANNOT work, because "A Writer Who Writes Surprisingly Well For Their Age" is indistinguishable from "A Language Replicating Algorithm That Followed A Prompt Correctly", just as "A Writer Who Doesn't Know What They're Talking About Or Even How To Write Properly" is indistinguishable from "A Language Replicating Algorithm That Returned Bad Results". What's hilarious is that these "detectors" are themselves run by AI.

(to be clear, I say plagiarism detectors like TurnItIn.com and such are predatory because A) they cost money to access advanced features that B) often don't work properly or as intended with several false flags, and C) these companies often are super shady behind the scenes; TurnItIn for instance has been involved in numerous lawsuits over intellectual property violations, as their services scrape (or hopefully scraped now) the papers submitted to the site without user consent (or under coerced consent if being forced to use it by an educator), which it can use in its own databases as it pleases, such as for training the AI detecting AI that rarely actually detects AI.)

The prevalence of visual and linguistic generative algorithms is having multiple, overlapping, and complex consequences on many facets of society, from art to music to writing to film and video game production, and even in the classroom before all that, so it's no wonder that many disgruntled artists and industry professionals are online wishing for it all to go away and never come back. The problem is... It can't. I understand that there's likely a large swath of people saying that who understand this, but for those who don't: AI, or as it should more properly be called, generative algorithms, didn't just show up now (they're not even that new), and they certainly weren't developed or invented by any of the tech bros peddling it to megacorps and the general public.

Long before ChatGPT and DALL-E came online, generative algorithms were being used by programmers to simulate natural processes in weather models, shed light on the mechanics of walking for roboticists and paleontologists alike, identify patterns in our DNA related to disease, aid in complex 2D and 3D animation visuals, and so on. Generative algorithms have been a part of the professional world for many years now, and up until recently have been a general force for good, or at the very least a force for the mundane. It's only recently that the technology involved in creating generative algorithms became so advanced AND so readily available, that university grad students were able to make the publicly available projects that began this descent into madness.

Does anyone else remember that? That years ago, somewhere in the late 2010s to the beginning of the 2020s, these novelty sites that allowed you to generate vague images from prompts, or generate short stylistic writings from a short prompt, were popping up with University URLs? Oftentimes the queues on these programs were hours long, sometimes eventually days or weeks or months long, because of how unexpectedly popular this concept was to the general public. Suddenly overnight, all over social media, everyone and their grandma, and not just high level programming and arts students, knew this was possible, and of course, everyone wanted in. Automated art and writing, isn't that neat? And of course, investors saw dollar signs. Simply scale up the process, scrape the entire web for data to train the model without advertising that you're using ALL material, even copyrighted and personal materials, and sell the resulting algorithm for big money. As usual, startup investors ruin every new technology the moment they can access it.

To most people, it seemed like this magic tech popped up overnight, and before it became known that the art assets on later models were stolen, even I had fun with them. I knew how learning algorithms worked: if you're going to have a computer make images and text, it has to be shown what that is and then try and fail to make its own until it's ready. I just, rather naively as I was still in my early 20s, assumed that everything was above board and the assets were either public domain or fairly licensed. But when the news did come out, and when corporations started unethically implementing "AI" in everything from chatbots to search algorithms to asking their tech staff to add AI to sliced bread, those who were impacted and didn't know and/or didn't care where generative algorithms came from wanted them GONE. And like, I can't blame them. But I also quietly acknowledged to myself that getting rid of a whole technology is just neither possible nor advisable. The cat's already out of the bag, the genie has left its bottle, the Pandorica is OPEN. If we tried to blanket ban what people call AI, numerous industries involved in making lives better would be impacted. Because unfortunately the same tool that can edit selfies into revenge porn has also been used to identify cancer cells in patients and aided in decoding dead languages, among other things.

When, in Greek myth, Prometheus gave us the gift of fire, he gave us both a gift and a curse. Fire is so crucial to human society, it cooks our food, it lights our cities, it disposes of waste, and it protects us from unseen threats. But fire also destroys, and the same flame that can light your home can burn it down. Surely, there were people in this mythic past who hated fire and all it stood for, because without fire no forest would ever burn to the ground, and surely they would have called for fire to be given back, to be done away with entirely. Except, there was no going back. The nature of life is that no new element can ever be undone, it cannot be given back.

So what's the way forward, then? Like, surely if I can write a multi-paragraph think piece on Tumblr.com that next to nobody is going to read because it's long as sin, about an unpopular topic, and I rarely post original content anyway, then surely I have an idea of how this cyberpunk dystopia can be a little less.. Dys. Well I do, actually, but it's a long shot. Thankfully, unlike business majors, I actually had to take a cyber ethics course in university, and I actually paid attention. I also passed preschool where I learned taking stuff you weren't given permission to have is stealing, which is bad. So the obvious solution is to make some fucking laws to limit the input on data model training on models used for public products and services. It's that simple. You either use public domain and licensed data only or you get fined into hell and back and liable to lawsuits from any entity you wronged, be they citizen or very wealthy mouse conglomerate (suing AI bros is the only time Mickey isn't the bigger enemy). And I'm going to be honest, tech companies are NOT going to like this, because not only will it make doing business more expensive (boo fucking hoo), they'd very likely need to throw out their current trained datasets because of the illegal components mixed in there. To my memory, you can't simply prune specific content from a completed algorithm, you actually have to redo the training from the ground up because the bad data would be mixed in there like gum in hair. And you know what, those companies deserve that. They deserve to suffer a punishment, and maybe fold if they're young enough, for what they've done to creators everywhere. Actually, laws moving forward isn't enough, this needs to be retroactive. These companies need to be sued into the ground, honestly.

So yeah, that's the mess of it. We can't unlearn and unpublicize any technology, even if it's currently being used as a tool of exploitation. What we can do though is demand ethical use laws and organize around the cause of the exclusive rights of individuals to the content they create. The screenwriter's guild, actor's guild, and so on already have been fighting against this misuse, but given upcoming administration changes to the US, things are going to get a lot worse before they get a little better. Even still, don't give up, have clear and educated goals, and focus on what you can do to effect change, even if right now that's just individual self-care through mental and physical health crises like me.

#ai#artificial intelligence#generative algorithms#llm#large language model#chatgpt#ai art#ai writing#kanguin original

Text

"Welcome to the AI trough of disillusionment"

"When the chief executive of a large tech firm based in San Francisco shares a drink with the bosses of his Fortune 500 clients, he often hears a similar message. “They’re frustrated and disappointed. They say: ‘I don’t know why it’s taking so long. I’ve spent money on this. It’s not happening’”.

"For many companies, excitement over the promise of generative artificial intelligence (AI) has given way to vexation over the difficulty of making productive use of the technology. According to S&P Global, a data provider, the share of companies abandoning most of their generative-AI pilot projects has risen to 42%, up from 17% last year. The boss of Klarna, a Swedish buy-now, pay-later provider, recently admitted that he went too far in using the technology to slash customer-service jobs, and is now rehiring humans for the roles."

"Consumers, for their part, continue to enthusiastically embrace generative AI. [Really?] Sam Altman, the boss of OpenAI, recently said that its ChatGPT bot was being used by some 800m people a week, twice as many as in February. Some already regularly turn to the technology at work. Yet generative AI’s ["]transformative potential["] will be realised only if a broad swathe of companies systematically embed it into their products and operations. Faced with sluggish progress, many bosses are sliding into the “trough of disillusionment”, says John Lovelock of Gartner, referring to the stage in the consultancy’s famed “hype cycle” that comes after the euphoria generated by a new technology.

"This poses a problem for the so-called hyperscalers—Alphabet, Amazon, Microsoft and Meta—that are still pouring vast sums into building the infrastructure underpinning AI. According to Pierre Ferragu of New Street Research, their combined capital expenditures are on course to rise from 12% of revenues a decade ago to 28% this year. Will they be able to generate healthy enough returns to justify the splurge? [I'd guess not.]

"Companies are struggling to make use of generative AI for many reasons. Their data troves are often siloed and trapped in archaic IT systems. Many experience difficulties hiring the technical talent needed. And however much potential they see in the technology, bosses know they have brands to protect, which means minimising the risk that a bot will make a damaging mistake or expose them to privacy violations or data breaches.

"Meanwhile, the tech giants continue to preach AI’s potential. [Of course.] Their evangelism was on full display this week during the annual developer conferences of Microsoft and Alphabet’s Google. Satya Nadella and Sundar Pichai, their respective bosses, talked excitedly about a “platform shift” and the emergence of an “agentic web” populated by semi-autonomous AI agents interacting with one another on behalf of their human masters. [Jesus christ. Why? Who benefits from that? Why would anyone want that? What's the point of using the Internet if it's all just AIs pretending to be people? Goddamn billionaires.]

"The two tech bosses highlighted how AI models are getting better, faster, cheaper and more widely available. At one point Elon Musk announced to Microsoft’s crowd via video link that xAI, his AI lab, would be making its Grok models available on the tech giant’s Azure cloud service (shortly after Mr Altman, his nemesis, used the same medium to tout the benefits of OpenAI’s deep relationship with Microsoft). [Nobody wanted Microsoft to pivot to the cloud.] Messrs Nadella and Pichai both talked up a new measure—the number of tokens processed in generative-AI models—to demonstrate booming usage. [So now they're fiddling with the numbers to make them look better.]

"Fuddy-duddy measures of business success, such as sales or profit, were not in focus. For now, the meagre cloud revenues Alphabet, Amazon and Microsoft are making from AI, relative to the magnitude of their investments, come mostly from AI labs and startups, some of which are bankrolled by the giants themselves.

"Still, as Mr Lovelock of Gartner argues, much of the benefit of the technology for the hyperscalers will come from applying it to their own products and operations. At its event, Google announced that it will launch a more conversational “AI mode” for its search engine, powered by its Gemini models. It says that the AI summaries that now appear alongside its search results are already used by more than 1.5bn people each month. [I'd imagine this is giving a generous definition of 'used'. The AI overviews spawn on basically every search - that doesn't mean everyone's using them. Although, probably, a lot of people are.] Google has also introduced generative AI into its ad business [so now the ads are even less appealing], to help companies create content and manage their campaigns. Meta, which does not sell cloud computing, has weaved the technology into its ad business using its open-source Llama models. Microsoft has embedded AI into its suite of workplace apps and its coding platform, Github. Amazon has applied the technology in its e-commerce business to improve product recommendations and optimise logistics. AI may also allow the tech giants to cut programming jobs. This month Microsoft laid off 6,000 workers, many of whom were reportedly software engineers. [That's going to come back to bite you. The logistics is a valid application, but not the whole 'replacing programmers with AI' bit. Better get ready for the bugs!]

"These efforts, if successful, may even encourage other companies to keep experimenting with the technology until they, too, can make it work. Troughs, after all, have two sides; next in Gartner’s cycle comes the “slope of enlightenment”, which sounds much more enjoyable. At that point, companies that have underinvested in AI may come to regret it. [I doubt it.] The cost of falling behind is already clear at Apple, which was slower than its fellow tech giants to embrace generative AI. It has flubbed the introduction of a souped-up version of its voice assistant Siri, rebuilt around the technology. The new bot is so bug-ridden its rollout has been postponed.

"Mr Lovelock’s bet is that the trough will last until the end of next year. In the meantime, the hyperscalers have work to do. Kevin Scott, Microsoft’s chief technology officer, said this week that for AI agents to live up to their promise, serious work needs to be done on memory, so that they can recall past interactions. The web also needs new protocols to help agents gain access to various data streams. [What an ominous way to phrase that.] Microsoft has now signed up to an open-source one called Model Context Protocol, launched in November by Anthropic, another AI lab, joining Amazon, Google and OpenAI.

"Many companies say that what they need most is not cleverer AI models, but more ways to make the technology useful. Mr Scott calls this the “capability overhang.” He and Anthropic’s co-founder Dario Amodei used the Microsoft conference to urge users to think big and keep the faith. [Yeah, because there's no actual proof this helps. Except in medicine and science.] “Don’t look away,” said Mr Amodei. “Don’t blink.” ■"

Text

Cross-posting from my mention of this on Pillowfort.

Yesterday, Draft2Digital (which now includes Smashwords) sent out an email with a, frankly, very insulting survey. It would be such a shame if a link to that survey without the link trackers were to circulate around Tumblr dot Com.

The survey has eight multiple choice questions and (more importantly) two long-form text response boxes.

The survey is being run from August 27th, 2024 to September 3rd, 2024. If you use Draft2Digital or Smashwords, and have not already seen this in your associated email, you may want to read through it and send them your thoughts.

Plain text for the image below the cut:

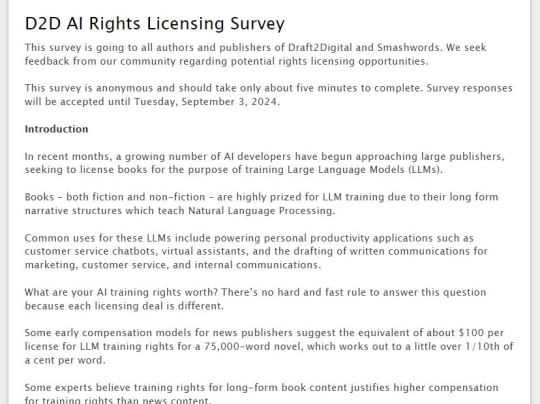

D2D AI Rights Licensing Survey:

This survey is going to all authors and publishers of Draft2Digital and Smashwords. We seek feedback from our community regarding potential rights licensing opportunities.

This survey is anonymous and should take only about five minutes to complete. Survey responses will be accepted until Tuesday, September 3, 2024.

Introduction:

In recent months, a growing number of AI developers have begun approaching large publishers, seeking to license books for the purpose of training Large Language Models (LLMs).

Books – both fiction and non-fiction – are highly prized for LLM training due to their long form narrative structures which teach Natural Language Processing.

Common uses for these LLMs include powering personal productivity applications such as customer service chatbots, virtual assistants, and the drafting of written communications for marketing, customer service, and internal communications.

What are your AI training rights worth? There’s no hard and fast rule to answer this question because each licensing deal is different.

Some early compensation models for news publishers suggest the equivalent of about $100 per license for LLM training rights for a 75,000-word novel, which works out to a little over 1/10th of a cent per word.

Some experts believe training rights for long-form book content justifies higher compensation for training rights than news content.
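
As a quick sanity check on the per-word figure quoted in the survey, here is a minimal Python sketch. The variable and function names are made up for illustration; the only inputs are the $100 fee and the 75,000-word length stated in the survey text.

```python
# Figures quoted in the survey text (nothing else is assumed).
license_fee_usd = 100
novel_words = 75_000


def cents_per_word(fee_usd: float, words: int) -> float:
    """Convert a flat license fee in dollars into US cents per word."""
    return fee_usd * 100 / words


rate = cents_per_word(license_fee_usd, novel_words)
print(f"{rate:.3f} cents per word")  # prints "0.133 cents per word"
```

That matches the survey's "a little over 1/10th of a cent per word".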
