#RAG Model Implementation

Explore tagged Tumblr posts

Text

Think Smarter, Not Harder: Meet RAG

How do RAG make machines think like you?

Imagine a world where your AI assistant doesn't only talk like a human but understands your needs, explores the latest data, and gives you answers you can trust—every single time. Sounds like science fiction? It's not.

We're at the tipping point of an AI revolution, where large language models (LLMs) like OpenAI's GPT are rewriting the rules of engagement in everything from customer service to creative writing. here's the catch: all that eloquence means nothing if it can't deliver the goods—if the answers aren't just smooth, spot-on, accurate, and deeply relevant to your reality.

The question is: Are today's AI models genuinely equipped to keep up with the complexities of real-world applications, where context, precision, and truth aren't just desirable but essential? The answer lies in pushing the boundaries further—with Retrieval-Augmented Generation (RAG).

While LLMs generate human-sounding copies, they often fail to deliver reliable answers based on real facts. How do we ensure that an AI-powered assistant doesn't confidently deliver outdated or incorrect information? How do we strike a balance between fluency and factuality? The answer is in a brand new powerful approach: Retrieval-Augmented Generation (RAG).

What is Retrieval-Augmented Generation (RAG)?

RAG is a game-changing technique to increase the basic abilities of traditional language models by integrating them with information retrieval mechanisms. RAG does not only rely on pre-acquired knowledge but actively seek external information to create up-to-date and accurate answers, rich in context. Imagine for a second what could happen if you had a customer support chatbot able to engage in a conversation and draw its answers from the latest research, news, or your internal documents to provide accurate, context-specific answers.

RAG has the immense potential to guarantee informed, responsive and versatile AI. But why is this necessary? Traditional LLMs are trained on vast datasets but are static by nature. They cannot access real-time information or specialized knowledge, which can lead to "hallucinations"—confidently incorrect responses. RAG addresses this by equipping LLMs to query external knowledge bases, grounding their outputs in factual data.

How Does Retrieval-Augmented Generation (RAG) Work?

RAG brings a dynamic new layer to traditional AI workflows. Let's break down its components:

Embedding Model

Think of this as the system's "translator." It converts text documents into vector formats, making it easier to manage and compare large volumes of data.

Retriever

It's the AI's internal search engine. It scans the vectorized data to locate the most relevant documents that align with the user's query.

Reranker (Opt.)

It assesses the submitted documents and score their relevance to guarantee that the most pertinent data will pass along.

Language Model

The language model combines the original query with the top documents the retriever provides, crafting a precise and contextually aware response. Embedding these components enables RAG to enhance the factual accuracy of outputs and allows for continuous updates from external data sources, eliminating the need for costly model retraining.

How does RAG achieve this integration?

It begins with a query. When a user asks a question, the retriever sifts through a curated knowledge base using vector embeddings to find relevant documents. These documents are then fed into the language model, which generates an answer informed by the latest and most accurate information. This approach dramatically reduces the risk of hallucinations and ensures that the AI remains current and context-aware.

RAG for Content Creation: A Game Changer or just a IT thing?

Content creation is one of the most exciting areas where RAG is making waves. Imagine an AI writer who crafts engaging articles and pulls in the latest data, trends, and insights from credible sources, ensuring that every piece of content is compelling and accurate isn't a futuristic dream or the product of your imagination. RAG makes it happen.

Why is this so revolutionary?

Engaging and factually sound content is rare, especially in today's digital landscape, where misinformation can spread like wildfire. RAG offers a solution by combining the creative fluency of LLMs with the grounding precision of information retrieval. Consider a marketing team launching a campaign based on emerging trends. Instead of manually scouring the web for the latest statistics or customer insights, an RAG-enabled tool could instantly pull in relevant data, allowing the team to craft content that resonates with current market conditions.

The same goes for various industries from finance to healthcare, and law, where accuracy is fundamental. RAG-powered content creation tools promise that every output aligns with the most recent regulations, the latest research and market trends, contributing to boosting the organization's credibility and impact.

Applying RAG in day-to-day business

How can we effectively tap into the power of RAG? Here's a step-by-step guide:

Identify High-Impact Use Cases

Start by pinpointing areas where accurate, context-aware information is critical. Think customer service, marketing, content creation, and compliance—wherever real-time knowledge can provide a competitive edge.

Curate a robust knowledge base

RAG relies on the quality of the data it collects and finds. Build or connect to a comprehensive knowledge repository with up-to-date, reliable information—internal documents, proprietary data, or trusted external sources.

Select the right tools and technologies

Leverage platforms that support RAG architecture or integrate retrieval mechanisms with existing LLMs. Many AI vendors now offer solutions combining these capabilities, so choose one that fits your needs.

Train your team

Successful implementation requires understanding how RAG works and its potential impact. Ensure your team is well-trained in deploying RAG&aapos;s technical and strategic aspects.

Monitor and optimize

Like any technology, RAG benefits from continuous monitoring and optimization. Track key performance indicators (KPIs) like accuracy, response time, and user satisfaction to refine and enhance its application.

Applying these steps will help organizations like yours unlock RAG's full potential, transform their operations, and enhance their competitive edge.

The Business Value of RAG

Why should businesses consider integrating RAG into their operations? The value proposition is clear:

Trust and accuracy

RAG significantly enhances the accuracy of responses, which is crucial for maintaining customer trust, especially in sectors like finance, healthcare, and law.

Efficiency

Ultimately, RAG reduces the workload on human employees, freeing them to focus on higher-value tasks.

Knowledge management

RAG ensures that information is always up-to-date and relevant, helping businesses maintain a high standard of knowledge dissemination and reducing the risk of costly errors.

Scalability and change

As an organization grows and evolves, so does the complexity of information management. RAG offers a scalable solution that can adapt to increasing data volumes and diverse information needs.

RAG vs. Fine-Tuning: What's the Difference?

Both RAG and fine-tuning are powerful techniques for optimizing LLM performance, but they serve different purposes:

Fine-Tuning

This approach involves additional training on specific datasets to make a model more adept at particular tasks. While effective for niche applications, it can limit the model's flexibility and adaptability.

RAG

In contrast, RAG dynamically retrieves information from external sources, allowing for continuous updates without extensive retraining, which makes it ideal for applications where real-time data and accuracy are critical.

The choice between RAG and fine-tuning entirely depends on your unique needs. For example, RAG is the way to go if your priority is real-time accuracy and contextual relevance.

Concluding Thoughts

As AI evolves, the demand for RAG AI Service Providers systems that are not only intelligent but also accurate, reliable, and adaptable will only grow. Retrieval-Augmented generation stands at the forefront of this evolution, promising to make AI more useful and trustworthy across various applications.

Whether it's a content creation revolution, enhancing customer support, or driving smarter business decisions, RAG represents a fundamental shift in how we interact with AI. It bridges the gap between what AI knows and needs to know, making it the tool of reference to grow a real competitive edge.

Let's explore the infinite possibilities of RAG together

We would love to know; how do you intend to optimize the power of RAG in your business? There are plenty of opportunities that we can bring together to life. Contact our team of AI experts for a chat about RAG and let's see if we can build game-changing models together.

#RAG#Fine-tuning LLM for RAG#RAG System Development Companies#RAG LLM Service Providers#RAG Model Implementation#RAG-Enabled AI Platforms#RAG AI Service Providers#Custom RAG Model Development

0 notes

Text

Prompt: Couples will evidently begin to mimic their better half after some time. What traits do you steal from him, and vice versa? Fandom: Twisted Wonderland Characters: Everyone - because I want to and I’m amidst fleshing out all my Yuu/Character dynamics + designs Format: Headcannons. Masterlist: LinkedUP Parts: Heartslabyul (Here) | Savanaclaw | Octavinelle | Scarabia | Pomefiore | Ignihyde | Diasomnia A/N: Putting all my brain rot from my notes into something cohesive. Contrary to my love for ripping your hearts out, I've come with some fluff this time around. BTW you may or may not already do things mentioned - I write my works with a specific Yuu in mind for each character so this is based on them. Just a reminder.

Habits you steal:

Plan-Books (Inherited) : Riddle habitually carries a planner with all his tasks. A physical one, not an app in his cell phone like most students choose. You find it easier to manage and swap to paper-and-pen alternatives at his recommendation.

Tidiness (Inherited): Riddle is a nit-pickier when it comes to physical presentation. His habits of pressing his uniform, laying his clothes out every night, and dressing conservatively rub off. He has a point - ironed trousers do make a difference. Every morning he will redo your uniform tie. It's never knotted to his 'standard', and is his preferred excuse to greet you before class.

"Now, isn't that better? Surely you are more comfortable in ironed linens than those rags you'd been wearing as pajamas. You seriously found them lying in Ramshackle? Were you not given an allowance to buy basic needs? Ridiculous! The Headmaster's irresponsibility holds no bounds!" <- Utterly appalled that you've been sleeping in century-old robes. He supplies you with seven sets of pajamas, a spare uniform, and an iron + board for Ramshackle. All after reaming the Headmaster for neglect in the last dorm-head meeting - either Crowley coughed up the marks or Riddle will supply from his own bank. Seven have mercy if he chooses to become a lawyer instead of a doctor.

No Heels (Developed): Riddle has a height complex. He won't make a show of it, but you wearing heels does emasculate him. Especially if you're already taller naturally. For his sake, you choose to slay your outfits in flats.

"Are those new loafers? Oh - no, they're lovely. The embroidery is exquisite and I can see why Pomefiore's Housewarden models for their brand. I merely thought you preferred the heeled saddle-shoes we saw during the past weekend trip. I must have been mistaken. Never mind me. You look wonderful."

Playing Brain Teasers (Inherited): Riddle has this thing with memory - you don't know if he's really into preventing old-age Alzheimer's or what. He carries a book of teaser games like Sudoku, etc. for when he has downtime and you eventually get into them too.

"Oh! My Rose, would you care to join me for lunch? Trey's siblings recently mailed in a large collection of cross-words. You'll find they are both educational and entertaining - hm? I do not seem the 'type' for word-games? I assure you, even I can relax on occasion. There is no need to look so surprised." <- Riddle's been making a grand effort to do things he enjoys and become more personable. Trey's siblings did not send the collection. Riddle went into town and picked it out on his own. He also found a book on organizing excursions since he's big on quality time. He is dead-set on not being a neglectful or 'boring' partner.

Swear Jar (Developed): Tired of Riddle collaring Ace for his vulgar tongue, you suggest a Heartslabyul swear jar. When the jar gets filled, the money can be used to fund things like study materials and renovations for the dorm. Riddle liked this idea, but now implements it on anyone who sets foot in the Heartslabyul. Considering you spend most of your time there, you've had to develop a vast vocabulary beyond swearing. Oh - you also unironically use the word 'fiddlesticks' now.

Habits he steals:

Useless Expenses (Inherited): You are an enabler without a doubt. Riddle has always functioned with the bare bones - with function and efficiency being the number one priority. Ever so slowly - you've spoiled him with aesthetically pleasing stationary. At first all the needless purchases felt redundant - why buy the pillowcases with flowers when plain white is cheaper? You can invest in a higher quality this way. Yet you've ruined him with gifts that he had no choice but to use. Now he needs to buy the pens with little hedgehogs on them because studying doesn't feel the same with a plain ballpoint.

Slang Dictionary (Developed): With each passing day, all the students in Heartslabyul get more creative at bending the rules. That includes you. Riddle takes it upon himself to carry a 'little-black-book' full of all the sang words he is unfamiliar with. He does want to be a bit more 'hip' to understand you more, but at the same time he wants to bust any student being a smart-mouth. It's an ongoing battle *sigh*.

"Apologies, could you repeat that term for me? Surely it must be relevant to my lecture if you and Ace are whispering. 'Let him cook'? Do you think we are in a culinary lecture?! Have you not been listening to - ah. So it's in reference to letting me finish before interrupting...One moment. I need to make a note."

Chewing Gum (Developed): This is an ode to psychology. In short, eating is tied to a person's fight-or-flight. Instincts dictate that our bodies need to be in a calm state to eat comfortably. One day when Riddle was at his wits end, you tossed him a pack of sugarless gum and told him to chew. Disregarding Trey's unholy dental screeching, Riddle develops a gum dependence for when he's stressed out. On the bright side, his jaw has never been so sharp.

“Mimicry? You must be mistaken. Even if my influence has affected their person, surely there are only positive developments” == Riddle denies any changes if confronted. In truth, he’s well aware of how much you’ve helped him grow. It’s the opposite accusation that spikes concern. Riddle does not want others thinking you’re a mini-version of him. Rumors are not kind and neither is his current reputation. Making those amends is his burden to bare. He is flattered to see you paying attention to his mannerisms, and secretly proud that your bond is strong enough to affect the psyche.

Habits you steal:

Whistling (Inherited): Trey whistles while working in the kitchen or doing general chores around the dorm. He's not very loud with it, so not may students are bothered. Since you laze about in his shadow the tunes he goes through do become repetitive. Now you do the same when cleaning up Ramshackle. Grim wants to knock you both out because he can't take it anymore.

"Ah -- How'd you know it was me in here? Just because I bake for the un-birthday parties doesn't mean I live in the kitchen, you know. My whistling? Huh. Never thought that would be my calling card but there are worse things, haha"

Head-Scratching (Inherited): Trey's got a habit of scratching the back of his head when he's uncomfortable or nervous. That, or rubbing at the nape of his neck while adverting eye contact. You start doing this too whenever you're being scolded or put in a tough situation.

Dental Hygiene (Inherited): By far the most obvious shared trait. Trey enforces his dental habits onto everyone- you are no exception. You now own four different kinds of floss, two toothbrushes (one being electric), and have a strict hygiene routine. Your pearly whites have never been so clean. Eventually you become somewhat of a secondary enforcer, policing anyone who sleeps over your dorm to take care of themselves before bed. All of Heartslabyul learns that there is no going back when you scold Riddle for not brushing after his teatime tart, and live to tell the tale.

"Hey - uh, weird question? Were you handing out floss to the Spelldrive Team yesterday? Seriously? I though Grim was pulling my leg - oh, no! It's not weird at all! Those guys should have a better routine for all the meat they eat when bulking. I'm just shocked you got through to them." <- Very proud. Mildly cocky. He's been itching to get those negligent jocks to floss after their banquets his entire tenure, but steered away from that conflict like the plague. Thank you for making his dreams come true. Now if you could maybe get them to stop picking their gums with toothpicks?

Habits he steals:

Overbuying Food (Developed): Being a baker's son, Trey's good with finances and money. He's also meticulous with the ingredients he purchases for his bakes. You are not. You go to Sam's shop, buy whatever is on sale, and then bring it back home to improvise. This ends poorly more often than not, and behold! Trey has two Ramshackle sluggers snooping around his kitchen for eats. This is unpredictable and therefore he now never knows what amount to buy. You've ruined him.

Phone Calls (Developed): Texting is easier. Especially since phone calls can be a commitment that Trey dislikes being wrapped up in. Whenever Cater's name pops up as the caller, Trey knows he's getting an ear full. The thing is that you never. answer. your. phone. Either the text gets lumped in with the hundreds of missed messages you have, or Grim stole your cell to play mobile games. So Trey gives up and only ever calls. Either Grim will answer or you'll pick up thinking it's the snooze of your alarm.

"Hello? Prefect, where are you? It's me, Trey. Just calling to see if you're still coming to the Un-Birthday party? Riddle's getting a bit nervous since the schedule's set for the next hour. Grim's already here with Ace and Deuce - uh, want Cater to send a double to pick you up? I have a sinking feeling that you're asleep...Call me? Please?" <- He was correct. You called back not a moment after, half-asleep and hauling ass not to be late.

Speaking in Propositions (Inherited): Trey's normally good at keeping neutrality in a conversation, but getting a clear answer out of Yuu you is like solving a rubix cube. Either it's easy and instant, or a long game. Eventually your habit of indecisiveness rubs off on him and he asks questions more than answers them. Evidently this gets his younger classmen to stop asking for favors unless they really need to.

“Aha - really? I didn’t notice at all. Okay. Okay, I picked up on a few hints. What’s so wrong with them taking after me? It’s cute, right?” == Trey is the observant sort that picks up on his influence quickly. Not just anyone carries floss in their pocket at all times - and the looks from his dorm-mates when you offer some up is enough for the realization to click. Trey’s used to playing the respectable sort, and finds it endearing that you’re taking his good notes to heart. In truth, most of Trey’s mimicry is intentional. He’s a flexible guy who doesn’t mind altering his habits to fit your needs. Easier this way, y’know?

Habits you steal:

Speaking in Acronyms(Inherited): Now this is scary. The first time it happened, you had to take a pause and just re-evaluate your entire life. You don't use them nearly as often as Cater does, but somewhere along the line your brain must have rewired to speak in internet lingo. O-M-G you're TOTALLY twinning with him right now, period :)

Nicknames (Inherited): Again, frightening. You once swore against ever calling him Cay-Cay. It isn't very slay-slay. Yet you can only hear him use nicknames for so long until you're unconsciously calling people by them too. Especially since he's always dishing gossip. It starts in your head, which is fine. It's not like they know. Then you call Lilia 'Lils' and that old fart is just grinning behind his sleeve because ohoho~ young love <3

"Did you just- AHA! OMG DO IT AGAIN?! Wait, gotta get my camera out for this - wha? Oh, that's totes not fair! C'mon. Call me Cay-Cay. Just once! I won't even post it to Magicam, please? Lils won't believe me without proof! Pleasssssseeeee - " <- He actually doesn't want you to call him Cay-Cay all the time. Cater likes you using his given name, since it's more personal. Although the way it obviously slipped out on accident is just too cute to ignore.

Reality TV (Inherited): At first you don't like the gossip. It's cheesy, a bit annoying, and the shaky camera-work for nearly every show is headache inducing. Cater likes his dose of drama in his free-time, and Ramshackle has a tv that no one is using. It starts with him watching while you do other things around the dorm. Yet each time you pass the living area, you take longer to leave. Lingering around like one of the ghosts. Then he pulls you in with snacks and starts giving the low-down of what's going on, pulling out a bottle of tangerine shimmer polish to paint your nails. It's just one episode, watch it for him? Please? Oh no. No. No. Suddenly you're invested in who's the baby-daddy of little Ricky and what Chantel is going to do because her sister just lost the house to foreclosure.

"#KingdomOfDeadbeats - am I right? Ugh. I'm so glad we met if that's the dating scene back home...What?! I know it isn't real! Don't be a dummy, I was just joking! Ah! Stop! Don't hit me!" <- Half-hearted jokes about going on one of those talk-shows one day. You're an alien, after all - imagine the juicy drama and views his account would get from doing an interview? It's all jokes though. Cater likes spilling the tea, but hates being it. Don't ever abandon him and go out for milk though, kay? He doesn't want to pay Grim's child support. Otherwise he might have no choice smh

Habits he steals:

Phone/Web Games (Inherited): Cater's phone is mainly full of social media. He's not too into the gaming scene, it's not his peeps y'know? Alas, you download a few dress-up games and one MMO on his phone. First off - props on getting his phone. That's Cay-Cay's lifeline and not just anyone gets to play with it. Pray tell - what is this Wonderstar Planet (props if you know what is being ref.) and how can he become the most influential digital streamer on it? Congrats. He's addicted.

"Who's this Muscle Red and why's he bombing our raid - AH! He just tea-bagged me! So not cool...Prefect? STOP LAUGHING WE HAVE BETS ON THIS MATCH! There goes my collab opportunity, big fail" <- Muscle Red continues to make an appearance. Eventually he becomes Cater's official rival on stream, and Lils is all to invested in the tea cater drops during club meets. Side note. You're the one who gave 'muscle red' Cater's domain code. The lore thickens.

Internet Caution (Developed): This goes without saying, but Cater's well-known in the Magicam scene. He's very forward and knows his way around using charisma. Since you're not in the scene as much, he becomes more cautious of where and when he does streams. The change is so subtle that only the most observant people will pick up on it - but Cay-Cay doesn't want any creepos popping in if y'know what I'm saying. His sisters were the ones to instigate this change.

“Awe~ SRSLY?! That’s fresh news to my ears but good, right? Ne, are there any clips or pics? I need my evidence, y’see. Especially if my cutie is off taking notes from their one and only. C’mon, spill the tea!” == Cheeky Cater is well aware of what’s happening. He’d humor anyone out for some light teasing - after all, he isn’t by your side at all hours. His walls are probably the second most difficult in all of campus to bypass, so he’s both sweetened and nerved to see you picking up on his mannerisms. That’s proof of a strong attachment, after all.

Habits you steal:

Knuckle Cracking (Inherited): Deuce still does this from his biker days. It could be because joint pain from past fights, or possibly air retention in his knuckles from studying. Regardless, Deuce cracks his knuckles at least once every few hours and you began to mimic him. Some people groan at the popping sounds but it really does feel good to release the tension. Let's just hope neither of you dislocate any fingers on accident.

"Stop that! G-geez, you nearly gave me a heart attack. Thought you broke a finger...your hands are stiff? That just means you're studying a lot! I think...uh, let's break? I think there's some leftovers in the kitchen." <- Deuce 100% gets needing to pop those air bubbles. His hands get stiff from studying all the time, but don't crack them too much or you might dislocate something. Side note - he shows you how to wrap your fingers with a soothing salve. He used to do it after fights, but now it's a great help after class.

Double Notes (Developed): Deuce tries. He really does. Yet the lad just isn't great when it comes to book smarts. Seeing that he is dedicated to turning over a new leaf, you make a habit of copying all your notes. He isn't allowed to share them with Ace or Grim - else all bets are off. Sometimes you leave little 'good job' stickers on the last page for him. Is he a toddler? No. Does he peel the stickers off and save them? Totally. He is a good noodle. Suck it Ace.

Sewing (Developed): He breaks things. Most of the time it's an accident. You've learned to carry a mini-sewing kit for all the rips in Deuce's uniform. Same for mini remedies for stains and other problems. It's not like he's trying to get grass stains all over his under-shirt or to split the seam in his gloves (nearly every week). It just happens, and every time he comes to you with a kicked-puppy look with a promise of it being the last time. It is never the last time.

"Uhm...hun'? It happened again. I'm so sorry for bothering you but Housewarden is going to kill me if he sees the tear in my blazer! Can you fix it?! I can't handle another collar with my exam tomorrow! I need to breathe to focus! - really!? I owe you one! Snacks are on me tonight."

Habits he steals:

Bottomless Stomach (Developed): Have leftovers from dinner? Bring them over. He'll get the tubba-ware back in 1-2 days. Coupon for buy-one-get-one at Sam's? He'll take the extra and polish it off in less than a minute. Deuce becomes a human garbage disposal and is taking the unwanted condiments off your sandwich to eat. Just pick them off and leave 'em on the corner of his lunch plate. Even if he dislikes it, he'll down it so you don't have to.

"Mm. Oh, thanks hun' - its that all you're eatin'? You don't like the steam bun? It is a bit dry, but wasting food is disrespectful to the cooks! I'll finish it for you so have my fruit instead. You still need to eat" <- 10/10 very thoughtful and not picky at all. He is grateful to eat your cooking and will gobble up all leftovers at Ramshackle, but doesn't think twice to sharing meals in the cafeteria. He will notice though if you do not eat enough. Restocks the snack cabinet if he sees it's empty. Is touched if you routinely share things you know he enjoys, like saving half your frittata on purpose.

Early Riser (Inherited): See - even if you hate the mornings, there is no choice at Night Raven College. As Ramshackle Prefect you need to be up to take care of business before class. Deuce becomes your personal alarm clock because he wants some time with you before everyone else joins in. Mind you that he lives with three other dudes who threaten to end him every morning because his alarm wakes them up too. Eventually he can wake up without it, but the time leading is unpleasant.

"W-what? Seriously? I've been trying to be more like them! They're a good person and responsible so I've been trying to follow their example. To think we've been doing the same thing this entire time...." == Why would you ever imitate him? He's been trying his damn best to become an honor student worth respecting, and has a long way to go. To think you're comfortable enough with him to mimic his mannerisms? It's a pipe dream, one he doesn't grasp until it's put right in front of his face. You don't let anyone else pick off your plate other than Grim. The next time his clothes tear, he's already handing off his tie before realizing just what's happening. When you wrap his knuckles after a six-hour lock in at the library? He can't help but feel proud at how neat the bandages are. Suddenly the dark memories of hiding bruised knuckles from his mom are pacified with healing balm. Deuce views this development as a gift, and is grateful. Very, very grateful.

Habits you steal:

‘I owe you’ cards (Inherited): Ace's favorite social invention - the 'solid'. Nothing spells new-low like getting your friends to do stuff in exchange for a favor in the future. Most of the time Ace counts on people forgetting he owes them one, but you're not so gullible. The only difference between you both is that while Ace never fulfills his solid, you have a conscience. Give it a few more years. He'll get ya.

"I know this is the third ticket this week but - Oh! C'mon, cut a guy some slack, would you? I'm sorry for bein' late to our date. Yeah, it was shitty. I'm not trying to fight it, aright? I'm here now so let's have some fun and you can chalk three strikes on my tab. I'll even buy ya some candy - Ah! Okay! Two candies but that's where my charity ends!" <- Evidently, the 'I-owe-you' tabs cancel each other out from how often you both call in favors. It's just an excuse to do acts of service or express apologies without being too mushy. Ace is definitely keeping a track record of them though. Expect an ongoing log that dates back to the week you met, when he showed up homeless, collared, and looking to couch surf.

Profanity (Inherited): Ace swears like a sailor. Maybe not so much in his dorm because *cough* he's being policed. He holds no such reservations when you're both alone at Ramshackle. Unfortunately his potty mouth has a mind of it's own - it taints you, and you are a sham of a prefect. Ace earned a week-long collar for teaching you some Twisted-Wonderland exclusive curses. Riddle is not pleased.

Leaving the Windows Unlocked (Developed): There are only so many times he can sneak in through your window before the adrenaline-induced charm wears off. You have class in the morning, and can't be bothered to deal with him on nights he can't pass out in his dorm. Thank seven you have all of Ramshackle to yourself - because Heartslabyul sounds like a nightmare with the roommate situation. You can't leave the front door open for obvious reasons, but most nights the guest-bedroom window will be left slightly ajar in case he needs a place to crash.

"Pssst! Oi! Prefect! ...ugh, Grim! Wake them up, man! The latch is stuck. Don't go back to bed you furball! HEY! IT'S FREAKIN COLD OUT HERE SO LET ME IN ALREADY" <- Please let him in. If Ace has to spend one more night in that stinky dorm with three dudes, he'll string one of their dirty gym socks over your bed. No mercy.

Sleeping with Earplugs (Developed): Bitch Ace snores.

Habits he steals:

Notes Memo (Developed): Ace is bad with remembering things. Anniversaries? Dates? Allergies? He admits to not putting in a great amount of effort, but you can't say he doesn't try at all. He has a notes block on his phone dedicated to things like your go-to takeout orders and preferences. He even has a few alarms set days before any important events because even if you say no-gifts or plans...yeah, he's not that stupid.

Excessive Yawning (Inherited): You're always tired - it wasn't Ace's problem before but now he does feel a bit guilty. Dragging you into his messes felt different when you were just the prefect, y'know? Regardless, it's human instinct to mimic each other's demeanor so he'll openly yawn all the time - normally in succession of you.

"Hey...you're dozing off again. Am I seriously that boring to hang around? - Nah. Just messin' with you. I'd suggest taking a nap during next period but I doubt a goody-goody like you is gonna take that advice. Let's just ditch juice at lunch and go back to the dorm. Don't get mad if I forget to wake you up though"

Medications (Developed): Ace is the last person to become a human apothecary, but he's always got a pack of pain-reliever meds in his pocket with a few bandages, etc. He also attached one of those tiny capsule bottles to his keyring with some stomach meds inside. You took a spill running laps? Dang man. That sucks. Here's a band-aid for your knee. Curse you for making him the slightly-more responsible one.

"Eh..what, like it's a shock? You saying I'm a bad influence? Cause yeah, that checks. Nothin' I can do if they want to take a card outta my deck though," == Ace is entirely neutral on the topic. He is definitely smug that you're coming over to the dark side, but he doesn't need anyone to point it out. He was your first after all. Maybe the start could have been a bit better - but hey, you came around. It's not like he's hurting anyone by helping build your backbone. Although Ace will instantly deny going soft for you in any way, shape, or form.

#twisted wonderland#twst#twst x reader#twst imagines#heartslabyul#twisted wonderland riddle x reader#riddle rosehearts x reader#twst trey clover#trey clover x reader#caterdiamond x reader#twst cater diamond#deuce spade x reader#twst deuce spade x reader#ace trappola x reader#twst ace trappola x reader#heartslabyul x reader#twst x yuu#twst headcanons

2K notes

·

View notes

Text

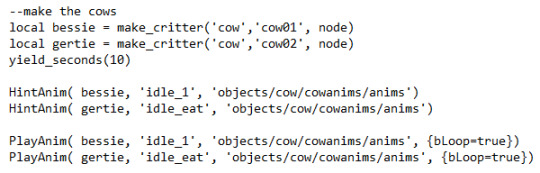

The Sims 2 PSP Cut Content: Part 1

I had been looking for the best way to implement this info on the Sims Wiki (but these are cut Sims, so there's not really a place for them? Or maybe someone else can do it). I've also been working on some videos talking about them. (I love watching these types of videos and prefer that visual format) but at this rate who knows when I'll finish it. So here we go! If you love Strangetown and crave any ounce of lore that you can get like me, here's a few townies. They even have their own secrets! Please read p6tgel's post to get all the info about the cut character TA7, everything I know about him is over there, so I don't have anything to add here. All I'm going to say is... I remember wanting to find out so badly who Mister Smith's friend was after playing The Sims 2 PSP for the first time. I'm so glad they actually did add more to the story. Learning more about the alien society and getting another title (like Pollination Technicians) just makes me want a Sixam neighborhood even more lol go read about it!!

Missing Kine Society Cult Members

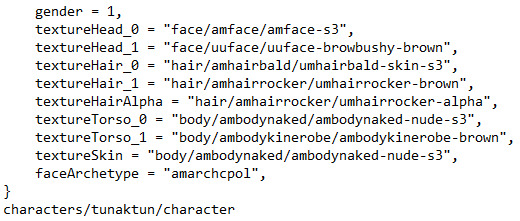

Tunak Tun

Tunak is the only cut character that I could find photo evidence of in an old screenshot. He is a cut Kine Leader (like Sara Starr), as seen in his brown robe talking to Bull Dratch. The schedule file for the Kine Dairy indicates that Tunak would have spawned only during the day.

Unfortunately, it's a view of his back, but his character file confirms this appearance. (just want to say... the details on the Kine robes are actually beautiful. The crunched down quality we got in the final release makes them look like rags) Gender: 0 = female, 1 = male. The eye color is only specified if they're not the default brown. (I'll be using the Sims 2 PC to recreate them, as it shares a lot of assets with the PSP version)

Tunak Tun's Details

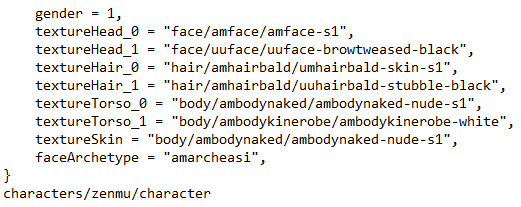

Bio: "A member of the Kine Society." social = 7, intimidation = 1, personality = 1, His social and intimidation scores are on the lower end for Deadtree locals, so social games aren't as difficult. He has the Air personality type. His topic sets (interests) are cow, cow milk, cow bell, cow beast, full moon, and crystal ball. A visual of these:

Tunak Tun's Secrets

(Personal): "Has been known to sneak in a burger or two on the sly."

(Intimate): "Likes to wear loose robes for that 'fresh and ventilated' feeling."

(Dark): "He actually just made up his other two Secrets. He's a pathological liar."

According to the game code, Tunak Tun would also count towards the goal to "Earn the Trust of a Kine Leader [Relationship 4]", just like Sara Starr and Sinjin Balani.

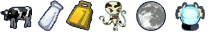

Zen Mu

Zen Mu is regular Kine Society member that wears a white robe. The schedule file for the Kine Dairy indicates that Zen would have spawned only during the night.

I went through every face template available in the Sims 2 PSP CAS and I can't find Zen Mu's (might be hidden like some hairs/clothes are) and I don't see the stubble hair in the PC version.

Zen Mu's Details

Bio: "A member of the Kine Society." social = 7, intimidation = 1, personality = 2, Their social and intimidation scores are on the lower end for Deadtree locals, so social games aren't as difficult. They have the Water personality type. Their topic sets (interests) are cow, cow milk, cow bell, and cow beast. A visual of these:

Zen Mu's Secrets

(Personal): "Severe lactose intolerance has made her unpopular in the Kine Society."

(Intimate): "She is deathly afraid of cows, but don't let the cows find out … they thrive on fear."

(Dark): "When she meditates, her power animal is a horse … the highest form of Kine blasphemy."

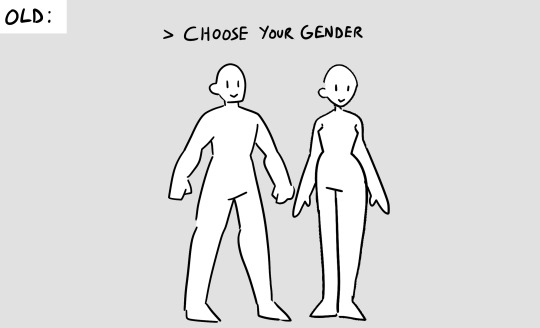

Interestingly, Zen Mu's gender and character model is male, but all 3 of Zen's secrets uses she/her pronouns. Small fun fact - Personal Kine robe for the player: It looks like we would have received our own kine robe at one point, probably after passing inspection, according to the item list file (item 72). Now, we can just go to a wardrobe and buy a robe ourselves.

Extra fun fact that I randomly like to talk about on my twitch streams, but I don't remember if I've said it over here? These two award winning cows were actually given names by the devs in the Kine Dairy level spawning file. Bessie and Gertie! <3

176 notes

·

View notes

Text

Unlock the other 99% of your data - now ready for AI

New Post has been published on https://thedigitalinsider.com/unlock-the-other-99-of-your-data-now-ready-for-ai/

Unlock the other 99% of your data - now ready for AI

For decades, companies of all sizes have recognized that the data available to them holds significant value, for improving user and customer experiences and for developing strategic plans based on empirical evidence.

As AI becomes increasingly accessible and practical for real-world business applications, the potential value of available data has grown exponentially. Successfully adopting AI requires significant effort in data collection, curation, and preprocessing. Moreover, important aspects such as data governance, privacy, anonymization, regulatory compliance, and security must be addressed carefully from the outset.

In a conversation with Henrique Lemes, Americas Data Platform Leader at IBM, we explored the challenges enterprises face in implementing practical AI in a range of use cases. We began by examining the nature of data itself, its various types, and its role in enabling effective AI-powered applications.

Henrique highlighted that referring to all enterprise information simply as ‘data’ understates its complexity. The modern enterprise navigates a fragmented landscape of diverse data types and inconsistent quality, particularly between structured and unstructured sources.

In simple terms, structured data refers to information that is organized in a standardized and easily searchable format, one that enables efficient processing and analysis by software systems.

Unstructured data is information that does not follow a predefined format nor organizational model, making it more complex to process and analyze. Unlike structured data, it includes diverse formats like emails, social media posts, videos, images, documents, and audio files. While it lacks the clear organization of structured data, unstructured data holds valuable insights that, when effectively managed through advanced analytics and AI, can drive innovation and inform strategic business decisions.

Henrique stated, “Currently, less than 1% of enterprise data is utilized by generative AI, and over 90% of that data is unstructured, which directly affects trust and quality”.

The element of trust in terms of data is an important one. Decision-makers in an organization need firm belief (trust) that the information at their fingertips is complete, reliable, and properly obtained. But there is evidence that states less than half of data available to businesses is used for AI, with unstructured data often going ignored or sidelined due to the complexity of processing it and examining it for compliance – especially at scale.

To open the way to better decisions that are based on a fuller set of empirical data, the trickle of easily consumed information needs to be turned into a firehose. Automated ingestion is the answer in this respect, Henrique said, but the governance rules and data policies still must be applied – to unstructured and structured data alike.

Henrique set out the three processes that let enterprises leverage the inherent value of their data. “Firstly, ingestion at scale. It’s important to automate this process. Second, curation and data governance. And the third [is when] you make this available for generative AI. We achieve over 40% of ROI over any conventional RAG use-case.”

IBM provides a unified strategy, rooted in a deep understanding of the enterprise’s AI journey, combined with advanced software solutions and domain expertise. This enables organizations to efficiently and securely transform both structured and unstructured data into AI-ready assets, all within the boundaries of existing governance and compliance frameworks.

“We bring together the people, processes, and tools. It’s not inherently simple, but we simplify it by aligning all the essential resources,” he said.

As businesses scale and transform, the diversity and volume of their data increase. To keep up, AI data ingestion process must be both scalable and flexible.

“[Companies] encounter difficulties when scaling because their AI solutions were initially built for specific tasks. When they attempt to broaden their scope, they often aren’t ready, the data pipelines grow more complex, and managing unstructured data becomes essential. This drives an increased demand for effective data governance,” he said.

IBM’s approach is to thoroughly understand each client’s AI journey, creating a clear roadmap to achieve ROI through effective AI implementation. “We prioritize data accuracy, whether structured or unstructured, along with data ingestion, lineage, governance, compliance with industry-specific regulations, and the necessary observability. These capabilities enable our clients to scale across multiple use cases and fully capitalize on the value of their data,” Henrique said.

Like anything worthwhile in technology implementation, it takes time to put the right processes in place, gravitate to the right tools, and have the necessary vision of how any data solution might need to evolve.

IBM offers enterprises a range of options and tooling to enable AI workloads in even the most regulated industries, at any scale. With international banks, finance houses, and global multinationals among its client roster, there are few substitutes for Big Blue in this context.

To find out more about enabling data pipelines for AI that drive business and offer fast, significant ROI, head over to this page.

#ai#AI-powered#Americas#Analysis#Analytics#applications#approach#assets#audio#banks#Blue#Business#business applications#Companies#complexity#compliance#customer experiences#data#data collection#Data Governance#data ingestion#data pipelines#data platform#decision-makers#diversity#documents#emails#enterprise#Enterprises#finance

2 notes

·

View notes

Text

WHAT IS VERTEX AI SEARCH

Vertex AI Search: A Comprehensive Analysis

1. Executive Summary

Vertex AI Search emerges as a pivotal component of Google Cloud's artificial intelligence portfolio, offering enterprises the capability to deploy search experiences with the quality and sophistication characteristic of Google's own search technologies. This service is fundamentally designed to handle diverse data types, both structured and unstructured, and is increasingly distinguished by its deep integration with generative AI, most notably through its out-of-the-box Retrieval Augmented Generation (RAG) functionalities. This RAG capability is central to its value proposition, enabling organizations to ground large language model (LLM) responses in their proprietary data, thereby enhancing accuracy, reliability, and contextual relevance while mitigating the risk of generating factually incorrect information.

The platform's strengths are manifold, stemming from Google's decades of expertise in semantic search and natural language processing. Vertex AI Search simplifies the traditionally complex workflows associated with building RAG systems, including data ingestion, processing, embedding, and indexing. It offers specialized solutions tailored for key industries such as retail, media, and healthcare, addressing their unique vernacular and operational needs. Furthermore, its integration within the broader Vertex AI ecosystem, including access to advanced models like Gemini, positions it as a comprehensive solution for building sophisticated AI-driven applications.

However, the adoption of Vertex AI Search is not without its considerations. The pricing model, while granular and offering a "pay-as-you-go" approach, can be complex, necessitating careful cost modeling, particularly for features like generative AI and always-on components such as Vector Search index serving. User experiences and technical documentation also point to potential implementation hurdles for highly specific or advanced use cases, including complexities in IAM permission management and evolving query behaviors with platform updates. The rapid pace of innovation, while a strength, also requires organizations to remain adaptable.

Ultimately, Vertex AI Search represents a strategic asset for organizations aiming to unlock the value of their enterprise data through advanced search and AI. It provides a pathway to not only enhance information retrieval but also to build a new generation of AI-powered applications that are deeply informed by and integrated with an organization's unique knowledge base. Its continued evolution suggests a trajectory towards becoming a core reasoning engine for enterprise AI, extending beyond search to power more autonomous and intelligent systems.

2. Introduction to Vertex AI Search

Vertex AI Search is establishing itself as a significant offering within Google Cloud's AI capabilities, designed to transform how enterprises access and utilize their information. Its strategic placement within the Google Cloud ecosystem and its core value proposition address critical needs in the evolving landscape of enterprise data management and artificial intelligence.

Defining Vertex AI Search

Vertex AI Search is a service integrated into Google Cloud's Vertex AI Agent Builder. Its primary function is to equip developers with the tools to create secure, high-quality search experiences comparable to Google's own, tailored for a wide array of applications. These applications span public-facing websites, internal corporate intranets, and, significantly, serve as the foundation for Retrieval Augmented Generation (RAG) systems that power generative AI agents and applications. The service achieves this by amalgamating deep information retrieval techniques, advanced natural language processing (NLP), and the latest innovations in large language model (LLM) processing. This combination allows Vertex AI Search to more accurately understand user intent and deliver the most pertinent results, marking a departure from traditional keyword-based search towards more sophisticated semantic and conversational search paradigms.

Strategic Position within Google Cloud AI Ecosystem

The service is not a standalone product but a core element of Vertex AI, Google Cloud's comprehensive and unified machine learning platform. This integration is crucial, as Vertex AI Search leverages and interoperates with other Vertex AI tools and services. Notable among these are Document AI, which facilitates the processing and understanding of diverse document formats , and direct access to Google's powerful foundation models, including the multimodal Gemini family. Its incorporation within the Vertex AI Agent Builder further underscores Google's strategy to provide an end-to-end toolkit for constructing advanced AI agents and applications, where robust search and retrieval capabilities are fundamental.

Core Purpose and Value Proposition

The fundamental aim of Vertex AI Search is to empower enterprises to construct search applications of Google's caliber, operating over their own controlled datasets, which can encompass both structured and unstructured information. A central pillar of its value proposition is its capacity to function as an "out-of-the-box" RAG system. This feature is critical for grounding LLM responses in an enterprise's specific data, a process that significantly improves the accuracy, reliability, and contextual relevance of AI-generated content, thereby reducing the propensity for LLMs to produce "hallucinations" or factually incorrect statements. The simplification of the intricate workflows typically associated with RAG systems—including Extract, Transform, Load (ETL) processes, Optical Character Recognition (OCR), data chunking, embedding generation, and indexing—is a major attraction for businesses.

Moreover, Vertex AI Search extends its utility through specialized, pre-tuned offerings designed for specific industries such as retail (Vertex AI Search for Commerce), media and entertainment (Vertex AI Search for Media), and healthcare and life sciences. These tailored solutions are engineered to address the unique terminologies, data structures, and operational requirements prevalent in these sectors.

The pronounced emphasis on "out-of-the-box RAG" and the simplification of data processing pipelines points towards a deliberate strategy by Google to lower the entry barrier for enterprises seeking to leverage advanced Generative AI capabilities. Many organizations may lack the specialized AI talent or resources to build such systems from the ground up. Vertex AI Search offers a managed, pre-configured solution, effectively democratizing access to sophisticated RAG technology. By making these capabilities more accessible, Google is not merely selling a search product; it is positioning Vertex AI Search as a foundational layer for a new wave of enterprise AI applications. This approach encourages broader adoption of Generative AI within businesses by mitigating some inherent risks, like LLM hallucinations, and reducing technical complexities. This, in turn, is likely to drive increased consumption of other Google Cloud services, such as storage, compute, and LLM APIs, fostering a more integrated and potentially "sticky" ecosystem.

Furthermore, Vertex AI Search serves as a conduit between traditional enterprise search mechanisms and the frontier of advanced AI. It is built upon "Google's deep expertise and decades of experience in semantic search technologies" , while concurrently incorporating "the latest in large language model (LLM) processing" and "Gemini generative AI". This dual nature allows it to support conventional search use cases, such as website and intranet search , alongside cutting-edge AI applications like RAG for generative AI agents and conversational AI systems. This design provides an evolutionary pathway for enterprises. Organizations can commence by enhancing existing search functionalities and then progressively adopt more advanced AI features as their internal AI maturity and comfort levels grow. This adaptability makes Vertex AI Search an attractive proposition for a diverse range of customers with varying immediate needs and long-term AI ambitions. Such an approach enables Google to capture market share in both the established enterprise search market and the rapidly expanding generative AI application platform market. It offers a smoother transition for businesses, diminishing the perceived risk of adopting state-of-the-art AI by building upon familiar search paradigms, thereby future-proofing their investment.

3. Core Capabilities and Architecture

Vertex AI Search is engineered with a rich set of features and a flexible architecture designed to handle diverse enterprise data and power sophisticated search and AI applications. Its capabilities span from foundational search quality to advanced generative AI enablement, supported by robust data handling mechanisms and extensive customization options.

Key Features

Vertex AI Search integrates several core functionalities that define its power and versatility:

Google-Quality Search: At its heart, the service leverages Google's profound experience in semantic search technologies. This foundation aims to deliver highly relevant search results across a wide array of content types, moving beyond simple keyword matching to incorporate advanced natural language understanding (NLU) and contextual awareness.

Out-of-the-Box Retrieval Augmented Generation (RAG): A cornerstone feature is its ability to simplify the traditionally complex RAG pipeline. Processes such as ETL, OCR, document chunking, embedding generation, indexing, storage, information retrieval, and summarization are streamlined, often requiring just a few clicks to configure. This capability is paramount for grounding LLM responses in enterprise-specific data, which significantly enhances the trustworthiness and accuracy of generative AI applications.

Document Understanding: The service benefits from integration with Google's Document AI suite, enabling sophisticated processing of both structured and unstructured documents. This allows for the conversion of raw documents into actionable data, including capabilities like layout parsing and entity extraction.

Vector Search: Vertex AI Search incorporates powerful vector search technology, essential for modern embeddings-based applications. While it offers out-of-the-box embedding generation and automatic fine-tuning, it also provides flexibility for advanced users. They can utilize custom embeddings and gain direct control over the underlying vector database for specialized use cases such as recommendation engines and ad serving. Recent enhancements include the ability to create and deploy indexes without writing code, and a significant reduction in indexing latency for smaller datasets, from hours down to minutes. However, it's important to note user feedback regarding Vector Search, which has highlighted concerns about operational costs (e.g., the need to keep compute resources active even when not querying), limitations with certain file types (e.g., .xlsx), and constraints on embedding dimensions for specific corpus configurations. This suggests a balance to be struck between the power of Vector Search and its operational overhead and flexibility.

Generative AI Features: The platform is designed to enable grounded answers by synthesizing information from multiple sources. It also supports the development of conversational AI capabilities , often powered by advanced models like Google's Gemini.

Comprehensive APIs: For developers who require fine-grained control or are building bespoke RAG solutions, Vertex AI Search exposes a suite of APIs. These include APIs for the Document AI Layout Parser, ranking algorithms, grounded generation, and the check grounding API, which verifies the factual basis of generated text.

Data Handling

Effective data management is crucial for any search system. Vertex AI Search provides several mechanisms for ingesting, storing, and organizing data:

Supported Data Sources:

Websites: Content can be indexed by simply providing site URLs.

Structured Data: The platform supports data from BigQuery tables and NDJSON files, enabling hybrid search (a combination of keyword and semantic search) or recommendation systems. Common examples include product catalogs, movie databases, or professional directories.

Unstructured Data: Documents in various formats (PDF, DOCX, etc.) and images can be ingested for hybrid search. Use cases include searching through private repositories of research publications or financial reports. Notably, some limitations, such as lack of support for .xlsx files, have been reported specifically for Vector Search.

Healthcare Data: FHIR R4 formatted data, often imported from the Cloud Healthcare API, can be used to enable hybrid search over clinical data and patient records.

Media Data: A specialized structured data schema is available for the media industry, catering to content like videos, news articles, music tracks, and podcasts.

Third-party Data Sources: Vertex AI Search offers connectors (some in Preview) to synchronize data from various third-party applications, such as Jira, Confluence, and Salesforce, ensuring that search results reflect the latest information from these systems.

Data Stores and Apps: A fundamental architectural concept in Vertex AI Search is the one-to-one relationship between an "app" (which can be a search or a recommendations app) and a "data store". Data is imported into a specific data store, where it is subsequently indexed. The platform provides different types of data stores, each optimized for a particular kind of data (e.g., website content, structured data, unstructured documents, healthcare records, media assets).

Indexing and Corpus: The term "corpus" refers to the underlying storage and indexing mechanism within Vertex AI Search. Even when users interact with data stores, which act as an abstraction layer, the corpus is the foundational component where data is stored and processed. It is important to understand that costs are associated with the corpus, primarily driven by the volume of indexed data, the amount of storage consumed, and the number of queries processed.

Schema Definition: Users have the ability to define a schema that specifies which metadata fields from their documents should be indexed. This schema also helps in understanding the structure of the indexed documents.

Real-time Ingestion: For datasets that change frequently, Vertex AI Search supports real-time ingestion. This can be implemented using a Pub/Sub topic to publish notifications about new or updated documents. A Cloud Function can then subscribe to this topic and use the Vertex AI Search API to ingest, update, or delete documents in the corresponding data store, thereby maintaining data freshness. This is a critical feature for dynamic environments.

Automated Processing for RAG: When used for Retrieval Augmented Generation, Vertex AI Search automates many of the complex data processing steps, including ETL, OCR, document chunking, embedding generation, and indexing.

The "corpus" serves as the foundational layer for both storage and indexing, and its management has direct cost implications. While data stores provide a user-friendly abstraction, the actual costs are tied to the size of this underlying corpus and the activity it handles. This means that effective data management strategies, such as determining what data to index and defining retention policies, are crucial for optimizing costs, even with the simplified interface of data stores. The "pay only for what you use" principle is directly linked to the activity and volume within this corpus. For large-scale deployments, particularly those involving substantial datasets like the 500GB use case mentioned by a user , the cost implications of the corpus can be a significant planning factor.

There is an observable interplay between the platform's "out-of-the-box" simplicity and the requirements of advanced customization. Vertex AI Search is heavily promoted for its ease of setup and pre-built RAG capabilities , with an emphasis on an "easy experience to get started". However, highly specific enterprise scenarios or complex user requirements—such as querying by unique document identifiers, maintaining multi-year conversational contexts, needing specific embedding dimensions, or handling unsupported file formats like XLSX —may necessitate delving into more intricate configurations, API utilization, and custom development work. For example, implementing real-time ingestion requires setting up Pub/Sub and Cloud Functions , and achieving certain filtering behaviors might involve workarounds like using metadata fields. While comprehensive APIs are available for "granular control or bespoke RAG solutions" , this means that the platform's inherent simplicity has boundaries, and deep technical expertise might still be essential for optimal or highly tailored implementations. This suggests a tiered user base: one that leverages Vertex AI Search as a turnkey solution, and another that uses it as a powerful, extensible toolkit for custom builds.

Querying and Customization

Vertex AI Search provides flexible ways to query data and customize the search experience:

Query Types: The platform supports Google-quality search, which represents an evolution from basic keyword matching to modern, conversational search experiences. It can be configured to return only a list of search results or to provide generative, AI-powered answers. A recent user-reported issue (May 2025) indicated that queries against JSON data in the latest release might require phrasing in natural language, suggesting an evolving query interpretation mechanism that prioritizes NLU.

Customization Options:

Vertex AI Search offers extensive capabilities to tailor search experiences to specific needs.

Metadata Filtering: A key customization feature is the ability to filter search results based on indexed metadata fields. For instance, if direct filtering by rag_file_ids is not supported by a particular API (like the Grounding API), adding a file_id to document metadata and filtering on that field can serve as an effective alternative.

Search Widget: Integration into websites can be achieved easily by embedding a JavaScript widget or an HTML component.

API Integration: For more profound control and custom integrations, the AI Applications API can be used.

LLM Feature Activation: Features that provide generative answers powered by LLMs typically need to be explicitly enabled.

Refinement Options: Users can preview search results and refine them by adding or modifying metadata (e.g., based on HTML structure for websites), boosting the ranking of certain results (e.g., based on publication date), or applying filters (e.g., based on URL patterns or other metadata).

Events-based Reranking and Autocomplete: The platform also supports advanced tuning options such as reranking results based on user interaction events and providing autocomplete suggestions for search queries.

Multi-Turn Conversation Support:

For conversational AI applications, the Grounding API can utilize the history of a conversation as context for generating subsequent responses.

To maintain context in multi-turn dialogues, it is recommended to store previous prompts and responses (e.g., in a database or cache) and include this history in the next prompt to the model, while being mindful of the context window limitations of the underlying LLMs.

The evolving nature of query interpretation, particularly the reported shift towards requiring natural language queries for JSON data , underscores a broader trend. If this change is indicative of a deliberate platform direction, it signals a significant alignment of the query experience with Google's core strengths in NLU and conversational AI, likely driven by models like Gemini. This could simplify interactions for end-users but may require developers accustomed to more structured query languages for structured data to adapt their approaches. Such a shift prioritizes natural language understanding across the platform. However, it could also introduce friction for existing applications or development teams that have built systems based on previous query behaviors. This highlights the dynamic nature of managed services, where underlying changes can impact functionality, necessitating user adaptation and diligent monitoring of release notes.

4. Applications and Use Cases

Vertex AI Search is designed to cater to a wide spectrum of applications, from enhancing traditional enterprise search to enabling sophisticated generative AI solutions across various industries. Its versatility allows organizations to leverage their data in novel and impactful ways.

Enterprise Search

A primary application of Vertex AI Search is the modernization and improvement of search functionalities within an organization:

Improving Search for Websites and Intranets: The platform empowers businesses to deploy Google-quality search capabilities on their external-facing websites and internal corporate portals or intranets. This can significantly enhance user experience by making information more discoverable. For basic implementations, this can be as straightforward as integrating a pre-built search widget.

Employee and Customer Search: Vertex AI Search provides a comprehensive toolkit for accessing, processing, and analyzing enterprise information. This can be used to create powerful search experiences for employees, helping them find internal documents, locate subject matter experts, or access company knowledge bases more efficiently. Similarly, it can improve customer-facing search for product discovery, support documentation, or FAQs.

Generative AI Enablement

Vertex AI Search plays a crucial role in the burgeoning field of generative AI by providing essential grounding capabilities:

Grounding LLM Responses (RAG): A key and frequently highlighted use case is its function as an out-of-the-box Retrieval Augmented Generation (RAG) system. In this capacity, Vertex AI Search retrieves relevant and factual information from an organization's own data repositories. This retrieved information is then used to "ground" the responses generated by Large Language Models (LLMs). This process is vital for improving the accuracy, reliability, and contextual relevance of LLM outputs, and critically, for reducing the incidence of "hallucinations"—the tendency of LLMs to generate plausible but incorrect or fabricated information.

Powering Generative AI Agents and Apps: By providing robust grounding capabilities, Vertex AI Search serves as a foundational component for building sophisticated generative AI agents and applications. These AI systems can then interact with and reason about company-specific data, leading to more intelligent and context-aware automated solutions.

Industry-Specific Solutions

Recognizing that different industries have unique data types, terminologies, and objectives, Google Cloud offers specialized versions of Vertex AI Search:

Vertex AI Search for Commerce (Retail): This version is specifically tuned to enhance the search, product recommendation, and browsing experiences on retail e-commerce channels. It employs AI to understand complex customer queries, interpret shopper intent (even when expressed using informal language or colloquialisms), and automatically provide dynamic spell correction and relevant synonym suggestions. Furthermore, it can optimize search results based on specific business objectives, such as click-through rates (CTR), revenue per session, and conversion rates.

Vertex AI Search for Media (Media and Entertainment): Tailored for the media industry, this solution aims to deliver more personalized content recommendations, often powered by generative AI. The strategic goal is to increase consumer engagement and time spent on media platforms, which can translate to higher advertising revenue, subscription retention, and overall platform loyalty. It supports structured data formats commonly used in the media sector for assets like videos, news articles, music, and podcasts.

Vertex AI Search for Healthcare and Life Sciences: This offering provides a medically tuned search engine designed to improve the experiences of both patients and healthcare providers. It can be used, for example, to search through vast clinical data repositories, electronic health records, or a patient's clinical history using exploratory queries. This solution is also built with compliance with healthcare data regulations like HIPAA in mind.

The development of these industry-specific versions like "Vertex AI Search for Commerce," "Vertex AI Search for Media," and "Vertex AI Search for Healthcare and Life Sciences" is not merely a cosmetic adaptation. It represents a strategic decision by Google to avoid a one-size-fits-all approach. These offerings are "tuned for unique industry requirements" , incorporating specialized terminologies, understanding industry-specific data structures, and aligning with distinct business objectives. This targeted approach significantly lowers the barrier to adoption for companies within these verticals, as the solution arrives pre-optimized for their particular needs, thereby reducing the requirement for extensive custom development or fine-tuning. This industry-specific strategy serves as a potent market penetration tactic, allowing Google to compete more effectively against niche players in each vertical and to demonstrate clear return on investment by addressing specific, high-value industry challenges. It also fosters deeper integration into the core business processes of these enterprises, positioning Vertex AI Search as a more strategic and less easily substitutable component of their technology infrastructure. This could, over time, lead to the development of distinct, industry-focused data ecosystems and best practices centered around Vertex AI Search.

Embeddings-Based Applications (via Vector Search)

The underlying Vector Search capability within Vertex AI Search also enables a range of applications that rely on semantic similarity of embeddings:

Recommendation Engines: Vector Search can be a core component in building recommendation engines. By generating numerical representations (embeddings) of items (e.g., products, articles, videos), it can find and suggest items that are semantically similar to what a user is currently viewing or has interacted with in the past.

Chatbots: For advanced chatbots that need to understand user intent deeply and retrieve relevant information from extensive knowledge bases, Vector Search provides powerful semantic matching capabilities. This allows chatbots to provide more accurate and contextually appropriate responses.

Ad Serving: In the domain of digital advertising, Vector Search can be employed for semantic matching to deliver more relevant advertisements to users based on content or user profiles.

The Vector Search component is presented both as an integral technology powering the semantic retrieval within the managed Vertex AI Search service and as a potent, standalone tool accessible via the broader Vertex AI platform. Snippet , for instance, outlines a methodology for constructing a recommendation engine using Vector Search directly. This dual role means that Vector Search is foundational to the core semantic retrieval capabilities of Vertex AI Search, and simultaneously, it is a powerful component that can be independently leveraged by developers to build other custom AI applications. Consequently, enhancements to Vector Search, such as the recently reported reductions in indexing latency , benefit not only the out-of-the-box Vertex AI Search experience but also any custom AI solutions that developers might construct using this underlying technology. Google is, in essence, offering a spectrum of access to its vector database technology. Enterprises can consume it indirectly and with ease through the managed Vertex AI Search offering, or they can harness it more directly for bespoke AI projects. This flexibility caters to varying levels of technical expertise and diverse application requirements. As more enterprises adopt embeddings for a multitude of AI tasks, a robust, scalable, and user-friendly Vector Search becomes an increasingly critical piece of infrastructure, likely driving further adoption of the entire Vertex AI ecosystem.

Document Processing and Analysis

Leveraging its integration with Document AI, Vertex AI Search offers significant capabilities in document processing:

The service can help extract valuable information, classify documents based on content, and split large documents into manageable chunks. This transforms static documents into actionable intelligence, which can streamline various business workflows and enable more data-driven decision-making. For example, it can be used for analyzing large volumes of textual data, such as customer feedback, product reviews, or research papers, to extract key themes and insights.

Case Studies (Illustrative Examples)

While specific case studies for "Vertex AI Search" are sometimes intertwined with broader "Vertex AI" successes, several examples illustrate the potential impact of AI grounded on enterprise data, a core principle of Vertex AI Search:

Genial Care (Healthcare): This organization implemented Vertex AI to improve the process of keeping session records for caregivers. This enhancement significantly aided in reviewing progress for autism care, demonstrating Vertex AI's value in managing and utilizing healthcare-related data.

AES (Manufacturing & Industrial): AES utilized generative AI agents, built with Vertex AI, to streamline energy safety audits. This application resulted in a remarkable 99% reduction in costs and a decrease in audit completion time from 14 days to just one hour. This case highlights the transformative potential of AI agents that are effectively grounded on enterprise-specific information, aligning closely with the RAG capabilities central to Vertex AI Search.

Xometry (Manufacturing): This company is reported to be revolutionizing custom manufacturing processes by leveraging Vertex AI.

LUXGEN (Automotive): LUXGEN employed Vertex AI to develop an AI-powered chatbot. This initiative led to improvements in both the car purchasing and driving experiences for customers, while also achieving a 30% reduction in customer service workloads.

These examples, though some may refer to the broader Vertex AI platform, underscore the types of business outcomes achievable when AI is effectively applied to enterprise data and processes—a domain where Vertex AI Search is designed to excel.

5. Implementation and Management Considerations

Successfully deploying and managing Vertex AI Search involves understanding its setup processes, data ingestion mechanisms, security features, and user access controls. These aspects are critical for ensuring the platform operates efficiently, securely, and in alignment with enterprise requirements.

Setup and Deployment

Vertex AI Search offers flexibility in how it can be implemented and integrated into existing systems:

Google Cloud Console vs. API: Implementation can be approached in two main ways. The Google Cloud console provides a web-based interface for a quick-start experience, allowing users to create applications, import data, test search functionality, and view analytics without extensive coding. Alternatively, for deeper integration into websites or custom applications, the AI Applications API offers programmatic control. A common practice is a hybrid approach, where initial setup and data management are performed via the console, while integration and querying are handled through the API.

App and Data Store Creation: The typical workflow begins with creating a search or recommendations "app" and then attaching it to a "data store." Data relevant to the application is then imported into this data store and subsequently indexed to make it searchable.

Embedding JavaScript Widgets: For straightforward website integration, Vertex AI Search provides embeddable JavaScript widgets and API samples. These allow developers to quickly add search or recommendation functionalities to their web pages as HTML components.

Data Ingestion and Management

The platform provides robust mechanisms for ingesting data from various sources and keeping it up-to-date:

Corpus Management: As previously noted, the "corpus" is the fundamental underlying storage and indexing layer. While data stores offer an abstraction, it is crucial to understand that costs are directly related to the volume of data indexed in the corpus, the storage it consumes, and the query load it handles.