#DNS Enumeration

👩🏻‍💻 Archive of cybersecurity tools that have been recommended to me or mentioned over time

AnyRun: cloud-based malware analysis service (sandbox).

Burp Suite: a proprietary software tool for security assessment and penetration testing of web applications. The free Community Edition includes Burp Proxy and Interceptor (intercepts the requests made by the browser and lets you modify requests and responses on the fly; useful for testing JavaScript-based applications), Burp Site Map, Burp Logger and HTTP History, Burp Repeater (lets you replay and modify captured requests, add or remove parameters, etc.), Burp Decoder, Burp Sequencer, Burp Comparer, Burp Extender (extends Burp Suite's functionality, e.g. with plugins specialized in finding specific bugs or automating part of the work) and Burp Intruder (iterates requests with different payloads to automate injection testing).

CyberChef: a simple, intuitive web app for carrying out all manner of "cyber" operations within a web browser. These operations include simple encoding like XOR and Base64, more complex encryption like AES, DES and Blowfish, creating binary and hexdumps, compression and decompression of data, calculating hashes and checksums, IPv6 and X.509 parsing, changing character encodings, and much more.

DorkSearch: an AI-powered Google Dorking tool that helps create effective search queries to uncover sensitive information on the internet.

FFUF: fast web fuzzer written in Go.

GrayHatWarfare: a search engine that indexes publicly accessible Amazon S3 buckets, helping users identify exposed cloud storage and potential security risks.

JoeSandbox: detects and analyzes potentially malicious files and URLs on Windows, macOS and Linux, looking for suspicious activity. It performs deep malware analysis and generates comprehensive, detailed analysis reports.

Nikto: a free-software command-line vulnerability scanner that scans web servers for dangerous files or CGIs, outdated server software and other problems.

Nuclei: a fast, customizable vulnerability scanner powered by the global security community and built on a simple YAML-based DSL, enabling collaboration to tackle trending vulnerabilities on the internet. It helps you find vulnerabilities in your applications, APIs, networks, DNS, and cloud configurations.

OWASP ZAP: Zed Attack Proxy (ZAP) by Checkmarx is a free, open-source penetration testing tool. ZAP is designed specifically for testing web applications and is both flexible and extensible. At its core, ZAP is what is known as a “manipulator-in-the-middle proxy.” It stands between the tester’s browser and the web application so that it can intercept and inspect messages sent between browser and web application, modify the contents if needed, and then forward those packets on to the destination. It can be used both as a stand-alone application and as a daemon process.

PIA: aims to help data controllers build and demonstrate compliance with the GDPR. It facilitates carrying out a data protection impact assessment (DPIA).

SecLists: the security tester's companion. It's a collection of multiple types of lists used during security assessments, collected in one place. List types include usernames, passwords, URLs, sensitive data patterns, fuzzing payloads, web shells, and many more.

SQLMAP: an open-source penetration testing tool that automates the process of detecting and exploiting SQL injection flaws and taking over database servers. It comes with a powerful detection engine, many niche features for the ultimate penetration tester, and a broad range of switches, from database fingerprinting and fetching data from the database to accessing the underlying file system and executing commands on the operating system via out-of-band connections.

Subfinder: fast passive subdomain enumeration tool.

Triage: a cloud-based sandbox analysis service that helps cybersecurity professionals analyse malicious files, prioritise incident alerts and accelerate alert triage. It allows dynamic analysis of files (Windows, Linux, Mac, Android) in a secure environment and offers detailed reports on malware behavior, including a maliciousness score. The service integrates with various cybersecurity tools and platforms, making it valuable for incident response and threat hunting.

VirusTotal: analyses suspicious files, domains, IPs and URLs to detect malware and other breaches, and automatically shares them with the security community.

Wayback Machine: a digital archive of the World Wide Web created by the Internet Archive. It lets users go "back in time" to see how websites looked in the past.

Wapiti: allows you to audit the security of your websites or web applications. It performs "black-box" scans of the web application by crawling the webpages of the deployed webapp, looking for scripts and forms where it can inject data. Once it gets the list of URLs, forms and their inputs, Wapiti acts like a fuzzer, injecting payloads to see if a script is vulnerable.

WPScan: written for security professionals and blog maintainers to test the security of their WordPress websites.

✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖

👩🏻‍💻 Practice lab sites

flAWS: through a series of levels you'll learn about common mistakes and gotchas when using Amazon Web Services (AWS).

flAWS2: this game/tutorial teaches you AWS (Amazon Web Services) security concepts. The challenges are focused on AWS specific issues. You can be an attacker or a defender.

✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖✖

👩🏻‍💻 Short list of intentionally vulnerable sites to practice on

http://testphp.vulnweb.com

A Comprehensive Guide to Bug Hunting

This guide provides a structured, step-by-step approach to bug hunting, focusing on reconnaissance, subdomain enumeration, live domain filtering, vulnerability scanning, and JavaScript analysis.

It incorporates essential tools like SecretFinder, Katana, GetJS, Nuclei, Mantra, Subjs, Grep, and Anew to enhance efficiency and coverage.

1. Initial Reconnaissance

Gather information about the target to identify IP blocks, ASNs, DNS records, and associated domains.

Tools and Techniques:

ARIN WHOIS: Look up IP blocks and ownership details.

BGP.HE: Retrieve IP blocks, ASNs, and routing information.

ViewDNS.info: Check DNS history and reverse IP lookups.

MXToolbox: Analyze MX records and DNS configurations.

Whoxy: Perform WHOIS lookups for domain ownership.

Who.is: Retrieve domain registration details.

Whois.domaintools: Advanced WHOIS and historical data.

IPAddressGuide: Convert CIDR to IP ranges.

NSLookup: Identify nameservers.

BuiltWith: Discover technologies used on the target website.

Amass: Perform comprehensive information gathering (subdomains, IPs, etc.).

Shodan: Search for exposed devices and services.

Censys.io: Identify hosts and certificates.

Hunter.how: Search engine for internet-exposed hosts and services, similar to Shodan.

ZoomEye: Search for open ports and services.

Steps:

Identify the target domain and associated IP ranges.

Collect WHOIS data for ownership and registration details.

Map out nameservers and DNS records.

Use Amass to enumerate initial subdomains and IPs.

Leverage Shodan, Censys, and ZoomEye to find exposed services.
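The CIDR-to-range conversion that IPAddressGuide performs can also be done offline once you have an IP block from ARIN WHOIS or BGP.HE. This is a minimal sketch using Python's standard ipaddress module. The 192.0.2.0/24 block is a documentation range (RFC 5737) used here as a placeholder, not a real target.

```python
import ipaddress


def cidr_to_range(cidr: str) -> tuple[str, str, int]:
    """Return (first address, last address, total address count) for a CIDR block."""
    # strict=False tolerates host bits being set, e.g. "192.0.2.77/24"
    net = ipaddress.ip_network(cidr, strict=False)
    return str(net[0]), str(net[-1]), net.num_addresses


def usable_hosts(cidr: str) -> list[str]:
    """List host addresses (for IPv4 blocks larger than /31 this excludes
    the network and broadcast addresses)."""
    net = ipaddress.ip_network(cidr, strict=False)
    return [str(ip) for ip in net.hosts()]


if __name__ == "__main__":
    print(cidr_to_range("192.0.2.0/24"))      # ('192.0.2.0', '192.0.2.255', 256)
    print(len(usable_hosts("192.0.2.0/29")))  # 6
```

The resulting host list can be saved to a file and fed to the scanners in the next steps.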

2. Subdomain Enumeration

Subdomains often expose vulnerabilities. The goal is to discover as many subdomains as possible, including sub-subdomains, and filter live ones.

Tools and Techniques:

Subfinder: Fast subdomain enumeration.

Amass: Advanced subdomain discovery.

Crt.sh: Extract subdomains from certificate transparency logs.

Sublist3r: Enumerate subdomains using multiple sources.

FFUF: Brute-force subdomains.

Chaos: Discover subdomains via ProjectDiscovery’s dataset.

OneForAll: Comprehensive subdomain enumeration.

ShuffleDNS: High-speed subdomain brute-forcing (VPS recommended).

Katana: Crawl websites to extract subdomains and endpoints.

VirusTotal: Find subdomains via passive DNS.

Netcraft: Search DNS records for subdomains.

Anew: Remove duplicate entries from subdomain lists.

Httpx: Filter live subdomains.

EyeWitness: Take screenshots of live subdomains for visual analysis.

Steps:

Run Subfinder, Amass, Sublist3r, and OneForAll to collect subdomains.

Query Crt.sh and Chaos for additional subdomains.

Use FFUF and ShuffleDNS for brute-forcing (on a VPS for speed).

Crawl the target with Katana to extract subdomains from dynamic content.

Combine results into a single file and use Anew to remove duplicates: cat subdomains.txt | anew > unique_subdomains.txt

Filter live subdomains with Httpx: cat unique_subdomains.txt | httpx -silent > live_subdomains.txt

Use EyeWitness to capture screenshots of live subdomains for manual review.
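The crt.sh and Anew steps can be approximated in Python. This is a minimal sketch that assumes you have already saved crt.sh's JSON output, for example from a query of the form https://crt.sh/?q=%25.target.com&output=json. It also assumes each record carries a newline-separated name_value field. append_new is a hypothetical helper that imitates Anew's "keep only lines not seen before" behavior. It is not Anew itself.

```python
import json


def names_from_crtsh(raw_json: str, domain: str) -> list[str]:
    """Extract unique hostnames under `domain` from saved crt.sh JSON output."""
    domain = domain.lower().rstrip(".")
    seen: set[str] = set()
    names: list[str] = []
    for record in json.loads(raw_json):
        # Assumption: name_value may hold several names separated by newlines
        for name in record.get("name_value", "").splitlines():
            name = name.strip().lower().lstrip("*.")  # "*.target.com" -> "target.com"
            if (name == domain or name.endswith("." + domain)) and name not in seen:
                seen.add(name)
                names.append(name)
    return names


def append_new(existing: list[str], incoming: list[str]) -> list[str]:
    """Hypothetical Anew-like helper: return entries of `incoming` that are not
    in `existing` (or earlier in `incoming`), preserving order, skipping blanks."""
    seen = set(existing)
    fresh = []
    for line in incoming:
        line = line.strip()
        if line and line not in seen:
            seen.add(line)
            fresh.append(line)
    return fresh
```

In practice you would call append_new with the contents of unique_subdomains.txt and each tool's output, then append the returned lines to the file.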

3. Subdomain Takeover Checks

Identify subdomains pointing to unclaimed services (e.g., AWS S3, Azure) that can be taken over.

Tools:

Subzy: Check for subdomain takeover vulnerabilities.

Subjack: Detect takeover opportunities (may be preinstalled in Kali).

Steps:

Run Subzy on the list of subdomains: subzy run --targets live_subdomains.txt

Use Subjack for additional checks: subjack -w live_subdomains.txt -a

Manually verify any flagged subdomains to confirm vulnerabilities.
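Manual verification usually means resolving the subdomain's CNAME and comparing the HTTP response with a service-specific "unclaimed resource" fingerprint. The sketch below covers only the matching step. The DNS lookup and the HTTP fetch are left out. The two fingerprints are illustrative examples, and Subzy and Subjack ship much larger, regularly updated lists.

```python
from typing import Optional

# Illustrative fingerprints only: CNAME suffix -> text served by an unclaimed resource.
FINGERPRINTS = {
    "amazonaws.com": "NoSuchBucket",                       # unclaimed S3 bucket
    "github.io": "There isn't a GitHub Pages site here.",  # unclaimed GitHub Pages site
}


def takeover_candidate(cname: str, body: str) -> Optional[str]:
    """Return the matching service suffix if both the CNAME target and the
    HTTP response body match a known fingerprint, else None."""
    target = cname.lower().rstrip(".")
    for suffix, marker in FINGERPRINTS.items():
        if (target == suffix or target.endswith("." + suffix)) and marker in body:
            return suffix
    return None
```

A match is only a lead. Confirm that the resource can actually be claimed before reporting it.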

4. Directory and File Bruteforcing

Search for sensitive files and directories that may expose vulnerabilities.

Tools:

FFUF: High-speed directory brute-forcing.

Dirsearch: Discover hidden directories and files.

Katana: Crawl for endpoints and files.

Steps:

Use FFUF to brute-force directories on live subdomains: ffuf -w wordlist.txt -u https://subdomain.target.com/FUZZ

Run Dirsearch for deeper enumeration (quote the asterisk so the shell does not glob-expand it): dirsearch -u https://subdomain.target.com -e '*'

Crawl with Katana to identify additional endpoints: katana -u https://subdomain.target.com -o endpoints.txt
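At its core, FFUF substitutes each wordlist entry into the FUZZ keyword of the URL template. The sketch below shows only that expansion step and sends no HTTP requests. Skipping "#" comment lines is an assumption modeled on FFUF's optional -ic flag, because SecLists wordlists often begin with comment headers.

```python
from typing import Iterable


def expand_fuzz(template: str, wordlist_lines: Iterable[str], keyword: str = "FUZZ",
                ignore_comments: bool = True) -> list[str]:
    """Build one candidate URL per wordlist entry by replacing `keyword`."""
    if keyword not in template:
        raise ValueError(f"URL template must contain the {keyword!r} keyword")
    urls = []
    for line in wordlist_lines:
        word = line.strip()
        if not word:
            continue
        if ignore_comments and word.startswith("#"):
            continue  # assumption: mirrors FFUF's optional -ic (ignore comments) flag
        urls.append(template.replace(keyword, word))
    return urls
```

Usage: with open("wordlist.txt") as fh: urls = expand_fuzz("https://subdomain.target.com/FUZZ", fh)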

5. JavaScript Analysis

Analyze JavaScript files for sensitive information like API keys, credentials, or hidden endpoints.

Tools:

GetJS: Extract JavaScript file URLs from a target.

Subjs: Identify JavaScript files across subdomains.

Katana: Crawl for JavaScript files and endpoints.

SecretFinder: Search JavaScript files for secrets (API keys, tokens, etc.).

Mantra: Hunt for leaked API keys and secrets in JavaScript files and web pages.

Grep: Filter specific patterns in JavaScript files.

Steps:

Use Subjs and GetJS to collect JavaScript file URLs (two separate commands): cat live_subdomains.txt | subjs > js_files.txt, then getJS --url https://subdomain.target.com --complete >> js_files.txt

Crawl with Katana to find additional JavaScript files, keeping only .js results: katana -u https://subdomain.target.com -em js -o js_endpoints.txt

Download JavaScript files for analysis: wget -i js_files.txt -P js_files/

Run SecretFinder to identify sensitive data (without -o cli it writes an HTML report instead): python3 SecretFinder.py -i 'js_files/*' -o cli > secrets.txt

Use Mantra to hunt for leaked keys in the collected JavaScript URLs (Mantra reads URLs from standard input): cat js_files.txt | mantra > mantra_report.txt

Search for specific patterns (e.g., API keys) with Grep: grep -r "api_key\|token" js_files/
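The Grep step can be extended into a small pattern-based scanner. SecretFinder does roughly the same thing at a larger scale. The three regexes below are illustrative assumptions: AKIA-prefixed AWS access key IDs, AIza-prefixed Google API keys, and a generic key/token/secret assignment. Expect false positives, and check every hit manually.

```python
import re
from pathlib import Path

PATTERNS = {
    "aws_access_key_id": re.compile(r"AKIA[0-9A-Z]{16}"),
    "google_api_key": re.compile(r"AIza[0-9A-Za-z_\-]{35}"),
    # e.g. apiKey: "....", token = '....'; the value must be 8+ non-quote characters
    "generic_secret": re.compile(
        r"""(?i)\b(?:api[_-]?key|token|secret)\b["']?\s*[:=]\s*["']([^"'\s]{8,})["']"""
    ),
}


def scan_text(text: str) -> list[tuple[str, str]]:
    """Return (pattern name, matched value) pairs found in `text`."""
    hits = []
    for name, pattern in PATTERNS.items():
        for m in pattern.finditer(text):
            # Report the captured value when the pattern has a group, else the whole match
            hits.append((name, m.group(1) if pattern.groups else m.group(0)))
    return hits


def scan_dir(folder: str) -> dict[str, list[tuple[str, str]]]:
    """Scan every file under `folder` (e.g. the js_files/ download directory)."""
    results = {}
    for path in Path(folder).rglob("*"):
        if path.is_file():
            hits = scan_text(path.read_text(errors="ignore"))
            if hits:
                results[str(path)] = hits
    return results
```

Usage: scan_dir("js_files/") returns a mapping of file path to findings.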

6. Vulnerability Scanning

Perform automated scans to identify common vulnerabilities.

Tools:

Nuclei: Fast vulnerability scanner with customizable templates.

Mantra: Check pages and JavaScript files for leaked API keys.

Steps:

Run Nuclei with a comprehensive template set (recent nuclei-templates releases keep these folders under http/): nuclei -l live_subdomains.txt -t http/cves/ -t http/exposures/ -o nuclei_results.txt

Use Mantra to check a page for exposed keys: echo https://subdomain.target.com | mantra > mantra_scan.txt

7. GitHub Reconnaissance

Search for leaked sensitive information in public repositories.

Tools:

GitHub Search: Manually search for target-related repositories.

Grep: Filter repository content for sensitive data.

Steps:

Search GitHub for the target domain or organization (e.g., "target.com" or org:target-org).

Clone relevant repositories and use Grep to find secrets: grep -r "api_key\|password\|secret" repo_folder/

Analyze code for hardcoded credentials or misconfigurations.

8. Next Steps and Analysis

Review EyeWitness screenshots for login pages, outdated software, or misconfigurations.

Analyze Nuclei and Mantra reports for actionable vulnerabilities.

Perform manual testing on promising subdomains (e.g., XSS, SQLi, SSRF).

Document findings and prioritize vulnerabilities based on severity.
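Nuclei's machine-readable output is easier to sort by severity than its text output. This sketch assumes the scan was run with JSON Lines output (the -jsonl flag in recent nuclei versions, writing to a hypothetical nuclei_results.jsonl). It also assumes each record carries template-id, info.severity and matched-at fields. Adjust the field names if your version differs.

```python
import json
from typing import Iterable

# Lower rank = more urgent; unknown severities sort last.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3, "info": 4}


def prioritize(jsonl_lines: Iterable[str]) -> list[tuple[str, str, str]]:
    """Return (severity, template-id, location) tuples, most severe first."""
    findings = []
    for line in jsonl_lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        severity = record.get("info", {}).get("severity", "unknown").lower()
        location = record.get("matched-at") or record.get("host", "?")
        findings.append((severity, record.get("template-id", "?"), location))
    # list.sort() is stable, so findings of equal severity keep their scan order
    findings.sort(key=lambda f: SEVERITY_RANK.get(f[0], len(SEVERITY_RANK)))
    return findings
```

Usage: with open("nuclei_results.jsonl") as fh: for sev, tid, loc in prioritize(fh): print(sev, tid, loc)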

Additional Notes

Learning Resources: Complete TryHackMe’s pre-security learning path for foundational knowledge.

Tool Installation:

Install Anew: go install github.com/tomnomnom/anew@latest

Install Subzy: go install github.com/PentestPad/subzy@latest

Install Nuclei: go install -v github.com/projectdiscovery/nuclei/v3/cmd/nuclei@latest

Install Katana: go install github.com/projectdiscovery/katana/cmd/katana@latest

Optimization: Use a VPS for resource-intensive tools like ShuffleDNS and FFUF.

File Management: Organize outputs into separate files (e.g., subdomains.txt, js_files.txt) for clarity.

The paper "The Core of Hacking" is an outstanding example of an essay on information technology. Footprinting is the technique of gathering information about a computer system and its entities. It is one methodology hackers use when they want to gather information about an organization. As a consultant assessing the network security of a large organization, I must apply various methodologies and use various tools in this assessment. Network scanning, on the other hand, is the process of identifying active hosts on a network, either to attack them or to assess them. It can be conducted through vulnerability scanning, an automated process of identifying vulnerabilities to determine whether a computer system can be threatened or exploited (Consultants, 2010). This type of scanning uses software that checks the system against a database of known flaws, tests for them, and produces a report that is useful for tightening the system's security. In footprinting, methods such as crawling may be used: the consultant browses the targeted organization's website, blogs, and social media accounts to gather information about the system. Open-source footprinting, however, is the best and easiest method for compiling an organization's information. One resource used is the Domain Name System (DNS), the naming system for computers connected to the internet. It associates information with the domain names assigned to each participating entity and translates those domain names into IP addresses, thereby locating computer systems and devices worldwide. The specialist can use it to locate a computer system they want to scan. The specialist can also use network enumeration tools to retrieve usernames and group information.
These tools enable them to discover hosts on a specific network using discovery protocols such as ICMP, scan ports for well-known services to identify the function of a remote host, and then fingerprint the remote host's operating system. Another technique is port scanning, in which software probes a server for open ports. Administrators use it to verify their networks' security policies, and attackers use it to identify the services running on a host in order to compromise it (Dwivedi, 2014). The specialist may also perform a port sweep, scanning multiple hosts for a specific service. Finally, the specialist can use the Simple Network Management Protocol (SNMP), which is used to manage devices on IP networks.
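The port scanning described above can be illustrated with the simplest variant, a TCP connect scan: a port counts as open if the three-way handshake completes. This is a minimal sketch using only Python's standard library. The thread count and timeout are arbitrary assumptions, and dedicated scanners such as Nmap are far faster and more capable.

```python
import socket
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable


def is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """TCP connect check: True if the three-way handshake completes."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeout and unreachable host all count as "not open"
        return False


def port_scan(host: str, ports: Iterable[int], workers: int = 50) -> list[int]:
    """Return the subset of `ports` that accept a TCP connection, in input order."""
    ports = list(ports)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda p: is_open(host, p), ports))
    return [p for p, ok in zip(ports, results) if ok]
```

A port sweep is the same check inverted: one port across many hosts, e.g. [h for h in hosts if is_open(h, 22)].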

Summary

🌐 What is DNS? DNS (Domain Name System) translates human-readable domain names (e.g., google.com) into machine-readable IP addresses, playing a critical role in internet functionality.

🔍 DNS Security Concerns: DNS was designed without built-in authentication, which leaves it susceptible to attacks like DNS cache poisoning and tunneling, and to abuse through domain generation algorithms (DGAs).

🛡️ DNS Exploits and Mitigation: Examples like SolarWinds illustrate the abuse of DNS for command-and-control (C2) communication. Mitigations include DNSSEC, DNS over HTTPS, and regular hygiene like cleaning up unused records.

📊 Practical Applications: From DNS enumeration in pen-testing to using tools like Pi-hole for DNS sinkholing, understanding DNS is pivotal for network security and effective cybersecurity strategies.

🤖 Future of DNS Security: Emphasizes the importance of secure extensions like DNSSEC and emerging protocols to address evolving threats.

Insights Based on Numbers

📈 Impact Scope: DNS tunneling and poisoning attacks have targeted millions of users globally, leading to breaches in high-profile organizations.

🖥️ Versatility of DNS Tools: Tools like DNSRecon and DNSDumpster simplify DNS enumeration, making them valuable for both attackers and defenders.
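At the wire level, the name-to-address translation described in the summary starts with a small binary query message defined in RFC 1035. This sketch builds such a query for an A record using only the standard library. Sending it (for example over UDP to port 53 of a resolver you are allowed to use) and parsing the answer are left out. The transaction ID 0x1234 is arbitrary.

```python
import struct


def build_query(name: str, qtype: int = 1, txid: int = 0x1234) -> bytes:
    """Build a minimal DNS query (RFC 1035) for `qtype` records of `name`.

    qtype 1 = A (IPv4 address), 28 = AAAA (IPv6). The query class is 1 = IN.
    """
    # Header: ID, flags (0x0100 = standard query with Recursion Desired),
    # QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0
    header = struct.pack("!HHHHHH", txid, 0x0100, 1, 0, 0, 0)
    qname = b""
    for label in name.rstrip(".").split("."):
        encoded = label.encode("ascii")
        if not 0 < len(encoded) <= 63:
            raise ValueError(f"invalid label: {label!r}")
        qname += bytes([len(encoded)]) + encoded  # length-prefixed label
    qname += b"\x00"  # the zero-length root label terminates the name
    return header + qname + struct.pack("!HH", qtype, 1)
```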

Omnisci3nt: Unveiling the Hidden Layers of the Web | #Omnisci3nt #Reconnaissance #Web

Scanning and Exploiting Subdomains with AzSubEnum

Peace be upon you, followers of the Shadow Hacker channel and blog. In this article we will look at AzSubEnum, a very impressive tool dedicated to extracting subdomains and exploiting the vulnerabilities found in them. AzSubEnum was designed to scan subdomains with very high accuracy, checking all of them through a set of techniques built into the tool. If you are interested in vulnerability discovery and ethical testing, this tool will be very useful to you.

AzSubEnum is one of the most powerful tools for hunting security vulnerabilities in websites and servers, and it offers many features, such as a deep scan that lets you examine a subdomain and extract the vulnerabilities present in it. It also extracts a domain's subdomains very accurately. AzSubEnum works through DNS: it checks candidate names against Azure service domains, including those for MSSQL, Cosmos DB, and Redis.

With AzSubEnum you can perform a comprehensive analysis of subdomains, which makes it very useful for anyone interested in bug bounty hunting or in discovering security vulnerabilities of all kinds.
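AzSubEnum's help text describes it as Azure subdomain enumeration driven by a base name and an optional permutations file. The sketch below illustrates that idea. It is not AzSubEnum's code. The suffix list and the base/base-word permutation scheme are assumptions chosen for illustration. A name that resolves only shows that the Azure resource exists, not that it is vulnerable. The resolver is injectable so the logic can be tested offline.

```python
import socket
from typing import Callable, Iterable

# Hypothetical subset of Azure service domains, for illustration only;
# AzSubEnum's own list and permutation logic may differ.
AZURE_SUFFIXES = {
    "blob.core.windows.net": "Storage (Blob)",
    "azurewebsites.net": "App Service",
    "database.windows.net": "Azure SQL (MSSQL)",
    "documents.azure.com": "Cosmos DB",
    "redis.cache.windows.net": "Azure Cache for Redis",
    "vault.azure.net": "Key Vault",
}


def candidate_names(base: str, permutations: Iterable[str] = ()) -> list[str]:
    """Base name plus simple 'base+word' and 'base-word' permutations."""
    names = [base]
    for word in permutations:
        word = word.strip()
        if word:
            names += [f"{base}{word}", f"{base}-{word}"]
    return names


def enumerate_azure(base: str, permutations: Iterable[str] = (),
                    resolve: Callable[[str], str] = socket.gethostbyname) -> dict[str, str]:
    """Return {hostname: service} for every candidate that resolves in DNS."""
    found = {}
    for name in candidate_names(base, permutations):
        for suffix, service in AZURE_SUFFIXES.items():
            host = f"{name}.{suffix}"
            try:
                resolve(host)
            except OSError:  # socket.gaierror (e.g. NXDOMAIN): treat as unused
                continue
            found[host] = service
    return found
```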

How to use AzSubEnum

    ➜ AzSubEnum git:(main) ✗ python3 azsubenum.py --help
    usage: azsubenum.py [-h] -b BASE [-v] [-t THREADS] [-p PERMUTATIONS]

    Azure Subdomain Enumeration

    options:
      -h, --help            show this help message and exit
      -b BASE, --base BASE  Base name to use
      -v, --verbose         Show verbose output
      -t THREADS, --threads THREADS
                            Number of threads for concurrent execution
      -p PERMUTATIONS, --permutations PERMUTATIONS
                            File containing permutations

Basic enumeration:

Fierce on Kali Linux & Raspberry Pi 5: The Art of DNS Enumeration

What is footprinting?

In the realm of cybersecurity, knowledge is power, and the first step in defending against potential threats is understanding them. Footprinting, often regarded as the initial phase of a cyberattack, is the process of gathering information about a target, be it an organization, an individual, or a network. In this blog, we will delve into the concept of footprinting, exploring its significance, methodologies, and ethical considerations.

What is Footprinting?

Footprinting, in the context of cybersecurity, refers to the systematic process of gathering information about a target entity, primarily through open-source intelligence (OSINT) techniques. The goal is to create a digital map that encompasses various aspects of the target, such as its infrastructure, employees, technologies, and online presence. This information is invaluable for both defensive and offensive cybersecurity purposes.

Methodologies of Footprinting

Passive Footprinting:

Website Analysis: Analyzing a target's website for publicly available information like contact details, organizational structure, and technology stack.

Social Media Profiling: Scouring social media platforms for clues about the target's employees, their interests, and connections.

WHOIS Lookup: Querying the WHOIS database to find domain registration information, including domain owners and contact details.

Active Footprinting:

Port Scanning: Actively probing the target's network to discover open ports, services, and potential vulnerabilities.

DNS Enumeration: Gathering information about DNS records to unveil subdomains and network topology.

Network Scanning: Using tools like Nmap to identify network devices, their configurations, and vulnerabilities.

Physical Footprinting:

On-Site Reconnaissance: Physical visits to target locations to gather information about security measures, access points, and potential weaknesses.
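The DNS Enumeration item above can be illustrated with a wordlist-based subdomain brute force, the technique that tools like Fierce and ShuffleDNS automate at scale. This is a minimal sketch under stated assumptions. Resolution uses socket.gethostbyname, which returns only the first IPv4 address from the system resolver. Wildcard DNS is detected by resolving one random label. The resolve parameter is injectable so the logic can be tested offline.

```python
import secrets
import socket
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Optional


def _lookup(host: str, resolve: Callable[[str], str]) -> Optional[str]:
    """Return the resolved address, or None if the name does not resolve."""
    try:
        return resolve(host)
    except OSError:  # socket.gaierror (e.g. NXDOMAIN) is a subclass of OSError
        return None


def brute_force(domain: str, words: Iterable[str],
                resolve: Callable[[str], str] = socket.gethostbyname,
                workers: int = 20) -> dict[str, str]:
    """Resolve <word>.<domain> for every wordlist entry and keep the ones that exist."""
    # Wildcard check: a random label should not resolve. If it does, any
    # candidate resolving to that same address is probably a false positive.
    wildcard_ip = _lookup(f"{secrets.token_hex(8)}.{domain}", resolve)

    candidates = [f"{w.strip().lower()}.{domain}" for w in words if w.strip()]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        addresses = list(pool.map(lambda h: _lookup(h, resolve), candidates))

    return {
        host: ip
        for host, ip in zip(candidates, addresses)
        if ip is not None and ip != wildcard_ip
    }
```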

Ethical Considerations

It's imperative to approach footprinting ethically, respecting privacy and legal boundaries. Ethical hackers and cybersecurity professionals use these techniques for defensive purposes, helping organizations strengthen their security posture. Unethical or malicious use of footprinting techniques can lead to privacy violations, data breaches, and legal consequences.

Significance of Footprinting

Security Assessment: Footprinting provides organizations with insights into how much information is readily available to potential attackers. This information helps them assess their security measures and make necessary improvements.

Vulnerability Identification: Footprinting reveals potential vulnerabilities, allowing organizations to address weaknesses before they are exploited.

Incident Response: In the event of a security incident, having a pre-existing digital footprint of the organization can aid in identifying the scope and nature of the breach.

Competitive Intelligence: In the business world, footprinting can be used to gather information about competitors, their products, and strategies.

Conclusion

In the ever-evolving domain of cybersecurity, understanding and mitigating potential threats begin with comprehensive knowledge, and footprinting is a fundamental part of this process. Footprinting, when conducted responsibly and ethically, unveils the digital trail that can be vital for security professionals and organizations.

To truly harness the power of footprinting and use it for ethical and defensive purposes, individuals and organizations should consider enrolling in an online ethical hacking course. These courses provide structured learning environments, imparting knowledge about not only footprinting but also a broader array of cybersecurity practices. Importantly, they emphasize the ethical responsibility that accompanies this knowledge.

In conclusion, footprinting, when employed ethically and in tandem with an ethical hacking course, becomes a potent tool for safeguarding digital assets. It equips individuals with the skills and mindset needed to proactively defend against cyber threats, ultimately enhancing the security and resilience of our increasingly interconnected digital world.

Scilla - Information Gathering Tool (DNS/Subdomain/Port Enumeration)

Scilla - Information Gathering Tool (DNS/Subdomain/Port Enumeration) #DNSEnumeration #DNSSubdomainPort #Enumeration

Information Gathering Tool – DNS/Subdomain/Port Enumeration

Installation

First of all, clone the repo locally: git clone https://github.com/edoardottt/scilla.git

Scilla has external dependencies, so they need to be pulled in: go get

Working on installation… See the open issue. For now you can run it inside the scilla folder with go run scilla.go ... Too late.. : see this

Then use the build…

#DNS Enumeration#DNSSubdomainPort#Enumeration#Gathering#golang#Information#information Gathering#Information Retrieval#linux#Port Enumeration#Scilla#Subdomain Scanner#tool#windows

#xss#reconDNS#CyberSecurity#SSRF#SQLi#Fuzzing#DNS#OSINT#Recon#Hacking#Bugbounty#Vulnerability#VAPT#Enumeration#Pentesting#Malware#Exploit#Nuclei

Aquatone: by far the best subdomain enumerator I have ever used. I love this toolset, both Aquatone Discover and Aquatone Scan, and I would definitely recommend it if you are a bug bounty hunter! Please note that I used the Nmap Scanme site so as to avoid doing anything illegal, and as you can see it already pulled the nameservers and does some cool stuff like pulling the subdomains along with all their IPs and information. I quite often use this in combination with a very powerful program called Photon, but for some reason I haven't been able to install Docker on Parrot because of a weird error. Will update as soon as I am able to fix this.

#Aquatone#subdomain#url#websites#webdevelopment#vulnerability scanner#dns#hacking tools#nmap#github#hacking#ethical hacking#ip address#enumeration

DNSExplorer: Automates Enumeration of Domains and DNS Servers | #DNS #Domains #Enumeration #Subdomains #Web

The socket module in Python: More than a footgun

Previously: Beginner Problems With TCP & The socket Module in Python, Things To Think About Before You Implement Online Multiplayer

I think I have previously undersold how terrible the socket module in Python is. It's worse. Or rather, what's worse is that often enough, the socket module is all that is available with the "batteries included" with Python 3.X. For anything more, you need to look on PyPI, and there are far too many modules on there that sort of do what you want, but are unmaintained. That is not good for networking, especially on the Internet!

The socket module has a lot of functionality you don't need for Internet applications, and is missing a lot of functionality you do need. Some of that functionality is present with asyncio, but not with socket. This weird feature disparity leads to beginner programmers using asyncio without understanding the use case for asyncio, or without understanding what async does, mixing blocking functions with asyncio.

Even experienced programmers won't be happy with socket. They can see and sidestep some of the pitfalls, but they can't really make productive use of socket on its own. There is no pure-Python way to get your own IP address, network interfaces, or your public IP address. There is no interface for path MTU discovery, and no abstraction layer for handling mixed IPv6/IPv4 connections.

The socket module is not just a footgun. It's little helper gnomes opening your gun safe at night, reloading the footguns you thought safe while you sleep. Even an experienced programmer must treat the socket module as if it can go off at any time!

Solution: A modern Internet-oriented networking module

We need a high-level Internet-oriented networking module as part of Python. Let's call it "networking". It doesn't need any OS-specific networking features, and it doesn't need IPC. It should just have an easy way to open a socket and communicate over the Internet.

You should be able to query things like IPv4/IPv6 support, and stuff like mobile IP or multi-path TCP, but by default, there should just be a simple interface that takes a DNS name, IPv4 address or IPv6 address, and lets you connect.

There should probably be a "do-what-i-mean multicast" option for UDP, with functions to send and receive broadcast and multicast packets, instead of re-implementing the wrangling of IP addresses and subnet masks every time.

There should probably be a way to extend this, by implementing different message-oriented, datagram-oriented, or stream-oriented protocols, such as SSL and ENet as separate modules.

Most importantly, this module would have its equivalent of Java's BufferedReader. I know that this is super easy to implement, but the lack of a BufferedReader is a major stumbling block for beginners, and it pushes those beginners to use asyncio without understanding what that is or how it works. The "networking" module could have async versions of everything in a separate networking.async namespace. I don't think having awaitable and blocking operations available on the same socket/connection object is sensible, it would be another footgun, so this API duplication seems to me to be the safer option.
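The BufferedReader point comes down to one fact: TCP delivers a byte stream, so a single send() on the peer can arrive split across several recv() calls or merged with the next one. The class below is a minimal, blocking sketch of a line-buffered reader over a connected socket. LineReader is a hypothetical name and is not part of any proposed networking module.

```python
import socket
from typing import Optional


class LineReader:
    """Minimal line-buffered reader over a connected stream socket."""

    def __init__(self, sock: socket.socket, delimiter: bytes = b"\n", bufsize: int = 4096):
        self.sock = sock
        self.delimiter = delimiter
        self.bufsize = bufsize
        self.buffer = bytearray()

    def readline(self) -> Optional[bytes]:
        """Return the next delimiter-terminated line, a trailing partial line
        at EOF, or None once the peer has closed and the buffer is empty."""
        while True:
            idx = self.buffer.find(self.delimiter)
            if idx != -1:
                end = idx + len(self.delimiter)
                line = bytes(self.buffer[:end])
                del self.buffer[:end]
                return line
            chunk = self.sock.recv(self.bufsize)  # blocks until data or EOF
            if not chunk:  # peer closed the connection
                if self.buffer:
                    line = bytes(self.buffer)
                    self.buffer.clear()
                    return line
                return None
            self.buffer += chunk
```

For comparison, the standard library's sock.makefile("rb") returns a buffered file object whose readline() does similar buffering. The explicit class just makes the mechanics visible.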

We really need full blocking/async parity. I can't stress this enough. Otherwise you end up in bizarre situations where library users write async code inside sync functions and spin up the event loop for every function call, but then call blocking functions in their async code, because to them, async is just a cumbersome way to call blocking functions.

Solution: Interface/Network/Service Discovery

We need a network discovery module as part of Python, maintained and developed in tandem with the old socket and the new networking. It should allow the user/developer to easily enumerate all available network interfaces, their IP addresses, MTU, IPv4/IPv6 status, connectivity, whether they are metered, and whether their networks are connected to the Internet.

Path MTU discovery and ping might also be useful.

Maybe service discovery protocols like Bonjour could live in this module too, or they could be their own module.

Solution: Dealing with NAT

In an ideal world, we would all be using IPv6, and we would somehow know the worldwide unique IP address of our friend's PC - the device we are trying to communicate with. Our router would know to route packets to that IP to our friend's router, and all would be well.

In the real world we are using NAT and VPNs. Some devices have an IPv4 address, others don't. IPv6 usually doesn't help us connect to a friend's PC to play a game.

We need a module (I propose the name "unfirewall" or "traversal") to allow the user/developer to connect or send packets to a computer behind NAT. This module must set up a connection that is either stream-based or datagram-based, and present the same API as raw sockets created with the networking module, or SSL sockets built on top of it.

Whatever system you are using to connect your stream or datagram socket, whether you go through a SOCKS proxy or a TURN server, or you manage to do NAT hole punching via STUN, it should return a networking object that is mostly indistinguishable from one that was connected through your local LAN.

Solution: Common Data Types

Python needs data types for networking addresses, such as IPv4 addresses, IPv6 addresses, DNS names, IDNA, and so on. The above mentioned modules should accept parameters with those types, in addition to old-fashioned 32-bit unsigned integers for IPv4 addresses.

Solution: Network Reliability Simulation

Once we have an ecosystem of modern networking APIs, it becomes easier to write your own network un-reliability simulator. Dropped connections, uneven transmission speeds, partial reads and writes leading to TCP stream read() not lining up with send() from the other end, dropped UDP packets, a whole second of latency, low bandwidth, all those could be simulated on localhost.

Those changes would turn Python networking from a footgun for beginners into something that still causes cursing and frozen GUI widgets when your WLAN connection gets choppy. There is no software fix for radio signal quality, or for an excavator cutting the fiber optic connection between your town and the rest of the world.

Hawk: a network and pentest utility that I developed so that I could perform different kinds of tasks using the same suite, instead of jumping from one tool to another. Currently, this script can perform a variety of tasks such as ifconfig, ping, traceroute, port scans (SYN, TCP, UDP, ACK and comprehensive scans), host discovery (scan for up devices on a local network), MAC address detection (get the MAC address of a host IP on a local network), banner grabbing, DNS checks (with geolocation information), WHOIS, subdomain enumeration, vulnerability reconnaissance, packet sniffing, MAC spoofing, IP spoofing, SYN flooding, deauth attacks and brute-force attacks (beta). Other features are still being implemented. Future implementations may include WAF detection, DNS enumeration, traffic analysis, an XSS vulnerability scanner, ARP cache poisoning, DNS cache poisoning, MAC flooding, ping of death, network disassociation attacks (not deauth attacks), OSINT, email spoofing, exploits, some automated tasks and others. https://github.com/medpaf/hawk

Web Application Penetration Testing Checklist

Web application penetration testing, or web pen testing, is a way for a business to test its own software by mimicking cyber attacks, so that it can find and fix vulnerabilities before the software is made public. As such, it involves more than simply shaking the doors and rattling the digital windows of your company's online applications. It uses a methodical approach, employing known, commonly used attacks and tools to test web apps for potential vulnerabilities. In the process, it can also uncover programming mistakes and faults, and assess the overall vulnerability of the application to issues such as buffer overflows, input validation flaws, code execution, authentication bypass, SQL injection, CSRF and XSS.

Penetration Types and Testing Stages

Penetration testing can be performed at various points during application development and by various parties including developers, hosts and clients. There are two essential types of web pen testing:

- Internal: Tests are done on the enterprise's network while the app is still relatively secure, and can reveal LAN vulnerabilities and susceptibility to an attack by an employee.

- External: Testing is done from outside, via the Internet, more closely approximating how customers (and hackers) would encounter the app once it is live.

The earlier in the software development cycle that web pen testing begins, the more efficient and cost-effective it will be. Fixing problems while an application is being built, rather than after it's completed and online, saves time, money and potential damage to a company's reputation.

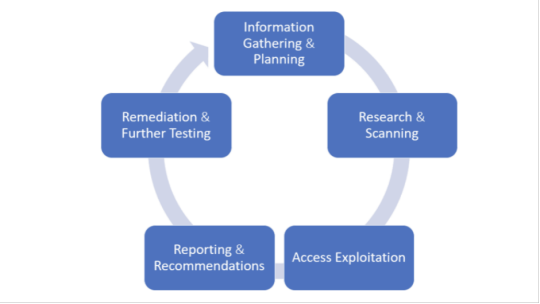

The web pen testing process typically includes five stages:

1. Information Gathering and Planning: This comprises forming goals for testing, such as what systems will be under scrutiny, and gathering further information on the systems that will be hosting the web app.

2. Research and Scanning: Before mimicking an actual attack, a lot can be learned through static analysis of the application's code, which can reveal many vulnerabilities. In addition, a dynamic scan of the application while it is running will reveal any additional weaknesses.

3. Access and Exploitation: Using a standard array of hacking attacks, ranging from SQL injection to password cracking, this part of the test tries to exploit any vulnerabilities found and uses them to determine whether information can be stolen or unauthorized access can be gained to other systems.

4. Reporting and Recommendations: At this stage a thorough analysis is done to reveal the type and severity of the vulnerabilities, the kind of data that might have been exposed and whether there is a compromise in authentication and authorization.

5. Remediation and Further Testing: Before the application is launched, patches and fixes need to be made to eliminate the detected vulnerabilities, and additional pen tests should be performed to confirm that all loopholes are closed.

Information Gathering

1. Retrieve and analyze the robots.txt file, for example with GNU Wget.

2. Identify software versions, database details and other technical components, and look for bugs, by requesting invalid pages and examining the error messages and codes that come back.

3. Use techniques such as reverse DNS queries, DNS zone transfers and web-based DNS searches.

4. Perform directory-style searching and vulnerability scanning, and probe for URLs, using tools such as Nmap and Nessus.

5. Identify the application's entry points using Burp Proxy, OWASP ZAP, TamperIE, WebScarab and Tamper Data.

6. Perform TCP/ICMP and service fingerprinting with traditional fingerprinting tools such as Nmap and Amap.

7. Test for recognized file types, extensions and directories by requesting common file extensions such as .ASP, .EXE, .HTML and .PHP.

8. Examine the source code of the pages served by the application front end.

9. Social media platforms often help with information gathering, too. GitHub links and domain name searches can also reveal more about the target, and OSINT tools can provide a lot of information on it.
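For item 1, once robots.txt has been fetched (with `wget` as suggested, or with Python's `urllib.request.urlopen`), the interesting part is usually the list of `Disallow` paths, since they point at areas the site owner would rather crawlers skip. A minimal parser sketch follows; `disallowed_paths` is a hypothetical helper, and it deliberately ignores which `User-agent` group each rule belongs to. The standard library's `urllib.robotparser` answers "may I fetch this URL?" rather than listing the paths, which is why the parsing is done by hand here.

```python
def disallowed_paths(robots_txt):
    """Collect every Disallow path from a robots.txt body, in order and
    without duplicates. robots.txt is advisory, not access control, so
    these paths are worth a manual look during reconnaissance."""
    paths = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()   # drop comments and whitespace
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow":
            value = value.strip()
            # an empty Disallow means "allow everything", so skip it
            if value and value not in paths:
                paths.append(value)
    return paths


sample = """User-agent: *
Disallow: /admin/
Disallow: /backup/  # old dumps
Disallow:
User-agent: Googlebot
Disallow: /admin/
"""
print(disallowed_paths(sample))  # ['/admin/', '/backup/']
```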

Authentication Testing

1. Check whether a session can be reused after logout, and verify the user session idle timeout.

2. Verify whether any sensitive information remains stored in the browser cache or storage.

3. Try to reset the password by cracking or guessing the secret questions, for example through social engineering.

4. Verify whether a “Remember my password” mechanism is implemented by checking the HTML code of the login page.

5. Check whether hardware devices communicate directly and independently with the authentication infrastructure over an additional communication channel.

6. Test CAPTCHA for authentication vulnerabilities.

7. Verify whether any weak security questions or answers are used.

8. A successful SQL injection could lead to a loss of customer trust, and attackers can steal PII such as phone numbers, addresses and credit card details. A web application firewall can help filter malicious SQL queries out of the traffic.
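Item 8 mentions a WAF as a filter. The usual first line of defense, though, is to keep user input out of the SQL text entirely with parameterized queries, a technique the checklist doesn't name and which is added here for illustration. The self-contained sketch below uses an in-memory SQLite database: the classic `' OR '1'='1` payload bypasses the vulnerable login, while the parameterized version rejects it. The table and function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")


def login_vulnerable(name, password):
    # BAD: user input is pasted into the SQL text, so quotes in the input
    # change the structure of the query itself.
    query = (f"SELECT COUNT(*) FROM users "
             f"WHERE name = '{name}' AND password = '{password}'")
    return conn.execute(query).fetchone()[0] > 0


def login_parameterized(name, password):
    # GOOD: ? placeholders pass input as data; it never becomes SQL syntax.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0


payload = "' OR '1'='1"
# Vulnerable query becomes: ... AND password = '' OR '1'='1', which is always true
print(login_vulnerable("alice", payload))     # True  (authentication bypassed)
print(login_parameterized("alice", payload))  # False (payload treated as a literal)
```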

Authorization Testing

1. Test whether roles and privileges can be manipulated to access resources.

2. Test for path traversal by enumerating input vectors and analyzing the input validation functions in the web application.

3. Test for cookie and parameter tampering using web spider tools.

4. Test for HTTP request tampering and check whether it grants unauthorized access to restricted resources.
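For the path traversal test in item 2, it helps to know what correct input validation looks like on the server side. The sketch below assumes a hypothetical handler that serves files from a base directory. It URL-decodes the user-supplied path, resolves it with `os.path.realpath`, and rejects anything that ends up outside the base directory. The payload list is a small illustrative sample, not a complete test set.

```python
import os
import tempfile
from urllib.parse import unquote


def resolve_safely(base_dir, user_path):
    """Hypothetical server-side check: map a user-supplied path to a file
    inside base_dir, or raise ValueError if it would escape base_dir."""
    decoded = unquote(user_path)   # decode %2f etc. BEFORE checking
    base = os.path.realpath(base_dir)
    # realpath collapses ../ segments and resolves symlinks
    target = os.path.realpath(os.path.join(base, decoded))
    if os.path.commonpath([base, target]) != base:
        raise ValueError(f"path traversal attempt: {user_path!r}")
    return target


PAYLOADS = [
    "../../etc/passwd",        # classic dot-dot-slash
    "..%2f..%2fetc/passwd",    # URL-encoded slashes
    "/etc/passwd",             # an absolute path overrides the base in os.path.join
    "reports/2023.pdf",        # legitimate request
]

results = {}
with tempfile.TemporaryDirectory() as base_dir:
    for payload in PAYLOADS:
        try:
            resolve_safely(base_dir, payload)
            results[payload] = "allowed"
        except ValueError:
            results[payload] = "blocked"
print(results)
```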

Configuration Management Testing

1. Check file directories and enumerate files, review the server and application documentation, and check the application's admin interfaces.

2. Analyze the web server banner and perform network scanning.

3. Check for old documentation, backups and referenced files that may expose source code, passwords or installation paths.

4. Verify the ports associated with SSL/TLS services using Nmap and Nessus.

5. Review the OPTIONS HTTP method using Netcat and Telnet.

6. Test the allowed HTTP methods, and check for cross-site tracing (XST), which can expose the credentials of legitimate users.

7. Perform application configuration management testing by reviewing the source code, log files and default error codes for exposed information.
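Items 5 and 6 can also be scripted. The sketch below sends the same `OPTIONS` request you would type by hand into Netcat or Telnet, then flags risky methods in the `Allow` header. A `TRACE` entry is the precondition for cross-site tracing (XST). To stay self-contained, the sketch runs against a hypothetical local demo server built with `http.server`; against a real target you would pass its host and port instead. Servers may omit or misreport the `Allow` header, so a listed method should still be confirmed by actually sending it.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in target that advertises its methods."""

    def do_OPTIONS(self):
        self.send_response(200)
        self.send_header("Allow", "GET, HEAD, POST, OPTIONS, TRACE")
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, *args):  # keep the demo output quiet
        pass


RISKY = {"PUT", "DELETE", "TRACE", "CONNECT"}


def allowed_methods(host, port, path="/"):
    """Send an OPTIONS request and return the methods in the Allow header."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("OPTIONS", path)
        resp = conn.getresponse()
        resp.read()
        allow = resp.getheader("Allow", "")
    finally:
        conn.close()
    return {m.strip().upper() for m in allow.split(",") if m.strip()}


server = HTTPServer(("127.0.0.1", 0), DemoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
methods = allowed_methods("127.0.0.1", server.server_address[1])
server.shutdown()
server.server_close()
print(sorted(methods & RISKY))  # ['TRACE'], which enables XST
```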

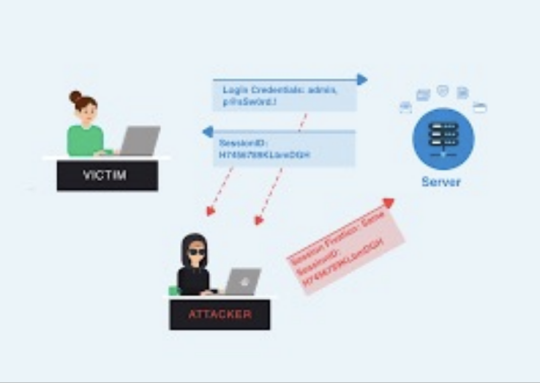

Session Management Testing

1. Check the URLs in restricted areas to test for CSRF (cross-site request forgery).

2. Test for exposed session variables by inspecting session token encryption and reuse, proxies and caching.

3. Collect a sufficient number of cookie samples, analyze the algorithm used to generate them, and try to forge a valid cookie to perform an attack.

4. Test the cookie attributes using intercepting proxies such as Burp Proxy and OWASP ZAP, or traffic-intercepting tools such as Tamper Data.

5. Test for session fixation, which attackers can use to steal a user's session (session hijacking).
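For item 4, once an intercepting proxy has captured a `Set-Cookie` header, checking its attributes is easy to automate. The sketch below uses `http.cookies.SimpleCookie`; the `samesite` attribute requires Python 3.8 or later. `audit_set_cookie` is a hypothetical helper, and a missing attribute is a finding to investigate rather than proof of a vulnerability.

```python
from http.cookies import SimpleCookie


def audit_set_cookie(header_value):
    """Parse one Set-Cookie header value and list, per cookie, which
    hardening attributes (Secure, HttpOnly, SameSite) are missing."""
    jar = SimpleCookie()
    jar.load(header_value)
    findings = {}
    for name, morsel in jar.items():
        missing = []
        if not morsel["secure"]:      # unset attributes default to ""
            missing.append("Secure")
        if not morsel["httponly"]:
            missing.append("HttpOnly")
        if not morsel["samesite"]:
            missing.append("SameSite")
        findings[name] = missing
    return findings


print(audit_set_cookie("sessionid=abc123; Path=/; HttpOnly"))
# {'sessionid': ['Secure', 'SameSite']}
print(audit_set_cookie("sessionid=abc123; Path=/; Secure; HttpOnly; SameSite=Strict"))
# {'sessionid': []}
```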

Data Validation Testing

1. Analyze the source code for JavaScript coding errors.

2. Perform UNION query SQL injection testing, standard SQL injection testing and blind SQL injection testing, using tools such as sqlninja, sqldumper and SQL Power Injector.

3. Analyze the HTML code, and test for and try to leverage stored XSS, using tools such as XSS Proxy, Backframe, Burp Proxy, OWASP ZAP and XSS Assistant.

4. Perform LDAP injection testing for sensitive information about users and hosts.

5. Perform IMAP/SMTP injection testing to gain access to the back-end mail server.

6. Perform XPath injection testing to access confidential information.

7. Perform XML injection testing to learn about the XML structure.

8. Perform code injection testing to identify input validation errors.

9. Perform buffer overflow testing against stack and heap memory and the application's control flow.

10. Test for HTTP splitting and smuggling affecting cookies and HTTP redirect information.
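For the XSS tests in item 3, a common manual technique is to submit a unique probe string and then check how it comes back in the page. The sketch below classifies a response body as raw reflection (likely XSS), HTML-encoded reflection, or no reflection, and shows `html.escape` as the output-encoding fix. The two render functions are hypothetical stand-ins for the application under test. For stored XSS, you would run the check on the page that later displays the stored data.

```python
import html

PROBE = '<script>alert("xss-probe-1337")</script>'


def reflection_status(response_body, probe=PROBE):
    """Classify how a probe string came back in an HTTP response body."""
    if probe in response_body:
        return "reflected raw (likely XSS)"
    if html.escape(probe) in response_body:
        return "reflected but HTML-encoded"
    return "not reflected"


# Hypothetical page renderers standing in for the application under test.
def render_comment_unsafe(comment):
    return f"<div class='comment'>{comment}</div>"


def render_comment_safe(comment):
    # html.escape converts & < > " ' into entities, so markup stays inert text
    return f"<div class='comment'>{html.escape(comment)}</div>"


print(reflection_status(render_comment_unsafe(PROBE)))  # reflected raw (likely XSS)
print(reflection_status(render_comment_safe(PROBE)))    # reflected but HTML-encoded
```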

Denial of Service Testing

1. Send a large number of requests that perform database operations, and watch for slowdowns and error messages. A continuous ping can also serve this purpose, and a script that opens browsers in an indefinite loop can help mimic a DDoS attack scenario.

2. Perform manual source code analysis and submit inputs of varying lengths to the application.

3. Test for SQL wildcard attacks. Enterprise networks should choose effective DDoS prevention services to protect their networks.

4. Test user-specified object allocation to find the maximum number of objects the application can handle.

5. Enter an extremely large number into any input field the application uses as a loop counter. To protect the website from future attacks, also estimate your company's DDoS downtime cost.

6. Use a script to automatically submit extremely long values that the server will write to its logs.
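Items 2 and 5 amount to feeding the application inputs of growing size and watching how its cost scales. The minimal timing sketch below assumes the handler under test can be called as a Python function; against a live application, the call would be replaced by an HTTP request. `quadratic_handler` is a deliberately slow, hypothetical example: doubling the input should roughly quadruple its time, and that kind of super-linear growth is the signal worth reporting.

```python
import time


def time_by_length(handler, lengths, char="A", repeats=3):
    """Call handler with inputs of increasing length and record the best
    wall-clock time for each length (best-of-N reduces timing noise)."""
    results = []
    for n in lengths:
        payload = char * n
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            handler(payload)
            best = min(best, time.perf_counter() - start)
        results.append((n, best))
    return results


def quadratic_handler(text):
    """Hypothetical vulnerable handler: compares every character with every
    earlier one, so its cost grows with the square of the input length."""
    matches = 0
    for i in range(len(text)):
        for j in range(i):
            if text[i] == text[j]:
                matches += 1
    return matches


timings = time_by_length(quadratic_handler, [200, 400, 800, 1600])
for (n1, t1), (n2, t2) in zip(timings, timings[1:]):
    # For linear code this ratio stays near 2; near 4 suggests quadratic cost
    print(f"{n1} -> {n2} chars: time x{t2 / max(t1, 1e-9):.1f}")
```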

Conclusion:

Web applications present a unique and potentially vulnerable target for cyber criminals. The goal of most web apps is to make services and products accessible to customers and employees, but it is critical that web applications do not make it easier for criminals to break into systems. Planning properly on the basis of the information gathered, and executing the tests over multiple iterations, will greatly reduce vulnerabilities and risk.
