#Data analysis software


Text

Data Analysis: Turning Information into Insight

In today’s digital age, data has become a vital asset for businesses, researchers, governments, and individuals alike. However, raw data on its own holds little value until it is interpreted and understood. This is where data analysis comes into play. Data analysis is the systematic process of inspecting, cleaning, transforming, and modeling data with the objective of discovering useful information, drawing conclusions, and supporting decision-making.


What is Data Analysis?

At its core, data analysis involves extracting meaningful insights from datasets. These datasets can range from small, structured spreadsheets to large, unstructured data lakes. The primary aim is to make sense of data in order to answer questions, solve problems, or identify trends and patterns that are not immediately apparent.

Data analysis is used in virtually every industry, from healthcare and finance to marketing and education. It enables organizations to make evidence-based decisions, improve operational efficiency, and gain competitive advantages.

Types of Data Analysis

There are several types of data analysis, each serving a unique purpose:

1. Descriptive Analysis

Descriptive analysis answers the question: “What happened?” It summarizes raw data into digestible formats like averages, percentages, or counts. For instance, a store might analyze last month’s sales to determine which products performed best.
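As a rough illustration of descriptive analysis, the sketch below groups a handful of made-up sales records by product and reports counts, totals, and averages. The records, the product names, and the `summarize` helper are all hypothetical; in practice a spreadsheet pivot table or a library such as pandas would do the same job.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records for last month: (product, units_sold)
sales = [("mug", 12), ("mug", 8), ("poster", 5), ("poster", 7), ("sticker", 30)]

def summarize(records):
    """Return count, total, and average units sold for each product."""
    grouped = defaultdict(list)
    for product, units in records:
        grouped[product].append(units)
    return {
        product: {"count": len(units), "total": sum(units), "average": mean(units)}
        for product, units in grouped.items()
    }

summary = summarize(sales)
# The product with the highest total answers "what sold best last month?"
best_seller = max(summary, key=lambda product: summary[product]["total"])
```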

2. Diagnostic Analysis

This type of analysis explores the reasons behind past outcomes. It answers: “Why did it happen?” For example, if a company sees a sudden drop in website traffic, diagnostic analysis can help pinpoint whether it was due to a technical issue, changes in SEO ranking, or competitor actions.

3. Predictive Analysis

Predictive analysis uses historical data to forecast future outcomes. It answers: “What is likely to happen?” This involves statistical models and machine learning algorithms to identify patterns and predict future trends, such as customer churn or product demand.
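To make the idea of forecasting from history concrete, here is a minimal sketch that fits a straight-line trend by ordinary least squares and extrapolates one period ahead. The demand figures and the `fit_line` and `forecast` names are invented for illustration, and a linear trend is only one of many possible models.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def forecast(xs, ys, next_x):
    """Extrapolate the fitted trend to a future period."""
    slope, intercept = fit_line(xs, ys)
    return slope * next_x + intercept

# Hypothetical monthly demand for months 1 to 4
months = [1, 2, 3, 4]
demand = [10, 12, 14, 16]
next_month_demand = forecast(months, demand, 5)
```

Real demand rarely follows a perfect line, so a forecast like this should be checked against data the model has not seen before anyone relies on it.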

4. Prescriptive Analysis

Prescriptive analysis provides recommendations based on data. It answers: “What should we do?” This is the most advanced type of analysis and often combines insights from predictive analysis with optimization and simulation techniques to guide decision-making.

The Data Analysis Process

The process of data analysis typically follows these steps:

1. Define the Objective

Before diving into data, it’s essential to clearly understand the question or problem at hand. A well-defined objective guides the entire analysis and ensures that efforts are aligned with the desired outcome.

2. Collect Data

Data can come from various sources, including databases, surveys, sensors, APIs, or social media. It’s important to ensure that the data is relevant, timely, and of sufficient quality.

3. Clean and Prepare Data

Raw data is often messy: it may contain missing values, duplicates, inconsistencies, or errors. Data cleaning involves addressing these problems. Preparation may include formatting, normalization, or creating new variables.
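The cleaning step can be sketched in a few lines. The example below assumes a small list of customer records (the field names and values are made up) and shows one possible policy: drop rows with a missing age, normalize text and types, then remove duplicates that only become visible after normalization.

```python
def clean(rows):
    """Drop rows with a missing age, normalize fields, and remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        if row.get("age") is None:
            continue  # missing value: dropped here, though imputing is another option
        normalized = {
            "name": row["name"].strip(),
            "age": int(row["age"]),
            "city": row["city"].strip().title(),
        }
        key = tuple(sorted(normalized.items()))
        if key in seen:
            continue  # duplicate once formatting differences are removed
        seen.add(key)
        cleaned.append(normalized)
    return cleaned

# Hypothetical raw records with inconsistent formatting
raw = [
    {"name": "Ana", "age": "34", "city": " london"},
    {"name": "Ana ", "age": 34, "city": "London"},
    {"name": "Ben", "age": None, "city": "Leeds"},
    {"name": "Cy", "age": "41", "city": "YORK"},
]
cleaned = clean(raw)
```

Whether to drop or fill in missing values is a judgment call that depends on the dataset, so whichever rule is chosen should be written down alongside the analysis.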

4. Analyze the Data

Tools like Excel, SQL, Python, R, or specialized software such as Tableau, Power BI, and SAS are commonly used.

5. Interpret Results

Analysis isn't just about numbers; it’s about meaning. Interpreting results involves drawing conclusions, explaining findings, and linking insights back to the original objective.

6. Communicate Findings

Insights must be communicated effectively to stakeholders. Visualization tools such as charts, graphs, dashboards, and reports play a vital role in telling the story behind the data.

7. Make Decisions and Take Action

The ultimate aim of data analysis is to inform decisions. Whether it’s optimizing a marketing campaign, improving customer service, or refining a product, actionable insights turn data into real-world results.

Tools and Technologies for Data Analysis

A wide variety of tools is available for data analysis, each suited to different tasks and skill levels:

Excel: Great for small datasets and quick analysis. Offers functions, pivot tables, and charts.

Python: Powerful for complex data manipulation and modeling. Popular libraries include pandas, NumPy, Matplotlib, and scikit-learn.

R: A statistical programming language widely used for statistical analysis and data visualization.

SQL: Essential for querying and managing data stored in relational databases.

Tableau & Power BI: User-friendly business intelligence tools that turn data into interactive visualizations and dashboards.

Applications of Data Analysis

Healthcare: Analyzing patient data to improve treatment plans, predict outbreaks, and manage resources.

Finance: Detecting fraud, managing risk, and guiding investment strategies.

Retail: Personalizing marketing campaigns, managing inventory, and optimizing pricing.

Sports: Enhancing performance through player statistics and game analysis.

Public Policy: Informing decisions on education, transportation, and economic development.

Challenges in Data Analysis

Data Quality: Incomplete, outdated, or incorrect data can lead to misleading conclusions.

Data Privacy: Handling sensitive data requires strict adherence to privacy regulations like GDPR.

Skill Gaps: There's a growing demand for skilled data analysts who can interpret complex data sets.

Integration: Combining data from disparate sources can be technically challenging.

Bias and Misinterpretation: Poorly designed analysis can introduce bias or lead to wrong assumptions.

The Future of Data Analysis

As data continues to grow exponentially, the field of data analysis is evolving rapidly. Emerging trends include:

Artificial Intelligence (AI) & Machine Learning: Automating analysis and producing predictive models at scale.

Real-Time Analytics: Enabling decisions based on live data streams for faster response.

Data Democratization: Making data accessible and understandable to everyone in an organization.


Text

Understanding the differences between data science, data analysis, and data engineering is essential for making the right choices when building your data strategy. Each role brings different benefits, and it is crucial to understand which one your business needs and when.

#data engineer vs data scientist#data engineering vs data science#data analyst vs data engineer#data scientist vs data analyst#data analysis software#data engineering services


Text

Is Your Analytics Software Lying to You? How to Spot and Correct Data Bias

Data bias occurs when certain elements within a dataset influence the outcomes in a way that misrepresents reality. This can happen at various stages of data collection, processing, and analysis. To keep corporate analytics tools reliable, it is crucial that everyone who works with data understands the need to identify and remove data bias.

Types of Data Bias

Understanding the different types of data bias helps in identifying potential pitfalls in your data analysis software. Here are some common types:

Sampling Bias: Sampling bias arises when the sample data used for analysis is not representative of the entire population. This can lead to overgeneralization and inaccurate conclusions. For instance, if a business analytics software only analyzes data from urban customers, it may not accurately reflect the preferences of rural customers.

Measurement Bias: Measurement bias occurs when there are systematic errors in data collection methods. This could be due to faulty sensors, inaccurate recording, or biased survey questions. Ensuring your data analysis software can detect and correct such errors is crucial for accurate insights.

Confirmation Bias: Confirmation bias happens when data is interpreted in a way that confirms pre-existing beliefs. Analysts might unconsciously select data that supports their hypotheses while ignoring contradicting information. Top data analytics software in the UK must incorporate features that promote objective data interpretation.

Algorithmic Bias: Algorithmic bias is introduced by the algorithms themselves, often due to biased training data or flawed algorithm design. Business analytics tools should be regularly audited to ensure that they do not perpetuate or exacerbate existing biases.

Common Sources of Data Bias in Analytics Software

Leveraging accurate and reliable insights is essential for informed decision-making. However, even the most advanced data analysis software can fall prey to data bias, leading to skewed insights and suboptimal strategies. Understanding the common sources of data bias in analytics software is crucial for business users, data analysts, and BI professionals to ensure the integrity of their analyses.

1. Data Collection Methods

Inadequate Sampling: Data bias often begins at the data collection stage. If the sample data is not representative of the entire population, the insights generated by your data analysis software will be biased. For instance, if a retail company collects customer feedback only from its online store, it may miss insights from in-store customers, leading to an incomplete understanding of customer satisfaction. This can result in business analytics tools making recommendations that favor online shoppers, while neglecting the needs and preferences of in-store customers.

Selective Reporting: Selective reporting happens when only certain types of data are collected or reported, while others are ignored. This can result in a skewed dataset that doesn't reflect the true picture. For example, a business might focus on positive customer reviews while neglecting negative feedback, leading to an overly optimistic view of customer satisfaction. This bias can lead to misinformed business decisions and missed opportunities for improvement.

Mitigation Strategies:

Ensure diverse and comprehensive data collection methods.

Use stratified sampling techniques to capture a more representative sample.

Regularly review and update data collection processes to reflect changes in the population.
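The stratified sampling suggestion above can be sketched as follows. This is a minimal illustration with a made-up customer list: `stratified_sample`, the `region` field, and the 80/20 urban-to-rural split are assumptions, not part of any particular analytics product.

```python
import random
from collections import defaultdict

def stratified_sample(records, key, fraction, seed=0):
    """Sample the same fraction from every stratum so small groups are not missed."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    strata = defaultdict(list)
    for record in records:
        strata[key(record)].append(record)
    sample = []
    for group in strata.values():
        k = max(1, round(len(group) * fraction))  # at least one record per stratum
        sample.extend(rng.sample(group, k))
    return sample

# Hypothetical population: 80 urban and 20 rural customers
customers = [{"id": i, "region": "urban"} for i in range(80)]
customers += [{"id": i, "region": "rural"} for i in range(80, 100)]

sample = stratified_sample(customers, key=lambda c: c["region"], fraction=0.1)
urban_count = sum(1 for c in sample if c["region"] == "urban")
rural_count = sum(1 for c in sample if c["region"] == "rural")
```

A plain random sample of ten customers could easily contain no rural customers at all; sampling within each stratum guarantees that both groups appear in proportion.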

2. Data Processing Errors

Data Cleaning Bias: Data cleaning is essential for ensuring data quality, but it can also introduce bias. For instance, if outliers are removed without proper justification, valuable insights may be lost. Similarly, if certain data points are consistently corrected or modified based on assumptions, this can skew the results. This is particularly important when using top data analytics software in the UK, as maintaining data integrity is crucial for accurate insights.

Algorithmic Bias: Bias can be introduced during the data processing phase through flawed algorithms. If the algorithms used by your business analytics tools are not designed to handle bias, they can perpetuate existing biases in the data. This is especially problematic with machine learning algorithms that learn from historical data, which may contain inherent biases.

Mitigation Strategies:

Implement robust data cleaning protocols that are transparent and justified.

Regularly audit algorithms to ensure they are free from bias.

Use advanced data analysis software with built-in bias detection and correction features.
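One way to keep outlier handling transparent, as the first strategy recommends, is to flag suspicious values with an explicit, documented rule instead of deleting them silently. The sketch below uses the common 1.5 × IQR rule; the readings and the `flag_outliers` helper are hypothetical, and other rules may suit other datasets better.

```python
from statistics import quantiles

def flag_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] without removing them."""
    q1, _, q3 = quantiles(values, n=4)  # three cut points: Q1, median, Q3
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical sensor readings with one suspicious value
readings = [10, 12, 11, 13, 12, 11, 95]
flagged = flag_outliers(readings)
```

The flagged values can then be reviewed and the decision to keep, correct, or drop each one recorded, which is what makes the cleaning protocol auditable.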

3. User Inputs and Assumptions

Analyst Bias: Human biases can significantly impact data analysis. Analysts may have preconceived notions or expectations that influence how they interpret data. If an analyst has a hypothesis, they may hunt for evidence that backs it up and ignore evidence that challenges it. This can lead to biased conclusions and suboptimal decision-making.

Incorrect Assumptions: Bias can also arise from incorrect assumptions made during data analysis. For instance, assuming that a correlation implies causation can lead to faulty conclusions. Business analytics software must be designed to help users question and validate their assumptions.

Mitigation Strategies:

Encourage objective data interpretation through training and awareness programs.

Use business analytics tools that provide features for validating assumptions and promoting critical thinking.

Foster a culture of transparency and peer review to minimize individual biases.

4. Data Integration Issues

Inconsistent Data Sources: When integrating data from multiple sources, inconsistencies can introduce bias. Different data sources may have varying levels of quality, completeness, and accuracy. These inconsistencies can lead to biased results if not properly managed.

Data Silos: Data silos occur when different departments or systems within an organization do not share data. This can result in an incomplete view of the business, leading to biased insights. Top data analytics software in the UK should facilitate seamless data integration to provide a holistic view.

Mitigation Strategies:

Standardize data formats and quality checks across all data sources.

Adopt business analytics software that supports smooth integration and harmonization of data.

To eliminate silos, encourage cross-departmental cooperation and data sharing.

What Are the Warning Signs of Data Bias to Watch For?

Unexpected or Inconsistent Results

One of the most apparent warning signs of data bias is when your data analysis software produces results that deviate significantly from expectations or show inconsistencies across different datasets. For example, suppose a business uses sales data from different regions to forecast future performance. If the data analysis software consistently overestimates sales in one region while underestimating in another, it may indicate a bias in the data collection or processing methods.

Inconsistent results can lead to misinformed business decisions, such as over-investing in underperforming regions or neglecting high-potential areas, ultimately affecting overall business strategy and resource allocation. To mitigate this, businesses should conduct regular audits of their data sources and analysis processes, use advanced business analytics tools to cross-validate results with external benchmarks, and implement robust error-checking mechanisms to identify and correct anomalies.
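A simple check for the regional pattern described above is to compute the average signed forecast error per region. The sketch assumes rows of (region, forecast, actual) with invented figures; a persistently positive mean suggests overestimation and a persistently negative one suggests underestimation.

```python
from collections import defaultdict
from statistics import mean

def mean_signed_error(rows):
    """Average of (forecast - actual) per region; the sign shows the direction of bias."""
    errors = defaultdict(list)
    for region, forecast, actual in rows:
        errors[region].append(forecast - actual)
    return {region: mean(values) for region, values in errors.items()}

# Hypothetical forecast history: (region, forecast, actual)
history = [
    ("north", 120, 100),
    ("north", 130, 110),
    ("south", 90, 100),
    ("south", 80, 95),
]
bias_by_region = mean_signed_error(history)
```

Random forecasting errors tend to cancel out over time, so a mean that stays well away from zero in one region is a reason to inspect how that region's data is collected and processed.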

Over-reliance on Certain Data Sources

Bias can also arise from relying too heavily on specific data sources without considering others, leading to a narrow view that doesn't accurately reflect the broader reality. For instance, a company may use only online customer reviews to gauge overall satisfaction, ignoring feedback from other channels like in-store surveys or customer service interactions. This selective data use can skew the insights, resulting in a biased understanding of customer preferences and behavior, and leading to misguided marketing strategies and product development. To prevent this, businesses should integrate multiple data sources to provide a holistic view, use top data analytics software in the UK that offers seamless data integration capabilities, and regularly review and update data sources to ensure diversity and comprehensiveness.

Lack of Diversity in Data Inputs

When the data inputs used for analysis lack diversity in terms of geography, demographics, or other factors, the resulting insights may not be generalizable or accurate. For example, a business might collect data predominantly from urban areas, neglecting rural regions, which can lead to biased insights that do not accurately represent the entire market. A lack of diverse data inputs can result in a limited understanding of the market, leading to strategies that do not resonate with all customer segments. To address this, businesses should ensure data collection methods capture diverse and representative samples, use business analytics tools that can handle and analyze diverse data sets effectively, and implement stratified sampling techniques to ensure representation across different segments.

Consistently Favorable or Unfavorable Results

If your business analytics software consistently produces overly favorable or unfavorable results, it may indicate a bias in the data or analysis process. For instance, if a performance evaluation tool always shows exceptionally high ratings for certain employees or departments, it might indicate bias in the evaluation criteria or data entry process.

Consistently biased results can lead to complacency, overlooked issues, or unjustified investments, and can erode trust in the data analysis software and the decisions based on its insights. To mitigate this, businesses should use unbiased evaluation criteria and ensure transparency in data entry processes, regularly validate results against independent data sources or benchmarks, and implement checks and balances to ensure fairness and objectivity in the analysis.

Significant Deviations from External Benchmarks

Another warning sign of data bias is when your analysis results significantly deviate from external benchmarks or industry standards. For example, if market trend analyses generated by your data analysis software differ drastically from industry reports, it may indicate bias in your data or analytical methods.

Relying on biased insights can lead to strategies that are out of sync with industry trends, putting the business at a competitive disadvantage. To address this, businesses should cross-validate internal analysis results with external benchmarks and industry reports, use business analytics tools that offer comprehensive benchmarking features, and adjust data collection and analysis methods to align with industry standards.

Here Are Some Ways to Correct Data Bias

Improving Data Collection

The first step in correcting data bias is addressing issues at the source – data collection. Ensuring that your data collection methods are inclusive and representative is paramount. For example, if your data predominantly comes from urban areas, it is essential to incorporate rural data to get a comprehensive market view. Using diverse sampling techniques can help capture a wide range of perspectives, making your dataset more representative.

Advanced data analysis software can assist in this process by offering features that ensure diverse and comprehensive data collection. Tools that facilitate the integration of various data sources, such as surveys, customer feedback, and transactional data, can help create a more balanced dataset. This approach is crucial for top data analytics software in the UK, where businesses often need to integrate data from multiple sources to ensure accuracy and comprehensiveness.

Algorithmic Adjustments

Bias can also be introduced during the data processing phase through flawed algorithms. Regularly updating and testing algorithms to ensure they are free from bias is crucial. This involves using bias mitigation algorithms designed to identify and correct biases within the data. These algorithms can adjust for known biases, ensuring that the insights generated are accurate and reliable.

For instance, machine learning algorithms should be trained on diverse and representative datasets to avoid perpetuating existing biases. Business analytics tools equipped with advanced machine learning capabilities can automatically detect and correct biases, enhancing the reliability of the results. Regular audits of these algorithms are essential to maintain their accuracy and effectiveness.
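As one concrete example of a bias adjustment, under-represented groups can be given larger weights so that each group contributes equally to a model or summary. The sketch below shows this reweighting idea with an invented 80/20 split; `balancing_weights` is a hypothetical helper, and reweighting is only one of several mitigation techniques, not a description of how any specific product works.

```python
from collections import Counter

def balancing_weights(groups):
    """Weight each record so every group carries the same total weight."""
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return {group: total / (n_groups * count) for group, count in counts.items()}

# Hypothetical training set dominated by urban customers
groups = ["urban"] * 80 + ["rural"] * 20
weights = balancing_weights(groups)

# Total weight per group after reweighting
urban_total = weights["urban"] * 80
rural_total = weights["rural"] * 20
```

Weights like these can typically be passed to a training routine as per-sample weights, though it is still worth checking the model's results separately for each group.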

Enhancing User Training

Human biases can significantly impact data analysis, leading to skewed insights. Educating users on the importance of unbiased data analysis and promoting data literacy can help mitigate this risk. Training programs should focus on helping users understand how to interpret data objectively, avoiding the pitfalls of confirmation bias and other cognitive biases.

Using business analytics software that offers user-friendly interfaces and comprehensive training modules can enhance user competency in data analysis. These tools can guide users through the process of identifying and correcting biases, ensuring that the insights generated are accurate and actionable. Top data analytics software in the UK often includes these features, providing users with the necessary tools to perform unbiased analysis effectively.

Implementing Continuous Monitoring

Continuous monitoring and regular audits of your data sources and analysis processes are essential to ensure ongoing accuracy. Implementing ongoing bias detection mechanisms can help identify and correct biases as they arise. This proactive approach ensures that your data remains accurate and reliable over time.

Business analytics tools with robust monitoring capabilities can automatically flag potential biases, allowing for timely interventions. These tools can provide real-time insights into the quality and reliability of your data, helping you maintain the integrity of your analysis. By integrating these features, you can ensure that your business analytics software consistently delivers accurate and reliable insights.
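A monitoring check of this kind can be as simple as comparing the make-up of each new batch of data against a baseline and flagging groups whose share has shifted. The sketch below is an assumption-laden illustration: the `share_drift` name, the 10-point threshold, and the sample batches are all made up.

```python
from collections import Counter

def share_drift(baseline, current, threshold=0.10):
    """Flag groups whose share of records changed by more than `threshold`."""
    def shares(values):
        counts = Counter(values)
        return {group: count / len(values) for group, count in counts.items()}

    base, cur = shares(baseline), shares(current)
    flagged = {}
    for group in set(base) | set(cur):
        change = cur.get(group, 0.0) - base.get(group, 0.0)
        if abs(change) > threshold:
            flagged[group] = change
    return flagged

# Hypothetical batches: rural records fall from 30% to 10% of the data
baseline_batch = ["urban"] * 70 + ["rural"] * 30
current_batch = ["urban"] * 90 + ["rural"] * 10
drift = share_drift(baseline_batch, current_batch)
```

A flag does not prove that the data is biased, but it is a prompt to find out why the composition changed before trusting the latest insights.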

Promoting Data Integration

Data silos can lead to incomplete and biased insights, as different departments or systems may not share data effectively. Promoting data integration across the organization is essential to provide a comprehensive view of the business. Business analytics tools that facilitate seamless data integration can help break down these silos, ensuring that all relevant data is considered in the analysis.

Top data analytics software in the UK often includes advanced data integration features, allowing businesses to combine data from various sources seamlessly. By using these tools, you can ensure that your data analysis software provides a holistic view of the business, enhancing the accuracy and reliability of the insights generated.

Conclusion

Ensuring the accuracy and reliability of your insights is key. Data bias can significantly undermine the effectiveness of your analytics, leading to skewed conclusions and poor business strategies. By understanding how to spot and correct data bias, you can leverage your data analysis software to its full potential, driving better outcomes and maintaining a competitive edge.

Grow BI provides the advanced tools you need to identify and mitigate data bias effectively. Our data analytics software for UK businesses is designed to deliver unbiased, actionable insights, helping you make informed decisions with confidence. With features like robust bias detection, algorithm auditing, and comprehensive data integration, Grow ensures that your business analytics software remains a reliable cornerstone of your strategy.

Take a look at Grow BI and see the results for yourself; we guarantee it will blow your mind. Sign up for a 14-day free trial and discover how our solutions can transform your data analytics. And for further assurance, check out “Grow Reviews from Verified Users on Capterra” to see how our tools have helped other businesses achieve success.

Ensure your analytics software isn't lying to you. Start your journey towards unbiased, accurate data analysis with Grow BI today.

Original Source: https://bit.ly/3Su31K1

#data analysis software#business analytics software#business analytics tools#top data analytics software UK


Text

5 Methods of Data Collection for Quantitative Research

Discover five powerful techniques for gathering quantitative data in research, essential for uncovering trends, patterns, and correlations. Explore proven methodologies that empower researchers to collect and analyze data effectively.

#Quantitative research methods#Data collection techniques#Survey design#Statistical analysis#Quantitative data analysis#Research methodology#Data gathering strategies#Quantitative research tools#Sampling methods#Statistical sampling#Questionnaire design#Data collection process#Quantitative data interpretation#Research survey techniques#Data analysis software#Experimental design#Descriptive statistics#Inferential statistics#Population sampling#Data validation methods#Structured interviews#Online surveys#Observation techniques#Quantitative data reliability#Research instrument design#Data visualization techniques#Statistical significance#Data coding procedures#Cross-sectional studies#Longitudinal studies


Text

A map of every single band on the Metal Archives as of March 1st of this year, using the same dataset I used for this site. Each individual dot represents a single band, and each line indicates that two bands have a member in common.

A closeup, showing the lines in a bit more detail.

There are around 177k bands on the Metal Archives, and, of them, about two-thirds can be connected to one another by common members.
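For readers curious how a figure like "two-thirds connected" might be computed, here is a small sketch that uses a union-find structure to find the largest group of bands linked by shared members. It reads the claim as the size of the largest connected component, which is only one possible interpretation; the band names and links are invented, and this is not the author's actual code.

```python
from collections import Counter

def largest_component_share(nodes, edges):
    """Fraction of nodes that sit in the largest connected component."""
    parent = {node: node for node in nodes}

    def find(x):
        # Walk up to the root, halving the path as we go
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in edges:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b  # merge the two components

    sizes = Counter(find(node) for node in nodes)
    return max(sizes.values()) / len(nodes)

# Hypothetical toy data: each edge means two bands share a member
bands = ["A", "B", "C", "D", "E", "F"]
shared_members = [("A", "B"), ("B", "C"), ("C", "D")]
share = largest_component_share(bands, shared_members)
```

Union-find runs in close to linear time in the number of bands and links, so it copes with a graph of this size far better than trying to draw every node.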

#metal#metalarchives#data visualization#social networks#heavy metal#fun fact if you make a graph with 177 thousand nodes the graph visualization software will shit itself and die#so if I wanna visualize any sort of analysis I'm probably gonna need to figure out how I can pare this whole mess down#but I think this kinda looks cool


Text

My laptop could kill me right now and it would count as self defence

#humor#tech#i have so many things open#mostly excel spreadsheets#and data analysis software#and google tabs


Text

Y'all manifest hard with me today that my husband does well in his interview and gets this job. He’s been unemployed since June and this is the first actual interview he’s gotten out of nearly 200 applications

#cress talks too much#For those curious he works in data analysis and software development#And turns out those jobs aren’t exactly hiring right now


Text

Our data engineering solutions are designed to grow with your business, ensuring your systems can efficiently handle increasing data volumes, and support expansion without compromising performance or reliability. We integrate data from multiple sources, providing a unified view that makes it easier to manage, analyze, and leverage, improving decision-making, strategic planning, and overall business outcomes.

#data engineering services#data analytics services#data analysis tools#data analysis software#data engineering#data analysis


Text

Best Software for 3D Geological Modelling Infographic

Discover Geomage's seismic processing software designed for efficient, high-resolution subsurface imaging. Ideal for exploration and development, with advanced algorithms and user-friendly workflows trusted by geophysicists globally.

#seismic imaging#geology software#geological modeling#Seismic Data Processing Companies#Seismic Analysis Software#seismic interpretation software


Text

1-Week Web Design Internship

Web Design Limited is offering a 1-week internship for interested students in web design.

📅 Duration: 1 Week

🎯 Who Can Apply: Students interested in web design

📧 Email: [email protected]

📞 Call for more details: 8675719099 / 7373899599

#python course in chathiram bus stand#best python course in trichy#python course in trichy#education#python with datascience#software testing#technology#data analysis#trichy#student


Text

Kickstart Your Tech Career: Why Internships Are More Important Than Ever

In the rapidly changing digital economy we live in today, a degree no longer suffices. What truly makes you stand out is practical experience—and that's where internships fit in.

If you hold a bachelor's or master's degree in computer science or IT, applying for a Java internship for freshers can prove to be one of the best decisions you ever make. Java remains a foundation of enterprise software, so learning it matters for anyone interested in backend development, application security, or scalable web systems. Internships give freshers hands-on experience in writing optimized code, debugging, version control, and project collaboration.

At the same time, the tech industry is eager for developers who can work across the whole stack. This is why a full stack web development internship is a first choice for many future professionals. These internships expose you to both frontend and backend technologies (HTML, CSS, JavaScript, React, Node.js, Express, MongoDB, and more) and turn you into a versatile all-rounder.

Above all, these internships do not simply teach you how to code. They teach you how to work: how to collaborate in teams, meet deadlines, and ship deployable applications that solve real problems.

Whether you join a product company, a tech startup, or take on freelance work, the hands-on experience you gain through a focused internship can define your career path. Theory is fine to learn, but experience is what gets you ready for a job.

#embedded systems course in Kerala#full stack java developer training in Kerala#python full stack developer course in Kerala#data analysis course for beginners#data analytics courses in kerala#full stack java developer course with placement#software developer internship in Kerala#java internship for freshers#full stack web development internship#software training institutes in kochi#best software training institute in kerala#best software training institute in trivandrum#software training institutes in kannur#best software training institute in calicut#data science course in kerala#data science and ai certification course#certification in ai and ml


Text

What Makes Python the Most Student-Friendly Tool for Data Analysis?

Introduction

In the data-driven era of today, Python has become a first-choice programming language for students entering the field of data analysis. Its ease of use, flexibility, and strong ecosystem make it a perfect choice for students. Let’s explore why Python is the most student-friendly tool for data analysis.


Text

7 Common Business Challenges Solved by Financial Data Analysis

Businesses today generate enormous amounts of financial data. Yet, many struggle to transform this data into actionable insights. Without proper analysis, companies face cash flow problems, pricing inefficiencies, rising costs, and even fraud risks.

Financial data analysis is the key to unlocking strategic decision-making, improving profitability, and ensuring long-term sustainability. By leveraging data-driven insights, businesses can optimize operations, manage risks, and seize new opportunities.

In this blog, we’ll explore seven common business challenges and how financial data analysis effectively addresses them.


Text

Looking to streamline your distribution operations and gain better control over secondary sales? Zylem offers powerful secondary sales tracking and sales tracking software designed to give you real-time insights, improve sales forecasting, and boost productivity. Our secondary sales tracking system is built to handle the complexities of modern distribution networks, while our sales analysis software, data extraction software, and business analytics software help you unlock actionable intelligence from your sales data. From tracking distributor performance to optimizing retail execution, Zylem empowers you with the tools you need to drive smarter, faster business decisions.

#secondary sales management software#distribution management software#secondary sales tracking system#sales tracking software#business analytics software#sales analysis software#sales data analysis software#Data Extraction Software


Text

Finished my last midterm for my degree today... feeling dread. Can't get an entry level data scientist position 💔 became a math and statistics machine in the last 4 years but my downfall was only receiving basic training on SQL

#now I have to set aside free time to learn more coding languages#and business data analytics software :(#but hey maybe I can do some cool statistical experiments solo to build my portfolio#get ready for a disco elysium ao3 analysis#gonna webscrape ao3 and analyze for trends in what fics get the most engagement 🫣

0 notes

Text

Happiness, Heartbreak, and Hamlet: Sentiment Analysis in Classic Literature

What do The Odyssey, The Lord of the Rings, and Star Wars have in common? Beyond gripping narratives and unforgettable characters, they all follow a timeless structure—one first outlined by Joseph Campbell in 1949.

Campbell, a scholar of mythology and literature, spent years studying the world’s greatest stories. Drawing from modern psychological methods and centuries of myth, he identified a recurring pattern in heroic tales across cultures. He called this the monomyth, or the Hero’s Journey—a narrative framework that captures the essence of transformation, adventure, and self-discovery.

Joseph Campbell spent five years piecing together the Hero’s Journey, dedicating nine hours a day to reading mythology from around the world. He combed through epic tales, religious texts, and folklore, uncovering a pattern that connected them all. But imagine if he had a shortcut—a tool that could break down the themes, emotions, and narrative arcs across thousands of stories automatically.

That’s where sentiment analysis comes in. While it wouldn’t have handed Campbell his theory on a silver platter, it might have given him a data-driven lens to view storytelling in a whole new way.

Sentiment analysis lets us quantify emotions within text, tracking the emotional highs and lows of a story through computational analysis. It’s already been used to analyze presidential speeches, social media trends, and even Shakespeare’s works. So why not apply it to classic literature and see how emotions shift across different narratives?

In this post, we’ll dive into how sentiment analysis works, highlight fascinating projects that use it, and explore how I built my own model to track the emotional beats in timeless stories. From heart-wrenching tragedies to uplifting resolutions, sentiment analysis offers a new way to uncover the rhythm of storytelling.

What is sentiment analysis?

At its core, sentiment analysis is about figuring out how people feel based on text. Using Natural Language Processing (NLP), sentiment analysis detects whether a piece of writing is positive, negative, or neutral.

So, where is sentiment analysis actually useful?

Social Media Monitoring: Companies track tweets and comments to understand audience sentiment.

Customer Reviews: Businesses use it to analyze feedback and gauge customer satisfaction.

Political Analysis: Researchers assess public opinion by analyzing speeches, news articles, or social media discussions.

Mental Health & Well-being: Some applications even scan text messages for signs of distress and alert support services.

And, for nerds like myself, sentiment analysis is a tool we can use for data exploration (or to produce really bizarre graphs).

Notable projects that use sentiment analysis

Sentiment analysis has been applied to historical speeches, famous books, and even song lyrics. Here are a few fascinating projects:

Shakespeare’s Emotional Patterns: Some researchers have analyzed Shakespeare’s plays to track mood shifts across acts and scenes.

Tracking Happiness in Literature: Studies have examined whether books published in different eras get darker or more optimistic over time.

Presidential Speech Analysis: Computational linguists have used sentiment analysis to measure how presidential rhetoric has evolved.

Social Media Sentiment: A project is measuring the sentiment across social media platforms before and after the Covid-19 pandemic.

Each of these projects gives us new perspectives on classic texts, global political shifts, and historical trends, making sentiment analysis a powerful tool for storytelling and data science.

How does Sentiment Analysis work?

Now, let’s talk about how sentiment analysis works. There are two main approaches:

Lexicon Method (Simple & Rule-Based)

This method relies on predefined word lists of positive and negative words. If a text contains more happy words than sad ones, it’s positive! If it’s full of negative words, it’s a downer. Simple, right?

Pros: Easy to implement, explainable results

Cons: Struggles with sarcasm, context, and nuanced language (e.g., “This book is insanely good” might wrongly be flagged as negative because of “insanely”)
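To make the counting idea concrete, here is a minimal sketch of a lexicon scorer. The tiny word lists and the names `lexicon_score` and `label` are made up for illustration; they are not taken from any real lexicon or from my script.

```python
import string

# Hypothetical mini-lexicons, for illustration only.
# Real word lists contain thousands of entries.
POSITIVE = {"good", "happy", "love", "wonderful"}
NEGATIVE = {"bad", "sad", "hate", "insanely"}


def lexicon_score(text):
    """Return (number of positive words) minus (number of negative words)."""
    # Lowercase and strip ASCII punctuation so "Good!" matches "good".
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    words = cleaned.split()
    pos = sum(1 for w in words if w in POSITIVE)
    neg = sum(1 for w in words if w in NEGATIVE)
    return pos - neg


def label(score):
    """Map a numeric score to a coarse sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(label(lexicon_score("I love this wonderful book!")))  # positive
print(label(lexicon_score("This book is insanely good")))   # neutral
```

With these toy lists, "insanely" cancels out "good", so clear praise ends up scored as neutral. That is the kind of context blindness the lexicon method suffers from.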

Machine Learning Method (AI-Powered & Smarter)

Machine learning models train on massive datasets, learning patterns instead of just counting words. They can interpret context, irony, and complex emotions better than lexicon-based models.

Pros: More accurate, can handle advanced language structures

Cons: Needs lots of training data, can behave unpredictably

For my own sentiment analyzer, I stuck with the Lexicon Method: simple, efficient, and perfect for crunching through Victorian drama.

The script I wrote loads predefined lists of positive and negative words from text files. It then scans Wuthering Heights, breaking the book into chapters and scoring each one based on the number of happy vs sad words.

You can find the entire script on GitHub below:

GitHub - alexheywood/py-sentiment-analysis: A python sentiment analysis using two word lists

Key Steps in My Code:

Loads positive and negative lexicon files

Cleans and preprocesses the text (removing punctuation, lowercasing everything)

Splits the book into chapters

Calculates sentiment scores for each chapter

Plots the results (because visuals = awesome)
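The steps above can be sketched roughly as follows. This is a simplified illustration, not a copy of the script in the repository: the function names, the one-word-per-line lexicon format, and the assumption that chapters begin with a heading line such as "CHAPTER I" are all mine.

```python
import re
import string


def load_lexicon(path):
    """Read a word list (assumed: one word per line) into a set."""
    with open(path, encoding="utf-8") as f:
        return {line.strip().lower() for line in f if line.strip()}


def clean(text):
    """Lowercase the text and remove ASCII punctuation (curly quotes are left alone)."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))


# Assumption: each chapter starts with a heading line like "CHAPTER I" or "Chapter 12".
CHAPTER_HEADING = re.compile(
    r"^[ \t]*chapter[ \t]+[ivxlcdm\d]+\.?[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def split_chapters(book_text):
    """Split on chapter headings, dropping any front matter before the first one."""
    parts = CHAPTER_HEADING.split(book_text)
    return [p.strip() for p in parts[1:] if p.strip()]


def score_chapter(chapter, positive, negative):
    """Score a chapter as positive word count minus negative word count."""
    words = clean(chapter).split()
    return sum(w in positive for w in words) - sum(w in negative for w in words)


def plot_scores(scores, title):
    """Draw one point per chapter; matplotlib is imported lazily so the rest runs without it."""
    import matplotlib.pyplot as plt

    plt.plot(range(1, len(scores) + 1), scores, marker="o")
    plt.axhline(0, color="grey", linewidth=0.5)
    plt.xlabel("Chapter")
    plt.ylabel("Sentiment score")
    plt.title(title)
    plt.show()


# Tiny in-memory demo with made-up text and word lists.
sample = (
    "Title page\n\n"
    "CHAPTER I\n\nA happy, joyful day.\n\n"
    "CHAPTER II\n\nA sad and miserable night of grief.\n"
)
positive = {"happy", "joyful"}
negative = {"sad", "miserable", "grief"}
scores = [score_chapter(c, positive, negative) for c in split_chapters(sample)]
print(scores)  # [2, -3]
```

For a real book you would build `positive` and `negative` with `load_lexicon(...)`, read the novel's text from a file, and pass the resulting scores to `plot_scores`. Raw counts favor long chapters, so dividing each score by the chapter's word count is a reasonable variation.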

What the Results Showed:

Wuthering Heights

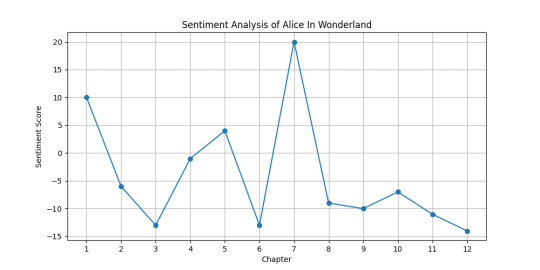

Alice In Wonderland

Frankenstein

It looks like Brontë's masterpiece is the greatest emotional rollercoaster of the three, based on this sentiment analysis. You can see that the tone of the words used throughout her novel varies greatly in comparison to Alice in Wonderland, where the changes are far less volatile.

It's interesting that two of the three novels end more negative than they begin.

Tracking sentiment across literature helps us visualize emotional pacing and identify critical mood shifts within a story. We can compare multiple books to see which ones are more emotionally intense, and linguists can uncover hidden structure, such as whether a story follows a classic happiness-to-conflict-to-resolution arc.
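One way to put a number on "emotional rollercoaster" is to compare how much the chapter scores spread out. The sketch below uses the standard deviation as a stand-in for volatility. The scores are made-up numbers for illustration, not results from any of the three novels, and `volatility` and `ends_darker` are hypothetical helper names.

```python
from statistics import pstdev


def volatility(scores):
    """Population standard deviation of chapter scores: higher means bigger mood swings."""
    return pstdev(scores)


def ends_darker(scores):
    """True if the last chapter scores lower than the first."""
    return scores[-1] < scores[0]


# Made-up chapter scores for two imaginary books.
books = {
    "Book A": [3, -5, 6, -8, 2, -4],
    "Book B": [1, 0, 2, 1, 0, 1],
}

for name, chapter_scores in books.items():
    print(name, round(volatility(chapter_scores), 2), ends_darker(chapter_scores))
```

Standard deviation ignores the order of chapters, so a book that slides steadily from happy to sad can look as volatile as one that swings back and forth. Averaging the absolute change between neighbouring chapters is an alternative if you care about swing frequency.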

Sentiment analysis gives us a fresh, data-driven way to explore beloved stories.

#sentiment analysis#data analysis#data#programming#python#scripting#literature#books#novel#fiction#wuthering heights#coding#code#development#software

0 notes