# General Architecture of the Microprocessor and its operations

Q.ANT Introduces Live Photonic Computing Demonstration at ISC 2025

Q.ANT is set to showcase its photonic Native Processing Server (NPS) at ISC 2025 with its first interactive live demonstrations.

Visitors will be able to interact with working photonic computing hardware, which demonstrates how light can improve energy and computational efficiency for complex scientific workloads such as artificial intelligence and physics simulations.

Key Technology: Light-Powered Computing

Q.ANT’s innovation is light-powered computing. Unlike digital processors, which rely on electronic signals, the NPS performs its calculations with light. Rather than working through digital abstraction, the system carries out operations directly in the optical domain, a fundamental change that Q.ANT says makes computing more efficient, scalable, and sustainable.

The NPS is built on Q.ANT’s LENA architecture, at the heart of which is a unique thin-film lithium niobate (TFLN) photonic chip. This processor performs complex, nonlinear mathematics directly with light, and the TFLN platform enables low-loss, high-speed optical modulation without thermal crosstalk. According to Dr. Michael Förtsch, CEO of Q.ANT, performing mathematical transformations natively with light changes the economics of HPC, especially for complex scientific workloads, physics simulations, and artificial intelligence.

Unmatched benefits and performance

The Q.ANT NPS is expected to improve several key aspects of high-performance computing and data centres:

Outstanding Energy Efficiency:

The NPS is expected to be up to 30 times more energy-efficient than existing systems. This reduction in energy use is crucial for sustainable high-performance computing.

Because the NPS does not require active cooling, it avoids complex cooling systems altogether, which further improves its efficiency and saves both money and energy.

In a data centre setting, the approach is expected to allow up to 100 times higher compute density per rack and up to 90 times lower power consumption per application. This matters because modern HPC systems and data centres demand ever more electricity.

Performance and Accuracy in Computing:

The system achieves 99.7% 16-bit floating-point precision across all on-chip computations, the level of accuracy that science and AI demand.

Bob Sorensen, Senior VP for Research and Chief Analyst for Quantum Computing at Hyperion Research, says this shows that analogue computing can be precise, effective, and deployable, describing the approach as “attacking two of the biggest challenges in photonic computing: integration and precision”.

Efficiency improves further because the NPS needs 40–50% fewer operations to produce the same output.

Smooth Integration with Infrastructure:

New computing paradigms are often difficult to integrate into existing digital systems. Q.ANT’s photonic architecture was designed to enhance existing computing models.

PCI Express integration makes the NPS compatible with current HPC and data centre environments.

It supports Keras, TensorFlow, and PyTorch. According to Q.ANT, this enables “seamless plug-and-play adoption”, making its products easier to use and giving early AI and HPC adopters a competitive advantage.

Built for Next-Generation AI and Science

Q.ANT’s photonic NPS is well suited to data-intensive applications that push the limits of conventional digital processors. These include:

Scientific and physics simulations: computational fluid dynamics, molecular dynamics, and materials design are essential simulation workloads. Their complex nonlinear mathematical operations challenge digital systems, but the NPS is expected to excel at them.

Advanced image analysis: because light can perform sophisticated calculations natively, the NPS is well suited to advanced image processing.

Large-scale AI model training and inference: the NPS is well positioned to speed up these processes. Computing nonlinear functions and Fourier transforms natively with light can reduce the number of AI model parameters, simplifying model design and system requirements.

At ISC 2025, Experience the Future

Q.ANT will exhibit its NPS at ISC 2025 in Hamburg, Germany, on June 10–12, in Hall H, Booth G12. The live demonstration lets visitors engage directly with working photonic computing and shows its potential to improve energy and computational efficiency across a range of demanding scientific applications.

In conclusion

Q.ANT’s photonic NPS moves beyond conventional digital processors. For the most demanding scientific and artificial intelligence applications, it aims to transform high-performance computing by using light to calculate with high accuracy and energy efficiency.

Understanding Embedded Computing Systems and their Role in the Modern World

Embedded systems are specialized computer systems designed to perform dedicated functions within larger mechanical or electrical systems. Unlike general-purpose computers such as laptops and desktop PCs, embedded systems are built for specific tasks and are not easily reprogrammed for other uses.

Embedded System Hardware

At the core of any embedded system is a microcontroller or microprocessor that acts as its processing brain. A microcontroller integrates the CPU, RAM, ROM, I/O ports, and other components on a single chip, whereas a microprocessor provides the CPU and relies on external memory and peripherals. Peripherals such as sensors, displays, and network ports connect to the chip through its input/output ports. Embedded systems also contain supporting hardware such as power-supply circuits and crystal oscillators for timing.

Operating Systems for Embedded Devices

While general-purpose computers run full-featured operating systems such as Windows, Linux, or macOS, embedded systems commonly use specialized real-time operating systems (RTOS). An RTOS is a lean, efficient kernel optimized for real-time processing with minimal overhead; popular examples include FreeRTOS, QNX, and VxWorks. Some simple devices run without an operating system at all, accessing the hardware directly through their initialization code.

Programming Embedded Systems

Embedded computing systems are programmed mainly in languages such as C and C++, which offer efficiency and close control over the hardware; assembly language is also used in some applications. Programmers need expertise in microcontroller architecture, peripherals, memory management, and related topics. Development tools include compilers, linkers, simulators, and debuggers tailored for embedded work.

Applications of Embedded Computing

Embedded systems have transformed many industries by bringing intelligence and connectivity to everyday devices. Some key application areas include:

Alice Mutum is a seasoned senior content editor at Coherent Market Insights, leveraging extensive expertise gained from her previous role as a content writer. With seven years in content development, Alice masterfully employs SEO best practices and cutting-edge digital marketing strategies to craft high-ranking, impactful content. As an editor, she meticulously ensures flawless grammar and punctuation, precise data accuracy, and perfect alignment with audience needs in every research report. Alice's dedication to excellence and her strategic approach to content make her an invaluable asset in the world of market insights.

(LinkedIn: www.linkedin.com/in/alice-mutum-3b247b137 )

B.Tech Back Paper Tuition In Noida For Microprocessor

Introduction to Microprocessor Tuition In Noida: Introduction to Microprocessor and its applications, Microprocessor Evolution Tree, Microprocessor…

What are the characteristics of different generations of computer?

Generations of Computers

First generation: vacuum tubes (1940–1956)

The earliest computers were enormous machines, often filling an entire room, that used vacuum tubes for circuitry and magnetic drums for memory. They were very expensive to operate, consumed a great deal of power, and generated a lot of heat, which frequently caused malfunctions. They could store a maximum of 20,000 characters.

First-generation computers could solve only one problem at a time and relied on machine language, the most basic programming language that computers understand. Setting up a new problem could take operators days or even weeks. Input was based on punched cards and paper tape, and output was displayed on printouts.

The von Neumann architecture, a stored-program design for electronic digital computers in which instructions and data are held in the same memory, was first described during this generation. J. Presper Eckert and John Mauchly designed the ENIAC and UNIVAC computers, which are examples of first-generation computer technology. The UNIVAC, the first commercial computer produced in the United States, was delivered to the United States Census Bureau in 1951.
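The defining idea of a stored-program machine is that the program and its data live in one memory, and the processor repeatedly fetches, decodes, and executes instructions. Below is a minimal sketch of that cycle, assuming a hypothetical four-instruction set (LOAD, ADD, STORE, HALT) with a single accumulator; it is an illustration of the concept, not the instruction set of the ENIAC, UNIVAC, or any real machine.

```python
# Toy von Neumann machine: instructions and data share one memory list.
# The instruction set (LOAD, ADD, STORE, HALT) is hypothetical.

def run(memory):
    """Execute the program stored in `memory` until HALT; return the memory."""
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, operand = memory[pc]   # fetch
        pc += 1
        if opcode == "LOAD":           # decode and execute
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")

# Addresses 0-3 hold instructions; addresses 4-6 hold data.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,  # address 4: first operand
    3,  # address 5: second operand
    0,  # address 6: the result is stored here
]
print(run(program)[6])  # 5
```

Because instructions are ordinary memory contents, a program could in principle read or overwrite its own instructions, which is exactly what distinguishes this design from machines programmed by rewiring.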

Second Generation: Transistors (1956–1963)

Transistors replaced vacuum tubes in the second generation of computers, changing the world. The transistor was invented at Bell Labs in 1947 but was not widely used in computers until the late 1950s. This generation also introduced hardware innovations such as magnetic core memory, magnetic tape, and the magnetic disk.

Because the transistor outperformed the vacuum tube, second-generation computers were smaller, faster, cheaper, more energy-efficient, and more reliable. Although transistors still generated enough heat to damage a computer, they were a tremendous advance over vacuum tubes. Second-generation computers still used punched cards for input and output.

When Did Assembly Languages First Appear on Computers?

On second-generation computers, symbolic, or assembly, languages replaced cryptic binary machine language, allowing programmers to specify instructions in words. High-level programming languages, such as early versions of COBOL and FORTRAN, were also being developed at this time. These were also the first computers to store instructions in magnetic-core memory rather than on a magnetic drum.
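What an assembler does is translate word-like mnemonics into the numeric machine code the hardware executes. Here is a minimal sketch, assuming a hypothetical 8-bit instruction format (a 4-bit opcode in the high bits, a 4-bit memory address in the low bits) and a made-up opcode table; no historical machine is being modelled.

```python
# Hypothetical opcode table: mnemonic -> 4-bit opcode.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}

def assemble(source):
    """Translate lines such as 'ADD 5' into 8-bit machine words:
    opcode in the high 4 bits, memory address in the low 4 bits."""
    words = []
    for line in source.splitlines():
        parts = line.split()
        if not parts:  # skip blank lines
            continue
        mnemonic = parts[0].upper()
        address = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        if not 0 <= address < 16:
            raise ValueError(f"address does not fit in 4 bits: {address}")
        words.append((OPCODES[mnemonic] << 4) | address)
    return words

source = """
LOAD 4
ADD 5
STORE 6
HALT
"""
for word in assemble(source):
    print(f"{word:08b}")  # 00010100, 00100101, 00110110, 11110000
```

Writing `ADD 5` instead of `00100101` is the convenience assembly language brought; the machine still executes only the binary words.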

The first computers of this generation were developed for the atomic energy industry.

Third generation: integrated circuits (1964–1971)

The third generation of computers was distinguished by the development of the integrated circuit. Miniaturizing transistors and placing them on silicon chips, made of semiconductor material, greatly increased computer speed and efficiency.

Instead of punched cards and printouts, users interacted with third-generation computers through keyboards, monitors, and an operating system. The operating system let the machine run several programs at once, with a central program that managed memory. Because these computers were smaller and cheaper than their predecessors, they became available to the general public for the first time.

Did you know? Integrated circuit (IC) chips are small electronic components made from semiconductor material.

In the late 1950s, Jack Kilby of Texas Instruments and Robert Noyce of Fairchild Semiconductor independently created the first integrated circuits.

Fourth generation: microprocessors (1971–present)

The fourth generation of computers began with the microprocessor, which packed thousands of transistors onto a single silicon chip. Circuitry that once filled an entire room in the first generation could now fit in the palm of a hand. The Intel 4004, developed in 1971, placed the central processing unit on a single chip, with memory and input/output provided by companion chips in the same family.

IBM introduced its first personal computer for home use in 1981, and Apple introduced the Macintosh in 1984. As more and more everyday products began to use microprocessor chips, microprocessors also moved beyond desktop computers into many other areas of life.

As these small computers became more powerful, they could be linked together to form networks, which eventually led to the development of the Internet. The fourth generation also saw the introduction of the mouse, handheld devices, and graphical user interfaces (GUIs).

Fifth Generation Computers

Artificial intelligence (AI) is the enabling technology of the fifth generation of computers. It aims to let machines behave like people and is widely used in speech recognition, medicine, and entertainment. It has also shown impressive success in gaming, where computers can defeat human opponents.

Fifth-generation computers offer the highest speed, the smallest size, and a much wider range of uses. Although full artificial intelligence has not yet been achieved, it is often predicted that, given current progress, this goal will be reached soon.

Comparing the characteristics of the different generations shows that operating speed and accuracy have increased significantly while size has decreased over time. At the same time, cost has fallen while reliability has risen.

The main characteristics of fifth-generation computers are:

Main electronic component

Artificial intelligence based on parallel processing and Ultra Large-Scale Integration (ULSI) technology (ULSI places millions of transistors on a single microchip)

Language

Understands natural (human) language.

Size

Portable and small in size.

Input / output device

Keypad, display, mouse, trackpad (or touchpad), touchscreen, pen, voice input (recognize voice/speech), laser scanner, etc.

Example of the fifth generation

Desktops, laptops, tablets, smartphones, etc.

Top 20 AI tech terms to read before 2025

Statistical Language Modeling: Statistical language modeling is the development of probabilistic models that predict the next word in a given sequence.
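The simplest statistical language model is a bigram model, which estimates the probability of the next word from how often it followed the previous word in a training text. A minimal sketch follows; the toy corpus and the function names are illustrative assumptions, and practical models use far larger corpora plus smoothing for word pairs never seen in training.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Estimate P(next | word) by relative frequency (maximum likelihood)."""
    following = counts.get(word.lower(), Counter())
    total = sum(following.values())
    return {w: c / total for w, c in following.items()} if total else {}

corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```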

Computational learning theory: Computational learning theory (CoLT) is a branch of AI concerned with applying mathematical methods to the design and analysis of machine learning programs.

Syntactic analysis: Syntactic analysis examines the grammatical relationships between words to understand the logical meaning of sentences or parts of sentences.

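Forward Chaining: Forward chaining is a reasoning strategy used by inference engines: starting from the known facts, it repeatedly applies any rule whose conditions are satisfied to derive new facts, until a goal is reached or no rule adds anything new. It is also called forward deduction or forward reasoning.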
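A forward-chaining loop can be sketched in a few lines, assuming each rule is written as a pair (set of premises, conclusion). The bird-themed rules are a hypothetical toy knowledge base; real inference engines add pattern variables, conflict resolution, and efficient matching.

```python
def forward_chain(facts, rules):
    """Derive every fact reachable from `facts` using rules of the form
    (set_of_premises, conclusion). Repeat until no rule adds anything new."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)  # rule fires: record the new fact
                changed = True
    return known

# Hypothetical toy knowledge base.
rules = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "can_fly"}, "can_migrate"),
    ({"is_penguin"}, "cannot_fly"),
]
print(sorted(forward_chain({"has_feathers", "can_fly"}, rules)))
# ['can_fly', 'can_migrate', 'has_feathers', 'is_bird']
```

The reasoning runs from data toward conclusions: `can_migrate` is derived only after `is_bird` has been added by an earlier rule.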

Language detection: In natural language processing, language detection uses computational methods to determine which natural language a given text is written in.

WeChat Chatbot: A WeChat bot works by recognizing keywords in text strings and applying hand-coded rules to respond to different situations.
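The keyword-plus-rules approach can be sketched independently of any messaging platform. This example does not use any WeChat API; the rules, replies, and function name are hypothetical, and the first rule with a matching keyword wins.

```python
import re

# Hypothetical hand-coded rules: (trigger keywords, reply), checked in order.
RULES = [
    ({"hello", "hi"}, "Hello! How can I help you?"),
    ({"price", "cost"}, "Our price list is available on request."),
    ({"hours", "open"}, "We are open 9:00-18:00, Monday to Friday."),
]
FALLBACK = "Sorry, I did not understand. Could you rephrase that?"

def reply(message):
    """Return the reply of the first rule whose keywords appear in `message`."""
    words = set(re.findall(r"[a-z]+", message.lower()))  # whole words only
    for keywords, answer in RULES:
        if words & keywords:
            return answer
    return FALLBACK

print(reply("Hi there!"))           # Hello! How can I help you?
print(reply("What does it cost?"))  # Our price list is available on request.
print(reply("Tell me a joke"))      # Sorry, I did not understand. ...
```

Matching whole words rather than substrings keeps "this" from triggering the "hi" rule; the trade-off is that such a bot knows nothing beyond its hand-written keywords.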

Mathematical optimization: Mathematical optimization is the selection of a best element, with regard to some criterion, from some set of available alternatives.

White Label Software: White-label software is fully developed, unbranded software that other companies, often SaaS providers, rename, rebrand, and resell as their own.

Information Retrieval: Information retrieval is the process of obtaining information system resources that are relevant to an information need from a collection of resources.

Knowledge Engineering: Knowledge engineering is a branch of AI that develops rules to apply to data in order to simulate the judgment and thought process of a human expert.

Spatial-temporal Reasoning: Spatial–temporal reasoning helps robots understand and navigate time and space. It is useful for problem-solving and organizational skills.

Statistical Inference: Statistical inference is the process of using data analysis to infer properties of an underlying probability distribution.

Euclidean distance: Euclidean distance is the distance between two points in Euclidean space, that is, the length of the straight line segment connecting them, which is the shortest distance between the two points.
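For points p and q with n coordinates, the Euclidean distance is d(p, q) = sqrt((p1 - q1)^2 + ... + (pn - qn)^2), which in two dimensions is just the Pythagorean theorem. A short sketch follows; `euclidean_distance` is a hypothetical helper written for illustration, while `math.dist` is the standard-library function (Python 3.8 and later) that computes the same value.

```python
import math

def euclidean_distance(p, q):
    """Straight-line distance between two points with the same dimension."""
    if len(p) != len(q):
        raise ValueError("points must have the same number of coordinates")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

print(euclidean_distance((0, 0), (3, 4)))  # 5.0
print(math.dist((1, 2, 3), (4, 6, 3)))     # 5.0, via the standard library
```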

Lemmatization: Lemmatization is a text normalization technique used in NLP to group the inflected forms of a word so they can be analyzed as a single item.
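Lemmatization maps each inflected form to its dictionary form, or lemma, so that "mice" and "mouse" count as one item. The sketch below assumes a tiny hypothetical lemma dictionary; production lemmatizers such as those in NLTK or spaCy rely on full lexicons and part-of-speech information instead of a hand-written table.

```python
# Hypothetical, tiny lemma dictionary: inflected form -> lemma.
LEMMAS = {
    "am": "be", "are": "be", "is": "be", "was": "be", "were": "be",
    "running": "run", "ran": "run", "runs": "run",
    "better": "good", "best": "good",
    "mice": "mouse", "geese": "goose",
}

def lemmatize(tokens):
    """Map each token to its lemma; unknown words are returned lowercased."""
    return [LEMMAS.get(t.lower(), t.lower()) for t in tokens]

print(lemmatize("The mice were running".split()))
# ['the', 'mouse', 'be', 'run']
```

Unlike simple suffix stripping (stemming), a dictionary lookup handles irregular forms such as "were" and "mice" correctly.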

Chatbot Architecture: Chatbot architecture is the overall design of a chatbot’s components and lies at the heart of chatbot development. It varies with the use case and the business context in which the chatbot operates.

Customer experience as a service (CXaaS): CXaaS is a cloud-based model that delivers customer-experience solutions as a flexible service, aiming to provide customers with reliability and efficiency.

Pattern matching: In computer science, pattern matching is the process of checking whether a given sequence of tokens or data contains, or matches, a specified pattern.
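A straightforward form of pattern matching slides the pattern along the token sequence and checks each position. The sketch below assumes a hypothetical convention in which the token "?" in the pattern matches any single token; it is the naive O(n·m) method, not an optimized algorithm such as Knuth-Morris-Pratt.

```python
def find_pattern(tokens, pattern, wildcard="?"):
    """Return every start index where `pattern` occurs in `tokens`.
    The `wildcard` token in the pattern matches any single token."""
    n, m = len(tokens), len(pattern)
    matches = []
    for start in range(n - m + 1):
        window = tokens[start:start + m]
        if all(p == wildcard or p == t for p, t in zip(pattern, window)):
            matches.append(start)
    return matches

tokens = "turn the light on and turn the fan on".split()
print(find_pattern(tokens, ["turn", "the", "?", "on"]))  # [0, 5]
```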

Artificial Narrow Intelligence: Narrow AI is goal-oriented: it is designed to perform a single task and can perform that specific task very well.

Finite automata: A finite automaton is an abstract computing device used for recognizing patterns. It has a finite number of states and moves between them according to the input symbols it reads.
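A classic example is a deterministic finite automaton (DFA) that accepts binary numbers divisible by 3 using only three states. This sketch assumes state "rK" means the bits read so far form a number with remainder K when divided by 3; reading bit b moves from remainder r to (2r + b) mod 3, which is what the transition table encodes.

```python
# DFA over the alphabet {"0", "1"} accepting binary numbers divisible by 3.
# State "rK": the bits read so far form a number whose remainder mod 3 is K.
TRANSITIONS = {
    ("r0", "0"): "r0", ("r0", "1"): "r1",
    ("r1", "0"): "r2", ("r1", "1"): "r0",
    ("r2", "0"): "r1", ("r2", "1"): "r2",
}
START = "r0"
ACCEPTING = {"r0"}

def accepts(string):
    """Run the DFA on `string`; reject any symbol outside the alphabet."""
    state = START
    for symbol in string:
        if (state, symbol) not in TRANSITIONS:
            return False
        state = TRANSITIONS[(state, symbol)]
    return state in ACCEPTING

print([n for n in range(10) if accepts(format(n, "b"))])  # [0, 3, 6, 9]
```

The automaton never stores the number itself, only which of three states it is in, which is why its memory stays finite however long the input is.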

Space complexity: Space complexity measures the total amount of memory an algorithm or operation needs to run, as a function of its input size.
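Two ways of reversing a list illustrate the difference in auxiliary (extra) space, counting only the memory used beyond the input itself. The function names are illustrative; in everyday Python one would simply use `reversed()` or `list.reverse()`.

```python
def reverse_copy(items):
    """O(n) extra space: builds a new list as large as the input."""
    return [items[i] for i in range(len(items) - 1, -1, -1)]

def reverse_in_place(items):
    """O(1) extra space: only two index variables, whatever the input size."""
    i, j = 0, len(items) - 1
    while i < j:
        items[i], items[j] = items[j], items[i]
        i += 1
        j -= 1
    return items

data = [1, 2, 3, 4, 5]
print(reverse_copy(data))      # [5, 4, 3, 2, 1]; data is unchanged
print(reverse_in_place(data))  # [5, 4, 3, 2, 1]; data itself is modified
```

Both run in O(n) time, so the choice between them is purely a space trade-off: the in-place version saves memory at the cost of destroying the original order.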

Vision processing unit: A vision processing unit (VPU) is a type of microprocessor designed to accelerate machine vision tasks, making it a kind of AI accelerator.

TAFAKKUR: Part 399

HUMAN VISUAL SYSTEM AND MACHINE SYSTEM

Of the five senses - vision, hearing, smell, taste and touch - vision is undoubtedly the one that man has come to depend upon above all others and indeed the one that provides most of the data he receives. Not only do the input pathways from the eyes provide megabits of information at each glance, but also the data rates for continuous viewing probably exceed 10 megabits per second.

Another feature of the human visual system is the ease with which interpretation is carried out. We see a scene as it is - trees in a landscape, books on a desk, products in a factory. No obvious deductions are needed and no overt effort is required to interpret each scene. In addition, answers are immediate and available normally within a tenth of a second. The important point is that we are for the most part unaware of the complexities of vision. Seeing is not a simple process.

We are still largely ignorant of the process of human vision. However, man is inventive and he is now trying to get machines to do much of his work for him. For the simplest tasks there should be no particular difficulty in mechanization, but for more complex tasks the machine must be given man’s prime sense, i.e. that of vision. Efforts have been made to achieve this, sometimes in modest ways, for well over 30 years. At first, such tasks seemed trivial and schemes were devised for reading, for interpreting chromosome images and so on. But when such schemes were confronted with rigorous practical tests, the problems often turned out to be more difficult than expected.

Computer vision blends optical processing and sensing, computer architecture, mechanics and a deep knowledge of process control. Despite some success, in many fields of application it really is still in its infancy.

With the current state of computing technology, only digital images can be processed by our machines. Because our computers currently work with numerical rather than pictorial data, an image must be converted into numerical form before processing.

The field of machine or computer vision may be subdivided into six principal areas: (1) sensing, (2) pre-processing, (3) segmentation, (4) description, (5) recognition and (6) interpretation. Sensing is the process that yields a visual image. The sensor, most commonly a TV camera, acquires an image of the object that is to be recognised or inspected. The digitizer converts this image into an array of numbers representing the brightness values of the image at a grid of points; the elements of this array are called pixels. Pre-processing deals with techniques such as noise reduction and the enhancement of details. The pixel array is fed into the processor, a general-purpose or custom-built computer that analyses the data and makes the necessary decisions. Segmentation is the process that partitions an image into objects of interest. The segmentation of images should result in regions which correspond to objects, parts of objects or groups of objects which appear in the image. The features of these regions, along with their positions relative to the entire image, help us to make a meaningful interpretation. Description deals with the computation of features such as size, shape and texture that are suitable for differentiating one type of object from another. Recognition is the process that identifies these objects. Finally, interpretation assigns meaning to an ensemble of recognised objects.
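The segmentation and description stages can be sketched on a tiny digitized image. This is a minimal illustration, assuming a hypothetical 5x6 grid of brightness values, a fixed brightness threshold for segmentation, and 4-connected flood fill to group bright pixels into regions; pre-processing, recognition and interpretation are left out, and real systems use far more elaborate methods.

```python
from collections import deque

def segment(image, threshold):
    """Segmentation: group 4-connected pixels brighter than `threshold`
    into regions. Returns a list of regions, each a list of (row, col)."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if image[r][c] > threshold and not seen[r][c]:
                region, queue = [], deque([(r, c)])
                seen[r][c] = True
                while queue:  # breadth-first flood fill
                    y, x = queue.popleft()
                    region.append((y, x))
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and not seen[ny][nx] and image[ny][nx] > threshold):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions

def describe(region):
    """Description: simple size and position features of one region."""
    ys = [y for y, _ in region]
    xs = [x for _, x in region]
    return {"area": len(region), "bbox": (min(ys), min(xs), max(ys), max(xs))}

# Hypothetical digitized image: each number is a pixel brightness (0-9).
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 8, 9, 0, 0, 0],
    [0, 9, 9, 0, 7, 0],
    [0, 0, 0, 0, 8, 0],
    [0, 0, 0, 0, 0, 0],
]
for region in segment(image, threshold=5):
    print(describe(region))
# {'area': 4, 'bbox': (1, 1, 2, 2)}
# {'area': 2, 'bbox': (2, 4, 3, 4)}
```

Even this toy pipeline shows why the stages depend on one another: a badly chosen threshold would merge or split regions, and every later feature would inherit the error.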

Any description of the human visual system only serves to illustrate how far computer vision has to go before it approaches human ability.

In terms of image acquisition, the eye is far superior to any camera system yet developed. The retina, on which the upside-down image is projected, contains two classes of discrete light receptors: cones and rods. There are between 6 and 7 million cones in the eye, most of them located in the central part of the retina called the fovea. These cones are highly sensitive to colour, and the eye muscles rotate the eye so that the image is focused primarily on the fovea. The cones are also sensitive to bright light and do not operate in dim light. Each cone is connected by its own nerve to the brain.

There are at least 75 million rods in the eye distributed across the surface of the retina. They are sensitive to light intensity but not to colour.

The range of intensities to which the eye can adapt is of the order of 10¹⁰, from the lowest visible light to the highest bearable glare. In practice the eye performs this amazing task by altering its own sensitivity depending on the ambient level of brightness.

Of course, one of the ways in which the human visual system gains over the machine is that the brain possesses some 10¹⁰ cells (or neurons), some of which have well over 10,000 contacts (or synapses) with other neurons. If each neuron acts as a type of microprocessor, then we have an immense computer in which all the processing elements can operate concurrently. Probably the largest man-made computer still contains fewer than a million processing elements, so most of the visual and mental processing tasks that the eye-brain system can perform in a flash have no chance of being performed by present-day man-made systems.

Added to these problems of scale is the problem of how to organize such a large processing system, and also how to program it. Clearly, the eye-brain system is partly hard-wired, but it can also be programmed dynamically by training during active use. This need for a large parallel processing system with the attendant complex control problems illustrates clearly that machine vision must indeed be one of the most difficult intellectual problems to tackle.

Part of the problem lies in the fact that the sophistication of the human visual system makes robot vision systems pale by comparison.

Developing general-purpose computer vision systems has proved surprisingly difficult and complex. This has been particularly frustrating for vision researchers, who experience daily the apparent ease and spontaneity of human perception.

As can be seen from the information given above, developing a computer-vision system requires knowledge. Since the eye performs far better than even the best computer-vision system, the far more complex eye-brain system would require far more comprehensive knowledge to build. When man understands and discovers how the eye-brain system functions, better computer-vision systems will be developed. This means that the eye-brain system (like the other systems of the human body) is a very deep and rich source of knowledge. Because this system is linked with the other systems of the body, it shows that the maker of these systems is One who knows everything about every single part of the whole body, for not only the eye-brain system but the entire body develops accordingly.

So, who can be the maker of this fantastic eye-brain system? To say that it created itself has no meaning, because everybody knows that such an important system cannot create itself, just as a computer-vision system cannot give itself existence. As for chance, is it possible for such a system, so full of knowledge that human beings imitate it to develop computer-vision systems, to have been made by chance at all? Of course not. So who is the maker of the eye-brain system?

The maker or the creator of this system can only be One who has supernatural power. He says in His Holy Book:

‘Have we not made for him (human being) a pair of eyes?’ (The Holy Qur’an 90.8).

Yes, indeed, He made them just as He made the whole of the rest of the cosmos with His unlimited knowledge.

The Lisa Hardware

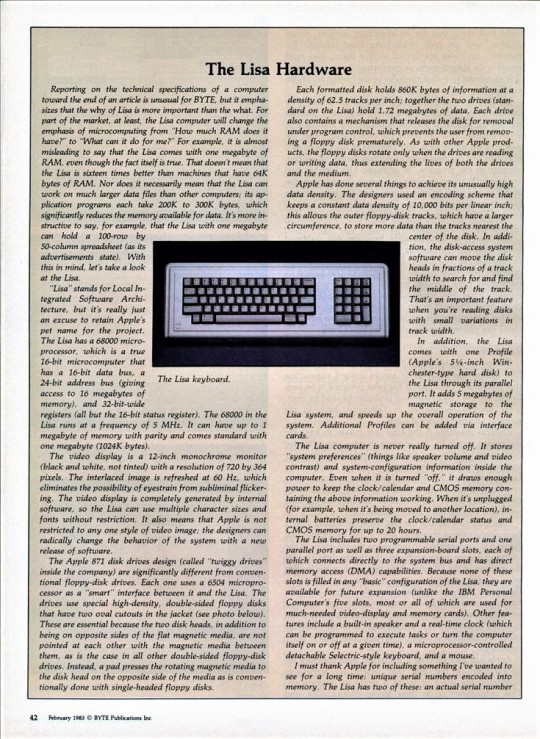

Reporting on the technical specifications of a computer toward the end of an article is unusual for BYTE, but it emphasizes that the why of Lisa is more important than the what. For part of the market, at least, the Lisa computer will change the emphasis of microcomputing from “How much RAM does it have?” to “What can it do for me?” For example, it is almost misleading to say that the Lisa comes with one megabyte of RAM, even though the fact itself is true. That doesn’t mean that the Lisa is sixteen times better than machines that have 64K bytes of RAM. Nor does it necessarily mean that the Lisa can work on much larger data files than other computers; its application programs each take 200K to 300K bytes, which significantly reduces the memory available for data. It’s more instructive to say, for example, that the Lisa with one megabyte can hold a 100-row by 50-column spreadsheet (as its advertisements state). With this in mind, let’s take a look at the Lisa.

“Lisa” stands for Local Integrated Software Architecture, but it’s really just an excuse to retain Apple’s pet name for the project. The Lisa uses a 68000, a true 16-bit microprocessor that has a 16-bit data bus, a 24-bit address bus (giving access to 16 megabytes of memory), and 32-bit-wide registers (all but the 16-bit status register). The 68000 in the Lisa runs at a frequency of 5 MHz. It can have up to 1 megabyte of memory with parity and comes standard with one megabyte (1024K bytes).
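The 16-megabyte figure follows directly from the 24-bit address bus, since each additional address line doubles the number of distinct byte addresses. The short calculation below reproduces it, treating a megabyte as 1024 x 1024 bytes, and also shows why a full 32-bit register value needs two transfers over the 16-bit data bus.

```python
ADDRESS_BITS = 24    # 68000 address bus width, as stated in the text
DATA_BUS_BITS = 16   # 68000 data bus width
REGISTER_BITS = 32   # width of the general-purpose registers

addressable_bytes = 2 ** ADDRESS_BITS
print(addressable_bytes)                   # 16777216
print(addressable_bytes // (1024 * 1024))  # 16 (megabytes)

# Moving one 32-bit register value over a 16-bit bus takes two transfers.
print(REGISTER_BITS // DATA_BUS_BITS)      # 2
```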

The video display is a 12-inch monochrome monitor (black and white, not tinted) with a resolution of 720 by 364 pixels. The interlaced image is refreshed at 60 Hz, which eliminates the possibility of eyestrain from subliminal flickering. The video display is completely generated by internal software, so the Lisa can use multiple character sizes and fonts without restriction. It also means that Apple is not restricted to any one style of video image; the designers can radically change the behavior of the system with a new release of software.

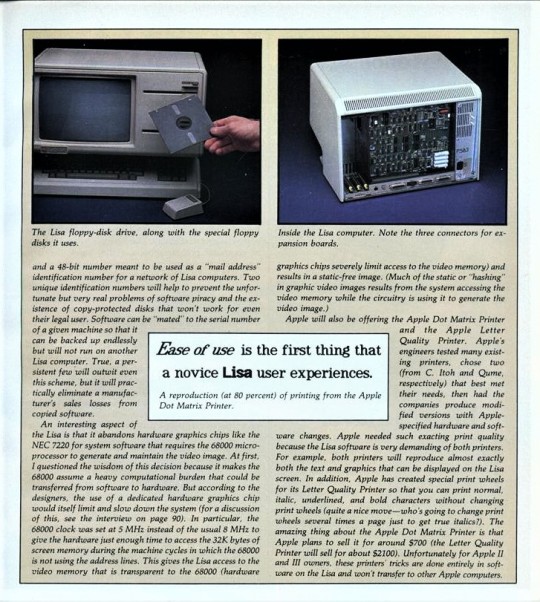

The Apple 871 disk drives (called “twiggy drives” inside the company) are significantly different from conventional floppy-disk drives. Each one uses a 6504 microprocessor as a “smart” interface between the drive and the Lisa. The drives use special high-density, double-sided floppy disks that have two oval cutouts in the jacket (see photo below). These cutouts are essential because the two disk heads, in addition to being on opposite sides of the flat magnetic media, are not pointed at each other with the media between them, as is the case in all other double-sided floppy-disk drives. Instead, a pad on the opposite side of the media presses the rotating media against each disk head, as is conventionally done with single-headed floppy disks.

Each formatted disk holds 860K bytes of information at a density of 62.5 tracks per inch; together the two drives (standard on the Lisa) hold 1.72 megabytes of data. Each drive also contains a mechanism that releases the disk for removal under program control, which prevents the user from removing a floppy disk prematurely. As with other Apple products, the floppy disks rotate only when the drives are reading or writing data, thus extending the lives of both the drives and the medium.

Apple has done several things to achieve its unusually high data density. The designers used an encoding scheme that keeps a constant data density of 10,000 bits per linear inch; this allows the outer floppy-disk tracks, which have a larger circumference, to store more data than the tracks nearest the center of the disk. In addition, the disk-access system software can move the disk heads in fractions of a track width to search for and find the middle of the track. That’s an important feature when you’re reading disks with small variations in track width.
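The effect of a constant linear density is easy to quantify: a track of radius r has circumference 2πr, so it holds roughly 2πr × 10,000 raw bits. The sketch below uses hypothetical track radii of 1 and 2 inches purely for illustration (the article gives no radii) and ignores formatting and encoding overhead.

```python
import math

BITS_PER_INCH = 10_000  # constant linear density quoted in the text

def bits_per_track(radius_inches):
    """Raw bits that fit on one circular track at the given radius."""
    return 2 * math.pi * radius_inches * BITS_PER_INCH

# Hypothetical inner and outer track radii, for illustration only.
for radius in (1.0, 2.0):
    print(f"r = {radius} in: about {bits_per_track(radius):,.0f} bits")
# r = 1.0 in: about 62,832 bits
# r = 2.0 in: about 125,664 bits
```

A track twice as far from the centre holds twice as many bits, which is the capacity a conventional fixed-bits-per-track scheme leaves unused on the outer tracks.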

In addition, the Lisa comes with one Profile (Apple’s 5-1/4-inch Winchester-type hard disk), which connects to the Lisa through its parallel port. It adds 5 megabytes of magnetic storage to the Lisa system and speeds up the overall operation of the system. Additional Profiles can be added via interface cards.

The Lisa computer is never really turned off. It stores “system preferences” (things like speaker volume and video contrast) and system-configuration information inside the computer. Even when it is turned “off,” it draws enough power to keep the clock/calendar and CMOS memory containing the above information working. When it’s unplugged (for example, when it’s being moved to another location), internal batteries preserve the clock/calendar status and CMOS memory for up to 20 hours.

The Lisa includes two programmable serial ports and one parallel port as well as three expansion-board slots, each of which connects directly to the system bus and has direct memory access (DMA) capabilities. Because none of these slots is filled in any “basic” configuration of the Lisa, they are available for future expansion (unlike the IBM Personal Computer’s five slots, most or all of which are used for much-needed video-display and memory cards). Other features include a built-in speaker and a real-time clock (which can be programmed to execute tasks or turn the computer itself on or off at a given time), a microprocessor-controlled detachable Selectric-style keyboard, and a mouse.

I must thank Apple for including something I’ve wanted to see for a long time: unique serial numbers encoded into memory. The Lisa has two of these: an actual serial number …

An interesting aspect of the Lisa is that it abandons hardware graphics chips like the NEC 7220 in favor of system software that requires the 68000 microprocessor to generate and maintain the video image. At first, I questioned the wisdom of this decision because it makes the 68000 assume a heavy computational burden that could be shifted from software to hardware. But according to the designers, a dedicated hardware graphics chip would itself limit and slow down the system (for a discussion of this, see the interview on page 90). In particular, the 68000 clock was set at 5 MHz instead of the usual 8 MHz. This gives the hardware just enough time to access the 32K bytes of screen memory during the machine cycles in which the 68000 is not using the address lines. As a result, the Lisa's access to video memory is transparent to the 68000 (hardware graphics chips severely limit access to the video memory), and the image is static-free. (Much of the static or “hashing” in graphic video images results from the system accessing the video memory while the display circuitry is using it to generate the video image.)

Apple will also be offering the Apple Dot Matrix Printer and the Apple Letter Quality Printer. Apple’s engineers tested many existing printers and chose two (from C. Itoh and Qume, respectively) that best met their needs. They then had the companies produce modified versions with Apple-specified hardware and software changes. Apple needed such exacting print quality because the Lisa software is very demanding of both printers. For example, both printers reproduce almost exactly the text and graphics that can be displayed on the Lisa screen. In addition, Apple has created special print wheels for its Letter Quality Printer. With them, you can print normal, italic, underlined, and bold characters without changing print wheels. That's quite a nice move: who’s going to change print wheels several times a page just to get true italics? The amazing thing about the Apple Dot Matrix Printer is that Apple plans to sell it for around $700 (the Letter Quality Printer will sell for about $2100). Unfortunately for Apple II and III owners, these printers’ tricks are done entirely in software on the Lisa and won’t transfer to other Apple computers.

Daily inspiration. Discover more photos at http://justforbooks.tumblr.com


iMRS Prime / iMRS 2000

The Life Mat Company's PEMF manufacturer of choice is Swiss Bionic Solutions, creators of our previous device, the iMRS, as well as the two major systems we now offer: the iMRS Prime (also known as the iMRS 2000) and the Omnium1.

Our primary selection criteria for the original iMRS 2000:

• Proven efficacy in a wide variety of consumers for a long period of time

• Safety of low-intensity PEMF fields

• Basic integrated bio-feedback to match the signal to the user's health status

• Simplicity of use

• Functions and accessories available in a variety of configurations

• Product dependability

• Warranty and service level

• A sizable market share and sales volume to finance ongoing research and product growth

There are many PEMF systems on the market, and we've owned and used several of the top brands. Other suppliers have approached us numerous times, but there are several significant reasons why we've stayed with Swiss Bionic.


The iMRS Prime expanded on all of this by including:

• A composite energy field combining PEMF and advanced Far Infrared.

• A sophisticated operating system with many input and output ports, offering much greater upgradeability than most PEMF systems.

• A versatile signal generator capable of producing almost any kind of PEMF signal.

• Advanced real-time biofeedback through heart rate variability and blood oxygen saturation to determine which settings are most effective and to monitor key health parameters over time.

• Quick Start programs giving immediate access to seven distinct experiences.

• Use by one or two people, with two or three applicators running at the same time.

• A localised Helmholtz Effect spot applicator.

• Significant enhancements to architecture, construction, and materials.

• Five tiers of systems, plus sets, packs, and bundles (described below), each with a unique collection of features.

The iMRS Prime's combination of technologies places it in a whole new category of PEMF device, well above standard PEMF and unlike anything else on the market today. The iMRS Prime Hybrid Set, in particular, is one of the most effective means of relaxation, rejuvenation, and stress reduction ever devised.

iMRS Prime Construction + Design

Every component and design detail on the iMRS Prime is of the highest quality. We searched, and there is nothing remotely similar on the market.

The quality shows in every detail:

• the tough angled glass of the touch-screen control unit

• the strong aluminum and resistant coating of the control unit and connector box

• the comfortable padding and tactile, washable surface of the mat and other applicators

• the indicator lights on every applicator

• the thick, medical-grade cables with their rubberized outer coating

• the easy-to-insert click plugs and sockets

• the simplicity of the overall design

It took five years to conceptualize and design the Prime. While developing the world's most sophisticated PEMF machine, Swiss Bionic realized that the user interface had to be as simple, relaxed, and adaptable as possible. As a lifestyle and health-care device, it also needed a sleek, futuristic look that would blend in with any interior.

They also had to source the finest materials and components available; anything less would jeopardize their goal of revolutionizing the PEMF industry. We believe they met their objectives.

Control Unit

The iMRS Prime is powered by a control unit with a touch-screen monitor housed in a solid aluminum casing and stand. It resembles an iMac but is compact enough to fit into a small shoulder bag.

Most of the system's features and settings are configured here. It has been built to be user-friendly, and you will be able to use it within minutes: a session can be started by pressing just two keys.

• Bronze titanium finish brushed aluminum case and pedestal

• 10.2" capacitive touch-screen monitor

• A single cord connects to the Connector Box.

• Two internal microphones

• Two USB ports (for upgrades, firmware updates and more)

• Two earphone jacks (you can use your own earphones instead of the Exagon Brain's if you prefer)

The iMRS Prime Connector Box functions as a hub, allowing for the use of more applicators. It attaches to the control unit with a single long cord, keeping it clutter-free.

It connects two sets of applicators, as well as pairs of bio-feedback and brain entrainment devices, and is made of the same brushed aluminum as the control panel.

• A microprocessor that can handle many inputs and outputs.

• Two digital-to-analogue converters to run several programs at the same time

• Six applicator ports (to run multiple applicators at the same time)

• 2 multi-pin connectors (for Exagon Sense devices)

• Two USB ports (for Exagon Brain devices)

• Medical-standard click-in applicator sockets

The iMRS Prime's main applicator is a cushioned, whole-body-length mat measuring 170 cm x 59 cm x 4.5 cm thick (68′′ x 23′′ x 1.75′′). It contains three pairs of copper coils. The field is lowest at the head end (17.50 microTesla) and highest at the feet (up to 45 microTesla).

Again, this is a significant upgrade over the mat that came with its predecessor, the iMRS. It is still made of a solid, sturdy, and washable material. However, it now has an embossed surface texture that makes it softer and more pleasant to lie on, even before the Far Infrared layer of the Hybrid version adds further comfort.

As with all of the Prime's applicators, it has a small LED on its edge. The LED changes color each time the field changes polarity (every two minutes), alternating between blue and green, and turns red if there is a fault. Because it is divided into three hinged sections, it folds and stores easily: it can be tucked into a corner, slid under a bed, or packed into an optional shoulder bag.

When you lie on the mat, it bathes the whole body and all of its internal structures in a pulsed electromagnetic field produced by a complex "sawtooth" signal. The mat's field is further modulated by a cycle of natural, Earth-based frequencies (determined by the time of day), including the Schumann Resonance.

Although the other applicators are useful for localized pain relief, tissue recovery, and stress management, regular sessions on the mat are central to using the iMRS for better fitness, recovery, and well-being. Mat sessions influence every system in the body, so they are beneficial for a wide range of health problems. They are especially useful for boosting energy levels and sleep quality, speeding up metabolism, and enhancing circulation, oxygenation, and neurological function.

In the iMRS Prime Hybrid kit, the regular PEMF mat is replaced by a combined PEMF and Far Infrared mat. An additional layer of carbon-fiber mesh makes the Hybrid mat the first of its kind to integrate a pure PEMF signal with next-generation FIR technology. The FIR field augments the effects of PEMF in re-energizing cells and opening cell membrane pathways.

Pad Applicator

Every iMRS Prime system includes a cushioned pad measuring 58 cm x 34 cm x 4 cm (23′′ x 13.5′′ x 1.5′′). The pad uses two of the same copper coils as the whole-body mat, but with a separate square-wave signal and a maximum strength of 65 microTesla. Its coverage area is larger than that of the previous iMRS pad. This makes it particularly useful for treating the entire back, as well as other main body regions such as the chest and abdomen, at higher intensities than you can use on the mat. The pad is particularly beneficial for:

• pain and discomfort in the shoulders and back

• tendinitis, bursitis, and plantar fasciitis

• myofascial injuries and sore muscles after physical exercise

• inflammation in large joints

• faster healing of bone fractures, cartilage, and muscle tears

It also supports the function of various organs in the body.

Spot Applicator

This unit, which is part of the top four levels of the iMRS Prime, builds on our experience with the Omnium1 and replaces the probes that came with the old iMRS and MRS2000 devices. The spot applicator has dimensions of 32 cm x 14 cm x 3 cm (17′′ x 5.5′′ x 1.25′′). It has two coils in two pads that are connected by a flat velcro-attached connector strap.

This enables it to focus on small areas of pain or dysfunction. You can either place a single coil on one side of the body or wrap both coils around a hand, foot, arm, shoulder, knee, elbow, wrist, or ankle. When the Spot is wrapped, the two coils face each other and produce a Helmholtz Effect (a homogeneous magnetic field), which doubles the effect of the PEMF field in that region. This concentrated strength can be especially beneficial for people living with chronic pain or dysfunction, and for those who suffer frequent injuries. And we all get hurt from time to time. For minor soft-tissue or joint trauma, the Spot's highest intensity can be remarkably effective. When it is applied right after the injury, it's not uncommon to see pain disappear within minutes, or at least within a day or two. Repairs to denser tissues, such as cartilage and bone fractures, can take longer but are usually much quicker than normal.
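The "doubling" described here follows from superposition: between two coaxial coils carrying current in the same direction, their fields add. The sketch below assumes ideal single-turn circular loops on a shared axis with the classic Helmholtz spacing (separation equal to the coil radius). The current and radius are hypothetical values, not the Spot's actual coil specifications.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability in T*m/A


def loop_axial_field(current_a: float, radius_m: float, z_m: float) -> float:
    """On-axis flux density (tesla) of one ideal circular loop, z_m metres from its centre."""
    return MU_0 * current_a * radius_m**2 / (2 * (radius_m**2 + z_m**2) ** 1.5)


def pair_axial_field(current_a: float, radius_m: float, separation_m: float, z_m: float) -> float:
    """On-axis field at position z_m from two coaxial loops centred at -d/2 and +d/2."""
    half = separation_m / 2
    # Distance from each loop's centre to the point z_m; the fields simply add.
    return (loop_axial_field(current_a, radius_m, z_m + half)
            + loop_axial_field(current_a, radius_m, z_m - half))


if __name__ == "__main__":
    current, radius = 0.1, 0.05  # hypothetical: 0.1 A through 5 cm loops
    centre = pair_axial_field(current, radius, radius, 0.0)
    single = loop_axial_field(current, radius, radius / 2)
    off_centre = pair_axial_field(current, radius, radius, 0.1 * radius)
    print(f"pair at centre: {centre * 1e6:.3f} uT")
    print(f"one coil alone: {single * 1e6:.3f} uT")
    print(f"relative change 0.1R along the axis: {abs(off_centre - centre) / centre:.2e}")
```

At the midpoint, the pair gives exactly twice what one coil contributes at that distance. Near the centre the field barely changes, which is the "homogeneous" region the text refers to.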


The Spot, like the pad, uses a square-wave signal and is usually run at much higher intensities than the mat. The mat and pad have maximum outputs of 45 and 65 microTesla (0.45 and 0.65 gauss), respectively. The Spot has a maximum output of 120 microTesla (1.2 gauss), making it the most powerful of the three iMRS Prime applicators.
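Since 1 gauss equals 10^-4 tesla, or 100 microTesla, the conversions quoted above are a simple division. Here is a minimal sketch using the maximum outputs given in the text:

```python
# 1 gauss = 1e-4 tesla = 100 microtesla
MICROTESLA_PER_GAUSS = 100.0


def microtesla_to_gauss(microtesla: float) -> float:
    """Convert a magnetic flux density from microtesla to gauss."""
    return microtesla / MICROTESLA_PER_GAUSS


# Maximum applicator outputs as stated in the text, in microtesla.
MAX_OUTPUT_UT = {"mat": 45.0, "pad": 65.0, "spot": 120.0}

if __name__ == "__main__":
    for name, ut in MAX_OUTPUT_UT.items():
        print(f"{name}: {ut:g} uT = {microtesla_to_gauss(ut):g} G")
```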

The Exagon Sense

Although it is an optional accessory, the Exagon Sense is one of the most important ways of getting the most out of the iMRS Prime. The Sense is an optical measurement unit that attaches to your finger and monitors your Heart Rate Variability (HRV, the fluctuation in the time interval between successive heartbeats).

With this information, the Sense turns the entire iMRS Prime system into a one-of-a-kind bio-feedback loop. The Sense software and hardware are much more sophisticated than the iMORE device and the old iMRS system. For the first time, it is possible to:

• fine-tune the PEMF field to meet the user's evolving needs

• store the data for up to a year

• analyze the data using specialized HRV tools

As a result, the autonomic nervous system (ANS) is tuned up during each session. As HRV gradually improves over time (this is not a quick fix), the body is pushed toward its maximum capacity for responding to stress. This enhances its ability to regenerate itself and re-establish health and well-being.
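The text doesn't say which HRV metric the Exagon Sense calculates. As an illustration only, here is RMSSD (root mean square of successive differences), one widely used time-domain HRV measure. It is computed from beat-to-beat (RR) intervals in milliseconds, and the sample intervals are made up.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals, in ms."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Difference between each pair of consecutive beat-to-beat intervals.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    sample = [800, 810, 790, 800]  # hypothetical RR intervals in ms
    print(f"RMSSD = {rmssd(sample):.2f} ms")
```

A perfectly regular heartbeat gives 0. More beat-to-beat variation gives a larger value.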

The Sense also measures the amount of oxygen in your blood (SpO2). Its optical sensor detects changes in the color of the blood, which indicate the level of oxygen saturation. A typical reading ranges from 95 to 100 percent (the share of haemoglobin that is oxygenated). PEMF helps to boost circulation, and during an iMRS Prime session blood oxygen levels can increase by 2-3 percentage points. The Exagon Sense lets you see the size of this increase during each session.
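SpO2 is defined as oxygenated haemoglobin as a percentage of total (oxygenated plus deoxygenated) haemoglobin. Real pulse oximeters estimate it indirectly from how the blood absorbs red and infrared light. The sketch below therefore shows only the definition and the "percentage points" arithmetic, and the input values are hypothetical.

```python
def spo2_percent(oxy_hb: float, deoxy_hb: float) -> float:
    """Oxygen saturation: oxygenated haemoglobin as a share of total haemoglobin, in percent."""
    total = oxy_hb + deoxy_hb
    if total <= 0:
        raise ValueError("total haemoglobin must be positive")
    return 100.0 * oxy_hb / total


def is_typical(spo2: float) -> bool:
    """True if the reading falls in the typical 95-100 percent range quoted in the text."""
    return 95.0 <= spo2 <= 100.0


if __name__ == "__main__":
    before = spo2_percent(95.0, 5.0)  # hypothetical relative amounts
    after = spo2_percent(97.5, 2.5)
    # A rise from 95% to 97.5% is 2.5 percentage points, not 2.5 percent.
    print(f"{before:.1f}% -> {after:.1f}% (+{after - before:.1f} points)")
```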


The Exagon Brain

The Sound and Light system on the iMRS Prime is the ideal complement to both the main system and the Exagon Sense. The Exagon Brain works like a spa for the brain, relieving tension and improving emotional balance, mental concentration, and clarity.

While the mat bathes the whole body in a rejuvenating field, the Exagon Brain augments its effects by promoting deeper relaxation and helping you sleep more deeply and restoratively.

In contrast to the longer-term effects of the Sense, the Brain works quickly, normally providing rapid relief. This is significant because many scientific studies have found that stress is a major contributor to most serious diseases.

The Brain device consists of a very comfortable visor with an elastic headband and cushioned inner edges, which connects to the connector box via a USB plug. You can then plug your own headphones into the built-in music player, or we can advise you on suitable models.

The Brain integrates four distinct modalities:

• Light-based brain entrainment (blinking lights) – helps the brain move into Alpha and Theta brainwave states, which are associated with deep learning, imagination, visualization, and concept development.

• Binaural beats brain entrainment – a decades-old method of brain entrainment used in healing, meditation, and learning.

• Music therapy – build a music library by using the built-in music programs or downloading your favorite songs. We will provide you with music playlists for various reasons such as relaxation, therapy, studying, and so on.

• Color therapy – choose almost any color from the light spectrum to be emitted by the LED lamps. Different colors have been found to affect mood, energy levels, enthusiasm, and cognitive ability in various ways.

iMRS Prime / iMRS 2000 Systems

iMRS Prime Basic

An entry-level version that includes only the control unit, connector box, mat, and pad, along with the intensity settings, Quick Start programs, and 1-60 minute timer. It does not include the most powerful applicator, the Spot.

iMRS Prime Advanced

Everything in the Basic, plus the Spot applicator: a smaller but more powerful double pad for localized pain relief and tissue healing. It's particularly helpful for people who suffer from chronic pain or dysfunction, but it's also handy to have in case you hurt yourself.

iMRS Prime Expert

• The (updated) iGuide database, covering hundreds of health problems with the optimal settings for supporting the body in each case. It is particularly helpful for therapists and hospitals, but is also used by many home users.

• Split Mode, which enables the use of two applicators at the same time. For example, one person can use two Spots on both ankles. Alternatively, two people can use two separate pads, two Sense sensors, and two Sound and Light systems, each with its own settings.

Please keep in mind that while the Prime Basic, Advanced, and Expert levels can be upgraded later, the Prime Hybrid and Trial cannot. They must be chosen when you place your initial order.

iMRS Prime Hybrid

Everything in the Expert system, plus a new type of mat that combines PEMF with carbon-fiber-based Far Infrared. The sensation produced by these combined technologies is similar to floating. Add Heart Rate Variability and Sound + Light (in the iMRS Prime Hybrid Set), and the feeling of deep calm and recovery is likely to be unlike anything you've experienced. At the Life Mat Company, we call this the Bliss Package!

iMRS Prime Trial

This top-tier system contains everything in the Expert and Hybrid systems, as well as a modern signal generator. It is designed for clinicians and academics, who can use it to replicate and invent PEMF signals used in laboratory studies. The machine's Heart Rate Variability measurement and storage features then provide a helpful way of tracking the body's responses and progress in response to these new signals. The iMRS Prime Trial can produce any combination of waveforms, frequencies, pulsing, and intensities up to 1000 microTesla. That is still relatively low compared with some other systems, but far higher than we recommend for regular home use. Purchasing and using this system or set requires training and qualification.

Overall, we believe the iMRS Prime Trial's mix of functions has the potential to become a reference standard for PEMF research. It could help the field achieve standardised, replicable signal parameters and measure their effects using Heart Rate Variability, a widely accepted diagnostic standard.

Except for the Trial version, every level of the iMRS Prime uses the same triple sawtooth and square waves, and the same nature-based frequencies and intensities, as the original iMRS, the MRS2000, and now the Omnium1. When the body-length mat is in use, the control unit adjusts frequencies according to the time of day. It follows the body's circadian rhythms, the time-of-day organ clock used in Traditional Chinese Medicine, and the different brainwaves dominant at different times of day.

Costs

Our manufacturer sets the prices for us and all other sellers. If you see a cheaper price anywhere, it is probably a scam and is usually a second-hand unit, because the manufacturer will only complete a sale at the official price for that country and currency. The key differences between distributors are not price or the availability of local support. They are levels of expertise and competence, and the quality of consultation and training. The destination country determines the price and currency used. Most European prices are in euros, and we have local-currency prices for the United States, Canada, Hong Kong, and Singapore. Most of the rest of the world is priced in US dollars.

In the United Kingdom, iMRS Prime systems start at £2520 for the Basic model, rising to £4646 for our favourite, the iMRS Prime Hybrid Set, and £5377 for the iMRS Prime Trial Set tailored for therapists and researchers. We ship internationally; for a complete price list for any country, please call us at 1-800-900-5556 X 1. Please keep in mind that, unless you are VAT-exempt, these prices are subject to 20% VAT, which the courier will collect for you at UK customs.

Please keep in mind that we often have exclusive deals offering by far the best prices on selected systems. These are normally shown on the Special Deals tab, but if you call us, we can check what is available and answer any questions you might have.

Another very cost-effective way to buy an iMRS Prime is to order the Exagon Sense and Brain at the same time, which gives you a heavily discounted Prime Package. For further details on sets, see the section below.

Sets for iMRS Prime

Any of these main systems can also be upgraded to an iMRS Prime Set (at a significant discount if the set is part of your original order). Each set includes both the Exagon Sense and the Exagon Brain. These are much more sophisticated versions of the iMORE and iSLRS systems that were previously available for the iMRS.

Both the Sense and the Brain are well worth considering for their potential to relieve discomfort (and thereby deepen the impact of the PEMF field), and the Sense also helps determine which settings work best for the user. The combined discount from buying them as a package is so significant that it's often an easy decision to make this your first upgrade.

It's worth noting that the "i" (for intelligent) in iMRS or iMRS 2000 refers to the Exagon Sense connection. This connection turns the whole device into an automated, continuous bio-feedback loop. The user's heart rate variability measurement forms one end of the loop, and the mat's PEMF field intensity forms the other.


Packs and Bundles for iMRS Prime

Packs and bundles of iMRS Prime systems and sets are also available. Here are two examples:

Split Mode, which is now available on the iMRS Prime Expert, Hybrid, and Trial models, allows you to run a second set of applicators and accessories from a single control unit and connector box.

This lets more than one person have a session at the same time, which can be very helpful at certain times of day, especially before bedtime. It also allows a single person to use multiple applicators at the same time, which is particularly useful for abdominal therapies and localized pain and dysfunction.

We are often asked whether there are additional savings when ordering several systems and packs. These are usually linked orders placed during the same month by a group of family members, friends, or colleagues, or by one person who wishes to give systems to those close to them.

If you want a quote for a double pack or a family package, please contact us.

Frequently Asked Questions About the iMRS Prime or iMRS 2000

How much does delivery cost and how long does it take?

We offer attractive shipping rates for the iMRS Prime to the United Kingdom and Europe through a variety of carriers. DHL is our long-term partner. Shipping costs £65 to the UK, €30 to EU countries, and €70 to non-EU European countries. Delivery normally takes three to four days for EU countries and around a week for the rest of the world.

Shipping to the United Kingdom has become less predictable. The pandemic has put a strain on global transport, and post-Brexit shipping and customs have made UK deliveries more unreliable still. DHL deliveries to the UK have been getting steadily slower since January 2021 and can now take several weeks. We have since tried other carriers, including UPS and FedEx, but the cheapest we've found so far is DPD, which costs £85 and takes 4-5 days on average.

We also ship internationally and provide global service. If you live outside of the UK or the EU, please contact us for shipping rates to your area.

Rates to non-EU European countries, the Middle East and Africa, North and South America, and Asia differ greatly, and we sometimes use another shipper, such as FedEx, depending on the region.

We always ship from the factory closest to you and look for the cheapest option available, so delivery should cost no more than if you bought from a local distributor.

Is it easy to use?

With so many on-screen buttons, the control unit can seem intimidating at first, but it's really quite easy to use. To switch it on and start a session with the default settings, you only need to press two keys.

It takes just three more button presses to set the strength, duration, and applicator to suit your needs. At the Prime Expert level, you can simply select the health issue you want to support from the iGuide database.

The Trial level of the iMRS Prime is certainly much more complex. It can modify the basic PEMF signal in a variety of ways and requires some in-depth training (we have video modules for this).

For the typical user, however, the only real complexity lies in knowing how to use the device for their particular needs, and we give every customer guidance and instruction on this.

How transportable is it?

The iMRS Prime Hybrid Set as a whole weighs just 11 kilograms and can be comfortably transported in its optional travel bags (one for the mat, another for the control unit, connector box and accessories).

The mat is divided into three jointed sections, which removes the guesswork of where to fold it and lets it fold easily into a zig-zag for packing or storage.

Is Graduated Intensity Used in the iMRS Prime?

Yes. It has eight different intensity ranges. These run from a very low 0.09 microTesla on the sensitive setting with the mat to a relatively high 120 microTesla on the 400 setting with the Spot. At the same intensity setting, the field is stronger with the Spot than with the pad or the mat.

The mat is also graduated. It has a lower field at the head-end panel (due to fewer copper coil windings) and a higher field at the feet-end panel.

Is there a Bio-Rhythm Clock?

Yes. The iMRS Prime sets a different output for each six-hour period of the day, following the dominant brainwave frequencies of the circadian rhythm. The output is energetic and alert in the early morning and shifts steadily to tired and calm at night. See the section on frequencies below for more details.

The clock setting may be overridden at any moment to meet particular needs, such as staying alert and energized for late-night work or assisting with jet lag. You can also select whether you want to be alert, energized, calm, or tired by using the Quick Start programs, which are accessible by pressing a single on-screen button.

What type(s) of signal(s) does it employ?

The iMRS Prime uses a sawtooth signal on the main mat and a square-wave signal on the local applicators (the pad and the Spot). The only exception is when the mat is used with the Solfeggio Quick Start program. To produce the nine Solfeggio frequencies in their purest form, this Quick Start program uses a sinusoidal wave that delivers each frequency in sequence.

The iMRS Prime Trial edition also includes a signal generator that can produce a wide range of waveforms, frequencies, pulse rates, and intensities, so it can reproduce almost any signal. It is primarily designed as a standardised piece of research equipment for PEMF clinical trials.

What kind of coils does it have?

The iMRS Prime / iMRS2000 has three pairs of solid copper coils running the length of the mat, one pair in the pad, and a copper coil tightly wound around a ferrous core within the probe. The coils inside the mat and pad are surrounded by plenty of padding. This protects the coils from metal fatigue and makes the mat as comfortable as possible to lie on.


What do I do if I am too tall for the mat?

It is true that if you are taller than 1.7 meters (5′ 7′′), your whole body will not fit on the iMRS Prime mat. However, the mat's design is partly a compromise between size and portability: it is the right length to fold into three and fit into a manageable shoulder bag. Also keep in mind that the PEMF field radiates from the coils within the mat in all directions.

Even if your feet (which are generally less important than your brain!) are dangling off the end, they still receive the field from the mat. If you have a specific problem with your feet, you would usually treat them separately with the pad. For something as basic and common as poor circulation in the feet, simply lying on the mat will improve the whole cardiovascular system.

Does it use frequencies and intensities derived from the Earth?

Yes. Here's how it works:

Intensities: The iMRS mat is designed to mimic what is found in nature. Its field ranges from 0.09 to 45 microTesla, depending on the strength setting selected and the part of the mat (highest at the feet, lowest at the head). For comparison, Earth's magnetic field averages about 50 microTesla, ranging from around 66 microTesla at the north and south poles to about 26 microTesla at the equator.

We are often asked, "Doesn't this mean that the iMRS field is abnormally high when using the pad or Spot?" (these reach peaks of 65 and 120 microTesla, respectively). The answer is that matching nature's field intensities is only important when using the mat to treat the whole body.

Only the strongest intensities of the Spot actually exceed the range of the Earth's magnetic field, and the Spot is only used for localized therapies. Furthermore, the highest intensities are mainly used for acute pain and tissue injury rather than on a regular basis.

Unless the individual is very sensitive to electromagnetic fields, even the highest intensities are unlikely to cause any problems, at least when used occasionally. If they do, or if you have any reservations about using them, you can simply turn them down, even to levels that are a tiny fraction of the Earth's magnetic field.

Frequencies: The iMRS Prime produces its frequencies using pulse packages of time-varying electromagnetic impulses. Like the intensities, these frequencies are chosen to correspond to what is found in nature. The selection draws particularly on research describing a "Biological Window," a frequency band ranging from 0.5 to 30 Hz (cycles per second).

In the iMRS Prime / iMRS 2000, this has been narrowed to a small range. It stays well within the frequencies present in the Earth's magnetic field and atmosphere (including the Schumann Resonances, which peak at 7.8 Hz) and in human brainwaves. The iMRS Prime's "Organ Clock" cycles through different brainwave frequencies at different times of day:

• Morning: Beta, 15 Hz

• Afternoon: Alpha, 5.5 Hz

• Evening: Theta, 3 Hz

• Night: Delta, 0.5 Hz
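The time-of-day cycle can be pictured as a simple lookup table. The text says the output changes every six hours but doesn't give exact start times, so the 00:00/06:00/12:00/18:00 boundaries below are assumptions. The band names and frequencies are copied from the list as given.

```python
from typing import List, Tuple

# Hypothetical six-hour blocks (start hour, end hour, band, Hz);
# only the band/frequency pairs come from the text.
SCHEDULE: List[Tuple[int, int, str, float]] = [
    (0, 6, "Delta", 0.5),    # night
    (6, 12, "Beta", 15.0),   # morning
    (12, 18, "Alpha", 5.5),  # afternoon
    (18, 24, "Theta", 3.0),  # evening
]


def clock_setting(hour: int) -> Tuple[str, float]:
    """Return (band, frequency in Hz) for an hour of the day, 0-23."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    for start, end, band, hz in SCHEDULE:
        if start <= hour < end:
            return band, hz
    raise RuntimeError("schedule does not cover every hour")


if __name__ == "__main__":
    for h in (3, 9, 15, 21):
        band, hz = clock_setting(h)
        print(f"{h:02d}:00 -> {band} {hz} Hz")
```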

Another goal is to use frequencies that cause resonant effects within the cells. Cell membrane receptors react to frequencies they recognize and begin to resonate with them. Different types of cells and processes have different resonance frequencies:

• 2 Hz: nerve regeneration
• 1-5 Hz: rejuvenation
• 7 Hz: bone growth
• 0.5-15 Hz: brain cells
• 10 Hz: ligaments
• 12-20 Hz: fibroblasts
• 20 Hz: blood circulation and oxygenation
• 25-30 Hz: metabolic stimulation
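The frequency list above can be treated as a small table of bands. As a minimal sketch (the `RESONANCE_BANDS` dictionary and `bands_for` helper are hypothetical names, and single frequencies are stored as zero-width ranges), this Python snippet returns every listed band that contains a given frequency. It only restates the figures from the list; it does not test them.

```python
# Bands from the list above, in Hz. Single values are zero-width ranges.
RESONANCE_BANDS = {
    "nerve regeneration": (2.0, 2.0),
    "rejuvenation": (1.0, 5.0),
    "bone growth": (7.0, 7.0),
    "brain cells": (0.5, 15.0),
    "ligaments": (10.0, 10.0),
    "fibroblasts": (12.0, 20.0),
    "blood circulation and oxygenation": (20.0, 20.0),
    "metabolic stimulation": (25.0, 30.0),
}


def bands_for(frequency_hz: float) -> list[str]:
    """List every band whose range contains frequency_hz, in table order."""
    return [
        name
        for name, (low, high) in RESONANCE_BANDS.items()
        if low <= frequency_hz <= high
    ]


print(bands_for(10.0))  # ['brain cells', 'ligaments']
print(bands_for(20.0))  # ['fibroblasts', 'blood circulation and oxygenation']
```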

Is the iMRS identical to the iMRS2000?

This is a frequently asked question. Despite many online references, “iMRS2000” or “iMRS 2000 Prime” is simply an American marketing term for the original product name, the iMRS or iMRS Prime. The name blends those of two real products: the earlier MRS2000 device and the iMRS, which replaced it in 2010.

The MRS2000, which was discontinued when the iMRS was introduced, had a much bigger and heavier control unit, as well as a pad that was much bulkier and harder to fold. The letter "i" added to the front of the product name (iMRS) indicated that it was now an "intelligent" machine, capable of interacting with body readings through the iMORE interface (now the Exagon Sense connection in the iMRS Prime) in a continuous bio-feedback loop.

Regardless of where you buy it, and even if the machine is sold as an iMRS 2000 Prime, you will receive the latest iMRS Prime model.

How long does the warranty last?

All of the major iMRS Prime systems come with a three-year worldwide warranty that covers complete exchange of the control unit and applicators, as well as free return delivery to the customer. Accessories are covered for six months. An extended warranty providing the same level of cover on the main device is also available for an additional two years at £379.00.

Is the iMRS Prime a well-made and dependable product?

Swiss Bionic Solutions, our manufacturer, is an ISO-certified company: its PEMF products are CE certified, and it undergoes routine external audits of its manufacturing and documentation. The iMRS is made of high-quality, long-lasting materials and is assembled in compliance with the EC directive for medical devices. In fact, it most likely holds more robust regulatory and quality certifications than any other home-use PEMF product on the market, and this gap is expected to grow significantly in the near future. We see very few maintenance requests.

How Long Will An iMRS Prime Be Expected To Last?

Based on our experience with previous iMRS and MRS2000 devices, an iMRS Prime system can last for several years of regular daily use. This is underlined by the fact that SBS (Swiss Bionic Solutions) offers up to a 5-year warranty on it. We have an MRS2000 machine (the predecessor to the iMRS) that has been running for over ten years without ever needing repairs. Moreover, even though the MRS2000 was phased out of manufacturing in 2011, SBS continues to provide support when an issue arises. There are no moving parts, and there is virtually no need for repair or re-calibration.

The Prime has two-way communication between the applicators and the connection box: if there is a problem with an applicator, the device processor detects it and the light indicator on that applicator turns red to alert you. Finally, the iMRS Prime can easily be upgraded via remote updates to higher product levels or new firmware, which gives it a high level of "future-proofing" as new programs are created.

Is it possible for my pets to use the iMRS?

Many families share their iMRS system with their dogs, putting a pet blanket on top to keep the applicators clean and unmarked. Large dogs will use the pad on their own, while small dogs and cats will often snuggle up to their owners. Some people have so many dogs, and are so concerned with their wellbeing, that they prefer to have a separate device just for the pets (this is uncommon!).

Localized issues, such as sore knees, are better handled with the spot applicator, and sitting on the pad may be particularly helpful for the smallest animals. We make an equine variant for bigger animals like horses and cows, which connects the control box to two wide panels, one on each side of the body. There are so many ways these programs can benefit your pet's general health and well-being that you're sure to save money on vet bills. However, keep in mind that, particularly if the pet has a serious health problem, you should seek the approval of a veterinarian before treating the pet yourself.

Is it safe to use while driving?

The iMRS Prime has much more complex functions than comparable devices, and these require a different form of power input. Use in a car is one of the applications that requires an Omnium1 device with a large internal battery.

Some of our customers do this to make the most of their treatment time, especially when the driver has back pain or needs extra energy on a long trip. Obviously, the mat cannot be used on a car seat, but if properly set up, the pad or spot can be used behind the driver's back, and in a variety of ways for passengers. Some families even use it regularly to treat their children on the way to and from athletic practice and games.

Specifications for the iMRS Prime System

On the mat and pad, field intensities range from 0.09 to 65 microTesla (0.65 gauss). With the spot applicator, you can achieve up to 120 microTesla (1.2 gauss).

The mat has a graduated intensity: it is three times stronger at the feet than at the head.

The frequency spectrum is 0.1 to 32 hertz.

Biorhythm frequencies: 0.5 Hz, 3 Hz, 7.5 Hz and 15 Hz.

Waveform: sawtooth on the full-body mat; square wave on the pad and spot applicators.

Every two minutes, the polarity is switched.

Sound and light frequencies match those of the mat and are synchronized with it for optimum effect.

Power supply: auto-adjusting, 100 to 240 volts alternating current.

Measurements:

Control unit: 26 cm (W) x 17 cm (H) x 15 cm (D).

Connector box: 16 cm x 16 cm x 4 cm.

Full-body mat: 170 cm x 59 cm x 4.5 cm.

Pad: 58 cm x 33 cm x 4 cm.

Spot: 32 cm x 14 cm x 3 cm.

System weight: roughly 11 kg (iMRS Prime Hybrid Set).

Shipping dimensions: 38 cm (W) x 62 cm (H) x 62 cm (D).
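Several of the specifications above combine into a simple field model: the mat's graduated intensity, its sawtooth waveform, the square wave on the pad and spot, and the polarity switch every two minutes. The Python sketch below is a simplified illustration only. It assumes a linear head-to-feet gradient (the specifications give only the 3:1 end-to-end ratio), uses normalized unipolar waveforms in arbitrary units, picks 2 Hz purely as an example frequency within the stated 0.1 to 32 Hz range, and ignores the pulse packages the device actually emits. All function names are hypothetical.

```python
MAT_LENGTH_CM = 170.0      # full-body mat length from the specifications
FEET_TO_HEAD_RATIO = 3.0   # mat is three times stronger at the feet
POLARITY_PERIOD_S = 120.0  # polarity switches every two minutes


def relative_intensity(distance_from_head_cm: float) -> float:
    """Intensity relative to the head end (1.0), ASSUMING a linear gradient."""
    if not 0.0 <= distance_from_head_cm <= MAT_LENGTH_CM:
        raise ValueError("position must lie on the mat")
    fraction = distance_from_head_cm / MAT_LENGTH_CM
    return 1.0 + (FEET_TO_HEAD_RATIO - 1.0) * fraction


def polarity(t_s: float) -> int:
    """+1 during even two-minute intervals, -1 during odd ones."""
    return 1 if int(t_s // POLARITY_PERIOD_S) % 2 == 0 else -1


def sawtooth(t_s: float, freq_hz: float) -> float:
    """Unipolar ramp from 0 up to 1 once per cycle (mat waveform)."""
    return (t_s * freq_hz) % 1.0


def square(t_s: float, freq_hz: float) -> float:
    """1 for the first half of each cycle, 0 for the second (pad/spot waveform)."""
    return 1.0 if (t_s * freq_hz) % 1.0 < 0.5 else 0.0


def mat_sample(distance_from_head_cm: float, t_s: float, freq_hz: float) -> float:
    """Normalized mat field at one position and time (arbitrary units)."""
    return (
        relative_intensity(distance_from_head_cm)
        * polarity(t_s)
        * sawtooth(t_s, freq_hz)
    )


def applicator_sample(t_s: float, freq_hz: float) -> float:
    """Normalized pad/spot field at time t_s (arbitrary units)."""
    return polarity(t_s) * square(t_s, freq_hz)


print(mat_sample(170.0, 0.25, 2.0))   # 1.5: feet end, mid-ramp, first interval
print(applicator_sample(130.0, 2.0))  # -1.0: square wave high, polarity reversed
```

Swapping the linear gradient for a stepped one (for example, separate coil zones) would only change `relative_intensity`; the waveform and polarity logic stay the same.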

For more details, follow our official page:

https://imrs2000.com/

Additional Resources:

https://en.wikipedia.org/wiki/Pulsed_electromagnetic_field_therapy

https://www.youtube.com/watch?v=QIMc5mCHG6I


Prototype microprocessor with substantial energy savings potential

- By Akiko Tsumura, Yokohama National University -

Researchers from Yokohama National University in Japan have developed a prototype microprocessor using superconductor devices that are about 80 times more energy efficient than the state-of-the-art semiconductor devices found in the microprocessors of today's high-performance computing systems.

As today's technologies become more and more integrated into our daily lives, the need for computational power keeps increasing, and the energy consumed by that computing power is growing immensely. For example, modern-day data centers use so much energy that some are built near rivers so that the flowing water can be used to cool the machinery.

"The digital communications infrastructure that supports the Information Age that we live in today currently uses approximately 10% of the global electricity. Studies suggest that in the worst case scenario, if there is no fundamental change in the underlying technology of our communications infrastructure such as the computing hardware in large data centers or the electronics that drive the communication networks, we may see its electricity usage rise to over 50% of the global electricity by 2030," says Christopher Ayala, an associate professor at Yokohama National University, and lead author of the study.

The team's research, published in the IEEE Journal of Solid-State Circuits, details an effort to develop a more energy-efficient microprocessor architecture using superconductors, devices that are incredibly efficient but must be cooled to extremely low temperatures to operate.

To tackle this power problem, the team explored the use of an extremely energy-efficient superconductor digital electronic structure, called the adiabatic quantum-flux-parametron (AQFP), as a building block for ultra-low-power, high-performance microprocessors, and other computing hardware for the next generation of data centers and communication networks.

"In this paper, we wanted to prove that the AQFP is capable of practical energy-efficient high-speed computing, and we did this by developing and successfully demonstrating a prototype 4-bit AQFP microprocessor called MANA (Monolithic Adiabatic iNtegration Architecture), the world's first adiabatic superconductor microprocessor," said Ayala.
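For readers unfamiliar with how such a chip computes: AQFP logic is usually built from three-input majority gates and inverters rather than conventional AND/OR gates. The Python sketch below shows, under that assumption, how a 4-bit adder can be composed from majority gates alone. It is a generic illustration with hypothetical function names, not MANA's actual datapath, and it ignores the multi-phase clocking and path balancing that real AQFP circuits need.

```python
def maj(a: int, b: int, c: int) -> int:
    """Three-input majority gate: 1 when at least two inputs are 1."""
    return 1 if a + b + c >= 2 else 0


def inv(a: int) -> int:
    """Inverter."""
    return 1 - a


def full_adder(a: int, b: int, cin: int) -> tuple[int, int]:
    """Full adder built only from majority gates and inverters."""
    cout = maj(a, b, cin)
    s = maj(inv(cout), cin, maj(a, b, inv(cin)))
    return s, cout


def add4(x: int, y: int) -> tuple[int, int]:
    """Add two 4-bit values; return (4-bit sum, carry out)."""
    carry = 0
    result = 0
    for i in range(4):  # ripple from the least significant bit
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result, carry


print(add4(0b0101, 0b0011))  # (8, 0): 5 + 3
print(add4(0b1111, 0b0001))  # (0, 1): 15 + 1 overflows into the carry
```

The sum expression `maj(inv(cout), cin, maj(a, b, inv(cin)))` is a known majority-logic identity for XOR of three bits; the exhaustive check over all 4-bit inputs confirms it.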

Image: AQFP MANA microprocessor die photo. MANA is the world's first adiabatic superconductor microprocessor. Credit: Yokohama National University.

"The demonstration of our prototype microprocessor shows that the AQFP is capable of all aspects of computing, namely: data processing and data storage. We also show on a separate chip that the data processing part of the microprocessor can operate up to a clock frequency of 2.5 GHz making this on par with today's computing technologies. We even expect this to increase to 5-10 GHz as we make improvements in our design methodology and our experimental setup," Ayala said.

However, superconductors require extremely low temperatures to operate. One would think that once the cooling required for a superconductor microprocessor is factored in, its energy requirement would become undesirable and exceed that of current-day microprocessors. But according to the research team, surprisingly, this was not the case:

"The AQFP is a superconductor electronic device, which means that we need additional power to cool our chips from room temperature down to 4.2 Kelvin to allow the AQFPs to go into the superconducting state. But even when taking this cooling overhead into account, the AQFP is still about 80 times more energy-efficient when compared to the state-of-the-art semiconductor electronic devices found in high-performance computer chips available today."
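The cooling argument can be made concrete. Removing heat at a cold temperature T_c and rejecting it at room temperature T_h costs at least (T_h - T_c)/T_c joules of work per joule removed (the Carnot limit), so the total energy per operation is the chip's energy multiplied by (1 + overhead). The Python sketch below applies this to the 1.4 zJ/op figure from the study's title. The 300 K ambient temperature and the 1000 W/W practical overhead are assumptions for illustration, and the article does not give the semiconductor baseline behind its 80x comparison.

```python
AMBIENT_K = 300.0            # assumed room temperature
CHIP_K = 4.2                 # operating temperature quoted in the article
DEVICE_ENERGY_J = 1.4e-21    # 1.4 zJ/op, from the study's title
PRACTICAL_OVERHEAD = 1000.0  # assumed W of cooling input per W removed at 4.2 K


def carnot_overhead(t_hot_k: float, t_cold_k: float) -> float:
    """Minimum work (J) needed to pump 1 J of heat from t_cold_k up to t_hot_k."""
    return (t_hot_k - t_cold_k) / t_cold_k


def energy_with_cooling(device_energy_j: float, overhead: float) -> float:
    """Energy per operation including the refrigerator work to remove it."""
    return device_energy_j * (1.0 + overhead)


ideal = carnot_overhead(AMBIENT_K, CHIP_K)  # about 70.4
print(f"Carnot overhead at {CHIP_K} K: {ideal:.1f} J per J removed")
print(f"Ideal cooling:         {energy_with_cooling(DEVICE_ENERGY_J, ideal):.2e} J/op")
print(f"Assumed 1000x cooling: {energy_with_cooling(DEVICE_ENERGY_J, PRACTICAL_OVERHEAD):.2e} J/op")
```

Even with the assumed 1000x overhead, the result stays around 1.4e-18 J per operation; whether that beats a given semiconductor chip depends on a baseline the article does not state.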

Now that the team has proven the concept of this superconductor chip architecture, they plan to optimize the chip and then determine its scalability and speed.

"We are now working towards making improvements in the technology, including the development of more compact AQFP devices, increasing the operation speed, and increasing the energy-efficiency even further through reversible computation," Ayala said. "We are also scaling our design approach so that we can fit as many devices as possible in a single chip and operate all of them reliably at high clock frequencies."

In addition to building standard microprocessors, the team is also interested in examining how AQFPs could assist in other computing applications such as neuromorphic computing hardware for artificial intelligence as well as quantum computing applications.

--

Source: Yokohama National University

Full study: “MANA: A Monolithic Adiabatic iNtegration Architecture Microprocessor Using 1.4-zJ/op Unshunted Superconductor Josephson Junction Devices”, IEEE Journal of Solid-State Circuits.

https://doi.org/10.1109/JSSC.2020.3041338


M4 Delayed for Apple Mac Studio and Mac Pro Until Mid-2025

Apple’s custom-designed M-series processors, which offer amazing performance and efficiency, have completely changed Mac computers. Just as fans wait impatiently for the next iteration, however, there seems to be an unexpected delay for the high-end Mac Pro and Apple Mac Studio. This is an overview of the M4 chip’s capabilities and the reasons these powerful machines won’t get it until mid-2025.

The M-Powered Mac Ecosystem: An Unqualified Triumph

Since the release of the M1 chip in 2020, Apple has switched all of its Mac models to in-house silicon. Compared with Intel processors, the M1, M1 Pro, M1 Max, and M2 chips have consistently impressed with their power efficiency and performance gains. This change has cemented Apple’s status as a pioneer in personal computer chip design.

The M4 on the Horizon

Based on Apple’s usual yearly release cycle, rumours suggest that the M4 processor may be released in late 2024, possibly alongside new MacBook models. This next-generation chip is expected to bring significant performance gains over the M2, especially in core count, clock speeds, and graphics processing.

The M2 Remains in Place

According to a recent report by Mark Gurman of Bloomberg, the Mac Pro and Apple Mac Studio may see an unexpected delay, even though the M4 is ready to power the upcoming generation of mainstream Macs. These high-performance machines, which currently use the M2 Max and M2 Ultra chips, won’t receive the M4 update until mid-2025.

This report has sparked discussion about Apple’s approach to its professional Mac lineup. The following are a few possible explanations for the wait:

M2 Optimisation for Complex Workflows

The M2 Max and M2 Ultra chips can already handle professional workloads such as scientific computing, video editing, and 3D rendering with remarkable speed. Apple may be concentrating on optimising the M2 architecture further for these demanding tasks, perhaps with the help of developer tools and software upgrades.

Prioritising Efficiency Gains