#JupyterNotebook

Explore tagged Tumblr posts

Text

CORRECTED: Causes of Death In Infants.

My apologies. The previous "Causes" plot had the data reversed. Here is the correct plot.

4 notes

·

View notes

Text

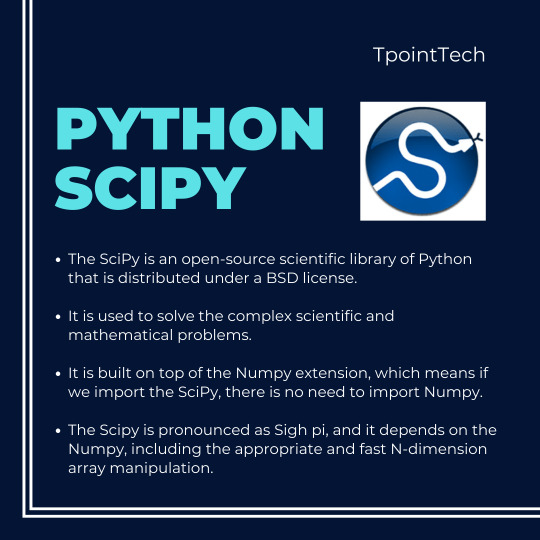

Python Scipy . . . . for more information and a tutorial https://bit.ly/3RhYl8V check the above link

2 notes

·

View notes

Text

Working With EMR Notebooks AWS Using Jupyter Notebook

Working with AWS EMR Notebooks

Amazon EMR Notebooks, renamed EMR Studio Workspaces, simplify data processing cluster interaction. They use the popular open-source Jupyter Notebook or JupyterLab editors and are available from Amazon EMR. This may be more efficient than EMR cluster notebooks. Users with suitable IAM rights can open the editor in the console.

Notebook statuses

When and how to communicate with EMR Notebooks requires knowing their status. The numerous states you may encounter are listed below:

The notebook is being produced and connected to the cluster. Launching, stopping, removing, or changing the editor's cluster is currently impossible. It starts rapidly but can take longer if a cluster forms.

You can access the fully prepared notebook in the notebook editor. Stop or remove the notebook in this state. Stop the notebook before altering the cluster. A Ready notebook will shut down after a long inactivity.

The notebook has been produced, however cluster integration may require resource provisioning or additional steps. In this case, you can launch the notebook editor in local mode, but cluster-dependent code will fail.

Stopping: Laptop or cluster shutdown. Like the ‘Starting’ state, the editor cannot be opened, stopped, deleted, or clusters altered while stopping.

The laptop shut down successfully. You can delete the laptop, swap clusters, or restart it on the same cluster (assuming the cluster is still operating).

Notebook is being removed from console list. Even after the notebook entry is erased, Amazon S3 will charge for the notebook file (NotebookName.ipynb). To retrieve the latest status, reload the console's notebook list.

Working in Notebook Editor

The notebook editor starts when the notebook is Ready or Pending. You choose Open in JupyterLab or Jupyter after choosing the notebook from the list. This opens a new browser tab with the editor. After opening, select your programming language's kernel from the Kernel menu.

The console-accessible editor's ability to limit EMR notebooks to one user is critical. Opening an already-used notebook will result in an error. Amazon EMR produces a unique pre-signed URL for each session that is only valid for a short time, displaying security.

This URL should not be shared since recipients could inherit your rights and be at risk. IAM permissions policies and granting EMR Notebooks service role access to the Amazon S3 location are two strategies to control access.

Preserving Work

While editing, your notebook cells and output are automatically and occasionally saved to the Amazon S3 notebook file. When there are no modifications since the last save, the editor displays “autosaved,” and otherwise, “unsaved.” You can manually save the notebook by pressing CTRL+S or choosing Save and Checkpoint from File. Manual saves create a checkpoint file (NotebookName.ipynb) in the notebook's principal Amazon S3 folder's checkpoints folder. This site stores only the latest checkpoint.

Attached Cluster Change

Switching the cluster to which an EMR notebook is linked without affecting its content is useful. Only Stopped notebooks can accomplish this. The approach involves selecting the paused notebook, viewing its data, selecting the Change cluster, and then choosing an existing Hadoop, Spark, and Livy cluster or creating a new one. Finally, select the security group and click Change cluster and start laptop to confirm.

Delete Notebooks and Files

The Amazon EMR interface lets you remove an EMR notebook from your list. Importantly, this approach does not delete Amazon S3 notebook files. These S3 data continue to accrue storage fees.

To remove the notebook entry and files, delete the notebook from the console and note its Amazon S3 location (in the notebook details). The AWS CLI or Amazon S3 interface must be used to manually remove the folder and its contents from the S3 location. An example CLI command removes the notebook directory and its contents.

Share and Use Notebook Files

Every EMR notebook has a NotebookName.ipynb file in Amazon S3. If it works with EMR Notebook Jupyter Notebook, you can open a notebook file as an EMR notebook. Saving the.ipynb file locally and uploading it to Jupyter or JupyterLab makes using a notebook file from another user straightforward. This method can recover a console-erased notebook or work with publicly published Jupyter notebooks if you have the file.

A new EMR notebook can be created by replacing the S3 notebook file. Stop all running EMR notebooks and close any open editor sessions.

Create a new EMR notebook with the precise name you want for the new file, record its S3 location and Notebook ID, stop it, and.Using the AWS CLI, copy and change the ipynb file at that S3 location, making sure the file name matches the notebook's name. This technique is shown using an AWS CLI command.

#EMRNotebooks#JupyterNotebook#JupyterLab#AmazonS3#AWSCommandLineInterface#AWSCLI#technology#technews#technologynews#news#govindhtech

0 notes

Text

Triển khai Jupyter Notebook trên FPT AI Factory

FPT Cloud hỗ trợ triển khai Jupyter Notebook trực tiếp trên nền tảng FPT AI Factory, giúp nhà phát triển và đội ngũ AI dễ dàng xây dựng, huấn luyện và thử nghiệm mô hình machine learning ngay trên môi trường đám mây. Giải pháp giúp tăng tốc phát triển AI, tối ưu tài nguyên và đảm bảo tính linh hoạt cao.Đọc chi tiết: http://fptcloud.com/trien-khai-jupyter-notebook-tren-fpt-ai-factory/

0 notes

Video

youtube

How To Install Jupyter Notebook In Windows [2025]

0 notes

Text

1/100 Days of Code #2

hello & welcome again to 100 days of coding! this theme helped me out while working thru the odin project pretty steadily.

i have officially switched tracks to python to get some deeper foundations set & try to fix my gaps in understanding on js. i tried for 1 whole year to bridge these gaps using js (so many textbooks, thanks no starch press!) and i’m frankly dog tired of not making progress!

so!

my new online learning course had me install jupyter & i’m honestly feeling a lot better already than i did even using VSCode or IDLE for the python stuff lmao.

that’s what i did today: 30 mins of set up, and about 2 hours of season 1 SNL as a reward. (30/30 a day is my proposed model for this project no exceptions, but the library hungers for SNL’s return so,,, prioritizing!)

anyway… ttyt can’t wait!!

1 note

·

View note

Text

Anyone else having trouble getting jupyter to export to pdf? I'm not sure if i just have some installs not there or what

1 note

·

View note

Text

Open Source Tools for Data Science: A Beginner’s Toolkit

Data science is a powerful tool used by companies and organizations to make smart decisions, improve operations, and discover new opportunities. As more people realize the potential of data science, the need for easy-to-use and affordable tools has grown. Thankfully, the open-source community provides many resources that are both powerful and free. In this blog post, we will explore a beginner-friendly toolkit of open-source tools that are perfect for getting started in data science.

Why Use Open Source Tools for Data Science?

Before we dive into the tools, it’s helpful to understand why using open-source software for data science is a good idea:

1. Cost-Effective: Open-source tools are free, making them ideal for students, startups, and anyone on a tight budget.

2. Community Support: These tools often have strong communities where people share knowledge, help solve problems, and contribute to improving the tools.

3. Flexible and Customizable: You can change and adapt open-source tools to fit your needs, which is very useful in data science, where every project is different.

4. Transparent: Since the code is open for anyone to see, you can understand exactly how the tools work, which builds trust.

Essential Open Source Tools for Data Science Beginners

Let’s explore some of the most popular and easy-to-use open-source tools that cover every step in the data science process.

1. Python

The most often used programming language for data science is Python. It's highly adaptable and simple to learn.

Why Python?

- Simple to Read: Python’s syntax is straightforward, making it a great choice for beginners.

- Many Libraries: Python has a lot of libraries specifically designed for data science tasks, from working with data to building machine learning models.

- Large Community: Python’s community is huge, meaning there are lots of tutorials, forums, and resources to help you learn.

Key Libraries for Data Science:

- NumPy: Handles numerical calculations and array data.

- Pandas: Helps you organize and analyze data, especially in tables.

- Matplotlib and Seaborn: Used to create graphs and charts to visualize data.

- Scikit-learn: A powerful tool for machine learning, offering easy-to-use tools for data analysis.

2. Jupyter Notebook

Jupyter Notebook is a web application where you can write and run code, see the results, and add notes—all in one place.

Why Jupyter Notebook?

- Interactive Coding: You can write and test code in small chunks, making it easier to learn and troubleshoot.

- Great for Documentation: You can write explanations alongside your code, which helps keep your work organized.

- Built-In Visualization: Jupyter works well with visualization libraries like Matplotlib, so you can see your data in graphs right in your notebook.

3. R Programming Language

R is another popular language in data science, especially known for its strength in statistical analysis and data visualization.

Why R?

- Strong in Statistics: R is built specifically for statistical analysis, making it very powerful in this area.

- Excellent Visualization: R has great tools for making beautiful, detailed graphs.

- Lots of Packages: CRAN, R’s package repository, has thousands of packages that extend R’s capabilities.

Key Packages for Data Science:

- ggplot2: Creates high-quality graphs and charts.

- dplyr: Helps manipulate and clean data.

- caret: Simplifies the process of building predictive models.

4. TensorFlow and Keras

TensorFlow is a library developed by Google for numerical calculations and machine learning. Keras is a simpler interface that runs on top of TensorFlow, making it easier to build neural networks.

Why TensorFlow and Keras?

- Deep Learning: TensorFlow is excellent for deep learning, a type of machine learning that mimics the human brain.

- Flexible: TensorFlow is highly flexible, allowing for complex tasks.

- User-Friendly with Keras: Keras makes it easier for beginners to get started with TensorFlow by simplifying the process of building models.

5. Apache Spark

Apache Spark is an engine used for processing large amounts of data quickly. It’s great for big data projects.

Why Apache Spark?

- Speed: Spark processes data in memory, making it much faster than traditional tools.

- Handles Big Data: Spark can work with large datasets, making it a good choice for big data projects.

- Supports Multiple Languages: You can use Spark with Python, R, Scala, and more.

6. Git and GitHub

Git is a version control system that tracks changes to your code, while GitHub is a platform for hosting and sharing Git repositories.

Why Git and GitHub?

- Teamwork: GitHub makes it easy to work with others on the same project.

- Track Changes: Git keeps track of every change you make to your code, so you can always go back to an earlier version if needed.

- Organize Projects: GitHub offers tools for managing and documenting your work.

7. KNIME

KNIME (Konstanz Information Miner) is a data analytics platform that lets you create visual workflows for data science without writing code.

Why KNIME?

- Easy to Use: KNIME’s drag-and-drop interface is great for beginners who want to perform complex tasks without coding.

- Flexible: KNIME works with many other tools and languages, including Python, R, and Java.

- Good for Visualization: KNIME offers many options for visualizing your data.

8. OpenRefine

OpenRefine (formerly Google Refine) is a tool for cleaning and organizing messy data.

Why OpenRefine?

- Data Cleaning: OpenRefine is great for fixing and organizing large datasets, which is a crucial step in data science.

- Simple Interface: You can clean data using an easy-to-understand interface without writing complex code.

- Track Changes: You can see all the changes you’ve made to your data, making it easy to reproduce your results.

9. Orange

Orange is a tool for data visualization and analysis that’s easy to use, even for beginners.

Why Orange?

- Visual Programming: Orange lets you perform data analysis tasks through a visual interface, no coding required.

- Data Mining: It offers powerful tools for digging deeper into your data, including machine learning algorithms.

- Interactive Exploration: Orange’s tools make it easier to explore and present your data interactively.

10. D3.js

D3.js (Data-Driven Documents) is a JavaScript library used to create dynamic, interactive data visualizations on websites.

Why D3.js?

- Highly Customizable: D3.js allows for custom-made visualizations that can be tailored to your needs.

- Interactive: You can create charts and graphs that users can interact with, making data more engaging.

- Web Integration: D3.js works well with web technologies, making it ideal for creating data visualizations for websites.

How to Get Started with These Tools

Starting out in data science can feel overwhelming with so many tools to choose from. Here’s a simple guide to help you begin:

1. Begin with Python and Jupyter Notebook: These are essential tools in data science. Start by learning Python basics and practice writing and running code in Jupyter Notebook.

2. Learn Data Visualization: Once you're comfortable with Python, try creating charts and graphs using Matplotlib, Seaborn, or R’s ggplot2. Visualizing data is key to understanding it.

3. Master Version Control with Git: As your projects become more complex, using version control will help you keep track of changes. Learn Git basics and use GitHub to save your work.

4. Explore Machine Learning: Tools like Scikit-learn, TensorFlow, and Keras are great for beginners interested in machine learning. Start with simple models and build up to more complex ones.

5. Clean and Organize Data: Use Pandas and OpenRefine to tidy up your data. Data preparation is a vital step that can greatly affect your results.

6. Try Big Data with Apache Spark: If you’re working with large datasets, learn how to use Apache Spark. It’s a powerful tool for processing big data.

7. Create Interactive Visualizations: If you’re interested in web development or interactive data displays, explore D3.js. It’s a fantastic tool for making custom data visualizations for websites.

Conclusion

Data science offers a wide range of open-source tools that can help you at every step of your data journey. Whether you're just starting out or looking to deepen your skills, these tools provide everything you need to succeed in data science. By starting with the basics and gradually exploring more advanced tools, you can build a strong foundation in data science and unlock the power of your data.

#DataScience#OpenSourceTools#PythonForDataScience#BeginnerDataScience#JupyterNotebook#RProgramming#MachineLearning#TensorFlow#DataVisualization#BigDataTools#GitAndGitHub#KNIME#DataCleaning#OrangeDataScience#D3js#DataScienceForBeginners#DataScienceToolkit#DataAnalytics#data science course in Coimbatore#LearnDataScience#FreeDataScienceTools

1 note

·

View note

Text

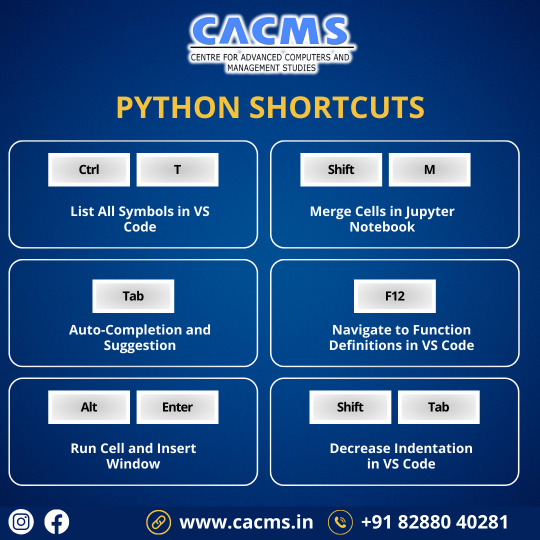

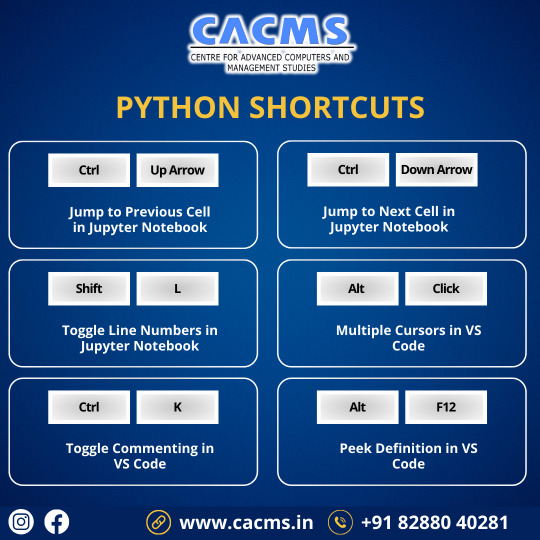

🚀 Boost your Python skills with these handy shortcuts and commands! 💻 Whether you're a data analyst, coder, or a tech enthusiast, these time-savers will level up your workflow. 🐍🔥 Which one's your favorite?

#Python#CodingShortcuts#ProductivityHacks#JupyterNotebook#VSCode#CodeLikeAPro#TechTips#DataScience#DataAnalysis#CodeEditor#LearnPython#ShortcutKeys#Programming#CodingLife#ProgrammingTricks#TrendingTech#BoostYourSkills#TechTools#ProgrammerLife#PythonForDataScience#TechTrends#EfficiencyBoost

0 notes

Text

نصائح وحيل Jupyter Notebook لزيادة إنتاجيتك في تحليل البيانات

Jupyter Notebook هو عبارة عن بيئة حوسبة تفاعلية قائمة على الويب يُمكنك استخدامه لتحليل البيانات والبرمجة التعاونية. حيث يسمح بدمج الأكواد والنصوص والتصورات في مستند واحد. لديه دعم لنظام بيئي واسع من المكتبات المُتاحة لإنجاز مهام مختلفة. يُمكن استخدامه من قبل العلماء والباحثين والمُطورين لمجموعة متنوعة من الأغراض ، بما في ذلك تصور البيانات وإنشاء التقارير وكتابة النصوص. حيث إنه يقود عالَم علم البيانات عندما يتعلق الأمر بتحليل البيانات ومعالجتها وهندسة الميزات. فيما يلي بعض النصائح والحيل الأساسية لمساعدتك في تحقيق أقصى استفادة من Jupyter Notebook. تحقق من طرق للبقاء على اطلاع بأحدث الاتجاهات في علم البيانات. Read the full article

0 notes

Text

youtube

0 notes

Text

Kivy . . . . for more information and a tutorial https://bit.ly/4itVOEu check the above link

0 notes

Text

Unlock Your Career Potential with a Data Science Certificate Program

What Can I Do with a Certificate in Data Science?

Data science is a broad field that includes activities like data analysis, statistical analysis, machine learning, and fundamental computer science. It might be a lucrative and exciting career path if you are up to speed on the latest technology and are competent with numbers and data. Depending on the type of work you want, you can take a variety of paths. Some will use your strengths more than others, so it is always a good idea to assess your options and select your course. Let’s look at what you may acquire with a graduate certificate in data science.

Data Scientist Salary

Potential compensation is one of the most critical factors for many people when considering a career. According to the Bureau of Labor Statistics (BLS), computer and information research scientists may expect a median annual pay of $111,840, albeit that amount requires a Ph.D. degree. The BLS predicts 19 percent growth in this industry over the next ten years, which is much faster than the general average.

Future data scientists can make impressive incomes if they are willing to acquire a Ph.D. degree. Data scientists that work for software publishers and R&D organizations often earn the most, with top earners making between $123,180 and $125,860 per year. On average, the lowest-paid data scientists work for schools and institutions, but their pay of $72,030 is still much higher than the national average of $37,040.

Role of statistics in research

At first appearance, a statistician’s job may appear comparable to that of a data analyst or data scientist. After all, this job necessitates regular engagement with data. On the other hand, statistical analysts are primarily concerned with mathematics, whereas data scientists and data analysts focus on extracting meaningful information from data. To excel in their field, statisticians must be experienced and confident mathematicians.

Statisticians may work in various industries since most organizations require some statistical analysis. Statisticians frequently specialize in fields such as agriculture or education. A statistician, on the other hand, can only be attained with a graduate diploma in data science due to the strong math talents necessary.

Machine Learning Engineer

Several firms’ principal product is data. Even a small group of engineers or data scientists might need help with data processing. Many workers must sift through vast data to provide a data service. Many companies are looking to artificial intelligence to assist them in managing extensive data. Machine learning, a kind of artificial intelligence, is a vital tool for handling vast amounts of data.

Machine learning, on the other hand, is designed by machine learning engineers to analyze data automatically and change it into something useful. However, the recommendation algorithm accumulates more data points when you watch more videos. As more data is collected, the algorithm “learns,” and its suggestions become more accurate. Furthermore, because the algorithm runs itself after construction, it speeds up the data collection.

Data Analyst

A data scientist and a data analyst are similar, and the terms can be used interchangeably depending on the company. You may be requested to access data from a database, master Excel spreadsheets, or build data visualizations for your company’s personnel. Although some coding or programming knowledge is advantageous, data analysts rarely use these skills to the extent that data scientists do.

Analysts evaluate a company’s data and draw meaningful conclusions from it. Analysts generate reports based on their findings to help the organization develop and improve over time. For example, a store analyst may use purchase data to identify the most common client demographics. The company might then utilize the data to create targeted marketing campaigns to reach those segments. Writing reports that explain data in a way that people outside the data field can understand is part of the intricacy of this career.

Data scientists

Data scientists and data analysts frequently share responsibilities. The direct contrast between the two is that a data scientist has a more substantial background in computer science. A data scientist may also take on more commonly associated duties with data analysts, particularly in smaller organizations with fewer employees. To be a competent data scientist, you must be skilled in math and statistics. To analyze data more successfully, you’ll also need to be able to write code. Most data scientists examine data trends before making forecasts. They typically develop algorithms that model data well.

Data Engineer

A data engineer and a data scientist are the same people. On the other hand, data engineers frequently have solid technological backgrounds, and data scientists usually have mathematical experience. Data scientists may develop software and understand how it works, but data engineers in the data science sector must be able to build, manage, and troubleshoot complex software.

A data engineer is essential as a company grows since it will create the basic data architecture necessary to move forward. Analytics may also discover areas that need to be addressed and those that are doing effectively. This profession requires solid software engineering skills rather than understanding how to interpret statistics correctly.

Important Data Scientist Skills

Data scientist abilities are further divided into two types.

Their mastery of sophisticated mathematical methods, statistics, and technologically oriented abilities is significantly tied to their technical expertise.

Excellent interpersonal skills, communication, and collaboration abilities are examples of non-technical attributes.

Technical Data Science Skills

While data scientists only need a lifetime of information stored in their heads to start a successful career in this field, a few basic technical skills that may be developed are required. These are detailed below Technical Data Science Skills

An Understanding of Basic Statistics

An Understanding of Basic Tools Used

A Good Understanding of Calculus and Algebra

Data Visualization Skills

Correcting Dirty Data

An Understanding of Basic Statistics

Regardless of whether an organization eventually hires a data science specialist, this person must know some of the most prevalent programming tools and the language used to use these programs. Understanding statistical programming languages such as R or Python and database querying languages such as SQL is required. Data scientists must understand maximum likelihood estimators, statistical tests, distributions, and other concepts. It is also vital that these experts understand how to identify which method will work best in a given situation. Depending on the company, data-driven tactics for interpreting and calculating statistics may be prioritized more or less.

A Good Understanding of Calculus and Algebra

It may appear unusual that a data science specialist would need to know how to perform calculus and algebra when many apps and software available today can manage all of that and more. Valid, not all businesses place the same importance on this knowledge. However, modern organizations whose products are characterized by data and incremental advances will benefit employees who possess these skills and do not rely just on software to accomplish their goals.

Data Visualization Skills & Correcting Dirty Data

This skill subset is crucial for newer firms beginning to make decisions based on this type of data and future projections. While robots solve this issue in many cases, the ability to detect and correct erroneous data may be a crucial skill that differentiates one in data science. Smaller firms significantly appreciate this skill since incorrect data can substantially impact their bottom line. These skills include locating and restoring missing data, correcting formatting problems, and changing timestamps.

Non-Technical Data Science Skills

It may be puzzling that data scientists would require non-technical skills. However, several essential skills must be had that fall under this category of Non-Technical Data Science Skills.

Excellent Communication Skills

A Keen Sense of Curiosity

Career Mapping and Goal Setting Skills

Excellent Communication Skills

Data science practitioners must be able to correctly communicate their work’s outcomes to technically sophisticated folks and those who are not. To do so, they must have exceptional interpersonal and communication abilities.

A Keen Sense of Curiosity

Data science specialists must maintain a level of interest to recognize current trends in their business and use them to make future projections based on the data they collect and analyze. This natural curiosity will drive them to pursue their education at the top of their game.

Career Mapping and Goal Setting Skills

A data scientist’s talents will transfer from one sub-specialty to another. Professionals in this business may specialize in different fields than their careers. As a result, they need to understand what additional skills they could need in the future if they choose to work in another area of data science.

Conclusion:

Data Science is about finding hidden data insights regarding patterns, behavior, interpretation, and assumptions to make informed business decisions. Data Scientists / Science professionals are the people who carry out these responsibilities. According to Harvard, data science is the world’s most in-demand and sought-after occupation. Nsccool Academy offers classroom self-paced learning certification courses and the most comprehensive Data Science certification training in Coimbatore.

#nschoolacademy#DataScience#DataScientist#MachineLearning#AI (Artificial Intelligence)#BigData#DeepLearning#Analytics#DataAnalysis#DataEngineering#DataVisualization#Python#RStats#TensorFlow#PyTorch#SQL#Tableau#PowerBI#JupyterNotebooks#ScikitLearn#Pandas#100DaysOfCode#WomenInTech#DataScienceCommunity#DataScienceJobs#LearnDataScience#AIForEveryone#DataDriven#DataLiteracy

1 note

·

View note

Text

Discover the top Data Science tools for beginners and learn where to start your journey. From Python and R to Tableau and SQL, find the best tools to build your Data Science skills.

#DataScience#BeginnersGuide#Python#RLanguage#SQL#Tableau#JupyterNotebooks#DataVisualization#LearningDataScience#TechTools

0 notes

Text

Dip Your Toes in Finance with Me!

I am very excited to share this with everyone! One of the aspects of teaching that I loved the most as substitute prof back in the day was sharing my notes with everyone and then discussing them.

In my very first post on my Notes of Finance series, I have started with the concept of Brownian motion and Standard Brownian motion, taking a somewhat deep dive into the meaning of the drift and diffusion constants.

I hope you enjoy following my Jupyter notebook, as I am eager to discuss this with everyone.

Keep tuned for the next posts in this series, as I now move to pricing!

0 notes