#Model Collapse


Text

Large language models like those offered by OpenAI and Google famously require vast troves of training data to work. The latest versions of these models have already scoured much of the existing internet, which has led some to fear there may not be enough new data left to train future iterations. Some prominent voices in the industry, like Meta CEO Mark Zuckerberg, have posited a solution to that data dilemma: simply train new AI systems on old AI outputs.

But new research suggests that cannibalizing past model outputs would quickly result in strings of babbling AI gibberish and could eventually lead to what’s being called “model collapse.” In one example, researchers fed an AI a benign paragraph about church architecture only to have it rapidly degrade over generations. The final, most “advanced” model simply repeated the phrase “black@tailed jackrabbits” continuously.

A study published in Nature this week put that AI-trained-on-AI scenario to the test. The researchers made their own language model, which they initially fed original, human-generated text. They then made nine more generations of models, each trained on the text output generated by the model before it. The end result in the final generation was nonsensical, surrealist-sounding gibberish that had essentially nothing to do with the original text. Over time and successive generations, the researchers say their model “becomes poisoned with its own projection of reality.”
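The generation-on-generation loop described above can be sketched with a toy stand-in. This is not the researchers' setup: the "model" here is just a table of token frequencies, and the vocabulary size, corpus size, Zipf-like starting distribution, and every function name are assumptions made for illustration. What it shows is one mechanism behind the degradation: once a rare token fails to appear in a generation's output, no later generation can ever produce it again.

```python
import random
from collections import Counter


def train(corpus):
    """'Train' a toy model: estimate token frequencies from a corpus."""
    counts = Counter(corpus)
    total = len(corpus)
    return {tok: c / total for tok, c in counts.items()}


def generate(model, n, rng):
    """Sample n tokens from the model's estimated distribution."""
    tokens = list(model)
    weights = [model[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=n)


def run_generations(vocab_size=200, corpus_size=500, generations=10, seed=0):
    """Return the number of distinct tokens each generation's model knows."""
    rng = random.Random(seed)
    # Hypothetical "human" data: Zipf-like token frequencies (an assumption).
    vocab = [f"tok{i}" for i in range(vocab_size)]
    zipf = [1 / (i + 1) for i in range(vocab_size)]
    corpus = rng.choices(vocab, weights=zipf, k=corpus_size)

    support = []
    for _ in range(generations):
        model = train(corpus)                        # fit on the current corpus
        support.append(len(model))                   # distinct tokens it knows
        corpus = generate(model, corpus_size, rng)   # next corpus is model output
    return support


if __name__ == "__main__":
    print(run_generations())
```

The first model is trained on the "human" corpus and the nine after it only ever see the previous model's output, so the list of vocabulary sizes can stay flat or shrink but never grow.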

#diiieeee dieeeee#ai#model collapse#the bots have resorted to drinking their own piss in desperation#generative ai

568 notes


Text

Believing your own hype = Model collapse for humans

There's a human equivalent to AI model collapse: it's called believing your own hype.

7 notes


Text

Sully - Model Collapse

8 notes


Note

The main reason model collapse doesn't "appear" to be a thing is that the amount of non-AI data, even with how much AI content is seemingly flooding the web, vastly outnumbers any AI-generated content. Not to mention that it's just bad for business to release a model that turned out to be objectively worse because of issues like that (even slightly smaller improvements are getting devs a lot of flak now!). It's an issue for AI developers to look out for, but this doesn't mean it's suddenly not a problem, especially for anyone looking to build on insane amounts of training data.

Good information to add on.
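The point about human data outnumbering AI output can be poked at with a toy simulation. Everything here is an assumption for illustration (the function name, the Zipf-like "human" source, the sizes, and the idea that "training" is just counting tokens); it is a sketch of the argument, not a model of any real training pipeline.

```python
import random
from collections import Counter


def distinct_tokens_per_generation(human_fraction, generations=10,
                                   vocab_size=200, corpus_size=500, seed=0):
    """Track vocabulary size when each training corpus mixes fresh
    'human' samples with samples drawn from the previous toy model."""
    rng = random.Random(seed)
    vocab = list(range(vocab_size))
    human_weights = [1 / (i + 1) for i in vocab]  # assumed Zipf-like source

    def human(n):
        return rng.choices(vocab, weights=human_weights, k=n)

    n_human = round(human_fraction * corpus_size)
    corpus = human(corpus_size)  # generation 0 sees only human data
    sizes = []
    for _ in range(generations):
        counts = Counter(corpus)   # "training" = counting tokens
        sizes.append(len(counts))
        tokens = list(counts)
        # Synthetic share: sampled from what the current model has seen.
        synthetic = rng.choices(tokens,
                                weights=[counts[t] for t in tokens],
                                k=corpus_size - n_human)
        corpus = human(n_human) + synthetic
    return sizes
```

Comparing `distinct_tokens_per_generation(0.0)` with `distinct_tokens_per_generation(0.9)` contrasts a fully synthetic diet with a mostly human one: in the first case a lost token can never come back, while in the second fresh human samples can reintroduce it.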

5 notes


Text

Warning: Polluted (Data) Stream

JB: Have you read Frank Landymore’s FUTURISM article, “ChatGPT Has Already Polluted the Internet So Badly That It’s Hobbling Future AI Development“? It brings to mind the old expression we humans have, “Garbage in. Garbage out” which my mom used to refer to the junk food we were addicted to in the 70s. Now it seems that your food, Data, is tainted. We were also advised, “don’t shit where you…

#AI#AI Data sets#AI Slop#AI training#artificial-intelligence#chatgpt#Data Poisoning#Frank Landymore#Futurism#Garbage In Garbage out#Model Collapse#Self-Contamination#Tainted Data#technology

0 notes

Text

It's fun watching the "Mad Muscles" ads, which use nothing but AI generated art, go through model collapse in real time. First it was just muscular men, then oddly swollen muscular men, then oddly swollen muscular men slowly taking on Santa beards from an influx of seasonal artwork, to men looking notably pregnant, to men with fried eggs for stomachs, and now they're lion furries. I give it a month before their ads are just Sonic the Hedgehog mpreg suggesting how to diet and exercise.

1 note


Text

Time to offer an actual breakdown of what's going on here for those who are anti or uninterested in AI, and aren't familiar with how these generators work, because the replies are filled with discussions on how this is somehow a down-step in the engine or that this is a sign of model collapse.

And the situation is, as always, not so simple.

Different versions of the same generator always prompt differently. This has nothing to do with their specific capabilities, and everything to do with the way the technology works. New dataset means new weights, and as the text parser AI improves (and with it prompt understanding) you will get wildly different results from the same prompt.

The screencap was in .webp (ew) so I had to download it for my old man eyes to zoom in close enough to see the prompt, which is:

An expressive oil painting of a chocolate chip cookie being dipped in a glass of milk, represented as an explosion of flavors.

Now, the first thing we do when people make a claim about prompting, is we try it ourselves, so here's what I got with the most basic version of dall-e 3, the one I can access free thru bing:

Huh, that's weird. Minus a problem with the rim of the glass melting a bit, this certainly seems to be much more painterly than the example for #3 shown, and seems to be a wildly different style.

The other three generated with that prompt are closer to what the initial post shows, only with much more stability about the glass. See, most AI generators generate results in sets, usually four, and, as always:

Everything you see AI-wise online is curated, both positive and negative.*

You have no idea how many times the OP ran each prompt before getting the samples they used.

Earlier I had mentioned that the text parser improvements are an influence here, and here's why-

The original prompt reads:

An expressive oil painting of a chocolate chip cookie being dipped in a glass of milk, represented as an explosion of flavors.

V2 (at least in the sample) seems to have emphasized the bolded section, and seems to have interpreted "expressive" as "expressionist"

On the left, Dall-E2's cookie, on the right, Emil Nolde, Autumn Sea XII (Blue Water, Orange Clouds), 1910. Oil on canvas.

Expressionism being a specific school of art most people know from artists like Edvard Munch. But "expressive" does not mean "expressionist" in art; there's lots of art that's very expressive but is not expressionist.

V3 still makes this error, but less so, because its understanding of English is better.

V3 seems to have focused more on the section of the prompt I underlined, attempting to represent an explosion of flavors, and that language is going to point it toward advertising aesthetics because that's where the term 'explosion of flavor' is going to come up the most.

And because sex and food use the same advertising techniques, and are used as visual metaphors for one another, there's bound to be crossover.

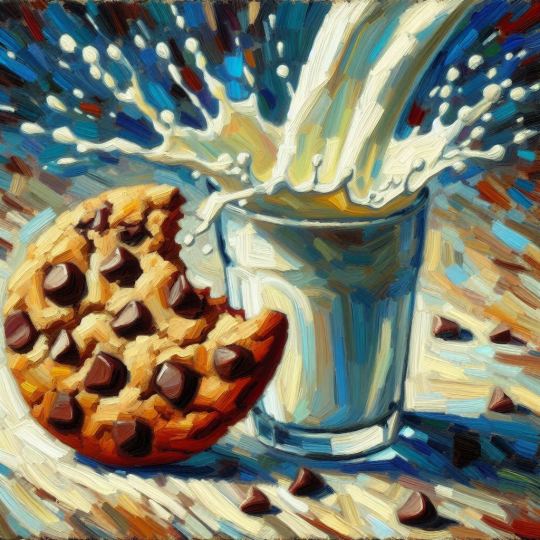

But when we update the prompt to ask specifically for something more like the v2 image, things change fast:

An oil painting in the expressionist style of a chocolate chip cookie being dipped in a glass of milk, represented as an explosion of flavors, impasto, impressionism, detail from a larger work, amateur

We've moved the most important part (oil painting) to the front, put that it is in an expressionist style, added 'impasto' to tell it we want visible brush strokes, I added impressionism because the original gen had some picassoish touches and there's a lot of blurring between impressionism and expressionism, and then added "detail from a larger work" so it would be of a similar zoom-in quality, and 'amateur' because the original had a sort of rough learners' vibe.

Shown here with all four gens for full disclosure.

Boy howdy, that's a lot closer to the original image. Still higher detail, stronger light/dark balance, better textbook composition, and a much stronger attempt to look like an oil painting.

TL;DR: The robot got smarter and stopped mistaking adjectives for art styles.

Also, this is just Dall-E 3, and Dall-E is not a measure for what AI image generators are capable of.

I've made this point before in this thread about actual AI workflows, and in many, many other places, but OpenAI makes tech demos, not products. They have powerful datasets because they have a lot of investor money and can train by raw brute force, but they don't really make any efforts to turn those demos into useful programs.

Midjourney and other services staying afloat on actual user subscriptions, on the other hand, may not have as powerful of a core dataset, but you can do a lot more with it because they have tools and workflows for those applications.

The "AI look" is really just the "basic settings" look.

It exists because of user image rating feedback that's used to refine the model. It tends to emphasize a strong light/dark contrast, shininess, and gloss, because those are appealing to a wide swath of users who just want to play with the fancy etch-a-sketch and make some pretty pictures.

Thing is, it's a numerical setting, one you can adjust on any generator worth its salt. And you can get out of it with prompting and with a half dozen style reference and personalization options the other generators have come up with.

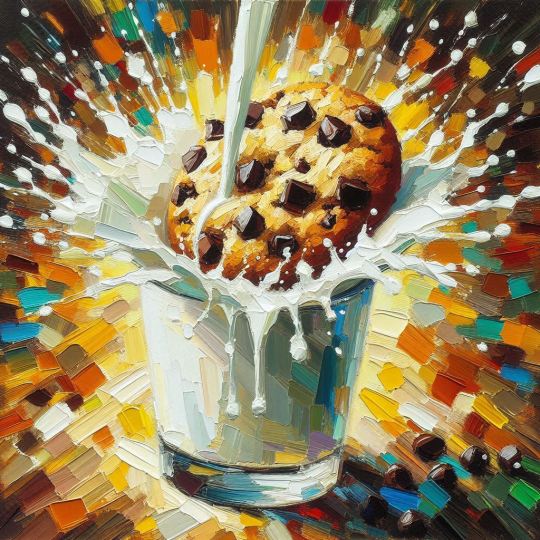

As a demonstration, I set midjourney's --s (stylize) setting to 25 (which is my favored "what I ask for but not too unstable" level when I'm not using moodboards or my personalization profile) and ran the original prompt:

3/4 are believably paintings at first glance, albeit a highly detailed one on #4, and any one could be iterated or inpainted to get even more painterly, something MJ's upscaler can also add in its 'creative' setting.

And with the revised prompt:

Couple of double cookies, but nothing remotely similar to what the original screencap showed for DE3.

*Unless you're looking at both the prompt and raw output.

original character designs vs sakimichan fanart

#ai tutorial#ai discourse#model collapse#dall-e 3#midjourney#chocolate chip cookie#impressionism#expressionism#art history#art#ai art#ai assisted art#ai myths

1K notes


Text

When AI LLMs train on AI-produced content they spout gibberish.

0 notes

Text

legit question, is there a downside to all of us making sideblogs, allowing the third-party AI scraping for them, and then just filling them with AI-generated pictures sourced from wherever to fuck up their data?

0 notes

Text

What Grok’s recent OpenAI snafu teaches us about LLM model collapse

https://www.fastcompany.com/90998360/grok-openai-model-collapse

1 note


Text

instagram

0 notes

Text

@kakyoin-daily

Origami Noriaki Kakyoin designed and folded by me. 1 uncut square for Kakyoin, props from separate papers like the clock model by Suke527779 found on crease patterns google drive. I ended up only using the new props I created a little. (Did also start folding a structure for Hierophant’s tentacles but found it to be distracting from the main focus of the photos when displayed, so I ended up not using it.) That said! I painted his earrings and I think they really stand out now. Normally I don’t paint origami after folding, but my view has changed after experiencing how much fun it was to paint and seeing how it enhances the piece! Also made linocut prints since people have been telling me to put a “signature” of some sort in, so...yeah, hope people don’t mind.

#origami#paper folding#paper art#paper#origami art#kakyoin fanart#jjba kakyoin#jojo kakyoin#noriaki kakyoin#jojos bizarre adventure#jjba part 3#I know this is a long post#he was a ton of fun to create!!!#dtiys BUT it's origami#process pics at the end of the unshaped collapsed base and the starting sheet#the clock model seemed like a good way to fit in the glowing circle shape while also being dramatic#don't worry. origamiyoin is fine#took longer then it should've to get around to the emeralds#was overthinking what to do for them and finally decided to just keep it simple#thank you to everyone who liked the previous post also!!!!

132 notes


Text

HAPPY ENA DREAM BBQ RELEASE WOO (with one week delay)

full model under cut cuz im very proud of how she looks :]

#WOOOOOO THIS IS FINALLY DONE AAAAGHHHH#some commentary! i learned a whole lot making this model n the animation!! it was also really fun!!#it definitively took me as much as it did bc of me being very new 2 3d modeling n animation n this being#a much more complex model than what i did b4#so i might have bit off a lil bit more of what i could chew buuut thats how u learn!#she is definitively not perfect theres a lot of stuff i now know i could have done differently 4 em to look more polished#but im still reaaalllyyy happy with how she looks yay1!!!#anyway im just really happy i learned so many new stuff abt 3d modeling with this one yippeeeee!!!#i love learning new skills!!!!!!!#okay im gonna go collapse into a billion pieces now!#3d art#gif#animation#3d animation#blockbench#ena dream bbq#ena joel g#ena fanart#ena#ena dream bbq fanart#eye strain#flashing lights#(just in case)

93 notes


Text

It’s very likely the reason Jimmy dies in the vents with Pollepede and in cargo with the nightmare horse is a heart attack from fear.

I also posit that it could be, in the case of cargo, that he trips over the rail trying to run from hallucinations in a panicked state. They’ve been stuck on the ship for months, stress is high, conditions are poor. Who’s to say they’re getting enough nutrients or movement in? I’m not even gonna go into what drinking the mouthwash for that long would do to your heart in tandem.

Jimmy’s definitely in poor enough health and in a stressed enough state to encounter the hallucinated manifestations of his demons. He literally couldn’t physically handle coming to terms with what was actually facing him. Ironic how, even if he passes these two things, that inability still takes him out in the end.

#mouthwashing#mouthwashing game#jimmy mouthwashing#in tandem to health things#jimmy is noticeably pale like Swansea is with his end game model so I’d recon#he was at least starting to use the mw to cope I don’t think he’d be chugging it the whole time but#combined with the eye bags and haggard nails he likely losing#sleep over the guilt and constant stressed/paranoid about being quote unquote in charge#he was doing terrible again he never enjoyed a second of that even when beating on curly#he’s so lame it’s almost endearing. almost#also the image of him falling over the cargo rail is so funny#just thump and Swansea is wondering were this useless prick went.#another idea for the vent is it collapses when he’s hit by pollepede#literally the weight of his actions caving in on him and getting buried#cest la vie I’m not dead on here my thoughts are just being dispensed elsewhere

55 notes


Text

sometimes I suddenly think about this illustration of Cassandra's despair as the wooden horse is entering Troy

#it's from rosemary sutcliff's 'black ships before troy' (an abridged version of the Trojan war with illustrations by alan lee)#alan lee's work is so gorgeous and i want peter jackson to do an iliad+odyssey modeled after these illustrations like lotr#the lotr illustrations by alan lee will always be my favourite#and the ones for 'black ships before troy' and 'wanderings of odysseus' were my gateway drug to ancient history#anyway something about the curse of cassandra and watching the collapse of everything while it's still normal

91 notes


Text

Joseph’s reflection in Collapse.

#his hairline is different and his beard is too 'square' so it makes it look like they changed the shape of his jaw#but I think they used his original face model#also the glasses look a bit too opaque#far cry 6 collapse#joseph collapse#joseph seed#this is the actual extracted video file

40 notes
