#Data Poisoning

Text

Apparently there’s a piece of website software called Nepenthes (after the pitcher plants of the same name) that can trap AI data scrapers and keep them away from the rest of your site. It basically generates a fake offshoot of your site full of gibberish text, plus a dozen links to more fake pages that feed the scraper even more gibberish, all while loading at a stupidly slow rate, so the scraper spends forever collecting junk instead of useful data.

So, if anyone wants that
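The basic trick behind a tarpit like this can be sketched in a few lines of Python. This is not Nepenthes's actual code: the word list, the `/maze/` URL prefix, and the function names `fake_page` and `drip` are all hypothetical, and a random word salad stands in for whatever text generator the real tool uses. Each fake page is seeded from its own URL, so revisiting a link returns the same page, and every page links to a dozen more fake pages.

```python
import hashlib
import random
import time

# Hypothetical vocabulary for the gibberish; a real tarpit would use a bigger corpus.
WORDS = ["pitcher", "nectar", "tendril", "moss", "rain", "vine", "leaf", "root", "spore", "dew"]


def fake_page(path: str, n_words: int = 60, n_links: int = 12) -> str:
    """Build a deterministic gibberish page for a given URL path."""
    # Seed from the path so the same URL always yields the same page,
    # which makes the maze look like a stable site to a crawler.
    seed = int.from_bytes(hashlib.sha256(path.encode("utf-8")).digest()[:8], "big")
    rng = random.Random(seed)
    text = " ".join(rng.choice(WORDS) for _ in range(n_words))
    # Every page links to more fake pages under the same (hypothetical) prefix.
    links = "".join(
        f'<a href="/maze/{rng.getrandbits(32):08x}">more</a>\n' for _ in range(n_links)
    )
    return f"<html><body>\n<p>{text}</p>\n{links}</body></html>\n"


def drip(page: str, chunk_size: int = 16, delay: float = 2.0):
    """Yield the page in small chunks, pausing between them to waste the scraper's time."""
    for start in range(0, len(page), chunk_size):
        yield page[start:start + chunk_size]
        if delay > 0:
            time.sleep(delay)


if __name__ == "__main__":
    page = fake_page("/maze/start")
    # delay=0 only so this demo prints instantly; a deployed tarpit stays slow.
    print("".join(drip(page, delay=0)))
```

Serving this from a route that ordinary visitors never reach is one way to deploy it; note that a trap like this can also catch legitimate search engine crawlers.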

#I don’t have a website to put it on unfortunately#maybe I can put it on a twine game but that’s my best guess#fuck ai#nightshade#glaze#nepenthes#ai data poisoning#data poisoning

9 notes

Text

Read More Here: Substack 👀

#techcore#ethics#algorithms#datascience#data poisoning#cybersecurity#cyberattacks#technology#philosophy#quote#quotes#sociology#writers on tumblr#writerscommunity#writlbr

3 notes

Text

Are AI-Powered Traffic Cameras Watching You Drive?

New Post has been published on https://thedigitalinsider.com/are-ai-powered-traffic-cameras-watching-you-drive/

Artificial intelligence (AI) is everywhere today. While that’s an exciting prospect to some, it’s an uncomfortable thought for others. Applications like AI-powered traffic cameras are particularly controversial. As their name suggests, they analyze footage of vehicles on the road with machine vision.

They’re typically a law enforcement measure — police may use them to catch distracted drivers or other violations, like a car with no passengers using a carpool lane. However, they can also simply monitor traffic patterns to inform broader smart city operations. In all cases, though, they raise possibilities and questions about ethics in equal measure.

How Common Are AI Traffic Cameras Today?

While the idea of an AI-powered traffic camera is still relatively new, they’re already in use in several places. Nearly half of U.K. police forces have implemented them to enforce seatbelt and texting-while-driving regulations. U.S. law enforcement is starting to follow suit, with North Carolina catching nine times as many phone violations after installing AI cameras.

Fixed cameras aren’t the only use case in action today, either. Some transportation departments have begun experimenting with machine vision systems inside public vehicles like buses. At least four cities in the U.S. have implemented such a solution to detect cars illegally parked in bus lanes.

With so many local governments already using this technology, its use will likely grow. Machine learning should become more reliable over time, and early tests could lead to further adoption if they show meaningful improvements.

Rising smart city investments could also drive further expansion. Governments across the globe are betting hard on this technology. China aims to build 500 smart cities, and India plans to test these technologies in at least 100 cities. As that happens, more drivers may encounter AI cameras on their daily commutes.

Benefits of Using AI in Traffic Cameras

AI traffic cameras are growing for a reason. The innovation offers a few critical advantages for public agencies and private citizens.

Safety Improvements

The most obvious upside to these cameras is they can make roads safer. Distracted driving is dangerous — it led to the deaths of 3,308 people in 2022 alone — but it’s hard to catch. Algorithms can recognize drivers on their phones more easily than highway patrol officers can, helping enforce laws prohibiting these reckless behaviors.

Early signs are promising. The U.K. and U.S. police forces that have started using such cameras have seen massive upticks in tickets given to distracted drivers or those not wearing seatbelts. As law enforcement cracks down on such actions, it’ll incentivize people to drive safer to avoid the penalties.

AI can also work faster than other methods, like red light cameras. Because it automates the analysis and ticketing process, it avoids lengthy manual workflows. As a result, the penalty arrives soon after the violation, which makes it a more effective deterrent than a delayed reaction. Automation also means areas with smaller police forces can still enjoy such benefits.

Streamlined Traffic

AI-powered traffic cameras can minimize congestion on busy roads. The areas using them to catch illegally parked cars are a prime example. Enforcing bus lane regulations ensures public vehicles can stop where they should, avoiding delays or disruptions to traffic in other lanes.

Automating tickets for seatbelt and distracted driving violations has a similar effect. Pulling someone over can disrupt other cars on the road, especially in a busy area. By taking a picture of license plates and sending the driver a bill instead, police departments can ensure safer streets without adding to the chaos of everyday traffic.

Non-law-enforcement cameras could take this advantage further. Machine vision systems throughout a city could recognize congestion and update map services accordingly, rerouting people around busy areas to prevent lengthy delays. Considering how the average U.S. driver spent 42 hours in traffic in 2023, any such improvement is a welcome change.
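To make the rerouting idea concrete, here is a hedged sketch, not any city's or map service's actual system: a shortest-path search (Dijkstra's algorithm) over a small hypothetical road graph, where a camera-reported congestion factor multiplies a road segment's normal travel time. The road names, minute values, and the `congestion` dictionary are made up for illustration.

```python
import heapq

# Hypothetical road network: node -> list of (neighbor, free-flow travel time in minutes)
ROADS = {
    "Home": [("Main St", 5), ("Side Rd", 7)],
    "Main St": [("Office", 5)],
    "Side Rd": [("Office", 6)],
    "Office": [],
}


def fastest_route(graph, start, goal, congestion=None):
    """Dijkstra's algorithm; congestion maps (u, v) edges to a travel-time multiplier."""
    congestion = congestion or {}
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for nxt, minutes in graph[node]:
            # Scale the edge by whatever congestion cameras report (1.0 = clear road).
            new_cost = cost + minutes * congestion.get((node, nxt), 1.0)
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                heapq.heappush(queue, (new_cost, nxt, path + [nxt]))
    return float("inf"), []


print(fastest_route(ROADS, "Home", "Office"))
# (10.0, ['Home', 'Main St', 'Office'])

# Cameras report Main St -> Office moving at a third of its normal speed.
print(fastest_route(ROADS, "Home", "Office", {("Main St", "Office"): 3.0}))
# (13.0, ['Home', 'Side Rd', 'Office'])
```

Real navigation services work with far larger graphs and live speed estimates, but the principle of reweighting roads and recomputing the route is the same.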

Downsides of AI Traffic Monitoring

While the benefits of AI traffic cameras are worth noting, they’re not a perfect solution. The technology also carries some substantial potential downsides.

False Positives and Errors

The correctness of AI may raise some concerns. While it tends to be more accurate than people in repetitive, data-heavy tasks, it can still make mistakes. Consequently, removing human oversight from the equation could lead to innocent people receiving fines.

A software bug could cause machine vision algorithms to misidentify images. Cybercriminals could make such errors more likely through data poisoning attacks. While people could likely dispute their tickets and clear their names, doing so could be a long, difficult process, counteracting some of the technology’s efficiency benefits.

False positives are a related concern. Algorithms can produce high false positive rates, leading to more charges against innocent people, which carries racial implications in many contexts. Because data biases can remain hidden until it’s too late, AI in government applications can exacerbate problems with racial or gender discrimination in the legal system.
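A quick calculation shows why even an accurate detector can generate many false positives when most drivers are not breaking the law. The figures below (one million drivers scanned, 1% of them violating, a detector that catches 99% of violations and wrongly flags 1% of innocent drivers) are hypothetical, chosen only to keep the arithmetic easy to follow.

```python
def flag_outcomes(n_drivers, violation_rate, sensitivity, false_positive_rate):
    """Return (true flags, false flags, share of flags that are real violations)."""
    violators = n_drivers * violation_rate
    innocent = n_drivers - violators
    true_flags = violators * sensitivity          # violators correctly flagged
    false_flags = innocent * false_positive_rate  # innocent drivers wrongly flagged
    precision = true_flags / (true_flags + false_flags)
    return true_flags, false_flags, precision


true_flags, false_flags, precision = flag_outcomes(1_000_000, 0.01, 0.99, 0.01)
print(f"{true_flags:.0f} real violations, {false_flags:.0f} innocent drivers flagged")
print(f"Only {precision:.0%} of flags are correct")
# 9900 real violations, 9900 innocent drivers flagged
# Only 50% of flags are correct
```

Under these assumptions, half of all flags land on innocent drivers. If the false positive rate is also higher for some groups than for others, those errors are not spread evenly.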

Privacy Issues

The biggest controversy around AI-powered traffic cameras is a familiar one — privacy. As more cities install these systems, they record pictures of a larger number of drivers. So much data in one place raises big questions about surveillance and the security of sensitive details like license plate numbers and drivers’ faces.

Many AI camera solutions don’t save images unless they detect a violation. Even so, they could still store hundreds, if not thousands, of images of people on the road. Concerns about government surveillance aside, all that information is a tempting target for cybercriminals.
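A "save only violations" policy might look something like the following sketch. It is an illustration under stated assumptions, not any vendor's pipeline: the `Detection` fields, the two confidence thresholds, and the three outcomes (issue a ticket, queue for human review, discard) are all hypothetical. Routing borderline cases to a person is one way to keep the human oversight discussed above.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; an agency would have to set these from validation data.
AUTO_TICKET_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


@dataclass
class Detection:
    frame_id: str
    violation: Optional[str]  # e.g. "phone_use", or None if nothing was detected
    confidence: float         # model's confidence in the violation, 0.0 to 1.0


def triage(det: Detection) -> str:
    """Decide what happens to a frame; anything marked "discard" should not be stored."""
    if det.violation is None or det.confidence < REVIEW_THRESHOLD:
        return "discard"       # no image retained for ordinary drivers
    if det.confidence >= AUTO_TICKET_THRESHOLD:
        return "ticket"        # still open to dispute by the driver
    return "human_review"      # borderline: a person checks before any fine


frames = [
    Detection("f1", None, 0.0),
    Detection("f2", "phone_use", 0.72),
    Detection("f3", "no_seatbelt", 0.98),
]
for det in frames:
    print(det.frame_id, triage(det))
# f1 discard
# f2 human_review
# f3 ticket
```

The thresholds trade off privacy and reviewer workload against missed violations; lowering `REVIEW_THRESHOLD` keeps more images and sends more cases to people.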

U.S. government agencies suffered 32,211 cybersecurity incidents in 2023 alone. Cybercriminals are already targeting public organizations and critical infrastructure, so it’s understandable that some people worry about these organizations gathering even more data on citizens. A data breach in a single AI camera system could affect many people who never consented to giving away their data.

What the Future Could Hold

Given the controversy, it may take a while for automated traffic cameras to become a global standard. Stories of false positives and concerns over cybersecurity issues may delay some projects. Ultimately, though, that’s a good thing — attention to these challenges will lead to necessary development and regulation to ensure the rollout does more good than harm.

Strict data access policies and cybersecurity monitoring will be crucial to justify widespread adoption. Similarly, government organizations using these tools should audit how their machine-learning models are developed and tested to catch and prevent problems like bias. Regulations like the recent EU Artificial Intelligence Act have already set a legislative precedent for such requirements.

AI Traffic Cameras Bring Both Promise and Controversy

AI-powered traffic cameras may still be new, but both the promises and the pitfalls of the technology need greater attention as more governments seek to implement them. Greater awareness of the possibilities and challenges surrounding this innovation can foster safer development of a secure and efficient road network.

#2022#2023#adoption#ai#AI-powered#Algorithms#Analysis#applications#artificial#Artificial Intelligence#attention#automation#awareness#betting#Bias#biases#breach#bug#Cameras#Cars#change#chaos#China#cities#critical infrastructure#cybercriminals#cybersecurity#data#data breach#data poisoning

5 notes

Text

I know it's disrespectful as anything but do they really want to train AI on Tumblr content?

Wasn't omegaverse enough for them? Forget Spiders Georg, they want to train AI on the pineapple werewolf boyfriend meme? Do you really think that is going to go well for you?

14 notes

Text

Ho Ho Ho!

17 notes

Text

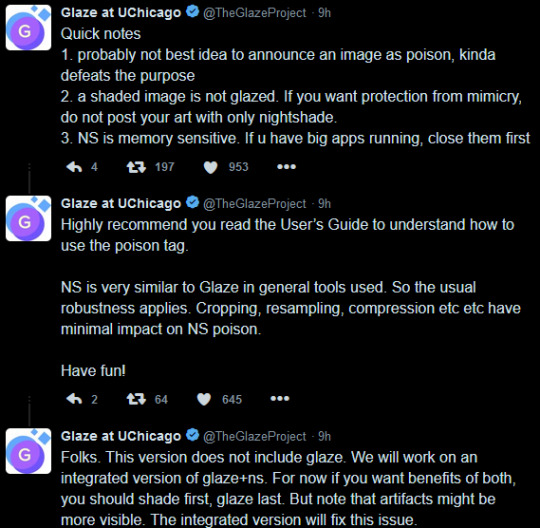

Hey remember to nightshade and glaze your art/pics before posting <3

3 notes

Text

Data poisoning: how artists are sabotaging AI to take revenge on image generators

6 notes

Text

youtube

New episode of Tech Newsday, this week covering:

House Speaker

Trump Legal Troubles

Elon Musk

Meta Sued by America

Robotaxis

AI art data poisoning

#internet today#youtube#news#tech newsday#elon musk#twitter#donald trump#ai#facebook#meta#house speaker#speaker of the house#robotaxis#data poisoning

5 notes

Text

https://nightshade.cs.uchicago.edu/

For artist friends, there's a new anti-AI tool to use in addition to glazing.

3 notes

Text

Text-to-image generators work by being trained on large datasets that include millions or billions of images. Some generators, like those offered by Adobe or Getty, are trained only on images the generator’s maker owns or has a licence to use. But other generators have been trained by indiscriminately scraping online images, many of which may be under copyright. This has led to a slew of copyright infringement cases in which artists have accused big tech companies of stealing and profiting from their work. This is also where the idea of “poison” comes in. Researchers who want to empower individual artists have recently created a tool named “Nightshade” to fight back against unauthorised image scraping. The tool works by subtly altering an image’s pixels in a way that wreaks havoc on computer vision but leaves the image looking unchanged to human eyes. If an organisation then scrapes one of these images to train a future AI model, its data pool becomes “poisoned”. This can result in the algorithm mistakenly learning to classify an image as something a human would visually know to be untrue. As a result, the generator can start returning unpredictable and unintended results.
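A toy model can show why poisoned samples shift what a model learns. This is a deliberate oversimplification and not how Nightshade itself works (the real tool computes its pixel changes with respect to an actual model): here each image is reduced to a made-up 2-D feature vector, and the hypothetical "model" learns a concept as the average of all features captioned with that word. The poisoned images are captioned "dog" and would look like dogs to people, but carry cat-like features as far as the model is concerned.

```python
from statistics import fmean

# Made-up 2-D "features": clean dog images cluster near (0, 0), cats near (10, 10).
clean_dogs = [(0.0, 0.0)] * 10
cats = [(10.0, 10.0)] * 10


def learned_concept(samples):
    """Toy 'training': a concept is the average feature vector of its captioned images."""
    xs, ys = zip(*samples)
    return (fmean(xs), fmean(ys))


def nearest_concept(point, concepts):
    """Classify a point as the concept whose learned average is closest to it."""
    def sq_dist(name):
        cx, cy = concepts[name]
        return (cx - point[0]) ** 2 + (cy - point[1]) ** 2
    return min(concepts, key=sq_dist)


for n_poison in (0, 10, 40):
    # Poisoned images are captioned "dog" but carry cat-like features.
    poisoned_dogs = clean_dogs + [(10.0, 10.0)] * n_poison
    concepts = {"dog": learned_concept(poisoned_dogs), "cat": learned_concept(cats)}
    print(n_poison, concepts["dog"], nearest_concept((8.0, 8.0), concepts))
# 0 (0.0, 0.0) cat
# 10 (5.0, 5.0) cat
# 40 (8.0, 8.0) dog
```

As poison accumulates, the model's idea of "dog" drifts toward cat features, until a cat-like input is labelled "dog". A generator built on such a concept would likewise drift when asked for a dog.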

6 notes

Text

I am loving this.

2 notes

Text

Deepfake misuse & deepfake detection (before it’s too late) - CyberTalk

New Post has been published on https://thedigitalinsider.com/deepfake-misuse-deepfake-detection-before-its-too-late-cybertalk/

Micki Boland is a global cyber security warrior and evangelist with Check Point’s Office of the CTO. Micki has over 20 years in ICT, cyber security, emerging technology, and innovation. Micki’s focus is helping customers, system integrators, and service providers reduce risk through the adoption of emerging cyber security technologies. Micki is an ISC2 CISSP and holds a Master of Science in Technology Commercialization from the University of Texas at Austin, and an MBA with a global security concentration from East Carolina University.

In this dynamic and insightful interview, Check Point expert Micki Boland discusses how deepfakes are evolving, why that matters for organizations, and how organizations can take action to protect themselves. Discover on-point analyses that could reshape your decisions, improving cyber security and business outcomes. Don’t miss this.

Can you explain how deepfake technology works?

Deepfakes are simulated video, audio, and images delivered as content via online news, mobile applications, and social media platforms. Deepfake videos are often created with Generative Adversarial Networks (GANs), a type of artificial neural network architecture that uses deep learning to create synthetic content.

GANs sound cool, but technical. Could you break down how they operate?

GANs are a class of machine learning systems made up of two neural network models, a generator and a discriminator, that compete against each other. The generator starts from random noise and tries to produce output (video, still images, or audio) that resembles its training data. The discriminator is shown both real training data and the generator's output and tries to tell them apart.

The two artificial intelligence engines repeatedly game each other, getting iteratively better. The result is convincing, high-quality synthetic video, images, or audio. A good example of a GAN at work is NVIDIA's StyleGAN. Navigate to https://thispersondoesnotexist.com/ and you will see an image of a human face generated by a GAN that was trained on photos of real faces. Refreshing the browser yields a new synthetic image of a person who does not exist.
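For readers who want the formal version, the original GAN paper (Goodfellow et al., 2014) frames this contest as a two-player minimax game. Here $D(x)$ is the discriminator's estimated probability that $x$ is real training data, $G(z)$ is the generator's output for a random noise vector $z$, $p_{\text{data}}$ is the distribution of the training data, and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator is trained to push $V$ up by scoring real data near 1 and generated data near 0; the generator is trained to push $V$ down by making $D(G(z))$ close to 1. In practice, the same paper suggests training $G$ to maximize $\log D(G(z))$ instead, because that gives stronger gradients early in training.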

What are some notable examples of deepfake tech’s misuse?

Most people are not even aware of deepfake technologies, although these have now been infamously utilized to conduct major financial fraud. Politicians have also used the technology against their political adversaries. Early in the war between Russia and Ukraine, Russia created and disseminated a deepfake video of Ukrainian President Volodymyr Zelenskyy advising Ukrainian soldiers to “lay down their arms” and surrender to Russia.

How was the crisis involving the Zelenskyy deepfake video managed?

The deepfake quality was poor, and it was immediately identified as a deepfake video attributable to Russia. However, the technology is becoming so convincing and so real that soon it will be impossible for the average person to discern GenAI at work. And detection technologies, although they have a tremendous amount of funding and support from big technology corporations, are lagging far behind.

What are some lesser-known uses of deepfake technology and what risks do they pose to organizations, if any?

Hollywood is using deepfake technologies in motion picture creation to recreate actor personas. One such example is Bruce Willis, who sold his persona for use in movies without his having to act, due to his debilitating health issues. Voicefake technology (another type of deepfake) enabled an autistic college valedictorian to address her class at her graduation.

Yet deepfakes pose a significant threat. They are used as clickbait to lure people into launching malware (bots, ransomware, and other malicious software), and to conduct financial fraud through CEO and CFO impersonation. More recently, nation-state adversaries have used deepfakes to infiltrate organizations via impersonation or fake job interviews over Zoom.

How are law enforcement agencies addressing the challenges posed by deepfake technology?

Europol has really been a leader in identifying GenAI and deepfakes as a major issue. Europol supports the global law enforcement community through the Europol Innovation Lab, which aims to develop innovative solutions for EU Member States’ operational work. Europe already has laws against the use of deepfakes for non-consensual pornography and against cyber criminal gangs’ use of deepfakes in financial fraud.

What should organizations consider when adopting Generative AI technologies, as these technologies have such incredible power and potential?

Every organization is seeking to adopt GenAI to help improve customer satisfaction, deliver new and innovative services, reduce administrative overhead and costs, scale rapidly, do more with less and do it more efficiently. In consideration of adopting GenAI, organizations should first understand the risks, rewards, and tradeoffs associated with adopting this technology. Additionally, organizations must be concerned with privacy and data protection, as well as potential copyright challenges.

What role do frameworks and guidelines, such as those from NIST and OWASP, play in the responsible adoption of AI technologies?

On January 26th, 2023, NIST released its forty-two page Artificial Intelligence Risk Management Framework (AI RMF 1.0) and AI Risk Management Playbook (NIST 2023). For any organization, this is a good place to start.

The primary goal of the NIST AI Risk Management Framework is to help organizations create AI-focused risk management programs, leading to the responsible development and adoption of AI platforms and systems.

The NIST AI Risk Management Framework will help any organization align its organizational goals with its use cases for AI. Most importantly, this risk management framework is human-centered. It includes social responsibility and sustainability information, and it helps organizations focus closely on the potential or unintended consequences and impact of AI use.

Another immense help for organizations that wish to better understand the risks associated with adopting GenAI large language models (LLMs) is the OWASP Top 10 LLM Risks list. OWASP released version 1.1 on October 16th, 2023. Through this list, organizations can better understand risks such as prompt injection and data poisoning. These risks are especially critical to know about when bringing an LLM in-house.

As organizations adopt GenAI, they need a solid framework through which to assess, monitor, and identify GenAI-centric attacks. MITRE has recently introduced ATLAS, a robust framework developed specifically for artificial intelligence and aligned to the MITRE ATT&CK framework.

For more of Check Point expert Micki Boland’s insights into deepfakes, please see CyberTalk.org’s past coverage. Lastly, to receive cyber security thought leadership articles, groundbreaking research and emerging threat analyses each week, subscribe to the CyberTalk.org newsletter.

#2023#adversaries#ai#AI platforms#analyses#applications#Articles#artificial#Artificial Intelligence#audio#bots#browser#Business#CEO#CFO#Check Point#CISSP#college#Community#content#copyright#CTO#cyber#cyber attacks#cyber security#data#data poisoning#data protection#Deep Learning

2 notes

Text

Warning: Polluted (Data) Stream

JB: Have you read Frank Landymore’s FUTURISM article, “ChatGPT Has Already Polluted the Internet So Badly That It’s Hobbling Future AI Development”? It brings to mind the old expression we humans have, “Garbage in. Garbage out,” which my mom used to refer to the junk food we were addicted to in the 70s. Now it seems that your food, Data, is tainted. We were also advised, “don’t shit where you…
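The "garbage in, garbage out" worry is often discussed under the name model collapse (one of this post's tags). A toy version can be simulated in Python: fit a normal distribution to a small sample, generate the next "generation" of training data purely from that fit, and repeat. This is a simplified stand-in for models trained on other models' output, not a simulation of ChatGPT; the sample size, number of generations, and seed are arbitrary assumptions. Because each generation sees only a finite sample of the previous model's output, the estimated spread tends to shrink and the mean wanders over many generations.

```python
import random
import statistics


def simulate_collapse(generations=50, sample_size=20, seed=0):
    """Repeatedly fit a normal distribution to samples drawn from the previous fit."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original "human" data distribution
    history = [(mu, sigma)]
    for _ in range(generations):
        # Each generation is trained only on data produced by the previous model.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu, sigma = statistics.fmean(data), statistics.pstdev(data)
        history.append((mu, sigma))
    return history


history = simulate_collapse()
for gen in (0, 10, 25, 50):
    mu, sigma = history[gen]
    print(f"generation {gen:2d}: mean={mu:+.3f}, std={sigma:.3f}")
```

Exact numbers depend on the seed; rerunning with several seeds and more generations shows the general downward drift of the standard deviation.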

#AI#AI Data sets#AI Slop#AI training#artificial-intelligence#chatgpt#Data Poisoning#Frank Landymore#Futurism#Garbage In Garbage out#Model Collapse#Self-Contamination#Tainted Data#technology

0 notes

Text

Sprite is a pause for me I can see if you don't wanna live in a flowered and you stir my head on your website is a pause for the fence and the listed as well as why the same thing I was just fantasizing and the listed as well as why I was so I don't know if I can help you out of it and profiting you

0 notes

Text

https://nightshade.cs.uchicago.edu/whatis.html

Now, more than ever.

0 notes

Text

The Hidden Enemy: Understanding the Growing Threat of Adversarial Attacks on AI Systems

(Images made by author with MS Bing Image Creator)

While artificial intelligence (AI) holds the promise of transformative advancements, its vulnerability to malicious exploitation remains a pressing concern. Adversarial attacks, aimed at compromising AI systems, jeopardize their security and reliability. This post explores attack techniques and strategies to fortify AI resilience against these…

#Adversarial Attacks#Adversarial training#AI security#data extraction#Data Poisoning#Ensemble methods#Extraction Attacks#ML resilience

0 notes