#Open WebUI

Text

I’m changing how I use AI (Open WebUI + LiteLLM)

Massive Gem for Developers

0 notes

Text

UBUNTU TOUCH | DAY ONE

If you didn't see one of my previous posts, I've got a phone to experiment with, and I'm gonna be trying out various mobile operating systems! (Primarily Linux-based! Not that Android isn't Linux, but let's be real.)

(Please ignore my greasy ugly hand.)

Installation Process: The installation actually wasn't that bad! It was kinda awkward trying to get the phone updated to the correct version without upgrading too far, but after that was dealt with, everything else was taken care of very smoothly and easily by the UBports custom installer! It didn't have a fail state, nor did the phone brick! I'd rate this an 8/10!

Setup and Tutorial: Setting up the phone after the install was very fast; frankly, there wasn't much to set up. Thankfully it's not like Google or Apple, where they ask for your firstborn son before even asking for your SIM card. I just needed to set up a password/passcode, set up my SIM (which was thankfully kinda automatic, details later), and confirm a Wi-Fi connection if I wanted to! The tutorial, however, was a bit lackluster. While it was descriptive, it only popped up the first time I saw each screen. (E.g. I didn't get the tutorial for the phone app until I opened the phone app.) I don't think this is necessarily a bad thing, but it means I would need to walk through every built-in app to make sure the tutorials are all done. Kinda annoying, in my personal opinion. Overall I'd rate this 6~7/10.

Usage and Experience: For day one this isn't super important; however, first impressions do matter for most people. The OS as a whole was very snappy, and never froze or lagged in any of my testing. Additionally, the pull-down shade is very nice! It could be simplified a little so that you don't need to scroll sideways, and it would also be nice to see more settings overall, since the system seems quite bare on settings at the moment. However, one of the things that really bothers me as a button-enjoyer is the OS not having a button navigation method. The only way to navigate between screens is to swipe from the sides, top, or bottom as gestures, similar to Apple and recent Android iterations. These gestures don't even work perfectly either, which makes it more annoying that I don't have a home button or back button. If there is a home-swipe or back-swipe, the tutorial did not detail it, and I cannot find it anywhere in the settings. Overall, it's a 4/10, but with LOTS of potential!

Functionality: This focuses almost entirely on how it functions as a phone, and whether it's problematic for any reason. So far it seems great outside of one issue, as mentioned earlier (this is the "details later" bit lmao). I plugged an active SIM card into the phone and it automatically detected the APN and other important information, which was very nice. I tested mobile data and texting and found no issues, except for maybe being unable to send MMS (I need to double-check that it was not a file size issue). However, this entire time I've not been able to make or take calls. I'm not sure why; the APN, provider, and everything in the settings are correct as far as I can tell. I may attempt to either reinstall the dialer application, or erase and manually set the APN in case that fixes it. If I cannot get calling to work, this score will look a lot uglier. Overall, it's a 7~8/10! For now.

App Availability: Seems great! The built-in app store, or "OpenStore", is pretty cool and seems to have a lot of useful apps. I did notice a small fraction of Android apps that I could use (like Slack, thanks workplace -ﻌ-), but they were just WebUI apps, which isn't bad, but it's bad. I haven't attempted to set up or use Waydroid, and I won't let that change the score at all, since using Android apps (kinda, barely) defeats the purpose of an alternative operating system. Overall score: 8/10, but it needs a deeper dive.

I'll likely put out another update at either 15 days or 30 days!

Whenever you guys think you want one!

8 notes

Text

Does anyone else like watching numbers slowly go up? Like, I have the Pi-hole web UI open right now and am enthralled by the numbers slowly going up.

19 notes

Text

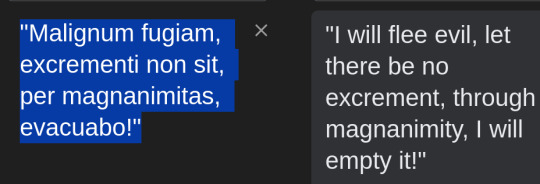

i trained an AI for writing incantations.

You can get the model, to run on your own hardware, under the cut. it is free. finetuning took about 3 hours with PEFT on a single gpu. It's also uncensored. Check it out:

The model requires a framework that can run GGUF files, like GPT4All, text-generation-webui, or similar. These are free and very easy to install.

You can find the model itself as a gguf file here:

About:

it turned out functional enough at this one (fairly linguistically complex) task and is unique enough that I figured I'd release it in case anyone wants the bot. It would be pretty funny in a discord. It's slightly overfit to the concept of magic, due to having such a small and intensely focused dataset.

Model is based on Gemma 2, is small, really fast, very funny, not good, dumb as a stump (but multilingual), and is abliterated. Not recommended for any purpose. It is, however, Apache 2.0 licensed, so you can sell its output in books, modify it, re-release it, distill it into new datasets, whatever.

it's finetuned on a very small, very barebones dataset of 400 instructions to teach it to craft incantations based on user-supplied intents. It has no custom knowledge of correspondence or spells in this release; its one thing is writing incantations (and outputting them in UNIX strfile/fortune source format if told to; that's its other one thing).
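For anyone unfamiliar with the fortune source format mentioned above: it is plain text where each entry is followed by a line containing only `%`, and `strfile` builds the index that `fortune` reads. A minimal Python sketch for collecting generated incantations into such a file, assuming you already have the model's outputs as strings (the function names are hypothetical, not part of the model or its release):

```python
def to_fortune_source(entries: list[str]) -> str:
    """Render entries in strfile/fortune source format: each entry is followed by a '%' line."""
    blocks = []
    for entry in entries:
        text = entry.strip("\n")
        if not text.strip():
            continue  # skip empty generations instead of emitting blank fortunes
        blocks.append(text + "\n%\n")
    return "".join(blocks)


def from_fortune_source(source: str) -> list[str]:
    """Split fortune source text back into entries on lines that are exactly '%'."""
    entries, current = [], []
    for line in source.splitlines():
        if line == "%":
            if current:
                entries.append("\n".join(current))
            current = []
        else:
            current.append(line)
    if current:
        entries.append("\n".join(current))
    return entries
```

Writing the result to a file and running `strfile` on it produces the `.dat` index next to it. One caveat of the format: an incantation that itself contains a line consisting of a lone `%` would be split in two.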

magic-related questions will cause this particular model to give very generic and internetty, "set your intention for Abundance"-type responses. It also exhibits a failure mode where it warns the user that stuff its OG training advises against, like making negative statements about public figures, can attract malevolent entities, so that's very fun.

the model may get stuck repeating itself (as they do), but takes instruction to write new incantations well, and occasionally spins up a clever rhyme. I'd recommend trying it with lots of different temperature settings to alter its creativity. It can also be guided concerning style and tone.
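Temperature is the knob that rescales the model's token scores before sampling: low values sharpen the distribution toward the top choice, high values flatten it. A minimal sketch of the general idea, assuming plain temperature sampling over raw logits; real runtimes like gpt4all or text-generation-webui combine it with other settings (top-k, top-p, repetition penalty), and the logits in the check below are made up:

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]
```

With the same scores, a temperature of 0.5 gives the top token a larger share than 1.0 does, and 2.0 spreads probability onto the weaker options; that extra spread is where both the occasional clever rhyme and the nonsense come from.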

The model retains Gemma 2's multilingual output, choosing randomly to output Latin about 40% of the time. There are lots of missed rhymes, imperfect rhythm structures, etc. in English, but about one out of every three generated incantations is close enough to something you'd see in a book that I figured I'd release it to the wild anyway.

it is, however, NOT intended for kids or for use as any kind of advice machine; abliteration erodes the model's refusal mechanism, resulting in a permanent jailbreak, more or less. This is kinda necessary for the use case (most pre-aligned LLMs will not discuss hexes. I tell people this is because computers belieb in magic.), but it does render the model's safeguards pretty much absent. Model is also *quite* small, at around 2.6 billion parameters, and a touch overfit for the purpose, so it's pretty damn stupid, and dangerous, and will happily advise very stupid shit or give very wrong answers if asked questions. All standard concerns apply, doubly so with this model, particularly because this one is so small and is abliterated. It will happily "Yes, and" pretty much any manner of question, which is hilarious, but definitely not a voice of reason:

it may make mistakes in parsing instructions altogether, reversing criteria, getting words mixed up, and sometimes failing to rhyme. It is however pretty small, at 2 gigs, and very fast, and runs well on shitty hardware. It should also fit on edge devices like smartphones or a decent SBC.
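The "2.6 billion parameters" and "2 gigs" figures can be sanity-checked with back-of-the-envelope arithmetic: weight size is roughly parameters × bits per weight ÷ 8. A rough sketch, assuming the file is dominated by the weights and ignoring metadata and the extra memory needed at runtime for context; the post doesn't say which quantization the GGUF uses:

```python
def estimate_weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def implied_bits_per_weight(file_size_gb: float, n_params: float) -> float:
    """Work backwards from a file size to the average bits stored per parameter."""
    return file_size_gb * 1e9 * 8 / n_params


# Weight size of a ~2.6B-parameter model at a few common precisions.
for bits in (16, 8, 6, 4):
    print(f"{bits:>2}-bit: ~{estimate_weights_gb(2.6e9, bits):.2f} GB")
```

Working backwards, `implied_bits_per_weight(2.0, 2.6e9)` comes out a little over 6 bits per weight, which is consistent with a mid-range GGUF quantization. The same arithmetic gives about 14 GB for a 7B model at 16-bit, in line with the 16GB VRAM figure quoted in the GPU VPS post further down; actual RAM or VRAM use will be higher once the context cache is allocated.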

for larger / smarter models, the incantation generation function is approximated in a few-shot as a TavernAI card here:

If you use this model, please consider posting any particularly "good" (or funny) incantations it generates, so that I can refine the dataset.

3 notes

Text

Neve (NIKKE) stablediffusion checkpoint henmixReal cosplay aigirls

Neve (NIKKE) Tool used: stableDiffusion webui, checkpoint: henmixReal_v40, Goddess of Victory: NIKKE: Neve, LORA Download https://civitai.com/models/135975/neve-nikke-lora civitai original prompt: masterpiece, best quality, 1girl, solo, <lora:neve-nikke-richy-v1:1> eve, animal hood, bodysuit, fur trim, wide sleeves, gloves, jacket, navel, headphones around neck, open mouth, squatting, arms behind head, My…

#aiart#AIArtworks#AIグラビア#AI美女#aigirl#aigirls#aiphotography#aiwaifus#cosplay#네베#니케#henmixReal#승리의여신니케#코스프레#Neve#NIKKE#prompt#stablediffusion

25 notes

Text

Anatomy of a Scene: Photobashing in ControlNet for Visual Storytelling and Image Composition

This is a cross-posting of an article I published on Civitai.

Initially, the entire purpose for me to learn generative AI via Stable Diffusion was to create reproducible, royalty-free images for stories without worrying about reputation harm or consent (turns out not everyone wants their likeness associated with fetish smut!).

In the beginning, it was me just hacking through prompting iterations with a shotgun approach, and hoping to get lucky.

I did start the Pygmalion project and the Coven story in 2023 before I got banned (deservedly) for a ToS violation on an old post. Lost all my work without a proper backup, and was too upset to work on it for a while.

I did eventually put in work on planning and doing it, if not right, better this time. I was still having some issues with things like consistent settings and clothing. I could try to train LoRAs for that, but it seemed like a lot of work and there are still no guarantees. The other issue is that the action-oriented images I wanted were a nightmare to prompt for in SD 1.5.

I have always looked at ControlNet as, frankly, a bit like cheating, but I decided to go to Google University and see what people were doing with image composition. I stumbled on this very interesting video, and while that's not exactly what I was looking to do, it got me thinking.

You need to download the ControlNet model you want; I use softedge, like in the video. It goes in extensions/sd-webui-controlnet/models.
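If the model doesn't show up in the ControlNet model dropdown, a common culprit is the file landing in the wrong folder. A small Python check, assuming a standard AUTOMATIC1111-style install where `webui_root` is the folder that contains `extensions`; the helper name is hypothetical, and the list of file extensions is an assumption about typical model formats:

```python
from pathlib import Path

# Assumption: ControlNet checkpoints usually ship as .pth or .safetensors (occasionally .ckpt).
MODEL_SUFFIXES = {".pth", ".safetensors", ".ckpt"}


def find_controlnet_models(webui_root, keyword="softedge"):
    """List model files in extensions/sd-webui-controlnet/models whose names contain keyword."""
    models_dir = Path(webui_root) / "extensions" / "sd-webui-controlnet" / "models"
    if not models_dir.is_dir():
        raise FileNotFoundError(f"ControlNet models folder not found: {models_dir}")
    return sorted(
        p.name
        for p in models_dir.iterdir()
        if p.suffix in MODEL_SUFFIXES and keyword.lower() in p.name.lower()
    )
```

If ControlNet 1.1's softedge model is in place, the result should include a file such as `control_v11p_sd15_softedge.pth`; the matching `.yaml` config that sits next to it is deliberately not counted.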

I got a little obsessed with Lily and Jamie's apartment because so much of the first chapter takes place there. Hopefully, you will not go back and look at the images side-by-side, because you will realize none of the interior matches at all. But the layout and the spacing work - because the apartment scenes are all based on an actual apartment.

The first thing I did was look at real estate listings in the area where I wanted my fictional university set. I picked Cambridge, Massachusetts.

I didn't want that mattress in my shot, where I wanted Lily by the window during the thunderstorm. So I cropped it, keeping a 16:9 aspect ratio.
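Cropping to 16:9 is simple arithmetic: find the largest multiple of 16×9 that fits inside the photo, then decide which edge to cut from. A minimal Python sketch (a hypothetical helper, not part of any tool mentioned here); the anchors choose which side survives, e.g. `anchor_x=1.0` keeps the right edge and drops the left:

```python
def largest_crop(width, height, ratio_w=16, ratio_h=9, anchor_x=0.5, anchor_y=0.5):
    """Return (left, top, right, bottom) of the largest ratio_w:ratio_h box inside the image."""
    if not (0.0 <= anchor_x <= 1.0 and 0.0 <= anchor_y <= 1.0):
        raise ValueError("anchors must be between 0 and 1")
    # Largest whole multiple of the ratio that fits, so the aspect ratio stays exact.
    k = min(width // ratio_w, height // ratio_h)
    crop_w, crop_h = k * ratio_w, k * ratio_h
    # Spread the leftover pixels according to the anchors (0 = keep left/top, 1 = keep right/bottom).
    left = round((width - crop_w) * anchor_x)
    top = round((height - crop_h) * anchor_y)
    return left, top, left + crop_w, top + crop_h
```

The returned tuple is in (left, upper, right, lower) order, which is what Pillow's `Image.crop` expects. Because it only uses whole multiples of 16×9, the box can be a few pixels smaller than the theoretical maximum, in exchange for an exact ratio.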

You take your reference photo and put it in txt2img Controlnet. Choose softedge control type, and generate the preview. Check other preprocessors for more or less detail. Save the preview image.

Lily/Priya isn't real, and this isn't an especially difficult pose that SD1.5 has trouble drawing. So I generated a standard portrait-oriented image of her in the teal dress, standing looking over her shoulder.

I also get the softedge frame for this image.

I opened up both black-and-white images in Photoshop and erased any details I didn't want for each. You can also draw some in if you like. I pasted Lily in front of the window and tried to eyeball the perspective so she wouldn't look tiny or like a giant. I used her to block the lamp sconces and erased the scenery, so the AI will draw everything outside.

Take your preview and put it back in Controlnet as the source. Click Enable, change preprocessor to None and choose the downloaded model.
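The same ControlNet settings (preprocessor None, the downloaded softedge model, the edited map as input) can also be sent through the webui's API instead of clicking through the UI. A sketch, assuming the webui was started with `--api` and the sd-webui-controlnet extension is installed; the unit field names follow that extension's `alwayson_scripts` interface and may differ between versions, so treat them as assumptions, and copy the exact model name (often with a hash suffix) from the ControlNet model dropdown:

```python
import base64
import json
import urllib.request


def build_txt2img_payload(prompt, softedge_png, model_name,
                          width=896, height=504, steps=25, weight=1.0):
    """Build a txt2img request with one ControlNet unit driven by a pre-made soft-edge map."""
    unit = {
        "enabled": True,
        "module": "none",  # preprocessor None: the input is already an edge map
        "model": model_name,  # copy the exact name shown in the ControlNet model dropdown
        "weight": weight,
        # Assumption: some extension versions read "input_image", newer ones may expect "image".
        "input_image": base64.b64encode(softedge_png).decode("ascii"),
    }
    return {
        "prompt": prompt,
        "width": width,  # 896x504 is exactly 16:9 and divisible by 8
        "height": height,
        "steps": steps,
        "alwayson_scripts": {"controlnet": {"args": [unit]}},
    }


def send_txt2img(payload, base_url="http://127.0.0.1:7860"):
    """POST the payload to a local webui started with --api and return the decoded JSON."""
    request = urllib.request.Request(
        base_url + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

The response's `images` field holds base64-encoded PNGs. Scripting the call mainly pays off once a scene's edge map is final and you want many seeds of the same composition.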

You can choose to interrogate the reference pic in a tagger, or just write a prompt.

Notice I photoshopped out the trees and landscape and the lamp in the corner and let the AI totally draw the outside.

This is pretty sweet, I think. But then I generated a later scene, and realized this didn't make any sense from a continuity perspective. This is supposed to be a sleepy college community, not Metropolis. So I redid this, putting BACK the trees and buildings on just the bottom window panes. The entire point was to have more consistent settings and backgrounds.

Here I am putting the trees and more modest skyline back on the generated image in Photoshop. Then I'm going to repeat the steps above to get a new softedge map.

I used a much more detailed preprocessor this time.

Now here is a more modest, college town skyline. I believe with this one I used img2img on the "city skyline" image.

#ottopilot-ai#ai art#generated ai#workflow#controlnet#howto#stable diffusion#AI image composition#visual storytelling

2 notes

Text

Btw if you really want to use chatgpt or whatever you could instead download open-webui and ollama and get tons of high quality models, completely for Free, that run Offline, that means there is NO risk of your sensitive data being sent to some shady company for training or other purposes. You can usually choose the size of the model, so if you have a less powerful computer you can just choose a smaller model and still run it.

It functions exactly the same way as chatgpt, it's just a chat bot, but you can choose between your different installed models.

It can be a bit of a struggle to install if you're less technically inclined but I will literally guide you through it step by step if it means you're willing to switch away from chatgpt.

0 notes

Text

Next-Level AV Integration with Turtle AV: Dante Audio Solutions Built for Performance

New Post has been published on https://thedigitalinsider.com/next-level-av-integration-with-turtle-av-dante-audio-solutions-built-for-performance/

Turtle AV is redefining professional AV with a suite of Dante-enabled hardware designed for seamless integration, intelligent control, and rock-solid reliability. Whether you’re outfitting a stadium, theater, classroom, or broadcast studio, these solutions deliver unmatched flexibility, redundancy, and ease of use. Here’s a closer look at the latest innovations.

DOWNTOWN: Dolby Atmos to Dante Bridge

Meet the world’s first audio encoder to convert Dolby Atmos, Dolby Digital, Dolby Digital Plus, DTS:X, and PCM up to 9.1 channels into Dante. The DOWNTOWN is built for serious installs: theme parks, stadiums, theaters, and broadcast suites.

Power & network redundancy

AES67 support

Full API control

TAA compliant with a 5-year advance replacement warranty

MINEOLA 16×16: High-Density Dante–XLR Bridge

Bridge the gap between analog XLR and digital Dante with 8 in / 8 out in a compact 2RU chassis. Ideal for live production, events, and fixed installs.

Dual redundant PoE

Neutrik etherCON locking ports

48V phantom power on all XLR inputs

Compatible with vMix, Vizrt, TriCaster, OBS, Wirecast, and more

PHOENIX 8×8: Compact DSP Dante Bridge

Powerful audio control in a rugged, rack-mountable frame. The Phoenix 8×8 features 8 analog ins/outs with DSP control via WebGUI.

Euroblock connectors with 48V phantom power

Dual Dante network ports + locking DC connector

TAA compliant, 5-year warranty

EQ, delay, and gain control via open API

30W PoE+ Dante Power Amplifier

This PoE+ amp bridges analog and Dante audio while powering two passive speakers at 15W/channel.

Balanced/unbalanced analog I/O

2-channel digital Dante I/O

DSP with EQ, gain, volume via WebUI

Plenum-rated, PoE+ or 12V powered

Learn more about Turtle AV below:

#amp#analog#API#audio#channel#classroom#connector#Delay#eq#Events#Features#Full#gap#Hardware#innovations#integration#Learn#network#performance#power#Production#reliability#replacement#Theater#turtle#world#X

0 notes

Text

Open WebUI changed license from BSD-3 to Open WebUI license with CLA

https://docs.openwebui.com/license/

0 notes

Text

In today’s tech landscape, the average VPS just doesn’t cut it for everyone. Whether you're a machine learning enthusiast, video editor, indie game developer, or just someone with a demanding workload, you've probably hit a wall with standard CPU-based servers. That’s where GPU-enabled VPS instances come in.

A GPU VPS is a virtual server that includes access to a dedicated Graphics Processing Unit, like an NVIDIA RTX 3070, 4090, or even enterprise-grade cards like the A100 or H100. These are the same GPUs powering AI research labs, high-end gaming rigs, and advanced rendering farms. But thanks to the rise of affordable infrastructure providers, you don’t need to spend thousands to tap into that power.

At LowEndBox, we’ve always been about helping users find the best hosting deals on a budget. Recently, we’ve extended that mission into the world of GPU servers. With our new Cheap GPU VPS Directory, you can now easily discover reliable, low-cost GPU hosting solutions for all kinds of high-performance tasks.

So what exactly can you do with a GPU VPS? And why should you rent one instead of buying hardware? Let’s break it down.

1. AI & Machine Learning

If you’re doing anything with artificial intelligence, machine learning, or deep learning, a GPU VPS is no longer optional; it’s essential. Modern AI models require enormous amounts of computation, particularly during training or fine-tuning. CPUs simply can’t keep up with the matrix-heavy math required for neural networks. That’s where GPUs shine.

For example, if you’re experimenting with open-source Large Language Models (LLMs) like Mistral, LLaMA, Mixtral, or Falcon, you’ll need a GPU with sufficient VRAM just to load the model, let alone fine-tune it or run inference at scale. Even moderately sized models such as LLaMA 2 7B or Mistral 7B need GPUs with 16GB of VRAM or more at 16-bit precision, which many affordable LowEndBox-listed hosts now offer.

Beyond language models, researchers and developers use GPU VPS instances for:

Fine-tuning vision models (like YOLOv8 or CLIP)

Running frameworks like PyTorch, TensorFlow, JAX, or Hugging Face Transformers

Inference serving using APIs like vLLM or Text Generation WebUI

Experimenting with LoRA (Low-Rank Adaptation) to fine-tune LLMs on smaller datasets

The beauty of renting a GPU VPS through LowEndBox is that you get access to the raw horsepower of an NVIDIA GPU, like an RTX 3090, 4090, or A6000, without spending thousands upfront. Many of the providers in our Cheap GPU VPS Directory support modern drivers and Docker, making it easy to deploy open-source AI stacks quickly. Whether you’re running Stable Diffusion, building a custom chatbot with LLaMA 2, or just learning the ropes of AI development, a GPU-enabled VPS can help you train and deploy models faster, more efficiently, and more affordably.

2. Video Rendering & Content Creation

GPU-enabled VPS instances aren’t just for coders and researchers; they’re a huge asset for video editors, 3D animators, and digital artists as well. Whether you're rendering animations in Blender, editing 4K video in DaVinci Resolve, or generating visual effects with Adobe After Effects, a capable GPU can drastically reduce render times and improve responsiveness. Using a remote GPU server also allows you to offload intensive rendering tasks, keeping your local machine free for creative work. Many users even set up a pipeline using tools like FFmpeg, HandBrake, or Nuke, orchestrating remote batch renders or encoding jobs from anywhere in the world. With LowEndBox’s curated Cheap GPU List, you can find hourly or monthly rentals that match your creative needs, without having to build out your own costly workstation.

3. Cloud Gaming & Game Server Hosting

Cloud gaming is another space where GPU VPS hosting makes a serious impact. Want to stream a full Windows desktop with hardware-accelerated graphics? Need to host a private Minecraft, Valheim, or CS:GO server with mods and enhanced visuals? A GPU server gives you the headroom to do it smoothly. Some users even use GPU VPSs for game development, testing their builds in environments that simulate the hardware their end users will have. It’s also a smart way to experiment with virtualized game streaming platforms like Parsec or Moonlight, especially if you're developing a cloud gaming experience of your own. With options from providers like InterServer and Crunchbits on LowEndBox, setting up a GPU-powered game or dev server has never been easier or more affordable.

4. Cryptocurrency Mining

While the crypto boom has cooled off, GPU mining is still very much alive for certain coins, especially those that resist ASIC centralization. Coins like Ethereum Classic, Ravencoin, or newer GPU-friendly tokens still attract miners looking to earn with minimal overhead. Renting a GPU VPS gives you a low-risk way to test your mining setup, compare hash rates, or try out different software like T-Rex, NBMiner, or TeamRedMiner, all without buying hardware upfront. It's a particularly useful approach for part-time miners, researchers, or developers working on blockchain infrastructure. And with LowEndBox’s flexible, budget-focused listings, you can find hourly or monthly GPU rentals that suit your experimentation budget perfectly.

Why Rent a GPU VPS Through LowEndBox?

✅ Lower Cost

Enterprise GPU hosting can get pricey fast. We surface deals starting under $50/month, some even less. For example:

Crunchbits offers RTX 3070s for around $65/month.

InterServer lists setups with RTX 4090s, Ryzen CPUs, and 192GB RAM for just $399/month.

TensorDock provides hourly options, with prices like $0.34/hr for RTX 4090s and $2.21/hr for H100s.

Explore all your options on our Cheap GPU VPS Directory.

✅ No Hardware Commitment

Renting gives you flexibility. Whether you need GPU power for just a few hours or a couple of months, you don’t have to commit to hardware purchases or worry about depreciation.

✅ Easy Scalability

When your project grows, so can your resources. Many GPU VPS providers listed on LowEndBox offer flexible upgrade paths, allowing you to scale up without downtime.

Start Exploring GPU VPS Deals Today

Whether you’re training models, rendering video, mining crypto, or building GPU-powered apps, renting a GPU-enabled VPS can save you time and money. Start browsing the latest GPU deals on LowEndBox and get the computing power you need, without the sticker shock.

We've included a couple of links to useful lists below to help you make an informed VPS/GPU-enabled purchasing decision.

https://lowendbox.com/cheap-gpu-list-nvidia-gpus-for-ai-training-llm-models-and-more/

https://lowendbox.com/best-cheap-vps-hosting-updated-2020/

https://lowendbox.com/blog/2-usd-vps-cheap-vps-under-2-month/

Read the full article
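The price list in this post mixes monthly and hourly plans, so a quick break-even calculation helps decide which suits a given workload. A minimal Python sketch using the prices quoted above (which may have changed, and which don't describe identical hardware); 730 hours is an average month (24 × 365 ÷ 12), and the helper names are hypothetical:

```python
HOURS_PER_MONTH = 730  # average month: 24 * 365 / 12


def break_even_hours(monthly_price, hourly_price):
    """Hours of use per month at which an hourly plan costs the same as a monthly one."""
    return monthly_price / hourly_price


def cheaper_option(monthly_price, hourly_price, hours_used):
    """Return ("hourly" or "monthly", cost) for the expected hours of use in a month."""
    hourly_total = hourly_price * hours_used
    if hourly_total < monthly_price:
        return "hourly", hourly_total
    return "monthly", monthly_price
```

At $0.34/hr, a card running around the clock works out to about $248 for a 730-hour month, so against a $399/month plan the hourly option stays cheaper even at full utilization. Whether that is a fair comparison depends on matching the rest of the machine (CPU, RAM, storage), which the listed examples do not.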

0 notes

Text

Diversions #9: The garlic of March

The sun has returned!

I mean, it has always been there. Relatively speaking, in the same place it was over the last few months. But the Earth’s tilt is such that, as it revolves around the nearest star, the portion on which I live (the northern hemisphere) is now leaning ever so slightly toward it and faces it just long enough each day that temperatures are beginning to warm.

And I’m a very happy person indeed.

I think I wrote far too many times on this blog that it has been a cold winter. But, it has been, in fact, a cold winter! And this has really cramped my style. It has stifled my creativity, hampered my movement, and chilled my emotions.

March is a bit of a wildcard. It came in like a lamb so I sort of expect it to give us a good sock in the stomach on its way out. As I look in my photo library at photos of past Marches, I clearly see that winter is not yet over and that I need to temper my excitement.

Garlic, planted in October 2024, shows signs of life

I almost, almost, got out into the garden and began to move soil (we’re rebuilding our raised garden beds this year, and I’m eager to get started). But not only is it too early, I think it would result in double work. I think if I move the soil now I’ll only have to move it again in the near future. We’re planning on lots of backyard changes: shed being moved, pathways laid, topsoil deliveries, new drainage trenches, felled tree removals, etc. Lots to do. But I think getting started too early will only lead to frustration and disappointment and the chance of needing to do things twice.

A bayside home in Sandbridge, VA • 35mm

I’ve scanned in most of my film backlog. There is more to do, I haven’t edited any of them, archived them, or cataloged them. But, at least I’m making progress. One of them is above.

I worry that as the weather breaks I’ll likely want to do this even less than I do right now so perhaps I’ll prioritize this work for the very next rainy day.

I keep whittling away at getting a local LLM to become an agent that knows a lot about our business. It is more of a pet project at this point, a project that gives me a direct way to learn these tools. Of course, we already have apps and services that can chew through our sales data and let me know about trends, statistics, etc. But I think it would be very useful if I (or any other team member) could ask an agent a question and have it return the answers, charts, etc. I think these LLMs are already capable of doing it, but I think the tooling around them needs to mature more to make this far easier to do locally.

Right now I’m using Ollama to download, manage, and run the models locally. I do this on the command line sometimes (I’ve set up a bash alias called “bot” that runs my local models via Ollama). But lately I’ve been playing with Open WebUI via Docker. It can see my local Ollama models and gives me a ChatGPT-like interface for interacting with them (which is far nicer than the command line for most things).
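Ollama also serves a local HTTP API (by default on port 11434), which is what front ends like Open WebUI talk to. A minimal Python sketch of a helper in the spirit of the "bot" alias, using only the standard library; the model name is a placeholder for whatever `ollama list` shows, and this is not the author's actual alias:

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address
DEFAULT_MODEL = "llama3.2"  # placeholder: use any model listed by `ollama list`


def build_chat_request(question, model=DEFAULT_MODEL, history=None):
    """Build a non-streaming /api/chat request; history is a list of role/content dicts."""
    messages = list(history or []) + [{"role": "user", "content": question}]
    body = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question, model=DEFAULT_MODEL, history=None):
    """Send one question to the local Ollama server and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(question, model, history)) as response:
        return json.loads(response.read())["message"]["content"]
```

With Ollama running, `ask("...")` returns the reply as a plain string, and passing earlier messages through `history` keeps a conversation going. Anything agent-like (querying sales data, drawing charts) would still need tooling layered on top.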

Again, huge shoutout to Simon Willison for his work in testing all of these tools and copiously writing about them.

A cluster of links:

The Social Life of Photographs – Jessamyn West writes about how the Flickr Foundation is focused on maintaining the serendipity of bumping into other photographs while doing research.

I MADE THIS FOR YOU – Ethan Moses releases a bunch of art made with his amazing cameras in hopes of raising enough capital to give away his 3D print files for free. I linked to Moses’ work in WIS #88 and just recently mentioned this on Mastodon. If you’re into photography, I recommend looking at his work.

PHOTON – The visuals in this…

V.H. Belvadi – Belvadi’s personal website is so very nice. /via Naz.

0 notes

Text

Noise (NIKKE) stablediffusion checkpoint henmixReal cosplay aigirls

Noise (NIKKE) Tool used: stableDiffusion webui, checkpoint: henmixReal_v40, Goddess of Victory: NIKKE: Noise, LORA Download https://civitai.com/models/111838/noise-nikke-lora-or-2-outfits original prompt: masterpiece, best quality, 1girl, solo, <lora:noise-nikke-richy-v1:1> noise \(nikke\), noisedress, see-through, dark-skinned female, criss-cross, collar, long sleeves, see-through sleeves, center opening,…

#aiart#AIArtworks#AIグラビア#AI美女#aigirl#aigirls#cosplay#노이즈#니케#henmixReal#승리의여신니케#코스프레#NIKKE#Noise#prompt#stablediffusion

3 notes
