#Programming the FPGA

The Department of Electronics and Communication Engineering at K. Ramakrishnan College of Technology, in association with the IEEE Student Branch, recently organized an insightful invited talk on “Demystifying FPGA Design: From Concept to Implementation.” The event featured Dr. M. Elangovan, Associate Professor, Department of Electronics & Communication Engineering, Government College of Engineering, Trichy. Nearly 122 third-year students from our department attended the session, participated actively, and benefited greatly from the expert insights shared.

#the best engineering college krct#quality engineering and technical education.#best college of technology in trichy#krct the best college of technology in trichy#k ramakrishnan college of technology trichy#top college of technology in trichy#best autonomous college of technology in trichy#the best college for b.tech#ECE Talk session on Demystifying FPGA Design at KRCT#What is FPGA?#Applications of FPGA Design#FPGA Design Flow#Programming the FPGA

AMD Xilinx Vivado: Free Download and Setup on Windows 11 / 10

Subscribe to "Learn And Grow Community"

YouTube : https://www.youtube.com/@LearnAndGrowCommunity

LinkedIn Group : linkedin.com/company/LearnAndGrowCommunity

Blog : https://LearnAndGrowCommunity.blogspot.com/

Facebook : https://www.facebook.com/JoinLearnAndGrowCommunity/

Twitter Handle : https://twitter.com/LNG_Community

DailyMotion : https://www.dailymotion.com/LearnAndGrowCommunity

Instagram Handle : https://www.instagram.com/LearnAndGrowCommunity/

Follow #LearnAndGrowCommunity

#AMD#Xilinx#Vivado#Windows11#Windows10#SoftwareSuite#Download#Installation#Tutorial#LogicDevices#Programming#BeginnersGuide#ProgrammableLogicDevice#TechGuide#SoftwareSetup#TechTips#Troubleshooting#fpga#vhdltutorial#veriloghdl#verilog#learnandgrow#synthesis#vhdl#Youtube

“you work in tech, aren't you worried about AI stealing your job?” if AI demolishes webdev, i will give it a medal. anyway i'm a computer engineer and since the AI does not have actual hands to plug physical wires into physical holes i am not particularly concerned. viva la engineering get wrecked CS

#tilki#major tag#also people keep saying github copilot/chatgpt can write code#yeah it can write like#shit javascript and python code#chatgpt cannot program an FPGA i know this because i tried somewhere around allnighter 3 of my senior project#and it could not do shit. god bless i have job security#sorry for engineerposting on main it will happen again

That is a very neat idea!

If you like things like that, you might want to look into VHDL (I learned that... some years ago, but have not touched it since) or Verilog.

They are... hardware description languages: languages for describing logic gate circuits.

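You combine that with an FPGA, which is essentially a whole lot of small configurable logic blocks. These are mostly look-up tables and flip-flops rather than literal NAND gates, but like NAND gates they can represent any logic gate system. Then you can make hardware... via software.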

And yes, these essentially do things like your idea. Things that would take a CPU aaaaages to do can be done very, very fast. So you "just" have normal C code, but if it runs into one of the problems it has hardware for, it uses the hardware.

This is also the idea behind graphics cards, or even just hardware floating-point units!

It is insanely cool! :D

What are half-adder and full-adder combinational circuits?

So this question came up in the codeblr discord server, and I thought I would share my answer here too :3

First, a combinational circuit is simply a circuit whose outputs depend only on its inputs. ("Combinational" as in combining the inputs to give some output.)

It is a bit like a pure function. The opposite is a sequential circuit, like a latch, which remembers 1 bit: its output depends on its inputs AND its state.

These circuits can be shown through their logic gates or their truth tables. I will explain using only words and the circuits, but you can look up the truth table for each circuit I talk about to help you understand.

Ok, so a half adder, in the case of electronics, is a circuit made with logic gates (I... assume you know what they are... otherwise ask and I can explain them too) that adds 2 binary numbers, each of which has only 1 digit.

So one number is 1 or 0

And the other number is 1 or 0

So the possible results are 0, 1 and 2.

Since you can only express 0 to 1 with one binary digit, and 0 to 3 with two, we need to output 2 binary digits to give the answer. So the output is 2 binary digits (usually called the carry bit and the sum bit):

00 = 0

01 = 1

10 = 2

11 = 3 // This can never happen with a half adder. The max possible result is 2
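You can check the half adder's truth table above with a few lines of Python. This is a minimal sketch that models each wire as an int that is either 0 or 1. It uses the standard textbook formulas (sum = a XOR b, carry = a AND b), and `half_adder` is just an illustrative name.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two 1-bit numbers; return (carry, sum) so it reads like the 2-digit result."""
    carry = a & b  # high digit: 1 only when both inputs are 1
    s = a ^ b      # low digit: 1 when exactly one input is 1
    return carry, s


for a in (0, 1):
    for b in (0, 1):
        carry, s = half_adder(a, b)
        print(f"{a} + {b} = {carry}{s}")
```

Running it prints the rows 00, 01, 01 and 10, and never 11.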

Each digit is represented by a wire. A wire is a 0 if it is at low voltage (usually ground, or 0 volts) and a 1 if it is at high voltage (the exact voltage depends on the circuit: it can be 5 volts, 3.3, 12 or something else).

BUT a half adder can ONLY add 2 single-digit binary numbers together. Never more.

If you want to add more together, you need a full adder. This takes 3 single-digit binary numbers, adds them, and outputs a single 2-digit number.

This means it has 3 inputs and 2 outputs.

We have 2 outputs because we need to give a result that is 0, 1, 2 or 3

Same encoding as before, except now we CAN get 11 (which is 3).

And we can chain full adders together, feeding each one's carry into the next, to add numbers with as many digits as we want.
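Chaining full adders this way is usually called a ripple-carry adder: the carry out of each adder feeds the carry in of the next. Here is a minimal Python sketch. It assumes numbers are stored as lists of bits with the least significant bit first. `full_adder`, `ripple_add`, `to_bits` and `from_bits` are illustrative names, not from any library.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three 1-bit numbers; return (carry_out, sum)."""
    s = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return carry_out, s


def ripple_add(x: list[int], y: list[int]) -> list[int]:
    """Add two equal-length bit lists (least significant bit first) by chaining full adders."""
    result = []
    carry = 0  # the first adder has no incoming carry
    for a, b in zip(x, y):
        carry, s = full_adder(a, b, carry)  # each carry feeds the next adder
        result.append(s)
    result.append(carry)  # the final carry becomes the top digit
    return result


def to_bits(n: int, width: int) -> list[int]:
    return [(n >> i) & 1 for i in range(width)]


def from_bits(bits: list[int]) -> int:
    return sum(bit << i for i, bit in enumerate(bits))


print(full_adder(1, 1, 1))                                    # (1, 1), i.e. binary 11 = 3
print(from_bits(ripple_add(to_bits(13, 4), to_bits(9, 4))))   # 22
```

This design keeps things simple. The catch is that each adder has to wait for the carry from the one before it, which is why real hardware often uses faster but larger carry schemes for wide numbers.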

So why ever use a half adder? Well, every logic gate circuit can be made out of NAND (Not AND) gates, so we usually compare complexity by how many NAND gates it would take to make a circuit. More NAND gates means a bigger, more expensive circuit, and usually a slower one.

A half adder takes 5 NAND gates to make

A full adder takes 9 NAND gates.

So only use a full adder if you need one.
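To make the 5 vs 9 count concrete, here is a Python sketch that builds both adders out of a single `nand` function and counts the calls. The gate arrangement is the common textbook NAND-only layout: XOR is built from 4 NANDs, and the first NAND is shared with the carry logic. `NandCounter`, `half_adder_nand` and `full_adder_nand` are hypothetical helpers for illustration.

```python
class NandCounter:
    """Evaluates NAND gates and counts how many were used."""

    def __init__(self) -> None:
        self.gates = 0

    def nand(self, a: int, b: int) -> int:
        self.gates += 1
        return 1 - (a & b)


def half_adder_nand(c: NandCounter, a: int, b: int) -> tuple[int, int]:
    """Return (carry, sum) using only NAND gates."""
    n1 = c.nand(a, b)        # shared by the XOR and the carry
    n2 = c.nand(a, n1)
    n3 = c.nand(b, n1)
    s = c.nand(n2, n3)       # a XOR b, built from 4 NANDs (including n1)
    carry = c.nand(n1, n1)   # NAND used as NOT: NOT(NAND(a, b)) = a AND b
    return carry, s


def full_adder_nand(c: NandCounter, a: int, b: int, cin: int) -> tuple[int, int]:
    """Return (carry_out, sum) using only NAND gates."""
    n1 = c.nand(a, b)
    n2 = c.nand(a, n1)
    n3 = c.nand(b, n1)
    x = c.nand(n2, n3)       # a XOR b
    n5 = c.nand(x, cin)
    n6 = c.nand(x, n5)
    n7 = c.nand(cin, n5)
    s = c.nand(n6, n7)       # (a XOR b) XOR cin
    cout = c.nand(n1, n5)    # (a AND b) OR ((a XOR b) AND cin)
    return cout, s


for name, build, inputs in [
    ("half adder", half_adder_nand, (1, 1)),
    ("full adder", full_adder_nand, (1, 1, 1)),
]:
    counter = NandCounter()
    build(counter, *inputs)
    print(f"{name}: {counter.gates} NAND gates")
```

Every gate is evaluated on every call, so the count does not depend on the input values.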

GeeksforGeeks has a page for each of the most common basic circuits:

I hope that made sense, and was useful :3

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here, honestly it probably deserves to be, and I either forgot or I classified it more under "general ICs" instead of "computer chips" (e.g. 555, LM, 4000, 7400 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502; I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old, so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer: I wrote this mostly out of my head and/or ass at one in the morning, so do not use any of this as a source in an argument without checking.

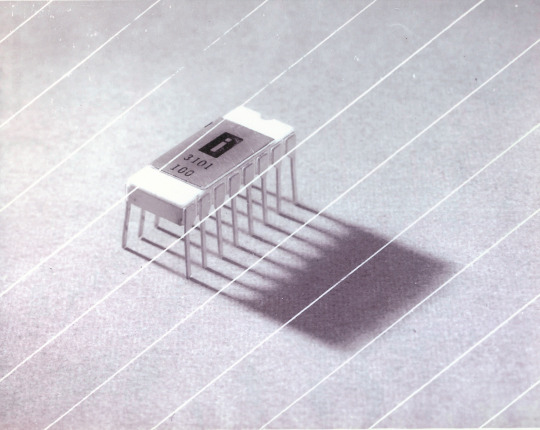

Intel 3101

So I mean, obvious shout: the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that and go, "wow, 64-bit computing in 1969? That's really early," and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this (in the 60's you could run a chip fab out of a sufficiently well-sealed garage), but they were busy laying the groundwork for the next sixty years.

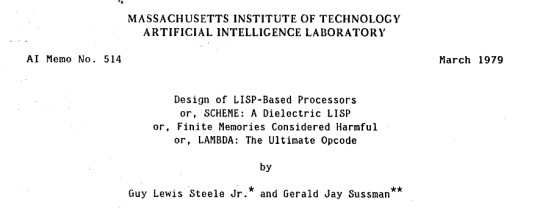

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left the lab. There have since been dozens of abortive attempts to make Lisp Chips happen, because the idea is so extremely attractive to a certain kind of programmer; the most recent big one was a pile of weird designs aimed at running OpenGenera. I bet you there are no fewer than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap: stuff designed by or for Symbolics (like the Ivory series of chips, or the processor in the 3600 machines) to go into their Lisp machines, exploiting the up-and-coming field of microcode optimization to improve Lisp performance. Sadly, there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is software compatible with the Intel 8080, which is to say that it runs the same instructions (plus some extras of its own), implemented in a completely different way.

This is a strange choice, but it was somehow the right one, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80s, even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: what follows is a joke, but only barely.

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean, the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer, because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80-based devices probably launched several dozen hardware companies that persist to this day, and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks, so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast: you can go to RS Components and buy a brand new piece of Z80 silicon clocked at 20MHz. There are probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah, I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acid Burn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has RISC-like micro-ops under the hood, it has branch prediction, it has a bunch of zany mathematical optimizations. None of these are new per se, but this is the first time you're really seeing them all at once on a chip that was going into PCs.

Without these improvements, it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell, even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it on top of a sensible instruction set in the background. That let them do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6, we live in a very, very different world from a computer hardware perspective.

From this follow many of the bizarre speculative execution bugs that plague modern computers. When you're doing your optimization on the fly, on-chip, with a second, smaller unix hidden inside your processor, eventually you're not going to be cryptographically secure.

RISC is very clearly better for most things. You can find papers stating this as far back as the 70's, when people started taking pipelining seriously and went "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun, as Intel had in the late 80's, you have to do something.

The Future

This gets us to about the year 2000. I have more chips I find interesting or cool, although from here on it's mostly microcontrollers, partly because things get pretty monotonous once Intel basically wins for a while. I might pick that up later. Also, if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post I've had in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here; it's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.

2025-03-04

It's going to be a rainy day today, which makes me feel less guilty about staying inside and working. My last course (ever! omg) is on programming FPGAs, so I'm working on an assignment for that. Too bad I didn't like redstone more when I played Minecraft; that knowledge of logic gates would come in really handy right now. After this I'll be going to my favorite tea bar to do some reading on the dynamical dark energy discussions sparked by the 2024 DESI results.

is it possible for people to like, create old consoles/computers from scratch? like if they could replicate the physical hardware using new materials, and plant old software onto the new hardware to create like, a totally new, say, win98 pc? cause i browse online and see a lot of secondhand stuff, but the issue is always that machines break down over time due to physical wear on the hardware itself, so old pcs aren't going to last forever. it makes me wonder if at a certain point, old consoles and computers are just gonna degrade past usability, or if it's possible to build new pieces of retro hardware just as they would have been built 30 or 40 years ago

Can of worms! I am happy to open it, though. For the moment I will ignore any rights issues, for various reasons including "those eventually expire" and "patent law is the branch of IP law I know the least about."

Off the top of my head, so long as you're only* talking computer/console hardware, there aren't any particular parts that we've lost the capability to start manufacturing again, but there are more economical approaches to building neo-retro** hardware.

But before digging into that, I would like to mention that, anecdotally, a great many hardware failures I see on old computers are on parts that you can just remove and replace with something new. Hard drive failures, floppy disk drive failures, damaged capacitors, various issues with batteries/battery compartments: these are mostly fixable without resorting to scavenging genuine old parts. Hard drive and floppy drive failures may require finding something that you can actually plug into the device, but this isn't strictly impossible.

Additionally, it's common among retro computing enthusiasts to replace some of these parts with fancier parts than were possible when those machines were new. The primary use case for buying, say, floppy-to-USB converters is keeping old industrial and aviation computers alive longer, but hobbyists do also buy these (I want to put one in my 9801 too, but that's pricey, so it's just on my wishlist for after I have finished school and settled down ;u;). Sticking SSDs in old computers is also not an uncommon mod.

So-- hold on let me grab my half-disassembled PC-9801 BX2 to help me explain

(Feat. the parts I pulled out of it in the second photo)

In that second photo we have some RAM modules, a power supply, floppy drives, a hard drive, and floppy+hard drive cables. The FDD+HDD+cables are easily replaceable with new parts as mentioned, the power supply is a power supply, and the RAM chips are... actually I don't know a lot about this one. I have enough old RAM chips lying around that I haven't had to think hard about how to replace them.

Now in photo number 1 we have the motherboard and some expansion cards. The sound card is centered a bit here***, underneath it is a video expansion card, and underneath that interesting expansion card setup is the motherboard itself.

The big kickers for manufacturing new would be the CPU and the sound card. You could in theory make those new, but chip fabrication is only economical beyond a certain scale, which isn't quite realistic for a niche hobbyist market.

But what you could use instead of those is an FPGA, or Field Programmable Gate Array. These aren't within my field of expertise, so to simplify a bit: these are integrated circuits (like a CPU or a sound chip), but unlike those, they can be reprogrammed after manufacture rather than having a set-in-stone layout. So you could program one to act as an old CPU, at a cost that is... more than that of a standard mass-manufactured CPU, and less than attempting small-scale manufacture of a CPU.

So in theory you could plunk one of those down onto a custom circuit board, use the closest off-the-shelf parts, and make something that runs like a PC-98 (or Commodore, or Famicom, or Saturn, or whatever). In practice, as far as I'm aware, users who want hardware like this use something like the MiSTer FPGA (third-party link, but I think it's a pretty useful intro to the project).

And of course for many users, emulation will also do the trick.

*manufacturing cathode ray tube displays is out of the question

**idk if this is a term but I hope it is. If it's not, I'm coining it

***That's a 26k which isn't the best soundcard but it's super moe!!!!!!!!!!

Assembly is not enough, i need to fuck my processor

VHDL or Verilog. I don't have any experience in Verilog, but I have made some simple stuff on FPGAs with VHDL. It's pretty cool, but also quite frustrating, since it's simultaneously the lowest-level programming you'll probably ever do (as you are configuring real hardware) and quite abstract, so you don't really know what the synthesis tool does; this makes fixing (heisen)bugs real tricky.

If you meant 'fuck my processor' more literally, I recommend something with an LGA socket, because you will bend the pins of a PGA CPU. Also, dry it off before reinserting it in your motherboard. I don't know how you'll get any real pleasure from it, but feel free to try!

ok because i know what fpgas are now i have a super ambitious project where i make a cpu, make an llvm target for that cpu, make an operating system using that llvm target, then make programs for that operating system

oneAPI Construction Kit for RISC-V Processors

With the oneAPI Construction Kit, you can bring the oneAPI ecosystem to your RISC-V processor.

RISC-V

Codeplay, an Intel company, recently announced that its oneAPI Construction Kit supports RISC-V. RISC-V is a rapidly growing open-standard instruction set architecture (ISA), available under royalty-free open-source licenses, for processors of all kinds.

The oneAPI programming model lets a single codebase be deployed across multiple computing architectures, including CPUs, GPUs, FPGAs, and other accelerators. It does this through three things: direct programming in C++ with SYCL, a set of libraries for common functions like math, threading, and neural networks, and a hardware abstraction layer that lets code written in one language target different devices.

In order to promote open source cooperation and the creation of a cohesive, cross-architecture programming paradigm free from proprietary software lock-in, the oneAPI standard is now overseen by the UXL Foundation.

Codeplay's oneAPI Construction Kit is a framework for extending the oneAPI ecosystem to bespoke AI and HPC architectures. The latest release, version 4.0, adds RISC-V native host support for the first time, covering both native on-host compilation and cross-compilation.

This means programs can run on a RISC-V CPU and benefit from the acceleration that SYCL offers through data parallelism. With the oneAPI Construction Kit, RISC-V processor designers can now connect SYCL and the oneAPI ecosystem to their hardware, a key step toward the goal of a completely open hardware and software stack. The Kit is completely free to use and open source.

OneAPI Construction Kit

The oneAPI Construction Kit gives your processor access to an open ecosystem. It is a framework that opens up SYCL and other open standards to new hardware platforms, and it can be used to extend the oneAPI ecosystem to unique AI and HPC architectures.

Give Developers Access to a Dynamic, Open Ecosystem

With the oneAPI Construction Kit, new and customized accelerators may benefit from the oneAPI ecosystem and an abundance of SYCL libraries. Contributors from many sectors of the industry support and maintain this open environment, so you may build with the knowledge that features and libraries will be preserved. Additionally, it frees up developers’ time to innovate more quickly by reducing the amount of time spent rewriting code and managing disparate codebases.

The oneAPI Construction Kit is useful for anybody who designs hardware. To get you started, the Kit includes a reference implementation for RISC-V vector processors, although it is not confined to RISC-V and can be adapted to a variety of processors.

Codeplay Enhances the oneAPI Construction Kit with RISC-V Support

RISC-V, the rapidly expanding open-standard instruction set architecture (ISA), can be used for all sorts of processors, including accelerators and CPUs. Axelera, Codasip, and others make RISC-V processors for a variety of applications. The EU is also developing RISC-V-powered microprocessors as part of the European Processor Initiative.

Codeplay has long been a pioneer of open ecosystems. As a member of RISC-V International, it has worked on the project for a number of years, leading working groups that have helped shape the standard. Codeplay recognizes that building a genuinely open environment starts with open, standards-based hardware, but that it also requires open software and open source from top to bottom.

This is where oneAPI and SYCL come in. They offer an ecosystem of open-source, standards-based software libraries for many kinds of applications, such as oneMKL or oneDNN, combined with a well-developed programming model. Both SYCL and oneAPI target heterogeneous hardware. That means you can write code once and run it on hardware from AMD, Intel, NVIDIA or, more recently, RISC-V vendors, without being locked in to one manufacturer.

Read more on govindhtech.com

#OneAPIConstructionKit#IntelRISCV#SYCL#FPGA#IntelRISCVProcessorInterface#oneAPI#RISCV#oneDNN#oneMKL#RISCVSupport#OpenEcosystem#technology#technews#news#govindhtech

Understanding FPGA Architecture: Key Insights

Introduction to FPGA Architecture

Imagine having a circuit board that you could rewire and reconfigure as many times as you want. This adaptability is exactly what FPGAs offer. The world of electronics often seems complex and intimidating, but understanding FPGA architecture is simpler than you think. Let’s break it down step by step, making it easy for anyone to grasp the key concepts.

What Is an FPGA?

An FPGA, or Field Programmable Gate Array, is a type of integrated circuit that allows users to configure its hardware after manufacturing. Unlike traditional microcontrollers or processors that have fixed functionalities, FPGAs are highly flexible. You can think of them as a blank canvas for electrical circuits, ready to be customized according to your specific needs.

How FPGAs Are Different from CPUs and GPUs

You might wonder how FPGAs compare to CPUs or GPUs, which are more common in everyday devices like computers and gaming consoles. While CPUs are designed to handle general-purpose tasks and GPUs excel at parallel processing, FPGAs stand out because of their configurability. They don’t run pre-defined instructions like CPUs; instead, you configure the hardware directly to perform tasks efficiently.

Basic Building Blocks of an FPGA

To understand how an FPGA works, it’s important to know its basic components. FPGAs are made up of:

Programmable Logic Blocks (PLBs): These are the “brains” of the FPGA, where the logic functions are implemented.

Interconnects: These are the programmable wires and switches that connect the logic blocks.

Input/Output (I/O) blocks: These allow the FPGA to communicate with external devices.

These elements work together to create a flexible platform that can be customized for various applications.

Understanding Programmable Logic Blocks (PLBs)

The heart of an FPGA lies in its programmable logic blocks. These blocks contain the resources needed to implement logic functions, the basic operations of any digital circuit. Users describe the behavior they want in a hardware description language (HDL) such as VHDL or Verilog, and design tools translate that description into a configuration for the PLBs, specifying how the FPGA should behave for a particular application.

What are Look-Up Tables (LUTs)?

Look-Up Tables (LUTs) are a critical component of the PLBs. Think of them as small memory units that store a predefined output for every combination of their inputs. A k-input LUT (commonly 4 to 6 inputs) holds 2^k bits, and the input values act as the address that selects one of them. The LUT "looks up" the result instead of computing it with a fixed arrangement of gates, so a single LUT can implement any Boolean function of its inputs. This is what lets the same FPGA fabric take on whatever logic a design needs.
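A LUT is easy to model in software. This Python sketch assumes the common convention that the input bits, read as a binary number, select one stored bit, and the `init` value plays the role of the LUT's configuration. `LUT` and `init_from_function` are hypothetical names. Real FPGA LUTs are filled in by the vendor tools, not by hand.

```python
from collections.abc import Callable


class LUT:
    """A k-input look-up table: 2**k stored bits, selected by the input values."""

    def __init__(self, k: int, init: int) -> None:
        self.k = k
        # Bit i of `init` is the output when the inputs, read as a binary number, equal i.
        self.table = [(init >> i) & 1 for i in range(2 ** k)]

    def __call__(self, *inputs: int) -> int:
        if len(inputs) != self.k:
            raise ValueError(f"expected {self.k} inputs, got {len(inputs)}")
        # The first input is the least significant address bit.
        address = sum(bit << i for i, bit in enumerate(inputs))
        return self.table[address]


def init_from_function(k: int, f: Callable[..., int]) -> int:
    """Build the init value (the truth table) for any Boolean function f of k inputs."""
    init = 0
    for address in range(2 ** k):
        inputs = [(address >> i) & 1 for i in range(k)]
        init |= f(*inputs) << address
    return init


xor2 = LUT(2, 0b0110)  # XOR: output 1 at addresses 1 and 2
majority3 = LUT(3, init_from_function(3, lambda a, b, c: int(a + b + c >= 2)))
print(xor2(1, 0), xor2(1, 1))
print(majority3(1, 1, 0), majority3(1, 0, 0))
```

The 3-input majority function is the same logic as a full adder's carry output, so one small LUT can stand in for several gates.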

The Role of Flip-Flops in FPGA Architecture

Flip-flops are another essential building block within FPGAs. They are used for storing individual bits of data, which is crucial in sequential logic circuits. By storing and holding values, flip-flops help the FPGA maintain states and execute tasks in a particular order.
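A tiny simulation shows the difference between combinational logic and flip-flops clearly. This Python sketch models a D flip-flop as a stored bit that only updates when `tick` is called, standing in for a clock edge. It then builds a 2-bit counter whose next-state logic is purely combinational. `DFlipFlop` and `run_counter` are hypothetical names; a real design would describe this in an HDL.

```python
class DFlipFlop:
    """Holds one bit; the stored value only changes on a clock tick."""

    def __init__(self) -> None:
        self.q = 0

    def tick(self, d: int) -> None:
        self.q = d  # capture input D at the clock edge


def run_counter(cycles: int) -> list[int]:
    """A 2-bit counter: two flip-flops hold the state, combinational logic computes the next state."""
    bit0, bit1 = DFlipFlop(), DFlipFlop()
    values = []
    for _ in range(cycles):
        values.append(bit1.q * 2 + bit0.q)
        # Next-state logic (the part a LUT would implement), computed from the current outputs.
        next0 = 1 - bit0.q
        next1 = bit1.q ^ bit0.q
        # Both flip-flops update on the same clock edge.
        bit0.tick(next0)
        bit1.tick(next1)
    return values


print(run_counter(6))  # [0, 1, 2, 3, 0, 1]
```

Both next-state values are computed before either flip-flop updates. That mirrors real hardware, where every flip-flop samples its input at the same clock edge.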

Routing and Interconnects: The Backbone of FPGAs

Routing and interconnects within an FPGA are akin to the nervous system in a human body, transmitting signals between different logic blocks. Without this network of connections, the logic blocks would be isolated and unable to communicate, making the FPGA useless. Routing ensures that signals flow correctly from one part of the FPGA to another, enabling the chip to perform coordinated functions.

Why are FPGAs So Versatile?

One of the standout features of FPGAs is their versatility. Whether you're building a 5G communication system, an advanced AI model, or a simple motor controller, an FPGA can be tailored to meet the exact requirements of your application. This versatility stems from the fact that FPGAs can be reprogrammed even after they are deployed, unlike fixed-function chips that are designed for one specific task.

FPGA Configuration: How Does It Work?

FPGAs are configured through a process called “programming” or “configuration.” The desired behavior is typically described in a hardware description language like Verilog or VHDL. Design tools then synthesize that description, place and route it onto the chip's resources, and produce a bitstream. Once this bitstream is loaded, the FPGA's internal circuitry matches the logic defined in the code, essentially creating a custom-built circuit for that particular application.
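A deliberately tiny toy model shows that the configuration is just data. In this Python sketch, a hypothetical "fabric" of two 2-input LUTs is fixed, and the "bitstream" is only the contents of those LUTs. A real bitstream also sets routing, flip-flops and I/O, and it is generated by the tools rather than written by hand. `lut2`, `load_bitstream`, `HALF_ADDER` and `COMPARATOR` are illustrative names.

```python
from collections.abc import Callable


def lut2(init: int, a: int, b: int) -> int:
    """A 2-input LUT: the inputs form an address that selects one stored bit of `init`."""
    return (init >> (a | (b << 1))) & 1


def load_bitstream(bitstream: dict[str, int]) -> Callable[[int, int], tuple[int, int]]:
    """Return a function for a tiny fixed 'fabric' of two LUTs that share inputs a and b."""
    def circuit(a: int, b: int) -> tuple[int, int]:
        return lut2(bitstream["lut0"], a, b), lut2(bitstream["lut1"], a, b)
    return circuit


# Same "hardware", two different configurations.
HALF_ADDER = {"lut0": 0b1000, "lut1": 0b0110}   # lut0 = a AND b (carry), lut1 = a XOR b (sum)
COMPARATOR = {"lut0": 0b1001, "lut1": 0b0010}   # lut0 = (a == b), lut1 = (a > b)

adder = load_bitstream(HALF_ADDER)
compare = load_bitstream(COMPARATOR)
print(adder(1, 1))    # (1, 0): carry 1, sum 0
print(compare(1, 0))  # (0, 1): not equal, a is greater
```

Loading a different dictionary turns the same two LUTs into a different circuit, which is the reprogrammability described above in miniature.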

Real-World Applications of FPGAs

FPGAs are used in a wide range of industries, including:

Telecommunications: FPGAs play a crucial role in 5G networks, enabling fast data processing and efficient signal transmission.

Automotive: In modern vehicles, FPGAs are used for advanced driver assistance systems (ADAS), real-time image processing, and autonomous driving technologies.

Consumer Electronics: From smart TVs to gaming consoles, FPGAs are used to optimize performance in various devices.

Healthcare: Medical devices, such as MRI machines, use FPGAs for real-time image processing and data analysis.

FPGAs vs. ASICs: What’s the Difference?

FPGAs and ASICs (Application-Specific Integrated Circuits) are often compared because they both offer customizable hardware solutions. The key difference is that ASICs are custom-built for a specific task and cannot be reprogrammed after they are manufactured. FPGAs, on the other hand, offer the flexibility of being reconfigurable, making them a more versatile option for many applications. In exchange, an ASIC built for one job is typically faster, more power-efficient, and cheaper per unit at high volumes, but it carries much higher up-front design and manufacturing costs.

Benefits of Using FPGAs

There are several benefits to using FPGAs, including:

Flexibility: FPGAs can be reprogrammed even after deployment, making them ideal for applications that may evolve over time.

Parallel Processing: FPGAs excel at performing multiple tasks simultaneously, making them faster for certain operations than CPUs or GPUs.

Customization: FPGAs allow for highly customized solutions, tailored to the specific needs of a project.

Challenges in FPGA Design

While FPGAs offer many advantages, they also come with some challenges:

Complexity: Designing for an FPGA requires specialized knowledge of hardware description languages and digital logic.

Cost: FPGAs can be more expensive than traditional microprocessors, especially for small-scale applications.

Power Consumption: FPGAs can consume more power than ASICs implementing the same function, especially in high-performance applications.

Conclusion

Understanding FPGA architecture is crucial for anyone interested in modern electronics. These devices provide unmatched flexibility and performance in a variety of industries, from telecommunications to healthcare. Whether you're a tech enthusiast or someone looking to learn more about cutting-edge technology, FPGAs offer a fascinating glimpse into the future of computing.


[In Hindi] | AMD Xilinx Vivado: Free Download and Setup on Windows 11 / 10


#AMD#Xilinx#Vivado#Windows11#Windows10#SoftwareSuite#Download#Installation#Tutorial#LogicDevices#Programming#BeginnersGuide#ProgrammableLogicDevice#TechGuide#SoftwareSetup#TechTips#Troubleshooting#fpga#vhdltutorial#veriloghdl#verilog#learnandgrow#synthesis#vhdl#vhdlprogramming#HDLsimulation#HDLSynthesis#Youtube


FPGA Market - Exploring the Growth Dynamics

The FPGA market is growing rapidly as FPGAs find a foothold in many modern technologies. Often described as versatile components, FPGAs can be programmed and reprogrammed to perform specialized tasks, which keeps them at the forefront of innovation in industries such as telecommunications, automotive, aerospace, and consumer electronics. Unlike traditional fixed-function chips, which cannot be adapted once they are manufactured, an FPGA can be reconfigured for a new application. This enables fast prototyping and iteration, a capability that is especially important in fast-moving fields such as telecommunications and data centers. Because FPGAs can execute complex algorithms and process data at high speed, they are well positioned to meet the demands of next-generation networks and cloud computing infrastructure.

In the aerospace and defense industries, FPGAs have contributed significantly to system performance and reliability. Their flexibility enables the complex signal processing, encryption, and communication systems that defense applications require. FPGAs provide the speed and flexibility needed to meet the stringent specifications of aerospace and defense projects such as satellite communications, radar systems, and electronic warfare. Continuing improvements in FPGA processing power and power efficiency are fueling demand in these critical areas.

Consumer electronics is another growing application area for FPGAs. Across smartphones, smart devices, and the wider Internet of Things (IoT), demand for low-power, high-performance computing is rising. FPGAs make it possible to integrate a wide range of functions onto a single chip, reducing the number of components required and thereby saving board space and power. This has proven useful to consumer electronics manufacturers that want to build state-of-the-art products with advanced features and high efficiency. As IoT devices proliferate, FPGAs are likely to remain a source of innovation in this area.

Competition and investment are both growing in the FPGA market as key players develop more advanced and efficient products. R&D investment is raising FPGA performance, expanding feature sets, and lowering costs. This competitive environment is driving innovation and giving end users a wider choice of devices, both of which contribute to the growth of the overall market.

Author Bio -

Akshay Thakur

Senior Market Research Expert at The Insight Partners


ARM Industrial Computers with LabVIEW graphical programming for industrial equipment monitoring and control

Case Details

LabVIEW is a powerful and flexible graphical programming platform, particularly suited for engineering and scientific applications that require interaction with hardware devices. Its intuitive interface makes the development process more visual, helping engineers and scientists quickly build complex measurement, testing, and control systems.

Combining ARM industrial computers with LabVIEW for industrial equipment monitoring and control is an efficient and flexible solution, especially suitable for industrial scenarios requiring real-time performance, reliability, and low power consumption. Below is a key-point analysis and implementation guide.

1. Why Choose ARM Industrial Computers?

Low Power Consumption & High Efficiency: ARM processors balance performance and energy efficiency, making them ideal for long-term industrial operation.

Compact & Rugged Design: Industrial-grade ARM computers often feature wide-temperature operation, vibration resistance, and dustproofing (e.g., IP65-rated enclosures).

Rich Interfaces: Support for various industrial communication protocols (e.g., RS-485, CAN bus, EtherCAT) and expandable I/O modules.

Cost-Effective: Compared to x86 platforms, ARM solutions are typically more economical, making them suitable for large-scale deployments.

2. LabVIEW Compatibility with ARM Platforms

ARM Support in LabVIEW: Verify whether the LabVIEW version supports ARM architecture (e.g., LabVIEW NXG or running C code generated by LabVIEW on Linux RT).

Cross-Platform Development:

Option 1: Develop LabVIEW programs on an x86 PC and deploy them to ARM via cross-compilation (requires LabVIEW Real-Time Module).

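Option 2: Leverage LabVIEW’s Linux compatibility to run compiled executables on an ARM industrial computer running Linux. Confirm first that a LabVIEW Run-Time Engine is available for the ARM target, since desktop LabVIEW for Linux builds x86-64 executables.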

Hardware Drivers: Ensure that GPIO, ADC, communication interfaces, etc., have corresponding LabVIEW drivers or can be accessed via C DLL calls.

3. Typical Applications

Real-Time Data Acquisition: Connect to sensors (e.g., temperature, vibration) via Modbus/TCP, OPC UA, or custom protocols.

Edge Computing: Preprocess data (e.g., FFT analysis, filtering) on the ARM device before uploading to the cloud to reduce bandwidth usage.

Control Logic: Implement PID control, state machines, or safety interlocks (e.g., controlling relays via digital outputs).

HMI Interaction: Use LabVIEW front panels to build local touchscreen interfaces, or WebVIs for remote monitoring.
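The edge-computing idea from the list above (preprocess on the ARM device, upload less) can be illustrated with a small Python sketch. It deliberately uses a simpler technique than the FFT mentioned above: a moving-average low-pass filter plus a per-window summary (mean, RMS, peak) that replaces a block of raw samples with a handful of numbers. The function names and the choice of features are hypothetical. In an actual LabVIEW deployment you would build the same pipeline from LabVIEW's signal-processing VIs.

```python
import math
from typing import Dict, List, Sequence


def moving_average(samples: Sequence[float], width: int) -> List[float]:
    """Simple FIR low-pass filter: mean of each run of `width` consecutive samples."""
    if width < 1 or width > len(samples):
        raise ValueError("width must be between 1 and len(samples)")
    window_sum = sum(samples[:width])
    out = [window_sum / width]
    for i in range(width, len(samples)):
        # Slide the window: add the newest sample and drop the oldest one.
        window_sum += samples[i] - samples[i - width]
        out.append(window_sum / width)
    return out


def summarize_window(samples: Sequence[float]) -> Dict[str, float]:
    """Reduce a block of raw samples to a few features worth sending upstream."""
    n = len(samples)
    if n == 0:
        raise ValueError("empty window")
    mean = sum(samples) / n
    rms = math.sqrt(sum(x * x for x in samples) / n)
    peak = max(abs(x) for x in samples)
    return {"mean": mean, "rms": rms, "peak": peak, "count": n}
```

For example, a one-second window of 1,000 vibration samples becomes a single four-field record. That is where the bandwidth saving comes from, at the cost of discarding detail that the cloud side can no longer recover.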

4. Implementation Steps

Hardware Selection:

Choose an ARM industrial computer compatible with LabVIEW (e.g., ARMxy, Raspberry Pi CM5).

Add expansion I/O modules (e.g., NI 9401 digital I/O, MCC DAQ modules).

Software Configuration:

Install LabVIEW Real-Time Module or LabVIEW for Linux.

Deploy drivers for the ARM device (e.g., NI Linux Real-Time or third-party drivers).

Communication Protocol Integration:

Industrial protocols: Use the LabVIEW DSC Module for OPC UA and Modbus.

Custom protocols: Leverage TCP/IP or serial communication (VISA library).
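If you integrate Modbus yourself over plain TCP/IP rather than through the DSC Module, it helps to see what goes on the wire. The Python sketch below uses the standard `struct` module to build and parse Modbus/TCP "Read Holding Registers" frames (function code 0x03): a 7-byte MBAP header followed by the PDU. The function names are hypothetical, and only this one function code is handled. In LabVIEW you would normally rely on the DSC Module or an existing Modbus library instead of hand-building frames.

```python
import struct
from typing import List

READ_HOLDING_REGISTERS = 0x03  # Modbus function code for "Read Holding Registers"


def build_read_holding_registers(transaction_id: int, unit_id: int,
                                 start_address: int, count: int) -> bytes:
    """Build a Modbus/TCP Read Holding Registers request: MBAP header + PDU."""
    if not 1 <= count <= 125:
        raise ValueError("a single read may request 1..125 registers")
    # PDU: function code (1 byte), starting address (2 bytes), quantity (2 bytes).
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_address, count)
    # MBAP header (big-endian): transaction id, protocol id (0 for Modbus),
    # length of the bytes that follow (unit id + PDU), and unit id.
    header = struct.pack(">HHHB", transaction_id, 0, 1 + len(pdu), unit_id)
    return header + pdu


def parse_read_holding_registers(response: bytes, transaction_id: int) -> List[int]:
    """Return the 16-bit register values from a Read Holding Registers response."""
    if len(response) < 9:
        raise ValueError("response too short")
    tid, protocol_id, _length, _unit_id = struct.unpack(">HHHB", response[:7])
    if tid != transaction_id or protocol_id != 0:
        raise ValueError("unexpected transaction id or protocol id")
    function_code = response[7]
    if function_code & 0x80:
        # Exception response: high bit of the function code is set and the
        # following byte carries the exception code.
        raise ValueError(f"Modbus exception code {response[8]}")
    if function_code != READ_HOLDING_REGISTERS:
        raise ValueError(f"unexpected function code {function_code}")
    byte_count = response[8]
    data = response[9:9 + byte_count]
    if len(data) != byte_count or byte_count % 2:
        raise ValueError("malformed register data")
    # Each register is an unsigned 16-bit big-endian value.
    return list(struct.unpack(f">{byte_count // 2}H", data))
```

In practice the request bytes are written to a TCP socket connected to the device (Modbus/TCP conventionally uses port 502), and the reply is passed to the parser. The transaction id lets the client match replies to requests when several are in flight.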

Real-Time Optimization:

Use LabVIEW Real-Time’s Timed Loop to ensure stable control cycles.

Priority settings: Assign high priority to critical tasks (e.g., safety interrupts).
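A fixed control cycle matters because discrete control algorithms such as PID (listed under Typical Applications) assume a constant sample period. The Python sketch below is a minimal discrete PID controller meant to be called exactly once per period `dt`. It clamps its output to actuator limits and uses conditional integration, a simple anti-windup scheme, which is one choice among several. The class and parameter names are hypothetical. In LabVIEW you would normally place LabVIEW's PID VIs inside a Timed Loop rather than write this by hand.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PidController:
    """Discrete PID controller meant to be called exactly once per control period."""
    kp: float
    ki: float
    kd: float
    dt: float  # control period in seconds, assumed fixed (e.g. a timed loop's period)
    out_min: float = float("-inf")
    out_max: float = float("inf")
    _integral: float = field(default=0.0, init=False, repr=False)
    _prev_error: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        # No derivative on the first call, because there is no previous error yet.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / self.dt
        self._prev_error = error
        candidate_integral = self._integral + error * self.dt
        output = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        # Clamp to actuator limits. The integral is only committed when the output
        # is not saturated (conditional-integration anti-windup).
        if output > self.out_max:
            return self.out_max
        if output < self.out_min:
            return self.out_min
        self._integral = candidate_integral
        return output
```

A typical use is `pid = PidController(kp=2.0, ki=0.5, kd=0.1, dt=0.01, out_min=0.0, out_max=100.0)`, followed by `pid.update(setpoint, reading)` once per 10 ms cycle. If the loop period jitters, the fixed `dt` no longer matches reality and the integral and derivative terms drift. That is why the deterministic timing discussed above matters.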

Remote Monitoring:

Push data to SCADA systems (e.g., Ignition, InduSoft) via LabVIEW Web Services or MQTT.

5. Challenges & Solutions

ARM Compatibility: If LabVIEW does not natively support a specific ARM device, consider:

Generating C code (LabVIEW C Generator) to call low-level hardware APIs.

Using middleware (e.g., Node-RED) to bridge LabVIEW and ARM hardware.

Real-Time Requirements: For μs-level response, pair with a real-time OS (e.g., Xenomai) or FPGA extensions (e.g., NI Single-Board RIO).

Long-Term Maintenance: Adopt modular programming (LabVIEW SubVIs) and version control (Git integration).

6. Recommended Toolchain

Hardware: NI CompactRIO (ARM+FPGA), Advantech UNO-2484G (ARM Cortex-A72).

Software: LabVIEW Real-Time + Vision Module (if image processing is needed).

Cloud Integration: Push data to AWS IoT or Azure IoT Hub via LabVIEW.

Conclusion

The combination of ARM industrial computers and LabVIEW provides a lightweight, cost-effective edge solution for industrial monitoring and control, particularly in power- and space-sensitive environments. With proper hardware-software architecture design, it can achieve real-time performance, reliability, and scalability. For higher performance demands, consider hybrid architectures (ARM+FPGA) or deeper integration with NI’s embedded hardware.


Unlocking a Brighter Future with VLSI: The Gateway to Chip Design Careers

Introduction to the World of VLSI

Very-Large-Scale Integration (VLSI) has revolutionized the world of electronics by enabling the design and development of integrated circuits with millions, and in modern chips billions, of transistors. In today’s digital age, everything from smartphones to autonomous vehicles relies on VLSI technologies. This field not only drives innovation but also creates abundant career opportunities for aspiring engineers. With industries increasingly demanding skilled professionals, VLSI training has become a cornerstone for electronics and electrical graduates aiming to shape the future. Pursuing a specialized program in VLSI is essential for gaining hands-on knowledge and mastering the nuances of chip design, verification, and semiconductor technology. For students eager to make a mark in this high-growth domain, starting with foundational training sets the stage for long-term success.

Importance of Practical VLSI Skills

Theoretical knowledge alone is not sufficient to thrive in the VLSI industry. Employers look for candidates who can demonstrate real-world problem-solving skills with the tools and methodologies of modern chip design. This is where practical training becomes invaluable. Training programs that emphasize industry-relevant experience help bridge the gap between academic learning and corporate expectations. The best VLSI training institute in Hyderabad offers a curriculum that mirrors actual work scenarios, equipping students with expertise in ASIC and FPGA design, physical verification, and layout techniques. Such institutes use advanced software tools and simulations, ensuring learners are ready to step into the semiconductor industry with confidence. Practical exposure increases job readiness and boosts the chances of landing lucrative roles in top organizations.

Why Job-Oriented VLSI Training Matters

In a competitive job market, simply completing a degree in electronics or electrical engineering may not be enough. Employers often prefer candidates who have undergone rigorous, job-focused training. A job-oriented VLSI training program in Hyderabad prepares students to meet these expectations head-on. Such programs are tailored to align with the current hiring needs of chip design companies, offering modules in RTL coding, verification, and DFT (Design for Testability). They also include placement support, mock interviews, and resume-building sessions, which make students more attractive to recruiters. Job-oriented training ensures learners understand both the theoretical and practical aspects of VLSI, helping them stand out among a pool of generic applicants. It also builds confidence during the transition from academic settings into full-time employment.

Career Prospects After VLSI Training

After completing a comprehensive VLSI course, a wide array of career paths opens up for students. From design engineer to verification specialist, physical design engineer to embedded systems developer, the opportunities are diverse and promising. The semiconductor sector continues to grow with advancements in AI, IoT, and 5G, all of which depend heavily on VLSI technology. With the right training, candidates can secure roles in both multinational corporations and domestic startups. Furthermore, continuous learning and certification can lead to rapid career progression and global opportunities. Students who invest in specialized VLSI training often find themselves ahead of their peers, both in terms of skills and job prospects. A structured learning path also gives them the clarity to choose roles that align with their interests and strengths.

Conclusion: Choose the Right Platform

To excel in the VLSI industry, one must invest in quality education that blends theory with hands-on training. Choosing a reputed institute is critical for gaining the right skill set and securing meaningful employment. The learning experience should be immersive, practical, and aligned with industry standards. For aspirants seeking a reliable and impactful learning journey, platforms like takshila-vlsi.com offer a well-structured approach to VLSI training. By enrolling in a trusted program, students can step confidently into the semiconductor industry and build a rewarding career in chip design and development.


The FPGA Programming Handbook, Second Edition by Frank Bruno and Guy Eschemann #FPGA #programming
