#Python prediction


Text

The Rise Of Python Web Development: Trends And Predictions

Python has been making strides this year toward becoming a first-class citizen in web development. Simple, readable, and backed by a rich ecosystem, it has become a go-to language for millions of developers across the globe. In this post, we explore the trends shaping Python web development today, offer a view of its future, and show how Python development companies are evolving to handle modern challenges.

Current Trends In Python Web Development

1. Popularity Of Frameworks

The Python ecosystem offers a rich set of web frameworks that simplify the development process. Notable among them are Django, Flask, and FastAPI.

FastAPI in particular is well suited to building APIs, with automatically generated interactive documentation and fast execution.

2. The Rise Of API-Driven Development

API-driven development is becoming more and more important with the growth of mobile applications and the microservices software architectural style. Python frameworks such as FastAPI are appreciated for their effectiveness in developing strong APIs with less coding effort.

3. Security Implications

Security is of prime importance in web applications. Django ships with built-in protections against a wide range of threats, including SQL injection and cross-site scripting, which makes secure development more straightforward with Django than with Flask. Flask supports secure deployment as well, but compared with Django it requires more manual setup.
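To make the SQL injection risk concrete, here is a minimal sketch using Python's standard-library `sqlite3` module rather than Django itself. Django's ORM builds parameterized queries on your behalf; the table, the helper names `find_user_unsafe` and `find_user_safe`, and the sample input are all made up for illustration.

```python
import sqlite3

def find_user_unsafe(conn, name):
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

malicious = "nobody' OR '1'='1"
leaked = find_user_unsafe(conn, malicious)   # the injected OR clause matches every row
blocked = find_user_safe(conn, malicious)    # treated as a literal name, matches nothing
```

The same input leaks the whole table through the string-built query and returns nothing through the parameterized one, which is the class of mistake a framework's query layer is meant to rule out by default.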

4. Adoption Of Asynchronous Programming

A main driver of the growing adoption of asynchronous programming in Python is the increased need for responsive and scalable applications. FastAPI, which is built on the Starlette ASGI toolkit, uses Python's asynchronous features to handle concurrent requests, which lends itself to good performance even in high-traffic scenarios.
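The idea behind concurrent request handling can be sketched with the standard-library `asyncio` module alone. This is not FastAPI code; `handle_request` and `serve_concurrently` are hypothetical names, and `asyncio.sleep` stands in for real I/O such as a database or HTTP call.

```python
import asyncio
import time

async def handle_request(request_id, delay=0.1):
    # Stand-in for I/O-bound work such as a database or HTTP call.
    await asyncio.sleep(delay)
    return f"response-{request_id}"

async def serve_concurrently(n):
    # All n handlers wait on I/O at the same time instead of one after another.
    return await asyncio.gather(*(handle_request(i) for i in range(n)))

start = time.perf_counter()
responses = asyncio.run(serve_concurrently(5))
elapsed = time.perf_counter() - start
```

On an idle machine, `elapsed` should land much closer to a single 0.1-second wait than to the 0.5 seconds that five sequential waits would take, because the event loop switches between handlers while each one is waiting.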

Future Trends And Predictions

1. AI And Machine Learning Integration

As artificial intelligence (AI) and machine learning (ML) spread through the workplace, Python makes it possible to build these technologies into web applications. Python is dominant here largely because of its libraries: TensorFlow, PyTorch, and scikit-learn, for instance, provide a strong base for developing AI-powered features.

2. Emergence of Serverless Architectures

Serverless computing, which lets developers build and run applications without managing servers, is set to grow further. Python's compatibility with serverless platforms such as AWS Lambda and Azure Functions makes it well suited to developing serverless applications.
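As a rough illustration, a Python function for AWS Lambda is typically just a handler that takes an `event` and a `context`. The sketch below assumes an API Gateway proxy-style event with a `queryStringParameters` key; the handler name `lambda_handler` is a common convention rather than a requirement, and the greeting logic is invented for the example.

```python
import json

def lambda_handler(event, context):
    # API Gateway passes query parameters here; the key may be missing or None.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }

# Local smoke test with a hand-built event; no AWS account needed.
response = lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)
```

Because the handler is a plain function, it can be exercised locally with a dictionary before it is ever deployed.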

3. Enhanced Focus on Developer Experience

An increasing focus on developer experience will continue to shape Python web development. Tools and frameworks that ease the development process, through automatic code formatting and improved debugging among other things, keep raising the bar. Python development companies will invest in better development environments and tools to boost productivity.

4. Evolution Of Python Frameworks

Frameworks will continue to evolve to make developers' lives easier. New versions of existing frameworks, as well as entirely new ones, will focus more on performance, security, and ease of use. Django and Flask, for example, will very likely add more advanced features and integrations to keep pace.

How Python Development Companies Are Adapting

1. Implementing New Technologies

Python web development companies are adopting the latest technologies and trends to stay competitive. This includes adopting frameworks such as FastAPI for API development and integrating AI and ML capabilities into their projects.

2. Security Focus

Security is also growing in importance at Python development companies. They are gradually defining strategies for a secure development life cycle, based on secure-coding best practices and continuous updates throughout development.

3. Enhanced Developer Tools

Companies are investing in advanced practices and tools for a better development process. These include advanced code editors, automated testing frameworks, and continuous integration/deployment pipelines that speed up development.

4. Offering Custom Solutions

Python development companies also use Python's flexibility to build customized solutions that address their customers' specific needs. This covers critical enterprise applications, third-party integrations, and the support required to meet business needs.

Conclusion

Web development is one field where Python has evolved into a dominant language for building applications. Recent trends in its evolving role include popular headless content management systems, advanced frameworks, API-driven development, and a renewed focus on security. Python's capabilities will only grow as complex web applications rely more heavily on AI and machine learning, further strengthening its status as one of the most widely used languages in development.

#hire python developers#python development company#python web development company#Python#Python trend#Python prediction

0 notes

Text

Our beloved villains

I know I said I'd wait, but Python and Delphi were begging to be released. Calliope and Circe will come out tomorrow instead.

Python and Delphi are the villains in Atlas' story.

Python is of course the Python from myth that was slain by Apollo, only now he's been resurrected and has been preying on the Cassandrasan family for millennia. His design is based on a Naga, though he has some human traits.

Delphi is Atlas' great-grandmother, who met Python during a great "plague" in 1936 where the village water supply was poisoned with Python's venom. Finding him devouring the recently deceased, Delphi was coaxed into helping him eat more members of her family with the promise of immortality.

Delphi is now 101, and still completely fit and at ease thanks to Python's magic. She's helped Python for decades, even sacrificing her own children. Once Python devours the dead, Delphi burns a sheep and uses those ashes instead of the recently deceased family member. Since she's the head prophet, she's gotten away with this since she met Python.

@b0njourbeach @inotonline

#atlas speaks#twisted wonderland#twst rp#twst oc rp#twisted wonderland rp#twst roleplay#twst#atlas predictions#python#Delphi#art#artwork#art requests#lore dump

29 notes


Text

The Skills I Acquired on My Path to Becoming a Data Scientist

Data science has emerged as one of the most sought-after fields in recent years, and my journey into this exciting discipline has been nothing short of transformative. As someone with a deep curiosity for extracting insights from data, I was naturally drawn to the world of data science. In this blog post, I will share the skills I acquired on my path to becoming a data scientist, highlighting the importance of a diverse skill set in this field.

The Foundation — Mathematics and Statistics

At the core of data science lies a strong foundation in mathematics and statistics. Concepts such as probability, linear algebra, and statistical inference form the building blocks of data analysis and modeling. Understanding these principles is crucial for making informed decisions and drawing meaningful conclusions from data. Throughout my learning journey, I immersed myself in these mathematical concepts, applying them to real-world problems and honing my analytical skills.

Programming Proficiency

Proficiency in programming languages like Python or R is indispensable for a data scientist. These languages provide the tools and frameworks necessary for data manipulation, analysis, and modeling. I embarked on a journey to learn these languages, starting with the basics and gradually advancing to more complex concepts. Writing efficient and elegant code became second nature to me, enabling me to tackle large datasets and build sophisticated models.

Data Handling and Preprocessing

Working with real-world data is often messy and requires careful handling and preprocessing. This involves techniques such as data cleaning, transformation, and feature engineering. I gained valuable experience in navigating the intricacies of data preprocessing, learning how to deal with missing values, outliers, and inconsistent data formats. These skills allowed me to extract valuable insights from raw data and lay the groundwork for subsequent analysis.
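Two of the preprocessing steps mentioned above, filling missing values and taming outliers, can be sketched with nothing but the standard library. In practice a library such as pandas would usually do this work; the helper names `fill_missing` and `clip_outliers`, the median-fill strategy, the 1.5 × IQR rule, and the sample readings are all assumptions chosen for illustration.

```python
import statistics

def fill_missing(values):
    # Replace None with the median of the observed values.
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def clip_outliers(values, k=1.5):
    # Clamp values outside [Q1 - k*IQR, Q3 + k*IQR].
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]

raw = [12.0, None, 11.5, 13.0, 250.0, 12.5, None, 11.0]
filled = fill_missing(raw)       # both None entries become the median
cleaned = clip_outliers(filled)  # the 250.0 reading is pulled back toward the rest
```

Whether to clip, drop, or keep an outlier depends on the domain; clipping is shown here only because it keeps the list the same length.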

Data Visualization and Communication

Data visualization plays a pivotal role in conveying insights to stakeholders and decision-makers. I realized the power of effective visualizations in telling compelling stories and making complex information accessible. I explored various tools and libraries, such as Matplotlib and Tableau, to create visually appealing and informative visualizations. Sharing these visualizations with others enhanced my ability to communicate data-driven insights effectively.

Machine Learning and Predictive Modeling

Machine learning is a cornerstone of data science, enabling us to build predictive models and make data-driven predictions. I delved into the realm of supervised and unsupervised learning, exploring algorithms such as linear regression, decision trees, and clustering techniques. Through hands-on projects, I gained practical experience in building models, fine-tuning their parameters, and evaluating their performance.

Database Management and SQL

Data science often involves working with large datasets stored in databases. Understanding database management and SQL (Structured Query Language) is essential for extracting valuable information from these repositories. I embarked on a journey to learn SQL, mastering the art of querying databases, joining tables, and aggregating data. These skills allowed me to harness the power of databases and efficiently retrieve the data required for analysis.
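Querying, joining, and aggregating can be tried out without a database server by using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their rows below are invented purely for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'north'), (2, 'south'), (3, 'north');
    INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 30.0), (3, 2, 15.0), (4, 3, 50.0);
""")

# Join orders to customers, then aggregate order count and revenue per region.
rows = conn.execute("""
    SELECT c.region, COUNT(o.id) AS n_orders, SUM(o.amount) AS revenue
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.region
    ORDER BY c.region
""").fetchall()
# rows == [('north', 3, 100.0), ('south', 1, 15.0)]
```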

Domain Knowledge and Specialization

While technical skills are crucial, domain knowledge adds a unique dimension to data science projects. By specializing in specific industries or domains, data scientists can better understand the context and nuances of the problems they are solving. I explored various domains and acquired specialized knowledge, whether it be healthcare, finance, or marketing. This expertise complemented my technical skills, enabling me to provide insights that were not only data-driven but also tailored to the specific industry.

Soft Skills — Communication and Problem-Solving

In addition to technical skills, soft skills play a vital role in the success of a data scientist. Effective communication allows us to articulate complex ideas and findings to non-technical stakeholders, bridging the gap between data science and business. Problem-solving skills help us navigate challenges and find innovative solutions in a rapidly evolving field. Throughout my journey, I honed these skills, collaborating with teams, presenting findings, and adapting my approach to different audiences.

Continuous Learning and Adaptation

Data science is a field that is constantly evolving, with new tools, technologies, and trends emerging regularly. To stay at the forefront of this ever-changing landscape, continuous learning is essential. I dedicated myself to staying updated by following industry blogs, attending conferences, and participating in courses. This commitment to lifelong learning allowed me to adapt to new challenges, acquire new skills, and remain competitive in the field.

In conclusion, the journey to becoming a data scientist is an exciting and dynamic one, requiring a diverse set of skills. From mathematics and programming to data handling and communication, each skill plays a crucial role in unlocking the potential of data. Aspiring data scientists should embrace this multidimensional nature of the field and embark on their own learning journey. If you want to learn more about data science, I highly recommend that you contact ACTE Technologies because they offer Data Science courses and job placement opportunities. Experienced teachers can help you learn better. You can find these services both online and offline. Take things step by step and consider enrolling in a course if you’re interested. By acquiring these skills and continuously adapting to new developments, you can make a meaningful impact in the world of data science.

#data science#data visualization#education#information#technology#machine learning#database#sql#predictive analytics#r programming#python#big data#statistics

14 notes


Text

Autism Detection with Stacking Classifier

Introduction: Navigating the intricate world of medical research, I've always been fascinated by the potential of artificial intelligence in health diagnostics. Today, I'm elated to unveil a project close to my heart, as I have been diagnosed with ASD, and my cousin, who is 18, also has ASD. In my project, I employed machine learning to detect Adult Autism with a staggering accuracy of 95.7%. As followers of my blog know, my love for AI and medical research knows no bounds. This is a testament to the transformative power of AI in healthcare.

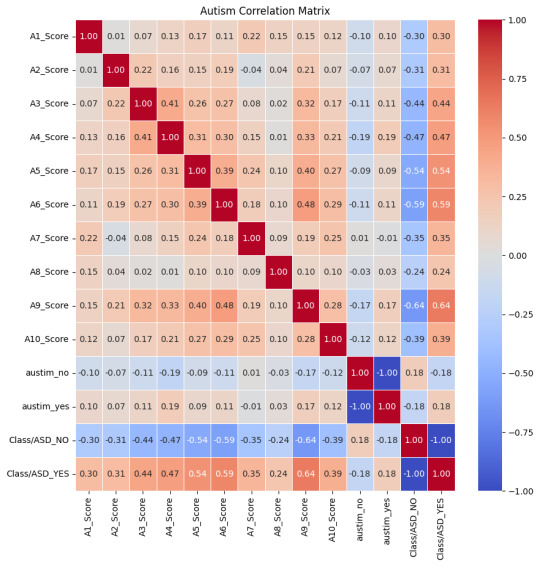

The Data: My exploration commenced with a dataset (autism_screening.csv) which was full of scores and attributes related to Autism Spectrum Disorder (ASD). My initial step was to decipher the relationships between these scores, which I visualized using a heatmap. This correlation matrix was instrumental in highlighting the attributes most significantly associated with ASD.

The Process:

Feature Selection: Drawing insights from the correlation matrix, I pinpointed the following scores as the most correlated with ASD:

'A6_Score', 'A5_Score', 'A4_Score', 'A3_Score', 'A2_Score', 'A1_Score', 'A10_Score', 'A9_Score'

Data Preprocessing: I split the data into training and testing sets, ensuring a balanced representation. To guarantee the optimal performance of my model, I standardized the data using the StandardScaler.

Model Building: I opted for two powerhouse algorithms: RandomForest and XGBoost. With the aid of Optuna, a hyperparameter optimization framework, I fine-tuned these models.

Stacking for Enhanced Performance: To elevate the accuracy, I employed a stacking classifier. This technique combines the predictions of multiple models, leveraging the strengths of each to produce a final, more accurate prediction.

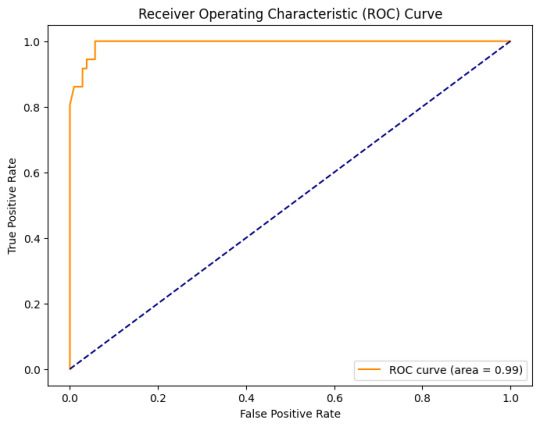

Evaluation: Testing my model, I was thrilled to achieve an accuracy of 95.7%. The Receiver Operating Characteristic (ROC) curve further validated the model's prowess, showcasing an area of 0.99.
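For readers who want a feel for the pipeline before opening the full code, here is a rough sketch of the same steps in scikit-learn. It is not the project's actual code: it runs on synthetic data from `make_classification` instead of autism_screening.csv, uses `GradientBoostingClassifier` as a stand-in for XGBoost, skips the Optuna tuning, and assumes a logistic regression as the final estimator, so its scores say nothing about the results reported above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    GradientBoostingClassifier,
    RandomForestClassifier,
    StackingClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the eight selected screening scores (not the real data).
X, y = make_classification(
    n_samples=400, n_features=8, n_informative=5, random_state=0
)

# Stratify so both splits keep the same class balance.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
        # GradientBoostingClassifier stands in for XGBoost here.
        ("gb", GradientBoostingClassifier(random_state=0)),
    ],
    final_estimator=LogisticRegression(),  # combines the base models' predictions
    cv=5,
)

# Scaling lives inside the pipeline so it is fit on training data only.
model = make_pipeline(StandardScaler(), stack)
model.fit(X_train, y_train)

accuracy = accuracy_score(y_test, model.predict(X_test))
auc = roc_auc_score(y_test, model.predict_proba(X_test)[:, 1])
```

Putting the scaler inside the pipeline is a deliberate choice: it keeps test-set statistics from leaking into training, which matters when the headline number is a held-out accuracy.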

Conclusion: This project's success is a beacon of hope and a testament to the transformative potential of AI in medical diagnostics. Achieving such a high accuracy in detecting Adult Autism is a stride towards early interventions and hope for many.

Note: For those intrigued by the technical details and eager to delve deeper, the complete code is available here. I would love to hear your feedback and questions!

Thank you for accompanying me on this journey. Together, let's keep pushing boundaries, learning, and making a tangible difference.

Stay curious, stay inspired.

#autism spectrum disorder#asd#autism#programming#python programming#python programmer#python#machine learning#ai#ai community#aicommunity#artificial intelligence#ai technology#prediction#data science#data analysis#neurodivergent

5 notes


Text

How to Analyze Data Effectively – A Complete Step-by-Step Guide

Learn how to analyze data in a structured, insightful way. From data cleaning to visualization, discover tools, techniques, and real-world examples. Data analysis is the cornerstone of decision-making in the modern world. Whether in business, science, healthcare, education, or government, data informs strategies, identifies trends,…

#business intelligence#data analysis#data cleaning#data tools#data visualization#Excel#exploratory analysis#how to analyze data#predictive analysis#Python#Tableau

0 notes

Text

The Sensor Savvy - AI for Real-World Identification Course

Enroll in The Sensor Savvy - AI for Real-World Identification Course and master the use of AI to detect, analyze, and identify real-world objects using smart sensors and machine learning.

#Sensor Savvy#AI for Real-World Identification Course#python development course#advanced excel course online#AI ML Course for Beginners with ChatGPT mastery#AI Image Detective Course#Chatbot Crafters Course#ML Predictive Power Course

0 notes

Text

Explore IGMPI’s Big Data Analytics program, designed for professionals seeking expertise in data-driven decision-making. Learn advanced analytics techniques, data mining, machine learning, and business intelligence tools to excel in the fast-evolving world of big data.

#Big Data Analytics#Data Science#Machine Learning#Predictive Analytics#Business Intelligence#Data Visualization#Data Mining#AI in Analytics#Big Data Tools#Data Engineering#IGMPI#Online Analytics Course#Data Management#Hadoop#Python for Data Science

0 notes

Text

How Israel’s Smart Manufacturing Solutions are Shaping the Future of Global Industry in 2024

Introduction:

Israel is rapidly emerging as a leader in smart manufacturing solutions, leveraging advanced technologies to drive efficiency, innovation, and sustainability in production processes. From robotics and IoT integration to AI-driven analytics and cybersecurity, Israeli companies are developing cutting-edge solutions that are transforming industries worldwide. As global manufacturing faces challenges like supply chain resilience, energy efficiency, and labor shortages, Israel’s tech-driven approach provides valuable answers. This blog explores the latest advancements in Israel’s smart manufacturing sector, highlighting how these innovations are setting new standards for industrial excellence and shaping the future of global manufacturing in 2024 and beyond.

IoT-Enabled Smart Factories

Israeli companies are leading the way in IoT integration, where connected devices and sensors enable real-time monitoring of production processes. This smart infrastructure helps manufacturers track machine health, identify bottlenecks, and optimize energy use. Israeli firms like Augury provide IoT-based solutions for predictive maintenance, helping companies prevent equipment failures, reduce downtime, and extend machine lifespan. With IoT-enabled factories, businesses can achieve a higher level of operational efficiency and reduce costs.

AI and Machine Learning for Process Optimization

Artificial intelligence is at the core of Israel’s smart manufacturing innovations. By using machine learning algorithms, manufacturers can analyze vast amounts of data to improve quality control, optimize production speeds, and reduce waste. Companies like Diagsense offer AI-driven platforms that provide real-time insights, allowing manufacturers to adjust operations swiftly. These predictive analytics systems are crucial for industries aiming to minimize waste and maximize productivity, enabling smarter, data-driven decisions.

Advanced Robotics for Precision and Flexibility

Robotics has transformed manufacturing in Israel, with smart robotic solutions that enhance both precision and flexibility on the production line. Israeli robotics firms, such as Roboteam and Elbit Systems, develop robots that assist with high-precision tasks, making production more agile. These robots adapt to varying tasks, which is particularly valuable for industries like electronics and automotive manufacturing, where precision and customization are crucial.

Cybersecurity for Manufacturing

As manufacturing becomes more digital, cybersecurity is a pressing need. Israel, known for its cybersecurity expertise, applies these strengths to secure manufacturing systems against cyber threats. Solutions from companies like Claroty and SCADAfence protect IoT devices and critical infrastructure in factories, ensuring data integrity and operational continuity. This focus on cybersecurity helps manufacturers defend against costly cyberattacks, safeguard intellectual property, and maintain secure operations.

Sustainable and Energy-Efficient Solutions

Sustainability is a growing focus in Israeli smart manufacturing, with innovations designed to reduce resource consumption and emissions. Companies such as ECOncrete are developing environmentally friendly materials and processes, supporting the global push for greener industries. From energy-efficient machinery to sustainable building materials, these innovations align with global efforts to reduce environmental impact, making manufacturing both profitable and responsible.

Conclusion

Israel’s smart manufacturing solutions are redefining the global industrial landscape, setting benchmarks in efficiency, precision, and sustainability. By embracing IoT, AI, robotics, cybersecurity, and eco-friendly practices, Israeli innovators are helping industries worldwide become more resilient and adaptive to changing market demands. These advancements equip manufacturers with the tools they need to operate efficiently while minimizing environmental impact. For organizations looking to integrate these smart technologies, the expertise of partners like Diagsense can provide invaluable insights and tailored solutions, making it easier to navigate the smart manufacturing revolution and ensure long-term success in a competitive global market.

#pdm solutions#leak detection software#predicting energy#smart manufacturing solutions#predicting buying behavior using machine learning python#predicting energy consumption

0 notes

Text

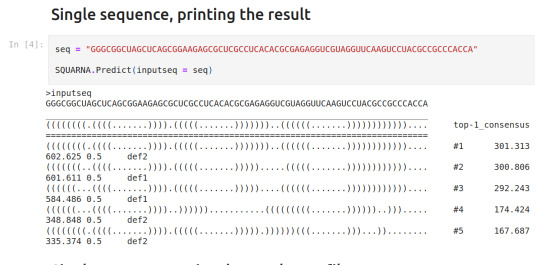

Now it's that simple to predict RNA secondary structure with the SQUARNA Python 3 library:

pip install SQUARNA

import SQUARNA

SQUARNA.Predict(inputseq="CCCGNRAGGG")

Demo: github.com/febos/SQUARNA/blob/main/demo.ipynb

BioRxiv: doi.org/10.1101/2023.08.28.555103

1 note


Text

Python for Predictive Maintenance

0 notes

Text

youtube

How OpenAI fixed AI + math #ai

#that was my question as well#because I went “if calculator good and if python good why ai so shit at maths herp derp?”#but stuff like chatgpt is first and foremost focused on predictive text not necessarily logic and reasoning#mada mada google-san!

1 note


Text

Why Predictive Maintenance Is the Key to Future-Proofing Your Operations

The capacity to foresee and avoid equipment breakdowns is not just a competitive advantage but a necessity in today's fast-paced industrial scene. Predictive maintenance (PdM) solutions are becoming a vital tactic for businesses looking to future-proof their operations. By using modern sensing technology and data analytics, businesses can anticipate equipment failure and take preventative measures to minimize costly downtime, prolong equipment life, and maximize operational efficiency.

The Evolution of Maintenance Strategies

Conventional maintenance approaches have generally been either reactive or preventive. Reactive maintenance, sometimes known as "run-to-failure," is the practice of repairing equipment after it malfunctions, which can result in unplanned downtime and possible safety hazards. Preventive maintenance, by contrast, schedules routine maintenance regardless of the equipment's condition, which occasionally leads to needless effort and additional expense.

By utilizing real-time data and sophisticated algorithms to anticipate equipment breakdowns before they occur, predictive maintenance provides a more sophisticated method. This approach optimizes resources and lowers total maintenance costs by preventing downtime and ensuring that maintenance is only done when necessary.

How Predictive Maintenance Works

Big data analytics, machine learning, and the Internet of Things (IoT) are some of the technologies that are essential to predictive maintenance. Large volumes of data, such as temperature, vibration, pressure, and other performance indicators, are gathered by IoT devices from equipment. After that, machine learning algorithms are used to examine this data to find trends and anticipate future problems.
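A toy version of "examine sensor data to find trends" is a rolling z-score check: compare each new reading with the mean and spread of the last few readings and flag large deviations. The sketch below uses only the standard library; the function name `detect_anomalies`, the window of 5, the threshold of 3 standard deviations, and the vibration values are all assumptions, and production systems would typically rely on trained machine learning models rather than a fixed rule like this.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose reading deviates strongly from the recent window."""
    recent = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            # Flag readings more than `threshold` standard deviations from the recent mean.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(i)
        recent.append(value)
    return flagged

# Hypothetical vibration amplitudes; index 7 is a sudden spike.
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.53, 0.51, 1.40, 0.52, 0.50]
alerts = detect_anomalies(vibration)
# alerts == [7]
```

Note that the spike itself enters the window afterwards and inflates the spread, which is one reason real systems use more robust statistics or learned baselines.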

Benefits of Predictive Maintenance:

Decreased Downtime: Reducing unscheduled downtime is one of predictive maintenance's most important benefits. By anticipating when equipment is likely to break, businesses can schedule maintenance during off-peak hours and minimize operational disruption.

Cost savings: By lowering the expenses of emergency repairs and equipment replacements, predictive maintenance helps save money. It also cuts labor expenses by avoiding unneeded maintenance operations.

Increased Equipment Life: Businesses may minimize the frequency of replacements and prolong the life of their gear by routinely checking the operation of their equipment and performing maintenance only when necessary.

Increased Safety: By averting major equipment breakdowns that can endanger employees, predictive maintenance can also increase workplace safety.

Optimal Resource Allocation: Businesses may maximize their use of resources, including manpower and spare parts, by concentrating maintenance efforts on machinery that requires them.

Predictive Maintenance's Future

Predictive maintenance is becoming more widely available and reasonably priced for businesses of all sizes as technology develops. Predictive models will be further improved by the combination of artificial intelligence (AI) and machine learning, increasing their accuracy and dependability.

Predictive maintenance will likely become a more autonomous process in the future, with AI-driven systems scheduling and carrying out maintenance chores in addition to predicting faults. Businesses will be able to function with never-before-seen dependability and efficiency because of this degree of automation.

Conclusion:

Predictive maintenance has evolved from an abstract concept into a workable solution that is changing the way companies run their operations. Businesses can future-proof their operations by using predictive maintenance, which helps them stay ahead of problems, cut expenses, and keep a competitive advantage in the market. Those who embrace predictive maintenance will be well-positioned to prosper as the industrial landscape continues to change.

#pdm solutions#predicting buying behavior using machine learning python#predicting energy consumption#predicting energy consumption using machine learning#leak detection software#smart manufacturing solutions

0 notes

Text

Leveraging Django for Machine Learning Projects: A Comprehensive Guide

Using Django for Machine Learning Projects: Integration, Deployment, and Best Practices

Introduction: As machine learning (ML) continues to revolutionize various industries, integrating ML models into web applications has become increasingly important. Django, a robust Python web framework, provides an excellent foundation for deploying and managing machine learning projects. Whether you’re looking to build a web-based machine learning application, deploy models, or create APIs for…

0 notes

Text

Big Data vs. Traditional Data: Understanding the Differences and When to Use Python

In the evolving landscape of data science, understanding the nuances between big data and traditional data is crucial. Both play pivotal roles in analytics, but their characteristics, processing methods, and use cases differ significantly. Python, a powerful and versatile programming language, has become an indispensable tool for handling both types of data. This blog will explore the differences between big data and traditional data and explain when to use Python, emphasizing the importance of enrolling in a data science training program to master these skills.

What is Traditional Data?

Traditional data refers to structured data typically stored in relational databases and managed using SQL (Structured Query Language). This data is often transactional and includes records such as sales transactions, customer information, and inventory levels.

Characteristics of Traditional Data:

Structured Format: Traditional data is organized in a structured format, usually in rows and columns within relational databases.

Manageable Volume: The volume of traditional data is relatively small and manageable, often ranging from gigabytes to terabytes.

Fixed Schema: The schema, or structure, of traditional data is predefined and consistent, making it easy to query and analyze.

Use Cases of Traditional Data:

Transaction Processing: Traditional data is used for transaction processing in industries like finance and retail, where accurate and reliable records are essential.

Customer Relationship Management (CRM): Businesses use traditional data to manage customer relationships, track interactions, and analyze customer behavior.

Inventory Management: Traditional data is used to monitor and manage inventory levels, ensuring optimal stock levels and efficient supply chain operations.

What is Big Data?

Big data refers to extremely large and complex datasets that cannot be managed and processed using traditional database systems. It encompasses structured, unstructured, and semi-structured data from various sources, including social media, sensors, and log files.

Characteristics of Big Data:

Volume: Big data involves vast amounts of data, often measured in petabytes or exabytes.

Velocity: Big data is generated at high speed, requiring real-time or near-real-time processing.

Variety: Big data comes in diverse formats, including text, images, videos, and sensor data.

Veracity: Big data can be noisy and uncertain, requiring advanced techniques to ensure data quality and accuracy.

Use Cases of Big Data:

Predictive Analytics: Big data is used for predictive analytics in fields like healthcare, finance, and marketing, where it helps forecast trends and behaviors.

IoT (Internet of Things): Big data from IoT devices is used to monitor and analyze physical systems, such as smart cities, industrial machines, and connected vehicles.

Social Media Analysis: Big data from social media platforms is analyzed to understand user sentiments, trends, and behavior patterns.

Python: The Versatile Tool for Data Science

Python has emerged as the go-to programming language for data science due to its simplicity, versatility, and robust ecosystem of libraries and frameworks. Whether dealing with traditional data or big data, Python provides powerful tools and techniques to analyze and visualize data effectively.

Python for Traditional Data:

Pandas: The Pandas library in Python is ideal for handling traditional data. It offers data structures like DataFrames that facilitate easy manipulation, analysis, and visualization of structured data.

SQLAlchemy: Python's SQLAlchemy library provides a powerful toolkit for working with relational databases, allowing seamless integration with SQL databases for querying and data manipulation.
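A small sketch of the traditional-data workflow: pull rows from a relational database into a Pandas DataFrame, then summarize them. To stay self-contained it uses an in-memory SQLite database through the standard-library `sqlite3` module instead of SQLAlchemy, and the `sales` table and its rows are invented for the example.

```python
import sqlite3

import pandas as pd

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, product TEXT, amount REAL);
    INSERT INTO sales VALUES
        ('north', 'widget', 120.0),
        ('north', 'gadget', 80.0),
        ('south', 'widget', 200.0);
""")

# Load the query result into a DataFrame.
df = pd.read_sql_query("SELECT region, product, amount FROM sales", conn)

# Total revenue per region.
revenue = df.groupby("region")["amount"].sum()
```

Here `revenue` is a Series indexed by region, so `revenue["north"]` gives the total for that region.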

Python for Big Data:

PySpark: PySpark, the Python API for Apache Spark, is designed for big data processing. It enables distributed computing and parallel processing, making it suitable for handling large-scale datasets.

Dask: Dask is a flexible parallel computing library in Python that scales from single machines to large clusters, making it an excellent choice for big data analytics.
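The pattern these tools scale up, splitting data into partitions, processing each partition in parallel, and combining the partial results, can be illustrated on one machine with the standard library. This is not PySpark or Dask code; it uses `ThreadPoolExecutor` as a stand-in for a cluster, and the helper names and log lines are made up.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def count_words(chunk):
    # "Map" step: each worker counts words in its own partition.
    return Counter(word for line in chunk for word in line.lower().split())

def partition(items, n_parts):
    # Split the data into roughly equal partitions.
    size = max(1, -(-len(items) // n_parts))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]

lines = [
    "sensor online", "sensor offline", "pump online",
    "pump online", "sensor online", "valve offline",
]

with ThreadPoolExecutor(max_workers=3) as pool:
    partial_counts = list(pool.map(count_words, partition(lines, 3)))

# "Reduce" step: merge the per-partition results.
totals = sum(partial_counts, Counter())
```

Distributed frameworks add what this sketch leaves out, such as moving partitions across machines, recovering from worker failures, and handling data that does not fit in memory.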

When to Use Python for Data Science

Understanding when to use Python for different types of data is crucial for effective data analysis and decision-making.

Traditional Data:

Business Analytics: Use Python for traditional data analytics in business scenarios, such as sales forecasting, customer segmentation, and financial analysis. Python's libraries, like Pandas and Matplotlib, offer comprehensive tools for these tasks.

Data Cleaning and Transformation: Python is highly effective for data cleaning and transformation, ensuring that traditional data is accurate, consistent, and ready for analysis.

Big Data:

Real-Time Analytics: When dealing with real-time data streams from IoT devices or social media platforms, Python's integration with big data frameworks like Apache Spark enables efficient processing and analysis.

Large-Scale Machine Learning: For large-scale machine learning projects, Python's compatibility with libraries like TensorFlow and PyTorch, combined with big data processing tools, makes it an ideal choice.

The Importance of Data Science Training Programs

To effectively navigate the complexities of both traditional data and big data, it is essential to acquire the right skills and knowledge. Data science training programs provide comprehensive education and hands-on experience in data science tools and techniques.

Comprehensive Curriculum: Data science training programs cover a wide range of topics, including data analysis, machine learning, big data processing, and data visualization, ensuring a well-rounded education.

Practical Experience: These programs emphasize practical learning through projects and case studies, allowing students to apply theoretical knowledge to real-world scenarios.

Expert Guidance: Experienced instructors and industry mentors offer valuable insights and support, helping students master the complexities of data science.

Career Opportunities: Graduates of data science training programs are in high demand across various industries, with opportunities to work on innovative projects and drive data-driven decision-making.

Conclusion

Understanding the differences between big data and traditional data is fundamental for any aspiring data scientist. While traditional data is structured, manageable, and used for transaction processing, big data is vast, varied, and requires advanced tools for real-time processing and analysis. Python, with its robust ecosystem of libraries and frameworks, is an indispensable tool for handling both types of data effectively.

Enrolling in a data science training program equips you with the skills and knowledge needed to navigate the complexities of data science. Whether you're working with traditional data or big data, mastering Python and other data science tools will enable you to extract valuable insights and drive innovation in your field. Start your journey today and unlock the potential of data science with a comprehensive training program.

#Big Data#Traditional Data#Data Science#Python Programming#Data Analysis#Machine Learning#Predictive Analytics#Data Science Training Program#SQL#Data Visualization#Business Analytics#Real-Time Analytics#IoT Data#Data Transformation

0 notes

Text

#leak detection software#smart manufacturing solutions#predicting energy consumption#pdm solutions#PdM Solutions#consumption using machine learning#behavior using machine learning python

0 notes

Text

In today's data-driven world, businesses are constantly seeking innovative ways to leverage their vast amounts of data for competitive advantage.

0 notes