#Retail Data Extraction Services

Text

eCommerce App Data Scraping | Retail Data Extraction Services

Gain a competitive edge with our eCommerce and retail data scraping services in the USA, UK, UAE, India, China, and Spain. Unlock growth opportunities today.

Know more: https://www.mobileappscraping.com/ecommerce-app-scraping-services.php

Text

E-commerce data scraping provides detailed information on market dynamics, prevailing patterns, pricing data, competitors’ practices, and challenges.

Scrape e-commerce data such as products, pricing, deals and offers, customer reviews, ratings, text, links, seller details, images, and more. Extract e-commerce data from any dynamic website and get an edge in the competitive market. Boost your business growth, increase revenue, and improve your efficiency with Lensnure's custom e-commerce web scraping services.

We have a team of highly qualified and experienced professionals in web data scraping.

#web scraping services#data extraction#ecommerce data extraction#ecommerce web scraping#retail data scraping#scrape#retail store location data#Lensnure Solutions#web scraper#big data

Text

How to use DXVK with The Sims 3

Have you seen this post about using DXVK by Criisolate? But felt intimidated by the sheer mass of facts and information?

@desiree-uk and I compiled a guide and the configuration file to make your life easier. It focuses on players not using the EA App, but it might work for those just the same. It’s definitely worth a try.

Adding this to your game installation will result in better RAM usage, so your game is less likely to give you Error 12 or crash due to RAM issues. It does NOT give a huge performance boost, but it does give more stability and allows for higher graphics settings in game.

The full guide behind the cut. Let me know if you also would like it as PDF.

Happy simming!

Disclaimer and Credits

Desiree and I are no tech experts and just wrote down how we did this. Our ability to help if you run into trouble is limited. So use at your own risk and back up your files!

We both are on Windows 10 and start the game via TS3W.exe, not the EA App. So your experience may differ.

This guide is based on our own experiments and of course criisolate’s post on tumblr: https://www.tumblr.com/criisolate/749374223346286592/ill-explain-what-i-did-below-before-making-any

This guide is brought to you by Desiree-UK and Norn.

Compatibility

Note: This will conflict with other programs that “inject” functionality into your game, so they may stop working. Notably:

Reshade

GShade

Nvidia Experience/Nvidia Inspector/Nvidia Shaders

RivaTuner Statistics Server

It does work seamlessly with LazyDuchess’ Smooth Patch.

LazyDuchess’ Launcher: unknown

Alder Lake patch: does conflict. One user got it working by starting the game by launching TS3.exe (also with admin rights) instead of TS3W.exe. This seemed to create the cache file for DXVK. After that, the game could be started from TS3W.exe again. That might not work for everyone though.

A word on FPS and V-Sync

With such an old game it’s crucial to cap framerate (FPS). This is done in the DXVK.conf file. Same with V-Sync.

You need

a text editor (easiest to use is Windows Notepad)

to download DXVK, version 2.3.1, from here: https://github.com/doitsujin/DXVK/releases/tag/v2.3.1 Extract the archive; you are going to need the file d3d9.dll from the x32 folder

the configuration file DXVK.conf from here: https://github.com/doitsujin/DXVK/blob/master/DXVK.conf. Optional: download the edited version with the required changes here.

administrator rights on your PC

to know your game’s installation path (bin folder) and where to find the user folder

a tiny bit of patience :)

First Step: Backup

Back up your original Bin folder in your Sims 3 installation path! The DXVK file may overwrite some files! The path should be something like this (for retail): \Program Files (x86)\Electronic Arts\The Sims 3\Game\Bin (This is the folder where GraphicsRules.sgr, TS3W.exe and TS3.exe are also located.)

Back up your options.ini in your game’s user folder! Making the game use the DXVK file will count as a change in GPU driver, so the options.ini will reset once you start your game after installation. The path should be something like this: \Documents\Electronic Arts\The Sims 3 (This is the folder where your Mods folder is located).
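If you would rather script the backup than copy things by hand, here is a minimal Python sketch of the two backup steps above. The function name and the timestamped folder name are made up for illustration, and you have to pass in your own paths, since they differ between installations.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_sims3_files(bin_dir: Path, user_dir: Path, backup_root: Path) -> Path:
    """Copy the whole Bin folder and options.ini into a timestamped backup folder.

    All three paths are supplied by the caller; nothing here guesses
    where the game is installed.
    """
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = backup_root / f"sims3-backup-{stamp}"  # hypothetical folder name
    # copytree creates the target folder (and any missing parents) itself.
    shutil.copytree(bin_dir, target / "Bin")
    # options.ini sits directly in the user folder, next to the Mods folder.
    shutil.copy2(user_dir / "options.ini", target / "options.ini")
    return target
```

Call it with your own Bin folder, user folder and a backup location of your choice; it returns the folder it created so you can check the copy before going on.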

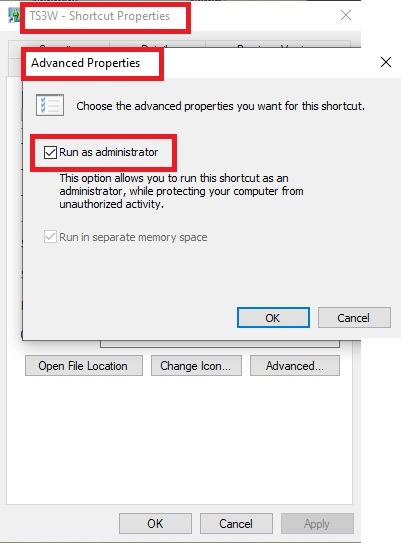

Preparations

1. Make sure you run the game as administrator. You can check that by right-clicking on the icon that starts your game. Go to Properties > Advanced and check the box “Run as administrator”. Note: This will result in a prompt each time you start your game, asking whether you want to allow this application to make modifications to your system. Click “Yes” and the game will load.

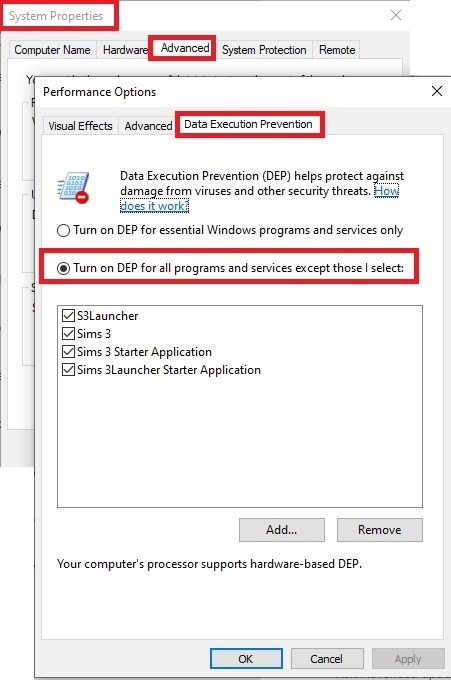

2. Make sure you have the DEP settings from Windows applied to your game.

Open the Windows Control Panel.

Click System and Security > System > Advanced System Settings.

On the Advanced tab, next to the Performance heading, click Settings.

Click the Data Execution Prevention tab.

Select “Turn on DEP for all programs and services except these”:

Click the Add button; a file explorer window opens. Navigate to your Sims 3 installation folder (the bin folder once again) and add TS3W.exe and TS3.exe.

Click OK. Then you can close all those dialog windows again.

Setting up the DXVK.conf file

Open the file with a text editor and delete everything in it. Then add these values:

d3d9.textureMemory = 1

d3d9.presentInterval = 1

d3d9.maxFrameRate = 60

d3d9.presentInterval enables V-Sync, and d3d9.maxFrameRate sets the frame rate cap. You can edit those values, but never change the first line (d3d9.textureMemory)!

The original DXVK.conf contains many more options in case you would like to add more settings.
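As a small illustration, the three lines above can also be generated with a few lines of Python, which is handy if you want to try different frame rate caps. This is only a sketch: the function name is made up, and using 0 to switch V-Sync off is our reading of the comments in DXVK's sample config, not something we tested for this guide.

```python
def render_dxvk_conf(max_frame_rate: int = 60, vsync: bool = True) -> str:
    """Return the text of a minimal DXVK.conf as described in this guide."""
    lines = [
        # The guide says never to change this first line.
        "d3d9.textureMemory = 1",
        # 1 enables V-Sync; 0 for "off" is an assumption, see the note above.
        f"d3d9.presentInterval = {1 if vsync else 0}",
        # Frame rate cap in frames per second.
        f"d3d9.maxFrameRate = {max_frame_rate}",
    ]
    return "\n".join(lines) + "\n"


print(render_dxvk_conf())
```

Save the printed text as DXVK.conf, or write the returned string to the file yourself.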

A. no Reshade/GShade

Setting up DXVK

Copy the two files d3d9.dll and DXVK.conf into the Bin folder in your Sims 3 installation path. This is the folder where GraphicsRules.sgr, TS3W.exe and TS3.exe are also located. If you are prompted to overwrite files, please choose yes (you DID back up your folder, right?)

And that’s basically all that is required to install.

Start your game now and let it run for a short while. Click around, open Buy mode or CAS, move the camera.

Now quit without saving. Once the game is closed fully, open your bin folder again and double check if a file “TS3W.DXVK-cache” was generated. If so – congrats! All done!

Things to note

Heads up, the game options will reset! So it will give you a “vanilla” start screen and options.

Don’t worry if the game seems to be frozen during loading. It may take a few minutes longer to load but it will load eventually.

The TS3W.DXVK-cache file is the actual cache DXVK is using. So don’t delete this! Just ignore it and leave it alone. When someone tells you to clear cache files – this is not one of them!

Update Options.ini

Go to your user folder and open the options.ini file with a text editor like Notepad.

Find the line “lastdevice = “. It will have several values, separated by semicolons. Copy the last one, after the last semicolon, the digits only. Close the file.

Now go to your backup version of the Options.ini file, open it and find that line “lastdevice” again. Replace the last value with the one you just copied. Make sure to only replace those digits!

Save and close the file.

Copy this version of the file into your user folder, replacing the one that is there.

Things to note:

If your GPU driver is updated, you might have to do these steps again as it might reset your device ID again. Though it seems that the DXVK ID overrides the GPU ID, so it might not happen.
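The copy-and-replace steps for the lastdevice line can be sketched in Python as follows. This is a rough illustration under the assumption that the line looks like `lastdevice = value;value;value`, as described above; the function names are made up, and you should still keep an untouched backup of your options.ini.

```python
import re

# Matches the "lastdevice = ..." line; group 2 is everything after the "=".
_LASTDEVICE = re.compile(r"^([ \t]*lastdevice[ \t]*=[ \t]*)([^\r\n]*)",
                         re.IGNORECASE | re.MULTILINE)


def last_device_id(ini_text: str) -> str:
    """Return the value after the last semicolon of the lastdevice line."""
    match = _LASTDEVICE.search(ini_text)
    if match is None:
        raise ValueError("no lastdevice line found")
    return match.group(2).split(";")[-1].strip()


def carry_over_device_id(fresh_ini: str, backup_ini: str) -> str:
    """Put the device ID from the freshly reset file into the backup text."""
    new_id = last_device_id(fresh_ini)

    def swap(match: re.Match) -> str:
        parts = match.group(2).split(";")
        parts[-1] = new_id  # only the last value is replaced
        return match.group(1) + ";".join(parts)

    return _LASTDEVICE.sub(swap, backup_ini, count=1)
```

Read both files as text, pass them in, and write the result to the options.ini in your user folder. Everything in the backup except that last value stays as it was.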

How do I know it’s working?

Open the task manager and look at RAM usage. Remember the game can only use 4 GB of RAM at maximum and starts crashing when usage goes up to somewhere between 3.2 – 3.8 GB (it’s a bit different for everybody).

So if you see values like 2.1456 for RAM usage in a large world and an ongoing save, it’s working. Generally the lower the value, the better for stability.

Also, DXVK will have generated its cache file called TS3W.DXVK-cache in the bin folder. The file size will grow with time as DXVK is adding stuff to it, e.g. from different worlds or savegames. Initially it might be something like 46 KB or 58 KB, so it’s really small.
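If you want to check for the cache file without digging through the folder, a tiny Python sketch like this will do. The function name is made up, the file name is the one from this guide, and you pass in your own bin folder.

```python
from pathlib import Path
from typing import Optional


def dxvk_cache_size_kb(bin_dir: Path,
                       cache_name: str = "TS3W.DXVK-cache") -> Optional[float]:
    """Return the cache file size in KB, or None if DXVK has not created it."""
    cache = bin_dir / cache_name
    if not cache.is_file():
        return None
    return cache.stat().st_size / 1024
```

A result of None after playing for a while suggests DXVK is not being loaded; a growing number over several sessions matches what we describe above.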

Optional: changing MemCacheBudgetValue

MemCacheBudgetValue determines the size of the game's VRAM Cache. You can edit those values but the difference might not be noticeable in game. It also depends on your computer’s hardware how much you can allow here.

The two lines of seti MemCacheBudgetValue correspond to the high RAM level and low RAM level situations. Therefore, theoretically, the first line MemCacheBudgetValue should be set to a larger value, while the second line should be set to a value less than or equal to the first line.

The original values represent 200MB (209715200) and 160MB (167772160) respectively. They are calculated as 200x1024x1024=209715200 and 160x1024x1024=167772160.
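Since it is easy to slip a digit in these long numbers, here is the same calculation as a short Python sketch (the function name is just for illustration):

```python
def megabytes_to_bytes(megabytes: int) -> int:
    """Convert megabytes to bytes using 1 MB = 1024 x 1024 bytes."""
    return megabytes * 1024 * 1024


print(megabytes_to_bytes(200))   # 209715200
print(megabytes_to_bytes(160))   # 167772160
print(megabytes_to_bytes(1024))  # 1073741824 (1 GB)
```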

Back up your GraphicsRules.sgr file! If you make a mistake here, your game won’t work anymore.

Go to your bin folder and open your GraphicsRules.sgr with a text editor.

Search and find two lines that set the variables for MemCacheBudgetValue.

Modify these two values to larger numbers. Make sure the value in the first line is higher than or equal to the value in the second line. Example values:

1073741824, which means 1 GB

2147483648, which means 2 GB

-1 (minus 1), which means no limit (but is highly experimental, use at your own risk)

Save and close the file. It might prompt you to save the file to a different place and not allow you to save in the Bin folder. Just save it someplace else in this case and copy/paste it to the Bin folder afterwards. If asked to overwrite the existing file, click yes.

Now start your game and see if it makes a difference in smoothness or texture loading. Make sure to check RAM and VRAM usage to see how it works.

You might need to change the values back and forth to find the “sweet spot” for your game. Mine seems to work best with setting the first value to 2147483648 and the second to 1073741824.
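For those who prefer not to edit the file by hand, the change can be sketched in Python like this. It assumes the two lines look like `seti MemCacheBudgetValue <number>`, which is how we read them in our own files; the function names are made up, and you should work on a copy of GraphicsRules.sgr first.

```python
import re

# Group 1 keeps the original "seti MemCacheBudgetValue " prefix and spacing.
_BUDGET = re.compile(r"(seti[ \t]+MemCacheBudgetValue[ \t]+)(-?\d+)")


def _effective(value: int) -> float:
    """Treat -1 (no limit) as larger than any real value."""
    return float("inf") if value == -1 else value


def set_mem_cache_budgets(sgr_text: str, high: int, low: int) -> str:
    """Replace the first budget value with `high` and the second with `low`."""
    if _effective(low) > _effective(high):
        raise ValueError("the second value must not exceed the first")
    values = iter((high, low))

    def swap(match: re.Match) -> str:
        return match.group(1) + str(next(values))

    new_text, count = _BUDGET.subn(swap, sgr_text, count=2)
    if count != 2:
        raise ValueError("expected two MemCacheBudgetValue lines")
    return new_text
```

Read the file as text, call the function with your two values, and save the result under the same name. If the function raises an error, nothing has been changed.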

Uninstallation

Delete these files from your bin folder (installation path):

d3d9.dll

DXVK.conf

TS3W.DXVK-cache

And if you have it, also TS3W_d3d9.log

If you changed the values in your GraphicsRules.sgr file, too, don’t forget to change them back or to replace the file with your backed up version.

OR

delete the bin folder and add it from your backup again.
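The file-by-file uninstall can also be sketched in Python. The function name is made up, the file names are the ones listed above, and the sketch only touches those four files in the folder you pass in.

```python
from pathlib import Path
from typing import List

# The files this guide adds to (or DXVK creates in) the bin folder.
DXVK_FILES = ("d3d9.dll", "DXVK.conf", "TS3W.DXVK-cache", "TS3W_d3d9.log")


def remove_dxvk_files(bin_dir: Path) -> List[str]:
    """Delete the DXVK files that exist in bin_dir and return their names."""
    removed = []
    for name in DXVK_FILES:
        path = bin_dir / name
        if path.is_file():
            path.unlink()
            removed.append(name)
    return removed
```

It does not restore GraphicsRules.sgr, so changing those values back is still a manual step.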

B. with Reshade/GShade

Follow the steps from part A. no Reshade/GShade to set up DXVK.

If you are already using Reshade (RS) or GShade (GS), you will be prompted to overwrite files, so choose YES. RS and GS may stop working, so you will need to reinstall them.

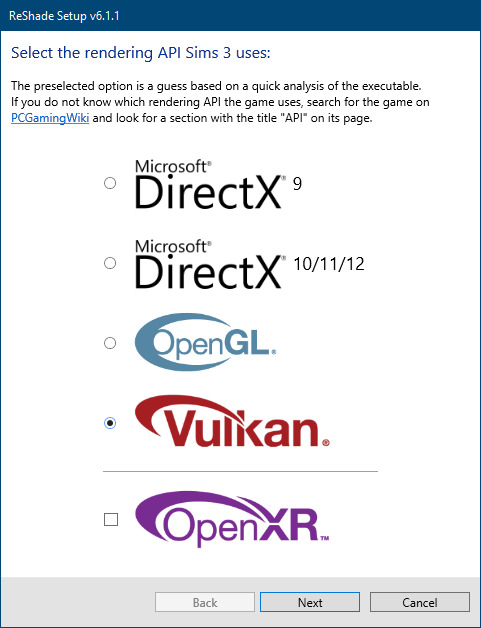

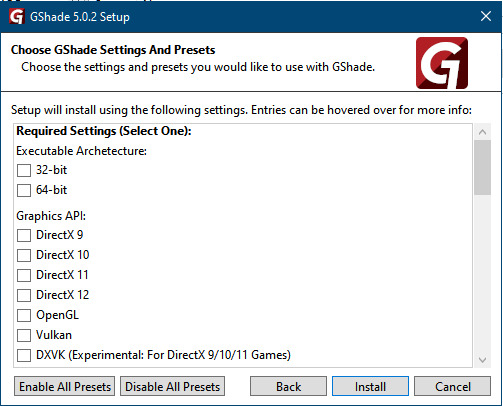

Whatever version you are using, the interface shows similar options of which API you can choose from (these screenshots are from the latest versions of RS and GS).

Please note:

Each time you install or uninstall DXVK, you switch the game between Vulkan and d3d9. This essentially changes the graphics card ID again, which results in the settings in your options.ini file being reset each time.

ReShade interface

Choose – Vulkan

Click next and choose your preferred shaders.

Hopefully this install method works and it won't install its own d3d9.dll file.

If it doesn't work, then choose DirectX9 in RS, but you must make sure to replace the d3d9.dll file with DXVK's d3d9.dll (the one from its 32-bit folder; check that its size is 3.86 MB).

GShade interface

Choose –

Executable Architecture: 32bit

Graphics API: DXVK

Hooking: Normal Mode

GShade is very problematic: it won't work straight out of the box and the overlay doesn't show up, which defeats the purpose of using it, since you can't add or edit the shaders you want to use.

Check the game's bin folder, making sure the d3d9.dll is still there and its size is 3.86 MB - that is DXVK's dll file.

If installing using the DXVK method doesn't work, you can choose the DirectX method, but there is no guarantee it works either.

The game will not run with these files in the folder:

d3d10core.dll

d3d11.dll

dxgi.dll

If you delete them, the game will start but you can't access GShade! It might be better to use ReShade.
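To see quickly whether any of those three files ended up in your bin folder, a short Python sketch like this can help. The function name is made up and the list only contains the file names mentioned above.

```python
from pathlib import Path
from typing import List

# The DLLs named above that keep the game from starting.
CONFLICTING_DLLS = ("d3d10core.dll", "d3d11.dll", "dxgi.dll")


def find_conflicting_dlls(bin_dir: Path) -> List[str]:
    """Return which of the conflicting DLLs are present in bin_dir."""
    # Compare in lower case so the check does not depend on file name casing.
    present = {p.name.lower() for p in bin_dir.iterdir() if p.is_file()}
    return [name for name in CONFLICTING_DLLS if name.lower() in present]
```

An empty list means none of them were found; the sketch only reports and never deletes anything.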

Some Vulkan and DirectX information, if you’re interested:

Vulkan is for rather high-end graphics cards but is backward compatible with some older cards. Try this method with ReShade or GShade first.

DirectX is more stable and works best with older cards and systems. Try this method if Vulkan doesn't work with ReShade/GShade in your game – remember to replace the d3d9.dll with DXVK's d3d9.dll.

For more information on the difference between Vulkan and DirectX, see this article:

https://www.howtogeek.com/884042/vulkan-vs-DirectX-12/

Text

Libraries have traditionally operated on a basic premise: Once they purchase a book, they can lend it out to patrons as much (or as little) as they like. Library copies often come from publishers, but they can also come from donations, used book sales, or other libraries. However the library obtains the book, once the library legally owns it, it is theirs to lend as they see fit.

Not so for digital books. To make licensed e-books available to patrons, libraries have to pay publishers multiple times over. First, they must subscribe (for a fee) to aggregator platforms such as Overdrive. Aggregators, like streaming services such as HBO’s Max, have total control over adding or removing content from their catalogue. Content can be removed at any time, for any reason, without input from your local library. The decision happens not at the community level but at the corporate one, thousands of miles from the patrons affected.

Then libraries must purchase each individual copy of each individual title that they want to offer as an e-book. These e-book copies are not only priced at a steep markup, up to 300% over consumer retail, but are also time- and loan-limited, meaning the files self-destruct after a certain number of loans. The library then needs to repurchase the same book, at a new price, in order to keep it in stock.

This upending of the traditional order puts massive financial strain on libraries and the taxpayers that fund them. It also opens up a world of privacy concerns; while libraries are restricted in the reader data they can collect and share, private companies are under no such obligation.

Some libraries have turned to another solution: controlled digital lending, or CDL, a process by which a library scans the physical books it already has in its collection, makes secure digital copies, and lends those out on a one-to-one “owned to loaned” ratio. The Internet Archive was an early pioneer of this technique. When the digital copy is loaned, the physical copy is sequestered from borrowing; when the physical copy is checked out, the digital copy becomes unavailable. The benefits to libraries are obvious; delicate books can be circulated without fear of damage, volumes can be moved off-site for facilities work without interrupting patron access, and older and endangered works become searchable and can get a second chance at life. Library patrons, who fund their local library’s purchases with their tax dollars, also benefit from the ability to freely access the books.

Publishers are, unfortunately, not a fan of this model, and in 2020 four of them sued the Internet Archive over its CDL program. The suit ultimately focused on the Internet Archive’s lending of 127 books that were already commercially available through licensed aggregators. The publisher plaintiffs accused the Internet Archive of mass copyright infringement, while the Internet Archive argued that its digitization and lending program was a fair use. The trial court sided with the publishers, and on September 4, the Court of Appeals for the Second Circuit reaffirmed that decision with some alterations to the underlying reasoning.

This decision harms libraries. It locks them into an e-book ecosystem designed to extract as much money as possible while harvesting (and reselling) reader data en masse. It leaves local communities’ reading habits at the mercy of curatorial decisions made by four dominant publishing companies thousands of miles away. It steers Americans away from one of the few remaining bastions of privacy protection and funnels them into a surveillance ecosystem that, like Big Tech, becomes more dangerous with each passing data breach. And by increasing the price for access to knowledge, it puts up even more barriers between underserved communities and the American dream.

11 September 2024

Text

random, deeply unscientific poll time because I'm curious how well this website reflects the overall labor force lol

before you mark "unemployed," READ THE EXPLANATION AND INSTRUCTIONS

DETAILS AND INSTRUCTIONS:

*The number listed beside each category is the number of job positions available to the total workforce, not necessarily the number of people who are actually employed.

*Not having a job does not automatically make you "unemployed." Unemployed means you are a participating member of the workforce but don't have a job currently. To be considered part of the active workforce as defined by the BLS, you MUST be ALL of the following:

16 years of age or older

residing in the 50 states or DC

available for work

actively seeking employment in the last 4 weeks

not on active duty in the military

DO NOT select unemployed unless you meet ALL of the criteria above.
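If a checklist is easier to follow as code, here is the same rule as a quick Python sketch. It only encodes the list in this post, not the official BLS methodology, and the class and field names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    age: int
    lives_in_50_states_or_dc: bool
    has_job: bool
    available_for_work: bool
    sought_work_in_last_4_weeks: bool
    on_active_military_duty: bool


def counts_as_unemployed(r: Respondent) -> bool:
    """True only if the person has no job AND meets every criterion listed above."""
    return (
        not r.has_job
        and r.age >= 16
        and r.lives_in_50_states_or_dc
        and r.available_for_work
        and r.sought_work_in_last_4_weeks
        and not r.on_active_military_duty
    )


# A 25 year old who hasn't applied anywhere recently does not count.
print(counts_as_unemployed(Respondent(25, True, False, True, False, False)))  # False
```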

Examples of not having a job but not counting as unemployed: stay-at-home parent (I know this one is a bad reflection of reality, i know i know pls dont yell at me), a full-time student not currently working, a 25 year old who hasn't applied for any jobs in over a few months, someone with a permanent or temporary disability who is either not working/seeking employment or on FMLA.

Other notes and explanation:

This is a list of all non-agriculture industries that employ 10 million or more people, based on the most recent data from the US Bureau of Labor Statistics. The math might be way off bc I wasn't very careful lmao. If you have more than one job across more than one industry, pick the one that makes up the majority of your income.

A handful of familiar sub-industries that make up a portion of a larger industry but are less than 8 million people are listed in the "Other" category so that the much larger sub-industry can have its own line.

For example, healthcare belongs to "healthcare and public services," which is around 22M and includes childcare and social support services. Because direct healthcare delivery makes up such an enormous portion, I separated it out. The rest is fewer than 5M and thus does not get its own line, so they're included in "Other." (Insurance specifically is included in finance.)

More things included in "other":

Construction

Mining, quarrying, and oil and gas extraction

Utilities

Real-estate

Text

How to know if a USB cable is hiding malicious hacker hardware

Are your USB cables sending your data to hackers?

We expect USB-C cables to perform a specific task: transferring either data or files between devices. We give little more thought to the matter, but malicious USB-C cables can do much more than what we expect.

These cables hide malicious hardware that can intercept data, eavesdrop on phone calls and messages, or, in the worst cases, take complete control of your PC or cellphone. The first of these appeared in 2008, but back then they were very rare and expensive — which meant the average user was largely safeguarded.

Since then, their availability has increased 100-fold. With specialist spy retailers selling them as “spy cables” and unscrupulous sellers passing them off as legitimate products, it’s all too easy to buy one by accident and get hacked. So, how do you know if your USB-C cable is malicious?

Further reading: We tested 43 old USB-C to USB-A cables. 1 was great. 10 were dangerous

Identifying malicious USB-C cables

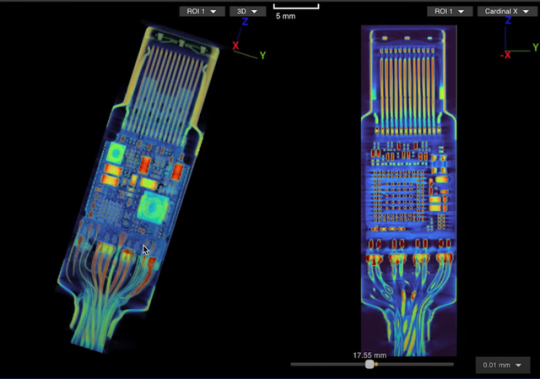

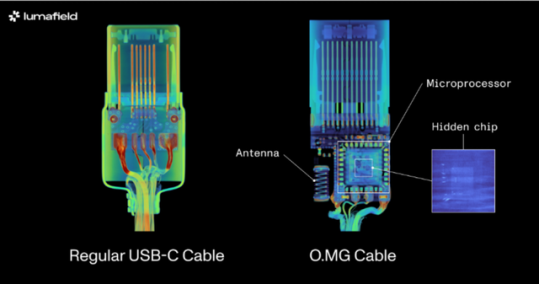

Identifying malicious USB-C cables is no easy task since they are designed to look just like regular cables. Scanning techniques have largely been thought of as the best way to sort the wheat from the chaff, which is what the industrial scanning company Lumafield, of Lumafield Neptune industrial scanner fame, recently set out to show.

The company employed both 2D and 3D scanning techniques on the O.MG USB-C cable — a well-known hacked cable built for covert field-use and research. It hides an embedded Wi-Fi server and a keylogger in its USB connector. PCWorld Executive Editor Gordon Ung covered it back in 2021, and it sounds scary as hell.

What Lumafield discovered is interesting to say the least. A 2D X-ray image could identify the cable’s antenna and microcontroller, but only the 3D CT scan could reveal another band of wires connected to a die stacked on top of the cable’s microcontroller. You can explore a 3D model of the scan yourself on Lumafield’s website.

It confirms the worst: you can only unequivocally confirm that a USB-C cable harbors malicious hardware with a 3D CT scanner, which, unless you’re a medical radiographer or 3D industrial scientist, is going to be impossible for you to do. That being so, here are some tips to avoid and identify suspicious USB-C cables without high-tech gear:

Buy from a reputable seller: If you don’t know and trust the brand, simply don’t buy. Manufacturers like Anker, Apple, Belkin, and Ugreen have rigorous quality-control processes that prevent malicious hardware parts from making it into cables. Of course, the other reason is simply that you’ll get a better product — 3D scans have similarly revealed how less reputable brands can lack normal USB-C componentry, which can result in substandard performance. If you’re in the market for a new cable right now, see our top picks for USB-C cables.

Look for the warning signs: Look for brand names or logos that don’t look right. Strange markings, cords that are inconsistent lengths or widths, and USB-C connectors with heat emanating from them when not plugged in can all be giveaways that a USB-C cable is malicious.

Use the O.MG malicious cable detector: This detector by O.MG claims to detect all malicious USB cables.

Use data blockers: If you’re just charging and not transferring data, a blocker will ensure no data is extracted. Apart from detecting malicious USB-C cables, the O.MG malicious cable detector functions as such a data blocker.

Use a detection service: If you’re dealing with extremely sensitive data for a business or governmental organization, you might want to employ the services of a company like Lumafield to detect malicious cables with 100 percent accuracy. Any such service will come with a fee, but it could be a small price to pay for security and peace of mind.

Text

AI’s Role in Business Process Automation

Automation has come a long way from simply replacing manual tasks with machines. With AI stepping into the scene, business process automation is no longer just about cutting costs or speeding up workflows—it’s about making smarter, more adaptive decisions that continuously evolve. AI isn't just doing what we tell it; it’s learning, predicting, and innovating in ways that redefine how businesses operate.

From hyperautomation to AI-powered chatbots and intelligent document processing, the world of automation is rapidly expanding. But what does the future hold?

What is Business Process Automation?

Business Process Automation (BPA) refers to the use of technology to streamline and automate repetitive, rule-based tasks within an organization. The goal is to improve efficiency, reduce errors, cut costs, and free up human workers for higher-value activities. BPA covers a wide range of functions, from automating simple data entry tasks to orchestrating complex workflows across multiple departments.

Traditional BPA solutions rely on predefined rules and scripts to automate tasks such as invoicing, payroll processing, customer service inquiries, and supply chain management. However, as businesses deal with increasing amounts of data and more complex decision-making requirements, AI is playing an increasingly critical role in enhancing BPA capabilities.

AI’s Role in Business Process Automation

AI is revolutionizing business process automation by introducing cognitive capabilities that allow systems to learn, adapt, and make intelligent decisions. Unlike traditional automation, which follows a strict set of rules, AI-driven BPA leverages machine learning, natural language processing (NLP), and computer vision to understand patterns, process unstructured data, and provide predictive insights.

Here are some of the key ways AI is enhancing BPA:

Self-Learning Systems: AI-powered BPA can analyze past workflows and optimize them dynamically without human intervention.

Advanced Data Processing: AI-driven tools can extract information from documents, emails, and customer interactions, enabling businesses to process data faster and more accurately.

Predictive Analytics: AI helps businesses forecast trends, detect anomalies, and make proactive decisions based on real-time insights.

Enhanced Customer Interactions: AI-powered chatbots and virtual assistants provide 24/7 support, improving customer service efficiency and satisfaction.

Automation of Complex Workflows: AI enables the automation of multi-step, decision-heavy processes, such as fraud detection, regulatory compliance, and personalized marketing campaigns.

As organizations seek more efficient ways to handle increasing data volumes and complex processes, AI-driven BPA is becoming a strategic priority. The ability of AI to analyze patterns, predict outcomes, and make intelligent decisions is transforming industries such as finance, healthcare, retail, and manufacturing.

“At the leading edge of automation, AI transforms routine workflows into smart, adaptive systems that think ahead. It’s not about merely accelerating tasks—it’s about creating an evolving framework that continuously optimizes operations for future challenges.”

— Emma Reynolds, CTO of QuantumOps

Trends in AI-Driven Business Process Automation

1. Hyperautomation

Hyperautomation, a term coined by Gartner, refers to the combination of AI, robotic process automation (RPA), and other advanced technologies to automate as many business processes as possible. By leveraging AI-powered bots and predictive analytics, companies can automate end-to-end processes, reducing operational costs and improving decision-making.

Hyperautomation enables organizations to move beyond simple task automation to more complex workflows, incorporating AI-driven insights to optimize efficiency continuously. This trend is expected to accelerate as businesses adopt AI-first strategies to stay competitive.

2. AI-Powered Chatbots and Virtual Assistants

Chatbots and virtual assistants are becoming increasingly sophisticated, enabling seamless interactions with customers and employees. AI-driven conversational interfaces are revolutionizing customer service, HR operations, and IT support by providing real-time assistance, answering queries, and resolving issues without human intervention.

The integration of AI with natural language processing (NLP) and sentiment analysis allows chatbots to understand context, emotions, and intent, providing more personalized responses. Future advancements in AI will enhance their capabilities, making them more intuitive and capable of handling complex tasks.

3. Process Mining and AI-Driven Insights

Process mining leverages AI to analyze business workflows, identify bottlenecks, and suggest improvements. By collecting data from enterprise systems, AI can provide actionable insights into process inefficiencies, allowing companies to optimize operations dynamically.

AI-powered process mining tools help businesses understand workflow deviations, uncover hidden inefficiencies, and implement data-driven solutions. This trend is expected to grow as organizations seek more visibility and control over their automated processes.

4. AI and Predictive Analytics for Decision-Making

AI-driven predictive analytics plays a crucial role in business process automation by forecasting trends, detecting anomalies, and making data-backed decisions. Companies are increasingly using AI to analyze customer behaviour, market trends, and operational risks, enabling them to make proactive decisions.

For example, in supply chain management, AI can predict demand fluctuations, optimize inventory levels, and prevent disruptions. In finance, AI-powered fraud detection systems analyze transaction patterns in real-time to prevent fraudulent activities. The future of BPA will heavily rely on AI-driven predictive capabilities to drive smarter business decisions.

5. AI-Enabled Document Processing and Intelligent OCR

Document-heavy industries such as legal, healthcare, and banking are benefiting from AI-powered Optical Character Recognition (OCR) and document processing solutions. AI can extract, classify, and process unstructured data from invoices, contracts, and forms, reducing manual effort and improving accuracy.

Intelligent document processing (IDP) combines AI, machine learning, and NLP to understand the context of documents, automate data entry, and integrate with existing enterprise systems. As AI models continue to improve, document processing automation will become more accurate and efficient.

Going Beyond Automation

The future of AI-driven BPA will go beyond automation—it will redefine how businesses function at their core. Here are some key predictions for the next decade:

Autonomous Decision-Making: AI systems will move beyond assisting human decisions to making autonomous decisions in areas such as finance, supply chain logistics, and healthcare management.

AI-Driven Creativity: AI will not just automate processes but also assist in creative and strategic business decisions, helping companies design products, create marketing strategies, and personalize customer experiences.

Human-AI Collaboration: AI will become an integral part of the workforce, working alongside employees as an intelligent assistant, boosting productivity and innovation.

Decentralized AI Systems: AI will become more distributed, with businesses using edge AI and blockchain-based automation to improve security, efficiency, and transparency in operations.

Industry-Specific AI Solutions: We will see more tailored AI automation solutions designed for specific industries, such as AI-driven legal research tools, medical diagnostics automation, and AI-powered financial advisory services.

AI is no longer a futuristic concept—it’s here, and it’s already transforming the way businesses operate. What’s exciting is that we’re still just scratching the surface. As AI continues to evolve, businesses will find new ways to automate, innovate, and create efficiencies that we can’t yet fully imagine.

But while AI is streamlining processes and making work more efficient, it’s also reshaping what it means to be human in the workplace. As automation takes over repetitive tasks, employees will have more opportunities to focus on creativity, strategy, and problem-solving. The future of AI in business process automation isn’t just about doing things faster; it’s about rethinking how we work altogether.

#datapeak#factr#technology#agentic ai#saas#artificial intelligence#machine learning#ai#ai-driven business solutions#machine learning for workflow#ai solutions for data driven decision making#ai business tools#aiinnovation#digitaltools#digital technology#digital trends#dataanalytics#data driven decision making#data analytics#cloudmigration#cloudcomputing#cybersecurity#cloud computing#smbs#chatbots

2 notes


Text

The Automation Revolution: How Embedded Analytics is Leading the Way

Embedded analytics tools have emerged as game-changers, seamlessly integrating data-driven insights into business applications and enabling automation across various industries. By providing real-time analytics within existing workflows, these tools empower organizations to make informed decisions without switching between multiple platforms.

The Role of Embedded Analytics in Automation

Embedded analytics refers to the integration of analytical capabilities directly into business applications, eliminating the need for separate business intelligence (BI) tools. This integration enhances automation by:

Reducing Manual Data Analysis: Automated dashboards and real-time reporting eliminate the need for manual data extraction and processing.

Improving Decision-Making: AI-powered analytics provide predictive insights, helping businesses anticipate trends and make proactive decisions.

Enhancing Operational Efficiency: Automated alerts and anomaly detection streamline workflow management, reducing bottlenecks and inefficiencies.

Increasing User Accessibility: Non-technical users can easily access and interpret data within familiar applications, enabling a data-driven culture across organizations.
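
The automated alerts and anomaly detection described above can be illustrated with a minimal sketch. It assumes a simple z-score rule over a short window of metric readings; the function name `find_anomalies`, the threshold, and the sample numbers are hypothetical and not taken from any particular embedded analytics product.

```python
from statistics import mean, stdev


def find_anomalies(readings, threshold=2.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    if len(readings) < 2:
        return []  # a spread cannot be estimated from fewer than two points
    mu = mean(readings)
    sigma = stdev(readings)
    if sigma == 0:
        return []  # all readings identical, nothing stands out
    return [
        (i, x) for i, x in enumerate(readings)
        if abs(x - mu) / sigma > threshold
    ]


# Hypothetical hourly order counts with one obvious spike.
hourly_orders = [102, 98, 105, 101, 99, 103, 100, 240, 97, 104]
alerts = find_anomalies(hourly_orders)
for index, value in alerts:
    print(f"Alert: reading {value} at position {index} looks unusual")
```

With only ten readings a z-score cannot exceed roughly 2.85, so the threshold here is deliberately low. A production system would more likely use a rolling window or a robust statistic such as the median absolute deviation.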

Industry-Wide Impact of Embedded Analytics

1. Manufacturing: Predictive Maintenance & Process Optimization

By analyzing real-time sensor data, predictive maintenance reduces downtime, enhances production efficiency, and minimizes repair costs.

2. Healthcare: Enhancing Patient Outcomes & Resource Management

Healthcare providers use embedded analytics to track patient records, optimize treatment plans, and manage hospital resources effectively.

3. Retail: Personalized Customer Experiences & Inventory Optimization

Retailers integrate embedded analytics into e-commerce platforms to analyze customer preferences, optimize pricing, and manage inventory.

4. Finance: Fraud Detection & Risk Management

Financial institutions use embedded analytics to detect fraudulent activities, assess credit risks, and automate compliance monitoring.

5. Logistics: Supply Chain Optimization & Route Planning

Supply chain managers use embedded analytics to track shipments, optimize delivery routes, and manage inventory levels.

6. Education: Student Performance Analysis & Learning Personalization

Educational institutions utilize embedded analytics to track student performance, identify learning gaps, and personalize educational experiences.

The Future of Embedded Analytics in Automation

As AI and machine learning continue to evolve, embedded analytics will play an even greater role in automation. Future advancements may include:

Self-Service BI: Empowering users with more intuitive, AI-driven analytics tools that require minimal technical expertise.

Hyperautomation: Combining embedded analytics with robotic process automation (RPA) for end-to-end business process automation.

Advanced Predictive & Prescriptive Analytics: Leveraging AI for more accurate forecasting and decision-making support.

Greater Integration with IoT & Edge Computing: Enhancing real-time analytics capabilities for industries reliant on IoT sensors and connected devices.

Conclusion

By integrating analytics within existing workflows, businesses can improve efficiency, reduce operational costs, and enhance customer experiences. As technology continues to advance, the synergy between embedded analytics and automation will drive innovation and reshape the future of various industries.

To know more: data collection and insights

data analytics services

2 notes


Text

Transforming Businesses with IoT: How Iotric’s IoT App Development Services Drive Innovation

In today’s fast-paced digital world, companies must embrace smart technology to stay ahead. The Internet of Things (IoT) is revolutionizing industries by connecting devices, collecting real-time data, and automating processes for greater efficiency. Iotric, a leading IoT app development service provider, specializes in building modern solutions that help businesses leverage IoT for growth and innovation.

Why IoT is Essential for Modern Businesses

IoT technology allows seamless communication between devices, enabling businesses to optimize operations, enhance customer experience, and reduce costs. From smart homes and wearable devices to industrial automation and healthcare monitoring, IoT is reshaping the way industries operate. With an advanced IoT app, companies can:

Enhance operational efficiency by automating processes

Gain real-time insights with connected devices

Reduce downtime through predictive maintenance

Improve customer experience with smart applications

Strengthen security with remote monitoring

Iotric: A Leader in IoT App Development

Iotric is a trusted name in IoT app development, offering end-to-end solutions tailored to various industries. Whether you need an IoT mobile app, cloud integration, or custom firmware development, Iotric delivers modern solutions that align with your business goals.

Key Features of Iotric’s IoT App Development Service

Custom IoT App Development – Iotric builds customized IoT applications that seamlessly connect to various devices and systems, ensuring smooth data flow and user-friendly interfaces.

Cloud-Based IoT Solutions – With expertise in cloud integration, Iotric develops scalable and secure cloud-based IoT applications that enable real-time data access and analytics.

Embedded Software Development – Iotric focuses on developing efficient firmware for IoT devices, ensuring optimal performance and seamless connectivity.

IoT Analytics & Data Processing – By leveraging AI-driven analytics, Iotric helps businesses extract valuable insights from IoT data, enhancing decision-making and operational efficiency.

IoT Security & Compliance – Security is a top priority for Iotric, which works to ensure that IoT applications are protected against cyber threats and comply with industry standards.

Industries Benefiting from Iotric’s IoT Solutions

Healthcare: Iotric develops IoT-powered healthcare applications for remote patient monitoring, smart wearables, and real-time health tracking, supporting better patient care and early diagnosis.

Manufacturing: With industrial IoT (IIoT) solutions, Iotric helps manufacturers optimize production lines, reduce downtime, and improve predictive maintenance strategies.

Smart Homes & Cities: From smart lighting and security systems to intelligent transportation, Iotric’s IoT solutions contribute to building connected and sustainable cities.

Retail & E-commerce: Iotric’s IoT-powered inventory tracking, smart checkout systems, and personalized customer experiences are changing the retail sector.

Why Choose Iotric for IoT App Development?

Expert Team: A team of skilled IoT developers with deep industry knowledge

Cutting-Edge Technology: Leverages AI, machine learning, and big data for smart solutions

End-to-End Services: From consultation and development to deployment and support

Proven Track Record: Successful IoT projects across multiple industries

Final Thoughts

As organizations continue to embrace digital transformation, IoT remains a game-changer. With Iotric’s advanced IoT app development services, businesses can unlock new opportunities, improve efficiency, and stay ahead of the competition. Whether you are a startup or an established enterprise, Iotric offers the expertise and innovation needed to bring your IoT vision to life.

Ready to revolutionize your business with IoT? Partner with Iotric today and experience the future of connected technology!

2 notes


Text

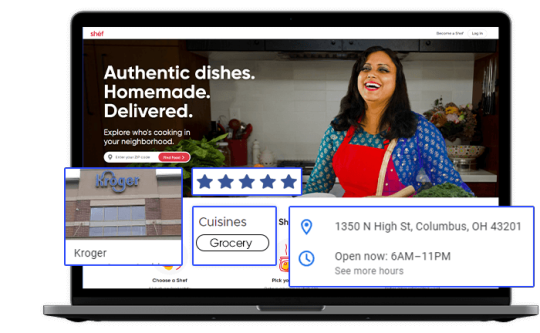

Tapping into Fresh Insights: Kroger Grocery Data Scraping

In today's data-driven world, the retail grocery industry is no exception when it comes to leveraging data for strategic decision-making. Kroger, one of the largest supermarket chains in the United States, offers a wealth of valuable data related to grocery products, pricing, customer preferences, and more. Extracting and harnessing this data through Kroger grocery data scraping can provide businesses and individuals with a competitive edge and valuable insights. This article explores the significance of grocery data extraction from Kroger, its benefits, and the methodologies involved.

The Power of Kroger Grocery Data

Kroger's extensive presence in the grocery market, both online and in physical stores, positions it as a significant source of data in the industry. This data is invaluable for a variety of stakeholders:

Kroger: The company can gain insights into customer buying patterns, product popularity, inventory management, and pricing strategies. This information empowers Kroger to optimize its product offerings and enhance the shopping experience.

Grocery Brands: Food manufacturers and brands can use Kroger's data to track product performance, assess market trends, and make informed decisions about product development and marketing strategies.

Consumers: Shoppers can benefit from Kroger's data by accessing information on product availability, pricing, and customer reviews, aiding in making informed purchasing decisions.

Benefits of Grocery Data Extraction from Kroger

Market Understanding: Extracted grocery data provides a deep understanding of the grocery retail market. Businesses can identify trends, competition, and areas for growth or diversification.

Product Optimization: Kroger and other retailers can optimize their product offerings by analyzing customer preferences, demand patterns, and pricing strategies. This data helps enhance inventory management and product selection.

Pricing Strategies: Monitoring pricing data from Kroger allows businesses to adjust their pricing strategies in response to market dynamics and competitor moves.

Inventory Management: Kroger grocery data extraction aids in managing inventory effectively, reducing waste, and improving supply chain operations.

Methodologies for Grocery Data Extraction from Kroger

To extract grocery data from Kroger, individuals and businesses can follow these methodologies:

Authorization: Ensure compliance with Kroger's terms of service and legal regulations. Authorization may be required for data extraction activities, and respecting privacy and copyright laws is essential.

Data Sources: Identify the specific data sources you wish to extract. Kroger's data encompasses product listings, pricing, customer reviews, and more.

Web Scraping Tools: Utilize web scraping tools, libraries, or custom scripts to extract data from Kroger's website. Common tools include Python libraries like BeautifulSoup and Scrapy.

Data Cleansing: Cleanse and structure the scraped data to make it usable for analysis. This may involve removing HTML tags, formatting data, and handling missing or inconsistent information.

Data Storage: Determine where and how to store the scraped data. Options include databases, spreadsheets, or cloud-based storage.

Data Analysis: Leverage data analysis tools and techniques to derive actionable insights from the scraped data. Visualization tools can help present findings effectively.

Ethical and Legal Compliance: Scrutinize ethical and legal considerations, including data privacy and copyright. Engage in responsible data extraction that aligns with ethical standards and regulations.

Scraping Frequency: Exercise caution regarding the frequency of scraping activities to prevent overloading Kroger's servers or causing disruptions.
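
The parsing, cleansing, and scraping-frequency steps above can be sketched roughly as follows. This is only an illustration under stated assumptions: the HTML snippet and its class names are invented and do not reflect Kroger's real page structure, the helper names (`ProductParser`, `clean_row`, `throttled`) are hypothetical, and the standard-library `html.parser` is used in place of BeautifulSoup or Scrapy so the example runs without extra installs. No request is sent to any website.

```python
import re
import time
from html.parser import HTMLParser

# Hypothetical markup for illustration only; not Kroger's real page structure.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name"> Whole Milk 1 gal </span><span class="price">$3.49</span></li>
  <li class="product"><span class="name">Large Eggs, 12 ct</span><span class="price">N/A</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect the text inside <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.field = None  # which field the parser is currently inside
        self.rows = []

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "li" and cls == "product":
            self.rows.append({"name": "", "price": ""})
        elif tag == "span" and cls in ("name", "price") and self.rows:
            self.field = cls

    def handle_endtag(self, tag):
        if tag == "span":
            self.field = None

    def handle_data(self, data):
        if self.field and self.rows:
            self.rows[-1][self.field] += data


def clean_row(row):
    """Normalize whitespace and turn the price into a float, or None if missing."""
    match = re.search(r"\d+(?:\.\d+)?", row["price"])
    return {
        "name": " ".join(row["name"].split()),
        "price": float(match.group()) if match else None,
    }


def throttled(items, delay_seconds=1.0):
    """Yield items with a pause between them, to avoid overloading a server."""
    for i, item in enumerate(items):
        if i:
            time.sleep(delay_seconds)
        yield item


parser = ProductParser()
parser.feed(SAMPLE_HTML)
products = [clean_row(row) for row in parser.rows]
print(products)
```

In a real pipeline, a loop such as `for url in throttled(urls, delay_seconds=2.0)` would wrap the fetch step, and only after the authorization and terms-of-service checks listed above have been satisfied.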

Conclusion

Kroger grocery data scraping opens the door to fresh insights for businesses, brands, and consumers in the grocery retail industry. By harnessing Kroger's data, retailers can optimize their product offerings and pricing strategies, while consumers can make more informed shopping decisions. However, it is crucial to prioritize ethical and legal considerations, including compliance with Kroger's terms of service and data privacy regulations. In the dynamic landscape of grocery retail, data is the key to unlocking opportunities and staying competitive. Grocery data extraction from Kroger promises to deliver fresh perspectives and strategic advantages in this ever-evolving industry.

#grocerydatascraping#restaurant data scraping#food data scraping services#food data scraping#fooddatascrapingservices#zomato api#web scraping services#grocerydatascrapingapi#restaurantdataextraction

4 notes


Text

EXPLANATION OF DATA SCIENCE

Data science

In today's data-driven world, the term "data science" has become quite the buzzword. At its core, data science is all about turning raw data into valuable insights. It's the art of collecting, analyzing, and interpreting data to make informed decisions. Think of data as the ingredients, and data scientists as the chefs who whip up delicious insights from them.

The Data Science Process

Data Collection: The journey begins with collecting data from various sources. This can include anything from customer surveys and social media posts to temperature readings and financial transactions.

Data Cleaning: Raw data is often messy and filled with errors and inconsistencies. Data scientists clean, preprocess, and organize the data to ensure it's accurate and ready for analysis.

Data Analysis: Here's where the real magic happens. Data scientists use statistical techniques and machine learning algorithms to uncover patterns, trends, and correlations in the data. This step is like searching for hidden gems in a vast treasure chest of information.

Data Visualization: Once the insights are extracted, they need to be presented in a way that's easy to understand. Data scientists create visualizations like charts and graphs to communicate their findings effectively.

Decision Making: The insights obtained from data analysis empower businesses and individuals to make informed decisions. For example, a retailer might use data science to optimize their product inventory based on customer preferences.
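
To make the five steps concrete, here is a toy walk-through using only Python's standard library. The records, field names, and the restocking rule are made up for illustration; real projects would typically rely on tools such as pandas and a plotting library instead.

```python
from statistics import mean

# Data collection: hypothetical raw sales records, including messy entries.
raw_sales = [
    {"product": "tea", "units": "12"},
    {"product": "tea", "units": "15"},
    {"product": "coffee", "units": ""},
    {"product": "coffee", "units": "30"},
    {"product": "coffee", "units": "34"},
    {"product": "juice", "units": None},
]

# Data cleaning: drop records without a usable number, convert the rest.
clean = [
    {"product": r["product"], "units": int(r["units"])}
    for r in raw_sales
    if r["units"] not in (None, "")
]

# Data analysis: average units sold per product.
by_product = {}
for record in clean:
    by_product.setdefault(record["product"], []).append(record["units"])
averages = {product: mean(units) for product, units in by_product.items()}

# Data visualization: a crude text bar chart, one '#' per two units.
for product, avg in sorted(averages.items()):
    print(f"{product:<8}{'#' * round(avg / 2)} {avg}")

# Decision making: restock the best-selling product first.
restock_first = max(averages, key=averages.get)
print("Restock first:", restock_first)
```

Note that the juice record disappears during cleaning because it has no usable value. Deciding whether to drop, fill in, or flag such records is itself part of the cleaning step.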

Applications of Data Science

Data science has a wide range of applications in various industries.

Business: Companies use data science to improve customer experiences, make marketing strategies more effective, and enhance operational efficiency.

Healthcare: Data science helps in diagnosing diseases, predicting patient outcomes, and even drug discovery.

Finance: In the financial sector, data science plays a crucial role in fraud detection, risk assessment, and stock market predictions.

Transportation: Transportation companies use data science for route optimization, predicting maintenance needs, and even developing autonomous vehicles.

Entertainment: Streaming platforms like Netflix use data science to recommend movies and TV shows based on your preferences.

Why Data Science Matters

Data science matters for several reasons:

Informed Decision-Making: It enables individuals and organizations to make decisions based on evidence rather than guesswork.

Innovation: Data science drives innovation by uncovering new insights and opportunities.

Efficiency: Businesses can streamline their operations and reduce costs through data-driven optimizations.

Personalization: It leads to personalized experiences for consumers, whether in the form of product recommendations or targeted advertisements.

In a nutshell, data science is the process of turning data into actionable insights. It's the backbone of modern decision-making, fueling innovation and efficiency across various industries. So, the next time you hear the term "data science," you'll know that it's not just a buzzword but a powerful tool that helps shape our data-driven world.

Overall, data science is a highly rewarding career that can lead to many opportunities. If you're interested in this field and have the right skills, you should definitely consider it as a career option. If you want to gain knowledge in data science, then you should contact ACTE Technologies. They offer certifications and job placement opportunities. Experienced teachers can help you learn better. You can find these services both online and offline. Take things step by step and consider enrolling in a course if you’re interested.

Thanks for reading.

2 notes


Text

Key A200 ASX Stocks Driving Australia’s Core Economic Sectors

Highlights

A200 ASX features companies across banking, mining, retail, and telecom

Key tickers include CBA, BHP, WES, TLS, NAB, and WOW

Covers prominent contributors to national supply chains and employment

The A200 ASX tracks a broad spectrum of companies listed on the Australian Securities Exchange, reflecting diversified economic activity across multiple sectors. Firms like Commonwealth Bank of Australia (ASX:CBA), BHP Group Limited (ASX:BHP), Wesfarmers Limited (ASX:WES), Telstra Group Limited (ASX:TLS), National Australia Bank (ASX:NAB), and Woolworths Group Limited (ASX:WOW) serve as examples of the depth and breadth within this benchmark index. These companies contribute to sectors such as banking, mining, telecommunications, consumer goods, and industrial operations.

Banking and Finance Sector Contributions

Commonwealth Bank of Australia (ASX:CBA) and National Australia Bank (ASX:NAB) are two of the most recognised names within the A200 ASX. These companies operate widely in retail banking, wealth management, and business lending. Both have deep connections with Australian households and businesses through branch networks and digital platforms.

Their roles within the A200 ASX reflect the scale and consistency of financial services across the economy. These institutions are central to monetary distribution, lending capacity, and overall credit flow. Their financial footprint also extends internationally through service segments in New Zealand and beyond.

Resources and Mining Sector Engagement

BHP Group Limited (ASX:BHP) is a prominent representative of the resources sector in the A200 ASX. The company is involved in the extraction and export of minerals including iron ore, copper, and metallurgical coal. It maintains global operations and infrastructure that support logistics and raw material supply chains.

As a top constituent of the index, BHP plays a critical role in global commodity markets. The mining industry it represents is foundational to Australia’s industrial profile and external trade. BHP’s inclusion in the A200 ASX reflects the importance of raw material exports in national economic outcomes.

Retail and Consumer Services Segment

Retail is well represented in the A200 ASX by Woolworths Group Limited (ASX:WOW) and Wesfarmers Limited (ASX:WES). WOW operates across supermarket and liquor retail under multiple brand umbrellas, with a widespread footprint in urban and regional areas. Its customer base spans the entire country through food and essential service offerings.

WES holds a portfolio including hardware, department stores, and office supply chains. With brands like Bunnings and Kmart, Wesfarmers addresses both household and commercial needs. Its operations stretch into chemicals, fertilisers, and industrial processes as well. Both companies contribute to employment, logistics coordination, and inventory movement across Australia.

Telecommunications Infrastructure Presence

Telstra Group Limited (ASX:TLS) represents the technology and communication segment in the A200 ASX. It is a leading provider of mobile, fixed-line, data, and cloud services. With an emphasis on expanding digital networks, TLS supports regional connectivity and national infrastructure goals.

It manages one of the most extensive telecommunication networks in the region and has seen continual development in areas such as 5G rollout and enterprise-level solutions. TLS's listing in the A200 ASX marks its relevance in supporting innovation and communications advancement across sectors.

Integrated and Diversified Sector Entities

Wesfarmers Limited (ASX:WES) showcases a diverse presence across both retail and industrial domains. This dual-sector identity gives it a unique place in the A200 ASX. The company participates in manufacturing, energy services, and agricultural inputs alongside its retail ventures.

Its integrated approach across sectors allows operational flexibility and exposure to varying economic cycles. The A200 ASX includes companies like WES that reflect broad economic influence, operational scalability, and multi-channel distribution. This cross-sector participation strengthens resilience in supply and demand chains.

0 notes

Text

Ultimate Guide to Cross-Channel Campaign Management in 2025

Cross-channel marketing done well may seem like building a dream team of channels and platforms that work together to help you provide the finest customer experience possible. You may harness the strengths of each channel, including email, social media, SMS, mobile applications, websites, and physical stores, to create a compelling and unified customer experience across the customer journey.

Consumers want smooth, integrated experiences across all touchpoints at a minimum. However, if you are unable to handle the intricacies of several platforms, technologies, and data interfaces, business gets quite complicated. This leads to a cascade effect of uneven brand experience, a fragmented customer view since various touchpoints cannot be tracked seamlessly, and trouble appropriately crediting conversions. Inadequate cross-channel coordination can lead to overlapping messages, excessive messaging, low frequency, and limited customer reach.

This guide provides actionable strategies for implementing cross-channel marketing analytics in complex environments. It covers data integration methods, attribution modeling strategies, and techniques for extracting actionable insights from multi-channel data.
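
As a small illustration of what attribution modeling means in practice, the sketch below compares two common rules, last-touch and linear attribution, on a handful of customer journeys. The journeys, channel names, and revenue figures are invented, and the function names are hypothetical; this is not drawn from any of the platforms discussed here.

```python
from collections import defaultdict

# Each journey: (ordered list of channels touched, revenue from the conversion).
journeys = [
    (["email", "social", "sms"], 90.0),
    (["social", "email"], 60.0),
    (["sms"], 30.0),
]


def last_touch(journeys):
    """Give all revenue to the final channel before conversion."""
    credit = defaultdict(float)
    for touches, revenue in journeys:
        credit[touches[-1]] += revenue
    return dict(credit)


def linear(journeys):
    """Split revenue equally across every channel in the journey."""
    credit = defaultdict(float)
    for touches, revenue in journeys:
        share = revenue / len(touches)
        for channel in touches:
            credit[channel] += share
    return dict(credit)


print("Last-touch:", last_touch(journeys))
print("Linear:    ", linear(journeys))
```

On this sample, last-touch attribution gives social no credit at all, while the linear rule credits all three channels equally. That gap is why the choice of model matters when crediting conversions.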

Download the sample report of Market Forecast: https://qksgroup.com/download-sample-form/market-forecast-cross-channel-campaign-management-platform-2024-2028-worldwide-2148

Who Needs Cross-Channel Campaign Management and Why?

Today's customers want a tailored experience from every marketing message they receive. Campaign orchestration across all platforms is therefore essential. Cross-channel campaign management enables organizations to manage marketing campaigns across many channels or platforms, resulting in the tailored experience that customers seek.

Customers lose interest in companies whose marketing campaigns are irrelevant and lack personalization. As the marketing landscape grows more competitive, marketers must customize the consumer experience to boost customer loyalty and engagement. Cross-channel campaign management enables you to build a highly tailored experience by aligning messaging across all channels, improving customer engagement.

Top Cross-Channel Campaign Management Platform

Braze

Braze is a customer interaction platform that enables long-term relationships between consumers and their favorite companies. Braze enables marketers to collect and act on data from many sources, allowing for real-time consumer engagement across channels from a single platform. From cross-channel messaging and journey orchestration to AI-powered testing and optimization, Braze helps businesses develop and sustain engaging customer interactions that promote growth and loyalty.

Bloomreach

Bloomreach is a cross-channel campaign management tool that enables customization. Bloomreach personalizes the whole consumer experience by enabling autonomous search, conversational shopping, and autonomous marketing. Businesses across industries, including retail, finance, hospitality, and gaming, build experiences that promote growth and long-term loyalty.

Insider

Insider is a platform that enables personalized, multi-channel encounters. Its core principle lies in enabling enterprise marketers to interconnect customers' data across different channels and systems. Insider's AI intent engine predicts future user behavior, allowing for personalized experiences. The company's platform is used to offer experiences over a variety of channels, including web, app, web push, email, SMS, and messaging apps. Insider provides services to a diverse range of worldwide enterprises, including retail, automotive, and travel.

MoEngage

MoEngage is a platform that focuses on providing insights-driven consumer engagement services. The firm's major goal is to help analyze client behavior and then interact with them through tailored communication channels. These include the web, mobile, and email. MoEngage is a comprehensive system that includes intensive customer analytics, artificial intelligence-powered customer journey orchestration, and customization capabilities, all in a single dashboard. This platform is largely used by product managers and growth marketers who want to give a tailored experience at various points of the customer lifecycle, including onboarding, retention, and growth.

Optimove

Optimove is a customer-centric marketing platform that enables marketers to achieve quantifiable growth by creating, executing, and optimizing marketing strategies that emphasize the customer experience above particular campaigns or goods. The platform finds significant opportunities for client involvement, continually improves interactions, and properly assesses the overall impact of all marketing initiatives. Optimove enables companies to build client loyalty, increase retention, and optimize customer lifetime value. The platform enables marketing automation, customer data insights, sophisticated analytics, and path mapping, resulting in a more comprehensive approach to customer-centric marketing success.

Download the sample report of Market Share: https://qksgroup.com/download-sample-form/market-share-cross-channel-campaign-management-platform-2023-worldwide-2344

The Right Marketing Channels for Your Brand Marketing Campaign

Choosing the correct marketing channels for your brand marketing campaign is determined by several criteria, including your target demographic, campaign objectives, budget, and the type of your products or services.

When choosing marketing channels for your brand campaign, prioritize those that provide the most effective opportunity to reach and engage your target audience depending on your specific business goals and resources. Experiment with several channels, monitor their success, and adapt your plan to improve outcomes over time.

As for the specific market share and forecast for the Cross-Channel Campaign Management market in 2023 and the forecast from 2024 to 2028, these figures are dynamic and subject to change based on various factors, including technological advancements, consumer behavior changes, and competitive landscape shifts. QKS Group's reports typically provide these details, offering a snapshot of the current market and predictions for future growth.

Market Share 2023, Worldwide: The report would detail the percentage of the market controlled by leading CCCM platforms and how they compare with each other. This information is crucial for understanding which platforms are dominating the market and their potential influence on market trends.

Market Forecast 2024-2028, Worldwide: This forecast would project the growth of the CCCM market over the next five years, considering factors like emerging technologies (e.g., AI, machine learning), consumer demands, and economic conditions. It helps companies plan their long-term strategies and investments in CCCM solutions.

Conclusion

Today's organizations recognize that cross-channel campaigns are essential for generating engagement throughout the entire customer lifecycle—from initial awareness to education, conversion, and finally, the post-purchase phase. By implementing a streamlined solution that enables you to reach, track, and analyze your customers across all marketing channels in one centralized location, you can provide a consistent customer experience while also gaining access to valuable data insights that will help boost revenue and enhance customer retention.

Related Reports –

https://qksgroup.com/market-research/market-forecast-cross-channel-campaign-management-platform-2024-2028-western-europe-7765

https://qksgroup.com/market-research/market-share-cross-channel-campaign-management-platform-2023-western-europe-7774

https://qksgroup.com/market-research/market-forecast-cross-channel-campaign-management-platform-2024-2028-usa-7764

https://qksgroup.com/market-research/market-share-cross-channel-campaign-management-platform-2023-usa-7773

https://qksgroup.com/market-research/market-forecast-cross-channel-campaign-management-platform-2024-2028-middle-east-and-africa-7763

https://qksgroup.com/market-research/market-share-cross-channel-campaign-management-platform-2023-middle-east-and-africa-7772

https://qksgroup.com/market-research/market-forecast-cross-channel-campaign-management-platform-2024-2028-china-7760

https://qksgroup.com/market-research/market-share-cross-channel-campaign-management-platform-2023-china-7769

https://qksgroup.com/market-research/market-forecast-cross-channel-campaign-management-platform-2024-2028-asia-excluding-japan-and-china-7757

https://qksgroup.com/market-research/market-share-cross-channel-campaign-management-platform-2023-asia-excluding-japan-and-china-7766

0 notes

Text

Top Notch Data Mining Company – Empowering Your Data-Driven Future

At Devant IT Solutions, we pride ourselves on being a top notch data mining company committed to transforming raw data into actionable insights. Our expert team leverages cutting-edge tools and techniques to uncover patterns, trends, and relationships within vast datasets, helping businesses make smarter, data-driven decisions. Whether you're in retail, healthcare, finance, or any other sector, our tailored solutions ensure that your data works for you efficiently and effectively.

As one of the leading data mining service providers, we offer scalable, secure, and industry-specific data solutions to meet evolving business needs. Our services range from web data extraction and predictive analytics to customer behavior analysis and risk assessment. With a client-centric approach, we help businesses stay ahead of the curve through deeper data understanding and strategic foresight. Contact us today to discover how Devant IT Solutions can turn your data into your greatest asset.

#top notch data mining company#data mining service providers#best data mining company in India#devant

0 notes

Text

Network Packet Broker Market Size, Share, Trends, Industry Growth and Competitive Outlook

Executive Summary: Network Packet Broker Market

The global network packet broker market size was valued at USD 814.27 billion in 2024 and is expected to reach USD 1575.46 billion by 2032, at a CAGR of 8.60% during the forecast period.
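
For readers who want to check how the growth rate relates to the two market-size figures, the standard compound annual growth rate formula can be applied directly. This is a quick sanity check using only the numbers quoted above; the function name `cagr` is just a label chosen for this sketch.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the summary: 2024 value, 2032 value (USD billion).
rate = cagr(814.27, 1575.46, 2032 - 2024)
print(f"Implied CAGR: {rate:.2%}")
```

Over the eight-year span this works out to roughly 8.6% per year, which agrees with the rate stated in the summary.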

This global Network Packet Broker Market research report is organized by collecting market research data from different corners of the globe with an experienced team of language resources. As market research reports are gaining immense importance in this swiftly transforming market place, Network Packet Broker Market report has been created in a way that you anticipate. Keeping in mind the customer requirement, this finest market research report is constructed with the professional and in-depth study of industry. It all-inclusively estimates general market conditions, the growth prospects in the market, possible restrictions, significant industry trends, market size, market share, sales volume and future trends.

This Network Packet Broker Market research report is formed with a nice combination of industry insight, smart solutions, practical solutions and newest technology to give better user experience. Data collection modules with large sample sizes are used to pull together data and perform base year analysis. To perform this market research study, competent and advanced tools and techniques have been used that include SWOT analysis and Porter's Five Forces Analysis. This Network Packet Broker Market report gives information about company profile, product specifications, capacity, production value, and market shares for each company for the year 2018 to 2015 under the competitive analysis study.

Discover the latest trends, growth opportunities, and strategic insights in our comprehensive Network Packet Broker Market report. Download Full Report: https://www.databridgemarketresearch.com/reports/global-network-packet-broker-market

Network Packet Broker Market Overview

Segments

By Bandwidth: The market can be segmented based on bandwidth into 1 and 10 Gbps, 40 Gbps, 100 Gbps, and 100 Gbps & above. With the increasing data traffic and need for faster processing speeds, the higher bandwidth segments are expected to witness significant growth during the forecast period.

By Organization Size: The Global Network Packet Broker Market can also be segmented based on organization size into small and medium-sized enterprises (SMEs) and large enterprises. SMEs are increasingly adopting network packet broker solutions to enhance their network visibility and security posture.

By End-Use Industry: This market can be segmented by end-use industry into IT and Telecom, Banking, Financial Services, and Insurance (BFSI), Healthcare, Retail, Government, and Others. The IT and Telecom sector is expected to dominate the market due to the extensive use of network packet brokers for network monitoring and security purposes.

Market Players

Gigamon

Cisco Systems

Ixia (Keysight)

APCON

NETSCOUT

Network Critical

Garland Technology

Broadcom (CA Technologies)

Arista Networks

Cubro Network Visibility

Flowmon Networks

Juniper Networks

cPacket Networks

Zenoss

Profitap

Browse More Reports:

Europe Reverse Logistic Market

U.S. Soft Tissue Repair Market

Middle East and Africa Fluoroscopy- C Arms Market

Global Gluten Free Pasta Market

Global Cloud Microservices Market

Global Hazelnut Paste Market

Global Agriculture Seeder Market

Global Point-of-Care Ultrasound Market

Asia-Pacific Printing Inks/Packaging Inks Market

Europe Instant Noodles Market

Global Data Extraction Software Market

Middle East and Africa Non-Stick Cookware Market

Europe Sweet Potato Powder Market

Global Sprinkler Gun Market

Global Aseptic Necrosis Treatment Market

Global Self-Cleaning Glass Market

Global Electrical String Trimmers Market

Global Personal Electronic Dosimeter Market

Asia-Pacific Trauma Devices Market

North America Inherited Retinal Diseases Market

Global Neutropenia Market

Global Maintenance Repair and Operations (MRO) Market

Global Automotive Advanced Driver Assistance Systems (ADAS) and Park Assist Market

Global Empagliflozin, Dapagliflozin and Canagliflozin Market

Global Solid Masterbatches Market

Middle East and Africa Sugar Substitute Market

Global Glucosamine Market

Global Sexually Transmitted Infections (STIs) Market

Global Commercial Air Filter Market

Middle East and Africa Sweet Potato Powder Market

Global Plant Enzymes Market

Global Antibiotic Resistance Market

Asia-Pacific Cenospheres Market

Global Wearable Ambulatory Monitoring Devices Market

The global network packet broker market is highly competitive with key players focusing on product innovation, partnerships, and strategic acquisitions to gain a competitive edge. The market is witnessing a surge in demand for network packet broker solutions due to the rapid adoption of cloud services, IoT devices, and the increasing need for real-time network monitoring and security. The evolution of virtualization and software-defined networking (SDN) technologies is also expected to drive market growth as organizations look to optimize their network performance and security infrastructure.

The Asia-Pacific region is anticipated to witness substantial growth in the network packet broker market due to rapid digitization, increasing internet penetration, and expanding IT infrastructure across various industry verticals. North America and Europe are also expected to hold significant market shares owing to the presence of major IT companies, high internet penetration rates, and stringent data protection regulations.

Overall, the global network packet broker market is poised for significant growth in the coming years as organizations across various sectors prioritize network visibility, security, and performance optimization. Key market players are expected to capitalize on this trend by introducing advanced solutions and expanding their market presence through strategic collaborations and acquisitions.

The global network packet broker market is experiencing a transformational shift driven by the escalating demand for enhanced network visibility and security solutions across diverse industries. One of the emerging trends in the market is the increasing adoption of network packet brokers by organizations to efficiently monitor and optimize network performance in the face of growing data traffic and the proliferation of connected devices. This trend is particularly pronounced in sectors such as IT and Telecom, BFSI, Healthcare, Retail, Government, and others, where network reliability and security are paramount.

Market players in the network packet broker space are vigorously pursuing strategies to stay competitive and meet the evolving needs of customers. Product innovation remains a key focus area for companies such as Gigamon, Cisco Systems, Ixia (Keysight), and APCON, who are continuously introducing advanced features and functionalities to address the complex network challenges faced by modern enterprises. In addition to product innovation, strategic partnerships and acquisitions are playing a crucial role in shaping the market landscape, enabling companies to expand their market presence, enhance their product portfolios, and cater to a broader customer base.

The Asia-Pacific region stands out as a hotbed of growth opportunities for network packet broker vendors, driven by factors such as rapid digital transformation, increasing internet penetration, and the burgeoning IT infrastructure in countries like China, India, and Japan. These dynamics are creating a conducive environment for market expansion and technological advancements in network visibility and security solutions. On the other hand, North America and Europe continue to be key markets for network packet brokers, buoyed by the presence of tech giants, high connectivity rates, and regulatory frameworks that emphasize data protection and compliance.

Looking ahead, the global network packet broker market is poised for significant growth as organizations intensify their focus on fortifying their network defenses, optimizing performance, and ensuring seamless operations in an increasingly interconnected world. As the demand for advanced network visibility solutions escalates, market players are expected to ramp up their R&D efforts, forge strategic collaborations, and pursue M&A activities to stay ahead of the curve and capitalize on the burgeoning opportunities in the network packet broker landscape. This market evolution underscores the critical role that network packet brokers play in enabling organizations to navigate the complexities of modern networks and safeguard against emerging cyber threats and operational challenges.

The global network packet broker market is undergoing a significant transformation fueled by the escalating demand for enhanced network visibility and security solutions across various industries. One of the key trends shaping the market is the growing adoption of network packet brokers by organizations to effectively monitor and optimize network performance in the midst of surging data traffic and the proliferation of connected devices. Particularly in sectors like IT and Telecom, BFSI, Healthcare, Retail, Government, and others, network reliability and security have become critical priorities driving the adoption of these solutions. This trend underscores the importance of robust network infrastructure to support the seamless operation of businesses in today's digitally driven landscape.

Market players in the network packet broker industry are actively engaged in strategies to remain competitive and meet the evolving needs of their customers. Product innovation is a central focus for companies such as Gigamon, Cisco Systems, Ixia (Keysight), and APCON, as they strive to introduce advanced features and functionalities to address the complex network challenges faced by modern enterprises. Besides product advancements, strategic partnerships and acquisitions play a pivotal role in shaping the market landscape, enabling companies to expand their market reach, enrich their product offerings, and cater to a wider customer base effectively.

The Asia-Pacific region emerges as a key growth hub for network packet broker vendors, driven by factors such as rapid digital transformation, increasing internet penetration, and the expanding IT infrastructure in countries like China, India, and Japan. This region presents a fertile ground for market expansion and technological innovations in network visibility and security solutions. In contrast, North America and Europe continue to be crucial markets for network packet brokers, leveraging their tech prowess, high connectivity rates, and stringent regulatory frameworks emphasizing data protection and compliance.

Looking forward, the global network packet broker market is poised for substantial growth as organizations intensify their efforts to strengthen their network defenses, enhance performance, and ensure uninterrupted operations in an increasingly interconnected world. With the rising demand for advanced network visibility solutions, market players are expected to ramp up their research and development initiatives, forge strategic alliances, and pursue mergers and acquisitions to stay ahead of the curve and seize the growing opportunities in the network packet broker landscape. The evolving market scenario underscores the pivotal role network packet brokers play in helping organizations navigate the complexities of modern networks, safeguard against emerging cyber threats, and tackle operational challenges effectively.

The Network Packet Broker Market is highly fragmented, featuring intense competition among both global and regional players striving for market share. Explore how global trends are shaping the future of the top 10 companies in the network packet broker market.

Learn More Now: https://www.databridgemarketresearch.com/reports/global-network-packet-broker-market/companies

DBMR Nucleus: Powering Insights, Strategy & Growth

DBMR Nucleus is a dynamic, AI-powered business intelligence platform designed to revolutionize the way organizations access and interpret market data. Developed by Data Bridge Market Research, Nucleus integrates cutting-edge analytics with intuitive dashboards to deliver real-time insights across industries. From tracking market trends and competitive landscapes to uncovering growth opportunities, the platform enables strategic decision-making backed by data-driven evidence. Whether you're a startup or an enterprise, DBMR Nucleus equips you with the tools to stay ahead of the curve and fuel long-term success.

Key Pointers Covered in the Network Packet Broker Market Industry Trends and Forecast

Network Packet Broker Market Size

Network Packet Broker Market New Sales Volumes

Network Packet Broker Market Replacement Sales Volumes

Network Packet Broker Market By Brands

Network Packet Broker Market Procedure Volumes

Network Packet Broker Market Product Price Analysis

Network Packet Broker Market Regulatory Framework and Changes

Network Packet Broker Market Shares in Different Regions

Recent Developments for Market Competitors

Network Packet Broker Market Upcoming Applications

Network Packet Broker Market Innovators Study

About Data Bridge Market Research:

An absolute way to forecast what the future holds is to comprehend the trend today!

Data Bridge Market Research positions itself as an unconventional, modern market research and consulting firm with a high level of resilience and integrated approaches. We are determined to unearth the best market opportunities and provide useful information for your business to thrive in the market. Data Bridge endeavors to provide appropriate solutions to complex business challenges and to make decision-making effortless. Data Bridge was founded in Pune in 2015, built on accumulated wisdom and experience.

Contact Us: Data Bridge Market Research
US: +1 614 591 3140
UK: +44 845 154 9652
APAC: +653 1251 975
Email: [email protected]


ETL and Data Testing Services: Why Data Quality Is the Backbone of Business Success | GQAT Tech

Data drives decision-making in the digital age. Businesses use data to build strategies, attain insights, and measure performance to plan for growth opportunities. However, data-driven decision-making only exists when the data is clean, complete, accurate, and trustworthy. This is where ETL and Data Testing Services are useful.