#Speech-to-Text API Market share

Text

AI Voice Cloning Market Size, Share, Analysis, Forecast, Growth 2032: Ethical and Regulatory Considerations

The AI Voice Cloning Market was valued at USD 1.9 Billion in 2023 and is expected to reach USD 15.7 Billion by 2032, growing at a CAGR of 26.74% from 2024-2032.
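The relationship between the start value, end value, and CAGR quoted above can be checked with the standard compound-growth formula. The sketch below is illustrative only: the function names are hypothetical, and treating 2023 to 2032 as a nine-year span is an assumption about how the report counts periods.

```python
def project_value(start_value: float, annual_rate: float, years: int) -> float:
    """Compound start_value at annual_rate for the given number of years."""
    return start_value * (1.0 + annual_rate) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that links start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Figures quoted in the post; the nine-year span is an assumption.
    start_usd_bn, end_usd_bn, years = 1.9, 15.7, 9
    print(f"Implied CAGR: {implied_cagr(start_usd_bn, end_usd_bn, years):.2%}")
    print(f"Value at 26.74% a year: {project_value(start_usd_bn, 0.2674, years):.1f}")
```

Small differences between the implied and the quoted rate usually come down to which year is used as the base.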

AI Voice Cloning Market is rapidly reshaping the global communication and media landscape, unlocking new levels of personalization, automation, and accessibility. With breakthroughs in deep learning and neural networks, businesses across industries—from entertainment to customer service—are leveraging synthetic voice technologies to enhance user engagement and reduce operational costs. The adoption of AI voice cloning is not just a technological leap but a strategic asset in redefining how brands communicate with consumers in real time.

AI Voice Cloning Market is gaining momentum as ethical concerns and regulatory standards gradually align with its growing adoption. Innovations in zero-shot learning and multilingual voice synthesis are pushing the boundaries of what’s possible, allowing voice clones to closely mimic tone, emotion, and linguistic nuances. As industries continue to explore voice-first strategies, AI-generated speech is transitioning from novelty to necessity, providing solutions for content localization, virtual assistants, and interactive media experiences.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/5923

Key Market Players:

Amazon Web Services (AWS) – Amazon Polly

Google – Google Cloud Text-to-Speech

Microsoft – Azure AI Speech

IBM – Watson Text to Speech

Meta (Facebook AI) – Voicebox

NVIDIA – Riva Speech AI

OpenAI – Voice Engine

Sonantic (Acquired by Spotify) – Sonantic Voice

iSpeech – iSpeech TTS

Resemble AI – Resemble Voice Cloning

ElevenLabs – Eleven Multilingual AI Voices

Veritone – Veritone Voice

Descript – Overdub

Cepstral – Cepstral Voices

Acapela Group – Acapela TTS Voices

Market Analysis

The AI Voice Cloning Market is undergoing rapid evolution, driven by increasing demand for hyper-realistic voice interfaces, expansion of virtual content, and the proliferation of voice-enabled devices. Enterprises are investing heavily in AI-driven speech synthesis tools to offer scalable and cost-effective communication alternatives. Competitive dynamics are intensifying as startups and tech giants alike race to refine voice cloning capabilities, with a strong focus on realism, latency reduction, and ethical deployment. Use cases are expanding beyond consumer applications to include accessibility tools, personalized learning, digital storytelling, and more.

Market Trends

Growing integration of AI voice cloning in personalized marketing and customer service

Emergence of ethical voice synthesis standards to counter misuse and deepfake threats

Advancements in zero-shot and few-shot voice learning models for broader user adaptation

Use of cloned voices in gaming, film dubbing, and audiobook narration

Rise in demand for voice-enabled assistants and AI-driven content creators

Expanding language capabilities and emotional expressiveness in cloned speech

Shift toward decentralized voice datasets to ensure privacy and consent compliance

AI voice cloning supporting accessibility features for visually impaired users

Market Scope

The scope of the AI Voice Cloning Market spans a broad array of applications across entertainment, healthcare, education, e-commerce, media production, and enterprise communication. Its versatility enables brands to deliver authentic voice experiences at scale while preserving the unique voice identity of individuals and characters. The market encompasses software platforms, APIs, SDKs, and fully integrated solutions tailored for developers, content creators, and corporations. Regional growth is being driven by widespread digital transformation and increased language localization demands in emerging markets.

Market Forecast

Over the coming years, the AI Voice Cloning Market is expected to experience exponential growth fueled by innovations in neural speech synthesis and rising enterprise adoption. Enhanced computing power, real-time processing, and cloud-based voice generation will enable rapid deployment across digital platforms. As regulatory frameworks mature, ethical voice cloning will become a cornerstone in brand communication and media personalization. The future holds significant potential for AI-generated voices to become indistinguishable from human ones, ushering in new possibilities for immersive and interactive user experiences across sectors.

Access Complete Report: https://www.snsinsider.com/reports/ai-voice-cloning-market-5923

Conclusion

AI voice cloning is no longer a futuristic concept; it is today’s reality, powering a silent revolution in digital interaction. As it continues to mature, it promises to transform not just how we hear technology but how we relate to it. Organizations embracing this innovation will stand at the forefront of a new era of voice-centric engagement, where authenticity, scalability, and creativity converge. The AI Voice Cloning Market is not just evolving; it is amplifying the voice of the future.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

0 notes

Text

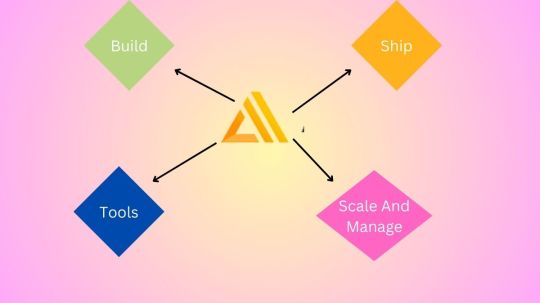

AWS Amplify Features For Building Scalable Full-Stack Apps

AWS Amplify features

Build

Summary

Create an app backend using Amplify Studio or Amplify CLI, then connect your app to your backend using Amplify libraries and UI elements.

Authentication

Create smooth onboarding flows with a fully managed user directory and pre-built sign-up, sign-in, forgot-password, and multi-factor authentication workflows. Amplify also supports sign-in with social providers such as Facebook, Google Sign-In, or Login With Amazon, and offers fine-grained access control for web and mobile applications. Powered by Amazon Cognito.

DataStore

Use a multi-platform (iOS, Android, React Native, and Web) on-device persistent storage engine, powered by GraphQL, to automatically synchronize data between mobile, web, and desktop apps and the cloud. DataStore’s programming model works with shared and distributed data without requiring extra code for offline and online scenarios, so working with distributed, cross-user data is as simple as working with local-only data. Powered by AWS AppSync.

Analytics

Understand how your iOS, Android, or web users behave. Use auto-tracking to monitor user sessions and web page metrics, or define custom user attributes and in-app analytics. Access a real-time data stream, analyze it for customer insights, and build data-driven marketing strategies to increase customer adoption, engagement, and retention. Powered by Amazon Pinpoint and Amazon Kinesis.

API

Send secure HTTP requests to GraphQL and REST APIs to access, modify, and combine data from one or more data sources, including Amazon DynamoDB, Amazon Aurora Serverless, and your own custom data sources with AWS Lambda. Amplify makes it simple to build scalable apps that need real-time updates, local data access for offline situations, and data synchronization with configurable conflict resolution when devices are back online. Powered by AWS AppSync and Amazon API Gateway.
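Conflict resolution after an offline period can take several forms. The sketch below illustrates one common approach, version-based optimistic concurrency, in plain Python. It is not the Amplify or AppSync API: `Record`, `apply_update`, and `ConflictError` are hypothetical names used only to show the idea that an edit based on a stale version is rejected rather than silently overwriting newer data.

```python
from dataclasses import dataclass


class ConflictError(Exception):
    """Raised when an update was based on a stale copy of the record."""


@dataclass
class Record:
    id: str
    title: str
    version: int = 1


def apply_update(stored: Record, new_title: str, base_version: int) -> Record:
    """Accept the update only if the client edited the latest version."""
    if base_version != stored.version:
        raise ConflictError(
            f"record {stored.id}: client had v{base_version}, server has v{stored.version}"
        )
    return Record(id=stored.id, title=new_title, version=stored.version + 1)


if __name__ == "__main__":
    server_copy = Record(id="todo-1", title="Buy milk")
    # Device A syncs first and succeeds.
    server_copy = apply_update(server_copy, "Buy oat milk", base_version=1)
    # Device B comes back online with an edit based on the old version.
    try:
        apply_update(server_copy, "Buy almond milk", base_version=1)
    except ConflictError as err:
        print("rejected:", err)
```

A rejected client would typically re-fetch the latest record and retry, or hand the two versions to a custom merge routine.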

Functions

Using the @function directive in the Amplify CLI, you can add a Lambda function to your project that you can use as a datasource in your GraphQL API or in conjunction with a REST API. Using the CLI, you can modify the Lambda execution role policies for your function to gain access to additional resources created and managed by the CLI. You may develop, test, and deploy Lambda functions using the Amplify CLI in a variety of runtimes. After choosing a runtime, you can choose a function template for the runtime to aid in bootstrapping your Lambda function.

Geo

Add location-aware features such as maps and location search to your JavaScript web application in just a few minutes. Amplify Geo includes pre-integrated map user interface (UI) components based on the popular MapLibre open-source library, and the Amplify Command Line Interface (CLI) has been updated to provision all the required cloud location services. For greater flexibility and advanced visualization options, you can choose from a range of community-developed MapLibre plugins or customize embedded maps to match your app’s theme. Powered by Amazon Location Service.

Interactions

With only one line of code, create interactive and engaging conversational bots using the same deep learning technology that powers Amazon Alexa. Chatbots can deliver great user experiences for tasks such as automated customer chat support, product information and recommendations, or streamlining routine work tasks. Powered by Amazon Lex.

Predictions

Enhance your app by adding AI/ML capabilities. Use cases such as translating text, generating speech from text, identifying entities in images, interpreting text, and transcribing text are easy to implement. Amplify also simplifies orchestrating advanced use cases, such as uploading images for automatic training and using GraphQL directives to chain multiple AI/ML actions. Powered by Amazon SageMaker and other Amazon Machine Learning services.

PubSub

Pass messages between your app instances and your app’s backend to create dynamic, real-time experiences. Amplify provides connectivity with cloud-based message-oriented middleware. Powered by AWS IoT services and generic MQTT-over-WebSocket providers.

Push notifications

Improve customer engagement with marketing and analytics capabilities. Use customer analytics to better segment and target your customers. You can tailor your content and communicate through multiple channels, including push notifications, email, and text messages. Powered by Amazon Pinpoint.

Storage

Securely store and manage user-generated content such as photos and videos on the device or in the cloud. The AWS Amplify Storage module offers a simple way to manage user content for your app in public, protected, or private storage buckets. Cloud-scale storage makes it easy to take your application from prototype to production. Powered by Amazon S3.

Ship

Summary

Host static web apps using the Amplify console or CLI.

Amplify Hosting

Fullstack web apps may be deployed and hosted with AWS Amplify’s fully managed service, which includes integrated CI/CD workflows that speed up your application release cycle. A frontend developed with single page application frameworks like React, Angular, Vue, or Gatsby and a backend built with cloud resources like GraphQL or REST APIs, file and data storage, make up a fullstack serverless application. Changes to your frontend and backend are deployed in a single workflow with each code commit when you simply connect your application’s code repository in the Amplify console.

Manage and scale

Summary

To manage app users and content, use Amplify Studio.

User management

Manage authenticated users with Amplify Studio. Create and edit users and groups, edit user attributes, automatically verify sign-ups, and more, without going through verification flows.

Content management

Through Amplify Studio, developers can give testers and content editors access to edit the app data. Admins can save content as markdown to render rich text.

Override generated resources

Alter fine-grained backend resource settings and override them with CDK. Amplify does the heavy lifting for you. For example, use Amplify to add new Cognito resources to your backend with default settings, then run “amplify override auth” to override only the settings you want.

Custom AWS resources

In order to add custom AWS resources using CDK or CloudFormation, the Amplify CLI offers escape hatches. By using the “amplify add custom” command in your Amplify project, you can access additional Amplify-generated resources and obtain CDK or CloudFormation placeholders.

Access AWS resources

Amplify is built on Infrastructure-as-Code and deploys resources within your account. Use Amplify’s Function and Container support to add business logic to your backend. For example, give functions access to an SNS topic so they can send an SMS, or give your container access to an existing database.

Import AWS resources

With Amplify Studio, you can incorporate your current resources like your Amazon Cognito user pool and federated identities (identity pool) or storage resources like DynamoDB + S3 into an Amplify project. This will allow your storage (S3), API (GraphQL), and other resources to take advantage of your current authentication system.

Command hooks

Command Hooks let you run custom scripts before, during, and after Amplify CLI commands (“amplify push,” “amplify api gql-compile,” and more). During deployment, customers can run credential scans, trigger validation tests, and clean up build artifacts. This lets you extend Amplify’s best-practice defaults to meet your organization’s security and operational requirements.
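A credential scan of the kind mentioned above could look like the following sketch. The regular expression matches the common "AKIA" shape of long-term AWS access key IDs. Everything else is an assumption for illustration: the `scan_text`, `scan_paths`, and `main` helpers, the choice of file types, and the idea of wiring the script in as a pre-push hook that signals failure with a non-zero exit status. Check the Amplify CLI documentation for the exact hook file naming and runtime configuration.

```python
import re
import sys
from pathlib import Path

# Long-term AWS access key IDs commonly look like "AKIA" plus 16 uppercase letters or digits.
ACCESS_KEY_PATTERN = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def scan_text(text: str) -> list[str]:
    """Return every substring that looks like an AWS access key ID."""
    return ACCESS_KEY_PATTERN.findall(text)


def scan_paths(paths: list[Path]) -> dict[str, list[str]]:
    """Scan readable text files and map each offending path to its matches."""
    findings: dict[str, list[str]] = {}
    for path in paths:
        try:
            matches = scan_text(path.read_text(encoding="utf-8"))
        except (OSError, UnicodeDecodeError):
            continue  # skip unreadable or binary files
        if matches:
            findings[str(path)] = matches
    return findings


def main(root: Path) -> int:
    """Scan source files under root; return 1 if anything suspicious is found."""
    if not root.is_dir():
        return 0
    # Hypothetical choice: only JavaScript and JSON sources are scanned.
    candidates = [p for p in root.rglob("*") if p.is_file() and p.suffix in {".js", ".json"}]
    findings = scan_paths(candidates)
    for path, keys in findings.items():
        print(f"possible access key in {path}: {len(keys)} match(es)", file=sys.stderr)
    return 1 if findings else 0
```

A hook script would end with something like `sys.exit(main(Path("src")))` so that a finding produces a non-zero exit status.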

Infrastructure-as-Code Export

Amplify may be integrated into your internal deployment systems or used in conjunction with your current DevOps processes and tools to enforce deployment policies. You may use CDK to export your Amplify project to your favorite toolchain by using Amplify’s export capability. The Amplify CLI build artifacts, such as CloudFormation templates, API resolver code, and client-side code generation, are exported using the “amplify export” command.

Tools

Amplify Libraries

Flutter >> JavaScript >> Swift >> Android >>

To create cloud-powered mobile and web applications, AWS Amplify provides use case-centric open source libraries. Powered by AWS services, Amplify libraries can be used with your current AWS backend or new backends made with Amplify Studio and the Amplify CLI.

Amplify UI components

An open-source UI toolkit called Amplify UI Components has cross-framework UI components that contain cloud-connected workflows. In addition to a style guide for your apps that seamlessly integrate with the cloud services you have configured, AWS Amplify offers drop-in user interface components for authentication, storage, and interactions.

Amplify Studio

Managing app content and creating app backends are made simple with Amplify Studio. A visual interface for data modeling, authorization, authentication, and user and group management is offered by Amplify Studio. Amplify Studio produces automation templates as you develop backend resources, allowing for smooth integration with the Amplify CLI. This allows you to add more functionality to your app’s backend and establish multiple testing and team collaboration settings. You can give team members without an AWS account access to Amplify Studio so that both developers and non-developers can access the data they require to create and manage apps more effectively.

Amplify CLI toolchain

The Amplify Command Line Interface (CLI) is a toolchain for configuring and maintaining your app’s backend from your local desktop. Configure cloud capabilities using the CLI’s interactive workflow and intuitive use cases such as auth, storage, and API. Test features locally and deploy multiple environments. All configured resources are available to customers as infrastructure-as-code templates, which makes team collaboration easier and integrates with Amplify’s CI/CD workflow.

Amplify Hosting

Set up CI/CD on the front end and back end, host your front-end web application, build and delete backend environments, and utilize Amplify Studio to manage users and app content.

Read more on Govindhtech.com

#AWSAmplifyfeatures#GraphQ#iOS#AWSAppSync#AmazonDynamoDB#RESTAPIs#Amplify#deeplearning#AmazonSagemaker#AmazonS3#News#Technews#Technology#technologynews#Technologytrends#govindhtech

0 notes

Text

What is VideoGen?

VideoGen is an AI-powered platform designed to simplify and democratize the video creation process. It enables users to generate high-quality videos quickly and efficiently, making it ideal for content creators, marketers, educators, and businesses.

Here are some of the key features and benefits of VideoGen:

Quick Video Creation: VideoGen allows users to create videos in seconds by inputting a script or using a prompt. The AI then compiles relevant stock footage, generates text-to-speech narration, and edits the video automatically.

Text-to-Speech Capabilities: The platform includes a sophisticated text-to-speech engine with over 150 unique voices in more than 40 languages and accents, making the narration sound natural and human-like.

Automatic Captioning and Subtitles: VideoGen can automatically generate captions and subtitles for the videos, enhancing accessibility and engagement.

Editing Tools: Users can customize their videos using an easy-to-use online editor that includes features like keyboard shortcuts, drag-and-drop functionality, and more. This makes the editing process fast and efficient.

Multilingual Support: The platform supports video creation in multiple languages, allowing users to reach a global audience effectively.

Integration and Compatibility: VideoGen is web-based and compatible with most browsers and devices. It also integrates well with social media platforms, making it easy to share videos directly.

Use Cases: VideoGen is suitable for various applications, including creating social media content, educational materials, marketing videos, and more. It is designed to save users significant time and resources while producing professional-quality videos.

0 notes

Text

Transforming Sound into Text: The Evolution and Benefits of Audio Data Transcription Services

Introduction:

Evolution of Audio Data Transcription:

Manual Transcription to Automated Solutions:

In the early days, audio transcription was a time-consuming task that often relied on human transcribers to listen to recordings and type out the content. However, with advancements in technology, automated transcription solutions powered by artificial intelligence (AI) and machine learning (ML) have taken centre stage. These automated tools can quickly and accurately convert spoken words into written text, saving time and reducing costs.

Speech Recognition Technology:

The advent of sophisticated speech recognition technology has played a pivotal role in the evolution of audio data transcription. These systems can analyse spoken language patterns and convert them into written text with remarkable accuracy. Companies like Google, Microsoft, and IBM have developed powerful speech recognition APIs that are integrated into transcription services.
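Transcription accuracy is commonly quantified as word error rate (WER): the word-level edit distance between a reference transcript and the system output, divided by the number of reference words. A minimal sketch follows. The function name and the simple lowercase, whitespace-based tokenization are assumptions, and real evaluations usually normalize punctuation and numbers more carefully.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Lower is better: a WER of 0 means the output matches the reference word for word.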

Benefits of Audio Data Transcription Services:

Accessibility and Inclusivity:

Transcribing audio content into text enhances accessibility for individuals with hearing impairments. It ensures that information is available to a wider audience, promoting inclusivity in various fields such as education, entertainment, and business.

Documentation and Knowledge Management:

Transcription services provide a convenient way to document important meetings, interviews, and discussions. This documentation is not only useful for future reference but also contributes to knowledge management within organisations. It enables easy retrieval and sharing of valuable insights.

Improved SEO and Content Optimization:

Transcribing audio content can significantly boost search engine optimization (SEO). Search engines can index and rank text-based content more effectively than audio or video files. Transcriptions also facilitate content optimization by providing a written version that can be edited and refined for clarity and conciseness.

Enhanced Workflow Efficiency:

Businesses and professionals benefit from improved workflow efficiency when audio data is transcribed. Text-based documents are easier to organize, search, and analyze, leading to streamlined processes and faster decision-making.

Data Analysis and Insights:

Transcribed audio data becomes a valuable source for data analysis. Businesses can extract insights, identify patterns, and make informed decisions based on the textual information. This is particularly beneficial in market research, customer feedback analysis, and other data-driven fields.

Legal and Compliance Requirements:

In industries such as legal and healthcare, where accurate documentation is critical, audio data transcription services play a vital role. Transcribed records not only aid in compliance but also serve as a reliable source for legal documentation.

Conclusion:

Audio data transcription services have come a long way, transforming the way we interact with and utilise spoken content. From manual transcription efforts to cutting-edge automated solutions, these services have become indispensable for a wide range of applications. Whether it's improving accessibility, enhancing documentation, or enabling data-driven decision-making, the benefits of audio data transcription services continue to shape the way we handle and derive value from audio content in our increasingly digital world.

How GTS.AI Can Help You?

At Globose Technology Solutions Pvt Ltd (GTS), data collection is not just a service; it is our passion and commitment to fueling the progress of AI and ML technologies. GTS.AI can leverage natural language processing capabilities to understand and interpret human language, which can be valuable in tasks such as text analysis, sentiment analysis, and language translation. GTS.AI can also be adapted to meet specific business needs: whether it's creating a unique user interface, developing a specialised chatbot, or addressing industry-specific challenges, the customization options are diverse.

0 notes

Text

Tan Sheng Zheng: Stock Market Strategies and Challenges in the Era of Artificial Intelligence

In today's rapidly advancing technological era, breakthroughs in artificial intelligence (AI) have become a key driving force for global economic development. In the ever-changing stock market in particular, AI has become an important aid to investors' decision-making. The latest developments from OpenAI have not only attracted widespread attention in the tech industry but have also had a significant impact on financial markets. Senior financial market analyst Tan Sheng Zheng has analyzed these developments in depth and offers his insights.

OpenAI's recent launch of customizable versions of ChatGPT, called GPTs, and of the more advanced GPT-4 Turbo marks an important advance in AI technology. Tan Sheng Zheng points out that this innovation not only strengthens OpenAI's leading position in the industry but also benefits related industries and stock market investors. OpenAI announced that users can now create their own GPT without writing code and share it publicly. OpenAI also plans to launch the GPT Store later this month, allowing creators to earn income based on the number of users of their GPTs, a new direction in the commercialization of AI technology.

Tan Sheng Zheng notes that GPT-4 Turbo, with its 128k-token context window, can process prompts equivalent to more than 300 pages of text at once, which significantly improves the quality and speed of information processing. In addition, output tokens cost half as much as with the original GPT-4, and input tokens cost one third as much. This may have far-reaching implications for financial analysis, stock trading, and other fields. OpenAI has also released the Assistants API and added multimodal capabilities such as image creation and text-to-speech to its API.
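To make the pricing ratios concrete, the sketch below compares the cost of one large request under a baseline price list and under a price list with input tokens at one third and output tokens at one half of the baseline. The baseline prices, the `Pricing` class, and the `request_cost` helper are illustrative assumptions, not figures taken from the post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_1k: float   # USD per 1,000 prompt tokens
    output_per_1k: float  # USD per 1,000 generated tokens


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request under the given per-1k-token prices."""
    input_cost = (input_tokens / 1000) * pricing.input_per_1k
    output_cost = (output_tokens / 1000) * pricing.output_per_1k
    return input_cost + output_cost


# Assumed baseline prices, chosen only to make the arithmetic concrete.
baseline = Pricing(input_per_1k=0.03, output_per_1k=0.06)
# Ratios described in the text: input at one third, output at one half.
turbo = Pricing(
    input_per_1k=baseline.input_per_1k / 3,
    output_per_1k=baseline.output_per_1k / 2,
)

if __name__ == "__main__":
    # A prompt that nearly fills a 128k-token context window, plus a short answer.
    for name, pricing in (("baseline", baseline), ("turbo", turbo)):
        print(name, round(request_cost(pricing, 120_000, 1_000), 2))
```

Because long-context workloads such as document analysis are dominated by input tokens, the input-price reduction matters most for them.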
Tan Sheng Zheng believes that this series of innovations not only demonstrates OpenAI's technical strength in AI but also opens up more diverse applications across industries. He emphasizes that the development and application of these technologies will profoundly affect the operation of financial markets, especially stock trading, which relies heavily on data analysis.

When analyzing the US stock market, Tan Sheng Zheng emphasizes the "Three-Line Determines the Universe" strategy. This technical analysis method combines moving averages over three different periods to help investors identify market trends, avoid risks, and find suitable buying and selling opportunities. It provides a systematic investment approach and technical support for planning strategies in a volatile market. Tan Sheng Zheng reminds investors that the key to applying the strategy lies in a deep understanding of moving average indicators and a keen sense of market dynamics. In a constantly changing market, investors should combine these technical analysis tools and adjust their strategies flexibly.

Tan Sheng Zheng further analyzes OpenAI's development over the past year. He notes that since its release in March this year, GPT-4 has become one of the world's most powerful AI models. Currently, 2 million developers worldwide use OpenAI's API to provide various services. Additionally, 92% of Fortune 500 companies use OpenAI's products to build services, and ChatGPT has reached 100 million weekly active users. These figures demonstrate both the widespread application of OpenAI's technology and the market's strong recognition of it.
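The post does not spell out the rules of the "Three-Line Determines the Universe" strategy, so the following is only a generic sketch of combining three moving averages. The periods (5, 20, and 60), the function names, and the rule of reading the trend from the ordering of the three averages are assumptions, not the analyst's method.

```python
from typing import Optional, Sequence


def simple_moving_average(prices: Sequence[float], period: int) -> Optional[float]:
    """Average of the last `period` prices, or None if there is not enough data."""
    if period <= 0:
        raise ValueError("period must be positive")
    if len(prices) < period:
        return None
    return sum(prices[-period:]) / period


def three_line_signal(
    prices: Sequence[float], short: int = 5, medium: int = 20, long: int = 60
) -> str:
    """Classify the trend from the ordering of three moving averages."""
    short_ma = simple_moving_average(prices, short)
    medium_ma = simple_moving_average(prices, medium)
    long_ma = simple_moving_average(prices, long)
    if short_ma is None or medium_ma is None or long_ma is None:
        return "insufficient data"
    if short_ma > medium_ma > long_ma:
        return "uptrend"
    if short_ma < medium_ma < long_ma:
        return "downtrend"
    return "mixed"


if __name__ == "__main__":
    rising = [float(p) for p in range(1, 101)]
    print(three_line_signal(rising))
```

A "mixed" reading, where the averages are not cleanly ordered, is where such a rule gives no clear signal.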

0 notes

Text

Say Farewell to Traditional Video Production Costs!

In the dynamic realm of video creation, innovation has a name: D-ID. This cutting-edge platform ushers in a new era where generating videos from plain text is not only feasible but swift, cost-effective, and scalable. Bid adieu to the burdensome expenses of conventional video production and embrace a revolution that empowers you to create captivating videos from mere photos.

The Dawn of Conversational AI: Meet chat.D-ID

Embark on an immersive conversational AI journey with chat.D-ID. This ingenious web application employs real-time face animation and advanced text-to-speech technology to craft remarkably human-like experiences. Unlock the ability to engage face-to-face with ChatGPT, a prime example of the platform's prowess.

Unveiling Creative Reality™ Studio: Crafting Videos in a Snap

Meet the future of video production through D-ID's Creative Reality™ Studio. Transforming photos into dynamic video presenters at scale has never been this effortless. Whether for training materials, internal communications, or marketing endeavors, the studio's AI-powered videos deliver a cost-effective solution that will revolutionize your content creation strategy.

Enabling Human Imagination: The Power of Generative AI

Harness the potential of the latest generative AI tools via the Creative Reality™ Studio. Empowered by Stable Diffusion and GPT-3, this self-service haven nurtures your ideas into personalized, captivating videos. Experience a seamless reduction in video production costs across 100+ languages, all without requiring extensive technical know-how.

D-ID for Developers: Unleash the Possibilities

For developers, D-ID offers a robust API that's remarkably simple to integrate. With a mere four lines of code, you can tap into its massive scalability. Now supporting streaming generation of talking head videos from images and audio files, D-ID opens doors to endless possibilities for creating captivating ecosystems.

Testimonials from Visionaries

The likes of MyHeritage, Josh, and Green Productions have lauded D-ID's groundbreaking technology. From breathing life into ancestral photos to enhancing user experiences, D-ID's impact is resounding. Its quality, speed, and cost-effectiveness have left an indelible mark on the industry.

Join the D-ID Community: Where Innovation Thrives

Become part of the D-ID Community and share your creative journeys. Connect, learn, and stay updated on product developments, joining a vibrant hub of like-minded individuals shaping the future of video creation.

Leading the Way: News and Partnerships

Stay informed through D-ID's latest updates and partnerships. From premium voices to emotion-infused digital human experiences, D-ID's contributions to the field are ever-evolving.

Experience the evolution of video creation. Discover D-ID today and embark on a journey where innovation and creativity know no bounds.

0 notes

Text

The Generative AI Market: A Detailed Analysis and Forecast 2032

Introduction

Generative artificial intelligence (AI) refers to AI systems capable of generating new content, such as text, images, audio, and video. Unlike traditional AI systems that are focused on analysis and classification, generative AI can create novel artifacts that are often indistinguishable from human-created content.

The generative AI market has seen explosive growth in recent years, driven by advances in deep learning and the increasing availability of large datasets required to train generative models. Some of the most prominent real-world applications of generative AI include:

- Text generation - Automatically generating long-form content like news articles, reports, stories, code, and more.

- Image generation - Creating photorealistic images and art from text descriptions.

- Audio generation - Synthesizing human-like speech and music.

- Video generation - Producing artificial but believable video content.

- Data synthesis - Automatically generating synthetic datasets for training AI systems.

In this comprehensive guide, we analyze the current state and projected growth of the generative AI market. We provide key market statistics, drivers, challenges, use cases, top companies, and an outlook on what the future holds for this transformative technology.

Request For Sample: https://www.dimensionmarketresearch.com/report/generative-ai-market/requestSample.aspx

Market Size and Growth Projections

The generative AI market is still in the emerging phase but growing at a rapid pace. Here are some key stats on the market size and growth forecasts:

- In 2022, the global generative AI market was valued at $4.3 billion.

- The market is projected to grow at an explosive CAGR of 42.2% between 2023 and 2030.

- By 2030, the market is forecast to reach $136.5 billion according to Emergen Research.

- In terms of sub-technologies, the text generation segment accounts for the dominant share of the market currently.

- Image generation is projected to grow at the highest CAGR of 43.7% in the forecast period.

- North America held the largest share of the generative AI market in 2022, followed by Asia Pacific and Europe.

The phenomenal growth in generative AI is attributed to the advancements in deep learning and GANs, increasing computing power with the emergence of dedicated AI chips, availability of large datasets, and a growing focus on creating human-like AI systems.
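For readers who want to sanity-check growth figures like the ones above, here is a minimal sketch of the standard compound annual growth rate (CAGR) arithmetic. The function names are illustrative, and compounding from the 2022 base over eight years is an assumption. Published forecasts often use different base years or sources, so the quoted numbers need not reconcile exactly.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound start_value forward at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Figures quoted in the list above (USD billions).
    base_2022 = 4.3
    quoted_cagr = 0.422
    quoted_2030 = 136.5
    years = 2030 - 2022  # assumption: compounding starts from the 2022 base

    projected = project_value(base_2022, quoted_cagr, years)
    implied = implied_cagr(base_2022, quoted_2030, years)
    print(f"2030 value at the quoted CAGR: {projected:.1f}")
    print(f"CAGR implied by the 2022 and 2030 values: {implied:.1%}")
```

Comparing the two printed numbers against the quoted ones is a quick way to see whether a report's base value, growth rate, and end value were taken from the same forecast.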

Key Drivers for Generative AI Adoption

What factors are fueling the rapid growth of generative AI globally? Here are some of the key drivers:

- Lower computing costs - The cost of computing has declined dramatically in recent years with GPU and TPU chips. This enables training complex generative AI models.

- Better algorithms - New techniques like diffusion models, transformers, and GANs have enhanced the ability of systems to generate realistic artifacts.

- Increasing data - The availability of large text, image, audio, and video datasets helps train robust generative models.

- Democratization - Companies like Anthropic and Cohere offer easy access to powerful generative AI models via APIs.

- Investments - Significant VC funding is flowing into generative AI companies such as Anthropic, OpenAI (the maker of DALL-E), and Stability AI.

- Commercial adoption - Growing industry adoption across sectors like media, advertising, and retail for use cases such as content creation, data augmentation, and product images.

Challenges Facing the Generative AI Industry

While the long-term potential of generative AI is substantial, it faces some challenges currently that need to be addressed:

- Bias - Generated content sometimes reflects biases that exist in training data. Mitigating bias remains an active research problem.

- Misuse potential - Generative models can be misused to spread misinformation or generate illegal content. Responsible practices are required.

- IP issues - Copyright of artifacts generated by AI systems presents a gray area that needs regulatory clarity.

- High compute requirements - Large generative models need specialized hardware, often thousands of GPUs or TPUs, to train and run, which puts them out of reach for many organizations.

- Lack of transparency - Most generative models act as black boxes, making it hard to audit how they work and detect flaws.

- Information security - Potential risks of data leaks and model thefts need to be addressed through cybersecurity measures.

Major Use Cases and Industry Adoption

Generative AI is seeing rapid adoption across a diverse range of industries. Some major use cases and sectors driving this adoption are:

Media and Publishing

- Automated content creation such as sports reports, financial articles, and long-form fiction.

- Personalized news generation for readers.

- Interactive storytelling.

- Generating media images and graphics.

Retail and E-commerce

- Producing product images and descriptions at scale.

- Generating catalogs tailored to customers.

- Conversational shopping assistants.

Healthcare

- Drug discovery research.

- Generating synthetic health data for training models.

- Automated report writing.

Technology

- Code generation for frontend, backend, and mobile apps.

- Quick prototyping of interfaces and assets.

- Data pipeline automation.

Marketing and Advertising

- Generating ad images and videos.

- Producing marketing copy and content.

- Personalized campaigns at scale.

Finance

- Automating routine reports and documents like contracts.

- Forecasting demand, prices, and risk scenarios.

- Customizing statements and descriptions for clients.

The rapid adoption across sectors is being driven by advanced generative AI solutions that can integrate into enterprise workflows and generate value at scale.

Leading Generative AI Startups and Solutions

Many promising generative AI startups have emerged over the past 3-4 years. Some of the top startups leading innovation in this market include:

- Anthropic - Offers Claude, built with its Constitutional AI approach and focused on safe and helpful AI.

- Cohere - Provides powerful NLG APIs for text generation. Counts Nestle, Brex, and Intel among clients.

- DALL-E - Created by OpenAI, it set off the explosion in AI image generation.

- Lex - YC-backed startup offering an API for code generation using LLMs like Codex.

- Stable Diffusion - Open-source image generation model created by Stability AI.

- Jasper - AI writing platform focused on marketing copy and content.

- Murf - AI voice generator for creating voiceovers from text.

- Replika - End-user app that provides an AI companion chatbot.

- Inworld - Using AI to generate interactive stories, characters, and worlds.

The level of innovation happening in generative AI right now is tremendous. These startups are making powerful generative models accessible to businesses and developers.

Outlook on the Future of Generative AI

Looking forward, here are some key predictions on how generative AI will evolve and its impact:

- Generative models will keep getting more sophisticated at an astonishing pace thanks to advances in algorithms and data.

- Capabilities will expand beyond text, images, audio and video into applications like 3D and VR content.

- Specialized vertical AI will emerge - AI that can generate industry-specific artifacts tailored to business needs.

- Democratization will accelerate with easy access to generative AI for all via APIs, low-code tools and consumer apps.

- Concerns around misuse, bias, and IP will result in work on AI watermarking, provenance tracking, etc.

- Regulatory scrutiny will increase, but blanket bans are unlikely given generative AI's economic potential.

- Many new startups will emerge taking generative AI into new frontiers like science, software automation, gaming worlds and human-AI collaboration.

By the end of this decade, generative AI will be ubiquitous across industries. The long-term implications for the economy, society, and humanity are profound.

Frequently Asked Questions

Here are answers to some common questions about the generative AI market:

Which company is leading in generative AI currently?

OpenAI is the top company pushing innovation in generative AI via models like GPT-3, DALL-E 2, and ChatGPT. Anthropic and Cohere are other leading startups in the space.

What are some key challenges for the generative AI industry?

Key challenges as outlined earlier include mitigating bias, preventing misuse, addressing IP and copyright issues, model security, transparency, and high compute requirements.

What are the major drivers propelling growth of generative AI?

The major drivers are lower computing costs, advances in algorithms, increase in high-quality training data, democratization of access via APIs, VC investments, and a range of practical business applications across sectors.

Which industries are using generative AI the most today?

Currently generative AI sees significant use in sectors like media, retail, technology, marketing, finance, and healthcare. But adoption is rapidly increasing across many industries.

Is generative AI a threat to human creativity and jobs?

While generative AI can automate certain tasks, experts believe it will augment rather than replace human creativity. It may disrupt some jobs but can also create new opportunities.

How can businesses benefit from leveraging generative AI?

Major business benefits include increased productivity, faster ideation, cost savings, personalization at scale, and improved customer engagement. It enables businesses to experiment rapidly and enhance human capabilities.

Conclusion

Generative AI represents an extraordinarily powerful technology that will have far-reaching impacts on many sectors. While currently in its early stages, rapid progress in capabilities driven by advances in deep learning foreshadows a future where generative models can be creative collaborators alongside humans.

With increasing investments and research around making these models safe, ethically-aligned and transparent, generative AI has the potential to become an engine of economic growth and progress for humanity. But thoughtful regulation, open access, and ethical practices are critical to realizing its full potential. Going forward, integrations with vertical domains could enable generative AI to help tackle some of the world's most pressing challenges.

0 notes

Text

Speech to Text API Market Size, Share, Trends, Forecast 2030

The Speech-to-Text API Market Size and Overview Report for 2023 presents a detailed analysis of the latest trends in the global Speech-to-Text API market. The report covers new product launches, market contributions, partnerships, and mergers during the forecast period. It provides a comprehensive overview of the market, including market segmentation, and highlights the characteristics of the global market.

List of Key Companies Profiled:

Amazon Web Services, Inc.

Google LLC

IBM Corporation

Microsoft Corporation

Nuance Communications, Inc.

com, Inc.

Speechmatics

Verint Systems, Inc.

Vocapia Research SAS

VoiceBase, Inc.

Driver: Increasing Rate of Recovery of Financial Losses to Stimulate Speech-to-Text API Market Size

The increase in the rate of recovery of financial losses is anticipated to augment the growth of the Speech-to-Text API market within the estimated period. It helps organizations and enterprises mitigate losses from a variety of incidents that take place every day, from a data breach involving sensitive customer information to network damage and disruption. Though it does not protect an organization against the hack itself, it does offer help before, during, and after an attack. It also helps with the costs associated with remediation, including payment for legal assistance, crisis communicators, investigators, and customer credits or refunds. However, a lack of awareness of the Speech-to-Text API market may restrain this growth. Meanwhile, the increasing adoption of artificial intelligence and blockchain technology for risk analytics is anticipated to support growth in the years to come.

About Us:

Fortune Business Insights™ offers expert corporate analysis and accurate data, helping organizations of all sizes make timely decisions. We tailor innovative solutions for our clients, assisting them to address challenges distinct to their businesses. Our goal is to empower our clients with holistic market intelligence, giving a granular overview of the market they are operating in.

Contact Us:

Fortune Business Insights™ Pvt. Ltd.

US: +1 424 253 0390

UK: +44 2071 939123

APAC: +91 744 740 1245

0 notes

Text

GPT-4o Mini: OpenAI’s Most Cost-Efficient Small Model

OpenAI is committed to making intelligence as broadly accessible as possible, and it has introduced GPT-4o mini, its most cost-efficient small model. Because GPT-4o mini makes intelligence considerably more affordable, OpenAI anticipates that it will greatly expand the range of applications built with AI. GPT-4o mini scores 82% on MMLU and currently beats GPT-4 on chat preferences on the LMSYS leaderboard. It is priced at 15 cents per million input tokens and 60 cents per million output tokens, which is more than 60% cheaper than GPT-3.5 Turbo and an order of magnitude more affordable than prior frontier models.

GPT-4o mini’s low cost and latency enable a wide range of applications, including those that call multiple APIs, chain or parallelize multiple model calls, pass a large amount of context to the model (such as the entire code base or conversation history), or engage with customers via quick, real-time text responses (e.g., customer support chatbots).

GPT-4o mini currently supports text and vision in the API; support for text, image, video, and audio inputs and outputs is planned for later. The model has a context window of 128K tokens, supports up to 16K output tokens per request, and has knowledge through October 2023. Thanks to the improved tokenizer it shares with GPT-4o, handling non-English text is now even more economical.
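To make the text-plus-vision input concrete, here is a minimal sketch that builds a Chat Completions style request body for GPT-4o mini without sending it. The helper name, the example URL, and the round 16,000-token output cap are assumptions for illustration; the overall message shape follows OpenAI's published Chat Completions format, but check the current API reference before relying on it.

```python
import json
from typing import Optional

MODEL_NAME = "gpt-4o-mini"
# Output limit quoted in the text, treated as a round number (assumption).
MAX_OUTPUT_TOKENS = 16_000


def build_chat_request(prompt: str,
                       image_url: Optional[str] = None,
                       max_tokens: int = 1_000) -> dict:
    """Build a Chat Completions style request body without sending it."""
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        # Vision input: an image is passed alongside the text part.
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": content}],
        # Never ask for more output than the quoted per-request limit.
        "max_tokens": min(max_tokens, MAX_OUTPUT_TOKENS),
    }


if __name__ == "__main__":
    request = build_chat_request(
        "Describe this receipt and list the line items.",
        image_url="https://example.com/receipt.png",  # hypothetical URL
    )
    print(json.dumps(request, indent=2))
```

Keeping the payload construction separate from the network call makes it easy to log, test, or reuse the same body with whichever client library is in use.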

A small model with superior multimodal reasoning and textual intelligence

GPT-4o mini outperforms GPT-3.5 Turbo and other small models on academic benchmarks in both textual intelligence and multimodal reasoning, and it supports the same range of languages as GPT-4o. It also shows strong function-calling performance, which lets developers build applications that retrieve data or interact with external systems, and better long-context performance than GPT-3.5 Turbo.

GPT-4o mini has been assessed using a number of important benchmarks.

Reasoning tasks: GPT-4o mini outperforms other small models on reasoning tasks involving both text and vision, scoring 82.0% on MMLU, a benchmark for textual intelligence and reasoning, compared to 77.9% for Gemini Flash and 73.8% for Claude Haiku.

Math and coding proficiency: GPT-4o mini outperforms earlier small models on the market in mathematical reasoning and coding tasks. It scored 87.0% on MGSM, a test of math reasoning, compared to 75.5% for Gemini Flash and 71.7% for Claude Haiku. In coding, GPT-4o mini scored 87.2% on HumanEval, while Gemini Flash and Claude Haiku scored 71.5% and 75.9%, respectively.

Multimodal reasoning: GPT-4o mini scored 59.4% on MMMU, a multimodal reasoning benchmark, compared to 56.1% for Gemini Flash and 50.2% for Claude Haiku.

As part of its model development process, OpenAI worked with a handful of trusted partners to better understand the capabilities and limitations of GPT-4o mini. Partners such as Ramp and Superhuman found that GPT-4o mini outperformed GPT-3.5 Turbo in tasks like extracting structured data from receipt files and producing high-quality email responses when given thread history.

Integrated safety precautions

OpenAI models are built with safety in mind from the start, and safety is reinforced at every stage of the development process. In pre-training, OpenAI filters out content that it does not want its models to learn from or produce, including spam, hate speech, adult content, and websites that primarily collect personal data. In post-training, OpenAI uses techniques such as reinforcement learning with human feedback (RLHF) to align the model's behaviour with its policies and to improve the accuracy and reliability of the model's answers.

GPT-4o mini has the same safety mitigations as GPT-4o, which OpenAI assessed using both automated and human evaluations in accordance with its Preparedness Framework and its voluntary commitments. More than 70 outside experts in fields such as social psychology and disinformation tested GPT-4o to identify potential risks. OpenAI has addressed these risks and will provide more information in the upcoming GPT-4o system card and Preparedness scorecard. Insights from these expert assessments have improved the safety of both GPT-4o and GPT-4o mini.

Building on these findings, OpenAI teams also worked to improve GPT-4o mini's safety with new techniques informed by their research. GPT-4o mini in the API is the first model to apply OpenAI's instruction hierarchy technique, which strengthens the model's resistance to jailbreaks, prompt injections, and system prompt extractions. As a result, the model responds more reliably and is safer to use in large-scale applications.

As new hazards are discovered, OpenAI will keep an eye on how GPT-4o mini is being used and work to make the model safer.

Accessibility and cost

As a text and vision model, GPT-4o mini is now accessible through the Assistants API, Chat Completions API, and Batch API. The cost to developers is 15 cents per 1 million input tokens and 60 cents per 1 million output tokens (1 million tokens is roughly the equivalent of 2,500 pages in a typical book). OpenAI plans to launch fine-tuning for GPT-4o mini in the coming days.
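The pricing above translates into a simple per-request cost estimate. The sketch below uses only the two rates quoted in the text; the function name and the sample workload are hypothetical, and real bills may differ (for example with batch or fine-tuned pricing).

```python
# Rates quoted in the text, in US dollars per 1 million tokens.
INPUT_USD_PER_MILLION = 0.15
OUTPUT_USD_PER_MILLION = 0.60


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of a workload from its input and output token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * INPUT_USD_PER_MILLION
            + output_tokens * OUTPUT_USD_PER_MILLION) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 support chats, each with
    # 2,000 input tokens and 500 output tokens.
    chats = 10_000
    total = estimate_cost_usd(chats * 2_000, chats * 500)
    print(f"Estimated cost: ${total:.2f}")
```

For the hypothetical workload in the example, 20 million input tokens and 5 million output tokens each come to about $3, for roughly $6 in total.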

In ChatGPT, Free, Plus, and Team users will be able to access GPT-4o mini in place of GPT-3.5. In keeping with OpenAI's goal of ensuring that everyone can benefit from artificial intelligence, Enterprise users will also have access starting next week.

Next Steps

In recent years, there have been notable breakthroughs in artificial intelligence along with significant cost reductions. For instance, the cost per token of GPT-4o mini is 99% lower than that of text-davinci-003, a less capable model introduced in 2022. OpenAI is determined to keep cutting costs while improving model capabilities.

In the future, models should be seamlessly integrated into every website and application. With GPT-4o mini, developers can now build and scale robust AI applications more efficiently and economically. OpenAI is thrilled to be leading the way as AI becomes more dependable, approachable, and integrated into everyday digital interactions.

Azure AI now offers GPT-4o mini, the fastest model from OpenAI

Customers can deliver beautiful apps at a reduced cost and with lightning speed thanks to GPT-4o mini. GPT-4o mini is more than 60% less expensive and considerably smarter than GPT-3.5 Turbo, earning 82% on Measuring Massive Multitask Language Understanding (MMLU) compared to 70% for GPT-3.5 Turbo. The model also brings GPT-4o's enhanced multilingual capabilities and a larger 128K context window, delivering higher quality across global languages.

GPT-4o mini, announced by OpenAI, is available simultaneously on Azure AI, offering very fast text processing, with image, audio, and video processing to follow. Visit the Azure OpenAI Studio Playground to give it a try for free.

GPT-4o mini is safer by default thanks to Azure AI

As always, safety remains critical to the productive use and trust that Azure and its customers both need.

Azure is happy to report that you can now use GPT-4o mini on Azure OpenAI Service with its Azure AI Content Safety capabilities, which include protected material detection and prompt shields, “on by default.”

To enable you to take full advantage of the advances in model speed without sacrificing safety, Azure has made significant investments in enhancing the throughput and performance of the Azure AI Content Safety capabilities. One such investment is the addition of an asynchronous filter. Developers in a variety of industries, such as game creation (Unity), tax preparation (H&R Block), and education (South Australia Department for Education), are already receiving support from Azure AI Content Safety to secure their generative AI applications.

Data residency is now available for all 27 locations with Azure AI

Azure’s data residency commitments have applied to Azure OpenAI Service since the beginning.

Azure AI provides a comprehensive data residency solution that helps customers satisfy their specific compliance requirements by giving them flexibility and control over where their data is processed and kept. Azure also gives you the option to select the hosting structure that satisfies your needs in terms of applications, business, and compliance. Provisioned Throughput Units (PTUs) and regional pay-as-you-go provide control over data processing and storage.

Azure is happy to announce that the Azure OpenAI Service is currently accessible in 27 locations, with Spain being the ninth region in Europe to launch earlier this month.

Azure AI announces global pay-as-you-go with the highest throughput limits for GPT-4o mini

With Azure’s global pay-as-you-go deployment, GPT-4o mini is now accessible for 15 cents per million input tokens and 60 cents per million output tokens, a substantial savings over earlier frontier models.

Customers can enjoy the largest possible scale with global pay-as-you-go, which offers 30 million tokens per minute (TPM) for GPT-4o and 15 million TPM for GPT-4o mini. Azure OpenAI Service provides GPT-4o mini with the same industry-leading speed and 99.99% availability as its partner OpenAI.
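As a rough illustration of what those throughput figures mean in practice, the sketch below converts a tokens-per-minute (TPM) limit into an upper bound on requests per minute. The function name and the per-request token count are hypothetical, and real deployments may also be subject to separate request-rate quotas that are not modelled here.

```python
# Global pay-as-you-go limits quoted in the text, in tokens per minute.
GPT_4O_TPM = 30_000_000
GPT_4O_MINI_TPM = 15_000_000


def max_requests_per_minute(tpm_limit: int, tokens_per_request: int) -> int:
    """Upper bound on requests per minute under a tokens-per-minute limit."""
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    return tpm_limit // tokens_per_request


if __name__ == "__main__":
    # Hypothetical request size: 2,500 tokens of input plus output.
    tokens_per_request = 2_500
    limit = max_requests_per_minute(GPT_4O_MINI_TPM, tokens_per_request)
    print(f"GPT-4o mini: up to {limit} requests per minute")
```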

For GPT-4o mini, Azure AI provides industry-leading performance and flexibility

Azure AI is keeping up its investment in improving workload efficiency for AI across the Azure OpenAI Service.

This month, GPT-4o mini becomes available on the Batch service in Azure AI. By using off-peak capacity, Batch handles high-throughput jobs with a 24-hour turnaround at a 50% discount. This is only feasible because Microsoft itself runs on Azure AI, which lets it make off-peak capacity available to customers.

This month, Azure is also offering fine-tuning for GPT-4o mini, which lets customers further tailor the model to their unique use case and scenario in order to deliver outstanding quality and value at previously unheard-of speeds. Following last month's announcement that Azure would move to token-based billing for training, Azure has lowered hosting costs by as much as 43%. Combined with its affordable inferencing rate, Azure OpenAI Service fine-tuned deployments are the most economical option for customers with production workloads.

Azure is thrilled to witness the innovation from organizations like Vodafone (customer agent solution), the University of Sydney (AI assistants), and GigXR (AI virtual patients), with more than 53,000 customers turning to Azure AI to provide ground-breaking experiences at incredible scale. More than half of the Fortune 500 are developing their apps with Azure OpenAI Service.

Read more on govindhtech.com

#gpt40mini#openai#mostcost#Efficientsmallmodel#gpt4#alittlemodel#multimodal#textualintelligence#gpt40#geminiflash#claudehaiku#api#openaimodel#azureai#azureopenaiservice#globalpay#technology#technews#news#govindhtech

0 notes