#ThreadPools

Explore tagged Tumblr posts

Link

https://bit.ly/3tgesM8 - 🎉 SafeBreach Labs researchers have unveiled groundbreaking process injection techniques using Windows thread pools, outwitting leading endpoint detection and response (EDR) systems. These new methods, named "Pool Party" variants, bypass current EDR solutions by injecting malicious code into legitimate processes, posing a significant challenge for traditional cybersecurity measures. #CyberSecurity #ProcessInjection

🛡️ Understanding the limitations of existing process injection techniques, researchers explored Windows thread pools as a novel vector. They developed eight unique techniques that work across all processes without limitations, enhancing their flexibility and effectiveness. These methods proved undetectable against five leading EDR solutions, highlighting a critical gap in current cyber defense strategies. #InnovationInCyberSecurity #ThreadPools

🔍 The research delved deep into the architecture of Windows thread pools, identifying potential areas for process injection. It focused on worker factories, task queues, I/O completion queues, and timer queues. The techniques involved manipulating these components to execute malicious code, revealing a sophisticated approach to cyber attacks. #TechResearch #AdvancedCyberAttacks

💻 Notably, the Pool Party variants were tested against five major EDR solutions, including Palo Alto Cortex and Microsoft Defender. All variants successfully evaded detection, demonstrating a 100% success rate. This finding underscores the need for continuous evolution and improvement in cybersecurity tools and practices. #EDRBypass #CyberThreats

🌐 The implications of this research are significant for the cybersecurity community. While EDR systems have evolved, they currently lack the capability to generically detect new process injection techniques. This research emphasizes the need for a more generic detection approach and deeper inspection of trusted processes to combat sophisticated cyber threats. #CyberDefense #InnovationInSecurity

🔗 SafeBreach has responsibly disclosed their findings and shared the research with the security community. By openly discussing these techniques at Black Hat Europe and providing a detailed GitHub repository, they aim to raise awareness and aid in the development of proactive defense strategies against such advanced attacks.

#CyberSecurity#ProcessInjection#InnovationInCyberSecurity#ThreadPools#TechResearch#AdvancedCyberAttacks#EDRBypass#CyberThreats#CyberDefense#InnovationInSecurity#ResponsibleDisclosure#CyberSecurityAwareness

0 notes

Text

Understanding SQL Server Worker Threads and THREADPOOL Waits

Introduction If you’ve ever used SQLQueryStress to test your SQL Server’s performance, you might have encountered a situation where you see THREADPOOL waits but still have available worker threads. This scenario can be perplexing, especially if you’re trying to understand how SQL Server manages its resources. In this article, we’ll demystify this situation and explain why it happens, helping you…

View On WordPress

0 notes

Quote

@so in the process, the mighty process, the I/O sleeps tonight. In the process, the quiet process, the I/O sleeps tonight. Async/await, async/await, async/await, async/await, async/await, async/await, async/await, async/await. Hush my threadpool, don't fear my threadpool, the I/O sleeps tonight. Hush my threadpool, don't fear my threadpool, the I/O sleeps tonight. Thanks sleepy brain 😂

@sitharus

2 notes

Text

Improving Python Threading Strategies For AI/ML Workloads

Python Threading Dilemma Solution

Python excels at AI and machine learning. However, CPython, the language's reference implementation and byte-code interpreter, lacks intrinsic support for parallel processing and multithreading. The notorious Global Interpreter Lock (GIL) “locks” the CPython interpreter into running on one thread at a time, regardless of the context. Libraries such as NumPy, SciPy, and PyTorch provide some multi-core processing through C-based implementations.

Python should be approached differently. Imagine the GIL as a thread and vanilla Python as a needle. Together, that needle and thread make a shirt. The shirt is high-grade, but it could have been made more cheaply without losing quality. So what if Intel could circumvent that “limiter” by parallelising Python programs with Numba or the oneAPI libraries? What if a sewing machine replaced the needle and thread to make that shirt? What if dozens or hundreds of sewing machines manufactured many shirts extremely quickly?

That is the aim of the Intel Distribution of Python, a set of robust modules and tools optimised for the instruction sets of Intel architectures.

Using oneAPI libraries to reduce Python overheads and accelerate math operations, the Intel distribution gives compute-intensive Python numerical and scientific packages like NumPy, SciPy, and Numba C++-like performance. This helps developers provide their applications with excellent multithreading, vectorisation, and memory management while enabling rapid scaling across a cluster.

Let's look at Intel's Python parallelism and composability technique and how it helps speed up AI/ML processes.

NumPy/SciPy Nested Parallelism

The Python libraries NumPy and SciPy were designed for numerical computation and scientific computing.

Exposing parallelism on all software levels, such as by parallelising outermost loops or using functional or pipeline parallelism at the application level, can enable multithreading and parallelism in Python applications. This parallelism can be achieved with Dask, Joblib, and the built-in multiprocessing module (with its ThreadPool class).

An optimised math library like the Intel oneAPI Math Kernel Library (oneMKL) helps accelerate Python modules like NumPy and SciPy for data parallelism. The heavy processing that large data sets demand in AI and machine learning requires this. oneMKL is multi-threaded and can use several threading runtimes. The environment variable MKL_THREADING_LAYER adjusts the threading layer. Nested parallelism occurs when one parallel part calls a function that contains another parallel portion. Synchronisation latencies and serial parts (parts that cannot operate in parallel) are common in NumPy and SciPy programs. Parallelism-within-parallelism reduces or hides these areas.

Numba

Even though they offer extensive mathematical and data-focused accelerations, NumPy and SciPy remain a fixed set of mathematical tools accelerated with C-extensions. A developer who needs non-standard math should not expect it to run as fast as those C-extensions. Numba works well here. Numba is a Just-In-Time (JIT) compiler based on LLVM. It aims to reduce the performance gap between Python and statically typed languages like C and C++. It supports several threading runtimes: workqueue, OpenMP, and Intel oneAPI Threading Building Blocks (oneTBB). Three built-in threading layers correspond to these runtimes. Only workqueue is installed by default; other threading layers can be added using conda commands (e.g., $ conda install tbb). The NUMBA_THREADING_LAYER environment variable sets the threading layer. Remember that there are two ways to select this threading layer: (1) picking a layer that is generally safe under various forms of parallel processing, or (2) explicitly specifying the desired threading layer name (e.g., tbb). For Numba threading layer information, see the official documentation.
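The threading-layer selection described above might look like the following minimal sketch. It assumes the variable is set before Numba is first imported, and it picks workqueue because that is the layer the text says is installed by default. The function name parallel_sum is made up for illustration, and the Numba part is skipped when Numba is not installed.

```python
import os

# Option 1 would be a safety class such as "safe", "forksafe" or "threadsafe".
# Option 2, used here, names a layer explicitly: "tbb", "omp" or "workqueue".
# Assumption: this runs before Numba is imported, so Numba picks the value up.
os.environ["NUMBA_THREADING_LAYER"] = "workqueue"

try:
    import numba
except ImportError:  # keep the sketch runnable without Numba
    numba = None

if numba is not None:
    @numba.njit(parallel=True)
    def parallel_sum(n):
        # prange marks the loop as parallel; acc is treated as a reduction.
        acc = 0
        for i in numba.prange(n):
            acc += i
        return acc

    print(parallel_sum(1_000))       # the first call triggers JIT compilation
    print(numba.threading_layer())   # reports the layer that was actually loaded
else:
    print("Numba is not installed; only the environment variable was set")
```

Switching the string to tbb or omp only works when the corresponding runtime has been installed, for example with the conda command mentioned above.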

Threading Composability

The threading composability of an application or component determines how efficiently co-existing multi-threaded components run together. A “perfectly composable” component operates efficiently without affecting other system components. To achieve a fully composable threading system, over-subscription must be prevented by ensuring that no parallel piece of code or component requires a specific number of threads (known as “mandatory” parallelism). The alternative is to provide "optional" parallelism, in which a work scheduler chooses at the user level which threads the components are mapped to and automates task coordination across components and parallel regions. Because the scheduler uses a single thread pool to arrange the program's components and libraries, its threading model must be more efficient than the scheme built into the high-performance libraries. Efficiency is lost otherwise.
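To make the difference between "mandatory" and "optional" parallelism concrete, here is a small sketch using only the Python standard library. It is not oneTBB's scheduler; the helper names mandatory_threads and run_on_shared_pool are invented for this illustration. The first helper counts the threads demanded when every component insists on its own fixed-size pool, and the second lets every task share one pool that decides the mapping itself.

```python
import os
import threading
from concurrent.futures import ThreadPoolExecutor

CORES = os.cpu_count() or 1


def mandatory_threads(components: int, threads_each: int) -> int:
    """Threads demanded when each component requires its own fixed number."""
    return components * threads_each


def run_on_shared_pool(tasks, max_workers=CORES):
    """Optional parallelism: all tasks go to one pool, which maps them to threads."""
    used = set()
    lock = threading.Lock()

    def tracked(task):
        # Record which worker thread picked up the task.
        with lock:
            used.add(threading.current_thread().name)
        return task()

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(tracked, tasks))
    return results, len(used)


tasks = [lambda i=i: i * i for i in range(32)]
results, threads_used = run_on_shared_pool(tasks, max_workers=4)
print(mandatory_threads(components=3, threads_each=CORES), "threads demanded on", CORES, "cores")
print(threads_used, "threads used by the shared pool")
```

Three components that each demand one thread per core ask for three times as many threads as there are cores, which is the over-subscription the paragraph warns about; the shared pool never exceeds its own limit regardless of how many tasks arrive.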

Intel's Parallelism and Composability Strategy

Threading composability is easier with oneTBB as the work scheduler. oneTBB is an open-source, cross-platform C++ library that enables multi-core parallel processing and supports threading composability, optional parallelism, and nested parallelism. The oneTBB version available at the time of writing includes an experimental module that provides threading composability across libraries, enabling multi-threaded performance enhancements in Python. The acceleration comes from the scheduler's improved thread allocation. oneTBB replaces Python's standard ThreadPool with its own Pool class. Monkey patching, which dynamically replaces or updates objects at runtime, activates the thread pool across modules without code changes. oneTBB also takes over threading for oneMKL by activating its own threading layer, which automates composable parallelism for NumPy and SciPy calls.

Nested parallelism can improve performance, as seen in the following composability example on a system with MKL-enabled NumPy, TBB, and symmetric multiprocessing (SMP) modules and their IPython kernels installed. IPython's command shell interface allows interactive computing in multiple programming languages. The demo was run in Jupyter Notebook to compare performance quantitatively.

If the kernel is changed in the Jupyter menu, the preceding cell must be run again to construct the ThreadPool and deliver the runtime results below.

With the default Python kernel, the following code runs for all three trials:

This method can find matrix eigenvalues with the default Python kernel. Activating the python -m smp kernel improves runtime by an order of magnitude. The python -m tbb kernel improves it even further.

For this composability example, oneTBB's dynamic task scheduler performs best because it manages code where the innermost parallel sections cannot completely leverage the system's CPU and where the amount of work may vary. SMP is still useful; however, it works best when workloads are evenly divided and the outermost workers have similar loads.

Conclusion

In conclusion, multithreading speeds AI/ML operations. Python AI and machine learning apps can be optimised in several ways. Multithreading and multiprocessing will be crucial to pushing AI/ML software development workflows. See Intel's AI Tools and Framework optimisations and the unified, open, standards-based oneAPI programming architecture that underpins its AI Software Portfolio.

#PythonThreading#Numba#Multithreadingpython#parallelism#nestedparallelism#NumPyandSciPy#Threadingpython#technology#technews#technologynews#news#govindhtech

0 notes

Text

When there are not enough worker threads available to handle incoming requests, SQL Server records a special wait type known as THREADPOOL. This article takes a deep dive into the THREADPOOL wait type:

https://madesimplemssql.com/threadpool-wait-type/

Please follow our FB page: https://www.facebook.com/profile.php?id=100091338502392

0 notes

Text

BIG IMPORTANT COMPANY BUG TRACKING FORM: tries to encourage helpful bug reports by having multiple supplementary fields prompting for specific details.

Component/configuration where the error happened

Root cause

Consequences of the error

How to reproduce

How it was fixed

ALLEGEDLY FIXED BUG, ASSIGNED TO ME FOR RETEST: all supplementary fields are empty, and the main field is 30 lines of copypasted error message in the general style of 'Error at line 813 of CompanyName.BlahBlahFactory.GetCallRequest.FrobTheFrob in CompanyName.BlahBlahFactory.FindFrobsThatNeedFrobbing in Company.Name.BusinessLogicHandler.Gateway.Entities.YesNoBox at SystemHandling.ReferrerHandling.ThreadHandling (Thread thread, Threadpool threadpool, Deadpool deadpool)' plus a Slack link to the company's internal Slack chat.

reeeeeeeeeeeee!

9 notes

Text

Android Advanced, Lecture 11: Structuring 1 (tacademy)

original source : https://youtu.be/48eE_O0p8Zc

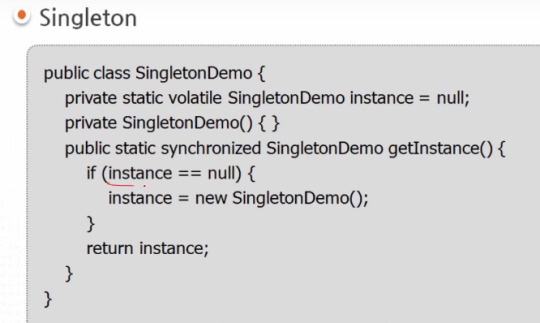

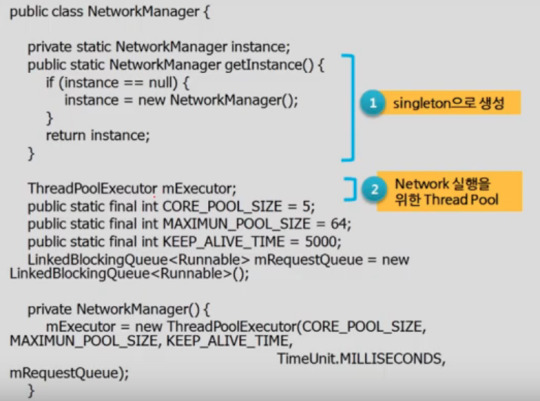

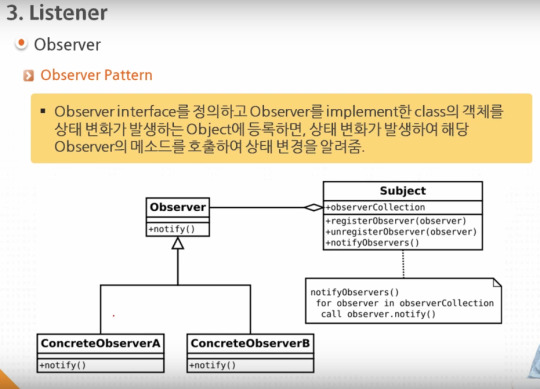

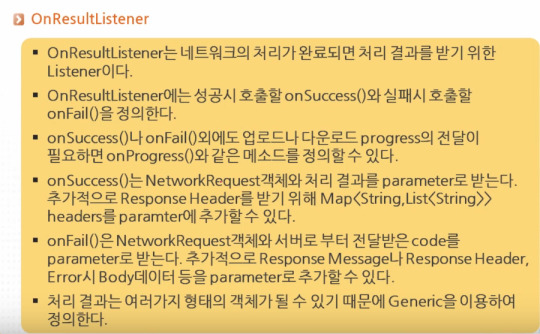

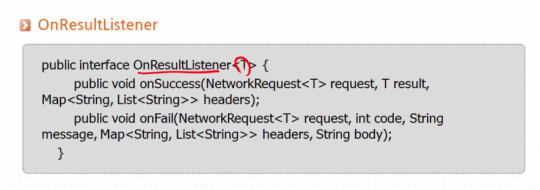

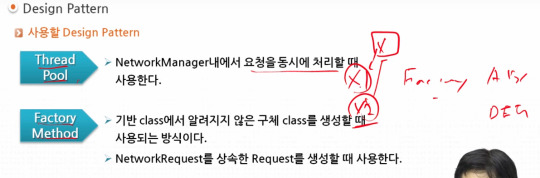

Of the many design patterns in programming, this lecture shows how to use three that are particularly useful on Android: singleton, threadpool, and listener. It uses building a NetworkManager as its running example.
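The lecture's own code is not reproduced in this post, so the following is only a rough sketch of how the three patterns can fit together, written in Python rather than Android Java. The method names get_instance and request, the two listener callbacks, and fake_fetch are assumptions made for illustration.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


class NetworkManager:
    """Singleton that runs requests on a shared thread pool and reports via listeners."""

    _instance = None
    _lock = threading.Lock()

    def __init__(self) -> None:
        # One pool for the whole app instead of one new thread per request.
        self._pool = ThreadPoolExecutor(max_workers=4)

    @classmethod
    def get_instance(cls) -> "NetworkManager":
        # Double-checked locking so concurrent callers share one instance.
        if cls._instance is None:
            with cls._lock:
                if cls._instance is None:
                    cls._instance = cls()
        return cls._instance

    def request(self, fetch: Callable[[], str],
                on_success: Callable[[str], None],
                on_error: Callable[[Exception], None]):
        """Run fetch on a worker thread, then call the matching listener."""
        def task() -> None:
            try:
                result = fetch()
            except Exception as exc:
                on_error(exc)
            else:
                on_success(result)
        return self._pool.submit(task)


def fake_fetch() -> str:
    # Hypothetical stand-in for a real HTTP call.
    return "response body"


received = []
future = NetworkManager.get_instance().request(fake_fetch, received.append, received.append)
future.result()  # wait for the worker; a real UI thread would not block like this
print(received)
```

In this sketch the listeners run on the worker thread. An Android implementation would normally hand the result back to the main thread before touching the UI.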

For reference, the factory pattern lets a single factory class create different subclass types depending on the situation. For example, if classes x1 and x2 extend X, a factory for X can create either x1 or x2 as the situation requires.
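The X, x1, x2 example above can be sketched as follows. The class names mirror the text; the XFactory name, the string keys, and the describe method are assumptions added for illustration.

```python
class X:
    """Common base type that callers depend on."""

    def describe(self) -> str:
        raise NotImplementedError


class X1(X):
    def describe(self) -> str:
        return "x1"


class X2(X):
    def describe(self) -> str:
        return "x2"


class XFactory:
    """Single entry point that picks the concrete subclass for the caller."""

    _kinds = {"first": X1, "second": X2}

    @classmethod
    def create(cls, kind: str) -> X:
        try:
            return cls._kinds[kind]()
        except KeyError:
            raise ValueError(f"unknown kind: {kind!r}") from None


print(XFactory.create("first").describe())
print(XFactory.create("second").describe())
```

Callers only ever name X and the factory, so adding a new subclass means registering it in one place.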

#android#advanced#lecture 11#11#structuring#tacademy#design#design pattern#singleton#threadpool#thread pool#listener#factory

0 notes

Text

bsd on terrorism and self-immolation

now such a fancy title for something i didnt go in-depth into. i just have these momentary neat thoughts popped up in my head while i was looking up irl-based terrorism for an essay for one of my subjects (i'm a lit major, not polsci but i needed to cite some examples on something so yeah) but enough of why i came to this point. i just realize that some of their methods, ways, and motives are so so resembling that of doa and fyodor (well, i mean, it's terrorism so ofc the patterns would align)

but what i am realizing more is about dazai's intention on why he is in the same prison as fyodor. (someone might have tackled abt this topic and more in-depth than mine but pls i'm just having fun ;;;) but yes, anyway, the more obvious reasons why he's there is to watch his moves and move according to his observations so they can foil fyodor's plans but during their time in there, they also argue, right??? example of which is dazai rebuking fyodor's ideologies of the god he worships and the plans he call perfect and harmonious and the holiness of his mission, and i also took note that dazai might also be trying to tear down fyodor's ideologies bit by bit. you know, idk how dazai does it and honestly i can only brush the surface bcs he's a character smarter than me, but in a creative-writing way of explaining it, dazai might be sizing up the threads in fyodor's ideologies, following their trail so he can piece the bigger picture, then find the root of it, rip it out, disentangle it, and throw it back to fyodor in a messy and unconnected threadpool - of course, that is, presuming he would win against fyodor. and well knowing dazai, he isnt the type who teaches others lessons by talking and talking and talking...he wants to show it to them, and of course, if things don't go to worse, he might be able to pick up that threadpool of fyodor's ideologies when all his plans are effective against his and throw it back to his face and watch him try to make it make sense again

and that's where self-immolation comes in. by definition, immolation is an act of sacrifice by giving up or giving into destruction. self-immolation is just equals to self-destruction. (i could have just said self-destruction, i know, but self-immolation sounds fancier. let me indulge ;;;) now of course if you have these ideologies you've been holding onto your whole fucking life that they're basically your life guide, your anchor, your north star, your lifeline, having it crushed and disentangled into a bunch of balderdash that aren't coherent anymore because there's an argument that can slap you right across the face and prove you wrong, you would be salvaging the pieces left or worse, you would go into a total meltdown while trying to salvage the scraps that can't even materialize or form into a familiar picture in front of you anymore. and idk,,,,in my head, i picture fyodor being just so lost and confused at the threadpool dazai tosses back to him. he tries to knit back the things he believe in but no he wouldnt be able to anymore, bcs the threads dont match, they got tangled up to others, and others are lost in the mist. and knowing fyodor, who probably have spent his entire life pursuing That Specific Goal, that he probably have sacrificed a lot for it, holding unto pride for life's worth, as an act of sacrifice to himself, his pride, and his ideologies, i just can't help but imagine him....giving into destruction, giving into death as a message to the world that they might not have heard his plea but such a thing as himself and his mission exists and that's enough to convey to everyone else what he believes in, even if it at the end it pathetically becomes a one-man show...

BUT DEAR HEAVENS I WOULDN'T IN MY WHOLE LIFE WISH FOR BSD TO END WITH FYODOR DYING, JUST NO-

asagiri doesn't squander deaths unless the effects of it would matter in the narrative but i don't really think that explanation i laid out would matter to the narrative when there's the option of redemption that can fit with his backstory

and also personally, i really don't want him to die (bcs well, you know, just don't. i love him, just don't.)

but it was fun thinking how that can be a possible turn of events in the series just based on my meager research. now none of what i said can or should be taken as a truth. i'm just really having fun here because finally, the things i love doing and my interests finally align to what i am doing in university so yeah

13 notes

Text

Good News For Bitcoin From India, Should Other Countries Also Follow Suit?

Steemit, the website that drives steem, gives currency to content creators in the form of the eponymous steem tokens, which can then be traded on the crypto market. Scrypt is a password-based key derivation function that is designed to be expensive computationally and memory-wise in order to make brute-force attacks unrewarding. Provides an asynchronous scrypt implementation. Asch is a Chinese blockchain solution that gives users the ability to create sidechains and blockchain applications with a streamlined interface. The user interface is welcoming, and anyone can complete a transaction without knowing much detail about Exodus. getRandomValues() is the only member of the Crypto interface which can be used from an insecure context. By July 2018, Multicoin had raised a combined $70 million from David Sacks (a member of the so-called "PayPal Mafia"), Wilson and other investors. Almost immediately they raised $2.5 million from angel investors. This tells me that investors are simply "buying the dip" rather than figuring out which cryptos have enough real-world value to survive the crash. The only time when generating the random bytes may conceivably block for a longer period of time is right after boot, when the whole system is still low on entropy. Implementations are required to use a seed with enough entropy, like a system-level entropy source.

Trusted by users all internationally

MoneyGram has gained over $11 million from the blockchain-based payments firm Ripple Labs

Up-to-date news and opinion on cryptocurrency in terms of tech and price

How to Trade Ethereum

60 Years of Kolkata Mint

Limit Order

Practically 200 trading pairs

Set up a Binance account: https://CryptoCousins.com/Binance

However, keep in mind that 3.1.x versions still use Math.random(), which is not cryptographically secure, as it is not random enough. If it is absolutely required to run CryptoJS in such an environment, stay with a 3.1.x version. This is why CryptoJS might not run in some JavaScript environments without a native crypto module. CCM mode might fail, as CCM cannot handle more than one chunk of data per instance. The Numeraire solution is a decentralized effort that is designed to deliver better results by leveraging anonymized data sets. Spherical represents a concerted effort to decentralize inefficient eSports platforms. The FirstBlood platform aims to optimize the static, centralized eSports world. To bring the smartest minds and top projects in the industry together for a FREE online event that anyone can watch anywhere in the world. Bitcoin is the largest and most successful cryptocurrency in the world, and aims to solve a large-scale problem: the world economy is too interconnected and, over the long term, is unstable.

Join the CryptoRisingNews mailing list and get the most important, exclusive cryptocurrency news along with cryptocurrency and fintech offers that can boost your trading revenue, straight to your inbox! IOTA is a highly innovative distributed ledger technology platform that aims to function as the backbone of the Internet of Things. MaidSafeCoin is similar to Factom, providing for the storage of critical items on a decentralized blockchain ledger. The Bitshares platform was originally designed to create digital assets that could be used to track assets such as gold and silver, but has grown into a decentralized exchange that offers users the ability to issue new assets. Like Monero, Zcash offers complete transaction anonymity, but also pioneers the use of "zero-knowledge proofs", which allow fully encrypted transactions to be confirmed as valid. Our line offers whole and natural products full of health benefits for your equine partners and pets, inside and out.

The asynchronous version of crypto.randomFill() is carried out in a single threadpool request. The last time the current support level was hit, TNTBTC grew by 250% in a single candle. There is no single entity that can affect the currency. This method can throw an exception under error conditions. Note that typedArray is modified in-place, and no copy is made. Note that these charts only include a small number of actual algorithms as examples. The API also allows the use of ciphers and hashes with a small key size that are too weak for safe use. Gold has historically been viewed as the safe haven during recessions and bear markets. The key used with RSA, DSA, and DH algorithms is recommended to have at least 2048 bits and that of the curve of ECDSA and ECDH at least 224 bits, to be safe to use for several years. It is recommended that a salt is random and at least 16 bytes long. A selected HMAC digest algorithm specified by digest is applied to derive a key of the requested byte length (keylen) from the password, salt and iterations.

The salt should be as unique as possible. The iterations argument must be a number set as high as possible. The Helix team has set its maximum block size to 2 MB. The algorithm is dependent on the available algorithms supported by the version of OpenSSL on the platform. In this version Math.random() has been replaced by the random methods of the native crypto module. Synchronous version of crypto.randomFill(). Do not use this version! This property, however, has been deprecated and its use should be avoided. An exception is thrown when key derivation fails, otherwise the derived key is returned as a Buffer. If key is not a KeyObject, this function behaves as if key had been passed to crypto.createPublicKey(). If key is not a KeyObject, this function behaves as if key had been passed to crypto.createPrivateKey(). In that case, this function behaves as if crypto.createPrivateKey() had been called, except that the type of the returned KeyObject will be 'public' and that the private key cannot be extracted from the returned KeyObject.
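The key-derivation sentences in this post describe PBKDF2: an HMAC digest applied over a password, a salt, and an iteration count to produce a key of a requested length. The post is quoting a JavaScript API; the sketch below uses Python's hashlib.pbkdf2_hmac as a stand-in, not that API. The helper name derive_key and the iteration count are illustrative assumptions, not recommendations from the text.

```python
import hashlib
import os


def derive_key(password: bytes, salt: bytes, iterations: int = 100_000,
               keylen: int = 32, digest: str = "sha256") -> bytes:
    """PBKDF2: apply HMAC-<digest> `iterations` times to get `keylen` bytes."""
    return hashlib.pbkdf2_hmac(digest, password, salt, iterations, dklen=keylen)


# The text recommends a random salt of at least 16 bytes.
salt = os.urandom(16)
key = derive_key(b"example password", salt)

print(len(key))                                       # requested key length in bytes
print(key == derive_key(b"example password", salt))   # same inputs, same key
```

Storing the salt and the iteration count next to the derived key is what makes it possible to repeat the derivation later and compare results.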

1 note

Text

Guide To Python NumPy and SciPy In Multithreading In Python

An Easy Guide to Multithreading in Python

Python is a strong language, particularly for developing AI and machine learning applications. However, CPython, the language’s original reference implementation and byte-code interpreter, lacks intrinsic multithreading functionality; multithreading and parallel processing need to be enabled from the kernel. Libraries such as NumPy, SciPy, and PyTorch make some of the desired multi-core processing possible through C-based implementations. However, there is a problem known as the Global Interpreter Lock (GIL), which literally “locks” the CPython interpreter to only working on one thread at a time, regardless of whether the interpreter is in a single or multi-threaded environment.

Let’s take a different approach to Python.

The robust libraries and tools that support Intel Distribution of Python, a collection of high-performance packages that optimize underlying instruction sets for Intel architectures, are designed to do this.

For compute-intensive, core Python numerical and scientific packages like NumPy, SciPy, and Numba, the Intel distribution helps developers achieve performance levels that are comparable to those of a C++ program by accelerating math and threading operations using oneAPI libraries while maintaining low Python overheads. This enables fast scaling over a cluster and assists developers in providing highly efficient multithreading, vectorization, and memory management for their applications.

Let’s examine Intel’s strategy for enhancing Python parallelism and composability in more detail, as well as how it might speed up your AI/ML workflows.

Nested Parallelism: NumPy and SciPy

NumPy and SciPy are Python libraries created especially for numerical processing and scientific computing, respectively.

Exposing parallelism on all conceivable levels of a program (for example, by parallelizing the outermost loops or by utilizing various functional or pipeline sorts of parallelism on the application level) is one workaround to enable multithreading/parallelism in Python scripts. This parallelism can be accomplished with the use of libraries like Dask, Joblib, and the included multiprocessing module (with its ThreadPool class).

Data parallelism can be performed with Python modules like NumPy and SciPy, which can then be accelerated with an efficient math library like the Intel oneAPI Math Kernel Library (oneMKL). This matters because processing massive data sets is computationally demanding. oneMKL is multi-threaded and can use various threading runtimes. An environment variable called MKL_THREADING_LAYER can be used to adjust the threading layer.
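As a rough illustration of adjusting the threading layer, the sketch below sets MKL_THREADING_LAYER before NumPy is imported. It assumes an MKL-enabled NumPy build with the TBB runtime installed; on NumPy builds that use a different math library the variable is simply ignored. The NumPy part is skipped when NumPy is not available.

```python
import os

# Assumption: the variable must be in place before oneMKL is first loaded,
# which happens when NumPy is imported. "TBB" selects the oneTBB layer;
# "INTEL", "GNU" and "SEQUENTIAL" are the other documented values.
os.environ.setdefault("MKL_THREADING_LAYER", "TBB")

try:
    import numpy as np
except ImportError:  # keep the sketch runnable without NumPy
    np = None

if np is not None:
    a = np.random.random((256, 256))
    q, r = np.linalg.qr(a)           # dispatched to the underlying math library
    print(np.allclose(q @ r, a))     # sanity check on the decomposition
else:
    print("NumPy is not installed; only the environment variable was set")
```

Using setdefault keeps any value already chosen in the shell, so the script does not override a deliberate setting.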

The result is a code structure known as nested parallelism, in which a parallel section calls a function that in turn contains another parallel region. Since serial sections (that is, regions that cannot execute in parallel) and synchronization latencies are typically inevitable in NumPy- and SciPy-based programs, this parallelism-within-parallelism is an effective technique to minimize or hide them.
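The shape of nested parallelism can be shown with the standard library alone. In this sketch, which uses invented helper names inner_sum and nested_total, an outer thread pool maps over chunks and each worker calls a function that opens its own inner pool. With pure-Python work the GIL prevents any real speed-up, so this illustrates only the structure; in the NumPy and oneMKL case the inner region is native code.

```python
from concurrent.futures import ThreadPoolExecutor


def inner_sum(chunk, inner_workers=2):
    """Inner parallel region: sum the two halves of one chunk concurrently."""
    mid = len(chunk) // 2
    with ThreadPoolExecutor(max_workers=inner_workers) as inner:
        return sum(inner.map(sum, (chunk[:mid], chunk[mid:])))


def nested_total(chunks, outer_workers=4):
    """Outer parallel region: every worker calls a function that is itself parallel."""
    with ThreadPoolExecutor(max_workers=outer_workers) as outer:
        return sum(outer.map(inner_sum, chunks))


chunks = [list(range(start, start + 1000)) for start in range(0, 8000, 1000)]
print(nested_total(chunks))
```

With four outer workers and two inner workers each, up to eight inner threads can be alive at once, which is how nested regions multiply thread counts when nothing coordinates them.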

Going One Step Further: Numba

Despite offering extensive mathematical and data-focused accelerations, NumPy and SciPy remain a fixed set of mathematical tools accelerated through C-extensions. If non-standard math is required, a developer should not expect it to operate at the same speed as C-extensions. Here’s where Numba can work really well.

OneTBB

Based on LLVM, Numba functions as a “Just-In-Time” (JIT) compiler. It aims to reduce the performance difference between Python and compiled, statically typed languages such as C and C++. Additionally, it supports a variety of threading runtimes, including workqueue, OpenMP, and Intel oneAPI Threading Building Blocks (oneTBB). To match these three runtimes, there are three integrated threading layers. The only threading layer installed by default is workqueue; however, other threading layers can be added with ease using conda commands (e.g., $ conda install tbb).

The environment variable NUMBA_THREADING_LAYER can be used to set the threading layer. It is vital to know that there are two ways to choose this threading layer: either choose a layer that is generally safe under different types of parallel processing, or specify the desired threading layer name (e.g., tbb) explicitly.

Composability of Threading

The efficiency or efficacy of co-existing multi-threaded components depends on an application’s or component’s threading composability. A component that is “perfectly composable” would operate without compromising the effectiveness of other components in the system or its own efficiency.

In order to achieve a completely composable threading system, care must be taken to prevent over-subscription, which means making sure that no parallel region of code or component can require a certain number of threads to run (this is known as “mandatory” parallelism).

An alternative would be to implement a type of “optional” parallelism in which a work scheduler determines at the user level which thread(s) the components should be mapped to while automating the coordination of tasks among components and parallel regions. Naturally, the efficiency of the scheduler’s threading model must be better than the high-performance libraries’ integrated scheme since it is sharing a single thread-pool to arrange the program’s components and libraries around. The efficiency is lost otherwise.

Intel’s Strategy for Parallelism and Composability

Threading composability is more readily attained when oneTBB is used as the work scheduler. OneTBB is an open-source, cross-platform C++ library that was created with threading composability and optional/nested parallelism in mind. It allows for multi-core parallel processing.

The oneTBB version released at the time of writing included an experimental module that enables threading composability across several libraries, unlocking the potential for multi-threaded speed benefits in Python. As was previously mentioned, the scheduler’s improved thread allocation is what causes the acceleration.

Python’s standard ThreadPool is replaced by the Pool class in oneTBB. Thanks to monkey patching, which allows an object to be dynamically replaced or updated at runtime, the thread pool is activated across modules without requiring any code modifications. Additionally, oneTBB takes over threading for oneMKL by turning on its own threading layer, which allows it to automatically provide composable parallelism when using calls from the NumPy and SciPy libraries.

To examine the extent to which nested parallelism can enhance performance, see the code samples from the following composability demo, which is conducted on a system with MKL-enabled NumPy, TBB, and symmetric multiprocessing (SMP) modules and their accompanying IPython kernels installed. IPython is a feature-rich command-shell interface that supports a variety of programming languages and interactive computing. To get a quantifiable performance comparison, the demonstration was executed using the Jupyter Notebook extension.

import numpy as np
from multiprocessing.pool import ThreadPool

pool = ThreadPool(10)

The aforementioned cell must be executed again each time the kernel in the Jupyter menu is changed in order to build the ThreadPool and provide the runtime outcomes listed below.

The following code, which runs the identical line for each of the three trials, is used with the default Python kernel:

%timeit pool.map(np.linalg.qr, [np.random.random((256, 256)) for i in range(10)])

This approach runs QR decompositions, a building block for computing the eigenvalues of a matrix, using the standard Python kernel. Runtime improves significantly, by up to an order of magnitude, when the python -m smp kernel is enabled. Applying the python -m tbb kernel yields even more improvement.

oneTBB's dynamic task scheduler yields the best performance for this composability example. It most effectively manages code where the innermost parallel regions cannot fully utilize the system's CPUs and where the amount of work may vary. The SMP technique is still quite effective, but it usually performs best when workloads are more evenly distributed and all workers in the outermost regions carry roughly the same load.
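To make "nested parallelism" concrete, here is a small standard-library sketch of an outer parallel region whose tasks each open an inner one. The function names are hypothetical and the workload is deliberately trivial. With naive nesting like this, every concurrently running outer task starts its own inner worker threads, so thread counts multiply; that oversubscription is the situation a composable scheduler is meant to manage.

```python
from multiprocessing.pool import ThreadPool


def inner_sum(chunk):
    # Inner parallel region: each outer task opens its own small pool.
    with ThreadPool(2) as inner:
        return sum(inner.map(lambda x: x * x, chunk))


def nested_sum_of_squares(chunks, outer_workers=3):
    # Outer parallel region: one task per chunk.
    with ThreadPool(outer_workers) as outer:
        return sum(outer.map(inner_sum, chunks))


chunks = [range(0, 10), range(10, 20), range(20, 30)]
total = nested_sum_of_squares(chunks)
print(total)  # sum of squares of 0..29
```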

In summary, utilizing multithreading can speed up AI/ML workflows

There are many ways to make Python programs focused on AI and machine learning more efficient. Using multithreading and multiprocessing effectively will remain one of the most important ways to push AI/ML software development workflows to their limits.

Read more on Govindhtech.com


Elasticsearch Troubleshoot

https://opster.com/guides/opensearch/opensearch-basics/opensearch-heap-size-usage-and-jvm-garbage-collection/

https://opster.com/guides/elasticsearch/operations/elasticsearch-max-shards-per-node-exceeded/

https://opster.com/guides/elasticsearch/how-tos/search-latency-guide/

https://opster.com/guides/elasticsearch/operations/elasticsearch-oversharding/

https://aws.amazon.com/blogs/big-data/understanding-the-jvmmemorypressure-metric-changes-in-amazon-opensearch-service/

https://confluence.atlassian.com/bitbucketserverkb/elasticsearch-index-fails-due-to-garbage-collection-overhead-1044803633.html

https://www.elastic.co/guide/en/elasticsearch/reference/current/size-your-shards.html#shard-size-recommendation

https://docs.aws.amazon.com/opensearch-service/latest/developerguide/managedomains-cloudwatchmetrics.html

https://discuss.elastic.co/t/elasticsearch-problem-search-thread-pool-rejected/114446

https://aws.amazon.com/premiumsupport/knowledge-center/opensearch-resolve-429-error/

https://opster.com/guides/opensearch/opensearch-basics/threadpool/

https://aws.amazon.com/premiumsupport/knowledge-center/opensearch-troubleshoot-high-cpu/

http://man.hubwiz.com/docset/ElasticSearch.docset/Contents/Resources/Documents/www.elastic.co/guide/en/elasticsearch/reference/current/modules-threadpool.html

https://stackoverflow.com/questions/61788792/elasticsearch-understanding-threadpool

https://www.elastic.co/guide/en/cloud/current/ec-monitoring.html

https://www.elastic.co/guide/en/cloud/current/ec-cpu-usage-exceed-allowed-threshold.html

https://www.elastic.co/blog/managing-and-troubleshooting-elasticsearch-memory

https://opster.com/guides/elasticsearch/capacity-planning/elasticsearch-memory-usage/


Cryptext dll

The cryptext.dll file, also known as Crypto Shell Extensions, is commonly associated with the Microsoft Windows operating system. It is an essential component that helps Windows programs operate properly. When an application requires cryptext.dll, Windows checks the application and system folders for the file. If the file is missing, you may receive an error, and the associated software may not function properly.

Windows invokes the DLL through rundll32.exe for certificate-related file types:

P7RFile: %SystemRoot%\system32\rundll32.exe cryptext.dll,CryptExtOpenP7R %1
CRLFile: %SystemRoot%\system32\rundll32.exe cryptext.dll,CryptExtOpenCRL %1

One reported invocation calls cryptext.dll,CryptExtOpenCRL on a cached .crl file and is listed alongside the hash MD5: 73C519F050C20580F8A62C849D49215A.


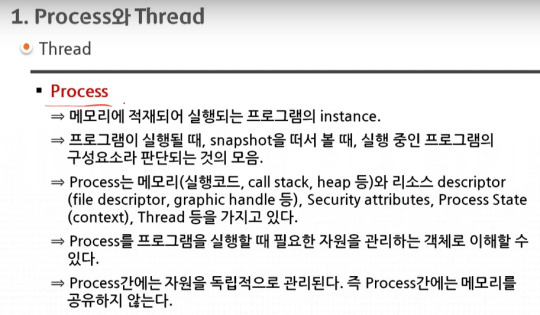

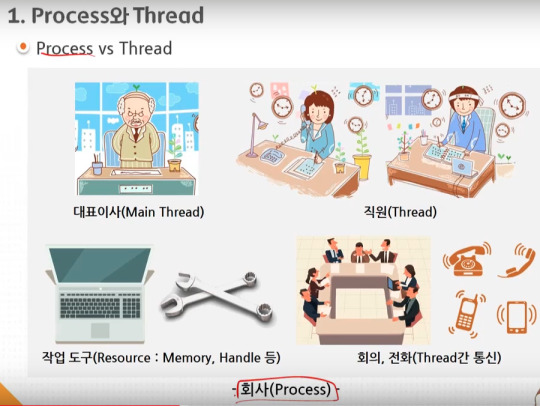

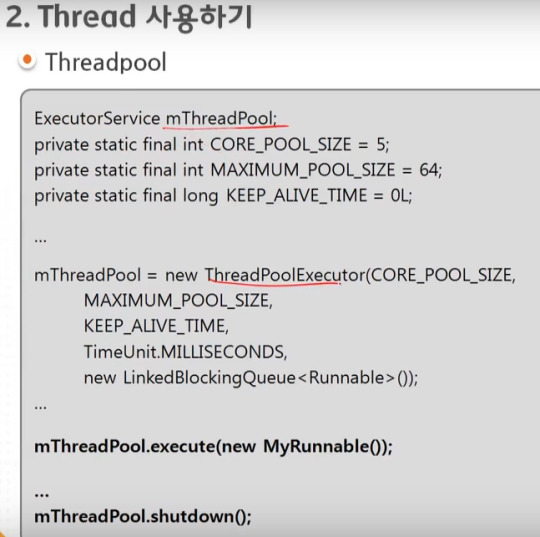

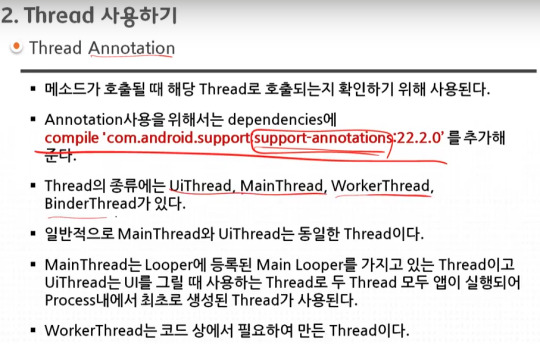

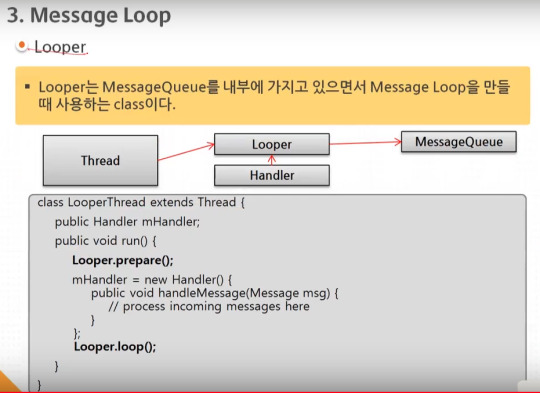

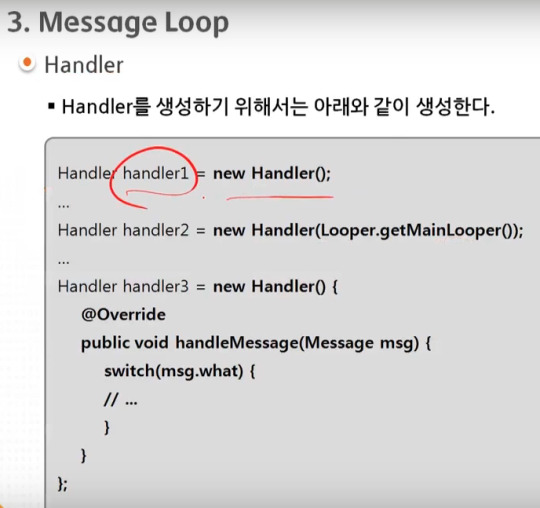

Android Intermediate, Lecture 1: Thread, Part 1 (tacademy)

original source : https://youtu.be/qt-l0MIdhTM


Create a ThreadPool using ThreadPoolExecutor.


Senior Android Developer resume in Ann Arbor, MI


Stefan Bayne

Stefan Bayne – Senior Android Developer

Ann Arbor, MI 48105

+1-734-***-****

• 7+ years of experience working with Android.

• 5 published Apps in the Google Play Store.

• Apply in-depth understanding of HTTP and REST-style web services.

• Apply in-depth understanding of server-side software, scalability, performance, and reliability.

• Create robust automated test suites and deployment scripts.

• Considerable experience debugging and profiling Android applications.

• Apply in-depth knowledge of relational databases (Oracle, MS SQL Server, MySQL, PostgreSQL, etc.).

• Hands-on development in full software development cycle from concept to launch; requirement gathering, design, analysis, coding, testing and code review.

• Stays up to date with latest developments.

• Experience in the use of several version control tools (Subversion SVN, Source Safe VSS, GIT, GitHub).

• Optimize performance, battery, and CPU usage, and prevent memory leaks using LeakCanary and IcePick.

• Proficient in Agile and Waterfall methodologies, working with teams of 3 to 10 members, often in the role of Scrum Master and mentor to junior developers.

• Implement customized HTTP clients to consume a Web Resource with Retrofit, Volley, OkHTTP and the native HttpURLConnection.

• Implement Dependency Injection frameworks to optimize the unit testing and mocking processes (Dagger, Butter Knife, RoboGuice, Hilt).

• Experienced with third-party APIs and web services like Volley, Picasso, Facebook, Twitter, YouTube Player and Surface View.

• Android app development using Java and Android SDK compatible across multiple Android versions.

• Hands-on with Bluetooth.

• Apply architectures such as MVVM and MVP.

• Apply design patterns such as Singleton, Decorator, Façade, Observer, etc.

Willing to relocate: Anywhere

Work Experience

Senior Android Developer

Domino’s Pizza, Inc. – Ann Arbor, MI

August 2020 to Present

https://play.google.com/store/apps/details?id=com.modinospizza&hl=en_US

· Reviewed Java code base and refactored Java arrays to Kotlin.

· Began refactoring modules of the Android mobile application from Java to Kotlin.

· Worked in Android Studio IDE.

· Implemented Android Jetpack components, Room, and LiveView.

· Implemented ThreadPool for Android app loading for multiple parallel calls.

· Utilized Gradle for managing builds.

· Made use of Web Views for certain features and inserted Javascript code into them to perform specific tasks.

· Used Broadcast to send information across multiple parts of the app.

· Implemented Location Services for geolocation and geofencing.

· Decoupled the logics from tangled code and created a Java library for use in different projects.

· Transitioned architecture from MVP to MVVM architecture.

· Used Spring for Android as well as Android Annotations.

· Implemented push notification functionality using Google Cloud Messaging (GCM).

· Incorporated Amazon Alexa into the Android application for easy ordering.

· Developed Domino’s Tracker ® using 3rd-party service APIs to allow users to see the delivery in progress.

· Handled implementation of Google Voice API for voice in dom bot feature.

· Used Jenkins pipelines for Continuous Integration and testing on devices.

· Performed unit tests with JUnit and automated testing with Espresso.

· Switched from manual JSON parsing to automated parsers and adapted the existing code to use the new models.

· Worked on Android app permissions and implementation of deep linking.

Senior Android Mobile Application Developer

Carnival – Miami, FL

April 2018 to August 2020

https://play.google.com/store/apps/details?id=com.carnival.android&hl=en_CA&gl=US

· Used Android Studio to review server-side code and compile the server binary.

· Worked with different teams (server side, application side) to meet the client requirements.

· Implemented Google Cloud Messaging for instant alerts for the customers.

· Implemented OAuth and authentication tokens.

· Implemented entire features using Fragments and Custom Views.

· Used sync adapters to load changed data from server and to send modified data to server from app.

· Implemented RESTful API to consume RESTful web services to retrieve account information, itinerary, and event schedules, etc.

· Utilized RxJava and Retrofit to manage communication on multiple threads.

· Used Bugzilla to track open bugs/enhancements.

· Debugged Android applications to refine logic and code for efficiency.

· Created documentation for admin and end users on the application and server features and use cases.

· Worked with Broadcast receiver features for communication between the application and the Android OS.

· Used Content provider to access and maintain data between applications and OS.

· Used saved preference features to save credentials and other application constants.

· Worked with FCM and a local server notification system for notifications.

· Used Git for version control and code review.

· Documented release notes for Server and Applications.

Android Mobile Application Developer

Sonic Ind. Services – Oklahoma, OK

May 2017 to April 2018

https://play.google.com/store/apps/details?id=com.sonic.sonicdrivein

· Used Bolts framework to perform branching, parallelism, and complex error handling, without the spaghetti code of having many named callbacks.

· Generated a custom behavior in multiple screens included in the CoordinatorLayout to hide the Toolbar and the Floating Action Button on the user scroll.

· Worked in Java and Kotlin using Android Studio IDE.

· Utilized OkHTTP, Retrofit, and Realm database library to implement on-device data store with built-in synchronization to backend data store feature.

· Worked with lead to integrate Kochava SDK for mobile install attribution and analytics for connected devices.

· Implemented authentication support with the On-Site server using a Bound Service and an authenticator component, OAuth library.

· Coded in Clean Code Architecture on the domain and presentation layers in MVP and applied the builder, factory, and façade design patterns to keep layer communication loosely coupled (Dependency principle).

· Integrated PayPal SDK and card.io to view billing history and upcoming payment schedule in custom view.

· Added maps-based data on Google Maps to find the closest SONIC Drive-In locations in user area and see their hours.

· Included Splunk MINT to collect crashes, track all HTTP and HTTPS calls, monitor fail rate trends, and send the data to the Cloud server.

· Coded network module using Volley library to mediate the stream of data between different API Components, supported request prioritization and multiple concurrent network connections.

· Used Firebase Authentication for user logon and SQL Cipher to encrypt transactional areas.

· Used Paging library to load information on demand from data source.

· Created unit test cases and mock object to verify that the specified conditions are met and capture arguments of method calls using Mockito framework.

· Included the Google Guice dependency injection library to inject presenters into views and make code easier to change, unit test, and reuse in other contexts.

Android Application Developer

Plex, Inc. – San Francisco, CA

March 2016 to May 2017

https://play.google.com/store/apps/details?id=com.plexapp.android&hl=en

· Implemented Android app in Eclipse using MVP architecture.

· Used the Singleton and Decorator design patterns.

· Used WebViews, ListViews, and populated lists to display the lists from database using simple adapters.

· Developed the database wrapper functions for data staging and modeled the data objects relevant to the mobile application.

· Integrated with 3rd-Party libraries such as MixPanel and Flurry analytics.

· Replaced Volley with Retrofit for faster JSON parsing.

· Worked on Local Service to perform long running tasks without impact to the UI thread.

· Involved in testing and testing design for the application after each sprint.

· Implemented Robolectric to speed-up unit testing.

· Used Job Scheduler to manage resources and optimize power usage in the application.

· Used Shared preferences and SQLite for data persistence and securing user information.

· Used Picasso for efficient image loading.

· Provided loose coupling using the Dagger dependency injection library from Google.

· Tuned components for high performance and scalability using techniques such as caching, code optimization, and efficient memory management.

· Cleaned up code to make it more efficient, scalable, reusable, consistent, and managed the code base with Git and Jenkins for continuous integration.

· Used Google GSON to parse JSON files.

· Tested using emulator and device testing with multiple versions and sizes with the help of ADB.

· Used Volley to request data from the various APIs.

· Monitored the error logs using Log4J and fixed the problems.

Android Application Software Developer

SunTrust Bank – Atlanta, GA

February 2015 to March 2016

https://play.google.com/store/apps/details?id=com.suntrust.mobilebanking

· Used RESTful APIs to communicate with web services and replaced old third-party libraries versions with more modern and attractive ones.

· Followed Google Material Design Guidelines, added an Action Bar to handle external and constant menu items related to the Android app’s current Activity and extra features.

· Implemented changes to the Android Architecture of some legacy data structures to better support our primary user cases.

· Utilized Parcelables for object transfers within Activities.

· Used Crashlytics to track user behavior and obtain mobile analytics.

· Automated payment integration using Google Wallet and PayPal API for Android.

· Used certificate pinning and AES encryption for security in Android mobile apps.

· Added Trust Manager to support SSL/TLS connection for the Android app connection.

· Stored user credentials with Keychain.

· Used Implicit Intents and ActionBar tabs with Fragments.

· Utilized the Git version control tool as the source control management system.

· Used a Jenkins instance for continuous integration to ensure quality methods.

· Utilized Dagger for dependency injection in Android mobile app.

· Used GSON library to deserialize JSON information.

· Utilized JIRA as the issue tracker, and for epics, stories, and tasks and backlog to manage the project for the Android development team.

Education

Bachelor’s degree in Computer Science

Florida A&M University

Skills

• Languages: Java, Kotlin

• IDE/Dev: Eclipse, Android Studio, IntelliJ

• Design Standards: Material Design

• TDD

• JIRA

• Continuous Integration

• Kanban

• SQLite

• MySQL

• Firebase DB

• MVP

• MVC

• MVVM

• Git

• GitHub

• SVN

• Bitbucket

• SourceTree

• REST

• SOAP

• XML

• JSON

• GSON

• Retrofit

• Loopers

• Loaders

• AsyncTask

• Intent Service

• RxJava

• Dependency Injection

• EventBus

• Dagger

• Crashlytics

• Mixpanel

• Material Dialogs

• RxCache

• Retrofit

• Schematic

• Smart TV

• Certificate Pinning

• MonkeyRunner

• Bluetooth Low Energy

• ExoPlayer

• SyncAdapters

• Volley

• IcePick

• Circle-CI

• Samsung SDK

• Glide

• VidEffects

• JUnit

• Ion

• GSON

• ORMLite

• Push Notifications

• Kickflip

• SpongyCastle

• Parse

• Flurry

• Twitter

• FloatingActionButton

• Espresso

• Fresco

• Moshi

• Jenkins

• UIAutomator

• Parceler

• Marshmallow

• Loaders

• Android Jetpack

• Room

• LiveView

• JobScheduler

• ParallaxPager

• XmlPullParser

• Google Cloud Messaging

• LeakCanary

Certifications and Licenses

Certified Scrum Master
