#TransferLearning


Text

Applying Transfer Learning in Generative AI: Guide for Developers

Generative AI has revolutionized the field of artificial intelligence by enabling machines to create content, from images and music to text and code. One of the most effective techniques in this domain is transfer learning. This approach allows developers to leverage pre-trained models, significantly reducing the time and computational resources needed to build robust generative AI applications.

#GenerativeAI#TransferLearning#AIForDevelopers#MachineLearning#AIInnovation#DeepLearning#ArtificialIntelligence


Text

What Is Transfer Learning? And Benefits Of Transfer Learning

A model that is universally applicable to a wide range of tasks and domains would be a significant advancement in artificial intelligence and machine learning, and transfer learning is one method in that direction that has grown in popularity recently. With transfer learning, a machine learning model uses the knowledge it gained on one task to improve performance on a related task, which accelerates learning overall.

What is transfer learning?

Transfer learning is a machine learning technique in which a model that has already been trained on one problem is adapted to a different but related problem. Rather than starting from scratch, the model reuses features learned on the first problem, which speeds up training and can improve accuracy. For example, a model trained to recognize objects in general images can be adapted to recognize specific categories, such as vehicles or animals. Because it lets a model carry over knowledge from its earlier training data, this method is especially effective when data for the new task is limited.

Transfer Learning Models

The Mechanism of Transfer Learning

Transfer learning, a fundamental technique in modern machine learning, adapts a previously trained model, such as a deep neural network, to a new task. The early layers, which capture more general information, are usually kept, while the layers closest to the model’s output are changed. This works because the first few layers of a deep learning model often detect general patterns (such as edges or textures) that are useful for a variety of tasks.

For instance, a model trained on the ImageNet dataset to recognize thousands of object categories can be reused to classify medical images by replacing its last layer. Instead of training the model from scratch, the knowledge gained from recognizing generic objects is applied to identifying specific medical conditions.
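The keep-the-early-layers, replace-the-last-layer idea can be sketched in plain Python. This is a minimal toy under stated assumptions, not any framework's API: `BACKBONE_WEIGHTS` and `pretrained_backbone` are hypothetical stand-ins for a real pretrained network (just fixed numbers treated as frozen), and the new binary output layer is a logistic-regression head trained by gradient descent on a small, made-up target dataset.

```python
import math

# Hypothetical stand-in for a pretrained backbone. In practice these weights
# would come from a network trained on a large source dataset (e.g. ImageNet);
# here they are fixed numbers that we treat as frozen.
BACKBONE_WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def pretrained_backbone(x):
    """Frozen feature extractor: 2 inputs -> 3 ReLU features."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_new_head(data, epochs=200, lr=0.1):
    """Train only a new binary output layer on top of the frozen features.

    The source model's original multi-class output layer is simply not used;
    the backbone weights are read but never updated.
    """
    w = [0.0] * len(BACKBONE_WEIGHTS)
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = pretrained_backbone(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, feats)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, feats)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    feats = pretrained_backbone(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, feats)) + b)


# Small, made-up target dataset with two well-separated groups.
target_data = [
    ((0.1, 0.2), 0), ((0.3, 0.1), 0), ((0.2, 0.4), 0), ((0.0, 0.3), 0),
    ((1.0, 0.9), 1), ((0.8, 1.2), 1), ((1.5, 0.7), 1), ((1.1, 1.1), 1),
]
w, b = train_new_head(target_data)
print(round(predict(w, b, (1.2, 1.1)), 3))
```

In a real setting the backbone would be a convolutional network loaded with pretrained ImageNet weights from a library such as Keras or PyTorch; what the sketch keeps is that only the new head's parameters change during training.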

Transfer Learning: Why Use It?

Transfer learning is a useful technique in machine learning and deep learning for a number of reasons:

Shorter Training Time: Because the model has already learned useful patterns or features, training is considerably faster.

Better Performance: By using previously learnt knowledge, models may often perform better with less data.

Data Efficiency: Transfer learning helps in situations where standard machine learning hits a bottleneck because there is too little labeled data for the new task.

When to Apply Transfer Learning

Transfer learning is useful in the following situations:

There is only a small amount of labeled data for the target task.

The source and target tasks share common elements, such as object recognition across different image datasets.

Training a model from scratch would take too long or be too computationally costly.

The task is hard, and starting from a pre-trained model greatly increases the likelihood of success.

For example, natural language processing (NLP) models such as BERT or GPT are pre-trained on large text corpora and can then be fine-tuned for applications like sentiment analysis and text summarization.

Pre-trained model

Implementations of Transfer Learning

Depending on the purpose and model type, transfer learning may be implemented in a variety of ways:

Fine-tuning a Pre-trained Model: The most popular method is to choose a pre-trained model, freeze some layers (typically the early ones), and retrain the later layers on the new task.

Feature Extraction: The pre-trained model can also be used as a fixed feature extractor: its learned features are applied to your task without adjusting the pre-trained weights, and only a new classifier is trained on top.

Multi-task learning: A model is trained on several related tasks at once so that it can share information across them.
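As a rough illustration of how the first two strategies differ, the sketch below returns a per-layer "trainable" plan. The layer names and the `plan_trainable_layers` helper are hypothetical; in Keras such a plan would correspond to setting each layer's `trainable` attribute, and in PyTorch to setting `requires_grad` on its parameters. Multi-task learning is not covered here.

```python
def plan_trainable_layers(layer_names, strategy, n_unfrozen=1):
    """Return {layer_name: is_trainable} for a simple transfer-learning strategy.

    strategy:
      "feature_extraction": freeze every pretrained layer; only the new head trains.
      "fine_tuning":        freeze the early layers and unfreeze the last
                            `n_unfrozen` pretrained layers.
    """
    if strategy == "feature_extraction":
        cutoff = len(layer_names)
    elif strategy == "fine_tuning":
        if not 0 <= n_unfrozen <= len(layer_names):
            raise ValueError("n_unfrozen must be between 0 and the number of layers")
        cutoff = len(layer_names) - n_unfrozen
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    # Layers before the cutoff keep their pretrained weights frozen.
    plan = {name: index >= cutoff for index, name in enumerate(layer_names)}
    plan["new_head"] = True  # the replacement output layer is always trained
    return plan


pretrained = ["block1", "block2", "block3", "block4"]  # hypothetical layer names
print(plan_trainable_layers(pretrained, "feature_extraction"))
print(plan_trainable_layers(pretrained, "fine_tuning", n_unfrozen=2))
```

Feature extraction is the cheaper option (fewest trainable parameters); unfreezing more of the later layers gives the model more room to adapt, at the cost of more computation and a higher risk of overfitting on small datasets.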

Benefits of Transfer Learning

Enhanced Efficiency: Models built with transfer learning need less data and fewer computational resources, which makes them well suited to companies and researchers with limited resources.

Faster Deployment: Solutions can be scaled and deployed more quickly because the model doesn’t have to learn from scratch.

Greater Accuracy: Because they start from features learned on large datasets, fine-tuned models often perform better than models trained from scratch on limited data.

Versatility: Transfer learning can be used in a wide range of fields, including financial forecasting, medical diagnostics, and natural language processing, in addition to image classification.

Drawbacks with Transfer Learning

Negative Transfer and Overfitting: When the source task differs too much from the target task, the transferred features can hurt rather than help performance, and fine-tuning a large pre-trained model on a small target dataset can lead to overfitting.

Data Bias: Biases in the data used for a pre-trained model’s original training can carry over and affect its performance on the new task.

Restricted Transferability: Not every pre-trained model can be reused for every task. Transfer learning is most effective when the source and target tasks are substantially similar.

In summary

Machine learning has undergone a revolution thanks to transfer learning, which lets models reuse previously acquired knowledge, speeds up training, can increase accuracy, and makes ML approaches practical in situations with sparse data. Despite some drawbacks, the method’s generalizability across tasks makes it an invaluable resource for researchers and enterprises seeking to implement AI solutions effectively.

Transfer learning in deep learning and other areas will remain essential to extending the applications of artificial intelligence as machine learning develops. To become an expert in AI and machine learning, you can sign up for Purdue University’s Post Graduate Program in AI and Machine Learning, which covers the key techniques and resources in only 11 months!

Read more on Govindhtech.com

#Transferlearning#artificialintelligence#machinelearning#neuralnetwork#deeplearning#naturallanguageprocessing#largedatasets#News#technews#technology#technologynews#technologytrends#govindhtech


Text

Advanced Techniques in Deep Learning: Transfer Learning and Reinforcement Learning

Deep learning has made remarkable strides in artificial intelligence, enabling machines to perform tasks that were once thought to be the exclusive domain of human intelligence. Neural networks, which lie at the heart of deep learning, are loosely inspired by the structure of the human brain; they process large volumes of data, identify patterns, and make informed decisions.

While traditional deep learning models have proven to be highly effective, advanced techniques like transfer learning and reinforcement learning are setting new benchmarks, expanding the potential of AI even further. This article explores these cutting-edge techniques, shedding light on their functionalities, advantages, practical applications, and real-world case studies.

Understanding Transfer Learning

Transfer learning is a powerful machine learning method where a model trained on one problem is repurposed to solve a different, but related, problem. This technique leverages knowledge from a previously solved task to tackle new challenges, much like how humans apply past experiences to new situations. Here's a breakdown of how transfer learning works and its benefits:

Use of Pre-Trained Models: In essence, transfer learning involves using pre-trained models like VGG, ResNet, or BERT. These models are initially trained on large datasets such as ImageNet for visual tasks or extensive text corpora for natural language processing (NLP). This pre-training equips them with a broad understanding of patterns and features.

Fine-Tuning for Specific Tasks: Once a pre-trained model is selected, it undergoes a fine-tuning process. This typically involves modifying the model's architecture:

Freezing Layers: Some layers of the model are frozen to retain the learned features.

Adapting or Replacing Layers: Other layers are adapted or replaced to tailor the model to the specific needs of a new, often smaller, dataset. This customization ensures that the model is optimized for the specific task at hand.

Reduced Training Time and Resources: One of the major benefits of transfer learning is that it significantly reduces the time and computational power required to train a new model. Since the model has already learned essential features from the initial training, it requires less data and fewer resources to fine-tune for new tasks.

Enhanced Performance: By reusing existing models, transfer learning brings valuable pre-learned features and insights, which can lead to higher accuracy in new tasks. This pre-existing knowledge provides a solid foundation, often allowing the model to outperform models trained from scratch.

Effectiveness with Limited Data: Transfer learning is particularly beneficial when labeled data is scarce. This is a common scenario in specialized fields such as medical imaging, where collecting and labeling data can be costly and time-consuming. By leveraging a pre-trained model, researchers can achieve high performance even with a limited dataset.

Transfer learning’s ability to save time, resources, and enhance performance makes it a popular choice across various domains, from image classification to natural language processing and healthcare diagnostics.

Practical Applications of Transfer Learning

Transfer learning has demonstrated its effectiveness across various domains by adapting pre-trained models to solve specific tasks with high accuracy. Below are some key applications:

Image Classification: One of the most common uses of transfer learning is in image classification. For instance, Google’s Inception model, which was pre-trained on the ImageNet dataset, has been successfully adapted for various image recognition tasks. Researchers have fine-tuned the Inception model to detect plant diseases, classify wildlife species, and identify objects in satellite imagery. These applications have achieved high accuracy, even with relatively small amounts of training data.

Natural Language Processing (NLP): Transfer learning has revolutionized how models handle language-related tasks. A prominent example is BERT (Bidirectional Encoder Representations from Transformers), a model pre-trained on vast amounts of text data. BERT has been fine-tuned for a variety of NLP tasks, such as:

Sentiment Analysis: Understanding and categorizing emotions in text, such as product reviews or social media posts.

Question Answering: Powering systems that can provide accurate answers to user queries.

Language Translation: Improving the quality of automated translations between different languages.

Companies have also utilized BERT to develop customer service bots capable of understanding and responding to inquiries, which significantly enhances user experience and operational efficiency.

Healthcare: The healthcare industry has seen significant benefits from transfer learning, particularly in medical imaging. Pre-trained models have been fine-tuned to analyze images like X-rays and MRIs, allowing for early detection of diseases. Examples include:

Pneumonia Detection: Models fine-tuned on medical image datasets to identify signs of pneumonia from chest X-rays.

Brain Tumor Identification: Using pre-trained models to detect abnormalities in MRI scans.

Cancer Detection: Developing models that can accurately identify cancerous lesions in radiology scans, thereby assisting doctors in making timely diagnoses and improving patient outcomes.

Performance Improvements: Studies have shown that transfer learning can significantly enhance model performance. According to research published in the journal Nature, using transfer learning reduced error rates in image classification tasks by 40% compared to models trained from scratch. In the field of NLP, a survey by Google AI reported that transfer learning improved accuracy metrics by up to 10% over traditional deep learning methods.

These examples illustrate how transfer learning not only saves time and resources but also drives significant improvements in accuracy and efficiency across various fields, from agriculture and wildlife conservation to customer service and healthcare diagnostics.

Exploring Reinforcement Learning

Reinforcement learning (RL) offers a unique approach compared to other machine learning techniques. Unlike supervised learning, which relies on labeled data, RL focuses on training an agent to make decisions by interacting with its environment and receiving feedback in the form of rewards or penalties. This trial-and-error method enables the agent to learn optimal strategies that maximize cumulative rewards over time.
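The "cumulative rewards" an RL agent tries to maximize are commonly formalized as the discounted return. The discount factor γ is a standard convention in the RL literature rather than something this post specifies; it makes rewards received sooner count more than rewards received later.

```latex
G_t = r_{t+1} + \gamma\, r_{t+2} + \gamma^{2} r_{t+3} + \cdots = \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1}, \qquad 0 \le \gamma < 1

% Worked example: rewards of 1, 1, 1 and then 0 forever, with \gamma = 0.9:
G_0 = 1 + 0.9 \cdot 1 + 0.9^{2} \cdot 1 = 1 + 0.9 + 0.81 = 2.71
```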

How Reinforcement Learning Works:

Agent and Environment Interaction: In RL, an agent (the decision-maker) perceives the state of its environment, makes decisions, and performs actions that change that state. The environment then provides feedback, which could be a reward (positive feedback) or a penalty (negative feedback), based on the action taken.

Key Components of RL:

Agent: The learner or decision-maker that interacts with the environment.

Environment: The system or scenario within which the agent operates and makes decisions.

Actions: The set of possible moves or decisions the agent can make.

States: Different configurations or situations that the environment can be in.

Rewards: Feedback received by the agent after taking an action, which is used to evaluate the success of that action.

Policy: The strategy or set of rules that define the actions the agent should take based on the current state.
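To make these components concrete, here is a minimal tabular Q-learning sketch in plain Python. Q-learning is one classic RL algorithm, chosen here only for illustration; it is not what the larger systems discussed in this post necessarily use. The corridor environment, its +1 reward at the goal, and all hyperparameters are hypothetical. The `step` function plays the environment, `ACTIONS` and the integer states are the action and state sets, the Q-table stores learned estimates of future reward, and the epsilon-greedy rule and `greedy_policy` are the policy.

```python
import random

# Hypothetical toy environment: a corridor of states 0..4.
# The agent starts at state 0; reaching state 4 ends the episode with reward +1.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = [-1, +1]  # move left, move right


def step(state, action):
    """Environment: apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, 0.0, False


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy policy: explore at random, otherwise pick a best action."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    # Break ties randomly so the untrained agent does not always move left.
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}  # Q-table
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Value of the best follow-up action (zero once the episode ends).
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Move Q(s, a) toward reward + discounted best future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Learned policy: the highest-valued action in each non-terminal state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}


q_table = q_learning()
print(greedy_policy(q_table))
```

With these settings the learned policy should move right (+1) from every non-terminal state, because that is the shortest path to the reward; the discount factor is what makes the shorter path score higher than wandering.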

Adaptive Learning and Real-Time Decision-Making:

The adaptive nature of reinforcement learning makes it particularly effective in dynamic environments where conditions are constantly changing. This adaptability allows systems to learn autonomously, without requiring explicit instructions, making RL suitable for real-time applications where quick, autonomous decision-making is crucial. Examples include robotics, where robots learn to navigate different terrains, and self-driving cars that must respond to unpredictable road conditions.

Statistics and Real-World Impact:

Success in Gaming: One of the most prominent examples of RL’s success is in the field of gaming. DeepMind’s AlphaGo, which combined deep neural networks trained with supervised and reinforcement learning with Monte Carlo tree search, famously defeated Lee Sedol, one of the world's strongest Go players, 4 games to 1 in 2016. This achievement demonstrated RL's capability for strategic thinking and complex decision-making. In DeepMind's published evaluation, AlphaGo also won 99.8% of its games against other Go programs.

Robotic Efficiency: Research by OpenAI has shown that using reinforcement learning can improve the efficiency of robotic grasping tasks by 30%. This increase in efficiency leads to more reliable and faster robotic operations, highlighting RL’s potential in industrial automation and logistics.

Autonomous Driving: In the automotive industry, reinforcement learning is used to train autonomous vehicles for tasks such as lane changing, obstacle avoidance, and route optimization. By continually learning from the environment, RL helps improve the safety and efficiency of self-driving cars. For instance, companies like Waymo and Tesla use RL techniques to enhance their vehicles' decision-making capabilities in real-time driving scenarios.

Reinforcement learning's ability to adapt and learn from interactions makes it a powerful tool in developing intelligent systems that can operate in complex and unpredictable environments. Its applications across various fields, from gaming to robotics and autonomous vehicles, demonstrate its potential to revolutionize how machines learn and make decisions.

Practical Applications of Reinforcement Learning

One of the most prominent applications of reinforcement learning is in robotics. RL is employed to train robots for tasks such as walking, grasping objects, and navigating complex environments. Companies like Boston Dynamics use reinforcement learning to develop robots that can adapt to varying terrains and obstacles, enhancing their functionality and reliability in real-world scenarios.

Reinforcement learning has also made headlines in the gaming industry. DeepMind’s AlphaGo, which used reinforcement learning together with supervised learning and tree search, famously defeated Lee Sedol, one of the world's top players of the ancient board game Go, demonstrating RL's capacity for strategic thinking and complex decision-making. AlphaGo also won 99.8% of its games against other Go programs, showcasing the potential of RL in mastering sophisticated tasks.

In the automotive industry, reinforcement learning is used to train self-driving cars to make real-time decisions. Autonomous vehicles rely on RL to handle tasks such as lane changing, obstacle avoidance, and route optimization. Companies like Tesla and Waymo utilize reinforcement learning to improve the safety and efficiency of their autonomous driving systems, pushing the boundaries of what AI can achieve in real-world driving conditions.

Comparing Transfer Learning and Reinforcement Learning

While both transfer learning and reinforcement learning are advanced techniques that enhance deep learning capabilities, they serve different purposes and excel in different scenarios. Transfer learning is ideal for tasks where a pre-trained model can be adapted to a new but related problem, making it highly effective in domains like image and language processing. It is less resource-intensive and quicker to implement compared to reinforcement learning.

Reinforcement learning, on the other hand, is better suited for scenarios requiring real-time decision-making and adaptation to dynamic environments. Its complexity and need for extensive simulations make it more resource-demanding, but its potential to achieve breakthroughs in fields like robotics, gaming, and autonomous systems is unparalleled.

Conclusion

Transfer learning and reinforcement learning represent significant advancements in the field of deep learning, each offering unique benefits that can be harnessed to solve complex problems. By repurposing existing knowledge, transfer learning allows for efficient and effective solutions, especially when data is scarce. Reinforcement learning, with its ability to learn and adapt through interaction with the environment, opens up new possibilities in areas requiring autonomous decision-making and adaptability.

As AI continues to evolve, these techniques will play a crucial role in developing intelligent, adaptable, and efficient systems. Staying informed about these advanced methodologies and exploring their applications will be key to leveraging the full potential of AI in various industries. Whether it's enhancing healthcare diagnostics, enabling self-driving cars, or creating intelligent customer service bots, transfer learning and reinforcement learning are paving the way for a smarter, more automated future.

#ReinforcementLearning#TransferLearning#DeepLearning#MachineLearning#AI#ArtificialIntelligence#NaturalLanguageProcessing#ImageClassification#Robotics#AutonomousVehicles#PretrainedModels#BERT#AlphaGo#AIResearch#RealTimeAI


Text

youtube

Deep Learning : Generative AI

#GenerativeAI#GANs (Generative Adversarial Networks)#VAEs (Variational Auto encoders)#ArtificialIntelligence#MachineLearning#DeepLearning#NeuralNetworks#AIApplications#CreativeAI#NaturalLanguageGeneration (NLG)#ImageSynthesis#TextGeneration#ComputerVision#DeepfakeTechnology#AIArt#GenerativeDesign#AutonomousSystems#ContentCreation#TransferLearning#ReinforcementLearning#CreativeCoding#AIInnovation#TDM#health#healthcare#bootcamp#llm#youtube#branding#artwork


Text

New Paper: PharmKE: Knowledge Extraction Platform for Pharmaceutical Texts Using Transfer Learning

The new year is off to a great start! Our paper "PharmKE: Knowledge Extraction Platform for Pharmaceutical Texts Using Transfer Learning" has just been published in the MDPI Computers journal. It highlights the work by our team at the Faculty of Computer Science and Engineering - Skopje in the field of transfer learning in pharmacology.

More specifically, in it we introduce PharmKE, a text analysis platform tailored to the pharmaceutical industry that uses deep learning at several stages to perform an in-depth semantic analysis of relevant publications. With the platform, pharmaceutical domain specialists can easily identify and visualize the knowledge extracted from the input texts.

Shout out to our team: Nasi Jofche, Kostadin Mishev, Riste Stojanov, Milos Jovanovik, Eftim Zdravevski and Dimitar Trajanov.

Paper: https://www.mdpi.com/2073-431X/12/1/17


Text

youtube

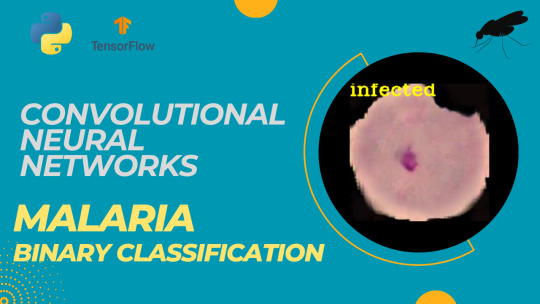

How to Classify Malaria Cells Using a Convolutional Neural Network

This tutorial provides an easy, step-by-step guide to implementing and training a CNN model for malaria cell classification using TensorFlow and Keras.

🔍 What You’ll Learn 🔍:

Data Preparation — In this part, you’ll download the dataset and prepare the data for training. This involves tasks like splitting the data into training and testing sets and applying data augmentation if necessary.

CNN Model Building and Training — In part two, you’ll focus on building a Convolutional Neural Network (CNN) model for the binary classification of malaria cells. This includes model customization, defining layers, and training the model using the prepared data.

Model Testing and Prediction — The final part involves testing the trained model using a fresh image that it has never seen before. You’ll load the saved model and use it to make predictions on this new image to determine whether it’s infected or not.
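Alongside the single-image check described above, the held-out test set from the data-preparation step can be summarized with accuracy, precision and recall. The sketch below is plain Python and is not the tutorial's code; it assumes label 1 means "infected", a 0.5 probability threshold, and made-up predictions in the usage example.

```python
def binary_metrics(y_true, y_prob, threshold=0.5):
    """Accuracy, precision and recall for a binary classifier (1 = infected)."""
    y_pred = [1 if p >= threshold else 0 for p in y_prob]
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # infected, flagged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # uninfected, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarm
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed infection
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Made-up labels and model outputs (predicted probability of "infected").
labels = [1, 1, 0, 0, 1, 0]
probs = [0.9, 0.4, 0.2, 0.7, 0.8, 0.1]
print(binary_metrics(labels, probs))
```

For a screening task like this, recall (the share of infected cells the model catches) is often watched as closely as overall accuracy.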

You can find a link to the code in the blog: https://eranfeit.net/how-to-classify-malaria-cells-using-convolutional-neural-network/

Full code description for Medium users: https://medium.com/@feitgemel/how-to-classify-malaria-cells-using-convolutional-neural-network-c00859bc6b46

You can find more tutorials and join my newsletter here: https://eranfeit.net/

Check out our tutorial here: https://youtu.be/WlPuW3GGpQo&list=UULFTiWJJhaH6BviSWKLJUM9sg

Enjoy

Eran

#Python #Cnn #TensorFlow #deeplearning #neuralnetworks #imageclassification #convolutionalneuralnetworks #computervision #transferlearning


Text

This colorful infographic demystifies the concept of transfer learning in machine learning. It illustrates how a pre-trained source model serves as a foundation for a target model, which is then fine-tuned with new data for a specific task. The flowcharts trace the process from input to output in the source model and the transfer of knowledge to the target model. Both networks contain convolutional (CNN) and fully connected (FC) layers; the source model's output layer classifies thousands of classes, while the target model's output layer performs binary classification.

🎨 Why It’s Interesting:

Simplifies a complex topic for learners.

Engages viewers with vibrant visuals.

Makes transfer learning accessible.

#TransferLearning #MachineLearning #DeepLearning #NeuralNetworks #AI #DataScience #ModelTraining #FineTuning #SourceModel #TargetModel #ConvolutionalLayers #FullyConnectedLayers #KnowledgeTransfer #PretrainedModel #BinaryClassification #ArtificialIntelligence #TechExplained #VisualLearning #Infographic #LearnML #MLConcepts #EducationalContent #Simplified #ComplexTopics #VisualizeML #UnderstandingML #MLBeginners #MLExploration #MLCommunity #MLForAll


Text

"Exploring the Intersection of AI and Image Captioning: How Machines Generate Accurate and Meaningful Descriptions"

AI technology has come a long way in recent years, and one area where it has made significant progress is in image captioning. Image captioning refers to the process of generating a textual description of an image or video. In this article, we will explore how AI technology works with captioning and the different approaches used to generate captions.

Neural Networks

Neural networks are a key technology in image captioning. These networks are loosely inspired by the human brain and learn from examples and data. They consist of several layers of nodes, each of which performs a specific operation on its input. For image captioning, the neural network is trained on a large dataset of images and their associated captions, and then uses this training to generate captions for new images.

Natural Language Processing

Natural language processing (NLP) is another important technology used in image captioning. NLP is a subfield of AI that focuses on the interaction between computers and human language. It involves the analysis of language and the development of algorithms that can understand and generate natural language. In image captioning, NLP is used to generate captions that are grammatically correct and semantically meaningful.

Attention Mechanism

The attention mechanism is a technique used to improve the performance of neural networks in image captioning. It allows the network to focus on specific parts of the image as it generates each word of the caption. For example, if the image contains a person, the attention mechanism can direct the network to the person's face when generating the caption. This helps make the generated caption more accurate and relevant to the image.
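One common way to implement this idea is scaled dot-product attention: score each image region against the decoder's current state, turn the scores into weights with a softmax, and use the weighted sum of region features as context for the next word. The sketch below is a plain-Python illustration under assumptions: the region vectors and the query are made-up numbers, keys and values are the same region features, and other captioning models score regions differently (for example with a small learned network).

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, regions):
    """Scaled dot-product attention of one decoder query over image regions.

    Returns (weights, context): one weight per region, and the weighted sum
    of region feature vectors passed to the decoder for the next word.
    """
    d = len(query)
    scores = [sum(q * r for q, r in zip(query, region)) / math.sqrt(d) for region in regions]
    weights = softmax(scores)
    context = [sum(w * region[i] for w, region in zip(weights, regions)) for i in range(d)]
    return weights, context


# Hypothetical 3-D features for three image regions: "face", "shirt", "background".
regions = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
query = [2.0, 0.1, 0.0]  # decoder state that currently "looks for" a face
weights, context = attend(query, regions)
print([round(w, 3) for w in weights])
```

The largest weight lands on the region whose features best match the query, which is the "focus on the face" behaviour described above.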

Transfer Learning

Transfer learning is a technique that involves using a pre-trained neural network as a starting point for a new task. In image captioning, transfer learning can be used to improve the performance of the network by starting with a pre-trained network that has already been trained on a large dataset of images and captions. This allows the network to learn more quickly and accurately, reducing the amount of training time required.

In conclusion, AI technology has made significant strides in image captioning, thanks to the use of neural networks, natural language processing, attention mechanisms, and transfer learning. These technologies have enabled machines to generate captions that are accurate, meaningful, and grammatically correct. As AI technology continues to evolve, we can expect to see even more advanced image captioning systems that can understand and describe images and videos with greater accuracy and nuance.

#AItechnology#ImageCaptioning#NeuralNetworks#NaturalLanguageProcessing#AttentionMechanism#TransferLearning#ArtificialIntelligence#MachineLearning#ComputerVision#informatology#technologynews#information


Photo

Collective Intelligence . . . #machinelearning #datascience #datascientist #analytics #infographic #infographics #dataanalytics #collectiveintelligence #crowdwiz #transferlearning #artificialintelligence #statistics #intelligence #knowledge (at United States of America (USA)) https://www.instagram.com/p/B0od96QgSu0/?igshid=3b5kjxeeubv8

#machinelearning#datascience#datascientist#analytics#infographic#infographics#dataanalytics#collectiveintelligence#crowdwiz#transferlearning#artificialintelligence#statistics#intelligence#knowledge


Photo

Machines have the capability to transfer their knowledge from one domain to another. Google researchers are now seeking to develop analysis techniques to understand how transfer learning works in machines.


Photo

#WeHadItFirst Your Mistake by @only1sonta on DunkDolls.com #DunkDolls #BlockchainMusic #CryptoMusic #Algorithm #Bitcoin #CryptoCurrency #Blockchain #node #ArtificialIntelligence #MachineLearning #PatternRecognition #AI #TransferLearning #Bittorrent #CashApp #Worcester $DunkDolls https://www.instagram.com/p/B2QMu5xATz0/?igshid=zwcz6f5j84gu

#wehaditfirst#dunkdolls#blockchainmusic#cryptomusic#algorithm#bitcoin#cryptocurrency#blockchain#node#artificialintelligence#machinelearning#patternrecognition#ai#transferlearning#bittorrent#cashapp#worcester


Text

youtube

#generativeai #artificialintelligence #chatgpt #entrepreneur #business #datascience #machinelearning

Unlock the world of Generative AI with this informative video! Delve into the realm of Generative AI and discover its inner workings, common applications, various model types, and the fundamentals you need to use it. Whether you're a beginner or an enthusiast, this video breaks down the complexities of Generative AI in an accessible and engaging manner.

🌐 Explore Common Applications: Uncover the diverse applications of Generative AI, from creative endeavors to practical solutions. Learn how this cutting-edge technology is transforming industries and enhancing user experiences.

🧠 Understand Model Types: Dive into the intricacies of different Generative AI model types, gaining insights into their unique functionalities and applications. From GANs (Generative Adversarial Networks) to VAEs (Variational Autoencoders), this video provides a comprehensive overview.

🔍 Master Fundamentals: Equip yourself with the foundational knowledge needed to harness the power of Generative AI. Understand the key concepts and principles that drive these intelligent systems, empowering you to navigate this fascinating field with confidence.

🚀 Elevate Your Understanding: Whether you're a developer, student, or tech enthusiast, this video caters to all levels of expertise. Elevate your understanding of Generative AI and stay ahead in the ever-evolving landscape of artificial intelligence.

🔗 Keywords: Generative AI, Artificial Intelligence, GANs, VAEs, Machine Learning, Deep Learning, Neural Networks, Applications of AI, AI Fundamentals, Technology Trends.

Don't miss out on the opportunity to expand your knowledge of Generative AI. Hit play now and embark on a journey into the future of artificial intelligence.

#GenerativeAI #GANs #VAEs #ArtificialIntelligence #MachineLearning #DeepLearning #NeuralNetworks #AIApplications #CreativeAI #NaturalLanguageGeneration #ImageSynthesis #TextGeneration #ComputerVision #DeepfakeTechnology #AIArt #GenerativeDesign #AutonomousSystems #image #translation #ContentCreation #TransferLearning #ReinforcementLearning #CreativeCoding #AIInnovation #tdm

#youtube#artificial intelligence#machine learning#artists on tumblr#animation#art#artwork#branding#accounting#architecture


Link

We assume an underlying structure (or Form) behind the things we see. If you see a shadow, something is casting it; once you have seen a giraffe, you recognize other giraffes - old and young, large and small. And now it looks like the same thing happens with #NeuralNetworks too: when it comes to image recognition, they also rely on commonality in the underlying dimensional manifold! Next stop - #TransferLearning! #MachineLearning #DeepLearning

#Deep Learning#DeepLearning#Machine Learning#MachineLearning#NeuralNetworks#Neural Network#Ai#Transfer Learning#TransferLearning


Text

youtube

👁️ CNN Image Classification for Retinal Health Diagnosis with TensorFlow and Keras! 👁️

How to gather and preprocess a dataset of over 80,000 retinal images, and how to design and train a CNN deep learning model that can accurately distinguish between these health categories.

What You'll Learn:

🔹 Data Collection and Preprocessing: Discover how to acquire and prepare retinal images for optimal model training.

🔹 CNN Architecture Design: Create a customized architecture tailored to retinal image classification.

🔹 Training Process: Explore the intricacies of model training, including parameter tuning and validation techniques.

🔹 Model Evaluation: Learn how to assess the performance of your trained CNN on a separate test dataset.
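For the data-preparation and validation steps, a stratified split keeps each category's proportion similar across the training, validation and test sets, which matters when some conditions are rarer than others. This plain-Python `stratified_split` helper is a hypothetical sketch, not the tutorial's code; the file names, category names and 80/10/10 proportions in the usage example are made up.

```python
import random
from collections import defaultdict


def stratified_split(samples, labels, val_frac=0.1, test_frac=0.1, seed=42):
    """Split samples into train/val/test while keeping class proportions similar."""
    by_class = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_class[label].append(sample)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "test": []}
    for label, items in by_class.items():
        rng.shuffle(items)
        n_test = int(len(items) * test_frac)
        n_val = int(len(items) * val_frac)
        splits["test"] += [(s, label) for s in items[:n_test]]
        splits["val"] += [(s, label) for s in items[n_test:n_test + n_val]]
        splits["train"] += [(s, label) for s in items[n_test + n_val:]]
    return splits


# Hypothetical file names and categories (60 "normal", 40 "disease_a").
files = [f"retina_{i}.png" for i in range(100)]
cats = ["normal"] * 60 + ["disease_a"] * 40
parts = stratified_split(files, cats)
print({name: len(rows) for name, rows in parts.items()})
```

The validation set is used for tuning during training, and the test set is touched only once, for the final evaluation.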

You can find a link to the code in the blog: https://eranfeit.net/build-a-cnn-model-for-retinal-image-diagnosis/

You can find more tutorials and join my newsletter here: https://eranfeit.net/

Check out our tutorial here: https://youtu.be/PVKI_fXNS1E&list=UULFTiWJJhaH6BviSWKLJUM9sg

Enjoy

Eran

#Python #Cnn #TensorFlow #deeplearning #neuralnetworks #imageclassification #convolutionalneuralnetworks #computervision #transferlearning

#artificial intelligence#convolutional neural network#deep learning#tensorflow#youtube#python#machine learning#programming#code#Youtube


Text

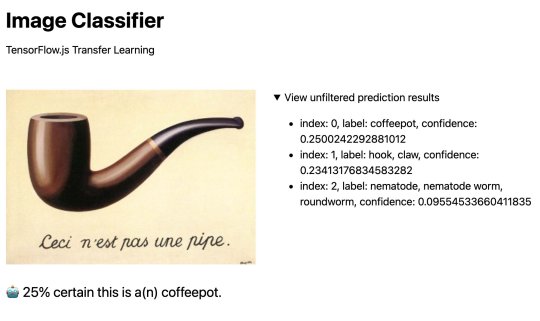

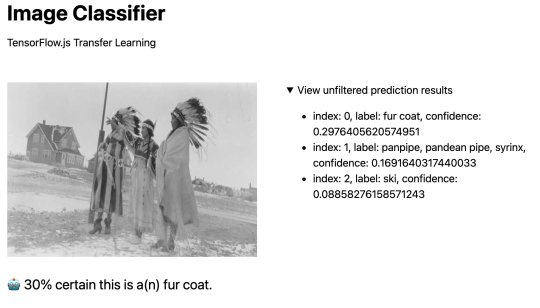

The image classifier is in agreement about this treachery. This is a coffeepot.

As part of my research informatics work, I've been prototyping how we can use #machinelearning to create metadata or connect it to scholarship and research processes. This image classifier uses #transferlearning based on the ImageNet database of 14,197,122 images (http://image-net.org) and samples a few @msulibrary digital collection images. Use the link below and refresh to cycle through the predictions.

https://www.jasonclark.info/files/image-classifier/

Opening René Magritte jokes aside... This prototyping has been instructive to understand the limitations of #machinelearning image classification. More importantly, it gives me faith that this can be improved with some additional models. The #TensorFlow library I'm using here allows me to register the confidence it has in the predictions. Moreover, I can train and serve an additional model to help it. There is a path to improvement.
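The confidence-based path to improvement described above can be sketched as a simple review rule: convert the model's raw scores to probabilities, accept the top label only above a threshold, and otherwise send the item to a cataloguer. This is plain Python, not the TensorFlow API; the class names, scores and the 0.6 threshold are hypothetical.

```python
import math


def top_k_with_confidence(logits, class_names, k=3):
    """Convert raw model scores to softmax probabilities; return the top-k labels."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def suggest_metadata(logits, class_names, min_confidence=0.6):
    """Hypothetical cataloguing rule: keep the top label, but flag it for human
    review when the model's confidence is below `min_confidence`."""
    label, prob = top_k_with_confidence(logits, class_names, k=1)[0]
    return {"label": label, "confidence": prob, "needs_review": prob < min_confidence}


classes = ["coffeepot", "teapot", "vase"]
print(suggest_metadata([4.0, 1.0, 0.5], classes))  # confident prediction
print(suggest_metadata([1.0, 0.9, 0.8], classes))  # uncertain: route to a person
```

A rule like this keeps a person in the loop for exactly the uncertain cases, which is where misdescription is most likely.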

Too often, I've seen these limitations put forward as reasons to not pursue or worry about how #machinelearning will work in cultural heritage settings. A refrain of "Oh, that dumb machine can't figure out what this is!" But, the scale and opportunity to refine our metadata and classification routines within these tools is too hard for me to ignore. The scale gives me pause. Because if we get classification and description wrong, we not only impact discovery, we might also label someone's humanity incorrectly. See image below where Native American context is not present in the prediction.

These #machinelearning classification applications need to be grounded in requirements of "do no harm" & ethics. Sara Mannheimer has been leading some of our local and national work around these ethical questions and I'm looking forward to seeing where we go from here. And if you are interested, code for the #transferlearning image classifier is available here: https://github.com/jasonclark/image-classifier.


Photo

Winners of the @inaturalist Challenge 2017 released their model on #TensorflowHub showcasing advantages of transfer learning! #tfhub #transferlearning Check it out here ↓ https://t.co/ELrBIBWJUn
