Understanding Different Types of Variables in Statistical Analysis

Summary: This blog delves into the types of variables in statistical analysis, including quantitative (continuous and discrete) and qualitative (nominal and ordinal). Understanding these variables is critical for practical data interpretation and statistical analysis.

Introduction

Statistical analysis is crucial in research and data interpretation, providing insights that guide decision-making and uncover trends. By analysing data systematically, researchers can draw meaningful conclusions and validate hypotheses.

Understanding the types of variables in statistical analysis is essential for accurate data interpretation. Variables represent different aspects of the data, and a variable's type determines which statistical methods can be applied and how the results should be read.

This blog aims to explore the various types of variables in statistical analysis, explaining their definitions and applications to enhance your grasp of how they influence data analysis and research outcomes.

What is Statistical Analysis?

Statistical analysis involves applying mathematical techniques to understand, interpret, and summarise data. It transforms raw data into meaningful insights by identifying patterns, trends, and relationships. The primary purpose is to make informed decisions based on data, whether for academic research, business strategy, or policy-making.

How Statistical Analysis Helps in Drawing Conclusions

Statistical analysis helps analysts draw conclusions by providing a structured approach to examining data. It involves summarising data through measures of central tendency (mean, median, mode) and variability (range, variance, standard deviation). Using these summaries, analysts can detect trends and anomalies.
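
Here is a minimal sketch of these summaries using Python's standard `statistics` module. The ten test scores are invented for illustration.

```python
import statistics

# Hypothetical test scores for ten students (illustrative data only).
scores = [72, 85, 85, 90, 64, 78, 85, 92, 70, 81]

summary = {
    "mean": statistics.mean(scores),          # central tendency
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "range": max(scores) - min(scores),       # variability
    "variance": statistics.variance(scores),  # sample variance (n - 1 denominator)
    "stdev": statistics.stdev(scores),        # sample standard deviation
}

for name, value in summary.items():
    print(f"{name:>8}: {value:.2f}")
```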

More advanced techniques, such as hypothesis testing and regression analysis, help make predictions and determine the relationships between variables. These insights allow decision-makers to base their actions on empirical evidence rather than intuition.

Types of Statistical Analyses

Analysts can effectively interpret data, support their findings with evidence, and make well-informed decisions by employing both descriptive and inferential statistics.

Descriptive Statistics: This type focuses on summarising and describing the features of a dataset. Techniques include calculating averages and percentages and creating visual representations like charts and graphs. Descriptive statistics provide a snapshot of the data, making it easier to understand and communicate.

Inferential Statistics: Inferential analysis goes beyond summarisation to make predictions or generalisations about a population based on a sample. It includes hypothesis testing, confidence intervals, and regression analysis. This type of analysis lets researchers draw conclusions about a broader population from data collected on a smaller subset.
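
To make the inferential step concrete, here is a sketch of a 95% confidence interval for a population mean built from a small sample. The heights are made up. The interval assumes the sample is random and the population roughly normal, and the t critical value is hard-coded from a standard table rather than computed.

```python
import math
import statistics

# Hypothetical sample: heights (cm) of 12 randomly sampled adults.
heights = [162.1, 175.4, 168.0, 181.2, 158.9, 170.3,
           177.8, 165.5, 172.0, 169.4, 174.6, 160.7]

n = len(heights)
mean = statistics.mean(heights)
sem = statistics.stdev(heights) / math.sqrt(n)   # estimated standard error of the mean

# 95% confidence interval for the population mean: mean ± t * SE.
# 2.201 is the two-sided 95% critical value of Student's t with n - 1 = 11
# degrees of freedom (from a standard t table); it assumes roughly normal data.
T_CRIT = 2.201
lower, upper = mean - T_CRIT * sem, mean + T_CRIT * sem
print(f"sample mean {mean:.1f} cm, 95% CI ({lower:.1f}, {upper:.1f})")
```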

What are Variables in Statistical Analysis?

In statistical analysis, a variable represents a characteristic or attribute that can take on different values. Variables are the foundation for collecting and analysing data, allowing researchers to quantify and examine various study aspects. They are essential components in research, as they help identify patterns, relationships, and trends within the data.

How Variables Represent Data

Variables act as placeholders for data points and can be used to measure different aspects of a study. For instance, variables might include test scores, study hours, and socioeconomic status in a survey of student performance.

Researchers can systematically analyse how different factors influence outcomes by assigning numerical or categorical values to these variables. This process involves collecting data, organising it, and then applying statistical techniques to draw meaningful conclusions.

Importance of Understanding Variables

Understanding variables is crucial for accurate data analysis and interpretation. Whether a variable is continuous, discrete, nominal, or ordinal affects how its data can be analysed and interpreted. For example, continuous variables like height or weight can be measured precisely. In contrast, nominal variables like gender or ethnicity categorise data without implying any order.

By correctly identifying and using variables, researchers can apply appropriate statistical methods and avoid misleading results. Accurate analysis hinges on a clear grasp of variable types and their roles in the research process, which makes the interpretation of data more reliable and actionable.
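
The idea that variable type constrains the analysis can be sketched as a small dispatch function. `Kind` and `describe` are hypothetical names for this illustration, not part of any library. The summaries chosen follow the distinctions described in this post: mean and standard deviation, the median category, and counts with the mode.

```python
import statistics
from collections import Counter
from enum import Enum

class Kind(Enum):
    CONTINUOUS = "continuous"
    DISCRETE = "discrete"
    NOMINAL = "nominal"
    ORDINAL = "ordinal"

def describe(values, kind, order=None):
    """Return summaries that are meaningful for the given kind of variable.

    For ordinal data, `order` must list the categories from lowest to highest.
    """
    if kind in (Kind.CONTINUOUS, Kind.DISCRETE):
        # Quantitative: arithmetic is meaningful, so mean and standard deviation apply.
        return {"mean": statistics.mean(values), "stdev": statistics.stdev(values)}
    if kind is Kind.ORDINAL:
        # Ordinal: order is meaningful but distances are not, so report the median category.
        ranks = [order.index(v) for v in values]
        return {"median": order[statistics.median_low(ranks)]}
    # Nominal: only counts and the most frequent category (mode) make sense.
    counts = Counter(values)
    return {"counts": dict(counts), "mode": counts.most_common(1)[0][0]}

print(describe([1.62, 1.75, 1.68], Kind.CONTINUOUS))
print(describe(["A", "O", "O", "B"], Kind.NOMINAL))
print(describe(["poor", "good", "fair", "good"], Kind.ORDINAL,
               order=["poor", "fair", "good", "excellent"]))
```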

Types of Variables in Statistical Analysis

Understanding the different types of variables in statistical analysis is crucial for practical data interpretation and decision-making. Variables are characteristics or attributes that researchers measure and analyse to uncover patterns, relationships, and insights. These variables can be broadly categorised into quantitative and qualitative types, each with distinct characteristics and significance.

Quantitative Variables

Quantitative variables represent measurable quantities and can be expressed numerically. They allow researchers to perform mathematical operations and statistical analyses to derive insights.

Continuous Variables

Continuous variables can take any value within a given range, so there are infinitely many possible values. They can be measured with arbitrary precision, limited only by the measuring instrument, and their values are not restricted to specific discrete points.

Examples of continuous variables include height, weight, temperature, and time. For instance, a person's height can be measured with varying degrees of precision, from centimetres to millimetres, and it can fall anywhere within a specific range.

Continuous variables are crucial for analyses that require detailed and precise measurement. They enable researchers to conduct a wide range of statistical tests, such as calculating averages and standard deviations and performing regression analyses. The granularity of continuous variables allows for nuanced insights and more accurate predictions.

Discrete Variables

Discrete variables can only take distinct, separate values, typically whole-number counts. Unlike continuous variables, they cannot be subdivided into finer increments and are usually counted rather than measured.

Examples of discrete variables include the number of students in a class, the number of cars in a parking lot, and the number of errors in a software application. For instance, you can count 15 students in a class, but you cannot have 15.5 students.

Discrete variables are essential when counting is required. They are often summarised with frequency distributions. Statistical methods suited to count data include Poisson regression, which models counts directly, and chi-square tests, which compare observed and expected frequencies.
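
A frequency distribution for a discrete count variable can be sketched with the standard library, alongside the counts a Poisson model would predict. The error counts are hypothetical, and the Poisson rate is estimated by the sample mean. With only 20 builds the comparison is descriptive, not a formal goodness-of-fit test.

```python
import math
from collections import Counter

# Hypothetical data: number of errors found in each of 20 software builds.
errors = [0, 1, 2, 1, 0, 3, 1, 2, 0, 1, 1, 4, 2, 0, 1, 2, 1, 0, 3, 1]

n = len(errors)
freq = Counter(errors)     # frequency distribution of the counts
lam = sum(errors) / n      # Poisson rate estimated by the sample mean

def poisson_pmf(k, lam):
    """P(X = k) for a Poisson(lam) variable."""
    return lam ** k * math.exp(-lam) / math.factorial(k)

print(" k  observed  expected (Poisson)")
for k in range(max(errors) + 1):
    print(f"{k:>2}  {freq[k]:>8}  {n * poisson_pmf(k, lam):>8.2f}")
```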

Qualitative Variables

Qualitative or categorical variables describe characteristics or attributes that cannot be measured numerically but can be classified into categories.

Nominal Variables

Nominal variables categorise data without inherent order or ranking. These variables represent different categories or groups that are mutually exclusive and do not have a natural sequence.

Examples of nominal variables include gender, ethnicity, and blood type. For instance, gender can be classified as male, female, or non-binary, but there is no inherent ranking between these categories.

Nominal variables classify data into distinct groups and are crucial for categorical data analysis. Statistical techniques like frequency tables, bar charts, and chi-square tests are commonly employed to analyse nominal variables. Understanding nominal variables helps researchers identify patterns and trends across different categories.
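
A chi-square test of independence between two nominal variables can be sketched by hand for a 2x2 table. The table is hypothetical. The sketch computes the plain Pearson statistic without Yates' continuity correction, which some libraries apply to 2x2 tables by default. It gets the p-value from the fact that a chi-square variable with one degree of freedom is the square of a standard normal.

```python
from statistics import NormalDist

# Hypothetical contingency table: rows = customer region (nominal),
# columns = preferred contact channel (nominal).
#            email  phone
table = [[30, 10],   # region A
         [20, 20]]   # region B

row_totals = [sum(row) for row in table]
col_totals = [sum(col) for col in zip(*table)]
n = sum(row_totals)

# Pearson chi-square statistic: sum of (observed - expected)^2 / expected,
# where expected = row total * column total / n under independence.
chi2 = 0.0
for i, row in enumerate(table):
    for j, observed in enumerate(row):
        expected = row_totals[i] * col_totals[j] / n
        chi2 += (observed - expected) ** 2 / expected

# A 2x2 table has 1 degree of freedom; chi-square(1) is a squared standard
# normal, so the upper-tail p-value is 2 * (1 - Phi(sqrt(chi2))).
p_value = 2 * (1 - NormalDist().cdf(chi2 ** 0.5))
print(f"chi-square = {chi2:.3f}, p ≈ {p_value:.4f}")
```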

Ordinal Variables

Ordinal variables represent categories with a meaningful order or ranking, but the differences between the categories are not necessarily uniform or quantifiable. These variables provide information about the relative position of categories.

Examples of ordinal variables include education level (e.g., high school, bachelor's degree, master's degree) and customer satisfaction ratings (e.g., poor, fair, good, excellent). The categories have a specific order in these cases, but the exact distance between the ranks is not defined.

Ordinal variables are essential for analysing data where the order of categories matters but the precise differences between categories are unknown. Researchers use ordinal scales to measure attitudes, preferences, and rankings. Statistical techniques such as the median, percentiles, and ordinal logistic regression are used to analyse ordinal data and understand the relative positioning of categories.
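
The median of ordinal data can be sketched with an `IntEnum` whose integer codes encode order only. The satisfaction responses are hypothetical. `statistics.median_low` is used so the result is always an observed category. Averaging the codes would implicitly assume equal distances between categories, which an ordinal scale does not guarantee.

```python
import statistics
from enum import IntEnum

class Satisfaction(IntEnum):
    """Ordinal scale: the integer codes encode order only, not equal distances."""
    POOR = 1
    FAIR = 2
    GOOD = 3
    EXCELLENT = 4

# Hypothetical survey responses.
responses = [Satisfaction.GOOD, Satisfaction.FAIR, Satisfaction.EXCELLENT,
             Satisfaction.GOOD, Satisfaction.POOR, Satisfaction.GOOD,
             Satisfaction.FAIR]

# median_low returns an actual observed category, never a value "between" two.
median = statistics.median_low(responses)
# Share of responses at or above GOOD: a percentile-style summary that uses only order.
share_good_or_better = sum(r >= Satisfaction.GOOD for r in responses) / len(responses)

print(median.name, f"{share_good_or_better:.0%}")
```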

Comparison Between Quantitative and Qualitative Variables

Quantitative and qualitative variables serve different purposes and are analysed using distinct methods. Understanding their differences is essential for choosing the appropriate statistical techniques and drawing accurate conclusions.

Measurement: Quantitative variables are measured numerically and can be subjected to arithmetic operations, whereas qualitative variables are classified without numerical measurement.

Analysis Techniques: Quantitative variables are analysed using statistical methods like mean, standard deviation, and regression analysis, while qualitative variables are analysed using frequency distributions, chi-square tests, and non-parametric techniques.

Data Representation: Continuous and discrete variables are often represented using histograms, scatter plots, and box plots. Nominal and ordinal variables are usually displayed using bar charts, pie charts, and frequency tables.

Frequently Asked Questions

What are the main types of variables in statistical analysis?

The main types of variables in statistical analysis are quantitative (continuous and discrete) and qualitative (nominal and ordinal). Quantitative variables involve measurable numerical data, while qualitative variables categorise data without numerical measurement.

How do continuous and discrete variables differ?

Continuous variables can take any value within a range and are measured rather than counted, such as height or temperature. Discrete variables, like the number of students, can only take specific, countable values and cannot be subdivided.

What are nominal and ordinal variables in statistical analysis?

Nominal variables categorise data into distinct groups without any inherent order, like gender or blood type. Ordinal variables involve categories with a meaningful order but unequal intervals, such as education levels or satisfaction ratings.

Conclusion

Understanding the types of variables in statistical analysis is crucial for accurate data interpretation. By distinguishing between quantitative variables (continuous and discrete) and qualitative variables (nominal and ordinal), researchers can select appropriate statistical methods and draw valid conclusions. This clarity enhances the quality and reliability of data-driven insights.

“The machines we have now, they’re not conscious,” he says. “When one person teaches another person, that is an interaction between consciousnesses.” Meanwhile, AI models are trained by adjusting so-called “weights”, or the strength of connections between different variables in the model, in order to get a desired output. “It would be a real mistake to think that when you’re teaching a child, all you are doing is adjusting the weights in a network.”

Chiang’s main objection, a writerly one, is with the words we choose to describe all this. Anthropomorphic language such as “learn”, “understand”, “know” and personal pronouns such as “I” that AI engineers and journalists project on to chatbots such as ChatGPT create an illusion. This hasty shorthand pushes all of us, he says — even those intimately familiar with how these systems work — towards seeing sparks of sentience in AI tools, where there are none.

“There was an exchange on Twitter a while back where someone said, ‘What is artificial intelligence?’ And someone else said, ‘A poor choice of words in 1954’,” he says. “And, you know, they’re right. I think that if we had chosen a different phrase for it, back in the ’50s, we might have avoided a lot of the confusion that we’re having now.”

So if he had to invent a term, what would it be? His answer is instant: applied statistics.

“It’s genuinely amazing that . . . these sorts of things can be extracted from a statistical analysis of a large body of text,” he says. But, in his view, that doesn’t make the tools intelligent. Applied statistics is a far more precise descriptor, “but no one wants to use that term, because it’s not as sexy”.

[...]

Given his fascination with the relationship between language and intelligence, I’m particularly curious about his views on AI writing, the type of text produced by the likes of ChatGPT. How, I ask, will machine-generated words change the type of writing we both do? For the first time in our conversation, I see a flash of irritation. “Do they write things that speak to people? I mean, has there been any ChatGPT-generated essay that actually spoke to people?” he says.

Chiang’s view is that large language models (or LLMs), the technology underlying chatbots such as ChatGPT and Google’s Bard, are useful mostly for producing filler text that no one necessarily wants to read or write, tasks that anthropologist David Graeber called “bullshit jobs”. AI-generated text is not delightful, but it could perhaps be useful in those certain areas, he concedes.

“But the fact that LLMs are able to do some of that — that’s not exactly a resounding endorsement of their abilities,” he says. “That’s more a statement about how much bullshit we are required to generate and deal with in our daily lives.”

In the search for life on exoplanets, finding nothing is something too

What if humanity's search for life on other planets returns no hits? A team of researchers led by Dr. Daniel Angerhausen, a physicist in Professor Sascha Quanz's Exoplanets and Habitability Group at ETH Zurich and a SETI Institute affiliate, tackled this question by considering what could be learned about life in the universe if future surveys detect no signs of life on other planets. The study, which has just been published in The Astronomical Journal and was carried out within the framework of the Swiss National Centre of Competence in Research, PlanetS, relies on a Bayesian statistical analysis to establish the minimum number of exoplanets that should be observed to obtain meaningful answers about the frequency of potentially inhabited worlds.

Accounting for uncertainty

The study concludes that if scientists were to examine 40 to 80 exoplanets and find a "perfect" no-detection outcome, they could confidently conclude that fewer than 10 to 20 percent of similar planets harbour life. In the Milky Way, this 10 percent would correspond to about 10 billion potentially inhabited planets. This type of finding would enable researchers to put a meaningful upper limit on the prevalence of life in the universe, an estimate that has, so far, remained out of reach.
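
The logic of an upper limit from a null result can be sketched with the simplest Bayesian model. The model assumes each observed planet is independently inhabited with probability f, that detection is perfect, and that the prior on f is uniform. These are illustrative assumptions, not the published study's model, so the printed numbers should not be read as the paper's results.

```python
def upper_limit(n_planets, credibility=0.95):
    """Upper credible limit on the fraction f of inhabited planets after
    n_planets observations with zero detections.

    Toy-model assumptions: uniform Beta(1, 1) prior on f and perfect detection,
    so the posterior is Beta(1, n_planets + 1) with CDF 1 - (1 - f) ** (n_planets + 1).
    """
    return 1 - (1 - credibility) ** (1 / (n_planets + 1))

for n in (10, 20, 40, 80):
    print(f"{n:>3} planets, no detections -> f < {upper_limit(n):.3f} (95% credibility)")
```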

There is, however, a relevant catch in that ‘perfect’ null result: every observation comes with a certain level of uncertainty, so it's important to understand how this affects the robustness of the conclusions that may be drawn from the data. Uncertainties in individual exoplanet observations take different forms. Interpretation uncertainty is linked to false negatives, which may correspond to missing a biosignature and mislabeling a world as uninhabited. So-called sample uncertainty, by contrast, introduces biases into the observed samples, for example when unrepresentative planets are included even though they fail to meet certain agreed-upon requirements for the presence of life.
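
Interpretation uncertainty can be folded into the same kind of toy model by assuming that an inhabited planet is detected only with some probability (one minus the false-negative rate). Under a uniform prior the posterior still has a closed form. The sketch shows how the upper limit loosens as detection becomes less reliable. The `detection_prob` parameter and the values tried are hypothetical.

```python
def upper_limit(n_planets, detection_prob, credibility=0.95):
    """Upper credible limit on the inhabited fraction f after n_planets null results,
    when an inhabited planet is detected only with probability detection_prob.

    Toy-model assumptions (not the study's): uniform prior on f, independent planets,
    no false positives. Each planet then yields a detection with probability
    detection_prob * f, so the likelihood of all-null results is
    (1 - detection_prob * f) ** n_planets, and the posterior CDF is
    (1 - (1 - d*x)**m) / (1 - (1 - d)**m) with d = detection_prob, m = n_planets + 1.
    """
    d, m = detection_prob, n_planets + 1
    norm = 1 - (1 - d) ** m                 # denominator of the posterior CDF
    # Solve CDF(x) = credibility for x.
    return (1 - (1 - credibility * norm) ** (1 / m)) / d

for d in (1.0, 0.8, 0.5):
    print(f"detection probability {d:.1f}: f < {upper_limit(40, d):.3f} after 40 null results")
```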

Asking the right questions

"It's not just about how many planets we observe – it's about asking the right questions and how confident we can be in seeing or not seeing what we're searching for," says Angerhausen. "If we're not careful and are overconfident in our abilities to identify life, even a large survey could lead to misleading results."

Such considerations are highly relevant to upcoming missions such as the international Large Interferometer for Exoplanets (LIFE) mission led by ETH Zurich. The goal of LIFE is to probe dozens of exoplanets similar in mass, radius, and temperature to Earth by studying their atmospheres for signs of water, oxygen, and even more complex biosignatures. According to Angerhausen and collaborators, the good news is that the planned number of observations will be large enough to draw significant conclusions about the prevalence of life in Earth's galactic neighbourhood.

Still, the study stresses that even advanced instruments require careful accounting and quantification of uncertainties and biases to ensure that outcomes are statistically meaningful. To address sample uncertainty, for instance, the authors point out that specific and measurable questions such as, "Which fraction of rocky planets in a solar system's habitable zone show clear signs of water vapor, oxygen, and methane?" are preferable to the far more ambiguous, "How many planets have life?"

The influence of previous knowledge

Angerhausen and colleagues also studied how assumed previous knowledge – called a prior in Bayesian statistics – about given observation variables will affect the results of future surveys. For this purpose, they compared the outcomes of the Bayesian framework with those given by a different method, known as the Frequentist approach, which does not feature priors. For the kind of sample size targeted by missions like LIFE, the influence of chosen priors on the results of the Bayesian analysis is found to be limited and, in this scenario, the two frameworks yield comparable results.
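
The comparison between priors and the Frequentist approach can be sketched in the same toy zero-detection setting. The sketch uses Beta(1, b) priors because their posteriors have closed-form quantiles. For the Frequentist counterpart it uses the exact one-sided binomial (Clopper-Pearson) bound. The prior family and sample sizes are illustrative assumptions. With few planets the answers depend noticeably on b, while at 40 to 80 planets they converge, which mirrors the qualitative point above.

```python
def bayes_upper(n, b, credibility=0.95):
    """Upper credible limit after n null results with a Beta(1, b) prior on f.

    The posterior is Beta(1, b + n), whose CDF is 1 - (1 - f) ** (b + n).
    b < 1 tilts the prior toward common life, b > 1 toward rare life.
    """
    return 1 - (1 - credibility) ** (1 / (b + n))

def frequentist_upper(n, alpha=0.05):
    """One-sided exact binomial (Clopper-Pearson) upper bound for 0 successes in n:
    the largest f for which seeing no detections still has probability >= alpha."""
    return 1 - alpha ** (1 / n)

for n in (5, 40, 80):
    priors = ", ".join(f"b={b}: {bayes_upper(n, b):.3f}" for b in (0.5, 1, 5))
    print(f"n={n:>2}  Bayesian [{priors}]  Frequentist: {frequentist_upper(n):.3f}")
```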

"In applied science, Bayesian and Frequentist statistics are sometimes interpreted as two competing schools of thought. As a statistician, I like to treat them as alternative and complementary ways to understand the world and interpret probabilities," says co-author Emily Garvin, who's currently a PhD student in Quanz' group. Garvin focussed on the Frequentist analysis that helped to corroborate the team's results and to verify their approach and assumptions. "Slight variations in a survey's scientific goals may require different statistical methods to provide a reliable and precise answer," notes Garvin. "We wanted to show how distinct approaches provide a complementary understanding of the same dataset, and in this way present a roadmap for adopting different frameworks."

Finding signs of life could change everything

This work shows why it's so important to formulate the right research questions, to choose the appropriate methodology and to implement careful sampling designs for a reliable statistical interpretation of a study's outcome. "A single positive detection would change everything," says Angerhausen, "but even if we don't find life, we'll be able to quantify how rare – or common – planets with detectable biosignatures really might be."

IMAGE: The first Earth-size planet orbiting a star in the “habitable zone” — the range of distance from a star where liquid water might pool on the surface of an orbiting planet. Credit NASA Ames/SETI Institute/JPL-Caltech

The Philosophy of Statistics

The philosophy of statistics explores the foundational, conceptual, and epistemological questions surrounding the practice of statistical reasoning, inference, and data interpretation. It deals with how we gather, analyze, and draw conclusions from data, and it addresses the assumptions and methods that underlie statistical procedures. Philosophers of statistics examine issues related to probability, uncertainty, and how statistical findings relate to knowledge and reality.

Key Concepts:

Probability and Statistics:

Frequentist Approach: In frequentist statistics, probability is interpreted as the long-run frequency of events. It is concerned with making predictions based on repeated trials and often uses hypothesis testing (e.g., p-values) to make inferences about populations from samples.

Bayesian Approach: Bayesian statistics, on the other hand, interprets probability as a measure of belief or degree of certainty in an event, which can be updated as new evidence is obtained. Bayesian inference incorporates prior knowledge or assumptions into the analysis and updates it with data.
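
The contrast between the two interpretations can be sketched with a coin-tossing example. The frequentist estimate is the observed relative frequency, while the Bayesian answer depends on a prior that the data update. The data and both priors are hypothetical. A Beta prior is used because it updates in closed form for binomial data.

```python
from fractions import Fraction

# Hypothetical data: 7 heads in 10 tosses of a coin.
heads, tosses = 7, 10

# Frequentist point estimate: the observed relative frequency (maximum likelihood).
freq_estimate = Fraction(heads, tosses)

def beta_update(a, b, successes, trials):
    """Conjugate Bayesian update: Beta(a, b) prior -> Beta(a + s, b + trials - s)."""
    return a + successes, b + trials - successes

# Two hypothetical priors: a flat Beta(1, 1) and a strong "the coin is fair" Beta(50, 50).
for label, (a, b) in {"flat": (1, 1), "fair-coin": (50, 50)}.items():
    a_post, b_post = beta_update(a, b, heads, tosses)
    post_mean = Fraction(a_post, a_post + b_post)   # posterior mean of P(heads)
    print(f"{label:>9} prior -> posterior mean {float(post_mean):.3f}")

print(f"frequentist estimate {float(freq_estimate):.3f}")
```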

Objectivity vs. Subjectivity:

Objective Statistics: Objectivity in statistics is the idea that statistical methods should produce results that are independent of the individual researcher’s beliefs or biases. Frequentist methods are often considered more objective because they rely on observed data without incorporating subjective priors.

Subjective Probability: In contrast, Bayesian statistics incorporates subjective elements through prior probabilities, meaning that different researchers can arrive at different conclusions depending on their prior beliefs. This raises questions about the role of subjectivity in science and how it affects the interpretation of statistical results.

Inference and Induction:

Statistical Inference: Philosophers of statistics examine how statistical methods allow us to draw inferences from data about broader populations or phenomena. The problem of induction, famously posed by David Hume, applies here: How can we justify making generalizations about the future or the unknown based on limited observations?

Hypothesis Testing: Frequentist methods of hypothesis testing (e.g., null hypothesis significance testing) raise philosophical questions about what it means to "reject" or "fail to reject" a hypothesis. Critics argue that p-values are often misunderstood and can lead to flawed inferences about the truth of scientific claims.
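
What a p-value does and does not measure can be sketched with an exact one-sided binomial test. The coin data are hypothetical. The number computed is the probability, assuming the null hypothesis is true, of a result at least as extreme as the one observed. It is not the probability that the null hypothesis is true.

```python
from math import comb

def binomial_p_value(k, n, p0=0.5):
    """One-sided exact p-value: P(X >= k) for X ~ Binomial(n, p0) under H0.

    This is P(data at least this extreme | H0), not P(H0 | data).
    """
    return sum(comb(n, i) * p0**i * (1 - p0)**(n - i) for i in range(k, n + 1))

# Hypothetical data: 15 heads in 20 tosses; H0: the coin is fair (p0 = 0.5).
p = binomial_p_value(15, 20)
print(f"p = {p:.4f}")
```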

Uncertainty and Risk:

Epistemic vs. Aleatory Uncertainty: Epistemic uncertainty refers to uncertainty due to lack of knowledge, while aleatory uncertainty refers to inherent randomness in the system. Philosophers of statistics explore how these different types of uncertainty influence decision-making and inference.

Risk and Decision Theory: Statistical analysis often informs decision-making under uncertainty, particularly in fields like economics, medicine, and public policy. Philosophical questions arise about how to weigh evidence, manage risk, and make decisions when outcomes are uncertain.

Causality vs. Correlation:

Causal Inference: One of the most important issues in the philosophy of statistics is the relationship between correlation and causality. While statistics can show correlations between variables, establishing a causal relationship often requires additional assumptions and methods, such as randomized controlled trials or causal models.

Causal Models and Counterfactuals: Philosophers like Judea Pearl have developed causal inference frameworks that use counterfactual reasoning to better understand causation in statistical data. These methods help to clarify when and how statistical models can imply causal relationships, moving beyond mere correlations.

The Role of Models:

Modeling Assumptions: Statistical models, such as regression models or probability distributions, are based on assumptions about the data-generating process. Philosophers of statistics question the validity and reliability of these assumptions, particularly when they are idealized or simplified versions of real-world processes.

Overfitting and Generalization: Statistical models can sometimes "overfit" data, meaning they capture noise or random fluctuations rather than the underlying trend. Philosophical discussions around overfitting examine the balance between model complexity and generalizability, as well as the limits of statistical models in capturing reality.

Data and Representation:

Data Interpretation: Data is often considered the cornerstone of statistical analysis, but philosophers of statistics explore the nature of data itself. How is data selected, processed, and represented? How do choices about measurement, sampling, and categorization affect the conclusions drawn from data?

Big Data and Ethics: The rise of big data has led to new ethical and philosophical challenges in statistics. Issues such as privacy, consent, bias in algorithms, and the use of data in decision-making are central to contemporary discussions about the limits and responsibilities of statistical analysis.

Statistical Significance:

p-Values and Significance: The interpretation of p-values and statistical significance has long been debated. Many argue that the overreliance on p-values can lead to misunderstandings about the strength of evidence, and the replication crisis in science has highlighted the limitations of using p-values as the sole measure of statistical validity.

Replication Crisis: The replication crisis in psychology and other sciences has raised concerns about the reliability of statistical methods. Philosophers of statistics are interested in how statistical significance and reproducibility relate to the notion of scientific truth and the accumulation of knowledge.
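
One mechanism often cited in criticisms of p-value reliance can be sketched by simulation. When there is no real effect, about 5% of studies still reach p < 0.05, and running many tests makes at least one "significant" result likely. The sketch assumes a two-sided z-test with known standard deviation. The sample size, number of studies, and seed are arbitrary choices.

```python
import random
from statistics import NormalDist, fmean

random.seed(1)
std_normal = NormalDist()

def null_study(n=30):
    """Simulate one study where H0 is true: data ~ N(0, 1), two-sided z-test of mean = 0."""
    sample = [random.gauss(0, 1) for _ in range(n)]
    z = fmean(sample) * n ** 0.5          # known sd = 1, so SE = 1 / sqrt(n)
    return 2 * (1 - std_normal.cdf(abs(z)))

p_values = [null_study() for _ in range(2000)]
false_positive_rate = sum(p < 0.05 for p in p_values) / len(p_values)
print(f"'significant' results with no real effect: {false_positive_rate:.1%}")

# Testing 20 independent true null hypotheses: chance that at least one has p < 0.05.
print(f"P(at least one p < 0.05 in 20 tests) = {1 - 0.95 ** 20:.2f}")
```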

Philosophical Debates:

Frequentism vs. Bayesianism:

Frequentist and Bayesian approaches to statistics represent two fundamentally different views on the nature of probability. Philosophers debate which approach provides a better framework for understanding and interpreting statistical evidence. Frequentists argue for the objectivity of long-run frequencies, while Bayesians emphasize the flexibility and adaptability of probabilistic reasoning based on prior knowledge.

Realism and Anti-Realism in Statistics:

Is there a "true" probability or statistical model underlying real-world phenomena, or are statistical models simply useful tools for organizing our observations? Philosophers debate whether statistical models correspond to objective features of reality (realism) or are constructs that depend on human interpretation and conventions (anti-realism).

Probability and Rationality:

The relationship between probability and rational decision-making is a key issue in both statistics and philosophy. Bayesian decision theory, for instance, uses probabilities to model rational belief updating and decision-making under uncertainty. Philosophers explore how these formal models relate to human reasoning, especially when dealing with complex or ambiguous situations.

Philosophy of Machine Learning:

Machine learning and AI have introduced new statistical methods for pattern recognition and prediction. Philosophers of statistics are increasingly focused on the interpretability, reliability, and fairness of machine learning algorithms, as well as the role of statistical inference in automated decision-making systems.

The philosophy of statistics addresses fundamental questions about probability, uncertainty, inference, and the nature of data. It explores how statistical methods relate to broader epistemological issues, such as the nature of scientific knowledge, objectivity, and causality. Frequentist and Bayesian approaches offer contrasting perspectives on probability and inference, while debates about the role of models, data representation, and statistical significance continue to shape the field. The rise of big data and machine learning has introduced new challenges, prompting philosophical inquiry into the ethical and practical limits of statistical reasoning.

Enterprises Explore These Advanced Analytics Use Cases

Businesses want to use data-driven strategies, and advanced analytics solutions optimized for enterprise use cases make this possible. Analytical technology has come a long way, with new capabilities ranging from descriptive text analysis to big data. This post will describe different use cases for advanced enterprise analytics.

What is Advanced Enterprise Analytics?

Advanced enterprise analytics includes scalable statistical modeling tools that utilize multiple computing technologies to help multinational corporations extract insights from vast datasets. Professional data analytics services offer enterprises industry-relevant advanced analytics solutions.

Modern descriptive and diagnostic analytics can revolutionize how companies leverage their historical performance intelligence. Likewise, predictive and prescriptive analytics allow enterprises to prepare for future challenges.

Conventional analysis methods had a limited scope and prioritized structured data processing. By contrast, many advanced analytics tools can quickly identify valuable trends in unstructured datasets. Global business firms can therefore use advanced analytics solutions to process qualitative consumer reviews and brand-related social media coverage.

Use Cases of Advanced Enterprise Analytics

1| Big Data Analytics

Modern analytical technologies can take advantage of the latest hardware developments in cloud computing and virtualization. In addition, data lakes and warehouses have become more common, increasing corporations' ability to gather data from multiple sources.

Big data refers to continuously growing volumes of data of mixed types, which can include audio, video, images, and specialized file formats. This variety makes it difficult for conventional data analytics services to extract insights for enterprise use cases, highlighting the importance of advanced analytics solutions.

Advanced analytical techniques process big data efficiently. In addition, minimizing energy consumption and maintaining system stability during continuous data aggregation are two significant advantages of using advanced big data analytics.

2| Financial Forecasting

Enterprises can raise funds using several financial instruments, but revenue remains vital to profit estimation. Corporate leadership therefore closely tracks changes in cash flow across business quarters, because reliable financial forecasting enables informed decisions about departmental budget allocation.

The variables impacting your financial forecasting models include changes in government policies, international treaties, consumer interests, investor sentiment, and the cost of running different business activities. Businesses need industry-relevant tools to estimate these variables reliably.

Multivariate financial modeling is one enterprise-level example of an advanced analytics use case. Corporations can also automate some components of economic feasibility modeling to shorten data processing and generate financial performance documents quickly.

3| Customer Sentiment Analysis

Customers’ emotions influence their purchasing habits and brand perception. Customer sentiment analysis therefore infers customers’ feelings and attitudes to help you improve your marketing materials and sales strategy. Data analytics services also provide enterprises with the tools necessary for customer sentiment analysis.

Advanced sentiment analytics solutions can evaluate descriptive consumer responses gathered during customer service interactions and market research studies, so you can classify sentiment as positive, negative, or neutral using qualitative data.

Negative sentiments often originate from poor customer service, product deficiencies, and consumer discomfort in using the products or services. Corporations must modify their offerings to minimize negative opinions. Doing so helps them decrease customer churn.
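
The positive/negative/neutral classification can be sketched with a toy lexicon-based scorer. The word lists are hypothetical and far too small for real use. The approach also ignores negation ("not great" scores as positive). Production systems typically use much larger lexicons or trained models.

```python
import re

# Hypothetical, tiny sentiment lexicon (illustration only).
POSITIVE = {"great", "love", "fast", "helpful", "excellent"}
NEGATIVE = {"slow", "broken", "rude", "refund", "disappointed"}

def sentiment(text):
    """Label a review positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

reviews = [
    "Great product, fast delivery, I love it",
    "Support was rude and the item arrived broken",
    "It does what the description says",
]
for review in reviews:
    print(f"{sentiment(review):>8}: {review}")
```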

4| Productivity Optimization

Factory equipment requires a reasonable maintenance schedule to keep machines operating efficiently. Similarly, companies must offer recreation opportunities, holidays, and special-purpose leave to protect employees’ psychological well-being and physical health.

However, these activities affect a company’s productivity. Enterprise analytics solutions provide advanced scheduling tools and human resource intelligence to help you determine optimal maintenance requirements. They also include productivity optimization tools for business process innovation.

Advanced analytics can help identify inefficient organizational practices and replace them with more effective workflows. For example, consider how outdated computing hardware or employee skill gaps affect your enterprise’s productivity; analytics lets you optimize these aspects of the business.

5| Enterprise Risk Management

Risk management includes identifying, quantifying, and mitigating internal or external corporate risks to increase an organization’s resilience against market fluctuations and legal changes. Improved risk assessment is also among the most widely implemented use cases of advanced enterprise analytics solutions.

Internal risks stem from human error, software incompatibilities, production issues, leadership accountability, and skill development. Poor team coordination in multidisciplinary projects is one example of an internal risk.

External risks result from regulatory changes to the laws, guidelines, and frameworks that govern you and your suppliers. For example, changes in tax regulations or import-export tariffs might not affect you directly, but your suppliers might raise their prices, which ultimately affects you.

Data analytics services include advanced risk evaluations to help enterprises and investors understand how new market trends or policies affect their business activities.

Conclusion

Enterprise analytics has many use cases in which data improves management’s understanding of supply chain risks, consumer preferences, cost optimization, and employee productivity. Advanced analytics solutions also help corporate clients with financial forecasting.

Newer advanced analytics solutions can also process mixed data types, including unstructured datasets. You can also automate insight extraction from the qualitative consumer responses collected in market research surveys.

While modern analytical modeling benefits enterprises in financial planning and business strategy, the reliability of its insights depends on data quality, and different data sources vary in authority. Therefore, you need experienced professionals who know how to ensure data integrity.

A leader in data analytics services, SG Analytics, empowers enterprises to optimize their business practices and acquire detailed industry insights using cutting-edge technologies. Contact us today to implement scalable data management modules to increase your competitive strength.

Key Concepts in Frozen Shoulder Clinical Trials

When exploring and understanding Frozen Shoulder Syndrome (FSS) clinical trials, several key concepts are important to consider. These concepts provide insights into the design, implementation, and outcomes of clinical trials related to FSS.

Here are some key concepts:

Study Phases:

Clinical trials are often conducted in phases, with each phase serving a specific purpose. Phase 1 focuses on safety, Phase 2 on efficacy, Phase 3 on effectiveness and safety in a larger population, and Phase 4 involves post-marketing surveillance. Understanding the phase of a trial helps assess its developmental stage.

Intervention Types:

Trials may involve different types of interventions, such as medications, physical therapies, surgical procedures, or a combination of these. Understanding the nature of the intervention is crucial to evaluating its potential effectiveness for treating FSS.

Randomized Controlled Trials (RCTs):

RCTs are considered the gold standard in clinical research. In these trials, participants are randomly assigned to different groups, with one group receiving the experimental treatment and another serving as a control. RCTs help establish causation and reduce bias.
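Random assignment can be sketched in a few lines. This minimal Python example assumes simple 1:1 allocation of a list of hypothetical participant IDs; the randomize function is illustrative, and real trials often use blocked or stratified randomization schemes prepared independently of the investigators.

```python
import random


def randomize(participants, seed=None):
    """Randomly assign participants to 'treatment' or 'control' in (near-)equal numbers.

    Simple 1:1 allocation by shuffling; with an odd count, control gets one extra.
    """
    rng = random.Random(seed)  # seeded so the allocation can be reproduced and audited
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    allocation = {pid: "treatment" for pid in shuffled[:half]}
    allocation.update({pid: "control" for pid in shuffled[half:]})
    return allocation


if __name__ == "__main__":
    ids = [f"P{n:03d}" for n in range(1, 11)]  # hypothetical participant IDs
    for pid, arm in sorted(randomize(ids, seed=42).items()):
        print(pid, arm)
```

Fixing the seed makes the allocation reproducible for auditing; in practice the allocation list is also concealed from the staff who enroll participants.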

Placebo-Controlled Trials:

Some trials involve a placebo group, where participants receive an inactive substance. This helps researchers assess the true impact of the intervention by comparing it to a group that does not receive the treatment.

Blinding:

Trials may be single-blind (participants are unaware of their treatment group) or double-blind (both participants and researchers are unaware). Blinding helps minimize bias in reporting and assessing outcomes.

Inclusion and Exclusion Criteria:

Trials have specific criteria for participant eligibility. Inclusion criteria define characteristics participants must have, and exclusion criteria specify factors that disqualify individuals. Understanding these criteria is essential for determining eligibility.

Primary and Secondary Endpoints:

Primary endpoints are the main outcomes the trial aims to measure, often related to efficacy. Secondary endpoints provide additional information. These endpoints guide the assessment of the intervention's impact.

Safety Monitoring:

Trials include safety monitoring to identify and assess adverse events. Robust safety measures are essential to ensure participant well-being.

Patient-Reported Outcomes (PROs):

PROs capture data directly from participants about their health status and treatment experiences. They can provide insights into the impact of FSS and the effectiveness of interventions from the patient's perspective.

Crossover Design:

Some trials use a crossover design, in which participants switch from one treatment to another during the study. Because each participant serves as their own control, this design helps control for individual variability.

Statistical Significance:

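Statistical analysis is crucial for determining whether observed differences between groups are likely due to the intervention or occurred by chance. Results are conventionally considered statistically significant when the p-value (the probability of seeing a difference at least as large as the one observed if the intervention had no real effect) falls below a pre-specified threshold, commonly 0.05.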
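A permutation test is one intuitive way to see what a p-value measures: shuffle the group labels many times and count how often chance alone produces a difference as large as the one observed. This is a minimal sketch with invented outcome scores and a hypothetical permutation_p_value function, not the analysis any particular FSS trial uses; trials pre-specify their statistical methods in the protocol.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign outcomes to groups at random
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate from ever being exactly zero.
    return (extreme + 1) / (n_permutations + 1)


if __name__ == "__main__":
    treatment = [4.1, 3.8, 5.0, 4.6, 4.4]  # hypothetical improvement scores
    control = [2.0, 2.9, 2.4, 3.1, 2.2]
    print(f"p = {permutation_p_value(treatment, control):.4f}")
```

With five clearly separated values per group, the estimate is small (close to 2 in 252, the share of label splits as extreme as the observed one); with identical groups it is 1.0.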

Informed Consent:

Informed consent is a fundamental ethical requirement. Participants must be fully informed about the trial's objectives, procedures, potential risks, and benefits before providing their consent to participate.

Understanding these key concepts will enhance your ability to critically evaluate FSS clinical trials and interpret their findings. Always consult with healthcare professionals and researchers for personalized advice and insights.

2 notes

·

View notes

Text

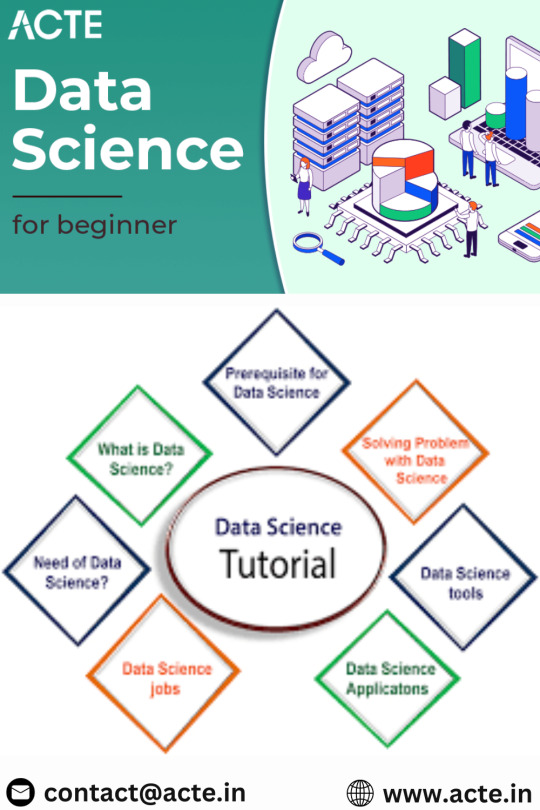

Cracking the Code: A Beginner's Roadmap to Mastering Data Science

Embarking on the journey into data science as a complete novice is an exciting venture. While the world of data science may seem daunting at first, breaking down the learning process into manageable steps can make the endeavor both enjoyable and rewarding. Choosing the best Data Science Institute can further accelerate your journey into this thriving industry.

In this comprehensive guide, we'll outline a roadmap for beginners to get started with data science, from understanding the basics to building a portfolio of projects.

1. Understanding the Basics: Laying the Foundation

The journey begins with a solid understanding of the fundamentals of data science. Start by familiarizing yourself with key concepts such as data types, variables, and basic statistics. Platforms like Khan Academy, Coursera, and edX offer introductory courses in statistics and data science, providing a solid foundation for your learning journey.

2. Learn Programming Languages: The Language of Data Science

Programming is a crucial skill in data science, and Python is one of the most widely used languages in the field. Platforms like Codecademy, DataCamp, and freeCodeCamp offer interactive lessons and projects to help beginners get hands-on experience with Python. Additionally, learning R, another popular language in data science, can broaden your skill set.

3. Explore Data Visualization: Bringing Data to Life

Data visualization is a powerful tool for understanding and communicating data. Explore tools like Tableau for creating interactive visualizations or dive into Python libraries like Matplotlib and Seaborn. Understanding how to present data visually enhances your ability to derive insights and convey information effectively.

4. Master Data Manipulation: Unlocking Data's Potential

Data manipulation is a fundamental aspect of data science. Learn how to manipulate and analyze data using libraries like Pandas in Python. The official Pandas website provides tutorials and documentation to guide you through the basics of data manipulation, a skill that is essential for any data scientist.

5. Delve into Machine Learning Basics: The Heart of Data Science

Machine learning is a core component of data science. Start exploring the fundamentals of machine learning on platforms like Kaggle, which offers beginner-friendly datasets and competitions. Participating in Kaggle competitions allows you to apply your knowledge, learn from others, and gain practical experience in machine learning.

6. Take Online Courses: Structured Learning Paths

Enroll in online courses that provide structured learning paths in data science. Platforms like Coursera (e.g., "Applied Data Science with Python"), edX (e.g., HarvardX's "Data Science Professional Certificate"), and Udemy (e.g., "Data Science and Machine Learning Bootcamp with R") offer comprehensive courses taught by experts in the field.

7. Read Books and Blogs: Supplementing Your Knowledge

Books and blogs can provide additional insights and practical tips. "Python for Data Analysis" by Wes McKinney is a highly recommended book, and blogs like Towards Data Science on Medium offer a wealth of articles covering various data science topics. These resources can deepen your understanding and offer different perspectives on the subject.

8. Join Online Communities: Learning Through Connection

Engage with the data science community by joining online platforms like Stack Overflow, Reddit (e.g., r/datascience), and LinkedIn. Participate in discussions, ask questions, and learn from the experiences of others. Being part of a community provides valuable support and insights.

9. Work on Real Projects: Applying Your Skills

Apply your skills by working on real-world projects. Identify a problem or area of interest, find a dataset, and start working on analysis and predictions. Whether it's predicting housing prices, analyzing social media sentiment, or exploring healthcare data, hands-on projects are crucial for developing practical skills.

10. Attend Webinars and Conferences: Staying Updated

Stay updated on the latest trends and advancements in data science by attending webinars and conferences. Platforms like Data Science Central and conferences like the Data Science Conference provide opportunities to learn from experts, discover new technologies, and connect with the wider data science community.

11. Build a Portfolio: Showcasing Your Journey

Create a portfolio showcasing your projects and skills. This can be a GitHub repository or a personal website where you document and present your work. A portfolio is a powerful tool for demonstrating your capabilities to potential employers and collaborators.

12. Practice Regularly: The Path to Mastery

Consistent practice is key to mastering data science. Dedicate regular time to coding, explore new datasets, and challenge yourself with increasingly complex projects. As you progress, you'll find that your skills evolve, and you become more confident in tackling advanced data science challenges.

Embarking on the path of data science as a beginner may seem like a formidable task, but with the right resources and a structured approach, it becomes an exciting and achievable endeavor. From understanding the basics to building a portfolio of real-world projects, each step contributes to your growth as a data scientist. Embrace the learning process, stay curious, and celebrate the milestones along the way. The world of data science is vast and dynamic, and your journey is just beginning. Choosing the best Data Science courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.

Common Pitfalls in Research Reliability and How to Avoid Them

Reliability is a cornerstone of quality research. It refers to the consistency and dependability of results over time, across researchers, and under different conditions. When research is reliable, repeating it would yield similar outcomes. However, many researchers, especially students and early-career professionals, make common errors in research that can compromise reliability, leading to questionable results and reduced credibility.

In this blog, we will explore the most common errors that affect research reliability, clarify the distinction between validity and reliability, and provide practical strategies to enhance the reliability of your academic or professional research.

Understanding Reliability in Research

Reliability in research is about consistency. A reliable study produces stable and consistent results that can be replicated under the same conditions. There are different types of reliability:

Test-Retest Reliability: Consistency of results when the same test is repeated over time.

Inter-Rater Reliability: Agreement among different observers or raters.

Internal Consistency: Consistency of results across items within a test.

Parallel-Forms Reliability: Consistency between different versions of a test measuring the same concept.

Maintaining reliability ensures that your data are dependable and that your findings can be trusted by others.
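Inter-rater reliability is commonly quantified with Cohen's kappa, which corrects the raw agreement rate p_o for the agreement p_e two raters would reach by chance: kappa = (p_o - p_e) / (1 - p_e). A minimal Python sketch for two raters; the cohens_kappa function name and the ratings are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters independently pick the same category.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    rater_a = ["yes", "yes", "no", "no", "yes", "no"]  # hypothetical ratings
    rater_b = ["yes", "no", "no", "no", "yes", "no"]
    print(f"kappa = {cohens_kappa(rater_a, rater_b):.2f}")
```

For these six ratings the raters agree on 5 of 6 items (p_o ≈ 0.83) while chance agreement is 0.5, giving a kappa of about 0.67.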

Common Pitfalls That Compromise Research Reliability

1. Inadequate Sample Size

A small or unrepresentative sample can produce results that aren't generalizable. Small samples are more prone to anomalies and can lead to fluctuating results.

How to avoid: Use appropriate sampling techniques and ensure your sample is large enough to support statistical analysis. A power analysis can help determine an adequate size.
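A power analysis for comparing two group means can be approximated with the normal-distribution formula n = 2(z_(1-α/2) + z_(1-β))² / d², where d is the standardized effect size. This sketch implements that approximation with Python's standard library; the function name is hypothetical, and dedicated power-analysis software should be preferred for a real study.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group to compare two means.

    Normal approximation: n = 2 * (z_(1 - alpha/2) + z_power)^2 / d^2,
    where d is the standardized effect size (Cohen's d).
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_power = z.inv_cdf(power)
    return ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


if __name__ == "__main__":
    for d in (0.2, 0.5, 0.8):  # conventional small, medium, large effects
        print(f"d = {d}: {sample_size_per_group(d)} per group")
```

For a medium effect (d = 0.5), α = 0.05, and 80% power, it returns 63 participants per group; an exact t-test calculation gives 64 because it accounts for estimating the standard deviation from the sample.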

2. Poor Instrument Design

If your survey or measurement tools are unclear, biased, or inconsistent, your data will reflect these weaknesses.

How to avoid: Pilot your instruments and seek peer feedback. Revise unclear or ambiguous questions, and ensure scales are standardized.

3. Lack of Standardized Procedures

When researchers or participants don't follow the same procedures, results become inconsistent.

How to avoid: Create a detailed protocol or procedure manual. Train all researchers and ensure that participants are given the same instructions every time.

4. Observer Bias

Subjectivity from researchers or raters can skew data, especially in qualitative or observational studies.

How to avoid: Use blind assessments where possible, and establish clear criteria for judgments. Training raters can also help standardize observations.

5. Environmental Variability

Changes in setting, timing, or conditions during data collection can affect the outcome.

How to avoid: Control environmental factors as much as possible. Collect data at the same time of day and under similar conditions.

6. Improper Data Handling

Inconsistent or incorrect data entry, coding errors, or flawed analysis can all impact the reliability of findings.

How to avoid: Double-check data entry, use reliable software, and consider having a second analyst verify results.

7. Fatigue and Participant Effects

Participants may become tired, distracted, or uninterested, leading to unreliable responses, especially in long studies.

How to avoid: Keep sessions concise, include breaks if necessary, and monitor engagement levels.

Validity vs. Reliability: What's the Difference?

These two concepts are often confused but are distinct in meaning and purpose.

Reliability: Refers to consistency of results. A reliable test yields the same results under consistent conditions.

Validity: Refers to the accuracy of the test. A valid test measures what it claims to measure.

A research tool can be reliable but not valid. For example, a bathroom scale that always shows you are 5 kg heavier is reliable (consistent results) but not valid (inaccurate measurement).

Key Point: Reliability is necessary but not sufficient for validity. If a measurement is not consistent, it cannot be valid, but a consistent measurement can still be wrong.

Strategies to Improve Research Reliability

Pretest Your Instruments: Run a pilot study to identify issues with your survey, test, or observation protocol.

Use Established Tools: Where possible, use instruments with proven reliability from previous research.

Train Your Team: Ensure all researchers or raters follow the same methods and understand the study's goals.

Document Procedures Clearly: A detailed methodology ensures consistency and allows replication.

Standardize Data Collection: Maintain uniform conditions and follow strict protocols during data gathering.

Use Statistical Tests: Apply reliability statistics like Cronbach’s alpha or inter-rater reliability coefficients to test consistency.
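Cronbach's alpha compares the variance of individual items with the variance of respondents' total scores: α = k / (k - 1) × (1 - Σ item variances / total-score variance), where k is the number of items. A minimal Python sketch; the cronbach_alpha function name and the questionnaire scores are invented for illustration.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for internal consistency.

    items: one list of scores per questionnaire item, each covering the
    same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / total_var)


if __name__ == "__main__":
    survey = [  # hypothetical 1-5 ratings: 3 items x 5 respondents
        [4, 3, 5, 2, 4],
        [4, 2, 5, 3, 4],
        [3, 3, 4, 2, 5],
    ]
    print(f"alpha = {cronbach_alpha(survey):.2f}")
```

The example data give α = 27/31 ≈ 0.87. A common rule of thumb treats values of 0.7 or higher as acceptable, though the appropriate threshold depends on the purpose of the instrument.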

Seek Peer Review: Having others evaluate your methods can highlight weaknesses you might miss.

Conclusion

Ensuring research reliability is not just a technical requirement—it's a fundamental aspect of producing trustworthy and impactful work. By avoiding common pitfalls and understanding how reliability differs from validity, you can significantly enhance the quality of your research.

Whether you are conducting academic studies, writing a dissertation, or working on professional projects, making reliability a priority will strengthen your findings and bolster your reputation as a credible researcher.

Remember, reliable research is replicable, respected, and results in real-world impact.

Need help improving your research design or data collection methods? Connect with our academic experts for tailored guidance and support.

What You’ll Learn in a Data Analyst Course in Noida: A Complete Syllabus Breakdown

If you are thinking about starting a career in data analytics, you’re making a great decision. Companies today use data to make better decisions, improve services, and grow their businesses. That’s why the demand for data analysts is growing quickly. But to become a successful data analyst, you need the right training.

In this article, we will give you a complete breakdown of what you’ll learn in a Data Analyst Course in Noida, especially if you choose to study at Uncodemy, one of the top training institutes in India.

Let’s dive in and explore everything step-by-step.

Why Choose a Data Analyst Course in Noida?

Noida has become a tech hub in India, with many IT companies, startups, and MNCs. As a result, it offers great job opportunities for data analysts. Whether you are a fresher or a working professional looking to switch careers, Noida is the right place to start your journey.

Uncodemy, located in Noida, provides industry-level training that helps you learn not just theory but also practical skills. Their course is designed by experts and is updated regularly to match real-world demands.

Overview of Uncodemy’s Data Analyst Course

The Data Analyst course at Uncodemy is beginner-friendly. You don’t need to be a coder or tech expert to start. The course starts from the basics and goes step-by-step to advanced topics. It includes live projects, assignments, mock interviews, and job placement support.

Here’s a detailed syllabus breakdown to help you understand what you will learn.

1. Introduction to Data Analytics

In this first module, you will learn:

What is data analytics?

Why is it important?

Different types of analytics (Descriptive, Diagnostic, Predictive, Prescriptive)

Real-world applications of data analytics

Role of a data analyst in a company

This module sets the foundation and gives you a clear idea of what the field is about.

2. Excel for Data Analysis

Microsoft Excel is one of the most used tools for data analysis. In this module, you’ll learn:

Basics of Excel (formulas, formatting, functions)

Data cleaning and preparation

Creating charts and graphs

Using pivot tables and pivot charts

Lookup functions (VLOOKUP, HLOOKUP, INDEX, MATCH)

Conditional formatting

Data validation

After this module, you will be able to handle small and medium data sets easily using Excel.

3. Statistics and Probability Basics

Statistics is the heart of data analysis. At Uncodemy, you’ll learn:

What is statistics?

Mean, median, mode

Standard deviation and variance

Probability theory

Distribution types (normal, binomial, Poisson)

Correlation and regression

Hypothesis testing

You will learn how to recognize patterns in data and draw conclusions from it.
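Python's built-in statistics module covers most of the measures listed above. A short sketch using a small hypothetical dataset; the normal-distribution line assumes, purely for illustration, that scores follow a normal distribution with mean 5 and standard deviation 2.

```python
from statistics import NormalDist, mean, median, mode, pstdev, pvariance, stdev

scores = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical test scores

summary = {
    "mean": mean(scores),                      # arithmetic average
    "median": median(scores),                  # average of the two middle values for even n
    "mode": mode(scores),                      # most frequent value
    "population_variance": pvariance(scores),  # mean squared deviation (divides by n)
    "population_std_dev": pstdev(scores),      # square root of the population variance
    "sample_std_dev": stdev(scores),           # divides by n - 1 to estimate a population value
}

# Illustrative assumption: scores ~ Normal(mean=5, sd=2); probability of a score below 7.
prob_below_7 = NormalDist(mu=5, sigma=2).cdf(7)

if __name__ == "__main__":
    for name, value in summary.items():
        print(f"{name}: {value}")
    print(f"P(score < 7): {prob_below_7:.3f}")
```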

4. SQL for Data Analytics

SQL (Structured Query Language) is used to work with databases. You’ll learn:

What is a database?

Introduction to SQL

Writing basic SQL queries

Filtering and sorting data

Joins (INNER, LEFT, RIGHT, FULL)

Group By and aggregate functions

Subqueries and nested queries

Creating and updating tables

With these skills, you will be able to extract and analyze data from large databases.
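The same query patterns can be tried without installing a database server by using Python's built-in sqlite3 module and an in-memory database. The customers and orders tables below are hypothetical; SQLite, MySQL, and PostgreSQL differ in some details, but this query uses standard LEFT JOIN, GROUP BY, and aggregate syntax.

```python
import sqlite3


def orders_per_customer():
    """Count orders and total spend per customer, keeping customers with no orders."""
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    try:
        conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
        conn.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
        )
        conn.executemany(
            "INSERT INTO customers (id, name) VALUES (?, ?)",
            [(1, "Asha"), (2, "Ravi"), (3, "Meera")],
        )
        conn.executemany(
            "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
            [(1, 250.0), (1, 100.0), (2, 80.0)],
        )
        query = """
            SELECT c.name,
                   COUNT(o.id) AS n_orders,             -- COUNT ignores NULLs from unmatched rows
                   COALESCE(SUM(o.amount), 0) AS total  -- SUM over no rows is NULL, so default to 0
            FROM customers AS c
            LEFT JOIN orders AS o ON o.customer_id = c.id
            GROUP BY c.id, c.name
            ORDER BY total DESC
        """
        return conn.execute(query).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for row in orders_per_customer():
        print(row)
```

Meera appears with zero orders only because of the LEFT JOIN; an INNER JOIN would drop her row.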

5. Data Visualization with Power BI and Tableau

Data visualization is all about making data easy to understand using charts and dashboards. You’ll learn:

Power BI:

What is Power BI?

Connecting Power BI to Excel or SQL

Creating dashboards and reports

Using DAX functions

Sharing reports

Tableau:

Basics of Tableau interface

Connecting to data sources

Creating interactive dashboards

Filters, parameters, and calculated fields

Both tools are in high demand, and Uncodemy covers them in depth.

6. Python for Data Analysis

Python is a powerful programming language used in data analytics. In this module, you’ll learn:

Installing Python and using Jupyter Notebook

Python basics (variables, loops, functions, conditionals)

Working with data using Pandas

Data cleaning and transformation

Visualizing data using Matplotlib and Seaborn

Introduction to NumPy for numerical operations

Uncodemy makes coding easy to understand, even for beginners.

7. Exploratory Data Analysis (EDA)

Exploratory Data Analysis helps you find patterns, trends, and outliers in data. You’ll learn:

What is EDA?

Using Pandas and Seaborn for EDA

Handling missing and duplicate data

Outlier detection

Data transformation techniques

Feature engineering

This step is very important before building any model.
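The cleaning steps above can be sketched in plain Python: drop exact duplicates, fill missing values with the median, and flag outliers with the common 1.5 × IQR rule. The records and the clean_and_flag function are hypothetical; in practice these steps are usually done with Pandas (for example drop_duplicates and fillna).

```python
from statistics import median, quantiles


def clean_and_flag(records):
    """Deduplicate, impute missing values, and flag outliers in (id, value) records."""
    # 1. Drop exact duplicate records, keeping the first occurrence.
    seen, unique = set(), []
    for record in records:
        if record not in seen:
            seen.add(record)
            unique.append(record)

    # 2. Replace missing values (None) with the median of the observed values.
    observed = [value for _, value in unique if value is not None]
    fill = median(observed)
    filled = [(key, fill if value is None else value) for key, value in unique]

    # 3. Flag values outside the 1.5 * IQR fences as outliers.
    q1, _, q3 = quantiles([value for _, value in filled], n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [key for key, value in filled if value < low or value > high]
    return filled, outliers


if __name__ == "__main__":
    raw = [("a", 12), ("b", 15), ("b", 15), ("c", None),
           ("d", 14), ("e", 13), ("f", 95), ("g", 16)]
    cleaned, flagged = clean_and_flag(raw)
    print(cleaned)
    print("outliers:", flagged)
```

Quartile conventions differ between tools; statistics.quantiles uses its "exclusive" method by default, so Excel or Pandas may place the fences slightly differently.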

8. Introduction to Machine Learning (Optional but Included)

Even though it’s not required for every data analyst, Uncodemy gives you an introduction to machine learning:

What is machine learning?

Types of machine learning (Supervised, Unsupervised)

Algorithms like Linear Regression, K-Means Clustering

Using Scikit-learn for simple models

Evaluating model performance

This module helps you understand how data analysts work with data scientists.
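To show what a linear regression model computes, here is ordinary least squares for a single feature, written in plain Python rather than Scikit-learn so the arithmetic is visible. The house-size and price figures and the function names are invented; Scikit-learn's LinearRegression performs the equivalent fit for any number of features.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def r_squared(xs, ys, slope, intercept):
    """Share of the variance in ys explained by the fitted line (1.0 = perfect fit)."""
    y_bar = mean(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


if __name__ == "__main__":
    sizes = [50, 60, 70, 80, 90]          # hypothetical floor areas (m²)
    prices = [150, 175, 205, 230, 260]    # hypothetical prices (thousands)
    m, b = fit_line(sizes, prices)
    print(f"price ≈ {m:.2f} * size + {b:.2f}, R² = {r_squared(sizes, prices, m, b):.4f}")
```

An R² close to 1 means the line explains almost all of the variation in this toy data; on real data, performance should be measured on records the model was not trained on.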

9. Projects and Assignments

Real-world practice is key to becoming job-ready. Uncodemy provides:

Mini projects after each module

A final capstone project using real data

Assignments with detailed feedback

Projects based on industries like banking, e-commerce, healthcare, and retail

Working on projects helps you build confidence and create a strong portfolio.

10. Soft Skills and Resume Building

Along with technical skills, soft skills are also important. Uncodemy helps you with:

Communication skills

Resume writing

How to answer interview questions

LinkedIn profile optimization

Group discussions and presentation practice

These sessions prepare you to face real job interviews confidently.

11. Mock Interviews and Job Placement Assistance

Once you complete the course, Uncodemy offers:

Multiple mock interviews

Feedback from industry experts

Job referrals and placement support

Internship opportunities

Interview scheduling with top companies

Many Uncodemy students have landed jobs in top IT firms, MNCs, and startups.

Tools You’ll Learn in the Uncodemy Course

Throughout the course, you will gain hands-on experience in tools like:

Microsoft Excel

Power BI

Tableau

Python

Pandas, NumPy, Seaborn

SQL (MySQL, PostgreSQL)

Jupyter Notebook

Google Sheets

Scikit-learn (Basic ML)

All these tools are in high demand in the job market.

Who Can Join This Course?

The best part about the Data Analyst Course at Uncodemy is that anyone can join:

Students (B.Tech, B.Sc, B.Com, BBA, etc.)

Fresh graduates

Working professionals looking to switch careers

Business owners who want to understand data

Freelancers

You don’t need any prior experience in coding or data.

Course Duration and Flexibility

Course duration: 3 to 5 months

Modes: Online and offline

Class timings: Weekdays or weekends (flexible batches)

Support: 24/7 doubt support and live mentoring

Whether you’re a student or a working professional, Uncodemy provides flexible learning options.

Certifications You’ll Get

After completing the course, you will receive:

Data Analyst Course Completion Certificate

SQL Certificate

Python for Data Analysis Certificate

Power BI & Tableau Certification

Internship Letter (if applicable)

These certificates add great value to your resume and LinkedIn profile.

Final Thoughts

The job market for data analysts is booming, and now is the perfect time to start learning. If you’re in or near Noida, choosing the Data Analyst Course at Uncodemy can be your best career move.

You’ll learn everything from basics to advanced skills, work on live projects, and get support with job placement. The trainers are experienced, the syllabus is job-focused, and the learning environment is friendly and supportive.

Whether you’re just starting or planning a career switch, Uncodemy has the tools, training, and team to help you succeed.

Ready to start your journey as a data analyst? Join Uncodemy’s Data analyst course in Noida and unlock your future today!

6 Types of Data Analysis That Help Decision-Makers | MAE

There are different methods of data analysis used by companies today. Some are similar to each other, some are complementary. Below, we’ll focus on the six key ways of analysing data that we believe to be most frequently used in various software projects. Let’s take a look.

1. Exploratory Analysis

The goal of this type of analysis is to visually examine existing data and potentially find relationships between variables that may have been unknown or overlooked. It can be useful for discovering new connections to form a hypothesis for further testing.

With an exploratory analysis, you try to get a general view of the data you have on-hand and let it speak for itself. This approach looks at digital information to find relationships and inform you of their existence, but it does not establish causality. For that, you’d have to conduct further analytical procedures.

2. Inferential Analysis

This type of analysis is all about using a sample of digital information to make inferences about a larger population. It is a common statistical approach that is generally present across various types of data analysis, but is particularly relevant in predictive analytics. This is because it essentially allows you to make forecasts about the behaviour of a larger population.

Here, it’s important to remember that the chosen sampling technique dictates the accuracy of the inference. If you choose a sample that isn’t representative of the population, the resulting generalizations will be inaccurate.
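A common inferential step is estimating a population mean with a confidence interval. This sketch uses the normal approximation (mean ± z × standard error) with hypothetical data and a hypothetical function name; for small samples a t-distribution gives a somewhat wider, more appropriate interval. The calculation assumes a random, representative sample: a biased sample produces an interval that looks precise but is centred on the wrong value.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for a population mean."""
    n = len(sample)
    center = mean(sample)
    std_error = stdev(sample) / sqrt(n)             # spread of the sample mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return center - z * std_error, center + z * std_error


if __name__ == "__main__":
    basket_values = [42.0, 38.5, 51.2, 47.9, 44.3, 40.1, 49.6, 45.0]  # hypothetical sample
    low, high = mean_confidence_interval(basket_values)
    print(f"95% CI for the mean basket value: {low:.2f} to {high:.2f}")
```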

3. Descriptive Analysis

Now, we’ll be going into the terminology that is most widely used in the context of software development and that you may have already heard of.

Descriptive analysis is probably the most common type of data analysis employed by modern businesses. Its goal is to describe what happened by looking at historical data, which is why it is used to track KPIs and measure company performance against the chosen metrics.

Things like revenue or website visitor reports all rely on descriptive analysis, as do CRM dashboards that visually summarize leads acquired or deals closed. This approach merges data from various sources to deliver valuable insights about the past, helping you identify benchmarks and set new goals.

Of course, this technique isn’t very helpful for explaining why things are the way they are or for recommending the best courses of action. Hence, it’s a good idea to pair descriptive analysis with other types of data analytics.

4. Diagnostic Analysis

Once you’ve established what has occurred, it’s important to understand why it happened. This is where diagnostic analysis plays a role. It helps uncover connections between data and identify hidden patterns that may have caused an event.

By continuously leveraging this type of data analysis, you can quickly determine why issues arise by looking at historical information that may pertain to them. Thus, any problems that are interconnected can be swiftly discovered and dealt with.

For example, after conducting a descriptive analysis, you may learn that contact centre calls have become 60 seconds longer than they used to be. Naturally, you’d want to find out why. By employing diagnostic analysis at this point, your analysts will try to identify additional data sources that may explain this.

After looking at real-time call analytics, they might discover that some newly hired agents are struggling with finding relevant information to help the client, and this causes extra time to be spent on the search. Now that the problem is clearly identified, you’ll be able to deal with it in the most suitable manner.
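The drill-down described above can start with something as simple as comparing average call length across a suspected cause. A minimal Python sketch with an invented call log; the record layout, the mean_duration_by function, and the 90-day definition of a new hire are assumptions. A gap between groups points analysts toward an explanation but does not prove it on its own.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical call log: (agent_id, hired_within_last_90_days, call_seconds)
calls = [
    ("A1", False, 240), ("A1", False, 260),
    ("A2", False, 250), ("A2", False, 230),
    ("A3", True, 330), ("A3", True, 310),
    ("A4", True, 320), ("A4", True, 300),
]


def mean_duration_by(call_log, key):
    """Average call length for each value returned by the grouping function key."""
    groups = defaultdict(list)
    for call in call_log:
        groups[key(call)].append(call[2])  # call[2] is the duration in seconds
    return {group: mean(durations) for group, durations in groups.items()}


if __name__ == "__main__":
    by_tenure = mean_duration_by(calls, key=lambda c: "new hire" if c[1] else "experienced")
    print(by_tenure)
```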

5. Predictive Analysis

Now we’re moving on to some of the most exciting types of data analysis. Predictive analytics, as the name suggests, forecasts what is likely to happen in the future. It often incorporates artificial intelligence and machine learning to produce more accurate predictions faster.

Predictive analysis usually looks at patterns from historical data as well as insights about current events to make the most reliable forecast about what might happen in the future.

For example, in the manufacturing industry, historical information on machine failure can be supplemented with real-time data from connected devices to predict asset malfunctions and schedule timely maintenance.
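Predictive models range from machine learning to simple statistical baselines. As a minimal illustration of forecasting from historical patterns, here is simple exponential smoothing in Python, which weights recent observations more heavily than older ones. The readings, the smoothing factor, and the function name are hypothetical; a real predictive-maintenance system would also draw on the real-time sensor data described above and would typically use a richer model.

```python
def exponential_smoothing_forecast(history, alpha=0.5):
    """One-step-ahead forecast with simple exponential smoothing.

    Each new observation pulls the running level toward itself by a factor
    alpha (0 < alpha <= 1), so recent data count more than old data.
    """
    if not history:
        raise ValueError("history must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for value in history[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


if __name__ == "__main__":
    vibration = [10, 12, 11, 13]  # hypothetical weekly vibration readings
    print(f"next-week forecast: {exponential_smoothing_forecast(vibration):.1f}")
```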

If you’re looking to better manage your data and start using analytical solutions, don’t hesitate to contact Mobile app experts: we are the best mobile app development company in India. Our team has extensive expertise in delivering data analysis services that drive business growth and positively impact the bottom line.

Learning About Different Types of Functions in R Programming

Summary: Learn about the different types of functions in R programming, including built-in, user-defined, anonymous, recursive, S3, S4 methods, and higher-order functions. Understand their roles and best practices for efficient coding.

Introduction

Functions in R programming are fundamental building blocks that streamline code and enhance efficiency. They allow you to encapsulate code into reusable chunks, making your scripts more organised and manageable.

Understanding the various types of functions in R programming is crucial for leveraging their full potential, whether you're using built-in, user-defined, or advanced methods like recursive or higher-order functions.

This article aims to provide a comprehensive overview of these different types, their uses, and best practices for implementing them effectively. By the end, you'll have a solid grasp of how to utilise these functions to optimise your R programming projects.

What is a Function in R?

In R programming, a function is a reusable block of code designed to perform a specific task. Functions help organise and modularise code, making it more efficient and easier to manage.

By encapsulating a sequence of operations into a function, you can avoid redundancy, improve readability, and facilitate code maintenance. Functions take inputs, process them, and return outputs, allowing for complex operations to be performed with a simple call.

Basic Structure of a Function in R

The basic structure of a function in R includes several key components:

Function Name: A unique identifier for the function.

Parameters: Variables listed in the function definition that act as placeholders for the values (arguments) the function will receive.

Body: The block of code that executes when the function is called. It contains the operations and logic to process the inputs.

Return Statement: Specifies the output value of the function. If omitted, R returns the result of the last evaluated expression by default.

Here's the general syntax for defining a function in R:
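A minimal sketch of that general form is shown here. The names function_name, param1, and param2 are placeholders, and the default value for param2 is an illustrative addition showing that parameters can have defaults:

```r
# Function name on the left, function() with its parameters on the right
function_name <- function(param1, param2 = 10) {
  # Body: the operations that process the inputs
  result <- param1 * param2
  # Return statement (optional: without it, R returns the last evaluated expression)
  return(result)
}

function_name(2)     # uses the default param2 = 10
function_name(2, 3)  # overrides the default
```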

Syntax and Example of a Simple Function

Consider a simple function that calculates the square of a number. This function takes one argument, processes it, and returns the squared value.
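A minimal version of such a function, using the names square_number, x, and result from the breakdown that follows:

```r
# Calculate the square of a number
square_number <- function(x) {
  result <- x^2   # square the input and store it
  return(result)  # hand the value back to the caller
}
```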

In this example:

square_number is the function name.

x is the parameter, representing the input value.

The body of the function calculates x^2 and stores it in the variable result.

The return(result) statement provides the output of the function.

You can call this function with an argument, like so:
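For example, calling it with a single number and, because the ^ operator is vectorised in R, with a whole vector (the definition is repeated so the snippet runs on its own):

```r
square_number <- function(x) {
  result <- x^2
  return(result)
}

square_number(4)    # [1] 16
square_number(1:3)  # [1] 1 4 9
```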

This function is a simple yet effective example of how you can leverage functions in R to perform specific tasks efficiently.


Types of Functions in R

In R programming, functions are essential building blocks that allow users to perform operations efficiently and effectively. Understanding the various types of functions available in R helps in leveraging the full power of the language.

This section explores different types of functions in R, including built-in functions, user-defined functions, anonymous functions, recursive functions, S3 and S4 methods, and higher-order functions.

Built-in Functions

R provides a rich set of built-in functions that cater to a wide range of tasks. These functions are pre-defined and come with R, eliminating the need for users to write code for common operations.

Examples include mathematical functions like mean(), median(), and sum(), which perform statistical calculations. For instance, mean(x) calculates the average of numeric values in vector x, while sum(x) returns the total sum of the elements in x.
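A short illustration of these built-ins on a small example vector (the values in x and y are arbitrary). It also shows the na.rm argument, since mean(), median(), and sum() all return NA when the input contains a missing value unless told to drop it:

```r
x <- c(2, 4, 6, 8, 10)

mean(x)    # [1] 6
median(x)  # [1] 6
sum(x)     # [1] 30

# A single NA propagates unless na.rm = TRUE is set
y <- c(1, NA, 3)
mean(y)                # [1] NA
mean(y, na.rm = TRUE)  # [1] 2
```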

These functions are highly optimised and offer a quick way to perform standard operations. Users can rely on built-in functions for tasks such as data manipulation, statistical analysis, and basic operations without having to reinvent the wheel. The extensive library of built-in functions streamlines coding and enhances productivity.

User-Defined Functions

User-defined functions are custom functions created by users to address specific needs that built-in functions may not cover. Creating user-defined functions allows for flexibility and reusability in code. To define a function, use the function() keyword. The syntax for creating a user-defined function is as follows:
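A sketch matching the description of my_function below: two parameters, arg1 and arg2, added together and returned.

```r
# A user-defined function: two parameters in, one value out
my_function <- function(arg1, arg2) {
  result <- arg1 + arg2  # the custom logic lives in the body
  return(result)
}

my_function(3, 5)  # [1] 8
```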

In this example, my_function takes two arguments, arg1 and arg2, adds them, and returns the result. User-defined functions are particularly useful for encapsulating repetitive tasks or complex operations that require custom logic. They help in making code modular, easier to maintain, and more readable.

Anonymous Functions

Anonymous functions, also known as lambda functions, are functions without a name. They are often used for short, throwaway tasks where defining a full function might be unnecessary. In R, anonymous functions are created using the function() keyword without assigning them to a variable. Here is an example:
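The sapply() call described next can be written as follows; only the variable name squares is an addition:

```r
# function(x) x^2 is created inline and never assigned to a name
squares <- sapply(1:5, function(x) x^2)
squares  # [1]  1  4  9 16 25
```

Since R 4.1.0, the same anonymous function can also be written with the shorthand \(x) x^2.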

In this example, sapply() applies the anonymous function function(x) x^2 to each element in the vector 1:5. The result is a vector containing the squares of the numbers from 1 to 5.

Anonymous functions are useful for concise operations and can be utilised in functions like apply(), lapply(), and sapply() where temporary, one-off computations are needed.

Recursive Functions

Recursive functions are functions that call themselves in order to solve a problem. They are particularly useful for tasks that can be divided into smaller, similar sub-tasks. For example, calculating the factorial of a number can be accomplished using recursion. The following code demonstrates a recursive function for computing factorial:
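A minimal recursive factorial in that style. Two choices here are additions: the base case uses n <= 1 so that factorial(0) also terminates, and negative input is rejected with an error. Note that defining a function called factorial masks base R's built-in factorial() for the rest of the session:

```r
# Recursive factorial: n! = n * (n - 1)!
# Defining factorial() here masks base R's built-in factorial() in this session.
factorial <- function(n) {
  if (n < 0) stop("n must be non-negative")
  if (n <= 1) {
    return(1)              # base case: 0! = 1! = 1, so the recursion stops
  }
  n * factorial(n - 1)     # recursive case: shrink the problem by one
}

factorial(5)  # [1] 120
```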

Here, the factorial() function calls itself with n - 1 until it reaches the base case where n equals 1. Recursive functions can simplify complex problems but may also lead to performance issues if not implemented carefully. They require a clear base case to prevent infinite recursion and potential stack overflow errors.

S3 and S4 Methods

R supports object-oriented programming through the S3 and S4 systems, each offering different approaches to object-oriented design.

S3 Methods: S3 is a more informal and flexible system. Functions in S3 are used to define methods for different classes of objects. For instance:
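A minimal sketch of such a method, assuming an object created with structure(); the value field and the printed message are illustrative placeholders:

```r
# S3 method: print() dispatches to print.<class> based on the class attribute
print.my_class <- function(x, ...) {
  cat("This is an object of class my_class\n")
  invisible(x)  # print methods conventionally return their input invisibly
}

# Create an object by attaching the class attribute to a plain list
obj <- structure(list(value = 42), class = "my_class")

print(obj)  # This is an object of class my_class
```

Because S3 dispatch relies only on the class attribute, nothing checks that an object labelled my_class actually has a value field; that informality is the main contrast with S4.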

In this example, print.my_class is a method that prints a custom message for objects of class my_class. S3 methods provide a simple way to extend functionality for different object types.

S4 Methods: S4 is a more formal and rigorous system with strict class definitions and method dispatch. It allows for detailed control over method behaviors. For example:
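A sketch of an S4 class with one numeric slot and a show() method, which R calls when an S4 object is printed. The class name MyS4Class, the slot name value, and the message text are hypothetical:

```r
library(methods)  # attached by default in most sessions; explicit for scripts

# Formal class definition with a single numeric slot
setClass("MyS4Class", slots = c(value = "numeric"))

# Method for the show() generic, used when the object is printed
setMethod("show", "MyS4Class", function(object) {
  cat("MyS4Class with value:", object@value, "\n")
})

obj <- new("MyS4Class", value = 3.14)
obj  # MyS4Class with value: 3.14

# Slot types are enforced: a character value is rejected with an error
bad <- tryCatch(new("MyS4Class", value = "not a number"),
                error = function(e) conditionMessage(e))
```

The final call illustrates the stricter class definitions mentioned here: S4 checks slot types when an object is created, whereas S3 performs no such check.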

Here, setClass() defines a class with a numeric slot, and setMethod() defines a method for displaying objects of this class. S4 methods offer enhanced functionality and robustness, making them suitable for complex applications requiring precise object-oriented programming.

Higher-Order Functions

Higher-order functions are functions that take other functions as arguments or return functions as results. These functions enable functional programming techniques and can lead to concise and expressive code. Examples include apply(), lapply(), and sapply().

apply(): Used to apply a function to the rows or columns of a matrix.

lapply(): Applies a function to each element of a list and returns a list.

sapply(): Similar to lapply(), but simplifies the result to a vector or matrix where possible.
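The three functions listed above, plus a function that returns a function, can be sketched as follows. The names m, scores, make_multiplier, and triple are illustrative:

```r
# apply(): run a function over matrix rows (MARGIN = 1) or columns (MARGIN = 2)
m <- matrix(1:6, nrow = 2)    # filled column-wise: rows are 1 3 5 and 2 4 6
row_sums <- apply(m, 1, sum)  # [1]  9 12
col_sums <- apply(m, 2, sum)  # [1]  3  7 11

# lapply() always returns a list; sapply() simplifies where it can
scores <- list(a = c(1, 2, 3), b = c(4, 5, 6))
lapply(scores, mean)  # list with $a = 2 and $b = 5
sapply(scores, mean)  # named numeric vector: a = 2, b = 5

# A higher-order function that returns a function (a "function factory")
make_multiplier <- function(factor) {
  force(factor)           # evaluate the argument now rather than lazily later
  function(x) x * factor
}
triple <- make_multiplier(3)
triple(1:4)  # [1]  3  6  9 12
```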

Higher-order functions enhance code readability and efficiency by abstracting repetitive tasks and leveraging functional programming paradigms.

Best Practices for Writing Functions in R

Writing efficient and readable functions in R is crucial for maintaining clean and effective code. By following best practices, you can ensure that your functions are not only functional but also easy to understand and maintain. Here are some key tips and common pitfalls to avoid.

Tips for Writing Efficient and Readable Functions

Keep Functions Focused: Design functions to perform a single task or operation. This makes your code more modular and easier to test. For example, instead of creating a function that processes data and generates a report, split it into separate functions for processing and reporting.

Use Descriptive Names: Choose function names that clearly indicate their purpose. For instance, use calculate_mean() rather than calc() to convey the function’s role more explicitly.

Avoid Hardcoding Values: Use parameters instead of hardcoded values within functions. This makes your functions more flexible and reusable. For example, instead of using a fixed threshold value within a function, pass it as a parameter.
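A small before-and-after sketch of the threshold example; the function names, the default of 100, and the sales figures are all hypothetical:

```r
# Hardcoded threshold: changing it means editing the function
count_above_fixed <- function(values) {
  sum(values > 100)
}

# Threshold passed as a parameter, with a default for the common case
count_above <- function(values, threshold = 100) {
  sum(values > threshold)
}

sales <- c(50, 120, 300, 90)
count_above(sales)                  # [1] 2
count_above(sales, threshold = 60)  # [1] 3
```

The default keeps the common call as short as the hardcoded version while still allowing other thresholds.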

Common Mistakes to Avoid

Overcomplicating Functions: Avoid writing overly complex functions. If a function becomes too long or convoluted, break it down into smaller, more manageable pieces. Complex functions can be harder to debug and understand.

Neglecting Error Handling: Failing to include error handling can lead to unexpected issues during function execution. Implement checks to handle invalid inputs or edge cases gracefully.

Ignoring Code Consistency: Consistency in coding style helps maintain readability. Follow a consistent format for indentation, naming conventions, and comment style.
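To illustrate the error-handling point, here is a sketch of a hypothetical checked_mean() that validates its input with stop(), handles the empty-vector edge case explicitly, and is called through tryCatch() so the caller decides what to do with a failure:

```r
checked_mean <- function(x, na.rm = TRUE) {
  # Reject inputs the function cannot meaningfully handle
  if (!is.numeric(x)) {
    stop("x must be a numeric vector, not ", class(x)[1])
  }
  # Handle the empty-input edge case explicitly instead of returning NaN
  if (length(x) == 0) {
    warning("x is empty; returning NA")
    return(NA_real_)
  }
  mean(x, na.rm = na.rm)
}

checked_mean(c(1, 2, NA, 5))  # [1] 2.666667

# The caller can catch the error instead of letting the script stop
msg <- tryCatch(checked_mean("a"), error = function(e) conditionMessage(e))
msg  # [1] "x must be a numeric vector, not character"
```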

Best Practices for Function Documentation

Document Function Purpose: Clearly describe what each function does, its parameters, and its return values. R has no docstrings, so use comments (in packages, typically roxygen2 #' comment blocks) to provide context and usage examples.

Specify Parameter Types: Indicate the expected data types for each parameter. This helps users call the function correctly and reduces type-related errors.

Update Documentation Regularly: Keep function documentation up-to-date with any changes made to the function’s logic or parameters. Accurate documentation enhances the usability of your code.
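One common way to apply these documentation practices is a roxygen2-style comment block. The sketch below documents a hypothetical calculate_mean() (the name suggested earlier); the #' lines are ordinary comments to R itself, and the roxygen2 package turns them into help pages when a package is built:

```r
#' Calculate the arithmetic mean of a numeric vector
#'
#' @param x A numeric vector.
#' @param na.rm Logical; if TRUE, NA values are dropped before averaging.
#' @return A single numeric value.
#' @examples
#' calculate_mean(c(1, 2, 3))
calculate_mean <- function(x, na.rm = FALSE) {
  # Enforce the documented parameter types at run time as well
  stopifnot(is.numeric(x), is.logical(na.rm))
  mean(x, na.rm = na.rm)
}
```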

By adhering to these practices, you’ll improve the quality and usability of your R functions, making your codebase more reliable and easier to maintain.


Frequently Asked Questions

What are the main types of functions in R programming?

In R programming, the main types of functions include built-in functions, user-defined functions, anonymous functions, recursive functions, S3 methods, S4 methods, and higher-order functions. Each serves a specific purpose, from performing basic tasks to handling complex operations.

How do user-defined functions differ from built-in functions in R?

User-defined functions are custom functions created by users to address specific needs, whereas built-in functions come pre-defined with R and handle common tasks. User-defined functions offer flexibility, while built-in functions provide efficiency and convenience for standard operations.

What is a recursive function in R programming?

A recursive function in R calls itself to solve a problem by breaking it down into smaller, similar sub-tasks. It's useful for problems like calculating factorials but requires careful implementation to avoid infinite recursion and performance issues.

Conclusion

Understanding the types of functions in R programming is crucial for optimising your code. From built-in functions that simplify tasks to user-defined functions that offer customisation, each type plays a unique role.

Mastering recursive, anonymous, and higher-order functions further enhances your programming capabilities. Implementing best practices ensures efficient and maintainable code, leveraging R’s full potential for data analysis and complex problem-solving.


How Weather and Pitch Conditions Change Your Fantasy Picks

Weather and pitch conditions are powerful but often overlooked factors that can affect how well a player performs and, with it, your fantasy score. To stay ahead, you need to know how they affect the game. WINFIX, a trusted IPL match earning app, offers advanced tools built on real-time data that help fantasy sports fans understand these conditions and make better picks.

The Impact of Weather on Fantasy Sports Performance

In fantasy sports, and especially on IPL match earning apps, weather forecasts matter because conditions can swing the result of a match, turning an expected high score into a disappointing loss.

Understanding Weather Variables and Player Performance

The weather has a big effect on how well players perform on match days. Rain, wind, temperature, and humidity each affect the game differently. Rain can cause matches to be called off, and wind can change the path of the ball, affecting both batting and bowling. Temperature affects players’ energy levels, while humidity affects how far the ball travels and how quickly players recover. In hot, humid conditions, bowlers can tire faster, which can lead to more runs conceded. Wind can turn routine lofted shots into sixes or easy catches, cutting scoring chances or boosting player stats in unexpected ways.

Case Studies: Weather Disruptions in Recent Seasons