#Web Scraping service 2024

Which 4 Web Scraping Projects Will Help You Automate Your Life?

In the age of digital abundance, information is everywhere, but harnessing it efficiently can be a daunting task. However, with the power of web scraping, mundane tasks can be automated, freeing up time for more important endeavors. Here are four web scraping projects that have the potential to transform and streamline your daily life.

Price Tracking and Comparison: Have you ever found yourself endlessly scrolling through multiple websites to find the best deal on a product? With web scraping, you can automate this process. By creating a scraper that collects data from various e-commerce sites, you can track price fluctuations in real-time and receive notifications when the price drops below a certain threshold. Not only does this save you time, but it also ensures that you never miss out on a great deal. Whether you're shopping for electronics, clothing, or groceries, price tracking and comparison can help you make informed purchasing decisions without the hassle.
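Once a scraper has pulled the current price from each store, the "notify me when it drops below my threshold" step is a simple comparison. The sketch below assumes the fetching and HTML parsing are already done and that prices arrive as US-style strings like `$1,299.99`; the store names, product, and prices are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class PriceQuote:
    store: str      # hypothetical store identifier
    product: str
    price: Decimal  # price as scraped from the store's product page


def parse_price(text: str) -> Decimal:
    """Turn a scraped US-style price string such as '$1,299.99' into a Decimal."""
    cleaned = text.strip().replace("$", "").replace(",", "")
    return Decimal(cleaned)


def price_alerts(quotes: list[PriceQuote], threshold: Decimal) -> list[PriceQuote]:
    """Return the quotes at or below the threshold, cheapest first."""
    hits = [q for q in quotes if q.price <= threshold]
    return sorted(hits, key=lambda q: q.price)


if __name__ == "__main__":
    scraped = [
        PriceQuote("store-a", "headphones", parse_price("$199.99")),
        PriceQuote("store-b", "headphones", parse_price("$174.50")),
        PriceQuote("store-c", "headphones", parse_price("$1,049.00")),
    ]
    for q in price_alerts(scraped, Decimal("180")):
        print(f"Alert: {q.product} is {q.price} at {q.store}")
```

`Decimal` is used instead of `float` so that prices compare exactly; the alert here is just a `print`, which you would swap for whatever notification channel you prefer.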

Recipe Aggregation and Meal Planning: Planning meals can be a tedious task, especially when you're trying to balance nutrition, taste, and budget. However, with web scraping, you can simplify the process by aggregating recipes from your favorite cooking websites and creating personalized meal plans. By scraping recipe data such as ingredients, cooking instructions, and user ratings, you can build a database of diverse meal options tailored to your dietary preferences and restrictions. Additionally, you can automate grocery list generation based on the ingredients required for each recipe, ensuring that you have everything you need for the week ahead. Whether you're a seasoned chef or a novice cook, recipe aggregation and meal planning can help you save time and explore new culinary delights.
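The grocery-list step can be sketched as summing ingredient quantities across the recipes in a week's plan. This assumes the scraper has already stored each recipe as `(name, quantity, unit)` tuples; the recipes below are hypothetical, and quantities are only combined when the units match.

```python
from collections import defaultdict

# Hypothetical scraped recipes: each ingredient is (name, quantity, unit).
RECIPES = {
    "pancakes": [("flour", 200, "g"), ("milk", 300, "ml"), ("egg", 2, "pcs")],
    "omelette": [("egg", 3, "pcs"), ("milk", 50, "ml"), ("cheese", 40, "g")],
}


def grocery_list(meal_plan, recipes):
    """Sum ingredient quantities across every recipe in the meal plan.

    (name, unit) is the key, so '200 g flour' and '1 cup flour' would stay
    on separate lines rather than being converted.
    """
    totals = defaultdict(float)
    for meal in meal_plan:
        for name, qty, unit in recipes[meal]:
            totals[(name, unit)] += qty
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    plan = ["pancakes", "omelette", "pancakes"]
    for (name, unit), qty in grocery_list(plan, RECIPES).items():
        print(f"{name}: {qty:g} {unit}")
```

Unit conversion (cups to grams, etc.) is deliberately left out; a real planner would need a conversion table per ingredient.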

Job Search and Application: Searching for a new job can be a full-time job in itself, but web scraping can make the process more manageable. By scraping job listings from various career websites, you can create a centralized database of job opportunities tailored to your skills and preferences. You can set up filters based on criteria such as location, industry, and job title to narrow down your search and receive email alerts for new listings that match your criteria. Additionally, you can extract relevant data such as job descriptions, required qualifications, and application deadlines to streamline the application process. With web scraping, you can spend less time scouring the internet for job openings and more time crafting tailored applications that stand out to potential employers.
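The filtering described above (location, industry, job title, plus dropping listings whose deadline has passed) can be sketched as follows. The `JobListing` fields and the sample listings are hypothetical; a real scraper would fill them from each career site's pages.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class JobListing:
    title: str
    company: str
    location: str
    industry: str
    deadline: date


# Hypothetical listings standing in for scraped data.
SAMPLE_JOBS = [
    JobListing("Data Analyst", "Acme", "Remote", "Tech", date(2024, 8, 1)),
    JobListing("Senior Data Engineer", "Globex", "Berlin", "Tech", date(2024, 7, 20)),
    JobListing("Data Analyst", "Initech", "Remote", "Finance", date(2024, 7, 25)),
    JobListing("Python Developer", "Umbrella", "Remote", "Tech", date(2024, 7, 1)),
]


def matches(job, *, locations, industries, title_keywords):
    """True if the listing passes every filter (case-insensitive)."""
    return (
        job.location.lower() in {loc.lower() for loc in locations}
        and job.industry.lower() in {ind.lower() for ind in industries}
        and any(kw.lower() in job.title.lower() for kw in title_keywords)
    )


def shortlist(jobs, today, **filters):
    """Keep matching listings whose deadline hasn't passed, soonest deadline first."""
    open_jobs = [j for j in jobs if j.deadline >= today and matches(j, **filters)]
    return sorted(open_jobs, key=lambda j: j.deadline)


if __name__ == "__main__":
    picks = shortlist(
        SAMPLE_JOBS,
        date(2024, 7, 10),
        locations=["Remote", "Berlin"],
        industries=["tech"],
        title_keywords=["data", "python"],
    )
    for job in picks:
        print(f"{job.deadline}: {job.title} at {job.company} ({job.location})")
```

Sorting by deadline puts the most urgent applications first; an email alert would simply send this list whenever it contains new entries.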

Social Media Monitoring and Analysis: Whether you're a business owner, marketer, or social media enthusiast, monitoring online conversations and trends is essential for staying informed and engaged. With web scraping, you can gather data from social media platforms such as Twitter, Facebook, and Instagram to track mentions, hashtags, and engagement metrics in real-time. By analyzing this data, you can identify emerging trends, monitor brand sentiment, and measure the effectiveness of your social media campaigns. Additionally, you can automate responses to customer inquiries and comments, ensuring timely and personalized engagement. Whether you're managing a brand's online presence or tracking your personal social media activity, web scraping can provide valuable insights and streamline your social media strategy.
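As a small example of the analysis side, the sketch below counts hashtags and @mentions across a batch of post texts. It assumes the texts have already been collected through whatever access each platform permits (many platforms restrict scraping in their terms of service); the sample posts and the `tally` helper are hypothetical.

```python
import re
from collections import Counter

HASHTAG = re.compile(r"#(\w+)")
MENTION = re.compile(r"@(\w+)")

# Hypothetical post texts standing in for collected data.
SAMPLE_POSTS = [
    "Loving the new release! #WebScraping #python @acme",
    "Anyone else hitting rate limits? #webscraping",
    "@Acme support was quick today #CustomerService",
]


def tally(posts):
    """Count hashtags and @mentions (lower-cased) across a batch of post texts."""
    tags, mentions = Counter(), Counter()
    for text in posts:
        tags.update(t.lower() for t in HASHTAG.findall(text))
        mentions.update(m.lower() for m in MENTION.findall(text))
    return tags, mentions


if __name__ == "__main__":
    tags, mentions = tally(SAMPLE_POSTS)
    print("Top hashtags:", tags.most_common(3))
    print("Top mentions:", mentions.most_common(3))
```

Lower-casing merges `#WebScraping` and `#webscraping` into one trend; sentiment scoring would be a separate step on top of these counts.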

In conclusion, web scraping offers a myriad of possibilities for automating and optimizing various aspects of daily life. Whether you're looking to save money, plan meals, find a job, or monitor social media, web scraping can help you achieve your goals more efficiently and effectively. By harnessing the power of data from the web, you can revolutionize your routine and free up time for the things that truly matter. So why not embrace the power of web scraping and take control of your digital life today?

There has been a real backlash to AI companies’ mass scraping of the internet to train their tools, and it can be measured by the number of website owners specifically blocking AI company scraper bots, according to a new analysis by researchers at the Data Provenance Initiative, a group of academics from MIT and universities around the world. The analysis, published Friday, is called “Consent in Crisis: The Rapid Decline of the AI Data Commons,” and has found that, in the last year, “there has been a rapid crescendo of data restrictions from web sources” restricting web scraper bots (sometimes called “user agents”) from training on their websites. Specifically, about 5 percent of the 14,000 websites analyzed had modified their robots.txt file to block AI scrapers. That may not seem like a lot, but 28 percent of the “most actively maintained, critical sources,” meaning websites that are regularly updated and are not dormant, have restricted AI scraping in the last year. An analysis of these sites’ terms of service found that, in addition to robots.txt restrictions, many sites also have added AI scraping restrictions to their terms of service documents in the last year.
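For readers unfamiliar with the mechanism: a robots.txt file lists user-agent tokens and the paths each one may not crawl. The sketch below uses Python's standard-library `urllib.robotparser` to show how a site that names AI crawlers (`GPTBot` is OpenAI's crawler token, `CCBot` is Common Crawl's) blocks them while other bots pass. The robots.txt contents and URLs are hypothetical, and honoring the file is voluntary on the crawler's side.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: named AI crawlers are disallowed everywhere,
# every other agent is only kept out of /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    url = "https://example.com/articles/some-story"
    for agent in ("GPTBot", "CCBot", "SomeOtherBot"):
        print(agent, "allowed" if rp.can_fetch(agent, url) else "blocked")
```

A well-behaved crawler runs a check like `can_fetch` before every request; nothing in the protocol itself stops a crawler that skips it, which is why the study also looks at terms-of-service restrictions.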

[...]

The study, led by Shayne Longpre of MIT and done in conjunction with a few dozen researchers at the Data Provenance Initiative, called this change an “emerging crisis” not just for commercial AI companies like OpenAI and Perplexity, but for researchers hoping to train AI for academic purposes. The New York Times said this shows that the data used to train AI is “disappearing fast.”

23 July 2024

On Rivd and AI

So last night I made this post and said I'd elaborate more in the morning and when I had the time to do a bit of research. Upon doing said research, I realized that I had misunderstood the concerns being raised with the Rivd situation, but that isn't the case any more. However, some of my thoughts on ai still stand. Heads up, this is going to be a long post. Some actual proper blogging for once wow.

I'm going to discuss the Rivd phishing scam, what can be done for fic writers as ai begins to invade fan spaces, and my elaborated thoughts on Large Language Models (LLMs). Warning for transparency: I did utilize chat gpt for this post, NOT for the text itself but to provide examples of the current state of LLMs. Some articles I link to will also be ai generated, and their generated quality is part of what I'll be warning about. This is not a generated post and you can tell because I've got those nifty writing things called "voice" and "style."

ANYWAYS:

Okay so what was the Rivd situation? So two days ago this post was uploaded on tumblr, linking back to a twitter thread on the same topic. I saw it late last night because I was traveling. A reddit post was also uploaded 3 days ago. According to google trends, there was a slight uptick in search traffic the week of June 23rd, and a more severe uptick last week (June 30th-July 6th). That's all to say, this website did not exist until last week, caused a stir, and immediately was put down.

Rivd is no longer up. Enough people contacted its web hosting service Cloudflare and they took the site down. This happened yesterday, from the looks of it.

So, then, what was Rivd? And more importantly, what was the point of scraping a chunk of ao3 and re-uploading it? There seem to be two possible theories.

1) The more innocent of the two: they genuinely want to be an ao3 competitor. I can't look at the website any more, and very few positive results appear when googled, but I did find one ai-generated puff piece called "Exploring Rivd: The Premier Platform for Movie-Based Fanfiction" posted to Medium last week by one "Steffen Holzmann" (if that is your real name... x to doubt). This account appeared the same week that Rivd had that first little uptick in google queries, so it is undoubtedly made by the people running the website themselves to create an air of legitimacy. Medium appears to be a safe enough website that you can click that link if you really want to, but you shouldn't. It's a bad generated article, there's very little to glean from it. But it is a remnant source on what Rivd was claiming to be, before it was taken down. Here's the conclusion from the article, the only portion that gave any actual information (and it barely offers any):

Rivd is the ultimate platform for movie-based fanfiction, offering a diverse range of content, a supportive community, and robust interactive features. Whether you’re a writer looking to share your work or a reader seeking new adventures in your favorite movie universes, Rivd provides the perfect platform to engage with a passionate and creative community. Start your journey on Rivd today and immerse yourself in the world of fanfiction.

There's a second article by Holzmann titled "Mastering the Art of Fanfiction Writing in 2024" that's essentially similar ai bull, but trades explaining that fans can write Star Wars fic for explaining that you can make OCs and maybe get a beta (not that that's advice I've ever heeded. Beta? Not in this house we don't.) This was posted six days ago and similarly spends half the time jerking Rivd off. That's all to say, if they are to be believed at face value, this website wanted to just be a fic hosting site. Scraping Ao3 would have made it seem like there was already an active user base for anyone they were attempting to attract, like buying your first 50,000 instagram followers. Anyone actually looking to use this as a fic site would have quickly realized that there's no one on it and no actual fan engagement. There are already fan community spaces online. This website offers nothing ao3 or ffn or wattpad or livejournal or tumblr or reddit didn't already.

Similarly, it reeks of tech bro. Between the scraping and the ai articles, the alarms are already going off. According to that Reddit thread, they were based out of Panama, though that doesn't mean much other than an indicator that these are the type of people to generate puff articles and preemptively base their business off-shore. Holzmann, it should be mentioned, also only has 3 followers, which means my tiny ass blog already has more reach than him. Don't go following that guy. The two comments on the first article are also disparaging of Rivd. This plan didn't work and was seen right through immediately.

If fan communities and those who write fic know anything, it's how to sniff out when someone isn't being genuine. People write fic for the love of the game, at least generally. It's a lot of work to do for free, and it's from a place of love. Ao3 is run on volunteers and donations. If this genuinely is meant to be a business bro website to out-compete ao3, then they will be sorely disappointed to learn that there's no money in this game. It would be short lived anyway. A website like this was never going to work, or if it was, it would need to ban all copyrighted and explicit materials. You know, the pillars of fic.

So then what was the point of all of this? Unless there was a more nefarious plan going on.

2) Rivd was a phishing scam. This is so so so much more likely. The mark for the scam isn't fic readers, it's fic writers. Here's how it works: they scrape a mass of ao3 accounts for their stories, you catch it, you enter a lengthy form with personal info like your full name and address etc. requesting they take your work down, they sell your data. Yes, a lot of personal info is required to take copyrighted materials down on other sites, too. That's what makes it a good scam. Fic already sits in a legal grey area (you have a copyright over your fic but none of the characters/settings/borrowed plot within it. You also CANNOT make money off of fic writing). So the site holds your works ransom, and you can't go to Marvel or Shueisha or fuck it the ghost of Anne Rice herself to deal with this on your behalf. Thankfully, enough people were able to submit valid DMCA takedown notices to Cloudflare to deal with the issue from the top.

Remember this resolution for the next time this situation arises (because of course there will be a next time). Go through higher means rather than the site itself. These scams are only getting bolder. Me personally? I'm not going to give that amount of personal info to a website that shady. Be aware of the warning signs for phishing attacks. Unfortunately, a lot of the resources online still focus on text/email phishing. We live in a time where there's legal data harvesting and selling, and illegal data harvesting and selling, and the line in between the two is thin and blurry. Here's an FTC article on the signs of phishing scams, but again, it's more about emails.

I should note, I do not think that Rivd is connected to the ransomware virus of the same name that popped up two or three years ago [link is to Rivd page on PCrisk, a cybersecurity/anti-malware website]. It's probably just coincidence.... but even so. A new business priding itself on SEO and all that tech guy crap should know not to name itself the same thing as a literal virus meant to scam you out of a thousand dollars.

That's all to say, this was absolutely a scam meant to take personal info from ao3 writers. And that blows. To love art and writing and creation so much just to have your works held hostage over data feels really bad. Of course it does!

So, should you lock down your ao3 account?

That, to me, is a little trickier. You can do as you please, of course, and you should do what makes you feel safest. Me personally, though, I don't plan on it. I really, really like that guests can interact with my work from the outside. Ao3 still uses an invite system, so a lot of regular users still don't have accounts for any number of reasons. I read a lot of the time logged out anyways. I started writing again last year after all the info on the ao3 Large Language Model training had already come out. Like I talked about in my last post, I set out to write things that a computer couldn't produce. (from here on out, I'm going to be using One Piece fic as an example, simply because that's what I write. It's also in a really prime position for a lot of ai discussion due to the size of the fandom and the size of the original work)

I'm going to be honest, I'm not all that afraid of my work being scraped for LLMs to train off of. Text generators have existed for a decade now, but they really popped off last year. LLMs are at a point, though, where the tech is sort of stagnating after the initial boom. The flaws of current ai chat bots are so baked into the flaws of ai itself that they won't be ironed out soon. They make up info, they're easily manipulated, they simply are not people. The flaw here is deep- you can trace it to the fact that we don't know enough about neuroscience itself, and even then neuroscientists don't make ai. Ai programmers do. Complex info already has to make one synaptic jump without losing any understanding from person to person, and then it has to be perfectly abstracted for a machine to comprehend. It can't be done with the tools we currently have. I DO hate to admit it, but there are some decent uses for ai, if you are specifically a Python programmer. The rest of us? Maybe not.

I don't like generated fic, I don't read it on principle. Checking on ao3, though, there are only 4 total generated op fics (3 in Spanish, one in English, 3 xreader, 1 zosan but 2nd person zosan? and the author edited the computer-generated framework. I still didn't enjoy the fic, tho.) But that breaks down to about .005% of all op fics. I went into chat gpt and asked it to generate a drabble for me based on One Piece, and this is what it wrote:

[Image ID: A screenshot of chat GPT. The first line shows my prompt. It reads: You- write me a short 100 word fanfiction based on the manga One Piece. Next line, Chat GPT- "On the shores of an uncharted island, the Straw Hat Pirates stumbled upon a hidden treasure trove. Among the glittering gold and jewels, Luffy discovered an old, weathered journal. Inside, he found sketches and notes detailing the adventures of a legendary pirate who once roamed the Grand Line. Excited by the prospect of a new adventure, the crew set sail, following clues left in the journal. Along the way, they faced formidable foes and forged new alliances, unraveling the mysteries of the lost pirate's legacy. With each island they conquered, they grew closer to uncovering the ultimate treasure- a legacy that would shape their own journey." End ID]

Okay so like, this blows, yeah? 75,000 One Piece fics to train off of on ao3 and it still can't like, write a decent little story. This reads more like an episode summary or a book dust jacket blurb. Drabbles have an internal structure that makes them interesting, much like a single sentence short story. You have to get very baby shoes never worn with them, yeah? Ai doesn't understand that, it just knows how many words it has to generate. It doesn't have any sense for creative language, metaphor, simile, poetry. I asked it to write me a poem and it wrote me the same poem with thesaurus replacements each time it re-generated one. A lot about dreams and stars and midnight and whispers, over and over again. The same rhyme scheme and cadence too.

It's also- vitally- so unhorny that it's almost a crime. For a test, I asked it to generate a story about a uhhhhh robot and an archeologist kissing. Just to see what it would give me. I ran 4 tests, a robot falling in love with an archeologist, a robot kissing an archeologist, an archeologist kissing a robot, and an archeologist falling in love with a robot. All 4 were incredibly chaste, quiet little drabbles about the innocence of discovery or whatever the fuck. Here's one of them:

[Image ID: A screenshot of chat GPT. The first line shows my prompt. It reads: You- write me a short 100 word story about an archeologist kissing a robot. Next line, Chat GPT- "In the dimly lit chamber, Professor Evelyn Porter stared in awe at the ancient artifact- a remarkably lifelike robot buried for millennia. As she leaned closer to examine its intricacies, a surge of curiosity and admiration swept over her. In a moment of spontaneous fascination, she brushed her lips against the cool metal of its cheek, a gesture of reverence and connection across ages. The robot, dormant for eons, stirred faintly in response to the unexpected contact. It was a fleeting, almost surreal exchange between past and future, bridging the gap between human exploration and technological marvels of ancient civilizations." End ID]

So like, some of these phrases get at what's vaguely intriguing about this dynamic, sure. But at the end of the day it's still uhhhh chaste as hell. A reverent kiss??? Not what I want in my fic!!!! This is all to say, LLMs can scrape ao3 all they want, that doesn't mean they can USE what they're stealing. The training wheels have to stay on for corporate palatability. I'm stealing, I'm taking these dolls out of Shueisha's grubby hands and I'm making them sexy kiss for FREE.

In my opinion, the easiest way to keep your work out of the hands of ai is to write something they cannot use. If the grey area of copyright lies in how much is transformed, then motherfucking TRANSFORM it. Write incomprehensible smut. Build surreal worlds. Write poems and metaphors and flesh out ideas that a computer could never dream of. Find niches. Get funky with it. Take it too far, and then take it even farther. Be a little freaking weirdo, you're already writing fic so then why be normal about it, you know? Even if they rob you, they can't use it. Like fiber in the diet, undigestible. Make art, make magic.

Even so, I don't mind if the computer keeps a little bit of my art. If you've ever read one of my fics, and then thought about it when you're doing something else or listening to a song or reading a book, that means something I made has stuck with you just a little bit. That's really cool to me, I don't know you but I live in your brain. I've made you laugh or cry or c** from my living room on the other side of the world without knowing it. It's part of why I love to write. In all honesty, I don't mind if a computer "reads" my work and a little bit of what I created sticks with it. Even if it's more in a technical way.

Art, community, fandom- they're all part of this big conversation about the world as we experience it. The way to stop websites like Rivd is how we stopped it this week. By talking to each other, by leaning on fan communities, by sending a mass of DMCAs to web host daddy. Participation in fandom spaces keeps the game going, reblogging stuff you like and sending asks and having fun and making art is what will save us. Not to sound like a sappy fuck, but really caring about people and the way we all experience the same art but interpret it differently, that's the heart of the whole thing. It's why we do this. It's meant to be fun. Love and empathy and understanding is the foundation. Build from there. Be confident in the things you make, it's the key to having your own style. You'll find your people. You aren't alone, but you have to also be willing to toss the ball back and forth with others. It takes all of us to play, even if we look a little foolish.

#meta#fandom#fanfic#ao3#again i put this in my last post but this is JUST about LLMs#ai image generation is a whole other story#and also feel free to have opposing thoughts#i'm total open to learning more about this topic#LONG post

Which Are the BEST 4 Proxy Providers in 2024?

Choosing the best proxy provider can significantly impact your online privacy, web scraping efficiency, and overall internet experience. In this review, we examine the top four proxy providers in 2024, focusing on their unique features, strengths, and tools to help you make an informed decision.

Oneproxy.pro: Premium Performance and Security

Oneproxy.pro offers top-tier proxy services with a focus on performance and security. Here’s an in-depth look at Oneproxy.pro:

Key Features:

High-Performance Proxies: Ensures high-speed and low-latency connections, perfect for data-intensive tasks like streaming and web scraping.

Security: Provides strong encryption to protect user data and ensure anonymity.

Comprehensive Support: Offers extensive customer support, including detailed setup guides and troubleshooting.

Flexible Plans: Provides flexible pricing plans to suit different user needs, from individuals to large enterprises.

Pros and Cons:

Pros

High-speed and secure

Excellent customer support

Cons

Premium pricing

Might be overkill for casual users

Oneproxy.pro is ideal for users requiring premium performance and high security.

Proxy5.net: Cost-Effective and Versatile

Proxy5.net is a favorite for its affordability and wide range of proxy options. Here’s a closer look at Proxy5.net:

Key Features:

Affordable Pricing: Known for some of the most cost-effective proxy packages available.

Multiple Proxy Types: Offers shared, private, and rotating proxies to meet various needs.

Global Coverage: Provides a wide range of IP addresses from numerous locations worldwide.

Customer Support: Includes reliable customer support for setup and troubleshooting.

Pros and Cons:

Pros

Budget-friendly

Extensive proxy options

Cons

Shared proxies may be slower

Limited support for advanced features

Proxy5.net is an excellent choice for budget-conscious users needing versatile proxy options.

FineProxy.org: High-Speed, Reliable, and Affordable

FineProxy.org is well-regarded for delivering high-quality proxy services since 2011. Here’s why FineProxy.org remains a top pick:

Key Features:

Diverse Proxy Packages: Offers various proxy packages, including US, Europe, and World mix, with high-anonymous IP addresses.

High Speed and Minimal Latency: Provides high-speed data transfer with minimal latency, suitable for fast and stable connections.

Reliability: Guarantees a network uptime of 99.9%, ensuring continuous service availability.

Customer Support: Offers 24/7 technical support to address any issues or queries.

Free Trial: Allows users to test the service with a free trial period before purchasing.

Pricing:

Shared Proxies: 1000 proxies for $50

Private Proxies: $5 per proxy

Pros and Cons:

Pros

Affordable shared proxies

Excellent customer support

Cons

Expensive private proxies

Shared proxies may have fluctuating performance

FineProxy.org provides a balanced mix of affordability and performance, making it a strong contender for shared proxies.

ProxyElite.Info: Secure and User-Friendly

ProxyElite.Info is known for its high-security proxies and user-friendly interface. Here’s what you need to know about ProxyElite.Info:

Key Features:

High Security: Offers high levels of anonymity and security, suitable for bypassing geo-restrictions and protecting user privacy.

Variety of Proxies: Provides HTTP, HTTPS, and SOCKS proxies.

User-Friendly Interface: Known for its easy setup process and intuitive user dashboard.

Reliable Support: Maintains high service uptime and offers reliable customer support.
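Whichever provider you pick, using a proxy from a script usually comes down to pointing your HTTP client at the endpoint the provider gives you. A minimal sketch with Python's standard `urllib.request`, assuming a plain HTTP proxy; the address `proxy.example.net:8080` is a placeholder, not any provider's real endpoint. The standard library does not support SOCKS, so SOCKS proxies would need a third-party library.

```python
import urllib.request

# Placeholder endpoint; substitute the host and port your provider issues.
PROXY_URL = "http://proxy.example.net:8080"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that routes both http:// and https:// requests via the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


if __name__ == "__main__":
    opener = build_proxied_opener(PROXY_URL)
    # opener.open("https://example.com/") would now be sent through the proxy.
    print([type(h).__name__ for h in opener.handlers])
```

For rotating proxies, the same function can simply be called with a different `proxy_url` per request or per batch.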

Pros and Cons:

Pros

High security

Easy to use

Cons

Can be more expensive

Limited free trial options

ProxyElite.Info is ideal for users who prioritize security and ease of use.

Comparison Table

To help you compare these providers at a glance, here’s a summary table:

Conclusion

Selecting the right proxy provider depends on your specific needs, whether it's speed, security, pricing, or customer support. Oneproxy.pro offers premium performance and security, making it ideal for high-demand users. Proxy5.net is perfect for those looking for cost-effective and versatile proxy solutions. FineProxy.org provides a balanced mix of affordability and performance, especially for shared proxies. ProxyElite.Info excels in security and user-friendliness, making it a great choice for those who prioritize privacy.

These top four proxy services in 2024 have proven to be reliable and effective, catering to a wide range of online requirements.

25 Python Projects to Supercharge Your Job Search in 2024

Introduction: In the competitive world of technology, a strong portfolio of practical projects can make all the difference in landing your dream job. As a Python enthusiast, building a diverse range of projects not only showcases your skills but also demonstrates your ability to tackle real-world challenges. In this blog post, we'll explore 25 Python projects that can help you stand out and secure that coveted position in 2024.

1. Personal Portfolio Website

Create a dynamic portfolio website that highlights your skills, projects, and resume. Showcase your creativity and design skills to make a lasting impression.

2. Blog with User Authentication

Build a fully functional blog with features like user authentication and comments. This project demonstrates your understanding of web development and security.

3. E-Commerce Site

Develop a simple online store with product listings, shopping cart functionality, and a secure checkout process. Showcase your skills in building robust web applications.

4. Predictive Modeling

Create a predictive model for a relevant field, such as stock prices, weather forecasts, or sales predictions. Showcase your data science and machine learning prowess.

5. Natural Language Processing (NLP)

Build a sentiment analysis tool or a text summarizer using NLP techniques. Highlight your skills in processing and understanding human language.

6. Image Recognition

Develop an image recognition system capable of classifying objects. Demonstrate your proficiency in computer vision and deep learning.

7. Automation Scripts

Write scripts to automate repetitive tasks, such as file organization, data cleaning, or downloading files from the internet. Showcase your ability to improve efficiency through automation.

8. Web Scraping

Create a web scraper to extract data from websites. This project highlights your skills in data extraction and manipulation.

9. Pygame-based Game

Develop a simple game using Pygame or any other Python game library. Showcase your creativity and game development skills.

10. Text-based Adventure Game

Build a text-based adventure game or a quiz application. This project demonstrates your ability to create engaging user experiences.

11. RESTful API

Create a RESTful API for a service or application using Flask or Django. Highlight your skills in API development and integration.

12. Integration with External APIs

Develop a project that interacts with external APIs, such as social media platforms or weather services. Showcase your ability to integrate diverse systems.

13. Home Automation System

Build a home automation system using IoT concepts. Demonstrate your understanding of connecting devices and creating smart environments.

14. Weather Station

Create a weather station that collects and displays data from various sensors. Showcase your skills in data acquisition and analysis.

15. Distributed Chat Application

Build a distributed chat application using a messaging protocol like MQTT. Highlight your skills in distributed systems.

16. Blockchain or Cryptocurrency Tracker

Develop a simple blockchain or a cryptocurrency tracker. Showcase your understanding of blockchain technology.

17. Open Source Contributions

Contribute to open source projects on platforms like GitHub. Demonstrate your collaboration and teamwork skills.

18. Network or Vulnerability Scanner

Build a network or vulnerability scanner to showcase your skills in cybersecurity.

19. Decentralized Application (DApp)

Create a decentralized application using a blockchain platform like Ethereum. Showcase your skills in developing applications on decentralized networks.

20. Machine Learning Model Deployment

Deploy a machine learning model as a web service using frameworks like Flask or FastAPI. Demonstrate your skills in model deployment and integration.

21. Financial Calculator

Build a financial calculator that incorporates relevant mathematical and financial concepts. Showcase your ability to create practical tools.

22. Command-Line Tools

Develop command-line tools for tasks like file manipulation, data processing, or system monitoring. Highlight your skills in creating efficient and user-friendly command-line applications.

23. IoT-Based Health Monitoring System

Create an IoT-based health monitoring system that collects and analyzes health-related data. Showcase your ability to work on projects with social impact.

24. Facial Recognition System

Build a facial recognition system using Python and computer vision libraries. Showcase your skills in biometric technology.

25. Social Media Dashboard

Develop a social media dashboard that aggregates and displays data from various platforms. Highlight your skills in data visualization and integration.
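As one hedged illustration of the small automation scripts described in item 7, here is a file organizer that moves the files in one folder into subfolders named after their extensions. It uses only `pathlib` and `shutil`; the function name and the `no_extension` bucket are choices made for this sketch.

```python
import shutil
import tempfile
from pathlib import Path


def organize_by_extension(folder: Path) -> dict[str, int]:
    """Move each top-level file in `folder` into a subfolder named after its extension.

    Files without an extension go to 'no_extension'. Existing subfolders are
    skipped. Name clashes (e.g. a file literally named 'txt') are not handled.
    Returns the number of files moved into each subfolder.
    """
    moved: dict[str, int] = {}
    for item in list(folder.iterdir()):  # snapshot before creating subfolders
        if not item.is_file():
            continue
        bucket = item.suffix.lstrip(".").lower() or "no_extension"
        target_dir = folder / bucket
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[bucket] = moved.get(bucket, 0) + 1
    return moved


if __name__ == "__main__":
    # Demo in a throwaway directory so no real files are touched.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for name in ("report.PDF", "notes.txt", "todo.txt", "README"):
            (root / name).write_text("demo")
        print(organize_by_extension(root))
```

Wrapping this in `argparse` would turn it into the kind of command-line tool mentioned in item 22.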

Conclusion: As you embark on your job search in 2024, remember that a well-rounded portfolio is key to showcasing your skills and standing out from the crowd. These 25 Python projects cover a diverse range of domains, allowing you to tailor your portfolio to match your interests and the specific requirements of your dream job.

If you want to know more, click here: https://analyticsjobs.in/question/what-are-the-best-python-projects-to-land-a-great-job-in-2024/

#python projects#top python projects#best python projects#analytics jobs#python#coding#programming#machine learning

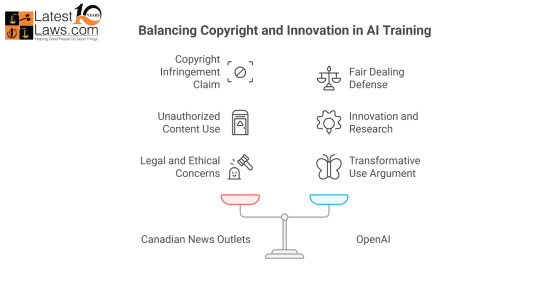

ChatGPT in the Copyright Crossfire: Canadian News Giants Sue OpenAI Over AI Training Practices

In a landmark legal move, five of Canada’s largest and most influential news organizations have filed a copyright infringement lawsuit against OpenAI—the creator of ChatGPT. The case, filed in late November 2024, accuses OpenAI of unauthorized use of protected content to train its AI models, with potential damages running into billions of dollars.

This lawsuit, brought forward by Torstar, Postmedia, The Globe and Mail Inc., The Canadian Press, and CBC/Radio-Canada, marks a pivotal moment in the evolving legal landscape surrounding AI and digital copyright. It follows similar high-profile legal actions, including one initiated earlier by The New York Times and other U.S. publishers, and may shape how future AI models are developed and regulated.

Why Are Canadian News Outlets Suing OpenAI?

At the heart of the lawsuit is the accusation that OpenAI scraped large volumes of copyrighted material from media websites without obtaining permission or compensating content creators.

The news outlets claim:

OpenAI bypassed technical safeguards such as the Robots Exclusion Protocol (robots.txt).

The AI company violated website terms of service, which explicitly restrict commercial use.

The use of this scraped content generates commercial gain, as ChatGPT is monetized by OpenAI.

By doing this, the plaintiffs argue, OpenAI has built and refined ChatGPT—a tool used by millions—at the expense of original journalism.

Understanding the Concept of Content Scraping in AI Training

Web scraping refers to the use of automated bots or scripts that systematically collect information from web pages. For AI companies like OpenAI, scraping offers access to a vast dataset of human-written language—crucial for training large language models (LLMs) like ChatGPT.
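As a concrete illustration of what "systematically collecting information from web pages" means at the code level, here is a minimal sketch using only Python's standard html.parser. It assumes the page's HTML has already been downloaded (a real crawler would fetch it over HTTP first) and simply pulls out the title and paragraph text. The class and function names are illustrative; this is not a description of how OpenAI or any other company actually gathers training data.

```python
from html.parser import HTMLParser


class ArticleTextExtractor(HTMLParser):
    """Collect the <title> text and the text of every <p> element."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.paragraphs: list[str] = []
        self._in_title = False
        self._in_p = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "p":
            self._in_p = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "p" and self._in_p:
            # Join text from the paragraph, including text inside nested tags like <a>.
            text = "".join(self._buffer).strip()
            if text:
                self.paragraphs.append(text)
            self._in_p = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._in_p:
            self._buffer.append(data)


def extract_article(html: str) -> tuple[str, list[str]]:
    """Return (title, paragraphs) from an HTML document held in memory."""
    parser = ArticleTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.title.strip(), parser.paragraphs
```

Production scrapers deal with far messier markup, but the principle is the same: fetch a page, parse it, and keep only the text you need.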

However, Canadian news organizations assert that this goes beyond simple data collection. They argue that:

The scraped articles are copyrighted works, not public domain content.

OpenAI’s use of them in a commercial product like ChatGPT exceeds fair dealing rights.

Readers accessing the sites are bound by terms and conditions, which OpenAI knowingly ignored.

This raises a major legal and ethical question: is using published journalism to train AI tools fair dealing (fair use, in U.S. terms), or a violation of copyright law?

Legal Grounds for the Lawsuit: Copyright, Contracts, and Code

The plaintiffs base their case on three primary legal arguments:

1. Copyright Infringement

The news organizations argue that copying their work for commercial AI training constitutes unauthorized reproduction. Since OpenAI profits from ChatGPT subscriptions and enterprise partnerships, the media companies claim this use is commercial and exploitative.

2. Breach of Terms of Service

Each website has clear terms of use. Most specify that content is for personal, non-commercial consumption only. By scraping and reusing this data, OpenAI is accused of breaching digital contracts that all users—including bots—are expected to follow.

3. Bypassing Anti-Scraping Technologies

Websites employ several anti-scraping tools, including the Robots Exclusion Protocol (robots.txt), paywalls, session tracking, and account-based access control. The lawsuit claims OpenAI intentionally circumvented these barriers, which could also invoke anti-circumvention provisions of the Canadian Copyright Act.

OpenAI’s Defense: Is It Fair Dealing or Innovation?

Although OpenAI had not yet formally responded to this specific lawsuit, it has addressed similar accusations elsewhere, especially in the U.S. lawsuits filed by major publishers. Its main argument is that using online content for AI training falls under “fair use” (U.S.) or “fair dealing” (Canada).

Here’s their reasoning:

AI does not reproduce the text; instead, it learns patterns from it and generates new content.

The training process is transformative, meaning the original content is not simply reused but used to build a system that produces new outputs.

Many legal scholars and AI ethicists support the idea that AI training could qualify under existing copyright exceptions for research and innovation.

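However, Canadian courts take a two-step approach to fair dealing. The use must first fall under an allowable purpose listed in the Copyright Act (such as research, private study, education, parody, satire, criticism, review, or news reporting); its fairness is then assessed with a six-factor test: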

Purpose of the use (commercial vs. educational)

Character of the use (multiple copies vs. limited)

Amount of the work used

Alternatives to the use

Nature of the original work

Effect on the market value of the original

In this case, the commercial nature, volume of data scraped, and economic harm to publishers may work against OpenAI.

Why This Lawsuit Matters: The Future of AI, Media, and Copyright

This case has the potential to set global legal precedents. It pits two powerful forces against each other:

Traditional journalism, which invests in factual reporting, editorial integrity, and investigative work.

AI innovation, which relies on vast datasets to improve linguistic, factual, and contextual capabilities.

The core issue is this: Can generative AI be trained on copyrighted content without permission or payment?

If courts decide against OpenAI:

AI companies may need to license content or negotiate data-sharing agreements with publishers.

New regulatory frameworks may emerge to protect digital intellectual property.

A system of compensating content creators could become standard across the tech industry.

If OpenAI prevails:

The legal definition of “fair dealing” could expand to include AI training.

Media companies may need to adapt their models, possibly through AI licensing partnerships.

The ethical debate around consent and compensation in AI will intensify.

Broader Implications for SEO, Content Creators, and Digital Publishers

This case highlights a growing concern among digital creators—how AI tools use their work without acknowledgment or reward. It also raises questions for SEO experts, webmasters, and marketers:

Should you block AI crawlers in robots.txt?

Is your content protected against scraping?

Do you need copyright enforcement strategies in place?
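For the first question, blocking is done per crawler in robots.txt. A minimal sketch follows: it defines a robots.txt that disallows OpenAI's GPTBot and Common Crawl's CCBot while leaving other crawlers mostly unrestricted, then checks the rules with Python's standard urllib.robotparser. The user-agent tokens are the ones those operators publish, but their current documentation should be checked; the example.com URLs are placeholders. robots.txt is a voluntary convention: it tells well-behaved crawlers what to skip but does not technically stop one that ignores it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block two AI-training crawlers entirely and keep
# every other crawler out of a private /admin/ area only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Load robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Ask whether a crawler identifying as `user_agent` may fetch `url`."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for agent in ("GPTBot", "CCBot/2.0", "Mozilla/5.0"):
        print(agent, is_allowed(rules, agent, "https://example.com/news/story"))
```

Publishing the same rules at the site root (/robots.txt) is what crawlers that honor the protocol will read.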

As AI becomes more integrated into content generation, search engine algorithms, and online engagement, original publishers must rethink their digital rights frameworks.

Conclusion: A Defining Moment for Digital Copyright Law

The lawsuit filed by Canada’s top media outlets against OpenAI is not just a local news story—it’s a global copyright showdown with far-reaching consequences. It will test the boundaries between innovation and exploitation, fair dealing and infringement, and public access and proprietary rights.

As courts begin to assess the legal and ethical dimensions of this case, the decision could reshape how AI companies source data, how media companies protect content, and how laws evolve to balance both innovation and authorship.

For now, both the tech and media industries await what may become a landmark ruling in the age of artificial intelligence.

Source: https://latestlaws.com/intellectual-property-news/chatgpt-in-the-copyright-soup-this-country-s-news-media-outlets-file-lawsuits-against-openai-for-copyright-infringement-222083/

Text

In a world that increasingly depends on data, organizations rely on accurate information to fine-tune their strategies, monitor the market, and safeguard their brands. Collecting data for market research, SEO tracking, and brand protection can get expensive, however. A cheap proxy lets companies get around geo-restrictions, avoid IP-based blocking, and scrape data efficiently without overpaying. Striking a balance between cost and performance helps organizations reduce expenses while maintaining productivity. Many businesses use digital tools and automated processes for data collection, and many rely on proxies to gather information from a wide range of online sources without running into access restrictions. This guide outlines practical techniques for using inexpensive data-collection methods without sacrificing performance.

Understanding the True Cost of Data Collection

Collecting data involves much more than gathering raw information. Beyond the obvious expenditures, businesses often incur hidden costs such as subscriptions, API access fees, and infrastructure maintenance. Without a clear plan, these costs can grow until data collection becomes prohibitive for smaller businesses. Security is part of the bill as well: according to recent statistics, the average cost of a data breach rose to $4.88 million in 2024, a 10% increase over 2023. Choosing data-collection methods that are secure and reliable as well as affordable helps businesses limit that exposure.

Leveraging Free and Affordable Market Research Tools

Market intelligence does not require expensive software. Numerous free or low-cost tools provide insights into customer behavior, industry trends, and competitors, and by combining them most businesses can build a solid market research strategy without breaking the bank.

Search engines' own analytics tools reveal keyword trends and consumer search behavior, indicating what is in demand. Public data sources such as government reports and industry surveys provide factual information at no additional cost, and open-data platforms supply perspectives that would otherwise sit behind paid subscriptions.

Using Cheap Proxy Services for Efficient Web Scraping

Web scraping is an important part of contemporary data collection, letting organizations automate data extraction from many websites. These efforts face obstacles, however, such as IP bans and geo-blocking. A cheap proxy is an affordable answer to these issues, allowing businesses to scrape data at scale without being blocked. Proxies distribute requests across multiple IP addresses so that no single address sends enough traffic to be refused. This is especially useful for e-commerce companies tracking competitor prices, digital marketers studying consumer activity, and cybersecurity teams monitoring potential threats.

Cost-Effective SEO Tracking Strategies

Search engine rankings strongly affect brand visibility and online traffic. Unfortunately, SEO tracking tools can become expensive, especially for businesses overseeing multiple campaigns. Affordable SEO tracking solutions let businesses monitor their progress without overspending: keyword tracking and competitor analysis are the core functions, and companies that pair low-cost keyword-tracking software with free webmaster tools can keep an effective check on their search rankings. A cheap proxy also helps with SEO tracking, because proxies in different locations show the search results that users in each region actually see, rather than results tailored to your own location. This is vital for businesses pursuing a global audience or tracking location-based search trends.
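The request distribution described under "Using Cheap Proxy Services for Efficient Web Scraping" can be sketched as a simple round-robin rotation. This is a minimal illustration, assuming you have a list of proxy endpoints from your provider (the ones below are placeholders); it only decides which proxy carries which request and makes no network calls.

```python
from itertools import cycle
from typing import Iterator

# Hypothetical proxy endpoints; replace with the ones your provider issues.
PROXY_POOL = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


def round_robin_proxies(pool: list[str]) -> Iterator[dict[str, str]]:
    """Yield a scheme-to-proxy mapping for each request, cycling through the pool."""
    for proxy in cycle(pool):
        # Same mapping shape that urllib.request.ProxyHandler accepts.
        yield {"http": proxy, "https": proxy}


def assign_proxies(urls: list[str], pool: list[str]) -> list[tuple[str, str]]:
    """Pair each URL with the proxy that would carry its request."""
    rotation = round_robin_proxies(pool)
    return [(url, next(rotation)["http"]) for url in urls]
```

To send a request through the chosen proxy, pass its mapping to urllib.request.ProxyHandler and open the URL with an opener from urllib.request.build_opener. Round-robin is the simplest policy; a real scraper would typically add retries and stop using proxies that keep failing.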

Affordable Brand Protection and Competitor Monitoring

A brand's reputation is one of the most valuable assets in business, yet protecting a brand from infringement, counterfeiting, and unauthorized use can be costly. Fortunately, brand protection is possible on a budget. One practical step is to automate alerts for online brand mentions: free tools such as search engine alerts and social media monitoring software can flag misuse of a brand name. Using proxies to scale web scraping also lets businesses gather information about competitors and check for fraudulent activity, such as fake websites impersonating them.

Key Metrics to Measure Cost-Effectiveness

Data-collection tools and software benefit a firm only if it tracks the return on its investment, so setting key performance indicators is important for measuring how efficient and effective a data-collection strategy is. One key metric is data accuracy. Bad data leads to bad decisions, and those decisions are costly: inaccurate data is estimated to cost the U.S. economy around $3.1 trillion a year, a clear incentive for reliable data-gathering methods. Organizations should audit their data sources and validate their data regularly.

Strategic Investments for Sustainable Data Gathering

Collecting data cheaply does not have to mean sacrificing quality or performance. With low-cost tools, automated processes, and solutions such as proxies, businesses can collect quality data without heavy costs, and smart strategies let organizations stretch their resources while preparing to compete. As data-driven decision-making grows in importance, companies must make their data-collection processes more efficient and sustainable. Balancing affordability and performance supports both financial stability and long-term sustainability.
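The data-accuracy metric mentioned under "Key Metrics to Measure Cost-Effectiveness" can be approximated with automated validation checks. A minimal sketch, assuming scraped competitor prices arrive as simple records; the PriceRecord schema and the currency list are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PriceRecord:
    """One scraped competitor price (hypothetical schema)."""
    sku: str
    price: Optional[float]
    currency: str


# Assumption: the markets you track; adjust as needed.
VALID_CURRENCIES = {"USD", "EUR", "GBP"}


def is_valid(record: PriceRecord) -> bool:
    """Basic sanity checks: SKU present, positive price, known currency."""
    return (
        bool(record.sku.strip())
        and record.price is not None
        and record.price > 0
        and record.currency in VALID_CURRENCIES
    )


def validity_rate(records: list[PriceRecord]) -> float:
    """Share of records that pass validation (0.0 for an empty batch)."""
    if not records:
        return 0.0
    return sum(is_valid(r) for r in records) / len(records)
```

Tracking this rate per source over time shows which scrapers are degrading. It only catches malformed records, though: a price that is well-formed but wrong will pass, so periodic spot checks against the source are still needed.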

Text

Data Marketplace Platform Market: A Deep Dive into Key Industry Players

The global data marketplace platform market size is expected to reach USD 5.73 billion by 2030, registering a CAGR of 25.2% from 2025 to 2030, according to a new report by Grand View Research, Inc. A data marketplace is a transactional platform that facilitates the buying and selling of different types of data, offering a unique user experience. Data marketplaces comprise cloud services where businesses or individuals can upload data to the cloud. Data types such as demographic, business intelligence, firmographic, and personal data are available in a data marketplace.
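The headline figures can be sanity-checked with compound-growth arithmetic. The excerpt does not state the 2025 starting size, so the sketch below back-calculates the value implied by USD 5.73 billion in 2030 at a 25.2% CAGR, assuming 2025 is the base year and growth compounds over five annual periods. The result is only as precise as the rounded figures it starts from.

```python
def implied_base(future_value: float, cagr: float, years: int) -> float:
    """Invert future = base * (1 + cagr) ** years to recover the starting value."""
    return future_value / (1 + cagr) ** years


def project(base: float, cagr: float, years: int) -> float:
    """Grow a starting value at a constant annual rate for `years` periods."""
    return base * (1 + cagr) ** years


# USD billion; assumes 2025 -> 2030 is five compounding years.
base_2025 = implied_base(5.73, 0.252, 5)
```

Under those assumptions the implied 2025 market is roughly USD 1.86 billion, since 1.252 raised to the fifth power is about 3.08.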

The data marketplace platform enables self-service data access while maintaining data quality, consistency, and security for both parties. Businesses and organizations are beginning to augment internal data sets with external data, propelling the growth of the data marketplace platform market. The platform gives buyers segmented, dependable, and relevant data that they can access through analytical tools and platforms to meet business needs.

For instance, the Microsoft Azure Marketplace provides data through a uniform interface, and developers can access that data via Microsoft Excel and PowerPivot. The gradual shift from conventional business practices to online platforms led to the establishment of efficient data marketplace services and solutions. A data marketplace organizes data flows and optimizes data sourcing, providing an analytics framework for tuning decision models to optimize and improve processes.

Several companies launched new platforms during the COVID-19 pandemic. For instance, in 2020 the data marketplace Aiisma launched the Aiisma App with an Aiihealth feature that combines the marketplace's location-sharing and health-mapping features. Users can anonymously and consensually share their behavioral data in exchange for rewards, and the application has proven helpful in creating a digital fence against the pandemic.

Growth is attributed to the emergence of big data, web scraping, and advancements in blockchain, which provide access to considerable data that can enhance performance and generate revenue. Web scraping is the automated extraction of data from websites; it automates data collection, unlocks web data sources, and supports data-driven decision-making. The increasing adoption of AI, data mesh, data management, and blockchain in data and analytics is driving the use of self-service analytics solutions.

The market is projected to witness significant growth attributed to the increasing adoption of Internet of Things (IoT) solutions and the deployment of cutting-edge technologies such as AI, AR/VR orchestration capabilities, and machine-to-machine (M2M) advancements in communications networks. Additionally, a growing emphasis on the usage of cloud services is anticipated to boost the growth.

Gather more insights about the market drivers, restraints, and growth of the Data Marketplace Platform Market

Data Marketplace Platform Market Report Highlights

• The platform segment dominated the overall market with a revenue share of more than 62% in 2024, and is expected to witness a CAGR of 24.3% during the forecast period.

• The B2B data marketplace platforms segment dominated the overall data marketplace platform industry, gaining a revenue share of over 58% in 2024. It is expected to witness a CAGR of 24.6% during the forecast period.

• The subscription segment dominated the overall market, gaining a revenue share of over 52% in 2024. It is anticipated to witness a CAGR of 27.2% during the forecast period.

• The large enterprises segment dominated the overall market with a revenue share of nearly 57% in 2024 and is expected to grow at a CAGR of 24.7% during the forecast period.

• The financial services segment dominated the overall market, gaining a revenue share of 18.5% in 2024. It is expected to witness a CAGR of 18.7% during the forecast period.

• North America data marketplace platform market led the overall data marketplace platform industry in 2024, with a share of more than 35%.

Data Marketplace Platform Market Segmentation

Grand View Research has segmented the global data marketplace platform market report based on component, type, revenue model, enterprise size, end-user, and region:

Data Marketplace Platform Component Outlook (Revenue, USD Million, 2017 - 2030)

• Platform

• Services

Data Marketplace Platform Type Outlook (Revenue, USD Million, 2017 - 2030)

• Personal Data Marketplace Platforms

• B2B Data Marketplace Platforms

• IoT Data Marketplace Platforms

Data Marketplace Platform Revenue Model Outlook (Revenue, USD Million, 2017 - 2030)

• Subscription

• Commission

• Paid features

• Others

Data Marketplace Platform Enterprise Size Outlook (Revenue, USD Million, 2017 - 2030)

• Large Enterprises

• SMEs

Data Marketplace Platform End-user Outlook (Revenue, USD Million, 2017 - 2030)

• Financial Services

• Advertising, Media & Entertainment

• Retail & CPG

• Healthcare & Life Sciences

• Technology

• Public Sector

• Manufacturing

• Other (Education, Automotive, Energy, Oil & Gas)

Data Marketplace Platform Regional Outlook (Revenue, USD Million, 2017 - 2030)

• North America

o U.S.

o Canada

o Mexico

• Europe

o U.K.

o Germany

o France

o Italy

• Asia Pacific

o China

o Japan

o India

o South Korea

o Australia

• Latin America

o Brazil

• Middle East & Africa

o UAE

o KSA

o South Africa

Order a free sample PDF of the Data Marketplace Platform Market Intelligence Study, published by Grand View Research.

#Data Marketplace Platform Market#Data Marketplace Platform Market Analysis#Data Marketplace Platform Market Report#Data Marketplace Platform Market Size#Data Marketplace Platform Market Share

Text

An 83-year-old short story by Borges portends a bleak future for the internet

MEMPHIS, Tenn.

Fiction writers have explored some possibilities.

In his 2019 novel “Fall,” science fiction author Neal Stephenson imagined a near future in which the internet still exists. But it has become so polluted with misinformation, disinformation and advertising that it is largely unusable.

Characters in Stephenson’s novel deal with this problem by subscribing to “edit streams” – human-selected news and information that can be considered trustworthy.

The drawback is that only the wealthy can afford such bespoke services, leaving most of humanity to consume low-quality, noncurated online content.

To some extent, this has already happened: Many news organizations, such as The New York Times and The Wall Street Journal, have placed their curated content behind paywalls. Meanwhile, misinformation festers on social media platforms like X and TikTok.

Stephenson’s record as a prognosticator has been impressive – he anticipated the metaverse in his 1992 novel “Snow Crash,” and a key plot element of his “The Diamond Age,” released in 1995, is an interactive primer that functions much like a chatbot.

On the surface, chatbots seem to provide a solution to the misinformation epidemic. By dispensing factual content, chatbots could supply alternative sources of high-quality information that aren’t cordoned off by paywalls.

Ironically, however, the output of these chatbots may represent the greatest danger to the future of the web – one that was hinted at decades earlier by Argentine writer Jorge Luis Borges.

The rise of the chatbots

Today, a significant fraction of the internet still consists of factual and ostensibly truthful content, such as articles and books that have been peer-reviewed, fact-checked or vetted in some way.

The developers of large language models, or LLMs – the engines that power bots like ChatGPT, Copilot and Gemini – have taken advantage of this resource.

To perform their magic, however, these models must ingest immense quantities of high-quality text for training purposes. A vast amount of verbiage has already been scraped from online sources and fed to the fledgling LLMs.

The problem is that the web, enormous as it is, is a finite resource. High-quality text that hasn’t already been strip-mined is becoming scarce, leading to what The New York Times called an “emerging crisis in content.”

This has forced companies like OpenAI to enter into agreements with publishers to obtain even more raw material for their ravenous bots. But according to one prediction, a shortage of additional high-quality training data may strike as early as 2026.

As the output of chatbots ends up online, these second-generation texts – complete with made-up information called “hallucinations,” as well as outright errors, such as suggestions to put glue on your pizza – will further pollute the web.

And if a chatbot hangs out with the wrong sort of people online, it can pick up their repellent views. Microsoft discovered this the hard way in 2016, when it had to pull the plug on Tay, a bot that started repeating racist and sexist content.

Over time, all of these issues could make online content even less trustworthy and less useful than it is today. In addition, LLMs that are fed a diet of low-calorie content may produce even more problematic output that also ends up on the web.

An infinite − and useless − library

It’s not hard to imagine a feedback loop that results in a continuous process of degradation as the bots feed on their own imperfect output.

A July 2024 paper published in Nature explored the consequences of training AI models on recursively generated data. It showed that “irreversible defects” can lead to “model collapse” in systems trained this way – much as a copy of an image, a copy of that copy, and so on will progressively lose fidelity to the original.
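The feedback loop behind “model collapse” can be illustrated with a toy simulation. This is not the Nature paper's code or experiment; it is a minimal sketch, assuming the “model” is nothing more than a Gaussian fitted to its training data. Each generation is trained only on samples drawn from the previous generation's fit, the way later models might train on earlier models' output.

```python
import random
import statistics


def recursive_fit(generations: int, samples_per_gen: int, seed: int = 0) -> list[float]:
    """Toy model-collapse loop: fit a Gaussian, sample from the fit, refit.

    Each generation sees only data produced by the previous generation's
    model, never the original distribution. Returns the fitted standard
    deviation after each generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # the original "human-written" data distribution
    history = []
    for _ in range(generations):
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)  # maximum-likelihood estimate of spread
        history.append(sigma)
    return history
```

Because each small sample tends to under-represent the tails, the fitted spread drifts downward and, over enough generations, typically collapses toward zero: the model ends up describing an ever narrower slice of the original distribution.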

How bad might this get?

Consider Borges’ 1941 short story “The Library of Babel.” Fifty years before computer scientist Tim Berners-Lee created the architecture for the web, Borges had already imagined an analog equivalent.

In his 3,000-word story, the writer imagines a world consisting of an enormous and possibly infinite number of hexagonal rooms. The bookshelves in each room hold uniform volumes that must, its inhabitants intuit, contain every possible permutation of letters in their alphabet.

Initially, this realization sparks joy: By definition, there must exist books that detail the future of humanity and the meaning of life.

The inhabitants search for such books, only to discover that the vast majority contain nothing but meaningless combinations of letters. The truth is out there – but so is every conceivable falsehood. And all of it is embedded in an inconceivably vast amount of gibberish.

Even after centuries of searching, only a few meaningful fragments are found. And even then, there is no way to determine whether these coherent texts are truths or lies. Hope turns into despair.

Will the web become so polluted that only the wealthy can afford accurate and reliable information? Or will an infinite number of chatbots produce so much tainted verbiage that finding accurate information online becomes like searching for a needle in a haystack?

The internet is often described as one of humanity’s great achievements. But like any other resource, it’s important to give serious thought to how it is maintained and managed – lest we end up confronting the dystopian vision imagined by Borges.

Roger J. Kreuz is Associate Dean and Professor of Psychology at the University of Memphis.

Text

Understand how web scraping and enterprise data management solutions can transform your business by turning collected data into insights that help you stay ahead of the competition.

For more information:

Text

How to Choose a Reliable Residential Proxy Service Provider: Top 5 Criteria for 2024

In the world of data collection, web scraping, and online privacy, residential proxies have become an essential tool. Unlike traditional data center proxies, residential proxies give users IP addresses assigned to real devices in residential areas, so their traffic appears to come from real people browsing the internet. As businesses, marketers, and individuals rely on residential proxies for tasks such as bypassing geo-restrictions, web scraping, and ad verification, selecting the right provider is crucial, and the choice can affect the success of your digital operations. To help you make an informed decision, this article outlines the key criteria for evaluating residential proxy providers, so you get the best performance, security, and value for your needs.

Understanding Residential Proxies

Before diving into the criteria for evaluating a provider, it's important to understand what residential proxies are and how they work. A residential proxy routes your traffic through an IP address that an Internet Service Provider (ISP) has assigned to a physical device such as a laptop, smartphone, or desktop computer. These proxies are often used for activities that require a high level of anonymity, like web scraping, ad verification, or accessing geo-restricted content.

Unlike data center proxies, which are generated from servers in data centers, residential proxies are harder to detect because they originate from real residential devices. This makes them ideal for users who need to mimic real user traffic or avoid being blocked by websites.

Key Criteria for Choosing a Residential Proxy Provider

2.1 IP Pool Size and Diversity

One of the most important factors to consider when choosing a residential proxy provider is the size and diversity of their IP pool. A large pool of IP addresses means that you can rotate your IPs across different locations, which is essential for web scraping and ad verification. If you're conducting activities like price comparison, competitor analysis, or local market research, you will benefit from proxies that cover a wide variety of countries and regions.

Providers offering unlimited residential proxies or a broad range of IPs across different geographical areas allow users to access localized content, ensuring that their activities appear more natural and less likely to be flagged. Look for a provider that offers rotating residential proxies, allowing IPs to change at regular intervals, or static residential proxies, which remain consistent over time for activities requiring stable connections.
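The difference between rotating and static residential proxies comes down to how an IP is chosen for each request. The sketch below assumes the client picks from a list of provider-issued endpoints (the hostnames are hypothetical) and rotates on a fixed time interval; many providers instead rotate IPs behind a single gateway address, in which case this logic lives on their side.

```python
import time
from typing import Optional

# Hypothetical residential proxy endpoints issued by a provider.
POOL = ["res-1.example:8000", "res-2.example:8000", "res-3.example:8000"]


def rotating_proxy(pool: list[str], interval_s: float, now: Optional[float] = None) -> str:
    """Switch to the next proxy every `interval_s` seconds (time-based rotation)."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    t = time.time() if now is None else now
    slot = int(t // interval_s)  # which rotation window we are in
    return pool[slot % len(pool)]


def static_proxy(pool: list[str], index: int = 0) -> str:
    """Always return the same proxy, for sessions that need a stable IP."""
    return pool[index]
```

A static proxy suits logged-in sessions that would look suspicious if the IP changed mid-session; rotation suits high-volume scraping where spreading requests matters more.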

2.2 Reliability and Uptime

A reliable proxy service is essential to ensure that your operations run smoothly. Proxy downtime can result in delays or even the blocking of your IP addresses, which can disrupt business operations. Therefore, always check for a provider that guarantees high uptime, ideally 99.9% or more.

Good residential proxy providers back their services with clear service-level agreements (SLAs) that outline uptime expectations. They should also have built-in redundancy to ensure that your proxies continue to perform, even if certain IP addresses are flagged or blocked.
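It helps to translate an uptime percentage into the downtime it still permits. A minimal sketch of the arithmetic, assuming a 365-day year and a 30-day month for simplicity; SLAs define their measurement windows differently, so the provider's own terms are what count.

```python
HOURS_PER_YEAR = 365 * 24                 # 8,760 hours, ignoring leap years
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60   # 43,200 minutes


def allowed_downtime(uptime: float, period: float) -> float:
    """Downtime permitted by an uptime guarantee, in the same units as `period`."""
    if not 0.0 <= uptime <= 1.0:
        raise ValueError("uptime must be a fraction between 0 and 1")
    return (1.0 - uptime) * period
```

At 99.9%, that works out to about 8.76 hours a year, or 43.2 minutes in a 30-day month.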

2.3 Speed and Performance

While residential proxies are generally slower than data center proxies, they should still meet performance expectations for most use cases. Factors such as latency, bandwidth, and connection speed are essential when choosing a provider. For web scraping, ad verification, or SEO monitoring, the speed of the proxies will determine how efficiently your tasks can be executed.

Make sure to test the provider’s proxies to see if they meet your performance requirements. If your task requires high-speed data transfers or real-time scraping, you should choose a provider that offers unlimited bandwidth or high-speed residential proxies.
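One way to run that test is to time a handful of requests through each candidate proxy. A minimal sketch using Python's standard urllib.request, assuming the provider gives you a plain HTTP(S) proxy URL (the address in the usage note is a placeholder). The median is used rather than the mean so that one unusually slow request does not dominate the result.

```python
import statistics
import time
import urllib.request
from typing import Callable


def make_proxied_fetch(proxy_url: str, timeout: float = 10.0) -> Callable[[str], bytes]:
    """Return a fetch function that routes HTTP(S) traffic through one proxy."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    )

    def fetch(url: str) -> bytes:
        with opener.open(url, timeout=timeout) as response:
            return response.read()

    return fetch


def median_latency(fetch: Callable[[str], bytes], url: str, trials: int = 5) -> float:
    """Time several requests and return the median wall-clock latency in seconds."""
    timings = []
    for _ in range(trials):
        start = time.perf_counter()
        fetch(url)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)
```

For example, `median_latency(make_proxied_fetch("http://proxy.example:8080"), "https://example.com/")` would time five requests through that proxy; running it for each provider you are trialing gives a like-for-like comparison.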

2.4 Security and Privacy

Security is a paramount consideration when selecting a residential proxy provider. Since residential proxies are often used to conceal the user's identity, it’s important that the provider respects your privacy and follows best practices for data security. Ensure the provider uses HTTPS encryption to protect your traffic from potential interception.

Also, avoid providers that collect or log your personal information. A reliable provider will have a transparent privacy policy that clearly outlines their practices and guarantees that your data will not be shared or sold to third parties. Look for providers that adhere to strong security standards and avoid any providers that have questionable practices in this area.

2.5 Customer Support

Responsive customer support can make or break your experience with a residential proxy provider. When using proxies, you may encounter technical issues, IP bans, or other obstacles that require prompt assistance. Choose a provider that offers 24/7 customer support across multiple channels like live chat, email, and phone support.

It’s always a good idea to test the provider’s customer support before committing to a plan. Reach out with a few questions to see how quickly and effectively they respond. A provider that offers excellent support can ensure your proxy service remains uninterrupted, helping you solve any issues in a timely manner.

2.6 Pricing and Plans

Cost is an important factor in any decision-making process. However, when it comes to residential proxies, the cheapest option is not always the best. Lower-cost services might come with limitations in terms of IP pool size, speed, or security. Make sure the pricing aligns with your business needs.

Some providers offer flexible pricing models, including pay-as-you-go or subscription-based plans, which can help businesses scale up or down based on usage. Additionally, look for free trial periods or money-back guarantees to test the service before committing long-term.

Providers that offer dedicated residential proxies may charge higher fees, but they can be ideal for activities that require high consistency and performance. On the other hand, rotating residential proxies can be more cost-effective for tasks like web scraping where IP rotation is necessary.

2.7 Reputation and Reviews

One of the best ways to evaluate a residential proxy provider is by checking user reviews and feedback from other customers. Reviews on independent forums, third-party review sites, or social media platforms can give you an honest perspective on the provider’s strengths and weaknesses.

Pay attention to what other users are saying about the provider’s IP quality, customer support, uptime, and performance. Negative reviews about slow or unreliable proxies, poor customer service, or hidden fees are red flags that should not be ignored.

However, be cautious about providers with overly positive reviews, as some may engage in review manipulation to boost their reputation. Consider multiple sources of feedback to get a well-rounded view of the provider’s reliability.

2.8 Trial Periods and Money-Back Guarantees

Many reliable residential proxy service providers offer free trials or money-back guarantees to give users the opportunity to test the service before making a full commitment. Taking advantage of these offers allows you to evaluate the quality of the proxies, their speed, and their compatibility with your intended use case.

For instance, some providers offer a residential proxy trial that allows you to test a certain number of IPs for free or at a reduced rate. This is an excellent way to assess the provider’s performance and see if they meet your needs before making a long-term financial commitment.

Red Flags to Watch Out For

While many proxy providers are legitimate, there are a few red flags that might indicate a less trustworthy service:

- Vague terms of service: If the provider doesn’t have clear terms of service or a privacy policy, it may be a sign of poor practices.
- Unrealistically low pricing: Extremely low pricing could mean that the provider is cutting corners in terms of service quality or security.
- Poor customer support: If the provider is unresponsive to inquiries or doesn’t offer adequate support, it’s best to avoid them.
- Limited geographic coverage: Some providers may not offer the regional diversity you need for global operations.

Conclusion

Selecting a reliable residential proxy service provider is a critical decision for any business or individual using proxies for activities like web scraping, ad verification, or geo-restricted content access. The key factors to consider include IP pool size, geographic coverage, speed and performance, security and privacy, customer support, and pricing.

By carefully evaluating these factors and testing out different providers, you can ensure that you select the best provider for your needs. Always watch out for red flags and take advantage of trial periods to test the service before committing to a long-term plan. With the right residential proxy provider, you can ensure the success of your digital operations while maintaining the highest levels of security, performance, and reliability.


Bot Management in Japan and China: A Market Overview and Forecast

The escalating sophistication of cyber threats has made bot management a critical aspect of cybersecurity in Japan and China. These markets are witnessing rapid technological advancements and a growing reliance on digital platforms, making them highly susceptible to bot attacks. From credential stuffing and web scraping to Distributed Denial of Service (DDoS) attacks, bots have become a significant concern for businesses across various industries. Organizations are increasingly adopting bot management solutions to safeguard their digital assets and ensure seamless user experiences.

The Growing Need for Bot Management

In Japan, the rise of e-commerce, financial services, and online gaming platforms has increased the need for robust bot management solutions. Similarly, China’s digital ecosystem, driven by its massive e-commerce market and fintech innovations, is a hotspot for bot activity. The prevalence of malicious bots has led to significant financial and reputational damages in both countries, prompting organizations to prioritize investments in advanced bot management tools.

Bot management solutions are designed to detect, analyze, and mitigate bot traffic. These tools leverage technologies such as artificial intelligence (AI), machine learning (ML), and behavioral analysis to distinguish between legitimate and malicious traffic. As businesses in Japan and China accelerate their digital transformation initiatives, the demand for sophisticated bot management solutions is expected to surge.
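Commercial bot management products combine many signals, often scored by ML models. The sketch below illustrates just one much simpler behavioral signal: it flags a client whose request rate exceeds a threshold within a sliding time window. This is a rule-based heuristic, not the AI/ML approach described above. The class name, the default threshold (20 requests per 10 seconds), and keying clients by an ID string such as an IP address are all assumptions for illustration.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class RateHeuristic:
    """Flag a client as bot-like if it sends more than max_requests within window_s seconds."""

    def __init__(self, max_requests: int = 20, window_s: float = 10.0) -> None:
        self.max_requests = max_requests
        self.window_s = window_s
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, client_id: str, timestamp: float) -> bool:
        """Record one request; return True if the client now looks automated."""
        hits = self._hits[client_id]
        hits.append(timestamp)
        # Drop requests that have fallen out of the sliding window.
        while hits and timestamp - hits[0] > self.window_s:
            hits.popleft()
        return len(hits) > self.max_requests


if __name__ == "__main__":
    detector = RateHeuristic(max_requests=5, window_s=1.0)
    # A scripted client firing every 50 ms vs. a person clicking every 2 s.
    for i in range(10):
        bot_flag = detector.observe("203.0.113.7", i * 0.05)
        human_flag = detector.observe("198.51.100.4", i * 2.0)
    print("bot flagged:", bot_flag, "| human flagged:", human_flag)
```

Rate alone is easy to evade, for example by spreading requests across many residential IPs. That is why production tools layer it with signals such as browser fingerprints, mouse and keystroke patterns, and IP reputation.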

Market Drivers and Trends

Key drivers for the bot management market in Japan and China include the increasing volume of cyberattacks, stringent regulatory frameworks, and the need for superior customer experiences. Companies like QKS Group are at the forefront of providing innovative bot management solutions tailored to these markets. Their offerings emphasize cutting-edge analytics, real-time monitoring, and user-friendly interfaces to address the unique cybersecurity challenges faced by businesses in these regions.

In Japan, government initiatives like the Cybersecurity Basic Act and awareness programs aimed at SMEs are expected to fuel market growth. Similarly, China’s strict data protection regulations and its growing emphasis on securing digital infrastructure are creating a favorable environment for bot management solutions.

Market Forecast: Bot Management, 2024-2028, Japan

The bot management market in Japan is projected to grow at a compound annual growth rate (CAGR) of 14.2% between 2024 and 2028. This growth is driven by increasing adoption in sectors such as e-commerce, banking, and healthcare. Companies like QKS Group are strategically expanding their operations in Japan, capitalizing on the country’s demand for advanced cybersecurity solutions. By 2028, the Japanese market is expected to reach a valuation of $600 million, highlighting its robust growth trajectory.
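You can sanity-check forecast figures like these with the standard CAGR relation V_end = V_start × (1 + r)^n. Here is a minimal sketch, assuming 2024 is the base year, so 2024 to 2028 spans four compounding years. The function names are hypothetical, and the inputs are simply the figures quoted above, not independently verified data.

```python
from typing import List


def implied_base_value(final_value: float, cagr: float, years: int) -> float:
    """Invert V_final = V_base * (1 + cagr) ** years to recover V_base."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return final_value / (1.0 + cagr) ** years


def project(base_value: float, cagr: float, years: int) -> List[float]:
    """Year-by-year values compounding at a constant CAGR (index 0 = base year)."""
    return [base_value * (1.0 + cagr) ** t for t in range(years + 1)]


if __name__ == "__main__":
    # Figures quoted in the post: Japan, 14.2% CAGR, about $600M by 2028.
    # Assumption: 2024 is the base year, so 2024 -> 2028 is 4 compounding years.
    base_2024 = implied_base_value(600.0, 0.142, 4)
    print(f"Implied 2024 market size: ${base_2024:.1f}M")
    for year, value in zip(range(2024, 2029), project(base_2024, 0.142, 4)):
        print(year, round(value, 1))
```

Under those assumptions, the quoted 14.2% CAGR and $600 million 2028 value imply a 2024 market of roughly $353 million.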

Japan's bot management market is poised for growth, driven by advancements in AI and machine learning, enabling precise bot attack mitigation. Growing emphasis on comprehensive cybersecurity strategies and collaboration between public and private sectors positions Japan as a leader in securing its digital economy.

Market Forecast: Bot Management, 2024-2028, China

China’s bot management market is expected to exhibit even stronger growth, with a forecasted CAGR of 17.5% during the same period. The market is anticipated to surpass $1.2 billion by 2028, driven by the increasing digitization of industries and a proactive regulatory stance. QKS Group is poised to capture significant market share in China by leveraging its expertise and localized strategies, addressing the unique needs of Chinese businesses.
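Applying the standard CAGR relation V_2028 = V_2024 (1 + r)^4 to the China figures gives the implied starting size. This assumes 2024 is the base year, with four compounding years to 2028, and uses only the numbers quoted above:

```latex
V_{2024} = \frac{V_{2028}}{(1 + r)^{4}}
         = \frac{\$1.2\ \text{billion}}{(1.175)^{4}}
         \approx \frac{\$1.2\ \text{billion}}{1.906}
         \approx \$0.63\ \text{billion}
```

Because the forecast says the market will surpass $1.2 billion, this is a rough lower bound. Under these assumptions, a market growing 17.5% a year that ends above $1.2 billion in 2028 would have been worth more than about $630 million in 2024.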

China's bot management market is set for strong growth, driven by AI-powered solutions and a dynamic digital landscape. Rising cybersecurity priorities, public-private collaboration, and investment will solidify China's role in the global market.

Conclusion

The bot management markets in Japan and China are on a growth trajectory, fueled by rising cyber threats and increasing investment in digital technologies. Companies like QKS Group are playing a pivotal role in shaping these markets by providing innovative and scalable solutions. With favorable market dynamics and growing awareness, the bot management landscape in Japan and China is poised for transformative growth between 2024 and 2028.


Web Crawling Tools: Optimize Your Data Strategy in 2024

Looking for powerful web crawling tools for 2024? Open-source tools are free, customizable, and highly effective for scraping and data collection. Explore our top picks that help you crawl websites efficiently, whether you're a beginner or an expert.
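To make the idea concrete, here is a minimal breadth-first crawler built only on Python's standard library. It is a sketch, not one of the tools in the linked list. It stays on the start URL's host, skips URLs it has already seen, strips #fragments, and stops after `max_pages`. The `fetch` callable is injected so the crawl logic can be tested offline, and `fetch_with_urllib` is one possible real fetcher. All function names are hypothetical. The sketch does not check robots.txt or throttle requests, both of which a production crawler should do.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collect the href values of <a> tags in one HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url: str, fetch: Callable[[str], Optional[str]], max_pages: int = 50) -> List[str]:
    """Breadth-first crawl limited to start_url's host; returns URLs in visit order."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited: List[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        html = fetch(url)
        if html is None:  # fetch failed or page was not HTML
            continue
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so /b and /b#top dedupe.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


def fetch_with_urllib(url: str) -> Optional[str]:
    """One possible real fetcher (stdlib only; no robots.txt or rate limiting)."""
    from urllib.request import Request, urlopen

    request = Request(url, headers={"User-Agent": "example-crawler/0.1"})
    try:
        with urlopen(request, timeout=10) as response:
            if "text/html" not in response.headers.get("Content-Type", ""):
                return None
            return response.read().decode("utf-8", errors="replace")
    except OSError:  # URLError, HTTPError and socket timeouts are OSError subclasses
        return None


if __name__ == "__main__":
    # Offline demo: a tiny hypothetical site held in a dict.
    site = {
        "https://example.com/": '<a href="/a">A</a> <a href="https://other.org/">ext</a>',
        "https://example.com/a": '<a href="/b#top">B</a> <a href="/">home</a>',
        "https://example.com/b": "<p>no links</p>",
    }
    print(crawl("https://example.com/", site.get))
```

Breadth-first order visits pages closest to the start URL first, which is usually what you want when `max_pages` caps the crawl.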

Contact us to learn more: https://outsourcebigdata.com/top-10-open-source-web-crawling-tools-to-watch-out-in-2024/

About AIMLEAP

Outsource Bigdata is a division of Aimleap. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’. With a special focus on AI and automation, we have built several AI & ML solutions, AI-driven web scraping solutions, AI-data labeling, AI-Data-Hub, and self-service BI solutions. Since starting in 2012, we have delivered IT & digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and more.

-ISO 9001:2015 and ISO/IEC 27001:2013 certified
-Served 750+ customers
-11+ years of industry experience
-98% client retention
-Great Place to Work® certified
-Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI-driven web scraping & workflow automation platform
APISCRAPY is an AI-driven web scraping and automation platform that converts any web data into ready-to-use data. The platform can extract data from websites, process it, automate workflows, classify data, and integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI-augmented annotation & labeling solution
AI-Labeler is an AI-augmented data annotation platform that combines artificial intelligence with human involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services
An on-demand AI data hub for curated, pre-annotated, and pre-classified data, allowing enterprises to obtain high-quality data easily and efficiently and use it to train and develop AI models.

PRICESCRAPY: AI-enabled real-time pricing solution
An AI- and automation-driven pricing solution that provides real-time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI-driven data API solution hub
APIKART is a data API hub that allows businesses and developers to access and integrate large volumes of data from various sources through APIs, so companies can leverage that data and integrate the APIs into their own systems and applications.

Locations:
USA: 1-30235 14656
Canada: +1 4378 370 063
India: +91 810 527 1615
Australia: +61 402 576 615
Email: [email protected]


Why Flutter is the Future of Cross-Platform Development: Insights for 2024

Discover why Flutter is trending as a framework for cross-platform app development. Learn about its benefits and what sets it apart from other frameworks, and get insights from successful case studies.

In today’s rapidly evolving digital environment, businesses aim to reach a broad audience across several platforms, especially Android and iOS. Building a separate app for each platform can take a lot of time and money.

Cross-platform development frameworks offer a solution, allowing developers to create applications that run on multiple platforms from a single codebase. This significantly reduces development time and cost while helping ensure a consistent user experience.

What Makes Cross-Platform Development a Perfect Choice in 2024

Time and Cost Saver

With one codebase, you can build the app much faster and with fewer resources than building a separate app for each platform. In short, you deliver apps sooner and potentially pay less.

Consistency

Cross-platform development gives your app the same look and feel on iPhones and Android devices. This consistency strengthens brand recognition and makes the app easy to use on any device.

Timely Updates

Maintaining one codebase is much easier than maintaining a separate one for each platform. This means faster bug fixes and smoother updates for your application.

Next, we’ll explore what makes Flutter a strong choice for cross-platform development, and how it can help you build a mobile app that reaches a wider audience with minimal effort and cost.

What is Flutter? Benefits, Features, Concepts and Tools

Flutter is a powerful, open-source UI toolkit developed by Google that lets developers build high-quality, natively compiled apps for both iOS and Android from a single codebase. Flutter can also target web and desktop, so Flutter application development services typically build web, mobile, and desktop apps from one codebase. Some of its features include:

Flutter apps are written in Dart, a modern programming language that is easy to learn. Its clear syntax and fast development cycle make it well suited to beginners.

To build a high-performance Flutter app, a reputable Flutter app development company leverages Skia, the 2D graphics engine Flutter has long used for rendering, to deliver a fast, smooth experience.

Flutter comes with a large library of customizable widgets covering all kinds of UI elements. This library lets developers build attractive, engaging app interfaces without writing lots of code from scratch.

Flutter’s hot reload feature is a major productivity boost. Hot reload lets developers see their code changes reflected in the running app almost immediately, without restarting it, which makes development considerably faster and more manageable.

Key Flutter Layout Concepts

The primary layout concepts in Flutter are:

1. Row and Column

Row and Column are the widget classes Flutter uses to arrange other widgets. Widgets inside a Column or Row are referred to as children, while the Column or Row itself is known as the parent. A Row arranges its children horizontally, whereas a Column arranges its children vertically.

2. mainAxisSize

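Column and Row have distinct main axes: the main axis of a Column is vertical, while that of a Row is horizontal. The `mainAxisSize` property determines how much space a Column or Row will occupy along its main axis. With MainAxisSize.max (the default), it takes up all the available space; with MainAxisSize.min, it shrinks to fit its children.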
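As a rough model, not Flutter code, the sketch below shows the difference between the two MainAxisSize values. It assumes the parent gives the Row or Column a bounded (finite) maximum extent. The function name and the `"max"`/`"min"` strings are hypothetical stand-ins for MainAxisSize.max and MainAxisSize.min.

```python
from typing import List, Tuple


def main_axis_layout(child_sizes: List[float], max_extent: float, size: str = "max") -> Tuple[float, float]:
    """Return (parent extent, leftover free space) along the main axis.

    size="max" models MainAxisSize.max: the parent fills max_extent.
    size="min" models MainAxisSize.min: the parent shrink-wraps its children.
    Assumes max_extent is finite (bounded constraints).
    """
    used = sum(child_sizes)
    if size == "max":
        extent = max_extent
    elif size == "min":
        extent = min(used, max_extent)
    else:
        raise ValueError(f"unknown size mode: {size!r}")
    # Free space is what mainAxisAlignment gets to distribute; it is zero for "min".
    return extent, max(extent - used, 0)


if __name__ == "__main__":
    for mode in ("max", "min"):
        print(mode, main_axis_layout([50, 50, 100], 400, mode))
```

With three children totaling 200 logical pixels in a 400-pixel space, "max" leaves 200 pixels of free space and "min" leaves none.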

3. mainAxisAlignment

When mainAxisSize is set to MainAxisSize.max, a Column or Row can have extra space left over after laying out its children. The mainAxisAlignment property then determines how the children are positioned within this extra space.
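The spacing rule behind each MainAxisAlignment value reduces to simple arithmetic on the leftover space. This Python sketch is a simplified model, not Flutter's API. It assumes a left-to-right Row (or top-to-bottom Column) whose children fit within the available extent, and it uses the value names start, end, center, spaceBetween, spaceAround, and spaceEvenly as plain strings. The function name is hypothetical.

```python
from typing import List


def main_axis_offsets(child_sizes: List[float], extent: float, alignment: str = "start") -> List[float]:
    """Return each child's leading offset along the main axis."""
    n = len(child_sizes)
    free = max(extent - sum(child_sizes), 0.0)
    leading, between = 0.0, 0.0
    if alignment == "start":
        pass  # pack children at the start; all free space trails
    elif alignment == "end":
        leading = free  # all free space goes before the first child
    elif alignment == "center":
        leading = free / 2
    elif alignment == "spaceBetween":
        # Equal gaps between children, none at the ends.
        between = free / (n - 1) if n > 1 else 0.0
    elif alignment == "spaceAround":
        # Equal gaps between children, half a gap at each end.
        between = free / n if n > 0 else 0.0
        leading = between / 2
    elif alignment == "spaceEvenly":
        # Equal gaps between children and at both ends.
        between = free / (n + 1) if n > 0 else 0.0
        leading = between
    else:
        raise ValueError(f"unknown alignment: {alignment!r}")

    offsets, position = [], leading
    for size in child_sizes:
        offsets.append(position)
        position += size + between
    return offsets


if __name__ == "__main__":
    for mode in ("start", "end", "center", "spaceBetween", "spaceAround", "spaceEvenly"):
        print(mode, main_axis_offsets([50, 50], 400, mode))
```

When there is no free space, as with MainAxisSize.min, every mode produces the same offsets. This is why mainAxisAlignment only has a visible effect when the Row or Column is larger than its children.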

4. crossAxisAlignment

The crossAxisAlignment property decides how Columns and Rows position their widgets along the cross-axis. For a Column, the cross axis is horizontal, while for a Row, it is vertical.
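The cross-axis rules can be modeled the same way. This is a simplified Python sketch, not Flutter's API. It assumes default text and vertical directions, so "start" means the top of a Row or the left of a Column. It models only start, end, center (the default for Row and Column), and stretch; baseline alignment is omitted. The function name is hypothetical, and it returns each child's offset and size on the cross axis.

```python
from typing import Tuple


def cross_axis_placement(child_extent: float, cross_extent: float, alignment: str = "center") -> Tuple[float, float]:
    """Return (offset, size) of one child along the parent's cross axis."""
    if alignment == "start":
        return 0.0, child_extent
    if alignment == "end":
        return cross_extent - child_extent, child_extent
    if alignment == "center":
        return (cross_extent - child_extent) / 2, child_extent
    if alignment == "stretch":
        # The child is forced to fill the whole cross axis.
        return 0.0, cross_extent
    raise ValueError(f"unknown alignment: {alignment!r}")


if __name__ == "__main__":
    # A 40-pixel-tall child inside a 100-pixel-tall Row.
    for mode in ("start", "end", "center", "stretch"):
        print(mode, cross_axis_placement(40, 100, mode))
```

Note that stretch is the only mode that changes the child's size rather than just its position.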

5. Flexible and Expanded Widgets