#activation function in neural network


Text

#activation function in neural network#artificial neural network in machine learning#neural network in machine learning#neural networks and deep learning#activation function

1 note


Text

Day 12 _ Activation Function, Hidden Layer and non linearity

Understanding Non-Linearity in Neural Networks – Part 1

Non-linearity in neural networks is essential for solving complex tasks where the data is not linearly separable. This blog post will explain why hidden layers and non-linear activation functions are necessary, using the XOR problem as an example. What is Non-Linearity? Non-linearity…
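The XOR point in the excerpt above can be illustrated with a minimal sketch. This is not code from the linked post: the function names and the hand-picked weights are assumptions chosen for illustration, and nothing here is trained. It shows a tiny network with two hidden units computing XOR when the hidden layer uses ReLU, and the same weights collapsing into a single linear function (which cannot match XOR) when the activation is removed.

```python
def relu(z):
    """Rectified linear unit: a simple non-linear activation."""
    return max(0.0, z)


def identity(z):
    """No activation: the hidden layer stays purely linear."""
    return z


def tiny_network(x1, x2, activation):
    """2-2-1 network with hand-picked (not trained) illustrative weights."""
    h1 = activation(x1 + x2)        # hidden unit 1
    h2 = activation(x1 + x2 - 1.0)  # hidden unit 2
    return h1 - 2.0 * h2            # linear output unit


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_targets = [0, 1, 1, 0]

# With ReLU in the hidden layer the outputs match XOR exactly.
with_relu = [tiny_network(a, b, relu) for a, b in inputs]

# Without an activation the network reduces to y = 2 - (x1 + x2),
# a straight line in the inputs, so it cannot reproduce XOR.
without_activation = [tiny_network(a, b, identity) for a, b in inputs]

print("targets:           ", xor_targets)
print("with ReLU:         ", with_relu)
print("without activation:", without_activation)
```

The hidden layer alone is not enough: stacking linear layers only yields another linear map, so it is the non-linear activation between the layers that lets the network separate the XOR cases.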

#activation function#artificial intelligence#deep learning#hidden layers#hidden layers in neural network#linear equation#machine learning#mathematic#neural network#non linearity#non linearity in deep learning

0 notes

Text

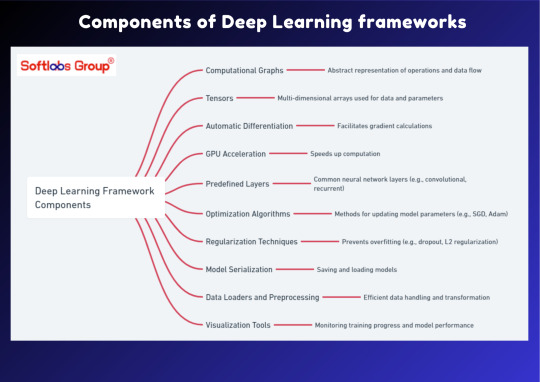

Discover the core components of Deep Learning frameworks with our informative guide. This simplified overview breaks down the essential elements that make up DL frameworks, crucial for building and deploying advanced neural network models. Perfect for those interested in exploring the world of artificial intelligence. Stay informed with Softlabs Group for more insightful content on cutting-edge technologies.

0 notes

Text

dr. jacobo grinberg, the scientist who went missing for researching shifting 🗝️

the man, the myth, the legend. being a keen enthusiast of the human brain from a young age, dr. jacobo grinberg was a mexican neurophysiologist and psychologist who delved into the depths of human consciousness, meditation, mexican shamanism and aimed to establish links between science and spirituality.

grinberg's theories and research can be tied to reality shifting, seeing as he explored the fusion of quantum physics and occultism. being not only heavily established in the field of psychology but also a prolific writer, he wrote about 50 books on such topics. he was a firm believer in the idea that human consciousness possesses hidden and powerful abilities like telepathy, psychic power and astral projection.

the unfortunate loss of his mother to a brain tumour when he was only twelve not only fuelled his interest in the human brain but also pushed him to study it on a deeper level, making it his life’s aim.

he went on to earn a phd in psychophysiology, established his own laboratory and even founded the instituto nacional para el estudio de la conciencia (the national institute for the study of consciousness).

despite sharing groundbreaking and revolutionary ideas, his proposals were rejected by the scientific community due to the inclusion of shamanism and metaphysical aspects. on december 8th, 1994, he went missing just before his 48th birthday. grinberg vanished without a trace, leaving people thoroughly perplexed about his whereabouts. some believe he was silenced, while others believe he discovered something so powerful and revolutionary that changed the entire course of reality, or well, his reality.

grinberg's work was heavily influenced by karl pribram and david bohm's contributions to the holographic theory of consciousness, which suggests that reality functions the same way as a hologram does. meaning, reality exists as a vast, interconnected macrocosm. it even suggests that all realities exist among this holographic structure.

lastly, it also proposes that the brain does not perceive reality, but rather actively creates it by tuning into different frequencies of existence.

this not only proves the multiverse theory (infinite realities exist), but also the consciousness theory (we don’t observe reality, but instead create it).

grinberg’s most notable contribution was the syntergic theory, which states that “there exists a ‘syntergic’ field, a universal, non-local field of consciousness that interacts with the human brain.” - david franco.

this theory also stated that

the syntergic field is a fundamental and foundational layer of reality that contains all possible experiences and states of consciousness.

the brain doesn’t generate consciousness, it instead acts as a receiver and its neural networks collapse the syntergic field into a coherent and structured reality.

reality is created, not observed.

we can access different variations of reality (which is the very essence of shifting realities)

the syntergic theory is even in congruence with the universal consciousness theory (all minds are interconnected as a part of a whole, entire consciousness that encompasses all living beings in the universe).

grinberg concluded that

all minds are connected through the syntergic field

this field can be accessed and manipulated by metaphysical and spiritual practices, altered states of consciousness and deep meditation.

in conclusion, the syntergic theory proposes that our consciousness is not a mere byproduct of the brain, but rather a fundamental force of the universe.

grinberg was far ahead of his time, and even 31 years after his disappearance, the true nature of reality remains a mystery. regardless, the syntergic theory helps provide insight and a new perspective on how we access and influence reality.

summary of grinberg’s findings:

the brain constructs reality

other realities exist and can be experienced

other states of consciousness exist and can be experienced

consciousness is not limited

all minds are connected through the syntergic field

shamanic, spiritual, metaphysical and meditative practices can alter and influence our perception of reality.

some of grinberg's works that can be associated with shifting:

el cerebro consciente

la creación de la experiencia

teoría sintérgica

#reality shifting#shifting#shifting realities#desired reality#shifting motivation#shifting blog#shifting community#shifting antis dni#shiftblr#shifter#shifting to hogwarts#loassumption#loa tumblr#manifesting#robotic affirming#shiftingrealities#anti shifters dni#quantum jumping#quantum physics#shifting advice#neville goddard

2K notes


Text

The Brain’s Natural Ability to Shift Realities

Shifting is easy because it taps into the brain’s natural ability to exist across different realities. The brain is highly adaptable due to neuroplasticity, allowing it to rewire itself and form new neural pathways. When you visualize your Desired Reality (DR), your brain activates networks like the default mode network (DMN), which governs imagination and daydreaming, making the experience feel as real as a memory. This activates your episodic memory systems, creating simulations of experiences that feel tangible.

The reticular activating system (RAS) also plays a key role. It filters and prioritizes relevant information, so when you focus your intention on your DR, your brain tunes into that reality, shifting your awareness there.

The most powerful part of shifting, though, is that the brain doesn’t have to force anything. It already knows how to move between realities. It’s a natural function of the mind to adjust focus and intention, making the process of shifting feel effortless. By simply engaging with your DR, you’re allowing your brain to do what it already knows how to do: move between different realities, seamlessly and without resistance.

#reality shifting#shiftinconsciousness#shiftblr#shifters#shifting community#shifting motivation#shifting blog#shifting diary#shiftingrealities#shifting script#shifting is easy#desired reality#shifting realities#shifting reality#reality shift#current reality#3d reality#shifting is real#i love shifting#shifting ideas#law of assumption#shifting antis dni#shifting doubts#shifting help#loassumption#shifting consciousness#void state#manifesting is easy#manifesting advice#manifestation advice

162 notes


Text

Today, as defined by the IASP, nociplastic pain is “pain that arises from altered nociception despite no clear evidence of actual or threatened tissue damage causing the activation of peripheral nociceptors or evidence for disease or lesion of the somatosensory system causing the pain.”

It reflects sensitization of the CNS due to neural network dysregulation and multiple neurobiologic, psychosocial, and genetic factors. Functional brain imaging studies in patients who have chronic pain with nociplastic features have shown alterations in the CNS and abnormal brain connectivity between brain regions, notably increased functional connectivity between regions of the default mode, salience, and sensorimotor networks. (Enmeshment of these networks has been observed in patients with osteoarthritis, RA, and ankylosing spondylitis, according to Clauw.)

In addition to having excess ascending CNS nociceptive signaling, these patients also have decreased descending inhibitory signaling...

74 notes


Text

Hey chat! I decided that I don't care if you care or not, I'll post it anyway. Because I'm a scientist nerd, and a TF2 fan.

So here you go, my theory on how the respawn machine actually works.

⚠️It'll be a lot of reading and you need half of a braincell to understand it.

The Respawn Machine can recreate a body within minutes, complete with all previous memories and personality, as if the person never died. We all know this, but I doubt many have thought about how it actually works.

Of course, such a thing is impossible in real life (at least for now), but we’re talking about a game where there’s magic and mutant bread, so it’s all good.

But being an autistic dork, I couldn’t help but start searching for logical and scientific explanations for how this machine might work. How the hell does it actually function? So, I spent hours of my life on yet another useless big brain time.

In the context of the Respawn Machine, the idea is that the technology can instantly create a new mercenary body, identical to the original. This body must be ready for use immediately after the previous one’s death. To achieve this, the cloning process, which in real life takes months or even years, would need to be significantly accelerated. This means the machine is probably powered by a freaking nuclear reactor, or maybe even Australium.

My theory is that this machine is essentially a massive 3D printer capable of printing biological tissues. But how? You see, even today, people can (or are trying to) recreate creatures that lived millions of years ago using DNA. By using the mercenary’s DNA, which was previously loaded into the system, the machine could recreate a perfect copy.

However, this method likely wouldn’t be able to perfectly recreate the exact personality and all the memories from the previous body. I believe the answer lies in neuroscience.

For the Respawn Machine to restore the mercenary’s consciousness and memories, it would need to be capable of recording and preserving the complete structure of the brain, including all neural connections, synapses, and activity that encode personality and memory. This process is known as brain mapping. After creating a brain map, this data could be stored digitally and then transferred to the new body.

“Okay, but how would you transfer memories that are dated right up until the moment of death? The mercenaries clearly remember everything about their previous death.”

Well, I have a theory about that too!

Neural interfaces! Inside each mercenary’s head could be an implant (a nanodevice) that reads brain activity before death and updates a digital copy of the memories. This system operates at the synaptic level, recording changes in the structure of neurons that occur as memories are formed. After death, this data could be instantly transferred to the new body via a quantum network.

Once the data is uploaded and the brain is synchronized with the new body, the mercenary’s consciousness "awakens." Ideally, the mercenary wouldn’t notice any break in consciousness and would remember everything that happened right up to the moment of death.

However… there are also questions regarding potential negative consequences.

Can the transfer of consciousness really preserve all aspects of personality, or is something inevitably lost in the process?

Unfortunately, nothing is perfect, and there’s a chance that some small memories might be lost—like those buried in the subconscious. Or the person’s personality might become distorted. Maybe that’s why they’re all crazy?

How far does the implant’s range extend? Does the distance between the mercenary and the machine affect the accuracy of data transfer?

My theory is that yes, it does. The greater the distance, the fewer memories are retained.

Could there be deviations in the creation of the body itself?

Yes, there could be. We saw this in "Emesis Blue," which led to a complete disaster. But let’s assume everything is fine, and the only deviations are at most an extra finger (or organ—not critical, Medic would only be happy about that).

Well, these are just my theories and nothing more. I’m not a scientist; I’m an amateur enthusiast with a lot of time on my hands. My theories have many holes that I can’t yet fill due to a lack of information.

#tf2#team fortress 2#canis says#respawn machine#i got nothing better to do sorry#i like brainstorming

105 notes


Note

are hive men sentient in the same fashion we are? Do they experience the full range of subjectivity the modern homo sapiens does or was some of that excluded from their creation? It feels like it would have to in order to make them function well in an eusocial context. I'd be curious if you could expand on that

This is a question i've left a bit ambiguous for myself, because there is a wide range of answers.

In one version of the hive mind, a mass of human brains could be linked to become components of one huge brain (like the components of our own brains), with the individual completely subsumed into the whole. This angle is one that I want to play with later, as a biotech answer to making supercomputers...

For our martians, and the crew of the Hale-Bopp dyson Tree, I imagine there would be some of this at play, since my presumed method of communication in the hive is some sort of radio-telepathy - low-fi neural network stuff. Individual hive-men might have some agency of their own, though, in how they reacted to specific stimuli.

But you're right in that their subjective experience would be a lot different than ours, in part because they'd always be sharing in the data from the rest of the hive. There'd be little individual internal life for a hive member, since they'd always be picking up feelings and thoughts from their hive-mates.

In the story i first wanted to make about the hive-men, a big part of it was about an earthman finding himself inducted into the hive, and having the neural biotech grow inside him... and finding that all his individual neuroses, worries, etc, were obliterated as he found the sweet release of ego death, losing himself into the hive. Like when people surrender to a cult, basically, but this cult doesn't have a tyrannical leader, and operates as a super-organism... which to me seems a little more appealing.

Now, because this is science fiction, I like to think that the hive, as a collective mind, would be a bit more sophisticated than an ant colony, and perhaps capable of more abstract thought - daydreaming great symphonies, pondering philosophical and scientific problems with the brain power of the whole hive, that sort of thing. Not just reactive, but pro-active. But the rich, subjective internal life that is the heritage of all humans might not be as useful, or as possible, when you're sharing your thoughts and brainpower with ten thousand other people.

As a final little thought, I do imagine that these hive men would have begun as a voluntary experiment by scientist types, somewhere out in the rings of saturn, far from the prying eyes of earth. Perhaps starting as a more traditional cultish organization, but I'd like to think that the practical problems of living as a super-organism in deep space would temper the more extreme elements.

(but another story idea i would like to flesh out, one day, would be the bad side of other hive-people - non-biotech hive cults prone to mass religious mania, etc. There's a lot of room to play in...)

30 notes


Text

Neuroscience in Manifestation: Creating Reality

The human brain is a complex machine that interprets electrical and chemical signals to create our perception of the world. All stimuli we receive—visual, auditory, tactile—are processed by the brain, which converts them into a coherent experience. This process is so sophisticated that we often forget that we are not experiencing the world directly but rather an interpretation created by our brain.

EEGs: Mapping Brain Activity - Electroencephalography (EEG) is a tool that measures the brain's electrical activity through electrodes placed on the scalp. EEG reveals different brain wave patterns associated with various mental states. When we are focused, relaxed, or stressed, the patterns of brain waves change. These patterns can indicate how our thoughts and intentions are influencing our experience.

Alpha Waves: Associated with relaxation and creativity. When we are immersed in positive thoughts and visualizing our intentions, alpha waves may predominate, suggesting a productive mental state for manifestation.

Beta Waves: Linked to concentration and active thinking. When we are focused on our goals, increased beta waves can reflect a mental state geared toward achievement.

Associative Networks (ANs) - Associative networks in the brain are complex systems of neurons that work together to process and integrate sensory, cognitive, and emotional information. They are crucial for forming associations between different stimuli and experiences, allowing us to create memories, learn, and adapt our behavior. A critical aspect of ANs is the Reticular Activating System (RAS), which plays a central role in modulating our attention and perception of reality.

Reticular Activating System (RAS) - The RAS is a network of neurons located in the brainstem, responsible for filtering the sensory information we receive at every moment and determining which of it is relevant for our conscious attention. It acts as a "filter" that decides which stimuli we should focus on and which we can ignore, based on our expectations, interests, and past experiences.

How the RAS Influences Perception of Reality - When we focus our attention on a particular subject or goal, the RAS adjusts our perception to highlight information and stimuli related to that focus. This mechanism explains why, when we are interested in something specific, we start to notice related things in our environment more frequently. This phenomenon is often called the "frequency illusion"; it is closely related to "confirmation bias" and is a direct manifestation of how ANs function.

For example, if you are thinking about buying a new car and have a specific model in mind, you are likely to start noticing that car model everywhere. Your RAS is actively filtering sensory information to prioritize stimuli that match your current interest.

Neuroplasticity - One of the most fascinating aspects of the brain is its plasticity—the ability to reorganize and form new neural connections throughout life. Studies show that our thoughts and experiences can literally reshape the brain's structure. For example, regularly practicing meditation can increase the gray matter density in areas associated with self-awareness and emotional regulation.

This plasticity suggests that by changing our thought patterns, we can alter how our brain perceives and interacts with the world, thus influencing our subjective reality. When we intentionally focus on something, we are strengthening the neural connections associated with that focus, which in turn increases the likelihood of perceiving and remembering relevant information.

Effect of Attention on Manifesting Reality - Focused attention can, therefore, shape our experience of reality in several ways:

Information Filtering: The RAS filters sensory information to highlight relevant stimuli, making us more aware of opportunities and resources that support our goals.

Strengthening Neural Connections: Repetition of focused thoughts and visualizations strengthens neural connections, increasing the likelihood of perceiving and acting in alignment with our interests.

Confirmation Bias: Our brain seeks to confirm our expectations and beliefs, making it more likely that we notice and remember events that align with them.

Associative Networks (ANs), especially through the Reticular Activating System (RAS), play a fundamental role in how we perceive and interact with the world. By focusing our attention on specific goals and interests, we can train our brain to highlight relevant information and shape our reality according to our desires and intentions. By understanding and applying these neuroscientific principles, we can enhance our ability to manifest the reality we desire.

References:

Moruzzi, G., & Magoun, H. W. (1949). Brain stem reticular formation and activation of the EEG. Electroencephalography and Clinical Neurophysiology.

Fredrickson, B. L. (2001). The role of positive emotions in positive psychology: The broaden-and-build theory of positive emotions. American Psychologist.

Lazar, S. W., et al. (2005). Meditation experience is associated with increased cortical thickness. NeuroReport.

#manifesting#manifestation#law of assumption#affirmations#affirm and persist#robotic affirming#loassumption#void state#neuroscience#manifestationscience

68 notes


Text

Interesting Papers for Week 19, 2025

Individual-specific strategies inform category learning. Collina, J. S., Erdil, G., Xia, M., Angeloni, C. F., Wood, K. C., Sheth, J., Kording, K. P., Cohen, Y. E., & Geffen, M. N. (2025). Scientific Reports, 15, 2984.

Visual activity enhances neuronal excitability in thalamic relay neurons. Duménieu, M., Fronzaroli-Molinieres, L., Naudin, L., Iborra-Bonnaure, C., Wakade, A., Zanin, E., Aziz, A., Ankri, N., Incontro, S., Denis, D., Marquèze-Pouey, B., Brette, R., Debanne, D., & Russier, M. (2025). Science Advances, 11(4).

The functional role of oscillatory dynamics in neocortical circuits: A computational perspective. Effenberger, F., Carvalho, P., Dubinin, I., & Singer, W. (2025). Proceedings of the National Academy of Sciences, 122(4), e2412830122.

Expert navigators deploy rational complexity–based decision precaching for large-scale real-world planning. Fernandez Velasco, P., Griesbauer, E.-M., Brunec, I. K., Morley, J., Manley, E., McNamee, D. C., & Spiers, H. J. (2025). Proceedings of the National Academy of Sciences, 122(4), e2407814122.

Basal ganglia components have distinct computational roles in decision-making dynamics under conflict and uncertainty. Ging-Jehli, N. R., Cavanagh, J. F., Ahn, M., Segar, D. J., Asaad, W. F., & Frank, M. J. (2025). PLOS Biology, 23(1), e3002978.

Hippocampal Lesions in Male Rats Produce Retrograde Memory Loss for Over‐Trained Spatial Memory but Do Not Impact Appetitive‐Contextual Memory: Implications for Theories of Memory Organization in the Mammalian Brain. Hong, N. S., Lee, J. Q., Bonifacio, C. J. T., Gibb, M. J., Kent, M., Nixon, A., Panjwani, M., Robinson, D., Rusnak, V., Trudel, T., Vos, J., & McDonald, R. J. (2025). Journal of Neuroscience Research, 103(1).

Sensory experience controls dendritic structure and behavior by distinct pathways involving degenerins. Inberg, S., Iosilevskii, Y., Calatayud-Sanchez, A., Setty, H., Oren-Suissa, M., Krieg, M., & Podbilewicz, B. (2025). eLife, 14, e83973.

Distributed representations of temporally accumulated reward prediction errors in the mouse cortex. Makino, H., & Suhaimi, A. (2025). Science Advances, 11(4).

Adaptation optimizes sensory encoding for future stimuli. Mao, J., Rothkopf, C. A., & Stocker, A. A. (2025). PLOS Computational Biology, 21(1), e1012746.

Memory load influences our preparedness to act on visual representations in working memory without affecting their accessibility. Nasrawi, R., Mautner-Rohde, M., & van Ede, F. (2025). Progress in Neurobiology, 245, 102717.

Layer-specific control of inhibition by NDNF interneurons. Naumann, L. B., Hertäg, L., Müller, J., Letzkus, J. J., & Sprekeler, H. (2025). Proceedings of the National Academy of Sciences, 122(4), e2408966122.

Multisensory integration operates on correlated input from unimodal transient channels. Parise, C. V, & Ernst, M. O. (2025). eLife, 12, e90841.3.

Random noise promotes slow heterogeneous synaptic dynamics important for robust working memory computation. Rungratsameetaweemana, N., Kim, R., Chotibut, T., & Sejnowski, T. J. (2025). Proceedings of the National Academy of Sciences, 122(3), e2316745122.

Discriminating neural ensemble patterns through dendritic computations in randomly connected feedforward networks. Somashekar, B. P., & Bhalla, U. S. (2025). eLife, 13, e100664.4.

Effects of noise and metabolic cost on cortical task representations. Stroud, J. P., Wojcik, M., Jensen, K. T., Kusunoki, M., Kadohisa, M., Buckley, M. J., Duncan, J., Stokes, M. G., & Lengyel, M. (2025). eLife, 13, e94961.2.

Representational geometry explains puzzling error distributions in behavioral tasks. Wei, X.-X., & Woodford, M. (2025). Proceedings of the National Academy of Sciences, 122(4), e2407540122.

Deficiency of orexin receptor type 1 in dopaminergic neurons increases novelty-induced locomotion and exploration. Xiao, X., Yeghiazaryan, G., Eggersmann, F., Cremer, A. L., Backes, H., Kloppenburg, P., & Hausen, A. C. (2025). eLife, 12, e91716.4.

Endopiriform neurons projecting to ventral CA1 are a critical node for recognition memory. Yamawaki, N., Login, H., Feld-Jakobsen, S. Ø., Molnar, B. M., Kirkegaard, M. Z., Moltesen, M., Okrasa, A., Radulovic, J., & Tanimura, A. (2025). eLife, 13, e99642.4.

Cost-benefit tradeoff mediates the transition from rule-based to memory-based processing during practice. Yang, G., & Jiang, J. (2025). PLOS Biology, 23(1), e3002987.

Identification of the subventricular tegmental nucleus as brainstem reward center. Zichó, K., Balog, B. Z., Sebestény, R. Z., Brunner, J., Takács, V., Barth, A. M., Seng, C., Orosz, Á., Aliczki, M., Sebők, H., Mikics, E., Földy, C., Szabadics, J., & Nyiri, G. (2025). Science, 387(6732).

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience

12 notes


Note

Hi, hope I'm still alive by the time you get this message! We are in danger and we are in dire need of an Ace/Aro Pilot to help sort this out, I swear it is Really Really Really important, and I can't stress enough how much it pains me to actually type this down.

I'm the Operator for a small Mercenary Lancer Squad called "The Pegasi-M3 Sisters", and while I'm very proud of my teammates' abilities, a tiny problem has emerged due to some of the team's shared preferences.

We are located in a small station in orbit of the Zeta Leporis star, our mission was to escort some scientists through the remains of the station, to gather some old equipment and analysis data from the satellite, but a HORUS Cell had taken over the station, and managed to leave a few remotely activated mechs in the station before escaping.

We've managed to eliminate most of the mechs, and even evacuated the scientists on a safe carrier to our main ship, but we are stranded in the station with the last remaining enemy mech: a Goblin/Deathhead piloted by a cascading Osiris NHP called C130.

Normally, my team would be able to destroy a single mech in an instant, but, and I can't believe I have to say this, the NHP seems to be "very hot" and terribly "coercive" towards the all-disaster-lesbian technophile polycule that makes up the team, and every one of my gals has fallen prey to the sweet-talking of this aforementioned NHP. I lost contact with the ship and two of my pilots, while the last one of my gals seems to be barking on command to this C130, so I suspect it might have hacked its way into her neural enhancement suite.

We had a Union pilot along, but he started calling the enemy mech "Mommy" and stopped responding a few moments later.

Sigh, I kinda knew it was going to end like this one of these days. At the moment I'm locked in a small cargo compartment of the station, with only a box of rations and my long-distance network system that I managed to connect to the station's omninet, but I know the NHP is going to find that connection soon...

I'm sending this anonymously due to the confidentiality clause of my contract, but I'm sending the coordinates of the station and the Comp/CON data from our team's mechs to help identify the threats.

Signed, PEG-M3-Operator.

Ah. Well. I suppose it is not our place to judge the circumstances of these distress calls. I am asexual myself, though Erdaf is rather distant from these coordinates and we do not have any currently functional ships besides. I shall forward this request to the nearmost makteba, I am certain they shall have several suitable candidates.

-Loyal Wing Catrin Cavazos

---

Well met! Loyal Wing Nori Chimal-4926 of Makteba Sunda, your local aroace Lannie pilot reporting for this mission. ...I did not expect that to ever be relevant on a mission, mostly we do natural disaster relief and search & rescue here, not... whatever this is. But, I will be honest, I have seen weirder missions, most especially when HORUS is involved. Such as the time my Lancaster was teleported to the top of a mech-scale ladder and fell, knocking the rest of my team into a pit of slime that may have been semi-alive. Ask me about it some time. Or maybe do not. Anyroad, fear not, help is on the way, for I am on the path towards you as we speak. As we say in Makteba Sunda, the storm always breaks.

#ooc: the thing with a ladder is based on a real thing that happened in a game#except it was pathfinder instead of lancer and the Lannie was a summoned horse#also congratulations on sending me the funniest ask ever I literally laughed out loud reading this#lancer rpg#lancer#lancer albatross#albatross

22 notes


Text

Yesterday, I don't exactly remember how or why, but I felt motivated to create my own version of The Kiss by Gustav Klimt. Honestly, I came across so many versions before making mine that perhaps that's one of the reasons why my motivation lasted as long as it did XD.

It was challenging to mentally prepare myself to replicate the positioning of Gustav's faces. It was a real struggle trying to figure out how Hal's glasses would behave in that position, and even though I used the BJD as a reference, I couldn’t quite translate it "successfully" into the drawing… I still don’t know how to draw glasses, sorry.

Lina’s face was tricky because I found that the original reference had a longer face than hers, so I had to make adjustments.

Now, I’m super happy with what I created (which is rare), and I want to explain some of the choices I made *smiles*. This drawing was made using bases and references in Krita.

Hal:

His clothing is a photograph of a motherboard that I pixelated in certain areas and stained with a watercolor texture brush. Then I duplicated the layer and saturated it to give it the orange tone but still preserving the green. I also used a square texture pencil, imitating the decorations in the original painting, and finally applied an oil painting filter. (I also did this on Lina’s dress and the layer with the oranges.)

Lina’s dress, the cape, and the cosmos

These elements follow similar processes. I drew the hair textures for both Hal and Lina myself. The stars, grass, and flowers in Hal’s hair are brushes. The sunflower and roses are PNGs, and the background texture is another photograph.

Symbolism

Hal’s tunic: The motherboard is obvious. The orange color seems to take over the green of the motherboard, symbolizing Lina’s presence in his life.

The grass: It’s filled with blue roses, representing Snake. (I’ll always make this representation because I adore Snake, and even though I ship Hal with Lina, Snake will always be present near Hal.)

Hal’s head: It features daisies, which could also be interpreted as sunflowers, referencing the two women in his life. These are on his head because they are his biggest concern. The large Sunflower on the ground represents Sunny, pixelated because she also knows programming.

The orange cape: Inside the cape is the cosmos, an important symbol for my fic. Hal’s parents met at NASA, and one of my big headcanons about Strangelove and Hal is that she would have taught Hal to observe the night sky at an early age (inspiring his interest in extraterrestrial theories, as mentioned in the MGS2 codec, even if it was a joke). The image of the starry sky is also linked to Lina through her birthmark, a characteristic that is both positive and negative in the story and one of the traits she shares with me. For this reason, it’s near her (and there are also stars over her hair). ("It was as if the cosmos had marked you to show you were the one.")

Lina’s dress: It features daisies ("Margarita" in Spanish, my IRL name), which are summer flowers that bloom in June, my birth month and my Hal’s fan birthday. These are pixelated, symbolizing Hal’s presence in Lina’s life and her career as a programmer. Daisies represent purity and true love, and they had medicinal properties.

Oranges on the cape: The oranges symbolize food, tied to Lina’s role as a chef, the work/facade she initially adopts to hide from the antagonist. They also symbolize fertility, virtue, fidelity, optimism, energy, and creativity. Additionally, in color psychology, the color orange stimulates appetite. (And that's a fact for the food too, because oranges activate the gastric juices.)

At Lina’s feet: There’s a neural programming network, reminding us that she is an AI programmer. The same function is carried out by the code above it.

This also serves to highlight a little, yet significant, detail: Hal is an engineer, skilled in both hardware and software, capable of building a Metal Gear (like Huey); while Lina is a programmer, AI developer (like Strangelove) who is obsessed with response times.

#original character#otacon#oc x canon#lina shirou#halina#mgs fanfic#the emmerich's curse#theemmerichscurse#halemmerich#hal emmerich


Text

Been a while, crocodiles. Let's talk about cad.

or, y'know...

Yep, we're doing a whistle-stop tour of AI in medical diagnosis!

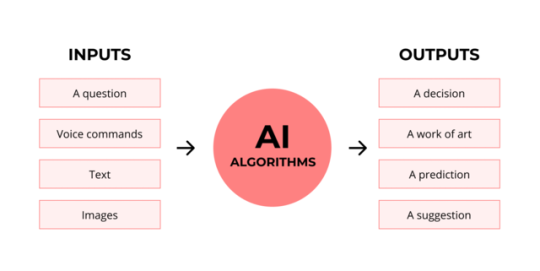

Much like programming, AI can be conceived of, in very simple terms, as...

a way of moving from inputs to a desired output.

See this very funky little diagram from skillcrush.com.

The input is what you put in. The output is what you get out.

This output will vary depending on the type of algorithm and the training that algorithm has undergone – you can put the same input into two different algorithms and get two entirely different sorts of answer.

Generative AI produces ‘new’ content, based on what it has learned from various inputs. We're talking AI Art, and Large Language Models like ChatGPT. This sort of AI is very useful in healthcare settings too, but that's a whole different post!

Analytical AI takes an input, such as a chest radiograph, subjects this input to a series of analyses, and deduces answers to specific questions about this input. For instance: is this chest radiograph normal or abnormal? And if abnormal, what is a likely pathology?

We'll be focusing on Analytical AI in this little lesson!

Other forms of Analytical AI that you might be familiar with are recommendation algorithms, which suggest items for you to buy based on your online activities, and facial recognition. In facial recognition, the input is an image of your face, and the output is the ability to tie that face to your identity. We’re not creating new content – we’re classifying and analysing the input we’ve been fed.

Many of these functions are obviously, um, problematique. But Computer-Aided Diagnosis is, potentially, a way to use this tool for good!

Right?

....Right?

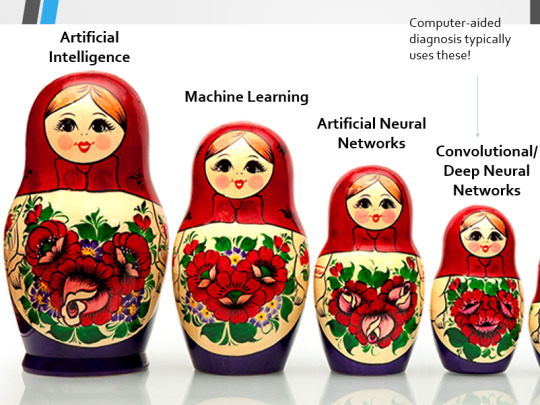

Let's dig a bit deeper! AI is a massive umbrella term that contains many smaller umbrella terms, nested together like Russian dolls. So, we can use this model to envision how these different fields fit inside one another.

AI is the term for anything to do with creating and managing machines that perform tasks which would otherwise require human intelligence. This is what differentiates AI from regular computer programming.

Machine Learning is the development of statistical algorithms which are trained on data – but which can then extrapolate this training and generalise it to previously unseen data, typically for analytical purposes. The thing I want you to pay attention to here is the date of this reference. It’s very easy to think of AI as being a ‘new’ thing, but it has been around since the Fifties, and has been talked about for much longer. The massive boom in popularity that we’re seeing today is built on the backs of decades upon decades of research.

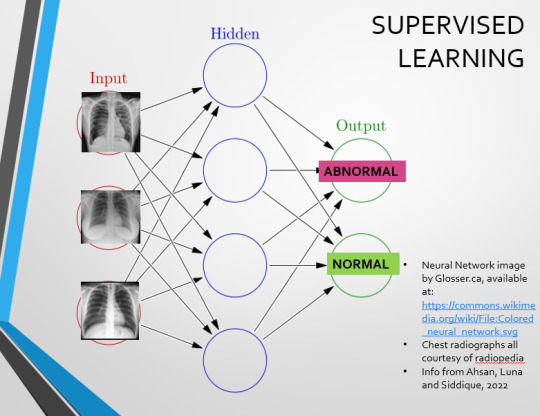

Artificial Neural Networks are loosely inspired by the structure of the human brain, where inputs are fed through one or more layers of ‘nodes’ which modify the original data until a desired output is achieved. More on this later!

Deep neural networks have two or more layers of nodes, increasing the complexity of what they can derive from an initial input. Convolutional neural networks are often also Deep. To become ‘convolutional’, a neural network must have strong connections between close nodes, influencing how the data is passed back and forth within the algorithm. We’ll dig more into this later, but basically, this makes CNNs very adept at telling precisely where edges of a pattern are – they're far better at pattern recognition than our feeble fleshy eyes!

This is massively useful in Computer Aided Diagnosis, as it means CNNs can quickly and accurately trace bone cortices in musculoskeletal imaging, note abnormalities in lung markings in chest radiography, and isolate very early neoplastic changes in soft tissue for mammography and MRI.

Before I go on, I will point out that Neural Networks are NOT the only model used in Computer-Aided Diagnosis – but they ARE the most common, so we'll focus on them!

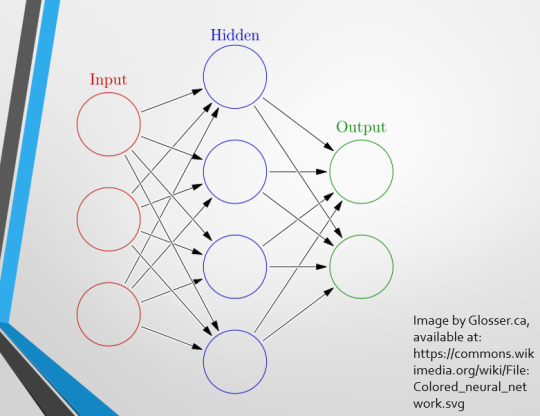

This diagram demonstrates the function of a simple Neural Network. An input is fed into one side. It is passed through a layer of ‘hidden’ modulating nodes, which in turn feed into the output. We describe the internal nodes in this algorithm as ‘hidden’ because we, outside of the algorithm, will only see the ‘input’ and the ‘output’ – which leads us onto a problem we’ll discuss later with regards to the transparency of AI in medicine.
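If it helps to see that input → hidden → output flow as code, here is a minimal sketch of a single forward pass. Everything in it is an assumption made for illustration: the two input features, the weights, the biases and the sigmoid activation are made-up values, not anything taken from a real CAD system.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Pass the inputs through one hidden layer, then a single output node."""
    # Each hidden node takes a weighted sum of all the inputs, plus a bias.
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    # The output node does the same thing with the hidden activations.
    output = sigmoid(sum(w * h for w, h in zip(output_weights, hidden)) + output_bias)
    return hidden, output

# Hypothetical numbers, chosen only for illustration.
inputs = [0.9, 0.1]                          # two made-up image features
hidden_weights = [[2.0, -1.0], [-1.5, 3.0]]  # one row of weights per hidden node
hidden_biases = [0.0, 0.5]
output_weights = [1.2, -0.8]
output_bias = 0.1

hidden, output = forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias)
print("hidden activations (normally never seen):", hidden)
print("output score:", round(output, 3))
```

From outside the function you would normally only look at `inputs` and `output`; the `hidden` list is returned here purely so you can peek at what is usually invisible.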

But for now, let’s focus on how this basic model works, with regards to Computer Aided Diagnosis. We'll start with a game of...

Spot The Pathology.

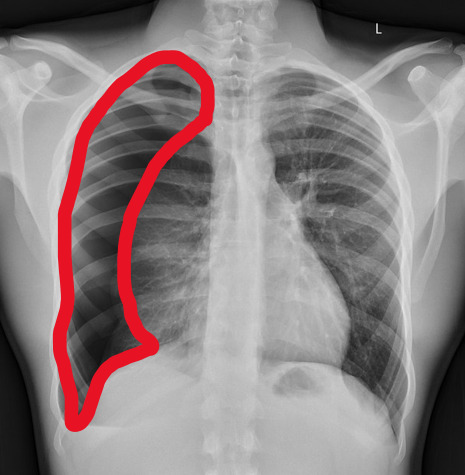

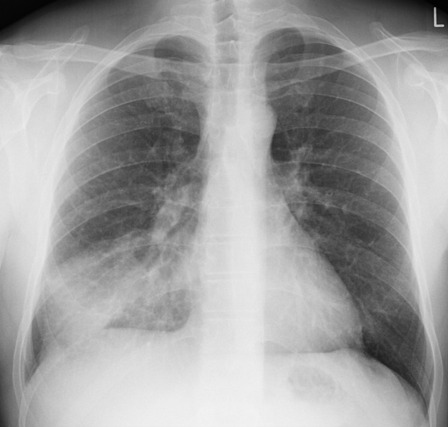

yeah, that's right. There's a WHACKING GREAT RIGHT-SIDED PNEUMOTHORAX (as outlined in red - images courtesy of radiopaedia, but edits mine)

But my question to you is: how do we know that? What process are we going through to reach that conclusion?

Personally, I compared the lungs for symmetry, which led me to note a distinct line where the tissue in the right lung had collapsed on itself. I also noted the absence of normal lung markings beyond this line, where there should be tissue but there is instead air.

In simple terms.... the right lung is whiter in the midline, and black around the edges, with a clear distinction between these parts.

Let’s go back to our Neural Network. We’re at the training phase now.

So, we’re going to feed our algorithm! Homnomnom.

Let’s give it that image of a pneumothorax, alongside two normal chest radiographs (middle picture and bottom). The goal is to get the algorithm to accurately classify the chest radiographs we have inputted as either ‘normal’ or ‘abnormal’ depending on whether or not they demonstrate a pneumothorax.

There are two main ways we can teach this algorithm – supervised and unsupervised classification learning.

In supervised learning, we tell the neural network that the first picture is abnormal, and the second and third pictures are normal. Then we let it work out the difference, under our supervision, allowing us to steer it if it goes wrong.

Of course, if we only have three inputs, that isn’t enough for the algorithm to reach an accurate result.
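As a rough sketch of what "telling it the answers and letting it work out the difference" can look like, here is a single-node classifier trained with gradient descent. The two features per image (a "pleural edge" score and a "peripheral lung markings" score), the six examples and the training settings are all invented for illustration; a real system would learn from pixels, not from two hand-picked numbers.

```python
import math

def predict(weights, bias, features):
    """Return a score between 0 (normal) and 1 (abnormal)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, labels, epochs=2000, learning_rate=0.5):
    """Nudge the weights after every labelled example to shrink the error."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(examples, labels):
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

# Hypothetical features: [pleural edge score, peripheral lung markings score]
examples = [
    [0.9, 0.1], [0.8, 0.2], [0.7, 0.3],  # labelled abnormal (pneumothorax)
    [0.1, 0.9], [0.2, 0.8], [0.3, 0.7],  # labelled normal
]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train(examples, labels)
print("unseen abnormal-looking image:", round(predict(weights, bias, [0.85, 0.15]), 3))
print("unseen normal-looking image:  ", round(predict(weights, bias, [0.15, 0.85]), 3))
```

The labels are the "supervision": the algorithm is never told what a pleural edge is, only which examples we have called abnormal.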

You might be able to see – one of the normal chests has breasts, and another doesn't. If both ‘normal’ images had breasts, the algorithm could as easily determine that the lack of lung markings is what demonstrates a pneumothorax, as it could decide that actually, a pneumothorax is caused by not having breasts. Which, obviously, is untrue.

or is it?

....sadly I can personally confirm that having breasts does not prevent spontaneous pneumothorax, but that's another story lmao

This brings us to another big problem with AI in medicine –

If you are collecting your dataset from, say, a wealthy hospital in a suburban, majority white neighbourhood in America, then you will have those same demographics represented within that dataset. We build a blind spot into the neural network, and it will discriminate based on that.

That’s an important thing to remember: the goal here is to create a generalisable tool for diagnosis. The algorithm will only ever be as generalisable as its dataset.

But there are plenty of huge free datasets online which have been specifically developed for training AI. What if we had hundreds of chest images, from a diverse population range, split between those which show pneumothoraxes, and those which don’t?

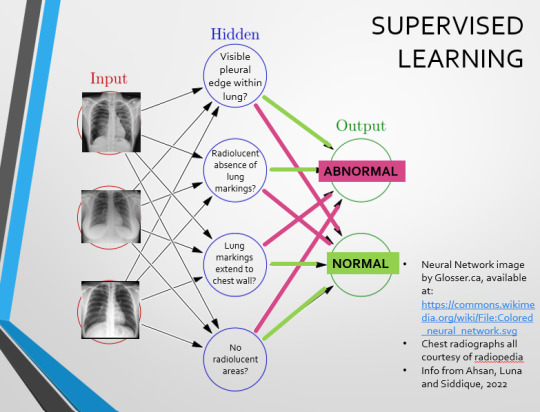

If we had a much larger dataset, the algorithm would be able to study the labelled ‘abnormal’ and ‘normal’ images, and come to far more accurate conclusions about what separates a pneumothorax from a normal chest in radiography. So, let’s pretend we’re the neural network, and pop in four characteristics that the algorithm might use to differentiate ‘normal’ from ‘abnormal’.

We can distinguish a pneumothorax by the appearance of a pleural edge where lung tissue has pulled away from the chest wall, and the radiolucent absence of peripheral lung markings around this area. So, let’s make those our first two nodes. Our last set of nodes are ‘do the lung markings extend to the chest wall?’ and ‘Are there no radiolucent areas?’

Now, red lines mean the answer is ‘no’ and green means the answer is ‘yes’. If the answer to the first two nodes is yes and the answer to the last two nodes is no, this is indicative of a pneumothorax – and vice versa.

Right. So, who can see the problem with this?

(image courtesy of radiopaedia)

This chest radiograph demonstrates alveolar patterns and air bronchograms within the right lung, indicative of a pneumonia. But if we fed it into our neural network...

The lung markings extend all the way to the chest wall. Therefore, this image might well be classified as ‘normal’ – a false negative.
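To make that failure concrete, here is the four-node model written out as plain yes/no logic. The function name and the "undetermined" fallback are my own additions for illustration; the four questions and the yes/yes/no/no rule are the ones described above.

```python
def classify_chest(pleural_edge_visible, peripheral_radiolucency,
                   markings_reach_chest_wall, no_radiolucent_areas):
    """Toy four-question classifier: pneumothorax ('abnormal') versus 'normal'."""
    if (pleural_edge_visible and peripheral_radiolucency
            and not markings_reach_chest_wall and not no_radiolucent_areas):
        return "abnormal"
    if (not pleural_edge_visible and not peripheral_radiolucency
            and markings_reach_chest_wall and no_radiolucent_areas):
        return "normal"
    # Mixed answers: the toy model has no rule for these.
    return "undetermined"

# The pneumothorax from earlier: yes, yes, no, no.
pneumothorax = classify_chest(True, True, False, False)

# The pneumonia: no pleural edge, no peripheral radiolucency,
# and lung markings that run all the way to the chest wall.
pneumonia = classify_chest(False, False, True, True)

print("pneumothorax ->", pneumothorax)
print("pneumonia    ->", pneumonia)
```

The pneumonia comes out as "normal" because none of the four questions asks about anything except a pneumothorax.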

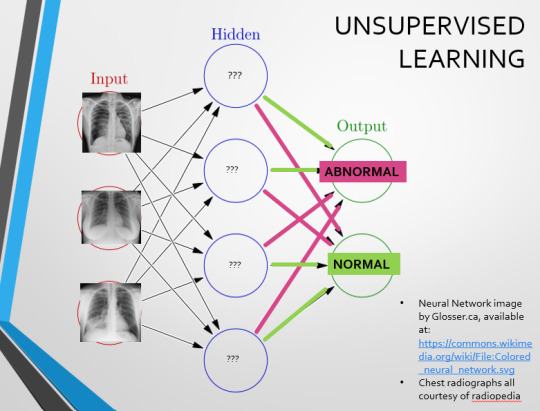

Now we start to see why Neural Networks become deep and convolutional, and can get incredibly complex. In order to accurately differentiate a ‘normal’ from an ‘abnormal’ chest, you need a lot of nodes, and layers of nodes. This is also where unsupervised learning can come in.

Originally, Supervised Learning was used on Analytical AI, and Unsupervised Learning was used on Generative AI, allowing for more creativity in picture generation, for instance. However, more and more, Unsupervised learning is being incorporated into Analytical areas like Computer-Aided Diagnosis!

Unsupervised Learning involves feeding a neural network a large databank and giving it no information about which of the input images are ‘normal’ or ‘abnormal’. This saves massively on money and time, as no one has to go through and label the images first. It is also surprisingly very effective. The algorithm is told only to sort and classify the images into distinct categories, grouping images together and coming up with its own parameters about what separates one image from another. This sort of learning allows an algorithm to teach itself to find very small deviations from its discovered definition of ‘normal’.
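Here is a very small sketch of that "sort it yourself" idea, using k-means clustering with two groups. This is one common unsupervised technique swapped in for illustration, not necessarily what any given CAD product uses, and the single made-up score per image stands in for whatever features a real network would extract.

```python
def two_means(values, iterations=20):
    """Split 1-D values into two groups with a tiny k-means (k = 2)."""
    centres = [min(values), max(values)]  # start the centres at the extremes
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        for value in values:
            # Assign each value to whichever centre is closer.
            nearest = 0 if abs(value - centres[0]) <= abs(value - centres[1]) else 1
            groups[nearest].append(value)
        # Move each centre to the mean of its group (keep it if the group is empty).
        centres = [
            sum(group) / len(group) if group else centre
            for group, centre in zip(groups, centres)
        ]
    return centres, groups

# Hypothetical "how much radiolucent area" scores for eight unlabelled images.
scores = [0.10, 0.12, 0.15, 0.11, 0.80, 0.85, 0.78, 0.14]

centres, groups = two_means(scores)
print("group A:", sorted(groups[0]))
print("group B:", sorted(groups[1]))
```

Notice that nobody told the algorithm which group is "normal". It only finds that the images fall into two clumps; a human still has to decide what the clumps mean.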

BUT this is not to say that CAD is without its issues.

Let's take a look at some of the ethical and practical considerations involved in implementing this technology within clinical practice!

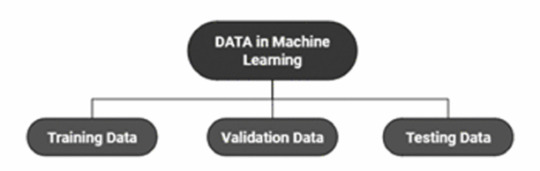

(Image from Agrawal et al., 2020)

Training Data does what it says on the tin – these are the initial images you feed your algorithm. What is key here is volume, variety - with especial attention paid to minimising bias – and veracity. The training data has to be ‘real’ – you cannot mislabel images or supply non-diagnostic images that obscure pathology, or your algorithm is useless.

Validation data evaluates the algorithm and improves on it. This involves tweaking the nodes within a neural network by altering the ‘weights’, or the intensity of the connection between various nodes. By altering these weights, a neural network can send an image that clearly fits our diagnostic criteria for a pneumothorax directly to the relevant output, whereas images that do not have these features must be put through another layer of nodes to rule out a different pathology.

Finally, testing data is the data that the finished algorithm will be tested on to prove its sensitivity and specificity, before any potential clinical use.
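In practice, those three sets are usually carved out of one shuffled pool of images so that no image ends up in more than one set. A minimal sketch, assuming a 70/15/15 split and a list of made-up image IDs (the proportions vary between projects and are not taken from the figure):

```python
import random

def split_dataset(items, train_fraction=0.7, validation_fraction=0.15, seed=0):
    """Shuffle once, then cut the list into training, validation and testing sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is repeatable
    train_end = round(len(shuffled) * train_fraction)
    validation_end = train_end + round(len(shuffled) * validation_fraction)
    return (shuffled[:train_end],
            shuffled[train_end:validation_end],
            shuffled[validation_end:])

# Hypothetical image IDs standing in for real radiographs.
image_ids = [f"chest_{n:03d}" for n in range(100)]

train_set, validation_set, test_set = split_dataset(image_ids)
print(len(train_set), len(validation_set), len(test_set))
```

The important design choice is that the testing set is never touched during training or tweaking, otherwise the final sensitivity and specificity figures would be flattering rather than honest.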

However, if algorithms require this much data to train, this introduces a lot of ethical questions.

Where does this data come from?

Is it ‘grey data’ (data of untraceable origin)? Is this good (protects anonymity) or bad (could have been acquired unethically)?

Could generative AI provide a workaround, in the form of producing synthetic radiographs? Or is it risky to train CAD algorithms on simulated data when the algorithms will then be used on real people?

If we are solely using CAD to make diagnoses, who holds legal responsibility for a misdiagnosis that costs lives? Is it the company that created the algorithm or the hospital employing it?

And finally – is it worth sinking so much time, money, and literal energy into AI – especially given concerns about the environment – when public opinion on AI in healthcare is mixed at best? This is a serious topic – we’re talking diagnoses making the difference between life and death. Do you trust a machine more than you trust a doctor? According to Rojahn et al., 2023, there is a strong public dislike of computer-aided diagnosis.

So, it's fair to ask...

why are we wasting so much time and money on something that our service users don't actually want?

Then we get to the other biggie.

There are also a variety of concerns to do with the sensitivity and specificity of Computer-Aided Diagnosis.

We’ve talked a little already about bias, and how training sets can inadvertently ‘poison’ the algorithm, so to speak, introducing dangerous elements that mimic biases and problems in society.

But do we even want completely accurate computer-aided diagnosis?

The name is computer-aided diagnosis, not computer-led diagnosis. As noted by Rojahn et al., the general public STRONGLY prefer diagnosis to be made by human professionals, and their desires should arguably be taken into account – as well as the fact that CAD algorithms tend to be incredibly expensive and highly specialised. For instance, you cannot put MRI images depicting CNS lesions through a chest reporting algorithm and expect coherent results – whereas a radiologist can be trained to diagnose across two or more specialties.

For this reason, there is an argument that rather than focusing on sensitivity and specificity, we should just focus on producing highly sensitive algorithms that will pick up on any abnormality, and output some false positives, but will produce NO false negatives.

(Sensitivity = a test's ability to identify sick people with a disease)

(Specificity = a test's ability to identify that healthy people do not have this disease)

This means we are working towards developing algorithms that OVERESTIMATE rather than UNDERESTIMATE disease prevalence. This makes CAD a useful tool for triage rather than providing its own diagnoses – if a CAD algorithm weighted towards high sensitivity and low specificity does not pick up on any abnormalities, it’s highly unlikely that there are any.
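The trade-off is easier to see with numbers. In this sketch, sensitivity is true positives divided by everyone who is actually sick, and specificity is true negatives divided by everyone who is actually healthy. The eight "abnormality scores" and both thresholds are invented for illustration.

```python
def sensitivity_and_specificity(scores, has_disease, threshold):
    """Flag every case scoring at or above the threshold, then count hits and misses."""
    true_pos = false_neg = true_neg = false_pos = 0
    for score, sick in zip(scores, has_disease):
        flagged = score >= threshold
        if sick and flagged:
            true_pos += 1
        elif sick:
            false_neg += 1
        elif flagged:
            false_pos += 1
        else:
            true_neg += 1
    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity

# Hypothetical algorithm scores for four sick and four healthy patients.
scores      = [0.95, 0.80, 0.40, 0.30, 0.60, 0.20, 0.10, 0.35]
has_disease = [True, True, True, True, False, False, False, False]

balanced = sensitivity_and_specificity(scores, has_disease, threshold=0.5)
cautious = sensitivity_and_specificity(scores, has_disease, threshold=0.25)
print("threshold 0.50 -> sensitivity, specificity:", balanced)  # (0.5, 0.75)
print("threshold 0.25 -> sensitivity, specificity:", cautious)  # (1.0, 0.5)
```

Lowering the threshold catches every sick patient in this toy set, at the cost of flagging more healthy ones, which is exactly the triage-style behaviour described above.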

Finally, we have to question whether CAD is even all that accurate to begin with. 10 years ago, according to Lehman et al., CAD in mammography demonstrated negligible improvements to accuracy. In 1989, Sutton noted that accuracy was under 60%. Nowadays, however, AI has been proven to exceed the abilities of radiologists when detecting cancers (that’s from Guetari et al., 2023). This suggests that there is a common upwards trajectory, and AI might become a suitable alternative to traditional radiology one day. But, due to the many potential problems with this field, that day is unlikely to be soon...

That's all, folks! Have some references~

#medblr#artificial intelligence#radiography#radiology#diagnosis#medicine#studyblr#radioactiveradley#radley irradiates people#long post


Text

Drone Reproduction Headcanons PART 2!! (NOT NSFW)

Please read this post first if you haven't, it's important for context:

This post is gonna be mainly about Disassembly drones. I originally said they wouldn't have reproductive functions and would have to be modified to have a port for the specialized cord to copy data into a pill baby, but I've decided to go back on this in favor of a much COOLER idea.

CUCKOO DRONES!!!!!

A cuckoo is a species of bird popularly associated with "brood parasitism". A behavior in the wild where they will lay their eggs in the nests of other birds to raise their young for them. (just birds as an example, this does happen in other species)

Disassembly drones still don't have their own infant forms.. so they have to steal babies from worker drones, whether already activated or not doesn't matter. They inject a code into the UNN(Untrained Neural Network) that replaces the template for what they're supposed to grow up into with a disassembly drone template.

The new parent disassembly drone can choose to keep the child and raise it themselves or.. leave the child with the worker drone colony to raise it for them. (this'll be what the rest of this is about)

To prevent the child from being kicked out by the colony too early, they will look practically identical to a worker drone(minor differences) in their early stages. It won't be until their late teens that they begin to present disassembly drone features, and start to intensely crave oil.

This will lead to them killing the worker drones and they won't leave until the entire colony is dead.

- - - - - - - - - - -

At least that is the premise, of course at times the child may develop morality and resist murdering what they've come to know as their family.. but unfortunately this effort will usually lead to their death from core overheat(unless they can find a compromise). Sometimes the parent disassembly drone may return in search of the child after they become a certain age. Revealing their parentage and attempting to persuade them into killing the workers and coming with them.

Cuckoo drones do have some differences from normal disassembly drones. For one they wouldn't have a name like "Serial Designation (letter)", no yellow armband either, and they wouldn't have any programmed-in role because they don't have a squad.

By the way, cuckoo drones who were altered *after* activation are still very much related to their worker drone parents and still inherit their traits! The parent disassembly drone only changed them so they grow up into a DD instead of a worker. This means the cuckoo can have any colored lights derived from their parents.

Cuckoos who were altered before activation simply inherit traits exclusively from their parent DD (basically a clone cuz they don't require 2 DDs).

- - - - - - - - - - -

Well, I think that's all I have to say. I really need to just make a list of Disassembly Drone headcanons because I just LOVE coming up with the workings of a fictional species- I know they're robots but, let's be honest.. they're animals in a robot shell lol.

I wanted to share this immediately because I think it would make an AWESOME OC concept. So ABSOLUTELY feel free to use this for your OCs, I haven't seen anyone else make something like this.

Please tag me on your cuckoo drone OCs, I would LOVE to see them!! :333 (you don't have to of course)

#murder drones#headcanons#murder drones headcanon#rambling#and the void stared back#cuckoo drones#disassembly drones#oc ideas


Text

hithisisawkward said: Master’s in ML here: Transformers are not really monstrosities, nor hard to understand. The first step is to go from perceptrons to multi-layered neural networks. Once you’ve got the hang of those, with their activation functions and such, move on to AutoEncoders. Once you have a handle on the concept of latent space, move to recurrent neural networks. There are many types, so you should get a basic understanding of all, from simple recurrent units to something like LSTM. Then you need to understand the concept of attention, and study the structure of a transformer (which is nothing but a couple of recurrent network techniques arranged in a particularly clever way), and you’re there. There’s a couple of youtube videos that do a great job of it.

thanks, autoencoders look like a productive topic to start with!


Text

This project is unfinished and will remain that way. There are bugs. Not all endings are implemented. The ending tracker doesn't work. Images are broken. Nothing will be fixed. There's still quite a bit of content, though, so I am releasing what's here as is.

Tilted Sands is a project I started back when AI Dungeon first came out--the very early version you had to run in a Google Colab notebook. Sometime in late 2018, I think? I was a contributor at Botnik Studios at the time and I was delighted by AI Dungeon, but I knew it would never be a truly satisfying choose your own adventure generator on its own. I would argue that the modern AI Dungeon 2 and NovelAI don't fully function as such even now. That's not how AI works. It has to be guided heavily, the product has to be sculpted by human hands.

Anyway, it inspired me to use Transformer--a GPT2 predictive text writing tool--to craft a more coherent and polished but still silly and definitely AI-flavored CYOA experience. It was an ambitious project, but I was experienced with writing what I like to call "cyborg" pieces--meaning the finished product is, in a way, made by both an AI/algorithm/other bot AND a human writer. Something strange and wonderful that could not have been made by the bot alone, nor by the human writer alone. Algorithms can surprise us and trigger our creative human minds to move in directions we never would've thought to go in otherwise. To me, that's what actual AI art is: a human engaging in a creative activity like writing in a way that also includes utilizing an algorithm of some sort. The results are always fascinating, strangely insightful, and sometimes beautiful.

I worked on Tilted Sands off-and-on for a couple years, and then the entire AI landscape changed practically overnight with DALL-E and ChatGPT. And I soon realized that I cannot continue working on this project. Mainstream, corporate AI is disgustingly unethical and I don't want the predictive text writing I used to enjoy so much to be associated with "AI art". It's not. Before DALL-E and ChatGPT, there were artists and writers who made art by utilizing algorithms, neural networks, etc. Some things were perhaps in an ethical or legal grey area, but people actually did care about that. I remember discussing "would it be ethical to scrape [x]?" with other writers, and sharing databases of things like commercial advertising scripts and public domain content. I liked using mismatched databases to write things, like a corpus of tech product reviews that I used to write a song. The line between transformative art and fair use vs theft was constantly on all of our minds, because we were artists ourselves.

All of the artists and writers I knew in those days who made "cyborg art" have stopped by now. Including me.

But I poured a lot of love and thought and energy into this silly little project, and the thought of leaving it to rot on my hard drive hurt too much. It's not done, but there's a lot there--over 14,000 words, multiple endings and game over scenarios. I had so much fun with it and I wanted to complete it, but I can't. I don't want it to be associated in any way with the current "AI art" scene. It's not.

Please consider this my love letter to what technology-augmented art used to be, and what AI art could have been.

I know I'm not the only one mourning this brief but intense period from about 2014-2019 in which human creativity and developing AI technology combined organically to create an array of beautiful, stupid, silly, terrible, wonderful works of art. If you're also feeling sad and nostalgic about it, I hope you find this silly game enjoyable even in its unfinished state.

In conclusion:

Fuck capitalism, fuck what is currently called AI art, fuck ChatGPT, fuck every company taking advantage of artists and writers and other creative types by using AI.
