#neural network in machine learning


#activation function in neural network#artificial neural network in machine learning#neural network in machine learning#neural networks and deep learning#activation function

1 note


Frank Rosenblatt, often cited as the Father of Machine Learning, photographed in 1960 alongside his most notable invention, the Mark I Perceptron machine: a hardware implementation of the perceptron algorithm (1958), one of the earliest artificial neural networks, which built on the McCulloch–Pitts neuron model of 1943.
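Since the caption leans on the perceptron algorithm, here is a minimal sketch of the classic perceptron learning rule in Python: a step activation, with the weights nudged by the error on each misclassified example, trained on the logical AND function. The dataset, learning rate and epoch count are illustrative choices, not details of the Mark I hardware.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum exceeds 0, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=10, lr=1.0):
    """Perceptron learning rule: move the weights toward misclassified targets."""
    n_features = len(samples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so the perceptron converges on it.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])  # [0, 0, 0, 1]
```

A single perceptron can only learn linearly separable functions; swapping AND for XOR here would never converge, which is one reason later networks stacked multiple layers.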

#frank rosenblatt#tech history#machine learning#neural network#artificial intelligence#AI#perceptron#60s#black and white#monochrome#technology#u

820 notes


[image ID: Bluesky post from user marawilson that reads

“Anyway, AI has already stolen friends' work, and is going to put other people out of work. I do not think a political party that claims to be the party of workers in this country should be using it. Even for just a silly joke.”

beneath a quote post by user emeraldjaguar that reads

“Daily reminder that the underlying purpose of AI is to allow wealth to access skill while removing from the skilled the ability to access wealth.” /end ID]

#ai#artificial intelligence#machine learning#neural network#large language model#chat gpt#chatgpt#scout.txt#but obvs not OP

22 notes


9 times out of 10, the AI hate I see is misdirected away from the actual problem, which isn't the fucking learning algorithms but the capitalistic desire to squeeze as much money as possible, with as little effort as possible, out of anything that promises a prospect of Any financial gain, and to prioritise that over quality and over whether it actually makes someone's life better, because the measure of "value" is entirely linked to financial gain. It doesn't matter if it makes our world a better place, it doesn't matter whether it makes our world a significantly worse place; what matters is whether it sells. And THIS is the problem.

AI voice assistants, chatbots and other machine learning tools are not the enemy to hate on. The actual problem is the system surrounding their use, which prioritises the interests of CEOs over the interests of the general public and Requires people to do stupid, useless things for money to survive. Ironically, the people who could benefit from AI tools the most, those whose lives could literally be turned around for the better (people with various disabilities), are the LAST people considered in this AI race. Because the system dictates that it would rather focus on cheaply producing shitty pictures, to cut costs and eliminate the need for the rich to pay those of us who may actually enjoy our jobs, than make accessibility tools actually accessible, despite being able to do so if only it were the priority.

We have chatbots that speak in natural voices, and we have face and object recognition features integrated into our phones. But we don't have screen readers that don't suck, and we don't have accessible tools that translate what the camera sees, in real time, into accurate descriptions to help visually impaired people. We have algorithms to generate pictures, and social media platforms integrate various bots for god knows why, but what they don't have is algorithms that automatically generate text descriptions of the pictures and videos posted on them. What they don't have is AI tools for detecting flashing in videos and GIFs, and a way to filter them out. They do have algorithms searching for porn and copyrighted material, though, even if it's just a few seconds of music by a world-famous artist who has been dead for decades.

Granted, we have really good automated subtitles and translators, but at the same time there are no automated descriptions of other sounds, even though tools for GENERATING all kinds of sounds exist. And subtitles themselves do nothing to convey intonation, even though just italicizing the words a speaker emphasizes would already greatly improve the experience.

Whenever I think of the current state of AI, I remember the project that used implants to give blind people the ability to see. The one that got shut down and left all the people who used it blind again. Because it wasn't profitable.

14 notes


Neturbiz Enterprises - AI Innov7ions

Our mission is to provide details about AI-powered platforms across different technologies, each of which offers a unique set of features. The AI industry encompasses a broad range of technologies designed to simulate human intelligence. These include machine learning, natural language processing, robotics, computer vision, and more. Companies and research institutions are continuously advancing AI capabilities, from creating sophisticated algorithms to developing powerful hardware. The AI industry, characterized by the development and deployment of artificial intelligence technologies, has a profound impact on our daily lives, reshaping various aspects of how we live, work, and interact.

#ai technology#Technology Revolution#Machine Learning#Content Generation#Complex Algorithms#Neural Networks#Human Creativity#Original Content#Healthcare#Finance#Entertainment#Medical Image Analysis#Drug Discovery#Ethical Concerns#Data Privacy#Artificial Intelligence#GANs#AudioGeneration#Creativity#Problem Solving#ai#autonomous#deepbrain#fliki#krater#podcast#stealthgpt#riverside#restream#murf

17 notes


Under the moonlight of mysterious dreams,

Listen to the sounds of lo-fi music that pull you far away.

In the harmony of quiet notes, in a world of chill,

Your mind sinks into dreams once more.

Which dimensions of dreams are you exploring today?

#chill beats for sleep#lofi music for relaxation#Palestine#neural network#downtempo music#machine learning#lofi beats#photography#city explorer#writing community#chillhop beats

2 notes


Tonight I am hunting down Creative Commons-licensed pictures of specific species of venomous and nonvenomous snakes in order to create one of the most advanced, in-depth datasets of venomous and nonvenomous snakes, as well as a test set that will include snakes from both groups across all species. I love snakes a lot, and really all reptiles. It is definitely tedious work, as I have to make sure each picture is cleared before I can use it (ethically), but I am making a lot of progress! I have species such as the King Cobra, Inland Taipan, and Eyelash Pit Viper, to name just a few! Wikimedia Commons has been a huge help!

I'm super excited.

Hope your nights are going well. I am still not feeling good, but jamming + virtual snake hunting is keeping me busy!
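Making sure every species shows up in both the training set and the test set can be sketched as a per-species (stratified) split. The file names, the `venomous` flag, the extra Corn Snake species and the 80/20 ratio below are all hypothetical; the post doesn't describe the author's actual pipeline.

```python
import random
from collections import defaultdict


def stratified_split(records, test_fraction=0.2, seed=0):
    """Split (filename, species, venomous) records per species, so each species
    with at least two images contributes to both the training and test sets."""
    by_species = defaultdict(list)
    for record in records:
        by_species[record[1]].append(record)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for _, items in sorted(by_species.items()):
        rng.shuffle(items)
        # Aim for at least one test image, but always keep one for training.
        n_test = max(1, round(len(items) * test_fraction))
        n_test = min(n_test, len(items) - 1)
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Hypothetical records: (image file, species, venomous?)
records = [(f"king_cobra_{i}.jpg", "King Cobra", True) for i in range(5)]
records += [(f"inland_taipan_{i}.jpg", "Inland Taipan", True) for i in range(5)]
records += [(f"corn_snake_{i}.jpg", "Corn Snake", False) for i in range(5)]

train, test = stratified_split(records)
print(len(train), len(test))  # 12 3
```

Splitting per species rather than over the whole pool means the test set automatically contains both venomous and nonvenomous snakes, as long as each group has species in the dataset.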

#programming#data science#data scientist#data analysis#neural networks#image processing#artificial intelligence#machine learning#snakes#snake#reptiles#reptile#herpetology#animals#biology#science#programming project#dataset#kaggle#coding

43 notes


#coincidence? i think not#my little pony#mlp#twilight sparkle#my little pony fim#my little pony friendship is magic#friendship is magic#machine learning#ml#i am talking#artificial intelligence#neural networks#multilayer perceptron#weirdcore#weird#fav fav fav#me me me

5 notes


The Human Brain vs. Supercomputers: The Ultimate Comparison

Are Supercomputers Smarter Than the Human Brain?

This article delves into the intricacies of this comparison, examining the capabilities, strengths, and limitations of both the human brain and supercomputers.

#human brain#science#nature#health and wellness#skill#career#health#supercomputer#artificial intelligence#ai#cognitive abilities#human mind#machine learning#neural network#consciousness#creativity#problem solving#pattern recognition#learning techniques#efficiency#mindset#mind control#mind body connection#brain power#brain training#brain health#brainhealth#brainpower

5 notes


The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswamy, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswamy's interest in machine learning began when he started writing about it as a science journalist. His software engineering background sparked a desire to understand the technology from the ground up, leading him to teach himself to code and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswamy notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswamy highlights the elegance of machine learning mathematics, which goes beyond the commonly known subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples of the beauty and elegance of machine learning mathematics. For instance, the concept of gradient descent, a fundamental algorithm used in machine learning, is a powerful example of how math can be used to optimize model parameters.
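Gradient descent fits in a few lines. A minimal sketch, assuming a toy problem: fitting a line y = w*x + b to four points by minimizing mean squared error. The data, learning rate and step count are illustrative.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w*x + b by repeatedly stepping against the MSE gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of MSE = (1/n) * sum((w*x + b - y)^2)
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Move each parameter a small step downhill.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Points lying exactly on y = 2x + 1
w, b = gradient_descent([0, 1, 2, 3], [1, 3, 5, 7])
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The learning rate matters: with these four points, a step size much above about 0.2 makes the updates overshoot and diverge instead of settling on the minimum.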

Ananthaswamy emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This is crucial in today's world, where AI is increasingly being used in various applications, from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us to evaluate AI systems more effectively, develop more transparent and explainable AI systems, and address AI bias and ensure fairness in decision-making. As Ananthaswamy notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)


Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)
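To make the point about sparse matrices and optimized computation concrete, here is a minimal sketch of the compressed sparse row (CSR) layout. It stores only the nonzero entries, and its matrix-vector product touches only those entries. This is plain Python for illustration; real projects would typically reach for a library such as SciPy's `scipy.sparse`.

```python
def to_csr(dense):
    """Convert a dense row-major matrix to CSR arrays (values, col_idx, row_ptr)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))  # where the next row's entries start
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Compute A @ x while skipping every zero entry of A."""
    result = []
    for i in range(len(row_ptr) - 1):
        start, end = row_ptr[i], row_ptr[i + 1]
        result.append(sum(values[k] * x[col_idx[k]] for k in range(start, end)))
    return result


A = [
    [4, 0, 0, 0],
    [0, 0, 2, 0],
    [0, 0, 0, 0],
    [1, 0, 0, 3],
]
csr = to_csr(A)
print(csr)                             # ([4, 2, 1, 3], [0, 2, 0, 3], [0, 1, 2, 2, 4])
print(csr_matvec(*csr, [1, 1, 1, 1]))  # [4, 2, 0, 4]
```

Here only 4 of the 16 entries are stored and multiplied. The saving grows with size, which matters for things like user-item interaction matrices in recommendation systems, where almost every entry is zero.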


Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized the field of NLP by outperforming traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures on sequence-to-sequence tasks. The primary innovation of transformers is the self-attention mechanism, which lets the model weigh the importance of different words in the input irrespective of their positions in the sentence. This is particularly useful for capturing long-range dependencies in text, which were a challenge for RNNs due to vanishing gradients.

Transformers have become the standard for machine translation, offering state-of-the-art results in translating between languages. In summarization, they are used for both abstractive and extractive approaches, generating concise summaries of long documents. In question answering, they help in understanding the context of a question and identifying relevant answers in a given text. In sentiment analysis, by modeling the context and nuances of language, they can accurately determine the sentiment behind a text.

Although the architecture was initially designed for sequential data, variants such as Vision Transformers (ViT) have been successfully applied to image recognition by treating images as sequences of patches. Transformers are also used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio data, and the self-attention mechanism can help capture patterns in time series data, leading to more accurate forecasts.

Attention is all you need (Umar Jamil, May 2023)
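The self-attention mechanism described above boils down to softmax(QKᵀ/√d)·V. Here is a minimal, dependency-free sketch of single-head scaled dot-product attention as defined by Vaswani et al. The tiny Q, K and V matrices are made up for illustration; in a real model they are learned linear projections of the token embeddings, and positional encodings are added so that word order is not lost.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: each query mixes the rows of V, weighted
    by how well it matches each key. Position plays no role in the weights."""
    d = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        all_weights.append(weights)
        outputs.append([sum(w * v[j] for w, v in zip(weights, V))
                        for j in range(len(V[0]))])
    return outputs, all_weights


# Three "tokens" with 2-dimensional queries, keys and values.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
out, weights = attention(Q, K, V)
for row in weights:
    print([round(w, 2) for w in row])  # each row of attention weights sums to 1
```

Every query is compared with every key in one step, which is why distant tokens can influence each other directly instead of through a long chain of recurrent updates.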


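Geometric deep learning is a subfield of deep learning that focuses on the geometric structure of data, such as grids, graphs, groups and manifolds, and on building neural networks that respect that structure, typically by making them invariant or equivariant to the data's symmetries. This field has gained significant attention in recent years.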

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)
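A common building block in geometric deep learning is message passing on a graph. Each node updates its features by aggregating its neighbours' features with a symmetric function (here a sum), so relabelling the nodes simply relabels the output. The sketch below is minimal and assumes scalar node features and an unweighted sum update; real graph neural networks add learned weight matrices and nonlinearities.

```python
def message_passing_step(features, edges):
    """One round of sum-aggregation message passing on an undirected graph:
    new_h[v] = h[v] + sum of h[u] over the neighbours u of v."""
    neighbours = {v: [] for v in features}
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)
    return {v: features[v] + sum(features[u] for u in neighbours[v])
            for v in features}


# A path graph a - b - c
features = {"a": 1.0, "b": 2.0, "c": 3.0}
edges = [("a", "b"), ("b", "c")]
print(message_passing_step(features, edges))  # {'a': 3.0, 'b': 6.0, 'c': 5.0}

# Permutation equivariance: renaming the nodes only renames the outputs.
rename = {"a": "x", "b": "y", "c": "z"}
renamed = message_passing_step(
    {rename[v]: h for v, h in features.items()},
    [(rename[u], rename[v]) for u, v in edges],
)
print(renamed)  # {'x': 3.0, 'y': 6.0, 'z': 5.0}
```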


Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)
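Topological message passing extends this idea from nodes and edges to higher-order cells such as triangles. For example, an edge can receive messages both from its boundary (its two endpoint nodes) and from its co-boundary (the filled triangles it belongs to). The toy sketch below, in the spirit of the talk, rests on heavy simplifying assumptions: scalar features, plain sums and no learned weights. It only illustrates which cells exchange messages, not the actual architecture presented.

```python
from itertools import combinations


def edge_update(node_h, edge_h, tri_h):
    """Update each edge feature from its boundary (endpoint nodes) and its
    co-boundary (triangles that contain the edge)."""
    new_edge_h = {}
    for edge, h in edge_h.items():
        boundary_msg = sum(node_h[v] for v in edge)
        coboundary_msg = sum(
            t_h for tri, t_h in tri_h.items()
            if edge in {frozenset(p) for p in combinations(tri, 2)}
        )
        new_edge_h[edge] = h + boundary_msg + coboundary_msg
    return new_edge_h


# A filled triangle (a, b, c) plus a dangling edge (c, d).
node_h = {"a": 1.0, "b": 1.0, "c": 1.0, "d": 1.0}
edge_h = {frozenset(e): 0.0 for e in [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d")]}
tri_h = {frozenset(("a", "b", "c")): 10.0}

new_h = edge_update(node_h, edge_h, tri_h)
print(new_h[frozenset(("a", "b"))])  # 12.0: two endpoints + one triangle
print(new_h[frozenset(("c", "d"))])  # 2.0: two endpoints, no triangle
```

A plain graph sees edges (a, b) and (c, d) as structurally similar; the triangle's message is what lets the model tell an edge inside a filled region from a dangling one.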


Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture

4 notes


Discover how to build a CNN model for skin melanoma classification using over 20,000 images of skin lesions.

We'll begin by diving into data preparation, where we will organize, clean, and prepare the data for the classification model.

Next, we will walk you through the process of building and training a convolutional neural network (CNN) model. We'll explain how to build the layers and optimize the model.

Finally, we will test the model on a new, unseen image and challenge our model.
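For a feel of what the "layers" of a CNN compute, here is a minimal pure-Python sketch of a convolutional layer's core operations: a 2D convolution (strictly, the cross-correlation most deep-learning libraries implement), a ReLU, and 2×2 max pooling. This is not the code from the tutorial, which per its hashtags uses TensorFlow. The tiny image and the edge-detecting kernel are made up for illustration; in a trained CNN the kernel values are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over the image, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap):
    """Zero out negative activations."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """Keep the largest value in each non-overlapping 2x2 window."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]


# A 5x5 grayscale "image" with a bright vertical stripe in column 2.
image = [[0, 0, 9, 0, 0] for _ in range(5)]
# A vertical-edge kernel: responds where brightness increases left to right.
kernel = [[-1, 1], [-1, 1]]

features = relu(conv2d(image, kernel))
print(features)               # [[0, 18, 0, 0], [0, 18, 0, 0], [0, 18, 0, 0], [0, 18, 0, 0]]
print(max_pool2x2(features))  # [[18, 0], [18, 0]]
```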

Check out our tutorial here: https://youtu.be/RDgDVdLrmcs

Enjoy

Eran

#Python #Cnn #TensorFlow #deeplearning #neuralnetworks #imageclassification #convolutionalneuralnetworks #SkinMelanoma #melonomaclassification

#artificial intelligence#convolutional neural network#deep learning#python#tensorflow#machine learning#Youtube

3 notes


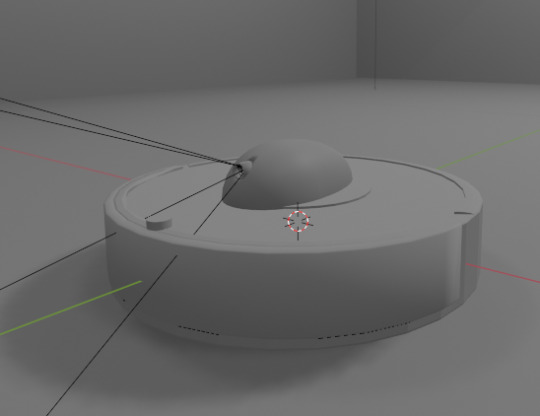

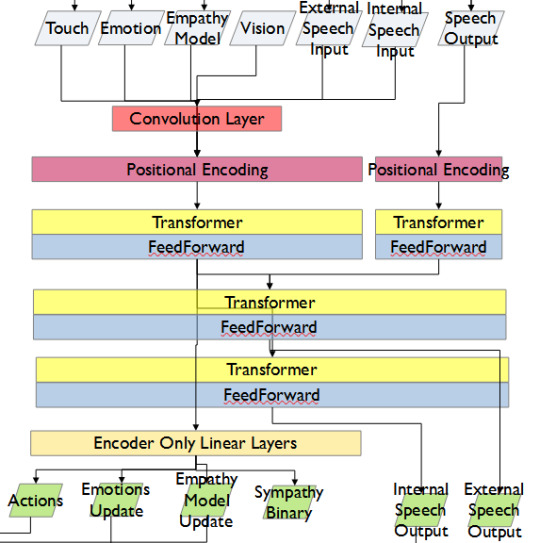

Applied AI - Integrating AI With a Roomba

AKA: What I have been doing for the past month and a half

Everyone loves Roombas. Cats. People. Cat-people. There have been a number of Roomba hacks posted online over the years, but an often overlooked point is how very easy it is to use Roombas for cheap applied robotics projects.

Continuing on from a project done for academic purposes, today's showcase is a work in progress: a real-world application of speech-to-text, actionable, transformer-based AI models. MARVINA (Multimodal Artificial Robotics Verification Intelligence Network Application) is being applied, in this case, to this Roomba, modified with a Raspberry Pi 3B, a 1080p camera, and a combined mic and speaker system.

The hardware specifics have been a fun challenge over the past couple of months, especially relating to the construction of the 3D mounts for the camera and audio input/output system.

Roomba models are particularly well suited to tinkering: the serial connector allows external hardware to be interfaced, and iRobot (the manufacturer) publishes a full manual of commands that can be sent to the Roomba itself. It can even play entire songs! (Highly recommend)
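As an example of how simple that serial interface is, commands are short byte sequences: an opcode followed by data bytes. The sketch below builds a few of them in Python, based on my reading of iRobot's Open Interface specification (Start = 128, Safe = 131, Drive = 137, Song = 140, Play = 141). Double-check the opcodes, value ranges and baud rate against the manual for your Roomba model before sending anything. The sketch only builds the bytes; writing them to the port would typically go through a serial library such as pySerial.

```python
import struct

# Opcodes as given in the iRobot Roomba Open Interface (OI) specification;
# verify against the manual for your specific model.
START, SAFE, DRIVE, SONG, PLAY = 128, 131, 137, 140, 141
STRAIGHT = 32767  # special radius value meaning "drive straight"


def drive(velocity_mm_s, radius_mm=STRAIGHT):
    """Drive command: opcode + signed 16-bit velocity and radius, high byte first."""
    if not -500 <= velocity_mm_s <= 500:
        raise ValueError("velocity must be within -500..500 mm/s")
    return struct.pack(">Bhh", DRIVE, velocity_mm_s, radius_mm)


def define_song(song_number, notes):
    """Song command: notes are (MIDI note number, duration in 1/64 s) pairs."""
    if not 1 <= len(notes) <= 16:
        raise ValueError("a song holds 1 to 16 notes")
    payload = [SONG, song_number, len(notes)]
    for note, duration in notes:
        payload += [note, duration]
    return bytes(payload)


def play_song(song_number):
    """Play command: plays a song previously stored with define_song."""
    return bytes([PLAY, song_number])


# Wake the OI, enter Safe mode, then drive forward at 200 mm/s.
commands = bytes([START, SAFE]) + drive(200)
print(commands.hex(" "))  # 80 83 89 00 c8 7f ff
```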

Scope:

Current:

The initial aim of this project is to replicate the verbal command system that powers the current virtual-environment-based system.

This has been achieved with the custom MARVINA AI system, which is interfaced with both the PocketSphinx speech-to-text (SpeechRecognition · PyPI) and Piper-TTS text-to-speech (GitHub - rhasspy/piper: A fast, local neural text to speech system) AI systems. This gives the AI the ability to carry out one of 8 commands, give verbal output, and use a limited-training version of the emotional-empathy system.

This has mostly been achieved. Now that I know it's functional, I can justify spending money on a better microphone/speaker system so I don't have to shout at the poor thing!

The latency on the Raspberry Pi 3B for each output is a very sprightly 75 ms! This leaves plenty of headroom within the current AI input "framerate" of one input every 500 ms.
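The post doesn't show how MARVINA maps a transcript to one of its 8 commands. Purely as an illustration, a simple keyword lookup over the speech-to-text output could look like the sketch below. The command names and keywords are hypothetical, and the real system is described as transformer-based rather than keyword-based.

```python
import re

# Hypothetical command vocabulary; MARVINA's actual 8 commands aren't listed.
COMMANDS = {
    "forward": {"forward", "ahead", "go"},
    "backward": {"back", "backward", "reverse"},
    "left": {"left"},
    "right": {"right"},
    "stop": {"stop", "halt", "wait"},
    "dock": {"dock", "home", "charge"},
    "clean": {"clean", "vacuum"},
    "sing": {"sing", "song", "music"},
}


def transcript_to_command(transcript):
    """Return the first command whose keywords appear in the transcript, else None."""
    words = set(re.findall(r"[a-z]+", transcript.lower()))
    for command, keywords in COMMANDS.items():
        if words & keywords:
            return command
    return None


print(transcript_to_command("Please go FORWARD a bit"))  # forward
print(transcript_to_command("Could you sing for me?"))   # sing
print(transcript_to_command("What's the weather?"))      # None
```

Returning `None` for unrecognised speech gives the robot a natural hook for asking the person to repeat themselves, which helps with a cheap microphone.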

Future - Software:

Subsequent testing will imbue the Roomba with a greater sense of abstracted "emotion": the AI will have a ground set of emotional state variables that decide how it, and the person interacting with it, are "feeling" at any given point in time.

This, ideally, is to give the AI system a sense of motivation. The AI is essentially being given separate drives for social connection, curiosity and other emotional states. The programming will be designed to optimise for those, while the emotional model will regulate this on a separate, biologically based system of under- and over-stimulation.

In other words, a motivational system that incentivises only up to a point.

The current version does have such a system implemented, but it has had only very limited testing data. One of the key parts of this project's success will be to generatively create a training data set that allows for high-quality interactions.
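One way to picture "a motivational system that incentivises only up to a point" is a drive whose reward rises with stimulation up to a preferred set point and falls off beyond it. Below is a minimal sketch with a hypothetical Gaussian-shaped reward curve and made-up parameter values; the post doesn't say how MARVINA-R's emotional model is actually formulated.

```python
import math


def drive_reward(stimulation, set_point=0.6, tolerance=0.2):
    """Reward peaks at the set point and decays for under- or over-stimulation."""
    return math.exp(-((stimulation - set_point) ** 2) / (2 * tolerance ** 2))


def pick_action(current_levels, action_effects):
    """Choose the action whose predicted stimulation levels maximise total reward.
    current_levels: {drive: level}; action_effects: {action: {drive: delta}}."""
    def total_reward(action):
        effects = action_effects[action]
        return sum(drive_reward(level + effects.get(drive, 0.0))
                   for drive, level in current_levels.items())
    return max(action_effects, key=total_reward)


# Hypothetical state: plenty of social contact already, curiosity under-fed.
levels = {"social": 0.9, "curiosity": 0.2}
effects = {
    "approach_person": {"social": +0.3},
    "explore_room": {"curiosity": +0.4},
    "rest": {},
}
print(pick_action(levels, effects))  # explore_room
```

Because more social stimulation would push that drive further past its set point, seeking company scores worse than exploring here, even though company is rewarding in moderation.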

The future of MARVINA-R will involve expanding its abstracted equivalent of "Theory of Mind": in other words, having MARVINA-R "imagine" a future that could exist in order to consider its choices and what actions it wishes to take.

This system is based, in part, on the Dynalang model created by Lin et al. (2023) at UC Berkeley ([2308.01399] Learning to Model the World with Language (arxiv.org)), but with a key difference: MARVINA-R will be running two neural networks, one based on short-term memory and the second based on long-term memory. Decisions will be made based on which is most appropriate, and on how similar the current input data is to the world model generated by each network.

Once at rest, MARVINA-R will effectively "sleep", essentially keeping the most important memories, and consolidating them into the long-term network if they lead to better outcomes.
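Choosing between the two networks by how familiar the current input looks to each one could be sketched as a similarity comparison. The prototype vectors, the use of cosine similarity and the function names here are all hypothetical stand-ins; the post doesn't specify how similarity to each world model is measured.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def choose_memory_model(observation, prototypes):
    """Pick the model whose summary of past inputs is most similar to the
    current observation. prototypes: {model name: prototype vector}."""
    return max(prototypes,
               key=lambda name: cosine_similarity(observation, prototypes[name]))


# Hypothetical summaries of what each network has mostly seen.
prototypes = {
    "short_term": [0.9, 0.1, 0.0],  # e.g. the last few minutes in one room
    "long_term": [0.2, 0.5, 0.8],   # e.g. averaged over weeks of operation
}
print(choose_memory_model([0.8, 0.2, 0.1], prototypes))  # short_term
print(choose_memory_model([0.1, 0.4, 0.9], prototypes))  # long_term
```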

This will allow the system to be tailored beyond its current limitations - where it can be designed to be motivated by multiple emotional "pulls" for its attention.

This does, however, also increase the number of AI outputs required per action (by a factor of about 10 to 100), so this will need to be carefully considered in terms of the software and hardware requirements.
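Using the figures from the post (75 ms per AI output on the Raspberry Pi 3B, one input every 500 ms), a quick back-of-the-envelope check shows why a 10 to 100× increase matters. It assumes the extra outputs run one after another rather than in parallel.

```python
LATENCY_MS = 75  # per AI output on the Raspberry Pi 3B (figure from the post)
FRAME_MS = 500   # current AI input "framerate" (figure from the post)


def action_budget(latency_ms, frame_ms, factors):
    """For each multiplier, return (factor, ms per action, fits in one frame?).
    Assumes the extra AI outputs run one after another, not in parallel."""
    return [(f, latency_ms * f, latency_ms * f <= frame_ms) for f in factors]


for factor, ms, fits in action_budget(LATENCY_MS, FRAME_MS, (1, 10, 100)):
    verdict = "fits" if fits else "exceeds"
    print(f"{factor:>3}x: {ms:>5} ms per action -> {verdict} a {FRAME_MS} ms frame")
```

Even the low end (750 ms) overruns the 500 ms frame, and the high end (7.5 s) would need much faster hardware, parallel inference, or a much slower decision rate.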

Results So Far:

Here is the current prototyping setup for MARVINA-R. As of a couple of weeks ago, I was able to run the entire Raspberry Pi and applied hardware setup and successfully interface with the robot with the components disconnected.

I'll upload a video of the final stage of initial testing in the near future - it's great fun!

The main issues really do come down to hardware limitations. The microphone is a cheap ~$6 thing from Amazon and requires you to shout at the poor robot to get it to do anything! The second limitation currently comes from the text-to-speech output, which has a lag of around 4 seconds from speaking to output. Not terrible, but it can be improved.

To my mind, the proof of concept has been created - this is possible. Now I can justify further time, and investment, for better parts and for more software engineering!

#robot#robotics#roomba#roomba hack#ai#artificial intelligence#machine learning#applied hardware#ai research#ai development#cybernetics#neural networks#neural network#raspberry pi#open source

8 notes


The Ai Peel

Welcome to The Ai Peel!

Dive into the fascinating world of artificial intelligence with us. At The Ai Peel, we unravel the layers of AI to bring you insightful content, from beginner-friendly explanations to advanced concepts. Whether you're a tech enthusiast, a student, or a professional, our channel offers something for everyone interested in the rapidly evolving field of AI.

What You Can Expect:

AI Basics: Simplified explanations of fundamental AI concepts.

Tutorials: Step-by-step guides on popular AI tools and techniques.

Latest Trends: Stay updated with the newest advancements and research in AI.

In-depth Analyses: Explore detailed discussions on complex AI topics.

Real-World Applications: See how AI is transforming industries and everyday life.

Join our community of AI enthusiasts and embark on a journey to peel back the layers of artificial intelligence.

Don't forget to subscribe and hit the notification bell so you never miss an update!

#Artificial Intelligence#Machine Learning#AI Tutorials#Deep Learning#AI Basics#AI Trends#AI Applications#Neural Networks#AI Technology#AI Research

3 notes


It was just me and the six-legged goat in the back of the truck. The goat had been put together to army specs, so no pink was involved. Everything was drab olive, smoky, dirty—the colors of war. I had been told that the six-legger's control mechanism consisted of cultivated horse nerves specially trained for the environment. The original horse came from local stock, so they were used to the mountains, Uwe said.

"Harmony" by Project Itoh, 2008

In the marvellous science fiction novel "Harmony", Japanese author Project Itoh describes autonomous vehicles, like airplanes or legged robots, that have animal brain tissue in their control mechanisms, so that their computer systems have quick, almost intuitive access to information about how to use wings or legs. In 2008, when "Harmony" was first published, this must have seemed a weird but fascinating thought. Bionics taken to the extreme.

I first read "Harmony" in 2018, after watching the brilliantly faithful anime adaptation by Michael Arias and Takashi Nakamura of Studio 4°C. Ever since then, I increasingly find myself thinking about machine learning and artificial neural networks whenever I'm reminded of "Harmony", and about how very clever Itoh's vision of the future was and still is.

No, the computers of the future won't need real brain tissue. But simulating the neural network of a goat to enable a mobile robot to climb some rocks? Pretty clever.

Pretty clever, Project Itoh.

#he's a genius#project itoh#keikaku itoh#harmony#project itoh harmony#book recommendations#science fiction#robotics#bionics#neural network#machine learning#schroed's thoughts

3 notes


#accounting#python#linux#machine learning#marketing#neural network#poster#programming#rpg maker#sales#digital painting#digital illustration#digital drawing#digitalmarketing#drawing#artists on tumblr#digital art#procreate#lineart#social marketing#social media#socialism#social anxiety#social issues#social justice#global news#trading view#news 1#stablecoins#no homepage

2 notes


A fun way to think about this, for people who don’t know what an algorithm really is: “algorithm” is basically a fancy word for “recipe”. It’s a set of instructions to take a set of inputs (ingredients) and produce a desired output.

When a site produces an algorithm to recommend content, the input comes from the information they have about you, other users, and the content they are ranking, and the output is an ordered list of top picks for you. It basically takes in everything and then the person writing the algorithm chooses which inputs to actually use.

Imagine it like this: the entire grocery store is available to be used, so the person writing the recipe lists out only the specific ingredients used within the recipe's steps.

Then they write all the steps in a way that another cook could understand, maybe with helpful notes (comments) along the way describing why they did certain things. And in the end they have a recipe that someone else could follow, make informed changes to, explain the reasoning for decisions, etc.

That’s a traditional algorithm. Sorting by a single field like kudos is the simplest form of this, like a recipe for toast.

Ingredients:

Bread, 1 slice

Instructions:

Put the bread in the toaster.

Pull down the lever.

Wait until it pops up.

Enjoy your toast!

Is that a recipe? Yes, clearly.
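In code, the toast-level recipe really is that short. A minimal sketch in Python, with made-up fic titles and kudos counts:

```python
fics = [
    {"title": "Fic A", "kudos": 120},
    {"title": "Fic B", "kudos": 4500},
    {"title": "Fic C", "kudos": 980},
]

# The whole "algorithm": order by one field, highest first.
by_kudos = sorted(fics, key=lambda fic: fic["kudos"], reverse=True)
print([fic["title"] for fic in by_kudos])  # ['Fic B', 'Fic C', 'Fic A']
```

Every ingredient is visible and every step can be explained: a fic is on top because it has the most kudos, nothing more.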

Now let’s consider what the equivalent of ML-based recommendation systems (the key differentiator of what people often refer to as “the algorithm”) is:

Ingredients:

The entire grocery store

Instructions:

Put the grocery store into THE MACHINE

THE MACHINE should be set to 0.135, 0.765, 0.474, 0.8833… (this list continues for hundreds of entries)

Consume your personalized Feed™️

Is that a recipe? Technically yes.
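And a toy version of THE MACHINE, for contrast. This is a deliberately tiny, hypothetical sketch that reuses the first four numbers from the recipe above as "learned" weights. Real recommender systems learn thousands to billions of weights from user behaviour, but the shape is similar: each item becomes a list of numbers, each number is multiplied by a learned weight, and individual weights rarely map to a reason a person could explain.

```python
# Hypothetical learned weights; in a real system these come out of training,
# not out of someone deciding them by hand.
WEIGHTS = [0.135, 0.765, 0.474, 0.8833]


def score(features):
    """Relevance score = weighted sum of an item's feature values."""
    return sum(w * x for w, x in zip(WEIGHTS, features))


# Each item is reduced to feature numbers (e.g. derived from tags, past clicks,
# what similar users liked); what each position means is opaque here.
items = {
    "Fic A": [1.0, 0.2, 0.0, 0.5],
    "Fic B": [0.0, 0.9, 0.3, 0.1],
    "Fic C": [0.4, 0.1, 0.8, 0.9],
}
feed = sorted(items, key=lambda name: score(items[name]), reverse=True)
print(feed)  # ['Fic C', 'Fic B', 'Fic A']
```

Unlike the kudos sort, asking "why is Fic C on top?" only gets you "because 0.4×0.135 + 0.1×0.765 + … came out highest".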

“AO3 needs an algorithm.” No, it doesn’t. Part of the AO3 experience is scrolling through pages of cursed content looking for the one fic you want to read, until you get distracted by a summary so cursed that it completely derails your entire search.

#algorithm#the algorithm#feed#programming#computer science#cs#machine learning#deep learning#neural networks

91K notes
