#apr 4 2023

Text

136649 Poll (redo)

I should have also given the option to combine those two ideas, so here's another version of the same poll:

38 notes

Text

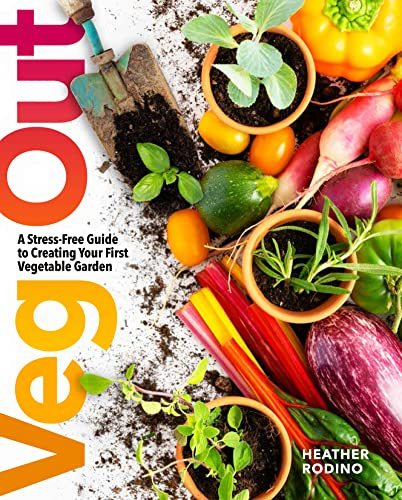

Veg Out

Veg Out is the perfect gardening book for newbies who want an edible garden (or a patio pot or two) but have never read another gardening book. It is clearly laid out with plenty of photos. The steps are easy to follow. There is an extensive section with details on common plants. Overall, a great resource for individuals newly interested in growing their own food. 4 stars!

Thanks to…

0 notes

Text

awstenknight: WATERPARKS ALBUM IN 3 DAYS SWIPE FOR SURPRISES

[Apr 11, 2023]

233 notes

Text

he's lying to himself he's very much also a dumb virgin

#art#my art#apr 2023#persona#p5#goro akechi#royal trio#hes also lying to himself in the sense that he loves them really /p#(/p intent w sumi as always but tag how you like)#on the topic of greentexts do you think he would use 4/ch/an. not on like... /pol/ or whatever but just to argue about shit#reddit yeah sure but i do think the ability to send anonymous death threats without getting banned would b very alluring to him#edit: im entering this into my Internal Akechi Lore now actually i think he leaks info about shidos campaign on 4chan#and gets brushed off as a conspiracy theorist but it blows up after shidos arrested

165 notes

Photo

#jwight#jim dwight#schrupert#jim x dwight#the office tv#jim and dwight#dwight schrute#jim halpert#the office#office tv#original post#jim&dwight#date: 4 apr 2023#ep 9x11

180 notes

Text

Denso apr Racing climbed the standings in the 2023 Super Taikyu Fuji 4 Hour to top the field with their winning N°31 Lexus RC F GT3 at Fuji Speedway JP.

#lexus rc f gt3#4 hours of fuji#denso apr racing#fuji speedway#super taikyu#2023 supertaikyu#スーパー耐#富士スピードウェイ#s耐

8 notes

Note

they have a point though. you wouldn't need everyone to accommodate you if you just lost weight, but you're too lazy to stick to a healthy diet and exercise. it's that simple. I'd like to see you back up your claims, but you have no proof. you have got to stop lying to yourselves and face the facts

Must I go through this again? Fine. FINE. You guys are working my nerves today. You want to talk about facing the facts? Let's face the fucking facts.

In 2022, the US market cap of the weight loss industry was $75 billion [1, 3]. In 2021, the global market cap of the weight loss industry was estimated at $224.27 billion [2].

In 2020, the market shrank by about 25%, but it has rebounded and then some since then [1, 3]. By 2030, the global weight loss industry is expected to be valued at $405.4 billion [2]. If diets really worked, this industry would fall overnight.

1. LaRosa, J. March 10, 2022. "U.S. Weight Loss Market Shrinks by 25% in 2020 with Pandemic, but Rebounds in 2021." Market Research Blog.

2. Staff. February 09, 2023. "[Latest] Global Weight Loss and Weight Management Market Size/Share Worth." Facts and Factors Research.

3. LaRosa, J. March 27, 2023. "U.S. Weight Loss Market Partially Recovers from the Pandemic." Market Research Blog.

Over 50 years of research conclusively demonstrates that virtually everyone who intentionally loses weight by manipulating their eating and exercise habits will regain the weight they lost within 3-5 years. And 75% will actually regain more weight than they lost [4].

4. Mann, T., Tomiyama, A.J., Westling, E., Lew, A.M., Samuels, B., Chatman, J. (2007). "Medicare’s Search For Effective Obesity Treatments: Diets Are Not The Answer." The American Psychologist, 62, 220-233. U.S. National Library of Medicine, Apr. 2007.

The annual odds of a fat person attaining a so-called “normal” weight and maintaining that for 5 years are approximately 1 in 1000 [5].

5. Fildes, A., Charlton, J., Rudisill, C., Littlejohns, P., Prevost, A.T., & Gulliford, M.C. (2015). “Probability of an Obese Person Attaining Normal Body Weight: Cohort Study Using Electronic Health Records.” American Journal of Public Health, July 16, 2015: e1–e6.

Doctors became so desperate that they resorted to amputating parts of the digestive tract (bariatric surgery) in the hopes that it might finally result in long-term weight-loss. Except that doesn’t work either. [6] And it turns out it causes death [7], addiction [8], malnutrition [9], and suicide [7].

6. Magro, Daniéla Oliveira, et al. “Long-Term Weight Regain after Gastric Bypass: A 5-Year Prospective Study - Obesity Surgery.” SpringerLink, 8 Apr. 2008.

7. Omalu, Bennet I, et al. “Death Rates and Causes of Death After Bariatric Surgery for Pennsylvania Residents, 1995 to 2004.” Jama Network, 1 Oct. 2007.

8. King, Wendy C., et al. “Prevalence of Alcohol Use Disorders Before and After Bariatric Surgery.” Jama Network, 20 June 2012.

9. Gletsu-Miller, Nana, and Breanne N. Wright. “Mineral Malnutrition Following Bariatric Surgery.” Advances In Nutrition: An International Review Journal, Sept. 2013.

Evidence suggests that repeatedly losing and gaining weight is linked to cardiovascular disease, stroke, diabetes and altered immune function [10].

10. Tomiyama, A Janet, et al. “Long‐term Effects of Dieting: Is Weight Loss Related to Health?” Social and Personality Psychology Compass, 6 July 2017.

Prescribed weight loss is the leading predictor of eating disorders [11].

11. Patton, GC, et al. “Onset of Adolescent Eating Disorders: Population Based Cohort Study over 3 Years.” BMJ (Clinical Research Ed.), 20 Mar. 1999.

The idea that “obesity” is unhealthy and can cause or exacerbate illnesses is a biased misrepresentation of the scientific literature that is informed more by bigotry than credible science [12].

12. Medvedyuk, Stella, et al. “Ideology, Obesity and the Social Determinants of Health: A Critical Analysis of the Obesity and Health Relationship” Taylor & Francis Online, 7 June 2017.

“Obesity” has no proven causative role in the onset of any chronic condition [13, 14] and its appearance may be a protective response to the onset of numerous chronic conditions generated from currently unknown causes [15, 16, 17, 18].

13. Kahn, BB, and JS Flier. “Obesity and Insulin Resistance.” The Journal of Clinical Investigation, Aug. 2000.

14. Cofield, Stacey S, et al. “Use of Causal Language in Observational Studies of Obesity and Nutrition.” Obesity Facts, 3 Dec. 2010.

15. Lavie, Carl J, et al. “Obesity and Cardiovascular Disease: Risk Factor, Paradox, and Impact of Weight Loss.” Journal of the American College of Cardiology, 26 May 2009.

16. Uretsky, Seth, et al. “Obesity Paradox in Patients with Hypertension and Coronary Artery Disease.” The American Journal of Medicine, Oct. 2007.

17. Mullen, John T, et al. “The Obesity Paradox: Body Mass Index and Outcomes in Patients Undergoing Nonbariatric General Surgery.” Annals of Surgery, July 2005.

18. Tseng, Chin-Hsiao. “Obesity Paradox: Differential Effects on Cancer and Noncancer Mortality in Patients with Type 2 Diabetes Mellitus.” Atherosclerosis, Jan. 2013.

Fatness was associated with only one-third of the deaths that previous research estimated, and being “overweight” conferred no increased risk at all and may even be a protective factor against all-cause mortality relative to lower weight categories [19].

19. Flegal, Katherine M. “The Obesity Wars and the Education of a Researcher: A Personal Account.” Progress in Cardiovascular Diseases, 15 June 2021.

Studies have observed that about 30% of so-called “normal weight” people are “unhealthy” whereas about 50% of so-called “overweight” people are “healthy”. Thus, using the BMI as an indicator of health results in the misclassification of some 75 million people in the United States alone [20].

20. Rey-López, JP, et al. “The Prevalence of Metabolically Healthy Obesity: A Systematic Review and Critical Evaluation of the Definitions Used.” Obesity Reviews : An Official Journal of the International Association for the Study of Obesity, 15 Oct. 2014.

While epidemiologists use BMI to calculate national obesity rates (nearly 35% for adults and 18% for kids), the distinctions can be arbitrary. In 1998, the National Institutes of Health lowered the overweight threshold from 27.8 to 25—branding roughly 29 million Americans as fat overnight—to match international guidelines. But critics noted that those guidelines were drafted in part by the International Obesity Task Force, whose two principal funders were companies making weight loss drugs [21].

21. Butler, Kiera. “Why BMI Is a Big Fat Scam.” Mother Jones, 25 Aug. 2014.

Body size is largely determined by genetics [22].

22. Wardle, J., Carnell, S., Haworth, C., Plomin, R. “Evidence for a strong genetic influence on childhood adiposity despite the force of the obesogenic environment.” American Journal of Clinical Nutrition, Vol. 87, No. 2, Pages 398-404, February 2008.

Healthy lifestyle habits are associated with a significant decrease in mortality regardless of baseline body mass index [23].

23. Matheson, Eric M, et al. “Healthy Lifestyle Habits and Mortality in Overweight and Obese Individuals.” Journal of the American Board of Family Medicine : JABFM, U.S. National Library of Medicine, 25 Feb. 2012.

Weight stigma itself is deadly. Research shows that weight-based discrimination increases risk of death by 60% [24].

24. Sutin, Angelina R., et al. “Weight Discrimination and Risk of Mortality.” Association for Psychological Science, 25 Sept. 2015.

Fat stigma in the medical establishment [25] and society at large arguably [26] kills more fat people than fat does [27, 28, 29].

25. Puhl, Rebecca, and Kelly D. Brownell. “Bias, Discrimination, and Obesity.” Obesity Research, 6 Sept. 2012.

26. Engber, Daniel. “Glutton Intolerance: What If a War on Obesity Only Makes the Problem Worse?” Slate, 5 Oct. 2009.

27. Teachman, B. A., Gapinski, K. D., Brownell, K. D., Rawlins, M., & Jeyaram, S. (2003). Demonstrations of implicit anti-fat bias: The impact of providing causal information and evoking empathy. Health Psychology, 22(1), 68–78.

28. Chastain, Ragen. “So My Doctor Tried to Kill Me.” Dances With Fat, 15 Dec. 2009.

29. Sutin, Angelina R, Yannick Stephan, and Antonio Terracciano. “Weight Discrimination and Risk of Mortality.” Psychological Science, 26 Nov. 2015.

There's my "proof." Where is yours?

#inbox#fat liberation#fat acceptance#fat activism#anti fatness#anti fat bias#anti diet#resources#facts#weight science#save

8K notes

Link

裕子(ヒロコ)🌸 @hiroko_fujimaki

2023/04/21 1:52:04

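A notification like this showed up at this hour❗️ I don't remember that at all, but if the notification came, I guess that's how it is⁉️ I can only get three and a half hours of sleep now, but I want to savor today's happiness and fall asleep to lovely dreams🎵 Praying I don't oversleep··😅

Do you remember the day you joined Twitter? #MyTwitterAnniversary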

https://twitter.com/hiroko_fujimaki/status/1649093586586320896

0 notes

Text

Apr 4 - Toilet Cleaning Tuesday plus also went through the fridge and threw out some things that were getting rather elderly. Still a couple more things to go, but what I already removed topped off a composting bag. What remains can wait until Thursday night when I’ll be getting additional compostables and recyclables ready to go out the next morning.

Had french fries and a chicken burger for supper.

0 notes

Text

Earthquake

This is the kind of quake ya want to feel.

Big enough to be exciting.

Small enough that no injuries or major damage reported.

About a minute afterwards I heard folks down the street come outdoors and loudly say Earthquake.

Like, okay, yeah, I got that figured out, thanks ;)

#earthquake#life's little excitements#I don't know those neighbors#so I don't know if they were just funnin'#commented Apr 4 2023

1 note

Note

Hello! Hope you're having a nice day. I just wanted to ask something about Charles Phipps because it's been one of my shower thoughts lol. So we know Charles Grey is an earl right? How about Charles Phipps? I don't think there's a mention of his peerage title or anything. He's only called by 'sir' and if he was from a noble family afaik he would be called 'Lord'. Is it possible that he's not from a noble family? No one mentioned he was an earl or anything. It doesn't seem like he's the son of a noble either. Just very curious about this minuscule detail.

Is Phipps a noble?

We must assume that Charles Phipps is in the nobility. And here's why: generally speaking, any high-ranking servant of the queen is going to be of high birth. Even the queen's chambermaids or ladies-in-waiting are in the peerage. Victoria even calls him Phipps, which places him higher than he would have been if she'd called him Charles.

Charles Grey becomes earl when his father dies, which occurs during the manga series. Idk Phipps' rank, but I suspect he might be even higher than an earl. However, if his father is still alive, he won't have the title just yet. He'd just be Lord Phipps... but the queen doesn't have to call him by that, and she definitely seems comfortable enough skipping the formality.

I have not noticed him being called sir, but if that's true, then he's knighted. And I'd believe it.

Anyway, that link also mentions how the court is filled by nepotism. Grey and Phipps likely got their positions because of their noble parents. They had to train, of course, and they must have various skills already, but they would have been selected among the sons of noble families.

#black butler#kuroshitsuji#charles phipps#charles grey#double charles#nobility#peerage#anon asks#i answer#answered asks#apr 4 2023#sorry for the delay

41 notes

Text

Dirty Laundry

Even in suburban Ireland, stay-at-home wives with young children hide behind their Instagram filters while their real home life falls apart. Ciara and Lauren are neighbors—but certainly not friends. Lauren’s life is chaotic but is based on love. Ciara, an important, at least in her own mind, Instagram influencer, has an enviable life from the outside. But inside her home, the Dirty Laundry is…

0 notes

Text

mikeyway: 👀 👀 👀 @fender

[Apr 11, 2023]

122 notes

Text

The road is the same

My feet are enjoying the stroll

Thank you, forgetfulness

- الأخضر بركة

0 notes

Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.
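To see how quickly the sums can turn, here is a rough back-of-the-envelope sketch. Every figure in it is invented for illustration, and `net_savings` is a hypothetical helper, not anyone's real accounting model:

```python
def net_savings(replaced_workers, worker_cost, reviewers, reviewer_cost, ai_cost):
    """Annual savings from swapping workers for an AI plus human reviewers.

    A negative result means the swap costs more than it saves.
    """
    return replaced_workers * worker_cost - (reviewers * reviewer_cost + ai_cost)


# All figures below are made up for illustration.
WORKER_COST = 150_000  # yearly cost of one coder (also used for a reviewer)
AI_COST = 50_000       # yearly cost of the AI tooling

# One reviewer keeps up with the output of five replaced coders: a saving.
print(net_savings(5, WORKER_COST, 1, WORKER_COST, AI_COST))  # 550000

# Two reviewers are needed to cover one replaced coder: a loss.
print(net_savings(1, WORKER_COST, 2, WORKER_COST, AI_COST))  # -200000
```

The only thing that separates the two cases is how much AI output a single reviewer can reliably check, which is exactly where the vigilance problem bites.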

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaritized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
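A toy version of "next-word guessing" fits in a few lines. This is only a sketch of the statistical idea, assuming a simple bigram count over a made-up corpus; real chatbots use vastly larger models and training sets, and the function names here are hypothetical:

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def guess_next(follows, word):
    """Return the statistically most likely next word, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Toy corpus, invented for illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigrams(corpus)
print(guess_next(model, "the"))  # cat (it follows "the" most often here)
```

The guesser has no notion of whether "cat" is true or appropriate. It only knows that "cat" is the most common continuation in what it was fed.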

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/
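One mechanical backstop for this particular kind of error is to check every dependency name against a list that humans have already vetted, rather than trusting a reviewer's eye. The sketch below is one hedged way to do that: the allowlist, the sample requirements text, and the helper names are all hypothetical, and treating `huggingface_hub` as the real package name is an assumption on my part, since the story above only says the real name is "slightly different":

```python
import re

# Hypothetical allowlist of packages a team has vetted (illustrative only).
# Assumption: "huggingface_hub" stands in for the real, differently named library.
VETTED_PACKAGES = {"requests", "numpy", "huggingface_hub"}


def normalize(name):
    """Normalize a package name as PEP 503 does: lowercase, runs of -_. become -."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted_requirements(requirements_text, vetted=VETTED_PACKAGES):
    """Return the requirement names that are not on the vetted list."""
    vetted_normalized = {normalize(p) for p in vetted}
    flagged = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        # Keep only the name, before any extras, version specifier, or marker.
        name = re.split(r"[\[<>=!~; ]", line, maxsplit=1)[0]
        if normalize(name) not in vetted_normalized:
            flagged.append(name)
    return flagged


requirements = """
requests>=2.31
huggingface-cli   # plausible-looking name suggested by a chatbot
numpy
"""
print(unvetted_requirements(requirements))  # ['huggingface-cli']
```

A check like this only catches names nobody has approved. It does nothing for the subtler errors described above, where the plausible-but-wrong thing is not a name you can look up.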

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it, is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

733 notes

Text

The 2023 Super Taikyu series comes to a close with Denso apr Racing finding their N°31 Lexus RC F GT3 triumphant at the Fuji 4 Hour.

#lexus rc f gt3#fuji 4 hour#apr racing#fuji speedway#super taikyu#2023 fuji4h#2023 supertaikyu#S耐#スーパー耐#富士スピードウェイ

12 notes
