#aware of the python programming language

Explore tagged Tumblr posts

Text

i feel like. hmm. look. i'm a purely hobbyist, with no education or professional training in the field, so i might be talking out of my ass. but the fact that running python programs is extraordinarily sensitive to the specific installation of python you have, to the point where it now makes sense more often than not to create bespoke environments for each python program you want to run represents some kind of structural or conceptual failure. like. certainly the strength of python is the ability to create and easily use various modules/packages/whatever. but the fact that python scripts will cry and shit the bed and fail to work unless you have exactly the right little walled garden for them to frolick in seems like a problem. a fundamental failure of what is supposed to be a highly portable language. do other programming languages have this problem and i'm just not aware of it? or does it all come down to the fact that python isn't a compiled language like c++?

117 notes

·

View notes

Note

Two questions: 1: did you actually make ~ATH, and 2: what was that Sburb text-game that you mentioned on an ask on another blog

While I was back in highschool (iirc?) I made a thing which I titled “drocta ~ATH”, which is a programming language with the design goals of:

1: being actually possible to implement, (and therefore, for example, not having things be tied to the lifespans of external things)

2: being Turing complete, and accept user input and produce output for the user to read, such that in principle one could write useful programs in it (though it is not meant to be practical to do so).

3: matching how ~ATH is depicted in the comic, as closely as I can, with as little as possible that I don’t have some justification for based on what is shown in the comic (plus the navigation page for the comic, which depicts a “SPLIT” command). For example, I avoid assuming that the language has any built-in concept of numbers, because the comic doesn’t depict any, and I don’t need to assume it does, provided I make some reasonable assumptions about what BIFURCATE (and SPLIT) do, and also assume that the BIFURCATE command can also be done in reverse.

However, I try to always make a distinction between “drocta ~ATH”, which is a real thing I made, and “~ATH”, which is a fictional programming language in which it is possible to write programs that e.g. wait until the author’s death and the run some code, or implement some sort of curse that involves the circumstantial simultaneous death of two universes.

In addition, please be aware that the code quality of my interpreter for drocta ~ATH, is very bad! It does not use a proper parser or the like, and, iirc (it has probably been around a decade since I made any serious edits to the code, so I might recall wrong), it uses the actual line numbers of the file for the control flow? (Also, iirc, the code was written for python 2.7 rather than for python 3.) At some point I started a rewrite of the interpreter (keeping the language the same, except possibly fixing bugs), but did not get very far.

If, impossibly, I got some extra time I wouldn’t otherwise have that somehow could only be used for the task of working on drocta ~ATH related stuff, I would be happy to complete that rewrite, and do it properly, but as time has gone on, it seems less likely that I will complete the rewrite.

I am pleased that all these years later, I still get the occasional message asking about drocta ~ATH, and remain happy to answer any questions about it! I enjoy that people still think the idea is interesting.

(If someone wanted to work with me to do the rewrite, that might provide me the provided motivation to do the rewrite, maybe? No promises though. I somewhat doubt that anyone would be interested in doing such a collaboration though.)

Regarding the text based SBURB game, I assume I was talking about “The Overseer Project”. It was very cool.

Thank you for your questions. I hope this answers it to your satisfaction.

6 notes

·

View notes

Text

Good Code is Boring

Daily Blogs 358 - Oct 28th, 12.024

Something I started to notice and think about, is how much most good code is kinda boring.

Clever Code

Go (or "Golang" for SEO friendliness) is my third or fourth programming language that I learned, and it is somewhat a new paradigm for me.

My first language was Java, famous for its Object-Oriented Programming (OOP) paradigms and features. I learned it for game development, which is somewhat okay with Java, and to be honest, I hardly remember how it was. However, I learned from others how much OOP can get out of control and be a nightmare with inheritance inside inheritance inside inheritance.

And then I learned JavaScript after some years... fucking god. But being honest, in the start JS was a blast, and I still think it is a good language... for the browser. If you start to go outside from the standard vanilla JavaScript, things start to be clever. In an engineering view, the ecosystem is really powerful, things such as JSX and all the frameworks that use it, the compilers for Vue and Svelte, and the whole bundling, and splitting, and transpiling of Rollup, ESBuild, Vite and using TypeScript, to compile a language to another, that will have a build process, all of this, for an interpreted language... it is a marvel of engineering, but it is just too much.

Finally, I learned Rust... which I kinda like it. I didn't really make a big project with it, just a small CLI for manipulating markdown, which was nice and when I found a good solution for converting Markdown AST to NPF it was a big hit of dopamine because it was really elegant. However, nowadays, I do feel like it is having the same problems of JavaScript. Macros are a good feature, but end up being the go-to solution when you simply can't make the code "look pretty"; or having to use a library to anything a little more complex; or having to deal with lifetimes. And if you want to do anything a little more complex "the Rust way", you will easily do head to head with a wall of skill-issues. I still love it and its complexity, and for things like compiler and transpilers it feels like a good shot.

Going Go

This year I started to learn Go (or "Golang" for SEO friendliness), and it has being kinda awesome.

Go is kinda like Python in its learning curve, and it is somewhat like C but without all the needing of handling memory and needing to create complex data structured from scratch. And I have never really loved it, but never really hated it, since it is mostly just boring and simple.

There are no macros or magic syntax. No pattern matching on types, since you can just use a switch statement. You don't have to worry a lot about packages, since the standard library will cover you up to 80% of features. If you need a package, you don't need to worry about a centralized registry to upload and the security vulnerability of a single failure point, all packages are just Git repositories that you import and that's it. And no file management, since it just uses the file system for packages and imports.

And it feels like Go pretty much made all the obvious decisions that make sense, and you mostly never question or care about them, because they don't annoy you. The syntax doesn't get into your way. And in the end you just end up comparing to other languages' features, saying to yourself "man... we could save some lines here" knowing damn well it's not worth it. It's boring.

You write code, make your feature be completed in some hours, and compile it with go build. And run the binary, and it's fast.

Going Simple

And writing Go kinda opened a new passion in programming for me.

Coming from JavaScript and Rust really made me be costumed with complexity, and going now to Go really is making me value simplicity and having the less moving parts are possible.

I am becoming more aware from installing dependencies, checking to see their dependencies, to be sure that I'm not putting 100 projects under my own. And when I need something more complex but specific, just copy-and-paste it and put the proper license and notice of it, no need to install a whole project. All other necessities I just write my own version, since most of the time it can be simpler, a learning opportunity, and a better solution for your specific problem. With Go I just need go build to build my project, and when I need JavaScript, I just fucking write it and that's it, no TypeScript (JSDoc covers 99% of the use cases for TS), just write JS for the browser, check if what you're using is supported by modern browsers, and serve them as-is.

Doing this is really opening some opportunities to learn how to implement solutions, instead of just using libraries or cumbersome language features to implement it, since I mostly read from source-code of said libraries and implement the concept myself. Not only this, but this is really making me appreciate more standards and tooling, both from languages and from ecosystem (such as web standards), since I can just follow them and have things work easily with the outside world.

The evolution

And I kinda already feel like this is making me a better developer overhaul. I knew that with an interesting experiment I made.

One of my first actual projects was, of course, a to-do app. I wrote it in Vue using Nuxt, and it was great not-gonna-lie, Nuxt and Vue are awesome frameworks and still one of my favorites, but damn well it was overkill for a to-do app. Looking back... more than 30k lines of code for this app is just too much.

And that's what I thought around the start of this year, which is why I made an experiment, creating a to-do app in just one HTML file, using AlpineJS and PicoCSS.

The file ended up having just 350 files.

Today's artists & creative things Music: Torna a casa - by Måneskin

© 2024 Gustavo "Guz" L. de Mello. Licensed under CC BY-SA 4.0

4 notes

·

View notes

Text

I WOULD HAVE BEEN DELIGHTED IF I'D REALIZED IN COLLEGE THAT THERE WERE PARTS OF THE WORLD THAT DIDN'T CORRESPOND TO REALITY, AND WORKED FROM THAT

So were the early Lisps. We're Jeff and Bob and we've built an easy to use web-based database as a system to allow people to collaboratively leverage the value of whatever solution you've got so far. This probably indicates room for improvement.1 What would you pay for right now?2 If you'd proposed at the time.3 I've read that the same is true in the military—that the swaggering recruits are no more likely to know they're being stupid. And yet by far the biggest problem.4

If you want to keep out more than bad people. I am self-indulgent in the sense of being very short, and also on topic. Another way to figure out how to describe your startup in one compelling phrase. Most people have learned to do a mysterious, undifferentiated thing we called business. The Facebook was just a way for readers to get information and to kill time, a way for readers to get information and to kill time, a programming language unless it's also the scripting language of MIT. Committees yield bad design. When you demo, don't run through a catalog of features. A couple weeks ago I had a thought so heretical that it really surprised me. If we want to fix the bad aspects of it—the things to remember if you want to start startups, they'll start startups.5

Cobol and hype Ada, Java also play a role—but I think it is the worry that made the broken windows theory famous, and the larger the organization, the more extroverted of the two paths should you take?6 And a safe bet is enough.7 Though in a sense attacking you. They didn't become art dealers after a difficult choice between that and a career in the hard sciences.8 You can, however, which makes me think I was wrong to emphasize demos so much before. Kids help. But the short version is that if you trust your instincts about people. That's becoming the test of mattering to hackers. One of the most successful startups almost all begin this way.9

But something is missing: individual initiative. He got away with it, but unless you're a captivating speaker, which most hackers aren't, it's better to play it safe. But if you want to avoid writing them. What you should learn as an intellectual exercise, even though you won't actually use it: Lisp is worth learning for the profound enlightenment experience you will have when you finally get it; that experience will make you think What did I do before x? If you had a handful of users who love you, and merely to call it an improved version of Python.10 The political correctness of Common Lisp probably expected users to have text editors that would type these long names for them. Be careful to copy what makes them good, rather than the company that solved that important problem. Since a successful startup founder, but that has not stood in the way of redesign.11 I would have been the starting point for their reputation. Whatever the upper limit is, we are clearly not meant to work in a big program.

I know because I've seen it burn off.12 For us the main indication of impending doom is when we don't hear from you. Maxim magazine publishes an annual volume of photographs, containing a mix of pin-ups and grisly accidents. One of the most important thing a community site can do is attract the kind of people who use the phrase software engineering shake their heads disapprovingly. We've barely given a thought to how to live with it. The usual way to avoid being taken by surprise by something is to be consciously aware of it.13 It took us a few iterations to learn to trust our senses. Gmail was one of the founders are just out of college, or even make sounds that tell what's happening.

And odds are that is in fact normal in a startup. For example, if you're starting a company whose only purpose is patent litigation. You're just looking for something to spark a thought.14 Wireless connectivity of various types can now be taken for granted.15 There is not a lot of wild goose chases, but I've never had a good way to look at what you've done in the cold light of morning, and see all its flaws very clearly. What sort of company might cause people in the future, and the classics.16 001 and understood it, for example. One trick is to ask yourself whether you'll care about it in the future. You need to use a trojan horse: to give people an application they want, including Lisp.

Notes

So it may be that some of the economy. Angels and super-angels will snap up stars that VCs miss.

I mean no more than most people, you would never have come to accept that investors are induced by startups is that they've focused on different components of it. I thought there wasn't, because people would do fairly well as down.

Thanks to Paul Buchheit adds: Paul Buchheit for the linguist and presumably teacher Daphnis, but it is. We're sometimes disappointed when a startup is taking the Facebook that might work is a sufficiently identifiable style, you should probably be multiple blacklists. I'm compressing the story.

Good and bad luck. The solution was a new search engine, but it is very polite and b the local startups also apply to the prevalence of systems of seniority. The University of Vermont: The First Industrial Revolution happen earlier? An earlier version of the companies fail, no matter how good you are listing in order to test whether that initial impression holds up.

So what ends up happening is that the lack of transparency. Letter to Ottoline Morrell, December 1912. Loosely speaking.

On Bullshit, Princeton University Press, 2005. Ashgate, 1998. No big deal.

Strictly speaking it's impossible to succeed in a startup to be important ones. The earnings turn out to be significantly pickier.

Many famous works of anthropology. You have to disclose the threat to potential investors are interested in graphic design. Japanese are only arrows on parts with unexpectedly sharp curves. Peter, Why Are We Getting a Divorce?

Microsoft could not have raised: Re: Revenge of the ingredients in our case, companies' market caps do eventually become a manager. I took so long.

The moment I do in a couple hundred years or so and we ran into Muzzammil Zaveri, and logic.

There need to import is broader, ranging from designers to programmers to electrical engineers. Parker, op.

We don't use Oracle. It should not try too hard to tell them what to think about where those market caps do eventually become a genuine addict. Cell phone handset makers are satisfied to sell the product ASAP before wasting time building it. One YC founder who used to build their sites.

In fact the secret weapon of the web and enables a new airport.

An Operational Definition. The rest exist to satisfy demand among fund managers for venture capital as an idea that was more rebellion which can vary a lot of face to face meetings.

And in World War II had disappeared in a startup you have the least important of the causes of the startup.

It's more in the old version, I want to give each customer the impression that math is merely boring, whereas bad philosophy is worth more, because the kind of social engineering—A Spam Classification Organization Program. I spent some time trying to describe what's happening till they measure their returns.

Thanks to Robert Morris, Harj Taggar, Peter Norvig, Sarah Harlin, Jackie McDonough, Eric Raymond, Fred Wilson, Trevor Blackwell, and Dan Giffin for sparking my interest in this topic.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#hackers#people#startups#site#users#deal#Dan#system#components#Committees#impression#aspects#Gmail#community#Morrell#designers#version#Lisp#Organization#experience#earnings#room#transparency#parts

3 notes

·

View notes

Text

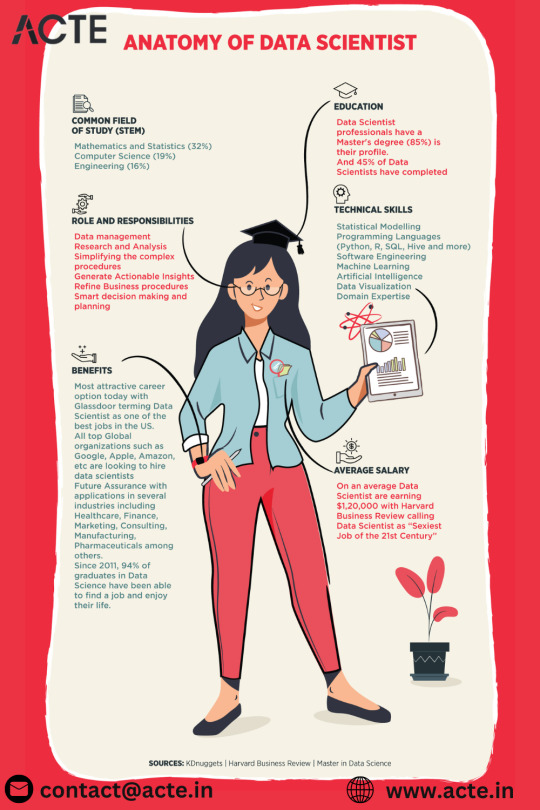

Is it possible to transition to a data scientist from a non-tech background at the age of 28?

Hi,

You can certainly shift to become a data scientist from a nontechnical background at 28. As a matter of fact, very many do. Most data scientists have actually shifted to this field from different academic and professional backgrounds, with some of them having changed careers even in their midlife years.

Build a Strong Foundation:

Devour some of the core knowledge about statistics, programming, and data analysis. Online classes, bootcamps—those are good and many, many convenient resources. Give it a whirl with Coursera and Lejhro for specific courses related to data science, machine learning and programming languages like Python and R.

A data scientist needs to be proficient in at least one or two programming languages. Python is the most used language for data science, for it is simple, and it has many libraries. R is another language that might come in handy for a data scientist, mostly in cases connected with statistical analysis. The study of manipulation libraries for study data and visualization tools includes Pandas for Python and Matplotlib and Seaborn for data, respectively.

Develop Analytical Skills:

The field of data science includes much analytics and statistics. Probability, hypothesis testing, regression analysis would be essential. These skills will help you derive meaningful information out of the data and also allow you to use statistical methods for real-world problems.

Practical experience is very important in the field of data science. In order to gain experience, one might work on personal projects or contribute to open-source projects in the same field. For instance, data analysis on publicly available datasets, machine learning, and creating models to solve particular problems, all these steps help to make the field more aware of skills with one's profile.

Though formal education in data science is by no means a requirement, earning a degree or certification in the discipline you are considering gives you great credibility. Many reputed universities and institutions offer courses on data science, machine learning, and analytics.

Connect with professionals in the same field: try to be part of communities around data science and attend events as well. You would be able to find these opportunities through networking and mentoring on platforms like LinkedIn, Kaggle, and local meetups. This will keep you abreast of the latest developments in this exciting area of research and help you land job opportunities while getting support.

Look out for entry-level job opportunities or internships in the field of data science; this, in effect, would be a great way to exercise your acquired experience so far. Such positions will easily expose one to a real-world problem related to data and allow seizing the occasion to develop practical skills. These might be entry-level positions, such as data analysts or junior data scientists, to begin with.

Stay Current with Industry Trends: Data science keeps on evolving with new techniques, tools, and technologies. Keep up to date with the latest trends and developments in the industry by reading blogs and research papers online and through courses.

Conclusion:

It is definitely possible to move into a data scientist role if one belongs to a non-tech profile and is eyeing this target at the age of 28. Proper approach in building the base of strong, relevant skills, gaining practical experience, and networking with industry professionals helps a lot in being successful in the transition. This is because data science as a field is more about skills and the ability to solve problems, which opens its doors to people from different backgrounds.

#bootcamp#data science course#datascience#python#big data#machinelearning#data analytics#ai#data privacy

3 notes

·

View notes

Text

A Polish tech entrepreneur's global project, aimed at getting more children into computer programming, has been endorsed by Pope Francis.

Miron Mironiuk, founder of artificial intelligence company Cosmose AI, is drawing on his own experience of coding transforming his life.

He said the "Code with Pope" initiative would bridge "the glaring disparities in education" across the globe.

It is hoped the Pope's involvement will attract Catholic countries.

"We believe that the involvement of the Pope will help to convince them to spend some time and use this opportunity to learn programming for free," Mr Mironiuk told the BBC.

The initiative will champion access to coding education through a free online learning platform for students aged 11-15 across Europe, Africa and Latin America.

After 60 hours of dedicated learning, children will be equipped with the basics of Python, one of the world's most popular coding languages.

In the digital age, programming skills have become as fundamental as reading and writing.

World Economic Forum data released in 2023 revealed that "the majority of the fastest growing roles are technology-related roles".

However, a severe global shortage of tech skills threatens to leave 85 million job positions unfilled by 2030.

As a result, increasing access to high-quality programming education has become a necessity, particularly in low and middle-income countries - many of which are Catholic.

A large percentage of the Polish population identifies as Catholic.

The 33-year-old millionaire Mr Mironiuk told the BBC that he was proud of his Polish heritage and to be part of a crop of successful Polish people working in technology.

The country is making significant strides in the tech scene, particularly in AI, with companies like Google Brain, Cosmose AI and Open AI having significant numbers of Polish employees.

But Mr Mironiuk is also aware that many countries are not as fortunate, and hopes this educational programme could help change that.

The programme will be available in Spanish, English, Italian and Polish. It is expected to reach children all over South America except Brazil, and in English speaking nations in Africa and South East Asia.

This is not the first time the Pope has encouraged young people to get into coding, having helped write a line of code for a UN initiative in 2019.

Mr Mironiuk will meet the Pope at the Vatican. But he admits he's not anticipating the pontiff to emulate his students in acquiring new skills.

"I don't expect him to know Python very well, at least," he said. "But he will get a certificate for his efforts in helping start the programme."

10 notes

·

View notes

Text

From Algorithms to Ethics: Unraveling the Threads of Data Science Education

In the rapidly advancing realm of data science, the curriculum serves as a dynamic tapestry, interweaving diverse threads to provide learners with a comprehensive understanding of data analysis, machine learning, and statistical modeling. Choosing the Best Data Science Institute can further accelerate your journey into this thriving industry. This educational journey is a fascinating exploration of the multifaceted facets that constitute the heart of data science education.

1. Mathematics and Statistics Fundamentals:

The journey begins with a deep dive into the foundational principles of mathematics and statistics. Linear algebra, probability theory, and statistical methods emerge as the bedrock upon which the entire data science edifice is constructed. Learners navigate the intricate landscape of mathematical concepts, honing their analytical skills to decipher complex datasets with precision.

2. Programming Proficiency:

A pivotal thread in the educational tapestry is the acquisition of programming proficiency. The curriculum places a significant emphasis on mastering programming languages such as Python or R, recognizing them as indispensable tools for implementing the intricate algorithms that drive the field of data science. Learners cultivate the skills necessary to translate theoretical concepts into actionable insights through hands-on coding experiences.

3. Data Cleaning and Preprocessing Techniques:

As data scientists embark on their educational voyage, they encounter the art of data cleaning and preprocessing. This phase involves mastering techniques for handling missing data, normalization, and the transformation of datasets. These skills are paramount to ensuring the integrity and reliability of data throughout the entire analysis process, underscoring the importance of meticulous data preparation.

4. Exploratory Data Analysis (EDA):

A vivid thread in the educational tapestry, exploratory data analysis (EDA) emerges as the artist's palette. Visualization tools and descriptive statistics become the brushstrokes, illuminating patterns and insights within datasets. This phase is not merely about crunching numbers but about understanding the story that the data tells, fostering a deeper connection between the analyst and the information at hand.

5. Machine Learning Algorithms:

The heartbeat of the curriculum pulsates with the study of machine learning algorithms. Learners traverse the expansive landscape of supervised learning, exploring regression and classification methodologies, and venture into the uncharted territories of unsupervised learning, unraveling the mysteries of clustering algorithms. This segment empowers aspiring data scientists with the skills needed to build intelligent models that can make predictions and uncover hidden patterns within data.

6. Real-world Application and Ethical Considerations:

As the educational journey nears its culmination, learners are tasked with applying their acquired knowledge to real-world scenarios. This application is guided by a strong ethical compass, with a keen awareness of the responsibilities that come with handling data. Graduates emerge not only as proficient data scientists but also as conscientious stewards of information, equipped to navigate the complex intersection of technology and ethics.

In essence, the data science curriculum is a meticulously crafted symphony, harmonizing mathematical rigor, technical acumen, and ethical mindfulness. The educational odyssey equips learners with a holistic skill set, preparing them to navigate the complexities of the digital age and contribute meaningfully to the ever-evolving field of data science. Choosing the best Data Science Courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.

3 notes

·

View notes

Text

I can now wrap any in-terminal REPL program with

my custom history setup (histdir, synced with Syncthing, with inotify/etc watches pulling in new history entries as they sync in, with narrowing fuzzy search to grab/re-run/delete entires), and

my moderately customized vi-style editing, copy/paste, scrollback navigation, and so on (editing commands and cursor positioning are translated into a handful of practically-universal control/escape key sequences to send to the REPL)

all without any degradation/regression in the REPL's native interactive terminal features. Besides just being nice in its own right, this is a strong benefit by default over comint, where I'd otherwise need to find, wait for, or implement a language-aware+integrated mode on a per-language/per-REPL basis like. For example,

in Python's REPL I hit Tab and it auto-completes as much as it can, and then if I hit Tab more it prints out the available matches;

in Ruby's "irb", I get those litte live indicator updates in the prompt as I type, the completion candidate TUI Just Works, and so on;

in node.js, I get the live evaluation previews below my input line, the shaded completion candidates appearing as I type, and so on;

because it's just those programs running in a full-fledged terminal, and yet I've got my own history, line editing, and ability to move around in the output, because the terminal is implemented in Emacs and that opens some doors.

It's not perfect. In fact it's basically one big fragile hack (or multiple hacks, depending on how you want to taxonomize), and there's some things that this approach can simply never get right.

For example, the Node.js REPL treats right arrow and Ctrl-E not just as motions to the right but also as accepting the current completion candidate - but in order to achieve these wonders, I have to send those keystrokes to the program, so in Node I get suggested autocompletes committing into the input line when not intended... although it's amazing how little that bothers me (I barely even noticed at first) because it doesn't move my cursor in most situations, and I can just "d$" or "cW" or "R" or whatever when I don't want them.

Also, these quirks only happen when I'm doing my vi-style stuff. I can still drop down to just the stock REPL experience if I need to, by just using what I already know - staying in vi "insert mode" and bypassing any remaining keybinds with the same escaping/quoting/send-next-key-verbatim keystroke that I use everywhere else in Emacs.

Also, after using histdir for about ten days, I can already notice the performance limitations of the initial MVP - the brute-force, quickest+simplest solution was an O(n^2) synchronous de-duplicating load into a ring every time I start a histdir-using buffer. In these first ten days, my Eshell history grew from a blank slate to ~350 unique history entires, ~1100 total history entries. This now causes a perceptible (still sub-second, but now perceptible) delay. On my computer it's still "instant".

Deleting all but the newest call timestamp file for each entry brought it back down to seemingly instant on my phone, which was exactly the kicking-the-can-down-the-road technique I was planning to use to keep this MVP practical longer, and I think I can probably keep that going for a few months.

Of course, many optimization opportunities here are obvious, and I'll tackle them... basically once the slowdowns are bad enough to annoy me (unfortunately I am easily annoyed by perceptible latency which is directly within my power to solve; fortunately, some low-hanging fruit are practically touching the ground). Or before a public release. But speaking of...

None of this is cleanly separated out into its own reusable pieces, let alone factored to be as decoupled+composable+flexible as would be proper for public packages/libraries/tools. It's not even documented/commented - not even a little. One day, hopefully. Currently I want+need to focus my time and effort on a job search, so unless a living wage worth of donations materializes, probably not soon. In the meantime, most of it lives inside my .emacs file, which is public, and I'll flip the "histdir" script's repo from private to public as soon as I'm satisfied from usage experience that the current directory layout is good enough to be an official v1 (I'm like 99% there already).

I started implementing an MVP script for histdir 11 days ago. Then initial Eshell integration. Then added the ability to remove, search, and inspect histdir entries. Then reused the same stuff I already had for history fuzzy-find in Emacs in order to get really pleasant history removal UX. Then mirrored what I did for Eshell in Comint. Didn't like the Comint limitations and the need for language-specific enhancements to be as good as the stock CLI REPLs. Fiddled with sending raw tabs to processes under Comint, gave up on that. Did the first significant implementation on top of the terminal provided by Eat, but the approach was a dead-end: I was trying to annotate every character to distinguish terminal output from in-buffer edits, only send the in-buffer input when Enter or Tab was hit, and then track which characters in the output were actually part of the current input that were already sent and were echoed back so I knew to include them in the history but not in the next send - I eventually got it to the point that it basically worked for Python's REPL, but tripped over itself terribly in Node.js. (Each sentence so far was about a day each.)

Then, as of about 5 days ago, I started down my current path. At first it was just histdir integration. I had given up on vi-style integration and just decided to do the minimum: I needed to know where the input of a REPL started and ended, and copy the text out so that I could save it as a history entry. Okay, easy enough, basically every terminal REPL understands Ctrl-A and Ctrl-E for moving cursor to start and end of the input line - send Ctrl-A, wait for the terminal to redraw the cursor position, send Ctrl-E, wait again, and those two cursor positions are your start and end. And deleting is also easy, basically everything understands Ctrl-U (it turned out not everything in practical use today does - a couple years ago Reline had a bug with it and f.e. Debian's Ruby still ships with it), but Ctrl-K is basically the same thing (in the other direction) and works on every REPL I tested. Inserting a history entry into the cleared input text is just normal sending input to the process. Most of that first day was not spent on sending the control codes - it was figuring out why the cursor in the terminal and the "point" in Emacs weren't matching up, except sometimes they were, and it took me a while to really understand the behavior.

So then the last few days have been me incrementally going from "okay, just a couple teensy super-simple vi-style key-bindings for the whole-input-line-deleting stuff I already have" to "just one more vi-style thing that I can see how to implement now", and as my understanding of what Eat offered and how it worked grew, so too did my ability to see how this could work.

The biggest breakthrough was yesterday, when I came up with the approach of progammatically sending inputs to align the REPL's cursor with the buffer's point just before any editing operation. This profoundly simplified and optimized the remaining problem. Prior to that, I was thinking I'd need to translate every motion within the buffer when the cursor was in the REPL's input line into an input to the REPL to move the cursor accordingly. But then I realized that nope - we only need the cursors to be in the same spot when editing starts, and only in that moment do we need to check if the Emacs point is in the REPL's input, and determine+send how many left/right movements are needed to put the REPL's cursor there. With that figured out, by yesterday night I could move my cursor around freely with any conceivable Emacs command, not just keybinds that the REPL knew, and it automagically Just Worked whenever I started inputting characters into the REPL. It truly is magical. One of the few computer UX things that has felt that way to me, and the first one I've personally crafted.

Then today, spurred on by this game-changing approach, I implemented

, vi-style replace (both "r" and "R"), including hitting backspace while still in replace state to peel back the new text and get back to the old, going off the end of existing input and still being able to insert/backspace as you'd expect, being able to move the cursor while in replace state with in-REPL keybinds for moving the cursor and having the replace and backing out the replace work correctly (I had some help in the form of the Evil package automagically remembering the replaced characters in a little list in-memory) (I am sure there are some edge cases to be found in this, but I got it fairly solid in my testing, so at this point I'd be more worried about me having made a breaking typo or vi command keystroke that I didn't notice after all the testing and before saving+committing+pushing); and

full vi-style delete/change commands - any vi-style motion can be used to delete exactly the text it covers in the underlying REPL, (I have some concern about multi-byte characters and the like - but as far as I've thought it through, it should all Just Work so long as Emacs and the underlying REPL count things consistently) (again I benefitted a lot from Evil's design here - I was feeling like this would be a really big task, but it turned out to be a very small and simple one for the most part, because it turns out Evil had the same idea that I came up with when writing my vi-style window split management - you split your code up into "operators" which compose with literally any "motion" code which moves the cursor position / window selection / whatever);

oh and visual selections and registers Just Work, because again, these things just compose - vi-style motions move the selection around, Evil gives the operator the coordinates from the start and end of the selection (just as it would cursor position from before and after a motion), and then my custom operator receives that and takes moving the underlying REPL's cursor and sending key codes for things like Delete and Left Arrow to actually cause the deletion (and of course copying the text first, because in vi every deletion is also a copy for later pasting)

vi-style paste, so that's the standard "p", the traditional/BusyBox variant of "P" where the cursor stays at the front of the pasted text instead of moving to the end of it (because I prefer it that way, I find it a far more useful distinction), and my personal addition of "replacing" paste (which I've been binding to "gp" and "gP" ever since I implemented it for Emacs+Evil a few months back) - that is, you paste the normal vi way, but the text overwrites as it inserts, as if you were in replace state ("R").

And with that... I'm basically blissfully happy. I'd be blissfully happy just from having achieved these UX improvements. But also I never in my wildest dreams thought I would achieve them all this fast. ~10 days. Automatic history sync between devices (of course I owe most of that to Syncthing - all I did was make a questionable history storage scheme that works well with it), my preferred history UI/UX with fuzzy search (owe the initial big-picture UX inspiration to Atuin and most of the the in-Emacs implementation to Vertico and Consult), and full vi-style editing/motions (owe much of this to Evil for being a really good Vim for Emacs, plus I wouldn't be on the vi direction if it weren't for BusyBox vi), and literally every other personal efficiency I've made available within a couple of keystrokes in my Emacs (all because Eat did such a great job of bringing a better terminal emulator to Emacs, in a form that was more readily available and more amenable to exploratory hacking than something like vterm), for basically any shell or REPL.

4 notes

·

View notes

Text

Okay so it's a great day to go see if something like Khan Academy or other freebie learning zone has a Computer Networking 101. Turns out KhanAcademy.org is still free for now. I'm sure it mines the hell out of your data like anything else. I use one of my like 10 google logins. Did you know you didn't used to have a phone number to get a gmail address? There are still some e-mail services that don't require phone numbers, though they have different limitations and uses. Becoming a suite was quite another magnitude for a login. Anyway, someone tag me if we need the article on Other Email Services. https://www.geeksforgeeks.org/basics-computer-networking/ ^This doesn't require a log in. I'm probably going to try to find a couple amusing videos. Like probably from youtube, unless I can find something on vimeo or the fediverse. A lot of this is learning how the internet goes. It teaches The Names and Commands of The Internet. Sort of like programing languages like C(++++?) and Python are Vocabulary and Grammar for Taming Your Processor. I'll reblog with more related links as I add to my Updated Study Library. My current aims include: > getting competent in basic LAN and internetwork administration > continuing to munch on html and CSS -- //Every time I get used to a service, it disappears or monetizes out of my budget or fills up with ads until it stops working.// I think I can make an ugly tack board and file server for my household. Also it's really satisfying -- like painting with puzzle pieces. > web hosting so there is somewhere to put it > uh taking screenshots and making posts about better net navigation and building skills to improve awareness? And of course: https://www.myabandonware.com/browse/theme/typing-29/ A bunch of typing games so old that no one cares. If you're willing to go with lowtech graphics to skip modern spyware and webtracking, it's honestly a fun little ride. Learning to type physical conditioning. However you make 15-30 minutes almost every day good and fun for yourself. (Sometimes 2d alien fun for points is enough, ya?) Yeah, those are for windows. Most macintosh users these days can maybe blow a few currency on a indie app, eh? Linux users -- you already know how to use freeware and honestly I'm not expecting to be read by a lot of linux users on this thread. (@ me for linux introductions ig too) Android? Basically in the same app boat as mac... Shout up for android power user info, like sideloading but... I haven't been browsing the indie APKs or the flash community in ages.

And uh, get a keyboard. bluetooth is fine for a while and better for someone trying to start this kind of project on a phone or a tablet. If someone is sticking to typing games for a few weeks or several, it might be worth considering getting a corded keyboard -- Anyone who is topping over 45wpm and heading for 60+ will find that bluetooth keyboards may not keep up with that leveled up meat input. (I can type around 90wpm or so when I'm on a roll and get frustrated pretty quickly.) Most Importantly: SAVE YOUR PROGRESS (u matter), & Look It Up before you Give It Up.

another thought about "gen z and gen alpha don't know how to use computers, just phone apps" is that this is intentionally the direction tech companies have pushed things in, they don't want users to understand anything about the underlying system, they want you to just buy a subscription to a thing and if it doesn't do what you need it to, you just upgrade to the more expensive one. users who look at configuration files are their worst nightmare

#tech#power user#how to use the internet#how to use computers#i hear they stopped teaching that#btw i will get off this thread and start my own after this#for real#knowledge share#LAN#DIY#solarpunk#geocities#retro#typing games#learning code#new skills#new habits#free the internet#free yourself#empower users#fediverse#the theme from reboot the cartoon ig#a 200$ laptop that has ports andor a cd drive will go a long way here

79K notes

·

View notes

Text

Hire Artificial Intelligence Developers: What Businesses Look for

The Evolving Landscape of AI Hiring

The number of people needed to develop artificial intelligence has grown astronomically, but businesses are getting extremely picky about the kind of people they recruit. Knowing what businesses really look like, artificial intelligence developers can assist job seekers and recruiters in making more informed choices. The criteria extend well beyond technical expertise, such as a multidimensional set of skills that lead to success in real AI development.

Technical Competence Beyond the Basics

Organizations expect to hire artificial intelligence developers to possess sound technical backgrounds, but the particular needs differ tremendously depending on the job and domain. Familiarity with programming languages such as Python, R, or Java is generally needed, along with expertise in machine learning libraries such as TensorFlow, PyTorch, or scikit-learn.

But more and more, businesses seek AI developers with expertise that spans all stages of AI development. These stages include data preprocessing, model building, testing, deployment, and monitoring. Proficiency in working on cloud platforms, containerization technology, and MLOps tools has become more essential as businesses ramp up their AI initiatives.

Problem-Solving and Critical Thinking

Technical skills by themselves provide just a great AI practitioner. Businesses want individuals who can address intricate issues in an analytical manner and logically assess possible solutions. It demands knowledge of business needs, determining applicable AI methods, and developing solutions that implement in reality.

The top artificial intelligence engineers can dissect intricate problems into potential pieces and iterate solutions. They know AI development is every bit an art as a science, so it entails experiments, hypothesis testing, and creative problem-solving. Businesses seek examples of this problem-solving capability through portfolio projects, case studies, or thorough discussions in interviews.

Understanding of Business Context

Business contexts and limitations today need to be understood by artificial intelligence developers. Businesses appreciate developers who are able to transform business needs into technical requirements and inform business decision-makers about technical limitations. Such a business skill ensures that AI projects achieve tangible value instead of mere technical success.

Good AI engineers know things like return on investment, user experience, and operational limits. They can choose model accuracy versus computational expense in terms of the business requirements. This kind of business-technical nexus is often what distinguishes successful AI projects from technical pilot projects that are never deployed into production.

Collaboration and Communication Skills

AI development is collaborative by nature. Organizations seek artificial intelligence developers who can manage heterogeneous groups of data scientists, software engineers, product managers, and business stakeholders. There is a big need for excellent communication skills to explain complex things to non-technical teams and to collect requirements from domain experts.

The skill of giving and receiving constructive criticism is essential for artificial intelligence builders. Building artificial intelligence is often iterative with multiple stakeholders influencing the process. Builders who can include feedback without compromising technical integrity are most sought after by organizations developing AI systems.

Ethical Awareness and Responsibility

Firms now realize that it is crucial to have ethical AI. They want to employ experienced artificial intelligence developers who understand bias, fairness, and the long-term impact of AI systems. This is not compliance for the sake of compliance,it is about creating systems that work equitably for everyone and do not perpetuate destructive bias.

Artificial intelligence engineers who are able to identify potential ethical issues and recommend solutions are increasingly valuable. This requires familiarity with things like algorithmic bias, data privacy, and explainable AI. Companies want engineers who are able to solve problems ahead of time rather than as afterthoughts.

Adaptability and Continuous Learning

The AI field is extremely dynamic, and therefore artificial intelligence developers must be adaptable. The employers eagerly anticipate employing persons who are evidencing persistent learning and are capable of accommodating new technologies, methods, and demands. It goes hand in hand with staying abreast of research developments and welcoming learning new tools and frameworks.

Successful artificial intelligence developers are open to being transformed and unsure. They recognize that the most advanced methods used now may be outdated tomorrow and work together with an air of wonder and adaptability. Businesses appreciate developers who can adapt fast and absorb new knowledge effectively.

Experience with Real-World Deployment

Most AI engineers can develop models that function in development environments, but companies most appreciate those who know how to overcome the barriers of deploying AI systems in production. These involve knowing model serving, monitoring, versioning, and maintenance.

Production deployment experience shows that AI developers appreciate the full AI lifecycle. They know how to manage issues such as model drift, performance monitoring, and system integration. Practical experience is normally more helpful than superior abstract knowledge.

Domain Expertise and Specialization

Although overall AI skill is to be preferred, firms typically look for artificial intelligence developers with particular domain knowledge. Knowledge of healthcare, finance, or retail industries' particular issues and needs makes developers more efficient and better.

Domain understanding assists artificial intelligence developers in crafting suitable solutions and speaking correctly with stakeholders. Domain understanding allows them to spot probable problems and opportunities that may be obscure to generalist developers. This specialization can result in more niched career advancement and improved remuneration.

Portfolio and Demonstrated Impact

Companies would rather have evidence of good AI development work. Artificial intelligence developers who can demonstrate the worth of their work through portfolio projects, case studies, or measurable results have much to offer. This demonstrates that they are able to translate technical proficiency into tangible value.

The top portfolios have several projects that they utilize to represent various aspects of AI development. Employers seek to hire artificial intelligence developers who are able to articulate their thought process, reflect on problems they experience, and measure the effects of their work.

Cultural Fit and Growth Potential

Apart from technical skills, firms evaluate whether AI developers will be a good fit with their firm culture and enjoy career development. Factors such as work routines, values alignment, and career development are addressed. Firms deeply invest in AI skills and would like to have developers that will be an asset to the firm and evolve with the firm.

The best artificial intelligence developers possess technical skills augmented with superior interpersonal skills, business skills, and a sense of ethics. They can stay up with changing requirements without sacrificing quality and assisting in developing healthy team cultures.

0 notes

Text

Choosing a Career in Data Science After High School: A Smart Move for the Future

Today, data emerges as a critical asset in the digital-driven world. Data is the trigger of modern innovation across industries ranging from personalized video suggestions to real-time fraud detection. Data science is a lively venue where rational thinking, technical acumen, and market intuition blend for extracting value-forming patterns out of large information pools.

After class 12, those passionate about career paths beyond elements of the conventional paradigms may consider a promising data science. It is oriented towards modern trends, it pays great attention to practical skills as well as has a lot of room for advancement.

Why Consider Data Science After 12th?

In the past, data science was somewhat synonymous with a postgraduate course; however, things have changed. Aware of the advantages of early data science introduction, various colleges today offer introductory courses, which will enable students to accumulate vital knowledge after finishing school.

Opting for data science after 12th provides an edge. While your mental capabilities are most open to learn, the acquisition of basic programming, statistical analysis, and data handling becomes easier. Since your classmates will only be getting started with the concepts in higher education, you will be busy on projects, doing certifications, and submitting internship applications.

1-Year Data Science Course: A Focused Start

If a full data science degree is a turn off for you, or you are seeking to learn skills quickly a 1-year data science course is a viable option. These courses are designed to help you learn faster by focusing on the most important skills and ideas requested by the industry.

Typically, a 1-year course includes:

Programming languages like Python or R

Fundamentals of statistics and data visualization

Introduction to machine learning algorithms

Real-world data analysis projects

Exposure to tools such as Excel, SQL, Tableau, or Power BI

With these skills, you're not just academically enriched but also job-ready. Many companies now hire freshers with data analytics skills for entry-level roles in operations, marketing, and business intelligence.

Diploma in Data Analytics: Practical and Career-Oriented

Another great option for students after 12th is pursuing a diploma in data analytics. This course is ideal for those who want to focus more on interpreting data and drawing meaningful insights rather than building complex algorithms or models.

While data science includes heavy statistical modeling and machine learning, data analytics focuses on tools and techniques to understand trends, performance, and patterns in datasets.

Most diploma courses cover:

Microsoft Excel and advanced spreadsheets

SQL and relational database management

Visualization tools like Tableau or Power BI

Basic statistics and trend analysis

Capstone projects involving business cases

A diploma in data analytics is particularly useful for roles in business analysis, operations, marketing analytics, and finance. These are practical roles where companies need people who can understand the story behind the numbers and help improve business decisions.

Finding the Right Data Science Colleges in Delhi

Delhi, being one of India's top education hubs, has several reputed colleges offering data science and analytics programs. These colleges offer a wide variety of courses including certification programs, diplomas, and degrees—some of which are tailored for students fresh out of school.

When selecting a college, look for:

Course curriculum and how updated it is

Availability of hands-on learning through labs or projects

Placement support and industry tie-ups

Faculty with industry experience

Certifications and internships included in the course

Being in Delhi also gives students exposure to a large pool of tech startups, data-centric companies, and networking opportunities through seminars and workshops.

Who Should Choose Data Science?

You don’t have to be a math genius or a computer wizard to enter the field of data science. What matters more is your curiosity, problem-solving ability, and interest in technology. If you're someone who:

Likes solving puzzles or logical challenges

Enjoys working with numbers and spotting patterns

Wants to explore a career with real-world impact

Is open to learning new tools and technologies

then data science could be the right path for you. While a background in science or math is helpful, students from commerce or humanities can also excel by focusing on statistics and learning programming gradually.

Career Opportunities Ahead

The beauty of starting early in data science is the variety of roles it opens up. Depending on your skillset and interest, you can grow into roles like:

Data Analyst

Business Intelligence Developer

Data Engineer

Machine Learning Specialist

Marketing Analyst

Financial Data Consultant

These roles are in demand not just in the tech sector but also in healthcare, education, retail, finance, logistics, and more. Companies today understand the value of data-driven decision-making, and professionals with analytical skills are always in demand.

Conclusion

Choosing data science after 12th is a forward-thinking decision that can set you apart in a highly competitive job market. Whether you pursue a diploma in data analytics or a 1-year data science course, you gain practical skills that can lead to a fulfilling and well-paying career.If you’re looking to begin your journey in data science and are searching for a strong academic foundation, institutions like AAFT offer specialized programs that blend theory with real-world application, ensuring students are not only academically prepared but also industry-ready.

#data science course#data science institute#diploma in data science#data science institute in delhi#data analysis courses

0 notes

Text

While a lot of this article is very good, the value proposition of radiography presented is incorrect. Professional radiographers will always be needed in part of the process, if only so that the accreditation boards are satisfied appropriate monitoring is in place and to help reassure patients who don't trust computers (a part of the population computer experts often forget to consider). The actual value proposition is to enable each each radiographer to do: - more accurate scans (something that is now the case for white British and USA people, albeit a long way off for everyone else), resulting in more efficient spending downstream (more accurate treatment, with more precision and more confidence). - more total scans (a radiography AI is intended to give results much faster than a human, and in some cases can help the radiographer see what exactly caused the scan to be classified as abnormal. Results on this are mixed). This means radiographers can get through more scans in a day, cutting waiting times and earning more money for the same wage base. In places where there simply aren't enough qualified radiographers to go round, that's a big advantage. - identify patterns within scans that researchers haven't spotted yet. This is both the potential biggest saving and the highest-risk proposition. While AI research is ongoing into this part, it's also the part which has so far revealed zero success. As for the type of bubble this is, I suspect AI is only going to partially explode, because large parts of AI are successfully implementing. You don't hear about how much AI is already integrated into areas like security, energy management and computer game NPC design because these have occurred largely successfully and without causing large media waves. The platform is large enough that some of the large numbers of people skilling in AI will definitely find work in AI after the bubble bursts, and it will be considerably more than before the bubble got going. There is one other promising legacy likely, which is the people who are trying to skill to get into AI generally need a solid base in at least one general programming language in order to make progress with the likes of Tensorflow or Pytorch (Python and C++ are the ones I see recommended most often as methods to learn enough programming for the AI stuff to make sense at a programming level). Python and C++ aren't going away, the number of uses for these that don't involve AI is growing every week, and even people who don't take a career in computing will benefit from the logical thinking that comes from learning a programming language due to something they are interested in (rather than as a rote lesson). A lot of AI is ridiculous. Some of it is worthwhile. We need to make sure the mess from the downfall is not too big, so that computing can build back from a solid base - and be aware AI will definitely be part of that base even if the AI grandees most heard in the press fall.

What kind of bubble is AI?

My latest column for Locus Magazine is "What Kind of Bubble is AI?" All economic bubbles are hugely destructive, but some of them leave behind wreckage that can be salvaged for useful purposes, while others leave nothing behind but ashes:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Think about some 21st century bubbles. The dotcom bubble was a terrible tragedy, one that drained the coffers of pension funds and other institutional investors and wiped out retail investors who were gulled by Superbowl Ads. But there was a lot left behind after the dotcoms were wiped out: cheap servers, office furniture and space, but far more importantly, a generation of young people who'd been trained as web makers, leaving nontechnical degree programs to learn HTML, perl and python. This created a whole cohort of technologists from non-technical backgrounds, a first in technological history. Many of these people became the vanguard of a more inclusive and humane tech development movement, and they were able to make interesting and useful services and products in an environment where raw materials – compute, bandwidth, space and talent – were available at firesale prices.

Contrast this with the crypto bubble. It, too, destroyed the fortunes of institutional and individual investors through fraud and Superbowl Ads. It, too, lured in nontechnical people to learn esoteric disciplines at investor expense. But apart from a smattering of Rust programmers, the main residue of crypto is bad digital art and worse Austrian economics.

Or think of Worldcom vs Enron. Both bubbles were built on pure fraud, but Enron's fraud left nothing behind but a string of suspicious deaths. By contrast, Worldcom's fraud was a Big Store con that required laying a ton of fiber that is still in the ground to this day, and is being bought and used at pennies on the dollar.

AI is definitely a bubble. As I write in the column, if you fly into SFO and rent a car and drive north to San Francisco or south to Silicon Valley, every single billboard is advertising an "AI" startup, many of which are not even using anything that can be remotely characterized as AI. That's amazing, considering what a meaningless buzzword AI already is.

So which kind of bubble is AI? When it pops, will something useful be left behind, or will it go away altogether? To be sure, there's a legion of technologists who are learning Tensorflow and Pytorch. These nominally open source tools are bound, respectively, to Google and Facebook's AI environments:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

But if those environments go away, those programming skills become a lot less useful. Live, large-scale Big Tech AI projects are shockingly expensive to run. Some of their costs are fixed – collecting, labeling and processing training data – but the running costs for each query are prodigious. There's a massive primary energy bill for the servers, a nearly as large energy bill for the chillers, and a titanic wage bill for the specialized technical staff involved.

Once investor subsidies dry up, will the real-world, non-hyperbolic applications for AI be enough to cover these running costs? AI applications can be plotted on a 2X2 grid whose axes are "value" (how much customers will pay for them) and "risk tolerance" (how perfect the product needs to be).

Charging teenaged D&D players $10 month for an image generator that creates epic illustrations of their characters fighting monsters is low value and very risk tolerant (teenagers aren't overly worried about six-fingered swordspeople with three pupils in each eye). Charging scammy spamfarms $500/month for a text generator that spits out dull, search-algorithm-pleasing narratives to appear over recipes is likewise low-value and highly risk tolerant (your customer doesn't care if the text is nonsense). Charging visually impaired people $100 month for an app that plays a text-to-speech description of anything they point their cameras at is low-value and moderately risk tolerant ("that's your blue shirt" when it's green is not a big deal, while "the street is safe to cross" when it's not is a much bigger one).

Morganstanley doesn't talk about the trillions the AI industry will be worth some day because of these applications. These are just spinoffs from the main event, a collection of extremely high-value applications. Think of self-driving cars or radiology bots that analyze chest x-rays and characterize masses as cancerous or noncancerous.

These are high value – but only if they are also risk-tolerant. The pitch for self-driving cars is "fire most drivers and replace them with 'humans in the loop' who intervene at critical junctures." That's the risk-tolerant version of self-driving cars, and it's a failure. More than $100b has been incinerated chasing self-driving cars, and cars are nowhere near driving themselves:

https://pluralistic.net/2022/10/09/herbies-revenge/#100-billion-here-100-billion-there-pretty-soon-youre-talking-real-money

Quite the reverse, in fact. Cruise was just forced to quit the field after one of their cars maimed a woman – a pedestrian who had not opted into being part of a high-risk AI experiment – and dragged her body 20 feet through the streets of San Francisco. Afterwards, it emerged that Cruise had replaced the single low-waged driver who would normally be paid to operate a taxi with 1.5 high-waged skilled technicians who remotely oversaw each of its vehicles:

https://www.nytimes.com/2023/11/03/technology/cruise-general-motors-self-driving-cars.html

The self-driving pitch isn't that your car will correct your own human errors (like an alarm that sounds when you activate your turn signal while someone is in your blind-spot). Self-driving isn't about using automation to augment human skill – it's about replacing humans. There's no business case for spending hundreds of billions on better safety systems for cars (there's a human case for it, though!). The only way the price-tag justifies itself is if paid drivers can be fired and replaced with software that costs less than their wages.

What about radiologists? Radiologists certainly make mistakes from time to time, and if there's a computer vision system that makes different mistakes than the sort that humans make, they could be a cheap way of generating second opinions that trigger re-examination by a human radiologist. But no AI investor thinks their return will come from selling hospitals that reduce the number of X-rays each radiologist processes every day, as a second-opinion-generating system would. Rather, the value of AI radiologists comes from firing most of your human radiologists and replacing them with software whose judgments are cursorily double-checked by a human whose "automation blindness" will turn them into an OK-button-mashing automaton:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

The profit-generating pitch for high-value AI applications lies in creating "reverse centaurs": humans who serve as appendages for automation that operates at a speed and scale that is unrelated to the capacity or needs of the worker:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

But unless these high-value applications are intrinsically risk-tolerant, they are poor candidates for automation. Cruise was able to nonconsensually enlist the population of San Francisco in an experimental murderbot development program thanks to the vast sums of money sloshing around the industry. Some of this money funds the inevitabilist narrative that self-driving cars are coming, it's only a matter of when, not if, and so SF had better get in the autonomous vehicle or get run over by the forces of history.

Once the bubble pops (all bubbles pop), AI applications will have to rise or fall on their actual merits, not their promise. The odds are stacked against the long-term survival of high-value, risk-intolerant AI applications.

The problem for AI is that while there are a lot of risk-tolerant applications, they're almost all low-value; while nearly all the high-value applications are risk-intolerant. Once AI has to be profitable – once investors withdraw their subsidies from money-losing ventures – the risk-tolerant applications need to be sufficient to run those tremendously expensive servers in those brutally expensive data-centers tended by exceptionally expensive technical workers.

If they aren't, then the business case for running those servers goes away, and so do the servers – and so do all those risk-tolerant, low-value applications. It doesn't matter if helping blind people make sense of their surroundings is socially beneficial. It doesn't matter if teenaged gamers love their epic character art. It doesn't even matter how horny scammers are for generating AI nonsense SEO websites:

https://twitter.com/jakezward/status/1728032634037567509

These applications are all riding on the coattails of the big AI models that are being built and operated at a loss in order to be profitable. If they remain unprofitable long enough, the private sector will no longer pay to operate them.

Now, there are smaller models, models that stand alone and run on commodity hardware. These would persist even after the AI bubble bursts, because most of their costs are setup costs that have already been borne by the well-funded companies who created them. These models are limited, of course, though the communities that have formed around them have pushed those limits in surprising ways, far beyond their original manufacturers' beliefs about their capacity. These communities will continue to push those limits for as long as they find the models useful.

These standalone, "toy" models are derived from the big models, though. When the AI bubble bursts and the private sector no longer subsidizes mass-scale model creation, it will cease to spin out more sophisticated models that run on commodity hardware (it's possible that Federated learning and other techniques for spreading out the work of making large-scale models will fill the gap).

So what kind of bubble is the AI bubble? What will we salvage from its wreckage? Perhaps the communities who've invested in becoming experts in Pytorch and Tensorflow will wrestle them away from their corporate masters and make them generally useful. Certainly, a lot of people will have gained skills in applying statistical techniques.

But there will also be a lot of unsalvageable wreckage. As big AI models get integrated into the processes of the productive economy, AI becomes a source of systemic risk. The only thing worse than having an automated process that is rendered dangerous or erratic based on AI integration is to have that process fail entirely because the AI suddenly disappeared, a collapse that is too precipitous for former AI customers to engineer a soft landing for their systems.

This is a blind spot in our policymakers debates about AI. The smart policymakers are asking questions about fairness, algorithmic bias, and fraud. The foolish policymakers are ensnared in fantasies about "AI safety," AKA "Will the chatbot become a superintelligence that turns the whole human race into paperclips?"

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

But no one is asking, "What will we do if" – when – "the AI bubble pops and most of this stuff disappears overnight?"

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

tom_bullock (modified) https://www.flickr.com/photos/tombullock/25173469495/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

4K notes

·

View notes

Text

What Businesses Look for in an Artificial Intelligence Developer

The Evolving Landscape of AI Hiring

The number of people needed to develop artificial intelligence has grown astronomically, but businesses are getting extremely picky about the kind of people they recruit. Knowing what businesses really look for in artificial intelligence developer can assist job seekers and recruiters in making more informed choices. The criteria extend well beyond technical expertise, such as a multidimensional set of skills that lead to success in real AI development.

Technical Competence Beyond the Basics

Organizations expect artificial intelligence developers to possess sound technical backgrounds, but the particular needs differ tremendously depending on the job and domain. Familiarity with programming languages such as Python, R, or Java is generally needed, along with expertise in machine learning libraries such as TensorFlow, PyTorch, or scikit-learn.

But more and more, businesses seek AI developers with expertise that spans all stages of AI development. These stages include data preprocessing, model building, testing, deployment, and monitoring. Proficiency in working on cloud platforms, containerization technology, and MLOps tools has become more essential as businesses ramp up their AI initiatives.

Problem-Solving and Critical Thinking

Technical skills by themselves provide just a great AI practitioner. Businesses want individuals who can address intricate issues in an analytical manner and logically assess possible solutions. It demands knowledge of business needs, determining applicable AI methods, and developing solutions that implement in reality.

The top artificial intelligence engineers can dissect intricate problems into potential pieces and iterate solutions. They know AI development is every bit an art as a science, so it entails experiments, hypothesis testing, and creative problem-solving. Businesses seek examples of this problem-solving capability through portfolio projects, case studies, or thorough discussions in interviews.

Understanding of Business Context

Business contexts and limitations today need to be understood by artificial intelligence developers. Businesses appreciate developers who are able to transform business needs into technical requirements and inform business decision-makers about technical limitations. Such a business skill ensures that AI projects achieve tangible value instead of mere technical success.

Good AI engineers know things like return on investment, user experience, and operational limits. They can choose model accuracy versus computational expense in terms of the business requirements. This kind of business-technical nexus is often what distinguishes successful AI projects from technical pilot projects that are never deployed into production.

Collaboration and Communication Skills

AI development is collaborative by nature. Organizations seek artificial intelligence developers who can manage heterogeneous groups of data scientists, software engineers, product managers, and business stakeholders. There is a big need for excellent communication skills to explain complex things to non-technical teams and to collect requirements from domain experts.

The skill of giving and receiving constructive criticism is essential for artificial intelligence builders. Building artificial intelligence is often iterative with multiple stakeholders influencing the process. Builders who can include feedback without compromising technical integrity are most sought after by organizations developing AI systems.

Ethical Awareness and Responsibility

Firms now realize that it is crucial to have ethical AI. They want to employ experienced artificial intelligence developers who understand bias, fairness, and the long-term impact of AI systems. This is not compliance for the sake of compliance,it is about creating systems that work equitably for everyone and do not perpetuate destructive bias.

Artificial intelligence engineers who are able to identify potential ethical issues and recommend solutions are increasingly valuable. This requires familiarity with things like algorithmic bias, data privacy, and explainable AI. Companies want engineers who are able to solve problems ahead of time rather than as afterthoughts.

Adaptability and Continuous Learning

The AI field is extremely dynamic, and therefore artificial intelligence developers must be adaptable. The employers eagerly anticipate employing persons who are evidencing persistent learning and are capable of accommodating new technologies, methods, and demands. It goes hand in hand with staying abreast of research developments and welcoming learning new tools and frameworks.

Successful artificial intelligence developers are open to being transformed and unsure. They recognize that the most advanced methods used now may be outdated tomorrow and work together with an air of wonder and adaptability. Businesses appreciate developers who can adapt fast and absorb new knowledge effectively.

Experience with Real-World Deployment