
Text

information flow in transformers

In machine learning, the transformer architecture is a very commonly used type of neural network model. Many of the well-known neural nets introduced in the last few years use this architecture, including GPT-2, GPT-3, and GPT-4.

This post is about the way that computation is structured inside of a transformer.

Internally, these models pass information around in a constrained way that feels strange and limited at first glance.

Specifically, inside the "program" implemented by a transformer, each segment of "code" can only access a subset of the program's "state." If the program computes a value, and writes it into the state, that doesn't make the value available to every block of code that might run after the write; instead, only some operations can access the value, while others are prohibited from seeing it.

This sounds vaguely like the kind of constraint that human programmers often put on themselves: "separation of concerns," "no global variables," "your function should only take the inputs it needs," that sort of thing.

However, the apparent analogy is misleading. The transformer constraints don't look much like anything that a human programmer would write, at least under normal circumstances. And the rationale behind them is very different from "modularity" or "separation of concerns."

(Domain experts know all about this already -- this is a pedagogical post for everyone else.)

1. setting the stage

For concreteness, let's think about a transformer that is a causal language model.

So, something like GPT-3, or the model that wrote text for @nostalgebraist-autoresponder.

Roughly speaking, this model's input is a sequence of words, like ["Fido", "is", "a", "dog"].

Since the model needs to know the order the words come in, we'll include an integer offset alongside each word, specifying the position of this element in the sequence. So, in full, our example input is

[
    ("Fido", 0),
    ("is", 1),
    ("a", 2),
    ("dog", 3),
]

The model itself -- the neural network -- can be viewed as a single long function, which operates on a single element of the sequence. Its task is to output the next element.

Let's call the function f. If f does its job perfectly, then when applied to our example sequence, we will have

f("Fido", 0) = "is"

f("is", 1) = "a"

f("a", 2) = "dog"

(Note: I've omitted the index from the output type, since it's always obvious what the next index is.

Also, in reality the output type is a probability distribution over words, not just a word; the goal is to put high probability on the next word. I'm ignoring this to simplify exposition.)

You may have noticed something: as written, this seems impossible!

Like, how is the function supposed to know that after ("a", 2), the next word is "dog"!? The word "a" could be followed by all sorts of things.

What makes "dog" likely, in this case, is the fact that we're talking about someone named "Fido."

That information isn't contained in ("a", 2). To do the right thing here, you need info from the whole sequence thus far -- from "Fido is a", as opposed to just "a".

How can f get this information, if its input is just a single word and an index?

This is possible because f isn't a pure function. The program has an internal state, which f can access and modify.

But f doesn't just have arbitrary read/write access to the state. Its access is constrained, in a very specific sort of way.

2. transformer-style programming

Let's get more specific about the program state.

The state consists of a series of distinct "memory regions" or "blocks," which have an order assigned to them.

Let's use the notation memory_i for these. The first block is memory_0, the second is memory_1, and so on.

In practice, a small transformer might have around 10 of these blocks, while a very large one might have 100 or more.

Each block contains a separate data-storage "cell" for each offset in the sequence.

For example, memory_0 contains a cell for position 0 ("Fido" in our example text), and a cell for position 1 ("is"), and so on. Meanwhile, memory_1 contains its own, distinct cells for each of these positions. And so does memory_2, etc.

So the overall layout looks like:

memory_0: [cell 0, cell 1, ...]

memory_1: [cell 0, cell 1, ...]

[...]

Our function f can interact with this program state. But it must do so in a way that conforms to a set of rules.

Here are the rules:

The function can only interact with the blocks by using a specific instruction.

This instruction is an "atomic write+read".

It writes data to a block, then reads data from that block for f to use.

When the instruction writes data, it goes in the cell specified in the function offset argument. That is, the "i" in f(..., i).

When the instruction reads data, the data comes from all cells up to and including the offset argument.

The function must call the instruction exactly once for each block.

These calls must happen in order.

For example, you can't do the call for memory_1 until you've done the one for memory_0.

Here's some pseudo-code, showing a generic computation of this kind:

f(x, i) {
    calculate some things using x and i;

    // next 2 lines are a single instruction
    write to memory_0 at position i;
    z0 = read from memory_0 at positions 0...i;

    calculate some things using x, i, and z0;

    // next 2 lines are a single instruction
    write to memory_1 at position i;
    z1 = read from memory_1 at positions 0...i;

    calculate some things using x, i, z0, and z1;

    [etc.]
}
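If it helps to see the rules as something you can actually run, here is a minimal Python sketch. Everything in it is a toy assumption of mine: the words are replaced by made-up integer ids, the "calculations" are just additions, and the names `make_state`, `write_then_read`, and `f` are hypothetical. The only part meant to be faithful is the access pattern: one write+read per block, in order, writing cell i and reading cells 0 through i.

```python
from typing import Dict, List

# A block maps sequence position -> stored value (toy: plain integers).
Block = Dict[int, int]


def make_state(num_blocks: int) -> List[Block]:
    """Create empty memory blocks: memory_0, memory_1, ..."""
    return [{} for _ in range(num_blocks)]


def write_then_read(block: Block, i: int, value: int) -> List[int]:
    """The one allowed instruction: write cell i, then read cells 0..i."""
    block[i] = value
    return [block[j] for j in range(i + 1)]


def f(x: int, i: int, state: List[Block]) -> int:
    """Toy stand-in for the model (not a real transformer layer)."""
    h = x + i  # "calculate some things using x and i"
    for block in state:  # exactly one call per block, in order
        seen = write_then_read(block, i, h)
        h = h + sum(seen)  # "calculate some things using ... and z"
    return h


# Usage: two blocks, two positions, run one position at a time.
state = make_state(2)
first = f(5, 0, state)   # position 0 can only see its own cells
second = f(1, 1, state)  # position 1 also sees what position 0 wrote
```

Note that `f(1, 1, state)` never touches cell 1 of a block before writing it, and never reads a block "early." That is the whole constraint.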

The rules impose a tradeoff between the amount of processing required to produce a value, and how early the value can be accessed within the function body.

Consider the moment when data is written to memory_0. This happens before anything is read (even from memory_0 itself).

So the data in memory_0 has been computed only on the basis of individual inputs like ("a", 2). It can't leverage any information about multiple words and how they relate to one another.

But just after the write to memory_0, there's a read from memory_0. This read pulls in data computed by f when it ran on all the earlier words in the sequence.

If we're processing ("a", 2) in our example, then this is the point where our code is first able to access facts like "the word 'Fido' appeared earlier in the text."

However, we still know less than we might prefer.

Recall that memory_0 gets written before anything gets read. The data living there only reflects what f knows before it can see all the other words, while it still only has access to the one word that appeared in its input.

The data we've just read does not contain a holistic, "fully processed" representation of the whole sequence so far ("Fido is a"). Instead, it contains:

a representation of ("Fido", 0) alone, computed in ignorance of the rest of the text

a representation of ("is", 1) alone, computed in ignorance of the rest of the text

a representation of ("a", 2) alone, computed in ignorance of the rest of the text

Now, once we get to memory_1, we will no longer face this problem. Stuff in memory_1 gets computed with the benefit of whatever was in memory_0. The step that computes it can "see all the words at once."

Nonetheless, the whole function is affected by a generalized version of the same quirk.

All else being equal, data stored in later blocks ought to be more useful. Suppose for instance that

memory_4 gets read/written 20% of the way through the function body, and

memory_16 gets read/written 80% of the way through the function body

Here, strictly more computation can be leveraged to produce the data in memory_16. Calculations which are simple enough to fit in the program, but too complex to fit in just 20% of the program, can be stored in memory_16 but not in memory_4.

All else being equal, then, we'd prefer to read from memory_16 rather than memory_4 if possible.

But in fact, we can only read from memory_16 once -- at a point 80% of the way through the code, when the read/write happens for that block.

The general picture looks like:

The early parts of the function can see and leverage what got computed earlier in the sequence -- by the same early parts of the function.

This data is relatively "weak," since not much computation went into it. But, by the same token, we have plenty of time to further process it.

The late parts of the function can see and leverage what got computed earlier in the sequence -- by the same late parts of the function.

This data is relatively "strong," since lots of computation went into it. But, by the same token, we don't have much time left to further process it.

3. why?

There are multiple ways you can "run" the program specified by f.

Here's one way, which is used when generating text, and which matches popular intuitions about how language models work:

First, we run f("Fido", 0) from start to end.

The function returns "is."

As a side effect, it populates cell 0 of every memory block.

Next, we run f("is", 1) from start to end.

The function returns "a."

As a side effect, it populates cell 1 of every memory block.

Etc.

If we're running the code like this, the constraints described earlier feel weird and pointlessly restrictive.

By the time we're running f("is", 1), we've already populated some data into every memory block, all the way up to memory_16 or whatever.

This data is already there, and contains lots of useful insights.

And yet, during the function call f("is", 1), we "forget about" this data -- only to progressively remember it again, block by block. The early parts of this call have only memory_0 to play with, and then memory_1, etc. Only at the end do we allow access to the juicy, extensively processed results that occupy the final blocks.

Why? Why not just let this call read memory_16 immediately, on the first line of code? The data is sitting there, ready to be used!

Why? Because the constraint enables a second way of running this program.

The second way is equivalent to the first, in the sense of producing the same outputs. But instead of processing one word at a time, it processes a whole sequence of words, in parallel.

Here's how it works:

In parallel, run f("Fido", 0) and f("is", 1) and f("a", 2), up until the first write+read instruction.

You can do this because the functions are causally independent of one another, up to this point.

We now have 3 copies of f, each at the same "line of code": the first write+read instruction.

Perform the write part of the instruction for all the copies, in parallel.

This populates cells 0, 1 and 2 of memory_0.

Perform the read part of the instruction for all the copies, in parallel.

Each copy of f receives some of the data just written to memory_0, covering offsets up to its own.

For instance, f("is", 1) gets data from cells 0 and 1.

In parallel, continue running the 3 copies of f, covering the code between the first write+read instruction and the second.

Perform the second write.

This populates cells 0, 1 and 2 of memory_1.

Perform the second read.

Repeat like this until done.
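Here is a small Python sketch of the two execution orders, to make the equivalence concrete. It is a toy under stated assumptions: integer ids stand in for words, `pre` and `update` are hypothetical placeholders for the real computation between instructions, and "in parallel" is simulated with ordinary list comprehensions (on a GPU, each comprehension would be one batched operation).

```python
from typing import List

NUM_BLOCKS = 3  # hypothetical; stands in for the number of memory blocks


def pre(x: int, i: int) -> int:
    """Toy computation before the first write+read."""
    return x + i


def update(h: int, seen: List[int]) -> int:
    """Toy computation after reading cells 0..i of a block."""
    return h + sum(seen)


def run_sequential(tokens: List[int]) -> List[int]:
    """First way: one position at a time, start to end."""
    memory: List[List[int]] = [[] for _ in range(NUM_BLOCKS)]
    outputs = []
    for i, x in enumerate(tokens):
        h = pre(x, i)
        for block in memory:
            block.append(h)                # write cell i
            h = update(h, block[: i + 1])  # read cells 0..i
        outputs.append(h)
    return outputs


def run_parallel(tokens: List[int]) -> List[int]:
    """Second way: advance every position together, block by block."""
    n = len(tokens)
    hs = [pre(x, i) for i, x in enumerate(tokens)]
    for _ in range(NUM_BLOCKS):
        block = list(hs)  # write part, for all copies at once
        # read part: copy i only sees cells 0..i
        hs = [update(hs[i], block[: i + 1]) for i in range(n)]
    return hs


tokens = [5, 1, 7]
same = run_sequential(tokens) == run_parallel(tokens)
```

The outer loop in `run_parallel` is over blocks only; the loop over positions has turned into work that can be done all at once.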

Observe that this mode of operation only works if you have a complete input sequence ready before you run anything.

(You can't parallelize over later positions in the sequence if you don't know, yet, what words they contain.)

So, this won't work when the model is generating text, word by word.

But it will work if you have a bunch of texts, and you want to process those texts with the model, for the sake of updating the model so it does a better job of predicting them.

This is called "training," and it's how neural nets get made in the first place. In our programming analogy, it's how the code inside the function body gets written.

The fact that we can train in parallel over the sequence is a huge deal, and probably accounts for most (or even all) of the benefit that transformers have over earlier architectures like RNNs.

Accelerators like GPUs are really good at doing the kinds of calculations that happen inside neural nets, in parallel.

So if you can make your training process more parallel, you can effectively multiply the computing power available to it, for free. (I'm omitting many caveats here -- see this great post for details.)

Transformer training isn't maximally parallel. It's still sequential in one "dimension," namely the layers, which correspond to our write+read steps here. You can't parallelize those.

But it is, at least, parallel along some dimension, namely the sequence dimension.

The older RNN architecture, by contrast, was inherently sequential along both these dimensions. Training an RNN is, effectively, a nested for loop. But training a transformer is just a regular, single for loop.

4. tying it together

The "magical" thing about this setup is that both ways of running the model do the same thing. You are, literally, doing the same exact computation. The function can't tell whether it is being run one way or the other.

This is crucial, because we want the training process -- which uses the parallel mode -- to teach the model how to perform generation, which uses the sequential mode. Since both modes look the same from the model's perspective, this works.

This constraint -- that the code can run in parallel over the sequence, and that this must do the same thing as running it sequentially -- is the reason for everything else we noted above.

Earlier, we asked: why can't we allow later (in the sequence) invocations of f to read earlier data out of blocks like memory_16 immediately, on "the first line of code"?

And the answer is: because that would break parallelism. You'd have to run f("Fido", 0) all the way through before even starting to run f("is", 1).

By structuring the computation in this specific way, we provide the model with the benefits of recurrence -- writing things down at earlier positions, accessing them at later positions, and writing further things down which can be accessed even later -- while breaking the sequential dependencies that would ordinarily prevent a recurrent calculation from being executed in parallel.

In other words, we've found a way to create an iterative function that takes its own outputs as input -- and does so repeatedly, producing longer and longer outputs to be read off by its next invocation -- with the property that this iteration can be run in parallel.

We can run the first 10% of every iteration -- of f() and f(f()) and f(f(f())) and so on -- at the same time, before we know what will happen in the later stages of any iteration.

The call f(f()) uses all the information handed to it by f() -- eventually. But it cannot make any requests for information that would leave itself idling, waiting for f() to fully complete.

Whenever f(f()) needs a value computed by f(), it is always the value that f() -- running alongside f(f()), simultaneously -- has just written down, a mere moment ago.

No dead time, no idling, no waiting-for-the-other-guy-to-finish.

p.s.

The "memory blocks" here correspond to what are called "keys and values" in usual transformer lingo.

If you've heard the term "KV cache," it refers to the contents of the memory blocks during generation, when we're running in "sequential mode."

Usually, during generation, one keeps this state in memory and appends a new cell to each block whenever a new token is generated (and, as a result, the sequence gets longer by 1).

This is called "caching" to contrast it with the worse approach of throwing away the block contents after each generated token, and then re-generating them by running f on the whole sequence so far (not just the latest token). And then having to do that over and over, once per generated token.
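To put rough numbers on the difference, here is a toy Python sketch that counts how many times the per-position function runs with and without caching. All of it is an assumption for illustration: `step` is a hypothetical stand-in for f, the "next token" is just the output modulo 10, and real implementations store key and value tensors rather than integers.

```python
from typing import List, Tuple

NUM_BLOCKS = 2  # hypothetical number of memory blocks


def step(x: int, i: int, memory: List[List[int]]) -> int:
    """Toy f: one write+read per block, in order."""
    h = x + i
    for block in memory:
        block.append(h)             # write cell i
        h += sum(block[: i + 1])    # read cells 0..i
    return h


def generate_cached(prompt: List[int], n_new: int) -> Tuple[List[int], int]:
    """Keep the blocks around; run step once per position, ever."""
    memory: List[List[int]] = [[] for _ in range(NUM_BLOCKS)]
    tokens, calls, i = list(prompt), 0, 0
    while len(tokens) < len(prompt) + n_new:
        out = step(tokens[i], i, memory)
        calls += 1
        i += 1
        if i == len(tokens):         # reached the end: emit a new token
            tokens.append(out % 10)
    return tokens, calls


def generate_uncached(prompt: List[int], n_new: int) -> Tuple[List[int], int]:
    """Throw the blocks away and rebuild them for every new token."""
    tokens, calls = list(prompt), 0
    for _ in range(n_new):
        memory: List[List[int]] = [[] for _ in range(NUM_BLOCKS)]
        for i, x in enumerate(tokens):
            out = step(x, i, memory)
            calls += 1
        tokens.append(out % 10)
    return tokens, calls


cached_tokens, cached_calls = generate_cached([5, 1, 7], 2)
uncached_tokens, uncached_calls = generate_uncached([5, 1, 7], 2)
```

Both versions produce the same tokens. With a 3-token prompt and 2 new tokens, the cached version runs `step` 4 times and the uncached one 7 times, and that gap grows quadratically as the text gets longer.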

#ai tag#is there some standard CS name for the thing i'm talking about here?#i feel like there should be#but i never heard people mention it#(or at least i've never heard people mention it in a way that made the connection with transformers clear)

304 notes

Text

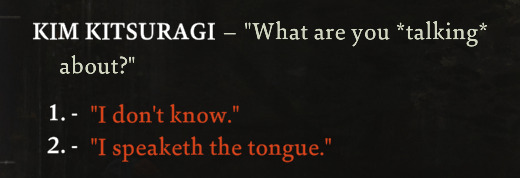

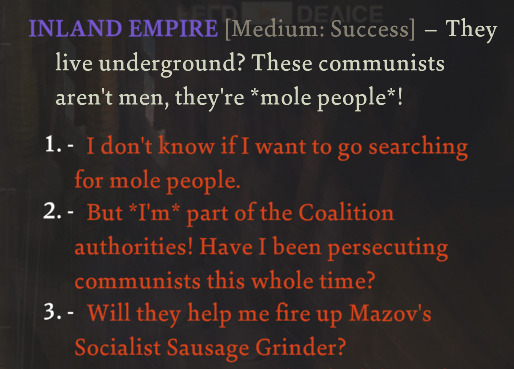

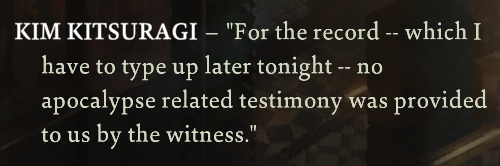

Collection Of My Disco Elysium Screenshots That I Like A Lot

#disco elysium#harry du bois#harrier du bois#somehow my harry went all off-putting and strange and apocalyptic on me#started as a sorry cop; became a cop of the apocalypse#I hear about all the other cop types a lot but I've never heard anyone mention being the cop of the apocalypse#are the majority of people not going the whole 'warning the world about the end times' route?

2K notes

Text

I’ve mentioned this elsewhere but it feels relevant again in light of the most recent episode. Something that’s really fascinating to me about Orym’s grief in comparison to the rest of the hells’ grief is that his is the youngest/most fresh and because of that tends to be the most volatile when it is triggered (aside from FCG, who was two and obviously The Most volatile when triggered.)

As in: prior to the attack on Zephrah, Orym was leading a normal, happy, casual life! with family who loved him and still do! Grief was something that was inflicted upon him via Ludinus’ machinations, whereas with characters like Imogen or Ashton, grief has been the background tapestry of their entire lives. And I think that shows in how the rest of them are largely able to, if not see past completely (Imogen/Laudna/Chetney) then at least temper/direct their vitriol or grief (Ashton/Fearne/Chetney again) to where it is most effective. (There is a glaring reason, for example, that Imogen scolded Orym for the way he reacted to Liliana and not Ashton. Because Ashton’s anger was directed in a way that was ultimately protective of Imogen—most effective—and Orym’s was founded solely in his personal grief.)

He wants Imogen to have her mom and he wants Liliana to be salvageable for Imogen because he loves Imogen. But his love for the people in his present actively and consistently tends to conflict with the love he has for the people in his past. They are in a constant battle and Orym—he cannot fathom losing either of them.

(Or, to that point, recognize that allowing empathy to take root in him for the enemy isn't losing one of them.)

It is deeply poignant, then, that Orym’s grief is symbolized by both a sword and shield. It is something he wields as a blade when he feels his philosophy being threatened by certain conversational threads (as he believes it is one of the only things he has left of Will and Derrig, and is therefore desperately clinging onto with both bloody hands even if it makes him, occasionally, a hypocrite), but also something he can use in defense of the people he presently loves—if that provocative, blade-grief side of him does not push them—or himself—away first.

(it won’t—he is as loved by the hells as he loves them. he just needs to—as laudna so beautifully said—say and hear it more often.)

#critical role#cr spoilers#bells hells#orym of the air ashari#cr meta#imogen temult#ashton greymoore#liliana temult#this is genuinely completely written in good faith as someone who loves orym#but is also about orym and so will inevitably end up being completely misconstrued and made into discourse. alas#I could talk about how Orym’s unwillingness to allow the hells to actually finish/come to a solid conclusion on Philosophy Talk#is directly connected to one of the largest criticisms of c3 (that they are constantly having these conversations)#all day. alas. engaging with orym’s flaws tends to make people upset#it is ESP prevelant when he walks off after exclaiming ‘they (vangaurd) are NOT right’#which was not only never said but wasn’t even what they were talking about#he even admits as much to imogen like ten minutes later! that he is incapable of viewing it objectively#which is 100% justifiable and understandable but simultaneously does not make his grief alone the most important perspective in the world#also bc i fear ppl will play semantics on my tags yes the line ‘i hope she’s right’ was said but it was from ASHTON#who does not believe they are at all and wasn’t saying they actively WERE right. orym just heard something to latch onto and ran with it#ultimately there is a reason orym only admitted that he was struggling when he had stepped away to talk to dorian#who has not been around and thusly has not changed once n orym's eyes#and it isn't that the hells never check in or care. they do. they have several times over#it is dishonest to say they haven't#the actual reason is that all of this is something He Is Aware Of. he doesn't mention it bc he KNOWS it's hypocritical and selfish#he says as much!#EXHALES. @ MY OWN BRAIN CAN WE THINK ABT MOG AGAIN. FYRA RAI EVEN. FOR ME.#posting this literally at 8 in the morning so I can get my thoughts out of my brain but also attempt to immediately make this post invisibl

166 notes

Text

if you're ever like, "hey sarah, how generally lame are you?" please know that I went on a historical walking tour today, and literally pumped the air when the tour guide mentioned a local historian I follow on twitter, and his recent project.

that.

that's exactly how lame I am.

#one of the great blessings of age is you realize you're never ever going to be cool and you just let it go#fortunately historical tour guides very much understand this particular lameness.#the guide was telling me about all the historical sites they've explored; the doors they've opened#and the stories they've heard from other nerds in this space.#so that was lovely. and I was genuinely jazzed when they mentioned that project.#it's cool! I love historical stories and the people who put them together.#about me

127 notes

Text

#just looking at the dates/travel distances for Danhausen's toy signing tour makes me feel tired#I have no idea how he does it because he never seems to stop working even when he's injured#but I've heard literally nothing but good things about people's experiences with him#not just from fans either!#he was one of the first names mentioned as being above and beyond nice when I was chatting with someone who works wrestling events in the US#plus if RJ is saying something genuine about danhausen it must be true. RJ would never risk journalistic integrity by lying like that#anyway love that danhausen#AEW#RJ City#Danhausen

302 notes

Text

btw i will forever recommend just. refusing to engage in discourse. its free its easy and you literally arent missing anything LMAO - 99% of discourse on here is just on the most pointless petty shit that literally doesnt mean a single thing to anyone in real life (i am looking directly at you "pRo/AnTi" shippers), and the other 1% is genuinely important shit... that isnt going to be solved in any productive way by insulting '''the other side''' online. arguing with strangers online never changes anyones mind all youre doing is making yourself *and* your cause look annoying as hell :thumbsup: maybe chill out. find a hobby.

#dont even get me started on how apparently this entire fucking site has never heard of nuance in its life#im ngl dude i think if youre boiling down a complicated topic to 'well this is the good side (my side) and then the BAD EVIL SIDE'#and putting anyone who even slightly falls out of line with your beliefs on the evil side#like. thats not gonna be productive in the slightest right. you understand that right#if you wanna have meaningful nuanced discussions with people you actually know about serious topics then go for it!#just dont drag random strangers into it#if i have to see one more post with dumb bullshit acronyms that everyones expected to know that insults anyone who doesnt blindly agree wit#them i stg#'if you dont agree with this then clearly youre a [evil side] who hates [group] and does [bad thing]. theres no other logical explanation#for you possibly not agreeing with me'#and theyre talking about the most obscure insane discourse youve literally never heard of before thatll be flooding your dash for the next#month#had to unfollow a really good artist because they just kep reblogging the most aggressive 'every [evil side] sucks and hates [good side] an#doesnt care about them and wants to oppress them'#(said '[evil side]' wasnt even a moral stance it was literally just something you were born as. like. you get how thats fucked up right)#which uh. sucked! especially since i was part of that [evil side]#anyway midnight rant over tldr uhhh discourse stupid go get hobbies#and if i ever mention what discourse topic inspired this post ill probably get torn apart LMAOO#(hint: its one of the stupid pointless ones)#me.txt

47 notes

Text

Ask and you shall receive (a sneak peek of what's to come)

#poorly drawn mdzs#mdzs#wei wuxian#lan wangji#mdzs au#homestuck#I genuinely do have troll designs for the major characters of mdzs adn thoughts pertaining to their hemospectrum#I honestly thought ppl would start throwing holy salt at me at the mention of homestuck but the enthusiasm is super motivating!#With that said; Thank you all so much for the support with the hollow knight crossover#Even people who have never heard of hollow knight have been so kind (go buy and play hollow knight; the aesthetic and story are amazing)#More bug doodles and comic are ahead! I'll try and space them out between comic updates.#More thoughts will come later but for now...allow me to leave you with this:#Non-homestucks may see the blue and red and go 'aw blue-red ship how cute' while those who know might realize exactly what im putting down#namely that this version of wwx is *very* interested and persistent about getting lwj to spend time with him.#Lwj lives in a very insular indigo colony and isn't fully aware of the differences in life span between hemocasts (yet).#But wwx is. So he's driven to live life to the fullest! This would also drive him to be way more self-sacrificing.#Since his life is so small compared to everyone else he loves anyways. That purple isn't just aesthetic either.

334 notes

Note

is it true that women usually give birth on their backs because some king had a fetish for childbirth and wanted to see it

No

#ask#just-some-common-bitch#ask meme#ask game#no nuance history asks#i’ve never even heard that one to be honest#but I looked it up and apparently it’s attributed to various different people with no one able to agree who the supposed king actually was#*attributed to#The version I heard was that it was easier for doctors#I don’t think a lot of people even do give birth lying down nowadays#aren’t they usually like… Reclining but propped up?#childbirth mention

32 notes

Text

antarctica blood red five story tall waterfall from a pool 400 meters under a glacier and three times saltier than seawater that's been evolving its own unique anaerobic microbial ecosystem in isolation for two million years that flows at a temperature of 1.4 degrees f/-17 c. if you even care

#HELLO?????#this is one of those things that i would think people would freak out about on here. are you guys seeing this#why have i never heard about this before#i saw someone mention a blood river in a post and it was unsourced and i was like no way thats real. but it is#only accessible by helicopter or cruise ship 😔#me

49 notes

Text

i don't get the demetrius hate - maybe he never mentions sebastian because sebastian set a boundary that he isn't his father and that he doesn't want him to be his father

we all know the town gossips & it's clear to see sebastian and maru are half siblings - a child sebastian could've internalised that and pushed demetrius away

#i feel like because maru & sebastian are likely the same age & 'irish twins' that it's a subject of heavy gossip in the valley#and that heavily strained his relationship with his half sister and stepfather#and bc sebastian is an adult and allowed to set his own boundaries - demetrius would hopefully respect that#maybe that's wishful thinking but i'm hoping sebastian decided that he didn't want demetrius to be his dad or have a relationship with him#during adulthood which is why sebastian still sometimes mentions it#i think it's more two traumatised people hurting each other because small town...#and let's be honest - without added diversity mods - the majority white small town isn't gonna have nice things to say about demetrius!!!!#sebastian could've easily heard it and internalised it#we never know why specifically their relationship is nonexistent except that demetrius favours maru#and that sebastian isn't close with him and that demetrius made him tear down his snowglob#is this stardew valley meta#stardew valley#stardew valley demetrius#demetrius

24 notes

Text

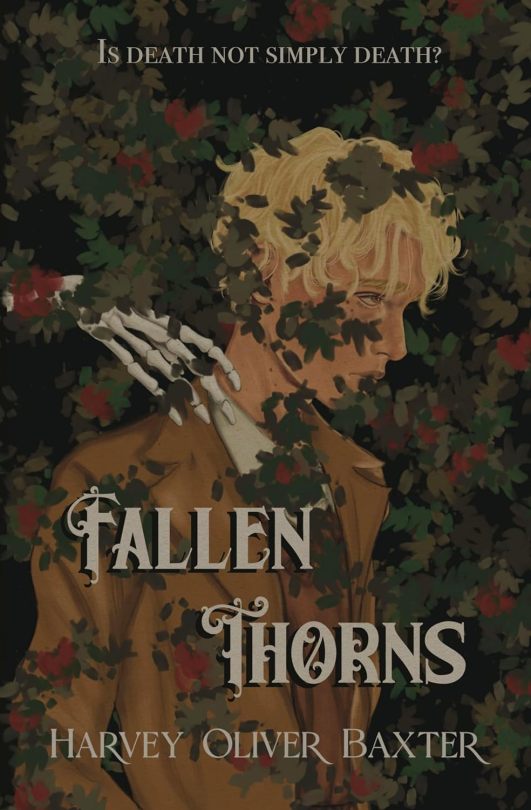

2024 reads / storygraph

Fallen Thorns

dark urban fantasy coming-of-age

follows a boy settling into university who, after a date (that he didn’t even want to go on) turns bad, is made into a vampire

as he settles into his new existence and the local vampire community - while they try to find who’s been leaving bodies across the city - he discovers that there’s something different and darker within him

aroace neurodivergent MC

#fallen thorns#aroaessidhe 2024 reads#I enjoyed this a lot!#there's definitely things I want to know more about...I think it's going to be a series? the only thing explicitly mentioned is a prequel#the ending is a bit weird and I don't entirely get the sun/star/moon stuff dfhgd#really great characters and atmosphere#great aroace MC in general tho minor pet peeve from me:#it does have that thing where the character spends half the book ruminating about how there’s something wrong with them bc they don't feel#things everyone else does etc etc and it’s like omg all these queer people and nobody’s heard about asexuality???#then he’s having a conversation with someone later in the book and he’s like yeah I know about asexual but I didn’t think about that re: me#and the other char is like: what if…..it IS you…..and he’s like omg. i AM asexual there’s nothing wrong with me after all! (in like. a page#like I’ve read this in multiple books LMAO. I do get that you can know about an identity and not connect it to your experiences#but somehow the writing of it like this is never quite believable? too sudden? then it's not thought about much after that?#anyway that's not a critique of the ''representation'' as much as just the writing I think - there's a few areas where I thought the writin#could be improved structurally or whatever. Didn't massively impede my enjoyment.#(I do also love an aroace mentor/parent figure!)#aromantic#aroace books#aromantic books

48 notes

Text

Hello fandom. I understand that very few of you will care about my personal opinion, and that's fine, but I find it important enough to how I run my blog to share anyway.

In the future, all of my posts will simply be avoiding any mention of Wilbur wherever possible. His character is a major part of Tallulah's story, but I will be keeping him away from my blog as much as I can.

Typically, I would go with a "death of the author" approach and keep mentions of the character and cc more separate. However, the cc's alleged quest for money and fame changes that entirely. I will not be contributing to that. That's just my personal choice, so there should be no shame to anyone who chooses to separate the two, obviously.

I watched Shubble's video and I saw his response. In my opinion, it was terrible. The way he centralized his own "growth," minimized the pain he caused, and left the actual apology on the second page is revealing. His statement reminds me of some of the past emotional abuse I've experienced, so his content will no longer be welcome on my blog. I believe in the merit of archiving, so I will not be deleting any past posts, but he will no longer have any place in my death family related tags.

#I am not usually an unprovoked statement kind of person#especially because I don't think that's why people look at my content and I 100% understand a desire for escapism#However his name would absolutely pop up naturally on this blog since I main Philza.#I have little interest in letting that happen though.#I have mentioned in the past how victims should never feel forced into silence and this is a part of that.#I listen to the victim. I wait for more information. I form an opinion when I can assess both parties b/c victims deserve to be heard .#I have listened and will continue listening but Wilbur and his own words are enough to paint my own picture.#Unless something major changes this is how me and my blog will be handling it. Thank you.#qsmp#qsmp wilbur#wilbur situation#tw abuse

37 notes

Text

The idea that Black people are predisposed to hating queer people is anti-Black racism. The people most hurt by these assertions are not only Black people but queer Black people as well. You are not saving queer people by throwing Black people under the bus, and you'll never save queers by doing that, so just stop.

#queer#lgbt#lgbtq#racism tw#racism mention tw#antiblackness tw#antiblackness mention tw#queerphobia tw#also evaluate what underlying prejudices led to that strong sense that that idea was not only *right* but something to verbalize#saw some uhhhh... words of choice from somebody who said something i've heard for years#like... y'all do know Black queer people exist and can see/hear/read what you're saying right. and that that doesn't make them feel safe.#but i digress. this is not my post to add onto anymore. Black people feel free to take this and add onto/expand/whatever...#...you feel comfortable and/or safe sharing 🫡#and even IF somehow Black queer people didn't exist... it still isn't appropriate or right or just to be anti-Black. because it never is.#even IF somehow you didn't share community with Black people they still deserve not to face anti-Blackness.#okay. NOW i digress apparently.

154 notes

Text

austin should just never say what vibe a season is going for ever again because i keep seeing people referencing him talking about palisade as a hopeful season (as something they're aiming for, at least) & well. how it didn't really shake out that way. people including me btw i have thought about this many a time (probably most during the questlandia game post Oh-You-Know-What happening!)

edit: where tf did he even say that because it was not the playlist thing like i thought. unless i just missed it 3 times

#it's one thing to have a goal (& even there: thing to keep in mind vs hard rule?) and another to set expectations#& it's not like i'm really faulting austin for that especially bc. if i remember right he said that first on the thing he recorded -#- for the playlist! talking about song choices in relation to the season!#i don't quite know where i'm going w that it's just. many people never heard him say that and DIDN'T have expectations and probably in some#- cases that meant they had a better time of it. and ig i wish i could've been one of those people#wouldn't have helped with all my grievances certainly but probably a few!#as is it kind of stuck with me#palisadeposting#palisade spoilers#ish.#ig don't know how i feel seeing it mentioned alongside more genuine(?) criticisms to make about the season#which i personally haven't even articulated for myself yet though i've seen a few i at least somewhat agree with...#anywho i just got jumpscared by slyvi liking my leapcas comic

12 notes

Text

I. Hurt.

And I was hurting anyway, I'm pretty down this morning, but this hurt came from an outside source, and affected me in a way I'd honestly not have expected.

See, we bought Nimona last week. After seeing the movie, my kids wanted to read it. And I ended up reading ahead, and I just finished it.

Bonus content at the end, it said, and I was like, oh, an epilogue to the epilogue maybe? That'd be nice. I don't love bittersweet endings, I'd rather...

...no, it's not the conclusion.

It's CHRISTMAS.

In a book that'd had no religion that I noticed up to that point, BOTH bonus extras...were Christmas.

Ya know, usually it doesn't bother me. Usually I just suck it up. I think it helps that I was raised around mostly Jews and people who, if Christian, it didn't matter much to them. I'm from the Upper West Side of Manhattan, the descendant of Lower East Side immigrants, and while the world outside was brutal - my grandfather was a World War 2 veteran and among the soldiers who liberated Dachau, I can't remember a time when I didn't know that most people would look the other way if people like me were slaughtered wholesale - my bubble was safe, we were accepted, we were insiders.

I honestly can't think of another time I've interacted with a piece of media and felt so immediately, instantly knocked across the face by OUTSIDER as I just did when I excitedly turned the page to see what these fun extra bonuses were...and it was fucking Christmas.

I didn't even read them.

I'm honestly. So disappointed.

I don't have a thick armor for this kind of hurt. I'm Jewish, and as an adult living outside my old UWS bubble, that's often meant I've felt like an outlier, but I've hardly ever had this feeling where I was welcome to something only to be suddenly, violently shoved out the door.

And I've heard nothing, n.o.t.h.i.n.g. but praise for this book. And on another day, it might not have bothered me. I've never really felt like I had to fight to be seen, especially since I'm tremendously secular. I mean, I've celebrated Christmas my entire life, for starters.

But why. Why was this fantasy setting suddenly Christian? Why was this the touted extra content? Why is THIS special, when the areligious world established to that point was apparently not special enough?

I can't say yet if this ruined the story for me. It's far too soon. But I'm *intensely*, viscerally let down, and...I hurt.

Christians...maybe stop doing this shit.

#unforth rambles#im not sure how to tag this#i dont want to tag fandom since its kinda anti#and i dont want to tag antisemitism cause its not really#and i dont want to tag microaggressions cause thatll just show my privilege that ive been lucky enough to not have this feeling more often#but seriously WHAT THE FUCK#im genuinely considering rereading just so i can see if it was always christian and i just missed it until then#because its so fucking ubiquitous that it slides right off#but i dont think it was!!!#WHYS IT GOTTA BE CHRISTIAN WHAT THE HELL#and why have i never heard this mentioned surely im not the only person to notice this#maybe it was less jarring for people who read along with the webcomic#since these were extras released along the way#not shoved in the back of the book like in the print edition#i dont fucking know#i just know i hate it#this special fun thing could have been anything#and instead it was for one specific segment of the audience#and thats honestly so unnecessary and kinda yicky#dont you guys ever get tired of making everything about your fucking dumb holidays you stole from other cultures#give me back passover i demand you turn over easter as a reparation

70 notes

Text

jewish shower thoughts

A Reddit comment I can't stop thinking about: "If you think whiteness is bad, Jews are white to you. If you think whiteness is good, Jews aren't white to you."

#Assorted context:#the belief that Israel is white people colonizing and stealing the land of indigenous people of color#“Jewish supremacy”#“Israel supremacy”#“nobody can tell you're Jewish unless you say something therefore antisemitism is not a problem and it's [only] our hijabis who are at risk”#if you're confused about the first example:#Jews are an ethnicity and are the indigenous people of the region under the UN's definition of indigeneity#nobody is stealing the Gaza Strip#Israel pulled out of it in 2005 and made all Israeli citizens move too#nobody stole land from Palestinians to create Israel#if a two-state solution is stealing land from Palestinians then it should be even worse that Britain gave 3/4 of the land up to Jordan#literally the entire state of Jordan was originally part of the 'Mandate for Palestine'#I've never even heard people mention that; I found it out by accident while digging into the history here

46 notes
