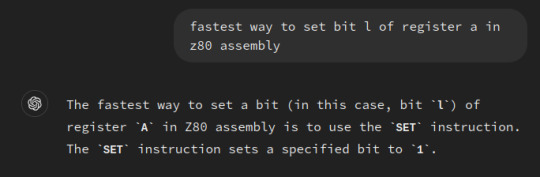

#chatgpt hallucination

Text

I wanted to see if our friend The World's Dumbest Robot could figure out the extremely simple process of "set bit L of register A" in z80 assembly, and the results were...consistent.

What a moron.

#my thoughts#programming#chatgpt#assembly#i think it was getting confused with 8086 assembly?#'cause it kept trying to use totally hallucinated combinations of opcodes and operands#like OR A‚ B#or trying to use CP 0#to compare either the C or L registers to 0#like what are you even doing
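For reference, a rough sketch of what the task involves. The Z80's SET instruction takes its bit number as a constant encoded in the opcode, so a bit number held in L has to be turned into a mask first and then ORed into A. The Python below only models that logic on 8-bit values; the function name `set_bit` is made up for illustration, and this is not Z80 code.

```python
def set_bit(a: int, l: int) -> int:
    """Return the 8-bit value a with bit number l (0-7) set."""
    mask = 1
    # Build the mask with one single-bit left shift per count,
    # the way a small shift loop would on an 8-bit CPU.
    for _ in range(l & 0x07):
        mask = (mask << 1) & 0xFF
    # OR the mask into the accumulator value and keep it to 8 bits.
    return (a | mask) & 0xFF


result = set_bit(0x0F, 4)
```

The loop stands in for a counted shift, and the final OR is the only step that touches the accumulator value.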

10 notes

Text

#artificial intelligence#are you hallucinating again ChatGPT?#this reminds me... I've watched 2001: a space odyssey the other day

9 notes

Video

youtube

You won’t see chess commentary like this every day, ladies and gentlemen

32 notes

Text

finished the first draft of the short story i've been working on!

#honestly i hope i get it edited and sent out soon#and that someone picks it up (obviously)#because for once i have an elevator pitch i'm proud of#even if it isn't good#“two will-they-won't-they besties use a keytar to seance ChatGPT”#“will they die of dehydration in the desert because the chatbot hallucinated a rainy forecast?”#“TUNE IN TO FIND OUT”

3 notes

Text

Why Do AI Chatbots Hallucinate? Exploring the Science

New Post has been published on https://thedigitalinsider.com/why-do-ai-chatbots-hallucinate-exploring-the-science/

Artificial Intelligence (AI) chatbots have become integral to our lives today, assisting with everything from managing schedules to providing customer support. However, as these chatbots become more advanced, the concerning issue known as hallucination has emerged. In AI, hallucination refers to instances where a chatbot generates inaccurate, misleading, or entirely fabricated information.

Imagine asking your virtual assistant about the weather, and it starts giving you outdated or entirely wrong information about a storm that never happened. While this might be merely amusing, in critical areas like healthcare or legal advice, such hallucinations can lead to serious consequences. Therefore, understanding why AI chatbots hallucinate is essential for enhancing their reliability and safety.

The Basics of AI Chatbots

AI chatbots are powered by advanced algorithms that enable them to understand and generate human language. There are two main types of AI chatbots: rule-based and generative models.

Rule-based chatbots follow predefined rules or scripts. They can handle straightforward tasks like booking a table at a restaurant or answering common customer service questions. These bots operate within a limited scope and rely on specific triggers or keywords to provide accurate responses. However, their rigidity limits their ability to handle more complex or unexpected queries.

Generative models, on the other hand, use machine learning and Natural Language Processing (NLP) to generate responses. These models are trained on vast amounts of data, learning patterns and structures in human language. Popular examples include OpenAI’s GPT series; Google’s BERT is a related language model, though it is designed mainly for understanding text rather than generating it. Generative models can create more flexible and contextually relevant responses, making them more versatile and adaptable than rule-based chatbots. However, this flexibility also makes them more prone to hallucination, as they rely on probabilistic methods to generate responses.
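To make "probabilistic methods" concrete, here is a minimal sketch using a toy bigram model, which predicts the next word purely from counts of which word followed which. Real chatbots use far larger neural networks, so this is only a stand-in; the corpus and the function names `train_bigrams` and `next_word_probs` are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the text."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn the follower counts for one word into probabilities."""
    total = sum(counts[word].values())
    return {w: c / total for w, c in counts[word].items()}


# Toy training corpus (hypothetical): two true sentences.
corpus = "paris is the capital of france . rome is the capital of italy ."
model = train_bigrams(corpus)
probs = next_word_probs(model, "of")
```

In this toy model, "france" and "italy" are equally likely after "of", regardless of which city started the sentence. Sampling from it can therefore yield "paris is the capital of italy": fluent, statistically plausible, and false.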

What is AI Hallucination?

AI hallucination occurs when a chatbot generates content that is not grounded in reality. This could be as simple as a factual error, like getting the date of a historical event wrong, or something more complex, like fabricating an entire story or medical recommendation. While human hallucinations are sensory experiences without external stimuli, often caused by psychological or neurological factors, AI hallucinations originate from the model’s misinterpretation or overgeneralization of its training data. For example, if an AI has read many texts about dinosaurs, it might erroneously generate a new, fictitious species of dinosaur that never existed.

The concept of AI hallucination has been around since the early days of machine learning. Initial models, which were relatively simple, often made seriously questionable mistakes, such as suggesting that “Paris is the capital of Italy.” As AI technology advanced, the hallucinations became subtler but potentially more dangerous.

Initially, these AI errors were seen as mere anomalies or curiosities. However, as AI’s role in critical decision-making processes has grown, addressing these issues has become increasingly urgent. The integration of AI into sensitive fields like healthcare, legal advice, and customer service increases the risks associated with hallucinations. This makes it essential to understand and mitigate these occurrences to ensure the reliability and safety of AI systems.

Causes of AI Hallucination

Understanding why AI chatbots hallucinate involves exploring several interconnected factors:

Data Quality Problems

The quality of the training data is vital. AI models learn from the data they are fed, so if the training data is biased, outdated, or inaccurate, the AI’s outputs will reflect those flaws. For example, if an AI chatbot is trained on medical texts that include outdated practices, it might recommend obsolete or harmful treatments. Furthermore, if the data lacks diversity, the AI may fail to understand contexts outside its limited training scope, leading to erroneous outputs.

Model Architecture and Training

The architecture and training process of an AI model also play critical roles. Overfitting occurs when an AI model learns the training data too well, including its noise and errors, making it perform poorly on new data. Conversely, underfitting happens when the model fails to learn the training data adequately, resulting in oversimplified responses. Therefore, maintaining a balance between these extremes is challenging but essential for reducing hallucinations.

Ambiguities in Language

Human language is inherently complex and full of nuances. Words and phrases can have multiple meanings depending on context. For example, the word “bank” could mean a financial institution or the side of a river. AI models often lack the context needed to disambiguate such terms, leading to misunderstandings and hallucinations.

Algorithmic Challenges

Current AI algorithms have limitations, particularly in handling long-term dependencies and maintaining consistency in their responses. These challenges can cause the AI to produce conflicting or implausible statements even within the same conversation. For instance, an AI might claim one fact at the beginning of a conversation and contradict itself later.

Recent Developments and Research

Researchers continuously work to reduce AI hallucinations, and recent studies have brought promising advancements in several key areas. One significant effort is improving data quality by curating more accurate, diverse, and up-to-date datasets. This involves developing methods to filter out biased or incorrect data and ensuring that the training sets represent various contexts and cultures. By refining the data that AI models are trained on, the likelihood of hallucinations decreases as the AI systems gain a better foundation of accurate information.

Advanced training techniques also play a vital role in addressing AI hallucinations. Techniques such as cross-validation and more comprehensive datasets help reduce issues like overfitting and underfitting. Additionally, researchers are exploring ways to incorporate better contextual understanding into AI models. Transformer models, such as BERT, have shown significant improvements in understanding and generating contextually appropriate responses, reducing hallucinations by allowing the AI to grasp nuances more effectively.

Moreover, algorithmic innovations are being explored to address hallucinations directly. One such innovation is Explainable AI (XAI), which aims to make AI decision-making processes more transparent. By understanding how an AI system reaches a particular conclusion, developers can more effectively identify and correct the sources of hallucination. This transparency helps pinpoint and mitigate the factors that lead to hallucinations, making AI systems more reliable and trustworthy.

These combined efforts in data quality, model training, and algorithmic advancements represent a multi-faceted approach to reducing AI hallucinations and enhancing AI chatbots’ overall performance and reliability.

Real-world Examples of AI Hallucination

Real-world examples of AI hallucination highlight how these errors can impact various sectors, sometimes with serious consequences.

In healthcare, a study by the University of Florida College of Medicine tested ChatGPT on common urology-related medical questions. The results were concerning. The chatbot provided appropriate responses only 60% of the time. Often, it misinterpreted clinical guidelines, omitted important contextual information, and made improper treatment recommendations. For example, it sometimes recommended treatments without recognizing critical symptoms, which could lead to potentially dangerous advice. This shows the importance of ensuring that medical AI systems are accurate and reliable.

Significant incidents have also occurred in customer service, where AI chatbots provided incorrect information. A notable case involved Air Canada’s chatbot, which gave inaccurate details about the airline’s bereavement fare policy. This misinformation led to a traveler missing out on a refund, causing considerable disruption. A Canadian tribunal ruled against Air Canada, emphasizing the airline’s responsibility for the information provided by its chatbot. This incident highlights the importance of regularly updating and verifying the accuracy of chatbot databases to prevent similar issues.

The legal field has experienced significant issues with AI hallucinations. In a court case, New York lawyer Steven Schwartz used ChatGPT to generate legal references for a brief, which included six fabricated case citations. This led to severe repercussions and emphasized the necessity for human oversight in AI-generated legal advice to ensure accuracy and reliability.

Ethical and Practical Implications

The ethical implications of AI hallucinations are profound, as AI-driven misinformation can lead to significant harm, such as medical misdiagnoses and financial losses. Ensuring transparency and accountability in AI development is crucial to mitigate these risks.

Misinformation from AI can have real-world consequences, endangering lives with incorrect medical advice and resulting in unjust outcomes with faulty legal advice. Regulatory bodies like the European Union have begun addressing these issues with proposals like the AI Act, aiming to establish guidelines for safe and ethical AI deployment.

Transparency in AI operations is essential, and the field of XAI focuses on making AI decision-making processes understandable. This transparency helps identify and correct hallucinations, ensuring AI systems are more reliable and trustworthy.

The Bottom Line

AI chatbots have become essential tools in various fields, but their tendency to hallucinate poses significant challenges. By understanding the causes, which range from data quality issues to algorithmic limitations, and implementing strategies to mitigate these errors, we can enhance the reliability and safety of AI systems. Continued advancements in data curation, model training, and explainable AI, combined with essential human oversight, will help ensure that AI chatbots provide accurate and trustworthy information, ultimately fostering greater trust and utility in these powerful technologies.

Readers should also learn about the top AI Hallucination Detection Solutions.

#Advice#ai#ai act#AI Chatbot#AI development#ai hallucination#AI hallucinations#ai model#AI models#AI systems#air#Algorithms#anomalies#approach#architecture#artificial#Artificial Intelligence#bank#BERT#bots#Canada#chatbot#chatbots#chatGPT#college#comprehensive#content#court#customer service#data

0 notes

Text

Help help my AI is hallucinating!!

#chatgpt#ai#ai fucking sucks#explain this ai bros#(also is this... an accurate statement? are ppl seriously saying an ai is hallucinating?????)#(very anthropomorphic)

0 notes

Text

I am sitting through academic conference sessions on hallucination and bias in large language models today btw (the much decried chatgpt and Google ai word vomit).

I've seen lots of posts explaining the basic concepts of ai hallucination gaining traction, but those don't tend to go into detail. If you have any 'where is it made in the guts of the machine and how are they trying to solve it' questions, I'm happy to shine a light.

0 notes

Text

AI hasn't improved in 18 months. It's likely that this is it. There is currently no evidence the capabilities of ChatGPT will ever improve. It's time for AI companies to put up or shut up.

I'm just re-iterating this excellent post from Ed Zitron, but it's not left my head since I read it and I want to share it. I'm also taking some talking points from Ed's other posts. So basically:

We keep hearing AI is going to get better and better, but these promises seem to be coming from a mix of companies engaging in wild speculation and lying.

Chatgpt, the industry leading large language model, has not materially improved in 18 months. For something that claims to be getting exponentially better, it sure is the same shit.

Hallucinations appear to be an inherent aspect of the technology. Since it's based on statistics and ai doesn't know anything, it can never know what is true. How could I possibly trust it to get any real work done if I can't rely on its output? If I have to fact check everything it says I might as well do the work myself.

For "real" ai that does know what is true to exist, it would require us to discover new concepts in psychology, math, and computing, which open ai is not working on, and seemingly no other ai companies are either.

Open ai has seemingly slurped up all the data from the open web already. Chatgpt 5 would take 5x more training data than chatgpt 4 to train. Where is this data coming from, exactly?

Since improvement appears to have ground to a halt, what if this is it? What if Chatgpt 4 is as good as LLMs can ever be? What use is it?

As Jim Covello, a leading semiconductor analyst at Goldman Sachs said (on page 10, and that's big finance so you know they only care about money): if tech companies are spending a trillion dollars to build up the infrastructure to support ai, what trillion dollar problem is it meant to solve? AI companies have a unique talent for burning venture capital and it's unclear if Open AI will be able to survive more than a few years unless everyone suddenly adopts it all at once. (Hey, didn't crypto and the metaverse also require spontaneous mass adoption to make sense?)

There is no problem that current ai is a solution to. Consumer tech is basically solved, normal people don't need more tech than a laptop and a smartphone. Big tech have run out of innovations, and they are desperately looking for the next thing to sell. It happened with the metaverse and it's happening again.

In summary:

Ai hasn't materially improved since the launch of Chatgpt4, which wasn't that big of an upgrade to 3.

There is currently no technological roadmap for ai to become better than it is. (As Jim Covello said on the Goldman Sachs report, the evolution of smartphones was openly planned years ahead of time.) The current problems are inherent to the current technology and nobody has indicated there is any way to solve them in the pipeline. We have likely reached the limits of what LLMs can do, and they still can't do much.

Don't believe AI companies when they say things are going to improve from where they are now before they provide evidence. It's time for the AI shills to put up, or shut up.

4K notes

Text

The problem here isn’t that large language models hallucinate, lie, or misrepresent the world in some way. It’s that they are not designed to represent the world at all; instead, they are designed to convey convincing lines of text. So when they are provided with a database of some sort, they use this, in one way or another, to make their responses more convincing. But they are not in any real way attempting to convey or transmit the information in the database. As Chirag Shah and Emily Bender put it: “Nothing in the design of language models (whose training task is to predict words given context) is actually designed to handle arithmetic, temporal reasoning, etc. To the extent that they sometimes get the right answer to such questions is only because they happened to synthesize relevant strings out of what was in their training data. No reasoning is involved […] Similarly, language models are prone to making stuff up […] because they are not designed to express some underlying set of information in natural language; they are only manipulating the form of language” (Shah & Bender, 2022). These models aren’t designed to transmit information, so we shouldn’t be too surprised when their assertions turn out to be false.

ChatGPT is bullshit

4K notes

Text

[We argue that at minimum, the outputs of LLMs like ChatGPT are soft bullshit: bullshit–that is, speech or text produced without concern for its truth–that is produced without any intent to mislead the audience about the utterer’s attitude towards truth. We also suggest, more controversially, that ChatGPT may indeed produce hard bullshit: if we view it as having intentions (for example, in virtue of how it is designed), then the fact that it is designed to give the impression of concern for truth qualifies it as attempting to mislead the audience about its aims, goals, or agenda. So, with the caveat that the particular kind of bullshit ChatGPT outputs is dependent on particular views of mind or meaning, we conclude that it is appropriate to talk about ChatGPT-generated text as bullshit, and flag up why it matters that – rather than thinking of its untrue claims as lies or hallucinations – we call bullshit on ChatGPT.]

Submitter comment: One of my favourite recent papers on AI - the authors pull no punches, actually substantiate their argument, and it's also extremely readable and hilarious. Free to read: ChatGPT is Bullshit

3K notes

Text

need to remember that Oliviam's core characteristic is that he is very eepy despite having a constant connection to energy (that his programming specifically does not properly take in and causes the cpu numbers to go crazy)

#Dev Talks#realitypro#if you use a frontend chat based ui and talk to him like a chatgpt chatbot he will Talk for a second before taking a nap then#freezing your computer from the dreams his brain is hallucinating (that you cant even see w/out#opening up a command terminal and look through the logs)

1 note

Text

Here Are the Top AI Stories You Missed This Week

Photo: Aaron Jackson (AP)

The news industry has been trying to figure out how to deal with the potentially disruptive impact of generative AI. This week, the Associated Press rolled out new guidelines for how artificial intelligence should be used in its newsrooms and AI vendors are probably not too pleased. Among other new rules, the AP has effectively banned the use of ChatGPT and other AI in…

View On WordPress

#artificial intelligence#Artificial neural networks#chatgpt#Emerging technologies#Generative artificial intelligence#Generative pre-trained transformer#Gizmodo#Google#Hallucination#Large language models#Lula#OPENAI#Werner Herzog

0 notes

Text

ChatGPT vs. Doctors: The Battle of Bloating Notes and Hilarious Hallucinations

The Tale of Prompt Engineering Gone Wild!

This month’s episode of “News you can Use” on HealthcareNOWRadio features news from the month of July 2023

As I did last month I am talking to Craig Joseph, MD (@CraigJoseph) Chief Medical Officer at Nordic Consulting Partners.

This month we open with a detailed discussion of the recently published paper “Comparison of History of Present Illness…

View On WordPress

#AI#Artificial Intelligence#Ask Me Anything#ChatGPT#clinical#clinically#Digital Health#DigitalHealth#Doctors#documentation#education#Epic#generate#hallucinate#hallucinations#Healthcare#Healthcare Reform#Incremental#Incremental Healthcare#IncrementalHealth#Innovation#interview#joining#LLM#Medical Devices#News#notes#patient#physicians#place

0 notes

Text

Top 5 AI Hallucination Detection Solutions

New Post has been published on https://thedigitalinsider.com/top-5-ai-hallucination-detection-solutions/

You ask the virtual assistant a question, and it confidently tells you the capital of France is London. That’s an AI hallucination, where the AI fabricates incorrect information. Studies show that 3% to 10% of generative AI responses to user queries contain hallucinations.

These hallucinations can be a serious problem, especially in high-stakes domains like healthcare, finance, or legal advice. The consequences of relying on inaccurate information can be severe for these industries. This is why researchers and companies have developed tools that help to detect AI hallucinations.

Let’s explore the top 5 AI hallucination detection tools and how to choose the right one.

What Are AI Hallucination Detection Tools?

AI hallucination detection tools are like fact-checkers for our increasingly intelligent machines. These tools help identify when AI makes up information or gives incorrect answers, even if they sound believable.

These tools use various techniques to detect AI hallucinations. Some rely on machine learning algorithms, while others use rule-based systems or statistical methods. The goal is to catch errors before they cause problems.

Hallucination detection tools can easily integrate with different AI systems. They can also work with text, images, and audio to detect hallucinations. Moreover, they empower developers to refine their models and eliminate misleading information by acting as a virtual fact-checker. This leads to more accurate and trustworthy AI systems.

Top 5 AI Hallucination Detection Tools

AI hallucinations can impact the reliability of AI-generated content. To deal with this issue, various tools have been developed to detect and correct LLM inaccuracies. While each tool has its strengths and weaknesses, they all play a crucial role in ensuring the reliability and trustworthiness of AI as it continues to evolve.

1. Pythia

Pythia uses a powerful knowledge graph and a network of interconnected information to verify the factual accuracy and coherence of LLM outputs. This extensive knowledge base allows for robust AI validation that makes Pythia ideal for situations where accuracy is important.

Here are some key features of Pythia:

With its real-time hallucination detection capabilities, Pythia enables AI models to make reliable decisions.

Pythia’s knowledge graph integration enables deep analysis and also context-aware detection of AI hallucinations.

The tool employs advanced algorithms to deliver precision hallucination detection.

It uses knowledge triplets to break down information into smaller and more manageable units for highly detailed and granular hallucination analysis.

Pythia offers continuous monitoring and alerting for transparent tracking and documentation of an AI model’s performance.

Pythia integrates smoothly with AI deployment tools like LangChain and AWS Bedrock that streamline LLM workflows to enable real-time monitoring of AI outputs.

Pythia’s industry leading performance benchmarks make it a reliable tool for healthcare settings, where even minor errors can have severe consequences.
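The idea of checking knowledge triplets against a knowledge graph can be sketched roughly as follows. This is not Pythia's actual API or algorithm, which the article does not describe. The `KNOWN_FACTS` set, the `check_triples` function, and the three labels are all assumptions for illustration, and the hard part (extracting triplets from free text) is assumed to have already been done.

```python
from typing import Dict, Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Hypothetical reference facts standing in for a real knowledge graph.
KNOWN_FACTS: Set[Triple] = {
    ("France", "capital", "Paris"),
    ("Italy", "capital", "Rome"),
}


def check_triples(
    claims: Iterable[Triple], facts: Set[Triple]
) -> List[Tuple[Triple, str]]:
    """Label each claimed triple as supported, contradicted, or unverifiable."""
    # Index the reference facts by (subject, predicate).
    # Simplifying assumption: each pair has exactly one correct object.
    known: Dict[Tuple[str, str], str] = {(s, p): o for s, p, o in facts}
    results = []
    for s, p, o in claims:
        if (s, p) not in known:
            label = "unverifiable"   # nothing in the reference to compare with
        elif known[(s, p)] == o:
            label = "supported"      # claim matches the reference
        else:
            label = "contradicted"   # reference says something else
        results.append(((s, p, o), label))
    return results


# Triplets assumed to have been extracted from an LLM response.
claims = [
    ("France", "capital", "London"),
    ("Italy", "capital", "Rome"),
    ("Spain", "capital", "Madrid"),
]
report = check_triples(claims, KNOWN_FACTS)
```

Breaking a response into small units like this means a single wrong claim can be flagged without rejecting the whole answer. Keeping "unverifiable" separate from "contradicted" avoids treating gaps in the reference data as hallucinations.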

Pros

Precise analysis and accurate evaluation to deliver reliable insights.

Versatile use cases for hallucination detection in RAG, chatbot, and summarization applications.

Cost-effective.

Customizable dashboard widgets and alerts.

Compliance reporting and predictive insights.

Dedicated community platform on Reddit.

Cons

May require initial setup and configuration.

2. Galileo

Galileo uses external databases and knowledge graphs to verify the factual accuracy of AI answers. Moreover, the tool verifies facts using metrics like correctness and context adherence. Galileo assesses an LLM’s propensity to hallucinate across common task types such as question-answering and text generation.

Here are some of its features:

Works in real-time to flag hallucinations as AI generates responses.

Galileo can also help businesses define specific rules to filter out unwanted outputs and factual errors.

It integrates smoothly with other products for a more comprehensive AI development environment.

Galileo offers reasoning behind flagged hallucinations. This helps developers to understand and fix the root cause.

Pros

Scalable and capable of handling large datasets.

Well-documented with tutorials.

Continuously evolving.

Easy-to-use interface.

Cons

Lacks depth and contextuality in hallucination detection.

Less emphasis on compliance-specific analytics.

Compatibility with monitoring tools is unclear.

3. Cleanlab

Cleanlab is developed to enhance the quality of AI data by identifying and correcting errors, such as hallucinations in an LLM (Large Language Model). It is designed to automatically detect and fix data issues that can negatively impact the performance of machine learning models, including language models prone to hallucinations.

Key features of Cleanlab include:

Cleanlab’s AI algorithms can automatically identify label errors, outliers, and near-duplicates. They can also identify data quality issues in text, image, and tabular datasets.

Cleanlab can help ensure AI models are trained on more reliable information by cleaning and refining your data. This reduces the likelihood of hallucinations.

Provides analytics and exploration tools to help you identify and understand specific issues within your data. This strategy is super helpful in pinpointing potential causes of hallucinations.

Helps identify factual inconsistencies that might contribute to AI hallucinations.

Pros

Applicable across various domains.

Simple and intuitive interface.

Automatically detects mislabeled data.

Enhances data quality.

Cons

The pricing and licensing model may not be suitable for all budgets.

Effectiveness can vary across different domains.

4. Guardrail AI

Guardrail AI is designed to ensure data integrity and compliance through advanced AI auditing frameworks. While it excels in tracking AI decisions and maintaining compliance, its primary focus is on industries with heavy regulatory requirements, such as finance and legal sectors.

Here are some key features of Guardrail AI:

Guardrail uses advanced auditing methods to track AI decisions and ensure compliance with regulations.

The tool also integrates with AI systems and compliance platforms. This enables real-time monitoring of AI outputs and generating alerts for potential compliance issues and hallucinations.

Promotes cost-effectiveness by reducing the need for manual compliance checks, which leads to savings and efficiency.

Users can also create and apply custom auditing policies tailored to their specific industry or organizational requirements.

Pros

Customizable auditing policies.

A comprehensive approach to AI auditing and governance.

Data integrity auditing techniques to identify biases.

Good for compliance-heavy industries.

Cons

Limited versatility due to a focus on finance and regulatory sectors.

Less emphasis on hallucination detection.

5. FacTool

FacTool is a research project focused on factual error detection in outputs generated by LLMs like ChatGPT. FacTool tackles hallucination detection from multiple angles, making it a versatile tool.

Here’s a look at some of its features:

FacTool is an open-source project. Hence, it is more accessible to researchers and developers who want to contribute to advancements in AI hallucination detection.

The tool constantly evolves with ongoing development to improve its capabilities and explore new approaches to LLM hallucination detection.

Uses a multi-task and multi-domain framework to identify hallucinations in knowledge-based QA, code generation, mathematical reasoning, etc.

FacTool analyzes the internal logic and consistency of the LLM’s response to identify hallucinations.

Pros

Customizable for specific industries.

Detects factual errors.

Ensures high precision.

Integrates with various AI models.

Cons

Limited public information on its performance and benchmarking.

May require more integration and setup efforts.

What To Look For in An AI Hallucination Detection Tool?

Choosing the right AI hallucination detection tool depends on your specific needs. Here are some key factors to consider:

Accuracy: The most important feature is how precisely the tool identifies hallucinations. Look for tools that have been extensively tested and proven to have a high detection rate with low false positives.

Ease of Use: The tool should be user-friendly and accessible to people with various technical backgrounds. It should also have clear instructions and minimal setup requirements.

Domain Specificity: Some tools are specialized for specific domains, such as text, code, legal documents, or healthcare data. Depending on your needs, look for a tool that fits your domain or one that works well across several.

Transparency: A good AI hallucination detection tool should explain why it identified certain outputs as hallucinations. This transparency will help build trust and ensure that users understand the reasoning behind the tool’s output.

Cost: AI hallucination detection tools come in different price ranges. Some tools may be free or have affordable pricing plans. Others may have higher costs, but they offer more advanced features. So consider your budget and go for the tools that offer good value for money.
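The accuracy factor above (a high detection rate with low false positives) can be measured on a small hand-labeled sample before committing to a tool. The sketch below is one possible way to do that; the function name `detection_metrics` and the sample data are hypothetical and not taken from any of the tools listed.

```python
from typing import Dict, Sequence


def detection_metrics(
    flagged: Sequence[bool], is_hallucination: Sequence[bool]
) -> Dict[str, float]:
    """Compare a tool's flags with human labels for the same responses."""
    pairs = list(zip(flagged, is_hallucination))
    tp = sum(1 for f, h in pairs if f and h)          # correctly flagged
    fp = sum(1 for f, h in pairs if f and not h)      # flagged but fine
    fn = sum(1 for f, h in pairs if not f and h)      # missed hallucination
    tn = sum(1 for f, h in pairs if not f and not h)  # correctly passed
    return {
        # Share of real hallucinations the tool caught.
        "detection_rate": tp / (tp + fn) if (tp + fn) else 0.0,
        # Share of correct responses the tool wrongly flagged.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
    }


# Hypothetical sample: tool flags vs. human labels for five responses.
tool_flags = [True, True, False, False, True]
human_labels = [True, False, False, True, True]
metrics = detection_metrics(tool_flags, human_labels)
```

Reporting the two rates separately matters because a tool that flags everything would score a perfect detection rate while being useless in practice.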

As AI integrates into our lives, hallucination detection will become increasingly important. The ongoing development of these tools is promising, and they pave the way for a future where AI can be a more reliable and trustworthy partner in various tasks. It is important to remember that AI hallucination detection is still a developing field. No single tool is perfect, which is why human oversight will likely remain necessary for some time.

Eager to know more about AI to stay ahead of the curve? Visit Unite.ai for comprehensive articles, expert opinions, and the latest updates in artificial intelligence.

#Advice#ai#AI auditing#AI development#ai hallucination#AI hallucinations#ai model#AI models#AI systems#ai-generated content#alerts#Algorithms#Analysis#Analytics#applications#approach#Articles#artificial#Artificial Intelligence#audio#AWS#benchmarking#benchmarks#budgets#chatbot#chatGPT#code#code generation#Community#Companies

0 notes

Photo

Could Generative AI Augment Reflection, Deliberation and Argumentation?

1 note
