#cloud-computing users need


I don't know I'm not done talking about it. It's insane that I can't just uninstall Edge or Copilot. That websites require my phone number to sign up. That people share their contacts to find their friends on social media.

I wouldn't use an adblocker if ads were just banners on the side funding a website I enjoy using and want to support. Ads pop up invasively and fill my whole screen, I misclick and get warped away to another page just for trying to read an article or get a recipe.

Every app shouldn't be like every other app. Instagram didn't need reels and a shop. TikTok doesn't need a store. Instagram doesn't need to be connected to Facebook. I don't want my apps to do everything, I want a hub for a specific thing, and I'll go to that place accordingly.

I love discord, but so much information gets lost to it. I don't want to join to view things. I want to lurk on forums. I want to be a user who can log in and join a conversation by replying to a thread, even if that conversation was two days ago. I know discord has threads, it's not the same. I don't want to have to verify my account with a phone number. I understand safety and digital concerns, but I'm concerned about information like that with leaks everywhere, even with password managers.

I shouldn't have to pay subscriptions to use services and get locked out of old versions. My old disk copy of photoshop should work. I should want to upgrade eventually because I like photoshop and supporting the business. Adobe is a whole other can of worms here.

Streaming is so splintered across everything. Shows release so fast. Things don't get physical releases. I can't stream a movie I own digitally to friends because the share-screen blocks it, even though I own two digital copies, even though I own a physical copy.

I have an iPod, and I had to install a third party OS to easily put my music on it without having to tangle with iTunes. Spotify bricked hardware I purchased because they were unwilling to maintain it. They don't pay their artists. iTunes isn't even iTunes anymore and Apple struggles to maintain it.

My TV shows me ads on the home screen. My dad lost access to eBooks he purchased because they were digital and got revoked by the company distributing them. Hitman 1-3 only runs online most of the time. Flash died and is staying alive because people love it and made efforts to keep it up.

I have to click "not now" and can't click "no". I don't just get emails, they want to text me to purchase things online too. My windows start search bar searches online, not just my computer. Everything is blindly called an app now. Everything wants me to upload to the cloud. These are good tools! But why am I forced to use them! Why am I not allowed to own or control them?

No more!!!!! I love my iPod with so much storage and FLAC files. I love having all my fics on my hard drive. I love having USBs and backups. I love running scripts to gut suck stuff out of my Windows computer I don't want that spies on me. I love having forums. I love sending letters. I love neocities and webpages and webrings. I will not be scanning QR codes. Please hand me a physical menu. If I didn't need a smartphone for work I'd get a "dumb" phone so fast. I want things to have buttons. I want to use a mouse. I want replaceable batteries. I want the right to repair. I grew up online and I won't forget how it was!

67K notes


I think every computer user needs to read this because holy fucking shit this is fucking horrible.

So Windows has a new feature incoming called Recall where your computer will, first, monitor everything you do with screenshots every couple of seconds and "process that" with an AI.

Hey, errrr, fuck no? This isn't merely because AI is really energy intensive to the point that it causes environmental damage. This is because it's basically surveilling what you are doing on your fucking desktop.

This AI is not going to be on your desktop. Like all AI, it's going to be done on another server, "in the cloud" to be precise, so all that data and those screenshots? They're going to go off to Microsoft. Microsoft are going to be monitoring what you do on your own computer.

Now of course Microsoft are going to be all "oooh, it's okay, we'll keep your data safe". They won't. Let me just remind you that evidence given over from Facebook has been used to prosecute a mother and daughter for an "illegal abortion", Microsoft will likely do the same.

And before someone goes "durrr, nuthin' to fear, nuthin to hide", let me remind you that you can be doing completely legal and righteous acts and still have the police on your arse. Are you an activist? Don't even need to be a hacktivist, you can just be very vocal about something concerning and have the fucking police on your arse. They did this with environmental protesters in the UK. The culture war against transgender people looks likely to be heading in a direction wherein people looking for information on transgender people or help transitioning will be tracked down too. You have plenty to hide from the government, including your opinions and ideas.

Again, look into backing up your shit and switching to Linux Mint or Ubuntu to get away from Microsoft doing this shit.

44K notes


I would like to address something that has come up several times since I relaunched my computer recommendation blog two weeks ago. Part of the reason that I started @okay-computer and that I continue to host my computer-buying-guide is that it is part of my job to buy computers every day.

I am extremely conversant with pricing trends and specification norms for computers, because literally I quoted seven different laptops with different specs at different price-points *today* and I will do more of the same on Monday.

Now, I am holding your face in my hands. I am breathing in sync with you. We are communicating. We are on the same page. Listen.

Computer manufacturers don't expect users to store things locally so it is no longer standard to get a terabyte of storage in a regular desktop or laptop. You're lucky if you can find one with a 512gb ssd that doesn't have an obnoxious markup because of it.

If you think that the norm is for computers to come with 1tb of storage as a matter of course, you are seeing things from a narrow perspective that is out of step with most of the hardware out there.

I went from a standard expectation of a 1tb hdd five years ago, to expecting to get a computer with a 1tb hdd that we would pull and replace with a 1tb ssd, to expecting to get a computer that came with a 256gb ssd that we would pull and replace with a 1tb ssd, to just having the 256gb ssd come standard and only seeking out more storage if the customer specifically requested it, because otherwise they don't want to pay for more storage.

Computer manufacturers consider any storage above 256gb to be a premium feature these days.

Look, here's a search for Lenovo Laptops with 16GB RAM (what I would consider the minimum in today's market) and a Win11 home license (not because I prefer that, but to exclude chromebooks and business machines). Here are the storage options that come up for those specs:

You will see that the majority of the options come with less than a terabyte of storage. You CAN get plenty of options with 1tb, but the point of Okay-Computer is to get computers with reasonable specs in an affordable price range. These days, that mostly means half a terabyte of storage (because I can't bring myself to *recommend* less than that but since most people carry stuff in their personal cloud these days, it's overkill for a lot of people)

All things being equal, 500gb more increases the price of this laptop by $150:

It brings this one up by $130:

This one costs $80 more to go from 256 to 512 and there isn't an option for 1TB.
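To put those three upgrade prices on the same footing, you can work out the markup per added gigabyte. This is only a rough sketch using the figures quoted above: the laptop labels are placeholders (no specific models are named here), and the first two upgrades are taken as the 500gb stated above.

```python
# Upgrade prices quoted above: (label, extra storage in GB, extra cost in USD).
# The labels are placeholders; they are not real model names.
upgrades = [
    ("laptop A", 500, 150),
    ("laptop B", 500, 130),
    ("laptop C", 256, 80),  # the 256gb -> 512gb upgrade
]


def markup_per_gb(extra_gb, extra_usd):
    """Return the markup in dollars per added gigabyte."""
    return extra_usd / extra_gb


for label, extra_gb, extra_usd in upgrades:
    print(f"{label}: ${markup_per_gb(extra_gb, extra_usd):.2f} per extra GB")
```

On these numbers the markup lands somewhere around 26 to 31 cents per extra gigabyte, which is the figure to keep in mind when comparing against the retail price of a whole drive.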

For the last three decades storage has been getting cheaper and cheaper and cheaper, to the point that storage was basically a negligible cost when HDDs were still the standard. With the change to SSDs that cost increased significantly and, while it has come down, we have not reached the cheap, large storage as-a-standard on laptops stage; this is partially because storage is now SO cheap that people want to entice you into paying a few dollars a month to use huge amounts of THEIR storage instead of carrying everything you own in your laptop.

You will note that 1tb ssds cost you a lot less than the markup to pay for a 1tb ssd instead of a 500gb ssd

In fact it can be LESS EXPENSIVE to get a 1tb ssd than a 500gb ssd.

This is because computer manufacturers are, generally speaking, kind of shitty and do not care about you.

I stridently recommend getting as much storage as you can on your computer. If you can't get the storage you want up front, I recommend upgrading your storage.

But also: in the current market (December 2024), you should not expect to find desktops or laptops in the low-mid range pricing tier with more than 512gb of storage. Sometimes you'll get lucky, but you shouldn't be expecting it - if you need more storage and you need an inexpensive computer, you need to expect to upgrade that component yourself.

So, if you're looking at a computer I linked and saying "32GB of RAM and an i7 processor but only 500GB of storage? What kind of nonsense is that?" Then I would like to present you with one of the computers I had to quote today:

A three thousand dollar macbook with the most recent apple silicon (the m4 released like three weeks ago) and 48 FUCKING GIGABYTES OF RAM with a 512gb ssd.

You can't even upgrade that SSD! That's an Apple, that drive isn't going fucking anywhere! (don't buy apple, apple is shit)

The norms have shifted! It sucks, but you have to be aware of these kinds of things if you want to pay a decent price for a computer and know what you're getting into.

5K notes


So, let me try and put everything together here, because I really do think it needs to be talked about.

Today, Unity announced that it intends to apply a fee to use its software. Then it got worse.

For those not in the know, Unity is the most popular free to use video game development tool, offering a basic version for individuals who want to learn how to create games or create independently alongside paid versions for corporations or people who want more features. It's decent enough at this job, has issues but for the price point I can't complain, and is the ideal entry point into creating in this medium. It's a very important piece of software.

But speaking of tools, the CEO is a massive one. When he was the COO of EA, he advocated for using what out and out sounds like emotional manipulation to coerce players into microtransactions.

"A consumer gets engaged in a property, they might spend 10, 20, 30, 50 hours on the game and then when they're deep into the game they're well invested in it. We're not gouging, but we're charging and at that point in time the commitment can be pretty high."

He also called game developers who don't discuss monetization early in the planning stages of development, quote, "fucking idiots".

So that sets the stage for what might be one of the most bald-faced greediest moves I've seen from a corporation in a minute. Most at least have the sense of self-preservation to hide it.

A few hours ago, Unity posted this announcement on the official blog.

Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November. We are introducing a Unity Runtime Fee that is based upon each time a qualifying game is downloaded by an end user. We chose this because each time a game is downloaded, the Unity Runtime is also installed. Also we believe that an initial install-based fee allows creators to keep the ongoing financial gains from player engagement, unlike a revenue share.

Now there are a few red flags to note in this pitch immediately.

Unity is planning on charging a fee on all games which use its engine.

This is a flat fee per number of installs.

They are using an always online runtime function to determine whether a game is downloaded.

There are just so many things wrong with this that it's hard to know where to start, not helped by this FAQ which doubled down on a lot of the major issues people had.

I guess let's start with what people noticed first. Because it's using a system baked into the software itself, Unity would not be differentiating between a "purchase" and a "download". If someone uninstalls and reinstalls a game, that's two downloads. If someone gets a new computer or a new console and downloads a game already purchased from their account, that's two downloads. If someone pirates the game, the studio will be asked to pay for that download.

Q: How are you going to collect installs?
A: We leverage our own proprietary data model. We believe it gives an accurate determination of the number of times the runtime is distributed for a given project.

Q: Is software made in unity going to be calling home to unity whenever it's ran, even for enterprice licenses?
A: We use a composite model for counting runtime installs that collects data from numerous sources. The Unity Runtime Fee will use data in compliance with GDPR and CCPA. The data being requested is aggregated and is being used for billing purposes.

Q: If a user reinstalls/redownloads a game / changes their hardware, will that count as multiple installs?
A: Yes. The creator will need to pay for all future installs. The reason is that Unity doesn’t receive end-player information, just aggregate data.

Q: What's going to stop us being charged for pirated copies of our games?
A: We do already have fraud detection practices in our Ads technology which is solving a similar problem, so we will leverage that know-how as a starting point. We recognize that users will have concerns about this and we will make available a process for them to submit their concerns to our fraud compliance team.

This is potentially related to a new system that will require Unity Personal developers to go online at least once every three days.

Starting in November, Unity Personal users will get a new sign-in and online user experience. Users will need to be signed into the Hub with their Unity ID and connect to the internet to use Unity. If the internet connection is lost, users can continue using Unity for up to 3 days while offline. More details to come, when this change takes effect.

It's unclear whether this requirement will be attached to any and all Unity games, though it would explain how they're theoretically able to track "the number of installs", and why the methodology for tracking these installs is so shit, as we'll discuss later.

Unity claims that it will only levy this fee on games which surpass a certain threshold of downloads and yearly revenue.

Only games that meet the following thresholds qualify for the Unity Runtime Fee: Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs. Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
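Read literally, that rule is an AND of two conditions per plan tier. Here is a minimal sketch of how I read it. The function name and the plan keys are made up for illustration, and this is not Unity's actual billing logic, which they have not published.

```python
# Thresholds as quoted from the announcement: (revenue in USD over the last
# 12 months, lifetime installs). A game qualifies only if BOTH are met.
THRESHOLDS = {
    "personal": (200_000, 200_000),
    "plus": (200_000, 200_000),
    "pro": (1_000_000, 1_000_000),
    "enterprise": (1_000_000, 1_000_000),
}


def qualifies_for_runtime_fee(plan, revenue_last_12_months, lifetime_installs):
    """Hypothetical helper: True if a game crosses both thresholds for its plan."""
    min_revenue, min_installs = THRESHOLDS[plan]
    return (revenue_last_12_months >= min_revenue
            and lifetime_installs >= min_installs)


# Enough revenue but too few installs: not eligible.
print(qualifies_for_runtime_fee("personal", 250_000, 150_000))    # False
# Barely enough revenue, huge install count: eligible.
print(qualifies_for_runtime_fee("personal", 200_000, 5_000_000))  # True
```

The second case is the worrying one: a game that only just reaches the revenue line but has a very large lifetime install count still qualifies.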

They don't say how they're going to collect information on a game's revenue, likely this is just to say that they're only interested in squeezing larger products (games like Genshin Impact and Honkai: Star Rail, Fate Grand Order, Among Us, and Fall Guys) and not every 2 dollar puzzle platformer that drops on Steam. But also, these larger products have the easiest time porting off of Unity and the most incentives to, meaning realistically those heaviest impacted are going to be the ones who just barely meet this threshold, most of them indie developers.

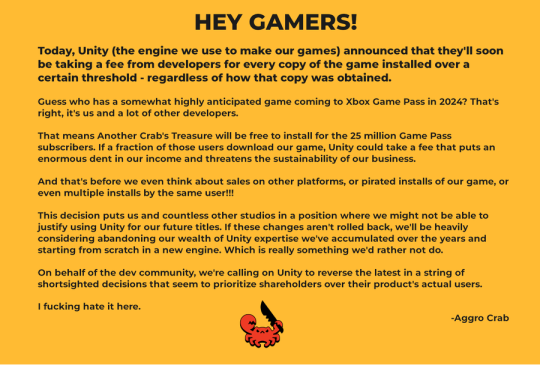

Aggro Crab Games, one of the first to properly break this story, points out that systems like the Xbox Game Pass, which is already pretty predatory towards smaller developers, will quickly inflate their "lifetime game installs", meaning that even developers just skimming the threshold of that 200k revenue will be asked to pay a fee per install, not a percentage of said revenue.

[IMAGE DESCRIPTION: Hey Gamers!

Today, Unity (the engine we use to make our games) announced that they'll soon be taking a fee from developers for every copy of the game installed over a certain threshold - regardless of how that copy was obtained.

Guess who has a somewhat highly anticipated game coming to Xbox Game Pass in 2024? That's right, it's us and a lot of other developers.

That means Another Crab's Treasure will be free to install for the 25 million Game Pass subscribers. If a fraction of those users download our game, Unity could take a fee that puts an enormous dent in our income and threatens the sustainability of our business.

And that's before we even think about sales on other platforms, or pirated installs of our game, or even multiple installs by the same user!!!

This decision puts us and countless other studios in a position where we might not be able to justify using Unity for our future titles. If these changes aren't rolled back, we'll be heavily considering abandoning our wealth of Unity expertise we've accumulated over the years and starting from scratch in a new engine. Which is really something we'd rather not do.

On behalf of the dev community, we're calling on Unity to reverse the latest in a string of shortsighted decisions that seem to prioritize shareholders over their product's actual users.

I fucking hate it here.

-Aggro Crab - END DESCRIPTION]

That fee, by the way, is a flat fee. Not a percentage, not a royalty. This means that any games made in Unity expecting any kind of success are heavily incentivized to cost as much as possible.

[IMAGE DESCRIPTION: A table listing the various fees by number of Installs over the Install Threshold vs. version of Unity used, ranging from $0.01 to $0.20 per install. END DESCRIPTION]

Basic elementary school math tells us that if a game comes out for $1.99, they will be paying, at maximum, 10% of their revenue to Unity, whereas jacking the price up to $59.99 lowers that percentage to something closer to 0.3%. Obviously any company, especially any company in financial desperation, which a sudden anchor on all your revenue is going to create, is going to choose the latter.
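For anyone who wants to check that math, here is a quick sketch. It assumes the maximum $0.20 fee from the table, exactly one billable install per sale, and that the developer keeps the full list price (no store cut), so treat the output as a ballpark rather than a real bill.

```python
MAX_FEE_PER_INSTALL = 0.20  # highest per-install fee in the table described above


def fee_share_of_price(price, fee=MAX_FEE_PER_INSTALL, installs_per_sale=1):
    """Fraction of the sale price eaten by the fee.

    Assumes one billable install per sale unless installs_per_sale says otherwise.
    """
    return fee * installs_per_sale / price


for price in (1.99, 59.99):
    print(f"${price}: {fee_share_of_price(price):.1%} of the sale price")
```

This prints about 10.1% for the $1.99 game and about 0.3% for the $59.99 one. Since reinstalls count too, passing `installs_per_sale=3` for the $1.99 game pushes the share to roughly 30%.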

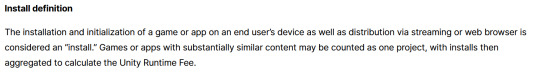

Furthermore, and following the trend of "fuck anyone who doesn't ask for money", Unity helpfully defines what an install is on their main site.

While I'm looking at this page as it exists now, it currently says

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

However, I saw a screenshot saying something different, and using the Wayback Machine we can see that this phrasing was changed at some point in the few hours since the announcement went up. The earlier version reads:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming or web browser is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

Screenshot for posterity:

That would mean web browser games made in Unity would count towards this install threshold. You could legitimately drive the count up simply by continuously refreshing the page. The FAQ, again, doubles down.

Q: Does this affect WebGL and streamed games? A: Games on all platforms are eligible for the fee but will only incur costs if both the install and revenue thresholds are crossed. Installs - which involves initialization of the runtime on a client device - are counted on all platforms the same way (WebGL and streaming included).

And, what I personally consider to be the most suspect claim in this entire debacle, they claim that "lifetime installs" includes installs prior to this change going into effect.

Will this fee apply to games using Unity Runtime that are already on the market on January 1, 2024? Yes, the fee applies to eligible games currently in market that continue to distribute the runtime. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

Again, again, doubled down in the FAQ.

Q: Are these fees going to apply to games which have been out for years already? If you met the threshold 2 years ago, you'll start owing for any installs monthly from January, no? (in theory). It says they'll use previous installs to determine threshold eligibility & then you'll start owing them for the new ones. A: Yes, assuming the game is eligible and distributing the Unity Runtime then runtime fees will apply. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

That would involve billing companies for using their software before telling them of the existence of a bill. Holding their actions to a contract that they performed before the contract existed!

Okay. I think that's everything. So far.

There is one thing that I want to mention before ending this post, unfortunately it's a little conspiratorial, but it's so hard to believe that anyone genuinely thought this was a good idea that it's stuck in my brain as a significant possibility.

A few days ago it was reported that Unity's CEO sold 2,000 shares of his own company.

On September 6, 2023, John Riccitiello, President and CEO of Unity Software Inc (NYSE:U), sold 2,000 shares of the company. This move is part of a larger trend for the insider, who over the past year has sold a total of 50,610 shares and purchased none.

I would not be surprised if this decision gets reversed tomorrow, that it was literally only made for the CEO to short his own goddamn company, because I would sooner believe that this whole thing is some idiotic attempt at committing fraud than a real monetization strategy, even knowing how unfathomably greedy these people can be.

So, with all that said, what do we do now?

Well, in all likelihood you won't need to do anything. As I said, some of the biggest names in the industry would be directly affected by this change, and you can bet your bottom dollar that they're not just going to take it lying down. After all, the only way to stop a greedy CEO is with a greedier CEO, right?

(I fucking hate it here.)

And that's not mentioning the indie devs who are already talking about abandoning the engine.

[Links display tweets from the lead developer of Among Us saying it'd be less costly to hire people to move the game off of Unity and Cult of the Lamb's official twitter saying the game won't be available after January 1st in response to the news.]

That being said, I'm still shaken by all this. The fact that Unity is openly willing to go back and punish its developers for ever having used the engine in the past makes me question my relationship to it.

The news has given rise to the visibility of free, open source alternative Godot, which, if you're interested, is likely a better option than Unity at this point. Mostly, though, I just hope we can get out of this whole, fucking, environment where creatives are treated as an endless mill of free profits that's going to be continuously ratcheted up and up to drive unsustainable infinite corporate growth that our entire economy is based on for some fuckin reason.

Anyways, that's that, I find having these big posts that break everything down to be helpful.

#Unity#Unity3D#Video Games#Game Development#Game Developers#fuckshit#I don't know what to tag news like this

6K notes


whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where, in fact, ai can help us do things a lot better than we could without it. here's a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping--it's sorting, using pixels at a micro-level to detect abnormalities that we as humans can not, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no regulation in the united states about how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there's no laws to stop them? wtf does it matter what it means to you to some idiot software engineer in the back room of an office making 3x your salary?). oh, that little fic you posted to wattpad that got a lot of attention? well now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that will use facial recognition to let their residents inside? oh, they didn't train their model with anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men work that role than women (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

1K notes


I have a lot of feelings about the use of AI in Everything These Days, but they're not particularly strong feelings, like I've got other shit going on. That said, when I use a desktop computer, every single file I use in Google Drive now has a constant irritating popup on the right-hand side asking me how Gemini AI Can Help Me. You can't, Gemini. You are in the way. I'm not even mad there's an AI there, I'm mad there's a constantly recurring popup taking up space and attention on my screen.

Here's the problem, however: even Gemini doesn't know how to disable Gemini. I did my own research and then finally, with a deep appreciation of the irony of this, I asked it how to turn it off. It said in any google drive file go to Help > Gemini and there will be an option to turn it off. Guess what isn't a menu item under Help?

I've had a look around at web tutorials for removing or blocking it, but they are either out of date or for the Gemini personal assistant, which I already don't have, and thus cannot turn off. Gemini for Drive is an integrated "service" within Google Drive, which I guess means I'm going to have to look into moving off Google Drive.

So, does anyone have references for a service as seamless and accessible as Google Drive? I need document, spreadsheet, slideshow, and storage, but I don't have any fancy widgets installed or anything. I do technically own Microsoft Office so I suppose I could use that but I've never found its cloud function to actually, uh, function. I could use OneNote for documents if things get desperate but OneNote is very limited overall. I want to be able to open and edit files, including on an Android phone, and I'd prefer if I didn't have to receive a security code in my text messages every time I log in. I also will likely need to be able to give non-users access, but I suppose I could kludge that in Drive as long as I only have to deal with it short-term.

Any thoughts, friends? If I find a good functional replacement I'm happy to post about it once I've tested it.

Also, saying this because I love you guys but if I don't spell it out I will get a bunch of comments about it: If you yourself have managed to banish Gemini from your Drive account including from popping up in individual files, I'm interested! Please share. If you have not actually implemented a solution yourself, rest assured, anything you find I have already tried and it does not work.

1K notes


I'd been seeing videos on Tiktok and Youtube about how younger Gen Z & Gen Alpha were demonstrating low computer literacy & below benchmark reading & writing skills, but-- like with many things on the internet-- I assumed most of what I read and watched was exaggerated. Hell, even if things were as bad as people were saying, it would be at least ~5 years before I started seeing the problem in higher education.

I was very wrong.

Of the many applications I've read this application season, only 6 percent demonstrated what I would consider a college-level mastery of language & grammar. The students writing these applications have been enrolled in university for at least two years, and have taken all fundamental courses. This means they've had classes dedicated to reading, writing, and literature analysis, and yet!

There are sentences I have to read over and over again to discern intent. Circular arguments that offer no actual substance. Errors in spelling and capitalization that spellcheck should've flagged.

At a glance, it's easy to trace this issue back to two things:

The state of education in the United States is abhorrent. Instructors are not paid enough, so schools-- particularly public schools-- take whatever instructors they can find.

COVID. The two year long gap in education, especially in high school, left many students struggling to keep up.

But I think there's a third culprit-- something I mentioned earlier in this post. A lack of computer literacy.

This subject has been covered extensively by multiple news outlets like the Washington Post and Raconteur, but as someone seeing it firsthand I wanted to add my voice to the rising chorus of concerned educators begging you to pay attention.

As the interface we use to engage with technology becomes more user friendly, the knowledge we need to access our files, photos, programs, & data becomes less and less important. Why do I need to know about directories if I can search my files in Windows (are you searching in Windows? Are you sure? Do you know what that bar you're typing into is part of? Where it's looking)? Maybe you don't have any files on your computer at all-- maybe they're on the cloud through OneDrive, or backed up through Google. Some of you reading this may know exactly where and how your files are stored. Many of you probably don't, and that's okay. For most people, being able to access a file in as short a time as possible is what they prioritize.

The problem is, when you as a consumer are only using a tool, you are intrinsically limited by the functions that tool is advertised to have. Worse yet, when the tool fails or is insufficient for what you need, you have no way of working outside of that tool. You'll need to consult an expert, which is usually expensive.

When you as a consumer understand a tool, your options are limitless. You can break it apart and put it back together in just the way you like, or you can identify what parts of the tool you need and search for more accessible or affordable options that focus more on your specific use-case.

The problem-- and to be clear, I do not blame Gen Z & Gen Alpha for what I'm about to outline-- is that this user-friendly interface has fostered a culture that no longer troubleshoots. If something on the computer doesn't work well, it's the computer's fault. Its UI should be more intuitive, and if it's not operating as expected, it's broken. What I'm seeing more and more of is that if something's broken, students stop there. They believe there's nothing they can do. They don't actively seek out solutions, they don't take to Google, they don't hop on Reddit to ask around; they just... stop. The gap in knowledge between where they stand and where they need to be to begin troubleshooting seems so wide and inaccessible (because the fundamental structure of files/directories is unknown to many) that they don't begin.

This isn't demonstrative of a lack of critical thinking, but without the drive to troubleshoot, the number of opportunities to develop those critical thinking skills is greatly diminished. How do you communicate an issue to someone online? How do you look for specific information? How do you determine whether that information is specifically helpful to you? If it isn't, what part of it is? This process fosters so many skills that I believe are at least partially linked to the ability to read and write effectively, and for so many of my students it feels like a complete non-starter.

We need basic computer classes back in schools. We need typing classes, we need digital media classes, we need classes that talk about computers outside of learning to code. Students need every opportunity to develop critical thinking skills and the ability to self-reflect & self-correct, and in an age of misinformation & portable technology, it's more important now than ever.

536 notes

Text

in wake of yet another wave of people being turned off by windows, here's a guide on how to dual boot windows and 🐧 linux 🐧 (useful for when you're not sure if you wanna make the switch and just wanna experiment with the OS for a bit!)

if you look up followup guides online you're gonna see that people are telling you to use ubuntu but i am gonna show you how to do this using kubuntu instead because fuck GNOME. all my homies hate GNOME.

i'm just kidding, use whatever distro you like. my favorite's kubuntu (for a beginner home environment). read up on the others if you're curious. and don't let some rando on reddit tell you that you need pop! OS for gaming. gaming on linux is possible without it.

why kubuntu?

- it's very user friendly

- it comes with applications people might already be familiar with (VLC player and firefox for example)

- libreoffice already preinstalled

- no GNOME (sorry GNOME enthusiasts, let me old man yell at the clouds) (also i'm playing this up for the laughs. wholesome kde/gnome meme at the bottom of this post.)

for people who are interested in this beyond my tl;dr: read this

(if you're a linux user, don't expect any tech wizardry here. i know there's a billion other and arguably better ways to do x y and/or z. what i'm trying to do here is to keep these instructions previous windows user friendly. point and click. no CLI bro, it'll scare the less tech savvy hoes. no vim supremacy talk (although hell yeah vim supremacy). if they like the OS they'll figure out bash all by themselves in no time.)

first of all, there'll be a GUI. you don't need to type lines of code to get this all running. we're not going for the ✨hackerman aesthetics✨ today. grab a mouse and a keyboard and you're good to go.

what you need is a computer/laptop/etc with enough disk space to install both windows and linux on it. i'd recommend reserving at least 100gb for the both of them. along the way you'll learn how to re-allocate disk space, giving some here and taking some there: we'll do a bit of disk partitioning to fit them both on a single disk.

and that's enough babbling for now, let's get to the actual tutorial:

🚨IMPORTANT. DO NOT ATTEMPT THIS ON A 32BIT SYSTEM. ONLY DO THIS IF YOU'RE WORKING WITH A 64BIT SYSTEM. 🚨 (win10 and win11: settings -> system -> about -> device specifications -> system type ) it should say 64bit operating system, x64-based processor.
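if clicking through the settings menu isn't your thing, here's a rough python sketch of the same check. it assumes python is already installed on the machine, and the list of "64bit-looking" names is an assumption for illustration, not an official or complete list. the settings screen above stays the source of truth.

```python
import platform
import struct

# names that usually mean a 64-bit x86 processor (assumed list, not exhaustive)
X64_NAMES = {"amd64", "x86_64"}


def looks_like_x64(machine: str) -> bool:
    """Return True if a platform.machine()-style string names a 64-bit x86 CPU."""
    return machine.strip().lower() in X64_NAMES


def interpreter_bits() -> int:
    """Pointer size of the running python build, in bits."""
    return struct.calcsize("P") * 8


if __name__ == "__main__":
    machine = platform.machine()
    print(f"machine reported by the OS: {machine!r}")
    print(f"looks like x64: {looks_like_x64(machine)}")
    print(f"this python build is {interpreter_bits()}-bit")
```

keep in mind that the last line describes the python build, not the hardware, so treat it as a hint only.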

step 1: install windows on your computer FIRST. my favorite way of doing this is by creating an installation media with rufus. you can either grab and prepare two usb sticks for each OS, or you can prepare them one after the other. (pro tip: get two usb sticks, that way you can label them and store them away in case you need to reinstall windows/linux or want to install it somewhere else)

in order to do this, you need to download three things:

rufus

win10 (listen. i know switching to win11 is difficult. not much of a fan of it either. but support's gonna end for good. you will run into hiccups. it'll be frustrating for everyone involved. hate to say it, but in this case i'd opt for installing its dreadful successor over there ->) or win11

kubuntu (the download at the top is always the latest, most up-to-date one)

when grabbing your windows installation of choice pick this option here, not the media creation tool option at the top of the page:

side note: there's also very legit key sellers out there who can hook you up with cheap keys. you're allowed to do that if you use those keys privately. don't do this in an enterprise environment though. and don't waste money on it if your ultimate goal is to switch to linux entirely at one point.

from here it's very easy sailing. plug your usb drive into your computer and fire up rufus (just double click it).

🚨two very important things though!!!!!!:🚨

triple check your usb device. whatever one you selected will get wiped entirely in order to make space for your installation media. if you want to be on the safe side only plug in the ONE usb stick you want to use. and back up any music, pictures or whatever else you had on there before or it'll be gone forever.

you can only install ONE OS on ONE usb drive. so you need to do this twice, once with your kubuntu iso and once with your windows iso, on a different drive each.

done. now you can dispense windows and linux left and right, whenever and wherever you feel like it. you could, for example, start with your designated dual boot device. installing windows is now as simple as plugging the usb device into your computer and booting it up. from there, click your way through the installation process and come back to this tutorial when you're ready.

step 2: preparing the disks for a dual boot setup

on your fresh install, find your disk partitions. in your search bar enter either "diskmgmt.msc" and hit enter or just type "partitions". the former opens disk management right away, the latter serves you up with this "create and format hard disk partitions" search result and that's what you're gonna be clicking.

you'll end up on a screen that looks more or less like in the screenshot below. depending on how many disks you've installed this might look different, but the basic gist is the same. we're going to snip a little bit off Disk 0 and make space for kubuntu on it. my screenshot isn't the best example because i'm using the whole disk and in order to practice what i preach i'd have to go against my own advice. that piece of advice is: if this screen intimidates you and you're not sure what you're doing here, hands off your (C:) drive, EFI system, and recovery partition. however, if you're feeling particularly fearless, go check out the amount of "free space" to the right. is there more than 30gb left available? if so, you're free to right click your (C:) drive and click "shrink volume"

this screen will pop up:

the minimum disk space required for kubuntu is 25gb. the recommended one is 50gb. for an installation like this, about 30gb are enough. in order to do that, simply change the value at

Enter the amount of space to shrink in MB: to 30000

and hit Shrink.
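the numbers in this step can be double checked with a small python sketch. it assumes the same rounding as above (1gb treated as 1000 MB), and the function names are made up for illustration. the free space it reports is only a rough guide, since windows may offer less than that in the shrink dialog.

```python
import os
import shutil

MB_PER_GB = 1000  # the tutorial rounds 30gb to 30000 MB, so this sketch does too


def shrink_amount_mb(target_gb: int) -> int:
    """Value to type into 'Enter the amount of space to shrink in MB'."""
    return target_gb * MB_PER_GB


def has_room(free_bytes: int, target_gb: int) -> bool:
    """True if strictly more than target_gb of free space is left."""
    return free_bytes > target_gb * 1000**3


if __name__ == "__main__":
    root = os.path.abspath(os.sep)  # root of the current drive, e.g. C:\ on windows
    free = shutil.disk_usage(root).free
    print(f"free on {root}: {free / 1000**3:.1f} gb")
    print(f"room for a 30gb shrink: {has_room(free, 30)}")
    print(f"type this into the shrink dialog: {shrink_amount_mb(30)}")
```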

once that's done your partitions will have changed and unallocated space at about the size of 30gb should be visible under Disk 0 at the bottom like in the bottom left of this screenshot (courtesy of microsoft.com):

this is gonna be kubuntu's new home on your disk.

step 3: boot order, BIOS/UEFI changes

all you need to do now is plug the kubuntu-usb drive you prepared earlier with rufus into your computer again and reboot that bad boy.

the next step has no screenshots. we're heading into your UEFI/BIOS (by hitting a specific key (like ESC, F10, Enter) while your computer boots up) and that'll look different for everyone reading this. if this section has you completely lost, google how to do these steps for your machine.

a good search term would be: "[YOUR DEVICE (i.e Lenovo, your mainboard's name, etc.)] change boot order"

what you need to do is to tell your computer to boot your USB before it tries to boot up windows. otherwise you won't be able to install kubuntu.

this can be done by entering your BIOS/UEFI and navigating to a point called something along the lines of "boot". from "boot order" to "booting devices" to "startup configuration", it could be called anything.

what'll be a common point though is that it'll list all your bootable devices. the topmost one is usually the one that boots up first, so if your usb is anywhere below that, make sure to drag and drop or otherwise move it to the top.

when you're done navigate to Save & Exit. your computer will then boot up kubuntu's install wizard. you'll be greeted with this:

shocker, i know, but click "Install Kubuntu" on the right.

step 4: kubuntu installation

this is a guided installation. just like when you're installing windows you'll be prompted when you need to make changes. if i remember correctly it's going to ask you for your preferred keyboard layout, a network connection, additional software you might want to install, and all of that is up to you.

but once you reach the point where it asks you where you want to install kubuntu we'll have to make a couple of important choices.

🚨 another important note 🚨

do NOT pick any of the top three options. they will overwrite your already existing windows installation.

click manual instead. we're going to point it to our unallocated disk space. hit continue. you will be shown another disk partition screen.

what you're looking for are your 30gb of free space. just like with the USB drive when we were working with rufus, make sure you're picking the right one. triple check at the very least. the chosen disk will get wiped.

click it until the screen "create a new partition" pops up.

change the following settings to:

New partition size in megabytes: 512

Use as: EFI System Partition

hit OK.

click your free space again. same procedure.

change the following settings to:

New partition size in megabytes: 8000 (*this might be different in your case, read on.)

Use As: Swap Area

hit OK

click your free space a third time. we need one more partition.

change the following settings to:

don't change anything about the partition size this time. we're letting it use up the rest of the resources.

Use as: Ext4 journaling file system

Mount Point: /

you're done here as well.

*about the 8000 megabytes in the second step: this is about your RAM size. if you have 4gb instead type 4000, and so on.
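to see how the three partitions add up, here's a minimal python sketch of the arithmetic. it assumes the 30000 MB of unallocated space from step 2 and the "swap equals RAM, 1gb = 1000 MB" rule from the note above. the function name is hypothetical and the installer does not run anything like this.

```python
EFI_MB = 512  # size used for the EFI System Partition in this tutorial


def partition_plan(free_mb: int, ram_gb: int) -> dict[str, int]:
    """Split the unallocated space into EFI, swap and root (/) sizes in MB."""
    swap_mb = ram_gb * 1000  # swap sized to match RAM, as in the note above
    root_mb = free_mb - EFI_MB - swap_mb  # root gets whatever is left
    if root_mb <= 0:
        raise ValueError("not enough free space for this layout")
    return {"efi": EFI_MB, "swap": swap_mb, "root": root_mb}


if __name__ == "__main__":
    for ram in (4, 8, 16):
        print(ram, "gb RAM ->", partition_plan(30000, ram))
```

with 8gb of RAM this leaves roughly 21.5gb for the root partition, which is under the 25gb minimum mentioned in step 2, so shrinking windows by a bit more than 30000 MB may be worth considering.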

once you're sure your configuration is good and ready to go, hit "Install Now". up until here you can go back and make changes to your settings. once you've clicked the button, there's no going back.

finally, select your timezone and create a user account. then hit continue. the installation should finish up... and you'll be good to go.

you'll be told to remove the USB drive from your computer and reboot your machine.

now when your computer boots up, you should end up on a black screen with a little bit of text in the top left corner. ubuntu and windows boot manager should be mentioned there. there's no mouse on this screen, so pick an entry with the arrow keys and hit enter. naturally, when you pick ubuntu you will boot into your kubuntu. likewise if you pick windows boot manager your windows login screen will come up.

and that's that folks. go ham on messing around with your linux distro. customize it to your liking. make yourself familiar with the shell (on kubuntu, when you're on your desktop, hit CTRL+ALT+T).

for starters, you could feed it the first commands i always punch into fresh Linux installs:

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install vim

(you'll thank me for the vim one later)

turn your back on windows. taste freedom. nothing sexier than open source, baby.

sources (mainly for the pictures): 1, 2

further reading for the curious: 1, 2

linux basics (includes CLI commands)

kubuntu documentation (this is your new best friend. it'll tell you everything about kubuntu that you need to know.)

and finally the promised kde/gnome meme:

#windows#linuxposting#had a long day at work and i had to type this twice and i'm struggling to keep my eyes open#not guaranteeing that i didn't skip a step or something in there#so if someone linux savvy spots them feel free to point them out so i can make fixes to this post accordingly#opensource posting

122 notes

Text

Did You Know: Scientists will analyze data from the Nancy Grace Roman Space Telescope in the cloud? (For most missions, research often happens on astronomers’ personal computers.) Claire Murray, a scientist at the Space Telescope Science Institute, and her colleague Manuel Sanchez, a cloud engineer, share how this space, known as the Roman Research Nexus, builds on previous missions’ online platforms:

Claire Murray: Roman has an extremely wide field of view and a fast survey speed. Those two facts mean the data volume is going to be orders of magnitude larger than what we're used to. We will enable users to interact with this gigantic dataset in the cloud. They will be able to log in to the platform and perform the same types of analysis they would normally do on their local machines.

Manuel Sanchez: The Transiting Exoplanet Survey Satellite (TESS) mission’s science platform, known as the Timeseries Integrated Knowledge Engine (TIKE), was our first try at introducing users to this type of workflow on the cloud. With TIKE, we have been learning researchers’ usage patterns. We can track the performance and metrics, which is helping us design the appropriate environment and capabilities for Roman. Our experience with other science platforms also helps us save time from a coding perspective. The code is basically the same for our platforms, but we can customize it as needed.

Read the full interview: https://www.stsci.edu/contents/annual-reports/2024/where-data-and-people-meet

#space#astronomy#science#stsci#universe#nasa#nasaroman#roman science#roman space telescope#data#cloud engineering#big data

20 notes

Text

I'm having a genuinely hard time focusing on an assignment for one of my classes. The professor gave us three short magazine articles from one of the IEEE magazines and wants us to summarize them. But all of them are colossal tech-bro circle-jerk corporate talk papers that I genuinely don't care what they say.

I feel like the actual best summary would be to point out that the authors are all leaders in business consultancy firms and therefore have a financial interest in convincing businesses that they need to change their IT infrastructure, show the number of times IoT, cloud-computing, AI, and efficiency appear in each article, point out that they never talk about how any of these changes will actually be any use to either the business or their user base beyond vague ideas like efficiency, and then conclude by posting a link to Weird Al's "Mission Statement".

25 notes

Text

AI’s energy use already represents as much as 20 percent of global data-center power demand, research published Thursday in the journal Joule shows. That demand from AI, the research states, could double by the end of this year, comprising nearly half of all total data-center electricity consumption worldwide, excluding the electricity used for bitcoin mining.

The new research is published in a commentary by Alex de Vries-Gao, the founder of Digiconomist, a research company that evaluates the environmental impact of technology. De Vries-Gao started Digiconomist in the late 2010s to explore the impact that bitcoin mining, another extremely energy-intensive activity, would have on the environment. Looking at AI, he says, has grown more urgent over the past few years because of the widespread adoption of ChatGPT and other large language models that use massive amounts of energy. According to his research, worldwide AI energy demand is now set to surpass demand from bitcoin mining by the end of this year.

“The money that bitcoin miners had to get to where they are today is peanuts compared to the money that Google and Microsoft and all these big tech companies are pouring in [to AI],” he says. “This is just escalating a lot faster, and it’s a much bigger threat.”

The development of AI is already having an impact on Big Tech’s climate goals. Tech giants have acknowledged in recent sustainability reports that AI is largely responsible for driving up their energy use. Google’s greenhouse gas emissions, for instance, have increased 48 percent since 2019, complicating the company’s goals of reaching net zero by 2030.

“As we further integrate AI into our products, reducing emissions may be challenging due to increasing energy demands from the greater intensity of AI compute,” Google’s 2024 sustainability report reads.

Last month, the International Energy Agency released a report finding that data centers made up 1.5 percent of global energy use in 2024 (around 415 terawatt-hours, a little less than the yearly energy demand of Saudi Arabia). This number is only set to get bigger: Data centers’ electricity consumption has grown four times faster than overall consumption in recent years, while the amount of investment in data centers has nearly doubled since 2022, driven largely by massive expansions to account for new AI capacity. Overall, the IEA predicted that data center electricity consumption will grow to more than 900 TWh by the end of the decade.
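To put the IEA projection in perspective, the growth rate it implies can be worked out with a short Python sketch. This is an illustration only: it assumes "the end of the decade" means 2030, six years after the 2024 baseline, and it treats 900 TWh as an exact figure even though the report says "more than".

```python
def implied_annual_growth(start_twh: float, end_twh: float, years: int) -> float:
    """Compound annual growth rate that takes start_twh to end_twh in `years` years."""
    return (end_twh / start_twh) ** (1 / years) - 1


if __name__ == "__main__":
    # 415 TWh in 2024 and 900 TWh by 2030 (the year is an assumption, see above)
    rate = implied_annual_growth(415, 900, 6)
    print(f"implied growth: {rate:.1%} per year")
```

Under those assumptions the projection works out to a little under 14 percent growth per year.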

But there are still a lot of unknowns about the share that AI, specifically, takes up in that current configuration of electricity use by data centers. Data centers power a variety of services, like hosting cloud services and providing online infrastructure, that aren’t necessarily linked to the energy-intensive activities of AI. Tech companies, meanwhile, largely keep the energy expenditure of their software and hardware private.

Some attempts to quantify AI’s energy consumption have started from the user side: calculating the amount of electricity that goes into a single ChatGPT search, for instance. De Vries-Gao decided to look, instead, at the supply chain, starting from the production side to get a more global picture.

The high computing demands of AI, De Vries-Gao says, create a natural “bottleneck” in the current global supply chain around AI hardware, particularly around the Taiwan Semiconductor Manufacturing Company (TSMC), the undisputed leader in producing key hardware that can handle these needs. Companies like Nvidia outsource the production of their chips to TSMC, which also produces chips for other companies like Google and AMD. (Both TSMC and Nvidia declined to comment for this article.)

De Vries-Gao used analyst estimates, earnings call transcripts, and device details to put together an approximate estimate of TSMC’s production capacity. He then looked at publicly available electricity consumption profiles of AI hardware and estimates on utilization rates of that hardware (which can vary based on what it’s being used for) to arrive at a rough figure of just how much of global data-center demand is taken up by AI. De Vries-Gao calculates that without increased production, AI will consume up to 82 terawatt-hours of electricity this year, roughly the same as the annual electricity consumption of a country like Switzerland. If production capacity for AI hardware doubles this year, as analysts have projected it will, demand could increase at a similar rate, representing almost half of all data center demand by the end of the year.
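As a rough cross-check of the "as much as 20 percent" figure, the 82 TWh estimate can be set against the IEA's 415 TWh total for data centers. The Python sketch below does only that division. Combining numbers from two different sources this way is an assumption made for illustration, not the method used in the paper.

```python
def share(part_twh: float, total_twh: float) -> float:
    """Fraction of total_twh accounted for by part_twh."""
    if total_twh <= 0:
        raise ValueError("total must be positive")
    return part_twh / total_twh


if __name__ == "__main__":
    # 82 TWh: upper estimate for AI this year (from the commentary)
    # 415 TWh: IEA figure for all data centers in 2024
    print(f"AI share of data-center electricity: {share(82, 415):.1%}")
```

The result lands just under 20 percent, in line with the headline number. The "almost half" scenario depends on the paper's own assumptions about hardware production and is not reproduced here.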

Despite the amount of publicly available information used in the paper, a lot of what De Vries-Gao is doing is peering into a black box: We simply don’t know certain factors that affect AI’s energy consumption, like the utilization rates of every piece of AI hardware in the world or what machine learning activities they’re being used for, let alone how the industry might develop in the future.

Sasha Luccioni, an AI and energy researcher and the climate lead at open-source machine-learning platform Hugging Face, cautioned about leaning too hard on some of the conclusions of the new paper, given the amount of unknowns at play. Luccioni, who was not involved in this research, says that when it comes to truly calculating AI’s energy use, disclosure from tech giants is crucial.

“It’s because we don’t have the information that [researchers] have to do this,” she says. “That’s why the error bar is so huge.”

And tech companies do keep this information. In 2022, Google published a paper on machine learning and electricity use, noting that machine learning was “10%–15% of Google’s total energy use” from 2019 to 2021, and predicted that with best practices, “by 2030 total carbon emissions from training will reduce.” However, since that paper—which was released before Google Gemini’s debut in 2023—Google has not provided any more detailed information about how much electricity ML uses. (Google declined to comment for this story.)

“You really have to deep-dive into the semiconductor supply chain to be able to make any sensible statement about the energy demand of AI,” De Vries-Gao says. “If these big tech companies were just publishing the same information that Google was publishing three years ago, we would have a pretty good indicator” of AI’s energy use.

19 notes

Text

No-Google (fan)fic writing, Part 1: LibreOffice Writer

Storytime

The first documents and fanfictions I wrote on a computer were .doc documents written with Microsoft Word 98. At least those I remember.

From there, I sort of naturally graduated to following versions of Microsoft Word, the last one I’ve actively used to write texts of any considerable length (more than half a page) being Word 2007 (but only under duress from my employer).

That was partly due to the fact that the Microsoft Office suite has always been expensive and there were times I simply didn’t want to spend the money on it. So I started using OpenOffice Writer fairly early on, “graduating” to LibreOffice Writer once that was available.

Word versus Writer

What are the differences between Word (Microsoft) and Writer (LibreOffice)?

Cost

Firstly, Writer is free. It comes as part of the LibreOffice Suite, which has a replacement for almost every application Office has. The ones it hasn’t, you won’t need for writing fanfic, trust me.

So, +1 for being freely available.

Interface

Interface-wise – well, it might look a little old-fashioned to those used to Google Docs and Word. Back in the day, it was mostly that the buttons looked different. However, Writer did not adopt the “ribbon” Word has shipped and continues to have customisable bars. For me, that’s a huge +1 argument for using Writer over Word or Google Docs, because I can edit these bars and only keep the buttons I actually need – unlike the Word ribbons, which drove me to despair and ultimately away from Word after 2007 appeared.

Features

Other than that, it really isn’t all that different from Word. You can use headings and subheadings, track changes, compare documents, add footnotes and endnotes, and do everything else Word can do. It really is a proper, great replacement for Word – it even is mostly compatible with Word in that .doc and .docx documents can be opened with Writer, even if the layout may look a bit off.

So +1 – your old files are compatible with it.

File formats

Files written with Writer are stored as .odt (Open Document Text), but there are options for export into other formats, such as PDF, EPUB or XHTML. Exporting to AO3 is simple – copy the text you want, set the AO3 text editor to Rich Text and paste.

Easy +1.

Syncing

LibreOffice does not offer cloud storage. So if you want your files available on several devices, you need a different solution. As I write more for this series, I’ll describe the different options in more detail, but Dropbox, Git or, depending on which provider you’re using, your email provider’s cloud storage are options. OneDrive, if you mind Microsoft less than Google.

Or an old-fashioned USB in combination with an automatic backup application.*
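For anyone curious what such a backup application does under the hood, the idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a replacement for a real backup tool: the function name is made up, it only looks at .odt files by default, and it never deletes anything from the backup.

```python
import shutil
from pathlib import Path


def backup_documents(source: Path, target: Path, pattern: str = "*.odt") -> list[Path]:
    """Copy matching files from source into target, skipping copies that are up to date."""
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(source.rglob(pattern)):
        dest = target / src.relative_to(source)  # keep the folder structure
        if dest.exists() and dest.stat().st_mtime >= src.stat().st_mtime:
            continue  # backup copy is at least as new as the original
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)  # copy2 also preserves the modification time
        copied.append(dest)
    return copied


# Example call with hypothetical paths (adjust both to your own setup):
# backup_documents(Path.home() / "Documents" / "fics", Path("E:/fic-backup"))
```

The target folder should sit outside the source folder, otherwise the backup would pick up its own copies. On FAT-formatted sticks timestamps are coarser, so some files may be copied again on every run, which is harmless.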

Ease of use for Word/Google doc-users

As someone who came straight from Word (although a very old version) to Writer, I’ve always found it very easy to use. What I particularly like is that the interface is much less cluttered than the Word ribbons and I can customise the bars. In all honesty, if it weren’t for that cosmetic difference, I think many users wouldn’t be able to tell the difference between Writer and Word.

So if you’re just looking for something to replace Word or Google docs, Writer is definitely a good option.

*I’m not recommending USBs because I’m of the opinion that it’s a convenient solution. I’m doing it because I’m a cynic. Every time a company tells me I can have something for free, my first question will be “what will I be paying with instead?”

Because if I don’t pay money, I’ll pay with my data. That’s one of the main reasons I never started using Google. It’s just too good to be true, all those services for free.

So, you know, if you’re good with data being collected on you or you can’t afford to pay for a syncing service, by all means, use unpaid services. Just be aware of what comes with it. You will pay, one way or the other, with money or your data. Nothing in the world is for free, especially not those apps companies are trying to get you to use.

Read No-Google (fan)fic writing, Part 2: Zettelkasten

Read No-Google (fan)fic writing, Part 3: LaTeχ

Read No-Google (fan)fic writing, Part 4: Markdown

Read No-Google (fan)fic writing, Part 5: Obsidian

#fanfiction#fanfic writing#fic writing#degoogle#degoogle your fics#degoogle your writing#software recommendations#no-google (fan)fic writing#resources#libreoffice writer

30 notes

Text

Hudson and Rex Episodes

It has come to my attention that Hudson and Rex episodes are not easily accessible to a lot of people, despite the show being broadcast in many countries. I was looking for a place to archive the episodes myself in good quality as a backup but up until recently, the 1080p rips were huge so it was an impossible feat. I finally found some mkv ones that are not as ginormous as the others, and I'd like to share them with the fandom.

Disclaimer: I did not do these rips or the transcoding. I haven't checked the episodes one by one to see if there are any faults with them. I spot-checked a few at random and watched a few in full, and they were all good: subtitles were working and in sync, etc.

What you need to know before downloading:

The files are in mkv format and Mega, the host I've uploaded them on, does NOT have a player to play MKVs online. The links are for downloading, or alternatively transferring to your own Mega account, not for online streaming.

The video codec is HEVC, which is why the size of the episodes is not huge. That might affect some older computers which may not have this codec, though. Read about HEVC here https://en.wikipedia.org/wiki/High_Efficiency_Video_Coding and if it's missing, you can add the HEVC codec.

Same goes for your tv if you choose to play them in one (although you probably can't add the codec there). I generally recommend downloading one episode as a test. If it plays in your device, they all should play in that device.

Most of the files have forced English subtitles on them. I am unfamiliar with forced subtitles in general. You may have trouble removing them on a tv, maybe. I've tested them only using VLC on my computer and they can appear and disappear just fine when I choose so like normal subs do. Forced subtitles are not hard-coded subtitles.

Episodes S01E01 - S06E06 were all transcoded by one team as it was a pack, the rest by another, as the first pack was uploaded during this winter hiatus and there was no other upload by the first team for the rest. I only see small differences between the episodes, not worthy of a mention. I've kept the original file names, so you will know when the teams change if you're interested in that (team name is the last input on the title of the file).

The size of all the episodes in total is around 92GB. When you go to download them, each file will also display the size of it.

How to download (skip this if you've downloaded from Mega before):

Even if you have set your browser to ask you where to download the file, Mega will download the file you requested entirely before asking you where to save it. It's how their cloud service works. You don't need to download anything else to get these files, just right-click a file and click Download, and then Standard Download when the submenu opens. You do not need to download the Mega Desktop App, unless you want to download the entire folder at once as a ZIP file. I don't know how many concurrent downloads a free user gets on Mega, or limitations regarding the GBs per day on free users.

Mega suggests users download using Chrome or a Chromium based browser, however downloading the files one by one should work in any browser.

If you have a download manager, just load these folders in it and it will do the job better than your browser.

If you attempt this with a smartphone, then I highly suggest you download the Mega mobile app. I don't think the files will download to your phone otherwise.

Links:

These lead to each season's folder of episodes. Only copy the link itself; do not copy the season identifier at the start of each line. Make sure you copy the entire link, especially the S4 one, which apparently continues onto a second line.

S1: https://mega.nz/folder/1ZMTlbpY#DqS2V2KKgeajbINzx8c6Pg

S2: https://mega.nz/folder/kZE1yTTC#p29HrvXgahGXW-0rlzx77Q

S3: https://mega.nz/folder/0Bt3gBJL#hcX7tjU1GScmprTc0nkc0w

S4: https://mega.nz/folder/UQFD3SZZ#nbGJeLzH2IHLVpVFyK750A

S5: https://mega.nz/folder/ARkzUQbS#eS1Yy11x_DEPg3T2bD5ozw

S6: https://mega.nz/folder/lBUFnBwb#WszZvKLzfpRKVvuz5B78Nw

S7: https://mega.nz/folder/kJlEFCLJ#exM6rRVhPtNSULhvsjHWZg

About Season 7 rips: I will upload the first rip that is up so that we won't waste time. This is usually an HDTV rip by the release team SYNCOPY (so basically the episode as seen on tv without ads, usually with the promo, in 720p, no subtitles - subtitles, if any, will be added in the same folder as a separate link). Later, this will be replaced with 1080p links. Please check the link periodically to find more links. The goal is to have 1080p Webrips around 1GB for each episode.

Other information:

I'll try to keep the links up as long as I can but I suggest keeping your own copies. Mega does not offer that amount of space for free, so this is a paid cloud service. I'm not looking for anyone to contribute to the upkeep, but there might be a day when these links will be taken down for any number of reasons. Personally, I don't trust the cloud. Keep local copies of anything you don't want to lose.

If these are reported, I will not be reuploading them and I assume that reporting may also take down my account with them so I will probably also not be able to be a paying customer of their service either way. So, keep the sharing of the links within the fandom. I will not tag this post, but I highly encourage reblogging it to spread the info.

I suggest that anyone who wants to share this with a lot of people should make their own cloud backup. The purpose of me uploading these links is, ironically, not piracy. The purpose is to make the episodes easily accessible to fans.

I will not upload these to other cloud services. I'm not willing to risk uploading them to Google Drive, for example, but anyone else who wants to do it is welcome to.

If anyone has questions or concerns, I'll be glad to answer them. Not everyone is familiar with hosting sites, but this is easier than a torrent. I'm sure I've forgotten things which to me may seem simple.


Text

OpenAI’s ChatGPT exploded onto the scene nearly a year ago, reaching an estimated 100 million users in two months and setting off an A.I. boom. Behind the scenes, the technology relies on thousands of specialized computer chips. And in the coming years, they could consume immense amounts of electricity. A peer-reviewed analysis published Tuesday lays out some early estimates. In a middle-ground scenario, by 2027 A.I. servers could use between 85 and 134 terawatt-hours (TWh) annually. That’s similar to what Argentina, the Netherlands and Sweden each use in a year, and is about 0.5 percent of the world's current electricity use.
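As a rough sanity check, the two figures quoted above can be combined to back out the world total they imply. This is only a sketch: it assumes the "about 0.5 percent" share is applied to each end of the 85 to 134 TWh range, and the function name is made up for illustration.

```python
def implied_world_total_twh(usage_twh: float, share_percent: float) -> float:
    """World electricity use (TWh) implied by a usage figure and its share of the total."""
    return usage_twh * 100 / share_percent


# Range and share as quoted in the excerpt above.
low = implied_world_total_twh(85, 0.5)
high = implied_world_total_twh(134, 0.5)
print(f"{low:,.0f} to {high:,.0f} TWh")  # 17,000 to 26,800 TWh
```

The spread shows how loose "about 0.5 percent" is when the estimate itself covers such a wide range.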

[...]

The electricity needed to run A.I. could boost the world’s carbon emissions, depending on whether the data centers get their power from fossil fuels or renewable resources. In 2022, data centers that power all computers, including Amazon’s cloud and Google’s search engine, used about 1 to 1.3 percent of the world’s electricity. That excludes cryptocurrency mining, which used another 0.4 percent, though some of those resources are now being redeployed to run A.I.


Text

PC Storage System

The Pokémon Storage System was invented by Bill in 1995, with Lanette as co-developer of the software. It was designed to let people store more than six Pokémon in a global database, where their Pokémon are converted into raw data and kept safely until accessed through a PC or another capable link. It can store both Pokémon kept in Poké Balls and Pokémon eggs in their natural states.

The Pokémon Storage System is managed by multiple different people globally in order to troubleshoot, improve, and maintain it. Bill maintains it in Kanto and Johto.

Celio maintains it in the Sevii Islands and also runs the Pokémon Network Centre on One Island which is responsible for providing a method of facilitating global trading. He also helped develop the Global Terminal in Johto and Sinnoh for this same purpose.

Lanette maintains it in Hoenn and is primarily in charge of the user interface and enabling personal Trainer customization of the Box System with wallpapers and giving Trainers the ability to change the names of boxes and the like as well as streamlining the process and making the interface more user-friendly.

Bebe maintains it in Sinnoh and, as a computer technician, built it from scratch based on the previous designs of the system by Bill and Lanette, earning their respect. Bebe also developed a way for Trainers to access the system from anywhere without needing a PC.

Amanita maintains it in mainland Unova and developed it based on the previous designs of Bill, Lanette, and Bebe. The Unova system introduced the Battle Box, where Trainers can store a team they use specifically for battling. Trainers start out with eight boxes; once every box holds at least one Pokémon, the capacity increases by another eight boxes, and then by eight more once the same condition is met again. That brings the total capacity of Unova’s PC Storage System to seven hundred and twenty Pokémon per Trainer.

Cassius maintains it in Kalos. Despite being a capable computer technician, he has made no significant contributions to the operations or design of the PC Storage System. In fact, his sole role is keeping it maintained, a role Bill personally tasked him with.
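The box arithmetic for Unova can be checked with a quick sketch. The per-box figure is not stated in the post; it is derived here from the totals given above, and all the names are made up for illustration.

```python
INITIAL_BOXES = 8        # boxes a Trainer starts with
BOXES_PER_UNLOCK = 8     # boxes added each time every box holds a Pokémon
MAX_UNLOCKS = 2          # the expansion happens twice
TOTAL_CAPACITY = 720     # Pokémon per Trainer, as stated in the post


def boxes_available(unlocks_earned: int) -> int:
    """Number of boxes after a given number of expansions (capped at two)."""
    unlocks = min(max(unlocks_earned, 0), MAX_UNLOCKS)
    return INITIAL_BOXES + BOXES_PER_UNLOCK * unlocks


max_boxes = boxes_available(MAX_UNLOCKS)
per_box = TOTAL_CAPACITY // max_boxes  # implied by the stated total
print(max_boxes, per_box)  # 24 30
```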

Molayne maintains it in Alola and runs the Hokulani Observatory and, like Cassius, makes no significant contributions to the operations or design of the PC Storage System and simply maintains it.

Brigette (Lanette’s older sister) and Grand Oak (relation to Professor Samuel Oak unclear) manage the PC Storage System everywhere else and in every other capacity, typically on a more global scale. They maintain a more centralized PC Storage System that acts as the bridge between all the others and the network that facilitates global trading. Brigette is credited with upgrading the Pokémon Storage System with the ability to hold fifteen-hundred Pokémon per Trainer, as well as the ability to select and move multiple Pokémon at once. She is also the developer of the Bank System, which acts as an online cloud where Pokémon can be transferred if their Trainers have to move regions and need to access them from the local PC Storage System in their target region and other similar purposes. Grand Oak, however, is more interested in completing a comprehensive National Pokédex by collecting the data from Pokédex holders all over the globe into one central database.

Taglist:

@earth-shaker / @little-miss-selfships / @xelyn-craft / @sarahs-malewives / @brahms-and-lances-wife

-

@ashes-of-a-yume / @cherry-bomb-ships / @kiawren / @kingofdorkville / @bugsband

Let me know if you'd like to be added or removed from my taglist :3


Text

It starts with him

What was once a promise of technology to allow us to automate and analyze the environments in our physical spaces is now a heap of broken ideas and broken products. Technology products have been deployed en masse, our personal data collected and sold without our consent, and then abandoned as soon as companies strip mined all the profit they thought they could wring out. And why not? They already have our money.

The Philips Hue, poster child of the smart home, used to work entirely on your local network. After all, do you really need to connect to the Internet to control the lights in your own house? Well, you do now! Philips has announced it will require cloud accounts for all users, including users who had already purchased the hardware thinking they wouldn’t need an account (and the inevitable security breaches that come with it) to use their lights.

Will you really trust any promises from a company that unilaterally forces a change like this on you? Does the user actually benefit from any of this?

Matter in its current version … doesn’t really help resolve the key issue of the smart home, namely that most companies view smart homes as a way to sell more individual devices and generate recurring revenue.

It keeps happening. Stuff you bought isn’t yours because the company you bought it from can take away features and force you to do things you don’t want or need to do—ultimately because they want to make more money off of you. It’s frustrating, it’s exhausting, and it’s discouraging.

And it has stopped IoT for the rest of us in its tracks. Industrial IoT is doing great—data collection is the point for the customer. But the consumer electronics business model does not mesh with the expected lifespan of home products, and so enshittification began as soon as those first warranties ran out.

How can we reset the expectations we have of connected devices, so that they are again worthy of our trust and money? Before we can bring the promise back, we must deweaponize the technology.

Guidelines for the hardware producer

What we can do as engineers and business owners is make sure the stuff we’re building can’t be wielded as a lever against our own customers, and to show consumers how things could be. These are things we want consumers to expect and demand of manufacturers.

Control

Think local

Decouple

Open interfaces

Be a good citizen

1) Control over firmware updates.

You scream, “What about security updates!” But a company taking away a feature you use or requiring personal data for no reason is arguably a security flaw.

We were once outraged when intangible software products went from something that remained unchanging on your computer, to a cloud service, with all the ephemerality that term promises. Now they’re coming for our tangible possessions.

No one should be able to do this with hardware that you own. Breaking functionality is entirely what security updates are supposed to prevent! A better checklist for firmware updates:

Allow users to control when and what updates they want to apply.

Be thorough and clear as to what the update does and provide the ability to downgrade if needed.

Separate security updates from feature additions or changes.

Never force an update unless you are sure you want to accept (financial) responsibility for whatever you inadvertently break.

Consider that you are sending software updates to other people’s hardware. Ask them for permission (which includes respecting “no”) before touching their stuff!
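To make the checklist above concrete, here is a minimal sketch of an update flow in which the owner is asked first, "no" is respected, security and feature updates are labelled separately, and the previous version is kept for downgrading. Every class and field name here is hypothetical; this is an illustration of the policy, not any vendor's actual update mechanism.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class UpdateKind(Enum):
    SECURITY = "security"  # shipped separately from feature changes
    FEATURE = "feature"


@dataclass(frozen=True)
class Update:
    version: str
    kind: UpdateKind
    changelog: str  # plain-language description shown to the owner


@dataclass
class Device:
    installed: str
    previous: Optional[str] = None  # kept so the owner can downgrade

    def apply(self, update: Update, owner_consents: bool) -> bool:
        """Install only with explicit consent; a 'no' leaves the device untouched."""
        if not owner_consents:
            return False
        self.previous = self.installed
        self.installed = update.version
        return True

    def downgrade(self) -> bool:
        """Roll back to the version that was installed before the last update."""
        if self.previous is None:
            return False
        self.installed, self.previous = self.previous, None
        return True


lamp = Device(installed="1.0")
new_scenes = Update("1.1", UpdateKind.FEATURE, "Adds new lighting scenes.")
declined = lamp.apply(new_scenes, owner_consents=False)   # owner said no
accepted = lamp.apply(new_scenes, owner_consents=True)    # owner said yes
rolled_back = lamp.downgrade()                            # owner changed their mind
```

Note that `apply` has no "force" path at all: in this sketch there is simply no way to install anything without the owner's say-so.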

2) Do less on the Internet.

A large part of the security issues with IoT products stems from the Internet connectivity itself. Any server in the cloud has an attack surface, and that means your physical devices now have one too.

The solution here is “do less”. All functionality should be local-only unless it has a really good reason to use the Internet. Remotely controlling your lights while in your own house does not require the cloud and certainly does not require an account with your personal information attached to it. Limit the use of the cloud to only the functions that cannot work without it.

As a bonus, less networked functionality means fewer maintenance costs for you.
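One way to picture "local-only unless there is a really good reason" is a routing rule that defaults every function to the local network and only touches the cloud for functions that cannot work without it, and then only if the owner has opted in. This is a sketch under those assumptions; the function names and the opt-in flag are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Function:
    name: str
    needs_internet: bool  # True only if it cannot work without the cloud


def route(function: Function, cloud_opt_in: bool) -> str:
    """Return where a request is handled: 'local', 'cloud', or 'unavailable'."""
    if not function.needs_internet:
        return "local"  # never leaves the house, no account needed
    return "cloud" if cloud_opt_in else "unavailable"


# Hypothetical functions of a smart light.
toggle_light = Function("toggle_light", needs_internet=False)
away_alerts = Function("away_alerts", needs_internet=True)

print(route(toggle_light, cloud_opt_in=False))  # local
print(route(away_alerts, cloud_opt_in=False))   # unavailable
```

Turning the lights on keeps working whether or not the owner ever creates an account; only the genuinely remote feature depends on it.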

3) Decouple products and services.

It’s fine to need a cloud service. But making a product that requires a specific cloud service is a guarantee that it can be enshittified at any point later on, with no alternative for the owner.

Design products to be able to interact with other servers. You have sold someone hardware and now they own it, not you. They have a right to keep using it even if you shut down or break your servers. Allow them the ability to point their devices to another service. If you want them to use your service, make it worthwhile enough for them to choose you.

Finally, if your product has a heavy reliance on the cloud to work, consider enabling your users to self-host their own cloud tooling if they so desire. A lot of people are perfectly capable of doing this on their own and can help others do the same.
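In practice, decoupling can be as small as making the service address a setting the owner controls rather than a constant baked into the firmware. A minimal sketch, assuming a hypothetical vendor URL and a hypothetical self-hosted server; none of these names come from a real product.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse

# Hypothetical vendor default; used only if the owner has not chosen otherwise.
DEFAULT_SERVICE_URL = "https://cloud.example.com/api"


@dataclass
class ServiceConfig:
    user_service_url: Optional[str] = None  # owner-supplied or self-hosted server

    def endpoint(self) -> str:
        """Return the service the device talks to, preferring the owner's choice."""
        url = self.user_service_url or DEFAULT_SERVICE_URL
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"not a usable service URL: {url!r}")
        return url


vendor = ServiceConfig().endpoint()
self_hosted = ServiceConfig("http://homeserver.local:8080").endpoint()
```

If the vendor shuts down or breaks its servers, the owner changes one setting and the hardware keeps working.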

4) Use open and standard protocols and interfaces.

Most networked devices have no reason to use proprietary protocols, interfaces, and data formats. There are open standards with communities and software available for almost anything you could want to do. Re-inventing the wheel just wastes resources and makes it harder for users to keep using their stuff after you’re long gone. We did this with Twine, creating an encrypted protocol that minimized chatter, because we needed to squeeze battery life out of WiFi back when there weren’t good options.

If you do have a need for a proprietary protocol (and there are valid reasons to do so):

Document it.

If possible, have a fallback option that uses an open standard.