#database developers in UK

Understanding the Importance of Database Validation

Database validation plays a crucial role in maintaining the accuracy and reliability of data. It involves verifying the integrity of data and ensuring that it meets certain predefined criteria. By validating the database, organizations can prevent errors, inconsistencies, and inaccuracies that could lead to serious consequences.

One key reason database validation matters is that it helps maintain data quality, meaning the accuracy, completeness, consistency, and reliability of data. Without proper validation, data quality can be compromised, leading to incorrect insights, poor decision-making, and even financial losses.

Another important aspect of database validation is data security. Validation helps identify and mitigate risks such as data corruption and malformed input, and it complements the access controls that guard against unauthorized access and data breaches. With proper validation measures in place, organizations are better placed to protect the confidentiality, integrity, and availability of their data.

In addition, database validation is essential for regulatory compliance. Many industries have strict regulations and standards regarding data management. By validating the database, organizations can ensure that they comply with these regulations and avoid legal penalties or reputational damage.

Overall, understanding the importance of database validation is crucial for organizations that rely on data for their operations. It helps in maintaining data quality, ensuring data security, and complying with regulatory requirements.

Implementing Data Integrity Checks

Implementing data integrity checks is an important step in database validation. Data integrity refers to the accuracy, consistency, and reliability of data. By implementing data integrity checks, organizations can identify and correct any inconsistencies or errors in the database.

There are different types of data integrity checks that can be implemented. One common approach is to use referential integrity, which ensures that relationships between tables are maintained. This involves setting up constraints and rules that prevent the creation of invalid relationships or the deletion of data that is referenced by other tables.
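As a minimal sketch of referential integrity, the example below uses Python's built-in sqlite3 module with an in-memory database. The table and column names (companies, contacts, company_id) are hypothetical and chosen only for illustration; other database engines express the same idea with their own syntax.

```python
import sqlite3


def demo_referential_integrity() -> dict:
    """Show a foreign-key constraint rejecting an invalid insert and delete."""
    conn = sqlite3.connect(":memory:")
    # SQLite enforces foreign keys only when this pragma is enabled per connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute("CREATE TABLE companies (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE contacts ("
        " id INTEGER PRIMARY KEY,"
        " company_id INTEGER NOT NULL REFERENCES companies(id),"
        " full_name TEXT NOT NULL)"
    )
    conn.execute("INSERT INTO companies (id, name) VALUES (1, 'Example Ltd')")
    conn.execute("INSERT INTO contacts (company_id, full_name) VALUES (1, 'A. Person')")

    results = {}
    try:
        # No company with id 99 exists, so this row would be an orphan.
        conn.execute("INSERT INTO contacts (company_id, full_name) VALUES (99, 'Orphan')")
        results["orphan_insert_rejected"] = False
    except sqlite3.IntegrityError:
        results["orphan_insert_rejected"] = True
    try:
        # Company 1 is still referenced by a contact, so it cannot be deleted.
        conn.execute("DELETE FROM companies WHERE id = 1")
        results["referenced_delete_rejected"] = False
    except sqlite3.IntegrityError:
        results["referenced_delete_rejected"] = True
    conn.close()
    return results


if __name__ == "__main__":
    print(demo_referential_integrity())
```

Both operations should be refused by the database itself, which is the point of declaring the constraint in the schema rather than relying on application code alone.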

Another approach is to use data validation rules, which define the acceptable values and formats for specific fields. These rules can be applied during data entry or through batch processes to ensure that the data meets the required criteria. For example, a validation rule can be used to check that a phone number field contains only numeric characters and has a specific length.
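The phone number rule described above could be sketched as follows. The required length of 11 digits is an assumption made for this example, not a universal standard, and the function name is hypothetical; a real rule would follow the organization's own data standards.

```python
import re

# Assumed rule for illustration: exactly 11 ASCII digits, no spaces or symbols.
PHONE_PATTERN = re.compile(r"[0-9]{11}")


def is_valid_phone(value: str) -> bool:
    """Return True when the whole value is digits only and has the required length."""
    return PHONE_PATTERN.fullmatch(value) is not None


if __name__ == "__main__":
    for candidate in ["02079460000", "020 7946 0000", "0207946000"]:
        print(candidate, is_valid_phone(candidate))
```

The same function can be called at data entry or inside a batch job, so one rule serves both paths.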

Data integrity checks can also include data consistency checks, which verify that the data is consistent across different tables or systems. This can involve comparing data values, performing calculations, or checking for duplicates or missing data.
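A simple consistency check for duplicates and missing data might look like the sketch below. It assumes records are held as plain dictionaries, and the field names used in the usage example are hypothetical.

```python
from collections import Counter


def find_duplicates(records, key):
    """Return the values of `key` that appear in more than one record."""
    counts = Counter(
        r.get(key) for r in records if r.get(key) not in (None, "")
    )
    return sorted(value for value, n in counts.items() if n > 1)


def find_missing(records, required):
    """Return (record index, field name) pairs where a required field is empty."""
    return [
        (index, field_name)
        for index, record in enumerate(records)
        for field_name in required
        if record.get(field_name) in (None, "")
    ]


if __name__ == "__main__":
    sample = [
        {"email": "a@example.com", "phone": "02079460000"},
        {"email": "a@example.com", "phone": ""},
        {"email": "b@example.com"},
    ]
    print(find_duplicates(sample, "email"))
    print(find_missing(sample, ["email", "phone"]))
```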

By implementing data integrity checks, organizations can ensure that the database remains accurate, consistent, and reliable. This not only improves data quality but also helps in preventing data corruption, data loss, and other potential issues.

Leveraging Automated Validation Tools

Leveraging automated validation tools can greatly simplify the process of database validation. These tools are designed to automate various aspects of the validation process, reducing the time and effort required.

One of the key benefits of automated validation tools is that they can perform comprehensive checks on large datasets quickly and accurately. These tools can analyze the data, identify errors or inconsistencies, and generate detailed reports. This allows organizations to quickly identify and resolve any issues, ensuring the accuracy and reliability of the database.

Automated validation tools also provide the advantage of repeatability and consistency. Once a validation process is defined, it can be easily repeated on a regular basis or whenever new data is added to the database. This ensures that the validation is consistently applied and reduces the risk of human errors or oversights.

Furthermore, automated validation tools often come with built-in validation rules and algorithms that can be customized to meet specific requirements. Organizations can define their own validation criteria and rules, ensuring that the tool aligns with their unique needs and data standards.

In summary, leveraging automated validation tools can streamline the database validation process, improve efficiency, and enhance the overall quality of the database.

Establishing Clear Validation Criteria

Establishing clear validation criteria is essential for effective database validation. Validation criteria define the specific requirements that data must meet to be considered valid. By establishing clear criteria, organizations can ensure consistency and accuracy in the validation process.

When establishing validation criteria, it is important to consider both the technical and business requirements. Technical requirements include data formats, data types, field lengths, and referential integrity rules. These requirements ensure that the data is structured correctly and can be processed and analyzed effectively.

Business requirements, on the other hand, define the specific rules and constraints that are relevant to the organization's operations. These requirements can vary depending on the industry, regulatory standards, and internal policies. For example, a financial institution may have specific validation criteria for customer account numbers or transaction amounts.

Clear validation criteria should also include error handling and exception handling procedures. These procedures define how the system should handle data that does not meet the validation criteria. They can involve rejecting the data, triggering notifications or alerts, or performing automatic data corrections.
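The three responses named above (reject, alert, correct) can be sketched in one small routine. The email rule here, which only checks for an "@" after trimming and lower-casing, is a deliberately simplified assumption, and all names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationOutcome:
    accepted: list = field(default_factory=list)
    rejected: list = field(default_factory=list)
    alerts: list = field(default_factory=list)


def process(records):
    """Correct fixable emails, reject invalid ones, and log an alert for each action."""
    outcome = ValidationOutcome()
    for record in records:
        email = record.get("email") or ""
        cleaned = email.strip().lower()
        if "@" not in cleaned:
            # Not fixable automatically: reject and raise an alert.
            outcome.rejected.append(record)
            outcome.alerts.append(f"rejected record {record.get('id')}: invalid email")
            continue
        if cleaned != email:
            # Fixable: apply the correction but leave a trace of it.
            outcome.alerts.append(f"corrected email for record {record.get('id')}")
        outcome.accepted.append({**record, "email": cleaned})
    return outcome


if __name__ == "__main__":
    result = process([
        {"id": 1, "email": " Jo@Example.com "},
        {"id": 2, "email": "nope"},
    ])
    print(result.accepted, result.rejected, result.alerts)
```

Recording an alert even for automatic corrections keeps the change auditable, which matters where the criteria come from regulatory requirements.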

By establishing clear validation criteria, organizations can ensure that the database is validated consistently and accurately. This helps in maintaining data quality, data integrity, and overall data reliability.

Regular Monitoring and Maintenance

Regular monitoring and maintenance are crucial for effective database validation. Database validation is not a one-time process but an ongoing effort to ensure the accuracy and reliability of the database.

Monitoring the database involves regularly checking for errors, inconsistencies, and security risks. This can be done through automated monitoring tools that generate alerts or notifications when issues are detected. It can also involve manual checks and reviews by database administrators or data analysts.

Maintenance activities include data cleansing, data updates, and system optimization. Data cleansing involves identifying and correcting any errors or inconsistencies in the data. This can include removing duplicate records, standardizing data formats, or resolving data conflicts.
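As a rough illustration of data cleansing, the sketch below standardizes two fields and drops duplicate records. It assumes that a normalized email address identifies a record uniquely, which will not hold for every dataset, and the field names are hypothetical.

```python
def cleanse(records):
    """Standardize name and email formats, then keep the first record per email."""
    seen = set()
    cleaned = []
    for record in records:
        # Collapse repeated whitespace and apply consistent capitalization.
        name = " ".join(record["name"].split()).title()
        email = record["email"].strip().lower()
        if email in seen:
            continue  # duplicate of a record already kept
        seen.add(email)
        cleaned.append({"name": name, "email": email})
    return cleaned


if __name__ == "__main__":
    print(cleanse([
        {"name": "  jo   bloggs", "email": "JO@example.com "},
        {"name": "Jo Bloggs", "email": "jo@example.com"},
    ]))
```

Standardizing before comparing is the important design choice: two records that differ only in spacing or letter case are otherwise not recognized as duplicates.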

Data updates are necessary to ensure that the database reflects the most up-to-date information. This can involve regular data imports or integrations with external systems. It is important to validate the updated data to ensure its accuracy and consistency.

System optimization involves fine-tuning the database performance and configuration. This can include optimizing queries, indexing tables, or allocating sufficient resources for the database server. Regular performance monitoring and tuning help in maintaining the efficiency and responsiveness of the database.

By regularly monitoring and maintaining the database, organizations can proactively identify and resolve any issues, ensuring the accuracy, reliability, and security of the data.

Source: https://seoe2zblogs.medium.com/understanding-the-importance-of-database-validation-b24fdddd006e

#Telemarketing#Business Development#B2b Appointment Setting#B2b Telemarketing#B2b Data#Data Enrichment#Database Validation#Outsourcing Telemarketing#B2b Lead Generation Companies#Lead Generation Companies#Lead Generation Services#Lead Generation Company UK#B2b Lead Generation Service#B2b Lead Generation#Telesales Training

Still standing

On the afternoon of April 14th, a hacker using a UK IP address exploited an out-of-date software package on one of 4chan's servers, via a bogus PDF upload. With this entry point, they were eventually able to gain access to one of 4chan's servers, including database access and access to our own administrative dashboard. The hacker spent several hours exfiltrating database tables and much of 4chan's source code. When they had finished downloading what they wanted, they began to vandalize 4chan at which point moderators became aware and 4chan's servers were halted, preventing further access.

Over the following days, 4chan's development team surveyed the damage, which to be frank, was catastrophic. While not all of our servers were breached, the most important one was, and it was due to simply not updating old operating systems and code in a timely fashion. Ultimately this problem was caused by having insufficient skilled man-hours available to update our code and infrastructure, and being starved of money for years by advertisers, payment providers, and service providers who had succumbed to external pressure campaigns.

We had begun a process of speccing new servers in late 2023. As many have suspected, until that time 4chan had been running on a set of servers purchased second-hand by moot a few weeks before his final Q&A, as prior to then we simply were not in a financial position to consider such a large purchase. Advertisers and payment providers willing to work with 4chan are rare, and are quickly pressured by activists into cancelling their services. Putting together the money for new equipment took nearly a decade.

In April of 2024 we had agreed on specs and began looking for possible suppliers. Money is always tight for us, and few companies were willing to sell us servers, so actually buying the hardware wasn’t a trivial problem. We managed to finalize a purchase in June, and had the new servers racked and online in July. Over the next few months we slowly moved functionality onto the new servers, but we had still been relying on the old servers for key functions. Everything about this process took much longer than intended, which is a recurring theme in this debacle. The free time that 4chan's development team had available to dedicate to 4chan was insufficient to update our software and infrastructure fast enough, and our luck ran out.

However, we have not been idle during our nearly two weeks of downtime. The server that was breached has been replaced, with the operating system and code updated to the latest versions. PDF uploads have been temporarily disabled on those boards that supported them, but they will be back in the near future. One slow but much beloved board, /f/ - Flash, will not be returning however, as there is no realistic way to prevent similar exploits using .swf files. We are bringing on additional volunteer developers to help keep up with the workload, and our team of volunteer janitors & moderators remains united despite the grievous violations some have suffered to their personal privacy.

4chan is back. No other website can replace it, or this community. No matter how hard it is, we are not giving up.

The Best News of Last Week - March 18

1. FDA to Finally Outlaw Soda Ingredient Prohibited Around The World

An ingredient once commonly used in citrus-flavored sodas to keep the tangy taste mixed thoroughly through the beverage could finally be banned for good across the US. BVO, or brominated vegetable oil, is already banned in many countries, including India, Japan, and nations of the European Union, and was outlawed in the state of California in October 2023.

2. AI makes breakthrough discovery in battle to cure prostate cancer

Scientists have used AI to reveal a new form of aggressive prostate cancer which could revolutionise how the disease is diagnosed and treated.

A Cancer Research UK-funded study found prostate cancer, which affects one in eight men in their lifetime, includes two subtypes. It is hoped the findings could save thousands of lives in future and revolutionise how the cancer is diagnosed and treated.

3. “Inverse vaccine” shows potential to treat multiple sclerosis and other autoimmune diseases

A new type of vaccine developed by researchers at the University of Chicago’s Pritzker School of Molecular Engineering (PME) has shown in the lab setting that it can completely reverse autoimmune diseases like multiple sclerosis and type 1 diabetes — all without shutting down the rest of the immune system.

4. Paris 2024 Olympics makes history with unprecedented full gender parity

In a historic move, the International Olympic Committee (IOC) has distributed equal quotas for female and male athletes for the upcoming Olympic Games in Paris 2024. It is the first time the Olympics will have full gender parity and is a significant milestone in the pursuit of equal representation and opportunities for women in sports.

Biased media coverage leads girls and boys to abandon sports.

5. Restored coral reefs can grow as fast as healthy reefs in just 4 years, new research shows

Planting new coral in degraded reefs can lead to rapid recovery – with restored reefs growing as fast as healthy reefs after just four years. Researchers studied these reefs to assess whether coral restoration can bring back the important ecosystem functions of a healthy reef.

“The speed of recovery we saw is incredible,” said lead author Dr Ines Lange, from the University of Exeter.

6. EU regulators pass the planet's first sweeping AI regulations

The EU is banning practices that it believes will threaten citizens' rights. "Biometric categorization systems based on sensitive characteristics" will be outlawed, as will the "untargeted scraping" of images of faces from CCTV footage and the web to create facial recognition databases.

Other applications that will be banned include social scoring; emotion recognition in schools and workplaces; and "AI that manipulates human behavior or exploits people’s vulnerabilities."

7. Global child deaths reach historic low in 2022 – UN report

The number of children who died before their fifth birthday has reached a historic low, dropping to 4.9 million in 2022.

The report reveals that more children are surviving today than ever before, with the global under-5 mortality rate declining by 51 per cent since 2000.

---

That's it for this week :)

This newsletter will always be free. If you liked this post you can support me with a small kofi donation here:

Buy me a coffee ❤️

Also don’t forget to reblog this post with your friends.

Also preserved in our archive

New research indicates that people who contracted COVID-19 early in the pandemic faced a significantly elevated risk of heart attack, stroke, and death for up to three years post-infection.

Those with severe cases saw nearly quadruple the risk, especially in individuals with A, B, or AB blood types, while blood type O was associated with lower risk. This finding highlights long-term cardiovascular threats for COVID-19 patients and suggests that severe cases may need to be considered as a new cardiovascular risk factor. However, further studies on more diverse populations and vaccinated individuals are needed to validate these results.

Long-Term Cardiovascular Risks Linked to COVID-19 Infection

A recent study supported by the National Institutes of Health (NIH) found that COVID-19 infection significantly increased the risk of heart attack, stroke, and death for up to three years in unvaccinated people who contracted the virus early in the pandemic. This risk was observed in individuals with and without pre-existing heart conditions and confirms earlier research linking COVID-19 infection to a higher chance of cardiovascular events. However, this study is the first to indicate that the heightened risk may last as long as three years, especially for those infected during the first wave of the pandemic.

The study, published in the journal Arteriosclerosis, Thrombosis, and Vascular Biology, revealed that individuals who had COVID-19 early in the pandemic were twice as likely to experience cardiovascular events compared to those with no history of infection. For those with severe cases, the risk was nearly quadrupled.

“This study sheds new light on the potential long-term cardiovascular effects of COVID-19, a still-looming public health threat,” said David Goff, M.D., Ph.D., director for the Division of Cardiovascular Sciences at NIH’s National Heart, Lung, and Blood Institute (NHLBI), which largely funded the study. “These results, especially if confirmed by longer term follow-up, support efforts to identify effective heart disease prevention strategies for patients who’ve had severe COVID-19. But more studies are needed to demonstrate effectiveness.”

Genetic Factors and Blood Type’s Role in COVID-19 Complications

The study is also the first to show that an increased risk of heart attack and stroke in patients with severe COVID-19 may have a genetic component involving blood type. Researchers found that hospitalization for COVID-19 more than doubled the risk of heart attack or stroke among patients with A, B, or AB blood types, but not in patients with O types, which seemed to be associated with a lower risk of severe COVID-19.

Scientists studied data from 10,000 people enrolled in the UK Biobank, a large biomedical database of European patients. Patients were ages 40 to 69 at the time of enrollment and included 8,000 who had tested positive for the COVID-19 virus and 2,000 who were hospitalized with severe COVID-19 between Feb. 1, 2020, and Dec. 31, 2020. None of the patients had been vaccinated, as vaccines were not available during that period.

The researchers compared the two COVID-19 subgroups to a group of nearly 218,000 people who did not have the condition. They then tracked the patients from the time of their COVID-19 diagnosis until the development of either heart attack, stroke, or death, up to nearly three years.

Higher Cardiovascular Risk in Patients With Severe Cases

Accounting for patients who had pre-existing heart disease – about 11% in both groups – the researchers found that the risk of heart attack, stroke, and death was twice as high among all the COVID-19 patients and four times as high among those who had severe cases that required hospitalization, compared to those who had never been infected. The data further show that, within each of the three follow-up years, the risk of having a major cardiovascular event was still significantly elevated compared to the controls – in some cases, the researchers said, almost as high or even higher than having a known cardiovascular risk factor, such as Type 2 diabetes.

“Given that more than 1 billion people worldwide have already experienced COVID-19 infection, the implications for global heart health are significant,” said study leader Hooman Allayee, Ph.D., a professor of population and public health sciences at the University of Southern California Keck School of Medicine in Los Angeles. “The question now is whether or not severe COVID-19 should be considered another risk factor for cardiovascular disease, much like type 2 diabetes or peripheral artery disease, where treatment focused on cardiovascular disease prevention may be valuable.”

Allayee notes that the findings apply mainly to people who were infected early in the pandemic. It is unclear whether the elevated cardiovascular risk persists for people who have had severe COVID-19 more recently (from 2021 to the present).

Need for Broader Studies and Vaccine Impact on Risks

Scientists state that the study was limited due to the inclusion of patients from only the UK Biobank, a group that is mostly white. Whether the results will differ in a population with more racial and ethnic diversity is unclear and awaits further study. As the study participants were unvaccinated, future studies will be needed to determine whether vaccines influence cardiovascular risk. Studies on the connection between blood type and COVID-19 infection are also needed as the mechanism for the gene-virus interaction remains unclear.

Study link: www.ahajournals.org/doi/10.1161/ATVBAHA.124.321001

#mask up#covid#pandemic#public health#wear a mask#covid 19#wear a respirator#still coviding#coronavirus#sars cov 2#long covid#heart health#covidー19#covid conscious#covid is airborne#covid isn't over#covid pandemic#covid19

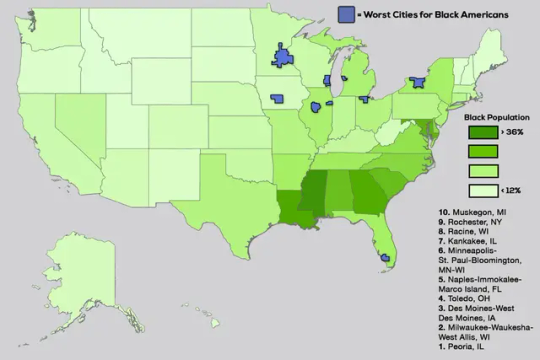

Where It’s Most Dangerous to Be Black in America

Black Americans made up 13.6% of the US population in 2022 and 54.1% of the victims of murder and non-negligent manslaughter, aka homicide. That works out, according to Centers for Disease Control and Prevention data, to a homicide rate of 29.8 per 100,000 Black Americans and four per 100,000 of everybody else.(1)
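As a rough arithmetic check, the two percentages above are enough to estimate how many times higher the homicide rate is for Black Americans than for everybody else. This is only a sketch based on the shares quoted in this column, not the CDC's own calculation, and the function name is hypothetical.

```python
def rate_ratio(population_share: float, victim_share: float) -> float:
    """Ratio of a group's per-capita rate to the rate for everyone outside the group."""
    # Victims per unit of population inside the group.
    group_relative = victim_share / population_share
    # Victims per unit of population for everybody else.
    rest_relative = (1 - victim_share) / (1 - population_share)
    return group_relative / rest_relative


if __name__ == "__main__":
    # 13.6% of the population and 54.1% of homicide victims, as quoted above.
    print(round(rate_ratio(0.136, 0.541), 1))
```

The result is roughly 7.5, in line with dividing the rounded rates directly (29.8 by four).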

A homicide rate of four per 100,000 is still quite high by wealthy-nation standards. The most up-to-date statistics available from the Organization for Economic Cooperation and Development show a homicide rate of one per 100,000 in Canada as of 2019, 0.8 in Australia (2021), 0.4 in France (2017) and Germany (2020), 0.3 in the UK (2020) and 0.2 in Japan (2020).

But 29.8 per 100,000 is appalling, similar to or higher than the homicide rates of notoriously dangerous Brazil, Colombia and Mexico. It also represents a sharp increase from the early and mid-2010s, when the Black homicide rate in the US hit new (post-1968) lows and so did the gap between it and the rate for everybody else. When the homicide rate goes up, Black Americans suffer disproportionately. When it falls, as it did last year and appears to be doing again this year, it is mostly Black lives that are saved.

As hinted in the chart, racial definitions have changed a bit lately; the US Census Bureau and other government statistics agencies have become more open to classifying Americans as multiracial. The statistics cited in the first paragraph of this column are for those counted as Black or African American only. An additional 1.4% of the US population was Black and one or more other race in 2022, according to the Census Bureau, but the CDC Wonder (for “Wide-ranging Online Data for Epidemiologic Research”) databases from which most of the statistics in this column are drawn don’t provide population estimates or calculate mortality rates for this group. My estimate is that its homicide rate in 2022 was about six per 100,000.

A more detailed breakdown by race, ethnicity and gender reveals that Asian Americans had by far the lowest homicide rate in 2022, 1.6, which didn’t rise during the pandemic, that Hispanic Americans had similar homicide rates to the nation as a whole and that men were more than four times likelier than women to die by homicide in 2022. The biggest standout remained the homicide rate for Black Americans.

Black people are also more likely to be victims of other violent crime, although the differential is smaller than with homicides. In the 2021 National Crime Victimization Survey from the Bureau of Justice Statistics (the 2022 edition will be out soon), the rate of violent crime victimization was 18.5 per 1,000 Black Americans, 16.1 for Whites, 15.9 for Hispanics and 9.9 for Asians, Native Hawaiians and other Pacific Islanders. Understandably, Black Americans are more concerned about crime than others, with 81% telling Pew Research Center pollsters before the 2022 midterm elections that violent crime was a “very important” issue, compared with 65% of Hispanics and 56% of Whites.

These disparities mainly involve communities caught in cycles of violence, not external predators. Of the killers of Black Americans in 2020 whose race was known, 89.4% were Black, according to the FBI. That doesn’t make those deaths any less of a tragedy or public health emergency. Homicide is seventh on the CDC’s list of the 15 leading causes of death among Black Americans, while for other Americans it’s nowhere near the top 15. For Black men ages 15 to 39, the highest-risk group, it’s usually No. 1, although in 2022 the rise in accidental drug overdoses appears to have pushed accidents just past it. For other young men, it’s a distant third behind accidents and suicides.

To be clear, I do not have a solution for this awful problem, or even much of an explanation. But the CDC statistics make clear that sky-high Black homicide rates are not inevitable. They were much lower just a few years ago, for one thing, and they’re far lower in some parts of the US than in others. Here are the overall 2022 homicide rates for the country’s 30 most populous metropolitan areas.

Metropolitan areas are agglomerations of counties by which economic and demographic data are frequently reported, but seldom crime statistics because the patchwork of different law enforcement agencies in each metro area makes it so hard. Even the CDC, which gets its mortality data from state health departments, doesn’t make it easy, which is why I stopped at 30 metro areas.(2)

Sorting the data this way does obscure one key fact about homicide rates: They tend to be much higher in the main city of a metro area than in the surrounding suburbs.

But looking at homicides by metro area allows for more informative comparisons across regions than city crime statistics do, given that cities vary in how much territory they cover and how well they reflect an area’s demographic makeup. Because the CDC suppresses mortality data for privacy reasons whenever there are fewer than 10 deaths to report, large metro areas are good vehicles for looking at racial disparities. Here are the 30 largest metro areas, ranked by the gap between the homicide rates for Black residents and for everybody else.

The biggest gap by far is in metropolitan St. Louis, which also has the highest overall homicide rate. The smallest gaps are in metropolitan San Diego, New York and Boston, which have the lowest homicide rates. Homicide rates are higher for everybody in metro St. Louis than in metro New York, but for Black residents they’re six times higher while for everyone else they’re just less than twice as high.

There do seem to be some regional patterns to this mayhem. The metro areas with the biggest racial gaps are (with the glaring exception of Portland, Oregon) mostly in the Rust Belt, those with the smallest are mostly (with the glaring exceptions of Boston and New York) in the Sun Belt. Look at a map of Black homicide rates by state, and the highest are clustered along the Mississippi River and its major tributaries. Southern states outside of that zone and Western states occupy roughly the same middle ground, while the Northeast and a few middle-of-the-country states with small Black populations are the safest for their Black inhabitants.(3)

Metropolitan areas in the Rust Belt and parts of the South stand out for the isolation of their Black residents, according to a 2021 study of Census data from Brown University’s Diversity and Disparities Project, with the average Black person living in a neighborhood that is 60% or more Black in the Detroit; Jackson, Mississippi; Memphis; Chicago; Cleveland and Milwaukee metro areas in 2020 (in metro St. Louis the percentage was 57.6%). Then again, metro New York and Boston score near the top on another of the project’s measures of residential segregation, which tracks the percentage of a minority group’s members who live in neighborhoods where they are over-concentrated compared with White residents, so segregation clearly doesn’t explain everything.

Looking at changes over time in homicide rates may explain more. Here’s the long view for Black residents of the three biggest metro areas. Again, racial definitions have changed recently. This time I’ve used the new, narrower definition of Black or African American for 2018 onward, and given estimates in a footnote of how much it biases the rates upward compared with the old definition.

All three metro areas had very high Black homicide rates in the 1970s and 1980s, and all three experienced big declines in the 1990s and 2000s. But metro Chicago’s stayed relatively high in the early 2010s then began a rebound in mid-decade that as of 2021 had brought the homicide rate for its Black residents to a record high, even factoring in the boost to the rate from the definitional change.

What happened in Chicago? One answer may lie in the growing body of research documenting what some have called the “Ferguson effect,” in which incidents of police violence that go viral and beget widespread protests are followed by local increases in violent crime, most likely because police pull back on enforcement. Ferguson is the St. Louis suburb where a 2014 killing by police that local prosecutors and the US Justice Department later deemed to have been in self-defense led to widespread protests that were followed by big increases in St. Louis-area homicide rates. Baltimore had a similar viral death in police custody and homicide-rate increase in 2015. In Chicago, it was the October 2014 shooting death of a teenager, and more specifically the release a year later of a video that contradicted police accounts of the incident, leading eventually to the conviction of a police officer for second-degree murder.

It’s not that police killings themselves are a leading cause of death among Black Americans. The Mapping Police Violence database lists 285 killings of Black victims by police in 2022, and the CDC reports 209 Black victims of “legal intervention,” compared with 13,435 Black homicide victims. And while Black Americans are killed by police at a higher rate relative to population than White Americans, this disparity — 2.9 to 1 since 2013, according to Mapping Police Violence — is much less than the 7.5-to-1 ratio for homicides overall in 2022. It’s the loss of trust between law enforcement agencies and the communities they serve that seems to be disproportionately deadly for Black residents of those communities.

The May 2020 murder of George Floyd by a Minneapolis police officer was the most viral such incident yet, leading to protests nationwide and even abroad, as well as an abortive local attempt to disband and replace the police department. The Minneapolis area subsequently experienced large increases in homicides and especially homicides of Black residents. But nine other large metro areas experienced even bigger increases in the Black homicide rate from 2019 to 2022.

A lot of other things happened between 2019 and 2022 besides the Floyd protests, of course, and I certainly wouldn’t ascribe all or most of the pandemic homicide-rate increase to the Ferguson effect. It is interesting, though, that the St. Louis area experienced one of the smallest percentage increases in the Black homicide rate during this period, and it decreased in metro Baltimore.

Also interesting is that the metro areas experiencing the biggest percentage increases in Black residents’ homicide rates were all in the West (if your definition of West is expansive enough to include San Antonio). If this were confined to affluent areas such as Portland, Seattle, San Diego and San Francisco, I could probably spin a plausible-sounding story about it being linked to especially stringent pandemic policies and high work-from-home rates, but that doesn’t fit Phoenix, San Antonio or Las Vegas, so I think I should just admit that I’m stumped.

The standout in a bad way has been the Portland area, which had some of the longest-running and most contentious protests over policing, along with many other sources of dysfunction. The area’s homicide rate for Black residents has more than tripled since 2019 and is now second highest among the 30 biggest metro areas after St. Louis. Again, I don’t have any real solutions to offer here, but whatever the Portland area has been doing since 2019 isn’t working.

(1) The CDC data for 2022 are provisional, with a few revisions still being made in the causes assigned to deaths (was it a homicide or an accident, for example), but I’ve been watching for weeks now, and the changes have been minimal. The CDC is still using 2021 population numbers to calculate 2022 mortality rates, and when it updates those, the homicide rates will change again, but again only slightly. The metropolitan-area numbers also don’t reflect a recent update by the White House Office of Management and Budget to its list of metro areas and the counties that belong to them, which when incorporated will bring yet more small mortality-rate changes. To get these statistics from the CDC mortality databases, I clicked on “Injury Intent and Mechanism” and then on “Homicide”; in some past columns I instead chose “ICD-10 Codes” and then “Assault,” which delivered slightly different numbers.

(2) It’s easy to download mortality statistics by metro area for the years 1999 to 2016, but the databases covering earlier and later years do not offer this option, and one instead has to select all the counties in a metro area to get area-wide statistics, which takes a while.

(3) The map covers the years 2018-2022 to maximize the number of states for which CDC Wonder will cough up data, although as you can see it wouldn’t divulge any numbers for Idaho, Maine, Vermont and Wyoming (meaning there were fewer than 10 homicides of Black residents in each state over that period). Given the small numbers involved, I wouldn’t put a whole lot of stock in the rates for the Dakotas, Hawaii, Maine and Montana.

(https://www.washingtonpost.com/business/2023/09/14/where-it-s-most-dangerous-to-be-black-in-america/cdea7922-52f0-11ee-accf-88c266213aac_story.html)

Text

The British government has announced plans to roll out mandatory digital IDs for all citizens who ‘wish to participate in society.’

Prime Minister Starmer, who met with Bill Gates recently to discuss the scheme, launched the Office for Digital Identities and Attributes (OfDIA) – a digital ID watchdog within the Department for Science, Innovation, and Technology, tasked with rolling out the mandatory digital IDs under the leadership of chief executive Hannah Rutter.

Reclaimthenet.org reports: With this, the Labour government picked up where the Conservative one left off, considering that the Office was first announced by the previous cabinet in 2022 when it was envisaged as an “interim” entity for introducing digital ID in the UK.

“Convenience” is once again at the center of the way the authorities explain the need for such a push: Rutter is quoted as saying that instead of a “patchwork of paperwork” – and she’s referring to paperwork from both government and private entities – needed as proof of identity today, there is “a better way.”

“Digital identity can make people’s lives easier, and unlock billions of pounds of economic growth,” Rutter said, without further breaking down the numbers that helped her arrive at the “billions of pounds” figure.

The system OfDIA is in charge of does not include developing a government-issued ID card, and can be used on a voluntary basis, she continued.

Rutter made sure to address one of the criticisms regarding the security of such schemes – centralization – by saying that the system her office is working on does not have a centralized digital database, either.

Note

hi — my grandma makes crochet stuff and was looking for an alternative to etsy that is more friendly for handmade crafts specifically. do you have any specific suggestions?

Hello!

Some of our members are working tirelessly to develop the Marketplace Accreditation Program (or MAP), which will function as a thorough and user-friendly database for finding the right marketplace for you -- but in the meantime, we do have a few alternatives I can list that a number of our members would recommend!

There's the Artisans Cooperative, a user/member owned cooperative which is very new and currently member-only, and which has quite a few members in the ISG. It may be worth keeping an eye out for when they open to non-member sellers if your grandma isn't able to become a member by either buying in ($1,000) or spending time earning "member points".

There's GoImagine, which Kristi wrote a blog post about in her Etsy Alternative series. This one donates all of its profits to charity and was the first marketplace to apply for accreditation (once we're ready to begin accreditation) with our MAP. This one isn't member restricted, but it is only available to sellers in the US.

The second marketplace to apply for accreditation is Mayfli, which is based in the UK. I couldn't tell you much else about it, but it does boast its sellers' handmade products.

There's also Ko-Fi, which doubles as a donation platform. We have an earlier blog post about it that goes over and rates it in comparison to Etsy. I myself have used it for tips, sales, and commissions; the fees are low to nonexistent, but you'll have to connect it to PayPal or Stripe to receive payments.

Then there's WooCommerce, which isn't a marketplace, but many of our members use it and have described it as a very good tool for setting up your own website if you're sufficiently tech savvy. This may only be useful for your grandmother if she has outside means of bringing customers to her shop.

We do also have a spreadsheet with the beginnings of an Etsy Alternatives Database, but I'm not sure if that's open to the public just yet. I'll reach out to our other members and add any comments they want to share in a reblog of this post!

#etsy alternatives#kofi#goimagine#woocommerce#artisans cooperative#mayfli#crocheting#asked and answered

Text

To increase our understanding of our genetic blueprints, researchers have put together a database of genes we know almost nothing about. While we know these genes exist and code for proteins, we have no idea what they're for. "It has become clear that scientific research tends to focus on well-studied proteins, leading to a concern that poorly understood genes are unjustifiably neglected," researchers from the MRC Laboratory of Molecular Biology (LMB) in the UK explain. "To address this, we have developed a publicly available and customizable 'Unknome database'."

Text

What Is Linux Web Hosting? A Beginner's Guide

In the ever-evolving digital landscape, the choice of web hosting can significantly impact your online presence. One of the most popular options available is Linux web hosting. But what exactly does it entail, and why is it so widely preferred? This beginner’s guide aims to demystify Linux web hosting, its features, benefits, and how it stands against other hosting types.

Introduction to Web Hosting

Web hosting is a fundamental service that enables individuals and organisations to make their websites accessible on the internet. When you create a website, it consists of numerous files, such as HTML, images, and databases, which need to be stored on a server. A web host provides the server space and connectivity required for these files to be accessed by users online.

There are several types of web hosting, each designed to cater to different needs and budgets. Shared hosting is a cost-effective option where multiple websites share the same server resources. Virtual Private Server (VPS) hosting offers a middle ground, providing dedicated portions of a server for greater performance and control. Dedicated hosting provides an entire server exclusively for one website, ensuring maximum performance but at a higher cost. Cloud hosting uses multiple servers to balance the load and maximise uptime, offering a scalable solution for growing websites.

Web hosting services also include various features to enhance the performance and security of your website. These can range from basic offerings like email accounts and website builders to more advanced features like SSL certificates, automated backups, and DDoS protection. The choice of web hosting can significantly influence your website’s speed, security, and reliability, making it crucial to choose a solution that aligns with your specific requirements.

Understanding the different types of web hosting and the features they offer can help you make an informed decision that suits your needs. Whether you are running a personal blog, a small business website, or a large e-commerce platform, selecting the right web hosting service is a critical step in establishing a successful online presence.

What Is Linux Web Hosting?

Linux web hosting is a type of web hosting service that utilises the Linux operating system to manage and serve websites. Renowned for its open-source nature, Linux provides a stable and secure platform that supports a wide array of programming languages and databases, making it a favoured choice amongst developers and businesses. This hosting environment typically includes support for popular technologies such as Apache web servers, MySQL databases, and PHP scripting, which are integral to modern website development.

One of the distinguishing features of Linux web hosting is its cost-effectiveness. As an open-source system, Linux eliminates the need for costly licensing fees associated with proprietary software, thereby reducing overall hosting expenses. This makes it an attractive option for individuals and organisations operating on a budget.

Moreover, Linux is celebrated for its robust performance and high stability. Websites hosted on Linux servers experience less downtime and faster loading times, which are critical factors for maintaining user engagement and search engine rankings. The operating system’s extensive community of developers continuously works on updates and improvements, ensuring that Linux remains a cutting-edge choice for web hosting.

Linux web hosting also offers considerable flexibility and customisation options. Users have the freedom to configure their hosting environment to meet specific needs, whether they are running simple static websites or complex dynamic applications. This versatility extends to compatibility with various content management systems (CMS) like WordPress, Joomla, and Drupal, which often perform optimally on Linux servers.

In summary, Linux web hosting provides a reliable, secure, and cost-effective solution that caters to a diverse range of web hosting requirements. Its compatibility with essential web technologies and its inherent flexibility make it a preferred choice for many web developers and site owners.

Key Benefits of Linux Web Hosting

Linux web hosting offers several compelling advantages that contribute to its widespread adoption. One of its primary benefits is cost-effectiveness. The open-source nature of Linux eliminates the need for expensive licensing fees, allowing users to allocate their resources more efficiently. This makes it an ideal choice for individuals and organisations with budget constraints. Additionally, Linux is celebrated for its high stability and robust performance. Websites hosted on Linux servers often experience minimal downtime and faster loading speeds, which are essential for maintaining user engagement and achieving favourable search engine rankings.

Another significant benefit is the extensive community support that comes with Linux. The active community of developers and enthusiasts continuously works on updates, patches, and security enhancements, ensuring that Linux remains a secure and reliable platform for web hosting. This ongoing development also means that any issues or vulnerabilities are promptly addressed, offering peace of mind for website owners.

Flexibility is another key advantage of Linux web hosting. The operating system supports a wide range of programming languages, including PHP, Python, and Ruby, making it suitable for various types of web applications. Additionally, Linux servers are highly customisable, allowing users to configure their environment to meet specific needs, whether they are running simple static sites or complex dynamic applications.

Moreover, Linux web hosting is highly compatible with popular content management systems (CMS) like WordPress, Joomla, and Drupal. This compatibility ensures that users can easily deploy and manage their websites using these platforms, benefiting from their extensive plugin and theme ecosystems.

Lastly, Linux's superior security features are worth noting. The operating system is inherently secure and offers various built-in security measures. When combined with best practices such as regular updates and strong passwords, Linux web hosting provides a highly secure environment for any website.

Understanding Linux Distributions in Web Hosting

Linux comes in a variety of distributions, each tailored to meet specific needs and preferences. Among the most popular for web hosting are Ubuntu, CentOS, and Debian. Ubuntu is celebrated for its ease of use and extensive community support, making it a great choice for those new to Linux. CentOS, long a favourite in enterprise environments, built its reputation on stability and long-term support; note that CentOS Linux itself reached end of life in 2024, and RHEL-compatible successors such as AlmaLinux and Rocky Linux now fill that role. Debian stands out with its robust package management system and commitment to open-source principles, providing a reliable and secure foundation.

Each distribution brings its own strengths to the table. For example, Ubuntu’s frequent updates ensure access to the latest features and security patches, while the extended support cycles of CentOS and its successors make them a solid choice for those requiring a stable, long-term hosting environment. Debian’s extensive repository of packages and minimalistic approach offers flexibility and customisation, catering to the needs of experienced users.

Selecting the right Linux distribution largely depends on your specific requirements and technical expertise. If you prioritise user-friendliness and a wealth of resources for troubleshooting, Ubuntu might be the ideal pick. On the other hand, if you need a rock-solid, stable platform for an enterprise-level application, CentOS or one of its successors could be more appropriate. For those seeking maximum control and a commitment to open-source principles, Debian is a compelling option.

Ultimately, understanding the nuances of these distributions will enable you to choose a Linux environment that aligns with your web hosting needs, ensuring optimal performance and reliability.

Linux Hosting vs Windows Hosting: A Comparative Analysis

When evaluating Linux hosting against Windows hosting, several critical factors come into play. Cost is a significant differentiator; Linux hosting is generally more affordable due to its open-source nature, which eliminates the need for expensive licensing fees. In contrast, Windows hosting often incurs additional costs related to proprietary software licenses.

Compatibility is another important aspect to consider. Linux hosting is renowned for its compatibility with a broad array of open-source software and applications, including popular platforms like WordPress, Joomla, and Magento. These platforms typically perform better on Linux servers due to optimised server configurations. On the other hand, Windows hosting is the go-to option for websites that rely on Microsoft-specific technologies such as ASP.NET and other .NET frameworks, or on the MSSQL database server.

Performance and stability are also crucial elements in this comparison. Linux hosting often provides superior uptime and faster loading speeds due to the lightweight nature of the Linux operating system. The robust performance of Linux servers is further enhanced by the active community that continuously works on optimisations and security patches. Windows hosting, while also reliable, can sometimes be more resource-intensive, potentially affecting performance.

Customisation and control levels differ significantly between the two. Linux offers greater flexibility and customisation options, allowing users to tweak server settings and configurations extensively. This level of control is particularly beneficial for developers who need a tailored hosting environment. Conversely, Windows hosting is typically easier to manage for those familiar with the Windows operating system but may offer less flexibility in terms of customisation.

Security measures also vary between Linux and Windows hosting. Linux is often praised for its strong security features, which are bolstered by a large community dedicated to promptly addressing vulnerabilities. While Windows hosting is secure, it may require more frequent updates and maintenance to ensure the same level of protection.

Common Use Cases for Linux Web Hosting

Linux web hosting is versatile and caters to a broad range of applications, making it a popular choice across various sectors. One of the most common use cases is hosting blogs and personal websites, particularly those built on platforms like WordPress. The open-source nature of Linux and its compatibility with PHP make it an ideal environment for WordPress, which powers a significant portion of the web.

E-commerce websites also benefit greatly from Linux web hosting. Solutions like Magento, PrestaShop, and OpenCart often perform better on Linux servers due to their need for a robust, secure, and scalable hosting environment. The flexibility to configure server settings allows online store owners to optimise performance and ensure a smooth shopping experience for their customers.

Content Management Systems (CMS) such as Joomla and Drupal are another prime use case. These systems require reliable and flexible hosting solutions to manage complex websites with large amounts of content. Linux's support for various databases and scripting languages ensures seamless integration and optimal performance for CMS-based sites.

Developers frequently turn to Linux web hosting for custom web applications. The operating system supports a variety of programming languages, including Python, Ruby, and Perl, making it suitable for a wide array of development projects. Its command-line interface and extensive package repositories allow developers to install and manage software efficiently.

Additionally, Linux web hosting is commonly used for educational and non-profit websites. The low cost and high reliability make it a practical choice for schools, universities, and charitable organisations that need a dependable online presence without breaking the bank.

Setting Up a Linux Web Hosting Environment

Setting up a Linux web hosting environment can be straightforward with the right approach. Begin by selecting a reputable hosting provider that offers Linux-based plans. After signing up, you'll typically be granted access to a control panel, such as cPanel or Plesk, which simplifies the management of your hosting environment. Through the control panel, you can manage files, databases, email accounts, and more.

Next, if you're using a content management system (CMS) like WordPress, Joomla, or Drupal, you can often find one-click installation options within the control panel. This feature makes it easy to get your website up and running quickly. Additionally, ensure that you configure your domain name to point to your new hosting server, which usually involves updating your domain's DNS settings.
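As a rough illustration of the DNS step, the short Python sketch below checks whether a domain currently resolves to the address of your new server. The function name points_to_server, the domain and the IP address are placeholders invented for this example, and the check covers IPv4 only. DNS changes can take a while to propagate, so a mismatch shortly after an update is not necessarily a fault.

```python
import socket
from typing import Callable


def points_to_server(
    domain: str,
    expected_ip: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> bool:
    """Return True if `domain` resolves to `expected_ip` (IPv4 only).

    `resolve` defaults to the system resolver; it is a parameter so the
    check can be exercised without network access.
    """
    try:
        return resolve(domain) == expected_ip
    except OSError:
        # socket.gaierror is a subclass of OSError: the name does not resolve.
        return False


# Hypothetical usage (placeholder domain and documentation-range address):
# points_to_server("example.com", "203.0.113.10")
```

If the function returns False long after you updated your records, it is worth double-checking the A record at your domain registrar.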

For those who prefer more control or are comfortable using the command line, you can manually set up your web server using SSH access. This method allows you to install and configure web server software like Apache or Nginx, as well as databases such as MySQL or PostgreSQL.

Regardless of the setup method you choose, it's crucial to secure your server from the outset. This includes setting up a firewall, enabling SSH key authentication for secure access, and regularly updating all software to protect against vulnerabilities. Regularly monitoring your server's performance and security logs can help you stay ahead of potential issues, ensuring a stable and secure hosting environment for your website.

Security Best Practices for Linux Web Hosting

Securing your Linux web hosting environment is paramount to safeguarding your website against potential threats. Begin by ensuring your server software and all installed applications are up to date. Regular updates often include patches for security vulnerabilities, making this a critical step. Utilise strong, unique passwords for all user accounts, and consider employing SSH key authentication for added security when accessing your server remotely.

Setting up a firewall is another essential measure. Tools like iptables or firewalld can help you configure firewall rules to control incoming and outgoing traffic, thereby reducing the risk of unauthorised access. Intrusion prevention tools such as Fail2Ban, which monitors log files and temporarily bans IP addresses that show repeated failed login attempts, can provide an additional layer of security.
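To give a feel for how log-based blocking works in principle, here is a minimal Python sketch that counts failed SSH logins per IP address and lists the addresses that cross a threshold. It is not how Fail2Ban is implemented; the function names, the max_retries threshold and the assumed log line format (typical OpenSSH "Failed password" messages) are illustrative assumptions only.

```python
import re
from collections import Counter
from typing import Iterable, List

# Assumed format of an OpenSSH failure line, e.g.
# "... sshd[101]: Failed password for root from 203.0.113.5 port 4242 ssh2"
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?\S+ from (\S+) port"
)


def count_failed_logins(lines: Iterable[str]) -> Counter:
    """Count failed password attempts per source address."""
    counts: Counter = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def repeat_offenders(lines: Iterable[str], max_retries: int = 5) -> List[str]:
    """Return addresses with at least `max_retries` failures, sorted."""
    counts = count_failed_logins(lines)
    return sorted(ip for ip, n in counts.items() if n >= max_retries)
```

A real tool also considers the time window in which the failures occurred and lifts bans after a while, which this sketch leaves out for brevity.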

Consider deploying an SSL certificate to encrypt data transmitted between your server and users' browsers. This not only enhances security but also boosts user trust and can improve your search engine rankings. Additionally, limit the use of root privileges; create separate user accounts with the necessary permissions to minimise potential damage in the event of a breach.
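SSL certificates expire, so it can help to keep an eye on how long yours has left. The sketch below, written in Python, converts a certificate's "notAfter" date (in the text format that Python's ssl module reports from getpeercert()) into the number of days remaining. The function name days_until_expiry and the example dates are assumptions made for illustration.

```python
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter date.

    `not_after` uses the format "Jan 31 00:00:00 2030 GMT".
    `now` is a Unix timestamp; it defaults to the current time.
    """
    expires = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires - now) / 86400  # 86400 seconds in a day


# Hypothetical usage with a made-up expiry date:
# if days_until_expiry("Jan 31 00:00:00 2030 GMT") < 14:
#     print("Renew the certificate soon")
```

Many hosting providers renew certificates automatically, in which case a check like this simply acts as a safety net.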

Regularly back up your data to mitigate the impact of data loss due to hardware failure, cyber-attacks, or human error. Automated backup solutions can simplify this process, ensuring your data is consistently protected. Monitoring your server's logs can also be invaluable for identifying unusual activity early. Tools like Logwatch or the ELK Stack can assist in log management and analysis, enabling you to take swift action if anomalies are detected.
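For readers curious what an automated backup might look like under the hood, the following Python sketch creates a timestamped, compressed archive of a website folder and keeps only the most recent few copies. The function name make_backup, the folder paths and the default of seven retained archives are all assumptions for this example; a production setup would normally also copy the archives to a separate machine or storage service.

```python
import tarfile
import time
from pathlib import Path


def make_backup(source_dir: str, backup_dir: str, keep: int = 7) -> Path:
    """Archive `source_dir` into `backup_dir` and prune old archives."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    src = Path(source_dir)
    dest = Path(backup_dir)
    dest.mkdir(parents=True, exist_ok=True)

    # A sortable timestamp means alphabetical order is chronological order.
    stamp = time.strftime("%Y%m%d-%H%M%S")
    archive = dest / f"{src.name}-{stamp}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(src, arcname=src.name)

    # Delete everything except the newest `keep` archives.
    archives = sorted(dest.glob(f"{src.name}-*.tar.gz"))
    for old in archives[:-keep]:
        old.unlink()
    return archive


# Hypothetical usage (placeholder paths), e.g. from a daily scheduled job:
# make_backup("/var/www/mysite", "/var/backups/mysite", keep=7)
```

Whatever tool you use, it is worth restoring a backup now and then to confirm that the archives are actually usable.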

Common Challenges and How to Overcome Them

Setting up and maintaining a Linux web hosting environment can present various challenges, especially for those new to the platform. One frequent issue is navigating the command line, which can be daunting for beginners. Engaging with online tutorials, forums, and communities like Stack Overflow can be invaluable for learning the basics and troubleshooting problems. Another common challenge is software incompatibility. Ensuring your web applications are compatible with the Linux distribution you choose is crucial; consulting documentation and support resources can help mitigate these issues.

Security configuration can also be a complex task. Implementing best practices such as setting up firewalls, regular updates, and using strong authentication methods requires a good understanding of Linux security principles. Managed hosting services can offer a solution here by handling these technical aspects for you, allowing you to focus on your website content.

Resource management is another area where users might struggle. Monitoring server performance and managing resources effectively ensures your website runs smoothly. Utilising monitoring tools and performance optimisation techniques can help you stay ahead of potential issues. Lastly, when it comes to server backups, regular, automated solutions are essential to prevent data loss and minimise downtime. Being proactive in addressing these challenges will ensure a more seamless and secure Linux web hosting experience.
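As a small illustration of resource monitoring, the Python sketch below takes a snapshot of disk usage and system load and flags values that look worrying. The function names, the 90% disk threshold and the rule of thumb comparing load with the number of CPU cores are assumptions chosen for this example, not fixed recommendations; dedicated monitoring tools offer far more detail.

```python
import os
import shutil
from typing import Dict, List


def resource_snapshot(path: str = "/") -> Dict[str, float]:
    """Collect basic disk and load figures (Linux/Unix only)."""
    usage = shutil.disk_usage(path)
    load_1min, _, _ = os.getloadavg()  # average load over the last minute
    disk_pct = usage.used / usage.total * 100 if usage.total else 0.0
    return {
        "disk_used_percent": round(disk_pct, 1),
        "load_1min": load_1min,
        "cpu_count": os.cpu_count() or 1,
    }


def find_warnings(snapshot: Dict[str, float], disk_limit: float = 90.0) -> List[str]:
    """Return human-readable warnings for a snapshot."""
    warnings = []
    if snapshot["disk_used_percent"] >= disk_limit:
        warnings.append("disk is almost full")
    if snapshot["load_1min"] > snapshot["cpu_count"]:
        warnings.append("load is higher than the number of CPU cores")
    return warnings


# Hypothetical usage:
# for message in find_warnings(resource_snapshot()):
#     print("WARNING:", message)
```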

Popular Control Panels for Linux Web Hosting

Control panels are invaluable for simplifying the management of your Linux web hosting environment. Among the most popular are cPanel, Plesk, and Webmin. cPanel is renowned for its intuitive interface and extensive feature set, making it a favourite among users who need a straightforward yet powerful management tool. Plesk offers robust functionality and supports both Linux and Windows servers, providing versatility for those who manage multiple server environments. Webmin stands out as a free, open-source option that allows comprehensive server management through a web interface, catering to those who prefer a customisable and cost-effective solution. Each control panel brings unique strengths, helping to streamline tasks such as file management, database administration, and security configurations.

Choosing the Right Linux Web Hosting Provider

Choosing the right Linux web hosting provider involves several key considerations. Firstly, evaluate the quality of customer support offered. Responsive and knowledgeable support can be invaluable, especially when troubleshooting technical issues or during the initial setup phase. Check if the provider offers 24/7 support and multiple contact methods such as live chat, email, and phone.

Another crucial factor is the security measures in place. Opt for providers that offer robust security features, including regular backups, SSL certificates, firewalls, and DDoS protection. These features help safeguard your website against potential threats and ensure data integrity.

Reliability and uptime guarantees are also vital. Aim for providers that offer at least a 99.9% uptime guarantee, as frequent downtimes can significantly affect your website’s accessibility and user experience. Additionally, look into the provider’s data centre infrastructure and redundancy measures, which can impact overall performance and reliability.
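To put an uptime percentage into perspective, it helps to convert it into permitted downtime. The small Python sketch below does that arithmetic; the function name is made up for this example. A 99.9% guarantee works out to roughly 43 minutes of downtime in a 30-day month, or about 8.8 hours over a 365-day year.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: float) -> float:
    """Minutes of downtime permitted by an uptime guarantee over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


# Example comparisons for a 30-day month:
# allowed_downtime_minutes(99.9, 30)   -> about 43 minutes
# allowed_downtime_minutes(99.99, 30)  -> about 4 minutes
```

Keep in mind that providers define and measure uptime differently, so the terms of the guarantee matter as much as the headline figure.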

Scalability is another important aspect to consider. As your website grows, you’ll need the flexibility to upgrade your hosting plan seamlessly. Check if the provider offers scalable solutions, such as easy transitions to VPS or dedicated hosting, without causing disruptions to your site.

Lastly, consider the hosting plans and pricing structures available. While cost-effectiveness is a significant benefit of Linux web hosting, ensure the plans align with your specific needs. Compare the features, storage, bandwidth, and other resources included in different plans to find the best value for your money.

Reading customer reviews and seeking recommendations can also provide insights into the provider’s reputation and service quality. By carefully evaluating these factors, you can choose a Linux web hosting provider that meets your requirements and supports your online endeavours effectively.

Conclusion and Final Thoughts

Linux web hosting stands out as an optimal choice for both beginners and seasoned web developers. Its open-source nature provides an affordable, highly customisable, and secure environment, suitable for a diverse range of websites, from personal blogs to large e-commerce platforms. The extensive community support ensures ongoing improvements and prompt resolution of issues, contributing to its reliability and performance. Choosing the right hosting provider is crucial; look for robust security measures, excellent customer support, and scalability to accommodate your website's growth. By leveraging the strengths of Linux web hosting, you can build a resilient and efficient online presence that meets your specific needs and goals.

Note

I must know about lesbian apocalypse robot tony stark

[from this meme]

This document is an original story that's settled into the title of Le Morte d'Artificial Intelligence! The document title comes from the title of this one comix recap copperbadge did, a title which promised much about a comic which delivered exactly none of it. I picked up the idea of an artificially-intelligent android (well, gynoid, technically, if you want to get personal) masquerading as an irreverent, deeply ADHD inventor and titan of industry and moonlighting as a mechanised superhero, and ran with it.

And then, while I was developing a superteam (and a villain) for her to have an It's Complicated with, I realised that I couldn't set a story with superheroes in the UK and not drag King Arthur into it. And then a couple of other ideas that I had floating around without homes (an artificial lifeform in the shape of a human woman falls in love with a human woman, who then turns out to be a changeling; an extraordinarily self-indulgent never-to-see-the-light-of-day f!OC MCU fic) dovetailed beautifully into a plot to go along with this premise.

Have a sample:

If Elin hadn’t already known what was waiting for her at Piccadilly Circus, it would’ve been immediately obvious as soon as she flew within shouting distance. Even if the unnaturally brackish quality to the air composition, the subsonic hum, and the flood of fleeing people – and, strangely, chickens – hadn’t clued her in, the tentacles were visible even before the heaving, buckling remains of the plaza drew into view.

“Dammit,” Elin muttered, pulling up to leave the street farther below her. She remembered too well from the fight with Morgan – huge and cumbersome as they looked, those beasties moved fast. And their reach was always just a little longer than she’d calculated.

In seconds, she was hovering over the plaza, assessing the situation. Definitely not stalling, whatever Goldfinger might say. The plaza looked like it had cleared out, and people in black tactical gear stood around the barricades that uniformed police had started setting up around the perimeter. Though, as Elin passed over, she noticed a little knot of people in street clothes still huddled behind a double-decker bus at the far end. She also noticed that there was only one of Morgan’s horrorterrors this time. Thankfully, it looked like a small one. Well, a relatively small one. As horrorterrors went.

The rip it had made in the world was relatively small, too, but growing wider as the creature’s assortment of mismatched limbs forced their way through. As she passed above it, Elin caught a glimpse of a knot of eyes and teeth, roiling and gnashing somewhere far below what the actual street could allow. Maybe the creature was bigger than it looked, then. Probably a good idea to get that rift closed up before any more of it got through.

Elin took a moment to wonder about that, as she scanned the radio frequencies for the agent and Arthur’s comms. They’d assumed the beasties had needed Morgan to open the hole that had ripped open in the London Eye, and with good reason. But she’d been under lock and key at Elin’s apartment the whole time this rift would have been opening –

Elin filed the thought into a subfolder for later consideration. She’d just caught a sliver of MI5 chatter.

“Rook. Arthur.”

It was a moment before the agent’s voice crackled back. “Motherboard. This is meant to be a secure channel.”

Everything in Elin’s database said that the emotion the agent was barely suppressing was relief. If the Motherboard had a face, Elin would’ve put a smile on it. “Then maybe you should give me access so I don’t have to keep breaking in. What’s your six?”

“Please stop trying to use military jargon,” the agent said, sounding still just a little too relieved to really be as annoyed as she was pretending to be. “You’re terrible at it. Arthur’s on the monster, he could use air support. I’m clearing these idiots -” Her voice dissolved into a muffled argument, before cutting off entirely.

Elin didn’t wait. She swooped low over the creature, at an angle she knew would make the Motherboard’s silver casing flash in the sun, scanning the many eyes below her to see if any of them fixed on her. At the last moment, when it looked like she was going to smash straight into a rising claw, Elin kicked in the Motherboard’s thrusters and shot straight upwards, spiraling between two reaching tentacles so that they wound around each other. One sharp shove, and they toppled over, smashing into the wall of screens that wrapped around one of the buildings encircling the plaza. In what looked like slow motion, every single light in the screen burst, with a cascading shower of sparks and a sound like fireworks.

The tentacles that had caused the damage had already vanished, disappearing into insubstantial soap-bubble shimmers and popping on impact. But, even as Elin watched the carnage of an exploding Coke ad, in the corner of her visual field, another tentacle began to reform. One moment, it was nothing but a patch of empty air delineated by the way the falling sparks bounced off and around it. The next, it was a horribly fleshy appendage covered in downright obscene-looking suckers, as thick around as Elin was tall and moving way too fast for anything that bulky.

And it was shooting, at top speed, straight for the double-decker bus. And – Elin zoomed in to confirm what she realised she already knew – and the little knot of people who were still trapped behind it. Including the agent.

Elin dove down through the air towards the tentacle, checking the charge on her laser cannon. She’d only get one shot at it before it reached its target –

Something slammed into her back, knocking her somersaulting through the air. Sensors screamed, her internal gyroscope frantically recalibrating and recalibrating, until she smacked, hard, into the side of a building.

Diagnostics flashed past – right foot thrusters operating at 67% capacity, outer shell not yet breached but integrity compromised, battery drain increased significantly. Oh, and she was upside down and halfway through a stone wall. Another hit or two like that one would put her out, easy, before she even had a chance to shoot.

She’d have to pay more attention to all of the creature’s limbs. Its…apparently endless assortment of limbs. That seemed, in defiance of all known laws of physics, to be able to appear from and disappear into thin air.

“Cake,” Elin muttered to herself, wrenching one leg free from the masonry the monster’s blow had half-embedded her in. “Total cakewalk.” She had to engage thrusters briefly to get the other leg free, and, for two ominous seconds, went shooting at top speed towards the pavement below. Headfirst. “Absolute piece of -” Elin executed a neat midair flip, and caught herself with her feet hovering barely an inch above the asphalt. “- cake.”

“Hungry, Motherboard?” Arthur’s warm, genial voice echoed over the commlink. If the Motherboard had had eyes, Elin would’ve rolled them.

“Only for victory, your royal highness.” She scanned the plaza, shaking out her right foot until the thrusters clicked up to 98% capacity. Still not perfect, but at least she wouldn’t be flying in circles. A glance told her that the double-decker bus had vanished, but Arthur and his gleaming sword had joined the people who’d been hiding behind it. Clearly he’d gotten to the limb Elin had been too busy getting her ass kicked to take care of. “Or – wait, I don’t remember. Is that the one you’re only supposed to call princesses?”

“I’ll let you both eat cake once we’ve closed this portal,” the agent’s voice cut in, sharply. “Need I remind you I’ve still got three civilians, and now no cover.”

“Gotcha,” Elin said, leaping back into the air. She ducked under an enormous scorpion stinger and wove around a whiplike limb with a ball of spikes on one end, spotting the bright red of the double-decker bus clutched in a tentacle high overhead. “Be as annoying as possible.”

“Motherboard -” the agent started, sounding exasperated, but Elin muted the comm. She wasn’t interested in a lecture. She had a distraction to provide.

And the Motherboard, flashy and dramatic as she was, provided such good distractions.

Text

Tech companies and privacy activists are claiming victory after an eleventh-hour concession by the British government in a long-running battle over end-to-end encryption.

The so-called “spy clause” in the UK’s Online Safety Bill, which experts argued would have made end-to-end encryption all but impossible in the country, will no longer be enforced after the government admitted the technology to securely scan encrypted messages for signs of child sexual abuse material, or CSAM, without compromising users’ privacy, doesn’t yet exist. Secure messaging services, including WhatsApp and Signal, had threatened to pull out of the UK if the bill was passed.

“It’s absolutely a victory,” says Meredith Whittaker, president of the Signal Foundation, which operates the Signal messaging service. Whittaker has been a staunch opponent of the bill, and has been meeting with activists and lobbying for the legislation to be changed. “It commits to not using broken tech or broken techniques to undermine end-to-end encryption.”

The UK government hadn’t specified the technology that platforms should use to identify CSAM being sent on encrypted services, but the most commonly cited solution was something called client-side scanning. On services that use end-to-end encryption, only the sender and recipient of a message can see its content; even the service provider can’t access the unencrypted data.

Client-side scanning would mean examining the content of the message before it was sent—that is, on the user’s device—and comparing it to a database of CSAM held on a server somewhere else. That, according to Alan Woodward, a visiting professor in cybersecurity at the University of Surrey, amounts to “government-sanctioned spyware scanning your images and possibly your [texts].”

In December, Apple shelved its plans to build client-side scanning technology for iCloud, later saying that it couldn’t make the system work without infringing on its users’ privacy.

Opponents of the bill say that putting backdoors into people’s devices to search for CSAM images would almost certainly pave the way for wider surveillance by governments. “You make mass surveillance become almost an inevitability by putting [these tools] in their hands,” Woodward says. “There will always be some ‘exceptional circumstances’ that [security forces] think of that warrants them searching for something else.”

The UK government denies that it has changed its stance. Minister for tech and the digital economy, Paul Scully MP, said in a statement: “Our position on this matter has not changed and it is wrong to suggest otherwise. Our stance on tackling child sexual abuse online remains firm, and we have always been clear that the Bill takes a measured, evidence-based approach to doing so.”

Under the bill, the regulator, Ofcom, will be able “to direct companies to either use, or make best efforts to develop or source, technology to identify and remove illegal child sexual abuse content—which we know can be developed,” Scully said.

Although the UK government has said that it now won’t force unproven technology on tech companies, and that it essentially won’t use the powers under the bill, the controversial clauses remain within the legislation, which is still likely to pass into law. “It’s not gone away, but it’s a step in the right direction,” Woodward says.

James Baker, campaign manager for the Open Rights Group, a nonprofit that has campaigned against the law’s passage, says that the continued existence of the powers within the law means encryption-breaking surveillance could still be introduced in the future. “It would be better if these powers were completely removed from the bill,” he adds.

But some are less positive about the apparent volte-face. “Nothing has changed,” says Matthew Hodgson, CEO of UK-based Element, which supplies end-to-end encrypted messaging to militaries and governments. “It’s only what’s actually written in the bill that matters. Scanning is fundamentally incompatible with end-to-end encrypted messaging apps. Scanning bypasses the encryption in order to scan, exposing your messages to attackers. So all ‘until it’s technically feasible’ means is opening the door to scanning in future rather than scanning today. It’s not a change, it’s kicking the can down the road.”

Whittaker acknowledges that “it’s not enough” that the law simply won’t be aggressively enforced. “But it’s major. We can recognize a win without claiming that this is the final victory,” she says.

The implications of the British government backing down, even partially, will reverberate far beyond the UK, Whittaker says. Security services around the world have been pushing for measures to weaken end-to-end encryption, and there is a similar battle going on in Europe over CSAM, where the European Union commissioner in charge of home affairs, Ylva Johansson, has been pushing similar, unproven technologies.

“It’s huge in terms of arresting the type of permissive international precedent that this would set,” Whittaker says. “The UK was the first jurisdiction to be pushing this kind of mass surveillance. It stops that momentum. And that’s huge for the world.”

Text

Did you know there are services that offer university-level modules for free?

I am one of those people that are obsessed with learning, but structured courses are expensive. Not these. Here are some links if you, too, are obsessed with the pursuit of knowledge and want to learn something (for FREE!)

EdX is my personal favourite. It offers loads of subjects that are usually taken from university courses, and the ones that I have done are pretty good. These can all be taken for free, or you can pay £40 to take the assignments and receive a graded certificate. Now, the catch here is that most of the courses are archived, which means that, occasionally, some of the links won't work and you have to be creative with the readings. I have found this to be a pretty minimal issue though, and it is worth it.

I recently took this EdX course about 19th Century literature and it was excellent. Some of the links were broken so I took some creative liberties with the readings, but I read all of the books discussed and used the course materials to critically analyse them as I did so. I didn't follow the course exactly, but it was still an enriching experience and something I wouldn't have thought to do otherwise.

Another good one is OpenLearn, which is a branch of the Open University. The OpenLearn courses are usually pretty good, although some can feel a bit lacklustre and basic. Don't expect super in-depth courses, but they're good for an introduction to a subject, or to top up your skills in something you haven't done in a while. These offer free certificates of participation, so they're great if, say, you want a career change but you haven't studied data science since college. I enjoyed the classes I took, but I wouldn't say they were as challenging as EdX.

I recently took this OpenLearn course about Hadrian's Rome. Classical history is something I'm interested in and this was a great way to guide my study of an aspect of it.

Coursera is a very popular one. Similar to OpenLearn, these courses can sometimes be a little surface-level. These also have a much larger focus on building transferable skills than developing cultural knowledge and learning for your own enrichment. Even so, there are some wonderful gems on there if you're willing to scroll through and find them. Coursera is also great because it has project-centred courses, where you work towards a finished product under the (virtual, recorded) guidance of an expert. Think of it like a corporate skillshare.

Last summer I took this Coursera course about screenwriting. It was project-based, and I came out of it with a fully realised first draft of a pilot episode of a TV show. I realised through this process that maybe screenwriting wasn't for me, but it allowed me to get out of my comfort zone with writing and explore an avenue that I would probably always have wondered about.

OpenCulture is a good database of free courses that then redirects you to other websites, but you have to be willing to sift through the sludge with this one, as some are more worth your time than others.

Another good way to find free courses is to search for them on university websites. I have found that most US universities (and loads of UK ones too, though it is less common) offer free online courses. You have to be careful here, because sometimes they will say they are free but actually the 'free' part is viewing the syllabus.

You might be thinking 'what is the point of a course that doesn't get me a qualification?' and I'd say 'I get you, because I felt the same way,' but if you're anything like me and love learning, they're a godsend. Of course, there is nothing stopping you from finding all of the course content online, and I'd actually encourage you to do that alongside the courses, but knowing where to start is the difficult part. There is so much information to learn about any given topic that it can be overwhelming. These courses provide you with a structure that you can then use within your own wider research to learn about subjects you maybe don't want to commit to in a formal setting.

I'm not paid by any of these websites. I just think the monetisation of knowledge is wrong and awful and disgusting, and any way to beat that should be celebrated and shared and used as widely as possible.

Happy learning! :)

#learning#higher education#career#education#skills#knowledge#wisdom#understanding#writing#write#read#reading#books#humanities#science#history#culture#archaeology#literature#maths#study#studyblr#study motivation#study life#student#student life#studyspo#study blog

Text

Also preserved on our archive

NIH-funded study focused on original virus strain, unvaccinated participants during pandemic.