#deepfake explained

Text

youtube

This video is all about the dangers of deepfake technology. In short, deepfake technology is a type of AI that is able to generate realistic, fake images of people. This technology has the potential to be used for a wide variety of nefarious purposes, from porn to political manipulation.

Deepfake technology has emerged as a significant concern in the digital age, raising alarm about its potential dangers and the need for effective detection methods. Deepfakes refer to manipulated or synthesized media content, such as images, videos, or audio recordings, that convincingly replicate real people saying or doing things they never did. While deepfakes can have legitimate applications in entertainment and creative fields, their malicious use poses serious threats to individuals, organizations, and society as a whole.

Not everyone is aware of the dangers of deepfakes, and that lack of awareness is itself a threat. There is no guarantee that what you see online is real, and deepfakes have narrowed the gap between fake and real content. Although the technology can be used for innovative entertainment projects, cybercriminals are also heavily misusing it. And if law enforcement does not monitor it properly, things will likely get out of hand quickly.

Deepfakes can be used to spread false information, which can have severe consequences for public opinion, political discourse, and trust in institutions. A realistic deepfake video of a public figure could be used to disseminate fabricated statements or actions, leading to confusion and the potential for societal unrest.

Cybercriminals can exploit deepfake technology for financial gain. By impersonating someone's voice or face, scammers could trick individuals into divulging sensitive information or making fraudulent transactions, or manipulate them into thinking they are communicating with a trusted source.

Deepfakes have the potential to disrupt democratic processes by distorting the truth during elections or important political events. Fake videos of candidates making controversial statements could sway public opinion or incite conflict.

The Dangers of Deepfake Technology and How to Spot Them

#the dangers of deepfake technology and how to spot them#the dangers of deepfake technology#deepfake technology#deepfake#artificial intelligence#deep fake#LimitLess Tech 888#how are deepfakes dangerous#the dangers of deepfakes#how to spot a deepfake#deepfakes#deepfake explained#what is a deepfake#dangers of deepfake#deepfake video#effects of deepfakes#how deepfakes work#deepfakes explained 2023#deepfake dangers#deepfake ai technology#Youtube

0 notes

Text

article from the english version of the Hankyoreh, September 3 2024

archive link

plain text:

Amid the recent proliferation of illegal deepfake pornography on the chat app Telegram, South Korean police have announced that they have begun a preliminary investigation of Telegram for aiding and abetting sex crimes. This is the first time Korean police have moved to investigate an overseas platform that has refused to cooperate in an investigation.

During a press conference on Monday, Woo Jong-soo, the chief of the police National Office of Investigation, announced, “Due to recent events, the Seoul Metropolitan Police Agency has begun a preliminary investigation of Telegram, looking at charges of aiding and abetting [in sex crimes].”

Noting that Telegram’s founder was arrested in France, Woo said Korean police would “inquire into ways to request cooperative assistance from French and international authorities in our investigation of Telegram.”

Telegram founder and CEO Pavel Durov was arrested at Le Bourget Airport outside Paris on Aug. 24 by French authorities. The charges against Durov are related to neglect in regard to the distribution of child sexual abuse material and prostitution that has occurred on Telegram channels.

Korean police are also looking to charge people who have made and programmed deepfake bots. The Seoul Metropolitan Police Agency’s cybercrime division is currently conducting preliminary investigations of eight Telegram rooms that produced illegal deepfakes.

In the case of a chat room that produced deepfakes of female soldiers, the room disappeared immediately after media reports of its activity, leaving police investigators without any clues.

“The Telegram chat that produced and distributed deepfakes of female soldiers disappeared on the same day its activities were reported by the press. We will do our best to recover any clues available,” Woo said.

Media reports on such Telegram chat rooms also led to a sudden explosion of victim reports throughout the country. Authorities received 88 reports of deepfake crimes from Aug. 26 to 29. So far, 24 suspects have been identified. Considering that 297 deepfake crimes were reported from January to July of this year, this was a sharp increase.

“Similar to the #MeToo movement, victims are realizing that they have suffered punishable crimes. In the past, people would have just assumed there’s nothing they can do, but now they’re coming forward and reporting it,” Woo added.

“A considerable amount of reports include the identity of the perpetrator.”

To boost efficiency, police are also emphasizing the importance of expanding the scope of undercover operations. Current regulations permit undercover investigations only when it comes to digital sex crimes that target minors, and undercover officers who wish to conceal their identity need prior approval from their department chief.

“Recently, digital sex crimes have expanded to not only target teenagers and children but also adults, yet current regulations bar undercover operations when adult victims are involved. Expediency is key in ongoing investigations, but many processes need prior approval from superiors,” Woo said.

“We need to expand the scope of undercover operations so we can investigate criminals targeting adults. We will also push for policies that allow undercover officers to conceal their personal identities when needed and attain the necessary bureaucratic approval after the fact.”

By Lee Ji-hye, staff reporter

#doesn’t say too much new info/explain a lot I’m just posting for posterity before getting in to it more when I have free time later#south korea#Deepfake crimes#telegram

24 notes

Text

There's a marketplace for deepfakes in online forums. People post requests for videos to be made of their wives, neighbours and co-workers and - unfathomable as it might seem - even their mothers, daughters and cousins.

Content creators respond with step-by-step instructions - what source material they'll need, advice on which filming angles work best, and price tags for the work.

A deepfake content creator based in south-east England, Gorkem, spoke to the BBC anonymously. He began creating celebrity deepfakes for his own gratification - he says they allow people to "realise their fantasies in ways that really wasn't [sic] possible before."

Later, Gorkem moved on to deepfaking women he was attracted to, including colleagues at his day job who he barely knew.

"One was married, the other in a relationship," he says. "Walking into work after having deepfaked these women - it did feel odd, but I just controlled my nerves. I can act like nothing's wrong - no-one would suspect."

Realising he could make money from what he refers to as his "hobby", Gorkem started taking commissions for custom deepfakes. Gathering footage from women's social media profiles provides him with plenty of source material. He says he even recently deepfaked a woman using a Zoom call recording.

"With a good amount of video, looking straight at the camera, that's good data for me. Then the algorithm can just extrapolate from that and make a good reconstruction of the face on the destination video."

He accepts "some women" could be psychologically harmed by being deepfaked, but seems indifferent about the potential impact of the way he is objectifying them.

"They can just say, 'It's not me - this has been faked.' They should just recognise that and get on with their day.

"From a moral standpoint I don't think there's anything that would stop me," he says. "If I'm going to make money from a commission I would do it, it's a no brainer ... [but] if I could be traced online I would stop there and probably find another hobby."

#the entire article is worth reading - they interview a victim and the creator of deepfakes too#but it's nice to see these scumbags explain what they're doing in their own words#ladies be really careful about posting pictures of yourselves online#radblr

18 notes

Text

i need to know

does someone know how they did the animation sequence for the game??

because it looks really... expensive? or well done but also the faces glitch like an ai thing or deepfake

and also there's a real person suddenly that doesn't interact with the previous characters

i just need to know why, whats the behind the scenes reason

#my dear gangster oppa#something tells me they used some animation already made and used deepfake to change the faces#because i can't explain the way it looks that way

3 notes

Text

listen I didn’t even think the Mandalorian season premiere was even all that bad but like it’s time to wrap it up on Star Wars. Let’s close shop. I think we’ve done everything we can here.

#mfw my race of hyper-adept space warriors armed with long-range weapons cannot stay away from a big alligator’s mouth and tail#also what was up with the space pirates who wanted to drink beer??? in a school???? and like that was their whole schtick??????#the Mandalorian#star wars#the sets for Navarro and Mon Cala looked really good at least#the alien prosthetics also finally looked nice!#unbelievable choice not explaining to the audience why Baby Yogurt is back instead of hanging out with deepfake Luke

5 notes

Text

There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an ai image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from Star Trek, or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most of the technology people call AI.)

Language model: (LM or LLM) is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion models: Models that learn the probability distribution of a given dataset. In image generation, a neural network is trained to remove Gaussian noise that has been added to images. After the training is complete, it can then be used for image generation by starting with an image of random noise and denoising it. (This is the more common technology behind AI images, including Dall-E and Stable Diffusion. I added this one to the post afterwards, as it was brought to my attention that it is now more common than GANs.)
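To make the language model definition above a bit more concrete, here is a minimal sketch in Python of a toy "bigram" model: it counts which word follows which in a tiny made-up corpus and turns those counts into probabilities. This is only an illustration of "a probabilistic model that generates probabilities of a series of words"; it is not how ChatGPT or any real LLM is built, and the function names and the three-sentence corpus are assumptions invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count how often each word follows another across a list of sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> are hypothetical start/end markers for this sketch.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn raw counts into probabilities for the word that follows `prev`."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}
    return {word: n / total for word, n in counts[prev].items()}


def sequence_prob(counts, sentence):
    """Probability of a whole sentence: the product of its word-to-word steps."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(words, words[1:]):
        prob *= next_word_probs(counts, prev).get(nxt, 0.0)
    return prob


# Made-up training text, purely for illustration.
toy_corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram_model(toy_corpus)

print(next_word_probs(model, "the"))
print(sequence_prob(model, "the cat sat"))
print(sequence_prob(model, "the dog ran"))
```

The point for a non-technical audience: the model has no idea what a cat is. It only knows that in its training text "cat" came after "the" more often than "dog" did, and it gives zero probability to word pairs it never saw.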

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.

12K notes

Quote

[Keanu] Reeves said a recent conversation about “The Matrix” with a 15-year-old put things into a terrifying perspective. The actor explained to the teenager that his character, Neo, is fighting for what’s real. The teenager scoffed and said, “Who cares if it’s real?”

“People are growing up with these tools: We’re listening to music already that’s made by AI in the style of Nirvana, there’s NFT digital art,” Reeves said. “It’s cool, like, Look what the cute machines can make! But there’s a corporatocracy behind it that’s looking to control those things. Culturally, socially, we’re gonna be confronted by the value of real, or the non-value. And then what’s going to be pushed on us? What’s going to be presented to us?”

“It’s this sensorium. It’s spectacle. And it’s a system of control and manipulation,” Reeves continued. “We’re on our knees looking at cave walls and seeing the projections, and we’re not having the chance to look behind us.”

Keanu Reeves Slams Deepfakes, Film Contract Prevents Digital Edits - Variety

37K notes

Note

In your post about Taeil you mentioned “the telegram situation, and korean women constantly being in danger” I was hoping you could explain what you meant? Thank you and also for speaking out for the the victim. It’s a horrible situation.

hello, i will do my best to explain!

so currently there is a movement happening in sk, with women going on strike, protesting and coming forward about being exploited, blackmailed and assaulted by men. this is a very common, everyday occurrence, and a lot of people tie it back to the declining birth rate in korea and to why women are refusing to procreate with korean men. one of the most heinous things is that there is an app called telegram, where basically you can create chat rooms, and in one of these chat rooms there are thousands of men degrading women, talking about vile and disgusting things regarding women, and sending deepfakes (hyper realistic pictures/videos with real life people’s features/voices) of p*rn with different women’s/children’s faces in them, including minors. this has caused immense turmoil in these women’s lives, and unfortunately in sk, not much is done when it comes to holding the men responsible accountable. these issues get overlooked, and many women are afraid to come forward, as they are usually threatened, blackmailed or ignored and disregarded by the police when they report these crimes. the men doing this are unfortunately also sometimes in positions of power and are part of these groups targeting women, and many try to silence their victims.

recently, it came to light that the actor ahn bo hyun, who formerly dated blackpink’s jisoo, was caught subscribing to a korean youtube channel, where the creator is a raging misogynist and anti-feminist, and that reflects in the content he posts. this same person, because of his actions, caused a young woman and her mother to both end their lives, as his content and accusations were affecting them personally. they felt as if they had no choice but to unalive themselves. there was an instance with a woman being beat almost to death by a man, and a ten year old who demanded n*des from a 6 year old. imagine that.

to provide more context, here are some links from twitter i’ve added if you want to read more about it. you can click the pictures! hope this helps!

157 notes

Text

The creation of sexually explicit "deepfake" images is to be made a criminal offence in England and Wales under a new law, the government says.

Under the legislation, anyone making explicit images of an adult without their consent will face a criminal record and unlimited fine.

It will apply regardless of whether the creator of an image intended to share it, the Ministry of Justice (MoJ) said.

And if the image is then shared more widely, they could face jail.

A deepfake is an image or video that has been digitally altered with the help of Artificial Intelligence (AI) to replace the face of one person with the face of another.

Recent years have seen the growing use of the technology to add the faces of celebrities or public figures - most often women - into pornographic films.

Channel 4 News presenter Cathy Newman, who discovered her own image used as part of a deepfake video, told BBC Radio 4's Today programme it was "incredibly invasive".

Ms Newman found she was a victim as part of a Channel 4 investigation into deepfakes.

"It was violating... it was kind of me and not me," she said, explaining the video displayed her face but not her hair.

Ms Newman said finding perpetrators is hard, adding: "This is a worldwide problem, so we can legislate in this jurisdiction, it might have no impact on whoever created my video or the millions of other videos that are out there."

She said the person who created the video is yet to be found.

Under the Online Safety Act, which was passed last year, the sharing of deepfakes was made illegal.

The new law will make it an offence for someone to create a sexually explicit deepfake - even if they have no intention to share it but "purely want to cause alarm, humiliation, or distress to the victim", the MoJ said.

Clare McGlynn, a law professor at Durham University who specialises in legal regulation of pornography and online abuse, told the Today programme the legislation has some limitations.

She said it "will only criminalise where you can prove a person created the image with the intention to cause distress", and this could create loopholes in the law.

It will apply to images of adults, because the law already covers this behaviour where the image is of a child, the MoJ said.

It will be introduced as an amendment to the Criminal Justice Bill, which is currently making its way through Parliament.

Minister for Victims and Safeguarding Laura Farris said the new law would send a "crystal clear message that making this material is immoral, often misogynistic, and a crime".

"The creation of deepfake sexual images is despicable and completely unacceptable irrespective of whether the image is shared," she said.

"It is another example of ways in which certain people seek to degrade and dehumanise others - especially women.

"And it has the capacity to cause catastrophic consequences if the material is shared more widely. This Government will not tolerate it."

Cally Jane Beech, a former Love Island contestant who earlier this year was the victim of deepfake images, said the law was a "huge step in further strengthening of the laws around deepfakes to better protect women".

"What I endured went beyond embarrassment or inconvenience," she said.

"Too many women continue to have their privacy, dignity, and identity compromised by malicious individuals in this way and it has to stop. People who do this need to be held accountable."

Shadow home secretary Yvette Cooper described the creation of the images as a "gross violation" of a person's autonomy and privacy and said it "must not be tolerated".

"Technology is increasingly being manipulated to manufacture misogynistic content and is emboldening perpetrators of Violence Against Women and Girls," she said.

"That's why it is vital for the government to get ahead of these fast-changing threats and not to be outpaced by them.

"It's essential that the police and prosecutors are equipped with the training and tools required to rigorously enforce these laws in order to stop perpetrators from acting with impunity."

286 notes

Text

for all its (apparently many?) flaws, i really enjoyed the fallout show, and i'm ride or die for maximus, obviously. but one of the things i enjoyed about lucy's arc is that she wasn't necessarily proved RIGHT or WRONG about her own moral code. she didn't learn that either kindness is its own reward or that niceness is suicidal in a fight for survival.

what she learned, i am pretty sure, is that context matters. you can't actually help people if you don't know anything about them. you can't enact justice if you don't know what the case on trial is. you can't come in out of nowhere and make snap decisions and be anything more than one more complication in a situation that was fucked up long before you were born.

that's what we see over and over: she comes in out of nowhere, she makes an attempt to help based on her immediate assumption of what's going on, and then everything continues to be dangerous and complicated and fucked up. she doesn't let the stoners explain that some ghouls will genuinely try to eat you the minute they get the chance, and she pays for it. she jumps to the wrong conclusion in vault 4 because not everyone who looks like a monster IS a monster, and she pays for it. yeah a lot of the time cooper is abusing her for his own satisfaction, but when she's a free agent she's a loose cannon and it's not because the show is punishing her for TRYING to do the right thing. it's because the show is punishing her for jumping to conclusions.

this show gets a lot of laughs from Fish Out Of Water situations, but i think that even though cooper explicitly says "you'll change up here and not for the better, you'll become corrupted and selfish just to survive" that's not the real message. what lucy learns is how important it is to hear people out, meet them where they're at, and get the full story.

that's why the final confrontation with her father is so important. she hears everyone out. she gets the full story. she listens to all of it. and then she acts with full knowledge of the situation. that's what the wasteland taught her: not to be cruel, not to be selfish, but that taking the time to understand what's actually going on really matters.

this is a show that's incredibly concerned with truth and lies. everyone is lying to each other and themselves. scenes change over and over as they're recontextualized. love and hate and grief and hope are just motives in a million interconnected shell games, not redeeming justifications. maximus's many compounded falsehoods are approved of by his own superior, who finds a corrupt pawn more useful than an honorable one. cooper finds out his wife has her own private agenda and this betrayal keeps him going for centuries. lucy's entire society is artificial and from the moment they find out they're not safe and maybe never have been, all the vault dwellers are scrambling to deal with that.

ANYWAY. i just think it's neat. sci fi is a lens to analyze our present through a hypothetical future, and i think it's pretty significant for this current age we live in, where we're all grappling with misinformation, conspiracy theories, propaganda, and deepfakes, there's a huge anxiety over how hard it can be to find the truth out about anything. i think the show suggests that it's always worth the work to try.

#fallout#ive seen critiques that it's fascist and reactionary and i can't speak to that#since i don't play the games#but for what i saw i liked it and thought it made some good points#spoilers

183 notes

Text

IdolMinji x idolreader hcs

A/n: I swear I just need to fill the time I'm writing bullyminji!! hope you like it!

Warnings: hate, body Shame and the rest is all cute!

You met by being placed as MCs together

She accidentally created her own ship when she said "I want to say something but I'm super shy" to you in front of the cameras

She does challenges to her songs with you whenever a new comeback arrives

You and she started dating and had no experience with it

Made you sing omg in their anchor

At awards shows she takes your hand and stays alone with you while leaving her members , and your members together

asked danielle for help on how to throw a picnic for the two of you

She arranged to meet you at a restaurant and the restaurant was filled with paparazzis

when dispatch posted a photo of you two holding hands that was the end for you

New jeans boy stans gave you massive hate, so when you went live the comments were from users "Newjeanscuties753" or "Minjiunnie11"

started to compare your body with other members

you open a live with one of your members, when you position the camera the numbers are growing every second, you come across comments saying that you missed them and the other member laughs and murmurs "wow.. Have we spent so long without opening a live?" she says jokingly as she focuses her gaze on the camera and fixes her bangs, the more people come in, the more people talk about you, so the comments are divided between "stop harassing Reader!" and "Leave our minji alone!" and then the atmosphere gets heavy and your member looks at you until they see that you don't say anything else "well, do you want to know about what we are planning to do?" trying to get excited, you just nod and mumble as you pull out your phone "let's make a cake for unnie's birthday!!" the member starts to clap and you just smile as you read the comments that start to fill up more, the member narrows her eyes and bite her lower lip gently, You can feel a knot forming when the comments get heavy and you pick up your phone and put it in front of the member who gently opens her eyes and looks at the camera in disguise, then she smiles and turns off the live stream.

Minji comforted you more than anything, when they decided to collaborate on MAMA, they made you dance hype boy on purpose

backstage she had to ask hyein to pretend to cry so she could talk to you

when she saw how nervous you were she hugged you for a few minutes until she heard danielle calling her

She is very protective, she takes care of new jeans then she is protective without knowing it, but this is all because she loves you

On your birthday she called you to her dorm and all your members and the new jeans were there

won't reveal your relationship for fear of people talking about your body

sued a man who created an account posting deepfake of you

Haerin loves it when you go to see Minji because she makes a lot of food to impress you

She's secretly a loser and when you play card games she takes pity on you and lets you win, not even bothering to see Hyein and Hanni's grumbles.

loves to kiss your cheek

when they put your group and hers up for interview and the interviewer asked you "what's it like to be the least popular in your group, and only be famous because of a rumor?" the atmosphere became tense, she could feel Haerin next to her stopping looking at the camera and looking sideways at you as she saw you blush and play with your fingers as you stumbled over your words "i..hm... I admire my fans so much be-" and then as if a god had appeared, your leader interrupts you "well... I think she's the second most famous in our group... and has this rumor already been explained?.." your leader gives an exaggeratedly fake smile and minji could feel her members sighing and their shoulders relaxing when their leader defended you

She arranged to travel to Paris with you, but when you arrived an hour after her at the Europort, the paparazzi were already there, and it was on all the gossip sites.

loves buying furry coats for you

Sometimes she calls you "bro" and at the same moment, without you questioning, she apologizes and kisses your cheek

gave you a Chanel kit as a gift

When you're passing by a store and see a cute outfit, she buys it for you

makes you sit on her lap when it's too cold to put your arms around her waist

Sometimes she has a fit and starts mumbling things in Korean

she bought you several gifts, but she made you wear a set that she almost knelt down on, and when you wore it she was in love again (this.

wrote one of the parts of ditto thinking of you

fans captured your group's album with your signature on her shelf

hyein sometimes calls you "unnie girlfriend" without meaning to and minji feels like putting her head in the ground

bought minecraft for you to play and made mods so her bed has your face

#kim minji imagines#newjeans minji#kim minji x reader#kim minji#kim minji smut#minji x reader#new jeans layouts#new jeans headers#newjeans imagines#new jeans imagine#new jeans imagines#newjeans x reader#newjeans scenarios#new jeans x reader#new jeans#nwjns#⋆。 Headcanons. ᯓᝰ.ᐟ

183 notes

Text

For everyone recommending Busuu as an alternative for Duolingo: stop.

It uses AI voices (as in literally the "sigma chad guy" voice used on TikTok).

Plus, today I received a deepfake video in a listening exercise (speakers' facial expressions didn't match their tone or what they were saying, those unnatural circular head movements that most deepfake videos do, very periodic blinking and arm movement, eye level never changes).

Additionally, I'm pretty sure that the German course to learn Dutch is just a translation of the English version, because the German instructions were often weird/unnecessary/explaining stuff you wouldn't have to explain to a German speaker but to an English speaker.

Also on some translations they straight-up forgot to change the English translation to a German one.

At this point it just feels like we have to abandon the concept of language learning apps completely because they're all starting to use AI (and AI does *not* understand language).

tldr: Busuu is just as bad as Duolingo because it uses AI translations, voices, and videos

154 notes

Text

archive link - a victim of the Seoul National University men’s “deepfake porn” chatrooms posted about here previously shares her statement to the court. this article is originally in english, coming from the Hankyoreh english version news site

plain text:

An SNU graduate victimized by malicious, sexually explicit deepfakes of her made by classmates appeals to the court to hold those who tormented her and women like her accountable for the pain they have inflicted

In a Telegram channel with around 1,300 members, there are individual chat rooms for 70 colleges and universities across the country. The bolded text shows the names of universities. (captures from Telegram)

“We’re not whores or sluts; we don’t exist to satisfy somebody’s sexual urges. We’re dignified human beings, each with our own careers and dreams.”

That’s part of the statement that Ruma (a pseudonym) intends to submit to a Korean court. Ruma is one of several graduates of Seoul National University whose faces appeared on sexually explicit deepfakes that were produced and distributed by men they had studied with at university.

In July 2021, Ruma was sent pornographic deepfakes displaying her face by an anonymous individual on Telegram. In May 2024, three years after Ruma first learned about the crime, two perpetrators, both graduates of her university, were arrested. The perpetrators are currently on trial, charged with violating the Act on Special Cases Concerning the Punishment of Sexual Crimes.

Ruma first reported the crime to the police in July 2021, but for nearly two years, four separate police stations that looked into the case were unable to catch the perpetrators.

Nevertheless, Ruma didn’t give up. Instead, she teamed up with other victims to track down the criminals. “I was just hoping that nobody else would have to deal with this kind of pain,” she explained.

There have been several reports about the illegal distribution of pornographic deepfakes in certain university communities. The typical pattern of behavior goes like this: Pornographic deepfakes are created using photographs of “friends” or “acquaintances” at school or in the community, distributed on Telegram and shared with the victims as a form of bullying.

These victims are not to blame for what are obviously sex crimes. Nevertheless, they often feel unable to talk about the grievous harm they have suffered from people they know from their schools, jobs and local communities.

There seems to be a lack of awareness in Korean society about the pain suffered by individuals whose photographs have been manipulated to create these sexually explicit images. That is why we are sharing with our readers the statement that Ruma will be submitting to the Seoul Central District Court.

Ruma’s statement to the court

Your Honors,

First of all, I would like to express my sincere gratitude for allowing me to share my story as a victim before the court. While preparing my statement, I reflected on the three years and one month that have passed from the time I was first harmed until the present.

That was when dozens of pornographic images digitally altered to include my face and videos of men masturbating to them were dropped in my lap by an anonymous account, when I saw multiple perpetrators insulting and mocking me in a chatroom where my photographs and personal information had been shared, and when, not long after that, I came to realize that all this had been perpetrated by people I’d studied with at university.

It turned out that while I’d been working on my doctorate overseas with the hope of shedding light on the lives and language of the underprivileged and helping to improve our schools and other institutions, my own university acquaintances had been calling me a “cum bucket,” “whore” and “slave” behind my back. Confronted with that fact, the world I thought I’d known came crashing down around me.

It was a nightmare having to face people whenever I woke up in the morning. For the first time since I was born, I found myself thinking I didn’t want to live in this world any longer. There’s a single reason I have nevertheless persevered in tracking down these criminals and bringing them to justice: Nobody should have to suffer as I have. Nobody should be objectified simply for being a woman. And nobody should be treated as a tool for soothing the inferiority complex of people such as the defendants in this case.

We’re not whores or sluts; we don’t exist to satisfy somebody’s sexual urges. We’re dignified human beings, each with our own careers and dreams. We must no longer remain silent when people, having forgotten those facts, eagerly commit wicked crimes that they attempt to justify by being online, in the arrogant assumption they will not be caught, and with contempt for the judicial system. We must not condone such people because they undermine trust in our society and devastate the lives of their victims.

Your Honors, if I may speak as the individual who has suffered more than anyone else because of this incident, undoing that harm could take years — indeed, it may take the rest of my life. My personal information and photographs, along with the deepfakes based on them, have already been distributed to any number of random people, and I’ve been suffering from post-traumatic stress disorder for more than three years now. In addition, I’ll have to spend the rest of my life in fear and anxiety that numerous people who were involved in the crime but have not been apprehended may still be out there somewhere, still making use of the deepfakes of me.

For those reasons, it is urgent that these two defendants serve a prison sentence that fits their crime and that measures be taken to ensure that even after their release, they will live responsibly without harming other people. That’s the only way I will be able to regain faith in society and recover the strength to go on living. Your Honors, the judgment you render will be the first critical step in that process of recovery.

In consideration of the immense harm this incident has caused me and the dozens of other victims, the many people in our circles of friends and family members, and beyond that, our society as a whole, I earnestly petition you to give the defendants the most severe punishment available, without any clemency.

By Park Hyun-jung, staff reporter

#south korea#misogyny#seoul national university#deepfake crimes#deepfake ai#korean feminism#telegram#디지털 성범죄

79 notes

Note

AITA for not being entirely negative about AI?

05/16/2024

Just before anyone scrolls down just to vote YTA, please hear me out: I'm not an AI bro, I am a hobbyist artist, I do not use generative AI, I know that it's all mostly based off stolen work and that's obviously Bad.

That being said, I am also an IT major, so I understand the technology behind it as well as the industry using it. Because of this, I understand that at this point it is very, very unlikely that AI art will ever go away. I feel like the best deal actual artists can get out of it is a compromise on what is and isn't allowed to be used for machine learning. I would love to be proven wrong though, and I'm still hoping the lawsuits against OpenAI and others will set a precedent for favouring artists over the technology.

Now, to the meat of this ask: I was talking in a Discord server with my other artist friends, some of whom are actually professionals (all around the same age as me), and the topic of discussion was just how much AI art sucks, mostly concerning the fact that another artist we like (but don't know personally) had their works stolen and used in AI. The conversation then developed into talking about how hard it is to get a job in the industry where we live and how AI is now going to make that even worse. That's when I said something along the lines of:

"In an ideal world, artists would get paid for all the works of theirs that are in AI learning databases so they can have easy passive income and not have to worry about getting jobs at shitty companies that wouldn't appreciate them anyway."

To me that seemed like a pretty sensible take. I mean, if I could just get free money every month for (consensually) putting a few dozen of my pieces in some database one time, I honestly would probably leave IT and just focus on art full time, since that's always been my passion, whereas programming is more of a "I'm good at it but not that excited about doing it, but it pays well so whatever".

My friends, on the other hand, did not share the sentiment, saying that in an ideal world AI art would be outlawed and the companies hiring them would not be shitty. I did agree about the companies being less shitty, but disagreed about AI being outlawed. I said that the major issue with AI is the copyright concerns, so if tech companies were just forced to get artists' full permission to use their work first, as well as to provide monetary compensation, there really wouldn't be anything wrong with using the technology (when concerning stylized AI art, not deepfakes or realistic AI images, as those have a completely different slew of moral issues).

This really pissed a few of them off and they accused me of defending AI art. I had to explain to them that I wasn't defending AI art as it was NOW, because I know that the way it works NOW is very harmful, I was just saying that as an IDEAL scenario, not even something I think is particularly realistic, but something I think would be cool if it were actually possible. The rest of the argument was honestly just spinning in circles with me trying to explain the same points and them being outraged at the fact that I'm not 100% wholeheartedly bashing even the mere concept of AI until I just got frustrated and left the conversation.

It's been about a week and I haven't spoken to the friends I had that argument with since then. I still interact on the server and I see them interacting there too but we just kinda avoid each other. It's making me rethink the whole situation and wonder if I really was in the wrong for saying that and if I should just apologize.

134 notes

Text

PITO, artist of the BL manhwa "Leave the Work to Me!", and author and artist of the GL manhwas "My Joy" and "Her Pet", all licensed by Lezhin, just admitted to having used revenge porn of a Korean girl as art references for his manhwa. Read his statement on Naver here (archived in case he deletes it).

As Korean activist sikpang on Twitter has spoken about, PITO used a "porn video" as a reference (and meme) for his manhwa. However, that video was actually a well-known illegal recording ("molka"). The following comment was written by the real victim of the illegal recording, saying it had ruined her life. Eventually, she committed suicide, but even then, Korean men continued to mock her by referring to the leaked video as her "last work".

PITO has stated that the webtoon platform instructed him to practice drawing for an adult webtoon, and that his contract was abusive. But naturally, the company never instructed him to use this kind of material as artistic reference. Read a detailed thread by Korean feminist activist cat here.

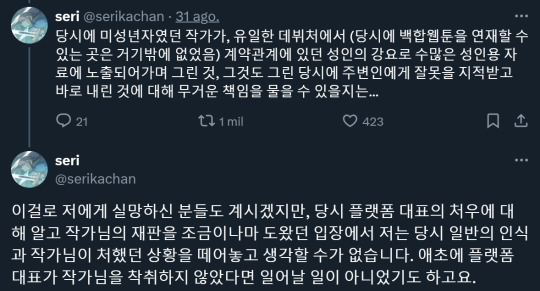

Seri, author and artist of the GL manhwa (considered by many a feminist work) "Her Tale of Shim Chong" (Tappytoon), and adapter of the manhwa "My S-Class Hunters" (Webtoon), advocated for PITO in a now partially deleted Twitter thread, saying that 10 years ago illegal recordings ("molka") were not a crime. This is patently false, as Korean users have pointed out. The movement against online sexual harassment in Korea goes back as far as 1997, and at the time legislation against sexual crimes was already in place.

What seems to be worse is that anti-sex crimes activists have (allegedly) been blocked by multiple manhwa authors.

The situation around women's rights in Korea is dire. Recently, many women, including high school students, have reported that they have been victims of deepfakes (AI-generated pornography using their faces). These crimes seem to mostly take place on Telegram channels, and estimated numbers indicate that there are at least 220,000 offenders. Here is a good post explaining current events.

Previous related incidents that you might have heard of are the Nth Room case and The Burning Sun scandal.

I want to make it clear that I'm not calling for a boycott of any of the authors involved. What you do with your time and money is your personal decision. I just wanted to bring people's attention to this issue on Tumblr, since there are barely any posts about it. But in my case, I'll never be able to read these works again. It especially hurts in the case of "Her Tale of Shim Chong", as it was always regarded as the best historical yuri manhwa, and many readers found it to be a powerful feminist story. Personally, I won't be able to recommend it ever again.

#PITO#Leave the Work to Me!#seri#her tale of shim chong#her shimchong#her shim chong#her shimcheong#manhwa#gl manhwa#bl manhwa#My S-Class Hunters#my s class hunters#the s class that i raised#s classes that i raised#Korea#feminism

46 notes
