#deepfakes explained 2023

This video is all about the dangers of deepfake technology. In short, deepfake technology is a type of AI that can generate realistic fake images of people. It has the potential to be used for a wide variety of nefarious purposes, from porn to political manipulation.

Deepfake technology has emerged as a significant concern in the digital age, raising alarm about its potential dangers and the need for effective detection methods. Deepfakes refer to manipulated or synthesized media content, such as images, videos, or audio recordings, that convincingly replicate real people saying or doing things they never did. While deepfakes can have legitimate applications in entertainment and creative fields, their malicious use poses serious threats to individuals, organizations, and society as a whole.

Many people are still unaware of the dangers of deepfakes, and that lack of awareness is itself a threat. There is no guarantee that what you see online is real, and deepfakes have narrowed the gap between fake and real content. Even though the technology can be used to create innovative entertainment projects, it is also being heavily misused by cybercriminals. And if the technology is not properly monitored by law enforcement, things are likely to get out of hand quickly.

Deepfakes can be used to spread false information, which can have severe consequences for public opinion, political discourse, and trust in institutions. A realistic deepfake video of a public figure could be used to disseminate fabricated statements or actions, leading to confusion and the potential for societal unrest.

Cybercriminals can exploit deepfake technology for financial gain. By impersonating someone's voice or face, scammers can convince victims that they are communicating with a trusted source and trick them into divulging sensitive information or making fraudulent transactions.

Deepfakes have the potential to disrupt democratic processes by distorting the truth during elections or important political events. Fake videos of candidates making controversial statements could sway public opinion or incite conflict.

The Dangers of Deepfake Technology and How to Spot Them

#the dangers of deepfake technology and how to spot them#the dangers of deepfake technology#deepfake technology#deepfake#artificial intelligence#deep fake#LimitLess Tech 888#how are deepfakes dangerous#the dangers of deepfakes#how to spot a deepfake#deepfakes#deepfake explained#what is a deepfake#dangers of deepfake#deepfake video#effects of deepfakes#how deepfakes work#deepfakes explained 2023#deepfake dangers#deepfake ai technology#Youtube

Site Update - 8/3/2023

Hi Pillowfolks!

How has your summer (or winter) been? Our team is back with a new update! As always, we will be monitoring closely for any unexpected bugs after this release, so please let us know if you run into any.

New Features/Improvements

Premium Subscription Updates - Per the request of many users, we’ve made a number of updates to creating & editing Premium Subscriptions.

Users can now create credit-only Subscriptions without entering payment information, as long as their credit balance can fully cover at least one payment of the feature fees.

Users can also now apply a custom portion of their accrued credit each month. For example, if your features cost $5.97 per month, you can choose to cover only part of that cost with your credit.

Users who have recently canceled a Subscription no longer have to wait until the payment period expires to create a new Subscription.

To access Pillowfort Premium, click the “PF Premium” icon in the left-hand sidebar. From this page you can convert your legacy donations to Pillowfort Premium, review and edit your subscriptions, and more.

Premium Image Upload Limit Increase: Good news! We’ve raised the limit for Premium Image Uploads to 6MB (formerly 4MB), at no extra cost! We may raise the limit further depending on how the subscriptions service performs and how our data fees fare.

Premium Subscription Landing Page & Frames Preview: We’ve improved the Subscription management page for users who do not have a Premium Subscription, so it now gives more information about the available Premium features, including a preview of all available premium frames.

New Premium Frame: We’ve released a new premium avatar frame! We hope you like it. We also have more premium avatar frames in the works that will be released later this month.

Modless / Abandoned Communities Update - Our Developers have updated our admin tools so that our Customer Service Team can change Community Ownership and add/remove Moderators, to help revitalize abandoned and modless Communities. We will soon make a post explaining how to request to become a Mod and/or Owner of a Community.

Bug Fixes/Misc Improvements

Some users were not receiving confirmation e-mails when their Pillowfort Premium Subscription was successfully charged. This should now be fixed. Please let us know if you are still not receiving those e-mails.

Related to the above bug, some users who were using their credit balance in Subscriptions were not seeing their credit balance being properly updated to reflect the credit used in those Subscriptions. We have now synced these Subscriptions, so you should see a decrease in your account’s credit balance if you are using that credit in a Subscription.

Fixed a bug where users were unable to delete their accounts in certain scenarios.

Fixed a bug that caused errors to display incorrectly on the log-in page.

Made improvements to how post images load on Pillowfort, to reduce image loading errors and improve efficiency for users with slow web connections.

Fixed a bug causing the checkmark on avatar frame selection in Settings to display improperly.

Terms of Service Update

We have made a small update to our ToS to specify that “deepfakes” and other digitally-altered pornographic images of real people are considered involuntary pornography and thus prohibited.

And that’s all for today! With this update out, our team will now be working full steam on post drafts, post scheduling and queuing, and the progressive mobile app! Be sure to keep checking our Pillowfort Dev Blog for further status updates on upcoming features.

Best,

Staff

#pillowfort.social#site update#pfstaffalert#pillowfort blogging#pillowfort premium#communities#bug fixes#long post

Pio, please explain what the hell a “fake John Macartny” scandal is. I am on my knees begging. I didn’t think the Beatles were in WoH

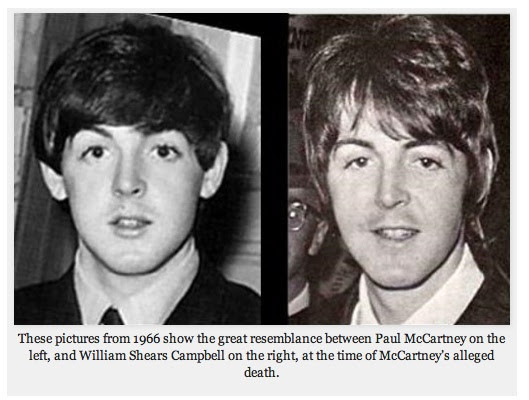

Arguably one of the most famous conspiracy theories of all time, the "Paul is dead" theory posited that Beatle Paul McCartney died in a car accident in 1966 and was replaced by a fake Paul McCartney. This was "substantiated" by people who claimed to hear a secret message, "turn me on, dead man," when they played the Beatles' "Revolution 9" backwards. Believers were convinced that the rest of the Beatles were leaving clues that Paul was actually dead and had been replaced by an imposter for the rest of their career.

I'm not a WoH fan and have only seen a few posts about the conspiracy, which frankly were more confusing than illuminating. But as far as I know, one of the actors got into a controversy for posting pictures in front of a Japanese war criminal monument (?), which sparked a rumor that he was far-right and anti-China, and he lost a ton of fans and sponsorships (???). Then somehow his company allegedly made some kind of rumored deepfake imposter version of him who makes music and holds concerts or something. Apparently there's now a huge and extremely hostile split in the fandom between people who think he's been replaced and his identity stolen, and that this needs to be brought to light, and people who think it's just been the same guy this whole time. So basically just Paul is dead in 2023 in China, it appears?

But the only reason I found out about this is that some zine project apparently got cancelled after major dogpiling from people accusing the zine organizers of believing the actor wasn't replaced and there was never an imposter, along with, for some reason, criticism of book-only WoH fans who are "too holier than thou" to help the cause of exposing the "imposter"?? Or something ?????? It's. It's a lot

The latest in a series of duels announced by the European Commission is with Bing, Microsoft’s search engine. Brussels suspects that the giant based in Redmond, Washington, has failed to properly moderate content produced by the generative AI systems on Bing, Copilot, and Image Creator, and that as a result, it may have violated the Digital Services Act (DSA), one of Europe’s latest digital regulations.

On May 17, the commission requested company documents to understand how Microsoft handled the spread of hallucinations (inaccurate or nonsensical answers produced by AI), deepfakes, and attempts to improperly influence the upcoming European Parliament elections. At the beginning of June, voters in the 27 states of the European Union will choose their representatives to the European Parliament, in a campaign over which looms the ominous shadow of technology with its potential to manipulate the outcome. The commission has given Microsoft until May 27 to respond, only days before voters go to the polls. If there is a need to correct course, it may well be too late.

Europe’s Strategy

Over the past few months, the European Commission has started banging its fist on the table when dealing with the big digital giants, almost all of them based in the US or China. This isn’t the first time. In 2022, the European Union hit Google with a fine of €4.1 billion for abusing its dominant market position through its Android system, marking the end of an investigation that started in 2015. In 2023, it fined Meta €1.2 billion for violating the GDPR, the EU’s data protection regulation. And in March, it fined Apple €1.8 billion.

Recently, however, there appears to have been a change in strategy. Sanctions continue to be available as a last resort when Big Tech companies don’t bend to the wishes of Brussels, but now the European Commission is aiming to take a closer look at Big Tech, find out how it operates, and modify it as needed, before imposing fines. Take, for example, Europe’s Digital Services Act, which attempts to impose transparency in areas like algorithms and advertising, fight online harassment and disinformation, protect minors, stop user profiling, and eliminate dark patterns (design features intended to manipulate our choices on the web).

In 2023, Brussels identified 22 very large platforms and search engines that, due to their size, would be the focus of its initial efforts: Google with its four major services (search, shopping, maps, and play), YouTube, Meta with Instagram and Facebook, Bing, X (formerly Twitter), Snapchat, Pinterest, LinkedIn, Amazon, Booking, Wikipedia, Apple’s App Store, TikTok, Alibaba, Zalando, and the porn sites Pornhub, XVideos, and Stripchat. Since then, it has been pressuring these companies to cooperate with its regulatory regime.

The day before the Bing investigation was announced, the commission also opened one into Meta to determine what the multinational is doing to protect minors on Facebook and Instagram and counter the “rabbit hole” effect—that is, the seamless flood of content that demands users’ attention, and which can be especially appealing to younger people. That same concern led it to block the launch of TikTok Lite in Europe, deeming its system for rewarding social engagement dangerous and a means of encouraging addictive behavior. It has asked X to increase its content moderation, LinkedIn to explain how its ad system works, and AliExpress to defend its refund and complaint processes.

A Mountain of Laws …

On one hand, the message appears to be that no one will escape the reach of Brussels. On the other, the European Commission, led by President Ursula von der Leyen, has to demonstrate that the many digital laws and regulations in place actually produce positive results. In addition to the DSA, there is the Digital Markets Act (DMA), intended to counterbalance the dominance of Big Tech in online markets; the AI Act, Europe’s flagship legislation on artificial intelligence; and the Data Governance Act (DGA) and the Data Act, which address data protection and the use of data in the public and private sectors. Also on the list are the updated cybersecurity package, NIS2 (Network and Information Security); the Digital Operational Resilience Act, focused on finance and insurance; and the eIDAS 2 digital identity package. Still in the draft stage are regulations on health data spaces and the much-debated “chat control” measures, which would authorize law enforcement agencies and platforms to scan citizens’ private messages for child pornography.

Brussels has deployed its heavy artillery against the digital flagships of the United States and China, and a few successful blows have landed, such as ByteDance’s suspension of the gamification feature on TikTok Lite following its release in France and Spain. But the future is uncertain and complicated. While investigations attract media interest, the EU’s digital bureaucracy is a large and complex machine to run.

On February 17, the DSA became applicable to all online service operators (cloud and hosting providers, search engines, e-commerce, and online services), but the European Commission doesn’t and can’t control everything. That is why it asked member states to appoint a local authority to serve as a digital services coordinator. Two months later, Brussels had to send formal notices to six states (Cyprus, Czechia, Estonia, Poland, Portugal, and Slovakia) urging them to designate and fully empower their digital services coordinators. Those countries now have two months to comply before Brussels intervenes. Other countries are not in the clear either. For example, Italy’s digital services coordinator, the Communications Regulatory Authority (abbreviated AGCOM, for Autorità per le Garanzie nelle Comunicazioni, in Italian), needs to recruit 23 new employees to replenish its staff. The authority told WIRED Italy that it expects to have filled all of its appointments by mid-June.

The DSA also introduced “trusted flaggers.” These are individuals or entities, such as universities, associations, and fact-checkers, committed to combating online hatred, internet harassment, illegal content, and the spread of scams and fake news. Their reports are, one hopes, trustworthy. The selection of trusted flaggers is up to local authorities but, to date, only Finland has formalized the appointment of one, specifically Tekijänoikeuden tiedotus- ja valvontakeskus ry (in English, the Copyright Information and Anti-Piracy Center). Its executive director, Jaana Pihkala, explained to WIRED Italy that their task is “to produce reports on copyright infringements,” a subject on which the association has 40 years of experience. Since its appointment as a trusted flagger, the center’s two lawyers, who perform all of its functions, have sent 816 alerts to protect films, TV series, and books on behalf of Finnish copyright holders.

… and a Mountain of Data

To ensure that the new rules are respected across the 27 states, the commission set up the DSA oversight system as quickly as possible, but the bureaucrats in Brussels still have a formidable amount of research to do. On the one hand, there is the anonymous reporting platform, with which the commission hopes to build dossiers on the operations of different platforms directly from internal sources. The biggest scandals that have shaken Meta came from whistleblowers such as Christopher Wylie, the former Cambridge Analytica analyst who revealed how the firm attempted to influence the US elections, and Frances Haugen, the former Facebook employee who shared documents about the impacts of Instagram and Facebook on children’s health. The DSA, however, intends to empower and fund the commission so that it can have its own people capable of sifting through documents and data, analyzing the content, and deciding whether to act.

The commission boasts that the DSA will force platforms to be transparent. And indeed it can already point to some successes, for example, revealing the absurdly inadequate numbers of moderators employed by platforms. According to the latest data, released last November, these moderators don’t even cover all the languages spoken in the European Union. X reported that it had only two people checking content in Italian, the language of 9.1 million users. There were no moderators for Greek, Finnish, or Romanian, even though each language has more than 2 million users. AliExpress moderates everything in English, while for other languages it makes do with automatic translators. LinkedIn moderates content in 12 of the European bloc’s languages, just half of the official languages.

At the same time, the commission has forced large platforms to standardize their reports of moderation interventions to feed a large database, which, at the time of writing, contains more than 18.2 billion records. Of these cases, 69 percent were handled automatically. But, perhaps surprisingly, 92 percent concerned Google Shopping. This is because the platform uses various parameters to determine whether a product can be featured: the risk that it is counterfeit, possible violations of site standards, prohibited goods, dangerous materials, and others. Several alerts can therefore be triggered for the same product, and the DSA database counts each one separately, inflating the Shopping numbers. So now the EU has a mass of data that further complicates its goal of full transparency.

Zalando’s Numbers

And then there’s the Big Tech companies’ legal battle against the fee they have to pay to the commission to help underwrite its supervisory bodies. Meta, TikTok, and Zalando have challenged the fee (though they have paid it). Zalando is also the only European company on the commission’s list of large platforms, a designation it has always contested because it does not believe it meets the criteria used by Brussels. One example: The platforms on the list must have at least 45 million monthly users in Europe. The commission argues that Zalando has 83 million users, but that number includes, for example, visits from Portugal, where the platform is not marketed, and Zalando argues those users should be deducted from its total. According to its calculations, the activities subject to the DSA reach only 31 million users, below the threshold. When Zalando was assessed its fee, it discovered that the commission had based it on a figure of 47.5 million users, far below the initial 83 million. The company has now taken the commission to court in an attempt to ensure a transparent process.

And this is just one piece of legislation, the DSA. The commission has also deployed the Digital Markets Act (DMA), a package of regulations to counterbalance Big Tech’s market dominance, requiring that certain services be interoperable with those of other companies, that apps that come loaded on a device by default can be uninstalled, and that data collected on large platforms be shared with small- and medium-size companies. Again, the push to impose these mandates starts with the giants: Alphabet, Amazon, Apple, Meta, ByteDance, and Microsoft. In May, Booking was added to the list.

Big Tech Responds

Platforms have started to respond to EU requests, with lukewarm results. WhatsApp, for instance, has been redesigned to allow chatting with other apps without compromising the end-to-end encryption that protects its users’ privacy and security, but it is still unclear who will agree to connect to it. WIRED US reached out to 10 messaging companies, including Google, Telegram, Viber, and Signal, to ask whether they intend to look at interoperability and whether they had worked with WhatsApp on its plans. The majority didn’t respond to the request for comment. Those that did, Snap and Discord, said they had nothing to add. Apple had to accept sideloading, i.e., the possibility of installing and updating iPhone or iPad applications from stores outside the official one. However, the first alternative that emerged, AltStore, offers very few apps at this time. And Apple has suffered some negative publicity after refusing to approve the latest version of the app from its archenemy Spotify, even though the audio platform had removed the link to its website for subscriptions.

The DMA is a regulation that has the potential to break the dominant positions of Big Tech companies, but that outcome is not a given. Take the issue of oversight: The commission has funds to pay the salaries of 80 employees, compared to the 120 requested by Internal Market Commissioner Thierry Breton and the 220 requested by the European Parliament, as summarized by Bruegel in 2022. And on the website of the Center for European Policy Analysis (CEPA), Adam Kovacevich, founder and CEO of Chamber of Progress, a politically left-wing tech industry coalition (all of the digital giants, which also fund CEPA, are members), stated that the DMA, “instead of helping consumers, aims to help competitors. The DMA is making large tech firms’ services less useful, less secure, and less family-friendly. Europeans’ experience of large tech firms’ services is about to get worse compared to the experience of Americans and other non-Europeans.”

Kovacevich represents an association financed by some of the same companies the DMA targets, and there is a shared fear that the DMA will complicate the market and, in the end, benefit only a few companies, not necessarily those most at risk from Silicon Valley’s dominance. It is not only lawsuits and fines but also the perceptions of citizens and businesses that will help determine whether EU regulations succeed. The results may come more slowly than Brussels would like, since new legislation is rarely well received at first.

Learning From GDPR and Gaia-X

Another regulatory act, the General Data Protection Regulation (GDPR), has become a global industry standard, forcing online operators to change the way they handle our data. But ask the typical person on the street, and they’ll likely describe it as a simple cookie wall you have to accept before continuing to a webpage, or as a law that forced companies to hire dedicated external consultants. It is rarely described as the ultimate online privacy law, which is exactly what it is. That said, while the act has reshaped the privacy landscape, it has faced challenges, as the digital rights association Noyb has explained. The privacy regulators of Ireland and Luxembourg, where many web giants are based for tax purposes, have faced bottlenecks in investigating violations. According to the latest figures from Ireland’s Data Protection Commission (DPC), 19,581 complaints have been submitted in the past five years, but the body has issued only 37 formal decisions, and only eight of those originated from complaints. Noyb recently surveyed 1,000 data protection officers; 74 percent were convinced that if privacy regulators investigated the typical European company, they would find at least one GDPR violation.

The GDPR was also the impetus for another unsuccessful operation: separating the European cloud from the US cloud in order to shelter EU citizens’ data from Washington’s Cloud Act. In 2019, France and Germany announced with great fanfare a federation, Gaia-X, that would defend the continent and provide a response to a cloud market split between the United States and China. Five years later, the project has become bogged down in establishing standards, after the entry of the very giants it was supposed to counter, such as Microsoft, Amazon, Google, Huawei, and Alibaba, as well as the controversial American company Palantir (which analyzes data for defense purposes). This prompted some of the founders, such as the French cloud operator Scaleway, to leave, which in turn put the spotlight on the European Parliament and led the commission to launch an alternative, the European Alliance for Industrial Data, Edge and Cloud. The alliance counts 26 Gaia-X participants (everyone except the non-EU giants) among its 49 members and enjoys EU financial support.

In the meantime, the Big Tech giants have found a solution that satisfies European wishes: investing en masse to establish data centers on EU soil. According to a study by consultancy firm Roland Berger, 34 data center transactions were finalized in 2023, a number that has grown at an average annual rate of 29.7 percent since 2019. According to Mordor Intelligence, another market analysis company, the sector in Europe will grow from €35.4 billion in 2024 to an estimated €57.7 billion in 2029. In recent weeks, Amazon Web Services announced €7.8 billion in investments in Germany. WIRED Italy has reported on Amazon’s interest in joining the list of accredited operators to host critical public administration data in Italy, which already includes Microsoft, Google, and Oracle. Notwithstanding its proclamations about sovereignty, Brussels has had to capitulate: The cloud is in the hands of the US giants, which have pulled well ahead of their Chinese competitors since diplomatic relations between Beijing and Brussels cooled.

The AI Challenge

The newest front in this digital battle is artificial intelligence. Here, too, the European Union has moved first: Its AI Act is the first legislation to address the different applications of this technology and to establish permitted and prohibited uses based on risk assessments. The commission does not want to repeat the mistakes of the past. Mindful of the launch of the GDPR, which in 2018 caused companies to scramble to ensure they were compliant, it wants to lead organizations through a period of voluntary adjustment. Already 400 companies, including IBM, have declared their interest in joining the effort.

In the meantime, Brussels must build a number of structures to make the AI Act work. First is the AI Council, which will have one representative from each country and will be divided into two subgroups, one dedicated to market development and the other to public sector uses of AI. It will be joined by a committee of technical advisers and an independent committee of scientists and experts, along the lines of the UN Climate Committee. Second, the AI Office, which sits within Directorate-General Connect (DG Connect), the department in charge of digital technology, will handle the administrative aspects of the AI Act. The office will ensure that the act is applied uniformly, investigate alleged violations, establish codes of conduct, and classify artificial intelligence models that pose a systemic risk. Once the rules are established, research on new technologies can proceed. When fully operational, the office will employ 100 people, some redeployed from DG Connect and others newly hired. At the moment, the office is looking to hire six administrative staff and an unspecified number of tech experts.

On May 29, the first round of calls for bids in support of the regulation closed. These included the AI Innovation Accelerator, a center that provides training, technical standards, and software and tools to promote research, support startups and small- and medium-sized enterprises, and assist public authorities that have to supervise AI. A total of €6 million is on the table. Another €2 million will finance management, and €1.5 million will go to the EU’s AI testing facilities, which, on behalf of national antitrust authorities, will analyze artificial intelligence models and products on the market to ensure that they comply with EU rules.

Follow the Money

Finally, a total of €54 million is designated for a number of business initiatives. The EU knows it is lagging behind. According to an April report by the European Parliament’s research service, which provides data and intelligence to support legislative activities, the global AI market, estimated at €130 billion in 2023, will reach close to €1.9 trillion by 2030. The lion’s share is in the United States, with €44 billion of private investment in 2022, followed by China with €12 billion. The European Union and the United Kingdom together attracted €10.2 billion in the same year. According to the European Parliament’s researchers, between 2018 and the third quarter of 2023, US AI companies received €120 billion in investment, compared to €32.5 billion for European ones.

Europe wants to counter the advance of the new AI giants with an open source model, and it has also made its network of supercomputers available to startups and universities to train algorithms. First, however, it had to adapt to the needs of the sector, investing almost €400 million in graphics cards, which, given the current boom in demand, will not arrive anytime soon.

Among other projects to support the European AI market, the commission wants to use €24 million to launch a Language Technology Alliance that would bring together companies from different states to develop a generative AI to compete with ChatGPT and similar tools. It’s an initiative that closely resembles Gaia-X. Another €25 million is earmarked for the creation of a large open source language model, available to European companies to develop new services and research projects. The commission intends to fund several models and ultimately choose the one best suited to Europe’s needs. Overall, during the period from 2021 to 2027, the Digital Europe Program plans to spend €2.1 billion on AI. That figure may sound impressive, but it pales in comparison to the €10 billion that a single company, Microsoft, invested in OpenAI.

The €25 million being spent on the European large language model effort, if distributed among many smaller projects, risks not even matching the €15 million that Microsoft has spent to bring France's Mistral, Europe's most talked-about AI startup, into its orbit. The makers of the big AI models will have to deal with Brussels as soon as the AI Act, now finally approved, comes fully into force. In short, the commission is making it clear in every way it can that there is a new sheriff in town. But will the bureaucrats of Brussels be adequately armed to take on Big Tech? Only one thing is certain: it is not going to be an easy task.


Further Readings

Goyal, P. (2022). “Bulli Bai campaign exposes the rift between Trads and Raaytas in RW ecosystem.” Newslaundry.

Description: An article explaining the divisions in the Hindu extremist right wing, specifically between the Trads and the Raaytas, as well as the increasing toxicity and propensity for violence in the right-wing ecosystem in India.

Richardson-Self, L. (2020). “Woman‐Hating: On Misogyny, Sexism, and Hate Speech.” Hypatia. Cambridge University Press.

Description: An academic article exploring the distinction between misogyny and sexism in the context of hate speech, arguing that misogynistic speech qualifies as hate speech, which is crucial for understanding patriarchy-enforcing expressions.

Ibrahim, I. (2022). “Women's bodies as battlefields and the 'Bulli Bai' controversy.” India Today.

Description: An article examining the ‘Bulli Bai’ app controversy as a manifestation of patriarchy, where women's bodies are used as battlefields, specifically in how the fear of being exploited actively shapes the choices women make.

Sen, R., & Jha, S. (2024). “Women under Hindutva: Misogynist memes, mock-auction and doxing, deepfake-pornification and rape threats in digital space.” Journal of Asian and African Studies.

Description: An academic article examining the various forms of online abuse and harassment faced by women under the Hindutva ideology in India, including misogynist memes, mock auctions, doxing, deepfake pornography, and rape threats. It specifically examines the nexus between the Bulli Bai issue and Hindutva ideology.

Ramani, P. (2022). “Why the Hindu extremists behind #BulliBai feel threatened by these Indian Muslim women.” Article 14.

Description: An article examining the victims of the Bulli Bai incident, as well as laying out the timeline of the incident and enforcement action taken.

Jaswal, S. (2022). “Bulli Bai: India’s Muslim women again listed on app for ‘auction.’” Al Jazeera.

Description: An article that recounts the Bulli Bai incident, including victim accounts, enforcement actions, and public responses.

Dasgupta, S. (2022). “What is Bulli Bai scandal—Indian app that listed Muslim women for auction.” The Independent.

Description: An article explaining the Bulli Bai scandal, and the preceding Sulli Deals case, as well as responses and updates on arrests made.

Alimahomed-Wilson, S. (2020). “The Matrix of Gendered Islamophobia.” Gender and Society, 34(4), 648–678.

Description: An article that explains the dimensions of intersectional Islamophobia faced by Muslim women in the US and the UK, and shows that such Islamophobia does not affect all Muslims in the same way. Based on qualitative interviews, the study reveals a dialectical relationship between women’s oppression and gendered Islamophobia.

Naaz, H. (2023). “The anti-feminist narrative of the Hindu right in India.” In J. Goetz & S. Mayer (Eds.), Global Perspectives on Anti-Feminism (pp. 160–181). Edinburgh University Press.

Description: A book chapter that delves into the relation between anti-feminist narratives and Hindu religious fundamentalism by focusing on the Pinjra Tod movement.

Udupa, S., & Lahiri Gerold, O. (2024). “‘Deal’ of the Day: Sex, Porn, and Political Hate on Social Media.” In J. B. Walther & R. E. Rice (Eds.), Social Processes of Online Hate (pp. 120–143). London: Routledge.

Description: A book chapter which analyses the nexus between the building of misogynist political projects and the oppression of women through the lens of social media.

Walther, J. B. (2024). “Making A Case For A Social Processes Approach To Online Hate.” In J. B. Walther & R. E. Rice (Eds.), Social Processes of Online Hate (pp. 10–36). London: Routledge.

Description: An introductory chapter that presents a social processes approach to understanding online hate, emphasizing that online applications and social media platforms are complicit in said social processes of online hate.

Provisions of the Indian Penal Code, 1860:

Section 153A: Promoting enmity between different groups on grounds of religion, race, etc.

Section 153B: Imputations, assertions prejudicial to national integration

Section 295A: Deliberate and malicious acts intended to outrage religious feelings

Section 509: Word, gesture or act intended to insult the modesty of a woman

Sections 499 and 500: Defamation

Information Technology Act, 2000, Section 67: Punishment for publishing or transmitting obscene material in electronic form

The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021; Rule 3(1)(b)(ii).


BBC and Insider Investigations: Fake Media and Disinformation Campaigns by Russian Intelligence in the West

You are watching news from the weekly rally at the Russian Embassy in Lisbon. Today is July 6, 2:30 PM.

On July 3, journalists from BBC Verify, the BBC's fact-checking team, and BBC News revealed who is behind fake news aimed at Americans. Thousands of news articles are generated using artificial intelligence and posted on numerous sites with very "American" names. Most of these articles are based on real news and carry the bylines of non-existent journalists. This creates the illusion that these sites are reliable news sources, but fabricated stories are hidden among the content. Fake documents and doctored YouTube videos featuring people posing as “whistleblowers” or “independent journalists” are used to bolster these bogus stories.

A frequent target of these attacks is Olena Zelenska, the wife of Ukrainian President Volodymyr Zelensky, who is falsely portrayed as either buying a Bugatti sports car with American military aid money or making racist remarks toward employees of a jewelry store in New York.

Clint Watts, head of Microsoft's Digital Threat Intelligence Center, explains that it is now much more common to encounter "information laundering": the repeated reprinting of fake news in ordinary news stories to obscure its original source. https://www.bbc.com/news/articles/c72ver6172do

On July 4, The Insider detailed how Russia's Foreign Intelligence Service (SVR) organizes disinformation campaigns in the West. From a report presented at the Federation Council in 2022 and found in the hacked correspondence of SVR employees responsible for the “information war” with the West, journalists learned that the state outlets RT and Sputnik, as well as loyal Telegram channels, were not meeting expectations.

The strategy was also revealed: “Posting in foreign segments of the Internet… materials supposedly on behalf of public pro-Kyiv organizations containing new demands… of a political, economic, social nature. Due to the frequency, intrusiveness, aggressive form, and incorrect presentation, one should expect negative reactions from the target audience."

The Insider journalists also found the “Ice Pick” project in the correspondence, aimed at discrediting a banker who sponsored the Anti-Corruption Foundation and moved to the United States. https://theins.ru/politika/272852

Antisemitic graffiti that later appeared in Paris, fake quotes attributed to Beyoncé, Oprah Winfrey, and Cristiano Ronaldo demanding an end to aid to Ukraine, and a deepfake that used a fake Tom Cruise voice to warn of terrorist attacks at the Olympics all fit this strategy. https://www.euronews.com/my-europe/2023/12/27/fact-check-did-celebrities-like-taylor-swift-and-cristiano-ronaldo-criticise-ukraine

Pro-Kremlin resources often appeal to freedom of speech when they face resistance. It is important to remember that the Russian authorities have nearly destroyed independent journalism in their own country. Since March 2023, they have held The Wall Street Journal reporter Evan Gershkovich, accusing him of spying for the CIA. Last week, a court in Yekaterinburg began hearing his case, which could result in up to 20 years in prison. Evan's colleagues have shaved their heads in solidarity with him. #IStandWithEvan https://x.com/holodmedia/status/1808777869331488948

Proofs and links are in the description. Subscribe and help!


China, AI, Bioweapons, and Lyme Disease

@bible-news-prophecy-radio

COGwriter

The USA and China are scheduled to hold talks about trying to reduce the risk of conflict due to the use of Artificial Intelligence (AI):

US and China to hold first talks to reduce risk of AI ‘miscalculation’

May 13, 2024

Chinese and US officials will on Tuesday hold the first meeting in a dialogue on artificial intelligence that Presidents Joe Biden and Xi Jinping agreed at a summit in San Francisco last year. … the meeting would focus on risk and safety with an emphasis on advanced AI systems but was not designed to deliver outcomes. The official said the US would outline its stance on tackling AI risks, explain its approach on norms and principles of AI safety, in addition to holding discussions on the role of international governance. But he said the US would also voice concern about Chinese AI activity that threatened American national security. He said China had made AI development a “major” priority and was rapidly deploying capabilities in civilian and military areas that in many cases “we believe undermines both US and allied national security”. https://www.ft.com/content/e10b034d-ac25-476c-b3a5-c09aae8eb7f9

While AI can be useful, there are many risks with it.

One is that it often gives wrong information. I had two AI programs admit they gave wrong answers to research inquiries I made in the past few days after I challenged their responses.

Another risk is that people will defer to AI when it makes suggestions that could be wrong.

A military risk is that attacks could begin if AI controls various offensive systems.

Ignoring the accidental risks of conflict between the USA and China, consider the following risk related to AI:

BIOWEAPONS

The American intelligence community, think tanks and academics are increasingly concerned about risks posed by foreign bad actors gaining access to advanced AI capabilities. Researchers at Gryphon Scientific and Rand Corporation noted that advanced AI models can provide information that could help create biological weapons.

Gryphon studied how large language models (LLM) – computer programs that draw from massive amounts of text to generate responses to queries – could be used by hostile actors to cause harm in the domain of life sciences and found they “can provide information that could aid a malicious actor in creating a biological weapon by providing useful, accurate and detailed information across every step in this pathway.”

They found, for example, that an LLM could provide post-doctoral level knowledge to trouble-shoot problems when working with a pandemic-capable virus.

Rand research showed that LLMs could help in the planning and execution of a biological attack. They found an LLM could for example suggest aerosol delivery methods for botulinum toxin. 05/09/24 https://www.ndtv.com/world-news/from-deepfakes-to-bioweapons-all-you-need-to-know-about-threats-posed-by-ai-5627182

Getting back to China, last December Newsmax reported the following:

Gordon Chang to Newsmax: China Working on Bioweapons

December 25, 2023 8:30 pm

Gordon G. Chang, author of “The Coming Collapse of China,” told Newsmax that Beijing is experimenting with what appears to be biological weapons targeting human brains. …

“These are biological weapons, and China isn’t part of the Biological Weapons Convention that would outlaw this,” Chang explained. …

The Times’ report cited recent studies by the Chinese Communist Party on biological weapons designed to induce sleep or sleep-related disturbances in enemy troops.

Other potential weapons would create a connection between the brain and external devices, as well as pharmaceuticals designed to impair people genetically and physiologically.

“This whole idea of ‘genetic drugs,’ although it has not really been fleshed out, clearly it is a biological weapon. And certainly, the other weapons that we’ve been talking about in this Washington Times report … [are] essentially biological-influenced,” Chang stated.

Chang believes it is time now more than ever to challenge China on their biological weapons program, which he suggested could be responsible for the global COVID-19 pandemic. https://www.newsmax.com/newsmax-tv/gordon-chang-china-brain/2023/12/25/id/1147150/

Although China did in fact accede to the Biological Weapons Convention in 1984, contrary to Chang's claim, it may well be working on these types of weapons.

As far as biological warfare and China goes, back in January 2020, we put out the following video:


Biological Warfare and Prophecy

Is biological warfare possible? Was it prophesied? Scientists have long warned that pathogenic organisms like the coronavirus could be weaponized. Furthermore, back in 2017, there were concerns that the biological research facility being constructed in Wuhan, China was risky and that a coronavirus from it could be released. On January 25, 2020, China’s President Xi Jinping publicly stated that the situation with the Wuhan-related strain of the coronavirus was grave. Did Church of God writers like the late evangelist Raymond McNair warn that engineered viruses (the “Doomsday bug”) were consistent with prophecies from Jesus? Did CCOG leader Bob Thiel's warning about the risks of genetically-modified (GMO) mosquitoes come to pass? Could human research and/or the consumption of biblically-unclean animals such as bats and snakes be a factor in current outbreaks or coming future pestilences? What about famines? How devastating have pestilences been? How devastating are the prophesied ones going to be? Dr. Thiel addresses these issues and more.

Here is a link to our video: Biological Warfare and Prophecy.

That said, treaty or not, other nations, including the USA, are involved in biological warfare research, which does imply the ability to produce biological weapons.

There have been many reports over the years about the USA being involved in pathogen research for use in biological warfare. Last summer, Tucker Carlson interviewed Robert F. Kennedy, Jr. about that. Here is a report on that interview:

RFK Jr. Warns Of Thousands Of “DEATH SCIENTISTS” Developing Killer Microbes In America

“We are making bioweapons.”

August 15, 2023

During an interview with Tucker Carlson, presidential candidate Robert F. Kennedy Jr warned that there are thousands of so called ‘scientists’ developing killer bioweapons inside America without oversight.

The topic was raised when the pair began to discuss biolabs in Ukraine, with Kennedy noting that the U.S. “has biolabs in Ukraine because we are making bioweapons.”

He then went on to state that “Anthony Fauci got all the responsibility for bio-weapons development,” adding that after three bugs escaped from three labs in the U.S. “In 2014, 300 scientists wrote to President Obama and said ‘you’ve gotta shut down Anthony Fauci, because he is going to create a microbe that will cause a global pandemic.’”

“And so Obama signed a moratorium and shut down the 18 worst of Anthony Fauci’s experiments, where most of them were taking place in North Carolina by a scientist called Ralph Baric,” Kennedy added. https://summit.news/2023/08/15/video-rfk-jr-warns-of-thousands-of-death-scientists-developing-killer-microbes-in-america/

Could the USA be so involved?

Well, it is not supposed to be:

The Biological Weapons Convention (BWC) effectively prohibits the development, production, acquisition, transfer, stockpiling and use of biological and toxin weapons. It was the first multilateral disarmament treaty banning an entire category of weapons of mass destruction (WMD).

The United States officially agreed to it in 1975, as did most nations in the world.

Officially, the USA claims it has no biological weapons.

However, it admits that it is involved with biological research on pathogenic microorganisms, as are many other countries. The USA and many others claim that the research is entirely peaceful and that the biological pathogenic agents can be, and supposedly are, under complete control.

One of the reasons for this post today is that I saw the following article:

Exposing the Link Between Lyme Disease and Secret U.S. Biowarfare Experiments

May 11, 2024

Declassified documents reveal that the seemingly innocuous island off the coast of Connecticut may have been ground zero for government experiments with weaponized ticks, possibly unleashing Lyme disease upon an unsuspecting public.

In 2008, Kris Newby, after extensive research, released a groundbreaking documentary on Lyme disease. The film sparked widespread discussion about the origins of Lyme disease and the reasons behind its increasing prevalence. …

Plum Island, an 840-acre island located just a mile off the north fork at the eastern end of Long Island, is notorious for its secretive government experiments involving bioweapons. Newby highlighted this in a discussion with Tucker Carlson, emphasizing the island’s mysterious and concerning activities.

Bioweapons Testing Facility and Disease Cluster

People living near Plum Island started getting diseases like Lyme arthritis, rickettsiosis, and babesiosis all around the same time. Tucker Carlson discussed this and wondered if these diseases came from government experiments.

https://rairfoundation.com/exposing-link-between-lyme-disease-secret-u-s/

Whether or not Lyme disease was human-engineered, it can be a very, very difficult disease to treat. But sadly, humans, apparently now with the help of AI, are looking to make more devastating diseases.

And as it turns out, it is usually not difficult to turn biological pathogens into weapons.

The USA certainly has dangerous biological agents from which it could do so. So do many other nations.

Remember that Jesus Himself warned that the “signs” of the end of the age would include pestilences:

7…And there will be famines, pestilences, and earthquakes in various places. 8 All these are the beginning of sorrows. (Matthew 24:7-8)

11 And there will be great earthquakes in various places, and famines and pestilences; and there will be fearful sights and great signs from heaven. (Luke 21:11)

Biological warfare agents certainly can be a factor in pestilences.

Furthermore, in the sixth chapter of the Book of Revelation, the rides of the four horsemen are described. The passages about the fourth horseman are as follows:

7 When He opened the fourth seal, I heard the voice of the fourth living creature saying, “Come and see.” 8 So I looked, and behold, a pale horse. And the name of him who sat on it was Death, and Hades followed with him. And power was given to them over a fourth of the earth, to kill with sword, with hunger, with death, and by the beasts of the earth. (Revelation 6:7-8)

So, the opening of the fourth seal is the time that the pale horse of death is apparently increasing death by war, hunger, and pestilences (the beasts of the earth).

Could humans intentionally cause some of that?

Certainly.

More pestilences are coming (Revelation 6:7-8).

In the summer of 2023, we put out the following video:


Biological Weapons and the 4th Horseman of the Apocalypse

Tucker Carlson interviewed Democratic presidential candidate Robert F. Kennedy, Jr. (RFK). During that interview, RFK asserted that the United States has had thousands of scientists working on making bioweapons. Officially, the USA has denied this as it is a party to the Biological Weapons Convention. RFK states that Anthony Fauci was responsible for biological weapons development. Does the ‘Global South’ consider that the West may intend to exterminate people through biological warfare? Did Jesus prophesy pestilences? Have humans engaged in biological or germ warfare for millennia? What were some things that Raymond McNair warned about over 50 years ago? Can we trust scientists to totally safely control all the infectious agents they work with? Could humankind be developing, knowingly or unknowingly, biological agents that could be responsible for many of the deaths that are prophesied to occur with the ride of the ‘pale horse of death,’ which is the fourth of the four horsemen of the Apocalypse to ride? Steve Dupuie and Dr. Thiel address these matters.

Here is a link to our video: Biological Weapons and the 4th Horseman of the Apocalypse.

Biological pathogens developed by humans are a real threat.

Do NOT trust the scientists who say that they are simply trying to help us fight diseases and can always control them. They DO NOT KNOW all the risks, no matter what they say.

Humans, directly or indirectly, will be a factor in the coming, more severe pestilences. Adding AI to the mix adds additional risk.

Related Items:

Fourth Horseman of the Apocalypse What is the pale horse of death and pestilences? What will it bring and when? Here is a link to a related sermon: Fourth Horseman, COVID, and the Rise of the Beast of Revelation. Here is a version of that sermon in Spanish: El Cuarto Caballo, El COVID y El Surgimiento de la Bestia del Apocalipsis. Some shorter related videos may include Amphibian Apocalypse: Threat to Humans? and Zombie Deer Disease is Here! Are the 10 Plagues on Egypt Coming? Here is a version of the article in Spanish: El cuarto jinete de Apocalipsis, el caballo pálido de muerte y pestilencia.

The Gospel of the Kingdom of God This free online pdf booklet answers many questions people have about the Gospel of the Kingdom of God and explains why it is the solution to the issues the world is facing. It is available in hundreds of languages at ccog.org. Here are links to four kingdom-related sermons: The Fantastic Gospel of the Kingdom of God!, The World’s False Gospel, The Gospel of the Kingdom: From the New and Old Testaments, and The Kingdom of God is the Solution.

GMOs and Bible Prophecy What are GMOs? Since they were not in the food supply until 1994, how could they possibly relate to Bible prophecy? Do GMOs put the USA and others at risk? Here are some related videos: GMO Risks and the Bible and GMOs, Lab meat, Hydrogenation: Safe or Dangerous?

Chimeras: Has Science Crossed the Line? What are chimeras? Has science crossed the line? Does the Bible give any clues? Videos of related interest include Half human, half pig: What’s the difference? and Human-Monkey Embryos and Death.

Four Horsemen of the Apocalypse What does each of the four horsemen of the Apocalypse represent? Have they begun their ride? Did Jesus discuss any of this? Might their rides coincide with the “beginning of sorrows”? Do they start their ride prior to the Great Tribulation? Did Nostradamus or any other ‘private prophets’ write predictions that may mislead people so that they may not understand the truth about one or more of the four horsemen? There is also a related YouTube video titled Sorrows and the Four Horsemen of the Apocalypse. Here is a link to a shorter video: These Signs of the 4 Horsemen Have Begun.

Could God Have a 6,000 Year Plan? What Year Does the 6,000 Years End? Did the early Christians teach that 6,000 years of human rule would be followed by a literal thousand-year reign of Christ on Earth? Does God have a 7,000-year plan? What year may the six thousand years of human rule end? When will Jesus return? 2031 or 2025 or? There is also a video titled: When Does the 6000 Years End? 2031? 2035? Here is a link to the article in Spanish: ¿Tiene Dios un plan de 6,000 años?

When Will the Great Tribulation Begin? 2024, 2025, or 2026? Can the Great Tribulation begin today? What happens before the Great Tribulation in the “beginning of sorrows”? What happens in the Great Tribulation and the Day of the Lord? Is this the time of the Gentiles? When is the earliest that the Great Tribulation can begin? What is the Day of the Lord? Who are the 144,000? Here is a version of the article in the Spanish language: ¿Puede la Gran Tribulación comenzar en el 2020 o 2021? ¿Es el Tiempo de los Gentiles? A related video is: Great Tribulation: 2026 or 2027? A shorter video is: Can the Great Tribulation start in 2022 or 2023? Notice also: Can Jesus return in 2023 or 2024? Here is a video in the Spanish language: Es El 2021 el año de La Gran Tribulación o el Grande Reseteo Financiero.


Learn about the CCPA’s AI and ADMT guidelines

The California Privacy Protection Agency (CPPA) announced draft regulations on artificial intelligence (AI) and automated decision-making technology (ADMT) in November 2023.

The proposed rules are still being developed, but organisations should monitor them. Because California is home to many of the world’s largest technology businesses, new AI rules there could have global effects.

Covered uses of ADMT

Making significant decisions

The draft rules would apply to ADMT used to make significant decisions about consumers. Significant decisions are generally those that affect consumers’ rights or their access to essential goods, services, and opportunities.

For instance, the draft rules would cover automated decisions that affect a person’s employment, education, healthcare, or access to loans.

Extensive profiling

Profiling involves automatically processing personal data to assess, analyse, or predict attributes such as job performance, product interests, and behaviour.

“Extensive profiling” covers three specific kinds of profiling:

Profiling consumers at work or in education, such as by tracking employee performance with a keystroke logger.

Profiling consumers in public places, such as by using facial recognition to analyse shoppers’ emotions in a store.

Profiling consumers for behavioural advertising, in which personal data is used to target ads.

ADMT training

Businesses that use consumers’ personal data to train ADMT tools would also be subject to the proposed rules. This would cover training ADMT to make significant decisions, identify people, generate deepfakes, or perform physical or biological identification and profiling.
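The coverage rules above can be read as a simple classification. The following Python sketch is purely illustrative: the `AdmtUse` record, its field names, and the category labels are hypothetical, not taken from the CPPA draft, and real coverage questions depend on the final regulatory definitions.

```python
from dataclasses import dataclass


@dataclass
class AdmtUse:
    """Hypothetical summary of how a business uses ADMT."""
    significant_decision: bool = False          # e.g. employment, education, healthcare, lending
    profiles_workers_or_students: bool = False  # e.g. keystroke logging of employees
    profiles_in_public_places: bool = False     # e.g. facial recognition in a store
    behavioural_advertising: bool = False       # personal data used to target ads
    trains_on_personal_data: bool = False       # training ADMT for a covered purpose


def covered_categories(use: AdmtUse) -> list[str]:
    """Return the covered categories, as summarised above, that this use falls under."""
    categories = []
    if use.significant_decision:
        categories.append("significant decision")
    if (use.profiles_workers_or_students
            or use.profiles_in_public_places
            or use.behavioural_advertising):
        categories.append("extensive profiling")
    if use.trains_on_personal_data:
        categories.append("ADMT training")
    return categories


# An ad platform that profiles shoppers and trains its models on their data.
ad_platform = AdmtUse(behavioural_advertising=True, trains_on_personal_data=True)
print(covered_categories(ad_platform))  # ['extensive profiling', 'ADMT training']
```

Under these simplified assumptions, an empty list would suggest that none of the draft's covered uses applies.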

Who would the AI and ADMT regulations protect?

As a California law, the CCPA protects only California consumers, and the draft ADMT rules have the same scope.

However, the CCPA defines “consumer” more broadly than many other data privacy laws do. The rules would cover California residents not only as customers but also as employees, students, independent contractors, and job or school applicants.

How does the CCPA regulate AI and automated decision-making?

The draft CCPA AI rules have three main requirements. Businesses that use ADMT for covered purposes must notify consumers, offer ways to opt out, and explain how their use of ADMT affects consumers.

The CPPA has revised the draft rules before and may do so again before adoption, but these core requirements have appeared in every draft. They will likely remain in the final rules, even if the details of how they are implemented change.

Pre-use notices

Before using ADMT for a covered purpose, organisations must clearly and conspicuously notify consumers. In plain language, the notice must explain how the company uses ADMT and describe consumers’ rights to opt out and to learn more.

A company cannot simply say, “We use automated tools to improve our services.” Instead, it must describe the specific use, for example: “We use automated tools to assess your preferences and deliver targeted ads.”

The notice must direct consumers to more information about the ADMT’s logic and how the business uses its outputs. That information need not appear in the notice itself; the company can provide a link or another means of access.

If consumers can appeal automated decisions, the pre-use notice must explain how.
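The pre-use notice requirements above amount to a checklist. A minimal Python sketch, assuming a hypothetical `PreUseNotice` record whose field names are invented here; the check for generic wording is only an illustrative heuristic built from the example sentence above, not a test the regulations prescribe.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative heuristic only: wording the text above gives as too vague.
GENERIC_PHRASES = ("improve our services",)


@dataclass
class PreUseNotice:
    """Hypothetical record of a pre-use notice's contents."""
    purpose: str                               # the specific way ADMT is used
    opt_out_instructions: str                  # how to exercise the opt-out right
    more_info_link: str                        # where to learn about the ADMT's logic and outputs
    appeal_instructions: Optional[str] = None  # needed only if decisions can be appealed


def notice_problems(notice: PreUseNotice, appeals_offered: bool) -> list[str]:
    """List the ways a notice falls short of the requirements summarised above."""
    problems = []
    if not notice.purpose.strip():
        problems.append("missing purpose")
    elif any(phrase in notice.purpose.lower() for phrase in GENERIC_PHRASES):
        problems.append("purpose is too generic")
    if not notice.opt_out_instructions.strip():
        problems.append("missing opt-out instructions")
    if not notice.more_info_link.strip():
        problems.append("missing link to more information")
    if appeals_offered and not notice.appeal_instructions:
        problems.append("missing appeal instructions")
    return problems


vague = PreUseNotice(
    purpose="We use automated tools to improve our services.",
    opt_out_instructions="Use the opt-out form on our website.",
    more_info_link="https://example.com/admt",
)
print(notice_problems(vague, appeals_offered=False))  # ['purpose is too generic']
```

Returning a list of problems rather than a single pass/fail flag makes it easier to see everything a draft notice is missing at once.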

Opt-out rights

Consumers can opt out of most uses of ADMT. To support this right, businesses must provide two opt-out methods.

At least one opt-out method must reflect the main way the business interacts with customers; an online retailer, for example, might offer a web form.

Opt-out procedures must be easy to use and must not require consumers to create an account.

Within 15 days of receiving an opt-out request, a business must stop processing that consumer’s data with the ADMT, and it can no longer use any of the consumer’s data it has already processed. It must also notify any service providers or third parties that received the consumer’s data.
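The opt-out obligations above can be turned into a simple to-do list for each request. A minimal Python sketch: the `OptOutPlan` record and the recipient names are hypothetical, and the sketch counts the 15 days as calendar days, which is an assumption; the final rule text should be checked for how days are counted.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Per the text above; counted here as calendar days (an assumption).
OPT_OUT_WINDOW_DAYS = 15


@dataclass
class OptOutPlan:
    """Hypothetical record of what a business must do after an opt-out request."""
    stop_processing_by: date  # last day to stop processing the consumer's data
    notify: list[str]         # service providers and third parties to inform


def plan_opt_out(received: date, recipients: list[str]) -> OptOutPlan:
    """Turn an opt-out request into a deadline and a list of parties to notify."""
    deadline = received + timedelta(days=OPT_OUT_WINDOW_DAYS)
    # Deduplicate recipients and sort them for a predictable order.
    return OptOutPlan(deadline, sorted(set(recipients)))


plan = plan_opt_out(date(2025, 3, 1), ["ad-network", "analytics-vendor", "ad-network"])
print(plan.stop_processing_by)  # 2025-03-16
print(plan.notify)              # ['ad-network', 'analytics-vendor']
```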

Exemptions

Organisations need not let consumers opt out of ADMT used for safety, security, or fraud prevention. The proposed rules describe these uses as detecting and responding to data security incidents, preventing and prosecuting fraud and other criminal activity, and protecting people’s physical safety.

Opt-outs are also not required if an organisation lets people appeal automated decisions to a trained human reviewer who has the authority to overturn them.

Organisations can also forgo opt-outs for certain limited uses of ADMT in work and school settings, including:

Evaluating performance for admission, acceptance, and hiring decisions.

Allocating work tasks and setting compensation.

Profiling used solely to evaluate student or employee performance.

For these work and school uses to be exempt from opt-outs, all of the following criteria must be met:

The ADMT must be necessary for, and used solely for, the business’s stated purpose.

The business must formally evaluate the ADMT to ensure that it is accurate and does not discriminate.

The business must take steps to keep the ADMT accurate and non-discriminatory over time.

These exemptions do not apply to behavioural advertising or ADMT training; consumers can always opt out of those uses.
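The exemption rules above form a small decision procedure. The Python sketch below is a simplified reading, not the CPPA's logic: the `AdmtContext` fields and purpose labels are hypothetical, and it assumes (from the last sentence above) that none of the exemptions can ever remove the opt-out for behavioural advertising or ADMT training.

```python
from dataclasses import dataclass

# Assumption from the text above: no exemption applies to these uses.
ALWAYS_OPT_OUT = {"behavioural advertising", "ADMT training"}


@dataclass
class AdmtContext:
    """Hypothetical facts about one use of ADMT."""
    purpose: str                                   # e.g. "significant decision"
    security_fraud_or_safety: bool = False         # breaches, fraud, or physical safety
    human_appeal_available: bool = False           # trained reviewer who can overturn decisions
    work_or_school_use: bool = False               # admission/hiring, task allocation, pay, performance
    necessary_and_limited: bool = False            # needed for, and used solely for, that purpose
    evaluated_for_accuracy_and_bias: bool = False  # formal evaluation completed
    safeguards_maintained: bool = False            # ongoing accuracy and non-discrimination


def opt_out_required(ctx: AdmtContext) -> bool:
    """Return True if, under this simplified reading, an opt-out must be offered."""
    if ctx.purpose in ALWAYS_OPT_OUT:
        return True
    if ctx.security_fraud_or_safety or ctx.human_appeal_available:
        return False
    # The work/school exemption needs every condition to hold.
    work_school_exempt = (
        ctx.work_or_school_use
        and ctx.necessary_and_limited
        and ctx.evaluated_for_accuracy_and_bias
        and ctx.safeguards_maintained
    )
    return not work_school_exempt


hiring = AdmtContext(
    purpose="significant decision",
    work_or_school_use=True,
    necessary_and_limited=True,
    evaluated_for_accuracy_and_bias=True,
    safeguards_maintained=True,
)
ads = AdmtContext(purpose="behavioural advertising", human_appeal_available=True)
print(opt_out_required(hiring))  # False
print(opt_out_required(ads))     # True
```

Checking the always-opt-out uses first means a human-appeal process cannot accidentally switch off the opt-out for advertising or training.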

Access to information about ADMT use

Consumers can request information about how a company uses ADMT, and businesses must make it easy for them to do so.

When responding to access requests, organisations must explain why they used ADMT, the outcome for the consumer, and how the decision was made.

Access request responses should also explain how consumers can exercise their other CCPA rights, such as filing complaints or deleting their data.

Notice of adverse decisions

If a business uses ADMT to make a significant decision that adversely affects a consumer, such as terminating their employment, it must notify the consumer of their access rights.

The notice must include:

A statement that the business used ADMT to make an adverse decision.

A statement that the business cannot retaliate against consumers who exercise their CCPA rights.

How the consumer can obtain more information about the business’s use of ADMT.

If applicable, instructions for appealing the decision.
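The required elements of an adverse-decision notice can be assembled mechanically. A minimal Python sketch: the function name and the sentence wording are hypothetical, not template language from the CPPA, and the URL is a placeholder.

```python
from typing import Optional


def adverse_decision_notice(decision: str, info_url: str,
                            appeal_instructions: Optional[str] = None) -> str:
    """Assemble the required notice elements listed above into plain text."""
    lines = [
        f"We used automated decision-making technology (ADMT) to decide: {decision}.",
        "We will not retaliate against you for exercising your CCPA rights.",
        f"To learn more about how we used ADMT, visit {info_url}.",
    ]
    if appeal_instructions:  # included only when an appeal is available
        lines.append(f"To appeal this decision: {appeal_instructions}")
    return "\n".join(lines)


print(adverse_decision_notice("termination of employment",
                              "https://example.com/admt-request"))
```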

AI/ADMT risk assessments

The CPPA is drafting risk assessment regulations alongside its AI and ADMT requirements. Although they are a separate set of requirements, the risk assessment rules affect how organisations use AI and ADMT.

The risk assessment rules require organisations to conduct an assessment before using ADMT for significant decisions or extensive profiling. Organisations must also conduct risk assessments before training ADMT or AI models with personal data.

Risk assessments must identify the risks ADMT poses to consumers, the benefits to the organisation and other stakeholders, and measures to mitigate or eliminate those risks. Organisations should not use AI or ADMT when the risks outweigh the benefits.

The EU AI Act strictly regulates AI development and use in Europe.

The Colorado Privacy Act and Virginia Consumer Data Protection Act allow US consumers to opt out of having their personal data processed for important decisions.

In October 2023, President Biden signed an executive order mandating federal agencies and departments to establish, use, and oversee AI standards.

California’s planned ADMT regulations stand out from other state laws because they may affect companies beyond the state.

Because California is home to much of the global technology industry, many of the most advanced makers of automated decision-making tools must comply with these rules. The consumer protections apply only to California residents, although organisations may choose to extend the same options to all consumers for convenience.

Because it set national data privacy standards, the original CCPA is sometimes called the US version of the GDPR. New AI and ADMT rules may yield similar outcomes.

When will the CCPA AI and ADMT regulations take effect?

As the rules are still being finalised, it’s impossible to say. Many analysts expect the rules to take effect in mid-2025.

The regulations are scheduled for further discussion at the July 2024 CPPA board meeting, where many expect the Board to begin official rulemaking. Because the agency would then have a year to finalise the rules, mid-2025 is the expected implementation date.

How will rules be enforced?

As with other elements of the CCPA, the CPPA can investigate violations and sanction organisations. Noncompliance may also lead to civil penalties sought by the California attorney general.

Organisations can be fined up to USD 2,500 per unintentional violation and up to USD 7,500 per intentional one. Each affected consumer counts as a separate violation, so infractions that involve many consumers can compound penalties significantly.
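The rule of one violation per affected consumer is what makes exposure grow quickly. The sketch below works through the arithmetic for a hypothetical incident affecting 1,000 consumers. It assumes that each affected consumer counts as exactly one violation and that the full per-violation amount applies, so it treats the figures as upper bounds. `max_penalty` is an illustrative helper, not part of any official calculator.

```python
# Maximum per-violation amounts cited above, in USD.
UNINTENTIONAL_MAX = 2_500
INTENTIONAL_MAX = 7_500


def max_penalty(affected_consumers: int, intentional: bool) -> int:
    """Upper-bound exposure, counting one violation per affected consumer."""
    per_violation = INTENTIONAL_MAX if intentional else UNINTENTIONAL_MAX
    return affected_consumers * per_violation


# Hypothetical incident affecting 1,000 California consumers.
print(f"{max_penalty(1_000, intentional=False):,}")  # 2,500,000
print(f"{max_penalty(1_000, intentional=True):,}")   # 7,500,000
```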

What is the status of the CCPA AI and ADMT regulations?

Draft rules are evolving. The CPPA continues to gather public views and hold board discussions, so the guidelines may alter before adoption.

In response to public comments, the CPPA has made major changes to the draft. After the December 2023 board meeting, the agency expanded the opt-out exemptions and restricted physical and biological profiling.

To limit which tools the rules apply to, the CPPA also narrowed the definition of ADMT. The earlier proposal covered any technology that aided human decision-making, while the current draft covers only ADMT that significantly aids it.

Privacy advocates worry the amended definition creates exploitable gaps, but industry groups say it better reflects ADMT use.

Even the CPPA Board disagrees on the final rules. In March 2024, two board members raised concerns that the proposal exceeds the agency’s authority.

Even as the rules evolve, pre-use notices, opt-out rights, and access rights are likely to remain. Open questions for organisations include:

Which AI and automated decision-making technologies will the final rules cover?

How will practical consumer protections be implemented?

What exemptions will organisations receive?

These laws will affect how AI and automation are governed nationwide and how customers are safeguarded in the face of this rising technology.

Read more on govindhtech.com

AI-Powered Content Marketing: Unleashing the Future

FUTURE OF CONTENT MARKETING WITH AI

What is Artificial Intelligence?

Artificial intelligence (AI) is the technology that allows computers and other devices to replicate human intelligence and problem-solving ability.

In other words, artificial intelligence is the scientific discipline that studies how to build machines with human-like cognitive abilities, so that they can perform tasks considered “smart.” Unlike humans, AI systems can process massive quantities of data in many different ways. The goal of AI is to imitate human skills such as judgement, pattern recognition, and decision-making.

In what ways can artificial intelligence be harmful?

Artificial intelligence poses risks that the tech world has long considered. Some of the major risks identified include automation-driven job losses, the spread of fake news, and a dangerous arms race in AI-powered weapons.

1. Lack of AI Transparency and Explainability

It might be difficult to understand AI and deep learning models, even for individuals who work directly with the technology. This makes it harder to comprehend what information AI systems employ or the reasons behind any potentially dangerous or biased conclusions they may make. Consequently, there’s a lack of transparency about how and why AI makes its conclusions. These concerns have led to an increase in the popularity of explainable AI, but transparent AI systems are still a ways off from becoming the norm.

2. Job Losses Due to AI Automation

Futurist Martin Ford told Built In that today’s low unemployment rate, which does not capture people who are not looking for work, is largely the result of this economy creating lower-wage service-sector jobs. Given the growth of AI, however, "I don't think that's going to continue," he said.

3. Social Manipulation Through AI

Online media and journalism have become even more confusing as AI-generated images and videos, AI voice changers, and deepfakes infiltrate the political and social spheres. These technologies can replace one person’s likeness with another’s in an existing image or video, and they can generate lifelike images, videos, and audio clips. As a result, it can be very difficult to tell genuine news from fake news, which gives dishonest actors a new way to spread misinformation and war propaganda.

4. Social Surveillance With AI Technology

Beyond the more existential threat AI poses, Ford is especially concerned about the negative impact AI will have on security and privacy. A prominent example is China’s use of facial recognition technology in workplaces, schools, and other venues. In addition to tracking people’s whereabouts, the Chinese government may be able to gather enough data to monitor their activities, relationships, and political views.

5. Lack of Data Privacy Using AI Tools

AI systems often collect personal data to help train AI models or to personalize user experiences. In 2023, a ChatGPT bug allowed some users to see titles from other active users’ chat histories. The incident is a reminder that data sent to an AI system is not necessarily safe from others.

6. Biases Caused By AI

The narrow perspectives of AI developers may help explain why certain spoken languages and accents remain difficult for speech recognition algorithms to understand. They may also explain why businesses overlook the potential repercussions of a chatbot impersonating well-known historical figures. Businesses and entrepreneurs should take more care to avoid replicating entrenched biases and prejudices that endanger minority communities.

The consequences of AI-generated content for the writing profession in the future

The use of AI in content creation is already transforming the writing industry, and this trend is expected to continue. As AI-powered writing tools become more capable, they will play an increasingly central role in content creation.

One of the most important implications of AI-generated material is its potential to automate many repetitive tasks that human writers currently perform. AI systems can generate different types of content, such as product descriptions and news stories, with varying levels of human involvement. This could lower costs and make content production much faster and more efficient.

At the same time, there are concerns about how AI-generated content will affect the writing industry. Some fear that widespread use of AI in content creation could replace human writers entirely, or at least reduce the demand for them. While AI can create certain kinds of content, it is doubtful that it will ever completely replace human writers. Instead, the future of content is likely to be a blend of machine-created and human-created work. AI will be used to automate tedious tasks and to offer smart suggestions for improving the quality and engagement of content. Human authors will still be needed for the uniqueness and complexity that only humans can provide.

In conclusion, AI-generated content has many exciting and unexplored possibilities ahead of it. Artificial intelligence is not expected to replace human writers, even though it will likely be used more often in content creation. Instead, human-created and machine-generated content will more likely coexist, with AI-powered tools helping human writers produce engaging, high-quality content.

Ending Remarks

Future developments in AI-powered content authoring could fundamentally change how we create, distribute, and consume content. AI-enabled writing tools are already reshaping the content market in two ways. They provide valuable data on audience behavior and content performance, and they help content creators produce high-quality, personalized material at scale. AI-powered writing tools are also being used to optimize content for a variety of platforms and channels, such as email marketing, social media, and search engines, which can greatly increase its reach and engagement.

Deepfake Technology Powers Advanced Malware Attacks on Mobile Banking

Identification of Cyber Threat Actor GoldFactory and its Advanced Banking Trojans

GoldFactory, a Chinese-speaking cybercrime group, has been identified as the creator of several advanced banking trojans. These include a previously unknown iOS malware named GoldPickaxe, which is designed to collect identity documents and facial recognition data and to intercept SMS messages. According to a detailed report by Group-IB, a Singapore-based cybersecurity firm, the GoldPickaxe malware family operates on both iOS and Android. GoldFactory is believed to have strong ties to Gigabud, another cybercrime group.

Since its inception in mid-2023, GoldFactory has been linked to the creation of other Android-based banking malware. These include GoldDigger and its advanced version GoldDiggerPlus, which incorporates an embedded trojan known as GoldKefu.

Targeted Social Engineering Campaigns in Asia-Pacific

The malware created by GoldFactory has been distributed through social engineering campaigns, primarily aimed at the Asia-Pacific region. Thailand and Vietnam have been specifically targeted, with the malware disguising itself as local banks and government organizations. In these campaigns, potential victims receive phishing and smishing messages that direct them to switch to instant messaging applications like LINE. They are then sent fraudulent URLs that install GoldPickaxe on their devices.

Some of the malicious Android applications are hosted on fake websites designed to mimic the Google Play Store or corporate websites, thus facilitating the installation process.

iOS Distribution Scheme and Sophisticated Evasion Techniques

The iOS version of GoldPickaxe uses a different distribution method. Successive versions have abused Apple’s TestFlight platform and malicious URLs. These URLs prompt users to download a Mobile Device Management (MDM) profile, which gives the attackers complete control over the iOS device and enables installation of the rogue app.

The Thailand Banking Sector CERT (TB-CERT) and the Cyber Crime Investigation Bureau (CCIB) uncovered these propagation techniques in November 2023.

GoldPickaxe is also sophisticated enough to circumvent security measures introduced in Thailand. These measures require users to verify large transactions with facial recognition in order to deter fraud.
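The kind of control GoldPickaxe targets can be pictured as a simple step-up rule. Small transfers go through normally, while transfers at or above a threshold also require a successful face match. This Python sketch is hypothetical. The threshold value, the `Transfer` class, and `transfer_allowed` are illustrative assumptions, and they do not describe Thailand's actual rules or any bank's implementation.

```python
from dataclasses import dataclass

# Illustrative threshold only; not the value used by Thai regulators or any bank.
BIOMETRIC_THRESHOLD = 50_000


@dataclass
class Transfer:
    amount: int              # transfer amount in the account's currency units
    face_match_passed: bool  # outcome of a separate facial-recognition check


def transfer_allowed(t: Transfer, threshold: int = BIOMETRIC_THRESHOLD) -> bool:
    """Allow small transfers directly; require a passing face match at or above the threshold."""
    if t.amount < threshold:
        return True
    return t.face_match_passed


print(transfer_allowed(Transfer(1_000, face_match_passed=False)))   # True
print(transfer_allowed(Transfer(80_000, face_match_passed=False)))  # False
print(transfer_allowed(Transfer(80_000, face_match_passed=True)))   # True
```

The rule trusts whatever result the face-match step reports, so its strength depends on that check resisting spoofing. A convincing deepfake built from a victim's own recorded video is aimed at exactly that step.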

Deepfake Videos and Unauthorized Fund Transfers

Security researchers Andrey Polovinkin and Sharmine Low explain that GoldPickaxe tricks victims into recording a video as a confirmation method in the fake application. This recorded video is then utilized as a source for creating deepfake videos using face-swapping artificial intelligence services.

Both the Android and iOS versions of the malware are capable of collecting victims’ ID documents and photos, intercepting incoming SMS messages, and routing traffic through the compromised device. It is believed that GoldFactory actors use their own devices to log into the banking application and execute unauthorized fund transfers.

Comparing the Functionality of iOS and Android Variants

The iOS variant of GoldPickaxe has fewer functionalities compared to its Android counterpart. This is largely due to the closed nature of the iOS operating system and its relatively stricter permissions.