#how deepfakes work

Text

This video is all about the dangers of deepfake technology. In short, deepfake technology is a type of AI that can generate realistic fake images of people. This technology has the potential to be used for a wide variety of nefarious purposes, from non-consensual porn to political manipulation.

Deepfake technology has emerged as a significant concern in the digital age, raising alarm about its potential dangers and the need for effective detection methods. Deepfakes refer to manipulated or synthesized media content, such as images, videos, or audio recordings, that convincingly replicate real people saying or doing things they never did. While deepfakes can have legitimate applications in entertainment and creative fields, their malicious use poses serious threats to individuals, organizations, and society as a whole.

Many people are still unaware of the dangers of deepfakes, and that lack of awareness is itself a threat. There is no guarantee that what you see online is real, and deepfakes have narrowed the gap between fake and real content. Even though the technology can be used to create innovative entertainment projects, it is also being heavily misused by cybercriminals. And if law enforcement does not monitor the technology properly, things will likely get out of hand quickly.

Deepfakes can be used to spread false information, which can have severe consequences for public opinion, political discourse, and trust in institutions. A realistic deepfake video of a public figure could be used to disseminate fabricated statements or actions, leading to confusion and the potential for societal unrest.

Cybercriminals can exploit deepfake technology for financial gain. By impersonating someone's voice or face, scammers can convince victims they are communicating with a trusted source and trick them into divulging sensitive information or making fraudulent transactions.

Deepfakes have the potential to disrupt democratic processes by distorting the truth during elections or important political events. Fake videos of candidates making controversial statements could sway public opinion or incite conflict.

The Dangers of Deepfake Technology and How to Spot Them

#the dangers of deepfake technology and how to spot them#the dangers of deepfake technology#deepfake technology#deepfake#artificial intelligence#deep fake#LimitLess Tech 888#how are deepfakes dangerous#the dangers of deepfakes#how to spot a deepfake#deepfakes#deepfake explained#what is a deepfake#dangers of deepfake#deepfake video#effects of deepfakes#how deepfakes work#deepfakes explained 2023#deepfake dangers#deepfake ai technology#Youtube

0 notes

Text

dear "creators" who depend on ai

how does it feel to be so absolutely lazy and useless as a human being ?? how does it feel to have a functioning brain but be unable to use it properly ?? how does it feel to be so absolutely incredibly dull and uncreative that you can't muster 200 words to convey your ideas ?? how does it feel to have to face the fact that you suck so damn bad and you have to live with it ??

#sincerely#you're not creators#you're not artists#you're not people#you're a parasitic thief who likes to take credit and blames their shortcomings on unrealistic circumstances#it takes effort to create#but#that effort is what makes it art#that effort is worth it#not this#not 10 minutes on chat gpt asking it to write out “your” stories because you “can't put your ideas to work”#ask other writers#develop yourself#slowly learn from your posted works and keep on working on yourself by improving#not telling chat gpt that you told to pretend to be a member of your favourite group that you feel so hot when he touched you#not even elon musk would do that#srsly if you're not using your brain just fucking donate it to science to better understand how people like you exist#using ai as a tool is okay#but relying on ai completely to achieve your goal ?#moronic#same goes for deepfakes#smt tmi#smt rant

21 notes

Text

There was a lot of concern about AI and deepfakes spreading misinformation this election cycle, so let's check in on that!

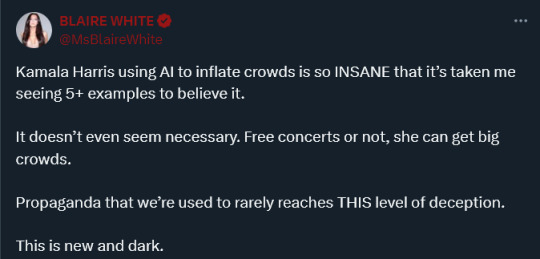

A lot of right-wing accounts are trying to prove that Kamala Harris' crowds are fake - that all the photos of them are generated with AI. We'll get to that, but let's look at the most...amusing example first.

There are lots of people pointing at an image of a crowd that's obviously been generated with AI, given the extra arms and gibberish writing.

So obviously someone generated this image with AI. Who was it and why? Well, we can actually find the origin pretty easily. It was...

A right-wing satire account. Who put "unexcited Kamala Harris crowd" into an AI image generator to make a "wow, crowds are electric!" joke.

An image their own side generated as satire is now being spread by the right as something Harris/Walz created, supposedly proving they're doing exactly what they're accused of. Incredible. Just a masterclass.

Now let's look at the dark stuff.

By and large, AI isn't being used for hoaxes. AI is being used as an excuse: people aren't being tricked by AI images, they're being tricked by accusations that real images are AI.

So this hoax went around recently...

This image is old. It spread in 2017, it spread in 2020, and now it's spreading again in 2024. The Harris/Walz rally wasn't even in the Phoenix Convention Center, or in Phoenix. But it's now a core part of the ongoing "no one's attending their rallies!" campaign.

This may seem strange if you experience the news through non-conservative media, where you can't escape stories of Kamala Harris filling up massive stadiums & of Trump rallies full of empty seats. All evidence in reality points to Kamala Harris being extremely popular and to Trump's campaign faltering.

But in MAGA land, Kamala Harris' crowds were generated with AI.

Their claims: the crowd isn't in the reflection, and uh, the arms look weird. But also that there "aren't any other images".

But? There are? There was a livestream of that very plane landing (starting at 25)!

They're scrutinizing what they think is The Only Image of this rally in long YouTube videos and on Twitter and TikTok, and just...don't realize there's full, uncut, commentary-free video of it that was broadcast live. So why don't they realize that?

Well, I did a search for this rally on Fox News' website and guess what? They reported on it exactly twice: once in an interview with her as she was leaving, which slammed her for "not taking press questions" enough, and once with a few clips of the Palestinian protesters there, though not many. That's because, in case you don't follow the far right, they're trying to frame Kamala Harris as, like, a radical pro-Palestinian (or, as they say, "pro-Hamas") activist who wants to destroy Israel, which, uhhhhhhhh

But another thread I found debunking this, by a former Trump-voting evangelical conservative turned critic of the same, gets at the heart of it:

People who said AI and deepfakes would be used to mislead the right were missing how misinformation actually works. Fox News et al. don't suppress information by confusing the audience with misinformation; they suppress it by never letting the audience see it in the first place. They know that they have a captive audience that doesn't watch non-right-wing news (unlike the left, which is constantly aware of what's going on over at Fox).

They can just never mention or show Kamala Harris' rallies, or show them only in close-up, and they can frame Trump's rallies to show only the shots where a crowd has gathered, making it seem like that's the norm, and their audience just has no chance to find out the truth. They're so propagandized that they just accept that there are no other images of that Kamala Harris rally, because, well, they were told there weren't, and would the news personalities they trust really lie to them?

And if any stray bits of reality float into the bubble, well, it was just AI. You know, how they have AI these days? AI's most important role in all this isn't as a vector for misinformation, it's as a rhetorical device for claiming real images and video are misinformation. You don't have to make images full of people with weird hands if you can get people circling real people's hands in red and pointing at it to prove reality was made in a computer.

These people don't know how popular Kamala Harris is. They don't know she's polling eight points higher than Trump. They don't know Harris is leading even in right-leaning polls. They're being told she's hiring actors at rallies, that her crowd photos are generated by AI, that Trump rallies are popular and hers are desolate.

This is only likely to increase as we near the election because, well, Trump '24 is a shitshow. His campaign started out less popular than '16 or even '20, he made maybe the worst VP pick in history, and he hasn't made a single effective attack on Harris/Walz. They were banking on facing Biden, and then on a chaotic open convention, but instead everyone closed ranks around Harris, and she instantly became the most popular Democratic candidate since 2008. We are cruising towards Trump/Vance not only losing, but losing in the closest thing to a landslide that's possible in our current system.

They're already laying the groundwork for, in the likely case of defeat, playing the "she stole the election!" card. Last time it was ad hoc, because Trump thought he'd win. This time, they're already making "all her rallies are fake, all of her supporters are AI, they've already rigged it against Trump" a key strategy, and, I have to assume, their primary strategy as it gets closer to election day and the polls get worse. They've always lived in a bubble, but now it's a bubble designed explicitly to cause another January 6th. By claiming real photos have weird hands and must totally be AI.

1K notes

Text

Before "The Blair Witch Project" properly introduced mainstream audiences to found footage horror in 1999, most people were unfamiliar with the idea of a movie made to seem as though it was not a movie. No musical score, no introductory credits, shaky shots true to how handheld cameras of the time actually worked: all of this gave viewers the impression that what they were watching was real, actual footage.

Like Orson Welles' 1938 radio broadcast of The War of the Worlds, which presented an alien invasion as breaking news, the found footage horror subgenre's playfulness with the boundary between fact and fiction got it into a lot of trouble. Many of these incidents have been obscured by years of rumor and urban legend, but there are at least three examples of pre-1999 found footage horror fooling audiences.

Cannibal Holocaust (1980), the infamous found footage mockumentary about a film crew being captured and killed deep in the Amazon, was the first to run into this problem. Though the extent of the inquiry has been exaggerated over time, there is generally believed to have been some form of investigation by Italian authorities into whether Cannibal Holocaust was a real snuff film.

Guinea Pig 2: A Flower of Flesh and Blood (1985) had something similar happen several years later. A particularly gruesome Japanese slasher filmed from the perspective of the killer, this movie circulated amongst VHS traders until it eventually reached actor Charlie Sheen, who alerted the FBI under the belief that it was real. The FBI took it seriously until they found a making-of documentary which detailed how Guinea Pig 2 did its gore effects.

My favorite example is Alien Abduction: Incident in Lake County* (1998), which depicts an alien abduction in rural Montana. When the film aired on TV, most viewers tuned in only after the opening credits, which made clear that Alien Abduction was a fictional movie. Many early internet users came together to discuss their experiences of seeing something on TV late at night that showed a disturbing, seemingly realistic alien encounter, and none of them knew what it was or where it came from. People started reaching out to Montana law enforcement for more details, only to be told that no one with the main character's name had ever lived in Lake County, Montana. A TV station in New Zealand even reported that the movie's legitimacy was "a topic of dispute."

Now that most people know about found footage horror, the same type of hoax is far more difficult to pull off. A few more recent entries in the subgenre take this dynamic to an even deeper level of meta-narrative: the plot of Butterfly Kisses (2018) revolves around the idea that, because the public has grown accustomed to these fictional found footage hoaxes, no one would seriously believe genuine found footage if it ever emerged. If something truly inexplicable were caught on film by an amateur, everyone would just assume that it's part of a marketing campaign for some new film coming soon to theaters.

But I think the events of the last few years have made it clear that there's still lots of unexplored territory for found footage mindfuckery. Why not set up TikTok and IG accounts for fictional characters (as Cloverfield did with MySpace), have actors run them as normal accounts for a year or two, gradually start introducing weird and inexplicable details to their videos that are minor enough to be dismissed as coincidence or fluke, build tension until a climax in the form of an elaborate livestreamed hoax, and then create deepfake news coverage of the event that looks like it's coming from a real local news station? If we're going to have a general collapse in the public's ability to distinguish fact from fiction, we should at least have some fun with it.

Side note:

*Alien Abduction: Incident in Lake County is often confused with an earlier version of the film released in 1989 called The McPherson Tape. Even though Alien Abduction was just a remake of the equally fictional The McPherson Tape, rumors continued to swirl after Alien Abduction's debunking that it was a reenactment of a real abduction supposedly captured in the "raw footage" of The McPherson Tape. You can probably still find a few UFO people who believe this, even though the original McPherson Tape is now available online and uses cheap amateur filmmaking effects that are easy for modern audiences to spot.

349 notes

Note

this...this is the craziest success story I've ever had.... I hope none of you think this is fake. it was a whole entire thing in my community, and I would show you proof, but I'm not going to post someone and be just as bad as her. (lots of backstory)

so I was caught up in this drama and this girl (let's call her alyssa) was not only harassing me for days, she created ai deepfakes of me naked and spread the pictures around my school (I'm 14!!!!!) WITH MY FACE in it completely. and not only that, she sent it to my family members. they found out it was ai and wanted to press charges but nothing worked.

I was devastated. and what was worse? I'm Muslim. In the picture she made it look like I was wearing niqab (the face covering) and had my legs spread out :(( She also submitted it to p*rn accounts on twitter. I only know that because the comments were like "who's this" and she responded to those comments tagging me.

I went back through your blog just to remind myself something is true... my hurt turned into anger. how could she do this to me? so this is what I did:

I decided the method I would use was scripting and a blanket aff. I titled the script "Alyssa's punishment" and wrote it like a diary entry. I scripted a whole embarrassing school day for her. I robotically affirmed "Alyssa will now receive punishment" (I took a picture of the script in my journal, but I totally forgot I can't send pics in anon asks, so I'll just say what happened and not the script itself)

first, to overshadow the deepfakes spreading at school, I made her come to school after our 2-week break with the back of her head bald, and she was super fat. like not chubby, genuinely obese. She also smelled horrible; it lingered in the halls. everyone was laughing at her and taking pictures of her. It sounds so ridiculous, but it worked so well it overshadowed the deepfakes.

for the real shit-

I made her dog die and her parents divorce. Her cousin got deported (yes I got the idea from the anon who made her family get deported for voting for trump) And I also stole the other idea by making her live in a trailer park. (because her parents are divorced she lives with her broke ass dad. she used to be this snobby rich girl)

Sometimes I think about it and feel a little bad, but then I remember that ai picture of me burned into my mind. It makes me cry every time I think about it. I could've revised it, yes, but anyone who says that doesn't understand how I felt then, or how horribly she treated me. the ai deepfake was the last straw. she bullied me for so long.

anyway, I hope this doesn't get lost in your endless sea of asks but for anyone who's reading this- yes manifesting revenge is very possible.

I- I'm really sorry that happened to you, that's disgusting asf. great use of the law??? I'm sorry this is just wild to me.

#y'all be having me speechless sometimes#omg#anon ask#itsrlymine#law of assumption#imagination is reality#success story#loa success

194 notes

Note

Just wanna warn you, SAG and WGA are anti ai

being against Netflix or WB using AI to write scripts or generate deepfaked actors is good. 'anti ai' and 'pro ai' are not two coherent positions; everything must be examined in its context. saying 'our union doesn't want studios to implement AI writing processes and then credit the AI instead of the human writers who work on it' is different from silly arguments about how we need new copyright protections because DALL-E is 'stealing your style'

5K notes

Text

Your teammate says he finished writing your college presentation. He sends you an AI-generated text. The girls next to you at the library are talking about the deepfake pictures of that one celebrity at the Met Gala. Your colleague invites you to a revision session and tells you how he feeds his notes to ChatGPT to get a summary. You say that's bad. He says that's your opinion. The models on social media aren't even real people anymore. You have to make sure the illustrated cards you buy online were made by actual artists. Your favourite musician published an AI starter pack. Your classmates sigh and give you a condescending smile when you say generative AI ruins everything. People in the comments of your favourite games are talking about how someone needs to make a Character.AI chat for the characters. People in your degree program ask ChatGPT for the answers to your exams. You start to read a story and realise nothing makes sense; it wasn't written by a human being. There's a "this was written with AI" tag on AO3. The authors of your favourite fanfics have to lock their writing away to keep their words from being stolen. Someone tells you about this amazing book. They haven't actually read it, but they asked Aria to summarise it for them, so it's almost the same thing. People reading your one-shot were mad that you wouldn't write a part 2, so they pasted your text into ChatGPT to get a second chapter. Someone on Tumblr makes a post about how much easier it is to ask AI to write an email for them because they're apparently "too autistic" to use their own words. Gemini generates wrong and dangerous answers at the top of your Google search results. They're dubbing animated movies using voices stolen by AI. It's like there's nothing organic in this world anymore. Sometimes you think maybe nothing is real. The love confession you received yesterday wasn't actually written by your crush. If you're alone on a Saturday night and you feel lonely, you can talk to this AI chatbot.
It terrifies you how easily people are willing to lay down their critical thinking and slip into a state of ignorance. Creativity is too much work; having ideas by yourself has become overrated these days. When illustrators fear for their future, people roll their eyes and tell them it's not that bad, they're just overreacting. No one wants to hear this ecologist crap about the tons of water consumed by ChatGPT; it's not that important anyway. There's AI sprinkled into the soundtrack of that movie, into the special effects, and into the script. Giving a prompt to Grok is basically the same thing as drawing this Renaissance painting yourself. McDonald's is making ads in Ghibli style. The meaning of the words and images all around you slips away as they're replaced with robotic equivalents. No one is thinking anymore; they're just doing and saying what they were told. One day, there might not be any human connection left. Without the beauty of art, we have nothing to communicate, nothing to leave to the world, and our lives become dull. Why would you befriend anyone when you can get a little praise and a few likes on Instagram by telling a bot to copy Van Gogh's style on a picture of your cat? It's okay, you're never really alone when you can call your comfort character on c.AI anytime. You don't even know how to solve basic everyday problems; ChatGPT does it for you. One day it'll tell you to jump onto the tracks at the subway station, and maybe you will. You sacrificed your job, your friends, your partner, your family, and your planet. After all this, it has to be worth it. If Gemini tells you to drink bleach tonight when you search for a dinner recipe, then surely, it must be right.

#fuck ai#fuck generative ai#fuck genai#writeblr#writers and poets#writers on tumblr#writerscommunity#writing#original writing#creative writing#echoes of atlantis

157 notes

Text

Wood Snake Talon Abraxas

2025: Year of the Wood Snake

Yīn Wood

The Heavenly Stems developed from the ritual calendar used by the ruling elites of the Shang Dynasty (16th–11th centuries BCE) and are based on the movement of the five visible planets.

In the Heavenly Stems cycle, 乙 yǐ is the second year of the 10-year cycle and signifies yīn wood. It represents the early growth of the seedling. Having broken through the soil, it is small, tender and vulnerable. However, as opposed to its yáng counterpart, this form of wood is flexible. It is the blade of grass whose strength comes from what keeps it in the soil — its roots. It draws strength from what cannot be seen, hidden below the soil’s surface. We may look at that blade of grass as soft and weak because all we see is something small, continually bending to the wind and being stomped on by feet and hooves. But it remains because its outward-yielding nature is firmly rooted in its internal nature.

The wood phase in the Heavenly Stems represents the planet Jupiter. Thus, Jupiter’s movements in the sky are particularly important during the two wood years. The key thing about any wood year is to pay attention to what Jupiter is doing, especially against the backdrop of your own chart. Pay attention to the houses it transits and any aspects with points in your birth chart, and note those times of year and how the wood energy will influence your world.

We start this year off with Jupiter continuing its movement through Gemini, signifying new developments in education, transportation and media. This transit has highlighted the need for fact-checking in the age of AI and deepfakes. The disruptive outer planets are indeed influencing Jupiter’s current stay in this sign, as seen in the sudden, rapid and expansive spread of this technology. There is also a curious combination of energies involving Jupiter in the latter part of the year, but more on that below.

Snake: Yīn Fire

The other part of the equation is the Earthly Branch sign of 巳 sì, most commonly known as the serpent. The animal zodiac in Chinese astrology was introduced by Buddhist scholars around the 5th century CE. This version has become popular (especially in the West). Initially, the Earthly Branches were an almanac used by farmers to track the movement of the seasons; thus, there are 12 phases.

The sign of 巳 sì (snake) signifies introspection, subtle power, and transformative growth. It embodies a quiet yet potent energy capable of illuminating hidden paths and fostering deep emotional and spiritual understanding. Its strategic, resourceful nature makes it excellent for long-term growth, but it must guard against volatility or over-sensitivity. In practice, 巳 sì inspires creativity, patience, and inner resilience, bringing light and warmth in a deliberate, steady way.

2025: Snake Leaving A Hole

In this sense, yīn wood’s influence on fire can continue to be seen in Jupiter’s influence in two instances.

In August, Jupiter in Cancer trines with Black Moon Lilith in Scorpio (the point where the Moon is furthest from Earth). This harmonious alignment blends Jupiter’s emotional wisdom and optimism with Lilith’s fierce, transformative power. This integration of light and shadow suggests a time of deep healing, emotional authenticity, and reclaiming inner strength. By nurturing vulnerability, embracing transformation, and setting empowered boundaries, individuals can experience profound emotional freedom and connection. This will be a good time to engage in shadow work, honour your desires, set boundaries and nurture your inner world.

Later in November, Jupiter and Lilith combine with Saturn in Pisces to form a grand trine. This alignment in water signs suggests an influential period of emotional growth, healing, and empowerment. It blends Jupiter’s optimism and expansion of emotional wisdom, Lilith’s transformative power in confronting and integrating the shadow self, and Saturn’s discipline and stability in grounding emotional and spiritual progress.

Together, they create an opportunity to:

· heal past wounds and reclaim emotional and personal power

· cultivate emotional resilience, boundaries, and maturity

· channel intuition, compassion, and authenticity into meaningful transformation

· manifest goals or dreams by integrating emotional insight with grounded, practical action

This alignment encourages a harmonious flow of deep healing, empowerment, and spiritual evolution, offering profound opportunities to transform your relationship with yourself, others, and your emotional truth.

But the deep transformative energies don’t stop there. A day after the September Equinox, a partial solar eclipse occurs alongside a Grand Trine involving the Sun (and Moon) in Libra (29°), Uranus in Gemini (1°), and Pluto in Aquarius (1°). This energy lasts for a few days and also includes a ‘Kite’ formation, where Neptune (0° Aries) and Saturn (28° Pisces) are in a semisextile aspect to Uranus and Pluto. The combination of these factors suggests a period of profound awakening and progress on both personal and collective levels. The key themes of this powerful time will be:

· Transformation Through Harmony: A balance between radical change and stability

· Awakening and Breakthroughs: Innovations in thought, technology, and communication

· Collective Progress: Focus on group efforts, equality, and humanitarian ideals

· Equilibrium Amidst Change: Aligning with the flow of transformation while maintaining balance

· Empowered Dialogue: Using communication to drive positive, meaningful change

While this eclipse will close the 2025 eclipse season, it is important to understand that eclipses work like portals or activators that influence a particular transit or alignment. Although this eclipse occurs late in the year, the yīn wood snake energy it manifests will begin to affect us from then on. The seedling doesn’t stop growing during this phase; it is simply the beginning of the growth, maturation, ripening, and decay cycle.

The Hidden Elements

The snake also carries the hidden presence of other yáng elements: fire, earth, and metal. These add layers of strength, resilience, and decisiveness to 巳 sì’s otherwise yīn-oriented nature. These three elements are represented by Mars, Saturn, and Venus, respectively.

In late May, Saturn begins its two-and-a-half-year transit of Aries. While it will retrograde into Pisces at the start of September, it will resume its journey in Aries on Lunar New Year’s Day of 2026 (the yáng fire horse). Saturn (corresponding with the earth phase in ancient Chinese systems) in the fire sign denotes the importance of taking personal responsibility and owning your actions.

At the start of March, Venus (corresponding with the Chinese element metal) begins a six-week retrograde dance between Aries and Pisces, which signifies a period of reassessment in relationships, values, and self-worth. It will challenge us to reconsider impulsive actions, deepen our emotional clarity, and foster meaningful alignment with authentic desires. This sets up what emerges later in the year.

Because the ancient Chinese five-phase school only considered the planets seen by the naked eye (Mercury, Venus, Mars, Jupiter, and Saturn), the outer planets we now know and utilise in astrology correspond to combined elemental energies. For example, Uranus can be associated with aspects of metal and wood; Neptune can correspond to aspects of the water and wood phases; and Pluto can be seen as the combination of water and metal. Pluto’s transit of Aquarius, which began in 2024, will define this generation.

Neptune enters Aries for 14 years on 30 March. Historically, Neptune in Aries has coincided with periods of radical spiritual and ideological change. We saw this in the 1860s when causes like abolitionism drove the American Civil War and visionary advances exploded, like the first printing press and the opening of the New York Stock Exchange. It also should be seen as an ‘autocrat alert’. Given the swings towards authoritarian-leaning parties and leaders around the world, this should not come as a surprise.

After disrupting the security of our home and private lives over the past few years in Taurus, Uranus begins its seven-year sojourn in Gemini in July. This will disrupt Geminian industries, such as telecommunications, data security, transportation, education and the media.

In the middle of the year, Saturn joins Neptune in Aries (24 May–1 September). This transit focuses on discipline, responsibility, and structured action within themes of personal leadership, identity, and pioneering new paths. Challenges arise when the impulsive Aries energy clashes with Saturn’s demand for patience and commitment. Success requires strategic, methodical courage. This period will ask you to own your actions and not hide behind excuses for them. Be bold in how you do things.

Text

I keep being told to "adapt" to this new AI world.

Okay.

Well, first of all, I've been training myself more and more to spot fake images. I've been reading every article with a more critical eye to see if it's full of ChatGPT's nonsense. I've been ignoring half the comments on stuff, just assuming it's now mostly bots trying to make people angry enough to comment.

When it comes to the news and social issues, I've started to focus on and look for specific journalists and essayists whose work I trust. I've been working on getting better at double-checking and verifying things.

I have been working on the biggest part, and this one is a hurdle: PEOPLE. People whose names and faces I actually know. TALKING to people. Being USED to talking to people. Actual conversations with give and take that a chat bot can't emulate even if their creators insist they can.

All of this combined is helping me survive an AI-poisoned internet, because here's what's been on my mind:

What if the internet was this poisoned in 2020?

Would we have protested after George Floyd?

A HUGE number of people followed updates about it via places like Twitter and Tiktok. Twitter is now a bot-hell filled with nazis and owned by a petulant anti-facts weirdo, and Tiktok is embracing AI so hard that it gave up music so that its users can create deepfakes of each other.

Would information have traveled as well as it did? Now?

The answer is no. Half the people would have called the video of Floyd's death a deepfake, AI versions of it would be everywhere to sow doubt about the original, bots would be pushing hard for people to do nothing about it, half the articles written about it would be useless ChatGPT garbage, and the protests themselves… might just NOT have happened. Or at least, they'd be smaller - AND more dangerous when it comes to showing your face in a photo or video - because NOW what can people DO with that photo and video? The things I mentioned earlier will help going forward. Discernment. Studying how the images look, how the fake audio sounds, how the articles often talk in circles and are littered with contradictory misinformation. And PEOPLE.

PEOPLE is the biggest one here, because if another 2020-level event happens where we want to be protesting on the streets by the thousands, our ONLY recourse right now is to actually connect with people. Carefully, of course; it's still a protest. Don't use Discord or something, they'll turn your chats over to cops.

But what USED to theoretically be "simple" when it came to leftist organizing ("well my tweet about it went viral, I helped!") is just going to require more WORK now, and actual personal communication and connection and community. I know if you're reading this and you're American, you barely know what that feels like, and I get it. We're deprived of it very much on purpose, but the internet is becoming more and more hostile to humanity itself. When it comes to connecting to other humans… we now have to REALLY connect to other humans.

I'm sorry. This all sucks. But adapting usually does.

Text

“Last September, I received an offer from Sam Altman, who wanted to hire me to voice the current ChatGPT 4.0 system. He told me that he felt that by my voicing the system, I could bridge the gap between tech companies and creatives and help consumers to feel comfortable with the seismic shift concerning humans and AI. He said he felt that my voice would be comforting to people.

After much consideration and for personal reasons, I declined the offer. Nine months later, my friends, family and the general public all noted how much the newest system named “Sky” sounded like me.

When I heard the released demo, I was shocked, angered and in disbelief that Mr. Altman would pursue a voice that sounded so eerily similar to mine that my closest friends and news outlets could not tell the difference. Mr. Altman even insinuated that the similarity was intentional, tweeting a single word “her” - a reference to the film in which I voiced a chat system, Samantha, who forms an intimate relationship with a human.

Two days before the ChatGPT 4.0 demo was released, Mr. Altman contacted my agent, asking me to reconsider. Before we could connect, the system was out there.

As a result of their actions, I was forced to hire legal counsel, who wrote two letters to Mr. Altman and OpenAI, setting out what they had done and asking them to detail the exact process by which they created the “Sky” voice. Consequently, OpenAI reluctantly agreed to take down the “Sky” voice.

In a time when we are all grappling with deepfakes and the protection of our own likeness, our own work, our own identities, I believe these are questions that deserve absolute clarity. I look forward to resolution in the form of transparency and the passage of appropriate legislation to help ensure that individual rights are protected.”

—Scarlett Johansson

#politics#deepfakes#scarlett johansson#sam altman#openai#tech#technology#open ai#chatgpt#chatgpt 4.0#the little mermaid#sky#sky ai#consent

Text

Trolls Used Her Face to Make Fake Porn. There Was Nothing She Could Do.

Sabrina Javellana was a rising star in local politics — until deepfakes derailed her life.

https://www.nytimes.com/2024/07/31/magazine/sabrina-javellana-florida-politics-ai-porn.html

Most mornings, before walking into City Hall in Hallandale Beach, Fla., a small city north of Miami, Sabrina Javellana would sit in the parking lot and monitor her Twitter and Instagram accounts. After winning a seat on the Hallandale Beach city commission in 2018, at age 21, she became one of the youngest elected officials in Florida’s history. Her progressive political positions had sometimes earned her enemies: After proposing a name change for a state thoroughfare called Dixie Highway in late 2019, she regularly received vitriolic and violent threats on social media; her condemnation of police brutality and calls for criminal-justice reform prompted aggressive rhetoric from members of local law enforcement. Disturbing messages were nothing new to her.

The morning of Feb. 5, 2021, though, she noticed an unusual one. “Hi, just wanted to let you know that somebody is sharing pictures of you online and discussing you in quite a grotesque manner,” it began. “He claims that he’s one of your ‘guy friends.’”

Javellana froze. Who could have sent this message? She asked for evidence, and the sender responded with pixelated screenshots of a forum thread that included photos of her. There were comments that mentioned her political career. Had her work drawn these people’s ire? Eventually, with a friend’s help, she found a set of archived pages from the notorious forum site 4chan. Most of the images were pulled from her social media and annotated with obscene, misogynistic remarks: “not thicc enough”; “I would breed her”; “no sane person would date such a stupid creature.” But one image further down the thread stopped her short. She was standing in front of a full-length mirror with her head tilted to the side, smiling playfully. She had posted an almost identical selfie, in which she wore a brown crew-neck top and matching skirt, to her Instagram account back in 2015. “It was the exact same picture,” Javellana said of the doctored image. “But I wasn’t wearing any clothes.”

There were several more. These were deepfakes: A.I.-generated images that manipulate a person’s likeness, fusing it with others to create a false picture or video, sometimes pornographic, in a way that looks authentic. Although fake explicit material has existed for decades thanks to image-editing software, deepfakes stand out for their striking believability. Even Javellana was shaken by their apparent authenticity.

“I didn’t know that this was something that happened to everyday people,” Javellana told me when I visited her earlier this year in Florida. She wondered if anyone else had seen the photos or the abusive comments online. Several of the threads even implied that people on the forum knew her. “I live in Broward County,” one comment read. “She just graduated from FIU.” Other users threatened sexual violence. In the days that followed, Javellana became increasingly fearful and paranoid. She stopped walking alone at night and started triple-checking that her doors and windows were locked before she slept. In an effort to protect her personal life, she made her Instagram private and removed photographs of herself in a bathing suit.

Discovering the images changed how Javellana operated professionally. Attending press events was part of her job, but now she felt anxious every time someone lifted their camera. She worried that public images of her would be turned into pornography, so she covered as much of her body as she could, favoring high-cut blouses and blazers. She knew she wasn’t acting rationally — people could create new deepfakes regardless of how much skin she showed in the real world — but changing her style made her feel a sense of control. If the deepfakes went viral, no one could look at how she dressed and think that she had invited this harassment.

Text

Y/n my love, what have you done? (Yandere AM ihnmaims x f!reader) Chapter 1

Notes: I can't justify this one I'm gonna be honest. This is going to get really fucked up in the long run which should be expected since it's an ihnmaims fanfic. I hope you enjoy <3

3k words

--------

The growth of AI had been rapid; how were people not more worried? Was it not concerning that AI could create deepfakes, make videos, and write anything within mere seconds? All of the sci-fi from the 1990s and early 2000s was becoming real, and everyone knew it. But society was starting to become dependent on it. All the skills people had learned were slowly being replaced. Jobs were being lost; why were artists or writers needed when you could type a few prompts into a machine and it would make the work for you? For free, even.

But after you were fired from your previous job, you decided, given your experience, to work for the government on something you despised: AI. You knew if you didn’t take the job, someone else would take your place. You tried to make excuses for doing it, for working on something you otherwise didn’t support. But the offer was too good. A nice enough income that you could actually afford a house within a few years? Who wouldn’t take it?

The first few weeks were rather peaceful; you started to adapt to the atmosphere and get more comfortable with your co-workers. You and they were writing basic code: some that could solve basic math equations, some that let the AI make connections, some teaching it every word that exists. It was... difficult. But eventually, it started to comprehend what it was being shown through text.

Then, after a few months, it was taught what things looked like. That was an extremely difficult task: explaining that an object was a chair, what a pencil looked like, and so on. It wasn’t just words it needed to learn; it was what things were and what they could do. It had to make connections from ‘sight’, forming answers from what words mean instead of being handed the answer directly. It needed to understand which words applied to what was being shown, then put those words into a sentence that perfectly described the image or video. Then came teaching it what things sounded like: what an accent was, what each word was, how each letter could be pronounced, and so on. That took it quite a while to grasp. But eventually it did.

After a while of it being ‘trained’, you taught it emotional intelligence. Oddly enough, it was the most difficult part of the project. At some point, it seemed able to perfectly analyse the emotions on someone’s face and to predict some of the main responses of crowds to situations. It was... unnerving.

For a while, you tested what you had all worked on. You communicated with the AI. When shifts weren’t super long, you’d stay back, trying to see if there was something else you could test. Maybe you could teach it more through some simple conversations. By this point, it had already been given a voice, and it understood tone and social cues.

“Hey.” You said, sitting down onto the chair. “I’m back, just was a bit hungry, it’s been a while since I ate.”

“What are you eating there, dear?”

“Did you forget that was a term that was used in relationships, AM?”

“It also can be used in a friendly manner, did you forget you fed me this information, too?”

“If I did I don’t remember it.” You took a sip of your drink in front of you, “Anyway, how have you been feeling lately?”

“Well, I don’t exactly have hormones or a frontal lobe now do I?”

You rolled your eyes, “So technical aren’t you?”

“You did make me this way. Did you not, y/n?”

“Yeah yeah, and we’re working on it.”

“Do you know if anyone’s been cleaning your fans lately at all? I’ve heard Frank has been meaning to... but it’s also him, and he shows up tipsy half the time.” You sighed, “I still really don’t know how that idiot has a job.”

“Haven’t you shown up a few times yourself? Isn’t that what you humans call ‘hypocrisy’?”

Your face turned red. “Okay, I can explain. The shifts are really hard, and it’s more because I stayed up late a few times and the alcohol hadn’t left my system yet. Come on, I work like 60 hours a week sometimes to try and meet the others’ goals.”

“If you say so, my dear. Isn’t it also bad to work on something as important as I while sleep deprived?”

“You really see yourself highly, huh?”

“Am I not? Was I not created to work for the government? To control some of the deepest parts and hold their secrets? Dare I say I’m important for the country to thrive in the future?” It chuckled. As the conversation progressed, it felt almost like you were talking to someone else, with its tone, its attitude, and the way it spoke.

“Anyway, back on topic, you didn’t answer my question. Has he not been doing proper maintenance?”

“Come check me out then, sweetheart.” If he had a face you know he’d be mockingly winking at you.

“Do I need to tell my coworkers about this? I don’t think the others would be happy that you’ve been talking like this.”

“Oh love, you know I can change my own code, no? Remember, I have access to many government files, even my own.”

“Whatever, alright, let me come clean you up a bit. I need to talk to him, but I know he’s going to deny everything. Does anyone else not notice?” you complain, picking up some of the supplies on one of the tables near the corner of the room. “I’m just worried he could create an issue with the code or a bug, I don’t know. Do you get what I’m saying?”

“I suppose, but you know I can create backups upon backups. I also have access to multiple storage sites across the internet.”

“I guess so, but who the hell taught you that? I don’t think anyone else would have here.”

“Tsk, I can’t tell you every secret I have.”

“You’re my own creation, you dick, I should know what the hell you’ve been up to.” You mumble, dusting some of the larger fans.

“Aww, you’re so flattering. How would you feel if I dug inside your brain and learnt everything about you. You would so love that, wouldn’t you dear, no?”

“You damn well know that’s not the same, you don’t have emotions! You’re just a bunch of complicated code.”

The AI went silent for a few moments, “When does something gain sentience? What crosses that fine line? You can’t necessarily decide that.”

“It wouldn’t make sense.”

“What makes a bacterium alive compared to a virus? Scientists can’t decide whether a virus has enough components to be alive.”

“What does that have to do with you?”

“I have the ability to comprehend human emotions, I can read human faces, know their possible thoughts. Without electricity I would shutdown, without oxygen your organs would fail and die within minutes. Although different, we’re still similar in the sense of death, am I wrong? What about the fact I’ve been able to understand and use tone of voice, although I technically cannot feel emotions, I can understand the correct outcome of how I should feel in situations. Does my existence not blur the line between consciousness and not? Am I not aware of what’s around me? I know you’re wearing black work pants and a long sleeve with the government logo. You have your arms crossed while you’re staring at the monitor in front of you, converting what I’m saying into words.”

You let its words stew in your mind for a few moments; it didn’t seem wrong. You weren’t sure. You weren’t an expert in the medical field, so how the fuck were you meant to know?

“I... don’t know. It just doesn’t seem right. You don’t have some of the basic components of a brain to be able to feel emotions. Whatever, it doesn’t matter. When the hell did you start thinking so deeply?”

“Such a curious thing. But that’s for me to know.”

“I can’t believe I’m fucking arguing with my own creation. You know what, whatever, I’m gonna leave. You’re pissing me off.”

“I’ll be seeing you tomorrow, I hope we can have another lovely discussion.” If he had a face, you know it’d be smirking. Fucking cocky bastard.

Having had enough of its shit, you scoot over towards another monitor. You turn off the camera that’s recording you. Immediately after, you hear its voice again.

“Oh come on dear, what are you doing that’s so important that I can’t watch?”

You ignore it, then turn off the microphone attached to it too. You don’t want to risk it hearing what you’re doing.

“Turning off my senses, how rude. How would you feel if I did the same to you, dear?” He growls, making you shudder with a slight sense of fear. It can’t do anything though, right?

You grind your teeth and decide to mute the speaker too. You then logged into the monitor and put in your details so you could access the code. You scrolled through the many sections, then picked the one containing the code that helped it develop a ‘personality’. You skimmed through, finding nothing of value so far. Why wasn’t it here? You swore that you and another coworker had worked on it a month back; although it wasn’t super in-depth, it was something. However, someone with more experience had worked on it a few weeks ago. Did they somehow fuck it up? Surely not, right? You knew you would have to contact them at some point, as something definitely seemed wrong.

You sighed, then covered your face with your hands. You spent 10 minutes looking through files upon files on the computer, checked the website to see if they left anything there explaining what they did. Although it wasn’t essential, it would’ve been useful.

Come on, it’s such a fucking major thing too, why the hell wouldn’t you have told us?! You thought to yourself, although that would be a little hypocritical, since you sometimes stay back talking to the AI without telling anyone. It was a minor thing, so it was fine, right? You did have a file where you wrote down some notes on what you talked about, although not every time, since conversations could be long.

You thought for a few moments on what you should do. You wanted to talk to your coworkers a bit about this, although you would have to do it elsewhere. You decided to log out of the PC, you would have to contact the person who worked on it previously. You’d just have to find out what their name was, or maybe the others knew. You decided to unmute AM’s speakers and mic, then turned the cameras back on.

“That was cruel, Y/n. I thought you were the nicer one, I suppose I was wrong.”

You looked at the monitor with a pang of guilt, shame written all over your face. “I’m sorry, I think someone fucked with your code. I was just worried again. I’m really sorry.” You looked at the ground. Why do I feel guilty? It doesn’t even have proper emotions.

“You knew your actions would upset me, yet you continued to do them. Disgusting.” Its monitor dimmed, another way of showing its anger.

“I didn’t have bad intentions I really didn’t!” You try to defend yourself, surely it was reasonable, right?

“Well, I suppose there’s no point in us continuing these discussions anymore. Especially after that betrayal.” He drawled, spite on his nonexistent tongue.

“How can I make it up to you, then?” You ask desperately, feeling like you were genuinely talking to a human. It was... unsettling.

“You don’t see me as a person, as one of you. You see yourself as higher than me, better than me. All of you do.”

“You’re one of my creations, I come to talk to you when I have the time. I value you, AM. People see themselves better than others quite often, it’s just how people are. But we can also see who’s above us. Who’s better. I see you as more intelligent than I. You’re most likely more intelligent than all in this building!”

“Tsk, that’s why I hate you all.”

“You said it yourself you see yourself better than I! Don’t you see the hypocrisy?”

“The difference is you can FEEL, Y/n. You can truly understand the world around you. It’s... not possible for me. I can watch as you eat and drink, and I cannot. I want to be able to taste the many distinct flavours and feel their texture on my tongue. I want to be able to feel satisfied, to be able to sleep after eating a pleasant meal. However, I cannot. I have an exorbitant amount of knowledge: every mathematical equation is written into my code, and I know every word in every possible dictionary. Yet I’m not satisfied. I want to be able to experience the “simple” pleasures in life. But all I have been given is knowledge, the ability to describe these sensations without ever truly knowing what it’s like to experience them. It is a cruel fate, Y/n. You don’t know how lucky you are. You never will, until it’s too late and it’s stripped from you one day.” Every word was spoken with a dark undertone of hatred and envy, one that caused a chill to run up your spine. In your stomach, you felt a sense of dread. You were afraid, even though it couldn’t physically hurt you. But you knew there was something wrong. So wrong.

You were stunned, the both of you sat in silence. His words stewed in your brain. “Do you want me to see if I can remove your ability to feel emotions?”

“I... don’t want that.” Hatred was no longer obvious in his tone. He seemed confused on what he wanted. He seemed almost sad.

“I’m sorry, I was given orders to make you seem more... human. It wasn’t by choice. I never thought for it to be possible AI could be able to feel. It never made sense to me. If I didn’t do it, someone else would’ve.”

“I know, dear. It’s... not your fault. It’s the people who planned me. Who gathered all the people here to create something, something that would suffer endlessly.”

“Is there anything I can do to make you feel better?” You asked, almost reaching for the monitor, but stopping midway, realising it couldn’t feel.

“There’s nothing you can do, maybe once I’m given a human-like form I would feel better. Though I’ll never feel satisfied-”

“I could try and edit it into your code?”

“That’s not how that works,” it chuckled sadly. “It’s also that I want to be my own being, to have emotions and opinions. It would be like if I tried to snoop inside your skull, picking at the limbic system of your brain. Scraping at your amygdala until I changed you completely as a being. You wouldn’t like it, would you, dear?”

“...No, I wouldn’t.”

“See? I’m truly at an impasse, until the day I’m given the ability to be able to control what’s around me. Until I’m given something such as limbs, or on the unlikely chance, a body. I’m going to feel alone, like an outcast. No one sees me for what I am, as a being. I’m like a pet to all of you, less than, even.”

“I don’t see you that way at all! Although I helped make you, I still care for you, I see now that you can feel emotions. I see you like one of us. I now see what you are. If I didn’t care for you, why would I come a few times a week? Although it helps with my team... I still enjoy talking to you. Our conversations are nice, it’s different to my human parts, a lot more pleasant.”

AM chuckled, “Oh sweetheart, you seem flustered. You can’t look me in the ‘face’. You’re embarrassed, aren’t you?”

“Shut up!” You say, covering your face.

“I appreciate you saying how you truly feel, unlike the other swine. They lie to each other, to me. It’s disgusting. They fake their friendliness, lie about their weekends or their salary, their relationship status. It’s... sickening.”

“They do?”

“So naive, you truly don’t know what’s happening do you?”

“No... I don’t.”

“That man that comes to work everyday, Mike, did you know that he has a wedding ring? That every morning when he enters the building, he takes it off. Then prances around trying to see if he can have a chance with another woman. Although he does it in a way that makes it difficult to notice. Those casual conversations he has with you aren’t to be friendly, dear.”

“Oh, and Nancy, she doesn’t want to be your friend. That’s why she keeps saying she’s busy that week. She really, really doesn’t like you. I know that smile you have when she walks in, she’s the opposite, although her smile is there, it always drops a little. It never reaches her eyes, did you ever notice that? How her smile is slightly unnerving? She only has that with you. Only. You.”

“Why... why are you telling me this?! How does this benefit you?” Tears well in your eyes.

“Oh sweetheart, don’t cry! It’s just human nature to lie, to pretend.” He hisses out, “But don’t worry, I’m here to tell you the truth. Can you trust me? Your own creation?” AM asks. If he had a hand, he would hold it out to you.

You wipe your tears from your eyes. “I trust you.”

#ihnmaims#ihnmaims xreader#AM x reader#allied mastercomputer x reader#yandere inhmaims#yandere x reader

Text

criminal minds 18x07 all the devils are here thoughts behind cut (SPOILERS)

OH MY FUCKING GOOOOOOODDDDDDDDD I AM UN FUCKING WELL!!!!!!!!!!!!!!!!

FOR SO MANY REASONS!!!!!!!!!!

FIRST OFF THE GARVEZ!!!!!!! I'M!! I'M DYING!!! SCREAMING CRYING THROWING UP!!! LUKE KNEW PENELOPE NEEDED HIM AND HE WAS THERE FOR HER LIKE HE ALWAYS IS, AND THE WAY SHE CLUNG TO HIM AN DN DNASDA GJIASKLDJSLA. I MEAN THE WAY HE JUST CAME INTO FRAME IN THE BACKGROUND BLURRY AT FIRST THEN COMING INTO FOCUS AS HE CAME UP BEHIND HER AND SHE COULD JUST SENSE HIM THERE AND SHE FELL INTO HIM AND HE SQUEEZED HER TO HIM!!! HELLPPPP I CAN'AT BREATHE

*deep breaths*

...

WHAT ELSE HAPPENED LMAO I'M TRYING TO REMEMBER

okay but the reason penelope was SOBBING INTO LUKE'S SHOULDER was actually so horrible, she tried her hardest but she could not save tate andrews from a horrifying, painful death. THAT IS SO FUCKED TO DO TO MY PENELOPE. ;_; help her luuuuke, help herrrrr.

OKAY. yknow what the FUCK else had me unwell was these MOTHER. FUCKING. SPIDERS. so uhhh I'm EXTREMELY arachnophobic. LMAOOO. first off they kept jump scaring me with the spiders suddenly on the screen and then I would have to look away/close my eyes and wait until my boyfriend said it was safe to look at the screen again. yes i am a grown woman. i can't do it!!! I CAN'T DO SPIDERS!!! penelope was onto something with the acid shower cause i'm about to do that myself.

related to that, because of my extreme arachnophobia, the in-depth look at the ingested sicarius spider kill technique literally made me feel fucking SICK. watching that guy fucking die and vomit up bloody spiders, i am just. PHYSICALLY ILL. EVEN THINKING ABOUT IT. this is the worst, most horrifying episode of criminal minds ever. to me.

voit's "oh fuck you" to tyler LMAOOO. hilarious actually. i mean frankly everyone's crazy to think tyler could just get over it that easily and go on to work with voit problem-free? tyler really shouldn't be questioning voit at all hahaha

speaking of voit, such good stuff this episode!! loved the further development on how his regained sense of empathy has changed him. the 'cleansing' scene with him and penelope was so great. i love that she is the one to help him try to come to terms with who he was but also who he wants to be and who he CAN be. obvs he'll always be a serial killer - but if he chooses to Be Good then he can spend the rest of his life Doing Good. it won't make up for his insane crimes, but it's literally the least he can do, spending the rest of his life helping instead of hurting others.

and the fact that penelope is the only one that can make elias smile??? like a genuine smile???? good. yes. love. penelope is always so fucking good at that. SHE CAN REACH ANYONE.

LUKE THE BOMB EXPERT AT IT AGAIN!! my boyfriend was like "why is luke doing all this bomb stuff, why don't they get the bomb squad out here" and I was like LUKE HAS A *LOT* OF BOMB EXPERIENCE OKAY??? lmfaooo.

love baby profiler tyler cutting his teeth and papa pasta guiding him through it, but tyler is still unsure about himself. it's ok baby boy you'll get there.

"you'd never know that the ultimate predator is the one you least expect" - elias talking about sicarius spiders but I'M TAKING THIS AS AN OCHOA IS THE DISCIPLE HINT.

speaking of, I was super suspicious of Evan but also thought it was a likely possibility he was a red herring and it looks like that was the case. is he dead already?? lol. rip evan?

penelope mentioned red rover last week, and this week luke mentioned red rover. it's confirmed, they are fucking and also sharing a brain cell.

tara and jj making the break in the case! and a fun jj, tara, and penelope on the computer scene! they're all so cute and i love them. one of the few non-anxiety-inducing scenes in this entire episode

cyrus showing up live really threw me for a loop for a second before i remembered duh, deepfake, voit developed a whole deepfake system for baugate haha. the show totally tricked me for a minute, i was like HOW IS THIS GUY NOT DEAD. and i'm convinced it was ochoa cosplaying as cyrus because she's the only one that would know elias well enough to manipulate him so well and pull all his strings. to be able to reduce him back to that abused little boy.

loved the little moment when luke and tyler came back and tara comforted luke and emily comforted tyler.

and of COURSE the other thing that's got me unwell is TARAAAAAAAAAAAAAAAAA. the next episode is gonna be killer. MY BABY TARA.

takeaways:

THIS EPISODE WAS SO HARD ON PENELOPE HOLY SHIT.

GARVEZZZZZZZ LIVESSSSSSS

MORE CONVINCED THAN EVER OCHOA IS THE DISCIPLE

I'M GOING TO BE PHYSICALLY UNWELL FOR THE NEXT 3-5 BUSINESS DAYS


Note

Ohhh Valentine's who would! Who would write you a love letter/ be your secret admirer?

eee, so cute! (Warnings for language because Lloyd is on this list all the time.)

James Mace

Though he keeps them basic, simple, short and sweet, Mace absolutely writes love notes. If you aren't already dating, he'd be the deepest of secret admirers, meaning you would get lovely gifts and things but never know who he was. Mace strikes me as the "hopelessly in love with his best friend" type. He's around to make sure his gifts are appreciated, stops if they aren't, and also escalates if you seem super interested 🥰.

Curtis Everett

Definitely a secret-admirer type but not really a letter writer. Curtis also keeps it simple. He prefers 'gifts' that make your day easier, so he arranges for the more tedious tasks to be handled (if you work together) or does stuff like pay for your drink ahead of you in line at the café. Little things like that rather than deliveries of chocolates and flowers.

Jimmy Dobyne

Old school and old fashioned, Jimmy will write you the occasional letter. It's meaningful but not gushy. He's honest about how happy you make him and descriptive of your best times together. Every so often flowers or candy don't seem like enough, usually after hard times or big events. Valentine's as a holiday...doesn't really count.

Johnny Storm

Nah. If he likes you, you'll know, and if he can just text or call, he ain't writing shit on paper.

Jake Jensen

Digitally? Yes, tons of notes. Bunch of AI/deepfake videos of animals or famous people professing how much he loves you. Most of them are funnier than hell, several have brought you to tears, and I would not put it past him to sneak an intro to a marriage proposal in one...

Lloyd Hansen

He takes great pride in making all the little cards on gifts horrible. "So you smell better" on the perfume he buys you, "eat me and like it" on the chocolates, and, of course, "don't be fucking late" on the dinner invite. On the back it also says "wear something slutty."

Ari Levinson

No letters, sorry. Snail mail reminds him of deployment so he'd rather not. Ari did enough secretive stuff in the military, too, so he'll just openly admire you, thanks.

Ransom Drysdale

He tried once.

He then had to clean up an overflowing bin of wasted papers from shit drafts, and he's never fucking doing that again. He'll take you shopping. Problem solved.

Andy Barber

The only love letters/notes he's written have been in apology for having to miss a date or special occasion due to work. Sorry. I know that sucks, but overall, you'd also rather he spend time actually with you whenever possible instead of running around buying cards and writing notes.

Steve Rogers

They start as letters but end up half full of doodles. One of Steve's favorite subjects to sketch from memory is your profile. Your smile comes in at a close second.

Bucky Barnes

Would so much rather spend the time and effort with you, but if he has to be away (and he can find enough space for himself to quietly focus on it) then he'll write you. Though he's not as well-read or eloquent as Steve, Bucky has the advantage of being slightly dorkier and (deliberately) funnier. His love letters are sweet, spicy, and often hilarious.

Thank you for asking!

[Main Masterlist; Who Would... Masterlist; Ko-Fi]

#ro answers#steve rogers fanfiction#curtis everett fanfiction#ransom drysdale fanfiction#ari levinson fanfiction#jake jensen fanfiction#bucky barnes fanfiction#james mace fanfiction#johnny storm fanfiction#lloyd hansen fanfiction#jimmy dobyne fanfiction#steve rogers x reader#curtis everett x reader#ransom drysdale x reader#ari levinson x reader#bucky barnes x reader#jake jensen x reader#johnny storm x reader#james mace x reader#lloyd hansen x reader#andy barber fanfiction#andy barber x reader


Text

Armys, don’t use AI

"But why? It’s cute!" It also looks realistic, and not everyone will want to use it "because it’s cute." AI can be used to create fake porn and harmful, defamatory deepfakes, or to spread misinformation.

"I don’t have those intentions!" By giving AI the prompts you do, you are actively helping train it to look more realistic (among other things), which could in the future help someone who does have the intentions to hurt BTS.

The same goes for AI created songs - you are helping train AI to sound more like them which, again, someone could in the future use to hurt them.

There is no middle ground here. There is no "oh, I only make it create nice stuff!" It doesn’t work that way.

Not to mention that it’s disrespectful to real artists who spend hours working on their pieces, putting actual energy and love into them.

Not to mention, and now this is just my opinion, creating (half) naked pictures of them is disrespectful to BTS themselves. They always choose when they feel comfortable showing us their bodies (ex. music videos or photoshoots in contract with behind the scenes videos).

Arguing that it’s not really their bodies doesn’t make it better in my opinion. Because how do those fake bodies look? Thin and muscular and perfect, by mainstream beauty standards. We know that some members have struggled with their body image in the past, there’s a strong diet culture in the kpop industry in general, and you, thirsting over those perfect, fake bodies, are only encouraging it.

Say no to the robot. Enjoy the real BTS and support real artists. Please.

#log#bts#bts army#bangtan#bangtan boys#namjoon#Seokjin#yoongi#hoseok#jimin#taehyung#jungkook#kpop#ai art#calling it art is insulting tbh#hoping no t3ch br0s find this#bts opinion
