#distributed database architecture


Data Unbound: Embracing NoSQL & NewSQL for the Real-Time Era.

Sanjay Kumar Mohindroo. skm.stayingalive.in Explore how NoSQL and NewSQL databases revolutionize data management by handling unstructured data, supporting distributed architectures, and enabling real-time analytics. In today’s digital-first landscape, businesses and institutions are under mounting pressure to process massive volumes of data with greater speed,…

#ACID compliance#CIO decision-making#cloud data platforms#cloud-native data systems#column-family databases#data strategy#data-driven applications#database modernization#digital transformation#distributed database architecture#document stores#enterprise database platforms#graph databases#horizontal scaling#hybrid data stack#in-memory processing#IT modernization#key-value databases#News#NewSQL databases#next-gen data architecture#NoSQL databases#performance-driven applications#real-time data analytics#real-time data infrastructure#Sanjay Kumar Mohindroo#scalable database solutions#scalable systems for growth#schema-less databases#Tech Leadership


Explore These Exciting DSU Micro Project Ideas

Are you a student looking for an interesting micro project to work on? Developing small, self-contained projects is a great way to build your skills and showcase your abilities. At the Distributed Systems University (DSU), we offer a wide range of micro project topics that cover a variety of domains. In this blog post, we’ll explore some exciting DSU…

#3D modeling#agricultural domain knowledge#Android#API design#AR frameworks (ARKit#ARCore)#backend development#best micro project topics#BLOCKCHAIN#Blockchain architecture#Blockchain development#cloud functions#cloud integration#Computer vision#Cryptocurrency protocols#CRYPTOGRAPHY#CSS#data analysis#Data Mining#Data preprocessing#data structure micro project topics#Data Visualization#database integration#decentralized applications (dApps)#decentralized identity protocols#DEEP LEARNING#dialogue management#Distributed systems architecture#distributed systems design#dsu in project management


Decentralization: Beyond Finance and Into Freedom

The Age of Centralized Limits

For decades, we’ve lived in a world dominated by centralized systems. From financial institutions to healthcare and governance, centralized structures have dictated how we interact, innovate, and even live our lives. But cracks in these systems have become increasingly visible. Financial crises reveal the fragility of our monetary systems. Data breaches and surveillance expose the vulnerabilities of centralized technology. Censorship and institutional corruption highlight the dangers of entrusting too much power to a few.

These failures raise the question: Is there an alternative? Enter decentralization. Often associated with Bitcoin and cryptocurrency, decentralization offers more than financial freedom. It represents a paradigm shift that can empower individuals across multiple facets of life. Could decentralization be the key to unlocking both individual sovereignty and a more equitable future? Let’s explore.

Bitcoin as the Spark

The story of decentralization begins with Bitcoin. In 2009, Bitcoin introduced a revolutionary idea: a financial system that doesn’t rely on central banks or intermediaries. At its core, Bitcoin isn’t just about money; it’s about freedom. It empowers individuals to own their wealth without fear of censorship, seizure, or devaluation by external forces.

Bitcoin’s success has ignited a broader movement. Its decentralized architecture—a network maintained by individuals rather than a single entity—has inspired innovators to apply this model beyond finance. Today, the philosophy of decentralization is being explored in healthcare, energy, governance, and more.

Decentralization Beyond Finance

Decentralization is proving to be a transformative force across industries:

Healthcare

Imagine owning your medical data. Decentralized health systems built on blockchain technology allow patients to control their records, ensuring privacy and security while eliminating reliance on vulnerable centralized databases. These systems also enable transparent research funding, connecting donors directly with projects and reducing administrative overhead.

Energy

The future of energy lies in peer-to-peer networks. Decentralized energy systems, such as microgrids, empower communities to generate, share, and trade renewable energy. Households with solar panels can sell excess power directly to neighbors, bypassing utility companies. This model not only promotes sustainability but also reduces dependency on monopolistic providers.

Governance

What if voting systems were transparent, tamper-proof, and accessible to everyone? Decentralized governance tools leverage blockchain to create trustless voting platforms, ensuring fair elections and greater civic participation. These systems can also decentralize decision-making, enabling communities to self-govern without bureaucratic interference.
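To make the "tamper-proof" idea concrete: blockchains make records tamper-evident by linking each entry to a hash of the one before it. The sketch below is a minimal, single-machine illustration of that linking using Python's standard `hashlib`. It assumes no network, consensus, or signatures, and `VoteLog` and its methods are hypothetical names, not any real voting platform's API.

```python
import hashlib
import json


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    data = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


class VoteLog:
    """Hypothetical append-only log where each entry commits to its predecessor."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, payload: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        h = entry_hash(prev, payload)
        self.entries.append({"prev": prev, "payload": payload, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute every hash; an edited payload breaks the chain."""
        prev = self.GENESIS
        for e in self.entries:
            if e["prev"] != prev or entry_hash(prev, e["payload"]) != e["hash"]:
                return False
            prev = e["hash"]
        return True


log = VoteLog()
log.append({"ballot": 1, "choice": "A"})
log.append({"ballot": 2, "choice": "B"})
print(log.verify())                        # True
log.entries[0]["payload"]["choice"] = "B"  # tamper with an earlier vote
print(log.verify())                        # False
```

On its own, a hash chain is tamper-evident rather than tamper-proof: someone who also rewrites every later hash could forge a consistent history, which is why real networks replicate the chain across many independent nodes.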

By decentralizing these critical systems, we can create a world where power is distributed more equitably, fostering innovation and resilience.

Individual Freedom at the Core

At its heart, decentralization is about empowering individuals. It shifts control from institutions to people, promoting self-sovereignty in every aspect of life.

Financial Independence: With Bitcoin, you can store and transfer wealth without intermediaries. This is especially critical for people in countries with unstable currencies or oppressive regimes.

Privacy and Censorship Resistance: Decentralized platforms offer alternatives to surveillance-heavy social media and communication tools. Users regain control over their data and speech, free from corporate or governmental censorship.

Permissionless Innovation: Decentralization removes gatekeepers, enabling anyone to create, share, and participate without needing approval from centralized authorities.

This shift isn’t just technological; it’s philosophical. Decentralization aligns with the fundamental human desire for autonomy and self-determination.

Challenges and Opportunities

The road to decentralization isn’t without hurdles. Scalability remains a technical challenge for many decentralized networks. Public understanding is another barrier; many people still view decentralization as complex or niche. Institutional resistance is perhaps the most formidable obstacle, as powerful entities are unlikely to relinquish control easily.

Yet, these challenges present opportunities. Education is key to demystifying decentralization. Innovation will address technical limitations, as we’ve seen with Bitcoin’s Lightning Network and other scaling solutions. And as public demand for transparency and fairness grows, institutions may be compelled to adopt decentralized models.

The Decentralized Path Forward

Decentralization is more than a technological trend; it’s a movement towards a freer, fairer world. By embracing decentralized systems, we can dismantle the inefficiencies and inequities of centralization, empowering individuals and communities to take control of their futures.

The decentralized revolution is just beginning. Whether it’s through Bitcoin, decentralized energy grids, or tamper-proof voting systems, the possibilities are endless. The question isn’t if decentralization will reshape the world, but how quickly it will happen.

The time to act is now. Explore, experiment, and advocate for decentralization in your own life and community. Together, we can build a future that prioritizes individual sovereignty and collective progress—a future where freedom truly thrives.

Take Action Towards Financial Independence

If this article has sparked your interest in the transformative potential of Bitcoin, there's so much more to explore! Dive deeper into the world of financial independence and revolutionize your understanding of money by following my blog and subscribing to my YouTube channel.

🌐 Blog: Unplugged Financial Blog. Stay updated with insightful articles, detailed analyses, and practical advice on navigating the evolving financial landscape. Learn about the history of money, the flaws in our current financial systems, and how Bitcoin can offer a path to a more secure and independent financial future.

📺 YouTube Channel: Unplugged Financial. Subscribe to our YouTube channel for engaging video content that breaks down complex financial topics into easy-to-understand segments. From in-depth discussions on monetary policies to the latest trends in cryptocurrency, our videos will equip you with the knowledge you need to make informed financial decisions.

👍 Like, subscribe, and hit the notification bell to stay updated with our latest content. Whether you're a seasoned investor, a curious newcomer, or someone concerned about the future of your financial health, our community is here to support you on your journey to financial independence.

Support the Cause

If you enjoyed what you read and believe in the mission of spreading awareness about Bitcoin, I would greatly appreciate your support. Every little bit helps keep the content going and allows me to continue educating others about the future of finance.

Donate Bitcoin: bc1qpn98s4gtlvy686jne0sr8ccvfaxz646kk2tl8lu38zz4dvyyvflqgddylk

#Decentralization#Bitcoin#BlockchainTechnology#IndividualFreedom#SelfSovereignty#FutureOfFinance#Empowerment#PeerToPeer#SustainableEnergy#TransparentGovernance#CensorshipResistance#Innovation#DecentralizedRevolution#TechForGood#DigitalFreedom#cryptocurrency#financial empowerment#finance#globaleconomy#digitalcurrency#financial experts#blockchain#financial education#unplugged financial


How Web3 Revolutionizes Traditional Search Engines

The emergence of Web3 marks a significant paradigm shift in the digital landscape, transforming how information is accessed, shared, and verified. To fully comprehend Web3's impact on search technology, it's essential to examine the limitations of traditional search engines and explore how Web3 addresses these challenges.

Problems of Traditional Search Engines

Centralization of Data: Traditional search engines rely on centralized databases, controlled by a single entity, raising concerns about data privacy, censorship, and manipulation.

Privacy Concerns: Users' search data is often collected, analyzed, and used for targeted advertising, sparking serious privacy concerns and a growing desire for anonymity.

Algorithmic Bias: Search algorithms can perpetuate biases, influencing information visibility and compromising neutrality.

Data Authenticity and Quality: Information authenticity and quality can be questionable, facilitating the spread of misinformation and fake news.

Dependence on Internet Giants: A few dominant companies control the search engine market, leading to concentrated power and potential monopolistic practices.

Limited Customization and Personalization: While some personalization exists, it's primarily driven by engagement goals rather than relevance or unbiased information.

Web3: A Solution to Traditional Search Engine Limitations

Web3, built on blockchain technology, decentralized applications (dApps), and a user-centric web, offers innovative solutions:

Decentralization of Data: Web3 distributes data across networks, reducing risks associated with centralized control.

Enhanced Privacy and Anonymity: Cryptographic techniques used alongside blockchain technology allow searches and user data to be encrypted, providing anonymity and reducing the exploitation of personal data.

Reduced Algorithmic Bias: Decentralized search engines employ transparent algorithms, minimizing bias and allowing community involvement.

Improved Data Authenticity: Blockchain's immutability and transparency make records tamper-evident, so users can verify where information came from and that it has not been altered since it was recorded.

Diversification of Search Engines: Web3 encourages diverse search engines, breaking monopolies and fostering innovation.

Customization and Personalization: Web3 offers personalized search experiences while respecting user privacy, using smart contracts and decentralized storage.

Tokenization and Incentives: Web3 introduces models for incentivizing content creation and curation, rewarding users and creators with tokens.

Interoperability and Integration: Web3's architecture promotes seamless integration among services and platforms.
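One concrete mechanism behind the data-authenticity point is content addressing, used by decentralized storage systems such as IPFS: data is looked up by a hash of its own bytes, so any node can check that what it received is what it asked for. The sketch below is a simplified in-memory illustration using plain SHA-256 hex digests. IPFS's real identifiers (CIDs) use a different encoding and chunk large files, and `ContentStore` is a hypothetical name.

```python
import hashlib


class ContentStore:
    """Hypothetical in-memory store keyed by the SHA-256 digest of each blob."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        # The address is derived from the content itself, not assigned by a server.
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]
        # Re-hash on read: altered bytes no longer match their address.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
addr = store.put(b"Web3 search result snippet")
print(store.get(addr))  # b'Web3 search result snippet'
```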

Challenges

While Web3 offers solutions, challenges persist:

Technical Complexity: Blockchain and decentralized technologies pose adoption barriers.

User Adoption: Transitioning from traditional to decentralized search engines requires behavioral shifts.

Adot: A Web3 Search Engine Pioneer

Adot exemplifies Web3's potential in reshaping the search engine landscape:

Empowering AI with Web3: Adot optimizes AI for Web3, enhancing logical reasoning and knowledge integration.

Mission of Open Accessibility: Adot aims to make high-quality data universally accessible, surpassing traditional search engines.

User and Developer Empowerment: Adot rewards users for contributions and encourages developers to create customized search engines.

Conclusion

Web3 revolutionizes traditional search engines by addressing centralization, privacy concerns, and algorithmic biases. While challenges remain, Web3's potential for a decentralized, transparent, and user-empowered web is vast. As Web3 technologies evolve, we can expect a significant shift in how we interact with online information.

About Adot

Adot is building a Web3 search engine for the AI era, providing users with real-time, intelligent decision support.

Join Adot's revolutionary journey:

1. Website: https://a.xyz

2. X (Twitter): https://x.com/Adot_web3?t=UWoRjunsR7iM1ueOKLfIZg&s=09

3. Telegram: https://t.me/+McB7Gs2I-qoxMDM1


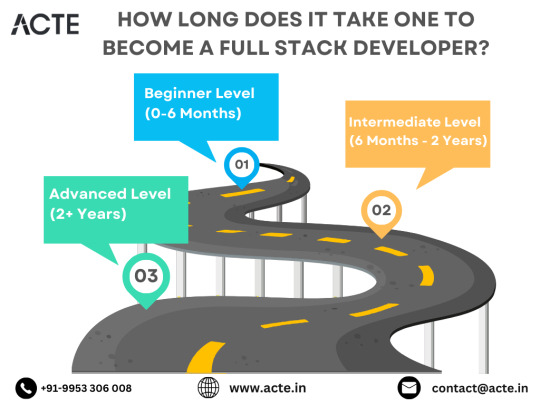

The Roadmap to Full Stack Developer Proficiency: A Comprehensive Guide

Embarking on the journey to becoming a full stack developer is an exhilarating endeavor filled with growth and challenges. Whether you're taking your first steps or seeking to elevate your skills, understanding the path ahead is crucial. In this detailed roadmap, we'll outline the stages of mastering full stack development, exploring essential milestones, competencies, and strategies to guide you through this enriching career journey.

Beginning the Journey: Novice Phase (0-6 Months)

As a novice, you're entering the realm of programming with a fresh perspective and eagerness to learn. This initial phase sets the groundwork for your progression as a full stack developer.

Grasping Programming Fundamentals:

Your journey begins with the foundational web technologies: HTML for structure, CSS for styling, and JavaScript for behavior. HTML and CSS are markup and styling languages rather than programming languages, while JavaScript is the programming language that makes pages dynamic and interactive. Together they are the cornerstone of web development.

Familiarizing with Basic Data Structures and Algorithms:

To develop proficiency in programming, understanding fundamental data structures such as arrays, objects, and linked lists, along with algorithms like sorting and searching, is imperative. These concepts form the backbone of problem-solving in software development.
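As one example of the searching algorithms mentioned above, binary search finds an item in a sorted array in O(log n) steps by halving the search range each time. The roadmap does not prescribe a language for practice; this sketch uses Python for brevity.

```python
def binary_search(items: list[int], target: int) -> int:
    """Return the index of target in the sorted list items, or -1 if absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1  # target can only be in the right half
        else:
            hi = mid - 1  # target can only be in the left half
    return -1


print(binary_search([2, 5, 8, 12, 16, 23], 12))  # 3
print(binary_search([2, 5, 8, 12, 16, 23], 7))   # -1
```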

Exploring Essential Web Development Concepts:

During this phase, you'll delve into crucial web development concepts like client-server architecture, HTTP protocol, and the Document Object Model (DOM). Acquiring insights into the underlying mechanisms of web applications lays a strong foundation for tackling more intricate projects.
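To see what an HTTP request looks like on the wire, the sketch below splits a raw HTTP/1.1 request into its request line, headers, and body. It is a teaching sketch only (no chunked encoding, no validation); real servers and frameworks do this for you, and `parse_request` is a hypothetical helper.

```python
def parse_request(raw: str) -> dict:
    """Split a raw HTTP/1.1 request into method, path, version, headers, body."""
    head, _, body = raw.partition("\r\n\r\n")  # a blank line ends the headers
    request_line, *header_lines = head.split("\r\n")
    method, path, version = request_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        # Header names are case-insensitive, so normalize them to lowercase.
        headers[name.strip().lower()] = value.strip()
    return {"method": method, "path": path, "version": version,
            "headers": headers, "body": body}


raw = (
    "GET /api/users?id=7 HTTP/1.1\r\n"
    "Host: example.com\r\n"
    "Accept: application/json\r\n"
    "\r\n"
)
req = parse_request(raw)
print(req["method"], req["path"])  # GET /api/users?id=7
print(req["headers"]["host"])      # example.com
```

The client sends text like `raw` to the server; the server answers with a status line (for example `HTTP/1.1 200 OK`), its own headers, and a body.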

Advancing Forward: Intermediate Stage (6 Months - 2 Years)

As you progress beyond the basics, you'll transition into the intermediate stage, where you'll deepen your understanding and skills across various facets of full stack development.

Venturing into Backend Development:

In the intermediate stage, you'll venture into backend development, building proficiency with server-side languages and runtimes such as Node.js (JavaScript on the server), Python, or Java. Here, you'll learn to build robust server-side applications, manage data storage and retrieval, and implement authentication and authorization mechanisms.
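For the authentication piece, a widely recommended practice is never to store plain passwords, only a salted, deliberately slow hash. The minimal sketch below uses Python's standard-library `hashlib.pbkdf2_hmac` and `hmac.compare_digest`. The iteration count is an illustrative assumption (check current guidance such as OWASP's before relying on it), and `hash_password` and `verify_password` are hypothetical helper names.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune per current guidance


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) to store; each user gets a fresh random salt."""
    salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Re-derive the key from the login attempt and compare in constant time."""
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(key, stored_key)


salt, key = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, key))  # True
print(verify_password("wrong guess", salt, key))                   # False
```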

Mastering Database Management:

A pivotal aspect of backend development is comprehending databases. You'll delve into relational databases like MySQL and PostgreSQL, as well as NoSQL databases like MongoDB. Proficiency in database management systems and design principles enables the creation of scalable and efficient applications.
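A small taste of relational design: two tables linked by a foreign key, an index on the column used for lookups, and a parameterized JOIN query. This sketch uses Python's built-in `sqlite3` module as a stand-in for MySQL or PostgreSQL; the schema is a hypothetical example, though the SQL would look similar on those systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript(
    """
    CREATE TABLE users (
        id    INTEGER PRIMARY KEY,
        email TEXT NOT NULL UNIQUE
    );
    CREATE TABLE orders (
        id      INTEGER PRIMARY KEY,
        user_id INTEGER NOT NULL REFERENCES users(id),
        total   REAL NOT NULL
    );
    -- Index the foreign key so per-user lookups do not scan every order.
    CREATE INDEX idx_orders_user ON orders(user_id);
    """
)
conn.execute("INSERT INTO users (id, email) VALUES (?, ?)", (1, "ada@example.com"))
conn.executemany(
    "INSERT INTO orders (user_id, total) VALUES (?, ?)",
    [(1, 19.99), (1, 5.01)],
)

# The ? placeholder passes values separately from the SQL text, avoiding injection.
row = conn.execute(
    """
    SELECT u.email, COUNT(o.id), SUM(o.total)
    FROM users u JOIN orders o ON o.user_id = u.id
    WHERE u.email = ?
    GROUP BY u.email
    """,
    ("ada@example.com",),
).fetchone()
print(row)
```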

Exploring Frontend Frameworks and Libraries:

In addition to backend development, you'll deepen your expertise in frontend technologies. You'll explore prominent frameworks and libraries such as React, Angular, or Vue.js, which streamline the creation of interactive and responsive user interfaces.

Learning Version Control with Git:

Version control is indispensable for collaborative software development. During this phase, you'll familiarize yourself with Git, a distributed version control system, to manage your codebase, track changes, and collaborate effectively with fellow developers.

Achieving Mastery: Advanced Phase (2+ Years)

As you ascend in your journey, you'll enter the advanced phase of full stack development, where you'll refine your skills, tackle intricate challenges, and delve into specialized domains of interest.

Designing Scalable Systems:

In the advanced stage, focus shifts to designing scalable systems capable of managing substantial volumes of traffic and data. You'll explore design patterns, scalability methodologies, and cloud computing platforms like AWS, Azure, or Google Cloud.
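One scalability technique often used when data or traffic is spread across many servers is consistent hashing: keys and servers map to points on a hash ring, so adding or removing a server only moves the keys near its points instead of reshuffling everything. The sketch below is a simplified illustration (MD5 used only for spreading keys, a fixed number of virtual nodes per server); `HashRing` and its parameters are hypothetical, not a specific product's API.

```python
import bisect
import hashlib


def ring_position(key: str) -> int:
    """Map a string to a point on the ring (MD5 for spread, not security)."""
    return int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Hypothetical consistent-hash ring with virtual nodes for smoother balance."""

    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self._points: list[int] = []
        self._owners: dict[int, str] = {}
        for node in nodes:
            for i in range(vnodes):
                p = ring_position(f"{node}#{i}")
                self._owners[p] = node
                bisect.insort(self._points, p)

    def node_for(self, key: str) -> str:
        """Walk clockwise to the first node point at or after the key's position."""
        idx = bisect.bisect_left(self._points, ring_position(key))
        if idx == len(self._points):
            idx = 0  # wrap around the ring
        return self._owners[self._points[idx]]


keys = [f"user:{i}" for i in range(1000)]
before = HashRing(["db-a", "db-b", "db-c"])
after = HashRing(["db-a", "db-b", "db-c", "db-d"])
moved = sum(before.node_for(k) != after.node_for(k) for k in keys)
print(f"{moved} of {len(keys)} keys moved")  # expect roughly a quarter, not all
```

With naive `hash(key) % n` placement, changing `n` from 3 to 4 would relocate most keys; here only the keys captured by the new server's points move.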

Embracing DevOps Practices:

DevOps practices play a pivotal role in contemporary software development. You'll delve into continuous integration and continuous deployment (CI/CD) pipelines, infrastructure as code (IaC), and containerization technologies such as Docker and Kubernetes.

Specializing in Niche Areas:

With experience, you may opt to specialize in specific domains of full stack development, whether it's frontend or backend development, mobile app development, or DevOps. Specialization enables you to deepen your expertise and pursue career avenues aligned with your passions and strengths.

Conclusion:

Becoming a proficient full stack developer is a transformative journey that demands dedication, resilience, and perpetual learning. By following the roadmap outlined in this guide and maintaining a curious and adaptable mindset, you'll navigate the complexities and opportunities inherent in the realm of full stack development. Remember, mastery isn't merely about acquiring technical skills but also about fostering collaboration, embracing innovation, and contributing meaningfully to the ever-evolving landscape of technology.

#full stack developer#education#information#full stack web development#front end development#frameworks#web development#backend#full stack developer course#technology


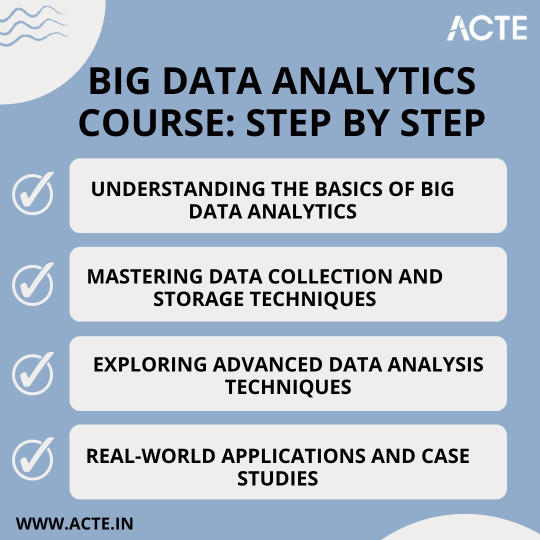

From Beginner to Pro: A Game-Changing Big Data Analytics Course

Are you fascinated by the vast potential of big data analytics? Do you want to unlock its power and become a proficient professional in this rapidly evolving field? Look no further! In this article, we will take you on a journey to traverse the path from being a beginner to becoming a pro in big data analytics. We will guide you through a game-changing course designed to provide you with the necessary information and education to master the art of analyzing and deriving valuable insights from large and complex data sets.

Step 1: Understanding the Basics of Big Data Analytics

Before diving into the intricacies of big data analytics, it is crucial to grasp its fundamental concepts and methodologies. A solid foundation in the basics will empower you to navigate through the complexities of this domain with confidence. In this initial phase of the course, you will learn:

The definition and characteristics of big data

The importance and impact of big data analytics in various industries

The key components and architecture of a big data analytics system

The different types of data and their relevance in analytics

The ethical considerations and challenges associated with big data analytics

By comprehending these key concepts, you will be equipped with the essential knowledge needed to kickstart your journey towards proficiency.

Step 2: Mastering Data Collection and Storage Techniques

Once you have a firm grasp on the basics, it's time to dive deeper and explore the art of collecting and storing big data effectively. In this phase of the course, you will delve into:

Data acquisition strategies, including batch processing and real-time streaming

Techniques for data cleansing, preprocessing, and transformation to ensure data quality and consistency

Storage technologies, such as Hadoop Distributed File System (HDFS) and NoSQL databases, and their suitability for different types of data

Understanding data governance, privacy, and security measures to handle sensitive data in compliance with regulations

By honing these skills, you will be well-prepared to handle large and diverse data sets efficiently, which is a crucial step towards becoming a pro in big data analytics.
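The cleansing and preprocessing step can be illustrated on a handful of raw records: trim whitespace, normalize case, coerce types, drop rows that cannot be repaired, and remove duplicates. At real big-data scale this would typically run in a framework such as Spark or pandas; the plain-Python version below is only a sketch, and the records and field names are made up.

```python
# Hypothetical sample records with typical quality problems.
raw_records = [
    {"id": "1", "city": " Delhi ", "amount": "250.0"},
    {"id": "2", "city": "MUMBAI", "amount": "n/a"},   # unparseable amount
    {"id": "3", "city": "pune", "amount": " 99.5"},
    {"id": "1", "city": "delhi", "amount": "250.0"},  # duplicate of id 1
]


def clean(records: list[dict]) -> list[dict]:
    """Normalize fields, drop rows with bad amounts, and de-duplicate by id."""
    seen_ids = set()
    cleaned = []
    for rec in records:
        try:
            amount = float(rec["amount"].strip())
        except ValueError:
            continue  # drop rows whose amount cannot be parsed
        row_id = int(rec["id"])
        if row_id in seen_ids:
            continue  # keep only the first occurrence of each id
        seen_ids.add(row_id)
        cleaned.append({"id": row_id, "city": rec["city"].strip().title(), "amount": amount})
    return cleaned


for row in clean(raw_records):
    print(row)
```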

Step 3: Exploring Advanced Data Analysis Techniques

Now that you have developed a solid foundation and acquired the necessary skills for data collection and storage, it's time to unleash the power of advanced data analysis techniques. In this phase of the course, you will dive into:

Statistical analysis methods, including hypothesis testing, regression analysis, and cluster analysis, to uncover patterns and relationships within data

Machine learning algorithms, such as decision trees, random forests, and neural networks, for predictive modeling and pattern recognition

Natural Language Processing (NLP) techniques to analyze and derive insights from unstructured text data

Data visualization techniques, ranging from basic charts to interactive dashboards, to effectively communicate data-driven insights

By mastering these advanced techniques, you will be able to extract meaningful insights and actionable recommendations from complex data sets, transforming you into a true big data analytics professional.
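As a worked example of the regression analysis listed above, ordinary least squares fits a line y = a + b*x by setting the slope b to the sum of (x - mean_x)(y - mean_y) divided by the sum of (x - mean_x)^2, and the intercept to a = mean_y - b * mean_x. The sketch below computes this by hand in plain Python on made-up data points; in practice you would reach for a library such as NumPy, statsmodels, or scikit-learn.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = sum of co-deviations / sum of squared x-deviations.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Made-up observations for illustration.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.0]
a, b = fit_line(xs, ys)
print(f"y = {a:.2f} + {b:.2f}x")  # y = 0.09 + 1.97x
```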

Step 4: Real-world Applications and Case Studies

To solidify your learning and gain practical experience, it is crucial to apply your newfound knowledge in real-world scenarios. In this final phase of the course, you will:

Explore various industry-specific case studies, showcasing how big data analytics has revolutionized sectors like healthcare, finance, marketing, and cybersecurity

Work on hands-on projects, where you will solve data-driven problems by applying the techniques and methodologies learned throughout the course

Collaborate with peers and industry experts through interactive discussions and forums to exchange insights and best practices

Stay updated with the latest trends and advancements in big data analytics, ensuring your knowledge remains up-to-date in this rapidly evolving field

By immersing yourself in practical applications and real-world challenges, you will not only gain valuable experience but also hone your problem-solving skills, making you a well-rounded big data analytics professional.

Through a comprehensive, game-changing course at ACTE institute, you can gain the information and education needed to navigate the complexities of this field. By understanding the basics, mastering data collection and storage techniques, exploring advanced data analysis methods, and applying your knowledge in real-world scenarios, you can become a proficient professional capable of extracting valuable insights from big data.

Remember, the world of big data analytics is ever-evolving, with new challenges and opportunities emerging each day. Stay curious, seek continuous learning, and embrace the exciting journey ahead as you unlock the limitless potential of big data analytics.


Going Over the Cloud: An Investigation into the Architecture of Cloud Solutions

Because the cloud offers unprecedented scale, flexibility, and accessibility, it has fundamentally altered the way we approach technology in the present digital era. As more businesses move their infrastructure to the cloud, it is imperative that they understand how cloud solutions are architected. Join me as we examine the core concepts, industry best practices, and transformative impact on modern enterprises.

The Basics of Cloud Solution Architecture

A well-designed architecture that balances dependability, performance, and cost-effectiveness is the foundation of any successful cloud deployment. Cloud solutions' architecture is made up of many different components, including networking, computing, storage, security, and scalability. By creating solutions that are tailored to the requirements of each workload, organizations can optimize return on investment and fully utilize the cloud.

Flexibility and Resilience in Design

One of cloud computing's distinguishing characteristics is the ability to grow resources on demand to meet varying workloads while keeping performance consistent. Cloud solution architects create resilient systems that can endure failures and sustain uptime by using fault-tolerant design principles, load balancing, and auto-scaling. Distributing workloads across several availability zones and regions helps enterprises increase fault tolerance and lessen the effect of outages.
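Auto-scaling is often driven by a target-tracking rule. For example, the Kubernetes Horizontal Pod Autoscaler documents its core formula as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that formula and clamps the result to a min/max range; the bounds and the 60% CPU target are illustrative assumptions, and real autoscalers add tolerances and cooldown periods on top.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 2, max_replicas: int = 20) -> int:
    """Target-tracking scale decision, clamped to [min_replicas, max_replicas]."""
    wanted = math.ceil(current * metric / target)
    return max(min_replicas, min(max_replicas, wanted))


# 4 instances averaging 90% CPU against a 60% target: scale out to 6.
print(desired_replicas(current=4, metric=90.0, target=60.0))  # 6
# Load drops to 15% CPU: scale in, but never below the floor of 2.
print(desired_replicas(current=6, metric=15.0, target=60.0))  # 2
```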

Protection of Data in the Cloud and Security by Design

As data breaches become more common, security becomes a top priority in cloud solution architecture. Architects build identity management, access controls, encryption, and monitoring into their designs as part of a multi-layered security strategy. By adhering to industry standards and best practices, such as the shared responsibility model and compliance frameworks, organizations can safeguard confidential information and maintain regulatory compliance in the cloud.

Using Managed Services to Increase Productivity

Cloud service providers offer a variety of managed services, including serverless computing, machine learning, databases, and analytics, that streamline operations and reduce the burden of maintaining infrastructure. These services allow firms to focus on innovation instead of infrastructure maintenance. When building cloud-native applications, architects can reduce costs, shorten time-to-market, and optimize performance by selecting the right mix of managed services.

Cost Control and Ongoing Optimization

Cost optimization is essential because inefficient resource use can quickly drive up costs. Architects monitor resource utilization, analyze cost trends, and identify optimization opportunities with the help of cost-management tools. Businesses can cut waste and get more value from their cloud spending by using spot instances, reserved instances, and cost allocation tags.
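A quick way to reason about reserved versus on-demand capacity is a break-even calculation: a commitment that bills every hour of the year pays off only if the instance would otherwise run more than a certain fraction of the time. The hourly prices below are invented placeholders, not any provider's actual rates, and the model ignores upfront-payment options and spot pricing.

```python
HOURS_PER_YEAR = 8760


def annual_costs(on_demand_hourly: float, reserved_hourly: float,
                 utilization: float) -> tuple[float, float]:
    """On-demand bills only hours used; the reservation bills every hour of the year."""
    on_demand = on_demand_hourly * HOURS_PER_YEAR * utilization
    reserved = reserved_hourly * HOURS_PER_YEAR
    return on_demand, reserved


def break_even_utilization(on_demand_hourly: float, reserved_hourly: float) -> float:
    """Fraction of the year above which the reservation becomes cheaper."""
    return reserved_hourly / on_demand_hourly


od, ri = 0.10, 0.06  # hypothetical $/hour prices
print(f"break-even at {break_even_utilization(od, ri):.0%} utilization")  # 60%
for u in (0.3, 0.9):
    on_demand, reserved = annual_costs(od, ri, u)
    print(f"{u:.0%}: on-demand ${on_demand:,.0f} vs reserved ${reserved:,.0f}")
```

At 30% utilization on-demand is cheaper; at 90% the reservation wins, which is why steady baseline load is the usual candidate for reservations.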

Embracing Automation and DevOps

Automation and DevOps practices are important elements of cloud solution design, enabling companies to deliver software more rapidly, reliably, and efficiently. Architects create pipelines for continuous integration, delivery, and deployment, which speed up the software development process and allow rapid iteration. By provisioning and managing infrastructure programmatically with Infrastructure as Code (IaC) and configuration management tools, teams can minimize manual work and keep environments consistent.

Multi-Cloud and Hybrid Strategies

In an increasingly interconnected world, many firms adopt hybrid and multi-cloud strategies, combining services from several cloud providers with on-premises infrastructure. Cloud solution architects must design systems that integrate these environments while ensuring interoperability, data consistency, and regulatory compliance. Using hybrid connectivity options such as VPNs, AWS Direct Connect, or Azure ExpressRoute, organizations can build hybrid deployments that combine the strengths of public cloud and on-premises data centers.

Analytics and Data Management

Modern organizations depend on data because it fosters innovation and informed decision-making. Cloud solution architects design data management and analytics solutions that let organizations gather, store, process, and analyze large volumes of data. By leveraging cloud-native data services such as data warehouses, data lakes, and real-time analytics platforms, organizations can extract valuable insights and gain a competitive advantage in their industries. Architects also implement data governance frameworks and privacy-enhancing technologies to comply with data protection rules and safeguard sensitive information.

Computing Without a Server

Serverless computing, a significant shift in cloud architecture, frees organizations to focus on building applications rather than provisioning and managing servers; the servers still exist, but the cloud provider operates them. Cloud solution architects build serverless applications using event-driven architectures and Function-as-a-Service (FaaS) platforms such as AWS Lambda, Azure Functions, or Google Cloud Functions. By abstracting away the underlying infrastructure, serverless architectures offer automatic scaling, pay-per-use pricing, and agility, empowering companies to innovate swiftly and change course without paying for idle capacity.
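To show what a FaaS function looks like, here is a minimal Python handler in the shape AWS Lambda expects: a function that takes `event` and `context` and returns a status-code/body dictionary of the kind API Gateway proxy integrations use. The `queryStringParameters` field follows the API Gateway proxy event format; the handler name is configurable in Lambda, so `lambda_handler` and the greeting logic are assumptions for illustration.

```python
import json


def lambda_handler(event, context):
    """Hypothetical greeting endpoint; the platform invokes this once per event."""
    params = event.get("queryStringParameters") or {}  # None when no query string
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test with a hand-built event; in the cloud, the platform supplies both arguments.
response = lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)
print(response["statusCode"], response["body"])
```

Note that the function contains no server setup, port binding, or scaling logic; those concerns belong to the platform.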

Conclusion

As we close our investigation into cloud solution architecture, it is evident that the cloud is more than just a technology platform; it is a force for innovation and transformation. By embracing scalability, resilience, security, and efficiency, organizations can seize new opportunities, drive business expansion, and preserve their competitive edge in today's rapidly evolving digital market. Whether you are developing a new cloud-native application or starting a cloud migration, a sound cloud solution architecture is the foundation for success.


How Web Development Services Can Benefit Startups

Web Development for Startups: from MVP development to scaling a digital presence.

Startups, often characterized by limited resources and a passion for innovation, have a unique set of challenges and opportunities. In the digital age, web development services are a vital component for startups looking to bring their vision to life and achieve growth. In this article, we’ll explore how web development services can benefit startups, from Minimum Viable Product (MVP) development to scaling a robust digital presence.

1. MVP Development: Turning Ideas into Reality

Minimum Viable Product (MVP) development is a cornerstone for startups. It involves creating a simplified version of your product with the core features that solve a specific problem or address a unique need. Web development plays a crucial role in this initial phase by:

Rapid Prototyping: Web developers can quickly create functional prototypes that allow startups to test and validate their ideas with minimal investment.

User Feedback: MVPs are an opportunity to gather valuable user feedback, enabling startups to refine and improve their product based on real-world usage.

Cost Efficiency: Developing a web-based MVP is often more cost-effective than building a complete mobile application or software platform, making it an ideal starting point for startups with limited budgets.

2. Scalability: Preparing for Growth

As startups gain traction and user demand increases, scalability becomes a critical concern. Effective web development services can help startups prepare for growth by:

Scalable Architecture: Web developers can design and implement scalable architecture that allows the platform to handle increased traffic and data without performance degradation.

Database Optimization: Proper database design and optimization are essential for ensuring that the system can grow smoothly as more users and data are added.

Load Balancing: Load balancing distributes web traffic across multiple servers, ensuring that the system remains responsive and available, even during high-demand periods.
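To make the load-balancing idea concrete, here is a minimal round-robin sketch in Python. It is illustrative only. The server addresses are hypothetical, and the manual `mark_down` call stands in for real health checks. In production, teams normally rely on a managed load balancer or a proxy such as NGINX or HAProxy rather than application code.

```python
from itertools import cycle
from typing import Iterable


class RoundRobinBalancer:
    """Hands out backend servers in rotation, skipping ones marked unhealthy."""

    def __init__(self, servers: Iterable[str]) -> None:
        self._servers = list(servers)
        if not self._servers:
            raise ValueError("at least one server is required")
        self._ring = cycle(self._servers)
        self._unhealthy: set[str] = set()

    def mark_down(self, server: str) -> None:
        """Take a server out of rotation (a stand-in for a failed health check)."""
        self._unhealthy.add(server)

    def mark_up(self, server: str) -> None:
        """Return a recovered server to rotation."""
        self._unhealthy.discard(server)

    def next_server(self) -> str:
        # Try each server at most once per call so we never loop forever.
        for _ in range(len(self._servers)):
            server = next(self._ring)
            if server not in self._unhealthy:
                return server
        raise RuntimeError("no healthy servers available")


# Hypothetical backend addresses.
balancer = RoundRobinBalancer(["app-1:8080", "app-2:8080", "app-3:8080"])
balancer.mark_down("app-2:8080")
picks = [balancer.next_server() for _ in range(4)]
print(picks)
```

Round-robin is the simplest policy. Real balancers also offer strategies such as least-connections or weighted routing, which suit backends of uneven capacity better.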

3. Mobile Responsiveness: Reaching a Wider Audience

With mobile device usage surpassing desktop usage, it’s essential for startups to have a web presence that is responsive and mobile-friendly. Web development services can ensure that your website or web application:

Adapts to Different Screens: A responsive design ensures that your site functions and looks good on various devices and screen sizes, from smartphones and tablets to desktops.

Improved User Experience: Mobile-responsive websites provide a seamless and enjoyable user experience, enhancing engagement and reducing bounce rates.

Enhanced SEO: Google and other search engines prioritize mobile-responsive websites in search results, potentially boosting your startup’s visibility.

4. User-Centric Design: Building Trust and Loyalty

A user-centric design is essential for startups looking to build trust and loyalty. Web development can help in the following ways:

Intuitive User Interface (UI): A well-designed UI simplifies user interaction and navigation, making the product user-friendly.

User Experience (UX): An optimized UX ensures that users enjoy using your product, leading to increased satisfaction and loyalty.

Branding and Consistency: Web developers can create a design that reflects your brand identity and maintains consistency throughout the user journey.

5. Security and Data Protection: Safeguarding User Information

For startups, maintaining the security and privacy of user data is paramount. Web development services can ensure that your platform is secure by:

Implementing Encryption: Transport Layer Security (TLS, the successor to SSL) and other security measures protect data transmitted between users and your server.

Data Backup and Recovery: Regular data backups and recovery procedures are vital for safeguarding information and minimizing data loss in case of unexpected events.

Authentication and Authorization: Implementing robust authentication and authorization mechanisms ensures that only authorized users can access sensitive data.
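The Python snippet below is a minimal sketch of the authentication and authorization points above. It uses only the standard library. Passwords are stored as salted PBKDF2 hashes, and a permission is checked against a role. The role names, permission strings, and iteration count are assumptions for illustration. A real startup would usually lean on its framework's auth module or an identity provider instead.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

# Assumption: a high PBKDF2 work factor; check current OWASP Password Storage
# guidance for a recommended value and revisit it periodically.
ITERATIONS = 600_000


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, hash). Store both; never store the plaintext password."""
    salt = salt or secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Authentication: recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


# Hypothetical role-to-permission map used for the authorization step.
PERMISSIONS = {
    "admin": {"read:reports", "delete:users"},
    "member": {"read:reports"},
}


def is_authorized(role: str, permission: str) -> bool:
    """Authorization: does the authenticated user's role grant this permission?"""
    return permission in PERMISSIONS.get(role, set())


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))                   # False
print(is_authorized("member", "delete:users"))                        # False
```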

Conclusion: The Road to Startup Success

Web development is a driving force behind the success of startups. From MVP development to scalability, mobile responsiveness, user-centric design, security, and privacy, web development services provide the foundation for startups to innovate and grow. By partnering with skilled web developers and investing in their digital presence, startups can create a compelling and competitive edge in today’s dynamic business landscape.


#kushitworld#india#saharanpur#itcompany#seo#seo services#webdevelopment#digitalmarketing#websitedesigning


Advanced Techniques in Full-Stack Development

Here is a deeper look at some more advanced techniques and concepts in full-stack development:

1. Server-Side Rendering (SSR) and Static Site Generation (SSG):

SSR: Rendering web pages on the server side to improve performance and SEO by delivering fully rendered pages to the client.

SSG: Generating static HTML files at build time, which speeds up page delivery and reduces server load.
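Static site generation can be sketched in a few lines of Python. Every page is rendered once at build time and kept as plain HTML, so the server does no per-request rendering. The page content and template below are hypothetical. Real projects typically use a dedicated generator or framework rather than hand-rolled templates.

```python
import html
from pathlib import Path
from string import Template

PAGE = Template(
    "<!doctype html>\n<html><head><title>$title</title></head>"
    "<body><h1>$title</h1><p>$body</p></body></html>\n"
)

# Hypothetical content; a real generator would read Markdown files or query a headless CMS.
CONTENT = {
    "index.html": {"title": "Home", "body": "Welcome to our site."},
    "about.html": {"title": "About", "body": "Pages rendered at build time & served as-is."},
}


def render_site(content: dict[str, dict[str, str]]) -> dict[str, str]:
    """Render every page once, at build time, escaping values so content cannot inject markup."""
    return {
        filename: PAGE.substitute({key: html.escape(value) for key, value in fields.items()})
        for filename, fields in content.items()
    }


def write_site(pages: dict[str, str], out_dir: Path) -> None:
    """Write the pre-rendered HTML so any static host or CDN can serve it without a server runtime."""
    out_dir.mkdir(parents=True, exist_ok=True)
    for filename, markup in pages.items():
        (out_dir / filename).write_text(markup, encoding="utf-8")


pages = render_site(CONTENT)
print(sorted(pages))  # ['about.html', 'index.html']
```

A build step would then call `write_site(pages, Path("dist"))`, where the folder name is arbitrary. With SSR, the server would run this kind of rendering on every request instead of once per deploy.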

2. WebAssembly:

WebAssembly (Wasm): A binary instruction format for a stack-based virtual machine. It enables high-performance execution of code in web browsers, allowing code written in languages like C, C++, and Rust to run in web applications.

3. Progressive Web Apps (PWAs) Enhancements:

Background Sync: Allowing PWAs to sync data in the background even when the app is closed.

Web Push Notifications: Implementing push notifications to engage users even when they are not actively using the application.

4. State Management:

Redux and MobX: State management libraries, most commonly used with React, for managing complex application state efficiently.

Reactive Programming: Utilizing RxJS or other reactive programming libraries to handle asynchronous data streams and events in real-time applications.

5. WebSockets and WebRTC:

WebSockets: Enabling real-time, bidirectional communication between clients and servers for applications requiring constant data updates.

WebRTC: Facilitating real-time communication, such as video chat, directly between web browsers without the need for plugins or additional software.

6. Caching Strategies:

Content Delivery Networks (CDN): Leveraging CDNs to cache and distribute content globally, improving website loading speeds for users worldwide.

Service Workers: Using service workers to cache assets and data, providing offline access and improving performance for returning visitors.

7. GraphQL Subscriptions:

GraphQL Subscriptions: Enabling real-time updates in GraphQL APIs by letting clients subscribe to specific events and receive updates pushed from the server (commonly over WebSockets) when data changes.

8. Authentication and Authorization:

OAuth 2.0 and OpenID Connect: Implementing standard protocols for delegated authorization (OAuth 2.0) and for user authentication built on top of it (OpenID Connect) to handle login and access control.

JSON Web Tokens (JWT): Utilizing signed JWTs to transmit claims between parties so the receiver can verify their integrity and authenticity. A signed JWT is only encoded, not encrypted, so it should not carry secrets.

9. Content Management Systems (CMS) Integration:

Headless CMS: Integrating a headless CMS such as Contentful or Strapi, allowing content creators to manage content independently of the application's front end.

10. Automated Performance Optimization:

Lighthouse and Web Vitals: Utilizing tools like Lighthouse and Google's Web Vitals to measure and optimize web performance, focusing on key user-centric metrics like loading speed and interactivity.

11. Machine Learning and AI Integration:

TensorFlow.js and ONNX Runtime Web (the successor to ONNX.js): Integrating machine learning models directly into web applications for tasks like image recognition, language processing, and recommendation systems.

12. Cross-Platform Development with Electron:

Electron: Building cross-platform desktop applications using web technologies (HTML, CSS, JavaScript), allowing developers to create desktop apps for Windows, macOS, and Linux.

13. Advanced Database Techniques:

Database Sharding: Implementing database sharding techniques to distribute large databases across multiple servers, improving scalability and performance.

Full-Text Search and Indexing: Implementing full-text search capabilities and optimized indexing for efficient searching and data retrieval.
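Full-text search is built on an inverted index, which maps each term to the documents that contain it. The Python sketch below shows the core idea with AND-style queries. The tokenizer is deliberately naive, with no stemming, ranking, or stop-word handling. Dedicated search engines and database full-text features take care of those for you.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    def __init__(self) -> None:
        # term -> set of document ids containing that term (the "postings")
        self._postings: dict[str, set[int]] = defaultdict(set)

    def add(self, doc_id: int, text: str) -> None:
        for term in tokenize(text):
            self._postings[term].add(doc_id)

    def search(self, query: str) -> set[int]:
        """Return ids of documents containing every query term."""
        terms = tokenize(query)
        if not terms:
            return set()
        # .get() avoids creating empty entries in the defaultdict for unknown terms.
        results = set(self._postings.get(terms[0], set()))
        for term in terms[1:]:
            results &= self._postings.get(term, set())
        return results


index = InvertedIndex()
index.add(1, "Sharding distributes data across servers")
index.add(2, "Indexing speeds up data retrieval")
index.add(3, "Full-text search needs an inverted index")
print(index.search("data"))            # {1, 2}
print(index.search("inverted index"))  # {3}
```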

14. Chaos Engineering:

Chaos Engineering: Introducing controlled experiments to identify weaknesses and potential failures in the system, ensuring the application's resilience and reliability.
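A chaos experiment starts with a steady-state hypothesis, injects a fault, and checks whether the hypothesis still holds. The Python sketch below shows that loop in miniature. A wrapper injects failures into a hypothetical dependency, and the experiment measures whether a simple retry policy keeps the success rate acceptable. The 30% failure rate, retry counts, seed, and 90% target are all assumptions. Real experiments usually run against live infrastructure with dedicated tooling such as Netflix's Chaos Monkey.

```python
import random
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class InjectedFault(Exception):
    """Raised on purpose by the chaos wrapper to simulate a failing dependency."""


def with_chaos(func: Callable[[], T], failure_rate: float, rng: random.Random) -> Callable[[], T]:
    """Wrap `func` so that roughly `failure_rate` of calls fail deliberately."""
    def wrapped() -> T:
        if rng.random() < failure_rate:
            raise InjectedFault("chaos experiment: injected failure")
        return func()
    return wrapped


def call_with_retries(func: Callable[[], T], attempts: int) -> T:
    """The resilience mechanism under test: retry a flaky call a bounded number of times."""
    last_error: Optional[Exception] = None
    for _ in range(attempts):
        try:
            return func()
        except InjectedFault as exc:
            last_error = exc
    raise RuntimeError(f"gave up after {attempts} attempts") from last_error


def success_rate(attempts: int, requests: int = 1000, seed: int = 42) -> float:
    """Send `requests` calls through a dependency that fails about 30% of the time."""
    rng = random.Random(seed)  # fixed seed keeps the experiment repeatable
    flaky_lookup = with_chaos(lambda: "ok", failure_rate=0.3, rng=rng)
    successes = 0
    for _ in range(requests):
        try:
            call_with_retries(flaky_lookup, attempts)
            successes += 1
        except RuntimeError:
            pass
    return successes / requests


# Hypothesis (an assumption for this sketch): with 3 attempts, at least 90% of requests succeed.
no_retry, with_retry = success_rate(attempts=1), success_rate(attempts=3)
print(f"no retries: {no_retry:.1%}, three attempts: {with_retry:.1%}")
print("hypothesis holds:", with_retry >= 0.90)
```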

15. Serverless Architectures with AWS Lambda or Azure Functions:

Serverless Architectures: Building applications as a collection of small, single-purpose functions that run in a serverless environment, providing automatic scaling and cost efficiency.
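To show how small a serverless function can be, here is a sketch of an AWS Lambda handler in Python. It uses the conventional `lambda_handler(event, context)` entry point and the response shape expected by an API Gateway proxy integration. The greeting logic and the `name` query parameter are hypothetical. Because the handler is a plain function, you can smoke-test it locally with a hand-built event and no AWS account.

```python
import json


def lambda_handler(event, context):
    """Single-purpose function: greet the user named in the query string.

    Assumes an API Gateway proxy-style event, where `queryStringParameters`
    is None when the request has no query string.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-built event; `context` is unused here, so None is fine.
    response = lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)
    print(response["statusCode"], response["body"])
```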

16. Data Pipelines and ETL (Extract, Transform, Load) Processes:

Data Pipelines: Creating automated data pipelines for processing and transforming large volumes of data, integrating various data sources and ensuring data consistency.
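Here is a minimal extract-transform-load pipeline in Python, using only the standard library. It extracts rows from a CSV export. It then transforms them: trimming names, normalizing currency codes, converting amounts to integer cents, and dropping duplicates and invalid rows. Finally, it loads the result into SQLite. The sample data and table layout are hypothetical. Production pipelines usually target a data warehouse and run on a scheduler, but the three stages follow the same pattern.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (note the stray spaces, duplicate, and bad amount).
RAW_CSV = """order_id,customer,amount,currency
1001, Alice ,19.99,usd
1002,Bob,5.00,USD
1002,Bob,5.00,USD
1003,Carol,not-a-number,USD
"""


def extract(text: str) -> list[dict]:
    """Extract: parse raw CSV into dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: clean and normalize rows; drop duplicates and records that fail validation."""
    seen, clean = set(), []
    for row in rows:
        try:
            amount_cents = round(float(row["amount"]) * 100)
        except ValueError:
            continue  # a real pipeline would route bad rows to a quarantine table instead
        order_id = int(row["order_id"])
        if order_id in seen:
            continue
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip(), amount_cents, row["currency"].strip().upper()))
    return clean


def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: upsert into the target table and return the resulting row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER, currency TEXT)"
    )
    # INSERT OR REPLACE keeps re-runs idempotent: the same order is never loaded twice.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(load(transform(extract(RAW_CSV)), conn))  # 2
```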

17. Responsive Design and Accessibility:

Responsive Design: Implementing advanced responsive design techniques for seamless user experiences across a variety of devices and screen sizes.

Accessibility: Ensuring web applications are accessible to all users, including those with disabilities, by following WCAG guidelines and ARIA practices.


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that offers SLA-backed low-latency reads and writes, with options to scale throughput automatically.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS


AWS Certified Solutions Architect Associate Certification in Ottawa: Kick-Start Your Cloud Career

The Increasing Worth of Cloud Certification

Cloud computing is now a core part of IT infrastructure, and organizations around the globe use cloud platforms to gain scalability, security, and efficiency. Amazon Web Services (AWS) is a leader in cloud services, offering an enormous collection of tools that help organizations develop and maintain applications. As a result, AWS certifications are highly sought after by employers and IT professionals worldwide.

One of the most sought-after AWS certifications is the AWS Certified Solutions Architect Associate Certification. It affirms a candidate's ability to design secure and reliable applications on the AWS platform. For professionals in Ottawa, the certification is especially valuable given how widely businesses in the city use cloud technology.

Why Ottawa is an Ideal Place for AWS Certification

Ottawa, the capital of Canada, boasts a bustling technology industry across both the public and private sectors. With federal government departments and technology companies alike investing in digital growth, the city continues to need highly skilled cloud experts.

Professionals in Ottawa who hold an AWS certification are well positioned for many career opportunities. Local companies actively hire cloud architects, systems engineers, DevOps engineers, and consultants who can build scalable solutions on AWS. As new technology investment continues to flow into the city, demand for AWS skills is likely to remain high.

Learning about the AWS Certified Solutions Architect Associate Certification

The AWS Certified Solutions Architect Associate Certification is meant for practitioners with experience designing distributed applications. It focuses on the ability to build highly available, scalable, secure, and cost-effective systems using AWS services.

To become certified, candidates must pass an exam that assesses their ability to design solutions that meet customer requirements, apply best practices, and choose the AWS services best suited to a specific use case. The exam covers networking, storage, compute, security, and database services, giving certified professionals a well-rounded understanding of AWS architecture.

How to Prepare for Certification in Ottawa

Hands-on practice is essential for the AWS Certified Solutions Architect Associate exam, and Ottawa offers every preparation format, from online courses to classroom training and hybrid programs. Whether you learn best on your own or from an instructor, you can find a course that fits your style.

Candidates can also learn from free and paid AWS material, such as whitepapers, tutorials, documentation, and practice exams. The AWS Free Tier is a great way to gain hands-on experience by working with cloud resources directly in a live environment. Combining formal study with experimentation builds the confidence and competence needed to pass the exam.

AWS Certification Training Opportunities in Ottawa

Training institutions in Ottawa offer comprehensive courses to help candidates prepare for the AWS Certified Solutions Architect Associate exam. Options include Ottawa-based colleges, private training providers, and out-of-town providers that deliver instructor-led courses online or onsite in Ottawa.

Many of these programs are designed for working professionals and offer flexible schedules. This lets candidates fit training around work and other commitments while continuing to progress toward certification.

Exam Format and What to Expect

The AWS Certified Solutions Architect Associate exam consists of multiple-choice and multiple-response questions. It tests both knowledge and the ability to apply that knowledge to real-world situations. The exam lasts 130 minutes and can be taken online with remote proctoring or at a testing center in Ottawa.

Questions typically present a scenario and ask the candidate to identify the best architecture using AWS services. Answering well requires understanding AWS concepts and being able to compare and contrast alternative solutions. Knowing how different AWS services work together, and how each helps meet specific business needs, is essential to passing the exam.

Career Opportunities After Certification

The AWS Certified Solutions Architect Associate Certification can open new career opportunities in Ottawa's technology sector. Roles include cloud solutions designer, cloud engineer, systems architect, DevOps engineer, and AWS consultant. Typical responsibilities include designing and deploying cloud solutions, optimizing infrastructure performance, and ensuring compliance with security best practices.

AWS-certified professionals in Ottawa will find opportunities in both government and the private sector. Federal agencies continue to move services to the cloud, which sustains demand for AWS-certified experts. Multinational companies and start-ups across the region are also adopting AWS as part of their digital strategies.

AWS-Certified Professionals' Potential Earnings in Ottawa

AWS-certified professionals in Ottawa are among the better-paid employees in the technology industry. Base salaries for holders of the AWS Certified Solutions Architect Associate certification typically start at around $80,000 CAD per year and can reach $120,000 CAD or more depending on experience and expertise.

Employers treat AWS certifications as evidence of technical ability and commitment to career growth. As a result, the credential can serve as leverage when negotiating pay or a promotion. Certification can also make you a candidate for project leadership roles or specialized work with greater earning potential.

The Broader Impact of AWS Certification

Beyond the short-term career boost, earning the AWS Certified Solutions Architect Associate Certification supports long-term career development. It is a stepping stone to higher-level AWS certifications such as the AWS Certified Solutions Architect Professional or the AWS Certified DevOps Engineer. These further develop your skills and can propel your cloud architecture career to great heights.

Certification also keeps professionals current with rapidly changing technologies. AWS continuously updates its services and best practices, and certified professionals are better positioned to lead innovation. This culture of ongoing learning is invaluable in Ottawa's highly competitive technology industry.

Final Thoughts: Take the Next Step Toward Certification

The AWS Certified Solutions Architect Associate certification benefits any IT professional who wants to take their career to new heights in Ottawa's ever-evolving technology environment. With cloud adoption increasing and demand rising for trained experts, there has never been a better time to get cloud training.

Ottawa offers plenty of opportunities to build cloud expertise and become more employable, and earning this certification can set you apart in today's knowledge-based economy. Whether you are an established IT professional or just beginning to build cloud expertise, becoming an AWS Certified Solutions Architect Associate is a solid step toward a secure future.


Common Mistakes to Avoid When Hiring a NoSQL Engineer

Many tech businesses are switching from traditional relational databases to NoSQL solutions due to the rise of big data and real-time applications. Hiring the right engineer can make or break your data architecture, whether you're using Couchbase, Redis, Cassandra, or MongoDB. Yet many teams still make mistakes in the hiring process.

Here are the most typical blunders to steer clear of—and what to do instead—if you intend to hire NoSQL engineers.

Smart Hiring Starts with Clear Expectations and the Right Evaluation Strategy

Focusing only on tool familiarity

It's easy to assume that knowing a particular NoSQL database, such as MongoDB or DynamoDB, is sufficient. However, true proficiency extends beyond syntax. A competent NoSQL developer should understand data modelling, consistency trade-offs, partitioning (sharding), and indexing across many systems.

Ask them how they would create a schema for your use case or deal with scalability issues in a distributed setting rather than just what technologies they have used.

Overlooking use case alignment

NoSQL databases are not all made equal. Some excel in document storage or graph traversal, while others are excellent for quick key-value access. Make sure the NoSQL engineers you hire have experience with the kind of system your project requires.

For instance, employing someone with solely batch-oriented system experience could lead to problems later on if your product needs real-time analytics. Match their experience to your business objectives and architecture.

Ignoring performance optimization skills

NoSQL engineering includes a significant amount of performance-under-load design. However, a lot of interviews don't evaluate a candidate's ability to locate and address bottlenecks.

Look for engineers who can explain sharding, replication, caching strategies, and query profiling. These are the skills that keep systems fast and consistent as your data grows.

Not testing problem-solving skills

Because NoSQL systems frequently lack the rigid structure of SQL-based ones, their designs may be more complex. Asking abstract questions or concentrating solely on theory is a mistake that many tech companies make.

Present real-world scenarios instead. How would they migrate SQL data to NoSQL? How would they resolve conflicting records in a distributed system? Questions like these help reveal genuine, practical experience.

Relying only on resumes or generic platforms

Resumes frequently fail to convey how a person collaborates or thinks through problems. Make sure the IT recruitment platform you're using offers resources that help you evaluate real skill rather than just job titles, such as technical tests, project portfolios, or references.

You have a higher chance of hiring NoSQL developers with proven abilities if you use platforms that are specifically designed for data-centric roles.

Not considering cross-functional collaboration

NoSQL developers frequently collaborate with analysts, DevOps, and backend teams. They should be able to convert data requirements into scalable solutions and connect with various stakeholders.

Make sure your NoSQL hire knows how to expose and format data for downstream analytics if your company intends to hire data scientists in the future.

Closing Thoughts

There is more to hiring a NoSQL developer than simply crossing off a list of technologies. Finding someone who can accurately model data, address performance problems, and adjust to changing business requirements is the key.

It's crucial for software organizations that deal with big, flexible data systems to steer clear of these common blunders. Take your time, make use of the appropriate resources, such as a reliable IT recruitment platform, and ensure that the individual you choose is capable of more than just writing queries; they should be able to help you develop your long-term data strategy.


Pass AWS SAP-C02 Exam in First Attempt

Crack the AWS Certified Solutions Architect - Professional (SAP-C02) exam on your first try with real exam questions, expert tips, and the best study resources from JobExamPrep and Clearcatnet.

How to Pass AWS SAP-C02 Exam in First Attempt: Real Exam Questions & Tips

Are you aiming to pass the AWS Certified Solutions Architect – Professional (SAP-C02) exam on your first try? You’re not alone. With the right strategy, real exam questions, and trusted study resources like JobExamPrep and Clearcatnet, you can achieve your certification goals faster and more confidently.

Overview of SAP-C02 Exam

The SAP-C02 exam validates your advanced technical skills and experience in designing distributed applications and systems on AWS. Key domains include:

Design Solutions for Organizational Complexity

Design for New Solutions

Continuous Improvement for Existing Solutions

Accelerate Workload Migration and Modernization

Exam Format:

Number of Questions: 75

Type: Multiple choice, multiple response

Duration: 180 minutes

Passing Score: 750 (scaled score on a 100–1000 scale)

Cost: $300 USD

AWS SAP-C02 Exam-Style Practice Questions

Here are 5 real-exam style questions to give you a feel for the exam difficulty and topics:

Q1: A company is migrating its on-premises Oracle database to Amazon RDS. The solution must minimize downtime and data loss. Which strategy is BEST?

A. AWS Database Migration Service (DMS) with full load only
B. RDS snapshot and restore
C. DMS with CDC (change data capture)
D. Export and import via S3

Answer: C. DMS with CDC

Q2: You are designing a solution that spans multiple AWS accounts and VPCs. Which AWS service allows seamless inter-VPC communication?

A. VPC Peering
B. AWS Direct Connect
C. AWS Transit Gateway
D. NAT Gateway

Answer: C. AWS Transit Gateway

Q3: Which strategy enhances resiliency in a serverless architecture using Lambda and API Gateway?

A. Use a single Availability Zone
B. Enable retries and DLQs (Dead Letter Queues)
C. Store state in Lambda memory
D. Disable logging

Answer: B. Enable retries and DLQs

Q4: A company needs to archive petabytes of data with occasional access within 12 hours. Which storage class should you use?

A. S3 Standard
B. S3 Intelligent-Tiering
C. S3 Glacier
D. S3 Glacier Deep Archive

Answer: D. S3 Glacier Deep Archive

Q5: You are designing a disaster recovery (DR) solution for a high-priority application. The RTO is 15 minutes, and RPO is near zero. What is the most appropriate strategy?

A. Pilot Light
B. Backup & Restore
C. Warm Standby
D. Multi-Site Active-Active

Answer: D. Multi-Site Active-Active

Recommended Resources to Pass SAP-C02 in First Attempt

To master these types of questions and scenarios, rely on real-world tested resources. We recommend:

✅ JobExamPrep

A premium platform offering curated practice exams, scenario-based questions, and up-to-date study materials specifically for AWS certifications. Thousands of professionals trust JobExamPrep for structured and realistic exam practice.

✅ Clearcatnet

A specialized site focused on cloud certification content, especially AWS, Azure, and Google Cloud. Their SAP-C02 study guide and video explanations are ideal for deep conceptual clarity.

Expert Tips to Pass the AWS SAP-C02 Exam

Master Whitepapers – Read AWS Well-Architected Framework, Disaster Recovery, and Security best practices.

Practice Scenario-Based Questions – Focus on use cases involving multi-account setups, migration, and DR.

Use Flashcards – Especially for services like AWS Control Tower, Service Catalog, Transit Gateway, and DMS.

Daily Review Sessions – Use JobExamPrep and Clearcatnet quizzes every day.

Mock Exams – Simulate the exam environment at least twice before the real test.

🎓 Final Thoughts

The AWS SAP-C02 exam is tough—but with the right approach, you can absolutely pass it on the first attempt. Study smart, practice real exam questions, and leverage resources like JobExamPrep and Clearcatnet to build both confidence and competence.

#SAPC02#AWSSAPC02#AWSSolutionsArchitect#AWSSolutionsArchitectProfessional#AWSCertifiedSolutionsArchitect#SolutionsArchitectProfessional#AWSArchitect#AWSExam#AWSPrep#AWSStudy#AWSCertified#AWS#AmazonWebServices#CloudCertification#TechCertification#CertificationJourney#CloudComputing#CloudEngineer#ITCertification


How to Optimize Databases for Scalability from Day 1 | 2025 Guide

Introduction

Scaling a digital product without database foresight is like building a tower without a foundation. It may stand for a while, but it won’t survive pressure. If you want your application to scale with confidence, you must optimize databases for scalability right from the planning phase!

What does that mean? Selecting the right technologies, modeling data intentionally, and aligning performance expectations with long-term architectural goals.

From startups preparing for growth to enterprises modernizing legacy systems, the principle is the same: scalable performance doesn’t start at scale; it starts during setup!

In this blog, we’ll explore the strategies, design patterns, and decisions that ensure your database layer doesn’t just support growth but accelerates it!

Why Optimizing Databases for Scalability Matters from Day 1

Most businesses think about scaling their systems only after traffic surges.

But by then, it’s usually too late. A truly scalable system handles heavy workloads and keeps running, but databases that aren’t built with scaling in mind become silent bottlenecks: they slow performance, increase response times, and risk system failure just when user demand is rising.

The irony? The most critical scaling problems usually begin with small oversights in the early architecture phase:

poor schema design,

lack of indexing strategy, or

choosing the wrong database engine altogether.

These seemingly minor decisions don’t hurt at launch. But over time, they manifest as fragile joins, sluggish queries, and outages that cost both revenue and user trust.

Starting with scalability as a design principle means recognizing the role your data layer plays: not just storing information, but sustaining business performance.

"You don’t scale databases when you grow; you grow because your databases are already built to scale."

Understanding the Different Types of System Scalability

When planning for future growth, it’s essential to understand how your system will scale. Each scalability path introduces its own engineering trade-offs that directly affect performance, resilience, and cost over time.

Types of System Scalability

Vertical Scalability

Vertical scaling refers to enhancing a single server’s capacity by adding more memory, CPU, or storage. While it’s easy to implement early on, it's inherently limited. Over time, you’ll be bound by the physical capacity of a single machine, and costs rise steeply as you move toward high-end hardware.

Horizontal Scalability

This approach distributes workloads across multiple machines or nodes. It enables dynamic scaling by adding or removing resources as needed, making it better suited to cloud-native systems, large-scale platforms, and distributed databases. However, it requires early architectural planning and operational maturity.

Challenges in Optimizing Databases for Scalability

One of the biggest mistakes businesses make is assuming scalability will take care of itself over time. In reality, the earlier poor scaling decisions are made, the faster the system becomes brittle.

Here are a few challenges that surface early when scalability isn’t planned from day one:

Single Point of Failure:

Without horizontal scaling or replication, your database becomes a bottleneck and a risk to uptime.

Lack of Data Partitioning Strategy:

As data grows, large unpartitioned tables slow down reads and writes, especially under concurrent access.

Improper Indexing:

Skipping index design early leads to query lag, full table scans, and long-term maintenance pain.

Assuming Traffic Uniformity:

Businesses often underestimate how read/write patterns shift as the application scales, which leads to overloaded services.

Delayed System Monitoring:

Without early observability, issues appear only after users are impacted, making root cause analysis much harder.

Real scalability requires foresight: analyzing usage patterns, leaving room for flexibility, and choosing scaling methods that suit your data structure. It’s an ongoing strategy, not a one-time item on your launch timeline.

Schema Design for Scalable Databases

A scalable database begins with a schema that anticipates change. Schema design often starts with immediate functionality in mind (tables, relationships, and constraints), but the real challenge lies in keeping those structures efficient as data volume and complexity grow.

The Right Approach to Schema Design

Normalize with Awareness, Denormalize with Intent

Businesses often over-normalize to avoid redundancy, but heavy normalization can hurt performance at scale because common reads need more joins. On the other hand, blind denormalization leads to bloated storage and data inconsistency. Striking the right balance requires understanding your application’s read/write patterns and future query behavior.

Plan for Index Evolution

Indexing is not just about speed; it’s about adaptability. What works for 100 users might slow down drastically for 10,000. Schema design must allow for adding compound (multi-column) indexes and partial (filtered) indexes as usage evolves. The key is designing your tables with the expectation that indexes will change over time.
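To make this concrete, here is a minimal Python sketch that uses the standard-library `sqlite3` module as a stand-in for a production database. The `orders` table, its columns, and both index names are hypothetical. It compares SQLite’s `EXPLAIN QUERY PLAN` output for the same query before and after a compound index is added, and also creates a partial index that covers only pending orders.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Join the detail column (last field) of SQLite's EXPLAIN QUERY PLAN rows."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL,"
    " status TEXT NOT NULL,"
    " created_at TEXT NOT NULL)"
)

# Hypothetical hot query: one customer's recent orders, oldest first.
RECENT_ORDERS = (
    "SELECT id FROM orders "
    "WHERE customer_id = ? AND created_at >= ? "
    "ORDER BY created_at"
)
params = (42, "2025-01-01")

# Without a suitable index, SQLite has to scan the whole table.
plan_before = query_plan(conn, RECENT_ORDERS, params)

# Compound index added once the access pattern is known:
# equality column first, then the range/sort column.
conn.execute(
    "CREATE INDEX idx_orders_customer_created "
    "ON orders (customer_id, created_at)"
)
# Partial index: covers only rows with status = 'pending', so it stays small.
# The planner can use it only for queries whose WHERE clause implies that condition.
conn.execute(
    "CREATE INDEX idx_orders_pending "
    "ON orders (created_at) WHERE status = 'pending'"
)

plan_after = query_plan(conn, RECENT_ORDERS, params)
print("before:", plan_before)
print("after: ", plan_after)
```

Production engines expose the same idea through their own tools (for example, `EXPLAIN` in PostgreSQL and MySQL), so checking the plans of your hottest queries is a cheap habit to adopt before tables get large.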

Design for Flexibility, Not Fragility

Rigid schemas resist change, so teams struggle when requirements shift. To address this, use fields that support variation, like JSON/JSONB columns in PostgreSQL or document structures in MongoDB, when you anticipate fluid requirements. This lets your application evolve without costly migrations or schema overhauls.
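As a rough illustration, the sketch below keeps stable, frequently filtered fields as regular columns and puts attributes that vary per record into a JSON column. It uses SQLite’s `json_extract` (available when SQLite is built with JSON support, as most Python builds are) as a stand-in for PostgreSQL JSONB; the `products` table and its attributes are hypothetical.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
# Stable, frequently filtered fields stay as regular columns;
# attributes that vary per product live in a JSON text column.
conn.execute(
    "CREATE TABLE products ("
    " id INTEGER PRIMARY KEY,"
    " name TEXT NOT NULL,"
    " attributes TEXT NOT NULL DEFAULT '{}')"
)
conn.executemany(
    "INSERT INTO products (name, attributes) VALUES (?, ?)",
    [
        ("T-shirt", json.dumps({"size": "M", "color": "blue"})),
        ("Laptop", json.dumps({"ram_gb": 16, "color": "silver"})),
        # A brand-new attribute, added without an ALTER TABLE migration.
        ("Mug", json.dumps({"color": "blue", "dishwasher_safe": True})),
    ],
)

# Query inside the JSON document with SQLite's json_extract().
blue = [
    name
    for (name,) in conn.execute(
        "SELECT name FROM products "
        "WHERE json_extract(attributes, '$.color') = ? ORDER BY id",
        ("blue",),
    )
]
print(blue)  # ['T-shirt', 'Mug']
```

In PostgreSQL, the equivalent filter would typically use JSONB operators, and a GIN index can keep such lookups fast as the table grows.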

Anticipate Data Volume

Schema design should not just represent business logic; it must also tolerate scale. Decide early whether your system needs to serve a much larger audience, and ask questions like:

What happens when this table grows 100x?

Will your queries still return in milliseconds?

Will storage costs stay manageable?
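Even a back-of-the-envelope calculation helps answer these questions early. The function below is a hypothetical helper that projects storage for a single table after a given growth factor. The average row size and the index overhead (30% of table size by default) are placeholder assumptions you should replace with measurements from your own system.

```python
def projected_storage_gib(rows: int, avg_row_bytes: int, growth_factor: float,
                          index_overhead: float = 0.3) -> float:
    """Rough storage projection for one table after growth, in GiB.

    avg_row_bytes and index_overhead (indexes as a fraction of table size)
    are assumptions; measure them on your own data.
    """
    table_bytes = rows * growth_factor * avg_row_bytes
    total_bytes = table_bytes * (1 + index_overhead)
    return total_bytes / 1024**3


# Hypothetical table: 2 million rows today, ~500 bytes per row, 100x growth.
today = projected_storage_gib(2_000_000, 500, growth_factor=1)
after_growth = projected_storage_gib(2_000_000, 500, growth_factor=100)
print(f"today: {today:.1f} GiB, after 100x: {after_growth:.1f} GiB")
# today: 1.2 GiB, after 100x: 121.1 GiB
```

Under these assumptions, a table of about 1.2 GiB grows to roughly 121 GiB, a size that changes decisions about partitioning, backups, and instance size.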

Designing APIs & Mobile Systems That Scale

Scalability isn’t just about backend systems; it’s deeply tied to how your product interacts with users, especially through APIs and mobile interfaces. Ignoring these touchpoints during early database planning often creates invisible friction that only surfaces when it’s too late.

Scalability Considerations in API Design

Your APIs act as the gateways to your database. Poorly designed APIs (those that allow heavy joins, unfiltered bulk data calls, or no pagination) can unintentionally overload the database layer.

A scalable API controls query scope, handles versioning, and prevents N+1 query patterns. If your APIs don’t align with your data model, no amount of backend optimization can save you under high load.

Scalability Considerations for Mobile Apps

Mobile users often operate in low-bandwidth, high-latency environments. This makes data fetching, sync operations, and caching far more critical.

Design mobile-facing services to be lightweight, cache-aware, and tolerant of intermittent connectivity. Use batched reads, compressed payloads, and sync queuing to reduce the database strain caused by mobile churn and retries.
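Below is one possible way to implement sync queuing, sketched under stated assumptions. The client buffers writes while offline and sends them in batches. Each operation carries a client-generated ID, and the server applies each batch in a single transaction with `INSERT OR IGNORE`, so a retried batch creates no duplicates. `SyncQueue`, `apply_sync_batch`, and the `notes` table are hypothetical, and `sqlite3` stands in for the server database.

```python
import sqlite3
import uuid

conn = sqlite3.connect(":memory:")
# op_id is generated on the device; the PRIMARY KEY makes it an idempotency key.
conn.execute(
    "CREATE TABLE notes ("
    " op_id TEXT PRIMARY KEY,"
    " user_id INTEGER NOT NULL,"
    " body TEXT NOT NULL)"
)


def apply_sync_batch(conn, batch):
    """Server side: apply one batch of queued client writes in a single transaction.

    Returns how many rows were actually inserted; ops already seen are ignored.
    """
    with conn:  # commits on success, rolls back if an exception is raised
        before = conn.total_changes
        conn.executemany(
            "INSERT OR IGNORE INTO notes (op_id, user_id, body) "
            "VALUES (:op_id, :user_id, :body)",
            batch,
        )
        return conn.total_changes - before


class SyncQueue:
    """Client side: buffer writes while offline and send them in batches."""

    def __init__(self, batch_size=50):
        self.batch_size = batch_size
        self.pending = []

    def enqueue(self, user_id, body):
        self.pending.append({"op_id": str(uuid.uuid4()), "user_id": user_id, "body": body})

    def flush(self, send):
        applied = 0
        while self.pending:
            batch = self.pending[: self.batch_size]
            applied += send(batch)  # if send() raises, the batch stays queued for a retry
            del self.pending[: len(batch)]
        return applied


queue = SyncQueue(batch_size=2)
for i in range(5):
    queue.enqueue(user_id=7, body=f"note {i}")
first_batch = list(queue.pending[:2])

applied = queue.flush(lambda batch: apply_sync_batch(conn, batch))
retried = apply_sync_batch(conn, first_batch)  # simulate a duplicate delivery
total = conn.execute("SELECT COUNT(*) FROM notes").fetchone()[0]
print(applied, retried, total)  # 5 0 5
```

The idempotency key is what makes retries safe on flaky mobile networks: the client can resend a batch whenever it is unsure whether the previous attempt succeeded.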

Signs Your Application Needs to Scale

Sometimes, performance degradation creeps in quietly. Long-running queries, delayed API responses, increased memory usage, or slow mobile syncs are often early signs that your application needs to scale.

If your team is writing more workarounds than improvements, or you’re afraid to touch core tables because of breakage risks, it’s time to rethink your system architecture.

Infrastructure and Tools That Support Scaling

A well-structured schema and carefully selected database engine are only as effective as the infrastructure that supports them. As your application grows, infrastructure becomes the silent enabler (or barrier) of sustainable database performance, depending on how you’ve planned to scale it. Choosing the right tools and deployment strategies early helps you avoid costly migrations and reactive firefighting later on.

Managed Services vs. Self-Hosted

Managed database services like Amazon RDS, Azure SQL, and Google Cloud Spanner offer automatic backups, scaling, replication, and failover with minimal configuration. For businesses focused on building products rather than managing infrastructure, these platforms provide a clear advantage. However, self-hosted setups still suit companies that need full control, compliance-specific configurations, or cost optimization at scale.

Sharding and Replication

Sharding splits data across multiple nodes, enabling horizontal scaling and isolating high-volume workloads. Replication, on the other hand, improves availability and spreads read load by copying data to read replicas, often across regions. Together, they form the backbone of database scaling for high-concurrency systems.
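To show how an application might route queries in this kind of setup, here is a minimal sketch that combines hash-based shard selection with read/write splitting. The `ShardRouter` class and the connection names are hypothetical. It uses simple modulo hashing for brevity, which remaps most keys whenever the shard count changes; that is why many systems use consistent hashing or a lookup directory instead.

```python
import hashlib
import itertools


class ShardRouter:
    """Maps a shard key to a shard, then sends writes to its primary and reads to replicas."""

    def __init__(self, shards):
        # shards: list of {"primary": name, "replicas": [name, ...]} (hypothetical names)
        self.shards = shards
        # Round-robin iterator per shard; shards without replicas read from the primary.
        self._read_cycles = [itertools.cycle(s["replicas"] or [s["primary"]]) for s in shards]

    def shard_index(self, shard_key: str) -> int:
        # Stable hash; Python's built-in hash() is salted per process, so it's unsuitable.
        digest = hashlib.sha256(shard_key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.shards)

    def for_write(self, shard_key: str) -> str:
        return self.shards[self.shard_index(shard_key)]["primary"]

    def for_read(self, shard_key: str) -> str:
        return next(self._read_cycles[self.shard_index(shard_key)])


router = ShardRouter([
    {"primary": "db-0-primary", "replicas": ["db-0-replica-a", "db-0-replica-b"]},
    {"primary": "db-1-primary", "replicas": []},  # no replicas: reads fall back to primary
])
key = "customer:1001"
print(router.shard_index(key), router.for_write(key), router.for_read(key))
```

Replicas can lag behind the primary, so reads that must see a user’s own recent write are usually sent to the primary instead.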

Caching Layers

Adding tools like Redis or Memcached helps offload repetitive read operations from your database, drastically improving response time. Caching frequently accessed data, authentication tokens, or even full query results minimizes database strain during traffic spikes.
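The most common pattern here is cache-aside: check the cache first, fall back to the database on a miss, then store the result with an expiry. The sketch below uses a small in-process `TTLCache` class as a stand-in for Redis or Memcached. The class, the key format, and the `load_from_db` callback are all hypothetical.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for Redis/Memcached: entries expire after `ttl` seconds."""

    def __init__(self, ttl: float, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._data = {}

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]  # lazily drop expired entries
            return None
        return value

    def set(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl)

    def delete(self, key):
        self._data.pop(key, None)


def get_user_profile(user_id, cache, load_from_db):
    """Cache-aside read: cache first, database on a miss, then populate the cache."""
    key = f"user:{user_id}:profile"  # hypothetical key format
    profile = cache.get(key)
    if profile is None:
        profile = load_from_db(user_id)
        cache.set(key, profile)
    return profile


now = [0.0]  # fake clock for the demo
cache = TTLCache(ttl=30, clock=lambda: now[0])
db_calls = []


def load_from_db(user_id):
    db_calls.append(user_id)  # stands in for a real database query
    return {"id": user_id, "name": "Ana"}


get_user_profile(1, cache, load_from_db)
get_user_profile(1, cache, load_from_db)  # served from the cache
print(len(db_calls))  # 1
now[0] = 31.0                             # past the 30-second TTL
get_user_profile(1, cache, load_from_db)
print(len(db_calls))  # 2
```

On writes, deleting the cached key (`cache.delete(...)`) right after updating the database stops stale profiles from being served until the TTL expires.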

Monitoring and Observability

Without real-time insights, even scalable systems can fall behind. Tools like Prometheus, Grafana, Datadog, and PostgreSQL’s pg_stat_statements extension help track performance metrics, query slowdowns, and resource bottlenecks. Observability is not a luxury; it’s a necessity for making informed scaling decisions.
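`pg_stat_statements` aggregates query statistics on the PostgreSQL server. A complementary habit on the application side is to time queries and flag the slow ones. The wrapper below is a minimal sketch around a `sqlite3` connection (with other drivers you would go through a cursor). `TimedConnection` and the 100 ms default threshold are hypothetical choices, and exporting the timings to a system like Prometheus is not shown.

```python
import logging
import sqlite3
import time

log = logging.getLogger("db.slow")


class TimedConnection:
    """Wraps a sqlite3 connection and records queries slower than `slow_ms`."""

    def __init__(self, conn, slow_ms=100.0):
        self.conn = conn
        self.slow_ms = slow_ms
        self.slow_queries = []  # (sql, elapsed_ms) pairs for later inspection

    def execute(self, sql, params=()):
        start = time.perf_counter()
        rows = self.conn.execute(sql, params).fetchall()  # include fetch time
        elapsed_ms = (time.perf_counter() - start) * 1000
        if elapsed_ms >= self.slow_ms:
            self.slow_queries.append((sql, elapsed_ms))
            log.warning("slow query (%.1f ms): %s", elapsed_ms, sql)
        return rows


# A threshold of 0 ms flags every query, which makes the behaviour easy to see.
db = TimedConnection(sqlite3.connect(":memory:"), slow_ms=0.0)
rows = db.execute("SELECT 1")
print(rows, len(db.slow_queries))  # [(1,)] 1
```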

Legacy Upgrade Paths

Many businesses struggle with outdated systems that weren’t designed with scale in mind. This is where affordable legacy upgrade paths come into play: introducing read replicas, extracting services into microservices, or gradually transitioning to cloud-native databases. Incremental modernization lets you gain performance without rewriting everything at once.

Bottom Line

Database bottlenecks don’t appear overnight. They build silently through overlooked indexing, rigid schema choices, and infrastructure decisions made for short-term convenience. By the time symptoms show, the cost of correction is steep, not just technically but operationally and financially as well.

To truly optimize databases for scalability, the process must begin before the first record is stored. From choosing the right architecture and data model to aligning APIs, mobile experiences, and infrastructure, scaling is not a one-time adjustment but a long-term strategy.

The most resilient database systems anticipate growth, respect complexity, and adopt the right tools at the right time. Whether you're modernizing a legacy platform or building from the ground up, thoughtful database design remains the cornerstone of sustainable scale.

FAQs

What are the risks of ignoring database scalability in early-stage development?

Neglecting scalability often leads to slow queries, frequent outages, and data integrity issues as your application grows. Over time, these issues disrupt user experience and make system maintenance expensive. Delayed optimization usually means painful migrations and prolonged performance degradation, both of which are costly.

What are the signs my current application needs to scale its database?

Common signs include slow response times, increasing query load, high CPU/memory usage, and growing replication lag. If user growth is accompanied by delays or errors, your application needs to scale. Regular monitoring with observability tools can reveal these patterns before they escalate.

Can legacy systems be optimized for scalability without a full rewrite?

Yes. Businesses can apply affordable legacy upgrades such as introducing read replicas, refactoring slow queries, adding caching layers, or incrementally shifting to scalable architectures. Strategic modernization often delivers strong results with lower risk and avoids unnecessary full rewrites.


Top 5 Proven Strategies for Building Scalable Software Products in 2025

Building scalable software products is essential in today's dynamic digital environment, where user demands and data volumes can surge unexpectedly. Scalability ensures that your software can handle increased loads without compromising performance, providing a seamless experience for users. This blog delves into best practices for building scalable software, drawing insights from industry experts and resources like XillenTech's guide on the subject.

Understanding Software Scalability

Software scalability refers to the system's ability to handle growing amounts of work or its potential to accommodate growth. This growth can manifest as an increase in user traffic, data volume, or transaction complexity. Scalability is typically categorized into two types:

Vertical Scaling: Enhancing the capacity of existing hardware or software by adding resources like CPU, RAM, or storage.

Horizontal Scaling: Expanding the system by adding more machines or nodes, distributing the load across multiple servers.

Both scaling methods are crucial, and the choice between them depends on the specific needs and architecture of the software product.

Best Practices for Building Scalable Software

1. Adopt Microservices Architecture

Microservices architecture involves breaking down an application into smaller, independent services that can be developed, deployed, and scaled separately. This approach offers several advantages:

Independent Scaling: Each service can be scaled based on its specific demand, optimizing resource utilization.

Enhanced Flexibility: Developers can use different technologies for different services, choosing the best tools for each task.

Improved Fault Isolation: Failures in one service are less likely to impact the entire system.

Implementing microservices requires careful planning, especially in managing inter-service communication and data consistency.

2. Embrace Modular Design

Modular design complements microservices by structuring the application into distinct modules with specific responsibilities. Key benefits include:

Ease of Maintenance: Modules can be updated or replaced without affecting the entire system.

Parallel Development: Different teams can work on separate modules simultaneously, accelerating development.

Scalability: Modules experiencing higher demand can be scaled independently.

This design principle is particularly beneficial in MVP development, where speed and adaptability are crucial.

3. Leverage Cloud Computing

Cloud platforms like AWS, Azure, and Google Cloud offer scalable infrastructure that can adjust to varying workloads.

Elasticity: Resources can be scaled up or down automatically based on demand.

Cost Efficiency: Pay-as-you-go models ensure you only pay for the resources you use.

Global Reach: Deploy applications closer to users worldwide, reducing latency.

Cloud-native development, incorporating containers and orchestration tools like Kubernetes, further enhances scalability and deployment flexibility.
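Elasticity usually comes down to a simple rule. Kubernetes’ Horizontal Pod Autoscaler, for instance, documents its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and it skips scaling when that ratio falls within a tolerance (0.1 by default). The function below is a simplified sketch of that rule with assumed min/max bounds. It ignores stabilization windows, pod readiness, and missing metrics, all of which the real controller accounts for.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style rule: scale replicas in proportion to metric / target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (assumed defaults: 1..10).
    return max(min_replicas, min(max_replicas, desired))


# Metric = average CPU utilization (%) per replica, target = 60%.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4 (within tolerance)
print(desired_replicas(4, current_metric=20, target_metric=60))   # 2
print(desired_replicas(8, current_metric=300, target_metric=60))  # 10 (capped)
```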

4. Implement Caching Strategies

Caching involves storing frequently accessed data in a temporary storage area to reduce retrieval times. Effective caching strategies:

Reduce Latency: Serve data faster by avoiding repeated database queries.

Lower Server Load: Decrease the number of requests hitting the backend systems.

Enhance User Experience: Provide quicker responses, improving overall satisfaction.

Tools like Redis and Memcached are commonly used for implementing caching mechanisms.
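Because caches have limited memory, they also need an eviction policy. Redis can be configured to evict keys with an approximated LRU policy, and Memcached relies on LRU-based eviction. The hypothetical `LRUCache` class below illustrates the basic least-recently-used idea using Python’s `collections.OrderedDict`.

```python
from collections import OrderedDict


class LRUCache:
    """Holds at most `capacity` entries and evicts the least recently used one."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def get(self, key, default=None):
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._data[key]
        self.misses += 1
        return default

    def put(self, key, value):
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry

    def __contains__(self, key):
        return key in self._data  # membership check does not affect recency


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" is now the most recently used
cache.put("c", 3)    # over capacity: "b" is evicted
print("a" in cache, "b" in cache, "c" in cache)  # True False True
```

Tracking hits and misses, as the sketch does, also gives you the cache hit ratio, which is one of the first metrics worth monitoring once a cache is in place.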

5. Prioritize Continuous Monitoring and Performance Testing

Regular monitoring and testing are vital to ensure the software performs optimally as it scales.

Load Testing: Assess how the system behaves under expected and peak loads.