#fabric rest api


Text

Fabric Rest API now in SimplePBI

La Data Web brings a gift for this Christmas. After a long stretch of work, we have added a large number of requests from the Fabric API to the SimplePBI Python library. The global calls are already in preview, and we have tried to cover the most notable ones.

This is the beginning of a long development road. Little by little, we will try to cover more and more categories to make the API easier to use, as we have been doing with Power BI for years.

This article gives an overview of what exactly is available and how to start using it right away.

To set the context, let's start with the theory. SimplePBI is an open-source Python library that makes interacting with the Power BI REST API much simpler. It now also covers the Fabric REST API. This means we don't need to install a new library; updating it is enough. We can do this from a command prompt by running pip, as long as Python is installed and pip is on the PATH environment variable. There are two ways:

pip install --upgrade SimplePBI
pip install -U SimplePBI

We need version 1.0.1 or later to get the new functionality.
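A quick way to confirm that requirement from Python is to check the installed package metadata. This is a sketch that uses only the standard library (`importlib.metadata`). The `parse_version` and `has_fabric_support` helpers are hypothetical and deliberately simple: they ignore pre-release suffixes, so treat them as a convenience rather than a full version parser.

```python
from importlib.metadata import PackageNotFoundError, version

# Minimum SimplePBI release with the Fabric features, as stated in the post.
MINIMUM = (1, 0, 1)


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '1.0.1' into (1, 0, 1); a suffix such as 'rc1' in '3rc1' is dropped."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def has_fabric_support(installed: str, minimum: tuple[int, ...] = MINIMUM) -> bool:
    # Tuple comparison is element by element, so (1, 0, 10) > (1, 0, 2).
    return parse_version(installed) >= minimum


if __name__ == "__main__":
    try:
        installed = version("SimplePBI")
    except PackageNotFoundError:
        print("SimplePBI is not installed; run: pip install -U SimplePBI")
    else:
        status = "OK" if has_fabric_support(installed) else "too old, run: pip install -U SimplePBI"
        print(installed, status)
```

When run as a script, it prints the installed version and whether it meets the 1.0.1 minimum.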

Prerequisites

As with the Power BI REST API, the first step is to register an app in Azure and grant it the corresponding permissions. For now, all Fabric permissions are found under the "Power BI Service" delegated application. You can follow this article to go through the process: https://blog.ladataweb.com.ar/post/740398550344728576/seteo-powerbi-rest-api-por-primera-vez

Features

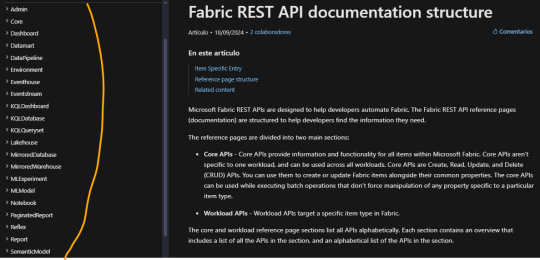

The new addition will mainly try to cover two essential categories of the REST API. Let's look at the documentation to guide us: https://learn.microsoft.com/en-us/rest/api/fabric/articles/api-structure

On the left we can see all the categories under which we can query or operate, as long as we have permissions. Fabric has chosen to call each type of content that can be created in its environment an "Item". For example, an item could be a notebook, a semantic model, or a report. In this first release, we decided to focus on the broadest categories: Admin and Core. Each one contains a large number of methods. One focuses on a tenant-wide view and the other on day-to-day operations within the organization. Admin contains subcategories such as domains, items, labels, tenant, users, and workspaces. In Core we find others such as capacities, connections, deployment pipelines, gateways, items, job scheduler, long running operations, and workspaces.

Usage is very similar to what SimplePBI has always offered. The one slight difference is how objects are initialized, because we now have several classes inside a module such as admin or core.

To import, we call fabric from simplepbi and specify the desired category:

from simplepbi import token
from simplepbi.fabric import core

To authenticate, we'll need values from the registered app. We can authenticate as a service principal with a secret, or with our own credentials. Here is an example of getting a token with a service principal that lets us use the API objects:

t = token.Token(tenant_id, app_client_id, None, None, app_secret_key, use_service_principal=True)
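For context, a service-principal token from Microsoft Entra ID is usually obtained through the OAuth 2.0 client credentials flow. The sketch below shows that request using only the standard library. It is not SimplePBI's internal code. It assumes the Fabric API scope `https://api.fabric.microsoft.com/.default`, and `build_token_request` and `get_token` are hypothetical helper names.

```python
import json
import urllib.parse
import urllib.request

# Assumed scope for the Fabric REST API; client credentials uses "<resource>/.default".
FABRIC_SCOPE = "https://api.fabric.microsoft.com/.default"


def build_token_request(tenant_id: str, client_id: str, client_secret: str,
                        scope: str = FABRIC_SCOPE) -> tuple[str, bytes]:
    """Return the token endpoint URL and the form-encoded body of the request."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": scope,
    }).encode("utf-8")
    return url, body


def get_token(tenant_id: str, client_id: str, client_secret: str) -> str:
    """POST the request and return the access token (this performs a network call)."""
    url, body = build_token_request(tenant_id, client_id, client_secret)
    request = urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)["access_token"]
```

The returned string is what goes into an `Authorization: Bearer <token>` header on each API call.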

We will try to make the names used after importing match the categories in the documentation. However, some may not match. For example, Fabric's "Admin" can't be used because it already exists in SimplePBI, so we use "adminfab" instead. Then we initialize the object with the desired class from the core category.

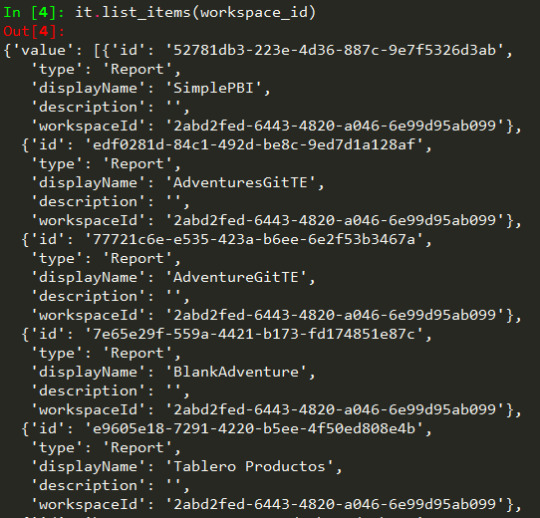

it = core.Items(t.token)

This gives us access to every method in Core's Items. For example, listing them:
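Whatever method name SimplePBI exposes for this, the request underneath is the Fabric Core "List Items" endpoint (`GET /v1/workspaces/{workspaceId}/items`). Below is a rough, hypothetical sketch of that request using only the standard library; it is not SimplePBI's implementation. It follows the `continuationUri` field that the API includes when results span several pages. The `fetch` parameter exists only so the paging logic can be tested without network access.

```python
import json
import urllib.request
from typing import Callable, Iterator

BASE_URL = "https://api.fabric.microsoft.com/v1"


def http_get_json(url: str, token: str) -> dict:
    """GET a URL with a bearer token and decode the JSON body (network call)."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def list_items(workspace_id: str, token: str,
               fetch: Callable[[str, str], dict] = http_get_json) -> Iterator[dict]:
    """Yield every item in a workspace, following continuationUri across pages."""
    url = f"{BASE_URL}/workspaces/{workspace_id}/items"
    while url:
        page = fetch(url, token)
        yield from page.get("value", [])
        # The last page has no continuationUri, which ends the loop.
        url = page.get("continuationUri")
```

Each element of `value` describes one item, with fields such as `id`, `displayName`, and `type`.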

Considerations

Not all requests work with a service principal. The documentation states whether each request supports that authentication method. If something fails, read it carefully, because the request might not support it.

New releases in Core and Admin. We have a long year ahead in which we'll aim to keep those categories up to date and gradually plan, by priority, which ones are most worth tackling next.

To learn more, follow us, or contribute, you can find us on PyPI or GitHub.

Keep in mind that the library is adding these requests as a preview, and the Fabric API itself is also changing every day with new releases and modifications that could affect how it is used. That's why we ask for your feedback and patience as we build a more robust library together as a community.

#fabric#microsoftfabric#fabric tutorial#fabric training#fabric tips#fabric rest api#power bi rest api#fabric python#ladataweb#simplepbi#fabric argentina#fabric cordoba#fabric jujuy


Text

The modder argument is a fallacy.

We've all heard the argument, "a modder did it in a day, why does Mojang take a year?"

Hi, in case you don't know me, I'm a Minecraft modder. I'm the lead developer for the Sweet Berry Collective, a small modding team focused on quality mods.

I've been working on a mod, Wandering Wizardry, for about a year now, and I only have about a third of an update's worth of new content.

Quality content takes time.

Anyone who does anything creative will agree with me. You need to make the code, the art, the models, all of which takes time.

One of the biggest bottlenecks in anything creative is the flow of ideas. If you have a lot of conflicting ideas you throw together super quickly, they'll all clash with each other, and nothing will feel coherent.

If you instead try to come up with ideas that fit with other parts of the content, you'll quickly run out and get stuck on what to add.

Modders don't need to follow Mojang's standards.

Mojang has a lot of standards on the type of content that's allowed to be in the game. Modders don't need to follow these.

A modder can implement a small feature in 5 minutes, disregarding the rest of the game and how the feature fits in with it.

Mojang has to make sure it works on both Java and Bedrock, make sure it fits with other similar features, make sure it doesn't break progression, and listen to the whole community on that feature.

Mojang can't just buy out mods.

Almost every mod depends on external code that Mojang doesn't have the right to use. Forge, Fabric API, and Quilt Standard Libraries are all unusable in base Minecraft, as are the dozens of community-maintained libraries for mods.

If Mojang were to buy a mod to implement it in the game, they'd need to partially or fully reimplement it to be compatible with the rest of the codebase.

Mojang does have a tendency to *hire* modders, but that's different from outright buying mods.

Conclusion

Stop weaponizing us against Mojang. I can speak for almost the whole modding community when I say we don't like it.

Please reblog so more people can see this, and to put an end to the modder argument.

#minecraft#minecraft modding#minecraft mods#moddedminecraft#modded minecraft#mob vote#minecraft mob vote#minecraft live#minecraft live 2023#content creation#programming#java#c++#minecraft bedrock#minecraft community#minecraft modding community#forge#fabric#quilt#curseforge#modrinth


Text

our forever world <3 ⊹˚. * . ݁₊ ⊹ ⊹˚. * . ݁₊

click below to see details <3 ⊹˚. * . ݁₊ ⊹

Hello! These are some screenshots my boyfriend took of me running around our long-term modded survival Minecraft world! If you would like me to post the mods I have added (Fabric API through CurseForge, version 1.21.4), please let me know, and I'll gladly do so!

I can also tell you more details if you'd like, such as resource packs, settings, etc. These all run pretty smoothly for me, and I have a GEEKOM mini PC! So, if you'd like some mods that are friendly to low-end PCs, for quality of life and cozy, casual gameplay, I'd be more than happy to give the creators some love and share their work!

I can also post some builds and updates on here as we progress, if that's something you guys would like to see.

Thanks for reading! Have a lovely rest of your day.

#minecraft screenshots#minecraft youtuber#minecraft build#minecraft server#minecraft#mine#forever world#worldbuilding#modpack#minecraft modpack#modded#minecraft mods#music#aesthetic#cottagecore#cottage aesthetic#cozycore#cozy aesthetic#cozy vibes#sandbox games#survivalworld#survival games#forever#boyfriend#cute#cozy games#stardew valley#fantasy#medieval#show us your builds


Text

No. 4: HALLUCINATIONS

Hypnosis | Sensory Deprivation | “You’re still alive in my head.” (Billy Lockett, More)

OC Whump

Hi, here is my contribution No. 4 for Whumptober!

A bit of context: When he was younger, the Ensorceleur fled his home and met a man who drew him into his mercenary army. He trusted this man completely, without realizing that their relationship was anything but healthy. After years of committing atrocities on behalf of his mentor, he finally opened his eyes and left. But the experience has definitely left a mark.

If you have any questions, I'd obviously be more than happy to answer! Also, English isn't my first language, so I apologize for any mistakes. Check the tags for TWs and enjoy!

The world moved around him without him seeming to belong to it. His body seemed to be in a different space-time, heavy and slow, while a complex choreography of fluid movements took place around him. A thick, heavy fabric limited his movements and separated him from the rest of the world. On a deeper level, the Ensorceleur recognized the effects of an active substance, probably an opioid administered to calm the raging pain that had taken hold of his decomposing right arm. This recognition, however, didn't allow him to do anything about its consequences, which did nothing to calm the swarm of agitated people next to him.

Standing next to his shivering friend who was clearly in a state of shock, Api struggled to retain any vestiges of composure.

-If there's one fucking piece of information that's correct and accurate in his file, it's that he reacts badly to opioids!

-It wasn't in his file, sir! retorted the young apprentice on the verge of tears.

-Then who messed with the files?!

-I did the best I could with what I had, sir!

-Damn it!

At his wits' end, the healer turned away and took a deep breath to calm himself. Well, at least the drug seemed to have greatly reduced the physical pain, which was the primary objective. On the negative side, the mercenary looked more distressed than Api had ever seen him.

The Ensorceleur buried his head in his knees with a moan, drawing his attention. The man who treated a show of weakness as the worst thing that could happen to him moaned. The healer dropped to one knee, hesitantly bringing his hands up to the other man. The problem with trying to heal an Entity completely drugged and trained to kill was that the slightest miscalculated gesture could have dramatic consequences.

-Easy, breathed a voice behind his ear before he could make contact with his friend.

Crouching beside him, Bryan regarded the Ensorceleur with a worried expression.

-If possible, avoid touching him. He sometimes reacts...violently, when he's not in his normal state.

-Has anything like this ever happened before? inquired the healer cautiously.

The guild leader hesitated visibly, because...

-With his metabolism, yes, from time to time...Don't look at me like that! he quickly defended himself against the healer's glare. We tried to get his cooperation on several potential treatment plans for when they'd be needed, while he was in top form, and he always refused! Except that once he was injured, we had no choice but to try and treat him with what little medical history we had. So yes, sometimes things got out of hand, and I've seen him in that kind of state before.

The Ensorceleur muttered a series of garbled words incomprehensible to them, and Bryan winced.

-Well, maybe not like this. His reactions to opioids are one of the pieces of information he's shared with us on his own.

-Hey. I need you to focus on us and try to communicate how you're feeling. I have a drug with an antagonistic effect that may help you feel better, but with your strange metabolism, I'd rather we let the effect wear off on its own. But I need to know how you feel, Api said slowly and distinctly to his patient.

The Ensorceleur could have answered him. He could have told him immediately to give him the strongest possible dose of his magic product. In fact, he would probably have begged him to do so, had he been able to hear what Api was saying.

But the ghostly hand resting on the back of his neck like an executioner's guillotine had ensured that his undivided attention went to the only person in the room worthy of it.

Didn’t I teach you that showing weakness is the best way to get others to stab you in the back?

Not real. He wasn't. He was drugged, and he absolutely had to hold onto that thought. At all costs.

You've never been one to hide behind lies. But I guess that's what you needed to keep hiding behind Silver Shein's back like a scared child.

The hand had more weight now, nails digging into flesh.

It's pathetic. You look like a beaten dog. But I suppose my disgust is normal. Few artists are ever satisfied with their creations.

The Ensorceleur exhaled the liquid lead in his lungs in a long, hoarse hiss and tried to convince himself that the hand on the back of his neck was more reassuring than terrifying, whether it belonged to Api or Bryan, or even Freya, who distrusted him but wouldn't hurt him for no reason, least of all in front of Bryan's eyes.

He forced himself to open his eyes and stare at Api's anxious face hovering in front of him. Whatever he felt behind him wasn't real. Just a hallucination brought on by the painkiller. Nothing that could hurt him, just a conspiracy from his brain and senses. If he concentrated on Api's features, on his reassuring presence, then the hallucinations would have a harder time dragging him into the dark corner of his consciousness where they resided.

Except that a pale face burst into his field of vision, blocking out his friend's face. The Ensorceleur gasped and threw himself backwards. His skull hit the wall with a thud and a white flash burst across his vision for a second, just a second; that was enough.

A leather-gloved iron fist closed around his neck, strangling the scream. A weight much heavier than it should have been crushed his hips, pinning him to the ground, and Magister leaned over him, smiling broadly, his pupils two black holes dripping ink onto his face.

Perhaps your brother's son would make a better canvas...or a better receptacle !

The man's face melted, lengthened a little, and his hair grew and lightened until a mass of curls framed familiar features. A grotesque parody of Lucien laughed in his face, before vomiting black, stale blood onto his chest. The Ensorceleur received a few drops in his mouth and audibly choked, struggling to free himself from his mentor's grasp.

-No. N-no...

He’s choking

Even now, you don't beg. Is there anything that could make you give up your misplaced pride? Are they so insignificant to you, those you claim to protect?

-Nooo...

We'll see, whispered the abomination with his nephew’s face. We'll see how quickly you fall at his feet...

When I've repaired your mistake and got my new suit of flesh, finished Magister, his mentor, master, friend and executioner.

Through the delirious terror (not for himself, never for himself, because his master would never hurt him, but the others, the insignificant...) that clouded his mind, he became aware of an increasingly acute pain in his arm. He resumed his pitiful attempts to free himself. He was the Ensorceleur, he had to fight, to keep going, to do the only thing he was good at...

But he had never been able to make even a violent gesture towards Magister.

You love me more than you've ever loved anyone.

Warm breath on his nose. Ice-blue eyes, punctuated with shadows and shades, so close he could almost see the constellations formed by the black flakes in the iris.

I'll try to sedate him

Watch his arm

Moist warmth on his cheeks, distant and impersonal. Emotions blunted and others too vivid to comprehend that clash and leave him torn, barely able to put together the pieces that make him the Ensorceleur.

I love you.

A sharp but localized pain in his arm.

I forgive you.

The last image that followed him into the muddy waters of unconsciousness was of those icy eyes. Or...warm brown, perhaps?

He preferred this softer brown.

The Ensorceleur let himself be drawn under the surface, where neither ghosts nor memories can follow him.

You belong to me, after all.

#whumptober2024#no.4#Hallucinations#You’re still alive in my head#OC#fic#fantasy settings#angst#Drugged whumpee#Implied past-abuse#Implied unhealthy relationship#Probably an inappropriate way to handle this type of situation


Text

Flash Based Array Market Emerging Trends Driving Next-Gen Storage Innovation

The flash based array market has been undergoing a transformative evolution, driven by the ever-increasing demand for high-speed data storage, improved performance, and energy efficiency. Enterprises across sectors are transitioning from traditional hard disk drives (HDDs) to solid-state solutions, thereby accelerating the adoption of flash based arrays. These storage systems offer faster data access, higher reliability, and scalability, aligning perfectly with the growing needs of digital transformation and cloud-centric operations.

Shift Toward NVMe and NVMe-oF Technologies

One of the most significant trends shaping the flash based array (FBA) market is the shift from traditional SATA/SAS interfaces to NVMe (Non-Volatile Memory Express) and NVMe over Fabrics (NVMe-oF). NVMe technology offers significantly lower latency and higher input/output operations per second (IOPS), enabling faster data retrieval and processing. As businesses prioritize performance-driven applications like artificial intelligence (AI), big data analytics, and real-time databases, NVMe-based arrays are becoming the new standard in enterprise storage infrastructures.

Integration with Artificial Intelligence and Machine Learning

Flash based arrays are playing a pivotal role in enabling AI and machine learning workloads. These workloads require rapid access to massive datasets, something that flash storage excels at. Emerging FBAs are now being designed with built-in AI capabilities that automate workload management, improve performance optimization, and enable predictive maintenance. This trend not only enhances operational efficiency but also reduces manual intervention and downtime.

Rise of Hybrid and Multi-Cloud Deployments

Another emerging trend is the integration of flash based arrays into hybrid and multi-cloud architectures. Enterprises are increasingly adopting flexible IT environments that span on-premises data centers and multiple public clouds. FBAs now support seamless data mobility and synchronization across diverse platforms, ensuring consistent performance and availability. Vendors are offering cloud-ready flash arrays with APIs and management tools that simplify data orchestration across environments.

Focus on Energy Efficiency and Sustainability

With growing emphasis on environmental sustainability, energy-efficient storage solutions are gaining traction. Modern FBAs are designed to consume less power while delivering high throughput and reliability. Flash storage vendors are incorporating technologies like data reduction, deduplication, and compression to minimize physical storage requirements, thereby reducing energy consumption and operational costs. This focus aligns with broader corporate social responsibility (CSR) goals and regulatory compliance.
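To make the data reduction idea concrete, here is a toy sketch of block-level deduplication followed by compression, two of the techniques named above. It is a generic illustration built on simple assumptions (fixed 4 KiB blocks, SHA-256 fingerprints, `zlib` compression), not a description of how any particular vendor's array works. Real systems add variable-size chunking, reference counting, and metadata overhead.

```python
import hashlib
import zlib


def reduction_ratio(data: bytes, block_size: int = 4096) -> float:
    """Logical bytes divided by physical bytes after deduplication and compression."""
    stored: dict[bytes, int] = {}  # SHA-256 fingerprint -> compressed size of that block
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).digest()
        if fingerprint not in stored:  # a duplicate block is stored only once
            stored[fingerprint] = len(zlib.compress(block))
    physical = sum(stored.values())
    return len(data) / physical if physical else 1.0
```

A ratio of 4.0 would mean four logical bytes for every physical byte written. Highly repetitive data scores far above that, while already-compressed or encrypted data stays close to 1.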

Edge Computing Integration

The rise of edge computing is influencing the flash based array market as well. Enterprises are deploying localized data processing at the edge to reduce latency and enhance real-time decision-making. To support this, vendors are introducing compact, rugged FBAs that can operate reliably in remote and harsh environments. These edge-ready flash arrays offer high performance and low latency, essential for applications such as IoT, autonomous systems, and smart infrastructure.

Enhanced Data Security Features

As cyber threats evolve, data security has become a critical factor in storage system design. Emerging FBAs are being equipped with advanced security features such as end-to-end encryption, secure boot, role-based access controls, and compliance reporting. These features ensure the integrity and confidentiality of data both in transit and at rest. Additionally, many solutions now offer native ransomware protection and data immutability, enhancing trust among enterprise users.

Software-Defined Storage (SDS) Capabilities

Software-defined storage is redefining the architecture of flash based arrays. By decoupling software from hardware, SDS enables greater flexibility, automation, and scalability. Modern FBAs are increasingly adopting SDS features, allowing users to manage and allocate resources dynamically based on workload demands. This evolution is making flash storage more adaptable and cost-effective for enterprises of all sizes.

Conclusion

The flash based array market is experiencing dynamic changes fueled by technological advancements and evolving enterprise needs. From NVMe adoption and AI integration to cloud readiness and sustainability, these emerging trends are transforming the landscape of data storage. As organizations continue their journey toward digital maturity, FBAs will remain at the forefront, offering the speed, intelligence, and agility required for future-ready IT ecosystems. The vendors that innovate in line with these trends will be best positioned to capture market share and lead the next wave of storage evolution.


Text

Challenges with Enterprise AI Integration—and How to Overcome Them

Enterprise AI is no longer experimental. It's operational. From predictive maintenance and process optimization to hyper-personalized experiences, large organizations are investing heavily in AI to unlock productivity and long-term advantage. But what looks promising in a proof of concept (POC) often meets resistance, complexity, or underperformance at enterprise scale.

Integrating AI into core systems, workflows, and decision-making layers isn’t about layering models—it’s about aligning technology with infrastructure, data, compliance, and business priorities. And for most enterprises, that’s where the friction starts.

Here’s a breakdown of the most common challenges businesses face during AI integration—and how the most resilient enterprises are solving them:

1. Legacy Systems and Data Silos

Enterprise environments rarely start from scratch. Legacy systems run mission-critical processes. Departmental silos own fragmented data. And AI models often struggle to integrate with monolithic, outdated tech stacks.

What works:

API-first strategies to create interoperability between AI modules and legacy systems—without deep refactoring.

Building a centralized data fabric that unifies siloed data stores and provides real-time access across teams.

Introducing AI middleware layers that can abstract complexity and serve as a modular intelligence layer over existing infrastructure.


2. Model Governance, Compliance, and Explainability

In industries like finance, healthcare, and insurance, it’s not just about accuracy. It’s about transparency, auditability, and the ability to explain how a decision was made. Black-box AI can trigger compliance flags and stall adoption.

What works:

Implementing ModelOps frameworks to standardize model lifecycle management—training, deployment, monitoring, and retirement.

Embedding explainable AI (XAI) principles into model development to ensure decisions can be interpreted by stakeholders and auditors.

Running scenario testing and audit trails to meet regulatory standards and reduce risk exposure.

3. Organizational Readiness and Change Management

AI isn’t just a technology shift—it’s a culture shift. Teams need to trust AI outcomes, understand when to act on them, and adapt workflows. Without internal buy-in, AI gets underused or misused.

What works:

Creating AI playbooks and training paths for business users, not just data scientists.

Setting up cross-functional AI councils to govern use cases, ethical boundaries, and implementation velocity.

Demonstrating quick wins through vertical-specific pilots that solve visible business problems and show ROI.

4. Data Privacy, Security, and Cross-Border Compliance

AI initiatives can get stuck navigating enterprise security policies, data residency requirements, and legal obligations across jurisdictions, especially when models need access to sensitive, proprietary, or regulated data.

What works:

Leveraging federated learning for training on distributed data sources without moving the data.

Applying anonymization, and encrypting data both at rest and in transit.

Working with cloud providers with built-in compliance tools for HIPAA, GDPR, PCI DSS, etc., to reduce overhead.
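The core of federated learning is the aggregation step. Below is a minimal sketch of Federated Averaging (FedAvg), one common approach. It assumes each site sends back only its locally trained parameters as a flat list of floats, plus its local sample count, so raw records never leave the site. The function name is hypothetical, and production frameworks layer secure aggregation, client sampling, and many training rounds on top of this.

```python
from typing import Sequence


def federated_average(client_params: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> list[float]:
    """Average client parameter vectors, weighting each by its local sample count."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("sample counts must add up to a positive number")
    dim = len(client_params[0])
    if any(len(p) != dim for p in client_params):
        raise ValueError("all clients must send vectors of the same length")
    # Each global parameter is the sample-weighted mean of the clients' values.
    return [
        sum(p[i] * n for p, n in zip(client_params, client_sizes)) / total
        for i in range(dim)
    ]
```

For example, `federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])` gives `[2.5, 3.5]`: the site with three times the data pulls the result three times as hard.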

5. Scalability and Performance Under Load

Many AI models perform well in test environments but start failing at production scale, when latency requirements, real-time processing, or large numbers of concurrent users push the system.

What works:

Deploying models in containerized environments (Kubernetes, Docker) to allow elastic scaling based on load.

Optimizing inference speed using GPU acceleration, edge computing, or lightweight models like DistilBERT instead of full-scale LLMs.

Monitoring model performance metrics in real-time, including latency, failure rates, and throughput, as part of observability stacks.
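As a minimal sketch of the kind of rolling metrics an observability stack computes for a model endpoint, here are p95 latency, failure rate, and throughput over a sliding time window. The `Sample` and `RollingMetrics` names are hypothetical. In practice these numbers usually come from an existing monitoring tool rather than hand-written code.

```python
import math
from collections import deque
from dataclasses import dataclass


@dataclass
class Sample:
    timestamp: float   # seconds
    latency_ms: float
    ok: bool           # False for errors or timeouts


class RollingMetrics:
    """Latency, failure rate, and throughput over the last `window_s` seconds."""

    def __init__(self, window_s: float = 60.0):
        self.window_s = window_s
        self.samples: deque = deque()

    def record(self, sample: Sample) -> None:
        self.samples.append(sample)
        # Keep only samples in (now - window_s, now]; assumes timestamps arrive in order.
        cutoff = sample.timestamp - self.window_s
        while self.samples and self.samples[0].timestamp <= cutoff:
            self.samples.popleft()

    def snapshot(self) -> dict:
        n = len(self.samples)
        if n == 0:
            return {"count": 0, "p95_latency_ms": None,
                    "failure_rate": 0.0, "throughput_per_s": 0.0}
        latencies = sorted(s.latency_ms for s in self.samples)
        p95 = latencies[math.ceil(0.95 * n) - 1]  # nearest-rank percentile
        failures = sum(1 for s in self.samples if not s.ok)
        return {
            "count": n,
            "p95_latency_ms": p95,
            "failure_rate": failures / n,
            "throughput_per_s": n / self.window_s,  # approximate: assumes a full window
        }
```

Alerting would then compare each snapshot against thresholds, for example paging when p95 latency or the failure rate stays above a target for several consecutive windows.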

6. Misalignment Between Tech and Business

Even sophisticated models can fail if they don’t directly support core business goals. Enterprises that approach AI purely from an R&D angle often find themselves with outputs that aren’t actionable.

What works:

Building use-case-first roadmaps, where AI initiatives are directly linked to OKRs, cost savings, or growth targets.

Running joint design sprints between AI teams and business units to co-define the problem and solution scope.

Measuring success not by model metrics (like accuracy), but by business outcomes (like churn reduction or claim processing time).

Key Takeaway

Enterprise AI integration isn’t just about building smarter models—it’s about aligning people, data, governance, and infrastructure. The enterprises that are seeing real returns are the ones that solve upstream complexity early: breaking silos, standardizing operations, and building trust across the board. AI doesn’t deliver returns in isolation—it scales when it’s embedded where decisions happen.


Text

What Are the Best Technologies and Stacks for Blockchain Development?

The Digital Heartbeat of Blockchain

The world is being rewritten by lines of code flowing through blockchain networks. From banking to supply chains and even healthcare, the tectonic plates of technology are shifting, and blockchain is at the center.

Why Choosing the Right Tech Stack Matters

In the blockchain realm, your tech stack is not just a toolkit; it's your army. Picking the wrong combination can lead to security loopholes, scalability headaches, or development bottlenecks. By contrast, the right stack enables innovation, adaptability, and lightning-fast performance.

A Look Into Blockchain's Core Principles

Blockchain is essentially a distributed ledger: immutable, transparent, and decentralized. Every decision about development technology should support these foundational values.
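A toy hash chain shows why a distributed ledger is effectively immutable. Each block stores the hash of the previous one, so editing any earlier record breaks every link after it. This is a simplified sketch (SHA-256 over JSON, no signatures, no consensus), not how any production blockchain serializes its blocks.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


@dataclass
class Block:
    index: int
    data: str
    prev_hash: str

    def digest(self) -> str:
        """SHA-256 over a canonical JSON encoding of the block's fields."""
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[str]) -> list[Block]:
    chain, prev = [], GENESIS_PREV
    for i, record in enumerate(records):
        block = Block(i, record, prev)
        chain.append(block)
        prev = block.digest()  # the next block commits to this one
    return chain


def is_valid(chain: list[Block]) -> bool:
    """True if every block still points at the current hash of its predecessor."""
    return all(curr.prev_hash == prev.digest() for prev, curr in zip(chain, chain[1:]))
```

Tampering with the newest block is only detected when its hash is compared against a copy held elsewhere, which is exactly what replication across many nodes provides.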

Public vs Private Blockchains: Know the Battleground

Public blockchains like Ethereum are open, permissionless, and trustless. Private blockchains like Hyperledger Fabric offer permissioned access, suited to enterprises and to healthcare CRM software developers who need regulated environments.

Top Programming Languages for Blockchain Development

Solidity: The Ethereum Favorite

Built specifically for Ethereum, Solidity is the main language for writing its smart contracts. Its tight integration with Ethereum's architecture makes it a no-brainer for developers entering this space.

Rust: The Rising Challenger

Lightning-fast and memory-safe, Rust dominates ecosystems like Solana and Polkadot. It offers fine-grained control over system resources, a gift for blockchain engineers.

Go: Concurrency Champion

Go, or Golang, stands out for its simplicity and robust concurrency support. Used extensively in Hyperledger Fabric, Go helps scale distributed systems without breaking a sweat.

JavaScript & TypeScript: Web3 Wizards

From UIs to connecting smart contracts, JavaScript and TypeScript continue to dominate frontends and dApp interfaces. Paired with libraries like Web3.js or Ethers.js, they bring the Web3 world to life.

Smart Contract Platforms: The Brains Behind the Chain

Ethereum

The undisputed leader. Its vast ecosystem and developer community make it a top choice for smart contract development.

Solana

Known for blazing speed and ultra-low fees, Solana supports Rust and C. It's ideal for high-frequency trading and DeFi apps.

Frontend Technologies in Blockchain Apps

React and Angular: UX Anchors

Both frameworks provide interactive, scalable user interfaces. React's component-based design fits perfectly with dApp architecture.

Web3.js and Ethers.js

They're the bridges between your blockchain and the browser. Web3.js supports Ethereum natively, while Ethers.js offers a lighter and more intuitive API.

Backend Technologies and APIs

Node.js

Perfect for handling many simultaneous connections, Node.js is widely used in dApps for server-side scripting.

Express.js

A minimalist backend framework, Express.js integrates seamlessly with APIs and Web3 libraries.

GraphQL

In a data-driven ecosystem, GraphQL lets clients request exactly the fields they need in a single query, which can be faster and more efficient than chaining several REST calls.

Blockchain Frameworks and Tools

Truffle Suite

A complete ecosystem for smart contract development: compiling, testing, and deploying.

Databases in Blockchain Systems

IPFS (InterPlanetary File System)

A peer-to-peer storage solution that decentralizes file storage, essential for apps that need off-chain data.

BigchainDB

Blending blockchain features with NoSQL database capabilities, BigchainDB is tailor-made for high-throughput apps.

Essential DevOps Tools

Docker

Ensures consistent environments for development and deployment across machines.

Kubernetes

Automates deployment, scaling, and management of containerized applications.

Jenkins

The automation backbone of continuous integration and delivery pipelines in blockchain projects.

Security Considerations in Blockchain Tech Stacks

Security isn't a feature; it's a necessity. From smart contract audits to secure wallet integrations and transaction validation, every layer needs hardening.

Tech Stack for Blockchain App Development: A Complete Combo

Frontend: React, Web3.js

Backend: Node.js, Express, GraphQL

Smart Contracts: Solidity (Ethereum) or Rust (Solana)

Frameworks: Hardhat or Truffle

Database: IPFS, BigchainDB

DevOps: Docker, Kubernetes, Jenkins

This tech stack for blockchain app development provides flexibility, scalability, and enterprise readiness.

Role of Consensus Algorithms

Proof of Work

Secure but energy-intensive. PoW is still used by Bitcoin and other long-established networks.
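Here is a simplified Proof of Work loop: keep incrementing a nonce until the block's SHA-256 hash meets a difficulty target. The target is assumed to be a number of leading zero hex digits. Bitcoin actually applies double SHA-256 and compares against a numeric target, so treat this as an illustration of the idea only. The helper names are hypothetical.

```python
import hashlib


def block_hash(header: str, nonce: int) -> str:
    return hashlib.sha256(f"{header}:{nonce}".encode("utf-8")).hexdigest()


def mine(header: str, difficulty: int) -> tuple[int, str]:
    """Try nonces 0, 1, 2, ... until the hash starts with `difficulty` zero hex digits."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = block_hash(header, nonce)
        if digest.startswith(target):
            return nonce, digest
        nonce += 1


def verify(header: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed solution costs a single hash."""
    return block_hash(header, nonce).startswith("0" * difficulty)
```

Each extra zero hex digit multiplies the expected number of attempts by 16, while verification stays at one hash. That asymmetry is where both the security and the energy cost come from.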

Proof of Stake

Energy-efficient and fast. Ethereum's transition to PoS marked a vital shift.
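Proof of Stake replaces that hash race with selection weighted by stake. The toy sketch below picks a block proposer with probability proportional to each validator's stake. Real protocols, including Ethereum's, derive randomness on-chain (for example via RANDAO) rather than from Python's `random` module, and add committees and slashing. `pick_proposer` is a hypothetical name.

```python
import random


def pick_proposer(stakes: dict[str, float], rng: random.Random) -> str:
    """Choose one validator with probability proportional to its stake."""
    validators = list(stakes)
    weights = [stakes[v] for v in validators]
    if not validators or sum(weights) <= 0:
        raise ValueError("need at least one validator with positive stake")
    return rng.choices(validators, weights=weights, k=1)[0]
```

With stakes of 75 and 25, the first validator should propose roughly three blocks out of every four.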

Delegated Proof of Stake

Used by platforms like EOS, this model adds governance layers through tagged validators.

Role of Artificial Intelligence in Banking and Blockchain Synergy

AI and blockchain are reshaping banking. Fraud detection, risk modeling, and smart contracts are now enhanced by machine learning. The role of artificial intelligence in banking becomes even more potent when combined with blockchain’s transparency.

Blockchain in Healthcare: A Silent Revolution

Hospitals and pharma giants are integrating blockchain to track patient records, drug authenticity, and insurance claims.

Healthcare CRM Software Developers Leading the Change

By embedding blockchain features in CRM platforms, companies are enhancing data privacy, compliance tracking, and real-time health analytics. In this wave of innovation, healthcare CRM software developers are setting new norms for secure and effective patient management.

Popular Blockchain Use Cases Across Industries

Finance: smart contracts, crypto wallets

Supply Chain: tracking goods from origin to shelf

Voting: tamper-proof digital elections

Gaming: NFTs and digital ownership

Challenges in Blockchain App Development

Interoperability, scalability, energy consumption, and evolving regulations challenge even the best developers.

The Future of Blockchain Development Tech Stacks

We'll see convergence: AI, IoT, and edge computing will integrate with blockchain stacks, making apps smarter, faster, and even more decentralized.

Harnessing API & EDI Synergy for Omnichannel Commerce—Boomi Shows the Way

Digital-native buyers expect seamless experiences across web, mobile, and storefronts. Achieving that requires more than solid APIs; it demands Boomi EDI Integration behind the scenes—details here https://preludesys.com/enterprise-integration-services/boomi-integration/edi/.

The Omnichannel Challenge

E-commerce engines thrive on JSON/REST, while legacy distributors still trade X12 documents. Bridging those paradigms without adding latency is critical for order accuracy and customer satisfaction.

Boomi’s Dual-Mode Advantage

Unified platform: manage APIs and EDI flows from the same dashboard—one skill set, one SLA.

Event-driven triggers: convert an inbound EDI 850 into an internal REST call, updating inventory instantly.

Scalable micro-gateways: secure edge deployments keep latency in check during flash-sale peaks.
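To show what "convert an inbound EDI 850 into an internal REST call" involves at the data level, here is a minimal parser that turns the BEG and PO1 segments of an X12 850 purchase order into a JSON payload. This is a hand-written sketch, not Boomi's mapping API: it assumes `~` as the segment terminator and `*` as the element separator (real interchanges declare these in the ISA header), and the JSON field names are hypothetical.

```python
import json

def parse_x12(raw: str, seg_term: str = "~", elem_sep: str = "*") -> list[list[str]]:
    """Split an X12 document into segments, each a list of elements."""
    return [seg.strip().split(elem_sep) for seg in raw.split(seg_term) if seg.strip()]

def po850_to_json(raw: str) -> str:
    """Map an 850's PO number and line items to a JSON body for a REST call."""
    order = {"po_number": None, "lines": []}
    for seg in parse_x12(raw):
        if seg[0] == "BEG":
            order["po_number"] = seg[3]          # BEG03: purchase order number
        elif seg[0] == "PO1":
            order["lines"].append({
                "quantity": float(seg[2]),       # PO102: quantity ordered
                "unit": seg[3],                  # PO103: unit of measure
                "unit_price": float(seg[4]),     # PO104: unit price
                "sku": seg[7],                   # PO107: product ID (qualifier in PO106)
            })
    return json.dumps(order)
```

The resulting JSON could then be posted to an inventory endpoint; an integration platform adds the parts this sketch skips, such as envelope validation, acknowledgments (997/999), and retries.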

Revenue Impact

Retailers pairing EDI with real-time APIs see:

20% faster order fulfillment through instant stock reservation.

15% uplift in upsell revenue via timely cross-channel promotions.

Roadmap for Decision Makers

Assess integration maturity: inventory existing EDI maps and API endpoints.

Adopt a phased rollout: pilot high-volume SKUs, then expand to all product lines.

Leverage managed services: a seasoned Microsoft Fabric consulting company (if broader analytics are needed) or a Boomi MSP can monitor and optimize flows.

Conclusion

Today’s omnichannel retail success depends on more than great UX—it hinges on fast, accurate data flow across modern and legacy systems. Boomi’s unified platform for API and EDI integration bridges the gap between e-commerce platforms and traditional trading partners, ensuring real-time responsiveness without added complexity.

By combining event-driven architecture with scalable gateways and a single management interface, Boomi empowers retailers to fulfill faster, promote smarter, and operate more efficiently. It’s not just about integration—it’s about delivering seamless experiences that drive revenue and loyalty.

Securing the Cloud: Compliance and Security Best Practices for DevOps Teams

In the era of cloud-native development and rapid CI/CD pipelines, DevOps teams are expected to ship code faster than ever. However, speed must never come at the expense of security and compliance. As cyber threats evolve and regulatory requirements grow more complex, integrating security best practices into your cloud DevOps workflows is no longer optional—it’s critical.

At Salzen Cloud, we help organizations embed security and compliance into the fabric of their DevOps processes, ensuring safe, fast, and audit-ready deployments in cloud environments.

The Security Challenge in Cloud DevOps

Traditional security models often operate in isolation, enforcing checkpoints late in the development lifecycle. In cloud-native environments, this results in bottlenecks and missed vulnerabilities. DevSecOps—or “shifting security left”—is the modern approach where security is integrated early and continuously throughout the development pipeline.

The challenge lies in balancing automation, scalability, and security without compromising agility. This is especially important in multi-cloud or hybrid cloud setups where misconfigurations, insecure APIs, and unmonitored assets can introduce risk.

Why Compliance Is Critical in the Cloud

Enterprises must comply with regulations like GDPR, HIPAA, SOC 2, ISO 27001, or PCI-DSS, depending on their industry. These frameworks demand data protection, access control, audit logging, encryption, and more.

For DevOps teams, meeting compliance means:

Ensuring cloud infrastructure is configured securely

Managing identity and access controls across services

Logging and auditing every change or deployment

Automating compliance checks across the pipeline

Best Practices for Cloud Security and Compliance in DevOps

1. Integrate Security into CI/CD Pipelines

Embed automated security scans for code, containers, and infrastructure templates directly into your CI/CD process. Tools like Snyk, Checkmarx, SonarQube, and Aqua Security can catch vulnerabilities before code reaches production.

2. Use Infrastructure as Code (IaC) Securely

IaC tools like Terraform, Pulumi, or AWS CloudFormation allow rapid, consistent cloud provisioning, but they must be secured. Scan your IaC templates for misconfigurations (e.g., open security groups or public S3 buckets) using tools like tfsec or Checkov.
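As a minimal illustration of what such a scan checks, the sketch below reads the JSON produced by `terraform show -json <planfile>` and flags `aws_security_group` resources whose ingress rules allow `0.0.0.0/0`. It is a toy rule, assuming the plan JSON's `resource_changes[].change.after` layout; dedicated scanners such as tfsec or Checkov cover hundreds of rules and edge cases this ignores.

```python
import json

def open_ingress_findings(plan_json: str) -> list[str]:
    """Flag planned security groups that open ingress to the whole internet."""
    plan = json.loads(plan_json)
    findings = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_security_group":
            continue
        after = (rc.get("change") or {}).get("after") or {}  # "after" is null for deletions
        for rule in after.get("ingress") or []:
            if "0.0.0.0/0" in (rule.get("cidr_blocks") or []):
                findings.append(
                    f"{rc['address']}: ports {rule.get('from_port')}-{rule.get('to_port')} open to the internet"
                )
    return findings
```

In a pipeline, a non-empty result would fail the build before `terraform apply` runs.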

3. Enforce Least Privilege and Role-Based Access

Use IAM roles with minimal privileges, regularly audit permissions, and apply role-based access control (RBAC) for managing users and services.
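A permissions audit can start with something as simple as flagging wildcard grants. This sketch walks an AWS IAM policy document and returns the indexes of `Allow` statements that grant `"*"` actions, service-wide wildcards such as `"s3:*"`, or `"*"` resources. It is a deliberately narrow check; `NotAction`, `NotResource`, and `Condition` blocks are not evaluated.

```python
def wildcard_statements(policy: dict) -> list[int]:
    """Return indexes of Allow statements with overly broad actions or resources."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    flagged = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # Action and Resource may each be a string or a list of strings.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        broad_action = any(a == "*" or a.endswith(":*") for a in actions)
        if broad_action or "*" in resources:
            flagged.append(i)
    return flagged
```

Running a check like this on every policy change turns "regularly audit permissions" into an automated gate.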

4. Automate Compliance Checks

Frameworks like Open Policy Agent (OPA) and AWS Config Rules let you enforce custom policies and detect configuration drift in real time. Pair these with automated alerts to maintain continuous compliance.

5. Encrypt Everything—At Rest and In Transit

Use cloud-native tools for encryption (e.g., AWS KMS, Azure Key Vault) and enforce SSL/TLS for all communication. Make key rotation and secrets management part of your automation strategy.

6. Enable Logging and Real-Time Monitoring

Services like AWS CloudTrail, Azure Monitor, or Google Cloud Operations Suite enable complete observability. Forward logs to SIEM tools for better correlation, threat detection, and auditing.

DevOps + Security = DevSecOps

By treating security as code and embedding it into your pipeline, you transform DevOps into DevSecOps—a collaborative culture where developers, operations, and security professionals work together to build safer systems.

Salzen Cloud helps organizations:

Automate security scans and compliance audits

Build secure CI/CD pipelines

Manage cloud identity and access at scale

Enable real-time monitoring and threat detection

Achieve compliance with industry standards

Final Thoughts

In modern cloud environments, security and compliance aren’t barriers—they’re enablers of trust, resilience, and long-term growth. DevOps teams that proactively integrate these best practices into their cloud workflows can deliver software faster without compromising safety.

At Salzen Cloud, we empower teams to secure every layer of their cloud—from infrastructure to deployment—through automation, monitoring, and expert guidance.

Ready to make security and compliance a competitive advantage? Get in touch with Salzen Cloud and start building safer, faster, and more compliant DevOps pipelines today.

What’s New in Azure Data Factory? Latest Features and Updates

Azure Data Factory (ADF) has introduced several notable enhancements over the past year, focusing on expanding data movement capabilities, improving data flow performance, and enhancing developer productivity. Here’s a consolidated overview of the latest features and updates:

Data Movement Enhancements

Expanded Connector Support: ADF has broadened its range of supported data sources and destinations:

Azure Table Storage and Azure Files: Both connectors now support system-assigned and user-assigned managed identity authentication, enhancing security and simplifying access management.

ServiceNow Connector: Introduced in June 2024, this connector offers improved native support in Copy and Lookup activities, streamlining data integration from ServiceNow platforms.

PostgreSQL and Google BigQuery: New connectors provide enhanced native support and improved copy performance, facilitating efficient data transfers.

Snowflake Connector: Supports both Basic and Key pair authentication for source and sink, offering flexibility in secure data handling.

Microsoft Fabric Warehouse: New connectors are available for Copy, Lookup, Get Metadata, Script, and Stored Procedure activities, enabling seamless integration with Microsoft’s data warehousing solutions.

Data Flow and Processing Improvements

Spark 3.3 Integration: In April 2024, ADF updated its Mapping Data Flows to utilize Spark 3.3, enhancing performance and compatibility with modern data processing tasks.

Increased Pipeline Activity Limit: The maximum number of activities per pipeline has been raised to 80, allowing for more complex workflows within a single pipeline.
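Large pipelines can hit this ceiling unexpectedly, so a pre-deployment check is handy. The sketch below counts activities in an exported ADF pipeline JSON (`properties.activities`) and recurses into container activities, assuming, as the ADF service-limits page describes, that inner activities of containers count toward the limit. The property names used for containers (`activities`, `ifTrueActivities`, `ifFalseActivities`, `cases`, `defaultActivities`) reflect the ADF JSON schema as I understand it; verify them against your own exports.

```python
import json

MAX_ACTIVITIES = 80  # per-pipeline limit cited above

def count_activities(activities: list) -> int:
    """Count activities, including those nested inside container activities."""
    total = 0
    for act in activities:
        total += 1
        props = act.get("typeProperties", {})
        total += count_activities(props.get("activities", []))         # ForEach, Until
        total += count_activities(props.get("ifTrueActivities", []))   # If Condition
        total += count_activities(props.get("ifFalseActivities", []))
        for case in props.get("cases", []):                             # Switch
            total += count_activities(case.get("activities", []))
        total += count_activities(props.get("defaultActivities", []))
    return total

def check_pipeline(pipeline_json: str) -> tuple[int, bool]:
    """Return (activity count, within the limit?) for a pipeline definition."""
    pipeline = json.loads(pipeline_json)
    n = count_activities(pipeline.get("properties", {}).get("activities", []))
    return n, n <= MAX_ACTIVITIES
```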

Developer Productivity Features

Learning Center Integration: A new Learning Center is now accessible within the ADF Studio, providing users with centralized access to tutorials, feature updates, best practices, and training modules, thereby reducing the learning curve for new users.

Community Contributions to Template Gallery: ADF now accepts pipeline template submissions from the community, fostering collaboration and enabling users to share and leverage custom templates.

Enhanced Azure Portal Design: The Azure portal features a redesigned interface for launching ADF Studio, improving discoverability and user experience.

Upcoming Features

Looking ahead, several features are slated for release in Q1 2025 in Data Factory in Microsoft Fabric, the Fabric counterpart of ADF:

Dataflow Gen2 Enhancements:

CI/CD and Public API Support: Enabling continuous integration and deployment capabilities, along with programmatic interactions via REST APIs.

Incremental Refresh: Optimizing dataflow execution by retrieving only changed data, with support for Lakehouse destinations.

Parameterization and ‘Save As’ Functionality: Allowing dynamic dataflows and easy duplication of existing dataflows for improved efficiency.

Copy Job Enhancements:

Incremental Copy without Watermark Columns: Introducing native Change Data Capture (CDC) capabilities for key connectors, eliminating the need for specifying incremental columns.

CI/CD and Public API Support: Facilitating streamlined deployment and programmatic management of Copy Job items.
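For context on what native CDC removes, this is the watermark pattern that incremental copies traditionally rely on: keep the highest "last modified" value seen so far and copy only rows newer than it. The sketch works on in-memory rows, and the `modified_at` column name is a hypothetical example.

```python
from datetime import datetime

def incremental_batch(rows: list[dict], last_watermark: datetime,
                      column: str = "modified_at") -> tuple[list[dict], datetime]:
    """Select rows changed after the stored watermark and compute the new watermark."""
    changed = [r for r in rows if r[column] > last_watermark]
    new_watermark = max((r[column] for r in changed), default=last_watermark)
    return changed, new_watermark
```

The pattern needs a reliable change column on every table and cannot see deleted rows, which is the gap that built-in CDC for supported connectors is meant to close.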

These updates reflect Azure Data Factory’s commitment to evolving in response to user feedback and the dynamic data integration landscape. For a more in-depth exploration of these features, you can refer to the official Azure Data Factory documentation.
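The "public API support" items above point to programmatic management through the Microsoft Fabric REST API. As a sketch, the code below pages through the Fabric Core "List Items" endpoint for one workspace, optionally filtered by item type, following `continuationToken` until it is absent. Assumptions: the access token is obtained separately (for example through Microsoft Entra ID), `"Dataflow"` is the item type you want, and `fetch` is injectable so the pagination logic can be exercised without network access.

```python
import json
import urllib.request
from typing import Callable, Iterator, Optional
from urllib.parse import urlencode

FABRIC_API = "https://api.fabric.microsoft.com/v1"

def list_items_url(workspace_id: str, item_type: Optional[str] = None,
                   continuation_token: Optional[str] = None) -> str:
    """Build the List Items URL for one workspace."""
    params = {}
    if item_type:
        params["type"] = item_type
    if continuation_token:
        params["continuationToken"] = continuation_token
    query = f"?{urlencode(params)}" if params else ""
    return f"{FABRIC_API}/workspaces/{workspace_id}/items{query}"

def make_fetch(access_token: str) -> Callable[[str], dict]:
    """Return a GET helper that sends a bearer token obtained elsewhere."""
    def fetch(url: str) -> dict:
        req = urllib.request.Request(url, headers={"Authorization": f"Bearer {access_token}"})
        with urllib.request.urlopen(req) as resp:
            return json.load(resp)
    return fetch

def iter_items(workspace_id: str, fetch: Callable[[str], dict],
               item_type: Optional[str] = None) -> Iterator[dict]:
    """Yield items page by page until no continuationToken is returned."""
    token = None
    while True:
        page = fetch(list_items_url(workspace_id, item_type, token))
        yield from page.get("value", [])
        token = page.get("continuationToken")
        if not token:
            break
```

Usage would look like `for item in iter_items("<workspace-id>", make_fetch(token), "Dataflow"): print(item["displayName"])`.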

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

hey! would love to know the info on any mods you use for your forever world! ❤️ xx

Hello!

thank you so much for asking!

I'm going to eventually make a carrd similar to my bio one (pinned post) with an updated list of my modpacks or resource packs. However, for now, I'll list them out and include pictures here for your viewing!

modpack overview

⊹˚. * . ݁₊ ⊹

Firstly, here's the modpack overview. It's for the latest version of the game (which I regret because it made a lot of mods I wanted incompatible). I was new to modding. Most of these work for older versions if you so choose to experiment with that!

It's also run through Fabric, which is used for more cozy, vanilla-friendly mods, whereas Forge or something else is used for more game-changing mods.

I do add content to this modpack kinda often, so I'll make updated posts if I add anything non-cosmetic.

⊹˚. * . ݁₊ ⊹

client mods

⊹˚. * . ݁₊ ⊹

So these are "client mods," which to my understanding only impact your gaming and not the server or world.

So some of these download automatically with other mods, like the entity ones and I think Sodium.

I downloaded "BadOptimizations," "ImmediatelyFast," and "Iris Shaders" myself because the first two helped my gameplay run smoother on a low-end PC, and the last one is needed to run the shaders I chose. You can experiment with that, too, but I know most people use Iris.

actual mods

⊹˚. * . ݁₊ ⊹

okay, so these are all the rest of them underneath client mods. I'm not going to go into much detail for each one, I'll just kind of generally group them together. If you have any specific questions about any or want a link, comment !!

Most of them are self-explanatory, so I'll talk about the random ones that aren't.

--

ModernFix, FPS Reducer, and Architectury are all similar to the optimization mods, but they alter game stuff to make your game run better.

--

Essential makes it similar to Bedrock when it comes to hosting single-player worlds and having a social menu. You can send screenshots and DM on there.

Mod Menu adds a mods button to your menu screen. It lets you access mods and configure them, especially if you have a config mod added.

--

Text and Fabric API are needed to run the other mods.

--

resource packs

⊹˚. * . ݁₊ ⊹

These are the current resource packs I have added. Since there are so few, I'll go through them all.

--

Borderless Glass: self-explanatory. Makes windows borderless so they look more seamless and realistic.

Better Leaves adds bushiness to leaf blocks.

Dynamic Surround Sounds adds some really nice, immersive noises to the game; by far a favorite.

Circle Sun and Moon

Fresh Animations is so cool: it adds more realistic animations to the mobs in the game!

Grass Flowers and Flowering Crops add flower textures to the blocks and crops in the game, super cute!

shaders

⊹˚. * . ݁₊ ⊹

Lastly, these are the shaders I currently have on. Tweak around with the video settings on it. All the options look good !

--

I hope this was insightful and answered all your questions! Have a blessed day.

dms open!! send any other questions <3

2 Corinthians 9:7

"Each of you should give what you have decided in your heart to give, not reluctantly or under compulsion, for God loves a cheerful giver."

Exploring the Key Components of Cisco ACI Architecture

Cisco ACI (Application Centric Infrastructure) is a transformative network architecture designed to streamline data center management by enabling centralized automation and policy-driven management. In this blog, we will explore the key components of Cisco ACI architecture, highlighting how it facilitates seamless connectivity, scalability, and flexibility within modern data centers.

Whether you are a network professional or a beginner, Cisco ACI training offers invaluable insights into mastering the complexities of ACI. Understanding its core components is essential for optimizing performance and ensuring a secure, efficient network environment. Let's dive into the foundational elements that make up this powerful solution.

Introduction to Cisco ACI Architecture

Cisco ACI is a software-defined networking (SDN) solution designed for modern data centers. It provides a centralized framework to manage networks through a policy-based approach, enabling administrators to automate workflows and enforce application-centric policies efficiently. The architecture is built on a fabric model, incorporating a spine-leaf topology for optimized communication.

Core Components of Cisco ACI

Application Policy Infrastructure Controller (APIC):

The brain of the Cisco ACI architecture.

Manages and monitors the fabric while enforcing policies.

Provides a centralized RESTful API for automation and integration.

Leaf and Spine Switches:

Spine Switches: Handle high-speed inter-leaf communication.

Leaf Switches: Connect endpoints such as servers, storage devices, and other network resources.

Together, they create a low-latency, highly scalable topology.

Endpoint Groups (EPGs):

Logical groupings of endpoints that share similar application or policy requirements.

Simplify the application of policies across connected devices.
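Since the APIC exposes the fabric through a RESTful API, automation usually starts with two calls: a login to `aaaLogin` and a class query such as `fvTenant` (tenants) or `fvAEPg` (EPGs). The sketch below only builds those requests with the standard library so their shape is visible; the APIC hostname and credentials are placeholders, and in practice you would send them with `urllib.request.urlopen` while handling the APIC's TLS certificate appropriately.

```python
import json
import urllib.request

def apic_login_request(apic: str, username: str, password: str) -> urllib.request.Request:
    """Build the aaaLogin POST used to open an APIC REST session."""
    body = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    return urllib.request.Request(
        f"https://{apic}/api/aaaLogin.json",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def token_from_login(response_json: dict) -> str:
    """Extract the session token from an aaaLogin response body."""
    return response_json["imdata"][0]["aaaLogin"]["attributes"]["token"]

def apic_class_query(apic: str, class_name: str, token: str) -> urllib.request.Request:
    """Build a GET that returns every object of a class, e.g. fvTenant."""
    return urllib.request.Request(
        f"https://{apic}/api/class/{class_name}.json",
        headers={"Cookie": f"APIC-cookie={token}"},
    )
```

Every object in the policy model (tenants, EPGs, contracts, filters) is reachable the same way, which is what makes the single API a good automation surface.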

Cisco ACI Fabric

The ACI fabric forms the backbone of the architecture, ensuring seamless communication between components. It is based on a spine-leaf topology, where each leaf connects to every spine.

Features:

Uses VXLAN (Virtual Extensible LAN) for overlay networking.

Ensures scalability with distributed intelligence and endpoint learning.

Provides high availability and fault tolerance.

Benefits:

Eliminates bottlenecks with uniform traffic distribution.

Simplifies network operations through centralized management.

Policy Model in Cisco ACI

The policy-driven approach is central to Cisco ACI’s architecture. It enables administrators to define how applications and endpoints interact, reducing complexity and errors.

Key Elements:

Tenants: Logical units for resource isolation.

EPGs: Define groups of endpoints with shared policies.

Contracts and Filters: Govern communication between EPGs.

Advantages:

Provides consistency across the network.

Enhances security by enforcing predefined policies.

Tenants in Cisco ACI

Tenants are fundamental to Cisco ACI’s multi-tenancy model. They provide logical segmentation of resources within the network.

Types of Tenants:

Management (mgmt) Tenant: Handles in-band and out-of-band management access to the fabric; the separate infra tenant holds the fabric's infrastructure policies.

Common Tenant: Shares resources across multiple users or applications.

Custom Tenants: Created for specific business units or use cases.

Benefits:

Enables secure isolation of resources.

Simplifies management in multi-tenant environments.

Contracts and Filters

Contracts and filters define how endpoints within different EPGs interact.

Contracts:

Specify traffic rules between EPGs.

Include criteria such as protocols, ports, and permissions.

Filters:

Provide granular control over traffic flow.

Allow administrators to define specific policies for allowed or denied communication.
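The contract model is an allow-list: traffic between different EPGs is dropped unless a contract with a matching filter permits it, while traffic inside one EPG is allowed by default. The sketch below models that decision in a simplified form; the class and function names are hypothetical, and real ACI filters also match port ranges, EtherTypes, and reverse-direction return traffic, which are omitted here.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Filter:
    protocol: str  # e.g. "tcp"
    port: int      # single destination port (real filters allow ranges)

@dataclass
class Contract:
    provider: str  # EPG offering the service
    consumer: str  # EPG consuming the service
    filters: list[Filter] = field(default_factory=list)

def is_allowed(src_epg: str, dst_epg: str, protocol: str, port: int,
               contracts: list[Contract]) -> bool:
    """Decide whether consumer-to-provider traffic is permitted."""
    if src_epg == dst_epg:
        return True  # intra-EPG traffic is permitted by default
    for c in contracts:
        if c.consumer == src_epg and c.provider == dst_epg and Filter(protocol, port) in c.filters:
            return True
    return False  # no matching contract: traffic is denied
```

For example, a contract where `app` provides TCP 8080 to `web` lets web servers reach the app tier on that port and nothing else.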

Endpoint Discovery and Learning

Cisco ACI simplifies network operations with dynamic endpoint discovery.

How It Works:

Leaf switches identify endpoints by tracking MAC and IP addresses.

Updates to the fabric are automatic, reflecting real-time changes.

Benefits:

Reduces manual intervention.

Ensures efficient resource utilization and adaptability.
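The learning behavior described above amounts to maintaining a table that maps each endpoint's MAC address to its IP and location, and updating it when the endpoint shows up somewhere else. This is a simplified, hypothetical model of that table, not how the leaf hardware or COOP database actually stores entries.

```python
from dataclasses import dataclass

@dataclass
class Endpoint:
    ip: str
    leaf: str
    port: str

class EndpointTable:
    """Simplified endpoint table keyed by MAC address."""

    def __init__(self) -> None:
        self.by_mac: dict[str, Endpoint] = {}

    def learn(self, mac: str, ip: str, leaf: str, port: str) -> bool:
        """Record where an endpoint was seen; return True if it moved."""
        previous = self.by_mac.get(mac)
        self.by_mac[mac] = Endpoint(ip, leaf, port)
        return previous is not None and (previous.leaf, previous.port) != (leaf, port)
```

Detecting a move automatically is what lets a VM migrate between leaves without anyone editing the network by hand.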

Role of VXLAN in Cisco ACI

VXLAN is a critical technology in Cisco ACI, enabling overlay networking for scalable data centers.

Features:

Encapsulates Layer 2 traffic over a Layer 3 network.

Supports about 16 million (2^24) logical segments through its 24-bit VXLAN Network Identifier (VNI), far beyond the 4,094 usable IDs of traditional VLANs.

Benefits:

Enhances network flexibility and scalability.

Simplifies workload mobility without reconfiguring the physical network.
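The 16 million figure comes straight from the header layout. In the standard VXLAN header (RFC 7348), 8 bytes carry an 8-bit flags field, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits, so 2^24 = 16,777,216 segment IDs are possible versus 4,094 usable 12-bit VLAN IDs. The sketch packs and parses that standard header; ACI's in-fabric encapsulation is a Cisco extension of this format, so treat it as the baseline layout only.

```python
import struct

VXLAN_I_FLAG = 0x08  # "I" flag: the VNI field is valid

def vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte RFC 7348 VXLAN header for a given VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8-bit flags + 24 reserved bits; word 2: 24-bit VNI + 8 reserved bits.
    return struct.pack("!II", VXLAN_I_FLAG << 24, vni << 8)

def parse_vni(header: bytes) -> int:
    """Recover the VNI from the second 32-bit word of the header."""
    _, second = struct.unpack("!II", header[:8])
    return second >> 8
```

The outer UDP/IP headers that carry this across the Layer 3 underlay are added by the VTEP and are not shown.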

Integrations with External Networks

Cisco ACI integrates seamlessly with legacy and external networks, ensuring smooth interoperability.

Connectivity Options:

Layer 2 Out (L2Out): Provides direct Layer 2 connectivity to external devices.

Layer 3 Out (L3Out): Establishes Layer 3 routing to external systems.

Advantages:

Facilitates gradual migration to ACI.

Bridges new and existing network infrastructures.

Advantages of Cisco ACI Architecture

Scalability: Supports rapid growth with a spine-leaf topology.

Automation: Reduces complexity with policy-driven management.

Security: Enhances protection through segmentation and controlled interactions.

Flexibility: Adapts to hybrid and multi-cloud environments.

Efficiency: Simplifies network operations, reducing administrative overhead.

Conclusion

In conclusion, understanding the key components of Cisco ACI architecture is essential for designing and managing modern data center networks. By integrating software and hardware, ACI offers a scalable, secure, and automated network environment.

With its centralized policy model and simplified management, Cisco ACI transforms network operations. For those looking to gain in-depth knowledge and practical skills in Cisco ACI, enrolling in a Cisco ACI course can be a valuable step toward mastering this powerful technology and staying ahead in the ever-evolving world of networking.

Microsoft SQL Server 2025: A New Era Of Data Management

Microsoft SQL Server 2025: An enterprise database prepared for artificial intelligence from the ground up

The data estate and applications of Azure clients are facing new difficulties as a result of the growing use of AI technology. With privacy and security being more crucial than ever, the majority of enterprises anticipate deploying AI workloads across a hybrid mix of cloud, edge, and dedicated infrastructure.

To address these challenges, Microsoft SQL Server 2025, now in preview, is an enterprise AI-ready database, from ground to cloud, that brings AI to customer data. Building on SQL Server's thirty years of innovation in performance and security, this version adds new AI capabilities. Customers can integrate their data with Microsoft Fabric for next-generation data analytics. The release draws on Microsoft Azure innovation and supports hybrid deployments across cloud, on-premises datacenters, and edge.

SQL Server is now much more than a conventional relational database. With this release, customers can build AI applications that are tightly integrated with the SQL engine. With built-in filtering and vector search, SQL Server 2025 is evolving into a vector database in its own right, one that Microsoft says performs exceptionally well and is simple for T-SQL developers to use.

AI built-in

This version builds AI in using familiar T-SQL syntax, making it easier to build AI applications and retrieval-augmented generation (RAG) patterns with secure, efficient, and easy-to-use vector support. You can create hybrid AI vector searches by combining vectors with your relational SQL data.
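A hybrid vector search combines an ordinary relational filter with ranking by vector distance. The sketch below shows the idea in plain Python: filter rows with a predicate, then sort the survivors by cosine distance to a query embedding. It is an exact brute-force scan for illustration; SQL Server 2025's DiskANN index performs approximate search at scale, and in T-SQL the distance step corresponds to the `VECTOR_DISTANCE` function. The row layout and `embedding` key are assumptions.

```python
import math

def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 minus cosine similarity; 0.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / norm

def hybrid_search(rows: list[dict], query: list[float], k: int, predicate) -> list[dict]:
    """Apply a relational filter, then return the k nearest rows (exact k-NN)."""
    candidates = [r for r in rows if predicate(r)]
    candidates.sort(key=lambda r: cosine_distance(r["embedding"], query))
    return candidates[:k]
```

Filtering first keeps the expensive distance computation to the rows that can actually qualify, which mirrors a `WHERE` clause combined with an `ORDER BY` on distance.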

Utilize your company database to create AI apps

Bringing enterprise AI to your data, SQL Server 2025 is an enterprise-ready vector database with integrated security and compliance. Its vector store and index are powered by DiskANN, a vector search technology that uses disk storage to efficiently find similar data points in massive datasets. Efficient chunking support enables accurate data retrieval through semantic search. The engine also manages AI models flexibly through Representational State Transfer (REST) interfaces, so you can use AI models from ground to cloud.

Furthermore, extensible, low-code tools provide flexible model interfaces within the SQL engine, backed by T-SQL and external REST APIs, whether customers are working on data preprocessing, model training, or RAG patterns. Seamless integration with well-known frameworks such as LangChain, Semantic Kernel, and Entity Framework Core broadens the range of AI applications developers can build.

Increase the productivity of developers

Extensibility, frameworks, and data enrichment are crucial for productive development of data-intensive applications such as AI apps. Features such as REST API support, GraphQL integration via Data API Builder, and regular expression support aim to give SQL developers the best possible experience. Native JSON support makes it easier to handle hierarchical data and frequently changing schemas, enabling more dynamic apps. Overall, SQL development is being made more user-friendly, performant, and extensible, and the SQL Server engine's security underpins all of these features, making it a genuinely enterprise-ready AI platform.

Top-notch performance and security

Microsoft positions SQL Server 2025 as an industry leader in database security and performance. Support for Microsoft Entra managed identities improves credential management, reduces potential vulnerabilities, and provides compliance and auditing capabilities. SQL Server 2025 also introduces outbound authentication support with MSI (Managed Service Identity) for SQL Server enabled by Azure Arc.

It also brings to SQL Server performance and availability improvements that have been extensively tested on Azure SQL. Improved query optimization and query execution can increase workload performance and reduce troubleshooting. Optional Parameter Plan Optimization (OPPO) aims to minimize problematic parameter sniffing by letting SQL Server choose the best execution plan based on the runtime parameter values supplied by the customer.

Secondary replicas with persistent statistics mitigate possible performance decrease by preventing statistics from being lost during a restart or failover. The enhancements to batch mode processing and columnstore indexing further solidify SQL Server’s position as a mission-critical database for analytical workloads in terms of query execution.

Through Transaction ID (TID) Locking and Lock After Qualification (LAQ), optimized locking minimizes blocking for concurrent transactions and lowers lock memory consumption. Customers can improve concurrency, scalability, and uptime for SQL Server applications with this functionality.

Change event streaming for SQL Server supports command query responsibility segregation (CQRS), real-time intelligence, and real-time application integration with event-driven architectures. New database engine capabilities will enable near real-time capture and publication of incremental changes to data and schema to a specified destination, such as Azure Event Hubs or Kafka.

Azure Arc and Microsoft Fabric are linked

Designing, overseeing, and administering intricate ETL (Extract, Transform, Load) procedures to move operational data from SQL Server is necessary for integrating all of your data in conventional data warehouse and data lake scenarios. The inability of these conventional techniques to provide real-time data transfer leads to latency, which hinders the development of real-time analytics. In order to satisfy the demands of contemporary analytical workloads, Microsoft Fabric provides comprehensive, integrated, and AI-enhanced data analytics services.

The fully managed, robust Mirrored SQL Server Database in Fabric makes it easy to replicate SQL Server data to Microsoft OneLake in near real time. Mirroring lets customers continuously replicate data from SQL Server databases running on Azure virtual machines or outside of Azure, serving online transaction processing (OLTP) or operational store workloads, directly into OneLake to support analytics and insights on the unified Fabric data platform.

Azure is still an essential part of SQL Server. To help clients better manage, safeguard, and control their SQL estate at scale across on-premises and cloud, SQL Server 2025 will continue to offer cloud capabilities with Azure Arc. Customers can further improve their business continuity and expedite everyday activities with features like monitoring, automatic patching, automatic backups, and Best Practices Assessment. Additionally, Azure Arc makes SQL Server licensing easier by providing a pay-as-you-go option, giving its clients flexibility and license insight.

SQL Server 2025 release date

Microsoft hasn’t set a SQL Server 2025 release date. Based on current information, we can make some reasonable guesses:

Private Preview: SQL Server 2025 is in private preview, so a small set of users can test it and provide feedback.

Public Preview: Microsoft may provide a public preview in 2025 to let more people try the new features.

General Availability: SQL Server 2025’s final release date is unknown, but it is expected in 2025.

Read more on govindhtech.com

API architectural styles determine how applications communicate. The choice of API architecture can significantly affect an application's efficiency, flexibility, and robustness, so choose based on your application's requirements, not just on what is commonly used. Let’s examine some prominent styles:

REST https://drp.li/what-is-a-rest-api-z7lk…

GraphQL https://drp.li/graphql-how-does-it-work-z7l…

SOAP https://drp.li/soap-how-does-it-work-z7lk…

gRPC https://drp.li/what-is-grpc-z7lk…

WebSockets https://drp.li/webhooks-and-websockets-z7lk…

MQTT https://drp.li/automation-with-mqtt-z7lk…
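To make the difference between the first two styles tangible, the sketch below builds the same "fetch a user" request both ways with the standard library, without sending anything. REST identifies the resource by URL and lets the server decide the response shape; GraphQL posts to a single endpoint and the client names exactly the fields it wants. The `api.example.com` host, paths, and schema fields are hypothetical.

```python
import json
import urllib.request

BASE = "https://api.example.com"  # hypothetical service

def rest_request(user_id: int) -> urllib.request.Request:
    """REST: the URL names the resource; the HTTP method names the operation."""
    return urllib.request.Request(f"{BASE}/users/{user_id}", method="GET")

def graphql_request(user_id: int) -> urllib.request.Request:
    """GraphQL: one endpoint; the query selects only the fields the client needs."""
    query = "query($id: ID!) { user(id: $id) { name email } }"
    body = {"query": query, "variables": {"id": str(user_id)}}
    return urllib.request.Request(
        f"{BASE}/graphql",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

The REST version is simpler to cache by URL; the GraphQL version avoids over-fetching when clients need different slices of the same data.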

API architectural styles are strategic choices that shape the very fabric of application interactions. To learn more: https://drp.li/state-of-the-api-report-z7dp…

Cisco Nexus Vulnerability Let Hackers Execute Arbitrary Commands on Vulnerable Systems

A critical vulnerability has been discovered in Cisco’s Nexus Dashboard Fabric Controller (NDFC) that could allow attackers to execute arbitrary commands on affected systems. The flaw, identified as CVE-2024-20432, was first published on October 2, 2024, and its CVSS score of 9.9 reflects its severity.

Vulnerability Details

The vulnerability resides in the Cisco NDFC’s REST API […]

The MERN Stack Journey Crafting Dynamic Web Applications

This course initiates an engaging expedition into the realm of MERN stack development. The MERN stack, comprising MongoDB, Express.js, React, and Node.js, stands as a potent fusion of technologies empowering developers to construct dynamic and scalable web applications. Whether you're a novice venturing into web development or a seasoned professional aiming to broaden your expertise, this course equips you with the requisite knowledge and hands-on practice to master the MERN stack.

Diving into MongoDB: The Foundation of Data Management

Our journey commences with MongoDB, a premier NoSQL database offering versatility and scalability in managing extensive datasets. We delve into the essentials of MongoDB, encompassing document-based data structuring, CRUD operations, indexing techniques, and aggregation methodologies. By course end, you'll possess a firm grasp of harnessing MongoDB for data storage and retrieval within MERN stack applications.

Constructing Robust Backend Services with Express.js

Subsequently, we immerse ourselves in Express.js, a minimalist web application framework for Node.js, to build the backend infrastructure of our MERN stack applications. You'll acquire proficiency in crafting RESTful APIs with Express.js, handling HTTP requests and responses, integrating middleware for authentication and error management, and connecting to MongoDB for database interactions. Through practical exercises and projects, you'll cultivate adeptness in constructing resilient and scalable backend systems using Express.js.

Crafting Dynamic User Interfaces with React

Transitioning to the frontend domain, we harness the capabilities of React, a JavaScript library for crafting user interfaces. In this course you'll master formulating reusable UI components, managing application state via React hooks and context, processing user input and form submissions, and implementing client-side routing for single-page applications. Upon course completion, you'll possess the prowess to develop dynamic and interactive user interfaces with React.

Orchestrating Full-Stack Development with Node.js

In the final segment of the course, we bring all the components together, using Node.js as the runtime for full-stack MERN applications. You'll learn to connect the frontend and backend through RESTful APIs, handle authentication and authorization, add real-time data updates with WebSockets, and deploy applications to production. Through a series of hands-on projects, you'll solidify your understanding of MERN stack development and become proficient at building dynamic web applications.

Embarking on the MERN Stack Mastery Journey

Upon completing this course, you'll have a thorough understanding of MERN stack development and the ability to build dynamic, scalable web applications from scratch. Whether you're planning a career in web development, hoping to launch a startup, or simply looking to expand your skills, mastering the MERN stack opens up many opportunities in modern web development. Are you ready to embark on this journey and become a MERN stack expert? Let's get started!

Data Management with MongoDB

MongoDB serves as the database component of the MERN stack. Here, we'll discuss schema design, CRUD operations, indexing, and data modeling techniques to effectively manage your application's data.
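The next sketch illustrates why indexing matters by contrasting a full scan with an indexed lookup. It is a simplification. MongoDB's real indexes are B-tree structures that you create with `createIndex()`. Here a `Map` from field value to documents stands in for one. `User`, `scanByRole`, and `buildIndex` are hypothetical.

```typescript
// Simplified stand-in for a single-field index; not how MongoDB stores indexes.

type User = { _id: number; email: string; role: string };

const users: User[] = [
  { _id: 1, email: "ana@example.com", role: "admin" },
  { _id: 2, email: "ben@example.com", role: "editor" },
  { _id: 3, email: "cai@example.com", role: "editor" },
];

// Without an index: a collection scan inspects every document.
function scanByRole(docs: User[], role: string): User[] {
  return docs.filter((u) => u.role === role);
}

// With an index: build it once, then look matches up directly by value.
// (A real database index is also updated on every write.)
function buildIndex<K extends keyof User>(docs: User[], field: K): Map<User[K], User[]> {
  const index = new Map<User[K], User[]>();
  for (const doc of docs) {
    const bucket = index.get(doc[field]) ?? [];
    bucket.push(doc);
    index.set(doc[field], bucket);
  }
  return index;
}

const roleIndex = buildIndex(users, "role");
console.log(JSON.stringify(roleIndex.get("editor")?.map((u) => u._id))); // [2,3]
console.log(JSON.stringify(scanByRole(users, "editor").map((u) => u._id))); // [2,3]
```

Both queries return the same documents. The trade-off is that an index makes reads cheaper at the cost of extra storage and extra work on every insert or update.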

Securing Your MERN Application

Security is paramount in web development. This section covers best practices for securing your MERN application, including authentication, authorization, input validation, and protecting against common vulnerabilities.
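The sketch below illustrates two of these practices: validating untrusted input and authorizing by role. The rules (email pattern, 12-character minimum, admin-or-owner deletes) and the names `validateSignup` and `canDelete` are hypothetical examples. Production apps often use a schema-validation library and store passwords only as salted hashes.

```typescript
// Hypothetical input validation and role-based authorization checks.

type SignupInput = { email: string; password: string };
type ValidationResult = { ok: true; value: SignupInput } | { ok: false; errors: string[] };

// Treat the request body as unknown until every field has been checked.
function validateSignup(body: unknown): ValidationResult {
  if (typeof body !== "object" || body === null) {
    return { ok: false, errors: ["body must be a JSON object"] };
  }
  const errors: string[] = [];
  const { email, password } = body as Record<string, unknown>;
  if (typeof email !== "string" || !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) {
    errors.push("email is invalid");
  }
  if (typeof password !== "string" || password.length < 12) {
    errors.push("password must be at least 12 characters");
  }
  // Rejecting non-string values also blocks operator-injection payloads such as
  // { "email": { "$ne": null } } before they reach a MongoDB query.
  return errors.length === 0
    ? { ok: true, value: { email: email as string, password: password as string } }
    : { ok: false, errors };
}

// Authorization: authentication establishes who the user is; this decides what they may do.
type Role = "admin" | "editor" | "viewer";
function canDelete(user: { id: string; role: Role }, ownerId: string): boolean {
  return user.role === "admin" || user.id === ownerId;
}

console.log(validateSignup({ email: "ana@example.com", password: "correct-horse-battery" }).ok); // true
const bad = validateSignup({ email: { $ne: null }, password: "x" });
if (!bad.ok) console.log(bad.errors.length); // 2
console.log(canDelete({ id: "u2", role: "editor" }, "u1")); // false
```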

Deployment and Scaling Strategies

Once your MERN application is ready, it's time to deploy it to a production environment. We'll explore deployment options, containerization with Docker, continuous integration and deployment (CI/CD) pipelines, and strategies for scaling your application as it grows.

Troubleshooting and Debugging

Even well-designed applications encounter bugs and errors. Here, we'll discuss strategies for troubleshooting and debugging your MERN application, including logging, error handling, and using developer tools to diagnose issues.
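Structured logging makes debugging easier: each event is written as one JSON object carrying enough context, such as a request ID, to trace a failure. The helpers `logEvent` and `withErrorLogging` below are hypothetical. Real projects usually rely on a logging library, but the idea is the same.

```typescript
// Hypothetical structured-logging helpers for debugging.

type Level = "info" | "warn" | "error";
type LogEntry = { level: Level; msg: string; time: string; [key: string]: unknown };

const entries: LogEntry[] = []; // kept in memory so the example can inspect them

function logEvent(level: Level, msg: string, context: Record<string, unknown> = {}): void {
  // Context goes first so it can never overwrite level, msg, or time.
  const entry: LogEntry = { ...context, level, msg, time: new Date().toISOString() };
  entries.push(entry);
  console.log(JSON.stringify(entry)); // one searchable line per event
}

// Log success or failure with context, then rethrow so callers
// (for example an error-handling middleware) can still respond.
function withErrorLogging<T>(op: string, context: Record<string, unknown>, fn: () => T): T {
  try {
    const result = fn();
    logEvent("info", `${op} succeeded`, context);
    return result;
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    logEvent("error", `${op} failed`, { ...context, error: message });
    throw err;
  }
}

const parsed = withErrorLogging("parse-config", { requestId: "r-1" }, () => JSON.parse('{"port": 3000}'));
try {
  withErrorLogging("parse-config", { requestId: "r-2" }, () => JSON.parse("{bad json"));
} catch {
  // Already logged with requestId "r-2"; a route handler would send a 500 here.
}
```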

Advanced Topics and Next Steps

To further expand your MERN stack skills, this section covers advanced topics such as server-side rendering with React.js, GraphQL integration, microservices architecture, and additional libraries and frameworks that can enhance your development workflow.

Continuous Integration and Deployment (CI/CD)

Automating the deployment process can streamline your development workflow and ensure consistent releases. In this section, we'll delve into setting up a CI/CD pipeline for your MERN application using tools like Jenkins, Travis CI, or GitLab CI. You'll learn about automated testing, deployment strategies, and monitoring your application in production.

Conclusion

The MERN stack offers a robust and efficient framework for building modern web applications. By combining MongoDB, Express.js, React.js, and Node.js, developers can create highly scalable, responsive, and feature-rich applications. The modular nature of the stack makes it easy to integrate additional technologies and tools, adding to its flexibility. In addition, active community support and extensive documentation make MERN stack development accessible and well suited to rapid prototyping and deployment. As businesses continue to demand dynamic, interactive web solutions, mastering the MERN stack remains a valuable skill for developers who want to stay at the forefront of web development.
