#knowledge base software


Text

(he's autistic to me)

#master sol#my gifs#only tagging this as sol for reasons#but fine to rb#he's probs just flustered but his mannerisms during this entire scene were so recognisable for me#during the whole talk when i first saw this scene and of course looking back it makes more sense why he avoided eye contact that much#but still let me hc my favs#this gif is bad quality but i'm too tired to get my laptop with my gif editing software aka capcut rn#but it's not really abt the gif itself anyway but more about my association#feeling kinda weird abt posting this since i'm still waiting for my assesment and actually i don't think it's autism after all so maybe#i should hold off on posts like this since if i'm indeed not autistic then i have just been making assumptions based on limited online info#and some stuff my tutor told me - at least he knows actual autistic ppl as opposed to me and has more nuanced knowledge#but it was literally the thought that immediatly came to me bc the way he avoided eyecontact is very familiar to me#so me thinking of him as autistic is based on similarlty to myself except if i'm not autistic then it's just cherry picked stuff about myse#that fits a cliche so yeah kinda meh#anyway a bit of a rant#should be assessed in a few weeks time now hopefully

14 notes


Text

My experience in impulse buying game development software has given me great knowledge

#my knowledge....#rpg maker is THE tool for a simple rpg#you can go pretty far with it#not too far but enough for a simple rpg#specially if you are a beginner#Game Maker is good for people who know a little code but not enough to go all out#but it wants your money soo bad#the one that fnaf was made in is also pretty easy#Unity has everything youve ever dreamed of doing but it's difficult. for pros only#All Lua based software is in the middle ground. very code heavy#The golden rule: if you see a software that doesnt have an active and supportive community RUN AWAY#LOOK AT ANOTHER ONE#a lot of these tend to be very limited and cause you trouble down the line#trust stuff thats known to be used. like ren'py. Godot. Pico8 even#that also means theres a lot of tutorials out there

22 notes


Text

am having the weirdest issue w/ this dvd i bought online, where like, it plays but the video is deeply fucked up and jerky/skipping therefore unsyncing it from the audio (which is fine). BUT. only on the xbox. i found one single solitary review saying it ended up working fine on a regular dvd player and lo and behold, tried it on my dad's laptop and it's true! How? Why? Who Fucking Knows! Now i gotta go dig our fucking oldass dvd player outta basement storage bc lord knows neither my new laptop nor my old laptop has one -_-

it's fuckin weird, tho, right? Like, there were other amazon reviews mentioning the issue but just the one about the xbox vs dvd drive thing, and there were def other ppl positively reviewing the dvd itself and not just the movie (they mentioned the extra features). i also kept finding one-off comments on various reddit threads that mention the issue but never any resolution. i even logged on to facebook (gag) on my computer and scrolled alllllll the way back to 2017/2018 on the movie's official fb page and again. just the occasional comment about the issue and like 1 single reply on 1 comment mentioning the xbox thing ://///

#also its just. not a gr8 quality dvd? its NOT a bootleg but u could be forgiven 4 thinking so based on packaging#and the video is a lot more grainy than when i watched on streaming. AND theres no captions !!!! unforgivable imho#it shouldnt be a region issue bc wouldnt it just. not play then? plus its an american release anyways#im very annoyed tbh and i want a replacement not a refund but it doesnt give me that option on amazon.....#the aforementioned fb comment reply said that updating the xbox's firmware/software might resolve the issue#but we already have ours set to update automatically so it Should be up to date anyways......#ah well. literally only bought it bc of extra scenes/bts stuff but still :( be nice 2 not have 2 find and set up a whole nother device.....#ended up buying a digital copy plus the 2 others in the trilogy since they didnt even get physical releases.....#blegh. hate this digital movie library shit. i dont even know the signin for the microsoft account bc its a shared console!#i DID see some1 online say that they ripped the dvd and could then play THAT on the xbox but that is. beyond my limited knowledge#bro i dont even buy blurays idk wtf im doing here#the fb page does still seem 2 be updating abt recent projects but the website isnt up any longer so. 🤷#its not a Big Name Movie ig they have outright said on the fb page that eg. they couldnt afford 2 also do a bluray release or smth idek#this whole thing is baffling honestly

2 notes


Text

https://aurosks.com/the-best-ways-to-use-auros-for-product-development/

Explore the best ways to utilize Auros Knowledge Systems for product development. This page details how Auros supports Agile processes, APQP, design reviews, engineering standards, CAD design, knowledge-based engineering, regulatory compliance, systems engineering, and virtual build assessment. Learn how Auros can streamline workflows, improve collaboration, and enhance efficiency in your product development efforts.

For more details, visit Auros Knowledge Systems.

#enterprise knowledge management software#manufacturing engineering#software for knowledge management#manufacturing project management software#engineering knowledge management#knowledge based engineering

0 notes

Text

A fun thing about computer skills is that as you have more of them, the number of computer problems you have doesn't go down.

This is because as a beginner, you have troubles because you don't have much knowledge.

But then you learn a bunch more, and now you've got the skills to do a bunch of stuff, so you run into a lot of problems because you're doing so much stuff, and only an expert could figure them out.

But then one day you are an expert. You can reprogram everything and build new hardware! You understand all the various layers of tech!

And your problems are now legendary. You are trying things no one else has ever tried. You Google them and get zero results, or at best one forum post from 1997. You discover bugs in the silicon of obscure processors. You crash your compiler. Your software gets cited in academic papers because you accidentally discovered a new mathematical proof while trying to remote control a vibrator. You can't use the wifi on your main laptop because you wrote your own UEFI implementation and Intel has a bug in their firmware that they haven't fixed yet, no matter how much you email them. You post on Mastodon about your technical issue and the most common replies are names of psychiatric medications. You have written your own OS but there aren't many programs for it because no one else understands how they have to write apps as a small federation of coroutine-based microservices. You ask for help and get Pagliacci'd, constantly.

But this is the nature of computer skills: as you know more, your problems don't get easier, they just get weirder.

33K notes


Text

youtube

We are excited to present to you a comprehensive video tour of our brand-new knowledgebase portal. In this video, we'll guide you through the vast array of tutorials and videos that delve into the powerful functionality of Lanteria HR. Our knowledge base is designed to provide our valued customers with in-depth insights and step-by-step instructions on how to make the most of our HR management solution.

#lanteria#lanteria hr#new lanteria knowledge base site tour#lanteria hr quick guide#hr software#employee self service#employee self service portal#video tutorials for employee#employee training#employee training and development#employee training video#hr management system#human resource management#HR system#hr system#HR processes#what are hr processes#knowledge base site tour on lanteria#best hrms for human resource management in 2023#how to use hr system#Youtube

0 notes

Text

The conversation around AI is going to get away from us quickly because people lack the language to distinguish types of AI--and it's not their fault. Companies love to slap "AI" on anything they believe can pass for something "intelligent" a computer program is doing. This muddies the waters: people want to talk about AI, but the exact same word covers a wide umbrella, and they themselves don't know how to qualify the distinctions within it.

I'm a software engineer and not a data scientist, so I'm not exactly at the level of domain expert. But I work with data scientists, and I have at least rudimentary college-level knowledge of machine learning and linear algebra from my CS degree. So I want to give some quick guidance.

What is AI? And what is not AI?

So what's the difference between just a computer program, and an "AI" program? Computers can do a lot of smart things, and companies love the idea of calling anything that seems smart enough "AI", but industry-wise the question of "how smart" a program is has nothing to do with whether it is AI.

A regular, non-AI computer program is procedural, and rigidly defined. I could "program" traffic light behavior that essentially goes { if (light === "green") { go(); } else { stop(); } }. I've told it in simple and rigid terms what condition to check, and how to behave based on that check. (A better program would have a lot more to check for, like signs and road conditions and pedestrians in the street, and those things will still need to be spelled out.)
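As a rough sketch of the rigid, rule-based approach described above, here is a slightly fuller version in TypeScript. The type names, the `pedestrianInRoad` field, and the `decide` function are all made up for illustration; the point is only that every condition has to be written out by hand.

```typescript
// Hypothetical types and names, for illustration only.
type Light = "green" | "yellow" | "red";
type Action = "go" | "stop";

interface RoadState {
  light: Light;
  pedestrianInRoad: boolean;
}

// Every condition is spelled out by the programmer; nothing is learned.
function decide(state: RoadState): Action {
  if (state.pedestrianInRoad) {
    return "stop"; // safety rule is checked before the light
  }
  if (state.light === "green") {
    return "go";
  }
  return "stop"; // yellow and red both fall through to stop
}

console.log(decide({ light: "green", pedestrianInRoad: false })); // go
console.log(decide({ light: "green", pedestrianInRoad: true })); // stop
console.log(decide({ light: "red", pedestrianInRoad: false })); // stop
```

If a situation isn't covered by one of these branches (fog, a broken light, an ambulance), the program simply has no answer for it until someone adds another rule.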

An AI traffic light behavior is generated by machine-learning, which simplistically is a huge cranking machine of linear algebra which you feed training data into and it "learns" from. By "learning" I mean it's developing a complex and opaque model of parameters to fit the training data (but not over-fit). In this case the training data probably includes thousands of videos of car behavior at traffic intersections. Through parameter tweaking and model adjustment, data scientists will turn this crank over and over adjusting it to create something which, in very opaque terms, has developed a model that will guess the right behavioral output for any future scenario.

A well-trained model would be fed a green light and know to go, and a red light and know to stop, and 'green but there's a kid in the road' and know to stop. A very very well-trained model can probably do this better than my program above, because it has the capacity to be more adaptive than my rigidly-defined thing if the rigidly-defined program is missing some considerations. But if the AI model makes a wrong choice, it is significantly harder to trace down why exactly it did that.

Because again, the reason it's making this decision may be very opaque. It's like engineering a very specific plinko machine which gets tweaked to be very good at taking a road input and giving the right output. But imagine that plinko machine contained millions of pegs, and none of them necessarily correlated to anything to do with the road. There's possibly no "if green, go, else stop" to look for. (Maybe there is, for the traffic light specifically, as that is intentionally very simplistic. But a model trained to recognize written numbers, for example, likely contains no parameters at all that you could map to ideas a human has like "look for a rigid line in the number". The parameters may all be, to humans, meaningless.)
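To make the "parameters instead of rules" idea a bit more concrete, here is a toy sketch: a single perceptron (about the simplest trainable model there is) fit to four hand-made examples. Everything here is an assumption for illustration, including the two features and the tiny dataset. Real models are trained on far more data and have vastly more parameters.

```typescript
// Toy perceptron with hypothetical names and a tiny hand-made dataset.
// Features: [lightIsGreen, kidInRoad]; label 1 = go, 0 = stop.
type Sample = { x: [number, number]; y: number };

interface Model {
  weights: [number, number];
  bias: number;
}

const trainingData: Sample[] = [
  { x: [0, 0], y: 0 }, // red, road clear  -> stop
  { x: [0, 1], y: 0 }, // red, kid in road -> stop
  { x: [1, 0], y: 1 }, // green, road clear  -> go
  { x: [1, 1], y: 0 }, // green, kid in road -> stop
];

function predict(model: Model, x: [number, number]): number {
  const score = model.weights[0] * x[0] + model.weights[1] * x[1] + model.bias;
  return score > 0 ? 1 : 0;
}

// Classic perceptron update: nudge the parameters whenever a guess is wrong.
function train(data: Sample[], epochs: number): Model {
  const model: Model = { weights: [0, 0], bias: 0 };
  for (let epoch = 0; epoch < epochs; epoch++) {
    for (const sample of data) {
      const error = sample.y - predict(model, sample.x);
      model.weights[0] += error * sample.x[0];
      model.weights[1] += error * sample.x[1];
      model.bias += error;
    }
  }
  return model;
}

const model = train(trainingData, 20);
console.log(model); // just numbers; there is no "if green" anywhere
console.log(predict(model, [1, 0])); // green, road clear
console.log(predict(model, [1, 1])); // green, kid in road
```

With only three parameters you can still squint at the numbers and guess what they mean. With millions of them, that stops being possible, which is the plinko-machine problem.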

So, that's the basics. Here are some categories of things which get called AI:

1. "AI" which is just genuinely not AI

There's plenty of software that follows a normal, procedural program defined rigidly, with no linear algebra model training, that companies would love to brand as "AI" because it sounds cool.

Something like motion detection/tracking might be sold as artificially intelligent. But under the covers that can be done as simply as "if some range of pixels changes color by a certain amount, flag as motion"
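A minimal sketch of that kind of rule, assuming frames arrive as equal-length arrays of grayscale values (0 to 255). The function name and both thresholds are made up for illustration; no training or model is involved.

```typescript
// Hypothetical frame-differencing check: no machine learning, just a rule.
// Frames are grayscale pixel arrays (0-255) of the same length.
function hasMotion(
  previous: number[],
  current: number[],
  pixelThreshold: number = 30, // how much one pixel must change to count
  minChangedPixels: number = 3 // how many changed pixels count as motion
): boolean {
  if (previous.length !== current.length) {
    throw new Error("Frames must be the same size");
  }
  let changed = 0;
  for (let i = 0; i < previous.length; i++) {
    if (Math.abs(current[i] - previous[i]) > pixelThreshold) {
      changed++;
    }
  }
  return changed >= minChangedPixels;
}

const still = [10, 10, 10, 10, 10, 10];
const flicker = [12, 11, 10, 9, 10, 10]; // small sensor noise
const moved = [10, 200, 200, 200, 10, 10]; // something bright entered

console.log(hasMotion(still, flicker)); // false
console.log(hasMotion(still, moved)); // true
```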

2. AI which IS genuinely AI, but is not the kind of AI everyone is talking about right now

"AI", by which I mean machine learning using linear algebra, is very good at being fed a lot of training data, and then coming up with an ability to go and categorize real information.

The AI technology that looks at cells and determines whether they're cancer or not, that is using this technology. OCR (Optical Character Recognition) is the technology that can take an image of hand-written text and transcribe it. Again, it's using linear algebra, so yes it's AI.

Many other such examples exist, and have been around for quite a good number of years. They share the genre of technology, which is machine learning models, but these are not the Large Language Model Generative AI that is all over the media. Criticizing these would be like criticizing airplanes when you're actually mad at military drones. It's the same "makes things fly in the air" technology but their impact is very different.

3. The AI we ARE talking about. "Chat-gpt" type of Generative AI which uses LLMs ("Large Language Models")

If there was one word I wish people would know in all this, it's LLM (Large Language Model). This describes the KIND of machine learning model that Chat-GPT is fueled by. LLMs are so extremely powerfully trained on human language that they can take an input of conversational language and create a predictive output that is coherent to a human. (I am less certain what technology fuels art-creation, specifically. AI art generators like Midjourney and Stable Diffusion have risen hand-in-hand with the advent of powerful LLMs, but Stable Diffusion at least is a diffusion model that uses a text encoder to interpret the prompt, so these tools are related to LLMs rather than being purely LLMs themselves.)

This technology isn't exactly brand new (predictive text has been using it, but more like the mostly innocent and much less successful older sibling of some celebrity, who no one really thinks about.) But the scale and power of LLM-based AI technology is what is new with Chat-GPT.
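To give a feel for what "predictive output" means, here is a toy next-word predictor. It only counts which word tends to follow which in a tiny made-up sentence, so it is far cruder than an LLM (which is a very large neural network, not a lookup table). Treat it as an illustration of the "guess the next word" idea only; the function names and the corpus are assumptions.

```typescript
// Toy bigram predictor: counts which word follows which. Not an LLM.
type BigramCounts = Map<string, Map<string, number>>;

function buildBigramCounts(text: string): BigramCounts {
  const words = text.toLowerCase().split(/\s+/).filter((w) => w.length > 0);
  const counts: BigramCounts = new Map();
  for (let i = 0; i < words.length - 1; i++) {
    let followers = counts.get(words[i]);
    if (followers === undefined) {
      followers = new Map<string, number>();
      counts.set(words[i], followers);
    }
    followers.set(words[i + 1], (followers.get(words[i + 1]) || 0) + 1);
  }
  return counts;
}

// Returns the most frequent follower, or undefined for an unseen word.
function predictNext(counts: BigramCounts, word: string): string | undefined {
  const followers = counts.get(word.toLowerCase());
  if (followers === undefined) {
    return undefined;
  }
  let best: string | undefined = undefined;
  let bestCount = 0;
  followers.forEach((count, candidate) => {
    if (count > bestCount) {
      best = candidate;
      bestCount = count;
    }
  });
  return best;
}

const corpus =
  "the light is green so go the light is red so stop the light is green so go";
const counts = buildBigramCounts(corpus);

console.log(predictNext(counts, "is")); // green
console.log(predictNext(counts, "so")); // go
```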

This is the generative AI, and even better, the large language model generative AI.

(Data scientists, feel free to add on or correct anything.)

3K notes


Text

Noted, I definitely will eventually!

Does anyone want to do my new google form

#my statistical and data analysis knowledge level is mega base level as of now#so i suspect its gonna be awhile before id get any meaningful use out of any decent statistical software#but im sure eventually the need for better software will definetly Arise#self rb

155 notes


Text

Oh boy, VaM is kind of a trial and error experience LOL I couldn't really show you how to use the interface and stuff without a whole video or something, but it's not THAT difficult to get a hang of if you just give yourself a day or two to play around, not to mention the number of tutorials you find out there. Luckily, if you only want to use it as a reference software that makes the process far easier (to this day I have no idea how to animate on that thing, since that's not what I use it for)

As for how I use it, it's pretty self explanatory - if there's a complicated pose I want to draw but I'm either having trouble with it, or just want to double-check angles/anatomy, I will use it as a resource! I use it for most of my "proper" pieces (y'know, the nicer looking ones) and every once in a while for my silly comics if I'm having trouble with a pose.

Let's use this drawing for example (the character on top of DU drow belongs to @namespara )

I don't draw a lot of mud-wrestling (shocking, I know) but I had an idea of the kind of pose I wanted them to be in. So the very first thing I did was make a rough sketch of what I was envisioning:

I often do a rough sketch first, even if I know I'm going to be pulling the program up, because A) it's less tedious than adjusting the models over and over again until I pick a pose and B) sometimes I'll decide I don't need the reference after all, and so that's 30 minutes I'll have spared myself of playing around on the software.

Now, this is a pretty complicated pose! It's in a weird angle and the bodies are making contact in ways I'm not used to depicting, so I did choose to whip out VaM for this one. I went into the program and after some messing around, I flopped my little dolls together like this:

Now something really cool about VaM is that you can completely customize your models, and if you have the patience, I would definitely encourage you to do so! Obviously, you don't have to make picture perfect replicas of every single character you have, but as you can see here I have made a DU drow "decoy" to help me better understand some of his features when I draw him: he has a strong brow, a short nose, a square jawline - these are all going to look a very specific way from certain angles, and I might not always be sure of how to draw it right! So it's useful to have models that bear SOME semblance to the character so you can better understand how different viewpoints will affect their bone structure and mass.

Also thank fucking god for the elf-ear slider. Figuring out how to draw those shits from certain angles was a huge pain in the ass when I started drawing DnD races.

So, with the reference in hand, I go over the sketch again:

Now you may notice that I don't stick to the reference 100%. There's three reasons for this:

Posing on VaM is tedious as hell. You can get something incredibly natural looking and picture-perfect to reference from if you wish, but it's going to take you hours to do. So, for the most part I just slap guys together until the results are "close enough" and use that.

In my opinion, you should always aim to ENHANCE your reference material, not replicate it exactly!

While VaM is a PRETTY DANG GOOD source of anatomical reference, it isn't perfect. I often supplement it with further reference from real life resources or make tweaks based on my own knowledge where I catch it falling short (and, antithetical to what I just said, I sometimes fuck the anatomy up further on purpose if I think it looks better that way LOL it's all jazz baby).

Then lines, color, yada yada. I don't have a tutorial on that and I don't think I could make one, because my process is chaotic as hell, but I do at times use Virt-a-mate as loose reference for lighting too when coloring - waaaaayyyy less so however, because that process is even more tedious and I feel like I often get better results by just winging it. It is a feature of the program though, and I'm sure it would be helpful for someone who has a difficult time visualizing lights and shadows. I only started using this program a few months ago, so I happened to already have a pretty good understanding of that kind of thing and just don't personally feel like I get much out of that particular mechanic.

Here's a few other examples of pieces that I made reference for (WARNING: Suggestive)

Now, for the question many of you may want to ask:

"Can I trace this junk?"

And to that, I say: Buddy, you can do whatever the hell you want with the reference material you created.

However,

If your goal is to learn and improve your art, and to recreate realistic proportions and anatomy from memory, tracing won't help you.

Developing your own style, your muscle memory, and personal technique will all be hindered by choosing to trace instead of drawing from observation, so I would encourage against it. Hell - even when tracing is employed as a technique, it's usually by high-skill realism & concept artists who are looking to either cut some corners, save time, or just double-check their own proportions in order to improve further - if you try tracing as a beginner, you will most definitely find the result to still look stiff and "off".

So trust me, there is so much more to be gained from drawing from observation. Make note of tangents, compare proportions, use all the elements of the picture to dictate where and how things should go - it will be a far more rewarding experience.

Hopefully this has been helpful! VaM is a really cheap program (you get it on the guys' patreon for I think 8 dollars, just google it!) and it's definitely been worth my money as an artist since I found it. Learning to use it can be a little intimidating at first glance, but as I said above you only really need a day plus one or two tutorials to get a hang of the interface.

A fair warning though, IT IS A SOFTWARE MADE FOR VIRTUAL SEX/ADULT ANIMATION, so when looking it up expect to see some spicy content.

#Funfact THIS is the post that got me flagged last time so i'm really tempting fate right now LOL#ask#art#tutorial#resource

688 notes


Text

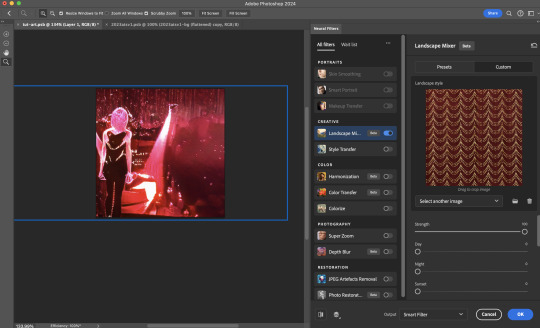

Neural Filters Tutorial for Gifmakers by @antoniosvivaldi

Hi everyone! In light of my blog’s 10th birthday, I’m delighted to reveal my highly anticipated gifmaking tutorial using Neural Filters - a very powerful collection of filters that really broadened my scope in gifmaking over the past 12 months.

Before I get into this tutorial, I want to thank @laurabenanti, @maines , @cobbbvanth, and @cal-kestis for their unconditional support over the course of my journey of investigating the Neural Filters & their valuable inputs on the rendering performance!

In this tutorial, I will outline what the Photoshop Neural Filters do and how I use them in my workflow - multiple examples will be provided for better clarity. Finally, I will talk about some known performance issues with the filters & some feasible workarounds.

Tutorial Structure:

Meet the Neural Filters: What they are and what they do

Why I use Neural Filters? How I use Neural Filters in my giffing workflow

Getting started: The giffing workflow in a nutshell and installing the Neural Filters

Applying Neural Filters onto your gif: Making use of the Neural Filters settings; with multiple examples

Testing your system: recommended if you’re using Neural Filters for the first time

Rendering performance: Common Neural Filters performance issues & workarounds

For quick reference, here are the examples that I will show in this tutorial:

Example 1: Image Enhancement | improving the image quality of gifs prepared from highly compressed video files

Example 2: Facial Enhancement | enhancing an individual's facial features

Example 3: Colour Manipulation | colourising B&W gifs for a colourful gifset

Example 4: Artistic effects | transforming landscapes & adding artistic effects onto your gifs

Example 5: Putting it all together | my usual giffing workflow using Neural Filters

What you need & need to know:

Software: Photoshop 2021 or later (recommended: 2023 or later)*

Hardware: 8GB of RAM; having a supported GPU is highly recommended*

Difficulty: Advanced (requires a lot of patience); knowledge in gifmaking and using video timeline assumed

Key concepts: Smart Layer / Smart Filters

Benchmarking your system: Neural Filters test files**

Supplementary materials: Tutorial Resources / Detailed findings on rendering gifs with Neural Filters + known issues***

*I primarily gif on an M2 Max MacBook Pro that's running Photoshop 2024, but I also have experience gifmaking on a few other Mac models from 2012 to 2023.

**Using Neural Filters can be resource intensive, so it’s helpful to run the test files yourself. I’ll outline some known performance issues with Neural Filters and workarounds later in the tutorial.

***This supplementary page contains additional Neural Filters benchmark tests and instructions, as well as more information on the rendering performance (for Apple Silicon-based devices) when subject to heavy Neural Filters gifmaking workflows

Tutorial under the cut. Like / Reblog this post if you find this tutorial helpful. Linking this post as an inspo link will also be greatly appreciated!

1. Meet the Neural Filters!

Neural Filters are powered by Adobe's machine learning engine known as Adobe Sensei. It is a non-destructive method to help streamline workflows that would've been difficult and/or tedious to do manually.

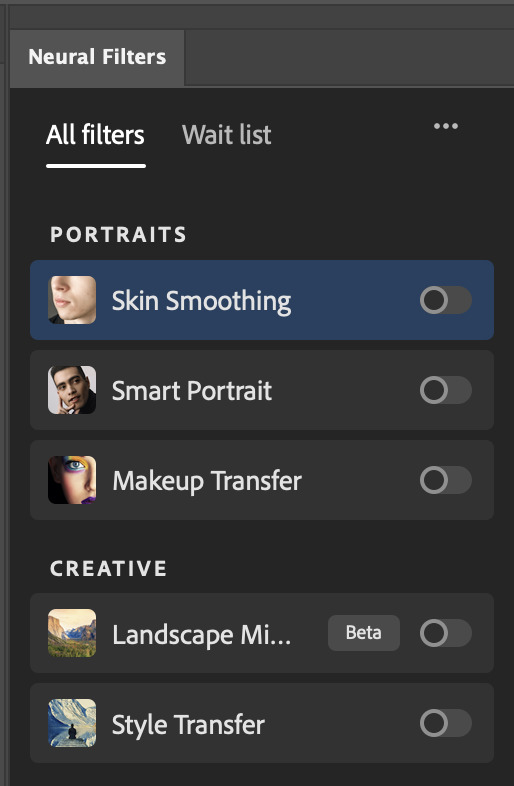

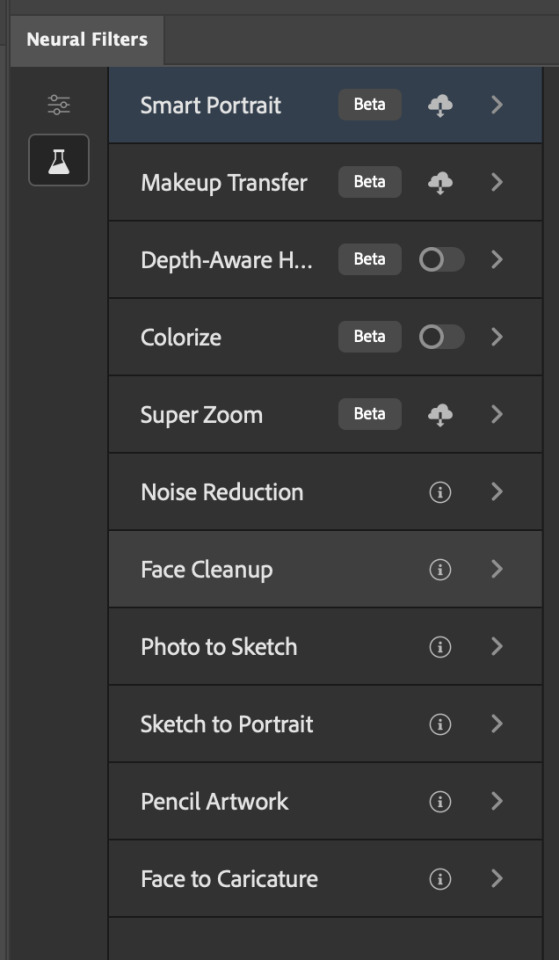

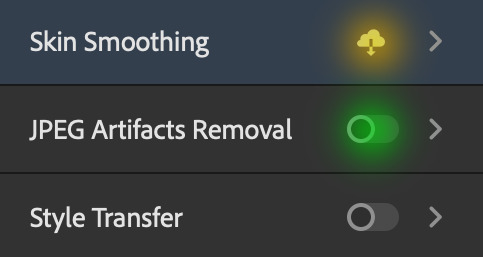

Here are the Neural Filters available in Photoshop 2024:

Skin Smoothing: Removes blemishes on the skin

Smart Portrait: This is a cloud-based filter that allows you to change the mood, facial age, hair, etc using the sliders+

Makeup Transfer: Applies the makeup (from a reference image) to the eyes & mouth area of your image

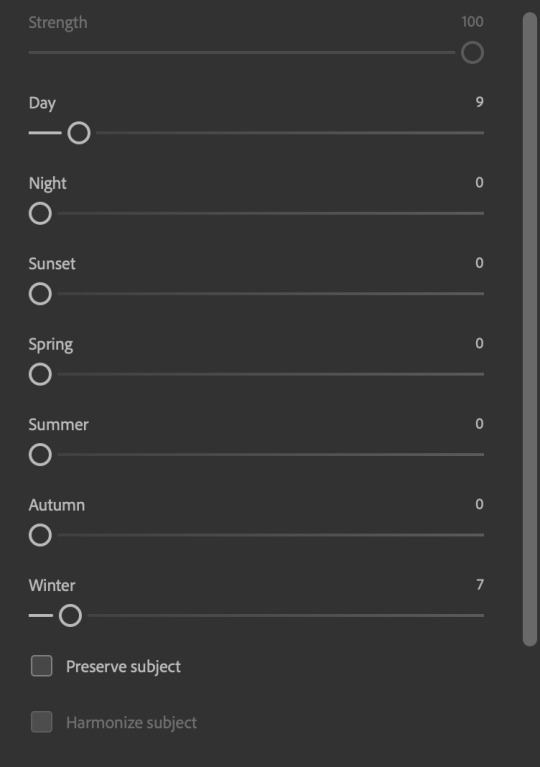

Landscape Mixer: Transforms the landscape of your image (e.g. seasons & time of the day, etc), based on the landscape features of a reference image

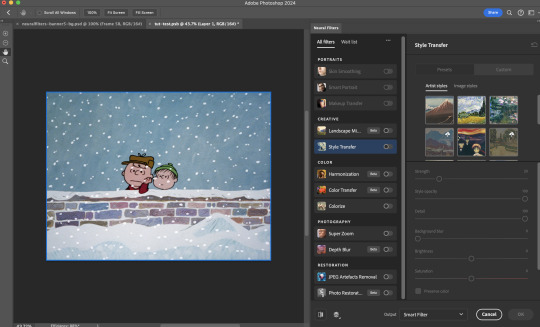

Style Transfer: Applies artistic styles e.g. texturings (from a reference image) onto your image

Harmonisation: Applies the colour balance of your image based on the lighting of the background image+

Colour Transfer: Applies the colour scheme (of a reference image) onto your image

Colourise: Adds colours onto a B&W image

Super Zoom: Zoom / crop an image without losing resolution+

Depth Blur: Blurs the background of the image

JPEG Artefacts Removal: Removes artefacts caused by JPEG compression

Photo Restoration: Enhances image quality & facial details

+These three filters aren't used in my giffing workflow. The cloud-based nature of Smart Portrait leads to disjointed looking frames. For Harmonisation, applying this on a gif causes Neural Filter timeout error. Finally, Super Zoom does not currently support output as a Smart Filter

If you're running Photoshop 2021 or an earlier version of Photoshop 2022, you will see a smaller selection of Neural Filters:

Things to be aware of:

You can apply up to six Neural Filters at the same time

Filters where you can use your own reference images: Makeup Transfer (portraits only), Landscape Mixer, Style Transfer (not available in Photoshop 2021), and Colour Transfer

Later iterations of Photoshop 2023 & newer: The first three default presets for Landscape Mixer and Colour Transfer are currently broken.

2. Why I use Neural Filters?

Here are my four main Neural Filters use cases in my gifmaking process. In each use case I'll list out the filters that I use:

Enhancing Image Quality:

Common wisdom is to find the highest quality video to gif from for a media release & avoid YouTube whenever possible. However for smaller / niche media (e.g. new & upcoming musical artists), prepping gifs from highly compressed YouTube videos is inevitable.

So how do I get around this? I have found Neural Filters pretty handy when it comes to both correcting issues from video compression & enhancing details in gifs prepared from these highly compressed video files.

Filters used: JPEG Artefacts Removal / Photo Restoration

Facial Enhancement:

When I prepare gifs from highly compressed videos, something I like to do is to enhance the facial features. This is again useful when I make gifsets from compressed videos & want to fill up my final panel with a close-up shot.

Filters used: Skin Smoothing / Makeup Transfer / Photo Restoration (Facial Enhancement slider)

Colour Manipulation:

Neural Filters is a powerful way to do advanced colour manipulation - whether I want to quickly transform the colour scheme of a gif or transform a B&W clip into something colourful.

Filters used: Colourise / Colour Transfer

Artistic Effects:

This is one of my favourite things to do with Neural Filters! I enjoy using the filters to create artistic effects by feeding textures that I've downloaded as reference images. I also enjoy using these filters to transform the overall atmosphere of my composite gifs. The gifsets where I've leveraged Neural Filters for artistic effects can be found under this tag on usergif.

Filters used: Landscape Mixer / Style Transfer / Depth Blur

How I use Neural Filters over different stages of my gifmaking workflow:

I want to outline how I use different Neural Filters throughout my gifmaking process. This can be roughly divided into two stages:

Stage I: Enhancement and/or Colourising | Takes place early in my gifmaking process. I process a large amount of component gifs by applying Neural Filters for enhancement purposes and adding some base colourings.++

Stage II: Artistic Effects & more Colour Manipulation | Takes place when I'm assembling my component gifs in the big PSD / PSB composition file that will be my final gif panel.

I will walk through this in more detail later in the tutorial.

++I personally like to keep the size of the component gifs in their original resolution (a mixture of 1080p & 4K), to get best possible results from the Neural Filters and have more flexibility later on in my workflow. I resize & sharpen these gifs after they're placed into my final PSD composition files in Tumblr dimensions.

3. Getting started

The essence is to output Neural Filters as a Smart Filter on the smart object when working with the Video Timeline interface. Your workflow will contain the following steps:

Prepare your gif

In the frame animation interface, set the frame delay to 0.03s and convert your gif to the Video Timeline

In the Video Timeline interface, go to Filter > Neural Filters and output to a Smart Filter

Flatten or render your gif (either approach is fine). To flatten your gif, play the "flatten" action from the gif prep action pack. To render your gif as a .mov file, go to File > Export > Render Video & use the following settings.

Setting up:

o.) To get started, prepare your gifs the usual way - whether you screencap or clip videos. You should see your prepared gif in the frame animation interface as follows:

Note: As mentioned earlier, I keep the gifs in their original resolution right now because working with a larger dimension document allows more flexibility later on in my workflow. I have also found that I get higher quality results working with more pixels. I eventually do my final sharpening & resizing when I fit all of my component gifs to a main PSD composition file (that's of Tumblr dimension).

i.) To use Smart Filters, convert your gif to a Smart Video Layer.

As an aside, I like to work with everything in 0.03s until I finish everything (then correct the frame delay to 0.05s when I upload my panels onto Tumblr).

For convenience, I use my own action pack to first set the frame delay to 0.03s (highlighted in yellow) and then convert to timeline (highlighted in red) to access the Video Timeline interface. To play an action, press the play button highlighted in green.

Once you've converted this gif to a Smart Video Layer, you'll see the Video Timeline interface as follows:

ii.) Select your gif (now as a Smart Layer) and go to Filter > Neural Filters
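As a quick aside on the two frame delays mentioned above (0.03s while working, 0.05s for the final upload), the arithmetic below shows what each delay means for playback speed. The function names and the 60-frame example are assumptions for illustration; the only real rule used is that playback rate is 1 divided by the delay per frame.

```typescript
// Frame delay arithmetic. Names and the 60-frame example are hypothetical.
function framesPerSecond(frameDelaySeconds: number): number {
  if (frameDelaySeconds <= 0) {
    throw new Error("Frame delay must be positive");
  }
  return 1 / frameDelaySeconds;
}

function durationSeconds(frameCount: number, frameDelaySeconds: number): number {
  return frameCount * frameDelaySeconds;
}

console.log(framesPerSecond(0.03).toFixed(1)); // 33.3 frames per second
console.log(framesPerSecond(0.05).toFixed(1)); // 20.0 frames per second

// The same 60 frames play for longer at the slower delay.
console.log(durationSeconds(60, 0.03).toFixed(2)); // 1.80 seconds
console.log(durationSeconds(60, 0.05).toFixed(2)); // 3.00 seconds
```

So a gif with the same frames runs noticeably slower at 0.05s per frame than at 0.03s.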

Installing Neural Filters:

Install the individual Neural Filters that you want to use. If the filter isn't installed, it will show a cloud symbol (highlighted in yellow). If the filter is already installed, it will show a toggle button (highlighted in green)

When you toggle this button, the Neural Filters preview window will look like this (where the toggle button next to the filter that you use turns blue)

4. Using Neural Filters

Once you have installed the Neural Filters that you want to use in your gif, you can toggle on a filter and play around with the sliders until you're satisfied. Here I'll walk through multiple concrete examples of how I use Neural Filters in my giffing process.

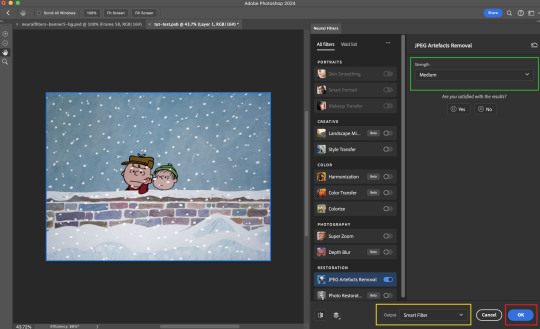

Example 1: Image enhancement | sample gifset

This is my typical Stage I Neural Filters gifmaking workflow. When giffing older or more niche media releases, my main concern is the video compression that leads to a lot of artefacts in the screencapped / video clipped gifs.

To fix the artefacts from compression, I go to Filter > Neural Filters, and toggle JPEG Artefacts Removal filter. Then I choose the strength of the filter (boxed in green), output this as a Smart Filter (boxed in yellow), and press OK (boxed in red).

Note: The filter has to be fully processed before you can press the OK button!

After applying the Neural Filters, you'll see "Neural Filters" under the Smart Filters property of the smart layer

Flatten / render your gif

Example 2: Facial enhancement | sample gifset

This is my routine use case during my Stage I Neural Filters gifmaking workflow. For musical artists (e.g. Maisie Peters), YouTube is often the only place where I'm able to find some videos to prepare gifs from. However even the highest resolution video available on YouTube is highly compressed.

Go to Filter > Neural Filters and toggle on Photo Restoration. If Photoshop recognises faces in the image, there will be a "Facial Enhancement" slider under the filter settings.

Play around with the Photo Enhancement & Facial Enhancement sliders. You can also expand the "Adjustment" menu to make additional adjustments, e.g. removing noise and reducing different types of artefacts.

Once you're happy with the results, press OK and then flatten / render your gif.

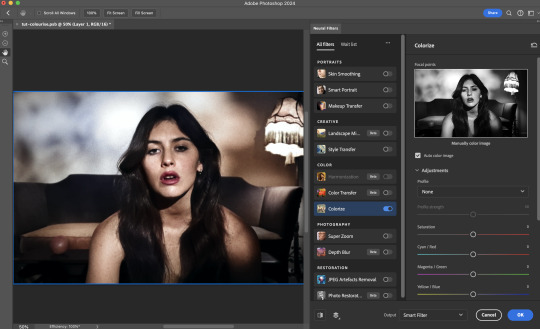

Example 3: Colour Manipulation | sample gifset

Want to make a colourful gifset but the source video is in B&W? This is where Colourise from Neural Filters comes in handy! This same colourising approach is also very helpful for colouring poorly lit scenes as detailed in this tutorial.

Here's a B&W gif that we want to colourise:

Highly recommended: add some adjustment layers onto the B&W gif to improve the contrast & depth. This will give you higher quality results when you colourise your gif.

Go to Filter > Neural Filters and toggle on Colourise.

Make sure "Auto colour image" is enabled.

Play around with further adjustments, e.g. colour balance, until you're satisfied, then press OK.

Important: When you colourise a gif, you need to double check that the resulting skin tone is accurate to real life. I personally go to Google Images and search up photoshoots of the individual / character that I'm giffing for quick reference.

Add additional adjustment layers until you're happy with the colouring of the skin tone.

Once you're happy with the additional adjustments, flatten / render your gif. And voila!

Note: For Colour Manipulation, I use Colourise in my Stage I workflow and Colour Transfer in my Stage II workflow to do other types of colour manipulations (e.g. transforming the colour scheme of the component gifs)

Example 4: Artistic Effects | sample gifset

This is where I use Neural Filters for the bulk of my Stage II workflow: the most enjoyable stage in my editing process!

Normally I would be working with my big composition files with multiple component gifs inside it. To begin the fun, drag a component gif (in PSD file) to the main PSD composition file.

Resize this gif in the composition file until you're happy with the placement

Duplicate this gif. Sharpen the bottom layer (highlighted in yellow), and then select the top layer (highlighted in green) & go to Filter > Neural Filters

I like to use Style Transfer and Landscape Mixer to create artistic effects from Neural Filters. In this particular example, I've chosen Landscape Mixer

Select a preset or feed a custom image to the filter (here I chose a texture that I have on my computer)

Play around with the different sliders e.g. time of the day / seasons

Important: uncheck "Harmonise Subject" & "Preserve Subject" - these two settings are known to cause performance issues when you render a multiframe smart object (e.g. for a gif)

Once you're happy with the artistic effect, press OK

To ensure you preserve the actual subject you want to gif (bc Preserve Subject is unchecked), add a layer mask onto the top layer (with Neural Filters) and mask out the facial region. You might need to play around with the Layer Mask Position keyframes or Rotoscope your subject in the process.

After you're happy with the masking, flatten / render this composition file and voila!

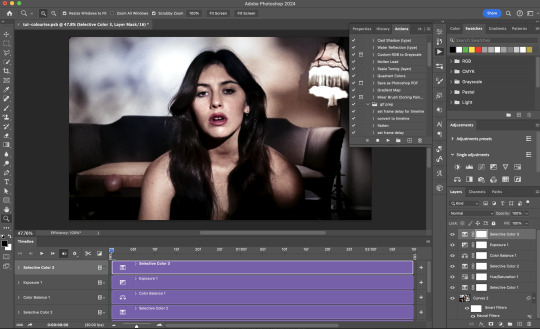

Example 5: Putting it all together | sample gifset

Let's recap on the Neural Filters gifmaking workflow and where Stage I and Stage II fit in my gifmaking process:

i. Preparing & enhancing the component gifs

Prepare all component gifs and convert them to smart layers

Stage I: Add base colourings & apply Photo Restoration / JPEG Artefacts Removal to enhance the gif's image quality

Flatten all of these component gifs and convert them back to Smart Video Layers (this process can take a lot of time)

Some of these enhanced gifs will be Rotoscoped so this is done before adding the gifs to the big PSD composition file

ii. Setting up the big PSD composition file

Make a separate PSD composition file (Ctrl / Cmd + N) that's of Tumblr dimension (e.g. 540px in width)

Drag all of the component gifs used into this PSD composition file

Enable Video Timeline and trim the work area

In the composition file, resize / move the component gifs until you're happy with the placement & sharpen these gifs if you haven't already done so

Duplicate the layers that you want to use Neural Filters on

iii. Working with Neural Filters in the PSD composition file

Stage II: Neural Filters to create artistic effects / more colour manipulations!

Mask the smart layers with Neural Filters to both preserve the subject and avoid colouring issues from the filters

Flatten / render the PSD composition file: the more component gifs in your composition file, the longer the exporting will take. (I prefer to render the composition file into a .mov clip to prevent overwriting a file that I've spent effort putting together.)

Note: In some of my layout gifsets (where I've heavily used Neural Filters in Stage II), the rendering time for the panel took more than 20 minutes. This is one of the rare instances where I was maxing out my computer's memory.

Useful things to take note of:

Important: If you're using Neural Filters for Colour Manipulation or Artistic Effects, you need to take a lot of care ensuring that the skin tone of nonwhite characters / individuals is accurately coloured

Use the Facial Enhancement slider from Photo Restoration in moderation: if you max out the slider value, you risk oversharpening your gif later on in your gifmaking workflow

You will get higher quality results from Neural Filters by working with larger image dimensions: This gives Neural Filters more pixels to work with. You also get better quality results by feeding higher resolution reference images to the Neural Filters.

Makeup Transfer is more stable when the person / character has minimal motion in your gif

You might get unexpected results from Landscape Mixer if you feed a reference image that doesn't feature a distinctive landscape. This is not always a bad thing: for instance, I have used this texture as a reference image for Landscape Mixer, to create the shimmery effects as seen in this gifset

5. Testing your system

If this is the first time you're applying Neural Filters directly onto a gif, it will be helpful to test out your system yourself. This will help:

Gauge the expected rendering time that you'll need to wait for your gif to export, given specific Neural Filters that you've used

Identify potential performance issues when you render the gif: this is important and will determine whether you will need to fully play back your gif before flattening / rendering the file.

Understand how your system's resources are being utilised: Inputs from Windows PC users & Mac users alike are welcome!

About the Neural Filters test files:

Contains six distinct files, each using different Neural Filters

Two sizes of test files: one copy in full HD (1080p) and another copy downsized to 540px

One folder containing the flattened / rendered test files

How to use the Neural Filters test files:

What you need:

Photoshop 2022 or newer (recommended: 2023 or later)

Install the following Neural Filters: Landscape Mixer / Style Transfer / Colour Transfer / Colourise / Photo Restoration / Depth Blur

Recommended for some Apple Silicon-based MacBook Pro models: Enable High Power Mode

How to use the test files:

For optimal performance, close all background apps

Open a test file

Flatten the test file into frames (load this action pack & play the “flatten” action)

Take note of the time it takes until you’re directed to the frame animation interface

Compare the rendered frames to the expected results in this folder: check that all of the frames look the same. If they don't, you will need to play back the test file in full before flattening the file.†

Re-run the test file without the Neural Filters and take note of how long it takes before you're directed to the frame animation interface

Recommended: Take note of how your system is utilised during the rendering process (more info here for MacOS users)

†This is a performance issue known as flickering that I will discuss in the next section. If you come across this, you'll have to play back a gif where you've used Neural Filters (on the video timeline) in full, prior to flattening / rendering it.
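The two timings from the steps above (with and without Neural Filters) can be turned into a rough overhead figure. The following is only a minimal sketch: the function name and the example timings are hypothetical and not part of the test files, and you would substitute the times you measured yourself.

```python
def neural_filter_overhead(with_filters_s: float, without_filters_s: float) -> dict:
    """Compare two render times (in seconds) measured by hand.

    with_filters_s:    time until the frame animation interface appears
                       when the test file still has its Neural Filters.
    without_filters_s: the same measurement after removing the filters.
    """
    if with_filters_s < 0 or without_filters_s <= 0:
        raise ValueError("render times must be positive")
    return {
        # Extra waiting time attributable to the filters.
        "extra_seconds": with_filters_s - without_filters_s,
        # How many times slower the filtered render is.
        "slowdown_factor": with_filters_s / without_filters_s,
    }


if __name__ == "__main__":
    # Hypothetical example timings, not real measurements.
    result = neural_filter_overhead(with_filters_s=95.0, without_filters_s=20.0)
    print(result)
```

Keeping one such record per test file makes it easier to see which filters dominate the rendering time on your system.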

Factors that could affect the rendering performance / time (more info):

The number of frames, dimension, and colour bit depth of your gif

If you use Neural Filters with facial recognition features, the rendering time will be affected by the number of characters / individuals in your gif

Most resource intensive filters (powered by largest machine learning models): Landscape Mixer / Photo Restoration (with Facial Enhancement) / JPEG Artefacts Removal

Least resource intensive filters (smallest machine learning models): Colour Transfer / Colourise

The number of Neural Filters that you apply at once / The number of component gifs with Neural Filters in your PSD file

Your system: system memory, the GPU, and the architecture of the system's CPU+++

+++ Rendering a gif with Neural Filters demands a lot of system memory & GPU horsepower. Rendering will be faster & more reliable on newer computers, as these systems have CPU & GPU with more modern instruction sets that are geared towards machine learning-based tasks.

Additionally, the unified memory architecture of Apple Silicon M-series chips is found to be quite efficient at processing Neural Filters.

6. Performance issues & workarounds

Common Performance issues:

I will discuss several common issues related to rendering or exporting a multi-frame smart object (e.g. your composite gif) that uses Neural Filters below. These are commonly caused by insufficient system memory and/or GPU resources.

Flickering frames: in the flattened / rendered file, Neural Filters aren't applied to some of the frames+-+

Scrambled frames: the frames in the flattened / rendered file aren't in order

Neural Filters exceeded the timeout limit error: this is normally a software related issue

Long export / rendering time: long rendering time is expected in heavy workflows

Laggy Photoshop / system interface: having to wait quite a long time to preview the next frame on the timeline

Issues with Landscape Mixer: Using the filter gives ill-defined results (Common in older systems)--

Workarounds:

Workarounds that could reduce unreliable rendering performance & long rendering time:

Close other apps running in the background

Work with smaller colour bit depth (i.e. 8-bit rather than 16-bit)

Downsize your gif before converting to the video timeline-+-

Try to keep the number of frames as low as possible

Avoid stacking multiple Neural Filters at once. Try applying & rendering the filters that you want one by one

Specific workarounds for specific issues:

How to resolve flickering frames: If you come across flickering, you will need to play back your gif on the video timeline in full to find the frames where the filter isn't applied. You will need to select all of the frames to allow Photoshop to reprocess these, before you render your gif.+-+

What to do if you come across Neural Filters timeout error? This is caused by several incompatible Neural Filters e.g. Harmonisation (both the filter itself and as a setting in Landscape Mixer), Scratch Reduction in Photo Restoration, and trying to stack multiple Neural Filters with facial recognition features.

If the timeout error is caused by stacking multiple filters, a feasible workaround is to apply the Neural Filters that you want to use one by one over multiple rendering sessions, rather than all of them in one go.

+-+This is a very common issue for Apple Silicon-based Macs. Flickering happens when a gif with Neural Filters is rendered without being previously played back in the timeline.

This issue is likely related to the memory bandwidth & the GPU cores of the chips, because not all Apple Silicon-based Macs exhibit this behaviour (i.e. devices equipped with Max / Ultra M-series chips are mostly unaffected).

-- As mentioned in the supplementary page, Landscape Mixer requires a lot of GPU horsepower to be fully rendered. For older systems (pre-2017 builds), there are no workarounds other than to avoid using this filter.

-+- For smaller dimensions, the size of the machine learning models powering the filters plays an outsized role in the rendering time (i.e. marginal reduction in rendering time when downsizing 1080p file to Tumblr dimensions). If you use filters powered by larger models e.g. Landscape Mixer and Photo Restoration, you will need to be very patient when exporting your gif.
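For the flickering issue described above (frames where Neural Filters weren't applied), one possible way to spot affected frames without eyeballing every one is to compare the filtered render against a render of the same frames without the filters: a frame that is nearly identical in both was probably skipped. This is only a sketch under stated assumptions. It is not a Photoshop feature; the function names and the threshold are hypothetical; frames are represented here as flat lists of pixel values, and loading them from your exported files is left out.

```python
from typing import List, Sequence


def mean_abs_diff(a: Sequence[int], b: Sequence[int]) -> float:
    """Average absolute per-pixel difference between two frames."""
    if len(a) != len(b) or not a:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def find_unfiltered_frames(
    filtered: Sequence[Sequence[int]],
    baseline: Sequence[Sequence[int]],
    threshold: float = 1.0,  # hypothetical cut-off; tune for your filter
) -> List[int]:
    """Return indices of frames that look the same with and without filters."""
    if len(filtered) != len(baseline):
        raise ValueError("both renders must have the same number of frames")
    return [
        i
        for i, (f, b) in enumerate(zip(filtered, baseline))
        if mean_abs_diff(f, b) < threshold
    ]


if __name__ == "__main__":
    # Toy data: three 4-pixel frames; the middle frame was left unfiltered.
    baseline = [[10, 20, 30, 40]] * 3
    filtered = [[60, 70, 80, 90], [10, 20, 30, 40], [55, 72, 81, 88]]
    print(find_unfiltered_frames(filtered, baseline))
```

A subtle filter may change pixels only slightly, so a low threshold can miss skipped frames or flag correctly filtered ones; playing the gif back in full on the timeline remains the reliable check.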

7. More useful resources on using Neural Filters

Creating animations with Neural Filters effects | Max Novak

Using Neural Filters to colour correct by @edteachs

I hope this is helpful! If you have any questions or need any help related to the tutorial, feel free to send me an ask 💖

#photoshop tutorial#gif tutorial#dearindies#usernik#useryoshi#usershreyu#userisaiah#userroza#userrobin#userraffa#usercats#userriel#useralien#userjoeys#usertj#alielook#swearphil#*#my resources#my tutorials

533 notes

Text

ever wonder why spotify/discord/teams desktop apps kind of suck?

i don't do a lot of long form posts but. I realized that so many people aren't aware that a lot of the enshittification of using computers in the past decade or so has a lot to do with embedded webapps becoming so frequently used instead of creating native programs. and boy do i have some thoughts about this.

for those who are not blessed/cursed with computers knowledge

Basically most (graphical) programs used to be native programs (ever since we started widely using a graphical interface instead of just a text-based terminal). these are apps that feel like when you open up the settings on your computer, and one of the factors that make windows and mac programs look different (bc they use a different design language!) this was the standard for a long long time - your emails were served to you in a special email application like thunderbird or outlook, your documents were processed in something like microsoft word (again. On your own computer!). same goes for calendars, calculators, spreadsheets, and a whole bunch more - crucially, your computer didn't depend on the internet to do basic things, but being connected to the web was very much an appreciated luxury!

that leads us to the eventual rise of webapps that we are all so painfully familiar with today - gmail dot com/outlook, google docs, google/microsoft calendar, and so on. as html/css/js technology grew beyond just displaying text images and such, it became clear that it could be a lot more convenient to just run programs on some server somewhere, and serve the front end on a web interface for anyone to use. this is really very convenient!!!! it Also means a huge concentration of power (notice how suddenly google is one company providing you the SERVICE) - you're renting instead of owning. which means google is your landlord - the services you use every day are first and foremost means of hitting the year over year profit quota. its a pretty sweet deal to have a free email account in exchange for ads! email accounts used to be paid (simply because the provider had to store your emails somewhere. which takes up storage space which is physical hard drives), but now the standard as of hotmail/yahoo/gmail is to just provide a free service and shove ads in as much as you need to.

webapps can do a lot of things, but they didn't immediately replace software like skype or code editors or music players - software that requires more heavy system interaction or snappy audio/visual responses. in 2013, the electron framework came out - a way of packaging up a bundle of html/css/js into a neat little crossplatform application that could be downloaded and run like any other native application. there were significant upsides to this - web developers could suddenly use their webapp skills to build desktop applications that ran on any computer as long as it could support chrome*! the first application to be built on electron was the late code editor atom (rest in peace), but soon a whole lot of companies took note! some notable contemporary applications that use electron, or a similar webapp-embedded-in-a-little-chrome as a base are:

microsoft teams

notion

vscode

discord

spotify

anyone! who has paid even a little bit of attention to their computer - especially when using older/budget computers - knows just how much having chrome open can slow down your computer (firefox as well to a lesser extent. because its just built better <3)

whenever you have one of these programs open on your computer, it's running in a one-tab chrome browser. there is a whole extra chrome open just to run your discord. if you have discord, spotify, and notion open all at once, along with chrome itself, that's four chromes. needless to say, this uses a LOT of resources to deliver applications that are often much less polished and less integrated with the rest of the operating system. it also means that if you have no internet connection, sometimes the apps straight up do not work, since many of them rely heavily on being connected to their servers, where the heavy lifting is done.

taking this idea to the very furthest is the concept of chromebooks - dinky little laptops that were created to only run a web browser and webapps - simply a vessel to access the google dot com mothership. they have gotten better at running offline android/linux applications, but often the $200 chromebooks that are bought in bulk have almost no processing power of their own - why would you even need it? you have everything you could possibly need in the warm embrace of google!

all in all the average person in the modern age, using computers in the mainstream way, owns very little of their means of computing.

i started this post as a rant about the electron/webapp framework because i think that it sucks and it displaces proper programs. and now ive swiveled into getting pissed off at software services which is honestly the core issue. and i think things can be better!!!!!!!!!!! but to think about better computing culture one has to imagine living outside of capitalism.

i'm not the one to try to explain permacomputing specifically because there's already wonderful literature ^ but if anything here interested you, read this!!!!!!!!!! there is a beautiful world where computers live for decades and do less but do it well. and you just own it. come frolic with me Okay ? :]

*when i say chrome i technically mean chromium. but functionally it's the same thing

462 notes

Text

I'm doing a periodic maintenance task for work, where I have to rebuild the container environment that our materials science computations get run in to update the versions of some software that we use, and I'm struck by just how much scientific knowledge is packed into this Singularity image file that represents the container.

It takes like an hour to compile on modern hardware, from an Ubuntu (ha! the built in spell check in Ubuntu insists on capitalizing itself) base image with dozens of computational materials science simulation packages and supporting dependencies. These all have to be downloaded, compiled, linked, and installed in such a way that they can mutually interface, and take data and return results in the formats we use.

Once the whole thing is built, the final image is something like a gigabyte in size--5x that if we also install CUDA. I know that 1 GB doesn't sound like that much data these days, some internet connections can download that in less than a second, but think about it:

This isn't a movie or an image or a song. This archive contains almost no actual data. That gigabyte of space is pretty much entirely code instead; much of it compiled, executable code at that.

A gigabyte of materials science simulation code, a billion characters worth of instructions, specifying the very best established practices for modeling the behavior of materials at the atomic level.
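To put the gigabyte figure in perspective, here is a small back-of-the-envelope sketch. It assumes a decimal gigabyte (10^9 bytes, which matches "a billion characters" at one byte per character) and a few illustrative link speeds that are my own assumptions, not measurements from the post.

```python
GIGABYTE = 10**9  # bytes, decimal definition (assumption)


def download_seconds(size_bytes: float, link_bits_per_second: float) -> float:
    """Idealised transfer time: 8 bits per byte, no protocol overhead."""
    if link_bits_per_second <= 0:
        raise ValueError("link speed must be positive")
    return size_bytes * 8 / link_bits_per_second


if __name__ == "__main__":
    # Illustrative link speeds in bits per second (assumptions).
    links = {"100 Mbit/s": 100e6, "1 Gbit/s": 1e9, "10 Gbit/s": 10e9}
    for label, speed in links.items():
        print(f"{label}: {download_seconds(GIGABYTE, speed):.1f} s for 1 GB")
    # The same image with CUDA installed is roughly 5x larger per the post.
    print(f"10 Gbit/s: {download_seconds(5 * GIGABYTE, 10e9):.1f} s for 5 GB")
```

Under these assumptions a sub-second download of 1 GB needs a link faster than 8 Gbit/s, so the "less than a second" case applies only to the fastest connections.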

I know the digital age is old news, but I can't get over that level of cumulative effort. It represents tens of thousands of person-months of effort collectively, but I'm just sitting here watching my computer assemble it from a recipe that's short enough for me to read and sensibly edit, and distill that down into a finely-tuned piece of precision apparatus that fits on a flash drive.

And that isn't even the important part of our research! That's just all the shit we need installed on the system as background dependencies to be able to run our custom simulation code on top of that! It's so complex that at this point, it's easier to run an entire (limited) virtual machine with our stuff installed in it than to try and convince every supercomputer cluster we work with to install every package separately and keep up with updates.

In case you're wondering what a day in the life of a computational materials physicist looks like: trying to do upgrades on incredibly complex machinery that you cannot touch or see. At least this time it doesn't also have to stay running while I do it...

#materials science#computational materials science#computer coding#physics#software#work stuff#computational physics#materials physics

57 notes

Text

https://aurosks.com/why-do-you-need-a-knowledge-management-system/

Discover the importance of a Knowledge Management System (KMS) in preventing knowledge loss and boosting productivity. This page from Auros Knowledge Systems outlines how a KMS improves time efficiency, reduces recurring errors, enhances decision-making, fosters better communication, accelerates innovation, and aids quick problem-solving. By organizing and managing documentation effectively, systems like Auros help mitigate the significant costs of poor knowledge management and enhance overall productivity. Learn more at Auros Knowledge Systems!

#knowledge based engineering#engineering knowledge management#manufacturing project management software#software for knowledge management#enterprise knowledge management software#manufacturing engineering

0 notes

Text

very sick of what im calling Tourist Opinions on image generation that SOUND nuanced and actually educated bc its not immediately giving into the Total Hysteria about AI, but that are actually just as completely shallow and uneducated as any other. like this is something you only say if you have Zero knowledge of imagegen outside of chatgpt (i mean even midjourney lets you easily turn off their automatic style filter!). this is like making sweeping statements about the usefulness of photo editing software based on your knowledge of faceapp. like genuinely embarrassing to say this and embarrassing people keep acting like this kind of opinion is remotely worth listening to. 🍅 🍅 🍅 🍅

49 notes

Note

Hi! Hello! I'm not sure if I can make a request, but if I can here's my request!

Can you do an LED mask reader who has a workshop underneath the base that the 141 doesn't know about (except Price, he approved it he just didn't tell the others, he didn't tell Shepherd too)

And when someone breaks something (like a gadget) they tell them to come to their workshop so they can fix it

It's okay if you don't do this! I just really like the idea :)

YES YES YES PLS THIS IS SO CUTE!! (Also PLEASE don't be afraid to invade my askbox, it's always open for brainrot, requests and the such~) Unfortunately I couldn't really incorporate the mask into this, just reader being a lil gremlin I hope that's okay 😭

The base has bunkers in case of an emergency and evacuation, but there are some passages and dead-ends that have become completely neglected. Price doesn't know how the hell you caught wind of those abandoned rooms but with his authority combined with Laswell's, they manage to allocate a space for you without the knowledge of any stuffy generals like Shepherd.

While it takes some months until anyone else in the 141 is invited to your underground workshop, they do know something is up. One minute you're around and then the next you've disappeared and are unreachable (the first few weeks when you cleaned up the bunkers there was absolutely no signal underground). However, they had enough faith in you, and Price's lack of concern was signal enough for them to calm down.

It was only when Soap came back from a mission, groaning in despair at his battered hardware, that this changed. He's normally a clean demolitions expert, but a mission going south quicker than he could blink meant that his typical tools had succumbed to the explosions he set off. Unable to say no to Johnny's pout as he looked around at everyone like a kicked puppy, you eventually give him a reassuring pat on the back.

"See me downstairs, I'll fix it."

... what?

Johnny - as well as Gaz and Ghost who watched the exchange - just stare at you silently as you walk away. Downstairs? You mean the evacuation tunnels that are so run down and poorly maintained they're probably more of a death trap than whatever could be up above? But sure enough, you walk in the direction of one of the known entrances to the bunkers and they hastily chase after you (Price also following a little behind because he just knows this is going to be entertaining).

When they find you downstairs, even Price is in awe of what you've done with the place. It's filled with various forms of high-end tech. An impressive blend of both software running automatically on clean screens and gritty hardware that's sprawled across various workbenches and occasionally forgotten on the ground. There's only a singular hanging light at the center of the ceiling, but with a fresh bulb and the ambient light of all your other technology, the place is lit more than enough.

"Bloody hell..." Kyle pulls away from the rest of the 141 and joins you, his eyes following the curves and dips of a nearby piece of machinery he has never seen before but the general shape has him half convinced it's a bloody bomb.

"Like what you see?" You turn to the rest of the task force. You can't stop yourself from straightening your back in pride as the boys were clearly in awe of your handiwork.

"You were hiding this from us?" Simon asks. His voice always has a bite but you could tell that he was just stupefied, his question not just directed to you as he shoots a look to Price who stifles a smug smirk.

"We had some spare space," Price explains. "Thought it could use the renovation."

"Renovation? You rebuilt this from the ground up," Johnny exclaims, taking in the room as if it was a hidden hoard of treasures.

"Say, you'd let us pay you a visit down here, yeah?" Kyle turns back to you, eyes gleaming. The rest of the task force join in their own way. Johnny's nodding enthusiastically, John cocks an eyebrow at you, and even Simon tilts his head in curiosity, waiting for your next words.

"Hm..." you look away, bringing a finger to your chin and tapping it in contemplation. Eventually you let out a huff as you snatch Johnny's broken gear from his hands and start shooing them out. "I'll have to think about it. I'll get back to you in five to seven business days."

Johnny starts animatedly protesting but lets himself be pushed by you out of the door. Kyle laughs while Price hushes them all. Below all the commotion was an underlying understanding and agreement. You don't even need to say it aloud but they'll all certainly be crashing at your underground workshop and they were more than welcome to. In truth, as much as you loved having your private workshop, the only thing that could make it better was entrusting it to the dearest people in your life.

Call of Duty Masterlist

#call of duty x reader#cod x reader#task force 141 x reader#call of duty#john price x reader#captain price x reader#ghost x reader#simon riley x reader#soap x reader#john mactavish x reader#gaz x reader#kyle garrick x reader#/*avery actually writes*/#/*avery checks the mailbox*/

783 notes
