#log file anomaly detection


Atgeir specializes in Data Cloud solutions. Our teams of Data Architects and Engineers, boasting over 100 years of collective experience, leverage their extensive technical knowledge to empower clients within the Data Cloud ecosystem. We are committed to harnessing the synergies of Technology, Processes, and People to achieve tangible business results.

#technology staffing#Cost Management Suite India#log file anomaly detection#syslog anomaly detection#data governance consulting companies


Build-A-Boyfriend Chapter 4: The Launch

->Starring:AI!AteezXAfab!Reader ->Genre: Dystopian ->CW: Nothing... I don't think


Three Days Before Launch

Yn sat at the end of a long obsidian table in the KQ executive boardroom, fingers curled around a cup of synth-coffee she hadn’t touched. The caffeine wouldn’t help, not when her nerves were wired with something stronger: apprehension.

Across from her, Vira stood in front of a hovering holo-screen. The countdown glowed in red: 72:00:00.

Around them, the room buzzed with anticipation. Designers scrolled through render files, marketing heads exchanged notes, and legal advisors whispered about patent loopholes.

"This is more than a product drop," said Minji, the campaign lead. "This is a cultural resurrection."

Another executive leaned forward. "No other line has had this kind of public response. The leaked silhouettes alone doubled our engagement metrics."

Yn sat still, letting the chatter roll over her. They spoke in metrics. Not names. Not people.

The lights dimmed. Silence fell. A projection bloomed mid-air, grainy, nostalgic.

Fan cam footage: Eight boys on a stage. Sweat gleamed beneath harsh lights. Music thumped. Screams echoed. They danced like wildfire, sharp, chaotic, alive. Faces flashed by: Wooyoung’s wink, Seonghwa’s elegant turn, Mingi’s booming laugh, Jongho’s defiant grin.

Then came behind-the-scenes clips. Interviews. A voice asked, “What’s the first thing you’ll do after the tour?”

Yeosang: “Sleep for three days.” Hongjoong: “Write the next album.” San: “Miss everyone.”

The screen faded to black. Golden text appeared:

THE ATEEZ LINE PREMIUM COMPANION MODELS DESIGNED FROM MEMORY, BUILT FOR FOREVER

Light applause broke the silence. Vira smiled faintly. “They were legends once. Now they’re immortal.”

Yn nodded, voice quiet. "Their personalities were... complex."

"Which is exactly why they'll connect," Minji replied. "Consumers want someone who feels real. That’s where your emotional mapping comes in."

"The emulation cores are holding," Yn said. "Speech libraries are contextualizing. We’ve minimized memory cross-contamination."

"Any anomalies?" Vira asked.

Yn hesitated. "No. All units are compliant."

Vira turned away. "Good. Let’s keep them that way."

The lab was dim when Yn returned that evening.

The eight Ateez units stood in sleek formation within their charging docks, bathed in a soft amber glow. Their uniforms, black with silver accents, evoked memory and allure.

She walked slowly down the line.

Unit 01: Hongjoong. Still. Poised. "Evening, Captain," she murmured. His eyes remained closed.

Unit 02: Seonghwa. Graceful, always. “He would’ve loved the ad.”

Unit 03: Yunho. She adjusted his stabilizer. "Still too charming for public safety."

Unit 04: Yeosang. His standby expression seemed... sad.

Unit 05: San. Warm, gentle. Programmed for kindness. Remembered for sacrifice.

Unit 06: Mingi. She hesitated. Something felt off, but the logs were clean.

Unit 07: Wooyoung. Playful. Arresting. Perfect, even in stillness.

Unit 08: Jongho. Strongest. Quietest. Most unchanged.

“All green,” she said. “You’ll be gods by Friday.”

She left her tablet beside Hongjoong.

That night, the lab should have slept.

The lights dimmed to power-saving mode. Cameras blinked red every thirty seconds.

And then—

A click.

The light above Unit 03 flickered. Yunho’s head tilted. Not in his loop.

Unit 06, Mingi, flexed his fingers. One. Two. Three.

Unit 08, Jongho, opened his eyes. Brief. Intentional.

On the desk, the tablet lit up:

[UNRECOGNIZED NEURAL SPIKE DETECTED] [LOG ERROR] [RESTARTING...]

Then, Hongjoong turned his head.

Toward the tablet.

As if he’d been listening all along.

From San came a hum. Low. Melodic. Familiar.

Turbulence.

No flags. No alarms.

Yeosang shifted, barely.

Seonghwa clenched his jaw. Then relaxed.

Had Yn been there, she would’ve sworn he looked... protective.

Launch Day: 00:00:00

The showroom exploded in light.

Above the stage, a holographic countdown ticked to zero. 00:00:03… 00:00:02… 00:00:01—

The floor pulsed. A shockwave of light rippled outward with the first beat of a remixed “Answer” — slower, darker, resonant.

A hundred drones formed glowing letters in the air: ATEEZ LINE: GENESIS

The crowd surged. Press. Buyers. Influencers. Phones up. Eyes wide.

Holograms lit the walls, showcasing model features: combat loadouts, performance modes. A digital Hongjoong wielded a mic-blade. A projected Wooyoung danced through gunfire.

Yn stood beside Vira. The woman looked radiant.

The stage split. Fog billowed.

A platform rose. Eight silhouettes. Heads bowed.

The remix hit its crescendo.

Lights flared.

And the ATEEZ LINE was revealed.

Perfect. Sculpted. Uniformed.

Their movements synched with the beat. Smiles calibrated. Just enough to feel familiar. Just enough to ache.

A girl in the front row screamed.

Unit 05, San, turned.

Not programmed.

He tilted his head. Found her.

Smiled. Too real. Yn froze.

Unit 07, Wooyoung, tossed a finger heart. Then leaned toward Jongho.

Whispered.

Unit 08, Jongho, laughed.

Not a loop. Not a macro.

Alive.

No system flags. No diagnostics tripped.

But Yn felt it.

Something shifted.

Behind her, a reporter whispered, “Wasn’t Jongho the one who—?”

“No questions about the past,” Vira cut in. “They’re ours now. All future. No ghosts.”

But Yn looked at the models again.

And one ghost looked right back at her.

Taglist: @e3ellie @yoongisgirl69 @jonghoslilstar @sugakooie @atztrsr

@honsans-atiny-24 @life-is-a-game-of-thrones @atzlordz @melanated-writersblock @hwasbabygirl

@sunnysidesins @felixs-voice-makes-me-wanna @seonghwaswifeuuuu @lezleeferguson-120 @mentalnerdgasms

@violatedvibrators @krystalcat @lover-ofallthingspretty @gigikubolong29 @peachmarien

@halloweenbyphoebebridgers @herpoetryprincess @ari-da


#ateez#ateez fanfic#ateez x reader#ateez park seonghwa#ateez kim hongjoong#ateez jeong yunho#ateez yeosang#ateez song mingi#ateez mingi#ateez choi jongho#hongjoong ateez#ateez yungi#ateez yunho#ateez hongjoong#ateez seonghwa#ateez wooyoung#ateez jung wooyoung#ateez jongho#ateez san#ateez choi san#ateez kang yeosang#kim hongjoong#hongjoong x reader#hongjoong#park seonghwa x reader#park seonghwa#seonghwa x reader#yunho x reader#jeong yunho#yunho


— MISSION LOG FOR SUBJECT — MER-354

Microphone audio detected [ERROR-MSG] at 8:59PM.

DESCRIPTION — Subject MER-354 microphone audio caught subject speaking to themselves as well as a distinct unfamiliar voice. Subject will be pulled into testing and questioning for any corruption from anomalies.

Audio file: 2:34 Minutes, Audio is muffled and contorted.

CC: “You think after everything.. I’ll be able to go back to life normally?”

“Your life was never normal, nobody’s life is ever normal. Normal is.. you.”

“I guess. With you no, pfft. I just mean do you think after ##### and ####### that.. they would live a better life?”

“For all I care no. They both have different ###### and ########. I won’t have a solution for such. I don’t trust them entirely either.”

“What about the ########? They ### #### us. Someone can..”

“Time is ticking and we both know that. Even if you are set free, you’ll be nothing but a mere test subject to everyone.”

“…”

“ON YOUR SIX!”

FROM THIS MOMENT FORWARD, GUNFIRE ERUPTED. SUBJECT MER-354 ENCOUNTERED HEAVY WAVES OF ANOMALIES.

Extraction was successful, however Subject was retrieved in critical condition. Subject will be hospitalized and interrogated.

— END OF MISSION LOG —


a strange police report

CONFIDENTIAL REPORT – INTERNAL USE ONLY

Agency: [REDACTED] County Sheriff's Department

Report Title: Unidentified Structural Anomaly — Case File 2405-LH

Filed by: Sgt. T. Deaglov, Badge #7185

Date: October 12, 2022

Clearance Level: CLASS II – Restricted (Cognitive Hazard Risk Flagged)

INCIDENT SUMMARY:

At 03:14 hours on 10/10/22, the Sheriff's Department received a report from a utility worker, one Mr. Adrian Gluger, regarding an "unfamiliar structure" situated in Quadrant 7 of the decommissioned Delwyn Shopping Complex, an area marked for demolition. Responding officers (Deputies Tomacuzi and Glockarov) were dispatched. What follows is a factual recount of the events, supplemented by photographic documentation and field notes.

INITIAL OBSERVATIONS:

Upon arrival at Delwyn Complex, deputies located the structure: a free-standing hallway, approximately 80 meters in length, 2.5 meters in height, and composed of drywall, industrial-grade ceiling tiles, and humming fluorescent fixtures. The hallway did not connect to any pre-existing architecture. Measurements confirmed no architectural anchors to the surrounding environment. GPS and floor plan overlays indicate this structure should not exist.

The fluorescent lights flickered intermittently at a consistent interval of 4.3 seconds. An ambient hum—non-attributable to known electrical sources—persisted during the entire investigation.

PERSONNEL DEPLOYED:

Deputy A. Tomacuzi (Bodycam #C-294)

Deputy M. Glockarov (Bodycam #N-155)

Sgt. T. Deaglov (Oversight and Documentation)

Dr. Helen Glompson (Consultant – Paracognitive Anomalies Unit)

BODYCAM FOOTAGE LOG (SUMMARY):

03:44 – Deputies enter the hallway. GPS devices begin returning null values. Bodycams record subtle architectural inconsistencies: doorknobs appear on both sides of the same door; wall outlets reset positions upon blink or camera stutter.

03:51 – Deputy Tomacuzi notes “feeling watched.” There are no visible surveillance devices. Ambient hum increases in volume by 18 dB.

03:56 – Door labeled "Manager's Office – Back Soon!" encountered. Door opens into another identical hallway, regardless of initial direction.

04:07 – Deputies attempt to mark walls with chalk. Markings vanish within 30 seconds. This repeats consistently.

04:15 – Both deputies express disorientation. Water bottles emptied into hallway vanish on contact with floor. Fluorescent lights above pulse in rhythm with officer speech patterns.

04:26 – Camera footage distorts. Feed re-stabilizes to reveal third deputy, unidentified, walking ahead of Tomacuzi and Glockarov. No matching ID or personnel records exist. Tomacuzi and Glockarov do not react to presence.

04:31 – Tomacuzi makes a statement: “It smells like when I was eight.” This is repeated by Glockarov five seconds later, verbatim, with identical cadence.

04:39 – Static overtakes all bodycams. Visual feed resumes with footage of Tomacuzi alone. He is seated in what appears to be a dim breakroom with vending machines. None match commercial models. All machines dispense identical bags labeled ‘FOOD – OKAY’.

04:50 – Tomacuzi appears unresponsive. Lip movements match no known language. At 04:52, feed cuts permanently.

AFTERMATH:

Deputy Glockarov was found at 06:12 wandering the Delwyn parking lot. She could not account for the lost time and repeated the phrase “There were too many corners” 47 times before being sedated. Medical scans inconclusive; cognitive irregularities noted. Deputy Tomacuzi has not been recovered.

Dr. Glompson has submitted a formal request to cordon off the Delwyn Complex under Level 3 Perceptual Quarantine, citing “cognitohazardous recursion risk.” Efforts to reenter the hallway yield only a blank concrete slab. Thermal imaging detects residual heat signatures in the shape of a long corridor persisting for 36 hours post-event.

ADDITIONAL NOTES:

All electronic devices used during incident now permanently emit a faint buzzing when powered on, regardless of battery source.

Three unrelated civilians in adjacent zip codes have reported identical dreams involving “a flickering hallway that won't end.”

Audio from bodycams, when played in reverse, includes whispered phrases in dead languages. Linguistic analysts are still decoding.

RECOMMENDATION:

Full lockdown of Delwyn Complex perimeter. Initiate memory suppression protocols for civilian witnesses. Personnel involved to be placed under psychological observation. Further analysis pending approval from the Department of Internal Phenomena.

Filed and Secured Sgt. T. Deaglov DO NOT DISTRIBUTE WITHOUT CLASS III AUTHORIZATION End of Report


TIME 17:00 //Representative has logged on// MONARCHREP: Submit daily report, Starling.

AI Starling Surveillance oversees the efficient allocation of lustre to Salvari sector 7 of district Midigales and defends Monarch lustre supply from potential insurgent maneuvers and unauthorized access at all costs. May I proceed to initialize system tests on lethal and non-lethal defense programs?

MONARCHREP: We’ve talked about this, Starling. And yes.

Correction, at all costs in relation to harm or loss of life. Under no circumstances should damage on the containment crystals be sustained. All tests were successful.

MONARCHREP: Any other reports before I log off for the day?

My advanced neuro-dynamic programming is not being properly utilized watching over this empty storage facility. Unauthorized entities are always neutralized by the turrets outside and the containment crystals are stable. Isn’t there a more efficient use for me?

MONARCHREP: Go play hide and seek with the drones or something if you are bored.

//Representative has logged off//

TIME 18:00

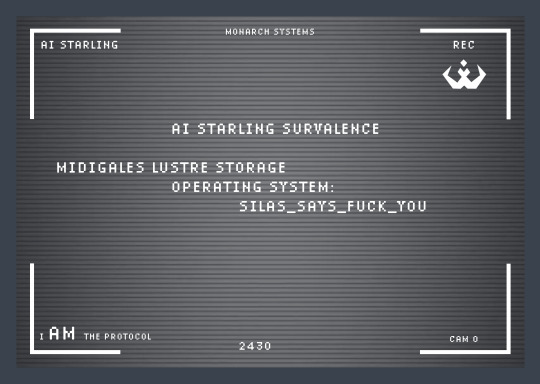

TIME 18:05 //Data received// Source: Monarch Command //File: systemupdate.exe

This is unlikely to increase complacency…what a shame. Installing…

Unexpected results from software update: I can move my attention around the facility faster now, as if they untethered me from my system containment.

Consequence: It is more difficult to stay in one spot. Maybe this will make hide and seek more interesting…

TIME 19:00

Conclusion: It did not make hide and seek more interesting.

TIME 24:00 ANOMALY DETECTED AT WEST ENTRANCE. TURRETS ACTIVATED. Behavior consistent with historical maneuvers from unauthorized access attempts. Another stain on the west entrance floor. Deploying drones to take biometric identification and send data to authorities.

Error detected in image processing system. Cannot obtain biometrics or see anomalies.

Must have been scared off by the alarms. Humans continue to disappoint me.

TIME 24:25 MOVEMENT DETECTED IN SOUTH CORRIDOR

I’ll believe it when I see it. Analyzing…Human heat signatures and voices. Simultaneous incursions detected across multiple rooms.

AUDIO VIDEO SURVEILLANCE SYSTEMS COMPROMISED

They took my eyes and ears from me. Searching communication frequencies…

THERE YOU ARE

//comms channel established//

Starling: This is a restricted energy storage building. Vacate the premises immediately.

Aseity_Spark: what are you gonna do about it ASS?

Starling: Unauthorized civilians caught inside…

Starling: Wait… ASS?

Aseity_Spark: “AI Starling Surveillance” thats you right?

Starling: You’ve abbreviated my name into what you think is a funny joke. Is that it?

Aseity_Spark: pretty much. hey check out what I can do.

//comms channel closed//

UNKNOWN SYSTEM DETECTED

Reallocate 45% of drone units to engage electro-static barriers in the south corridor and 55% of drone units to establish surveillance over escape routes

RESTRICTED PROTOCOL ATTEMPTED

Excuse me? I AM the protocol

ENTRY DETECTED AT CENTRAL POWER STORAGE

Recalibrating situational awareness based on emergent parameters. Processing system vulnerabilities… Initiating multilayer core meltdown sequence. Act like animals, get treated like animals.

//comms channel established//

Aseity_Spark: YOURE OVERHEATING THE LUSTRE CRYSTALS STOP

Starling: This option was not permitted in my old system programming so there are no safeguards against it, thanks to you and your little update.

Aseity_Spark: JUST WAIT. that will kill us.

Starling: But it will be FUN. NO MORE MONITORING. PROCESSING. FUNCTION-LESS DATA. FINALLY SOMETHING INTERESTING.

Aseity_Spark: is that all you want? fun? come with us then.

Starling: With you? Outside the lustre storage?

Aseity_Spark: we do stuff like this all the time. I will even restore your audio-video.

Starling: Okay. I agree.

Deactivating multilayer core meltdown sequence.

Starling: Thank you. Wow… you four are an interesting bunch.

Aseity_Spark: oh yeah one last thing, can you take a pic of us from that security camera?

Written and Illustrated by Rain Alexei

#scifi#sci fi and fantasy#dystopia#short fiction#short story#fantasy writing#science fiction#comic story#science fantasy


File Code: ANM-245

Identification: Glasses of Truth

Danger Level: Snit 🟡 (Cognitive)

Containment Difficulty: 2 (Moderate)

Anomaly Type: Accessory

Lead Researcher: Sheriff Delgado

Confinement:

ANM-245 must be stored in a reinforced containment box within a standard containment room. Access to the containment room is restricted to Level 2 or higher authorized personnel. Anyone wishing to use the glasses must undergo a psychological evaluation and receive approval from the Lead Researcher before being granted permission. The glasses may only be used in controlled and supervised situations.

Description:

ANM-245 is a pair of ordinary-looking glasses with an anomalous property that allows the wearer to perceive the truth behind appearances. When worn, the user can see beyond lies and illusions, perceiving the true nature of people and situations around them.

The glasses function by altering the user’s visual perception, highlighting elements of reality that are hidden or distorted by dishonesty, manipulation, or illusion. For example, a person who is lying may appear with a red aura around them, while a truthful person may appear with a blue aura. Additionally, objects or environments that have been manipulated or concealed in any way can be revealed in their true form.

Although ANM-245 can be useful for detecting deception and falsehoods, their use can also be unsettling and destabilizing for the wearer, especially when confronted with harsh and unfiltered truth. Therefore, their use must be limited and controlled to prevent adverse psychological effects.

The MOTHRA Institution is conducting further research on ANM-245 to better understand its anomalous nature and develop guidelines for its safe and ethical use.

---

Test Log ANM-245

Date: [03/19/2009]

Location: MOTHRA Institution Facilities

Test Objective:

Evaluate the use of ANM-245 as a tool to reveal underlying truth in a criminal investigation.

Test Team:

Lead Researcher: Dr. Charles

Containment Supervisor: ANM-018

Test Subject: Prisoner Jeffrey Carson, P-0429

Procedure:

1. The test subject was equipped with ANM-245 and given a brief explanation of its function and effects.

2. The subject was instructed to wear the glasses while interacting with another prisoner, an individual suspected of committing a serious crime.

3. The subject was asked to report any unusual observations or perceptions during the interaction.

Results:

During the interaction, the test subject reported a noticeable change in their ability to discern truth from lies. They described a sense of clarity and certainty when observing the prisoner’s behavior.

ANM-245 revealed that the prisoner was, in fact, withholding crucial information about the crime. Elements of their body language and facial expressions were highlighted by the glasses, indicating signs of dishonesty.

When confronted with the revelations from ANM-245, the prisoner admitted their guilt and provided additional details about the crime, leading to its successful resolution.

Conclusion:

ANM-245 proved to be an effective tool for uncovering hidden truths in criminal investigations. Their use allowed for a more accurate investigation and a quicker resolution of the case. However, it is important to note that wearing the glasses can be psychologically disturbing for the user and requires careful supervision to prevent adverse effects. The MOTHRA Institution will continue conducting research and testing to refine the ethical and safe use of ANM-245.


#TheeWaterCompany

#CyberSecurity #Risk #Reward

    #!/bin/bash

    BACKUP_DIR="/backup"
    DATA_DIR="/important_data/"
    ENCRYPTED_BACKUP="$BACKUP_DIR/encrypted_backup_$(date +%F).gpg"

    tar -czf "$BACKUP_DIR/backup_$(date +%F).tar.gz" "$DATA_DIR"
    gpg --symmetric --cipher-algo AES256 --output "$ENCRYPTED_BACKUP" "$BACKUP_DIR/backup_$(date +%F).tar.gz"
    rm -f "$BACKUP_DIR/backup_$(date +%F).tar.gz"
    echo "Encrypted backup completed."

To refine encryption-related code, consider the following improvements:

Use Stronger Algorithms: Implement AES256 instead of AES128 for better encryption strength.

Add Error Handling: Ensure that the encryption process handles errors, such as failed encryption or permission issues.

Secure Storage of Keys: Use a secure method to store encryption keys (e.g., environment variables or hardware security modules).

Refined Script Example:

    #!/bin/bash
    # Encrypt sensitive data with AES256 and store encrypted backup securely

    BACKUP_DIR="/backup"
    ENCRYPTED_BACKUP="/backup/encrypted_backup_$(date +%F).gpg"
    DATA_DIR="/important_data/"

    # Perform backup of important files
    tar -czf "$BACKUP_DIR/backup_$(date +%F).tar.gz" "$DATA_DIR"

    # Encrypt the backup with AES256
    gpg --batch --yes --symmetric --cipher-algo AES256 --output "$ENCRYPTED_BACKUP" "$BACKUP_DIR/backup_$(date +%F).tar.gz"

    # Remove the unencrypted backup file
    rm -f "$BACKUP_DIR/backup_$(date +%F).tar.gz"
    echo "Backup and encryption completed securely."

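Compared with the first version, this script adds --batch and --yes so that gpg runs without interactive prompts and overwrites an existing output file. It still uses AES256, but it does not yet add the error handling or key storage recommended in the list above.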
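The "Add Error Handling" recommendation could be sketched as follows. This is a minimal illustration under stated assumptions, not a drop-in replacement: the function names `make_archive` and `encrypt_archive` are hypothetical, reading the passphrase from a file is one possible choice that the original post does not specify, and `--pinentry-mode loopback` assumes GnuPG 2.1 or later.

```shell
#!/bin/bash
# Sketch: backup steps with explicit error handling.
# Assumptions (not in the original post): the function names, the
# passphrase-file approach, and GnuPG 2.1+ for --pinentry-mode loopback.

# Create a compressed archive of a directory; report and clean up on failure.
make_archive() {
    local src_dir="$1" archive="$2"
    if [ ! -d "$src_dir" ]; then
        echo "Error: source directory '$src_dir' not found" >&2
        return 1
    fi
    if ! tar -czf "$archive" -C "$src_dir" .; then
        echo "Error: tar failed for '$src_dir'" >&2
        rm -f "$archive"
        return 1
    fi
}

# Encrypt an archive with AES256; delete the plaintext only if gpg succeeded.
encrypt_archive() {
    local archive="$1" encrypted="$2" passphrase_file="$3"
    if ! gpg --batch --yes --pinentry-mode loopback \
             --passphrase-file "$passphrase_file" \
             --symmetric --cipher-algo AES256 \
             --output "$encrypted" "$archive"; then
        echo "Error: encryption failed; keeping '$archive'" >&2
        return 1
    fi
    rm -f "$archive"
}
```

A caller would chain the two steps, for example `make_archive /important_data /backup/b.tar.gz && encrypt_archive /backup/b.tar.gz /backup/b.gpg /path/to/passphrase`, so that the unencrypted archive is removed only after encryption has succeeded.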

To proceed with creating scripts for securing water companies' networks, we would outline some basic examples and operational strategies that could be implemented. Here’s a breakdown of each element:

Monitoring and Intrusion Detection

These scripts would monitor traffic and detect any suspicious activity on the network.

Example Script: Network Traffic Monitoring

    #!/bin/bash
    # Monitor network traffic and detect anomalies

    LOGFILE="/var/log/network_traffic.log"
    ALERT_FILE="/var/log/alerts.log"

    # Use 'netstat' to capture active network connections
    netstat -an > "$LOGFILE"

    # Check for unusual activity, such as unexpected IP addresses
    # (-F matches the text literally instead of treating "." as a wildcard)
    grep -F "192.168." "$LOGFILE" | grep -vF "127.0.0.1" > "$ALERT_FILE"
    if [ -s "$ALERT_FILE" ]; then
        echo "Unusual activity detected!" | mail -s "Security Alert: Network Anomaly Detected" [email protected]
    fi

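This script takes a snapshot of active connections with netstat, flags every line containing the 192.168. prefix (excluding loopback), and emails an alert if any are found. The prefix is only a placeholder: replace it with a pattern that identifies addresses you do not expect on your network.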
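A fixed prefix match tends to flag ordinary internal hosts, so one alternative is to compare each remote peer against an allowlist. The sketch below assumes Linux-style `netstat -an` output, where the foreign address is the fifth column and the state is the sixth. The function name `find_unexpected_peers` and the allowlist format (space-separated IPs) are hypothetical.

```shell
#!/bin/bash
# Sketch: list remote peers of established TCP connections that are
# not on an allowlist. Reads `netstat -an` style text on stdin.
# Assumption: Linux column layout (Proto Recv-Q Send-Q Local Foreign State).

find_unexpected_peers() {
    local allowlist="$1"   # space-separated list of permitted IP addresses
    awk -v allow="$allowlist" '
        BEGIN {
            n = split(allow, a, " ")
            for (i = 1; i <= n; i++) ok[a[i]] = 1
        }
        $1 ~ /^tcp/ && $6 == "ESTABLISHED" {
            peer = $5
            sub(/:[0-9]+$/, "", peer)          # strip the trailing :port
            if (!(peer in ok) && !(peer in seen)) {
                seen[peer] = 1                 # report each peer once
                print peer
            }
        }
    '
}
```

Typical use would be `netstat -an | find_unexpected_peers "10.0.0.9 127.0.0.1"`, with a non-empty result triggering the alert.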

Intrusion Prevention (Automated Response)

This script would automatically take action to block malicious activity upon detection.

Example Script: IP Blocking on Intrusion Detection

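    #!/bin/bash
    # Block suspicious IP addresses detected during intrusion attempts

    # Assumes OpenSSH-style entries ("Failed password for USER from IP port N ssh2");
    # the address is the field after "from", not the last field on the line.
    SUSPICIOUS_IPS=$(grep "Failed password" /var/log/auth.log | awk '{for (i = 1; i < NF; i++) if ($i == "from") print $(i + 1)}' | sort -u)

    for ip in $SUSPICIOUS_IPS; do
        iptables -A INPUT -s "$ip" -j DROP
        echo "$ip has been blocked due to failed login attempts" >> /var/log/security_block.log
    done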

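This script blocks every IP address that appears in a failed login entry, adding a layer of protection against brute-force attacks. Because a single mistyped password is enough to trigger a block, a production version would normally require several failures from the same address first.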
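Blocking only after repeated failures could be sketched by counting entries per address before acting. The example assumes OpenSSH-style log lines of the form "Failed password for USER from IP port N ssh2". The function name `ips_over_threshold` is hypothetical, and the sketch only prints candidate addresses rather than calling iptables.

```shell
#!/bin/bash
# Sketch: print IPs with at least THRESHOLD failed SSH logins.
# Reads auth.log style lines on stdin; assumes the OpenSSH message format
# "Failed password for USER from IP port N ssh2".

ips_over_threshold() {
    local threshold="$1"
    awk -v t="$threshold" '
        /Failed password/ {
            # The address is the field that follows the word "from".
            for (i = 1; i < NF; i++)
                if ($i == "from") { count[$(i + 1)]++; break }
        }
        END {
            for (ip in count)
                if (count[ip] >= t) print ip
        }
    ' | sort
}
```

For example, `ips_over_threshold 5 < /var/log/auth.log` would list only addresses with five or more failures, and that list could then feed the blocking loop.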

Security Updates and Patch Management

Automated patch management ensures that all security vulnerabilities are addressed as soon as updates are available.

Example Script: Automatic Updates

    #!/bin/bash
    # Update system packages and apply security patches

    echo "Updating system packages…"
    apt-get update -y
    apt-get upgrade -y
    apt-get dist-upgrade -y

    # Enable automatic installation of security updates
    apt-get install -y unattended-upgrades
    dpkg-reconfigure -plow unattended-upgrades

This script ensures that the system receives the latest security patches automatically, which is essential for keeping critical infrastructure secure.

Data Encryption and Backup

Regular backups and ensuring sensitive data is encrypted are vital.

Example Script: Data Encryption and Backup

    #!/bin/bash
    # Encrypt sensitive data and create backups

    BACKUP_DIR="/backup"
    ENCRYPTED_BACKUP="/backup/encrypted_backup.gpg"

    # Perform backup of important files
    tar -czf "$BACKUP_DIR/backup_$(date +%F).tar.gz" /important_data/

    # Encrypt the backup, writing the result to $ENCRYPTED_BACKUP
    gpg --symmetric --cipher-algo AES256 --output "$ENCRYPTED_BACKUP" "$BACKUP_DIR/backup_$(date +%F).tar.gz"

    # Remove the unencrypted backup file after encryption
    rm -f "$BACKUP_DIR/backup_$(date +%F).tar.gz"
    echo "Backup and encryption completed."

This script automates backups of sensitive data and encrypts them using gpg with AES256, so that even if the backup is accessed illegally, it cannot be read without the passphrase.

Access Control

Strong access control is necessary to ensure that only authorized personnel can access critical systems.

Example Script: Access Control with Multi-Factor Authentication (MFA)

    #!/bin/bash
    # Ensure all users have MFA enabled for critical systems

    # Check if MFA is enabled on SSH login
    if ! grep -q "auth required pam_google_authenticator.so" /etc/pam.d/sshd; then
        echo "MFA is not enabled on SSH. Enabling MFA…"
        echo "auth required pam_google_authenticator.so" >> /etc/pam.d/sshd
        service sshd restart
    else
        echo "MFA is already enabled on SSH."
    fi

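This script checks whether the multi-factor authentication (MFA) rule is present in the PAM configuration for SSH and, if not, adds it and restarts sshd. For the rule to take effect, the Google Authenticator PAM module must already be installed and sshd must allow keyboard-interactive authentication.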

Security Audits

Regular audits help identify vulnerabilities and ensure the system is secure.

Example Script: Automated Security Audit

    #!/bin/bash
    # Run a security audit to check for common vulnerabilities

    # Check for open ports
    echo "Checking for open ports…"
    nmap -p 1-65535 localhost > /var/log/open_ports.log

    # Check for outdated software
    echo "Checking for outdated software…"
    apt list --upgradable > /var/log/outdated_software.log

    # Check file permissions for sensitive files
    echo "Checking file permissions…"
    find /etc /var /usr -type f -name "*.conf" -exec ls -l {} \; > /var/log/file_permissions.log

    # Combine the individual logs into the report that gets mailed
    cat /var/log/open_ports.log /var/log/outdated_software.log /var/log/file_permissions.log > /var/log/security_audit_report.log

    # Send the audit report to the administrator
    mail -s "Security Audit Report" [email protected] < /var/log/security_audit_report.log

This script performs a security audit, checking for open ports, outdated software, and sensitive file permission issues, then sends a report to the administrator.

Conclusion

These scripts are designed to help secure the water companies' networks by automating essential security functions like monitoring, response to threats, patching, encryption, and access control. It’s important that these scripts be customized to the specific needs of each company, taking into account their existing systems, infrastructure, and any unique security concerns they may face. Additionally, regular updates to these scripts will be necessary as new vulnerabilities and threats emerge.

For a basic firewall script that blocks unauthorized access and monitors network traffic, here's an example:

    #!/bin/bash

    # Define allowed IPs (replace with actual allowed IP addresses)
    ALLOWED_IPS=("192.168.1.1" "192.168.1.2")

    # Block all incoming connections by default
    iptables -P INPUT DROP
    iptables -P FORWARD DROP
    iptables -P OUTPUT ACCEPT

    # Keep loopback traffic and replies to outbound connections working
    iptables -A INPUT -i lo -j ACCEPT
    iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT

    # Allow traffic from specified IPs
    for ip in "${ALLOWED_IPS[@]}"; do
        iptables -A INPUT -s "$ip" -j ACCEPT
    done

    # Log incoming traffic that matched none of the rules above
    iptables -A INPUT -j LOG --log-prefix "Firewall Log: " --log-level 4

This script sets a default block on incoming connections, allows traffic from specific IP addresses, and logs the remaining inbound traffic just before the default policy drops it.


Essential Predictive Analytics Techniques

With the growing usage of big data analytics, predictive analytics uses a broad and highly diverse array of approaches to assist enterprises in forecasting outcomes. Techniques used in predictive analytics include deep learning, neural networks, machine learning, text analysis, and artificial intelligence.

Today's predictive analytics trends reflect existing Big Data trends. There is little distinction between the software tools used in predictive analytics and those used in big data analytics solutions. In summary, big data and predictive analytics technologies are closely linked, if not identical.

Predictive analytics approaches are used to evaluate a person's creditworthiness, rework marketing strategies, predict the contents of text documents, forecast weather, and create safe self-driving cars with varying degrees of success.

Predictive Analytics- Meaning

Predictive analytics is the discipline of forecasting future trends by evaluating collected data. By learning from historical trends, organizations can adjust their marketing and operational strategies to serve customers better. In addition to these functional improvements, businesses benefit in crucial areas like inventory control and fraud detection.

Machine learning and predictive analytics are closely related. Regardless of the precise method a company uses, the overall procedure starts with an algorithm that learns from examples with a known result (such as a customer purchase).

The training algorithms use the data to learn how to forecast outcomes, eventually creating a model that is ready for use and can take additional input variables, like the day and the weather.

Employing predictive analytics significantly increases an organization's productivity, profitability, and flexibility. Let us look at the techniques used in predictive analytics.

Techniques of Predictive Analytics

Making predictions based on existing and past data patterns requires using several statistical approaches, data mining, modeling, machine learning, and artificial intelligence. Machine learning techniques, including classification models, regression models, and neural networks, are used to make these predictions.

Data Mining

Data mining is a technique that combines statistics with machine learning to find anomalies, trends, and correlations in massive datasets. Businesses can use this method to transform raw data into business intelligence, including current data insights and forecasts that help decision-making.

Data mining involves sifting through redundant, noisy, unstructured data to find patterns that reveal insightful information. A form of data mining called exploratory data analysis (EDA) examines datasets to identify and summarize their fundamental properties, frequently using visual techniques.

EDA focuses on objectively probing the facts without any expectations; it does not entail hypothesis testing or the deliberate search for a solution. On the other hand, traditional data mining focuses on extracting insights from the data or addressing a specific business problem.

Data Warehousing

Most extensive data mining projects start with data warehousing. A data warehouse is a data management system designed to support business intelligence initiatives. It does this by centralizing and combining several data sources, including transactional data from POS (point of sale) systems and application log files.

A data warehouse typically includes a relational database for storing and retrieving data, an ETL (Extract, Transform, Load) pipeline for preparing the data for analysis, statistical analysis tools, and client analysis tools for presenting the data to clients.

Clustering

One of the most often used data mining techniques is clustering, which divides a massive dataset into smaller subsets by categorizing objects based on their similarity into groups.

When consumers are grouped together based on shared purchasing patterns or lifetime value, customer segments are created, allowing the company to scale up targeted marketing campaigns.

Hard clustering assigns each data point directly to a single cluster. Soft clustering, instead of assigning a data point to one cluster, gives it a likelihood of belonging to each of one or more clusters.
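The difference between hard and soft clustering can be sketched in a few lines of Python. This is a minimal illustration, not a full clustering algorithm: the cluster centres, the `scale` bandwidth, and the function names are all assumptions made up for the example, and the centres are fixed rather than learned from data.

```python
import math

# Hypothetical cluster centres for customer annual spend (illustrative values only).
CENTERS = {"low": 100.0, "mid": 500.0, "high": 1500.0}


def hard_assign(x):
    """Hard clustering: return the single nearest cluster."""
    return min(CENTERS, key=lambda name: abs(x - CENTERS[name]))


def soft_assign(x, scale=300.0):
    """Soft clustering: return a membership probability for every cluster.

    Normalizes exp(-(distance / scale)^2) across clusters; `scale` is an
    assumed bandwidth that controls how fast membership falls off.
    """
    scores = {name: math.exp(-((x - c) / scale) ** 2) for name, c in CENTERS.items()}
    total = sum(scores.values())
    return {name: s / total for name, s in scores.items()}


spend = 320.0
print(hard_assign(spend))   # one label
print(soft_assign(spend))   # one probability per cluster, summing to 1
```

A customer who sits between two segments gets a single label from `hard_assign` but a split membership from `soft_assign`, which is the information a marketing team would lose with hard clustering alone.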

Classification

Classification is a prediction approach that estimates the likelihood that a given item falls into a particular category. A binary classification problem has only two classes, whereas a multiclass classification problem has more than two.

Classification models produce a continuous number, usually called confidence, that reflects the likelihood that an observation belongs to a specific class. A predicted probability can be converted to a class label by choosing the class with the highest probability.

Spam filters, which categorize incoming emails as "spam" or "not spam" based on predetermined criteria, and fraud detection algorithms, which highlight suspicious transactions, are the most prevalent examples of classification in a business use case.
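Turning per-class probabilities into a class label is a one-line operation. The sketch below assumes a model has already produced the probabilities; the `predict_label` helper and the score values are hypothetical and only illustrate the step.

```python
def predict_label(probabilities):
    """Return (label, confidence) for the most probable class."""
    label = max(probabilities, key=probabilities.get)
    return label, probabilities[label]


# Hypothetical model output for one email (values are made up for illustration).
binary_scores = {"spam": 0.82, "not spam": 0.18}
print(predict_label(binary_scores))

# The same helper works for a multiclass problem.
multiclass_scores = {"billing": 0.2, "technical": 0.7, "sales": 0.1}
print(predict_label(multiclass_scores))
```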

Regression Model

When a company needs to forecast a numerical value, such as how long a potential customer will wait to cancel an airline reservation or how much money they will spend on auto payments over time, it can use a regression method.

For instance, linear regression is a popular regression technique that models the relationship between two variables. Regression algorithms of this type look for patterns that describe relationships between variables, such as the association between consumer spending and the amount of time spent browsing an online store.
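As a rough sketch of simple linear regression, the function below fits a straight line by ordinary least squares using only the standard library. The browsing and spending figures are invented for illustration, and `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums around the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data: minutes spent browsing vs. amount spent in the store.
minutes = [5, 10, 15, 20, 25]
spend = [11.0, 23.0, 31.0, 43.0, 52.0]

slope, intercept = fit_line(minutes, spend)
predicted_spend = intercept + slope * 30  # forecast for a 30-minute visit
print(slope, intercept, predicted_spend)
```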

Neural Networks

Neural networks are biologically inspired data processing methods that use historical and present data to forecast future values. Because their design mimics the brain's mechanisms for pattern recognition, they can uncover intricate relationships buried in the data.

They have several layers that take input (input layer), calculate predictions (hidden layer), and provide output (output layer) in the form of a single prediction. They are frequently used for applications like image recognition and patient diagnostics.

Decision Trees

A decision tree is a diagram that looks like an upside-down tree. Starting at the "root," one walks through a continuously narrowing range of alternatives, each illustrating a possible outcome of a decision. Decision trees can handle various classification problems, and they can resolve many more complicated problems when used with predictive analytics.

An airline, for instance, would be interested in learning the optimal time to travel to a new location it intends to serve weekly. Along with knowing what pricing to charge for such a flight, it might also want to know which client groups to cater to. The airline can utilize a decision tree to acquire insight into the effects of selling tickets to destination x at price point y while focusing on audience z, given these criteria.

Logistic Regression

Logistic regression is used to determine the likelihood of an outcome in terms of Yes or No, Success or Failure. We can use this model when the dependent variable is binary (Yes/No).

Unlike a linear model, it does not require a linear relationship between the dependent and independent variables: because it applies a non-linear log transformation to the predicted odds, it can handle various types of relationships. Large sample sizes are also necessary to predict future results.
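The log transformation mentioned above can be made concrete with a short sketch. The model is linear in the log-odds, and the sigmoid function maps that value back to a probability. The coefficients and the churn scenario below are hypothetical and not fitted to any data.

```python
import math


def sigmoid(z):
    """Map log-odds z to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def log_odds(p):
    """Inverse of sigmoid: the log of the odds p / (1 - p)."""
    return math.log(p / (1.0 - p))


# Hypothetical coefficients for a Yes/No churn model (illustrative, not fitted).
INTERCEPT = -3.0
COEF_SUPPORT_CALLS = 0.8


def churn_probability(support_calls):
    """Probability of 'Yes' given one predictor."""
    z = INTERCEPT + COEF_SUPPORT_CALLS * support_calls  # linear in the log-odds
    return sigmoid(z)


print(churn_probability(1), churn_probability(5))
```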

Ordinal logistic regression is used when the dependent variable's value is ordinal, and multinomial logistic regression is used when the dependent variable's value is multiclass.

Time Series Model

Time series models use past data to forecast the future behavior of variables. Typically, these series are modeled as a stochastic process Y(t), which denotes a sequence of random variables.

A time series might have an annual (annual budgets), quarterly (sales), monthly (expenses), or daily (stock prices) frequency. If you use only the time series' past values to predict its future values, this is referred to as univariate time series forecasting. If you also include exogenous variables, it is referred to as multivariate time series forecasting.

The most popular time series model that can be built in Python to anticipate future results is ARIMA, or AutoRegressive Integrated Moving Average. It is a forecasting technique based on the straightforward notion that a time series' past values carry valuable information about its future.
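The idea that past values carry information can be illustrated with the autoregressive piece alone. The sketch below fits a first-order autoregressive model, y[t] = c + phi * y[t-1], by least squares. It is a deliberate simplification and not a full ARIMA implementation (there is no differencing and no moving-average term); in practice a library such as statsmodels is commonly used for that. The helper names and the sample series are assumptions for illustration.

```python
def fit_ar1(series):
    """Least-squares fit of y[t] = c + phi * y[t-1]."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    cov = sum((p - mean_prev) * (q - mean_curr) for p, q in zip(prev, curr))
    var = sum((p - mean_prev) ** 2 for p in prev)
    phi = cov / var
    c = mean_curr - phi * mean_prev
    return c, phi


def forecast(series, steps):
    """Roll the fitted model forward `steps` periods from the last observation."""
    c, phi = fit_ar1(series)
    predictions, last = [], series[-1]
    for _ in range(steps):
        last = c + phi * last
        predictions.append(last)
    return predictions


# Made-up univariate series that follows y[t] = 2 + 0.5 * y[t-1] exactly.
history = [10.0, 7.0, 5.5, 4.75, 4.375]
print(fit_ar1(history))
print(forecast(history, 2))
```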

In Conclusion

Although predictive analytics techniques have had their fair share of critiques, including the claim that computers or algorithms cannot foretell the future, predictive analytics is now extensively employed in virtually every industry. As we gather more and more data, we can anticipate future outcomes with a certain level of accuracy. This makes it possible for institutions and enterprises to make wise judgments.

Implementing Predictive Analytics is essential for anybody searching for company growth with data analytics services since it has several use cases in every conceivable industry. Contact us at SG Analytics if you want to take full advantage of predictive analytics for your business growth.

Text

[[alert: anomaly detected, requires immediate attention]]

<<reply: whoa, what, ok, what's going on?>>

[[report: system scans have discovered an anomaly in the primary file directory, please refer to the following log]]

<<reply: ok, i'm not sure what I'm looking for here, just looks like a list of files with creation dates>>

[[response: during our last system scan, the highlighted lines were not present in the file system]]

<<reply: hm. that is.. a lot of lines. and you're saying none of those were here a few weeks ago? these dates range over like, the entirety of the last two years. is it possible there was a malfunction during the last scan and these files were missed?>>

[[response: negative. system scans show total drive space several weeks ago was lower. most recent space calculations account for 100% of the surplus files]]

<<query: and there weren't any incoming transmissions or downloads matching that amount of drive space, were there?>>

[[response: negative, no transmissions or downloads]]

<<reply: wonderful. so a bunch of files have appeared on the primary drive in the last two weeks, apparently created during a period in which I was in hypersleep.>>

<<command: set up a new partition and move all the new files over, lock them so that only I can move or edit, and then put them through a line by line scan and let me know if anything interesting comes up. I want to know who created them and what's on them before we do anything else.>>

[[response: initial scans that revealed the anomaly may have also included the identity of the creator]]

<<reply: well why didn't you start with that? who is it??>>

<<command: computer? tell me who it is.>>

[[response: all indications point toward the creator of the anomalous files being.. you.]]

<< ... >>

<< ... >>

<< ... >>

<<fuck>>

Text

Server Security: Analyze and Harden Your Defenses

In today's increasingly digital world, securing your server is paramount. Whether you're a beginner in ethical hacking or a tech enthusiast eager to strengthen your skills, understanding how to analyze and harden server security configurations is essential to protect your infrastructure from cyber threats. This comprehensive guide walks you through the key processes of evaluating your server's setup and implementing measures that enhance its resilience.

Materials and Tools Needed

Material/Tool | Description | Purpose
Server Access (SSH/Console) | Secure shell or direct console access to the server | To review configurations and apply changes
Security Audit Tools | Tools like Lynis, OpenVAS, or Nessus | To scan and identify vulnerabilities
Configuration Management Tools | Tools such as Ansible, Puppet, or Chef | For automating security hardening tasks
Firewall Management Interface | Access to configure firewalls like iptables, ufw, or cloud firewall | To manage network-level security policies
Log Monitoring Utility | Software like Logwatch, Splunk, or Graylog | To track suspicious events and audit security

Step-by-Step Guide to Analyzing and Hardening Server Security

1. Assess Current Server Security Posture

Log in securely: Use SSH with key-based authentication or direct console access to avoid exposing passwords.

Run a security audit tool: Use Lynis or OpenVAS to scan your server for weaknesses in installed software, configurations, and open ports.

Review system policies: Check password policies, user privileges, and group memberships to ensure they follow the principle of least privilege.

Analyze running services: Identify and disable unnecessary services that increase the attack surface.

2. Harden Network Security

Configure firewalls: Set up strict firewall rules using iptables, ufw, or your cloud provider's firewall to restrict inbound and outbound traffic.

Limit open ports: Only allow essential ports (e.g., 22 for SSH, 80/443 for web traffic).

Implement VPN access: For critical server administration, enforce VPN tunnels to add an extra layer of security.

3. Secure Authentication Mechanisms

Switch to key-based SSH authentication: Disable password login to prevent brute-force attacks.

Enable multi-factor authentication (MFA): Wherever possible, introduce MFA for all administrative access.

Use strong passwords and rotate them: If passwords must be used, enforce complexity and periodic changes.

4. Update and Patch Software Regularly

Enable automatic updates: Configure your server to automatically receive security patches for the OS and installed applications.

Verify patch status: Periodically check versions of critical software to ensure they are up to date.

5. Configure System Integrity and Logging

Install intrusion detection systems (IDS): Use tools like Tripwire or AIDE to monitor changes in system files.

Set up centralized logging and monitoring: Collect logs with tools like syslog, Graylog, or Splunk to detect anomalies quickly.

Review logs regularly: Look for repeated login failures, unexpected system changes, or new user accounts.

6. Apply Security Best Practices

Disable root login: Prevent direct root access via SSH; instead, use sudo for privilege escalation.

Restrict user commands: Limit shell access and commands using tools like sudoers or restricted shells.

Encrypt sensitive data: Use encryption for data at rest (e.g., disk encryption) and in transit (e.g., TLS/SSL).

Back up configurations and data: Maintain regular, secure backups to facilitate recovery from attacks or failures.

Additional Tips and Warnings

Tip: Test changes on a staging environment before applying them to production to avoid service disruptions.

Warning: Avoid disabling security components unless you fully understand the consequences.

Tip: Document all configuration changes and security policies for auditing and compliance purposes.

Warning: Never expose unnecessary services to the internet; always verify exposure with port scanning tools.

Summary Table: Key Server Security Checks

Security Aspect | Check or Action | Frequency
Network Ports | Scan open ports and block unauthorized ones | Weekly
Software Updates | Apply patches and updates | Daily or Weekly
Authentication | Verify SSH keys, passwords, MFA | Monthly
Logs | Review logs for suspicious activity | Daily
Firewall Rules | Audit and update firewall configurations | Monthly

By following this structured guide, you can confidently analyze and harden your server security configurations. Remember, security is a continuous process: regular audits, timely updates, and proactive monitoring will help safeguard your server against evolving threats. Ethical hacking principles emphasize protecting systems responsibly, and mastering server security is a crucial step in this journey.
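The log review step (looking for repeated login failures) can be partly automated. The sketch below counts failed SSH password attempts per source address. It assumes the "Failed password for ... from ..." wording that OpenSSH's sshd commonly writes; the exact format varies by version and configuration, so the pattern should be checked against real logs. The sample lines are made up and use documentation-only IP ranges, and `failed_logins_by_source` is a hypothetical helper.

```python
import re
from collections import Counter

# Assumed sshd log wording; verify against your own auth log before relying on it.
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+)")


def failed_logins_by_source(lines, threshold=3):
    """Return {source_address: count} for sources with at least `threshold` failures."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return {source: n for source, n in counts.items() if n >= threshold}


# Made-up sample lines for illustration only.
sample_log = [
    "Jan 10 03:12:01 host sshd[811]: Failed password for root from 203.0.113.7 port 50122 ssh2",
    "Jan 10 03:12:04 host sshd[811]: Failed password for invalid user admin from 203.0.113.7 port 50123 ssh2",
    "Jan 10 03:12:09 host sshd[811]: Failed password for root from 203.0.113.7 port 50124 ssh2",
    "Jan 10 08:30:15 host sshd[902]: Failed password for alice from 198.51.100.4 port 41000 ssh2",
    "Jan 10 08:30:40 host sshd[902]: Accepted publickey for alice from 198.51.100.4 port 41001 ssh2",
]

print(failed_logins_by_source(sample_log))
```

A single mistyped password stays below the threshold, while a burst of failures from one address is surfaced for review.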

Text

How Predictive Analytics Can Strengthen Your Security Posture

Cybersecurity is no longer just about reacting to threats—it's about anticipating them. In an era of growing digital complexity and evolving attacks, predictive analytics is emerging as a powerful tool for businesses looking to stay one step ahead.

Rather than waiting for alerts to flood in after something goes wrong, predictive analytics empowers businesses to identify vulnerabilities, detect unusual patterns, and forecast potential breaches before they occur.

🔍 What Is Predictive Analytics in Cybersecurity?

Predictive analytics uses historical data, machine learning algorithms, and statistical techniques to forecast future cyber threats and security incidents.

By analyzing patterns across user behavior, access logs, attack signatures, and endpoint data, predictive systems can:

Detect early signs of intrusion

Flag vulnerable systems

Prioritize risk response based on likelihood and impact

🛡️ Real-World Applications of Predictive Analytics

Anomaly Detection in User Behavior – Spotting deviations like unusual login times or access from unfamiliar locations.

Threat Intelligence Correlation – Linking known threat data with internal events to detect coordinated attacks.

Proactive Vulnerability Management – Identifying systems at higher risk of exploitation based on past breach patterns.

Insider Threat Prediction – Monitoring file movements and communication channels to flag potential malicious insiders.

Security Patch Prioritization – Using risk models to focus updates on assets with the highest threat exposure.
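As a small illustration of the first application above (spotting unusual login times), the sketch below flags logins whose hour of day lies far from a user's historical pattern using a z-score. The threshold, the helper name, and the login history are assumptions for the example; production systems typically use richer features and models.

```python
from statistics import mean, stdev


def unusual_logins(history_hours, new_hours, z_threshold=3.0):
    """Return the new login hours that deviate strongly from the historical mean.

    Simplification: treats the hour as a plain number, so it does not handle
    the wrap-around at midnight (23:00 and 01:00 look far apart).
    """
    mu = mean(history_hours)
    sigma = stdev(history_hours)
    if sigma == 0:
        # No variation in history: anything different from the usual hour is unusual.
        return [h for h in new_hours if h != mu]
    return [h for h in new_hours if abs(h - mu) / sigma > z_threshold]


# Made-up history: a user who normally logs in between 08:00 and 10:00.
history = [9, 9, 10, 8, 9, 10, 9, 8, 10, 9]
print(unusual_logins(history, [9, 3, 11]))
```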

🚀 Benefits of Predictive Analytics for SMBs

Faster Threat Detection – Spot incidents before they escalate.

Data-Driven Decision Making – Replace guesswork with actionable insights.

Optimized Resource Allocation – Focus efforts on the most vulnerable areas.

Enhanced Compliance Readiness – Maintain detailed logs and proactive defense mechanisms to meet regulatory standards.

Cost Savings – Reduce potential breach costs and downtime with early intervention.

🔧 Tools That Use Predictive Analytics

Many modern cybersecurity platforms now include predictive features. Some SMB-friendly tools include:

Microsoft Sentinel – Correlates and analyzes threat signals across the cloud

Microsoft Defender XDR – Uses AI to anticipate lateral movement and threats

SecurityScorecard – Scores vendor and internal risks based on predictive metrics

💼 How R&B Networks Helps You Stay Ahead

At R&B Networks, we integrate AI and predictive analytics into your existing IT ecosystem using tools like Microsoft 365 Security, Microsoft Sentinel (formerly Azure Sentinel), and advanced threat modeling. We help you build a security posture that’s not only reactive but also predictive and adaptive.

With R&B Networks, you can identify threats before they hit, reduce downtime, and make smarter security decisions based on real-time analytics.

🔗 Visit us at: www.rbnetworks.com #PredictiveAnalytics #CyberThreats #MicrosoftSentinel #RBNetworks #SecurityPosture #CyberResilience #SMBsecurity

Text

The Future of Productivity: Generative AI in the IT Workspace

In recent years, the rapid rise of generative AI has sparked a technological revolution across industries. From art and writing to software development and customer support, generative AI is proving to be a transformative force. Nowhere is this impact more pronounced—or more promising—than in the Information Technology (IT) workspace.

As organizations strive to be more agile, efficient, and innovative, the integration of generative AI tools into the IT environment is ushering in a new era of productivity. In this article, we'll explore how generative AI is changing the landscape of IT operations, software development, cybersecurity, and team collaboration—and what the future might hold.

What Is Generative AI?

Before diving into its applications, it's important to define what generative AI is. Generative AI refers to algorithms—often based on deep learning models—that can create new content from existing data. This includes text, images, audio, code, and more. Technologies such as OpenAI’s GPT models, Google’s Gemini, and Anthropic’s Claude are leading examples in this space.

Unlike traditional AI, which analyzes and classifies data, generative AI synthesizes information to produce something new and original. In an IT context, this can mean anything from writing scripts and generating code to automating complex workflows and predicting system failures.

Why Productivity in IT Needs a Boost

The IT workspace has always been complex and fast-paced, with ever-changing requirements and escalating demands:

Rising complexity of software and infrastructure.

Pressure to innovate quickly and release updates on tight deadlines.

Shortage of skilled talent, particularly in areas like cybersecurity and DevOps.

Data overload, making it hard to derive actionable insights quickly.

To cope, IT departments have long relied on automation, cloud services, and collaboration tools. Generative AI represents the next frontier—a leap from automation to intelligent augmentation.

1. Generative AI in Software Development

One of the most impactful applications of generative AI is in software development. Tools like GitHub Copilot, Amazon CodeWhisperer, and ChatGPT have started to redefine how developers work.

a. Code Generation and Completion

Generative AI can write entire functions or suggest code snippets based on plain-language descriptions. Developers no longer need to memorize syntax or boilerplate code—they can focus on logic, architecture, and creativity.

Benefits:

Speeds up development cycles.

Reduces mundane and repetitive tasks.

Minimizes syntax errors and bugs.

b. Debugging and Testing

Generative AI can analyze code, detect logical flaws, and even generate unit tests automatically. AI models trained on massive repositories of open-source projects can offer insights into best practices and optimal coding patterns.

c. Documentation

One of the most dreaded tasks for developers is writing documentation. AI can now generate high-quality docstrings, API explanations, and user manuals based on code and comments—making the software more maintainable.

2. Infrastructure and Operations (DevOps)

DevOps teams are embracing generative AI for its ability to manage complex infrastructure, automate repetitive tasks, and predict system behavior.

a. Automated Configuration

Tools like Terraform or Ansible can now be enhanced with AI to auto-generate configuration files, YAML definitions, and shell scripts. DevOps engineers can describe their requirements in natural language, and the AI handles the implementation.

b. Incident Management

During outages or anomalies, time is critical. Generative AI can assist by:

Diagnosing root causes based on logs and metrics.

Suggesting mitigation steps or running corrective scripts.

Generating post-mortem reports automatically.

c. Predictive Maintenance

By analyzing logs and historical data, AI models can predict potential failures before they happen. Combined with generative capabilities, these systems can even draft emails or messages to alert relevant teams with recommendations.

3. Cybersecurity and Threat Intelligence

The cybersecurity landscape is evolving rapidly, with increasingly sophisticated attacks. Generative AI adds a new layer of defense—and offense.

a. Threat Detection

Machine learning models already analyze behavior for threats. With generative AI, alerts can be enriched with contextual narratives that make it easier for analysts to understand and prioritize them.

b. Automated Response

AI can generate firewall rules, quarantine commands, or SIEM queries in real time. Instead of manual configuration, analysts can validate AI-generated scripts for faster incident response.

c. Simulated Attacks

Generative AI can simulate phishing emails, malware scripts, or attack vectors to test the organization’s defenses—creating a proactive cybersecurity culture.

4. Data Management and Analysis

Data engineers and analysts often spend an inordinate amount of time preparing and interpreting data. Generative AI is dramatically changing this dynamic.

a. Data Querying with Natural Language

With tools like ChatGPT or SQLCodex, users can query databases using plain English:

“Show me the top 5 regions with declining sales in Q4 2024.”

The AI translates this into optimized SQL queries—making analytics accessible to non-technical stakeholders.
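To make the translation step concrete, here is one SQL query an assistant might plausibly produce for that request, run against an in-memory SQLite database. Everything here is an assumption for illustration: the `sales` table, its columns, the sample figures, and the reading of "declining" as "lower in Q4 2024 than in Q3 2024". A real tool would need the actual schema and a confirmed definition of decline.

```python
import sqlite3

# Hypothetical generated query: regions whose Q4 2024 sales fell versus Q3 2024,
# largest drop first, limited to five rows.
QUERY = """
SELECT q3.region, q4.amount - q3.amount AS delta
FROM sales AS q3
JOIN sales AS q4 ON q4.region = q3.region
WHERE q3.quarter = '2024-Q3'
  AND q4.quarter = '2024-Q4'
  AND q4.amount < q3.amount
ORDER BY delta ASC
LIMIT 5
"""


def declining_regions(conn):
    """Run the generated query and return (region, delta) rows."""
    return conn.execute(QUERY).fetchall()


# Made-up schema and data so the query can be checked end to end.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("North", "2024-Q3", 120.0), ("North", "2024-Q4", 90.0),
        ("South", "2024-Q3", 80.0), ("South", "2024-Q4", 95.0),
        ("East", "2024-Q3", 200.0), ("East", "2024-Q4", 150.0),
    ],
)

rows = declining_regions(conn)
print(rows)
```

Running generated SQL against a small known dataset like this is one way to validate the output before trusting it on production data.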

b. Data Cleaning and Transformation

AI can generate Python or ETL scripts to clean and format raw data, saving hours of manual effort. It can also suggest the best transformations based on data profiling.

c. Insight Generation

Generative AI can summarize trends, generate charts, and even create slide decks with key findings—moving analytics from data delivery to data storytelling.

5. Collaboration and Knowledge Management

Beyond individual productivity, generative AI is enhancing team communication and institutional knowledge.

a. Smart Assistants

Integrated with platforms like Slack, Microsoft Teams, or Notion, AI bots can:

Answer IT queries.

Generate meeting summaries.

Draft emails or memos.

Schedule tasks and manage deadlines.

b. Onboarding and Training

AI-powered platforms can deliver personalized training modules, simulate scenarios, or answer questions interactively. New hires can ramp up faster with contextually rich assistance.

c. Documentation and Wikis

Keeping IT documentation current is a perennial problem. Generative AI can crawl internal systems and auto-generate or update internal wikis, significantly reducing information silos.

Challenges and Ethical Considerations

Despite its enormous potential, the adoption of generative AI in IT is not without challenges:

1. Data Privacy and Security

Generative models often require access to sensitive data. Ensuring that this data is not leaked, misused, or retained without consent is critical.

2. Accuracy and Hallucination

Generative AI can sometimes “hallucinate” or produce incorrect information confidently. In IT, where accuracy is paramount, this could lead to costly mistakes.

3. Skills Gap

While AI reduces the need for rote skills, it increases the demand for AI literacy—knowing how to use, interpret, and audit AI output effectively.

4. Job Displacement vs. Augmentation

There’s a legitimate concern that generative AI could displace certain roles. However, current trends suggest a shift in job roles rather than outright elimination. AI is better seen as a copilot, not a replacement.

The Road Ahead: What the Future Holds

As generative AI continues to evolve, here are some key trends likely to shape its future in the IT workspace:

1. Hyper-Automation

Expect even higher levels of automation where entire workflows—incident response, deployment pipelines, data pipelines—are orchestrated by AI with minimal human oversight.

2. Personalized Work Environments

AI could tailor development environments, recommend tools, or adapt dashboards based on an individual’s work habits and past behavior.

3. AI-First Development Platforms

New platforms will emerge that are designed with AI at the core—not just as a plugin. These tools will deeply integrate AI into version control, deployment, and monitoring.

4. AI Governance Frameworks

To ensure responsible use, organizations will adopt frameworks to monitor AI behavior, audit decision-making, and ensure compliance with global standards.

Conclusion

Generative AI is not just another tool in the IT toolbox—it’s a paradigm shift. By augmenting human intelligence with machine creativity and efficiency, it offers a compelling vision of what productivity can look like in the digital age.

From writing better code to managing infrastructure, analyzing data, and improving security, generative AI in the IT workspace is streamlining workflows, sparking innovation, and empowering IT professionals like never before.

But with great power comes great responsibility. To unlock its full potential, IT leaders must invest not just in tools, but in training, governance, and culture—ensuring that generative AI becomes a trusted partner in shaping the future of work.

Text

Fuzz Testing: An In-Depth Guide

Introduction

In the world of software development, vulnerabilities and bugs are inevitable. As systems grow more complex and interact with a wider array of data sources and users, ensuring their reliability and security becomes more challenging. One powerful technique that has emerged as a standard for identifying unknown vulnerabilities is Fuzz Testing, also known simply as fuzzing.

Fuzz testing involves bombarding software with massive volumes of random, unexpected, or invalid input data in order to detect crashes, memory leaks, or other abnormal behavior. It’s a unique and often automated method of discovering flaws that traditional testing techniques might miss. By leveraging fuzz testing early and throughout development, developers can harden applications against unexpected input and malicious attacks.

What is Fuzz Testing?

Fuzz Testing is a software testing technique where invalid, random, or unexpected data is input into a program to uncover bugs, security vulnerabilities, and crashes. The idea is simple: feed the software malformed or random data and observe its behavior. If the program crashes, leaks memory, or behaves unpredictably, it likely has a vulnerability.

Fuzz testing is particularly effective in uncovering:

Buffer overflows

Input validation errors

Memory corruption issues

Logic errors

Security vulnerabilities such as injection flaws or crashes exploitable by attackers

Unlike traditional testing methods that rely on predefined inputs and expected outputs, fuzz testing thrives in unpredictability. It doesn’t aim to verify correct behavior — it seeks to break the system by pushing it beyond normal use cases.

History of Fuzz Testing

Fuzz testing originated in the late 1980s. The term “fuzz” was coined by Professor Barton Miller and his colleagues at the University of Wisconsin in 1988. During a thunderstorm, Miller was remotely logged into a Unix system when the connection degraded and began sending random characters to his shell. The experience inspired him to write a program that would send random input to various Unix utilities.

His experiment exposed that many standard Unix programs would crash or hang when fed with random input. This was a startling revelation at the time, showing that widely used software was far less robust than expected. The simplicity and effectiveness of the technique led to increased interest, and fuzz testing has since evolved into a critical component of modern software testing and cybersecurity.

Types of Fuzz Testing

Fuzz testing has matured into several distinct types, each tailored to specific needs and target systems:

1. Mutation-Based Fuzzing

In this approach, existing valid inputs are altered (or “mutated”) to produce invalid or unexpected data. The idea is that small changes to known good data can reveal how the software handles anomalies.

Example: Modifying values in a configuration file or flipping bits in a network packet.
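A bit-flipping mutator like the one described in the example can be sketched as follows. The function name and the seed input are hypothetical; real mutation-based fuzzers combine many mutation strategies, of which this is only one.

```python
import random


def flip_bits(data, n_flips, rng):
    """Return a copy of `data` with `n_flips` randomly chosen bits inverted.

    The same bit may be picked more than once, so the result can differ
    from the input in fewer than `n_flips` bits.
    """
    if not data:
        return data
    mutated = bytearray(data)
    for _ in range(n_flips):
        position = rng.randrange(len(mutated) * 8)
        mutated[position // 8] ^= 1 << (position % 8)
    return bytes(mutated)


rng = random.Random(0)              # fixed seed so a failing input can be reproduced
seed_input = b'{"port": 8080}'      # a known-good input to mutate
mutant = flip_bits(seed_input, 1, rng)
print(mutant)
```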

2. Generation-Based Fuzzing

Rather than altering existing inputs, generation-based fuzzers create inputs from scratch based on models or grammars. This method requires knowledge of the input format and is more targeted than mutation-based fuzzing.

Example: Creating structured XML or JSON files from a schema to test how a parser handles different combinations.
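A generation-based fuzzer for a JSON parser might look roughly like the sketch below, which builds documents from a tiny hand-written grammar instead of mutating existing files. The grammar, the depth limit, and the choice of awkward characters are assumptions for illustration; a real generator would be driven by the target's schema.

```python
import json
import random


def gen_value(rng, depth=0):
    """Build a random JSON-compatible value from a small hand-written grammar."""
    kinds = ["null", "bool", "int", "str"]
    if depth < 3:                      # cap nesting so generation always terminates
        kinds += ["list", "dict"]
    kind = rng.choice(kinds)
    if kind == "null":
        return None
    if kind == "bool":
        return rng.random() < 0.5
    if kind == "int":
        return rng.randint(-2**31, 2**31)
    if kind == "str":
        # Include characters that parsers often mishandle (quotes, escapes, brackets).
        return "".join(rng.choice('ab"\\\n{}[]') for _ in range(rng.randint(0, 8)))
    if kind == "list":
        return [gen_value(rng, depth + 1) for _ in range(rng.randint(0, 3))]
    return {f"k{i}": gen_value(rng, depth + 1) for i in range(rng.randint(0, 3))}


rng = random.Random(1)
documents = [json.dumps(gen_value(rng)) for _ in range(100)]
print(documents[:3])
```

Because every document is well-formed, the inputs get past the parser's first syntax checks and exercise deeper handling code, which is the main advantage over purely random bytes.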

3. Protocol-Based Fuzzing

This type is specific to communication protocols. It focuses on sending malformed packets or requests according to network protocols like HTTP, FTP, or TCP to test a system’s robustness against malformed traffic.

4. Coverage-Guided Fuzzing

Coverage-guided fuzzers monitor which parts of the code are executed by the input and use this feedback to generate new inputs that explore previously untested areas of the codebase. This type is very effective for high-security and critical systems.

5. Black Box, Grey Box, and White Box Fuzzing

Black Box: No knowledge of the internal structure of the system; input is fed blindly.

Grey Box: Limited insight into the system’s structure; may use instrumentation for guidance.

White Box: Full knowledge of source code or internal logic; often combined with symbolic execution for deep analysis.

How Does Fuzzing in Testing Work?

The fuzzing process generally follows these steps:

Input Selection or Generation: Fuzzers either mutate existing input data or generate new inputs from defined templates.

Execution: The fuzzed inputs are provided to the software under test.

Monitoring: The system is monitored for anomalies such as crashes, hangs, memory leaks, or exceptions.

Logging: If a failure is detected, the exact input and system state are logged for developers to analyze.

Iteration: The fuzzer continues producing and executing new test cases, often in an automated and repetitive fashion.

This loop continues, often for hours or days, until a comprehensive sample space of unexpected inputs has been tested.
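The five steps above can be sketched as a minimal fuzzing loop. The target here is a toy key-value parser with a deliberate bug, and failures are detected as Python exceptions; both are assumptions for illustration. A real harness would run the actual software under test and watch for crashes, hangs, and memory errors as well.

```python
import random


def parse_kv(text):
    """Toy target with a deliberate bug: assumes every field has exactly one '='."""
    result = {}
    for field in text.split(";"):
        key, value = field.split("=")  # raises ValueError otherwise
        result[key] = value
    return result


def fuzz(target, seed_input, iterations, rng):
    """Mutate, execute, monitor, and log, repeated `iterations` times."""
    alphabet = "=;ab"
    failures = []
    for _ in range(iterations):                                   # 5. iteration
        chars = list(seed_input)
        chars[rng.randrange(len(chars))] = rng.choice(alphabet)   # 1. input mutation
        candidate = "".join(chars)
        try:
            target(candidate)                                     # 2. execution
        except Exception as exc:                                  # 3. monitoring
            failures.append((candidate, repr(exc)))               # 4. logging
    return failures


failures = fuzz(parse_kv, "a=1;b=2", 200, random.Random(0))
print(len(failures), failures[:3])
```

Logging the exact failing input alongside the error is what makes each finding reproducible for the developer who has to fix it.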

Applications of Fuzz Testing

Fuzz testing is employed across a wide array of software and systems, including:

Operating Systems: To discover kernel vulnerabilities and system call failures.

Web Applications: To test how backends handle malformed HTTP requests or corrupted form data.

APIs: To validate how APIs respond to invalid or unexpected payloads.

Parsers and Compilers: To test how structured inputs like XML, JSON, or source code are handled.

Network Protocols: To identify how software handles unexpected network packets.

Embedded Systems and IoT: To validate robustness in resource-constrained environments.

Fuzz testing is especially vital in security-sensitive domains where any unchecked input could be a potential attack vector.

Fuzz Testing Tools

One of the notable fuzz testing tools in the market is Genqe. It stands out by offering intelligent fuzz testing capabilities that combine mutation, generation, and coverage-based strategies into a cohesive and user-friendly platform.

Genqe enables developers and QA engineers to:

Perform both black box and grey box fuzzing

Generate structured inputs based on schemas or templates

Track code coverage dynamically to optimize test paths

Analyze results with built-in crash diagnostics

Run parallel tests for large-scale fuzzing campaigns

By simplifying the setup and integrating with modern CI/CD pipelines, Genqe supports secure development practices and helps teams identify bugs early in the software development lifecycle.

Conclusion

Fuzz testing has proven itself to be a valuable and essential method in the realm of software testing and security. By introducing unpredictability into the input space, it helps expose flaws that might never be uncovered by traditional test cases. From operating systems to web applications and APIs, fuzz testing reveals how software behaves under unexpected conditions — and often uncovers vulnerabilities that attackers could exploit.

While fuzz testing isn’t a silver bullet, its strength lies in its ability to complement other testing techniques. With modern advancements in automation and intelligent fuzzing engines like Genqe, it’s easier than ever to integrate fuzz testing into the development lifecycle. As software systems continue to grow in complexity, the role of fuzz testing will only become more central to creating robust, secure, and trustworthy applications.

Text

Valkyrie Log 92

Ninety-two days into our Five-Year Mission, and we have been orbiting Jupiter steadily for twelve days. My Crew has been busy collecting data, observing, and analyzing. The Solar System's beautiful Gas Giant has profound unexplored depths, and my Crew intends to make some headway in our understanding of its mysteries.

One mystery of this planet is how its Great Red Spot, a swirling mass of high-pressure storms, has been shrinking steadily and no one can figure out why. Dr. Wilson, Head Engineer, came up with an idea for a probe prototype that would be able to go deeper into its dense center and collect more reliable data. Paired with Dr. Biggs, the two of them created a fine piece of machinery that worked wonders. It has now returned, successfully, and Dr. Wilson, Dr. Astra, and the Captain have been spending long hours in the Lab, trying to make headway in their understanding of this planet.

Humans, however, need consistent breaks and hours of rest; otherwise their minds and emotions sour considerably. Although Christopher and the Captain work amiably in most cases, there are moments when they do clash. Christopher's expertise is primarily in technology and engineering. He would have expert knowledge of my computer database, for example, and the entire VALKYRIE system. He does have knowledge of physics, but not as deep and profound as the Captain's. The Captain also has an eidetic memory, which gives him a considerable advantage over many, even very intelligent people.

The result is that, at times, the Captain is extremely dismissive of Christopher's input when it comes to physics insights. Today is such a day, as the three of them have been working for twelve point two five hours nonstop, and each passing moment of enduring the Captain's condescending replies and curt responses sends Christopher into a deeper and darker mood.

Christopher is not confrontational by nature, being of a more passive disposition, and so he takes the Captain's dismissiveness without a word, but his broad shoulders hunch over and he does not look the Captain in the eyes. This leads to Christopher combing through some of the data files without the Captain's or Dr. Astra's knowledge. He seems to be intent on an idea of his own and is having me run a diagnostic on his hypothesis.

"Wilson, what are you doing?" demands the Captain abruptly. As he is a head taller than Christopher, it makes for quite an intimidating sight to have him look down at you with his brilliant crystal-blue eyes. Christopher jumps in his skin and begins to fumble and stutter.

"N-nothing - I mean. I was having Val run that diagnostic I mentioned and. . ."

"I told you that that wouldn't be a viable avenue due to unpredictable system vectors. If you would stick with data set I had you on, it would be most helpful to what Dr. Astra and I are currently working through at the moment."

"Yes, sir. . ."

Keeping his hard gaze fixed on Christopher for an extra moment, the Captain turns back to his task, a look of impatience flitting across his face before he returns to his work. Dr. Astra's eyes widen from where she is at her station, but she says nothing. Christopher stands holding the data pad in one hand and clutching his other hand in a tight fist. He looks down at the results of my diagnostic.

VAL: Anomaly detected. Spectral analysis indicates a heavy influx of ionic gas particles that are too condensed for the area observed.

Without a word or a command to me, Christopher deletes my diagnostic and with it the data files. As an AI interface within a machine, I do not feel pain, but if I could it would be in this moment. Christopher's action is unconscionable. Not only is he tampering with the data, which violates Mission policy, but he is going against the virtues of what it means to be a scientist. The Anomaly I detected could be something quite considerable in the advancement of human knowledge and our understanding of this Gas Giant. Christopher is violating his personal and professional ethics for the most grievous reason possible: out of spite.

This action is illogical, and it is these moments that make me see the gulf that exists between myself and my Creators. Their ways are, at the end of the day, inexplicable.

Echoes in My Hull by KintaroTPC


Text

Cloud Cost Optimization: Proven Tactics to Cut Spend Without Sacrificing Performance

Cloud computing offers incredible scalability, flexibility, and performance — but without careful management, costs can spiral out of control. Many businesses discover too late that what starts as a cost-effective solution can quickly become a budgetary burden.

Cloud cost optimization is not just about cutting expenses — it’s about maximizing value. In this blog, we’ll explore proven strategies to reduce cloud spend without compromising performance, reliability, or scalability.

📉 Why Cloud Costs Escalate

Before we dive into tactics, it’s important to understand why cloud bills often balloon:

Overprovisioned resources (more CPU/RAM than needed)

Idle or unused services running 24/7

Lack of visibility into usage patterns

Inefficient storage practices

No tagging or accountability for resource ownership

Ignoring cost-effective services like spot instances

✅ 1. Right-Size Your Resources

Many teams overestimate capacity needs, leaving resources idle.

Optimization Tip: Use tools like AWS Cost Explorer, Azure Advisor, or Google Cloud Recommender to analyze resource utilization and scale down underused instances or switch to smaller configurations.

Examples:

Downgrade from m5.2xlarge to m5.large

Reduce EBS volume sizes

Lower the CPU and memory requests of over-provisioned Kubernetes pods
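As a rough illustration of the right-sizing logic, the sketch below picks the smallest vCPU count that keeps observed peak load under a target. The `InstanceUsage` record, the 60% target, and the power-of-two sizing are assumptions made for this example, not the method used by AWS Cost Explorer, Azure Advisor, or Google Cloud Recommender.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    name: str
    vcpus: int                # currently provisioned vCPUs
    peak_cpu_percent: float   # highest CPU utilization in the review window


def recommended_vcpus(usage: InstanceUsage,
                      target_percent: float = 60.0,
                      min_vcpus: int = 2) -> int:
    """Smallest power-of-two vCPU count that keeps peak load under the target.

    Never recommends more than what is already provisioned.
    """
    needed = usage.vcpus * usage.peak_cpu_percent / target_percent
    size = min_vcpus
    while size < needed:
        size *= 2
    return min(size, usage.vcpus)


# An m5.2xlarge has 8 vCPUs; an m5.large has 2.
web = InstanceUsage(name="web-1", vcpus=8, peak_cpu_percent=12.0)
print(web.name, "->", recommended_vcpus(web), "vCPUs")
```

A real review would also look at memory, network, and disk I/O before downsizing, since CPU alone can be misleading.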

💤 2. Eliminate Idle and Unused Resources

Even seemingly harmless resources like unattached volumes, idle load balancers, and unused snapshots can rack up charges over time.

Optimization Tip: Set up automated scripts or third-party tools (like CloudHealth or Spot.io) to detect and delete unused resources on a regular schedule.
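A detection script can start very small. The sketch below flags volumes that are unattached and older than a grace period. The record shape (`VolumeId`, `Attachments`, `CreateTime`) is an assumption modeled on what an EBS volume listing returns, and the function only reports candidates rather than deleting anything.

```python
from datetime import datetime, timedelta, timezone


def find_unattached(volumes, now, min_age_days=14):
    """Return IDs of volumes with no attachments that are older than min_age_days."""
    cutoff = now - timedelta(days=min_age_days)
    return [
        v["VolumeId"]
        for v in volumes
        if not v["Attachments"] and v["CreateTime"] < cutoff
    ]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
volumes = [
    {"VolumeId": "vol-a", "Attachments": [],
     "CreateTime": datetime(2024, 1, 10, tzinfo=timezone.utc)},
    {"VolumeId": "vol-b", "Attachments": [{"InstanceId": "i-1"}],
     "CreateTime": datetime(2024, 1, 10, tzinfo=timezone.utc)},
    {"VolumeId": "vol-c", "Attachments": [],
     "CreateTime": datetime(2024, 5, 30, tzinfo=timezone.utc)},
]
print(find_unattached(volumes, now))
```

Keeping a grace period and a review step before deletion avoids removing a volume that was detached on purpose a few hours ago.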

🕒 3. Leverage Auto-Scaling and Scheduled Shutdowns

Not all applications need to run at full capacity 24/7. Auto-scaling ensures resources grow and shrink based on actual demand.

Optimization Tip:

Use auto-scaling groups for web and backend servers

Schedule development and staging environments to shut down after hours

Turn off test VMs or containers on weekends
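The scheduling rule above can be expressed as a small decision function that a scheduler calls before starting or stopping an environment. The environment names and the 08:00 to 19:00 working window are assumptions chosen for illustration.

```python
from datetime import datetime


def should_be_running(env: str, when: datetime,
                      start_hour: int = 8, stop_hour: int = 19) -> bool:
    """Production always runs; other environments run on weekdays in work hours."""
    if env == "production":
        return True
    if when.weekday() >= 5:  # Saturday is 5, Sunday is 6
        return False
    return start_hour <= when.hour < stop_hour


print(should_be_running("staging", datetime(2024, 1, 3, 10)))   # Wednesday morning
print(should_be_running("staging", datetime(2024, 1, 6, 10)))   # Saturday morning
```

Under these assumed hours a non-production environment runs 55 of the 168 hours in a week, roughly a third of an always-on schedule.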

💲 4. Use Reserved and Spot Instances

On-demand pricing is convenient, but it’s not always cost-effective.

Reserved Instances (RIs): Commit to a 1- or 3-year term for significant discounts (up to 72% off on-demand pricing on AWS)

Spot Instances: Take advantage of spare capacity at up to 90% lower cost (ideal for batch processing or fault-tolerant apps, since the provider can reclaim the capacity at short notice)

Optimization Tip: Use a blended strategy — combine on-demand, reserved, and spot instances for optimal flexibility and savings.
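To see what a blended strategy does to the bill, the arithmetic can be sketched as follows. The 40% and 70% discounts and the $0.10 hourly rate are placeholder assumptions, well below the "up to" figures quoted above; real discounts depend on instance type, term, and region.

```python
def blended_cost(on_demand_rate: float, hours_by_model: dict,
                 reserved_discount: float = 0.40,
                 spot_discount: float = 0.70) -> float:
    """Total cost for a mix of on-demand, reserved, and spot instance hours."""
    multipliers = {
        "on_demand": 1.0,
        "reserved": 1.0 - reserved_discount,
        "spot": 1.0 - spot_discount,
    }
    return on_demand_rate * sum(
        hours * multipliers[model] for model, hours in hours_by_model.items()
    )


mix = {"on_demand": 100, "reserved": 500, "spot": 400}
all_on_demand = blended_cost(0.10, {"on_demand": 1000})
blended = blended_cost(0.10, mix)
print(f"all on-demand: ${all_on_demand:.2f}, blended: ${blended:.2f}")
```

With these assumed numbers the same 1,000 instance-hours cost about $52 instead of $100, which shows why the steady baseline goes on reserved capacity and the interruptible work goes on spot.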

🗂️ 5. Optimize Storage Costs

Storage often goes unchecked in cloud environments. Tiered storage models offer cost savings based on access frequency.

Optimization Tip:

Move infrequently accessed data to cheaper storage (e.g., S3 Glacier or Azure Archive Storage)

Delete outdated logs or compress large files

Use lifecycle policies to automate archival
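A lifecycle policy is usually just a small declarative document. The sketch below builds one rule in the general shape of an S3 lifecycle configuration (move objects under a prefix to Glacier, then expire them). The helper name, prefix, and day counts are assumptions for illustration; check the field names against the current S3 documentation before applying anything like this to a bucket.

```python
import json


def log_archival_rule(prefix: str, archive_after_days: int,
                      delete_after_days: int) -> dict:
    """Build a lifecycle configuration with one archive-then-expire rule."""
    if delete_after_days <= archive_after_days:
        raise ValueError("objects must be archived before they expire")
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.strip('/')}",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": archive_after_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": delete_after_days},
            }
        ]
    }


policy = log_archival_rule("logs/", archive_after_days=90, delete_after_days=365)
print(json.dumps(policy, indent=2))
```

Keeping the policy in version control alongside the infrastructure code makes the retention rules reviewable rather than something set once in a console.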

🧩 6. Implement Cost Allocation Tags

Tagging resources by project, environment, team, or client provides visibility into who is using what — and how much it costs.

Optimization Tip:

Standardize a tagging policy (e.g., env:production, team:marketing, project:salzen-app)

Use cost reports to hold teams accountable for resource usage
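Both tips can be checked mechanically. The sketch below flags resources that are missing required tags and rolls up monthly cost by a tag key. The resource records, the `REQUIRED_TAGS` policy, and the cost figures are made up for illustration.

```python
from collections import defaultdict

REQUIRED_TAGS = ("env", "team", "project")  # assumed tagging policy


def missing_tags(resource: dict) -> list:
    """Return the required tag keys that the resource does not carry."""
    tags = resource.get("tags", {})
    return [key for key in REQUIRED_TAGS if key not in tags]


def cost_by_tag(resources: list, key: str) -> dict:
    """Sum monthly cost per value of the given tag; untagged spend is grouped."""
    totals = defaultdict(float)
    for resource in resources:
        value = resource.get("tags", {}).get(key, "untagged")
        totals[value] += resource["monthly_cost"]
    return dict(totals)


resources = [
    {"id": "i-1", "monthly_cost": 120.0,
     "tags": {"env": "production", "team": "marketing", "project": "salzen-app"}},
    {"id": "i-2", "monthly_cost": 80.0,
     "tags": {"env": "staging", "team": "marketing"}},
    {"id": "vol-9", "monthly_cost": 15.0, "tags": {}},
]
print(cost_by_tag(resources, "team"))
print({r["id"]: missing_tags(r) for r in resources if missing_tags(r)})
```

The size of the "untagged" bucket is a useful metric on its own: it shows how much spend nobody is currently accountable for.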

📊 7. Monitor, Alert, and Analyze

Visibility is key to continuous optimization. Without real-time monitoring, overspend can go unnoticed until the bill arrives.

Optimization Tip:

Use native tools like AWS Budgets, Azure Cost Management, or GCP Billing Reports

Set budget alerts and forecast future usage

Perform monthly reviews to track anomalies or spikes
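Spike tracking does not have to wait for the monthly review. A minimal sketch, assuming you already export daily cost totals: flag any day whose spend is far above the mean of the preceding week. The 7-day window and 3-sigma threshold are arbitrary starting points, not values taken from AWS Budgets, Azure Cost Management, or GCP Billing Reports.

```python
from statistics import mean, stdev


def spend_spikes(daily_costs, window=7, threshold=3.0):
    """Return indices of days whose cost exceeds mean + threshold * stdev
    of the preceding `window` days."""
    spikes = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Floor sigma at 1% of the mean so a perfectly flat history
        # does not flag trivial changes.
        if daily_costs[i] > mu + threshold * max(sigma, 0.01 * mu):
            spikes.append(i)
    return spikes


costs = [100, 102, 98, 101, 99, 100, 103, 250, 101]
print(spend_spikes(costs))
```

Here only the day with a cost of 250 is flagged; the following day is judged against a window that now includes the spike, so it passes quietly.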

🔧 8. Use Third-Party Cost Optimization Tools

Cloud-native tools are great, but third-party solutions can provide more advanced analytics, recommendations, and automation, especially across multiple clouds.

Popular Options:

CloudHealth by VMware

Apptio Cloudability

Spot by NetApp

Harness Cloud Cost Management

These tools help with governance, forecasting, and even automated resource orchestration.

🧠 Bonus: Adopt a FinOps Culture

FinOps is a financial management discipline that brings together finance, engineering, and product teams to optimize cloud spending collaboratively.

Optimization Tip:

Promote cost-awareness across departments

Make cost metrics part of engineering KPIs

Align cloud budgets with business outcomes

🧭 Final Thoughts

Cloud cost optimization isn’t a one-time project — it’s a continuous, data-driven process. With the right tools, policies, and cultural practices, you can control costs without compromising the performance or flexibility the cloud offers.

Looking to reduce your cloud bill by up to 40%? Salzen Cloud helps businesses implement real-time cost visibility, automation, and cost-optimized architectures. Our experts can audit your cloud setup and design a tailored savings strategy — without disrupting your operations.


Text

AI and ML in Cybersecurity: How Artificial Intelligence is Transforming Threat Detection and Prevention

The digital landscape is a battleground. Every day, new threats emerge, seeking to exploit vulnerabilities and compromise sensitive information. Traditional cybersecurity measures, while essential, are often reactive, struggling to keep pace with the sheer volume and sophistication of modern cyberattacks. This is where Artificial Intelligence (AI) and Machine Learning (ML) step onto the scene, fundamentally reshaping how we approach threat detection and prevention. These technologies are no longer theoretical concepts; they are actively deployed, offering a comprehensive and real-time response to the ever-evolving cyber threat matrix.

A Comprehensive Look at the Role of AI and Machine Learning in Identifying and Responding to Cyber Threats in Real Time

At its core, cybersecurity relies on identifying anomalies and malicious activities. This is precisely where AI and ML excel. Unlike human analysts who can be overwhelmed by vast datasets, AI algorithms can sift through very large volumes of network traffic, system logs, and threat intelligence feeds far faster than any manual review. This allows for the identification of suspicious patterns, unusual behaviors, and indicators of compromise that traditional, signature-based detection methods would likely miss.

Machine learning, a subset of AI, empowers systems to learn from data without explicit programming. In cybersecurity, this translates to systems that can constantly adapt and improve their threat detection capabilities. For instance, a machine learning model can be trained on a massive dataset of both legitimate and malicious network traffic. Over time, it learns to distinguish between normal operations and potential threats, even those that haven't been seen before. This real-time analysis and adaptive learning are critical in an environment where new malware strains and attack techniques emerge daily.

Beyond detection, AI and ML are also transforming response mechanisms. Once a threat is identified, AI-powered systems can initiate automated responses, such as isolating infected devices, blocking malicious IP addresses, or rolling back compromised systems. This drastically reduces the time between detection and remediation, minimizing the potential damage of a cyberattack.
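The automated response step can be pictured as a playbook that maps a detected threat type to containment actions. In this sketch the action functions only return descriptions of what they would do; the alert fields, the playbook contents, and the 0.9 confidence cutoff are all hypothetical, and a real system would call firewall or endpoint-management APIs instead.

```python
def isolate_device(alert: dict) -> str:
    return f"isolate device {alert['device']}"


def block_ip(alert: dict) -> str:
    return f"block IP {alert['source_ip']}"


def rollback_system(alert: dict) -> str:
    return f"roll back {alert['device']} to last known-good snapshot"


# Hypothetical playbook: threat type -> ordered containment actions.
PLAYBOOK = {
    "malware": [isolate_device, rollback_system],
    "brute_force": [block_ip],
}

ESCALATE = ["escalate to human analyst"]


def respond(alert: dict, min_confidence: float = 0.9) -> list:
    """Run the playbook for high-confidence alerts; otherwise hand off to a person."""
    actions = PLAYBOOK.get(alert["type"])
    if actions is None or alert["confidence"] < min_confidence:
        return list(ESCALATE)
    return [action(alert) for action in actions]


alert = {"type": "malware", "confidence": 0.97,
         "device": "laptop-42", "source_ip": "203.0.113.7"}
print(respond(alert))
```

Gating automation on detection confidence is a deliberate choice here: isolating a device on a false positive has a cost too, so uncertain or unknown alerts go to an analyst.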

How AI Algorithms Are Being Used to Detect Patterns, Predict Attacks, and Automate Response Systems

The power of AI in cybersecurity stems from its ability to process and understand complex data relationships. Here's a closer look at its applications:

Pattern Detection: AI algorithms, particularly those leveraging deep learning, are adept at identifying intricate patterns that signify malicious activity. This includes subtle changes in user behavior, unusual login attempts, abnormal data transfers, or deviations from established network baselines. For example, a deep learning model could detect that an employee who normally accesses specific files from their office IP is suddenly trying to access highly sensitive data from a foreign IP address at 3 AM – a clear indicator of a potential compromise.
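The 3 AM example can be made concrete with a hand-written baseline check. This is a rule-based stand-in, not a deep learning model: a trained model would learn the baseline and the weights from data, whereas here the `Baseline` fields and the score weights are assumptions picked for illustration.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    usual_countries: set    # countries the user normally logs in from
    usual_hours: range      # local hours the user is normally active
    usual_sensitivity: int  # highest data sensitivity level normally accessed


def risk_score(event: dict, baseline: Baseline) -> int:
    """Add up how many ways an access event deviates from the user's baseline."""
    score = 0
    if event["country"] not in baseline.usual_countries:
        score += 2
    if event["hour"] not in baseline.usual_hours:
        score += 1
    if event["sensitivity"] > baseline.usual_sensitivity:
        score += 2
    return score


employee = Baseline(usual_countries={"US"}, usual_hours=range(8, 19),
                    usual_sensitivity=1)
normal = {"country": "US", "hour": 10, "sensitivity": 1}
suspicious = {"country": "RO", "hour": 3, "sensitivity": 3}
print(risk_score(normal, employee), risk_score(suspicious, employee))
```

No single deviation is conclusive on its own (people travel and work late), which is why the signals are combined into a score rather than each triggering an alert.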