#opengl triangles


Text

homura rasterization

#art#digital art#digital painting#madoka magica#puella magi madoka magica#pmmm#homura#homura akemi#medibang#my art#mizpah.art#opengl triangles

1K notes


Text

I have an idea on how to handle rendering in Project Special K in a general way but without constantly cycling state for every goddamn cliff or whatnot.

(You should be aware that the cliffs have separate mesh parts for the grass and rock bits, so they can have different textures and shaders. Yes, even the shaders, because the grass dynamically changes color through the year and switches to a snowy base texture in winter. And that's saying nothing about the rivers!)

So instead of having Model::Draw do so directly, have it put the required data (texture set, VAO, matrices) for each mesh into a special structure, which goes into a bucket. When the bucket gets too big or is manually flushed, its contents are first sorted by VAO, then texture, and then actually rendered in that order.

(For those who don't know: a Vertex Array Object is what defines a mesh in OpenGL, binding together a Vertex Buffer Object (the vertex data cloud), an Element Buffer Object (indices, so you can have two connected triangles reuse their common vertices), and the attribute data (how to interpret the VBO) into one easily-reusable thing. At least that's how I understand it by now.)
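To make the index-reuse point concrete, here is a minimal sketch in plain Python (no OpenGL calls). The names `vertices`, `indices`, and `expand` are made up for illustration; the lists only stand in for what a VBO and an EBO would hold.

```python
# Four unique corners of a quad (x, y); this plays the role of the VBO.
vertices = [
    (-0.5, -0.5),  # 0: bottom-left
    (0.5, -0.5),   # 1: bottom-right
    (0.5, 0.5),    # 2: top-right
    (-0.5, 0.5),   # 3: top-left
]

# Six indices describing two triangles; this plays the role of the EBO.
# Corners 0 and 2 are shared, so they are stored once and referenced twice.
indices = [0, 1, 2, 2, 3, 0]


def expand(vertices, indices):
    """Return the triangles that would be assembled from the index list."""
    corners = [vertices[i] for i in indices]
    return [tuple(corners[i:i + 3]) for i in range(0, len(corners), 3)]


triangles = expand(vertices, indices)
```

Without the index list, the same quad would need six stored vertices instead of four.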

So when drawing for example the ground for an acre with a few cliff tiles, the bucket would be filled with mostly plain ground tiles, and those cliff tiles of various connectivity and rotations. The plain ground has one VAO and one texture, while the cliffs have two VAOs and textures each, and different cliffs have different VAOs because a straight side part is of course not the same as a corner part.

Sorting by VAO, "emptying" the bucket would draw the plain ground, the rocky sides of the straight cliffs, the sides of the corner cliffs, and the grassy ends of the cliffs separately, but grouped exactly like that, with the groups appearing in whatever order the VAOs were assigned a number by OpenGL.

That reduces the number of state-changing calls (binding textures, VAOs, shaders etc) considerably compared to drawing the entire tile as a whole! Imagine having a full acre's worth of ground tiles (16×16) where some are flat grassy ground, some are stone paths, some are cliffs of some shape, and some are a river. Naively going through the map from left to right, top to bottom, worst case scenario you'd have to switch out a new set of VAO, shader, and texture binds for every individual tile.

And if you want to force the same order as Animal Crossing New Horizons (which is apparently: players and villagers, objects, buildings, ground, sky, translucent bits, weather effects), just empty the bucket in preparation, render the players and villagers, empty the bucket again, render the placed and dropped objects, empty the bucket...

Note that in that sentence "render" means "put the request to render in the bucket" and "empty" means "sort then actually render the bucket's contents".
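The bucket idea can be sketched roughly like this. It is plain Python with no real OpenGL calls: `RenderRequest`, `RenderBucket`, the `capacity` default, and the `log` list are all hypothetical names, and the log just records what a real renderer would bind and draw.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RenderRequest:
    vao: int      # hypothetical VAO handle
    texture: int  # hypothetical texture handle
    label: str    # stands in for matrices and other per-draw data


@dataclass
class RenderBucket:
    capacity: int = 256
    requests: list = field(default_factory=list)
    log: list = field(default_factory=list)  # what a real renderer would do

    def render(self, request):
        """'Render' only queues the request; the bucket flushes when full."""
        self.requests.append(request)
        if len(self.requests) >= self.capacity:
            self.empty()

    def empty(self):
        """Sort by VAO, then texture, and bind only when the state changes."""
        bound_vao = bound_texture = None
        for req in sorted(self.requests, key=lambda r: (r.vao, r.texture)):
            if req.vao != bound_vao:
                self.log.append(f"bind vao {req.vao}")
                bound_vao = req.vao
            if req.texture != bound_texture:
                self.log.append(f"bind texture {req.texture}")
                bound_texture = req.texture
            self.log.append(f"draw {req.label}")
        self.requests.clear()


bucket = RenderBucket()
for label, vao, tex in [("ground A", 1, 10), ("cliff rock", 2, 20),
                        ("ground B", 1, 10), ("cliff grass", 3, 10)]:
    bucket.render(RenderRequest(vao, tex, label))
bucket.empty()
binds = [entry for entry in bucket.log if entry.startswith("bind")]
```

In this toy run the two ground tiles end up next to each other, so they share one VAO bind and one texture bind even though they were submitted with a cliff part between them.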

Does any of that make sense?

10 notes


Text

When whipping up Railgun in two weeks' time for a game jam, I aimed to make the entire experience look and feel as N64-esque as I could muster in that short span. But the whole game was constructed in Godot, a modern engine, and targeted for PC. I just tried to look the part. Here is the same bedroom scene running on an actual Nintendo 64:

I cannot overstate just how fucking amazing this is.

Obviously this is not using Godot anymore, but an open source SDK for the N64 called Libdragon. The 3D support is still very much in active development, and it implements-- get this-- OpenGL 1.1 under the hood. What the heck is this sorcery...

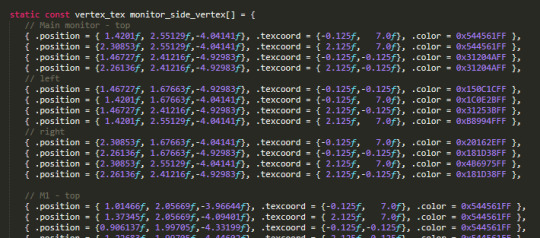

UH OH, YOU'VE BEEN TRAPPED IN THE GEEK ZONE! NO ESCAPE NO ESCAPE NO ESCAPE EHUEHUHEUHEUHEUHUEH

While there is a gltf importer for models, I didn't want to put my faith in a kinda buggy importer with an already (in my experience) kinda buggy model format. I wanted more control over how my mesh data is stored in memory, and how it gets drawn. So instead I opted for a more direct solution: converting every vertex of every triangle of every object in the scene by fucking hand.

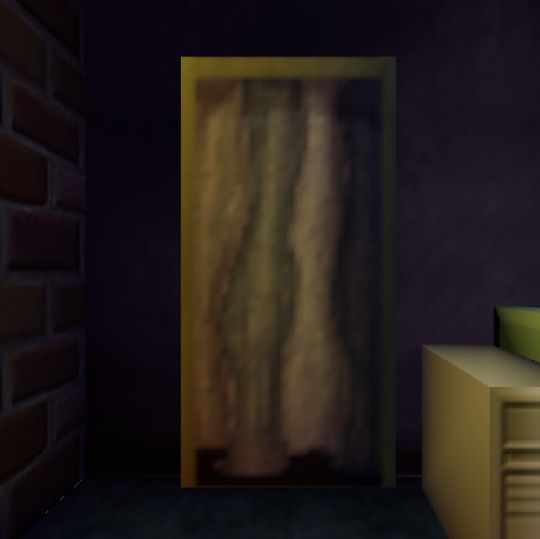

THERE ARE NEARLY NINE HUNDRED LINES OF THIS SHIT. THIS TOOK ME MONTHS.

And these are just the vertices. I had to figure out triangle drawing PER VERTEX. You have to construct each triangle counterclockwise in order for the front of the face to be, well, the front. In addition, starting the next tri with the last vertex of the previous tri is the most efficient, so I plotted out so many diagrams to determine how to most efficiently draw each mesh.

And god the TEXTURES. When I painted the textures for this scene originally, I went no larger than 64 x 64 pixels for each. The N64 has an infamously minuscule texture cache of 4 KB, and while there were some different formats to try and make the most of it, I previously understood this resolution to be the maximum. Guess what? I was wrong! You can go higher. Tall textures, such as the closet and hallway doors, were stored as 32 x 64 in Godot. On the actual N64, however, I chose the CI4 texture format, aka 4-bit color index. I can choose a palette of 16 colors, and in doing so bump it up to 48 x 84.
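Going back to the counterclockwise rule for a moment: one way to check a hand-written triangle is the sign of its area. The sketch below is plain Python, assumes 2D points with y pointing up, and uses made-up function names. The strip helper shows one standard way of reusing vertices between neighboring triangles; it is not necessarily the ordering used in the scene described here.

```python
def signed_area(a, b, c):
    """Twice the signed area of triangle abc; positive means counterclockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def is_front_facing(a, b, c):
    """Under OpenGL's default convention, counterclockwise is the front."""
    return signed_area(a, b, c) > 0


def strip_to_triangles(strip):
    """Expand a triangle strip, flipping every other triangle to keep winding."""
    triangles = []
    for i in range(len(strip) - 2):
        a, b, c = strip[i], strip[i + 1], strip[i + 2]
        triangles.append((a, b, c) if i % 2 == 0 else (b, a, c))
    return triangles


quad_strip = [(0, 0), (1, 0), (0, 1), (1, 1)]
quad_triangles = strip_to_triangles(quad_strip)
```

Four strip vertices yield two triangles here, and both come out counterclockwise.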

On the left, the original texture in Godot at 32 x 64px. On the right, an updated texture on the N64 at 48 x 84px. Latter screenshot taken in the Ares emulator.

The window, previously the same smaller size, is now a full 64 x 64 CI4 texture mirrored once vertically. Why I didn't think of this previously in Godot I do not know lol

Similarly, the sides of the monitors in the room? A single 32 x 8 CI4 texture. The N64 does a neat thing where you can specify the number of times a texture repeats or mirrors on each axis, and clip it afterwards. So I draw a single vent in the texture, mirror it twice horizontally and 4 times vertically, adjusting the texture coordinates so the vents sit toward the back of the monitor.
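The mirroring trick boils down to a small piece of address arithmetic. This is a hedged sketch of mirrored wrapping in general, not of how the N64 hardware or Libdragon implements it; `mirrored_texel` is a made-up name.

```python
def mirrored_texel(coord, size):
    """Map an integer texel coordinate onto a texture mirrored every `size` texels."""
    period = 2 * size
    coord %= period  # Python's % is non-negative for a positive period
    return coord if coord < size else period - 1 - coord


# Walking across 12 texels of a 4-texel-wide texture: forward, backward, forward.
row = [mirrored_texel(x, 4) for x in range(12)]
```

A single small drawing therefore covers a much larger surface without using any extra texture memory.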

The bookshelf actually had to be split up into two textures for the top and bottom halves. Due to the colorful array of books on display, a 16 color palette wasn't enough to show it all cleanly. So instead these are two CI8 textures, an 8-bit color index so 256 colors per half!! At a slightly bumped up resolution of 42 x 42. You can now kind of sort of tell what the mysterious object on the 2nd shelf is. It's. It is a sea urchin y'all it is in the room of a character that literally goes by Urchin do ddo you get it n-

also hey do u notice anything coo,l about the color of the books on each shelf perhaps they also hjint at things about Urchin as a character teehee :3c

I redid the ceiling texture anyways cause the old one was kind of garbage (simple noise that somehow made the edges obvious when tiled). Not only is it still 64px, but it's now an I4 texture, aka 4-bit intensity. There's no color information here, it's simply a grayscale image that gets blended over the vertex color. So it's half the size in memory now!

Similarly the ceiling fan shadow now has a texture on it (it was previously just a black polygon). The format is IA4, or 4-bit intensity alpha. 3 bits of intensity (b/w), 1 bit of alpha (transparency). It's super subtle but it now has some pleasing vertex colors that complement the lighting in the room!
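The texture sizes above can be sanity-checked with a little arithmetic. This sketch rests on two assumptions that are not stated in the post: that each texture row is padded to 8 bytes in texture memory, and that color-indexed formats leave only half of the 4 KB for texels because the palette takes the other half. The function names are made up.

```python
TMEM_BYTES = 4096  # total N64 texture memory, per the post
BITS_PER_TEXEL = {"CI4": 4, "I4": 4, "IA4": 4, "CI8": 8, "RGBA16": 16}


def tmem_bytes(width, height, fmt):
    """Bytes needed for the texels, with each row padded to 8 bytes (assumed)."""
    row_bytes = (width * BITS_PER_TEXEL[fmt] + 7) // 8
    padded_row = (row_bytes + 7) // 8 * 8
    return padded_row * height


def fits(width, height, fmt):
    """Color-indexed formats are assumed to leave half of TMEM for texels."""
    budget = TMEM_BYTES // 2 if fmt.startswith("CI") else TMEM_BYTES
    return tmem_bytes(width, height, fmt) <= budget


door = tmem_bytes(48, 84, "CI4")    # the 48 x 84 door texture
shelf = tmem_bytes(42, 42, "CI8")   # one half of the bookshelf
```

Under those assumptions both the 48 x 84 CI4 door and the 42 x 42 CI8 bookshelf halves land just under the 2 KB mark, which would explain the slightly odd dimensions.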

Left, Godot. Right, N64. All of the texture resolutions either stayed the same, or got BIGGER thanks to the different texture formats the N64 provides. Simply put:

THE SCENE LOOKS BETTER ON THE ACTUAL N64.

ALSO IT RUNS AT 60FPS. MOSTLY*.

*It depends on the camera angle, as I tried to order the draw calls of everything in the scene to render as efficiently as I could for the most common viewing angles. Even then there are STILL improvements I know I can make, particularly with disabling the Z-buffer for some parts of the room. And I still want to add more to the scene: ambient sounds, and if I can manage it, the particles of dust that swirl around the room.

Optimization is wild, y'all. But more strikingly... fulfilling a childhood dream of making something that actually renders and works on the first video game console I ever played? Holy shit. Seeing this thing I made on this nearly thirty-year-old console, on this fuzzy CRT, is such a fucking trip. I will never tire of it.

46 notes


Text

on the one hand, codeblr is full of productivity motivation posts and cute bulleted notetaking. and it's cool to see people learning! one of the things i love about programming is that anyone can learn it, there's a much lower barrier to entry than many other stem fields where you need to go to grad school or something to become proficient.

but what i want is like. unhinged system programmers making bootloaders that print "die" and then brick your computer. i want posts written by a transfem in cat ears who hasn't showered in weeks about how they found a bug in opengl that renders pyramids inside out and how they used it to see inside lara croft's triangle titties. i want to see bespoke operating systems written for nokia brick phones that run doom. where is that side of codeblr

#and i'm not kidding#codeblr#progblr#i'm trying my best but tbh i am just not that good at programming

37 notes


Text

Surprise! I am learning graphics programming again.

This time I am directly learning Sokol, which is a set of super lightweight C headers (good for Zig, which I plan on making my game in) for platform-independent graphics (and sound and other stuff). And I am getting the THEORY for graphics from learnopengl.com; all of the OpenGL examples have been ported to Sokol and I've been cross-referencing with those.

All I want to learn is quads, texturing, and custom buffers (textures again).

For those unfamiliar with graphics, basically you define geometry with vertices (which make up triangles), and you use shaders (which are just programs that run on the gpu) to transform that geometry into pixels on a screen. At this stage you can apply input colors and sample textures and stuff.

Also instead of my farming game, I am thinking of making a smaller scale game that I may talk about more here soon.

For just a teaser, it's gonna be a horror game???

12 notes


Text

ogrumm replied to your post

Huh, are you projecting the triangles? Looks like something is getting transformed

so the way this shader works is that i manually draw a quad inside the opengl clipping box aligned right up against z = -1 at the far clipping plane, with no perspective matrix applied. and then i multiply the given points (which are just like, V3 ±1 ±1 -1) by the inverse of the perspective*view matrix to convert them into 'world space', and then i convert those to spherical coordinates to project the celestial sphere as seen from that particular point.
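The last step, going from a world-space direction to spherical coordinates, might look something like this in plain Python. The axis convention (+Y up, -Z as longitude zero) is an assumption, since the post doesn't say which one the shader uses, and `direction_to_lat_long` is a made-up name.

```python
import math


def direction_to_lat_long(x, y, z):
    """Convert a world-space view direction to (latitude, longitude) in degrees.

    Assumes +Y is up and -Z is longitude zero; other conventions only
    change which components go into asin and atan2.
    """
    length = math.sqrt(x * x + y * y + z * z)
    latitude = math.degrees(math.asin(y / length))
    longitude = math.degrees(math.atan2(x, -z))
    return latitude, longitude


forward = direction_to_lat_long(0.0, 0.0, -1.0)
right = direction_to_lat_long(1.0, 0.0, 0.0)
up_diagonal = direction_to_lat_long(0.0, 1.0, -1.0)
```

Drawing a line wherever latitude or longitude is close to a multiple of some step would give a lat/long grid.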

the actual problem in play here was that i was just transposing the view matrix when i set it. b/c i wasn't sure if my code was doing the thing i expected wrt opengl's column-major matrices. so, same exact calculations except one of these has a matrix transposed:

i can't say why the broken version looks precisely the way it does, just, the transposition was definitely the thing wrong there. now i have lat/long lines as expected!
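For anyone hitting the same column-major confusion, here is a small plain-Python sketch of why it matters. The helper names are made up; the point is only that flattening a matrix row by row and handing it to an API that reads column by column gives you the transpose.

```python
def flatten_column_major(rows):
    """Flatten a matrix given as rows into column-major order."""
    size = len(rows)
    return [rows[r][c] for c in range(size) for r in range(size)]


def flatten_row_major(rows):
    """Flatten row by row; read back as column-major, this is the transpose."""
    return [value for row in rows for value in row]


# A translation by (5, 6, 7), written the way it appears on paper.
translation = [
    [1, 0, 0, 5],
    [0, 1, 0, 6],
    [0, 0, 1, 7],
    [0, 0, 0, 1],
]

column_major = flatten_column_major(translation)
row_major = flatten_row_major(translation)
```

In the column-major array the translation sits in elements 12 to 14; in the row-major one those slots hold zeros. `glUniformMatrix4fv` has a `transpose` parameter for exactly this situation, so the fix is either that flag or the data layout, not both.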

4 notes


Text

Mesh topologies: done!

Followers may recall that on Thursday I implemented wireframes and Phong shading in my open-source Vulkan project. Both these features make sense only for 3-D meshes composed of polygons (triangles in my case).

The next big milestone was to support meshes composed of lines. Because of how Vulkan handles line meshes, the additional effort to support other "topologies" (point meshes, triangle-fan meshes, line-strip meshes, and so on) is slight, so I decided to support those as well.

I had a false start where I was using integer codes to represent different topologies. Eventually I realized that defining an "enum" would be a better design, so I did some rework to make it so.
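As a rough illustration of the enum design (in Python rather than the project's Java, and with hypothetical names throughout), the values below mirror Vulkan's `VkPrimitiveTopology` codes, while `primitive_count` is a made-up helper:

```python
from enum import Enum


class Topology(Enum):
    """Mesh topologies; values match Vulkan's VkPrimitiveTopology codes."""
    POINT_LIST = 0
    LINE_LIST = 1
    LINE_STRIP = 2
    TRIANGLE_LIST = 3
    TRIANGLE_STRIP = 4
    TRIANGLE_FAN = 5


def primitive_count(topology, vertex_count):
    """How many points, lines, or triangles a vertex count produces."""
    if topology is Topology.POINT_LIST:
        return vertex_count
    if topology is Topology.LINE_LIST:
        return vertex_count // 2
    if topology is Topology.LINE_STRIP:
        return max(vertex_count - 1, 0)
    if topology is Topology.TRIANGLE_LIST:
        return vertex_count // 3
    return max(vertex_count - 2, 0)  # triangle strips and fans


fan_triangles = primitive_count(Topology.TRIANGLE_FAN, 6)
```

Compared with bare integer codes, an invalid value such as `Topology(99)` fails immediately instead of silently flowing through the engine.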

I achieved the line-mesh milestone earlier today (Monday) at commit ce7a409. No screenshots yet, but I'll post one soon.

In parallel with this effort, I've been doing what I call "reconciliation" between my Vulkan graphics engine and the OpenGL engine that Yanis and I wrote last April. Reconciliation occurs when I have 2 classes that do very similar things, but the code can't be reused in the form of a library. The idea is to make the source code of the 2 versions as similar as possible, so I can easily see the differences. This facilitates porting features and fixes back and forth between the 2 versions.

I'm highly motivated to make my 2 engine repos as similar as possible: not just similar APIs, but also the same class/method/variable names, the same coding style, similar directory structures, and so on. Once I can easily port code back and forth, my progress on the Vulkan engine should accelerate considerably. (That's how the Vulkan project got user-input handling so quickly, for instance.)

The OpenGL engine will also benefit from reconciliation. Already it's gained a model-import package. That's something we never bothered to implement last year. Last month I wrote one for the Vulkan engine (as part of the tutorial) and already the projects are similar enough that I was able to port it over without much difficulty.

#open source#vulkan#java#software development#accomplishments#github#3d graphics#coding#jvm#3d mesh#opengl#polygon#3d model#making progress#work in progress#topology#milestones

2 notes


Text

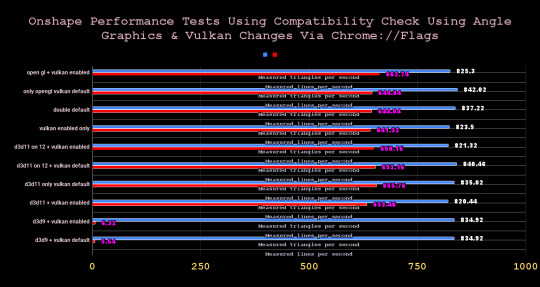

So!!!

The big reveal is that Vulkan makes everything perform worse, except for using d3d9 & only for a terrible bump in measured lines

All of these are million(s +) for the numbers averaged & written down

Use d3d11 on 12, using Chrome, for the best average when taking into account an AMD Ryzen 7 5800X 8-core processor, 64 GB of RAM, & an Nvidia GeForce RTX 3080

Use OpenGL for slightly better measured triangles

Use OpenGL with Vulkan for outright best measured lines

Double default on chrome doesn't get anything to become the best average, but it is close.

Check the averages in the link shared for the results of everything.

#science#onshape#cad design#google chrome#angle graphics#vulkan#performance metrics#strange aeons#3d printing

0 notes

Text

Assignment #1 – OpenGL and JOGL

Overview: The objective of this assignment is to ensure that you are sufficiently familiar with the structure of applications written in JOGL, OpenGL, and GLSL, which we will be using this semester. The assignment is to make several relatively simple modifications to Program 2.6 from the textbook. Your program will draw an isosceles triangle that moves around the screen in various ways, and…

0 notes

Text

Happy New Year!! I missed a day again #24

Hope you all had a safe and fun new years/eve. I know I did. First one since turning 21, so also my first* time being drunk. It's pretty fun I think.

Like it says in the title, I missed a day again. I want to make note of it, but I don't really think it's too big of a deal. I was having fun. It's okay to have a day off y'know.

Anyways, into the meat.

Rust, and specifically the Rust toolchain, is the best workflow I've ever experienced. Better than C++ by lightyears, and even better than Java. I haven't been entirely problem free, but every problem was clear and I could fix it in about 10 minutes or less. I don't fully know the language and its features, so I'm relying a lot on the tutorial I'm following for correct syntax and whatnot, but it's intuitive enough that I can read it with my level of programming experience (not expert, not novice, idk exactly).

I need to do the usual tutorial pathway for any new language eventually, but for now, getting the OpenGL "hello, triangle" program written is technically done. In about 6 hours of programming across 2 days. Maybe less.

It took easily 20-30 hours across 2 weeks for me to get to this same point in the Vulkan with C++ "Hello, triangle" tutorial. And that's not counting my first attempt where I learned what I needed to know about the C++ toolchain, and how to set up my workspace, and all the little tiny details that break everything when they're wrong. That first attempt was probably over 100 hours over the course of a month.

AND I DIDN'T EVEN GET THE STUPID THING TO WORK.

If you want to make a game or application, and you are struggling to get your programs to compile or work or whatever bs, please look into Rust. It's beautiful.

Anyway, that said, this week is supposed to be the one where I make a multimedia project. I think I'll try and draw something and make a little guy in a room that can jump around once I get through the rest of the tutorial. I hope it covers loading textures and in-depth toolchain configuration and whatnot.

I don't think I can confidently animate anything, at least not in Krita. Who or whatever this character is is probably just gonna be a ball that rolls around or something.

Trying to handle collisions in a way that isn't incredibly hacky is an interesting problem I think. I think the technical term for it would be a "collision system", but that's gay, and I am gay, so I will be calling it that. The collision system will take an array of collision objects specified in some external file and parse them, then using some algorithm (probably just normal testing on some simple polygons, but possibly a path-traced collision detection approach could be feasible), calculate collisions on them.
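A very small version of that idea could look like the sketch below. It is Python for brevity rather than Rust, it swaps in plain axis-aligned box overlap as the algorithm, and the JSON layout plus the names `Box`, `load_boxes`, and `find_collisions` are all invented for illustration.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    name: str
    x: float
    y: float
    width: float
    height: float

    def overlaps(self, other):
        """Axis-aligned overlap test: the boxes must overlap on both axes."""
        return (self.x < other.x + other.width and other.x < self.x + self.width
                and self.y < other.y + other.height and other.y < self.y + self.height)


def load_boxes(text):
    """Parse collision objects from JSON text (the file format is hypothetical)."""
    return [Box(**entry) for entry in json.loads(text)]


def find_collisions(boxes):
    """Check every pair once and return the names of the pairs that overlap."""
    return [(a.name, b.name)
            for i, a in enumerate(boxes)
            for b in boxes[i + 1:]
            if a.overlaps(b)]


LEVEL = """[
  {"name": "ball",  "x": 0,  "y": 0,   "width": 2,  "height": 2},
  {"name": "floor", "x": -5, "y": 1.5, "width": 10, "height": 1},
  {"name": "shelf", "x": 4,  "y": 4,   "width": 1,  "height": 1}
]"""
collisions = find_collisions(load_boxes(LEVEL))
```

Checking every pair is fine for a single room; a bigger world would want some kind of spatial partitioning first.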

That's a problem for either tomorrow or Friday though. For right now, I am incredibly exhausted, so I will be going to bed soon.

I'm so excited about Rust and OpenGL. I can't believe how much I've been hampering myself, and all because of a misinterpretation of my own goals.

1 note


Text

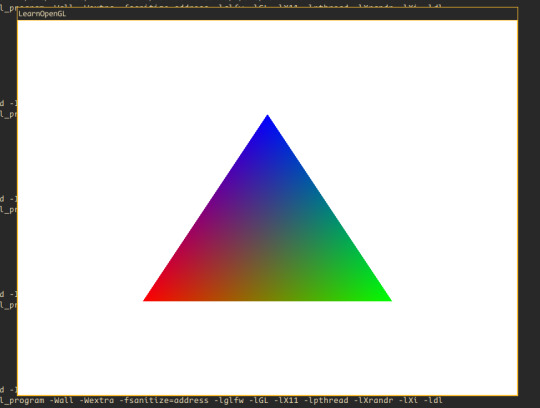

learning to do stuff with OpenGL. here's a blobby triangle I made :D

1 note


Text

recently I've been learning how to use vulkan, not for any particular reason, i just thought it'd be interesting.

the vulkan docs have an official tutorial that you can follow that first starts off with creating a rainbow triangle, as you would in something like OpenGL

you wanna guess how many lines of code this rainbow triangle is?

the tutorial in the vulkan docs has you write ~900 lines of code. for that. maybe 500-600 if you remove the error handling and assume some things about the user's setup.

most of it is setup, so it's not like it'll take another 600 lines to add another triangle, but that's still crazy to me. i think an opengl hello world is like 100 lines.

1 note


Text

RLNET? Why are you using an API that has been deprecated in OpenGL since 2003?? Guess you're really going for that authentic old-style development workflow.

Anyway, I'm nearly completely done with the conversion to .NET 8.0 with RLNET's functionality. Making the jump to rendering each cell with shaders instead of direct triangle rendering has been the largest jump of functionality. Once that's done, I'll be refactoring a bit of the functionality in there to start utilizing more modern features of .NET 8.0.

0 notes

Text

Personal Statement

This is my mostly autobiographical, but partially fictional personal statement written as though I were in high school again, applying for Stanford, my undergrad. It is set in 2011.

—

Sis went to Johns Hopkins. When her acceptance letter arrived in the mail, mom cried because it wasn’t from Harvard. Sis ignored mom’s heartfelt congratulations and put the first fat green checkbox on the list of colleges she had applied to. Dad, whose most intimate display of affection to date was a pat on the shoulder, put an arm around sobbing mom and said: it’s okay, we have one more chance. I couldn’t look, but I knew they were looking at me. I was busy forcing an acidic geyser back into my stomach. I swear, I gave serious consideration to staying in our living-now-study-room, just to state for the record that we had not yet received any word from Harvard. But that would have just reminded them that I was uninterested in applying there anyway.

I’m not interested in college at all, in fact. If I had confidence that this world would accept me for who I was and what I could do, independent of who stamped a damn sheet of paper with my name on it, I wouldn’t go to college at all. But dad tried to put my idealism to rest, telling me that twenty years from now every software engineer like him would be out of a job, outsourced to India. Do you want to go to India? He asked me this every third dinner my sophomore year like it was a fact. My decision to major in computer science was doomed to be about as financially prudent as mom’s Mrs. degree in English Lit. It didn’t matter even if the sheet of paper was to be earned from the legendary Stanford. Look: we got our degrees from Seoul National University, the Harvard of South Korea, but dad was stuck at a decade old startup that had long since lost any conception of “start” or “up” and mom still didn’t consistently know when “a” or “the” was supposed to appear before her nouns.

I admit I’m an ungrateful child to two kind, loving, and capable parents who thought it was a good idea to prep a Harvard kid from what is now officially a desert, both in terms of rainfall and intellectual vitality. I grew up in Tracy. This Central Valley town is about as cringeworthy as its slogan “Think Inside the Triangle”, proudly splashed across the city website made by its one librarian. And inside the triangle I loved, because when none of your friends know what acronyms like AIME or ACT mean, high school is bliss. Expectations are below sea level.

But eventually, I learned that I was statistically 100 points disadvantaged on the SAT compared to the kids next door who grew up thirty minutes away in Livermore. To me, who was desperately trying to salvage my complete lack of reading and writing fundamentals, this sounded like a free hundred points my parents preferred to squeeze out of my blood, sweat, and tears instead of theirs. Dad even worked in Livermore for God’s sake! When I brought this up after dinner one night, Seoul-raised city dad gave me a pat on the shoulder. He told me that every year, the kid with the highest national score on the suneung, or the Korean equivalent of the SAT, always came from a shigol. Shigol means “a small rural town”, but in this context, it translates into “bum-fuck-middle-of-nowhere”.

Fine. Ok. I was kind of that kid. I miraculously scored a 2360 despite my most desperate efforts to study less and spend more time with my music or my code, which let me tell you, was not easy, because software engineering dad did not like me coding.

I got into programming through my love for video games and my dad’s hate for both subjects. I was at a friend’s house in fourth grade, together doing insane twirls in Tony Hawk’s Pro Skater 2 when dad suggested that rather than playing games, I could make them. Of course, it was so obvious! With dad’s help, I installed Visual Studio .NET 2003, the C++ SDK, plopped open an OpenGL book, and went to work. In retrospect, I’m impressed that I fought this thing for a whole year, eventually making something that resembled Astroid Blaster except with strangely colored squares, triangles, and circles that didn’t always collide correctly despite my self-taught geometry. Dad was a moderately helpful figure who would explain things twice and would then get frustrated when he had to explain for a third time that in order to rotate this square, I had to multiply each of its coordinate vectors by a spatial transformation matrix.

By sixth grade, I had graduated into a more efficient game development paradigm: “modding”. I went into the Starcraft: Brood War engine where rather than programming something from scratch, I simply wrote code that allowed me to edit how the game behaved. I made increasingly sophisticated games, moving to Warcraft 3 and eventually Starcraft 2, all hidden from dad, who believed that if I was going to be shipped to India, I should at least go knowing C++, not some useless scripting language for a video game. Mom didn’t really know what to think. I suspect she was not convinced I would be sent to India. Regardless, since this activity appeared substantially more productive than playing video games, she let me code after school until dad came home from coding all day at his C++ job at which point I had to stop coding.

I later understood dad’s love for me in all of this: he really just wanted a future for me where I wasn’t half as miserable as he was. And until about sophomore year, I admit I really didn’t have a plan for the future. I just did things… because. Maybe if I hadn’t flipped my life upside down, I really was going to end up in India. Thankfully, a really strange and upside-down idea in the shape of Ayn Rand flipped me inside out. Maybe I should value the energy, passion, and enthusiasm for the things I loved regardless of whether it’d send me to Harvard. Maybe this is what it meant to live. First on my list was music.

I remember coming back to my ninth year of piano lessons, telling my piano teacher Lauren that I was now really, I mean really, into Maurice Ravel. I opened the sheet music, its binding worn via repetition to the point where it would practically open itself to Une Barque Sur L’ocean. I had played this piece so many times on my Yamaha throughout that August, the whole month of “piano summer break”. Even my normally understanding Sis complained about the repetition. Lauren sat silent for a good long while after I had finished playing through it. I didn’t think much of it at the time, except that it was unusual for my normally critical teacher to say nothing. Two weeks ago, while writing recommendation letters for me, she told me that it was the moment I had graduated from being good at piano to being a pianist.

Piano became my emotional dictionary, spanning the sorrows of Ondine to the euphoria of L’isle Joyeuse. Mom even asked me if I would consider going to Harvard to study music. No, I told her, this is just something I really want to do. This badly? You do this just for fun? Yes, because this music expresses the joy and misery of being alive. Her confusion made me wonder if this desert town was truly hot enough to bleach any sense of purpose and identity.

I want to come to Stanford, not to escape this triangle, but to actually further challenge my flame for life. I have not come this far to imagine that I will encounter less adversity in my objective to pursue what I love. I want you to know that I will take no pleasure in the conformity of converting my soul into homework assignments, group projects, and final exams. I’ll major in computer science because I have to and refuse to take a class in music because I have principles. If you’re okay with that, please send me an acceptance letter. You’ll probably make mom cry, but don’t worry. We’ve all been through much worse.

0 notes

Text

so first up some explanations about Unity & coding in general just cause ik it can be confusing. This probably isnt like 100% accurate explanations, its just the way I think about it and I am Technically a professional software developer that knows a bit about unity.

Shaders themselves are written in a shading language, like GLSL (the OpenGL Shading Language) or HLSL, which is what Unity mostly uses - they're actually a pretty weird kind of code? Basically, all a shader does is take in one value, and output another. Like a basic RGB shader might take in a Vec3(red value, green value, blue value) like Vec3(255, 0, 0), and then just output that exactly. That's what an 'unlit' shader does. The shader is still necessary because thats the piece of code that tells the GPU what to do when its given a 3D object.

I'm sure most folks playing video games know this to a degree, but all 3D shapes in digital space are typically made up of a ton of triangles linked together. Those triangle faces are made up of vertices, and the explanation of how all thats calculated and figured out by your computer is personally boring so I'm not going to bother with it, but it is relevant to how shader code works. Most shaders need to know where in digital space they're calculating and where stuff like light sources are.

All of the fancy bits of lighting in a shader are really just equations using the point in space, the location of light sources, and commonly, the color of the base texture of the 3D object to determine the final color of the object after its processed.

This is one of the purposes of "UV mapping" an object's texture actually - it's how you tell the computer where the color on a 2D image relates to a location on a 3D object
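To show that "it's all equations", here is a hedged sketch of the simplest version in plain Python instead of shader code. It uses basic Lambert (diffuse) lighting and a nearest-neighbor texture lookup; the function names and the choice of putting v = 0 at the first row of the texture are assumptions made for the example.

```python
def normalize(v):
    """Scale a vector to length 1."""
    length = sum(c * c for c in v) ** 0.5
    return tuple(c / length for c in v)


def sample(texture, u, v):
    """Nearest-neighbor lookup: (u, v) in [0, 1] picks a texel from a 2D grid."""
    height, width = len(texture), len(texture[0])
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return texture[row][col]


def lambert(point, normal, light_pos, base_color):
    """Scale the base color by the cosine of the angle between normal and light."""
    to_light = normalize(tuple(l - p for l, p in zip(light_pos, point)))
    n = normalize(normal)
    intensity = max(0.0, sum(a * b for a, b in zip(n, to_light)))
    return tuple(channel * intensity for channel in base_color)


texture = [
    [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0)],
]
base = sample(texture, 0.75, 0.25)  # upper-right texel under this convention
lit = lambert((0, 0, 0), (0, 1, 0), (0, 5, 0), base)
dark = lambert((0, 0, 0), (0, 1, 0), (0, -5, 0), base)
```

A surface facing the light keeps its full texture color, and one facing away goes black; fancier lighting models add more terms on top of the same ingredients.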

-

in Unity specifically, most stuff is handled through different kinds of objects. I think part of the original draw of Unity as an engine was the ability to package up any combination of assets and send them to someone else? Since as long as you hold all the different pieces of an object, anyone can use it. Even different scripts can be attached to different objects in many ways - thats why you might hear about crazy sounding things like "every object that moves is actually a door pretending very hard to not be a door" out of context for a game. That "Door" script just happens to swing exactly the same way a falling platform needs to, so why bother writing a second script to do the same thing.

-

For shaders, and this I think is common for most things that render in 3D not just Unity, they are typically packaged into "Material" Objects. this is your basic out of the box Unity material

Unity does let you directly edit their shader code but its kind of a mess to look at so here's a basic shader from the site "ShaderToy"

if someone wants a break down of whats happening there lmk this is just to show you its all math, always has been.

Materials in any 3D program can be put on as many objects as you want - so the same metal material can be applied to anything metallic - and the color and texture for individual objects can still be different. This is where the issues with Shining Nikki's skin materials come into play; most likely, they just used the same exact material as the base skin tone on all the skin tones without testing or caring how it interacted with the other textures.

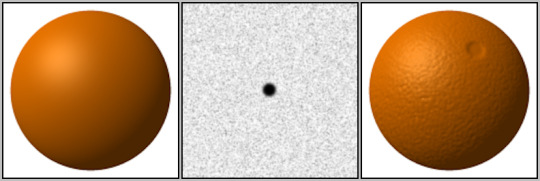

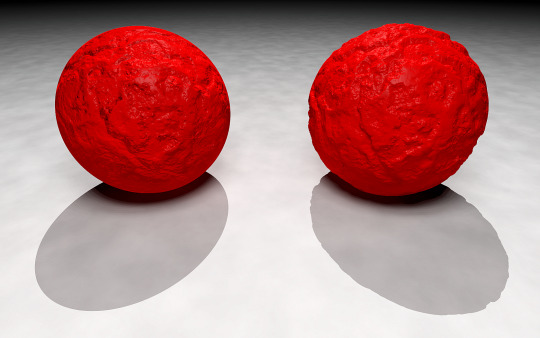

Skin is a very difficult thing to replicate - as @alenadragonne mentioned on Miss K's post, the shader/material effects often involve several layers put on top of each other in a funky math way to get that feeling of softness. There are other effects that are used as well. A "Bump map" changes how lighting hits the surface to replicate the feeling of texture without changing the shape, and a "Displacement map" actually moves the surface so the bumps and cracks in a texture become part of the object's shape

shamelessly taken from Wikipedia:

this is how a bump map breaks up the lighting

and this is how a displacement map changes the shape of the base object

I dont actually know much about subsurface scattering when it comes to coding shaders, but you can see the effect of it in real life pretty easily!

cursed au canceled i want to refresh my memory on shaders and talk about it more now

12 notes


Text

YEAHHHH HELLO TRIANGLE WOOO

I still only vaguely know how this appeared on my screen so im gonna practice getting to this point, and try to memorize it.

For those who have no idea how graphics work, with opengl you define vertices (or corners) and colors (other stuff too but just colors for now) and pass them to the gpu. The gpu runs these things called "shaders" which are really just programs that run on the gpu.

Said shader (the vertex shader) processes the vertices you gave it, then the gpu figures out which pixels each triangle covers, and it hands those off to the "fragment" shader, which decides the color of each of those pixels on the screen.

I'm pretty sure that's how it works anyway.
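As a memory aid, the whole journey from vertices to pixels can be imitated on the CPU in a few lines of plain Python. This is a toy sketch, not how a GPU or OpenGL is actually implemented: the 8x8 "screen", the function names, and the dictionary used as a framebuffer are all made up for the example.

```python
SIZE = 8  # toy framebuffer of 8x8 pixels


def vertex_shader(position):
    """Map x, y in [-1, 1] to pixel coordinates, flipping y so +1 is the top."""
    x, y = position
    return ((x + 1) * 0.5 * SIZE, (1 - y) * 0.5 * SIZE)


def edge(a, b, p):
    """Signed area test: which side of the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(a, b, c):
    """Yield (pixel, barycentric weights) for every pixel center inside abc."""
    area = edge(a, b, c)
    for py in range(SIZE):
        for px in range(SIZE):
            p = (px + 0.5, py + 0.5)
            w = (edge(b, c, p) / area, edge(c, a, p) / area, edge(a, b, p) / area)
            if all(weight >= 0 for weight in w):
                yield (px, py), w


def fragment_shader(weights, colors):
    """Blend the three vertex colors by the barycentric weights."""
    return tuple(sum(w * col[i] for w, col in zip(weights, colors)) for i in range(3))


positions = [(-1.0, -1.0), (1.0, -1.0), (0.0, 1.0)]
colors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
screen = [vertex_shader(p) for p in positions]
framebuffer = {pixel: fragment_shader(w, colors) for pixel, w in rasterize(*screen)}
```

Pixels near the bottom-left corner come out mostly red, and the blend shifts toward green and blue as you move toward the other two corners, which is the classic rainbow "hello triangle".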

19 notes
