#s3 bucket in aws


Text

Amazon Simple Storage Service Tutorial | AWS S3 Bucket Explained with Example for Cloud Developer

Full Video Link: https://youtube.com/shorts/7xbakEXjvHQ Hi, a new #video on #aws #simplestorageservice #s3bucket #cloudstorage has been published on the #codeonedigest #youtube channel. @java #java #awscloud @awscloud #aws @AWSCloudIndia #Cloud

Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance. Customers of all sizes and industries can use Amazon S3 to store and protect any amount of data. Amazon S3 provides management features so that you can optimize, organize, and configure access to your data to meet your specific business,…


#amazon s3#amazon s3 bucket#amazon s3 tutorial#amazon web services#aws#aws cloud#aws s3#aws s3 bucket#aws s3 bucket tutorial#aws s3 interview questions and answers#aws s3 object lock#aws s3 object lock governance mode#aws s3 object storage#aws s3 tutorial#aws storage services#cloud computing#s3 bucket#s3 bucket creation#s3 bucket in aws#s3 bucket policy#s3 bucket public access#s3 bucket tutorial#simple storage service (s3)

0 notes

Note

It sucks that your pixiv was taken down. I hope you can use another site/app for all of your beautiful art!!! Maybe discord or dropbox?

No worries! I've mirrored everything to AO3 so things can be properly age-restricted/tagged/etc (I really liked that Pixiv had mature content settings, so I was looking for that in a replacement.) I'm using an AWS S3 bucket for hosting (it's linked in all the works, and there's a simple navigation system in place if you want to poke around.)

#like a year ago i was trying to hammer that s3 bucket into a gallery website lol.....#eventually i gave up bc i dont have nearly enough practice with aws or webdev in general to make that happen#and let me tell you. your s3 bucket does NOT want to be a website. IT REALLY DOESNT#anyway. i chose ao3 and aws because theyre both ok with hosting any content FOREVER#so i'll never have to deal with getting nuked again unless something very significant happens at amazon (god forbid)#asks

40 notes


Text

Spring Cloud 2025.0.0-RC1 (aka Northfields) has been released

Estimated reading time: 2 minutes. The Spring Cloud 2025.0.0-RC1 release has updates in several modules, including Spring Cloud Config, Spring Cloud Gateway, Spring Cloud Task, Spring Cloud Stream, Spring Cloud Function and more. The following article details the changes in the release and which modules are affected. Info About Release: Release Train Spring Cloud 2025.0.0 Release Candidate 1…

#AWS S3 buckets#Spring Cloud Config#Spring Cloud Function#Spring Cloud Gateway#Spring Cloud Stream#Spring Cloud Task

0 notes

Text

Python program to download files recursively from AWS S3 bucket to Databricks DBFS

S3 is a popular object storage service from Amazon Web Services. A common requirement is to download files from an S3 bucket to Azure Databricks. You can mount object storage to the Databricks workspace, but in this example, I am showing how to recursively download and sync the files from a folder within an AWS S3 bucket to DBFS. This program will overwrite the files that already exist. The…
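The program itself is cut off above, so here is a minimal sketch of the same idea, assuming a boto3 S3 client and that DBFS is reachable through the `/dbfs` FUSE path on the Databricks driver. The function names, bucket, and paths are hypothetical. Like the described program, it overwrites local files that already exist.

```python
import os


def local_path_for_key(key: str, folder: str, dest_dir: str) -> str:
    """Map an S3 key under `folder` (e.g. "raw/") to a path under dest_dir, keeping sub-folders."""
    relative = key[len(folder):]
    return os.path.join(dest_dir, *relative.split("/"))


def download_prefix(s3_client, bucket: str, prefix: str, dest_dir: str) -> list:
    """Recursively download every object below `prefix`; existing local files are overwritten."""
    folder = prefix.rstrip("/") + "/" if prefix else ""
    # The paginator follows continuation tokens, so folders with >1,000 keys are fully listed.
    paginator = s3_client.get_paginator("list_objects_v2")
    downloaded = []
    for page in paginator.paginate(Bucket=bucket, Prefix=folder):
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if key.endswith("/"):  # skip zero-byte "folder" placeholder objects
                continue
            target = local_path_for_key(key, folder, dest_dir)
            os.makedirs(os.path.dirname(target), exist_ok=True)
            s3_client.download_file(bucket, key, target)
            downloaded.append(target)
    return downloaded


def make_s3_client():
    import boto3  # imported lazily so the helpers above can be tested without boto3
    return boto3.client("s3")
```

Usage (hypothetical names): `download_prefix(make_s3_client(), "my-bucket", "exports/2024", "/dbfs/tmp/s3_sync")`. The cluster still needs AWS credentials that boto3 can find; the original post does not say how they are supplied.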

#boto3#python program to download files from Aws s3 bucket to databricks dbfs#python program to recursively download files and folders from an AWS S3 bucket in Azure Databricks

0 notes

Text

Quick Learn & Get Code Samples for PHP AWS S3: How to Use an AWS S3 Bucket in PHP to Store and Manage Files. The site offers a wide range of programming sample code and demo videos for PHP AWS S3, Laravel, using AWS S3 buckets, API development, storing and managing files with PHP, and other web languages.

To learn more, visit How to Use AWS S3 Bucket in PHP to Store

0 notes

Text

Backup Repository: How to Create Amazon S3 buckets

Amazon Simple Storage Service (S3) is commonly used for backup and restore operations because of its durability, scalability, and features tailored for data management. Here’s why you should use S3 for backup and restore. In this guide, you will learn about “Backup Repository: How to Create Amazon S3 buckets”. Please see how to Fix Microsoft Outlook Not Syncing Issue, how to reset MacBook…
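The guide's steps are truncated here, so as a hedged sketch: before creating a bucket programmatically it helps to check the name and to pass a Region constraint. The check below covers only a subset of the S3 general purpose bucket naming rules (verify the full list in the S3 documentation); the function names are hypothetical.

```python
import ipaddress
import re

# Subset of the general purpose bucket naming rules: 3-63 characters, lowercase letters,
# digits, dots and hyphens, starting and ending with a letter or digit.
_ALLOWED = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_RESERVED_PREFIXES = ("xn--", "sthree-")
_RESERVED_SUFFIXES = ("-s3alias", "--ol-s3")


def is_valid_bucket_name(name: str) -> bool:
    if not _ALLOWED.fullmatch(name):
        return False
    if ".." in name:  # no two adjacent periods
        return False
    if name.startswith(_RESERVED_PREFIXES) or name.endswith(_RESERVED_SUFFIXES):
        return False
    try:
        ipaddress.IPv4Address(name)  # names formatted like an IP address are rejected
        return False
    except ValueError:
        return True


def create_bucket_kwargs(name: str, region: str) -> dict:
    """Build keyword arguments for boto3's S3 create_bucket call."""
    if not is_valid_bucket_name(name):
        raise ValueError(f"invalid bucket name: {name!r}")
    kwargs = {"Bucket": name}
    if region != "us-east-1":  # us-east-1 is the default; other Regions need a LocationConstraint
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs
```

With boto3 installed, this would be used as `boto3.client("s3", region_name=region).create_bucket(**create_bucket_kwargs(name, region))`.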


#Amazon S3#Amazon S3 bucket#Amazon S3 buckets#AWS s3#AWS S3 Bucket#Backup Repository#Object Storage#s3#S3 Bucket#S3 bucket policy#S3 Objects

0 notes

Text

AWS's Predictable Bucket Names Make Accounts Easier to Crack

Source: https://www.darkreading.com/threat-intelligence/aws-cdk-default-s3-bucket-naming-pattern-lets-adversaries-waltz-into-admin-access

More info: https://www.aquasec.com/blog/aws-cdk-risk-exploiting-a-missing-s3-bucket-allowed-account-takeover/
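To make "predictable" concrete: as I understand the default AWS CDK bootstrap template, its asset staging bucket is named from a fixed default qualifier (`hnb659fds`), the account ID, and the Region, so anyone who knows an account ID can compute it. The function below only reproduces that assumed naming pattern; compare it with the bucket your own `CDKToolkit` stack created.

```python
DEFAULT_CDK_QUALIFIER = "hnb659fds"  # assumed default qualifier of the CDK bootstrap template


def cdk_staging_bucket_name(account_id: str, region: str, qualifier: str = DEFAULT_CDK_QUALIFIER) -> str:
    """Rebuild the (assumed) default staging bucket name: cdk-<qualifier>-assets-<account>-<region>."""
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("AWS account IDs are 12-digit strings")
    return f"cdk-{qualifier}-assets-{account_id}-{region}"
```

If the bucket behind such a name is deleted, someone else can register it, which is the scenario the linked research describes. Bootstrapping with a custom `cdk bootstrap --qualifier` value changes the name and makes it harder to guess; the app must then be configured with the same qualifier.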

10 notes


Text

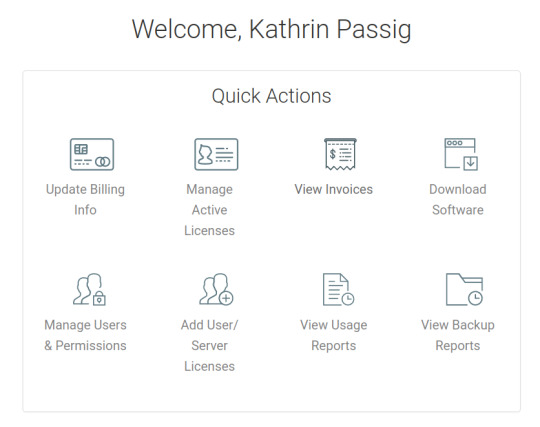

July 2, 2024

My backup is gone, but at least it's gone cheaply

I've had a new credit card for a few weeks. Now I get a notification that "AWS EMEA" was unable to charge 35 cents because the credit card details are no longer valid. That means nothing to me. An internet search reveals that it's Amazon Web Services. That still doesn't mean much to me. Did I ever use them?

On the off chance, I enter "aws.amazon.com" and try to log in with my email address. Before I've even made it to the password prompt, the site tells me to please choose "Forgot password" and set a new password. I click "Forgot password" and get an email with a very long, non-clickable link. I copy the link into my browser and can then choose a new password. But not just any password; it has to be one with ... I ignore the details, open my password manager and have it generate a new password. I enter it twice. Then I can log in, but I'm still not in. First I have to verify my email address, which means waiting for another confirmation email and clicking the link in it. Then I have to verify my phone number. For this I get a six-digit code and an automated call. A not-quite-human-sounding voice asks me to enter the code. Now I can log in and see what the 35 cents were supposed to be charged for.

On my phone the view is so broken that I can't find out anything at all. I log in on my laptop and am told that I first need to verify my email address a second time. But I can also skip that and take care of it sometime in the next 30 days.

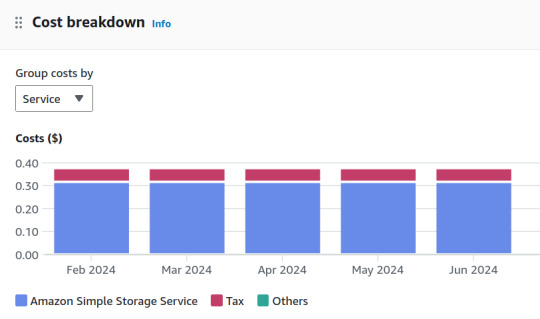

Apparently I'm paying for the "Amazon Simple Storage Service":

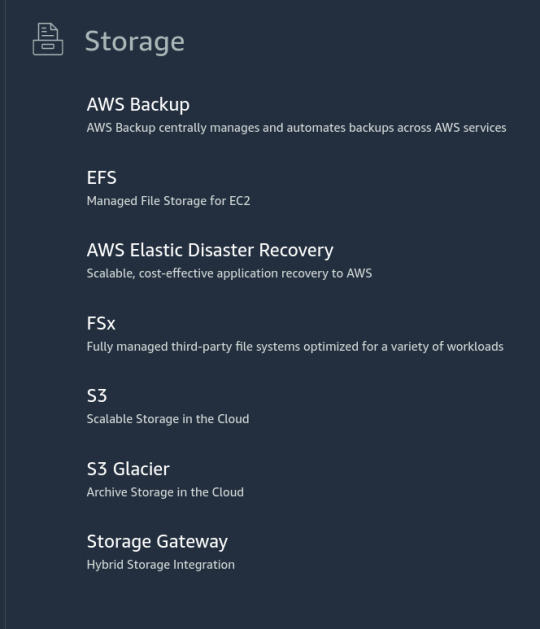

But what's in this storage? Nothing on the "Storage" page looks familiar to me.

S3 seems the most likely, I think, because the rest looks even less familiar. Under S3 I do indeed own a "General Purpose Bucket". That sounds practical.

My bucket has a long, cryptic name. Inside it is a folder called "default". Inside the "default" folder are many other folders with long, cryptic names. None of them contains anything intelligible.

Maybe I can find out how long I've been paying these 35 cents a month, and identify the cause that way, I think. But the billing overview only goes back about a year.

In the meantime, my password manager has turned up a vague connection between Amazon AWS and JungleDisk, a cloud backup thing I used once in the very distant past. It was so long ago that the Techniktagebuch didn't exist yet, which is why I can't look up anywhere what I actually did with it. JungleDisk has since been sold or renamed. The site www.myjungledisk.com refuses to recognize me.

I search my emails. Apparently I signed up for JungleDisk in 2010 and received a notice about changes in 2017. That notice contains a different link, and there I can actually log in.

The menu item "Online Disks" looks promising.

But every option just leads to a request to get an Amazon S3 account (I already have one!) or to contact support (I don't want to).

I have no idea what was in this backup or whether it's unique, valuable, irreplaceable data. For the Techniktagebuch alone I'd actually like to keep investigating, but all the further steps look even more convoluted than the previous ones, and just thinking about them saps my motivation. I think I'll leave everything as it is. At some point something will be cancelled because of the unpaid 35 cents, and then I'll be able to access the unknown backup even less than I already can.

(Kathrin Passig)

11 notes


Video


Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
   - What are IAM roles?
   - Why should you use IAM roles instead of hardcoding access keys?
   - How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
   - Launching an EC2 instance and attaching the IAM role.
   - Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
   - Step-by-step commands to upload files to an S3 bucket.
   - Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
   - Retrieving objects stored in your S3 bucket using the AWS CLI.
   - How to test and verify successful downloads.

5. Deleting Files in S3:
   - Securely deleting files from an S3 bucket.
   - Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
   - Using least-privilege policies in IAM roles.
   - Encrypting files in transit and at rest.
   - Monitoring and logging using AWS CloudTrail and S3 access logs.
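As a sketch of the least-privilege idea, the EC2 role needs two JSON documents: a trust policy that lets EC2 assume it, and a permissions policy limited to one bucket and to the three operations this tutorial performs. The bucket name is a placeholder, and building the JSON in Python is just one convenient way to produce it.

```python
def ec2_trust_policy() -> dict:
    """Trust policy allowing EC2 instances (via an instance profile) to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def s3_least_privilege_policy(bucket: str) -> dict:
    """Permissions limited to listing one bucket and uploading/downloading/deleting its objects."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            # ListBucket applies to the bucket ARN itself.
            {"Sid": "ListTheBucket", "Effect": "Allow",
             "Action": "s3:ListBucket", "Resource": bucket_arn},
            # Object-level actions apply to the objects inside the bucket.
            {"Sid": "ObjectReadWriteDelete", "Effect": "Allow",
             "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
             "Resource": f"{bucket_arn}/*"},
        ],
    }
```

Running `aws s3 ls` with no argument lists all buckets, which needs `s3:ListAllMyBuckets`; this policy deliberately omits it, so verify access with `aws s3 ls s3://BUCKET` instead.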

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

Step 1: Create an S3 Bucket
- Navigate to the S3 console and create a new bucket with a unique name.
- Configure bucket permissions for private or public access as needed.

Step 2: Configure IAM Role
- Create an IAM role with an S3 access policy.
- Attach the role to your EC2 instance to avoid hardcoding credentials.

Step 3: Launch and Connect to an EC2 Instance
- Launch an EC2 instance with the IAM role attached.
- Connect to the instance using SSH.

Step 4: Install AWS CLI and Configure
- Install the AWS CLI on the EC2 instance if it is not pre-installed.
- Verify access by running `aws s3 ls` to list available buckets.

Step 5: Perform File Operations
- Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
- Download files: Use `aws s3 cp` to download files from S3 to EC2.
- Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

Step 6: Cleanup
- Delete test files and terminate resources to avoid unnecessary charges.
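For scripts rather than the shell, the file operations map onto boto3 roughly as follows. This is a sketch assuming boto3 is installed on the instance; the helper names are hypothetical. Because no keys are passed, boto3's default credential chain should pick up the temporary credentials of the role attached to the instance, which is the point of the tutorial.

```python
def make_instance_role_client():
    import boto3  # lazy import: the helpers below only need an object with these methods
    # No access keys here: on EC2 the default credential chain reads the attached role's
    # temporary credentials from the instance metadata service.
    return boto3.client("s3")


def upload_to_s3(s3, local_path: str, bucket: str, key: str) -> None:
    """Roughly: aws s3 cp LOCAL_PATH s3://BUCKET/KEY"""
    s3.upload_file(local_path, bucket, key)


def download_from_s3(s3, bucket: str, key: str, local_path: str) -> None:
    """Roughly: aws s3 cp s3://BUCKET/KEY LOCAL_PATH"""
    s3.download_file(bucket, key, local_path)


def delete_from_s3(s3, bucket: str, key: str) -> None:
    """Roughly: aws s3 rm s3://BUCKET/KEY"""
    s3.delete_object(Bucket=bucket, Key=key)
```

Example: `s3 = make_instance_role_client()` followed by `upload_to_s3(s3, "/var/log/app.log", "my-log-bucket", "logs/app.log")`, where the bucket and paths are placeholders.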

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.

#youtube#aws iam#iam role aws#aws#aws permission#aws iam roles#aws cloud#aws s3#identity & access management#aws iam policy#Download and Delete Files in Amazon#IAMrole#AWS#cloudolus#S3#EC2

2 notes


Text

Centralizing AWS Root access for AWS Organizations customers

With a new feature introduced by AWS Identity and Access Management (IAM), security teams can centrally manage AWS root access for member accounts in AWS Organizations. This makes it simpler to manage root credentials and carry out highly privileged operations.

Managing root user credentials at scale

Historically, Amazon Web Services (AWS) accounts were created with root user credentials, which granted unrestricted access to the account. Despite its power, this AWS root access posed serious security risks.

The root user of every AWS account needed to be protected by implementing additional security measures like multi-factor authentication (MFA). These root credentials had to be manually managed and secured by security teams. Credentials had to be stored safely, rotated on a regular basis, and checked to make sure they adhered to security guidelines.

This manual method became laborious and error-prone as customers’ AWS environments grew. For instance, it was difficult for large businesses with hundreds or thousands of member accounts to secure AWS root access uniformly for every account. Besides adding operational overhead, the manual intervention delayed account provisioning, hindered full automation, and increased security risk. Improperly secured root access can lead to unauthorized access to critical resources and to account takeovers.

Additionally, security teams had to collect and use root credentials if particular root actions were needed, like unlocking an Amazon Simple Storage Service (Amazon S3) bucket policy or an Amazon Simple Queue Service (Amazon SQS) resource policy. This only made the attack surface larger. Maintaining long-term root credentials exposed users to possible mismanagement, compliance issues, and human errors despite strict monitoring and robust security procedures.

Security teams started looking for a scalable, automated solution. They required a method to programmatically control AWS root access without requiring long-term credentials in the first place, in addition to centralizing the administration of root credentials.

Centrally manage root access

AWS solves the long-standing problem of managing root credentials across many accounts with the new capability to centrally control root access. This capability introduces two crucial features: central control over root credentials and root sessions. Combined, they give security teams a secure, scalable, and compliant way to control AWS root access to all member accounts of AWS Organizations.

First, let’s talk about centrally managing root credentials. You can now centrally manage and safeguard privileged root credentials for all AWS Organizations accounts with this capability. Managing root credentials enables you to:

Eliminate long-term root credentials: To ensure that no long-term privileged credentials are left open to abuse, security teams can now programmatically delete root user credentials from member accounts.

Prevent credential recovery: In addition to deleting the credentials, it also stops them from being recovered, protecting against future unwanted or unauthorized AWS root access.

Establish secure accounts by default: Using extra security measures like MFA after account provisioning is no longer necessary because member accounts can now be created without root credentials right away. Because accounts are protected by default, long-term root access security issues are significantly reduced, and the provisioning process is made simpler overall.

Assist in maintaining compliance: By centrally identifying and tracking the state of root credentials for every member account, root credentials management enables security teams to show compliance. Meeting security rules and legal requirements is made simpler by this automated visibility, which verifies that there are no long-term root credentials.

But how can you make sure that certain root operations on the accounts can still be carried out? That is the job of root sessions, the second feature being introduced today, which provide a safe alternative to preserving permanent root access.

Security teams can now obtain temporary, task-scoped root access to member accounts, doing away with the need to manually retrieve root credentials anytime privileged activities are needed. Without requiring permanent root credentials, this feature ensures that operations like unlocking S3 bucket policies or SQS queue policies may be carried out safely.

Key advantages of root sessions include:

Task-scoped root access: In accordance with the best practices of least privilege, AWS permits temporary AWS root access for particular actions. This reduces potential dangers by limiting the breadth of what can be done and shortening the time of access.

Centralized management: Instead of logging into each member account separately, you may now execute privileged root operations from a central account. Security teams can concentrate on higher-level activities as a result of the process being streamlined and their operational burden being lessened.

Conformity to AWS best practices: Organizations that utilize short-term credentials are adhering to AWS security best practices, which prioritize the usage of short-term, temporary access whenever feasible and the principle of least privilege.

This new feature does not grant full root access. Instead, it provides temporary credentials for carrying out one of five specific actions. Centralized root credentials management enables the first three; the last two become available when root sessions are enabled.

Auditing root user credentials: reviewing root user information with read-only access.

Re-enabling account recovery: reactivating account recovery without root credentials.

Deleting root user credentials: removing console passwords, access keys, signing certificates, and MFA devices.

Unlocking an S3 bucket policy: editing or deleting an S3 bucket policy that denies all principals.

Unlocking an SQS queue policy: editing or deleting an Amazon SQS resource policy that denies all principals.

Availability

Central management of root access is available at no extra cost in all AWS Regions except AWS GovCloud (US) and the AWS China Regions, which do not have root accounts. Root sessions are available everywhere.

It can be used via the AWS SDK, AWS CLI, or IAM console.
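A sketch of what a root session request might look like from the SDK side, assuming the STS `AssumeRoot` operation (exposed as `assume_root` in recent boto3 versions) and the AWS managed "root-task" policies. The task-policy names and the 900-second ceiling are my reading of the IAM documentation, not something the article states, so verify them before relying on this; the helper and task keys are hypothetical.

```python
# Assumed mapping from the five tasks above to AWS managed root-task policy names.
ROOT_TASK_POLICIES = {
    "audit_root_credentials": "IAMAuditRootUserCredentials",
    "reenable_account_recovery": "IAMCreateRootUserPassword",
    "delete_root_credentials": "IAMDeleteRootUserCredentials",
    "unlock_s3_bucket_policy": "S3UnlockBucketPolicy",
    "unlock_sqs_queue_policy": "SQSUnlockQueuePolicy",
}
MAX_ROOT_SESSION_SECONDS = 900  # assumed upper limit for a root session


def assume_root_kwargs(member_account_id: str, task: str, duration_seconds: int = 900) -> dict:
    """Build keyword arguments for a task-scoped root session in one member account."""
    if not (len(member_account_id) == 12 and member_account_id.isdigit()):
        raise ValueError("member_account_id must be a 12-digit AWS account ID")
    if task not in ROOT_TASK_POLICIES:
        raise ValueError(f"unknown task: {task!r}")
    if not 0 < duration_seconds <= MAX_ROOT_SESSION_SECONDS:
        raise ValueError("root sessions are short-lived; duration_seconds is out of range")
    policy_name = ROOT_TASK_POLICIES[task]
    return {
        "TargetPrincipal": member_account_id,
        "TaskPolicyArn": {"arn": f"arn:aws:iam::aws:policy/root-task/{policy_name}"},
        "DurationSeconds": duration_seconds,
    }
```

Called from the management account (or a delegated administrator account) with root sessions enabled, `boto3.client("sts").assume_root(**assume_root_kwargs("111122223333", "unlock_s3_bucket_policy"))` should return temporary credentials limited to that one task; the account ID is an example.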

What is a root access?

The root user, which has full access to all AWS services and resources, is the first identity created when you create an Amazon Web Services (AWS) account. You can sign in as the root user with the email address and password you used to create the account.

Read more on Govindhtech.com

#AWSRoot#AWSRootaccess#IAM#AmazonS3#AWSOrganizations#AmazonSQS#AWSSDK#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

2 notes


Text

Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

    ansible-galaxy collection init my_namespace.my_collection

This command sets up a basic directory structure for your collection:

    my_namespace/
    └── my_collection/
        ├── docs/
        ├── plugins/
        │   ├── modules/
        │   ├── inventory/
        │   └── ...
        ├── roles/
        ├── playbooks/
        ├── README.md
        └── galaxy.yml

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.

3. Building and Publishing Your Collection

Once your collection is ready, you can build it using the following command:

    ansible-galaxy collection build

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

    ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

    collections:
      - name: my_namespace.my_collection
        version: 1.0.0

Then, install the collection using:

    ansible-galaxy collection install -r requirements.yml

You can now use the content from the collection in your playbooks:

    ---
    - name: Example Playbook
      hosts: localhost
      tasks:
        - name: Use a module from the collection
          my_namespace.my_collection.my_module:
            param: value

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to Unix-like systems, such as managing ACLs, mount points, SSH authorized keys, and sysctl settings.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details, visit www.qcsdclabs.com

#redhatcourses#information technology#linux#containerorchestration#container#kubernetes#containersecurity#docker#dockerswarm#aws

2 notes


Video


Upload File to AWS S3 Bucket using Java AWS SDK | Create IAM User & Poli...

0 notes

Text

Journey to AWS Proficiency: Unveiling Core Services and Certification Paths

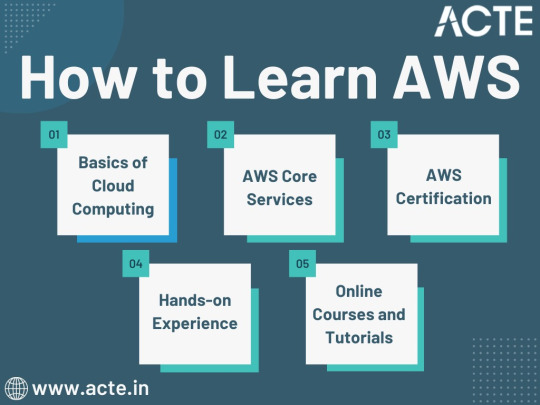

Amazon Web Services, often referred to as AWS, stands at the forefront of cloud technology and has revolutionized the way businesses and individuals leverage the power of the cloud. This blog serves as your comprehensive guide to understanding AWS, exploring its core services, and learning how to master this dynamic platform. From the fundamentals of cloud computing to the hands-on experience of AWS services, we'll cover it all. Additionally, we'll discuss the role of education and training, specifically highlighting the value of ACTE Technologies in nurturing your AWS skills, concluding with a mention of their AWS courses.

The Journey to AWS Proficiency:

1. Basics of Cloud Computing:

Getting Started: Before diving into AWS, it's crucial to understand the fundamentals of cloud computing. Begin by exploring the three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Gain a clear understanding of what cloud computing is and how it's transforming the IT landscape.

Key Concepts: Delve into the key concepts and advantages of cloud computing, such as scalability, flexibility, cost-effectiveness, and disaster recovery. Simultaneously, explore the potential challenges and drawbacks to get a comprehensive view of cloud technology.

2. AWS Core Services:

Elastic Compute Cloud (EC2): Start your AWS journey with Amazon EC2, which provides resizable compute capacity in the cloud. Learn how to create virtual servers, known as instances, and configure them to your specifications. Gain an understanding of the different instance types and how to deploy applications on EC2.

Simple Storage Service (S3): Explore Amazon S3, a secure and scalable storage service. Discover how to create buckets to store data and objects, configure permissions, and access data using a web interface or APIs.

Relational Database Service (RDS): Understand the importance of databases in cloud applications. Amazon RDS simplifies database management and maintenance. Learn how to set up, manage, and optimize RDS instances for your applications. Dive into database engines like MySQL, PostgreSQL, and more.

3. AWS Certification:

Certification Paths: AWS offers a range of certifications for cloud professionals, from foundational to professional levels. Consider enrolling in certification courses to validate your knowledge and expertise in AWS. AWS Certified Cloud Practitioner, AWS Certified Solutions Architect, and AWS Certified DevOps Engineer are some of the popular certifications to pursue.

Preparation: To prepare for AWS certifications, explore recommended study materials, practice exams, and official AWS training. ACTE Technologies, a reputable training institution, offers AWS certification training programs that can boost your confidence and readiness for the exams.

4. Hands-on Experience:

AWS Free Tier: Register for an AWS account and take advantage of the AWS Free Tier, which offers limited free access to various AWS services for 12 months. Practice creating instances, setting up S3 buckets, and exploring other services within the free tier. This hands-on experience is invaluable in gaining practical skills.

5. Online Courses and Tutorials:

Learning Platforms: Explore online learning platforms like Coursera, edX, Udemy, and LinkedIn Learning. These platforms offer a wide range of AWS courses taught by industry experts. They cover various AWS services, architecture, security, and best practices.

Official AWS Resources: AWS provides extensive online documentation, whitepapers, and tutorials. Their website is a goldmine of information for those looking to learn more about specific AWS services and how to use them effectively.

Amazon Web Services (AWS) represents an exciting frontier in the realm of cloud computing. As businesses and individuals increasingly rely on the cloud for innovation and scalability, AWS stands as a pivotal platform. The journey to AWS proficiency involves grasping fundamental cloud concepts, exploring core services, obtaining certifications, and acquiring practical experience. To expedite this process, online courses, tutorials, and structured training from renowned institutions like ACTE Technologies can be invaluable. ACTE Technologies' comprehensive AWS training programs provide hands-on experience, making your quest to master AWS more efficient and positioning you for a successful career in cloud technology.

8 notes


Text

AWS Solutions Architect Certification Journey: A Comprehensive Guide

Embarking on the path to becoming an AWS Certified Solutions Architect - Associate requires a meticulous and strategic approach. This extensive guide offers a step-by-step breakdown of essential steps to ensure effective preparation for this esteemed certification.

1. Grasp Exam Objectives: Initiate your preparation by thoroughly reviewing the official AWS Certified Solutions Architect - Associate exam guide. This roadmap outlines the key domains, concepts, and skills to be evaluated during the exam. A clear understanding of these objectives sets the tone for a focused and effective study plan.

2. Establish a Solid AWS Foundation: Build a robust understanding of core AWS services, infrastructure, and shared responsibility models. Dive into online courses, AWS documentation, and insightful whitepapers. Gain proficiency in essential services such as compute, storage, databases, and networking, laying the groundwork for success.

3. Hands-On Practicality: While theoretical knowledge is crucial, practical experience is where comprehension truly flourishes. Leverage the AWS Free Tier to engage in hands-on exercises. Execute tasks like instance management, security group configuration, S3 bucket setup, and application deployment. Practical experience not only reinforces theoretical knowledge but also provides valuable real-world insights.

4. Enroll in Online Learning Platforms: Capitalize on reputable online courses designed specifically for AWS Certified Solutions Architect preparation. Platforms like A Cloud Guru, Coursera, or the official AWS Training provide structured content, hands-on labs, and practice exams. These courses offer a guided learning path aligned with certification objectives, ensuring a comprehensive and effective preparation experience.

5. Delve into AWS Whitepapers: Uncover a wealth of knowledge in AWS whitepapers and documentation. Explore documents such as the Well-Architected Framework, AWS Best Practices, and Security Best Practices. These resources offer deep insights into architecting on AWS and come highly recommended in the official exam guide.

6. Embrace Practice Exam Simulators: Evaluate your knowledge and readiness through practice exams and simulators available on platforms like Whizlabs, Tutorials Dojo, or the official AWS practice exam. Simulated exam environments not only familiarize you with the exam format but also pinpoint areas that may require additional review.

7. Engage in AWS Communities: Immerse yourself in the AWS community. Participate in forums, discussion groups, and social media channels. Share experiences, seek advice, and learn from the collective wisdom of the community. This engagement provides valuable insights into real-world scenarios and enriches your overall preparation.

8. Regularly Review and Reinforce: Periodically revisit key concepts and domains. Reinforce your understanding through diverse methods like flashcards, summarization, or teaching concepts to others. An iterative review process ensures that knowledge is retained and solidified.

9. Effective Time Management: Develop a well-structured study schedule aligned with your commitments. Prioritize topics based on their weight in the exam guide and allocate ample time for hands-on practice. Efficient time management ensures a balanced and thorough preparation journey.

10. Final Preparation and Confidence Boost: As the exam approaches, do a final review of key concepts. Retake practice exams to build confidence and get familiar with the exam interface. Make sure you are comfortable navigating the AWS Management Console and that you understand the exam format.
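Step 9's advice to prioritize topics by their weight in the exam guide can be made concrete with a little arithmetic. Below is a minimal Python sketch that splits a fixed study budget across exam domains in proportion to their weights. The function name `allocate_hours` and the 60-hour budget are hypothetical, and the domain weights are an assumption taken to match the SAA-C03 version of the exam guide, so check them against the current official guide before planning around them.

```python
from typing import Dict

# Assumed weights (percent of scored content), taken to match the SAA-C03
# exam guide; confirm them against the current official guide.
DOMAIN_WEIGHTS: Dict[str, int] = {
    "Design Secure Architectures": 30,
    "Design Resilient Architectures": 26,
    "Design High-Performing Architectures": 24,
    "Design Cost-Optimized Architectures": 20,
}


def allocate_hours(total_hours: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Split whole study hours across domains in proportion to their weights.

    Uses the largest-remainder method so the result always sums to total_hours.
    """
    total_weight = sum(weights.values())
    hours: Dict[str, int] = {}
    remainders: Dict[str, int] = {}
    for domain, weight in weights.items():
        # Integer division gives each domain its floor share; the remainder
        # records how close it came to earning one more hour.
        hours[domain], remainders[domain] = divmod(total_hours * weight, total_weight)
    # Hand the hours lost to rounding down to the largest remainders first
    # (ties keep dictionary order, since Python's sort is stable).
    leftover = total_hours - sum(hours.values())
    for domain in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        hours[domain] += 1
    return hours
```

For a 60-hour budget, `allocate_hours(60, DOMAIN_WEIGHTS)` returns 18, 16, 14, and 12 hours for the four domains: the exact shares are 18, 15.6, 14.4, and 12, and the one hour lost to rounding down goes to the 15.6 share. Rounding this way keeps the plan summing to the budget you actually have.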

In conclusion, earning the AWS Certified Solutions Architect - Associate certification requires strategic planning and dedicated effort. Success is not merely about passing an exam but about acquiring practical skills that apply in real-world scenarios. Approach your preparation with commitment, hands-on practice, and a mindset of continuous learning within the ever-evolving AWS ecosystem. By doing so, you are not just preparing for a certification; you are laying the foundation for a successful career as an AWS Solutions Architect.

2 notes

Text

tng update time!! i'm behind, oops, four episodes this time. saturday we did "the vengeance factor" and "the defector" and last night we did "the hunted" and "the high ground"

SIDE BAR one of those first two eps has flashing lights and i forgot which one. if you know PLEEEASE tell me so i can put it in the warnings section of my spreadsheet :(

the vengeance factor: surprisingly good! i keep being surprised when tng is good fr but we are in it now ig

i like how chill riker and deanna are when one of them has a passing interest in someone else. they're not totally exes because she'll still hold his hand and call him beloved when he's dying, but neither are they together, especially in a monogamous way. i'm telling you they're polyam fr put worf in that sandwich!!!

once again i thought i knew where something was going and once again i was wrong. i was so sure riker would tell this blonde assassin chick that he was so tall and so gentle and didn't she want to come to the side of peace for him? but she was like actually no <3 and made him kill her fr. he was so fucked up about it. second close encounter of the space babes for riker where he has potentially accumulated trauma points. we will see if this trend develops further or if he remains down to clown

the defector: i kind of loved this one. unfortunately i think those giant square shoulders on the romulan uniforms fuck.

the shakespeare at the start of this one was wack but i'm happy i identified patrick stewart as one of the extras. by his voice, naturally

tng seems to be in the era of actually letting bad and fucked up things happen. i felt so awful for this romulan dude by the end and THEN to find out it was a trick made it even worse.

and what REALLY fucks me up about this one, aside from the obvious, is that obviously the letter is never going to get delivered to romulus. we all know that, right? according to star trek (2009) which takes place in 2258, spock prime tells us that in 2387 ("129 years from now...") romulus bites it. tng s3 takes place in 2366. in 21 short years romulus is toast and there's no way things get peaceful enough before then that they get to deliver this letter. this dude was like i did it all so my daughter would grow up AND SHE'S NOT GROWING UP, HOMIE. she's getting VAPORIZED with nero's wife!!!

the hunted: there was a brainwashed supersoldier with ptsd in this episode and i don't want to talk about it

no i'm just kidding we can talk about it. soldier aside, it was a good political episode re: america's general lack of concern for veterans once they come home. hilariously awful that nothing has changed since 1990 or whenever this aired.

one of my favorite parts of this episode was near the beginning when picard was SHOCKED, almost comically so, that this soldier guy had eluded THEEE enterprise. catherine said it was because the enterprise is a bucket of bolts (and i heap curses upon her for this disrespect) but i said it was because they left it to worf, wesley, and data. and as much as i deeply adore all 3 characters there was not one adult in that situation. love and light.

the fight scenes in this were VERY exciting. i loved watching them all run around trying to outsmart each other. a fun adventure, a fine set of ethics, and a little brainwashed guy. 10/10

the high ground: i'll be honest, after one heavy political episode and the previous two before that having been "if two guys were on the moon and one of them killed the other with a rock would that be fucked up or what" kind of episodes i was NOT in the mood for another Political Statement, especially when they um. fell just a LEETLE bit short of the mark. i wanted something light to space out the heaviness a little

HOWEVER...beverly crusher.

my GIRRRLLLL i did NOT know she had it in her!!!!!!

she popped the fuck off in this episode!!! she was GREAT! did you guys know gates mcfadden could ACT? i genuinely did not know! but she can and she did! choking back tears when wesley was threatened! cold fury at her captors! you could genuinely believe mister terrorist could fall in love with a woman like that

so much of the time beverly has been A Woman or A Mother that she lacked any other sort of personality. and when she did have a personality it seemed that the people writing her were always trying to make her enough like bones that we like her but not so much like him that she feels like a bad knock-off (like pulaski) and then they never hit the mark

but THIS EPISODE...she did it. i really wasn't thinking about bones at all, except for the beginning when she refused to leave the wounded even in the interest of her own safety. she was incredible. i was shocked. where has this woman been for the past two and a half seasons?!

that said, the uh. statement in this episode seemed a little vague. "terrorism bad"? sure yeah but what else? are we saying "don't sympathize with terrorists because they're assholes" or are we saying "people only resort to terrorism when other avenues have been closed off to them"? why is the terrorist so handsome? why is riker cooperating with the cops? i like that they were like "you can't just come in here and deliver medical supplies to ONE SIDE and claim to be neutral" bc so true re: real life world superpowers but like then what? at the end, when the kid put down his gun but that fucking cop lady killed the handsome terrorist, should riker not have gone "you see those people as savages but today you were the only one killing people"?

like this episode seemed to have a lot of questions and ideas to put forth and then answered/resolved absolutely zero of them. i'd give it a pass if not for beverly. again, she was fucking incredible

anyway, tonight we do "deja q" (DREAD.........) and "a matter of perspective."

#personal#star trek blogging#tng lb#i'm finally a beverly enjoyer..........#this means there's no one left on the main cast that i dislike. HUGE feat#i never thought i'd get here#might be time to do another character ranking soon

5 notes

Text

PHP AWS S3 - How to Use AWS S3 Bucket in PHP to Store and Manage Files

Quick Learn & Get Code Samples for PHP AWS S3: how to use an AWS S3 bucket in PHP to store and manage files. The site offers programming sample code and demo videos for PHP AWS S3, Laravel, and API development, along with other web development topics.
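The tutorial linked in this post is written in PHP, and its code isn't reproduced here. As a rough analogue of the same store/read/list/delete workflow, here is a minimal Python sketch against the boto3 S3 client. It assumes boto3 is installed and AWS credentials are configured; the helper names are hypothetical, and the client is passed in as a parameter so the helpers can be exercised without touching a real bucket.

```python
"""Minimal S3 file-management helpers (sketch).

Assumes a boto3 S3 client, e.g. boto3.client("s3"), with credentials
already configured. Helper names here are hypothetical.
"""
from typing import Any, Dict, List


def store_file(s3: Any, bucket: str, key: str, data: bytes) -> None:
    # PutObject creates the object, or overwrites one with the same key.
    s3.put_object(Bucket=bucket, Key=key, Body=data)


def read_file(s3: Any, bucket: str, key: str) -> bytes:
    # GetObject returns a streaming body; read() loads the whole object.
    response = s3.get_object(Bucket=bucket, Key=key)
    return response["Body"].read()


def list_keys(s3: Any, bucket: str, prefix: str = "") -> List[str]:
    # ListObjectsV2 returns at most 1,000 keys per call, so keep following
    # the continuation token until the listing is no longer truncated.
    keys: List[str] = []
    kwargs: Dict[str, str] = {"Bucket": bucket, "Prefix": prefix}
    while True:
        response = s3.list_objects_v2(**kwargs)
        # "Contents" is omitted entirely when nothing matches the prefix.
        keys.extend(obj["Key"] for obj in response.get("Contents", []))
        if not response.get("IsTruncated"):
            return keys
        kwargs["ContinuationToken"] = response["NextContinuationToken"]


def delete_file(s3: Any, bucket: str, key: str) -> None:
    # DeleteObject also reports success when the key does not exist.
    s3.delete_object(Bucket=bucket, Key=key)
```

In practice you would create the client with `s3 = boto3.client("s3")` and call, for example, `store_file(s3, "my-example-bucket", "docs/hello.txt", b"hello")`, where the bucket name is a placeholder. Passing the client in, rather than creating it inside each helper, also makes it easy to substitute a stub in tests.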

#PHP AWS S3#How to Use AWS S3 Bucket in PHP to Store#Integrate Moneris Payment Gateway#Sample Code Video Demo

0 notes