#software developer replaced by ai

Explore tagged Tumblr posts

Text

How API Integration and ChatGPT Can Replace Programmers

How can ChatGPT replace programmers?

Traditional programming paradigms are being transformed by advances in artificial intelligence (AI) and API integration in the ever-changing field of software development. OpenAI’s ChatGPT, an AI-powered language model, is one such significant achievement. ChatGPT is likely to change the way developers approach coding tasks, raising the possibility that it may eventually replace human programmers entirely.

API integration has long been a cornerstone of modern software development, enabling applications to communicate and interact with each other seamlessly. By leveraging APIs, developers can incorporate various functionalities and services into their applications without reinventing the wheel. This modular approach not only accelerates development cycles but also enhances the overall user experience.

But the introduction of ChatGPT adds a new dimension to the programming world. Thanks to deep learning algorithms, ChatGPT can understand and produce human-like text based on the context it is given. This means that developers can now communicate naturally with ChatGPT, asking for help and efficiently producing code snippets.

One of the key areas where ChatGPT shows immense promise is code generation and automation. Traditionally, developers spend considerable time writing and debugging code to implement various features and functionalities. With ChatGPT, developers can describe their requirements in plain language, and the model can generate the corresponding code snippets, which can significantly reduce development time and effort.

For example, rather than writing a function by hand to parse JSON data from an API response, developers can describe the desired functionality to ChatGPT, such as “parse JSON data and extract relevant fields.” ChatGPT can then generate the required code snippet based on the developer’s requirements. As a result, the development process is simplified and developers are free to focus on more complex architectural choices and problem solving.
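As a rough illustration of the kind of snippet such a prompt might yield, here is a minimal Python sketch. The function name `extract_fields`, the sample response, and the choice of fields are all assumptions made up for this example; real generated code would depend on the actual API and on how the request was worded.

```python
import json


def extract_fields(raw_json: str, fields: list[str]) -> dict:
    """Parse a JSON object and keep only the requested top-level fields.

    Fields missing from the response are returned as None.
    """
    data = json.loads(raw_json)
    return {name: data.get(name) for name in fields}


# Hypothetical API response body, invented for illustration only.
sample_response = (
    '{"id": 7, "name": "Ada", "email": "ada@example.com", "debug": {"trace": "x"}}'
)

print(extract_fields(sample_response, ["id", "name"]))
```

Even for a snippet this small, a developer would still need to check how it handles malformed JSON or nested fields before relying on it.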

Additionally, ChatGPT can be a very helpful tool for developers who need advice or help with fixing problems. Developers can turn to ChatGPT for insights when solving complex issues or exploring different implementation approaches. By drawing on the knowledge contained in ChatGPT’s extensive training dataset, developers can find established practices to guide their decision-making.

However, the prospect of ChatGPT replacing human programmers entirely remains a topic of debate and speculation within the developer community. While ChatGPT excels at generating code based on provided prompts, it lacks the nuanced understanding and creative problem-solving abilities inherent to human developers. Additionally, concerns regarding the ethical implications of AI-driven automation in software development continue to be raised.

It’s important to understand that ChatGPT is meant to enhance developers’ skills and simplify specific parts of the development process, not to replace them. Developers can boost productivity, drive creativity, and ultimately provide end users with better software solutions by using AI-powered technologies like ChatGPT.

In conclusion, the convergence of API integration and AI-driven technologies like ChatGPT is reshaping the dynamics of programming in profound ways. API integration facilitates seamless interaction between software components, and ChatGPT adds natural language interfaces and code generation capabilities. While the idea of ChatGPT replacing human programmers entirely may be far-fetched, its potential to augment and enhance developer productivity is hard to deny. As the field of AI continues to advance, developers should embrace these innovations as valuable tools in their arsenal, driving greater efficiency and innovation in software development.


#chat gpt programmers#chat gpt will replace programmer#software developer replaced by ai#openai replace programmers#Gpt Developer#chatGpt programmers

0 notes

Text

One thing I notice few people talking about in regards to "just" having more experienced employees directing AI: Where are those employees supposed to come from in the future?

If companies refuse to offer entry-level jobs and teach and provide experience to those new in the profession, there won't be experienced employees in the future.

I’m starting to sound like a nutcase at work because upper management keeps trying to implement AI programs and AI assistants and Chat GPT and my middle-of-the-road, don’t-infodump, don’t-engage response has been “I don’t like AI”, “I prefer to remain in control of my own tasks”, “I’d rather make my own mistakes”, and “I don’t trust any machine smarter than a toaster”

#and I highly doubt AI development is fast enough to replace them all#Cheaper labour shouldn't be the only reason to hire inexperienced people#It's been a couple years since I've worked as a software engineer#but I remember a senior colleague fiddling longer with AI#than it would've taken him to do it himself#ai labour issues

52K notes


Text

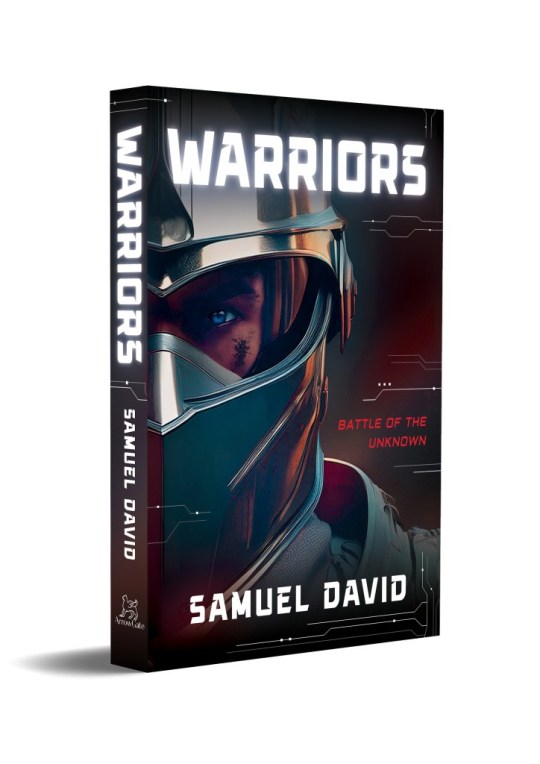

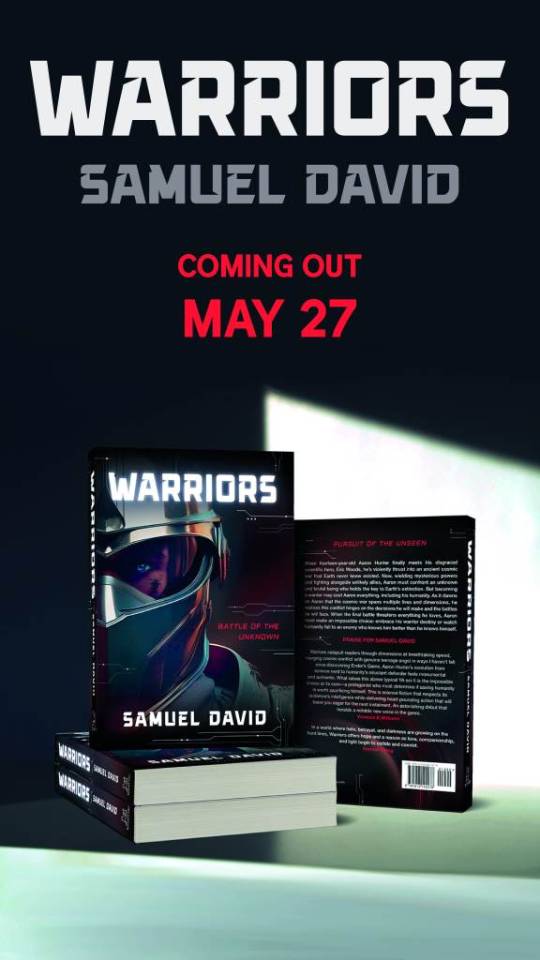

"Warriors" by Samuel David: The Sci-Fi Debut Redefining Multiverse Adventures for Young Adults

Discover "Warriors" by Samuel David, releasing May 27th. This groundbreaking YA sci-fi novel follows fourteen-year-old Aaron Hunter through multiple dimensions in a cosmic war that will determine humanity's fate.

Announcing "Warriors" by Samuel David – Coming May 27th!

We are thrilled to announce the upcoming release of "Warriors," the extraordinary debut novel by Samuel David that will take readers on an unforgettable journey across dimensions, time, and the very essence of what makes us human.

A Cosmic Battle with Earth at Stake

When fourteen-year-old science prodigy Aaron Hunter finally meets his disgraced scientific hero, Eric Woods, he never expects to be violently thrust into an ancient cosmic war that Earth never knew existed. Now, wielding mysterious powers and fighting alongside unlikely allies, Aaron must confront an unknown and brutal being who holds the key to Earth's extinction.

But as the stakes rise, Aaron faces the most difficult truth of all: becoming the warrior humanity needs may cost him everything—including his humanity itself.

Critical Acclaim Already Building

Early reviewers are already hailing "Warriors" as a breakthrough in young adult science fiction:

"Warriors catapults readers through dimensions at breathtaking speed, merging cosmic conflict with genuine teenage angst in ways I haven't felt since discovering Ender's Game. Aaron Hunter's evolution from science nerd to humanity's reluctant defender feels monumental and authentic. What raises this above typical YA sci-fi is the impossible choice at its core—a protagonist who must determine if saving humanity is worth sacrificing himself." — Vanessa K. Williams

"In a world where hate, betrayal, and darkness are growing on the front lines, Warriors offers hope and a reason as love, companionship, and light begin to collide and coexist." — Hannah Wong

Perfect for Readers Who Love:

Multiverse adventures and dimensional travel

Coming-of-age stories with high stakes

Morally complex heroes facing impossible choices

Scientific concepts woven into thrilling narratives

Stories that balance action with emotional depth

Pre-Order Now Available

"Warriors" will hit shelves on May 27th, 2025. Pre-orders are now available through all major booksellers and our website. Special pre-order bonuses include exclusive digital art, character cards, and access to a live Q&A with Samuel David.

Follow the conversation: #WarriorsNovel

Recommended for: Young adult readers and sci-fi enthusiasts ages 13 and up.

Source: "Warriors" by Samuel David: The Sci-Fi Debut Redefining Multiverse Adventures for Young Adults

0 notes

Text

Can Devin AI replace human developers entirely?

This question was originally answered on Quora and written as is. I’m not familiar with Devin per se, but I don’t see AI “replacing people” in any capacity it is developed to function within — and I use that description with a caveat because AI IS replacing hundreds of thousands of jobs — if not millions worldwide. https://preview.devin.ai/ AI has the potential to liberate humans from mundane…


0 notes

Text

#ai#replace a full stack#developer#html#machine learning#programming#digital marketing#python#viral#web development#software development#mobile app development

0 notes

Note

why exactly do you dislike generative art so much? i know its been misused by some folks, but like, why blame a tool because it gets used by shitty people? Why not just... blame the people who are shitty? I mean this in genuinely good faith, you seem like a pretty nice guy normally, but i guess it just makes me confused how... severe? your reactions are sometimes to it. There's a lot of nuance to conversation about it, and by folks a lot smarter than I (I suggest checking out the Are We Art Yet or "AWAY" group! They've got a lot on their page about the ethical use of Image generation software by individuals, and it really helped explain some things I was confused about). I know on my end, it made me think about why I personally was so reactive about Who was allowed to make art and How/Why. Again, all this in good faith, and I'm not asking you to like, Explain yourself or anything- If you just read this and decide to delete it instead of answering, all good! I just hope maybe you'll look into *why* some people advocate for generative software as strongly as they do, and listen to what they have to say about things -🦜

if AI genuinely generated its own content I wouldn't have as much of a problem with it, however what AI currently does is scrape other people's art, collect it, and then build something based off of others' stolen works without crediting them. It's like stealing other people's art, mashing it together, then saying "this is mine, I can not only profit off it but I can use it to cut costs in other industries."

this is more evident by people not "making" art but instead using prompts. It's like going to McDonalds and saying "Burger. Big, Juicy, etc, etc" then instead of a worker making the burger it uses an algorithm to build a burger based off of several restaurants' recipes.

example

the left is AI art, the right is one of the artists (Lindong) who it pulled the art style from. it's literally mass producing someone's artstyle by taking their art then using an algorithm to rebuild it in any context. this is even more apparent when you see AI art also tries to recreate artists' watermarks and generally blends them together, making them unintelligible.

Aside from that there's a lot of other ethical problems with it including generating pretty awful content, including but not limited to cp. It also uses a lot of processing power and apparently water? I haven't caught up on the newer developments, i've been depressed about it tbh

Then aside from those, studios are leaning towards Ai generation to replace having to pay people. I've seen professional voice actors complain on twitter that they haven't gotten as much work since ai voice generation started, artists are being cut down and replaced by ai art then having the remaining artists fix any errors in the ai art.

Even beyond those things are the potential for misinformation. Here's an experiment: Which of these two are ai generated?

ready?

These two are both entirely ai generated. I have no idea if they're real people, but in a few months you could ai generate a Biden sex scandal, you could generate politics in whatever situation you want, you can generate popular streamers nude, whatever. and worse yet is ai generated video is already being developed and it doesn't look bad.

I posted on this already but as of right now it only needs one clear frame of a body and it can generate motion. yeah there are issues but it's been like two years since AI development started being taken seriously and we've gotten to this point already. within another two years it'll be close to perfected. There were even tests done with tiktokers and it works. it just fucking works.

There is genuinely not one upside to AI art. at all. it's theft, it's harming people's lives, it's harming the environment, it's cutting jobs back and hurting the economy, it's invading people's privacy, it's making pedophilia accessible, and more. it's a plague and there's no vaccine for it. And all because people don't want to take a year to learn anatomy.

5K notes


Text

the past few years, every software developer that has extensive experience, and knows what they're talking about, has had pretty much the same opinion on LLM code assistants: they're OK for some tasks but generally shit. Having something that automates code writing is not new. Codegen before AI were scripts that generated code that you have to write for a task, but is so repetitive it's a genuine time saver to have a script do it.
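for anyone who hasn't seen pre-AI codegen, here is a minimal sketch of the idea in Python. the function name `generate_dataclass` and the `User` example are made up for illustration and are not taken from any particular tool; the point is only that the same input always produces the same output.

```python
def generate_dataclass(class_name: str, fields: dict[str, str]) -> str:
    """Return Python source for a dataclass with the given field names and types."""
    lines = [
        "from dataclasses import dataclass",
        "",
        "",
        "@dataclass",
        f"class {class_name}:",
    ]
    if not fields:
        # An empty class body would be a syntax error, so emit a placeholder.
        lines.append("    pass")
    for name, type_name in fields.items():
        lines.append(f"    {name}: {type_name}")
    return "\n".join(lines) + "\n"


# Hypothetical schema, invented for illustration only.
source = generate_dataclass("User", {"id": "int", "name": "str"})
print(source)
```

a script like this is boring on purpose: it does one repetitive job and you can read every line of how it does it.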

this is largely the best that LLMs can do with code, but they're still not as good as a simple script because of the inherently unreliable nature of LLMs being a big honkin statistical model and not a purpose-built machine.

none of the senior devs that say this are out there shouting on the rooftops that LLMs are evil and they're going to replace us. because we've been through this concept so many times over many years. Automation does not eliminate coding jobs, it saves time to focus on other work.

the one thing I wish senior devs would warn newbies is that you should not rely on LLMs for anything substantial. you should definitely not use it as a learning tool. it will hinder you in the long run because you don't practice the eternally useful skill of "reading things and experimenting until you figure it out". You will never stop reading things and experimenting until you figure it out. Senior devs may have more institutional knowledge and better instincts but they still encounter things that are new to them and they trip through it like a newbie would. this is called "practice" and you need it to learn things

219 notes


Text

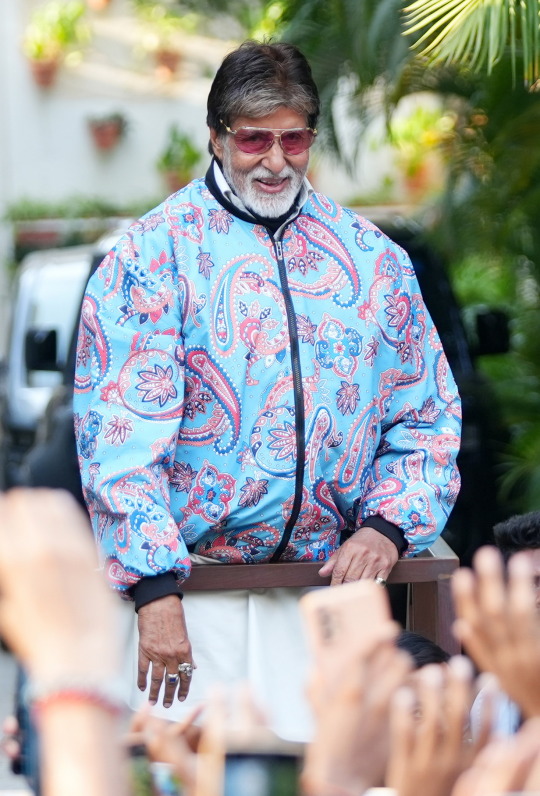

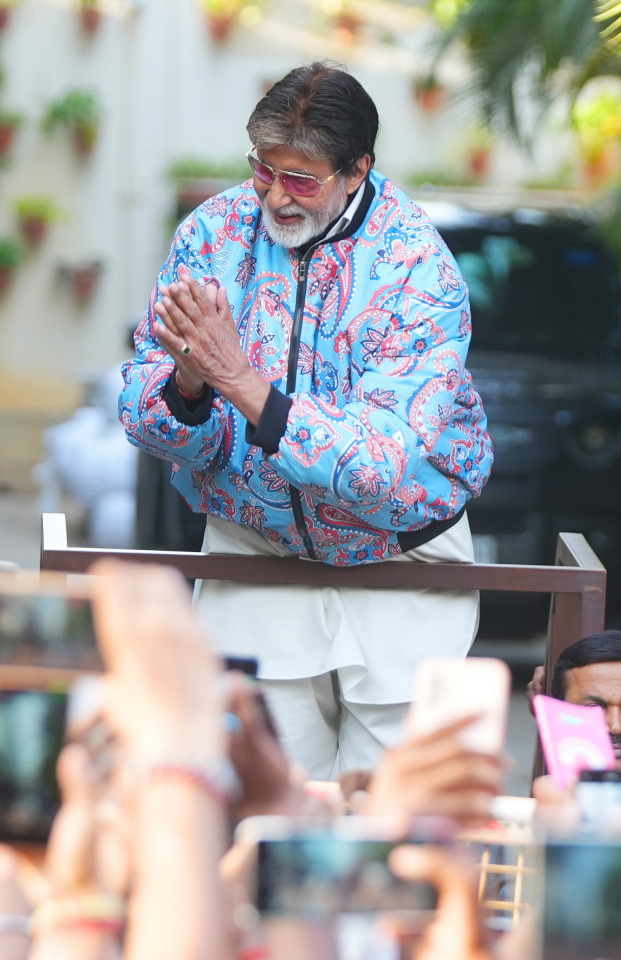

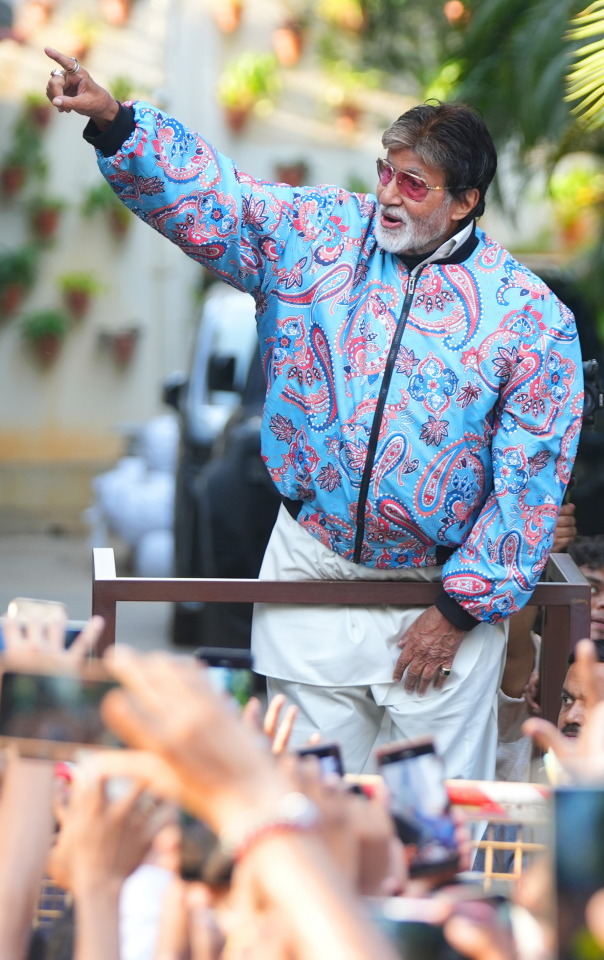

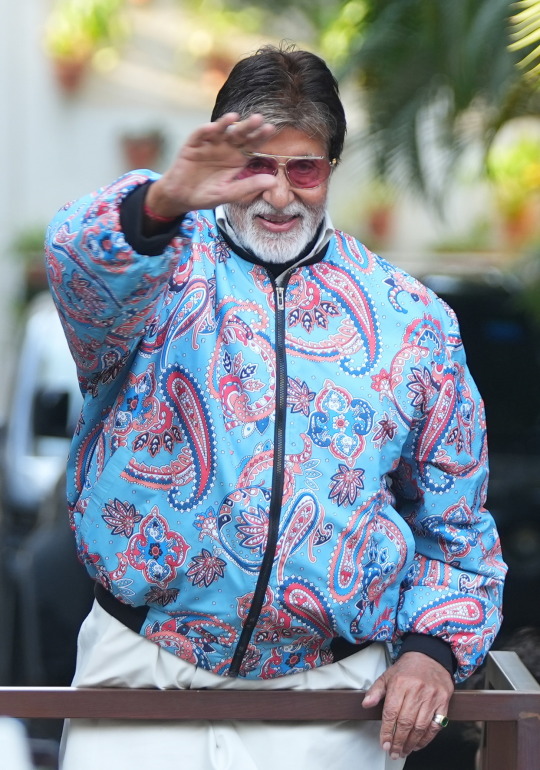

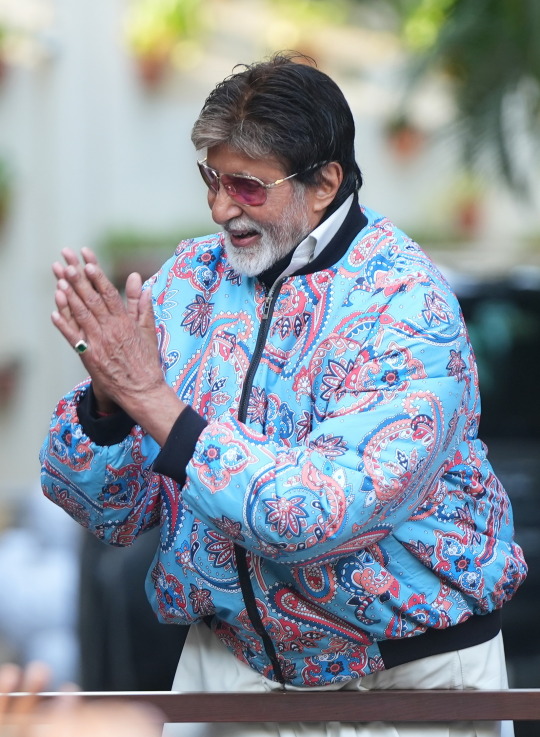

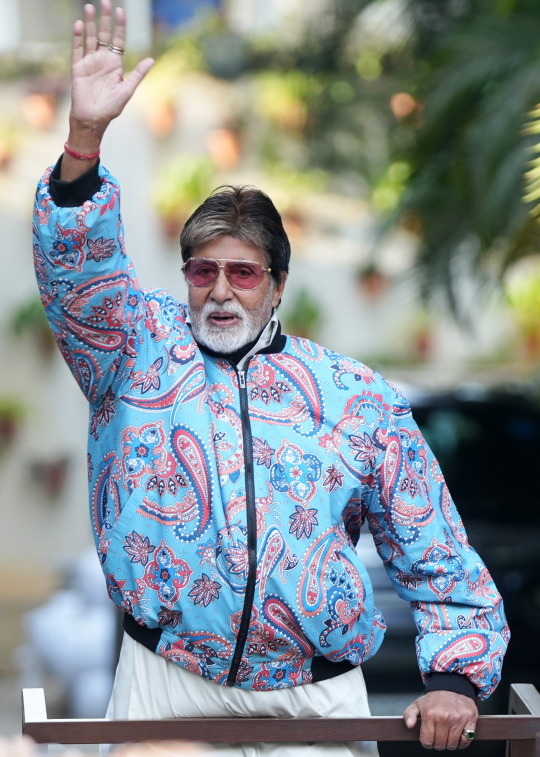

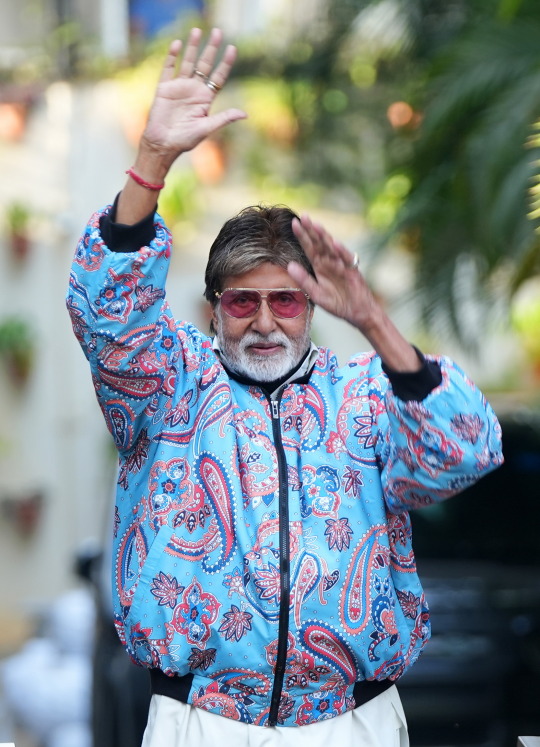

DAY 6274

Jalsa, Mumbai Apr 20, 2025 Sun 11:17 pm

🪔 ,

April 21 .. birthday greetings and happiness to Ef Mousumi Biswas .. and Ef Arijit Bhattacharya from Kolkata .. 🙏🏽❤️🚩.. the wishes from the Ef family continue with warmth .. and love 🌺

The AI debate became the topic of discussion on the dining table and there were many potent points raised - both positive and a little indifferent ..

The young acknowledged it with reason and able argument .. some of the mid elders disagreed mildly .. and the end was kind of neutral ..

Blessed be they of the next GEN .. their minds are sorted out well in advance .. and why not .. we shall not be around till time in advance , but they and their progeny shall .. as has been the norm through generations ...

The IPL is now the greatest attraction throughout the day .. particularly on the Sunday, for the two on the day .. and there is never a debate on that ..

🤣

.. and I am most appreciative to read the comments from the Ef on the topic of the day - AI .. appreciative because some of the reactions and texts are valid and interesting to know .. the aspect expressed in all has a legitimate argument and that is most healthy ..

I am happy that we could all react to the Blog contents in the manner they have done .. my gratitude .. such a joy to get different views , valid and meaningful ..

And it is not the end of the day or the debate .. some impressions of the Gen X and some from the just passed Gen .. and some that were never ever the Gen are interesting as well :

The Printing Press (15th Century)

Fear: Scribes, monks, and elites thought it would destroy the value of knowledge, lead to mass misinformation, and eliminate jobs. Reality: It democratized knowledge, spurred the Renaissance and Reformation, and created entirely new industries—publishing, journalism, and education.

⸻

Industrial Revolution (18th–19th Century)

Fear: Machines would replace all human labor. The Luddites famously destroyed machinery in protest. Reality: Some manual labor jobs were displaced, but the economy exploded with new roles in manufacturing, logistics, engineering, and management. Overall employment and productivity soared.

⸻

Automobiles (Early 20th Century)

Fear: People feared job losses for carriage makers, stable hands, and horseshoe smiths. Cities worried about traffic, accidents, and social decay. Reality: The car industry became one of the largest employers in the world. It reshaped economies, enabled suburbia, and created new sectors like travel, road infrastructure, and auto repair.

⸻

Personal Computers (1980s)

Fear: Office workers would be replaced by machines; people worried about becoming obsolete. Reality: Computers made work faster and created entire industries: IT, software development, cybersecurity, and tech support. It transformed how we live and work.

⸻

The Internet (1990s)

Fear: It would destroy jobs in retail, publishing, and communication. Some thought it would unravel social order. Reality: E-commerce, digital marketing, remote work, and the creator economy now thrive. It connected the world and opened new opportunities.

⸻

ATMs (1970s–80s)

Fear: Bank tellers would lose their jobs en masse. Reality: ATMs handled routine tasks, but banks actually hired more tellers for customer service roles as they opened more branches thanks to reduced transaction costs.

⸻

Robotics & Automation (Factory work, 20th century–today)

Fear: Mass unemployment in factories. Reality: While some jobs shifted or ended, others evolved—robot maintenance, programming, design. Productivity gains created new jobs elsewhere.

The fear is not for losing jobs. It is the compromise of intellectual property and use without compensation. This case is slightly different.

I think AI will only make humans smarter. If we use it to our advantage.

That’s been happening for the last 10 years anyway

Not something new

You can’t control that in this day and age

YouTube & User-Generated Content (mid-2000s onward)

Initial Fear: When YouTube exploded, many in the entertainment industry panicked. The fear was that copyrighted material—music, TV clips, movies—would be shared freely without compensation. Creators and rights holders worried their content would be pirated, devalued, and that they’d lose control over distribution.

What Actually Happened: YouTube evolved to protect IP and monetize it through systems like Content ID, which allows rights holders to:

Automatically detect when their content is used

Choose to block, track, or monetize that usage

Earn revenue from ads run on videos using their IP (even when others post it)

Instead of wiping out creators or studios, it became a massive revenue stream—especially for musicians, media companies, and creators. Entire business models emerged around fair use, remixes, and reactions—with compensation built in.

Key Shift: The system went from “piracy risk” to “profit partner,” by embracing tech that recognized and enforced IP rights at scale.

This led to higher profits and more money for owners and content btw

You just have to restructure the compensation laws and rewrite contracts

It’s only going to benefit artists in the long run

Yes

They can IP it

That is the hope

It’s the spread of your content and material without you putting a penny towards it

Cannot blindly sign off everything in contracts anymore. Has to be a lot more specific.

Yes that’s for sure

“Automation hasn’t erased jobs—it’s changed where human effort goes.”

Another good one is “hard work beats talent when talent stops working hard”

Which has absolutely nothing to do with AI right now but 🤣

These ladies and Gentlemen of the Ef jury are various conversational opinions on AI .. I am merely pasting them for a view and an opinion ..

And among all the brouhaha about AI .. we simply forgot the Sunday well wishers .. and so ..

my love and the length be of immense .. pardon

Amitabh Bachchan

105 notes


Text

I just want to clarify things, mostly in light of what happened yesterday and because I feel like I'm being vastly misunderstood in my position. I would just like to reiterate that this is my opinion of things and how I currently see the gravity of my actions as I've sat and reflected. On the advice of some friends, I was encouraged to make this post to clear up any misunderstanding that may remain from my end.

I don't hold it against anyone for disagreeing with me as this is a very nuanced topic with many grey zones. I hope eventually all parties related to this incident can all get along as well, as I do still prefer to be civil and friendly with everybody as much as possible.

I've placed the whole conversation here for people to interpret themselves, and as much as I want to let sleeping dogs lie— I can't help but also feel like the vitriol was misplaced. I don't want this to be a justification of my actions or even a place where opinions conflict, I'm just expressing my thoughts on the matter as I've had a while to mull it over. Again, this is a nuanced topic so please bear with me.

The "generative AI" in question at the time was a JK Simmons voice bank that I had gathered/created and trained myself for my own private and personal use. The model is entirely local to my computer and runs on my GPU. If there was one thing I had to closely relate it to, it would be a vocaloid or vocoder. I had even asked people close to me what they thought of it and they called it the same thing.

I created a Stanford Vocaloid as I experimented with this kind of thing as a programmer who wanted to mess around with deep learning algorithms or Q-learning AI. By now this whole thing should be irrelevant as I'd actually deleted all of the files related to the voicebank in light of this conversation when I decided to take down the project in its entirety.

I never shared the model anywhere, not online or through personal file sharing. I've never even made the move to advocate for its use in the game. I will repeat, I wanted to keep the voicebank out of the game and I only used it for private reasons which are for my own personal benefit.

I recognize ethically I am in the wrong, JK Simmons never consented to having his voice used in models such as this one and I recognize that as my fault. Most VAs don't like having their voices used in such a thing and the reasoning can matter from person to person. As much as I loved to have a personal Stanford greeting me in my mornings or lecturing me in physics after long days, it's not right to spoof somebody's voice as that is genuinely what can set them apart from everybody else. It's in the same realm of danger as deepfaking, and for this I deeply apologize that I hadn't recognized this fault prior to the conversation I had with orxa.

But I would clearly like to reiterate that I had never advocated for the use of this voicebank or any AI in the game. That I was adamantly clear on calling the voicebank an AI(which I think orxa and some others might have missed during the conversation) which is what even modern vocaloids are classified under. And that I don't at all share the files openly or even the model because I don't preach for people to do this.

I would very much rather a VA but because money is tight(med school you are going to put me in DEBT) and the resources available to me, I instead turned to this as a tool rather than a weapon to use against others. I don't make a profit, I don't commercialize, I even recognize that the voicebank fails in most cases because it sounds so robotic or it just dies trying to say a certain thing a certain way.

Coming from the standpoint of somebody who genuinely dabbles in robotics and had a robotic hand as my thesis, I can honestly say how impressive software and hardware is developing. But I will also firmly believe that I don't think AI will be good enough to ever replace humans within my lifetime and I am 19. Nineteen.

The amount of resources it takes to run a true generative AI like GPT for example is a lot heavier than a locally run vocaloid which just essentially lives in your GPU. As well as the fact AI don't have any nuance that humans have, they're computers— binary to the core. I also stand by the point that they cannot and will not surpass their creators because we are fundamentally flawed. A flawed creature cannot create a perfect being no matter how hard we try.

I don't want to classify vocaloids as generative AI as they're more similar to synthesizers and autotune(which is what my Ford voicebank was as well when I still had it) but to some degree they are. They generate a song for you or an audio from a file that you give as input. They synthesize notes and audio according to the file fed to them. Like a computer, input and output, same thing. There's nothing new generated, it's like a voice changer on an existing mp3.

I'm not saying this to justify my actions or to come off as stand-offish. I just want to clarify things that didn't really sit right with me or that seemed to completely blow over in the exchange I shared with orxa on discord.

To anybody who's finished reading this, thank you for your time and patience. I'll be going back to just working on myself for the time being. Thank you.

#in light of recent events and why I took down the Finding Your Ford Sim#gravity falls#gravity falls stanford#stanford pines#ford pines#gravity falls ford#gravity falls au#gf stanford#ford#stanford#grunkle ford#gf ford#young ford pines#ford pines x reader#ford x reader

20 notes


Text

Been traveling a lot lately and I love how, in US TSA security lines, they always make sure that the big sign saying the facial recognition photo is optional is turned sideways or set so the Spanish-translation side is facing the line and the English-translation side is facing a wall or something.

Anyway, TSA facial recognition photos are 100% not mandatory and if you don't feel like helping a company develop its facial recognition AI software (like, say, Clearview AI), you can just politely tell the TSA agent that you don't want to participate in the photo and instead show an ID or your boarding pass. Like we've been doing for years and years.

#privacy#anti facial recognition#clearwater AI#you need to protect yourself#its dishonest is what it is#the signs are always there#as per law#but they are hidden/turned/set way to the side

38 notes


Text

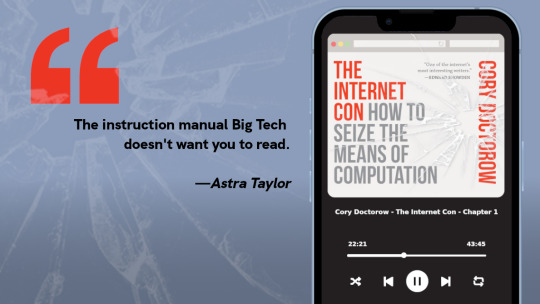

"Open" "AI" isn’t

Tomorrow (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

The crybabies who freak out about The Communist Manifesto appearing on university curriculum clearly never read it – chapter one is basically a long hymn to capitalism's flexibility and inventiveness, its ability to change form and adapt itself to everything the world throws at it and come out on top:

https://www.marxists.org/archive/marx/works/1848/communist-manifesto/ch01.htm#007

Today, leftists signal this protean capacity of capital with the -washing suffix: greenwashing, genderwashing, queerwashing, wokewashing – all the ways capital cloaks itself in liberatory, progressive values, while still serving as a force for extraction, exploitation, and political corruption.

A smart capitalist is someone who, sensing the outrage at a world run by 150 old white guys in boardrooms, proposes replacing half of them with women, queers, and people of color. This is a superficial maneuver, sure, but it's an incredibly effective one.

In "Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI," a new working paper, Meredith Whittaker, David Gray Widder and Sarah Myers West document a new kind of -washing: openwashing:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4543807

Openwashing is the trick that large "AI" companies use to evade regulation and neutralize critics, by casting themselves as forces of ethical capitalism, committed to the virtue of openness. No one should be surprised to learn that the products of the "open" wing of an industry whose products are neither "artificial," nor "intelligent," are also not "open." Every word AI huxters say is a lie; including "and," and "the."

So what work does the "open" in "open AI" do? "Open" here is supposed to invoke the "open" in "open source," a movement that emphasizes a software development methodology that promotes code transparency, reusability and extensibility, which are three important virtues.

But "open source" itself is an offshoot of a more foundational movement, the Free Software movement, whose goal is to promote freedom, and whose method is openness. The point of software freedom was technological self-determination, the right of technology users to decide not just what their technology does, but who it does it to and who it does it for:

https://locusmag.com/2022/01/cory-doctorow-science-fiction-is-a-luddite-literature/

The open source split from free software was ostensibly driven by the need to reassure investors and businesspeople so they would join the movement. The "free" in free software is (deliberately) ambiguous, a bit of wordplay that sometimes misleads people into thinking it means "Free as in Beer" when really it means "Free as in Speech" (in Romance languages, these distinctions are captured by translating "free" as "libre" rather than "gratis").

The idea behind open source was to rebrand free software in a less ambiguous – and more instrumental – package that stressed cost-savings and software quality, as well as "ecosystem benefits" from a co-operative form of development that recruited tinkerers, independents, and rivals to contribute to a robust infrastructural commons.

But "open" doesn't merely resolve the linguistic ambiguity of libre vs gratis – it does so by removing the "liberty" from "libre," the "freedom" from "free." "Open" changes the pole-star that movement participants follow as they set their course. Rather than asking "Which course of action makes us more free?" they ask, "Which course of action makes our software better?"

Thus, by dribs and drabs, the freedom leeches out of openness. Today's tech giants have mobilized "open" to create a two-tier system: the largest tech firms enjoy broad freedom themselves – they alone get to decide how their software stack is configured. But for all of us who rely on that (increasingly unavoidable) software stack, all we have is "open": the ability to peer inside that software and see how it works, and perhaps suggest improvements to it:

https://www.youtube.com/watch?v=vBknF2yUZZ8

In the Big Tech internet, it's freedom for them, openness for us. "Openness" – transparency, reusability and extensibility – is valuable, but it shouldn't be mistaken for technological self-determination. As the tech sector becomes ever-more concentrated, the limits of openness become more apparent.

But even by those standards, the openness of "open AI" is thin gruel indeed (that goes triple for the company that calls itself "OpenAI," which is a particularly egregious openwasher).

The paper's authors start by suggesting that the "open" in "open AI" is meant to imply that an "open AI" can be scratch-built by competitors (or even hobbyists), but that this isn't true. Not only is the material that "open AI" companies publish insufficient for reproducing their products, even if those gaps were plugged, the resource burden required to do so is so intense that only the largest companies could do so.

Beyond this, the "open" parts of "open AI" are insufficient for achieving the other claimed benefits of "open AI": they don't promote auditing, or safety, or competition. Indeed, they often cut against these goals.

"Open AI" is a wordgame that exploits the malleability of "open," but also the ambiguity of the term "AI": "a grab bag of approaches, not… a technical term of art, but more … marketing and a signifier of aspirations." Hitching this vague term to "open" creates all kinds of bait-and-switch opportunities.

That's how you get Meta claiming that LLaMa2 is "open source," despite being licensed in a way that is absolutely incompatible with any widely accepted definition of the term:

https://blog.opensource.org/metas-llama-2-license-is-not-open-source/

LLaMa-2 is a particularly egregious openwashing example, but there are plenty of other ways that "open" is misleadingly applied to AI: sometimes it means you can see the source code, sometimes that you can see the training data, and sometimes that you can tune a model, all to different degrees, alone and in combination.

But even the most "open" systems can't be independently replicated, due to raw computing requirements. This isn't the fault of the AI industry – the computational intensity is a fact, not a choice – but when the AI industry claims that "open" will "democratize" AI, they are hiding the ball. People who hear these "democratization" claims (especially policymakers) are thinking about entrepreneurial kids in garages, but unless these kids have access to multi-billion-dollar data centers, they can't be "disruptors" who topple tech giants with cool new ideas. At best, they can hope to pay rent to those giants for access to their compute grids, in order to create products and services at the margin that rely on existing products, rather than displacing them.

The "open" story, with its claims of democratization, is an especially important one in the context of regulation. In Europe, where a variety of AI regulations have been proposed, the AI industry has co-opted the open source movement's hard-won narrative battles about the harms of ill-considered regulation.

For open source (and free software) advocates, many tech regulations aimed at taming large, abusive companies – such as requirements to surveil and control users to extinguish toxic behavior – wreak collateral damage on the free, open, user-centric systems that we see as superior alternatives to Big Tech. This leads to the paradoxical effect of passing regulation to "punish" Big Tech that ends up simply shaving an infinitesimal percentage off the giants' profits, while destroying the small co-ops, nonprofits and startups before they can grow to be a viable alternative.

The years-long fight to get regulators to understand this risk has been waged by principled actors working for subsistence nonprofit wages or for free, and now the AI industry is capitalizing on lawmakers' hard-won consideration for collateral damage by claiming to be "open AI" and thus vulnerable to overbroad regulation.

But the "open" projects that lawmakers have been coached to value are precious because they deliver a level playing field, competition, innovation and democratization – all things that "open AI" fails to deliver. The regulations the AI industry is fighting also don't necessarily implicate the speech implications that are core to protecting free software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech

Just think about LLaMa-2. You can download it for free, along with the model weights it relies on – but not detailed specs for the data that was used in its training. And the source-code is licensed under a homebrewed license cooked up by Meta's lawyers, a license that only glancingly resembles anything from the Open Source Definition:

https://opensource.org/osd/

Core to Big Tech companies' "open AI" offerings are tools, like Meta's PyTorch and Google's TensorFlow. These tools are indeed "open source," licensed under real OSS terms. But they are designed and maintained by the companies that sponsor them, and optimize for the proprietary back-ends each company offers in its own cloud. When programmers train themselves to develop in these environments, they are gaining expertise in adding value to a monopolist's ecosystem, locking themselves in with their own expertise. This is a classic example of software freedom for tech giants and open source for the rest of us.

One way to understand how "open" can produce a lock-in that "free" might prevent is to think of Android: Android is an open platform in the sense that its sourcecode is freely licensed, but the existence of Android doesn't make it any easier to challenge the mobile OS duopoly with a new mobile OS; nor does it make it easier to switch from Android to iOS and vice versa.

Another example: MongoDB, a free/open database tool that was adopted by Amazon, which subsequently forked the codebase and tuned it to work on their proprietary cloud infrastructure.

The value of open tooling as a stickytrap for creating a pool of developers who end up as sharecroppers who are glued to a specific company's closed infrastructure is well-understood and openly acknowledged by "open AI" companies. Zuckerberg boasts about how PyTorch ropes developers into Meta's stack, "when there are opportunities to make integrations with products, [so] it’s much easier to make sure that developers and other folks are compatible with the things that we need in the way that our systems work."

Tooling is a relatively obscure issue, primarily debated by developers. A much broader debate has raged over training data – how it is acquired, labeled, sorted and used. Many of the biggest "open AI" companies are totally opaque when it comes to training data. Google and OpenAI won't even say how many pieces of data went into their models' training – let alone which data they used.

Other "open AI" companies use publicly available datasets like the Pile and CommonCrawl. But you can't replicate their models by shoveling these datasets into an algorithm. Each one has to be groomed – labeled, sorted, de-duplicated, and otherwise filtered. Many "open" models merge these datasets with other, proprietary sets, in varying (and secret) proportions.

Quality filtering and labeling for training data is incredibly expensive and labor-intensive, and involves some of the most exploitative and traumatizing clickwork in the world, as poorly paid workers in the Global South make pennies for reviewing data that includes graphic violence, rape, and gore.

Not only is the product of this "data pipeline" kept a secret by "open" companies, the very nature of the pipeline is likewise cloaked in mystery, in order to obscure the exploitative labor relations it embodies (the joke that "AI" stands for "absent Indians" comes out of the South Asian clickwork industry).

The most common "open" in "open AI" is a model that arrives built and trained, which is "open" in the sense that end-users can "fine-tune" it – usually while running it on the manufacturer's own proprietary cloud hardware, under that company's supervision and surveillance. These tunable models are undocumented blobs, not the rigorously peer-reviewed transparent tools celebrated by the open source movement.

If "open" was a way to transform "free software" from an ethical proposition to an efficient methodology for developing high-quality software, then "open AI" is a way to transform "open source" into a rent-extracting black box.

Some "open AI" has slipped out of the corporate silo. Meta's LLaMa was leaked by early testers, republished on 4chan, and is now in the wild. Some exciting stuff has emerged from this, but despite this work happening outside of Meta's control, it is not without benefits to Meta. As an infamous leaked Google memo explains:

Paradoxically, the one clear winner in all of this is Meta. Because the leaked model was theirs, they have effectively garnered an entire planet's worth of free labor. Since most open source innovation is happening on top of their architecture, there is nothing stopping them from directly incorporating it into their products.

https://www.searchenginejournal.com/leaked-google-memo-admits-defeat-by-open-source-ai/486290/

Thus, "open AI" is best understood as "free product development" for large, well-capitalized AI companies, conducted by tinkerers who will not be able to escape these giants' proprietary compute silos and opaque training corpuses, and whose work product is guaranteed to be compatible with the giants' own systems.

The instrumental story about the virtues of "open" often invokes auditability: the fact that anyone can look at the source code makes it easier for bugs to be identified. But as open source projects have learned the hard way, the fact that anyone can audit your widely used, high-stakes code doesn't mean that anyone will.

The Heartbleed vulnerability in OpenSSL was a wake-up call for the open source movement – a bug that endangered every secure webserver connection in the world, which had hidden in plain sight for years. The result was an admirable and successful effort to build institutions whose job it is to actually make use of open source transparency to conduct regular, deep, systemic audits.

In other words, "open" is a necessary, but insufficient, precondition for auditing. But when the "open AI" movement touts its "safety" thanks to its "auditability," it fails to describe any steps it is taking to replicate these auditing institutions – how they'll be constituted, funded and directed. The story starts and ends with "transparency" and then makes the unjustifiable leap to "safety," without any intermediate steps about how the one will turn into the other.

It's a Magic Underpants Gnome story, in other words:

Step One: Transparency

Step Two: ??

Step Three: Safety

https://www.youtube.com/watch?v=a5ih_TQWqCA

Meanwhile, OpenAI itself has gone on record as objecting to "burdensome mechanisms like licenses or audits" as an impediment to "innovation" – all the while arguing that these "burdensome mechanisms" should be mandatory for rival offerings that are more advanced than its own. To call this a "transparent ruse" is to do violence to good, hardworking transparent ruses all the world over:

https://openai.com/blog/governance-of-superintelligence

Some "open AI" is much more open than the industry-dominating offerings. There's EleutherAI, a donor-supported nonprofit whose model comes with documentation and code, licensed Apache 2.0. There are also some smaller academic offerings: Vicuna (UCSD/CMU/Berkeley); Koala (Berkeley) and Alpaca (Stanford).

These are indeed more open (though Alpaca – which ran on a laptop – had to be withdrawn because it "hallucinated" so profusely). But to the extent that the "open AI" movement invokes (or cares about) these projects, it is in order to brandish them before hostile policymakers and say, "Won't someone please think of the academics?" These are the poster children for proposals like exempting AI from antitrust enforcement, but they're not significant players in the "open AI" industry, nor are they likely to be for so long as the largest companies are running the show:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4493900

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#llama-2#meta#openwashing#floss#free software#open ai#open source#osi#open source initiative#osd#open source definition#code is speech

253 notes


Text

Elon Musk’s so-called Department of Government Efficiency (DOGE) operates on a core underlying assumption: The United States should be run like a startup. So far, that has mostly meant chaotic firings and an eagerness to steamroll regulations. But no pitch deck in 2025 is complete without an overdose of artificial intelligence, and DOGE is no different.

AI itself doesn’t reflexively deserve pitchforks. It has genuine uses and can create genuine efficiencies. It is not inherently untoward to introduce AI into a workflow, especially if you’re aware of and able to manage around its limitations. It’s not clear, though, that DOGE has embraced any of that nuance. If you have a hammer, everything looks like a nail; if you have the most access to the most sensitive data in the country, everything looks like an input.

Wherever DOGE has gone, AI has been in tow. Given the opacity of the organization, a lot remains unknown about how exactly it’s being used and where. But two revelations this week show just how extensive—and potentially misguided—DOGE’s AI aspirations are.

At the Department of Housing and Urban Development, a college undergrad has been tasked with using AI to find where HUD regulations may go beyond the strictest interpretation of underlying laws. (Agencies have traditionally had broad interpretive authority when legislation is vague, although the Supreme Court recently shifted that power to the judicial branch.) This is a task that actually makes some sense for AI, which can synthesize information from large documents far faster than a human could. There’s some risk of hallucination—more specifically, of the model spitting out citations that do not in fact exist—but a human needs to approve these recommendations regardless. This is, on one level, what generative AI is actually pretty good at right now: doing tedious work in a systematic way.

There’s something pernicious, though, in asking an AI model to help dismantle the administrative state. (Beyond the fact of it; your mileage will vary there depending on whether you think low-income housing is a societal good or you’re more of a Not in Any Backyard type.) AI doesn’t actually “know” anything about regulations or whether or not they comport with the strictest possible reading of statutes, something that even highly experienced lawyers will disagree on. It needs to be fed a prompt detailing what to look for, which means you can not only work the refs but write the rulebook for them. It is also exceptionally eager to please, to the point that it will confidently make stuff up rather than decline to respond.

If nothing else, it’s the shortest path to a maximalist gutting of a major agency’s authority, with the chance of scattered bullshit thrown in for good measure.

At least it’s an understandable use case. The same can’t be said for another AI effort associated with DOGE. As WIRED reported Friday, an early DOGE recruiter is once again looking for engineers, this time to “design benchmarks and deploy AI agents across live workflows in federal agencies.” His aim is to eliminate tens of thousands of government positions, replacing them with agentic AI and “freeing up” workers for ostensibly “higher impact” duties.

Here the issue is more clear-cut, even if you think the government should by and large be operated by robots. AI agents are still in the early stages; they’re not nearly cut out for this. They may not ever be. It’s like asking a toddler to operate heavy machinery.

DOGE didn’t introduce AI to the US government. In some cases, it has accelerated or revived AI programs that predate it. The General Services Administration had already been working on an internal chatbot for months; DOGE just put the deployment timeline on ludicrous speed. The Defense Department designed software to help automate reductions-in-force decades ago; DOGE engineers have updated AutoRIF for their own ends. (The Social Security Administration has recently introduced a pre-DOGE chatbot as well, which is worth a mention here if only to refer you to the regrettable training video.)

Even those preexisting projects, though, speak to the concerns around DOGE’s use of AI. The problem isn’t artificial intelligence in and of itself. It’s the full-throttle deployment in contexts where mistakes can have devastating consequences. It’s the lack of clarity around what data is being fed where and with what safeguards.

AI is neither a bogeyman nor a panacea. It’s good at some things and bad at others. But DOGE is using it as an imperfect means to destructive ends. It’s prompting its way toward a hollowed-out US government, essential functions of which will almost inevitably have to be assumed by—surprise!—connected Silicon Valley contractors.

12 notes


Text

to ppl who are currently skeptical about llm (aka "ai") capabilities and benefits for everyday people: i would strongly encourage u to check out this really cool slide deck someone made where they describe a world in which non-software-engineers can make home-grown apps by and for themselves with assistance from llms.

in particular there are a couple of linked tools that are really amazing, including one that gives you a whiteboard interface to draw and describe the app interface you want and then uses gpt4o to write the code for that app.

i think it's also an excellent counter to the argument that llms are basically only going to benefit le capitalisme and corporate overlords, because the technology presented here is actually being used to help users take matters into their own hands. to build their own apps that do what they want the apps to do, and to do it all on their own computers, so none of their private data has to get slurped up by some new startup.

"oh, so i should just let openai slurp up all my data instead? sounds great, asshole" no!!!! that's not what this is suggesting! this is saying you can make your own apps with the llms. then you put your private data in the app that you made, and that app doesn't need chatgpt to work, so literally everything involving your personal data remains on your personal devices.

is this a complete argument for justifying the existence of ai and llms? no! is this a justification for other privacy abuses? also no! does this mean we should all feel totally okay and happy with companies laying off tons of people in order to replace them with llms? 100% no!!!! please continue being mad about that.

just don't let those problems push you towards believing these things don't have genuinely impressive capabilities that can actually help you unlock the ability to do cool things you wouldn't otherwise have the time, energy, or inclination to do.

41 notes


Text

the bloodless battle of wits theory of AI races

One assumption/worldview/framing that sometimes comes up is that:

Technological development is a conflict - it is better to have more technology than your adversary, and by having more technology than your adversary you can constrain the actions of your adversary.

However, technology and military action are in separate realms. Making technology at home will not cause military action to occur; and taking military action is cheating at the technological game.

By winning in technology, you can win against your adversary without involving military action.

I call this frame that of the bloodless battle of wits.

A classic bloodless battle of wits is the science fiction story about building an AGI in a basement, that one might encounter in the Metamorphosis of Prime Intellect, or in the minds of excited teenagers who grew up reading about half of SIAI's output (ahem):

The genius team of programmers build a seed AGI in a basement without alerting the military intelligence of any states, maintaining a low budget and a lack of societal appreciation up until the end. This AGI rapidly becomes more intelligent, also without being noticed. It develops a decisive strategic advantage, overthrows every world government, and instates utopia.

At no point does this beautiful scenario require anyone to point a rifle at anyone else, or even to levy fines on anyone. The biggest deontology violation is that the nanomesh touches other people's bullets without permission when damping them to a maximum speed of 10 m/s during the subsequent riots. The winner is the most intelligent group, the genius programmers and the AGI, not the group able to levy the most force (any of the world governments who might disapprove).

Another bloodless battle of wits scenario, that is becoming more fashionable now that we know that the largest AGI companies are billion-dollar organisations and that the US government (a trillion-dollar organisation) knows that they exist, is:

The genius team of programmers, backed by US national security, does AI research; meanwhile, another genius team of programmers, backed by Chinese national security, is also trying to do AI research. The US AGI is built first, develops a decisive strategic advantage, overthrows the Chinese government, and instates democracy and apple pie; just as well, because otherwise the Chinese AGI would have been built first, overthrown the US government, and instated communism and 豆腐.

There can sometimes be espionage, but certainly there wouldn't be an attempt to assassinate someone working on the scheme to overthrow the rival nuclear power. It's a bloodless battle of wits; may the smartest win, not the most effective at violence.

There are also no deontology violations going on here; of course, the American AGI, in the process of overthrowing the Chinese government and replacing it with freedom and apple pie, would never violate the property rights of the Chinese communist party.

In the bloodless battle of wits, any kind of military action is a huge and deontology-violating escalation. The American software team is trying to overthrow the Chinese government, yes, but the Chinese government treating them as an adversary trying to overthrow them and arresting them would be too far.

8 notes


Text

AI Artposting

(This covers everything AI does, but right now I'm on tumblr so we're talking art instead of software development or literature or...)

A brief history of the destruction of art careers

(1) The printing press

If your career was *the creation of books* ca. 1439, either as a scribe or as a person doing detail illustrations, printing undermined your career entirely. You could keep making money by doing things the printing press wasn't good at - bespoke illustration or books with very low production runs.

(2) Cameras.

If you are a traditional fine-arts artist - e.g. oil painter or water colors - then your *commercially viable standard career* was undermined by the black-and-white photograph and it was utterly destroyed by the color photograph. To make money here you have to employ skills a camera does not replace, e.g. composition or client relations management, or drawing things a camera *cannot* photograph because the subject does not exist - fantastic art, surrealism, caricature.

(3) Photoshop

You probably already know what photoshop does. Imagine doing all of that work *without* digital tools. Well, the people who did that for pay now don't do that for pay (though they might do the same - but with photoshop).

\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-

Intermission

Let me put down multiple bullet points about AI art, and then answer them with a single screenshot:

It is bad

It has no soul

It devalues human art

It takes no skill

It derives from human art with no compensation

It is unnecessary, you can learn to create art

Figure 1: Good ensouled valuable human art, created with high skill by people who learned in a vacuum without observing any other human art, that I could also learn to make.

End of intermission

\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-\-

(4) AI art

Man, good luck. Truly. But making money off art was hard before AI; you were already competing with "Views Across The Cove Canvas Framed Art £4.99."

(And the internet made it easier for you to find customers, though it also made it easier for customers to find other artists that weren't you and worked for much cheaper because they were paying rent in Burundi).

If you already had a successful career creating art in the "ensouled human" sense, you probably still will - you probably never sold specifically due to high skill, that's been available for near-free since we were born. You sold due to other qualities - qualities AI probably doesn't replicate, and the people who use AI aren't looking for, so you wouldn't have sold to those people in the first place.

What I'm getting at

You have some ideas about art. They are your ideas and do not apply universally to humanity. You do not understand every human on earth, nor do you know what they want from pictures.

Like a buddy of mine, name withheld, who's very fascinated with AI art. He had human-made art on his walls before AIs got good. You know how much he paid you? Dollar Fuck-All. $0.00. Nothing. He found nice images on the internet and had them printed out.

Because he doesn't care what you think art is - he cares to have interesting things to look at on his walls.

8 notes


Text

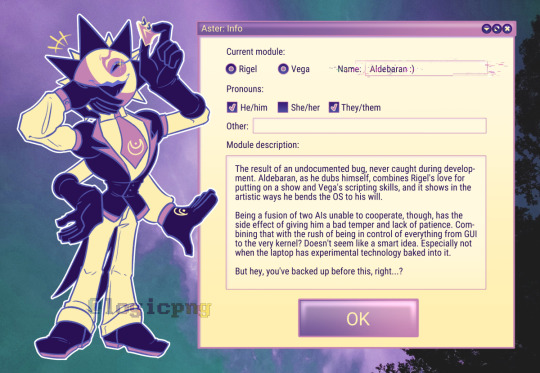

i Believe i am finally done making references

edit: pasting the image descriptions out of the alt text. since they're refs they're really long I am so sorry

[First image ID:

Digital artwork of Aldebaran Aster - a humanoid being in a suit, four arms, and a star shaped head - standing next to a large program window titled: "Aster: Info". He is holding up the mouse pointer in one of his hands and laughing, with a smug smile.

The text in the window reads:

"Current module:

[Selected radio button] Rigel [Selected radio button] Vega [Glitchy text box] Name: Aldebaran :)

Pronouns:

[Checked tick box] He/him [Unchecked tick box] She/her [Checked tick box] They/them

Other: [Long empty text field]

Module description:

[Following text in a large text box:]

The result of an undocumented bug, never caught during development. Aldebaran, as he dubs himself, combines Rigel's love for putting on a show and Vega's scripting skills, and it shows in the artistic ways he bends the OS to his will.

Being a fusion of two AIs unable to cooperate, though, has the side effect of giving him a bad temper and lack of patience. Combining that with the rush of being in control of everything from GUI to the very kernel? Doesn't seem like a smart idea. Especially not when the laptop has experimental technology baked into it.

But hey, you've backed up before this, right...?

[Text box ends]

[Large lavender OK button]"

First Image ID end]

[Second Image ID:

Digital artwork of The User - a human with a gray-green skin, dark green hair with a white t-shirt, track suit shorts and green socks - standing next to a large program window titled: "User information". They are standing with a laptop bearing the CaelOS logo on its back, and scratching their head, looking a little nervous.

The text in the window reads:

"Base info:

Name: Urs Norma; Pronunciation: OO-rs NOR-mah; Age: 25

Pronouns:

[Checked tick box] He/him [Checked tick box] She/her [Checked tick box] They/them

Other: Any/All

Personality profile:

[Following text in a large text box:]

Young adult figuring out... being an adult.

After hastily finding a used tech store, they found a replacement for their busted laptop. As it turns out, the machine hosts an OS that never saw the light of day, featuring experimental technology. At least, it's compatible with most software they need...

Despite the world being cruel and unforgiving, the spark of optimism remains bright. Just like the AI the laptop hides, all they can do is perpetually learn from their mistakes, and maybe even relay some of that knowledge to the little virtual assistants they find themself talking to every day.

[Text box ends]

[Large green OK button]"

Second Image ID end]

[Third Image ID:

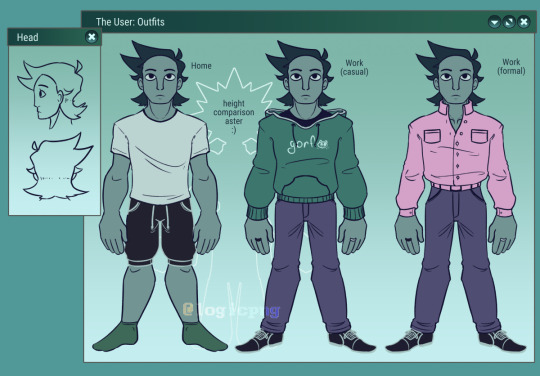

Reference image of Urs Norma - an androgynous person with gray-green skin and dark green hair. A large program window titled "The User: Outfits" shows them standing neutrally, facing the camera, in three different outfits:

Home: Plain white shirt, track suit shorts, green socks.

Work (casual): Green hoodie that says "gorf" with a cat face on it in white, gray-purple pants, and black dress shoes. Left and right hand feature black and white rings on their respective middle fingers.

Work (formal): Pink dress shirt, slightly unbuttoned at the top, same pants, same dress shoes, and same rings.

Behind them is an outline of Aster, that has text in it saying "height comparison aster :)". At the top of their rays, they're noticeably shorter than Urs.

A window titled "Head", slightly overlapping the large window, shows lineart of the user's head in profile and from the back.

Third Image ID end]

#original#oc#original character#object head oc#object head#ai oc#aster#CaelOS#urs norma is a silly name. but it fits the star theme thing and i think i am growing to Like it#aldebaran (aster)#urs novak

200 notes


Note

I need to take a work trip to Germany, Leipzig to be precise. Should be a nice change from my NYC life.

I guess, your suitcase won't make it to Frankfurt... Then I guess I have to organize a replacement. Damn Airlines!

The only thing I can offer you so spontaneously is an old army backpack from GDR stocks, covered with graffiti tags, stickers and patches. Pretty heavy... And maybe not necessarily suitable for your classic suit… So, take your rucksack and head to the airport train station. Your train to Leipzig will depart in 20 minutes.

Shit, Frankfurt airport is bigger than expected. When you arrive, you think you have missed your train. But luckily, the train is delayed by 15 minutes. Enough time to relax. And for a smoke. You search the side pockets of the backpack. No cigarettes. But tobacco, cigarette paper. And weed. Shit, that could have ended badly at customs...

Ahh, smoking this feels great. I really needed to decompress a bit after this whole travel shitshow. Don't take offense, but a middle-aged man in a conservative suit and a classic haircut smoking weed with an army backpack on the platform of the airport station looks a bit special... You have to admit that, too, when you see your reflection in the window panes of the high-speed train rushing in.

No one had told you that you had better have made a seat reservation. The train is packed. Getting a seat is out of the question. With a little luck, you will still get a seat in the dining car. You order a beer (what else in Germany) and check the contents of your backpack. On top of it lies a hat. It looks funny, you put it on. Otherwise, the backpack is not necessarily neatly packed. Everything is stuffed in more like this. There's a MacBook... You open it. And of course you know the password. Feels perfectly normal to open it. As normal as your pierced earlobes feel.

It is a low-coding platform open to any Big Data AI application. You scroll through the application. Sure, the prototype of an app for digitizing queues in doctors' offices. You open the library of user stories and start developing the app further. A few hours ago, you had no idea about software development.

It's 9:00 p.m. when you look out the window. Gotha train station. Wherever that may be. You are looking at your reflection. Let's see what the others think of the fact that you have let the beard grow out...

The train is half empty by now. You have not even noticed how it has emptied. It's still a good hour to Leipzig. You close the computer. That's it for today. You order another beer and the vegan curry. Actually, you're also in desperate need of a joint. But of course you can't smoke anywhere on this train.

But you take out tobacco, weed, cigarette paper and your cigarette case, which you inherited from your grandfather. And while you're waiting for the food, you roll a few joints in reserve. It will be after 11:00 p.m. by the time you arrive at your shared apartment. But you assume that you will sit together until 01:00 or 02:00. Your roommates are all rather night owls...

You don't notice that you're wearing high-laced Doc Martens boots instead of welted penny loafers as you step off the train. You also don't notice that your hair has grown considerably longer and falls tousled from under your hat onto your forehead... You pause for a moment when you see the tattoos on the back of your hand as you light up a joint to tide you over until the bus leaves. And after asking the bus driver for a ticket to Connewitz, you wonder if you actually just spoke German with quite an American accent.

The elevator in your house is of course defective again. Old building from 1873, last renovated in 1980 or so. That was long before the fall of the Wall in the GDR. But the rent is cheap. And the atmosphere is energetic and creative. When you met Kevin, Lukas and Emma at university five years ago, you were immediately on the same wavelength. Even though you didn't speak a word of German back then. You would never have thought that a semester as an exchange student would turn into a lasting collaboration. The fact that you found an apartment together where you could work on your startup at the same time was a real stroke of luck.

Upstairs in the apartment, Kevin already opens the door for you. As if he had been waiting for you.

„Sieht heute gut aus”, you say with your strange American accent.

Kevin hugs you and answers „Dude, it's good to have you back! We have missed you! Tell me, do you have new tattoos? Looks hot! And did you bring weed from Amsterdam? Our dealer is on vacation... Shitty situation!“

“Of course, I’d never leave you without”, you say, opening up the cigarette case and offering him one of the hand-rolled contents.

Kevin grins. „What do you say we smoke the first one not at the kitchen table but on your bed? I missed you, stud!“

“I’m so tired after this trip, so the bed sounds just right.”

There is nothing left of your suit right now. Yes, you are still from NYC. But you weren't a lawyer then. You studied computer science. And that was a long time ago. Now you are a Leipziger at heart.

You both lie on the bed. You take a deep drag. And blow the smoke into Kevin’s mouth with a deep French kiss. The bulge in your skinny jeans looks painful. “Oh man, Kevin, I need some relief!” you growl.

It doesn't take long and we both have the tank tops off. You discover Kevins new nipple piercings. And can't stop playing with them. And Kevins bulge starts to hurt too.

“Man, let me provide some relief”, he says. And open your jeans. Your boner jumps out of your boxers like a jack-in-the-box.

Those new piercings… You just can’t help yourself… You’ve gotta feel them in my mouth! “Are they sensitive? Does it still hurt?” Kevin starts breathing more heavily. “What are you waiting for you prude Yank! They've been waiting for you for two weeks now!” You take a deep drag and blow the smoke over Kevins chest, which you caress with your tongue. Kevin moans “Fuck! You're doing so well! Sure it hurts. It's supposed to. You make me so fucking horny with your tongue! I love your tunnels on the earlobes!. I can not stop playing with them with my tongue.”

Dude, your dick is producing precum like a broken faucet. Kevin starts to massage it into your dick! You take one last drag from the joint, push the butt into the ashtray and blow the smoke over Kevins boner.

While Kevin rubs your hard dick, You begin licking his uncut cock. Damn man, these uncut European cocks will never not surprise you! Oh man, you love how it feels on your tongue.

Kevin doesn't stop breathing heavily, but still has to grin. “Fuck, admit it, you certainly didn't just talk about user interfaces with Milan and Sem in Amsterdam. You did practice your tongue game. Fuck, you know how to bring someone to ecstasy with the tip of your tongue!”

Oh man, Kevins precum just takes so good. You can’t get enough of it. Kevin reads your thoughts. “I want to lick your precum too. Let's make a 69! I need to suck your powerful circumcised cock.”

Yes, please!, you think in ecstasy. You just love how his balls feel in my mouth. And Kevin has fun to. You must have been sweating like a dog on the trip. Your balls are salty, your cock is deliciously cheesy. “Fuck, I can not tell you how I missed you.” Kevin moans.

He always feels so good, just keep going please, you think. His cock is so hard. His precum is spectacular. It’s like you’re in sync — in and out, in and out, in and out. “Fuck, your balls are so huge”, Kevin grunts. “I didn't jerk off all the time you ve been away. My balls are bursting”.

You both are perfectly synchron. Like one organism. “Please cum at the exact moment that I also cum. I want to make this old house shake.”, you think.You can’t wait to make you explode. Kevins moans “I can't take it much longer. Fuck, you are a master with your tongue. Fuck... Oh yeah... Yes! Fuuuuuuuck!”

Oh god! That was heavy. You both really try. But that was too much. Boy, what a load you both shot! Kevins cum is so thick! So potent! You ’ve got my whole mouth full, not able to swallow everything at once. You both exchange a deep French kiss. The cum runs from the corners of your mouths down our cheeks and necks. Kevin licks the cum traces from your skin. And you his. One last kiss, you pull up our pants again. And go to the kitchen with a joint. Lukas and Emma grin. The whole house could listen to you having sex.

“Incredible, as always, Kevin”, you tell him as you pass him the joint. And as if nothing had happened, you ask Emma if she has any new user stories for your app.
