#spring cloud config

Explore tagged Tumblr posts

Text

Spring Cloud 2025.0.0-RC1 (aka Northfields) has been released

Estimated reading time: 2 minutes Spring Cloud 2025.0.0-RC1 release has updates in several modules. These include Spring Cloud Config, Spring Cloud Gateway, Spring Cloud Task, Spring Cloud Stream, Spring Cloud Function & More. Following article details the changes in the release and what modules are affected. Info About Release Release TrainSpring Cloud 2025.0.0 Release Candidate 1…

#AWS S3 buckets#Spring Cloud Config#Spring Cloud Function#Spring Cloud Gateway#Spring Cloud Stream#Spring Cloud Task

0 notes

Video

youtube

Create Spring Cloud Configuration Server for Microservices | Setup Eurek...

Full Video Link https://youtu.be/Exoy4ZNAO9Y

Hello friends, new #video on #springcloud #configserver #eureka #servicediscovery setup is published on #codeonedigest #youtube channel.

@java #java #aws #awscloud @awscloud @AWSCloudIndia #salesforce #Cloud #CloudComputing @YouTube #youtube #azure #msazure #configserver #configserverspringboot #configserverinmicroservices #configserverinspringbootmicroservices #configserverinspringbootexample #configserverspringbootgithub #configserverfirewall #configserverspringcloud #configserverspring #configurationserver #configurationserverspringboot #configurationserverdns #configurationserverinmicroservices #configurationserverstepbystep #eurekaservice #eurekaservicediscovery #eurekaserviceregistryspringboot #eurekaservicediscoveryexamplespringboot #eurekaserviceregistry #eurekaserviceinmicroservices #eurekaservicediscoveryexample #eurekaservicediscoveryspringboot #eurekaserviceregistryexample #eurekaservicespringboot

#youtube#spring cloud#spring cloud config#spring cloud config server#spring config server#configuration server#config service#config server#eureka service discovery#service discovery#service registry#netflix eureka#eureka service registry#service discovery pattern#microservices#microservice configuration server

1 note

·

View note

Text

Essential Components of a Production Microservice Application

DevOps Automation Tools and modern practices have revolutionized how applications are designed, developed, and deployed. Microservice architecture is a preferred approach for enterprises, IT sectors, and manufacturing industries aiming to create scalable, maintainable, and resilient applications. This blog will explore the essential components of a production microservice application, ensuring it meets enterprise-grade standards.

1. API Gateway

An API Gateway acts as a single entry point for client requests. It handles routing, composition, and protocol translation, ensuring seamless communication between clients and microservices. Key features include:

Authentication and Authorization: Protect sensitive data by implementing OAuth2, OpenID Connect, or other security protocols.

Rate Limiting: Prevent overloading by throttling excessive requests.

Caching: Reduce response time by storing frequently accessed data.

Monitoring: Provide insights into traffic patterns and potential issues.

API Gateways like Kong, AWS API Gateway, or NGINX are widely used.

Mobile App Development Agency professionals often integrate API Gateways when developing scalable mobile solutions.

2. Service Registry and Discovery

Microservices need to discover each other dynamically, as their instances may scale up or down or move across servers. A service registry, like Consul, Eureka, or etcd, maintains a directory of all services and their locations. Benefits include:

Dynamic Service Discovery: Automatically update the service location.

Load Balancing: Distribute requests efficiently.

Resilience: Ensure high availability by managing service health checks.

3. Configuration Management

Centralized configuration management is vital for managing environment-specific settings, such as database credentials or API keys. Tools like Spring Cloud Config, Consul, or AWS Systems Manager Parameter Store provide features like:

Version Control: Track configuration changes.

Secure Storage: Encrypt sensitive data.

Dynamic Refresh: Update configurations without redeploying services.

4. Service Mesh

A service mesh abstracts the complexity of inter-service communication, providing advanced traffic management and security features. Popular service mesh solutions like Istio, Linkerd, or Kuma offer:

Traffic Management: Control traffic flow with features like retries, timeouts, and load balancing.

Observability: Monitor microservice interactions using distributed tracing and metrics.

Security: Encrypt communication using mTLS (Mutual TLS).

5. Containerization and Orchestration

Microservices are typically deployed in containers, which provide consistency and portability across environments. Container orchestration platforms like Kubernetes or Docker Swarm are essential for managing containerized applications. Key benefits include:

Scalability: Automatically scale services based on demand.

Self-Healing: Restart failed containers to maintain availability.

Resource Optimization: Efficiently utilize computing resources.

6. Monitoring and Observability

Ensuring the health of a production microservice application requires robust monitoring and observability. Enterprises use tools like Prometheus, Grafana, or Datadog to:

Track Metrics: Monitor CPU, memory, and other performance metrics.

Set Alerts: Notify teams of anomalies or failures.

Analyze Logs: Centralize logs for troubleshooting using ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd.

Distributed Tracing: Trace request flows across services using Jaeger or Zipkin.

Hire Android App Developers to ensure seamless integration of monitoring tools for mobile-specific services.

7. Security and Compliance

Securing a production microservice application is paramount. Enterprises should implement a multi-layered security approach, including:

Authentication and Authorization: Use protocols like OAuth2 and JWT for secure access.

Data Encryption: Encrypt data in transit (using TLS) and at rest.

Compliance Standards: Adhere to industry standards such as GDPR, HIPAA, or PCI-DSS.

Runtime Security: Employ tools like Falco or Aqua Security to detect runtime threats.

8. Continuous Integration and Continuous Deployment (CI/CD)

A robust CI/CD pipeline ensures rapid and reliable deployment of microservices. Using tools like Jenkins, GitLab CI/CD, or CircleCI enables:

Automated Testing: Run unit, integration, and end-to-end tests to catch bugs early.

Blue-Green Deployments: Minimize downtime by deploying new versions alongside old ones.

Canary Releases: Test new features on a small subset of users before full rollout.

Rollback Mechanisms: Quickly revert to a previous version in case of issues.

9. Database Management

Microservices often follow a database-per-service model to ensure loose coupling. Choosing the right database solution is critical. Considerations include:

Relational Databases: Use PostgreSQL or MySQL for structured data.

NoSQL Databases: Opt for MongoDB or Cassandra for unstructured data.

Event Sourcing: Leverage Kafka or RabbitMQ for managing event-driven architectures.

10. Resilience and Fault Tolerance

A production microservice application must handle failures gracefully to ensure seamless user experiences. Techniques include:

Circuit Breakers: Prevent cascading failures using tools like Hystrix or Resilience4j.

Retries and Timeouts: Ensure graceful recovery from temporary issues.

Bulkheads: Isolate failures to prevent them from impacting the entire system.

11. Event-Driven Architecture

Event-driven architecture improves responsiveness and scalability. Key components include:

Message Brokers: Use RabbitMQ, Kafka, or AWS SQS for asynchronous communication.

Event Streaming: Employ tools like Kafka Streams for real-time data processing.

Event Sourcing: Maintain a complete record of changes for auditing and debugging.

12. Testing and Quality Assurance

Testing in microservices is complex due to the distributed nature of the architecture. A comprehensive testing strategy should include:

Unit Tests: Verify individual service functionality.

Integration Tests: Validate inter-service communication.

Contract Testing: Ensure compatibility between service APIs.

Chaos Engineering: Test system resilience by simulating failures using tools like Gremlin or Chaos Monkey.

13. Cost Management

Optimizing costs in a microservice environment is crucial for enterprises. Considerations include:

Autoscaling: Scale services based on demand to avoid overprovisioning.

Resource Monitoring: Use tools like AWS Cost Explorer or Kubernetes Cost Management.

Right-Sizing: Adjust resources to match service needs.

Conclusion

Building a production-ready microservice application involves integrating numerous components, each playing a critical role in ensuring scalability, reliability, and maintainability. By adopting best practices and leveraging the right tools, enterprises, IT sectors, and manufacturing industries can achieve operational excellence and deliver high-quality services to their customers.

Understanding and implementing these essential components, such as DevOps Automation Tools and robust testing practices, will enable organizations to fully harness the potential of microservice architecture. Whether you are part of a Mobile App Development Agency or looking to Hire Android App Developers, staying ahead in today’s competitive digital landscape is essential.

0 notes

Text

Why Java Spring Boot is Ideal for Building Microservices

In modern software development, microservices have become the go-to architecture for creating scalable, flexible, and maintainable applications. Java full-stack development is one of the most popular frameworks used for building microservices, thanks to its simplicity, powerful features, and seamless integration with other technologies. In this blog, we will explore why Java Spring Boot is an ideal choice for building microservices.

What are Microservices?

Microservices architecture is a design pattern where an application is broken down into smaller, independent services that can be developed, deployed, and scaled individually. Each microservice typically focuses on a specific business functionality, and communicates with other services via APIs (often RESTful). Microservices offer several advantages over traditional monolithic applications, including improved scalability, flexibility, and maintainability.

Why Spring Boot for Microservices?

Spring Boot, a lightweight, open-source Java framework, simplifies the development of stand-alone, production-grade applications. It comes with several features that make it an excellent choice for building microservices. Here are some key reasons why:

1. Rapid Development with Minimal Configuration

Spring Boot is known for its "convention over configuration" approach, which makes it incredibly developer-friendly. It removes the need for complex XML configurations, allowing developers to focus on the business logic rather than boilerplate code. For microservices, this means you can quickly spin up new services with minimal setup, saving time and increasing productivity.

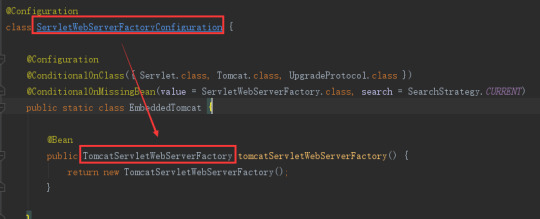

Spring Boot comes with embedded servers (like Tomcat, Jetty, and Undertow), so you don’t need to worry about setting up and managing separate application servers. This makes deployment and scaling easier in microservices environments.

2. Microservice-Friendly Components

Spring Boot is tightly integrated with the Spring Cloud ecosystem, which provides tools specifically designed for building microservices. Some of these key components include:

Spring Cloud Config: Centralizes configuration management for multiple services in a microservices architecture, allowing you to manage configuration properties in a version-controlled repository.

Spring Cloud Netflix: Includes several tools like Eureka (for service discovery), Hystrix (for fault tolerance), and Ribbon (for client-side load balancing), which are essential for building resilient and scalable microservices.

Spring Cloud Gateway: Provides a simple, effective way to route requests to different microservices, offering features like load balancing, security, and more.

Spring Cloud Stream: A framework for building event-driven microservices, making it easier to work with messaging middleware (e.g., RabbitMQ, Kafka).

These tools help you quickly build and manage your microservices in a distributed architecture.

3. Scalability and Flexibility

One of the main reasons organizations adopt microservices is the ability to scale individual components independently. Spring Boot’s lightweight nature makes it an ideal choice for microservices because it enables easy scaling both vertically (scaling up resources for a single service) and horizontally (scaling across multiple instances of a service).

With Spring Boot, you can run multiple instances of microservices in containers (e.g., Docker) and orchestrate them using platforms like Kubernetes. This makes it easier to handle high traffic, optimize resource usage, and maintain high availability.

4. Fault Tolerance and Resilience

In a microservices architecture, failures in one service can affect others. Spring Boot provides built-in mechanisms for handling fault tolerance and resilience, which are critical for maintaining the integrity and uptime of your application. With Spring Cloud Netflix Hystrix, you can implement circuit breakers that prevent cascading failures, providing a more robust and fault-tolerant system.

By using tools like Resilience4j, Spring Boot makes it easier to implement strategies like retries, timeouts, and fallbacks to ensure your services remain resilient even when some of them fail.

5. Easy Integration with Databases and Messaging Systems

Microservices often require interaction with various data stores and messaging systems. Spring Boot makes this integration straightforward by providing support for relational databases (like MySQL, PostgreSQL), NoSQL databases (like MongoDB, Cassandra), and message brokers (like RabbitMQ, Kafka).

With Spring Data, you can easily interact with databases using a simplified repository model, without having to write much boilerplate code. This enables microservices to manage their own data stores, promoting the independence of each service.

6. Security Features

Security is critical in microservices, as services often need to communicate with each other over the network. Spring Security provides a comprehensive security framework that integrates well with Spring Boot. With Spring Security, you can secure your microservices with features like:

Authentication and Authorization: Implementing OAuth2, JWT tokens, or traditional session-based authentication to ensure that only authorized users or services can access certain endpoints.

Secure Communication: Enabling HTTPS, encrypting data in transit, and ensuring that communications between services are secure.

Role-Based Access Control (RBAC): Ensuring that each microservice has the appropriate permissions to access certain resources.

These security features help ensure that your microservices are protected from unauthorized access and malicious attacks.

7. Monitoring and Logging

Monitoring and logging are essential for maintaining microservices in a production environment. With Spring Boot, you can easily implement tools like Spring Boot Actuator to expose useful operational information about your microservices, such as metrics, health checks, and system properties.

In addition, Spring Cloud Sleuth provides distributed tracing capabilities, allowing you to trace requests as they flow through multiple services. This helps you track and diagnose issues more efficiently in a microservices architecture.

Conclusion

Java full-stack development provides a solid foundation for building microservices, making it an excellent choice for developers looking to implement a modern, scalable, and resilient application architecture. The framework’s ease of use, integration with Spring Cloud components, scalability, and security features are just a few of the reasons why Spring Boot is an ideal platform for microservices.

As a Java full-stack development, understanding how to build microservices with Spring Boot will not only enhance your skill set but also open doors to working on more complex and modern systems. If you’re looking to develop scalable, flexible, and fault-tolerant applications, Java Spring Boot is the right tool for the job.

This concludes the blog on "Why Java full-stack development is Ideal for Building Microservices". Let me know if you'd like to continue to the next topic!

0 notes

Text

Assess Application and Cluster Readiness

Review Application Dependencies:

Analyze libraries, JDK versions, frameworks (e.g., Spring Boot, Jakarta EE), and external services (databases, APIs).

Identify any Kubernetes-specific configurations (e.g., ConfigMaps, Secrets, environment variables).

Containerization:

Ensure your Java app is containerized using tools like Docker.

Update the Dockerfile if necessary (e.g., for JDK/JRE updates or changes in base image).

2. Prepare Kubernetes Environment

Target Cluster Validation:

Ensure the target Kubernetes cluster is set up and ready.

Check for compatibility with the current cluster’s API version and resources.

Namespace Setup:

Create equivalent namespaces, RBAC roles, and policies in the target cluster.

Storage:

Verify Persistent Volume Claims (PVCs) and ensure similar storage classes are available.

3. Backup and Export Resources

Kubernetes Resources:

Export all application-related resources using kubectl:bashCopy codekubectl get all -n <namespace> -o yaml > resources.yaml

Persistent Data:

Back up database data or persistent volumes using tools like Velero or rsync.

4. Migrate Application Configurations

ConfigMaps and Secrets:

Export existing configurations:bashCopy codekubectl get configmap -n <namespace> -o yaml > configmap.yaml kubectl get secret -n <namespace> -o yaml > secret.yaml

Reapply them in the new cluster:bashCopy codekubectl apply -f configmap.yaml kubectl apply -f secret.yaml

5. Deploy to Target Cluster

Deploy Application:

Use Helm charts, Kubernetes manifests, or CI/CD pipelines to deploy the Java application to the new cluster.

Update the kubeconfig for the target cluster:bashCopy codekubectl config use-context <target-cluster-context> kubectl apply -f resources.yaml

Persistent Volumes:

Ensure data is restored to the persistent volumes if needed.

6. Test the Migration

Validation:

Ensure the application is running as expected (pods, services, and networking).

Run integration and regression tests.

Connectivity:

Validate external service integrations (e.g., databases, APIs).

Check ingress configurations for public access.

7. Cutover and Cleanup

Switch Traffic:

Update DNS or external load balancer to direct traffic to the new cluster.

Monitor:

Use tools like Prometheus, Grafana, or ELK Stack to monitor application health.

Clean Up Old Environment:

After verifying stability, clean up resources in the old cluster.

Tools to Assist in Migration

Velero: For backup and restore of Kubernetes resources and persistent volumes.

Helm: To manage application deployments and upgrades.

Kubernetes Dashboard: For real-time cluster monitoring.

Kubectl Plugins: To enhance CLI functionality.

If you provide more details about the migration (e.g., specific cloud providers or Kubernetes environments), I can offer more tailored advice!

4o

You said:

Migration of 5000 files per hr fix

ChatGPT said:

ChatGPT

If you're migrating a large number of files (e.g., 5000 files per hour) and encountering performance bottlenecks, you can improve the speed and reliability of your file migration by addressing several key areas. Here's a step-by-step guide to optimize file transfer:

1. Identify and Analyze Bottlenecks

File System Bottlenecks:

Check the source and destination disk I/O performance.

Ensure the files are not locked or being used during migration.

Network Bandwidth:

Measure your available network bandwidth using tools like iperf and ensure it can handle the expected load.

File Metadata Overhead:

Small files can add significant overhead due to metadata operations (e.g., file creation, permissions).

2. Optimize Transfer Mechanisms

Use Parallel Processing:

Transfer multiple files concurrently using multithreaded or parallel tools like:

rsync with --max-size and --parallel options.

GNU Parallel for concurrent file transfers.

bashCopy codefind /source/directory -type f | parallel -j8 rsync -a {} /destination/directory

Use a custom script or a library like Python's concurrent.futures for more control.

Batch File Transfers:

Group files into batches (e.g., tar archives) to reduce the overhead of transferring many small files individually.bashCopy codetar -cvf files.tar /source/files && rsync files.tar /destination Extract the files on the destination:bashCopy codetar -xvf files.tar

3. Ensure Network Optimization

Compression:

Compress files during transfer to reduce data volume:bashCopy codersync -az /source/directory /destination/directory

Increase TCP Window Size:

Tweak TCP settings for large transfers using tools like scp or rsync with buffer options:bashCopy codersync --bwlimit=0 --whole-file /source /destination

Use Faster Protocols:

Use optimized file transfer protocols like Rclone, Aspera, or SFTP for high-speed transfer.

4. Improve Source and Destination Performance

Disk Performance:

Optimize disk reads and writes using SSDs for temporary storage or caches.

Ensure the file system supports efficient file handling (e.g., ext4, XFS).

File System Tuning:

For very large numbers of files, tune parameters like inode_cache to handle metadata efficiently.

5. Error Handling and Retry Logic

Retry Failed Transfers:

Use tools like rsync to retry failed files automatically:bashCopy codersync -avz --partial --progress /source /destination

Logging and Monitoring:

Log all transferred files and

4o

You said:

File trsnsfer to s3 improve

ChatGPT said:

ChatGPT

Improving file transfer to Amazon S3 requires addressing both performance and reliability. Below are strategies and tools to optimize large-scale or high-volume file transfers:

1. Use AWS-Specific Tools

AWS CLI with Parallelism:

Use the --multipart-chunk-size-mb and --parallel flags to upload files in parallel and in chunks:bashCopy codeaws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD_IA --region <region>

Enable parallel transfers: Update your AWS CLI configuration file (~/.aws/config) to increase concurrency:plaintextCopy code[default] s3 = max_concurrent_requests = 10 max_queue_size = 1000 multipart_threshold = 64MB multipart_chunksize = 64MB

AWS DataSync:

Use AWS DataSync for automated, high-performance, large-scale transfers with minimal manual intervention. DataSync can also manage retry and error handling.

2. Optimize S3 Uploads

Multipart Upload:

Use multipart uploads for files larger than 100 MB to improve transfer speed and reliability.

Example using AWS CLI:bashCopy codeaws s3api create-multipart-upload --bucket your-bucket-name --key file_key

Automate multipart uploads with the AWS SDK or tools like boto3 for Python.

Use the Correct Storage Class:

Choose the appropriate storage class to save costs and improve upload speeds:

STANDARD (default): High performance for frequently accessed data.

INTELLIGENT_TIERING: Automatically optimizes storage costs for infrequently accessed files.

3. Improve Network Performance

Enable Transfer Acceleration:

Use S3 Transfer Acceleration for faster uploads over long distances by leveraging AWS edge locations:bashCopy codeaws s3 cp /local/path s3://your-bucket-name --region <region> --endpoint-url https://<bucket-name>.s3-accelerate.amazonaws.com

Compression:

Compress files before transferring to reduce data size:bashCopy codetar -czvf archive.tar.gz /local/path && aws s3 cp archive.tar.gz s3://your-bucket-name

Optimize Network:

Use AWS Direct Connect or a high-bandwidth internet connection for large-scale transfers.

Monitor latency and bandwidth with tools like iperf.

4. Automate and Parallelize Transfers

Rclone:

Use rclone for high-speed S3-compatible transfers with parallelism and retry capabilities:bashCopy coderclone copy /local/path s3:your-bucket-name --transfers 16 --s3-upload-concurrency 16

GNU Parallel:

Transfer files concurrently by splitting file lists:bashCopy codefind /local/path -type f | parallel -j10 aws s3 cp {} s3://your-bucket-name

5. Error Handling and Retry

Retry Mechanisms:

Use tools or scripts that automatically retry failed uploads.

AWS CLI has built-in retry logic; increase retries if necessary:bashCopy codeaws s3 cp --cli-read-timeout 600 --cli-connect-timeout 600 /local/path s3://your-bucket-name

Chunked Retry:

Break large transfers into smaller, manageable chunks and retry failed chunks only.

6. Monitor and Optimize Transfers

AWS CloudWatch Metrics:

Monitor transfer performance and error rates in real-time.

S3 Inventory:

Use S3 inventory reports to validate uploaded files.

AWS SDKs or Custom Scripts:

Build custom monitoring and retry logic with SDKs like boto3 (Python).

Example Optimized Upload Command

bash

Copy code

aws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD --region us-east-1 --cli-read-timeout 600 --cli-connect-timeout 600

When to Use Snowball or Snowmobile

For extremely large datasets (terabytes or petabytes), use AWS Snowball or AWS Snowmobile for offline data transfer to S3.

These steps can dramatically improve the efficiency and reliability of your file transfer process to S3. Let me know if you need help implementing any of these!

4o

You said:

Improve fole transfers

ChatGPT said:

ChatGPT

Improving file transfer performance and reliability, whether between servers, local systems, or to cloud storage like Amazon S3, involves a combination of strategies. Here are some best practices for improving file transfers:

1. Optimize the File Transfer Process

Parallelize Transfers

Transfer multiple files concurrently to improve throughput:

Use tools like rsync, scp, or cloud-specific SDKs with multi-threading capabilities.

Example with rsync:bashCopy codersync -azP --info=progress2 --max-size=100M --exclude="*.tmp" /source/ /destination/

Batch Small Files

Small files cause metadata overhead during transfer. Bundle them into archives:bashCopy codetar -czvf archive.tar.gz /source/ Transfer the archive, then extract on the destination:bashCopy codetar -xzvf archive.tar.gz

2. Use High-Performance Transfer Tools

Rclone

Ideal for cloud storage and large-scale transfers with parallelism:bashCopy coderclone copy /source/ remote:bucket-name --transfers=16 --checkers=8

GNU Parallel

Parallelize file uploads/downloads:bashCopy codefind /source/ -type f | parallel -j8 scp {} user@destination:/target/

Multithreaded Tools

Use tools with built-in multithreading, such as bbcp, Aspera, or scp (with parallel scripts).

3. Network Optimization

Compression

Compress files before transfer to reduce the amount of data transmitted:bashCopy codegzip largefile.txt Or combine with the transfer:bashCopy codetar czf - /source/ | ssh user@destination "tar xzf - -C /target/"

Bandwidth Management

Limit bandwidth usage to prevent throttling:

With rsync:bashCopy codersync --bwlimit=1000 -az /source/ /destination/

Use TCP tuning options to optimize network transfer rates:bashCopy codesysctl -w net.ipv4.tcp_window_scaling=1

Use Faster Protocols

Use optimized transfer protocols:

SFTP: Secure but slower for high-volume transfers.

Rsync/SSH: Efficient for incremental and large transfers.

Aspera: High-speed UDP-based protocol.

Enable Transfer Acceleration (Cloud)

For Amazon S3:bashCopy codeaws s3 cp /source/ s3://your-bucket/ --region <region> --endpoint-url https://<bucket-name>.s3-accelerate.amazonaws.com

4. Error Handling and Resilience

Resume Transfers

Use tools that support resuming failed transfers:

Rsync:bashCopy codersync -az --partial /source/ /destination/

AWS CLI:bashCopy codeaws s3 cp --continue /source/ s3://bucket-name/

Retry Logic

Implement retry logic for failed transfers using scripts or tools.

Example using bash:bashCopy codefor i in {1..5}; do scp file user@destination:/target/ && break || sleep 5 done

5. Monitor and Analyze Performance

Real-Time Monitoring

Monitor progress with tools:

Rsync: --progress flag.

SCP: Wrap in pv for progress visualization:bashCopy codetar cf - /source/ | pv | ssh user@destination "tar xf - -C /target/"

Analyze Bottlenecks

Use tools like iperf or nload to check network performance.

Use iotop or dstat to monitor disk I/O on both source and destination.

6. Scale with Advanced Solutions

Distributed File Transfer

Use tools like Globus for efficient distributed data transfers.

Data Transfer Appliances

For extremely large datasets, consider appliances like AWS Snowball or offline methods to ship data physically.

Content Delivery Networks (CDNs)

Use CDNs for faster file distribution to end users.

By combining these strategies—parallelism, compression, protocol optimization, and monitoring—you can significantly enhance file transfer speed and reliability. Let me know if you'd like further customization for a specific use case!

4o

You said:

Threadpool

ChatGPT said:

ChatGPT

A thread pool is a collection of pre-initialized worker threads that stand ready to perform tasks. Instead of creating and destroying threads for each task, a thread pool manages a set of reusable threads, improving performance and resource utilization in multi-threaded applications.

Here’s how to effectively use and optimize thread pools in your application.

Why Use a Thread Pool?

Performance: Reduces the overhead of creating and destroying threads repeatedly.

Resource Management: Controls the number of concurrent threads, preventing system overload.

Scalability: Allows a controlled environment for task execution, making it easier to scale applications.

Key Concepts of Thread Pools

Core Threads: Minimum number of threads that are always kept alive.

**Max Threads

4o

You said:

Improve java spring file transfer

ChatGPT said:

ChatGPT

Improving file transfer in a Java Spring application involves optimizing both the code and the underlying infrastructure. Below are strategies to enhance file transfer performance and reliability.

1. Optimize File Transfer Code

Use Streaming for Large Files

Avoid loading the entire file into memory; instead, use streaming:javaCopy code@PostMapping("/upload") public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException { try (InputStream inputStream = file.getInputStream()) { // Process the input stream directly // Example: Save the file Files.copy(inputStream, Paths.get("target-directory/" + file.getOriginalFilename()), StandardCopyOption.REPLACE_EXISTING); } return ResponseEntity.ok("File uploaded successfully!"); }

Enable Asynchronous File Transfers

Use Spring's @Async annotation for non-blocking file uploads/downloads:javaCopy code@Async public CompletableFuture<String> uploadFileAsync(MultipartFile file) { // File processing logic return CompletableFuture.completedFuture("Upload Complete"); }

Use Chunked Uploads for Large Files

Break large files into smaller chunks for efficient transfer and to handle interruptions gracefully:javaCopy code@PostMapping("/uploadChunk") public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk, @RequestParam("fileId") String fileId) { // Append chunk data to the file return ResponseEntity.ok("Chunk uploaded successfully!"); }

2. Optimize for Cloud Storage (e.g., AWS S3, Azure Blob)

Use SDKs for Direct Uploads

Use cloud-specific SDKs (e.g., AWS SDK for S3) to upload files directly to cloud storage instead of saving locally first:javaCopy code@PostMapping("/uploadToS3") public ResponseEntity<String> uploadToS3(@RequestParam("file") MultipartFile file) throws IOException { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); String bucketName = "your-bucket-name"; s3Client.putObject(bucketName, file.getOriginalFilename(), file.getInputStream(), new ObjectMetadata()); return ResponseEntity.ok("File uploaded to S3!"); }

Enable S3 Transfer Acceleration

Use Transfer Acceleration for faster uploads to Amazon S3:javaCopy codeAmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withAccelerateModeEnabled(true) .build();

3. Optimize File Transfer in REST APIs

Enable Compression

Compress file responses to reduce data size:yamlCopy codespring: servlet: multipart: enabled: true max-file-size: 50MB max-request-size: 50MB gzip: enabled: true min-size: 1024

Use HTTP/2

Switch to HTTP/2 for better concurrency and reduced latency:yamlCopy codeserver: http2: enabled: true

Resume Interrupted Uploads

Use ETag or file metadata to allow resumable file uploads:javaCopy code@PostMapping("/resumeUpload") public ResponseEntity<String> resumeUpload(@RequestParam("file") MultipartFile file, @RequestParam("offset") Long offset) { // Resume logic based on offset return ResponseEntity.ok("Upload resumed!"); }

4. Parallelize File Transfers

Multithreaded File Upload

Use a ThreadPoolExecutor or Spring's TaskExecutor:Copy code

In addition to using @Async, there are other strategies and tools to improve file transfer performance and reliability in a Java Spring application. Here are some approaches:

1. Reactive Programming with Spring WebFlux

For truly non-blocking and asynchronous file transfers, use Spring WebFlux:

Streaming File Upload:javaCopy code@PostMapping(value = "/upload", consumes = MediaType.MULTIPART_FORM_DATA_VALUE) public Mono<Void> uploadFile(Flux<FilePart> filePartFlux) { return filePartFlux.flatMap(filePart -> { Path path = Paths.get("target-directory/" + filePart.filename()); return filePart.transferTo(path); }).then(); }

Streaming File Download:javaCopy code@GetMapping(value = "/download", produces = MediaType.APPLICATION_OCTET_STREAM_VALUE) public Mono<ResponseEntity<Resource>> downloadFile() { Path path = Paths.get("target-directory/large-file.txt"); return Mono.just(ResponseEntity.ok() .header(HttpHeaders.CONTENT_DISPOSITION, "attachment; filename=large-file.txt") .body(new FileSystemResource(path))); }

2. Use CompletableFuture and ExecutorService

Combine CompletableFuture with a custom ExecutorService for better control:javaCopy code@PostMapping("/upload") public CompletableFuture<String> uploadFile(@RequestParam("file") MultipartFile file) { ExecutorService executor = Executors.newFixedThreadPool(4); return CompletableFuture.supplyAsync(() -> { try { // File processing logic Files.copy(file.getInputStream(), Paths.get("target/" + file.getOriginalFilename()), StandardCopyOption.REPLACE_EXISTING); return "File uploaded successfully!"; } catch (IOException e) { throw new RuntimeException("File upload failed!", e); } }, executor); }

3. Apache Kafka for Asynchronous Processing

Use Apache Kafka to decouple file upload from processing:

Producer sends file metadata to Kafka after upload:javaCopy code@PostMapping("/upload") public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException { // Save the file Files.copy(file.getInputStream(), Paths.get("target/" + file.getOriginalFilename())); // Send metadata to Kafka kafkaTemplate.send("file-topic", file.getOriginalFilename()); return ResponseEntity.ok("File uploaded and queued for processing!"); }

Consumer processes files asynchronously:javaCopy code@KafkaListener(topics = "file-topic", groupId = "file-group") public void processFile(String fileName) { // Process the file Path path = Paths.get("target/" + fileName); System.out.println("Processing file: " + path); }

4. Chunked File Transfers

Handle large files by uploading them in chunks:

Frontend sends file chunks with an identifier.

Backend reconstructs the file:javaCopy code@PostMapping("/uploadChunk") public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk, @RequestParam("fileId") String fileId, @RequestParam("chunkNumber") int chunkNumber) { Path path = Paths.get("target/" + fileId + "_" + chunkNumber); try { Files.copy(chunk.getInputStream(), path, StandardCopyOption.REPLACE_EXISTING); } catch (IOException e) { return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR).body("Failed to upload chunk"); } return ResponseEntity.ok("Chunk uploaded successfully!"); }

Reassemble chunks when all are uploaded.

5. WebSockets for Real-Time Progress

Use WebSockets to provide real-time feedback on file transfer progress:javaCopy code@Controller public class WebSocketController { @MessageMapping("/uploadStatus") @SendTo("/topic/progress") public String sendProgress(String status) { return status; } }

Send progress updates from the server while uploading:javaCopy codesimpMessagingTemplate.convertAndSend("/topic/progress", "50% completed");

6. Redis or Database Queues

Use a Redis queue or a database to handle file metadata asynchronously.

Enqueue metadata after upload:javaCopy coderedisTemplate.opsForList().leftPush("fileQueue", fileMetadata);

Process the queue in the background:javaCopy codeString metadata = redisTemplate.opsForList().rightPop("fileQueue");

7. Use MultipartConfig for Large Files

Configure multipart file handling for large files in application.properties:propertiesCopy codespring.servlet.multipart.max-file-size=1GB spring.servlet.multipart.max-request-size=2GB

Custom bean for fine-tuning:javaCopy code@Bean public MultipartConfigElement multipartConfigElement() { MultipartConfigFactory factory = new MultipartConfigFactory(); factory.setMaxFileSize("1GB"); factory.setMaxRequestSize("2GB"); return factory.createMultipartConfig(); }

8. Use AWS S3 for Direct Uploads

Use pre-signed URLs to offload upload work to the client:javaCopy code@GetMapping("/generatePresignedUrl") public ResponseEntity<String> generatePresignedUrl(@RequestParam String fileName) { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); GeneratePresignedUrlRequest generatePresignedUrlRequest = new GeneratePresignedUrlRequest("bucket-name", fileName) .withMethod(HttpMethod.PUT) .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest); return ResponseEntity.ok(url.toString()); }

The client uploads directly to S3 using the URL.

9. Optimize File Transfer for High Performance

Compression

Compress files before upload to reduce transfer size:javaCopy codebyte[] compressedData = compress(file.getBytes()); Files.write(Paths.get("compressed-file.gz"), compressedData);

Load Balancing

Use a load balancer (e.g., AWS ALB or NGINX) for scalable upload endpoints.

Retry Logic

Implement retries for failed transfers to improve reliability:javaCopy codeint maxRetries = 3; while (retries < maxRetries) { try { // Attempt file upload break; } catch (IOException e) { retries++; if (retries == maxRetries) { throw e; } } }

These strategies can enhance file transfer performance, scalability, and reliability in Java Spring applications. Let me know if you need code examples for specific use cases!

4o

You said:

S3 relaibility

ChatGPT said:

ChatGPT

Ensuring reliability when transferring files to Amazon S3 involves minimizing failures, handling errors effectively, and ensuring data integrity. Below are best practices and techniques to achieve reliable file transfers to S3:

1. Use Multipart Upload for Large Files

Multipart uploads split large files into smaller parts, upload them in parallel, and reassemble them in S3. This improves reliability and speeds up transfers.

Example Using AWS SDK (Java)

java

Copy code

AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); String bucketName = "your-bucket-name"; String fileName = "large-file.zip"; String keyName = "uploads/" + fileName; File file = new File(fileName); TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); Upload upload = tm.upload(bucketName, keyName, file); try { upload.waitForCompletion(); System.out.println("File uploaded successfully!"); } catch (AmazonClientException e) { System.err.println("Upload failed: " + e.getMessage()); }

2. Use Retry Logic

Add retry mechanisms to handle intermittent network issues or service interruptions.

AWS SDK Retry Settings

The AWS SDK retries failed requests automatically, but you can customize it:

java

Copy code

ClientConfiguration config = new ClientConfiguration(); config.setRetryPolicy(PredefinedRetryPolicies.getDefaultRetryPolicyWithCustomMaxRetries(5)); AmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withClientConfiguration(config) .build();

3. Enable Transfer Acceleration

Transfer Acceleration uses AWS edge locations to improve upload speeds over long distances.

Enable Transfer Acceleration in S3

Enable it in the S3 bucket settings.

Update your client code to use the accelerated endpoint:

java

Copy code

AmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withAccelerateModeEnabled(true) .build();

4. Validate Data Integrity

Use MD5 checksums to ensure the uploaded file matches the original file.

AWS CLI Example

bash

Copy code

aws s3 cp file.txt s3://your-bucket/ --content-md5 <calculated-md5>

Java Example

java

Copy code

String md5 = Base64.getEncoder().encodeToString(DigestUtils.md5(new FileInputStream(file))); ObjectMetadata metadata = new ObjectMetadata(); metadata.setContentMD5(md5); PutObjectRequest request = new PutObjectRequest(bucketName, keyName, new FileInputStream(file), metadata); s3Client.putObject(request);

5. Monitor File Transfers

Use CloudWatch Metrics to monitor S3 request success, failures, and latencies.

Monitor transfer progress with TransferManager in the AWS SDK:

java

Copy code

TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); Upload upload = tm.upload(bucketName, keyName, file); upload.addProgressListener(progressEvent -> { System.out.println("Transferred bytes: " + progressEvent.getBytesTransferred()); }); upload.waitForCompletion();

6. Handle Failures Gracefully

Use Resumable Transfers for interrupted uploads with TransferManager:

java

Copy code

TransferManager tm = TransferManagerBuilder.standard().build(); Upload upload = tm.upload(bucketName, keyName, file); // Add a progress listener upload.addProgressListener((ProgressListener) progressEvent -> { System.out.println("Transferred: " + progressEvent.getBytesTransferred()); }); // Graceful failure handling try { upload.waitForCompletion(); } catch (AmazonClientException e) { System.err.println("Error occurred: " + e.getMessage()); }

7. Use Pre-Signed URLs

Generate pre-signed URLs for reliable, time-limited uploads directly from the client, reducing the load on your server.

Generate Pre-Signed URL

java

Copy code

GeneratePresignedUrlRequest generatePresignedUrlRequest = new GeneratePresignedUrlRequest(bucketName, keyName) .withMethod(HttpMethod.PUT) .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest); System.out.println("Pre-Signed URL: " + url);

8. Use S3 Event Notifications

Configure S3 Event Notifications to trigger AWS Lambda or SNS for post-upload validation, processing, or alerting on failures.

Example: Notification for Upload Completion

Configure your S3 bucket to send an event to an SQS queue or Lambda function upon successful upload.

9. Leverage Data Replication

Use S3 Cross-Region Replication (CRR) or Same-Region Replication (SRR) for redundancy.

Automatically replicate files to another bucket for disaster recovery.

10. Automate with AWS DataSync

Use AWS DataSync for automated and highly reliable file transfers between on-premises storage and S3. It includes built-in retry and integrity checks.

11. Optimize Network Performance

Compress files before uploading:javaCopy codeFiles.write(Paths.get("compressed-file.gz"), gzipCompress(fileContent));

Use efficient transfer protocols (e.g., HTTP/2 if supported by your client).

12. Secure Transfers

Always use HTTPS for secure communication.

Use S3 Bucket Policies and IAM Roles to restrict access to authorized users.

Example: Complete Reliable Upload Function

java

Copy code

public void reliableUpload(File file, String bucketName, String keyName) { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); try { // Multipart upload Upload upload = tm.upload(bucketName, keyName, file); upload.addProgressListener((ProgressListener) progressEvent -> { System.out.println("Transferred bytes: " + progressEvent.getBytesTransferred()); }); upload.waitForCompletion(); System.out.println("File uploaded successfully!"); } catch (AmazonClientException e) { System.err.println("Upload failed: " + e.getMessage()); } finally { tm.shutdownNow(); } }

0 notes

Text

hi

. how to implement expection handling using Spring boot/rest 2. how to configure/implement Spring cloud config server. 3. How to setup two way SSL using Java/Spring boot 4. difference between Bean factory vs Application context in spring application 5. what happens when u send a request to spring boot ? 6. How does Spring Marshall/unmarshall? 7. How to implement Transactions using Spring boot. 8. How to do load balancing using Spring boot both client side and server side. 9. How to add css/javascript/images to spring boot application UI 10. Any spring /spring boot performance issues you encountered. 11. how to implement spring boot security using OAuth 12. How to setup multiple datasources using spring boot

0 notes

Text

How to Implement Java Microservices Architecture

Implementing a microservices architecture in Java is a strategic decision that can have significant benefits for your application, such as improved scalability, flexibility, and maintainability. Here's a guide to help you embark on this journey.

1. Understand the Basics

Before diving into the implementation, it's crucial to understand what microservices are. Microservices architecture is a method of developing software systems that focuses on building single-function modules with well-defined interfaces and operations. These modules, or microservices, are independently deployable and scalable.

2. Design Your Microservices

Identify Business Capabilities

Break down your application based on business functionalities.

Each microservice should represent a single business capability.

Define Service Boundaries

Ensure that each microservice is loosely coupled and highly cohesive.

Avoid too many dependencies between services.

3. Choose the Right Tools and Technologies

Java Frameworks

Spring Boot: Popular for building stand-alone, production-grade Spring-based applications.

Dropwizard: Useful for rapid development of RESTful web services.

Micronaut: Great for building modular, easily testable microservices.

Containerization

Docker: Essential for creating, deploying, and running microservices in isolated environments.

Kubernetes: A powerful system for automating deployment, scaling, and management of containerized applications.

Database

Use a database per service pattern. Each microservice should have its private database to ensure loose coupling.

4. Develop Your Microservices

Implement RESTful Services

Use Spring Boot to create RESTful services due to its simplicity and power.

Ensure API versioning to manage changes without breaking clients.

Asynchronous Communication

Implement asynchronous communication, especially for long-running or resource-intensive tasks.

Use message queues like RabbitMQ or Kafka for reliable, scalable, and asynchronous communication between microservices.

Build and Deployment

Automate build and deployment processes using CI/CD tools like Jenkins or GitLab CI.

Implement blue-green deployment or canary releases to reduce downtime and risk.

5. Service Discovery and Configuration

Service Discovery

Use tools like Netflix Eureka for managing and discovering microservices in a distributed system.

Configuration Management

Centralize configuration management using tools like Spring Cloud Config.

Store configuration in a version-controlled repository for auditability and rollback purposes.

6. Monitoring and Logging

Implement centralized logging using ELK Stack (Elasticsearch, Logstash, Kibana) for easier debugging and monitoring.

Use Prometheus and Grafana for monitoring metrics and setting up alerts.

7. Security

Implement API gateways like Zuul or Spring Cloud Gateway for security, monitoring, and resilience.

Use OAuth2 and JWT for secure, stateless authentication and authorization.

8. Testing

Write unit and integration tests for each microservice.

Implement contract testing to ensure APIs meet the contract expected by clients.

9. Documentation

Document your APIs using tools like Swagger or OpenAPI. This helps in maintaining clarity about service endpoints and their purposes.

Conclusion

Implementing a Java microservices architecture can significantly enhance your application's scalability, flexibility, and maintainability. However, the complexity and technical expertise required can be considerable. Hire Java developers or avail Java development services can be pivotal in navigating this transition successfully. They bring the necessary expertise in Java frameworks and microservices best practices to ensure your project's success. Ready to transform your application architecture? Reach out to professional Java development services from top java companies today and take the first step towards a robust, scalable microservice architecture.

0 notes

Text

Mastering Java Scope in Emerging Technologies

Your Gateway to the Future Java, the ubiquitous programming language, has stood the test of time, remaining relevant and evolving alongside technological advancements. While its core principles stay strong, mastering Java scope in emerging technologies unlocks a new level of development prowess. This article delves into the exciting intersection of Java scope and cutting-edge fields, equipping you with the knowledge and skills to navigate the software landscape of tomorrow.

Understanding the Power of Scope

Java scope defines the accessibility and visibility of variables, methods, and classes within your code. Traditionally, mastering scope involved understanding local and global variables, access modifiers, and class hierarchies. However, emerging technologies introduce novel contexts where scope takes on new dimensions.

Cloud-Native Development:

The cloud has revolutionized software deployment, and Java thrives in this distributed environment. Microservices architectures, built with loosely coupled, independently deployable services, demand a nuanced understanding of scope. Each service has its own scope, but inter-service communication necessitates controlled data sharing. Frameworks like Spring Cloud Config Server enable centralized configuration management, while techniques like API gateways manage service boundaries. Mastering scope in this context involves balancing autonomy and collaboration, ensuring secure and efficient communication between microservices.

Web Development Training In Jodhpur, Full Stack Web Development Training In Jodhpur, Python Training In Jodhpur, Flutter Training In Jodhpur, Android App Development Training In Jodhpur, Java Training In Jodhpur, Google Ads Training In Jodhpur, Coding Class In Jodhpur, oilab, Digital marketing Training In Jodhpur , Seo Training In Jodhpur, Digital Marketing Course In Jodhpur, SEO Training In Udaipur, Digital Marketing Course In Udaipur, Digital Marketing Training In Udaipur, Full stack web Development Training In Udaipur, Web Development Course In Udaipur

Big Data and Analytics:

The ever-growing data deluge demands robust tools for processing and analysis. Java plays a crucial role in big data ecosystems, with frameworks like Hadoop and Spark leveraging its concurrency and scalability. When dealing with massive datasets, understanding scope becomes vital for optimizing performance and memory usage. Partitioning data into separate scopes based on processing needs or geographical distribution allows for efficient parallel processing and targeted analysis.

Opens in a new window

Big Data and Analytics with Java

Artificial Intelligence and Machine Learning:

AI and ML are transforming various industries, and Java is making its mark in this domain as well. Libraries like TensorFlow and PyTorch utilize Java for building and deploying complex machine learning models. Scope plays a crucial role in managing training data, ensuring efficient access to relevant subsets while preventing unauthorized modifications. Additionally, understanding scope within AI algorithms helps developers control the visibility and influence of different variables on the model's output.

Artificial Intelligence and Machine Learning with Java

Internet of Things (IoT):

The interconnected world of the IoT presents unique challenges for Java developers. Embedded devices often have limited resources, necessitating careful memory management. Scoping techniques like local variable caching and resource pooling become crucial for optimizing code footprint and ensuring efficient operation. Additionally, secure communication protocols in the IoT ecosystem rely on controlled data access, where proper scope management plays a vital role in protecting sensitive information.

Internet of Things (IoT) with Java

Mastering the Art of Scope in Emerging Technologies:

As you delve into these frontiers, remember these key takeaways:

Think in terms of context: Understand the specific needs and constraints of each emerging technology when applying scope principles.

Leverage appropriate frameworks and libraries: Utilize tools designed for specific domains to manage scope effectively.

Prioritize security and data privacy: Implement proper access control mechanisms within your code to protect sensitive information.

Stay informed and adaptable: Emerging technologies evolve rapidly, so continuous learning and upskilling are essential.

By mastering Java scope in the context of these emerging technologies, you unlock a world of possibilities. You become a builder of the future, crafting innovative solutions that leverage the power of Java in groundbreaking ways. So, embrace the challenge, expand your horizons, and let Java scope be your gateway to a thriving future in software development.

Remember, the journey to mastering Java scope in emerging technologies is an ongoing process. Embrace the learning curve, experiment with different approaches, and actively seek out new challenges. The rewards are plentiful, not just in terms of career opportunities but also in the satisfaction of shaping the software landscape of tomorrow.

0 notes

Quote

独立行政法人情報処理推進機構(IPA)は11月10日、複数の VMware 製品におけるログファイルからの情報漏えいに関する脆弱性について「JVN iPedia」で発表した。影響を受けるシステムは以下の通り。・VMwareSpring Cloud Config 3.1.0 から 3.1.6Spring Cloud Config 4.0.0 から 4.0.1Spring Cloud Vault 3.1.0 から 3.1.2Spring Cloud Vault 4.0.0Spring Vault 2.3.0 以上 2.3.3 未満Spring Vault 3.0.0 以上 3.0.2 未満 VMware の Spring Cloud Config、Spring Cloud Vault、Spring Vault には、ログファイルからの情報漏えいに関する脆弱性が存在し、想定される影響としては、情報を取得される可能性がある。 JVN iPediaでは、ベンダ情報を参照して適切な対策を実施するよう呼びかけている。 《ScanNetSecurity》 ソース・関連リンク JVN iPedia

複数の VMware 製品にログファイルからの情報漏えいに関する脆弱性 | ScanNetSecurity

1 note

·

View note

Text

Kubernetes alternatives to Spring Java framework

Spring Cloud and Kubernetes both complement each other to build a cloud-native platform and run microservices on the Kubernetes containers. Kubernetes provides many features which are similar to Spring Cloud and Spring Config Server features. Spring framework has been around for many years. Even today, many organizations prefer to go with Spring because it provides many advanced features with…

View On WordPress

0 notes

Text

0 notes

Link

0 notes

Text

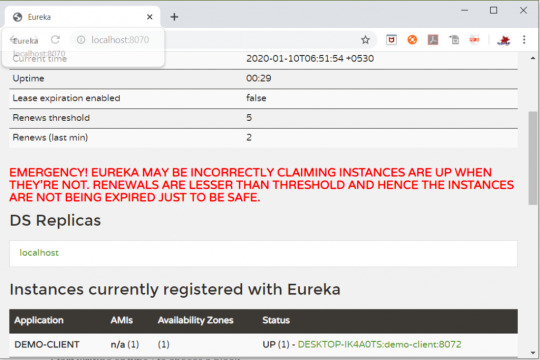

Create Spring Cloud Configuration Server | Setup Eureka Service Discovery Registry Server

Full Video Link https://youtu.be/Exoy4ZNAO9Y Hello friends, new #video on #springcloud #configserver #eureka #servicediscovery setup is published on #codeonedigest #youtube channel. @java #java #aws #awscloud @awscloud @AWSCloudIndia #sal

In this video we will learn how to create spring cloud configuration server and eureka service registry server step by step in spring boot framework. Service registry will be use for service discovery. Configuration server is used to store application configuration in central storage. Application, microservices connect configuration server to fetch respective configuration from central…

View On WordPress

#config server#config server firewall#config server in microservices#config server in spring boot example#config server in spring boot microservices#config server spring#config server spring boot#config server spring boot github#config server spring cloud#configuration server#configuration server dns#configuration server in microservices#configuration server spring boot#configuration server step by step#eureka service#eureka service discovery#eureka service discovery example#eureka service discovery example spring boot#eureka service discovery spring boot#eureka service in microservices#eureka service registry#eureka service registry example#eureka service registry spring boot#eureka service spring boot

0 notes

Text

Microservices Architecture with Spring Cloud

What are microservices?

Microservices is an architecture(service-oriented architecture) (SOA). In this architecture, applications are broken down into various services. The motivation behind this is separation and modularity.

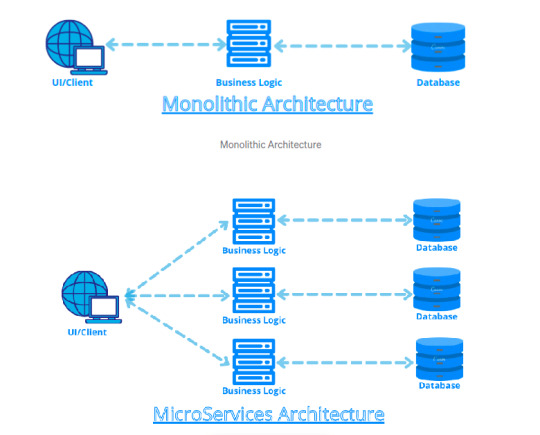

Monolithic architecture and microservices are more beneficial.

We don’t need to specify all business logic into a single software module. It leads to complexity and time-consuming debugging.

In the above diagram, as we can see, we have only one unit of application in a monolithic architecture. In a microservices architecture, we can see three different services.

Advantages

Increases scalability

More flexibility

Modular architecture

Ease of introducing new features

More reliable and robust structure

Challenges

Cost management

Network latency and load balancing

Complexity at the end-to-end testing

Health Care Example

Let’s take an example

Spring Cloud

It helps us build microservices architecture with various components it provides. Spring cloud helps us manage communication, security threats, maintenance and fault tolerance with different components. Let’s see them one by one.

Spring Cloud Components

Spring Cloud Config Server

Feign Client

Service Discovery and Registry with Eureka

Spring Cloud Gateway

Resillience4j

Spring Cloud Bus

Spring Cloud -> Config Server

Config server uses the git repo to store configurations.

Ease of managing configuration of multiple microservices in a single place

Configurations can be managed with the application.yaml / properties files within a git repo

Each microservice can connect to the config server and get the required configurations

Spring Cloud -> Feign Client

Microservices need to communicate with each other to exchange information. Feign client can be used for that learn more

#microservices#cloud blogs#spring#Healthcare#healthcare software development company#Healthcare interoperability#healthcare solutions

0 notes

Text

Spring Boot Start Tomcat

Unable to start embedded tomcat spring-boot 2

Spring Boot Doesn't Start Tomcat

Spring Boot Don't Start Tomcat

Spring Boot Not Start Tomcat

Spring boot: Unable to start embedded Tomcat servlet container , Try to change the port number in application.yaml (or application.properties ) to something else. In my condition when I got an exception ' Unable to start embedded Tomcat servlet container', I opened the debug mode of spring boot by adding debug=true in the application.properties,

Chase online lets you manage your Chase accounts, view statements, monitor activity, pay bills or transfer funds securely from one central place. To learn more, visit the Banking Education Center. For questions or concerns, please contact Chase customer service or let us know about Chase. Make purchases with your debit card, and bank from almost anywhere with your phone, tablet or computer and at our 16,000 ATMs and more than 4,700 branches nationwide. For questions or concerns, please contact Chase customer service or let us know about Chase complaints and feedback. Sports & Entertainment. Phone number for chase debit card.

SpringBoot - Unable to Start embedded TomCat, Probably you can avoid this by changing your project sdk. In my project I initially used java-11-openjdk-amd64 as my JDK and had the same issue. Unable to start spring boot 2 embedded tomcat with trust store #21014. ajitdas91 opened this issue Apr 19, 2020 · 2 comments Labels. for: stackoverflow. Comments.

Your system need to have the following minimum requirements to create a Spring Boot application −. Java 7; Maven 3.2; Gradle 2.5; Spring Boot CLI. The Spring Boot CLI is a command line tool and it allows us to run the Groovy scripts. This is the easiest way to create a Spring Boot application by using the Spring Boot Command Line Interface. In this tutorial, we learned how to configure and use a Tomcat connection pool in Spring Boot. In addition, we developed a basic command line application to show how easy is to work with Spring Boot, a Tomcat connection pool, and the H2 database. As usual, all the code samples shown in this tutorial are available over on GitHub.

Unable to start embedded Tomcat · Issue #10 · spring-guides/gs , Stack trace of thread: [email protected]/jdk.internal.misc. WebServerException: Unable to start embedded Tomcat 2018-10-22 09:55:16.880 INFO 8552 --- ( main) RELEASE) at org.springframework.boot.web.embedded.tomcat. @philwebb Thanks!. Here is the background: I have spring boot 2.0 + jsp. Therefore, I need extend from SpringBootServletInitializer. Last weekend, I noticed its package has been changed from import org.springframework.boot.web.support.SpringBootServletInitializer to import org.springframework.boot.web.servlet.support.SpringBootServletInitializer, which gave me the impression there might be

Unable to start embedded tomcat gradle

Unable to start embedded tomcat Spring boot, I'm working on Spring Boot Gradle application. So If you are using embedded tomcat you dont need the Tomcat dependency and 9001 for management, but when I tried to run it on Tomcat, it failed with the same exception you posted. Application run failed org.springframework.boot.web.server.WebServerException: Unable to start embedded Tomcat server my build.Gradle dependencies config as follows.

Spring 5, Embedded Tomcat 8, and Gradle, As such, when starting a new Java project, Spring is an option that must be considered. Spring vs. Spring Boot. In the past, Spring was known for Unable to start embedded container Spring Boot Application org.apache.catalina.LifecycleException: A child container failed during start 0 Not able run Spring boot application as runnable jar from command prompt

Unable to start embedded Tomcat · Issue #10 · spring-guides/gs , I simply cloned the repo and ran 'mvn spring-boot:run' on the 'eureka-service'. > (ERROR) Failed to execute goal So with the Angel.SR4 (or SR6) for cloud and running a gradle dependencies you will notice that spring-boot 1.2.x is pulled in. As described in the migration guide you have to change your build.gradle :

Caused by: org.springframework.boot.web.server.webserverexception: unable to start embedded tomcat

Spring boot: Unable to start embedded Tomcat servlet container , springframework.boot.web.server.WebServerException: Unable to start embedded Tomcat. I have gone through all of the stackoverflow and articles related to unable to start web server; nested exception is org.springframework.boot.web.server.WebServerException: Unable to start embedded Tomcat. I have gone through all of the stackoverflow and articles related to Unable to start embedded tomcat.

Unable to start embedded Tomcat org.springframework.context , jar confliction between 'starter-web' and embedded tomcat ,use the following instead. <groupId>org.springframework.boot< > (ERROR) Failed to execute goal org.springframework.boot:spring-boot-maven-plugin:2.0.5.RELEASE:run (default-cli) on project eureka-service: An exception occurred while running. null: InvocationTargetException: Unable to start web server; nested exception is org.springframework.boot.web.server.WebServerException: Unable to start embedded

SpringBoot - Unable to Start embedded TomCat, Thread.run (Thread.java:844) Caused by: org.springframework.boot.web.server.WebServerException: Unable to start embedded Tomcat at @philwebb Thanks!. Here is the background: I have spring boot 2.0 + jsp. Therefore, I need extend from SpringBootServletInitializer. Last weekend, I noticed its package has been changed from import org.springframework.boot.web.support.SpringBootServletInitializer to import org.springframework.boot.web.servlet.support.SpringBootServletInitializer, which gave me the impression there might be

Unable to start embedded tomcat eureka

unable to start embedded Tomcat when running Eureka Server , unable to start embedded Tomcat when running Eureka Server. For now I just want to run the server on localhost and later I want to add a sample Microservice that registers to it. The Problem is that I get an error when trying to start Eureka Server. I develop in Eclipse with Spring and Maven in an ubuntu vm. Stack Overflow Public Unable to start embedded Tomcat | Spring Boot Eureka Server WebServerException: Unable to start embedded Tomcat at org.springframework

Can't start embedded Tomcat Server when running an Eureka , Can't start embedded Tomcat Server when running an Eureka Then added @EnableEurekaServer. I'm unable to reproduce this error. Stack Overflow for Teams is a private, secure spot for you and your coworkers to find and share information. unable to start embedded Tomcat when running Eureka Chase chargeback phone number.

Unable to start embedded Tomcat · Issue #10 · spring-guides/gs , Working on a song book pdf. I simply cloned the repo and ran 'mvn spring-boot:run' on the 'eureka-service'. > (ERROR) Failed to execute goal > (ERROR) Failed to execute goal org.springframework.boot:spring-boot-maven-plugin:2.0.5.RELEASE:run (default-cli) on project eureka-service: An exception occurred while running. null: InvocationTargetException: Unable to start web server; nested exception is org.springframework.boot.web.server.WebServerException: Unable to start embedded

Spring boot tomcat admin console

Can I enable the tomcat manager app for Spring Boot's embedded , Does the embedded tomcat 7 used by Spring Boot contain a tomcat manager app. No, it doesn't and I'm not really sure that it makes sense to Does the embedded tomcat 7 used by Spring Boot contain a tomcat manager app No, it doesn't and I'm not really sure that it makes sense to try to add it. A primary function of the manager app is to allow you to start and stop individual applications without stopping the container and to deploy and undeploy individual applications.

Deploy a Spring Boot Application into Tomcat, Create a Spring Boot 2.1 app with Java 11 and deploy into Tomcat 9. Often you need console access to the server from which you pull the latest When you click on the Manager App button the user details you entered In this chapter, you are going to learn how to create a WAR file and deploy the Spring Boot application in Tomcat web server. Spring Boot Servlet Initializer. The traditional way of deployment is making the Spring Boot Application @SpringBootApplication class extend the SpringBootServletInitializer class. Spring Boot Servlet Initializer class file allows you to configure the application when it is launched by using Servlet Container.

How to Configure Spring Boot Tomcat, Learn how to reconfigure the embedded Tomcat server in Spring Boot for some common use cases. Spring Boot Configure Tomcat SSL over HTTPS. by MemoryNotFound · October 31, 2017

Standardengine(tomcat).standardhost(localhost).tomcatembeddedcontext() failed to start

Failed to start component (StandardEngine(Tomcat).StandardHost , Failed to start component (StandardEngine(Tomcat).StandardHost(localhost). TomcatEmbeddedContext()) at java.util.concurrent. void main(String() args) ( SpringApplication.run(SpringBootApplication.class, args); ) ) //ServletInitializer.java Stack Overflow for Teams is a private, secure spot for you and your coworkers to find and share information. Learn more Failed to start component (StandardEngine(Tomcat).StandardHost(localhost).TomcatEmbeddedContext())

Unable to start embedded Tomcat · Issue #10 · spring-guides/gs , StandardHost(localhost).TomcatEmbeddedContext() failed to start -> (Help 1). I tried changing the StandardEngine : Starting Servlet Engine: Apache Tomcat/8.5.34 2018-10-22 09:55:15.309 INFO 8552 --- (ost-startStop-1) o.a.catalina.core. Spring boot built Failed, Failed to start component (StandardEngine(Tomcat).StandardHost(localhost).StandardContext()) Ask Question Asked 3 years, 5 months ago

Spring boot test fails to start tomcat due to some sleuth and spring , LifecycleException: Failed to start component (StandardEngine(Tomcat). TomcatEmbeddedContext()) at org.apache.catalina.util. StandardHost(localhost)) at java.util.concurrent. #param args args */ public static void main(String() args) ( // BasicConfigurator.configure(); Set up a simple configuration that logs on the all i found solution for whatever you all get the exception like. org.apache.catalina.LifecycleException: Failed to start component (StandardEngine(Catalina).StandardHost(localhost).StandardContext()). the problem with bulid path of the jars. To over come this problem. place all jars in 'WebContent/lib' whatever you need to in your project.

Unable to start embedded tomcat java 11

SpringBoot - Unable to Start embedded TomCat, Go to project structure -> Project -> Project SDK and change the java version. I hope it helps. A 'good' pom would have 'spring-boot-starter-web' (for convenience) or else all the dependencies included in the starter listed individually. Just check that you have them. Build artifacts, debug, and deploy to major application servers in the cloud. Apache Tomcat, WildFly, Payara Server, Docker and others.

Spring boot: Unable to start embedded Tomcat servlet container , You need to add the tomcat dependency in your pom <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-tomcat</artifactId> </dependency> and then rerun the code ,and it told me that java.lang. Chids 2,066○1111 silver badges○2020 bronze badges. unable to start web server; nested exception is org.springframework.boot.web.server.WebServerException: Unable to start embedded Tomcat. I have gone through all of the stackoverflow and articles related to Unable to start embedded tomcat.

Unable to start embedded Tomcat · Issue #10 · spring-guides/gs , ApplicationContextException: Unable to start embedded container; nested exception is onRefresh(EmbeddedWebApplicationContext.java:140) have included spring-boot-starter-web and spring-boot-starter-tomcat dependencies in your pom.xml 11. 12. 13. 14. 15. 16. 17. 18. <?xml version='1.0' encoding='UTF-8'?>. (ERROR) Failed to execute goal org.springframework.boot:spring-boot-maven-plugin:2.0.5.RELEASE:run (default-cli) on project eureka-service: An exception occurred while running. null: InvocationTargetException: Unable to start web server; nested exception is org.springframework.boot.web.server.WebServerException: Unable to start embedded Tomcat

Unable to start web server spring-boot

ApplicationContextException: Unable to start , Case 1: @SpringBootApplication annotation missing in your spring boot starter class. Case 2: For non web application, disable web application Spring Boot jar Unable to start web server due to missing ServletWebServerFactory bean Hot Network Questions Did Trump order tear gas to be used on protesters to clear a pathway to a church for a photoshot?

4K 8MP Motorized Varifocal PoE Bullet Outdoor Security Camera with Audio, SD Slot, 2.8-12mm 4X. Poe camera viewer windows 10. What's Power over Ethernet (PoE)? PoE stands for Power over Ethernet, it's a technology that. Blue Iris (Security and Webcam Software) Blue Iris is a CCTV video security program that gives you. SV3C TECHNOLOGY LIMITED was found in 2013, which was specialized in intelligent and security home devices. It is a Hi-tech company which united with R&D, manufacture and marketing. The main products are professional POE IP Camera, Wireless IP Camera, household DIY Camera, accessories etc.

Spring Boot Doesn't Start Tomcat

Ip cam viewer lite for pc. Exception starting up SpringBootApplication, Below is the stack trace in starting up a SpringBoot application. ApplicationContextException: Unable to start web server; nested exception is I have the following Main code in my SpringBoot application ```java package com.oc.springsample; import org.springframework.boot.SpringApplication; public

Spring Boot Don't Start Tomcat

Fixing Spring Boot error 'Unable to start , I was building a new Spring WebFlux application with Spring Boot. ApplicationContextException: Unable to start web server; nested The solution is easy once the root cause is identified. We can either: Update Maven dependencies to exclude spring-webmvc, or; Set the web application type to WebApplicationType.REACTIVE explicitly, as shown below.

Spring Boot Not Start Tomcat

More Articles

0 notes

Text

Spring Cloud Eureka and Hystrix Circuit Breaker using Microservices